Abstract

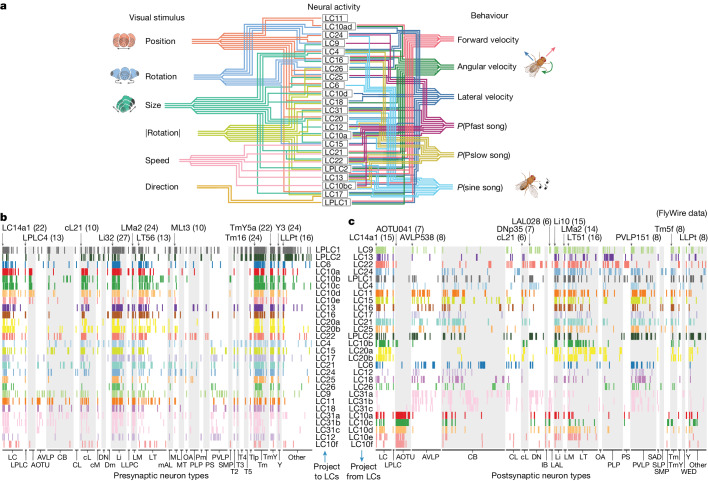

The rich variety of behaviours observed in animals arises through the interplay between sensory processing and motor control. To understand these sensorimotor transformations, it is useful to build models that predict not only neural responses to sensory input [1–5] but also how each neuron causally contributes to behaviour [6,7]. Here we demonstrate a novel modelling approach to identify a one-to-one mapping between internal units in a deep neural network and real neurons by predicting the behavioural changes that arise from systematic perturbations of more than a dozen neuronal cell types. A key ingredient that we introduce is ‘knockout training’, which involves perturbing the network during training to match the perturbations of the real neurons during behavioural experiments. We apply this approach to model the sensorimotor transformations of Drosophila melanogaster males during a complex, visually guided social behaviour [8–11]. The visual projection neurons at the interface between the optic lobe and central brain form a set of discrete channels [12], and prior work indicates that each channel encodes a specific visual feature to drive a particular behaviour [13,14]. Our model reaches a different conclusion: combinations of visual projection neurons, including those involved in non-social behaviours, drive male interactions with the female, forming a rich population code for behaviour. Overall, our framework consolidates behavioural effects elicited from various neural perturbations into a single, unified model, providing a map from stimulus to neuronal cell type to behaviour, and enabling future incorporation of wiring diagrams of the brain [15] into the model.

Subject terms: Computational neuroscience, Visual system, Social behaviour

A deep neural network with ‘knockout training’ is used to model sensorimotor transformations and neural perturbations of male Drosophila melanogaster during visually guided social behaviour and provides predictions and insights into relationships between stimuli, neurons and behaviour.

Main

To understand how the brain transforms sensory information into behavioural action, an emerging and popular approach is to first train a deep neural network (DNN) model on a behavioural task performed by an animal (for example, recognizing an object in an image) and then compare the neural activity of the animal to the internal activations of the DNN [1–3,5,16,17]. A shortcoming of this approach is that the DNN does not predict how an individual neuron causally contributes to behaviour, making it difficult to interpret the role of the neuron in the sensorimotor transformation. Here we overcome this drawback by perturbing the internal units of a DNN model while predicting the behaviour of animals whose neurons have also been perturbed, a method that we call knockout training. This approach places a strong constraint on the model: each model unit must contribute to behaviour in a way that matches the causal contribution of the corresponding real neuron to behaviour. An added benefit is that the model infers neural activity from (perturbed) behaviour alone. This is especially useful when studying complex, natural behaviours, for which it can be challenging (or impossible in some systems) to obtain simultaneous recordings of neural activity. Here we use this approach to investigate the sensorimotor transformations of Drosophila males during natural social behaviours, including pursuit of and singing to a female [9].

A deep network model of vision to behaviour

The Drosophila visual system contains a bottleneck between the optic lobes and the central brain in the form of visual projection neurons, which comprise approximately 200 different cell types [18,19]. The primary cell types of this bottleneck (Fig. 1a) are the 57 lobula columnar (LC) and lobula plate-lobula columnar (LPLC) neuron types identified so far (we use ‘LC types’ to refer to both LC and LPLC neuron types), which make up about 3.5% of all neurons in the brain. The LC neuron types receive input from the lobula and lobula plate in the optic lobe and send axons to optic glomeruli in the central brain [12,20]. Neurons of a single LC type innervate only one optic glomerulus in the posterior lateral protocerebrum, posterior ventrolateral protocerebrum or anterior optic tubercle neuropils, and prior studies have uncovered mappings between specific LC types, visual features and specific behaviours [11,21–28]. For example, LPLC2 neurons respond to a looming object and synapse onto the giant fibre neuron to drive an escape take-off [25]. LC11 neurons respond to small, moving spots and contribute to freezing behaviour [27,28]. For courtship, the LC10a neurons (and, to a lesser extent, LC9 neurons) of a male participate in tracking the position of the female and driving turns towards her [11,22,23], but it is not yet known whether other LC types contribute to male social behaviours. As recordings from LC neurons reveal that even simple stimuli can drive responses in multiple LC types [29–31], we explored whether the representation of the female during courtship might be distributed across the LC population, and similarly whether multiple LC types might be required to drive behaviour.

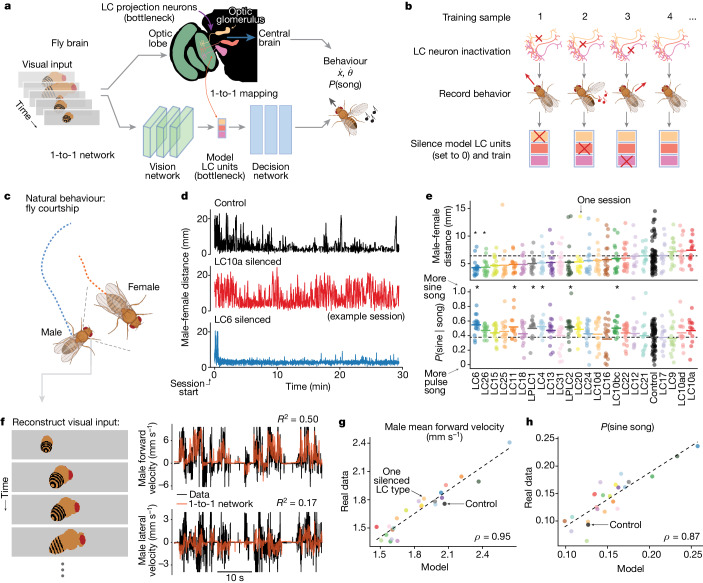

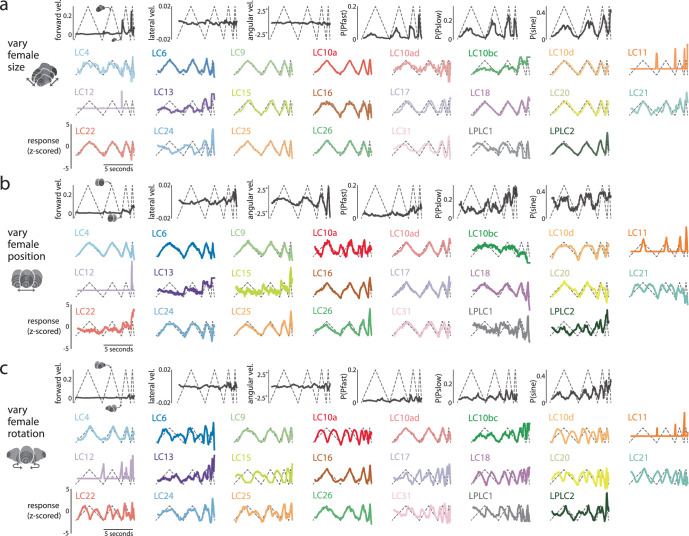

Fig. 1. Identifying a one-to-one mapping between real neurons and internal units of a DNN with knockout training.

a, We model the transformation from vision to behaviour in male flies with a DNN that comprises a bottleneck of model units to match the bottleneck of optic glomeruli in the visual system of the fly. We seek a one-to-one mapping in which one model unit corresponds to one optic glomerulus (innervated by a single LC neuron type) both in activity and in contribution to behaviour (for example, movement and song produced by wing vibration). b, We designed knockout training to fit this 1-to-1 network. After silencing an LC neuron type and recording the resulting behaviour, during training we ‘knocked out’ the model LC unit corresponding to the silenced LC type, that is, we set its activity value to 0 (red crosses). c, We genetically (bilaterally) silenced each of 23 LC neuron types in males and then recorded the interactions of each male with a female during natural courtship. d, Courtship behaviour noticeably changed between control and LC-silenced male flies. Example sessions are shown. e, Changes in the average male-to-female distance following silencing of each LC type in males (top) and changes in the proportion of song that was sine versus pulse (bottom). Each dot denotes one courtship session. Short lines denote means; the horizontal dashed line denotes the mean of control sessions. Asterisks denote significant deviation from control (P < 0.05, permutation test, false discovery rate-corrected for multiple comparisons; n > 12). f, The 1-to-1 network takes as input an image sequence of the 10 most recent time frames (approximately 300 ms) of the visual experience of the male. Each image is a reconstruction of what the male fly observed, based on male and female joint positions in that time frame (for example, c). The 1-to-1 network reliably predicts forward velocity (right, top), lateral velocity (right, bottom) and other behavioural variables (Extended Data Fig. 3) of the male fly. R² values are from held-out frames across control sessions.
g,h, The 1-to-1 network also reliably predicts overall mean changes in behaviour across males with different silenced LC neuron types, such as forward velocity (g) and sine song (h). Correlation ρ values were significant (P < 0.002, permutation test; n = 23).

We designed a novel DNN modelling approach for identifying the functional roles of LC neuron types using behavioural data from genetically altered flies. The DNN model has three components: (1) a front-end convolutional vision network that reflects processing in the optic lobe; (2) a bottleneck layer of LC units in which each model LC unit represents the summed activity of neurons of the same LC type (that is, the overall activity level of an optic glomerulus); and (3) a decision network with dense connections that maps LC responses to behaviour, reflecting downstream processing in the central brain and ventral nerve cord (Fig. 1a). We constrained the bottleneck layer to have as many units as there are LC neuron types that we manipulated, and our goal was to identify a one-to-one mapping between model LC units and LC neuron types. We did not incorporate biological realism into the vision and decision networks, opting instead for highly expressive mappings to ensure accurate prediction, because our focus was on explaining LC function. We collected training data to fit the model by blocking synaptic transmission [32] in each of 23 different LC types in male flies [12,33] and recording the movements and song production of each LC-silenced male during natural courtship (Methods). We then devised a fitting procedure called knockout training, which trains the model on the entire behavioural dataset of both perturbed and unperturbed males. Critically, when training the model on data from a male with a particular LC type silenced (Fig. 1b), we set to 0 (that is, we knocked out) the activity of the corresponding model LC unit (the correspondence was arbitrarily chosen at initialization; see Methods). The resulting model captures the behavioural repertoire of each genetically altered fly when the corresponding model LC unit is silenced, thereby aligning the model LC units to the real LC neurons. In simulations (Extended Data Fig. 2), knockout training correctly identified the activity and contribution to behaviour of each silenced neuron type (a one-to-one mapping) for those neuron types whose silencing changed behaviour. We refer to the resulting DNN model as the ‘1-to-1 network’.
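The defining step of knockout training can be shown with a toy forward pass. This is a minimal sketch, not the authors' implementation: the convolutional vision network and the dense decision network are replaced by a single random linear map with a ReLU and a linear readout, and the function names and layer sizes (other than the 23 bottleneck units) are hypothetical. The only operation that matters here is the knockout itself: for a frame recorded from a male with LC type k silenced, model LC unit k is set to 0 before the decision network reads the bottleneck, whereas control frames pass through unchanged.

```python
import random

N_LC = 23  # one bottleneck unit per silenced LC type, as in the text


def relu(v):
    return [max(0.0, x) for x in v]


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def forward(x, W_vision, W_decision, knockout_index=None):
    """Toy forward pass of a bottlenecked network with optional knockout.

    x:              flattened visual input (stand-in for the image sequence)
    W_vision:       N_LC x len(x) weights standing in for the vision network
    W_decision:     n_out x N_LC weights standing in for the decision network
    knockout_index: model LC unit matched to the silenced LC type, or None (control)
    """
    lc = relu(matvec(W_vision, x))      # model LC unit activities
    if knockout_index is not None:
        lc[knockout_index] = 0.0        # knockout: silence the matched model unit
    return matvec(W_decision, lc), lc


rng = random.Random(0)
n_in, n_out = 12, 4  # hypothetical sizes; the real input is a 10-frame image sequence
W_v = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(N_LC)]
W_d = [[rng.gauss(0, 1) for _ in range(N_LC)] for _ in range(n_out)]
x = [rng.gauss(0, 1) for _ in range(n_in)]

y_control, lc_control = forward(x, W_v, W_d)
y_ko, lc_ko = forward(x, W_v, W_d, knockout_index=5)
```

In a training loop, the loss for a frame from a male with LC type k silenced would be computed on `forward(..., knockout_index=k)`; because unit k is replaced by a constant 0, it neither contributes to that prediction nor receives a gradient from it, while all other units are fit as usual.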

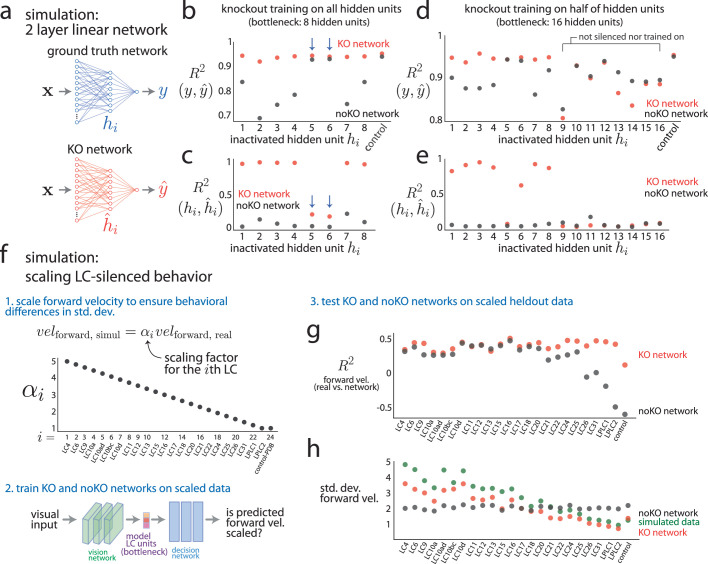

Extended Data Fig. 2. Testing the efficacy of knockout training with simulations.

We tested the ability of knockout training to correctly identify the one-to-one mapping of silenced neuron types with two simulations. We compare a network trained with knockout (‘KO network’) to a network trained without knockout (‘noKO network’) for which no model units are inactivated (i.e., the noKO network has no knowledge that any silencing occurred). a. A simple simulation with two-layer linear networks. The ground truth network (top) is a randomly initialized, untrained two-layer linear network with 48 input variables ($x_j$ for $j = 1, \ldots, 48$), 8 hidden units ($h_i$ for $i = 1, \ldots, 8$), and 1 output unit ($y$). We use the same network architecture for the KO network (bottom). We seek a one-to-one mapping between the ground truth hidden units $h_i$ and the model’s estimated hidden units $\hat{h}_i$. We generated training data by silencing each hidden unit of the ground truth network (i.e., setting $h_i = 0$) and recording the resulting silenced output $y$, as well as observing control data (for which no silencing occurred). For each case, we drew 1,000 input samples, which yielded 9,000 training samples in total. We then trained the model either with knockout training (‘KO network’) or without it (‘noKO network’). We generated a test set in the same way as, but independently of, the training set; the test set also had 9,000 samples in total. b. We tested the KO network’s ability to correctly predict the silenced output $y$ of the ground truth network. We collected the KO network’s predicted output $\hat{y}$ to 1,000 test samples for each silenced hidden unit of the ground truth network by knocking out the corresponding hidden unit in the KO network. We then computed the R² (coefficient of determination) between $y$ and $\hat{y}$ for each silenced unit as well as for control (red dots). We evaluated the noKO network with the same test set but did not knock out any hidden units during training or evaluation (black dots).
We found that the KO network predicted silenced output better than the noKO network for most of the hidden units (red dots above black dots), whereas performance was roughly equal for control data (‘control’ red and black dots overlap). The KO and noKO networks had similar prediction performance for some of the silenced hidden units (i = 5 and 6, arrows); these units contributed little to the output of the ground truth network and, when silenced, led to outputs similar to those observed during control sessions. c. We then tested the KO network’s ability to correctly predict the activity $h_i$ of the ith hidden unit of the ground truth network (i.e., its ‘neural’ responses). For the same test set as in b, we collected the responses $\hat{h}_i$ of the KO network’s hidden units and computed the R² (Pearson’s correlation squared) between $h_i$ and $\hat{h}_i$ (red dots). We performed the same evaluation for the noKO network (black dots) and found that the KO network predicted the activity of the ground truth’s hidden units substantially better than the noKO network did (red dots above black dots). Some hidden units had low prediction performance for both the KO and noKO networks (i = 5 and 6, arrows). As expected, knockout training cannot identify mappings for hidden units that contribute little to the ground truth network’s output (b, i = 5 and 6). Taking b and c together, we conclude that knockout training successfully identified the one-to-one mapping. d. We asked to what extent knockout training recovers the one-to-one mapping when not all ground truth hidden units are silenced. This setting is more similar to our modeling of the fruit fly visual system, where we cannot silence all possible LC types. To test this, we gave the ground truth network and the model network 16 hidden units each (instead of 8) but only silenced the first 8 hidden units of the ground truth network (i = 1, 2, …, 8).
We generated the training and test sets in the same manner as in a, ignoring the last 8 hidden units (i = 9, 10, …, 16), and trained the network with knockout training. The KO network correctly predicted the output y for the first 8 silenced units (i = 1, 2, …, 8) but not the output resulting from silencing one of the last 8 units, on which the KO network was not trained (i = 9, 10, …, 16, red and black dots overlap). The noKO network predicted worse than the KO network for hidden units that contributed to the output (i = 1, 2, …, 8, black dots below red dots for most hidden units); the hidden unit with similar performance for the KO and noKO networks (i = 5, red and black dots overlap) reflects the same cause as in b (arrows). e. Same as c except for 16 hidden units. As expected, the KO network recovers the activity for most of the first 8 hidden units (i = 1, 2, …, 8, red dots above black dots) but fails to recover the activity of the last 8 hidden units (i = 9, 10, …, 16, red and black dots close to R² = 0). The KO and noKO networks perform similarly poorly for hidden unit 5, for the same reasons as for hidden units i = 5 and 6 in c. Taking d and e together, we conclude that knockout training still identifies a one-to-one mapping (predicting both output/behavior and response) for hidden units that have been silenced, even if the remaining units in the bottleneck are never silenced. This motivated us to train the 1-to-1 network on behavioral data from silencing 23 LC types individually, even though we do not have behavioral data from silencing the remaining LC types in the bottleneck (57 LC types in total). f. Given that knockout training works in a simple simulation setting (a–e), we next tested knockout training for the 1-to-1 network used to model the fruit fly visual system (Fig. 1a).
Although we could simulate data coming from a trained 1-to-1 network as ground truth, we were more interested in the case where there is a mismatch between the model and the real system, as is almost certainly the case when using the 1-to-1 network to predict LC neuron types. Still, we sought a way to obtain a known ground-truth change in behavior for the LC-silenced data and devised the following approach. For the ith LC type, we scale its forward velocity by $\alpha_i$, where $\alpha_i$ decreases from 5 to 1 as the index i increases. We then train a KO network on this scaled data in the same manner as the 1-to-1 network; we also train a noKO network that has no knowledge of whether its training sample comes from LC-silenced or control males. We train the networks to predict only forward velocity (no other behavioral outputs). g. We computed the prediction performance R² (coefficient of determination) between predicted and actual forward velocity on held-out frames for each LC-silenced behavior. The KO network predicted better than the noKO network for both the most scaled and the least scaled LC types (red dots above black dots for leftmost and rightmost dots). h. This difference in performance between the KO and noKO networks for the most and least scaled LC types in g can be explained by how each network predicts the standard deviation of forward velocity. As expected from our scaling of the real data (f), the standard deviation of the simulated data falls linearly for later LC types (green dots, compare with black dots in f). The standard deviations predicted by the KO network also decrease linearly (red dots), whereas those predicted by the noKO network remain relatively flat (black dots). Because the noKO network has no information about which LC type was silenced, it must predict roughly the same standard deviation for all LC types, choosing an intermediate standard deviation (around 2 s.d.).
This also explains why the KO and noKO networks differed more in prediction for the rightmost LC types (g, ‘LC26’ to ‘control’): the noKO network overestimated the standard deviation for these LC types (black dots above green dots for ‘LC26’ to ‘control’), leading to larger errors (and a negative coefficient of determination), whereas underestimating the standard deviation does not lead to as large a drop in R² (g, LC4 to LC10d). These simulations show that knockout training can reliably identify one-to-one mappings between model units and internal units given behavior resulting from silencing those internal units.
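The data-generation step of the simulation in a can be sketched in a few lines. The sketch rests on assumptions the caption does not state: standard Gaussian inputs and weights, and the hypothetical helper names `ground_truth` and `make_dataset`. It reproduces the stated bookkeeping (8 silencing conditions plus control, 1,000 samples each, 9,000 in total) and keeps the condition label with every sample: a KO network would zero its own matched hidden unit for that label, whereas a noKO network would ignore it.

```python
import random

N_IN, N_HIDDEN, N_SAMPLES = 48, 8, 1000  # sizes stated in the caption

rng = random.Random(0)
# Untrained, randomly initialized ground-truth weights (Gaussian init is an assumption)
W1 = [[rng.gauss(0, 1) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
w2 = [rng.gauss(0, 1) for _ in range(N_HIDDEN)]


def ground_truth(x, silenced=None):
    """Two-layer linear network; optionally silence hidden unit `silenced` (h_i = 0)."""
    h = [sum(w * xj for w, xj in zip(row, x)) for row in W1]
    if silenced is not None:
        h[silenced] = 0.0
    y = sum(w * hi for w, hi in zip(w2, h))
    return h, y


def make_dataset(seed):
    """1,000 samples for control (None) and for each silenced hidden unit (0-indexed 0..7)."""
    data_rng = random.Random(seed)
    dataset = []
    for condition in [None] + list(range(N_HIDDEN)):
        for _ in range(N_SAMPLES):
            x = [data_rng.gauss(0, 1) for _ in range(N_IN)]
            h, y = ground_truth(x, silenced=condition)
            # keep the label: a KO network uses it, a noKO network ignores it
            dataset.append((x, condition, h, y))
    return dataset


train = make_dataset(seed=1)
test = make_dataset(seed=2)  # independent test set, generated the same way
```

Hidden units are indexed from 0 in the code, so condition 4 here corresponds to i = 5 in the caption.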

Before fitting the model with courtship data (Fig. 1c), we quantified the extent to which (bilaterally) silencing each LC neuron type changes the behaviour of the male fly (Extended Data Fig. 1). Consistent with previous studies [11,23], we found that silencing LC10a neurons resulted in failures to initiate chasing, as male-to-female distances remained large over time (Fig. 1d (middle) and Fig. 1e (top)); we found similar results when silencing LC9 [22]. We also found strong effects on both chasing and singing when silencing other LC types. For example, silencing LC6 and LC26 neurons resulted in stronger and more persistent chasing, as male-to-female distances remained small over time (Fig. 1d (bottom) and Fig. 1e (top)). Silencing any of a large number of LC types (LC4, LC6, LC11, LPLC1, LPLC2 and LC10bc) significantly increased the amount of sine song relative to pulse song (Fig. 1e, bottom); sine song typically occurs near the female [34]. Across behavioural measures, the silencing of any single LC type did not match the behavioural deficits of blind flies (Extended Data Fig. 1). This suggests that many LC types would need to be silenced together to uncover large effects on courtship. We therefore modelled the perturbed behavioural data with the 1-to-1 network, enabling us to silence any possible combination of LC types in silico.
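Because silencing enters the model only through the bottleneck, a multi-type knockout reduces to a mask over model LC units. The sketch below assumes a multiplicative 0/1 mask (our choice of representation) and indexes units 0 to 22 rather than by LC name; the helper names are hypothetical. It also spells out the size of the in silico search space: 253 pairwise knockouts and 2^23 = 8,388,608 subsets of the 23 units in total.

```python
from itertools import combinations
from math import comb

N_LC = 23  # number of model LC units in the bottleneck


def knockout_mask(silenced):
    """0/1 multiplicative mask over model LC units; 0 marks a silenced unit."""
    silenced = set(silenced)
    if any(not 0 <= k < N_LC for k in silenced):
        raise ValueError("LC unit index out of range")
    return [0.0 if k in silenced else 1.0 for k in range(N_LC)]


def apply_mask(lc_activity, mask):
    """Silence units by elementwise multiplication with the mask."""
    return [a * m for a, m in zip(lc_activity, mask)]


# Size of the in silico search space
n_single = comb(N_LC, 1)    # 23 single knockouts (the genetic experiments)
n_pairs = comb(N_LC, 2)     # 253 double knockouts
n_subsets = 2 ** N_LC       # 8,388,608 subsets, including none and all
pair_masks = [knockout_mask(p) for p in combinations(range(N_LC), 2)]
```

Exhaustively simulating every subset is possible in principle but costly, which is one reason to restrict attention to structured families such as all pairs or all subsets of a given size.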

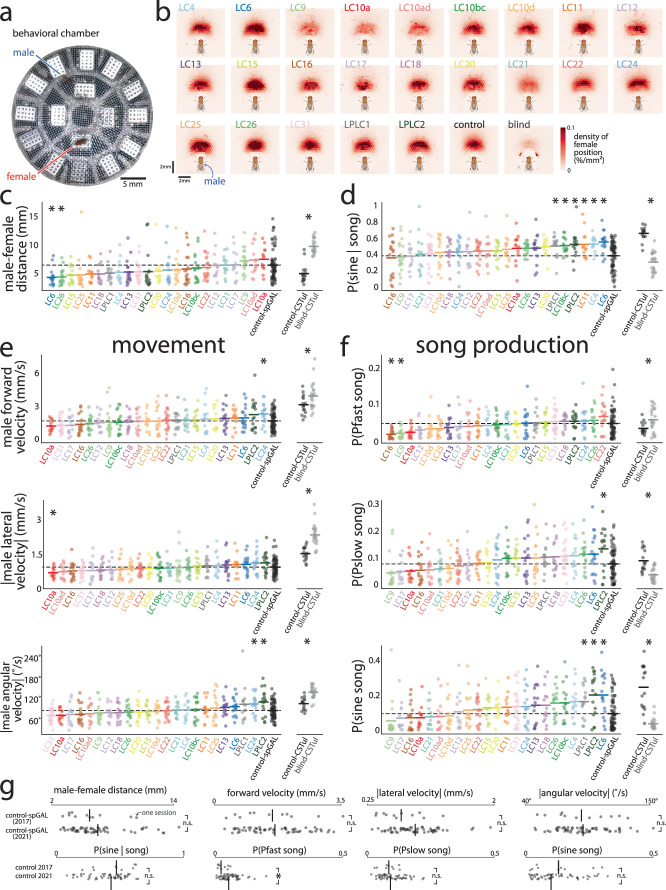

Extended Data Fig. 1. Different changes in behavior when silencing different LC neuron types of the male’s visual system.

The main finding is that no single LC type showed a substantial change relative to control compared with the change observed between blind and control flies, suggesting that no single LC type is the sole contributor to courtship behaviors. a. Image of the circular behavioral chamber used to estimate the positions of a male (blue) and female (red) fruit fly during courtship. Joint positions for each frame were identified with the behavioral tracking software SLEAP [51]. Audio waveforms of song were detected with 16 microphones tiling the chamber (white boxes). b. Density of female position relative to the male’s egocentric view, conditioned on which LC type was silenced in the male, as well as for control-spGAL (‘control’) and blind-CSTul (‘blind’) males (multiple sessions per heatmap). Silencing any single LC neuron type did not extinguish courtship chasing (compare LC-silenced heatmaps to that of blind males); however, silencing some LC types did lead to a noticeable decrease in the amount of time females were positioned in front of the male relative to control sessions (e.g., compare LC9, LC10a, LC10ad, and LC21 to control). c. Male-female distance averaged across the entire session for each silenced LC type (reproduced from Fig. 1e, top panel). Each dot is one session; lines denote means and the dashed line denotes the mean for control sessions. Statistically significant changes from control flies are indicated by an asterisk (p < 0.05, permutation test, corrected for the false discovery rate of multiple hypothesis testing by the Benjamini-Hochberg procedure, n > 12 sessions per LC type). The spread across sessions (i.e., scatter of dots) per LC type is large; one likely reason for this spread is that the females were PIBL (pheromone insensitive and blind), and PIBL females tend to show larger individual differences in copulation time than wildtype females [9]. We also considered changes to behavior between control and blind male flies in CSTul flies (right, data from refs.
9,52 recorded in an 8-microphone arena, asterisks denote p < 0.05, permutation test, n ≥ 15); the change in male-female distance between control and blind flies (an average of +4.80 mm) was substantially larger than the largest change between an LC type and control (for LC10a, an average of +1.03 mm; for LC6, −2.15 mm). Differences between our control-spGAL flies and control-CSTul flies are most likely due to the criteria for keeping a session (CSTul sessions were stopped and discarded if the male failed to begin courtship in the first 5 minutes; we did not have such restrictions for our control or LC-silenced sessions). Thus, only the relative changes between control-spGAL and LC-silenced sessions and the relative changes between control-CSTul and blind-CSTul should be compared. d. Proportion of sine song given song production. Same data as in Fig. 1e (bottom panel) except that the LC types are ordered by increasing proportion. Same format as in c. e. Mean changes in movement, including forward velocity (top panel), lateral velocity (middle panel), and angular velocity (bottom panel), averaged over the entire session. The absolute value was taken for lateral and angular velocity (i.e., speed), as we were interested in changes away from the male’s heading direction (e.g., a large turn to the right or to the left both indicate a large deviation). Same format as in c. f. Changes in the male’s song production, including the probability of sine, Pfast, and Pslow song. Same format as in c. Although we observed some significant changes in behavior (asterisks), overall we did not observe any LC type whose silencing changed behavior on par with the changes observed between control and blind flies, contrary to what we would expect if only one or two LC types were the dominant contributors to courtship. This suggests that multiple LC types need to be silenced together to obtain large deficits in behavior, consistent with our modeling results (Fig. 4).
Previous studies have identified LC types LC10a and LC9 as contributing to courtship [11,22,23], and our results are consistent: silencing LC10a or LC9 increases male-to-female distance (c, LC10a and LC9), as previously reported. A new implication for LC10a and LC9 concerns song production: silencing either LC type tends to reduce production of all three song types (f, LC10a and LC9). The metrics we use here (e.g., the mean forward velocity across an entire session) are coarse summary statistics and do not capture all possible ways in which behavior may change due to silencing. In addition, variability across sessions per LC type was large, making it difficult to identify true changes. This motivated us to use the 1-to-1 network to model the LC-silenced and control behavior, as the 1-to-1 network can be used to directly identify the largest changes to the sensorimotor transformation due to LC silencing. In particular, we can use a metric, the coefficient of determination R², that captures more possible changes than simply a change in mean offset. We use R² when comparing changes to behavior for the 1-to-1 network (Fig. 4), but we cannot use R² for the data here, as the visual inputs were not the same across silenced behavioral datasets. g. Our behavioral experiments comprised two sets of data collection that were 4 years apart, and we asked whether large deviations occurred for control-spGAL sessions between the two sets (both sets had the same genetic lines). We separated the control sessions into two groups (‘control 2017’ and ‘control 2021’, named for the year of collection) and found no significant difference between them across the movement and song statistics (n.s. denotes p > 0.05, permutation test) except for Pfast song (asterisk, p < 0.05, permutation test, n > 10). Thus, we felt confident in merging the two sets of data collection for further analysis.
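A minimal sketch of the significance testing described in c and in Fig. 1e: a two-group permutation test followed by Benjamini-Hochberg correction across LC types. The test statistic (absolute difference in means), the number of permutations and the add-one p-value estimate are our assumptions rather than details given in the caption, and the function names are hypothetical.

```python
import random


def permutation_test(a, b, n_perm=10_000, seed=0):
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)
    n_a = len(a)
    observed = abs(sum(a) / n_a - sum(b) / len(b))
    pooled = list(a) + list(b)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)               # random relabelling of sessions
        pa, pb = pooled[:n_a], pooled[n_a:]
        if abs(sum(pa) / n_a - sum(pb) / len(pb)) >= observed:
            count += 1
    return (count + 1) / (n_perm + 1)     # add-one estimate, never exactly 0


def benjamini_hochberg(pvals, alpha=0.05):
    """Benjamini-Hochberg adjusted p-values and rejection flags, in input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    q = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):          # from the largest p-value down
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        q[i] = running_min
    return q, [qi <= alpha for qi in q]
```

In the setting of c, one would call `permutation_test` once per LC type (LC-silenced sessions versus control sessions) and pass the resulting p-values together to `benjamini_hochberg`.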

We performed knockout training to fit the parameters of the 1-to-1 network. The model inputs were videos of the visual input of the male fly during natural courtship (Methods and Fig. 1f, left); the model outputs comprised the male movements (forward, lateral and angular velocity) and song production, which included sine song and two forms of pulse song (Pfast and Pslow [35]). The 1-to-1 network reliably predicted these behavioural variables in held-out data (Fig. 1f, right and Extended Data Fig. 3). Notably, the 1-to-1 network also predicted differences in behaviour observed across silenced LC types (Fig. 1g,h and Extended Data Fig. 4). We confirmed that knockout training outperformed other possible training procedures, such as dropout training [36] and training without knockout (that is, an unconstrained network) (Extended Data Figs. 3 and 4), and that results were largely consistent for different random initializations of the 1-to-1 network (Extended Data Fig. 5). Thus, the 1-to-1 network reliably estimated the behaviour of the male from visual input alone, even for male flies with a silenced LC type.
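Extended Data Fig. 3c defines the held-out metrics: R² for the movement variables and 1 − binary cross-entropy for the song variables. A minimal sketch of both follows, assuming per-frame binary song labels as targets, the natural logarithm and a small clipping constant `eps` (all our choices, not stated in the text). The toy example also makes concrete the asymmetry invoked in Extended Data Fig. 2h: for predictions equal to the truth scaled by a factor c about its mean, R² = 1 − (c − 1)², so doubling the spread gives R² = 0 while halving it still gives R² = 0.75, and any c > 2 gives a negative R².

```python
import math


def r_squared(y_true, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot; negative when worse than the mean."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def one_minus_bce(labels, probs, eps=1e-7):
    """1 - mean binary cross-entropy (natural log); values near 1 indicate good prediction."""
    total = 0.0
    for t, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)   # clip so log() never sees 0
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return 1.0 - total / len(labels)


# Toy illustration: predictions perfectly correlated with the truth, wrong spread
truth = [-1.0, 1.0, -1.0, 1.0]
too_wide = r_squared(truth, [2.0 * t for t in truth])     # 0.0 (spread overestimated 2x)
too_narrow = r_squared(truth, [0.5 * t for t in truth])   # 0.75 (spread underestimated 2x)
```

Because R² penalizes squared deviations, overestimating the spread costs more than underestimating it by the same factor, which is consistent with the larger drop in noKO performance for the least scaled LC types.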

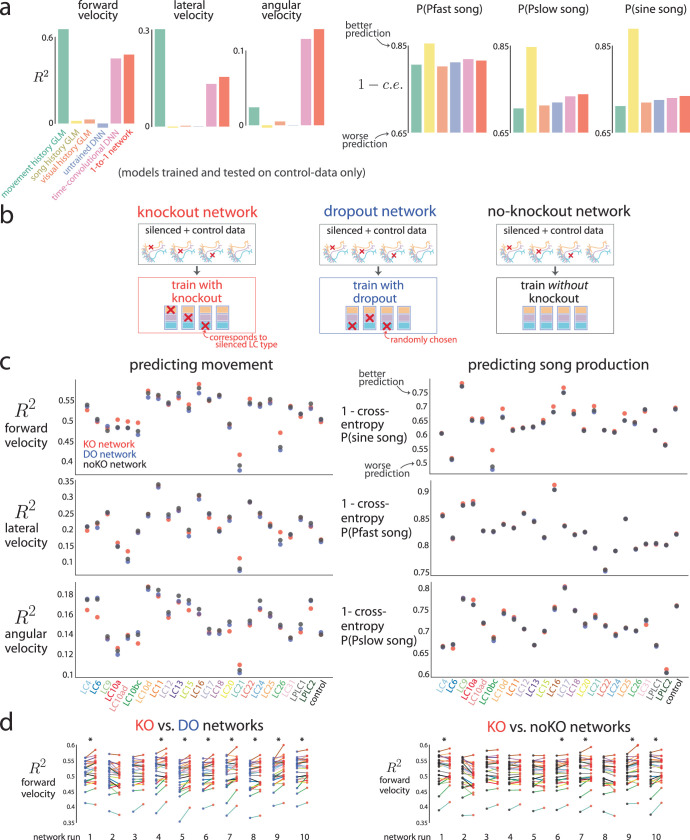

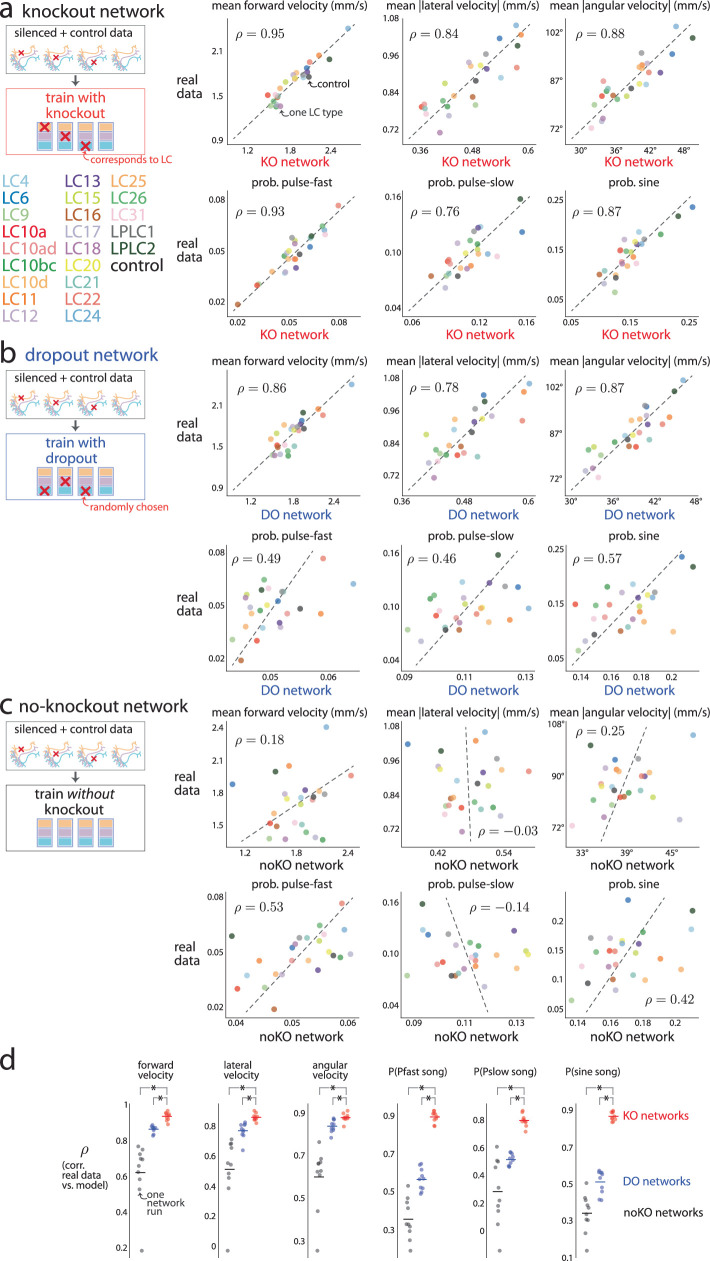

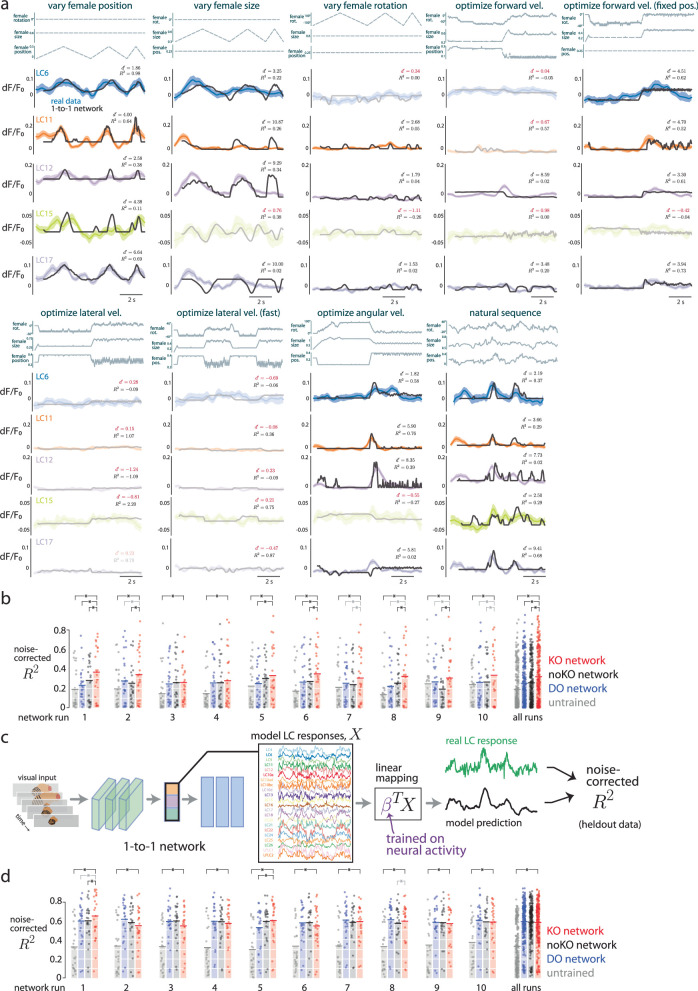

Extended Data Fig. 3. Predicting behavior frame-by-frame.

Here we compare the extent to which the 1-to-1 network predicts frame-by-frame behavior better than other network architectures and baseline models, as well as networks trained with other procedures. a. We considered different network architectures for the 1-to-1 network and compared their prediction performance to baseline models. We trained each model on control sessions only and tested on held-out test frames of control sessions. For baseline models, we considered generalized linear models (GLMs) that took as input the last 300 ms of either movement history, including forward, lateral, and angular velocity (‘movement history GLM’); song history, including Pfast, Pslow, and sine song (‘song history GLM’); or the male’s past visual history, represented by female size, position, and rotation (‘visual history GLM’). The movement history GLM predicted forward and lateral velocity well (two leftmost plots), as expected, but failed at predicting angular velocity and song production. Its good prediction (R² > 0.6 for forward velocity) stems from the fact that an animal’s forward velocity at time step t is likely similar to its forward velocity at time step t − 1 based on the physics of movement. Likewise, the song history GLM best predicted song production (three rightmost plots), as songs often occur in bouts, but failed at predicting moment-to-moment movement (three leftmost plots). Also expected was the poor prediction performance of the visual history GLM, whose inputs (the fictive female’s parameters) likely must pass through a strong nonlinear transformation to accurately recover behavior (all orange bars are low). Next, we considered the DNN architecture of the 1-to-1 network (Fig. 1a). For this analysis, we trained the 1-to-1 network on control data only (i.e., no knockout training was performed). The 1-to-1 network predicted angular velocity better than any GLM and showed good performance for song production (red bars).
The 1-to-1 network did not outperform the movement history GLM on forward and lateral velocity; providing past movement history to the 1-to-1 network is an intriguing direction not investigated in this work. We confirmed that an untrained network with the same architecture as the 1-to-1 network (‘untrained DNN’, in which only the last readout layer was trained) had little prediction ability. Finally, we trained a more complex version of the 1-to-1 network with 3-d convolutions in both space (2-d) and time (1-d) in the vision network (‘time-convolutional DNN’ with 3 × 3 × 3 convolutional kernels). This greatly increased the number of parameters but ultimately did not improve prediction performance relative to the 1-to-1 network (pink versus red bars). We suspect that with more data, the time-convolutional DNN will outperform the current architecture of the 1-to-1 network, as motion processing occurs before the LC bottleneck [46]. b. As a test of the 1-to-1 network’s ability to uncover a one-to-one mapping between model LC units and real LC neurons, we tested the extent to which the 1-to-1 network accurately predicts behavior on held-out courtship frames for each silenced LC type. An important comparison is the 1-to-1 network’s prediction performance relative to networks with the same architecture and training data but different training procedures. Here, we illustrate three different training procedures. Knockout training (left, red) sets to 0 the model LC unit that corresponds to the LC neuron type silenced for that training frame (no model LC units’ values are set to 0 for frames from control sessions). We refer to the resulting trained network as the knockout (KO) network or, interchangeably, as the 1-to-1 network. Dropout training [36] (middle, blue) sets to 0 a randomly chosen model LC unit for each training frame, independent of the frame’s silenced LC type (no model LC units’ values are set to 0 for frames from control sessions).
In this case, the number of ‘dropped out’ units equals that of the ‘knocked out’ units. We refer to the resulting trained network as the dropout (DO) network. Finally, we train a network without knocking out any of the model LC units and refer to it as the noKO network (right, black). The DO and noKO networks are appropriate controls (i.e., null hypotheses) for the KO network. The DO and noKO networks have no knowledge that any LC silencing has occurred; in other words, the DO and noKO networks assume that all male flies, regardless of whether an LC type is silenced, have the same behavioral output to the same input stimulus. Thus, the DO and noKO networks cannot reliably detect changes in behavior for different silenced LC types unless the statistics of the visual input itself differ across silenced LC types. The latter may occur if, for example, silenced flies do not chase the female: the female then appears visually smaller for most frames, leading the DO and noKO networks to correctly predict a decrease in song production (as song is produced in close proximity to the female). c. We tested the KO, DO, and noKO networks’ performance in predicting the male fly’s movement (left) and song production (right) for the next frame given the 10 past frames of visual input (a period of 300 ms) across many LC-silenced and control flies (459 sessions in total). All test frames were held out from any training or validation sets and sampled randomly in 3 s time periods across sessions (27,000 test frames for each LC type and control, see Methods). We computed the coefficient of determination R² for behavioral outputs of movement (forward, lateral, and angular velocity) and 1 − binary cross-entropy (where a value close to 1 indicates good prediction) for behavioral outputs of song production (probabilities of Pfast, Pslow, and sine song).
We found that overall, the KO network predicts forward velocity (top left, red dots above black and blue dots) and the probability of sine song (top right) better than the DO and noKO networks. Changes in prediction performance between KO and DO/noKO networks across LC types were relatively small, suggesting changes in behavior were subtle, consistent with overall mean changes in behavior (Extended Data Fig. 1). In addition, R2 may change little for large second-order changes in behavior, such as variance (Extended Data Fig. 2g, leftmost dots). We confirmed in Fig. 1g–h and Extended Data Fig. 4 that the KO network predicted mean changes in behavior more accurately than the DO and noKO networks. We note that R2 values for movement (left column, R2 ≈ 0.5 for forward velocity, R2 ≈ 0.15 for lateral and angular velocity) were not close to 1 because we predict rapid changes to movement variables frame-to-frame (with a frame rate of 30 Hz). Because the 1-to-1 network is deterministic (i.e., returning the same output for the same visual input), it fails to account for the fact that a male fly’s moment-to-moment decision is stochastic; in other words, the male responds differently to repeats of the same stimulus sequence. To take this stochasticity into account, one would need to present identical repeats of the same visual stimulus sequence and record the resulting behavior. This is not possible for our natural courtship experiments, where a male fly’s visual experience is determined by his behavior. However, this may be possible in future experiments using virtual reality, where the experimenter has greater control over a male fly’s visual input. d. Results in c were for a KO network with one random initialization. To see if this effect holds for different initializations, we trained 10 runs of the KO network, each with a different random initialization and random ordering of training samples.
We found that KO networks outperformed DO networks in predicting forward velocity for 8 of the 10 runs (left) and outperformed noKO networks for 5 of the 10 runs (right). For each run (e.g., ‘network run 1’), the same randomly initialized network and randomized order of training samples were used as a starting point for the KO, DO, and noKO networks. Each connected pair of dots denotes one LC type, with the color of the line connecting two dots denoting the LC type identity (same colors as in c). An asterisk denotes a significant difference in means (p < 0.05, paired, one-sided permutation test, n = 23). Network run 1 was chosen as the 1-to-1 network in c as well as Figs. 1–4 because of its high prediction performance for both behavior and neural responses.
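The three training procedures in panel b differ only in which model LC units are zeroed for each training frame. The sketch below illustrates that masking step and nothing else; it is hypothetical and assumes the bottleneck is a vector of 23 model LC activations, with LC types indexed 0 to 22 and frames from control sessions marked as `None`. The vision network and the readout are omitted.

```python
import numpy as np

N_LC = 23  # number of model LC units in the bottleneck

def lc_mask(silenced, procedure, rng):
    """Return a 0/1 mask over the model LC units for one training frame.

    silenced: index of the LC type silenced in this frame's session,
              or None for frames from control sessions.
    procedure: "KO" (knockout), "DO" (dropout) or "noKO".
    """
    if procedure not in ("KO", "DO", "noKO"):
        raise ValueError(f"unknown procedure: {procedure}")
    mask = np.ones(N_LC)
    if procedure == "noKO" or silenced is None:
        return mask                        # no unit is zeroed
    if procedure == "KO":
        mask[silenced] = 0.0               # zero the unit matched to the silenced type
    else:
        mask[rng.integers(N_LC)] = 0.0     # DO: zero one random unit, ignoring identity
    return mask

rng = np.random.default_rng(0)
lc_activity = rng.random(N_LC)                  # stand-in for bottleneck activations
ko_input = lc_activity * lc_mask(5, "KO", rng)  # frame from a session silencing type 5
do_input = lc_activity * lc_mask(5, "DO", rng)  # same frame under dropout training
```

In the DO case exactly one unit is zeroed, matching the number of knocked-out units, but which unit is zeroed carries no information about the silenced LC type; this is what makes DO a control for KO.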

Extended Data Fig. 4. Assessing model predictions of mean changes in behavior.

Given the mean changes in behavior due to silencing (where the mean is taken over the entire session, Extended Data Fig. 1), we wondered to what extent the knockout (KO) network predicted these overall changes compared with a dropout (DO) network, for which a randomly-chosen model LC unit was inactivated during training, and a noKO network, for which no model LC unit was inactivated during training. a. For each LC neuron type, we computed the average behavior across all held-out courtship frames in the test set (‘real data’). We then computed the mean behavior as predicted by the KO network across the same frames (‘KO network’). Each dot denotes one LC type (color dots) or control session (black dot); colors are indicated at left. Dashed lines are the best linear fit; the correlation ρ is taken across all LC types excluding the control sessions. The KO network has large ρ’s across behavior outputs, indicating good prediction of overall changes. b. Same as in a except for the DO network; for evaluation, no model LC units were inactivated (i.e., dropout was used for regularization36). Correlations were smaller for the DO network than for the KO network (compare ρ’s between a and b). However, for the movement variables, correlations for the DO network were only slightly smaller than those of the KO network. Because the DO network had no access to which LC type was silenced, this suggests that the statistics of visual inputs differed across LC types. For example, suppose the DO network accurately predicted the behavior of control male flies, including that the male does not sing when the female is far away. Then, if silencing LC10a resulted in the male not being interested in courting the female, the female would be far away in most frames, and the DO network would correctly predict a decrease in song production, even though the DO network has no knowledge that LC10a was silenced.
Thus, DO training is an appropriate control for asking whether the sensorimotor transformation has changed or whether the male has altered his desire to pursue courtship. This also motivates future experiments with virtual reality where the male’s visual statistics can be matched between LC-silenced and control males. c. Same as in a and b except for the noKO network. Correlations were substantially smaller than for the KO and DO networks (compare ρ’s between a and c), indicating that the noKO network could not recover behavior from LC-silenced flies. d. We trained 10 networks each for KO, DO, and noKO training. Each of the 10 networks had different random initializations and different random orderings of training samples. For a fair comparison, the same initialized network and ordering were shared across KO, DO, and noKO training for each of the 10 runs. We then computed the ρ between the overall mean behaviors predicted by each network and those of the real data. For each of the six behavioral outputs, we found that the KO network predicted the changes in behavior across LC types better than the DO and noKO networks did (red dots above blue and black dots). Each dot denotes one network, and each asterisk denotes that the mean of the KO network is significantly greater than the mean of either the DO or noKO network (p < 0.05, paired, one-sided permutation test, n = 10). Network run 1 was chosen as the exemplar network in a-c (as well as in Figs. 1–4).
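Panel d combines two computations: a correlation ρ across LC types (control excluded) between real and predicted mean behaviors, and a paired one-sided permutation test across network runs. A minimal sketch follows. It assumes the paired permutation test is implemented by randomly flipping the signs of the paired differences, which is a common choice but is not stated in this caption; the function names, the number of permutations, and the example values are hypothetical.

```python
import numpy as np

def rho_excluding_control(real_means, pred_means, is_control):
    """Pearson correlation across LC types, leaving out control sessions."""
    keep = ~np.asarray(is_control, dtype=bool)
    real = np.asarray(real_means, dtype=float)[keep]
    pred = np.asarray(pred_means, dtype=float)[keep]
    return np.corrcoef(real, pred)[0, 1]

def paired_permutation_p(a, b, n_perm=10_000, seed=0):
    """One-sided paired permutation test of H1: mean(a - b) > 0.

    Under the null, each paired difference is equally likely to have either
    sign, so the null distribution is built from random sign flips.
    """
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    observed = d.mean()
    rng = np.random.default_rng(seed)
    signs = rng.choice([-1.0, 1.0], size=(n_perm, d.size))
    null_means = (signs * d).mean(axis=1)
    # The +1 terms count the observed labelling as one of the permutations.
    return (np.sum(null_means >= observed) + 1) / (n_perm + 1)

# Hypothetical per-run correlations for 10 runs (illustrative numbers only).
rho_ko = np.array([0.80, 0.75, 0.82, 0.78, 0.85, 0.79, 0.81, 0.77, 0.83, 0.80])
rho_do = rho_ko - 0.1
p = paired_permutation_p(rho_ko, rho_do)
```

With n = 10 pairs there are only 2^10 = 1024 distinct sign patterns, so the smallest attainable p-value is about 0.001 regardless of how many random permutations are drawn.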

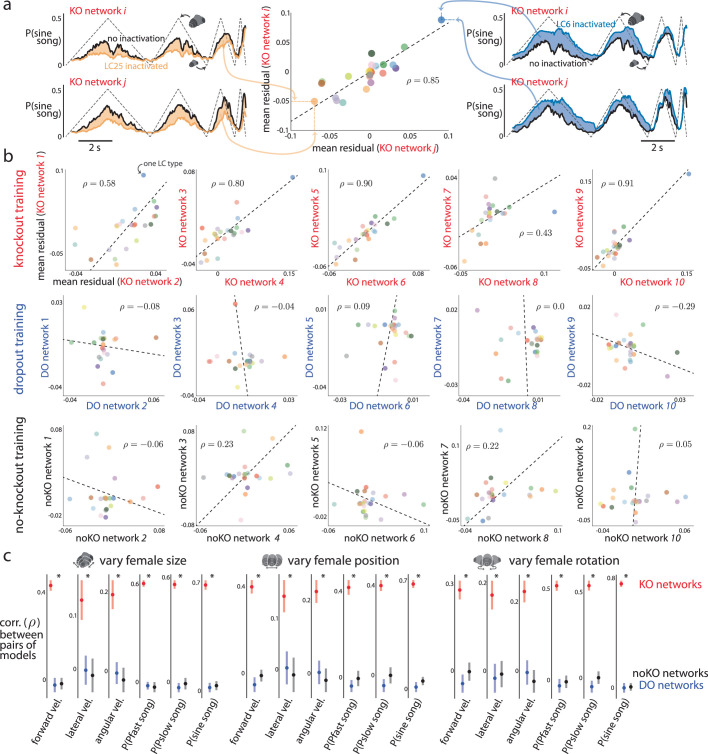

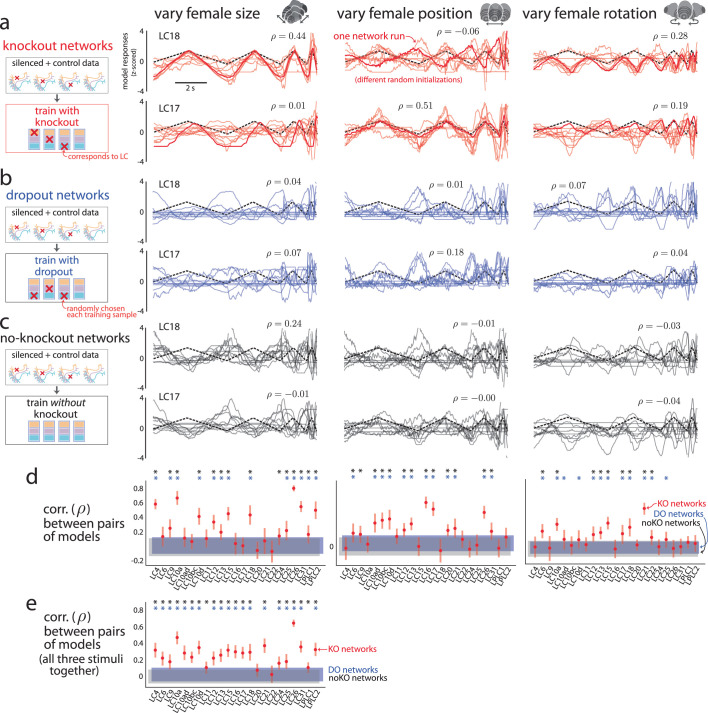

Extended Data Fig. 5. Consistency in behavioral predictions across networks with different random initializations.

Deep neural networks with the same architecture and trained on the same data may converge to different internal representations depending on their parameter initializations and the ordering of training samples observed by stochastic gradient descent. We wondered to what extent the solution identified by knockout (KO) training changes for different random initializations and different orderings of training data. If KO training is consistent for the 1-to-1 network’s architecture, then we would expect different training runs of a KO network to converge to similar predictions of behavior. See Extended Data Fig. 6 for a similar analysis of the 1-to-1 network’s consistency in neural predictions. a. To test this, we trained 10 KO networks, each with a different random initialization and different ordering of training samples. We then passed as input a dynamic stimulus sequence in which the fictive female varied her size over time (dashed trace in top left plot; female position and rotation remained fixed). Inactivating LC25 (orange line, top left plot) resulted in an overall decrease in the probability of song relative to that of no inactivation (black line, top left plot); we can compute the overall change in behavior by taking the mean residual between the two (orange shade, top left plot). If two KO networks i and j were consistent, we would expect the mean residual computed for network i to match the mean residual obtained by the same procedure for network j; indeed, this is what we saw (compare top and bottom panels on the left). Inactivating LC6 resulted in an increase in probability of song for both KO networks (rightmost plots). We quantified the consistency between the two KO networks by computing the correlation across LC types of the time-averaged residuals (middle scatter plot); a correlation ρ close to 1 indicates that both KO networks consistently have the same predictions of behavior for different silenced LC types. b.
Scatter plots for 5 pairings of the 10 KO networks (top row) of the time-averaged residuals of probability of sine song for the stimulus in which the fictive female varies in size (same as in a). Each dot denotes one LC type, and colors correspond to LC names in Extended Data Fig. 4. Dashed lines denote best linear fit. We also assessed the consistency of DO networks (middle row) and noKO networks (bottom row), whose ρ’s were substantially lower than those of the KO networks. c. Correlations of time-averaged residuals for the three dynamic stimulus sequences and all six behavioral outputs. Each dot is the mean across all 45 pairs of networks; error bars denote 1 s.e.m. The KO networks had significantly larger mean pairwise correlations (asterisk denotes p < 0.05, paired permutation test, n = 45) than those for the DO and noKO networks (red dots above blue and black dots) for all stimulus sequences and behavioral outputs. We conclude that the KO networks are consistent in behavioral predictions. An important use of the ensemble of 10 KO networks is for estimating model uncertainty for a particular stimulus sequence. A single KO network can only give one prediction for a stimulus sequence (Fig. 4d,e); one may erroneously conclude that the model is equally certain about all stimulus sequences. In reality, the 1-to-1 network may be more uncertain about some stimulus sequences than others, especially those that are rarely observed during natural courtship. Thus, before experimentally testing the 1-to-1 network’s predictions, one may first check if the 1-to-1 network is confident in its prediction by assessing the extent to which different network runs agree on the same prediction. If there is large agreement (as seen here), the 1-to-1 network is confident in its predictions.
In that case, a mismatch between its predictions and experimental data is more informative than a mismatch for a stimulus sequence on which the 1-to-1 network is uncertain (and thus not expected to agree with experimental data).
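The consistency analysis in panels a to c reduces each network run to one time-averaged residual per LC type and then correlates those residual vectors between runs. A minimal sketch, assuming each run’s outputs for one stimulus sequence and one behavioral output are available as arrays (the array layout, function names, and synthetic data are hypothetical):

```python
import numpy as np
from itertools import combinations

def mean_residuals(intact, inactivated):
    """Time-averaged residual per LC type for one network run.

    intact: (T,) output with no model LC unit inactivated.
    inactivated: (n_lc, T) output with each model LC unit inactivated in turn.
    """
    intact = np.asarray(intact, dtype=float)
    inactivated = np.asarray(inactivated, dtype=float)
    return (inactivated - intact[None, :]).mean(axis=1)

def pairwise_consistency(residuals):
    """residuals: (n_runs, n_lc). Correlate every pair of runs across LC types.

    Returns the mean correlation, its standard error and the number of pairs
    (45 pairs for 10 runs).
    """
    residuals = np.asarray(residuals, dtype=float)
    rhos = np.array([np.corrcoef(residuals[i], residuals[j])[0, 1]
                     for i, j in combinations(range(len(residuals)), 2)])
    sem = rhos.std(ddof=1) / np.sqrt(rhos.size)
    return rhos.mean(), sem, rhos.size

# Synthetic example: 10 runs sharing a common effect per LC type plus small noise.
rng = np.random.default_rng(0)
shared = rng.normal(size=23)
runs = shared + 0.1 * rng.normal(size=(10, 23))
mean_rho, sem_rho, n_pairs = pairwise_consistency(runs)
spread = runs.std(axis=0)  # per-LC disagreement across runs, a rough uncertainty
```

The last line reflects the uncertainty use described in the caption: LC types (or, computed per stimulus, stimulus sequences) on which the runs disagree show a large spread across runs.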

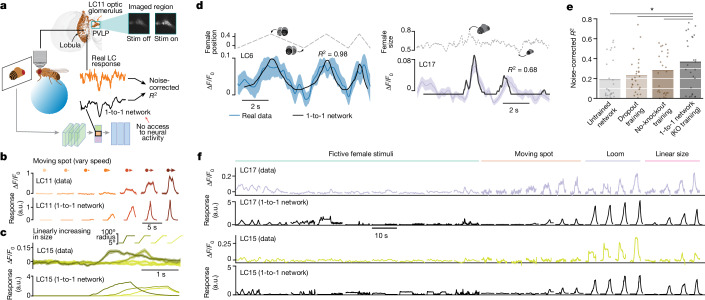

Comparing real and model neural activity

One prediction from our simulations (Extended Data Fig. 2) is that the knockout training procedure, which leverages natural behavioural data only, should nonetheless learn the visual responses of real LC neurons. We recorded LC calcium dynamics in head-fixed, passively viewing male flies walking on an air-supported ball (Fig. 2a and Methods). We targeted 5 different LC neuron types (LC6, LC11, LC12, LC15 and LC17), chosen because silencing each one led to noticeable changes in courting behaviour (Fig. 1e and Extended Data Fig. 1). We first presented artificial stimuli (Fig. 2b,c and Methods) used to characterize LC responses in previous studies29–31. Despite the fact that the 1-to-1 network never had access to neural data, we found that its predicted responses largely matched their corresponding real LC responses for artificial stimuli (Fig. 2b,c, compare top and bottom, and Extended Data Fig. 7).

Fig. 2. Model LC responses from the 1-to-1 network match real LC neural responses.

a, We recorded LC responses using calcium imaging while a head-fixed male fly viewed dynamic stimulus (stim) sequences. We fed the same stimuli into the 1-to-1 network and tested whether the predicted responses (black trace) for a given model LC unit matched the real response of the corresponding LC neuron (orange trace; summed calcium dynamics within the region occupied by the glomerulus, labelled ‘imaged region’) by computing the noise-corrected R2 between the two (normalized) traces over time (Methods). The 1-to-1 network never had access to real LC responses during training, and only one pre-specified model LC unit was used to predict responses of each LC type. b, Real (top) and model (bottom) responses of LC11 to a moving spot with different speeds. a.u., arbitrary units. c, Real and model responses of LC15 to a spot with linearly increasing size. d, Real (colour traces) and model (black traces) LC responses to stimulus sequences of a fictive female changing in position and size (dashed traces). Shaded regions denote 90% bootstrapped confidence intervals of the mean; noise-corrected R2 values are indicated. e, Average noise-corrected R2 across all stimulus sequences and LC types for different networks (bars). Each dot denotes one LC type and stimulus pair. Dots with low R2 values primarily corresponded to weakly driving stimuli (Extended Data Fig. 8). The knockout network outperformed all other networks (*P < 0.05, paired, one-sided permutation test; n = 27). f, Real (colour traces, repeat-averaged responses) and model LC (black traces, unnormalized) responses across all presented artificial and natural stimuli. LC17 and LC15 are shown here; LC6, LC11 and LC12 responses are shown in Extended Data Fig. 7.
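The caption defers the definition of the noise-corrected R2 to the Methods, so the sketch below shows only one standard way to build such a score, not necessarily the authors’ formula. It takes the squared correlation between the model trace and the repeat-averaged real response (which ignores differences in mean, scale and sign) and divides it by the split-half reliability of the real response, extrapolated to all repeats with the Spearman–Brown formula. The function name, the number of random splits, the input layout, and the synthetic data are assumptions.

```python
import numpy as np

def noise_corrected_r2(model, repeats, n_splits=100, seed=0):
    """Squared model-data correlation divided by a noise ceiling.

    model: (T,) model LC response to one stimulus sequence.
    repeats: (n_repeats, T) real LC responses to repeats of the same sequence
             (at least 2 repeats).
    """
    model = np.asarray(model, dtype=float)
    repeats = np.asarray(repeats, dtype=float)
    target = repeats.mean(axis=0)
    # Squared Pearson correlation: unaffected by the model's mean, scale or sign.
    r2 = np.corrcoef(model, target)[0, 1] ** 2

    rng = np.random.default_rng(seed)
    n = repeats.shape[0]
    half_rs = []
    for _ in range(n_splits):
        order = rng.permutation(n)
        a = repeats[order[: n // 2]].mean(axis=0)
        b = repeats[order[n // 2:]].mean(axis=0)
        half_rs.append(np.corrcoef(a, b)[0, 1])
    r_half = np.mean(half_rs)
    # Spearman-Brown: reliability of the average over all repeats. For a model
    # equal to the noise-free signal, the expected r2 is about this value.
    reliability = 2.0 * r_half / (1.0 + r_half)
    if reliability <= 0:
        return np.nan  # response too unreliable to score
    return r2 / reliability

# Synthetic check: 8 noisy repeats of a known signal.
rng = np.random.default_rng(1)
t = np.linspace(0, 4 * np.pi, 200)
signal = np.sin(t)
repeats = signal + 0.3 * rng.normal(size=(8, 200))
score = noise_corrected_r2(signal, repeats)
```

Because the ceiling is itself estimated from finite data, a score of this kind can slightly exceed 1 for a near-perfect model.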

Extended Data Fig. 7. Predicting real LC responses to artificial stimuli and predicting response magnitude.

a. In addition to our own recordings, we further tested the 1-to-1 network’s neural predictions on a large number of LC neuron types whose responses were recorded in another study31. One caveat was that these responses were recorded from females, not males. We considered responses to artificial stimuli of laterally moving spots with different diameters and different movement directions as well as looming spots with different loom accelerations (top row). Traces denote responses averaged over repeats and flies; shaded regions denote 1 s.e.m. (some regions are small enough to be hidden by the mean traces). Data same as in Fig. 3a of ref. 31. b. Model LC responses from the 1-to-1 network. We fed as input the same stimuli but changed the spot to a fictive female facing forward (to better match these artificial stimuli to the fictive female stimuli on which the 1-to-1 network was trained). For visual comparison, we matched the mean and standard deviation (taken across all stimuli) of each LC type’s model responses to those of the real LC responses; we also flipped the sign of a model LC unit’s responses to ensure a positive correlation with the real LC type (flipping was only performed for LC6 and LC21). To account for adaptation effects, model LC units’ responses decayed to their initial baseline after no change in the original responses occurred (see Methods). Overall, almost all the real LC neurons and model LC units appeared to respond to these artificial stimuli. Some of the best qualitative matches were LC11 (for which the 1-to-1 network correctly identified the object size selectivity of LC11 neurons27), LC15, LC17, and LC21. The model failed to predict LC12 responses; this was true of our LC recordings as well (c and Extended Data Fig. 8). This failure may be due to an unlucky random initialization, as networks trained with knockout over 10 training runs did not strongly agree on LC12’s responses (Extended Data Fig. 6).
Another explanation is that LC12 only weakly contributes to behavior for these simplified stimuli. If this were the case, then KO training would be able to identify neither LC12’s contributions to behavior nor its neural activity. One piece of evidence for this explanation is that solely inactivating LC12 for simple, dynamic stimulus sequences did not lead to any change in the model’s behavioral output (Fig. 4f). For natural stimulus sequences, LC12 does appear to play a role (Fig. 4c), motivating the use of more naturalistic stimuli when recording from LC types (Fig. 2). c. We continued to test the 1-to-1 network’s neural predictions by comparing the model’s response magnitudes for different types of stimuli. We wondered whether the relative magnitudes of model LC responses across all stimulus sequences qualitatively matched those of real LC responses. If so, this would indicate that the model’s selectivity for certain stimuli matches real LC selectivity. This is different from our quantitative comparisons that normalized model LC responses for each stimulus separately (Fig. 2d,e and Extended Data Fig. 8). We note that a priori, we would not expect the 1-to-1 network to predict response magnitude, as downstream weights could re-scale any activity of the model LC units. However, as found when comparing the internal representations of deep neural networks to one another60, the relative magnitudes of internal units may be an important part of encoding informative representations. Same format as in Fig. 2f for the three remaining recorded LC types (LC6, LC11, and LC12). For LC6, the 1-to-1 network correctly predicts a larger response to loom than responses to a moving spot and a spot varying its size linearly (‘linear size’); however, it overestimates the responses to fictive female stimuli. For LC11, the model accurately identifies LC11’s object selectivity (‘moving spot’) and its suppression by loom and linear size.
Similar to LC6, the 1-to-1 network overestimates LC11’s response magnitudes to the fictive female stimuli. We again found that for LC12, the 1-to-1 network has overly large responses to the fictive female stimuli but does predict the response magnitudes for moving spot, loom, and linear size. The model LC12 responses to loom and linear size appear to be inverted (i.e., flipping model LC12 responses to loom and linear size would better match the real LC12 responses); this is likely a consequence of the fact that the sign of an LC’s response is unidentifiable for the 1-to-1 network, as one could simply flip the sign of the model LC unit’s response and the readout weights of downstream units. Other possible reasons are mentioned in b.
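The display-only alignment described in panel b (matching each model LC unit’s mean and standard deviation, taken across all stimuli, to those of the real LC type, and flipping its sign when needed) can be sketched as follows. The sketch assumes the responses to all stimuli have been concatenated into one trace per LC type; the function name and example traces are hypothetical, and the adaptation step mentioned in panel b is not included.

```python
import numpy as np

def align_for_display(model, real):
    """Match a model LC trace to the real trace's mean, std and sign (display only).

    model, real: 1-D arrays of responses concatenated across all stimuli.
    """
    model = np.asarray(model, dtype=float)
    real = np.asarray(real, dtype=float)
    z = (model - model.mean()) / model.std()
    if np.corrcoef(z, real)[0, 1] < 0:  # the sign of a model unit is not identifiable
        z = -z
    return z * real.std() + real.mean()

x = np.linspace(0.0, 1.0, 50)
real_trace = 2.0 * x + 5.0  # hypothetical real response
model_trace = -x            # same shape, opposite sign, different scale
aligned = align_for_display(model_trace, real_trace)
```

Because this rescaling is fitted to the real data, it suits visual comparison only; it does not by itself test whether the model predicts response magnitudes, which is the question addressed in panel c.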

We then tested the predictions of the 1-to-1 network on more naturalistic stimulus sequences (that is, a fictive female varying her position, size and rotation; Supplementary Video 1). We found that the recorded LC neurons responded to many of these naturalistic stimulus sequences (Fig. 2d, colour traces, and Extended Data Fig. 8) and found reliable matches between real LC responses and their corresponding model LC responses (Fig. 2d, black traces versus colour traces, and Extended Data Fig. 8), yielding an average noise-corrected R2 of approximately 0.35. This was a significant improvement over other networks with the same architecture but trained with dropout or without knockout procedures (Fig. 2e); training on behaviour was important for prediction, as these networks outperformed an untrained network (Fig. 2e, untrained). The prediction performance of the 1-to-1 network was consistent with our expectations—exact matches were unlikely owing to differences in behavioural state during courtship (on which the 1-to-1 network was trained) and during imaging11,31.

Extended Data Fig. 8. Real LC responses and predicted responses to stimulus sequences of a moving fictive female.

a. We considered 9 different stimulus sequences in which a female varied her rotation, size, and position (three top traces for each stimulus sequence, see Methods for stimulus descriptions). We found that the 1-to-1 network’s predictions (black traces) largely matched the responses of the real LC neurons (color traces), despite the facts that the 1-to-1 network was never given access to neural data and that we directly read out from a single model LC unit. The average of all reported noise-corrected R2s here is the same as that reported in Fig. 2e. We only considered stimulus sequences for which the real LC responses reliably varied across time. To measure this, we computed a reliability measure between splits of repeats (i.e., a signal-to-noise ratio across repeats) and considered any stimulus sequence whose reliability fell below a threshold as unreliable, removing it from our analyses (translucent traces; see Methods). For some LC types, we detected a reliable response to only one or a few stimuli (e.g., LC15 only responded to ‘vary female position’ and ‘natural sequence’). We noticed that none of the LC neurons responded to stimulus sequences for which the fictive female’s parameters were chosen to optimize the 1-to-1 network’s output of lateral velocity (‘optimize lateral vel.’ and ‘optimize lateral vel. (fast)’, see Methods). This may be due to the fast changes in female position, which were not present in other stimulus sequences. For each stimulus and LC type, we computed a noise-corrected R2 between the real and model predicted responses. This noise-corrected R2 ignores any differences in mean, standard deviation, and sign of the response, which are unidentifiable by the KO network. For visual clarity, we centered, scaled, and flipped the sign of the 1-to-1 network predictions (black traces) to match the mean, standard deviation, and sign of the LC responses (color traces) for each stimulus.
We accounted for the smoothness of calcium traces by applying a causal smoothing filter to the model LC responses as well as fitting the mean offset of the ReLU thresholding (see Methods). Interestingly, all LC types responded reliably to varying female position (‘vary female position’, color traces) despite the facts that the optic glomeruli have weak retinotopy12,61 and that the calcium trace is a sum of the activity of almost all neurons for the same LC type (presumably averaging away any spatial information). This suggests that either our targeted region for calcium imaging (Fig. 2a) was biased to read out from a subset of LC neurons with nearby receptive fields or that these LC neurons have some selectivity for female position (perhaps as direction selectivity). The latter may be more likely, as the male needs to estimate female position more precisely than can be done simply by comparing coarse differences between the two optic lobes. Consistent with our findings, a previous study found that another LC type, LC10a, responds to an object’s position11. That our 1-to-1 network also predicted positional selectivity in the LC types (black traces) supports the notion that some optic glomeruli may track female position despite weak retinotopy. More work is needed to understand how object position is encoded within a single optic glomerulus and how that information is read out61. b. Results in a were for a KO network with one random initialization. To see if this effect holds for different initializations, we trained 10 runs of the KO network, each with a different random initialization and random ordering of training samples. We compared the runs of the KO network to those of the dropout (DO) network, for which a randomly-chosen model LC unit was dropped out during training, as well as noKO networks for which no knockout occurred during training. These are the same networks used to predict moment-to-moment behavior (Extended Data Fig. 3d).
Each bar denotes the mean R2, and each dot denotes one combination of LC type and stimulus (i.e., non-shaded traces in a). Black asterisks denote a significant increase in mean R2 (p < 0.05, paired, one-sided permutation test, n = 27); gray asterisks denote a trend (p < 0.15). We observed that random initialization played more of a role for neural prediction than for behavioral prediction (Extended Data Fig. 3d). This is not surprising, as the networks were never trained to predict neural responses. Still, the KO networks tended to outperform the other types of networks (red bars larger than other bars); combining across all runs, the KO network performed significantly better (‘all runs’, p < 0.002 for comparisons between KO and other networks, paired, one-sided permutation test, n = 270). In addition, the untrained networks performed poorly (gray bars), indicating that training networks on behavior did improve neural predictions. c. For the results in a and b, we considered a one-to-one mapping in which we directly compared a model LC unit’s response with real LC responses; our 1-to-1 network never had access to neural data for training. Here, we asked to what extent the model’s prediction of real LC responses would improve if we relaxed this assumption (i.e., trained a linear mapping from all model LC units to real LC responses). The basic setup was the following. We feed a stimulus sequence into the 1-to-1 network (fully trained with knockout training) and collect the responses of all model LC units, a K × T matrix for K model LC units (here, K = 23) and the T timepoints of the stimulus sequence. We then define a linear mapping β that maps the K model LC responses to the real LC response. We use real LC responses to train β. Specifically, for each of the 4 cross-validation folds, we train β on 75% of the real LC responses (randomly selected) using ridge regression.
Training the linear mapping on responses to other stimuli led to worse performance, as expected, because the stimuli were largely different from each other: training on responses to a fictive female changing in position was not predictive of responses to a fictive female changing in size. We then predict the responses for the remaining held-out timepoints. We concatenate the predictions across the 4 folds and then compute the noise-corrected R2 in the same way as in Fig. 2d,e. Thus, the reported cross-validated noise-corrected R2s indicate the extent to which the 1-to-1 network, given neural data on which to train, can predict held-out real LC responses. Another view is that in this setting, the 1-to-1 network is a task-driven model trained on behavioral data with an internal representation (the model LC bottleneck) that reflects the activity of real LC neurons up to a linear transformation1. d. Prediction performance using the linear mapping for different networks and network runs (see Methods). For each network, we trained a new linear mapping between the model LC responses and the real LC responses. Overall, prediction performance greatly increased: the 1-to-1 network (or KO network) with the linear mapping had a noise-corrected R2 of ~65% (network run 1, averaged over all recorded LCs and fictive female stimulus sequences), an absolute increase of ~30 percentage points over that of the 1-to-1 network with the one-to-one mapping (~35%, Fig. 2e). We also found that, for the linear mapping, the performance of the 1-to-1 network was similar to those of the other networks trained with dropout (DO) or no knockout (noKO) (leftmost plot, red bar close to black and blue bars). This similarity in performance was not unexpected and indicates that all 3 networks (KO, DO, and noKO) have similar internal representations (up to a linear transformation) at the layer of their LC bottlenecks.
However, the 1-to-1 network’s representation is better aligned to the LC types along its coordinate axes (where each model LC unit corresponds to one axis) than those of the other networks (Fig. 2e). Networks trained with behavioral data (KO, DO, and noKO) outperformed an untrained network (gray bar), indicating that training on behavior was helpful in identifying LC response properties. That the untrained network was somewhat predictive of LC responses (bar for ‘untrained’ above 0) stems from an inductive bias in which the network’s convolutional filters, even with randomized weights, can detect large changes of the visual stimulus (e.g., a fictive female moving back and forth). That a linear combination of random features is often predictive in a regression setting is a well-studied phenomenon in machine learning62 and has been observed in predicting visual cortical responses63. This similarity in performance held across all 10 network runs (same runs as in b) for the different training procedures: the KO network consistently predicted real LC responses better than the untrained network, but its advantage over the DO and noKO networks was small (red bars at similar heights to black and blue bars across network runs). This trend held when combining across all runs (‘all runs’). A black asterisk indicates a KO network with a mean prediction performance significantly above that of another network (p < 0.05, paired, one-sided permutation test); a gray asterisk indicates a trend (0.05 < p < 0.15). Each bar denotes the mean R2, and each dot denotes one LC type and stimulus combination (i.e., the non-shaded traces in a); n = 27 for statistical tests for each run and n = 270 for all runs. Network run 1 was the chosen 1-to-1 network for Figs. 1–4. The results here indicate that by simply training a network on courtship behavioral data (i.e., a task-driven approach), we have identified a highly predictive image-computable model of LC neurons.
To our knowledge, this is the first image-computable model of the LC population to be proposed. An important point is that this encoding model (using a linear mapping) does not identify a one-to-one mapping between model LC units and LC types, as the model is unable to relate the encoded LC neurons to behavior; this is precisely the reason we built the 1-to-1 network. Training the 1-to-1 network on both behavior and neural responses is a worthwhile goal, but care is needed to ensure the neural responses are recorded during natural behavior to achieve the best possible match.
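The cross-validated linear mapping in panels c and d can be sketched with closed-form ridge regression. The sketch follows the caption’s description (all K model LC units as regressors, 4 folds, each fold trained on a random 75% of timepoints and evaluated on the held-out 25%, predictions concatenated across folds), but the regularization strength, the unpenalized intercept, the function names, and the synthetic data are assumptions; the caption does not state how the ridge penalty was chosen.

```python
import numpy as np

def ridge_fit(X, y, alpha):
    """Closed-form ridge regression with an unpenalized intercept.

    X: (n, K) model LC responses, y: (n,) real LC response.
    """
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc = X - x_mean
    K = X.shape[1]
    beta = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(K), Xc.T @ (y - y_mean))
    return beta, y_mean - x_mean @ beta

def cv_linear_mapping(R, y, alpha=1.0, n_folds=4, seed=0):
    """Held-out predictions of a real LC response from all K model LC units.

    R: (K, T) model LC responses to one stimulus sequence, y: (T,) real response.
    Timepoints are randomly split into n_folds folds; each fold is predicted by a
    mapping beta trained on the remaining timepoints (75% when n_folds = 4).
    """
    X = np.asarray(R, dtype=float).T  # (T, K): one row per timepoint
    y = np.asarray(y, dtype=float)
    T = X.shape[0]
    folds = np.array_split(np.random.default_rng(seed).permutation(T), n_folds)
    y_hat = np.empty(T)
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(T), test_idx)
        beta, intercept = ridge_fit(X[train_idx], y[train_idx], alpha)
        y_hat[test_idx] = X[test_idx] @ beta + intercept
    return y_hat  # held-out predictions, in time order

# Synthetic example with K = 23 units and a real response that is linear in them.
rng = np.random.default_rng(0)
R = rng.normal(size=(23, 400))
y = rng.normal(size=23) @ R + 0.1 * rng.normal(size=400)
y_hat = cv_linear_mapping(R, y)
```

The held-out predictions would then be scored with the same noise-corrected R2 as the one-to-one comparison. Unlike that comparison, this mapping uses neural data for training, so it measures how much LC information the bottleneck carries rather than whether individual model units align with individual LC types.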

We further tested the predictions of the 1-to-1 network by assessing the extent to which it predicted response magnitudes across both natural and artificial stimuli and found reasonable matches (Fig. 2f and Extended Data Fig. 7). We also gave the 1-to-1 network partial access to neural data by using real LC responses to fit a linear mapping between all model LC units and one real LC neuron type. We found that held-out prediction improved to a noise-corrected R2 of approximately 0.65 (Extended Data Fig. 8), suggesting that better alignments between the model LC units and real LC types exist, at least for neural prediction. The 1-to-1 network was the most consistent in its neural predictions (across ten different random initializations) compared with other training procedures (Extended Data Fig. 6), suggesting that knockout training converges to similar solutions from different initializations. There are additional ways to test the model: by silencing or activating combinations of LC types predicted by the model to act in concert, or by recording from LC types under conditions more similar to natural courtship. Nevertheless, we interpret our tests of the model to suggest that the 1-to-1 network has learned a reasonable mapping between visual stimulus and an individual LC type as well as the contribution of an individual LC type to behaviour. The sections that follow examine the 1-to-1 network that led to the best prediction of both behaviour and neural responses (of the ten different initializations; Extended Data Figs. 3 and 8).

Extended Data Fig. 6. Consistency in LC response predictions across networks with different random initializations.

We asked to what extent knockout training converged to different solutions in predicting LC responses, given different random initializations and different orderings of the training data. See Extended Data Fig. 5 for consistency in behavioral predictions. a. We performed knockout training on 10 different runs, each with a different random initialization and a different random ordering of the training data. We then fed three dynamic stimulus sequences into the KO networks, in which the fictive female varied her size (left column), position (middle column) or rotation (right column) (same sequences as in Figs. 3f and 4d,e). For LC18 (top row), model responses were consistent for female size and rotation but not for position. Each trace is from one KO network run; the bold trace is for network run 1 (chosen as the 1-to-1 network in Figs. 1–4). Traces across all three stimulus sequences were z-scored and then flipped in sign to achieve the largest possible mean correlation ρ over time (sign is not identifiable via knockout training). For LC17 (bottom row), model responses were consistent for female position but not for size or rotation, suggesting that consistency was stimulus dependent. This is in line with the idea that knockout training can identify a one-to-one mapping only for stimulus sequences that lead to noticeable changes in behavior when an LC type is silenced (Extended Data Fig. 2); KO networks disagree on stimulus sequences that lead to little or no change in behavior, because some change is needed to identify an LC type’s role in driving behavior. b,c. We assessed the consistency of the dropout (DO) networks, for which a randomly chosen model LC unit was inactivated (b), and of the noKO networks, for which no inactivation was performed (c). Both DO and noKO networks had poor consistency for LC18 and LC17 across all stimulus sequences (largest ρ = 0.24).
d. We computed the mean correlation (dots) across all 45 pairs of networks and found that the KO networks had significantly larger mean correlations than DO networks (blue asterisks, p < 0.05, paired, one-sided permutation test, n = 45) and noKO networks (black asterisks, p < 0.05, paired, one-sided permutation test, n = 45) for all three stimulus sequences. e. We concatenated the responses of each network across all three stimulus sequences and recomputed the mean correlation (dots). Almost all LC types show a significant increase in mean correlation for KO network runs versus DO network runs (blue asterisks, p < 0.05, paired, one-sided permutation test, n = 45) and noKO network runs (black asterisks, p < 0.05, paired, one-sided permutation test, n = 45). Error bars in d and e denote 1 s.e.m. Taken together, these results indicate that knockout training identified consistent KO networks that reliably predict neural responses. That KO networks were more consistent than DO and noKO networks suggests that knockout training captured meaningful changes in behavior. Because KO networks may disagree to different degrees for different stimulus sequences (a measure of uncertainty), future experiments should take this uncertainty into account when testing the 1-to-1 network’s predictions. In fact, the stimulus sequences for which the KO networks disagree the most may be the most informative to present, as real responses to these sequences can be used to rule out some of the KO networks.
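The consistency measure and statistical test described in this caption can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the analysis code behind Extended Data Fig. 6: the sign of each run’s z-scored trace is chosen by brute force over all sign assignments to maximize the mean of the 45 pairwise correlations among ten runs, and the paired one-sided permutation test randomly flips the signs of paired differences. The function names, the pairing of the k-th KO pair with the k-th DO pair, the number of permutations and the synthetic traces are all hypothetical.

```python
import itertools

import numpy as np


def zscore(trace):
    """Z-score a 1-D response trace (mean 0, s.d. 1)."""
    trace = np.asarray(trace, dtype=float)
    return (trace - trace.mean()) / trace.std()


def pairwise_corrs(traces):
    """Pearson correlations for every unordered pair of runs (rows)."""
    c = np.corrcoef(traces)
    upper = np.triu_indices(len(traces), k=1)
    return c[upper]


def sign_aligned_corrs(traces):
    """Flip each run's sign to maximize the mean pairwise correlation.

    Sign is not identifiable, so all sign assignments are searched by brute
    force, fixing run 0 at +1 (2 ** (n_runs - 1) candidates; 512 for ten runs).
    """
    z = np.array([zscore(t) for t in traces])
    best = None
    for signs in itertools.product([1.0, -1.0], repeat=len(z) - 1):
        s = np.array((1.0,) + signs)[:, None]
        corrs = pairwise_corrs(s * z)
        if best is None or corrs.mean() > best.mean():
            best = corrs
    return best


def paired_permutation_test(a, b, n_perm=10_000, seed=0):
    """One-sided paired permutation test of H1: mean(a - b) > 0.

    Under the null hypothesis each paired difference is equally likely to
    have either sign, so signs are flipped at random to build a null
    distribution of the mean difference.
    """
    rng = np.random.default_rng(seed)
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    observed = d.mean()
    flips = rng.choice([-1.0, 1.0], size=(n_perm, d.size))
    null = (flips * d).mean(axis=1)
    # Add-one correction so the estimated p-value is never exactly zero
    return (np.sum(null >= observed) + 1) / (n_perm + 1)


# Synthetic example: ten "KO" runs share a signal up to sign; ten "DO" runs do not
rng = np.random.default_rng(1)
t = np.linspace(0, 10, 200)
ko_runs = [rng.choice([-1.0, 1.0]) * np.sin(t) + 0.3 * rng.standard_normal(t.size)
           for _ in range(10)]
do_runs = [rng.standard_normal(t.size) for _ in range(10)]
ko_rho = sign_aligned_corrs(ko_runs)  # 45 pairwise correlations
do_rho = sign_aligned_corrs(do_runs)
p_value = paired_permutation_test(ko_rho, do_rho)
print(f"mean rho KO={ko_rho.mean():.2f}, DO={do_rho.mean():.2f}, p={p_value:.4f}")
```

Brute force over sign assignments is only practical because there are ten runs; the permutation test treats each of the 45 network pairs as one paired observation.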

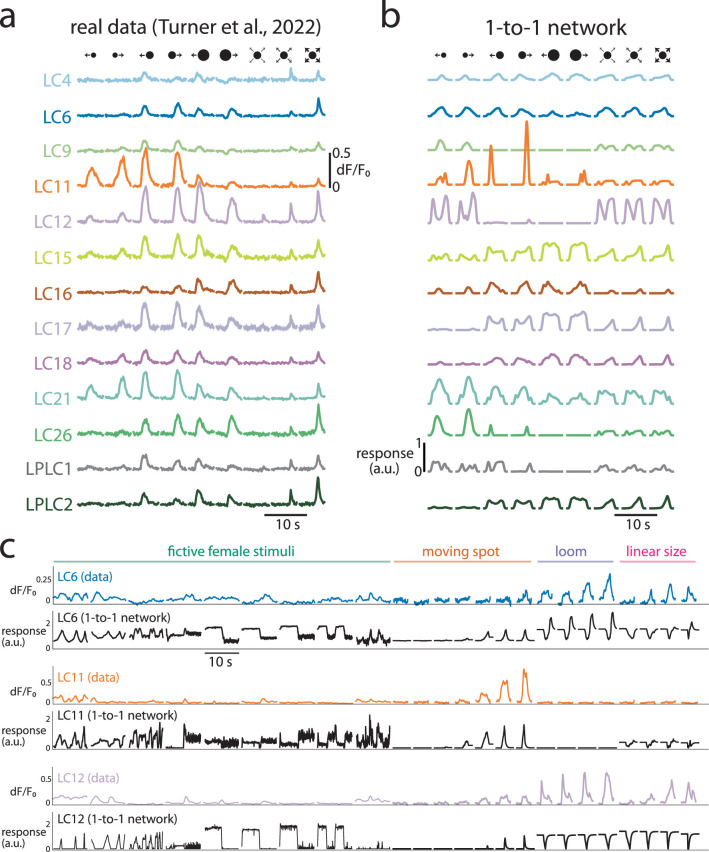

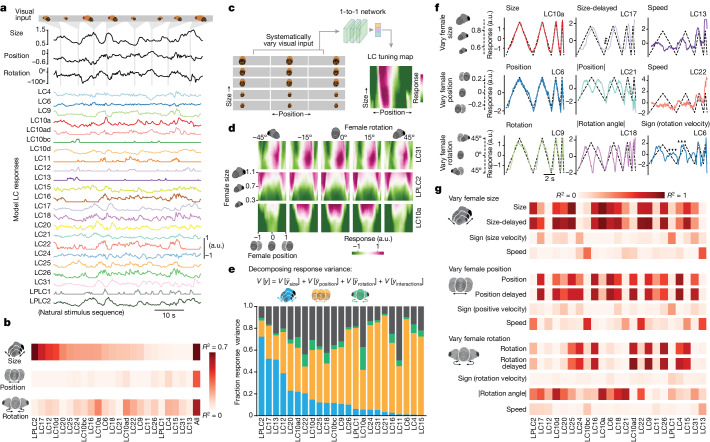

Visual feature encoding of the model LC units

We next tested how the population of 23 model LC units encodes the movements of the female. The majority of model LC units in the 1-to-1 network responded to changes in female position, size and rotation (Fig. 3a). Moreover, almost no model LC unit directly encoded any single visual parameter (Fig. 3b: R² values were low for any one LC type but high for a linear mapping from all LC types).
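The contrast between single-unit and population R² can be illustrated with cross-validated ridge regression. The sketch below assumes a toy linear population in which each of 23 hypothetical units mixes three latent parameters (stand-ins for female size, position and rotation) plus noise; it is not the 1-to-1 network, and the ridge penalty `alpha` and the fold count are arbitrary choices.

```python
import numpy as np


def ridge_fit(X, y, alpha):
    """Closed-form ridge regression with an unpenalized intercept."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc = X - x_mean
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ (y - y_mean))
    return w, y_mean - x_mean @ w


def cv_r2(X, y, alpha=1.0, n_folds=5):
    """K-fold cross-validated R^2, pooling the held-out predictions."""
    X = np.asarray(X, dtype=float).reshape(len(y), -1)  # 1-D input -> one column
    idx = np.arange(len(y))
    y_hat = np.empty(len(y))
    for test_idx in np.array_split(idx, n_folds):
        train_idx = np.setdiff1d(idx, test_idx)
        w, b = ridge_fit(X[train_idx], y[train_idx], alpha)
        y_hat[test_idx] = X[test_idx] @ w + b
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)


# Toy distributed code: every unit mixes all three latent parameters
rng = np.random.default_rng(0)
n_frames, n_units = 2000, 23
params = rng.standard_normal((n_frames, 3))  # stand-ins for size, position, rotation
mixing = rng.standard_normal((3, n_units))
responses = params @ mixing + 0.5 * rng.standard_normal((n_frames, n_units))

size = params[:, 0]
r2_single = [cv_r2(responses[:, k], size) for k in range(n_units)]
r2_all = cv_r2(responses, size)
print(f"best single unit R^2 = {max(r2_single):.2f}; all units R^2 = {r2_all:.2f}")
```

Because each toy unit carries a blend of all three parameters, no single column explains size well, while a linear readout across the population recovers it almost fully.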

Fig. 3. Visual features of female motion are distributed across the population of model LC units.

a, Almost all model LC units responded to a fictive female changing in size, position and rotation. b, Cross-validated R² between each primary visual parameter and model LC responses for natural stimulus sequences. Columns are sorted based on female size (top). The last column of each row (all) is the cross-validated R² between a single visual parameter and a linear combination of all model LC units (identified via ridge regression). c, We characterized the tuning preferences of each model LC unit by systematically varying the three visual parameters and computing a heat map of the model LC responses. Each input sequence was static (that is, all ten frames were repeats of the same image). d, Tuning heat maps for example model LC units (see Extended Data Fig. 9 for all LC types). e, We used variance decomposition (Methods) to decompose the response variance V[y] of each model LC unit into components due solely to female size (blue), position (orange) or rotation (green), as well as to interactions between these visual parameters, V[y_interactions] (black). A large fraction of response variance for a given parameter indicates that a model LC unit changes its response y more strongly to variations in this parameter than to variations in the other parameters. Because the 1-to-1 network is deterministic, all response variance can be attributed to variations of the parameters (that is, there is no repeat-to-repeat variability). f, Example model LC responses to dynamic stimulus sequences in which the fictive female varied only her size, position or rotation angle over time (dashed traces). Different model LC units appear either to directly encode a visual parameter (for example, LC10a encodes size) or to encode features derived from the parameter, such as a delayed copy (LC17, arrows) or the speed at which female size changes (LC13). Responses for all model LC units are in Extended Data Fig. 10.
g, R² between model responses and visual parameter features for the stimulus sequences in f. Columns are in the same order as those in b.

Males pursue females at a range of distances and positions, and we can use the 1-to-1 network to uncover how the LC population encodes these contexts by examining 3D ‘tuning maps’ (Fig. 3c, Extended Data Fig. 9 and Methods). Some model LC units, such as LC31, were driven by the position of the female (in front of the male), independent of female size and rotation (Fig. 3d, top), whereas others, such as LPLC2, were driven by large female sizes, consistent with LPLC2’s known response to looming stimuli17,24,25,31. Model LC10a was driven by female position (in front of the male), consistent with prior work11,23, but we found this to be true only when the male was close to and directly behind the female (Fig. 3d, bottom). Model LC9 and LC22 were similarly driven by females in front of and facing away from the male, but at larger distances (Extended Data Fig. 9).

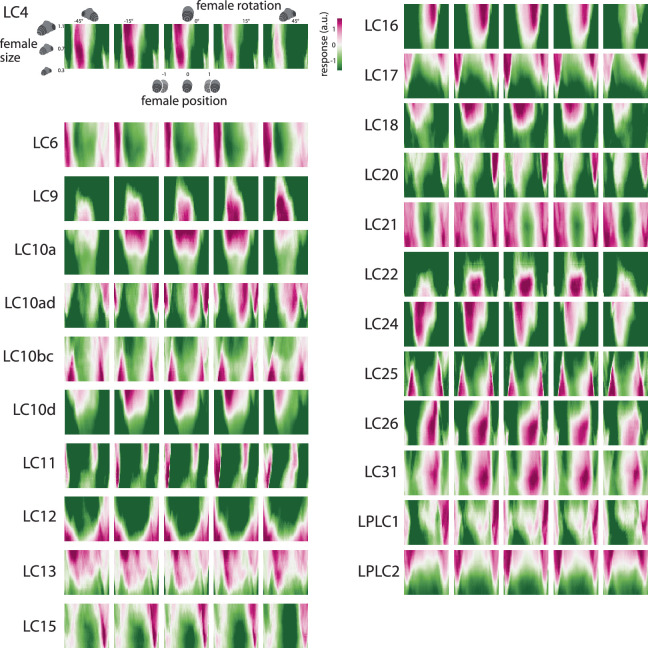

Extended Data Fig. 9. Model LC tuning heat maps.

Each “pixel” in the heat map corresponds to the response of the model LC unit to one input stimulus sequence in which a static fictive female fly has a given size, position, and rotation (i.e., all 10 images of the input sequence were the same; see Methods). We systematically varied female size, position, and rotation across stimulus sequences (125,000 sequences in total). Same format as in Fig. 3c,d but for all model LC units.
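A minimal sketch of how such a tuning map can be assembled: evaluate a unit’s response for every combination of the three parameters and slice the resulting 3D array into heat maps. `toy_lc_response` is a hypothetical closed-form stand-in for a model LC unit, the parameter ranges are invented, and the 50 values per parameter are an assumption chosen only because 50³ = 125,000 matches the stated total number of sequences.

```python
import numpy as np


def toy_lc_response(size, position, rotation):
    """Hypothetical stand-in for one model LC unit (not the 1-to-1 network).

    Responds most to a large female near position 0 with rotation near 0;
    size and position interact multiplicatively.
    """
    return size * np.exp(-position**2 / 0.1) * (1 + np.cos(rotation)) / 2


n = 50  # assumption: 50 values per parameter -> 50**3 = 125,000 static sequences
sizes = np.linspace(0.0, 1.0, n)                           # arbitrary units
positions = np.linspace(-1.0, 1.0, n)                      # normalized azimuth (assumed)
rotations = np.linspace(-np.pi, np.pi, n, endpoint=False)  # radians

# One response per (size, position, rotation) combination
S, P, R = np.meshgrid(sizes, positions, rotations, indexing="ij")
tuning = toy_lc_response(S, P, R)  # shape (n_size, n_position, n_rotation)

# A 2-D heat map (size x position) at the rotation closest to 0
rot_idx = int(np.argmin(np.abs(rotations)))
heat_map = tuning[:, :, rot_idx]
best = np.unravel_index(np.argmax(tuning), tuning.shape)
print(tuning.size, heat_map.shape, sizes[best[0]], positions[best[1]], rotations[best[2]])
```

In the real analysis each grid point would instead be a ten-frame static image sequence passed through the network; the toy function only makes the grid bookkeeping concrete.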

To quantify these interactions, we decomposed the response variance37 of each model LC unit into four components (Fig. 3e). Most model LC units encoded changes in female position (Fig. 3e, orange bars), roughly half encoded female size (Fig. 3e, blue bars), and female rotation was weakly encoded (Fig. 3e, green bars are small). However, almost all model LC units encoded some nonlinear interaction among the three visual parameters (Fig. 3e, black bars; on average around 25% of the response variance for each model LC unit).
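One way to perform such a decomposition on a full factorial grid is an ANOVA-style split: each main effect is the variance, across one parameter, of the response averaged over the other two, and the remainder is pooled as interaction variance. This is an assumption about the general approach; the exact estimator used here is described in Methods (ref. 37) and may differ. The two toy response functions below are hypothetical.

```python
import numpy as np


def decompose_variance(tuning):
    """ANOVA-style split of Var[y] over a full (size, position, rotation) grid.

    Each main effect is the variance, across one parameter, of the response
    averaged over the other two parameters; whatever variance remains is
    pooled into a single interaction term. Grid points are weighted equally.
    """
    total = tuning.var()
    parts = {}
    for axis, name in enumerate(["size", "position", "rotation"]):
        other_axes = tuple(a for a in range(3) if a != axis)
        parts[name] = tuning.mean(axis=other_axes).var()
    parts["interactions"] = total - sum(parts.values())
    return total, parts


n = 20
s, p, r = np.meshgrid(np.linspace(0, 1, n), np.linspace(-1, 1, n),
                      np.linspace(-np.pi, np.pi, n, endpoint=False), indexing="ij")

# Additive response: no interactions by construction
_, additive_parts = decompose_variance(2 * s + p**2 + 0.1 * np.cos(r))

# Multiplicative size-by-position response: part of the variance is interaction
total, mult_parts = decompose_variance(s * np.exp(-p**2 / 0.1))
fractions = {name: v / total for name, v in mult_parts.items()}
print({name: round(f, 3) for name, f in fractions.items()})
```

On a balanced grid the main effects are orthogonal, so the four components sum to the total variance; a purely additive response leaves nothing for the interaction term.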

We next considered non-naturalistic stimulus sequences that varied one visual parameter at a time (Fig. 3f, dashed lines, and Supplementary Video 2). For example, we varied the size of the female over time at different speeds while keeping her position and rotation constant (Fig. 3f, top, dashed lines). For this stimulus, some model LC units directly encoded female size (Fig. 3f, top left, LC10a), some encoded a time-delayed version of size (Fig. 3f, top middle, LC17) and others encoded the speed at which female size changed (Fig. 3f, top right, LC13). Similar relationships were present for other stimulus sequences and model LC units (Fig. 3f, bottom two rows); we note that the 1-to-1 network was predictive of real LC responses for similar types of stimulus sequences (Fig. 2d,e and Extended Data Fig. 8).
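To score whether a response tracks size, a delayed copy of size or the speed of size change, one can compare the response against each candidate feature trace. The sketch below takes R² to be the squared Pearson correlation and builds hypothetical unit responses from known features; the 15-frame delay, the noise level and the stimulus profile are illustrative assumptions, not values from the paper.

```python
import numpy as np


def delayed(x, lag):
    """Shift a trace `lag` frames later in time, padding with its first value."""
    if lag == 0:
        return x.copy()
    return np.concatenate([np.full(lag, x[0]), x[:-lag]])


def r2(a, b):
    """R^2 taken here as the squared Pearson correlation between two traces."""
    return np.corrcoef(a, b)[0, 1] ** 2


# Female size oscillates slowly, then quickly (position and rotation held fixed)
size = 0.5 + 0.4 * np.concatenate([np.sin(np.linspace(0, 2 * np.pi, 400)),
                                   np.sin(np.linspace(0, 4 * np.pi, 200))])
features = {
    "size": size,
    "delayed size": delayed(size, 15),   # 15-frame lag (illustrative)
    "speed": np.abs(np.gradient(size)),  # rate of change of size per frame
}

# Hypothetical unit responses, each built from one feature plus 5% noise
rng = np.random.default_rng(0)
responses = {name: f + 0.05 * f.std() * rng.standard_normal(f.size)
             for name, f in features.items()}

# R^2 of every unit against every candidate feature, then the best match per unit
r2_table = {unit: {feat: r2(resp, f) for feat, f in features.items()}
            for unit, resp in responses.items()}
best_feature = {unit: max(row, key=row.get) for unit, row in r2_table.items()}
print(best_feature)
```

A delayed copy of size still correlates with size itself, which is why comparing R² across several candidate features, rather than thresholding a single one, helps separate the three kinds of encoding.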

Compiling these results, we find that most model LC units encode some aspect of female size, position and rotation (Fig. 3g). Our results were consistent with previous studies, such as LC11 encoding the position of a small moving spot27,28 (Fig. 3g: LC11 has a higher R² for ‘position’ in ‘vary female position’ than in other stimulus sequences) and LPLC2 encoding loom24 (Fig. 3g: LPLC2 has its highest R² for ‘size’ in ‘vary female size’). Recently, LPLC2 has also been found to encode the speed of a moving spot38, consistent with the predictions of our model (Fig. 3g: LPLC2 has a high R² for ‘speed’ in ‘vary female position’). Model units LC4, LC6, LC15, LC16, LC17, LC18, LC21 and LC26 all encode female size (Fig. 3g, top), matching recent findings that these LC neurons respond to looming objects of various sizes29–31; our 1-to-1 network further suggests that these LC types probably encode other visual features as well. Of note, results differed between varying a single female parameter and varying combinations of parameters (compare Fig. 3b with Fig. 3g); this highlights the importance of using more naturalistic stimuli to probe the visual system.

We conclude that the model LC units encode visual stimuli in a distributed way: each visual stimulus feature is encoded by multiple model LC units (Fig. 3g, each row has multiple red squares), and each model LC unit encodes multiple visual stimulus features (Fig. 3g, each column has multiple red squares). Consistent with this, for many model LC units the response-maximizing stimulus sequence also strongly drove responses of other model LC units, even though the optimization sought to suppress these other responses (a ‘one-hot’ maximization; Extended Data Fig. 11).

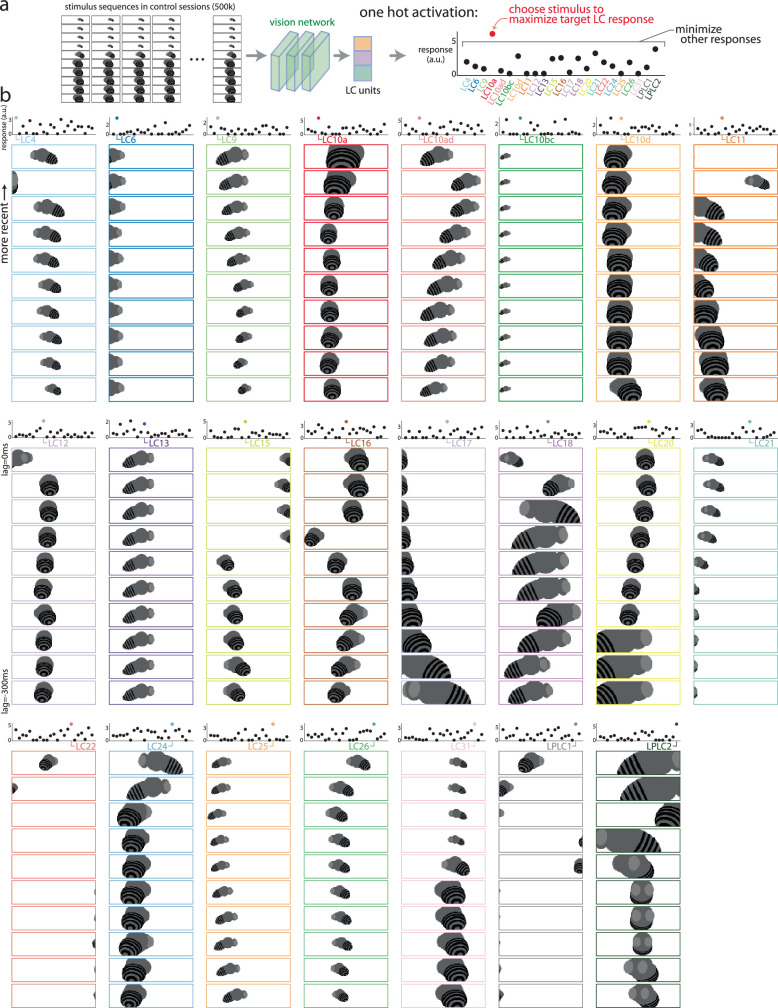

Extended Data Fig. 11. Maximizing visual inputs for each model LC unit.

To better understand the differences in stimulus preference across the model LC units, we optimized the visual input history to maximize each model LC unit’s response while minimizing the responses of all other model LC units (i.e., a ‘one-hot’ maximizing stimulus). a. We considered a large number of candidate stimulus sequences taken from the training dataset of control sessions (500,000 stimulus sequences in total). We fed each stimulus sequence as input into the 1-to-1 network and extracted the responses of the model LC units. We chose the stimulus sequence that maximized a chosen model LC unit’s response while minimizing the responses of all other model LC units. We used the following objective function f_i(x) for the i-th chosen model LC unit, adopted from64: where x is the visual input sequence of 10 frames and r_i is the response of the i-th model LC unit. The objective function f_i(x) is maximized by a large response of the i-th model LC unit together with responses as small as possible for all other units. Thus, we optimized stimulus sequences as “one-hot maximizations”. b. Maximizing stimulus sequences for each model LC unit, with the most recent frame as the top image. One-hot maximization worked for a handful of model LC units (LC9, LC10a, LC11, LC12, LC15; the top panel shows responses of all model LC units to that stimulus sequence); surprisingly, one-hot maximization failed to selectively drive a single model LC unit for many of the other LC types (at least one black dot has a value similar to that of the colored dot), indicating that these model LC units share stimulus preferences with other model LC units. Some stimulus sequences involve smooth changes to the fictive female’s parameters, such as the increase in female size for LC10a. However, other maximizing stimulus sequences show large jumps of the fictive female (e.g., LC4, LC11, LC12 and LC22); even though these stimulus sequences were chosen from natural courtship, they likely represent outliers that strongly drive responses.
This is especially true of model LC11, which prefers a small female moving at a fast speed, consistent with LC11 being a small-object detector27,28. These maximizing stimulus sequences are predictions of the 1-to-1 network that future experiments can test to see whether they truly elicit large responses from LC neurons, much as recent work has identified images that drive visual cortical neurons of macaque monkeys64–67. Other objective functions (such as maximizing the response variation across time with a longer stimulus sequence) and other constraints (such as restricting how much the fictive female may change between consecutive frames, or requiring that she not remain static) are easily implemented with the 1-to-1 network. Our main finding here is that many of the one-hot maximizing stimuli failed to activate only the targeted LC type; this is further evidence that visual features are distributed across the LC population.
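Because the objective function adopted from ref. 64 is not reproduced in this caption, the sketch below substitutes a hypothetical one-hot objective (the target unit’s response minus the largest response among the other units) to show the selection-from-candidates procedure and a simple selectivity check. The objective, the 0.9 threshold for a “similar value” and the synthetic response matrix, in which units 0 and 1 share a stimulus preference, are all assumptions.

```python
import numpy as np


def one_hot_objective(responses, i):
    """Hypothetical one-hot objective (assumption, not the paper's equation):
    the target unit's response minus the largest response of any other unit."""
    others = np.delete(responses, i, axis=1)
    return responses[:, i] - others.max(axis=1)


def select_maximizing_stimulus(responses, i):
    """Index of the candidate sequence (row) that maximizes the objective for unit i."""
    return int(np.argmax(one_hot_objective(responses, i)))


def is_selective(responses, idx, i, ratio=0.9):
    """True if no other unit reaches `ratio` times the target unit's response."""
    row = responses[idx]
    return bool(np.all(np.delete(row, i) < ratio * row[i]))


# Synthetic responses of 4 units to 1,000 candidate sequences (rows).
# Units 0 and 1 share a stimulus preference; units 2 and 3 are independent.
rng = np.random.default_rng(0)
base = rng.random((1000, 3))
responses = np.column_stack([base[:, 0], 0.95 * base[:, 0], base[:, 1], base[:, 2]])

for unit in range(responses.shape[1]):
    idx = select_maximizing_stimulus(responses, unit)
    print(unit, idx, is_selective(responses, idx, unit))
```

When two units share a preference, no candidate can drive one without the other, so the selected stimulus fails the selectivity check; this mirrors the qualitative pattern reported for many model LC units.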

Linking model LC units to behaviour

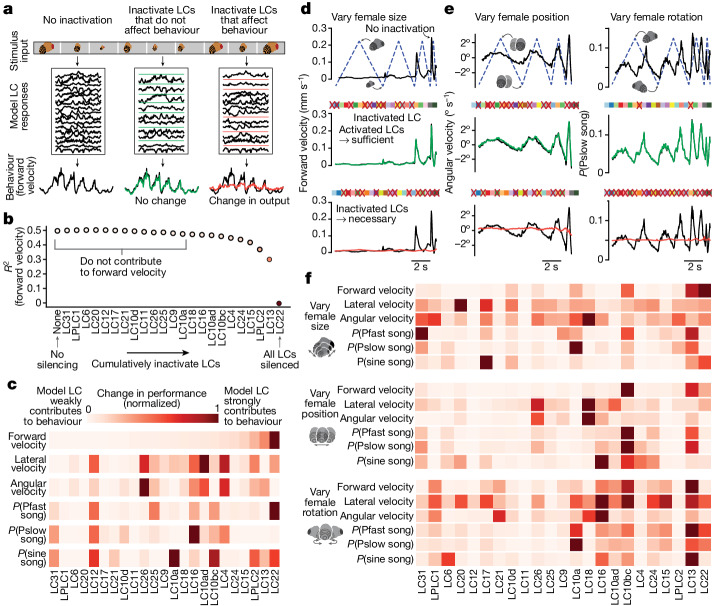

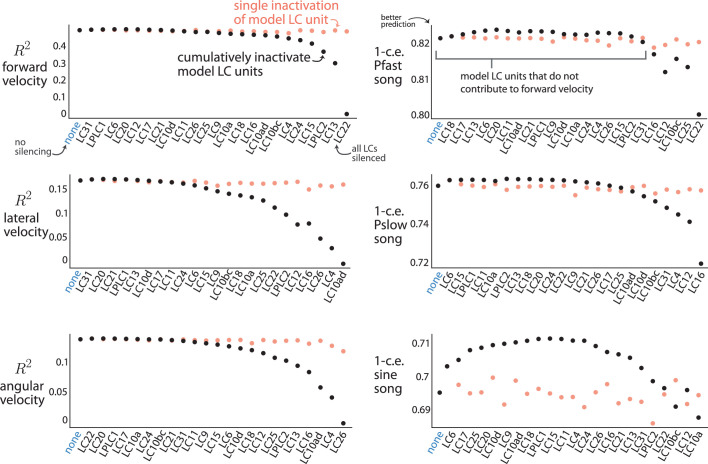

Given that visual features appeared to be distributed across the LC population (Figs. 2 and 3), we tested the hypothesis that combinations of LC types drive the male’s singing and pursuit of the female. We systematically inactivated model LC units, alone or in combination (experiments that are not easily performed in real flies, even with excellent genetic tools), and then examined which model LC units were necessary and sufficient to guide behaviour (Fig. 4a).

Fig. 4. Combinations of model LC units are required for behaviour.

a, We assess whether a group of model LC units is sufficient for behaviour by inactivating all model LC units not in that group (middle, sufficient) and whether it is necessary by inactivating only that group (right, necessary). b, We identify which model LC units contribute to forward velocity by cumulatively inactivating model LC units in a greedy manner (that is, at each step we inactivate the model LC unit whose inactivation maintains the best prediction performance R²). The model LC units with the largest changes in performance (for example, LC13 and LC22) contribute the most. c, Results of cumulative inactivation for all six behavioural outputs; forward velocity (top) is the same as in b. Columns of each row are ordered based on the ordering for forward velocity (top). d, For a dynamic stimulus sequence in which a fictive female varied only her size, we used our approach in a to identify the model LC units sufficient and necessary for the male’s forward velocity (top). Red crosses denote inactivation; each square represents a model LC unit; colours match those in Fig. 3a. The active model LC units in the middle row are the same as those inactivated in the bottom row. e, Other example behavioural outputs and stimulus sequences used to assess necessity and sufficiency. Same format as in d. For predicting Pslow song (right column), all but LC11 and LC25 were required, although not every LC type contributed equally strongly. f, Results of cumulative inactivation for the dynamic stimulus sequences in d,e. Same format, colour legend and ordering of columns as in c.
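The sufficiency and necessity tests in panel a amount to comparing prediction performance under two complementary inactivation masks. In this sketch the downstream network is replaced by a hypothetical linear readout, inactivation is modelled as clamping a unit’s response to zero, and the R² threshold of 0.8 for calling a group sufficient or necessary is an arbitrary assumption; none of these choices is claimed to match the paper’s procedure.

```python
import numpy as np


def r2_score(y, y_hat):
    """Coefficient of determination of predictions y_hat for targets y."""
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)


def predict(lc, weights, active):
    """Hypothetical stand-in for the network downstream of the LC bottleneck:
    inactivated units are clamped to zero before a linear readout."""
    return (lc * active) @ weights


def assess_group(lc, weights, y, group, threshold=0.8):
    """Sufficiency: keep only `group` active. Necessity: inactivate only `group`."""
    in_group = np.zeros(lc.shape[1], dtype=bool)
    in_group[list(group)] = True
    r2_only_group = r2_score(y, predict(lc, weights, in_group))
    r2_without_group = r2_score(y, predict(lc, weights, ~in_group))
    return {
        "sufficient": bool(r2_only_group >= threshold),
        "necessary": bool(r2_without_group < threshold),
        "r2_only_group": r2_only_group,
        "r2_without_group": r2_without_group,
    }


# Toy data: 6 model LC units, of which units 0 and 1 carry most of the behaviour
rng = np.random.default_rng(0)
lc = rng.standard_normal((5000, 6))
weights = np.array([1.0, 1.0, 0.1, 0.0, 0.0, 0.0])
y = lc @ weights  # "behaviour" predicted with every unit active

print(assess_group(lc, weights, y, [0, 1]))  # units 0 and 1 together
print(assess_group(lc, weights, y, [3]))     # a unit with no readout weight
```

In this toy case the pair {0, 1} is both sufficient and necessary, a unit with zero weight is neither, and unit 0 alone is necessary but not sufficient, which is the kind of distinction panels d and e draw for real model LC units.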

We began by testing which model LC unit, when inactivated, maintained the best performance in predicting the behaviour of control flies. In a greedy and cumulative manner, we repeatedly inactivated the model LC unit that maintained the best performance while keeping all previously chosen model LC units inactivated (Fig. 4b); eventually, prediction performance had to decrease because of the bottleneck imposed by the model LC units. The inactivated model LC units that led to the largest drops in performance were the strongest contributors to each behaviour (Fig. 4b, rightmost dots). By contrast, inactivating any single model LC unit on its own resulted in little to no drop in prediction performance (Extended Data Fig. 12).
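The greedy, cumulative inactivation procedure can be sketched as follows, again with a hypothetical linear readout standing in for the network and zero-clamping standing in for inactivation. At each step the loop removes the still-active unit whose removal keeps R² highest; units removed last, where R² drops most, are the strongest contributors. The readout weights are invented for illustration.

```python
import numpy as np


def r2_score(y, y_hat):
    """Coefficient of determination of predictions y_hat for targets y."""
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)


def greedy_inactivation(lc, weights, y):
    """Cumulatively inactivate units, each time choosing the still-active unit
    whose inactivation keeps R^2 highest. Returns the order and R^2 after each step."""
    active = np.ones(lc.shape[1], dtype=bool)
    order, scores = [], []
    while active.any():
        best_unit, best_r2 = None, -np.inf
        for unit in np.flatnonzero(active):
            trial = active.copy()
            trial[unit] = False  # hypothetical inactivation: clamp response to zero
            r2 = r2_score(y, (lc * trial) @ weights)
            if r2 > best_r2:
                best_unit, best_r2 = int(unit), r2
        active[best_unit] = False
        order.append(best_unit)
        scores.append(best_r2)
    return order, scores


# Toy data: readout weights grow from unit 0 (irrelevant) to unit 5 (strongest)
rng = np.random.default_rng(0)
lc = rng.standard_normal((5000, 6))
weights = np.array([0.0, 0.05, 0.1, 0.5, 1.0, 2.0])
y = lc @ weights

order, scores = greedy_inactivation(lc, weights, y)
drops = -np.diff(np.concatenate([[1.0], scores]))  # performance lost at each step
print(order)
print(np.round(scores, 3))
```

With n units the greedy search evaluates on the order of n²/2 masks, far fewer than the 2ⁿ possible combinations, at the cost of not guaranteeing the globally best inactivation order.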