Abstract

Consumer-grade heart rate (HR) sensors are widely used for tracking physical and mental health status. We explore the feasibility of using the Polar H10 electrocardiogram (ECG) sensor to detect and predict cigarette smoking events in naturalistic settings with several machine learning approaches. We collected and analyzed data from 28 participants observed over a two-week period. We found that a bidirectional long short-term memory (BiLSTM) model with ECG-derived and GPS location input features yielded the highest mean accuracy, 69%, for smoking event detection. For predicting smoking events, the highest accuracy, 67%, was achieved with the fine-tuned LSTM approach. We also found a significant correlation between accuracy and the number of smoking events available from each participant. Our findings indicate that both detection and prediction of smoking events are feasible but require an individualized approach to training the models, particularly for prediction.

Introduction

Over 11% of U.S. adults smoke cigarettes, with approximately 1600 youth smoking their first cigarettes each day1. It is well established that smoking causes cancer, heart disease, stroke and many other chronic diseases2. However, quitting smoking is challenging. While 70% of smokers desire to quit, fewer than 10% do so annually3. Only three medications (bupropion, varenicline, and various dosage forms of medicinal nicotine) are approved by the United States Food and Drug Administration (FDA) as aids for smoking cessation. The most recent of these was approved in 2006, which demonstrates how infrequently new treatments are introduced. In the absence of new therapies, it is critical to maximize the efficacy of the medications that are currently available. One area for improvement is better timing of the use of fast-acting dosage forms of medicinal nicotine, such as nicotine gum or nicotine lozenges. Until recently, the ability to deliver just-in-time interventions was limited because no methods were available to detect “warning” patterns antecedent to smoking in real time. The introduction and acceptance of consumer wearable technology capable of sensing a variety of physiological parameters offers the possibility of detecting the physiological response to smoking triggers before smoking occurs. Studies that aim to approximate just-in-time interventions suggest that such interventions have the potential to be effective. For example, two studies reported results of providing tailored messages based on ecological momentary assessment data that were collected multiple times per day. One study found that tailored messages were perceived as helpful, while the other found that they decreased the impact of three common smoking triggers4,5. Another study found that real-time monitoring and notification of smoking episodes resulted in reduced smoking6.

To support just-in-time intervention for smoking cessation, we need to identify the possible patterns and triggers that lead to smoking events. Previous research has shown that smoking is frequently triggered by stressful events7. It has also been demonstrated that biomarker features such as Heart Rate (HR) and Heart Rate Variability (HRV) extracted from ECG signals can be used to measure mental stress8,9. Further, a previous study demonstrated that ECG signals show acute changes during smoking events10, and long-term differences have been observed between smokers and non-smokers11. It is much less well understood whether dynamic changes in these physiological signals could also be used to mark the periods that immediately precede a smoking event.

Smoking events happen in complex daily life settings, and consumer wearable sensors can potentially provide meaningful data in such naturalistic environments12. These sensors are already widely used for tracking physiological measures including cardiac activity. The most common wearable sensors for cardiovascular measurements are wrist-worn smart watches; however, chest strap sensors are also widely used for sports training and have been shown to be more accurate than their wrist-worn counterparts13,14. Consumer chest strap sensors collect ECG signals and commonly also have an embedded 3D accelerometer. For this study we selected the Polar H10 chest strap as the ECG sensor, based on previous research showing its very high accuracy and reliability in comparison to clinical-grade ECG, and combined it with Global Positioning System (GPS) location data collected from a smartphone.

In recent years, there has been increased interest in smoking event detection and prediction. Dumortier et al. used Naïve Bayes (NB), decision tree and discriminant analysis methods for evaluating the urge to smoke15. However, the research was done in a controlled environment and participants were asked to report a large amount of information.

Abo-Tabik et al. used a 1D convolutional neural network; however, this work was done with only 5 participants and the time window for the prediction was 2 hours long16. Fan et al. used a smartphone and a smartwatch with a gyroscope, accelerometer and digital compass; however, their work did not investigate the prediction of smoking events, and participants were required to report their activities for labeling, which is not feasible in naturalistic environments17. Senyurek et al. used a customized system including an inertial sensor and a smart lighter system along with a Support Vector Machine (SVM) classifier for cigarette smoking detection; their study did not involve any physiological data and did not investigate the prediction of smoking events18. Building upon this prior work, our current study aims to provide insights into the feasibility of both detecting and predicting smoking events, to support future development of just-in-time interventions and further understanding of the physiological changes during and before a smoking event.

Methods

Participants

The work reported here is part of an Institutional Review Board approved study conducted at the University of Minnesota. The participants were recruited from across the United States, as all study procedures were designed to be remote due to COVID-19 related restrictions prior to and after the study onset. To be included in the study, participants had to be at least 21 years of age and report smoking at least 10 cigarettes per day (on average over the past year). Those using medication likely to alter the physiological parameters being measured were excluded from the study. The participants were asked to wear multiple wearable sensors as they went about their daily lives for approximately 14 consecutive days. The participants were also asked to use our custom-built PhysiAware™ app on their smartphone to collect data from the sensors and to report each instance of smoking. In addition to registering the time of each smoking event, the participants also used the app to provide the reason for the smoking event.

Of the 36 enrolled participants for whom data were available, we excluded 8 participants for whom smoking and physiological data were available for fewer than five smoking events. For these excluded participants, the smoking and/or sensor data were missing for a variety of reasons, including technical software and hardware failures and participants not reporting all smoking events that occurred in the naturalistic settings. The resulting dataset used in the current analysis consisted of the remaining 28 participants’ data. The mean age of these 28 participants was 41.14 years (SD: 8.50). Twelve of the 28 participants (43%) were male. The average number of smoking events reported per day was 9.22 (SD: 5.55) for the first week in the study. The participants consisted of twenty-two Caucasian participants, five Black/African American participants, and one person who identified as more than one race. Two of the study participants identified as Hispanic or Latino. As shown in Table 1, we used time windows of different sizes in our analysis. The mean number of smoking events available varies with the time window because the availability of a smoking event was determined by two factors: the participant reporting the event via the PhysiAware app, and non-missing ECG data around the reported event timestamp. Longer time windows encounter more missing data and consequently yield fewer usable smoking events.

Table 1.

Summary of counts of smoking events collected. Total Events, Mean and SD rows represent the total, mean and standard deviation of the number of smoking events for all participants, respectively. Prior-to-event timeframe counts show the number of events with usable sensor data collected prior to the registered event (prediction use case). Centered-on-event timeframe counts reflect numbers of events with usable sensor data on both sides of the smoking event (detection use case). For instance, the 20 min in the Centered-on-event column means the time window is 10 minutes before and after the start of the event.

| Number of self-reported smoking events | Prior-to-event, 10 min | Prior-to-event, 15 min | Prior-to-event, 20 min | Centered-on-event, 20 min | Centered-on-event, 40 min |
|---|---|---|---|---|---|
| Total Events | 1635 | 1541 | 1461 | 1225 | 892 |
| Mean | 58.39 | 55.04 | 52.18 | 43.73 | 31.84 |
| SD | 57.80 | 56.63 | 53.95 | 50.34 | 37.19 |

Preprocessing and Feature Generation

The raw ECG data collected from Polar H10 through the PhysiAware app installed on participants’ smartphones was subjected to pre-processing. The sampling frequency of the raw Polar H10 ECG waveform is 130Hz; however, the collected data were not completely continuous due to frequent small gaps in the data streamed from the sensors to the smartphone. The majority of these gaps (approximately 52% of all gaps on average for each participant) were below 60 seconds in length. These gaps were likely caused by interruptions in Bluetooth Low Energy (BLE) connectivity between the Polar H10 device and the smartphone19. During pre-processing, we filled these short gaps by dividing the length of the gap in half and then copying that many samples from the preceding part of the signal to fill the first half of the gap and the samples from the signal following the gap to fill the second half of the gap. This approach to handling missing data samples was chosen based on the assumption that the heart rate does not typically change dramatically over a short period of time. Gaps larger than 60 seconds were excluded from analysis.
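The gap-filling rule described above can be sketched as follows. This is a minimal illustration, not the study's code: the function names are hypothetical, and it assumes that the copied samples are the ones immediately adjacent to the gap, kept in their original order, and that a gap of exactly 60 seconds is treated as too long (the text does not specify the boundary).

```python
FS_HZ = 130       # Polar H10 ECG sampling frequency stated in the text
MAX_GAP_S = 60    # gaps below this length are filled; longer gaps are not


def is_fillable(gap_len, fs_hz=FS_HZ, max_gap_s=MAX_GAP_S):
    """Return True when a gap of gap_len samples is shorter than max_gap_s."""
    return gap_len < fs_hz * max_gap_s


def fill_short_gap(before, after, gap_len):
    """Build gap_len replacement samples from the signal around the gap.

    The first half of the gap is copied from the samples just before it,
    the second half from the samples just after it (assumed adjacency).
    """
    first = gap_len // 2
    second = gap_len - first
    if first > len(before) or second > len(after):
        raise ValueError("not enough neighboring samples to fill the gap")
    # Guard against before[-0:], which would return the whole list.
    head = list(before[len(before) - first:]) if first else []
    tail = list(after[:second])
    return head + tail
```

For example, `fill_short_gap([1, 2, 3, 4], [5, 6, 7, 8], 4)` returns the two samples preceding the gap followed by the two samples after it.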

After filling in the short gaps, we loaded the ECG data into the Kubios software package20, which we used to preprocess the ECG data with noise detection and beat correction algorithms. Kubios also generates HRV features in the time, frequency, and nonlinear domains, along with other relevant features. The detrending method used in Kubios is based on smoothness priors regularization and removes the nonstationary slow trend of the ECG signal. Bandpass filtering and detrending methods were considered for generating HRV features; however, we opted to use Kubios instead as it is the gold standard for ECG processing20. Kubios produces a total of 24 HRV features for every 60-second window, with a 10-second overlap between consecutive windows, as illustrated in Figure 1. We subsequently used these features as input to machine learning algorithms. The larger gaps of more than 60 seconds in length were filled with zeros prior to loading into Kubios to maintain the time alignment between the raw ECG data and the HRV features. Kubios generates ‘NaN’ values for the HRV features in the zero-filled sections.

Figure 1.

Illustration of training and testing data acquisition for (a) smoking events detection and (b) smoking events prediction. Note: the adjacent location of smoking and non-smoking events here is only for illustration purposes – non-smoking events were selected at random outside of the smoking events’ window boundaries. Each smoking or non-smoking time window is composed of sliding time frames with overlaps.

PhysiAware collects the accelerometer data from the Polar H10 sensor at a 200 Hz sampling frequency. We filled any gaps in these data shorter than 60 seconds using the same procedure as was used for the ECG data. In addition to the per-axis signals, we calculated the overall magnitude of the acceleration (the G-value) from the X, Y, and Z axes of the Cartesian coordinate system. The magnitude is calculated as $G = \sqrt{X^2 + Y^2 + Z^2}$. We then generated a set of time-domain and frequency-domain features for the G-value and the X, Y, and Z axis acceleration data for every 60-second frame with a 10-second overlap, time-aligned with the HRV features. There are 84 accelerometer features in total. The PhysiAware app also collects location information from the participant’s smartphone as GPS coordinates. We use the participant’s first reported coordinates as the initial location. We then calculate the distance, in both longitude and latitude, between the location collected at each time point and the initial location. The total number of location features is thus 2: the longitude distance and the latitude distance. The location features are of interest due to previous research on using location for smoking cessation21. In summary, the PhysiAware app captures the ECG and accelerometer data from the Polar H10 chest strap and location data from the smartphone. After preprocessing the raw data, a total of 110 features (24 HRV, 84 accelerometer, and 2 location) were used as input to the machine learning algorithms. We normalized all features to zero mean and unit variance.
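A minimal sketch of three of the per-sample computations described above is given here. The function names are hypothetical. It assumes that the overall acceleration magnitude is the Euclidean norm of the three axes, that the longitude and latitude "distances" are signed differences from the first fix, and that normalization uses the population standard deviation; none of these details is confirmed by the text.

```python
import math
from statistics import mean, pstdev


def acceleration_magnitude(x, y, z):
    """Overall magnitude of a 3-axis acceleration sample (assumed Euclidean norm)."""
    return math.sqrt(x * x + y * y + z * z)


def location_offsets(coords):
    """Offsets of each (longitude, latitude) fix from the first reported fix."""
    lon0, lat0 = coords[0]
    return [(lon - lon0, lat - lat0) for lon, lat in coords]


def standardize(values):
    """Scale one feature column to zero mean and unit variance."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant feature carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]
```

In practice the standardization statistics would be estimated on the training data only and then reused for the test data, so that no information leaks across the split.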

Definition of Prediction vs Detection of Smoking Events

The participants in this study were asked to report smoking events on the PhysiAware app every time they smoked a cigarette. They reported both the time and the perceived reason for smoking. We use these reported smoking events as labels for training and testing predictive models. We consider a time window around the reported smoking event time to label a segment of data as a smoking event. We experimented with different time window lengths: 10-, 15-, and 20-minute windows for prediction of smoking events, and 20- and 40-minute windows for detection of smoking events. In laboratory settings, one smoking event lasts about 5 minutes22 on average. However, in naturalistic settings, the duration of smoking events varies widely. One study has shown that a smoking event could be as long as 14 minutes in naturalistic settings23. Thus, we use a range of time windows to account for such variations. For the detection use case, the time window is centered on the smoking label, and for prediction the time window lies entirely before the labeled smoking event timestamp, as shown in Figure 1. The difference between prediction and detection is therefore defined by the placement of the time window relative to the reported smoking event. The non-smoking events are selected randomly from the remaining data and use time windows of the same length as the smoking events. The number of non-smoking events was matched to the number of smoking events in order to create a balanced dataset. By labeling the data as smoking and non-smoking, we created a binary classification problem for predicting and detecting smoking events in naturalistic settings. Because the dataset is balanced, we are able to use accuracy as the performance metric.
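The window placement and balanced sampling described above can be sketched as follows. This is an illustration under stated assumptions, not the study's implementation: the function names are hypothetical, timestamps are in seconds, non-smoking windows are drawn uniformly by rejection sampling, and non-smoking windows are only required to avoid smoking windows (whether they may overlap each other is not specified in the text).

```python
import random


def event_window(event_ts, window_s, mode):
    """Return (start, end) of the labeled window for one reported event."""
    if mode == "prediction":
        # Window lies entirely before the reported timestamp.
        return (event_ts - window_s, event_ts)
    if mode == "detection":
        # Window is centered on the reported timestamp.
        half = window_s / 2
        return (event_ts - half, event_ts + half)
    raise ValueError("mode must be 'prediction' or 'detection'")


def overlaps(a, b):
    """True when half-open intervals a and b share any time."""
    return a[0] < b[1] and b[0] < a[1]


def sample_non_smoking(smoking_windows, record_start, record_end,
                       window_s, rng, max_tries=10000):
    """Draw one random non-smoking window per smoking window."""
    picked = []
    tries = 0
    while len(picked) < len(smoking_windows) and tries < max_tries:
        tries += 1
        start = rng.uniform(record_start, record_end - window_s)
        candidate = (start, start + window_s)
        if not any(overlaps(candidate, w) for w in smoking_windows):
            picked.append(candidate)
    return picked
```

With a 20-minute window (1200 s), `event_window(1000, 1200, "detection")` spans 10 minutes on each side of the event, while `event_window(1000, 600, "prediction")` covers only the 10 minutes before it.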

Impact of Leave-One-Out vs Individual Participant’s Data on Model Performance

We examined the performance of machine learning models under two scenarios: one where the model is trained and tested on a single individual’s data alone (within-participant cross-validation, WPCV) and one where the model is trained and tested with the Leave-One(participant)-Out Cross-Validation (LOOCV) method. For the WPCV method, we split each participant’s data into train, test, and validation sets stratified by smoking event index and used 10 folds for cross-validation. Splitting by event prevented data from the same smoking event from appearing in both the train and test sets. To avoid overfitting, in addition to using cross-validation we used early stopping during training and selected a simple network. For the LOOCV method, we first used data from 27 of the 28 participants in the study to train a model. We then fine-tuned the model on the training set of the remaining individual’s data, before finally testing on that individual’s test set. This represents a precision medicine configuration, in which a machine learning model is trained a priori and then tailored to each patient at model deployment time.
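The two splitting schemes can be illustrated with the index bookkeeping below. This is a sketch with hypothetical function names: the round-robin assignment of events to folds is an assumption (the text states only that splits were made by event), and the separate validation split and the fine-tuning step are omitted.

```python
def event_grouped_folds(event_ids, n_folds=10):
    """Assign sample indices to folds so each event stays in a single fold.

    event_ids[i] is the event index that sample i belongs to.
    """
    unique_events = sorted(set(event_ids))
    fold_of_event = {ev: k % n_folds for k, ev in enumerate(unique_events)}
    folds = [[] for _ in range(n_folds)]
    for idx, ev in enumerate(event_ids):
        folds[fold_of_event[ev]].append(idx)
    return folds


def train_test_indices(folds, test_fold):
    """Use one fold for testing and all remaining folds for training."""
    test = list(folds[test_fold])
    train = [i for k, fold in enumerate(folds) if k != test_fold for i in fold]
    return train, test


def leave_one_participant_out(participant_ids):
    """Yield (held_out, train_indices, held_out_indices) per participant."""
    for held_out in sorted(set(participant_ids)):
        train = [i for i, p in enumerate(participant_ids) if p != held_out]
        rest = [i for i, p in enumerate(participant_ids) if p == held_out]
        yield held_out, train, rest
```

In the leave-one-participant-out case, the held-out indices would be split further (for example with `event_grouped_folds`) into a portion used for fine-tuning and a portion used for testing.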

Detection and Prediction using Machine Learning

The machine learning methods we used for detecting and predicting smoking events include the sequence neural network models LSTM and BiLSTM. We also used traditional classification models including SVM, K-Nearest Neighbors (KNN), Random Forest (RF), NB and Logistic Regression (LR). The input shapes for the LSTM and BiLSTM differ from the input of the non-neural network models. With a single LSTM layer, we use a total of 110 features, including HRV features, accelerometer features, and location features, as input. For 10-, 15-, 20- and 40-minute windows this results in input tensors of shape 11×110, 17×110, 23×110 and 47×110, respectively. The first dimension of the input (11, 17, 23, and 47) represents the number of sliding frames in the 10-, 15-, 20-, and 40-minute time windows, respectively. The second dimension (110) represents the total number of features per frame. For BiLSTM models, we used two BiLSTM layers and a dense layer. The input for BiLSTM models is the same as for LSTM models. For non-neural network models, the features are averaged into a 1D array. For instance, with a 20-minute time window, each smoking event’s data contains 23 rows of features, each extracted from a 1-minute frame with a 10-second overlap between consecutive frames. We then average the 23 rows into a single row, as the non-neural network models are unable to handle the temporal information.
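The frame counts quoted above (11, 17, 23, and 47) are consistent with 60-second frames advanced in 50-second steps (a 10-second overlap), counting only complete frames. The sketch below reproduces those counts and the row averaging used for the non-neural models; the function names are hypothetical, and the step size is an inference from the stated shapes rather than a documented setting.

```python
def n_frames(window_min, frame_s=60, overlap_s=10):
    """Number of complete sliding frames that fit in a window."""
    step_s = frame_s - overlap_s
    return (window_min * 60 - frame_s) // step_s + 1


def average_frames(frames):
    """Collapse a (frames x features) matrix into one feature row."""
    n = len(frames)
    return [sum(column) / n for column in zip(*frames)]


# Sequence-model input shapes for each window length, with 110 features.
shapes = {minutes: (n_frames(minutes), 110) for minutes in (10, 15, 20, 40)}
```

Averaging discards the order of the frames, which is why only the LSTM and BiLSTM models can exploit how the features evolve within a window.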

Feature Permutation Importance

The feature permutation importance (FPI) represents the importance of each feature to the accuracy by measuring the mean absolute error (MAE) after shuffling that individual feature24. We calculated the FPI for both the WPCV method and the LOOCV method. To mitigate the limitations of the small data size, we calculated FPI within each cross-validation iteration and then used the average over all iterations as the final FPI. After training the model, we shuffled each individual feature in turn and calculated the difference between the resulting MAE and the baseline error, which is the error calculated without any shuffling. This difference represents how much the feature contributes to model performance: the larger the difference between MAE and baseline error, the more important the shuffled feature is. For the FPI analysis with the WPCV method, we calculated the difference between MAE and baseline error for each participant after training on their own data. For the FPI analysis with the LOOCV method, we calculated the difference between MAE and baseline error for the individual participant after training on all the other participants’ data.
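The shuffling procedure described above can be sketched in a model-agnostic way. This is a simplified illustration with hypothetical names: `predict` stands in for any trained model, each sample is a flat feature list rather than a frames-by-features sequence, and averaging over `n_repeats` shuffles is an added assumption (the text averages over cross-validation iterations).

```python
import random


def mean_absolute_error(y_true, y_pred):
    """Mean absolute difference between labels and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, rng, n_repeats=5):
    """Increase in MAE over the unshuffled baseline, per feature."""
    baseline = mean_absolute_error(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        deltas = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the dataset with only feature j permuted across samples.
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            error = mean_absolute_error(y, [predict(row) for row in shuffled])
            deltas.append(error - baseline)
        importances.append(sum(deltas) / n_repeats)
    return importances
```

A feature that the model ignores yields an importance of zero, because shuffling it cannot change any prediction.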

By obtaining the FPI, we can identify the features that contributed the most to the accuracy of the model. This informs us about the most important factors for predicting or detecting smoking. By calculating FPI with the WPCV method, we can obtain the most important features for each individual participant. By calculating FPI with the LOOCV method, we can obtain the features that generalize best across all participants for smoking event detection and prediction. However, the permutation importance is only meaningful when the model has high accuracy23. For this reason, we analyze feature importance only for participants for whom the model achieved above 60% accuracy and who have 10 or more smoking events. After filtering by these thresholds, 21 participants are included.

Results

Detection of Smoking Events

We first summarize the results for the detection of smoking events. We tested a variety of machine learning models: the sequence neural network models LSTM and BiLSTM, and other traditional models. Detection uses data from both before and after each reported smoking event. The task of the machine learning model is to classify each window as either smoking or non-smoking. We opted to use accuracy as the metric for examining model performance since our dataset is balanced. Because the number of smoking events differs greatly among study participants, we also calculated the average accuracy weighted by the number of smoking events. We experimented with 20- and 40-minute windows, which contain data from 10 and 20 minutes, respectively, on each side of the smoking event. Table 2 and Table 3 include the accuracy results of the machine learning methods we implemented for smoking detection with these windows, using the LOOCV and WPCV methods, respectively. Combining the results from both Table 2 and Table 3, models with 20-minute time windows generate 3% higher accuracy than models with 40-minute time windows for detection.
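The event-weighted average described above can be written as a short function. This is a minimal sketch with a hypothetical function name; it covers only the weighted mean, since the text does not state how the accompanying standard deviation of the weighted mean was computed.

```python
def weighted_mean_accuracy(accuracies, event_counts):
    """Average per-participant accuracy, weighted by number of smoking events."""
    if len(accuracies) != len(event_counts):
        raise ValueError("need one event count per accuracy")
    total = sum(event_counts)
    if total == 0:
        raise ValueError("at least one smoking event is required")
    return sum(a * n for a, n in zip(accuracies, event_counts)) / total
```

For example, a participant with 90 events and 0.9 accuracy and a participant with 10 events and 0.5 accuracy give an unweighted mean of 0.70 but a weighted mean of 0.86, which illustrates how participants with more events dominate the weighted figure.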

Table 2.

Results of accuracy of LOOCV evaluation for detection and prediction models. * We recognize that SVM is technically a neural model but group it here with other non-neural models for convenience.

| Model | Detection 40 min, Mean (SD) | Detection 40 min, Wt. Mean (SD) | Detection 20 min, Mean (SD) | Detection 20 min, Wt. Mean (SD) | Prediction 10 min, Mean (SD) | Prediction 10 min, Wt. Mean (SD) | Prediction 15 min, Mean (SD) | Prediction 15 min, Wt. Mean (SD) | Prediction 20 min, Mean (SD) | Prediction 20 min, Wt. Mean (SD) |
|---|---|---|---|---|---|---|---|---|---|---|
| Sequence neural models | | | | | | | | | | |
| BiLSTM, without finetuning | 0.62 (0.10) | 0.65 (0.09) | 0.62 (0.09) | 0.66 (0.07) | 0.60 (0.08) | 0.61 (0.07) | 0.61 (0.07) | 0.62 (0.06) | 0.62 (0.11) | 0.63 (0.08) |
| BiLSTM, with finetuning | 0.66 (0.14) | 0.73 (0.12) | 0.67 (0.12) | 0.75 (0.10) | 0.65 (0.08) | 0.68 (0.07) | 0.66 (0.08) | 0.69 (0.07) | 0.66 (0.11) | 0.69 (0.08) |
| LSTM, without finetuning | 0.60 (0.11) | 0.63 (0.11) | 0.61 (0.09) | 0.64 (0.08) | 0.60 (0.08) | 0.61 (0.07) | 0.61 (0.07) | 0.61 (0.06) | 0.61 (0.10) | 0.63 (0.07) |
| LSTM, with finetuning | 0.65 (0.14) | 0.72 (0.13) | 0.66 (0.11) | 0.73 (0.10) | 0.66 (0.08) | 0.69 (0.07) | 0.66 (0.08) | 0.69 (0.07) | 0.67 (0.10) | 0.70 (0.08) |
| Non-sequence and non-neural models | | | | | | | | | | |
| RF | 0.62 (0.11) | 0.65 (0.09) | 0.64 (0.09) | 0.66 (0.07) | 0.58 (0.07) | 0.60 (0.08) | 0.62 (0.08) | 0.60 (0.08) | 0.59 (0.11) | 0.59 (0.09) |
| KNN | 0.58 (0.10) | 0.58 (0.08) | 0.56 (0.09) | 0.60 (0.08) | 0.57 (0.07) | 0.58 (0.07) | 0.58 (0.06) | 0.58 (0.06) | 0.59 (0.09) | 0.60 (0.07) |
| SVM* | 0.61 (0.11) | 0.65 (0.10) | 0.62 (0.11) | 0.66 (0.10) | 0.61 (0.09) | 0.62 (0.08) | 0.60 (0.08) | 0.60 (0.09) | 0.61 (0.09) | 0.62 (0.09) |
| NB | 0.62 (0.11) | 0.64 (0.10) | 0.61 (0.12) | 0.66 (0.09) | 0.61 (0.07) | 0.61 (0.07) | 0.61 (0.08) | 0.60 (0.07) | 0.60 (0.10) | 0.61 (0.08) |
| LR | 0.60 (0.12) | 0.62 (0.10) | 0.61 (0.11) | 0.64 (0.10) | 0.60 (0.08) | 0.59 (0.07) | 0.61 (0.09) | 0.60 (0.08) | 0.59 (0.09) | 0.58 (0.08) |

Table 3.

Results of accuracy of WPCV evaluation for detection and prediction models.

| Model | Detection 40 min, Mean (SD) | Detection 40 min, Wt. Mean (SD) | Detection 20 min, Mean (SD) | Detection 20 min, Wt. Mean (SD) | Prediction 10 min, Mean (SD) | Prediction 10 min, Wt. Mean (SD) | Prediction 15 min, Mean (SD) | Prediction 15 min, Wt. Mean (SD) | Prediction 20 min, Mean (SD) | Prediction 20 min, Wt. Mean (SD) |
|---|---|---|---|---|---|---|---|---|---|---|
| Sequence neural models | | | | | | | | | | |
| BiLSTM | 0.62 (0.19) | 0.73 (0.15) | 0.69 (0.13) | 0.78 (0.11) | 0.66 (0.09) | 0.70 (0.08) | 0.66 (0.09) | 0.70 (0.08) | 0.66 (0.11) | 0.70 (0.09) |
| LSTM | 0.63 (0.26) | 0.72 (0.13) | 0.63 (0.23) | 0.75 (0.13) | 0.65 (0.17) | 0.70 (0.09) | 0.66 (0.18) | 0.70 (0.07) | 0.67 (0.19) | 0.71 (0.09) |
| Non-sequence and non-neural models | | | | | | | | | | |
| RF | 0.58 (0.16) | 0.69 (0.12) | 0.65 (0.18) | 0.76 (0.12) | 0.63 (0.09) | 0.67 (0.08) | 0.65 (0.08) | 0.68 (0.07) | 0.61 (0.16) | 0.68 (0.10) |
| KNN | 0.56 (0.19) | 0.70 (0.15) | 0.63 (0.16) | 0.73 (0.12) | 0.62 (0.11) | 0.66 (0.08) | 0.62 (0.09) | 0.66 (0.07) | 0.59 (0.15) | 0.64 (0.11) |
| SVM* | 0.57 (0.20) | 0.71 (0.16) | 0.62 (0.19) | 0.75 (0.13) | 0.61 (0.12) | 0.67 (0.09) | 0.61 (0.11) | 0.67 (0.09) | 0.60 (0.15) | 0.67 (0.10) |
| NB | 0.60 (0.17) | 0.69 (0.12) | 0.64 (0.16) | 0.72 (0.10) | 0.61 (0.08) | 0.65 (0.07) | 0.61 (0.09) | 0.66 (0.08) | 0.61 (0.12) | 0.66 (0.09) |
| LR | 0.61 (0.17) | 0.72 (0.13) | 0.65 (0.15) | 0.75 (0.12) | 0.62 (0.10) | 0.65 (0.08) | 0.63 (0.09) | 0.67 (0.08) | 0.60 (0.12) | 0.66 (0.09) |

As shown in Table 3, the best performance for detecting smoking events is 69% average accuracy, achieved by the BiLSTM approach with a 20-minute time window using WPCV. Among the non-sequence and non-neural network methods, RF and LR with a 20-minute time window are the best-performing algorithms, achieving 65% accuracy on average when applied to individual participants’ data using WPCV. With the LOOCV method, we used fine-tuning to increase model performance. The BiLSTM model with a 20-minute time window and the LOOCV method yields an average of 62% accuracy without fine-tuning. After fine-tuning the model on the development subset of the held-out participant’s data, the average accuracy improves to 67%. All sequence neural network models show increased accuracy after fine-tuning, with a 5% increase on average.

Due to the large disparity in the number of smoking events among our participants, we also calculated the Pearson correlation between accuracy and the number of smoking events for each algorithm. The correlations for RF, KNN, SVM, NB, LR, BiLSTM, and LSTM are 0.51, 0.55, 0.60, 0.42, 0.57, 0.56, and 0.71, respectively, all with p-values smaller than 0.05. Thus, the number of smoking events and the accuracy of the models are positively correlated. Moreover, the weighted average accuracy is considerably higher than the unweighted average accuracy, increasing from 64% to 75% on average for smoking detection. In addition, we segmented the participants into two groups by the median number of smoking events. For participants with more than the median number of smoking events, the average accuracy is higher with WPCV (73%) than with the LOOCV method (66%). For participants with fewer than the median number of smoking events, the average accuracy is higher with the LOOCV method (59%) than with WPCV (56%).
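The two analyses in this paragraph, the Pearson correlation and the median split, can be sketched as below. The function names are hypothetical, the sketch does not compute p-values, and placing participants whose event count equals the median into the lower group is an assumption, since the text does not say how ties were handled.

```python
import math
from statistics import median


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def split_by_median(event_counts):
    """Return (low, high) participant indices relative to the median count."""
    m = median(event_counts)
    low = [i for i, c in enumerate(event_counts) if c <= m]
    high = [i for i, c in enumerate(event_counts) if c > m]
    return low, high
```

Here `xs` would hold each participant's number of smoking events and `ys` the corresponding accuracy for one algorithm; the two index lists from `split_by_median` can then be used to average WPCV and LOOCV accuracies separately within each group.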

Prediction of Smoking Events

Prediction of smoking events is a harder, yet a more useful problem than detection. If we can identify tobacco usage before it occurs, this will enable potential just-in-time intervention for smoking cessation. We demonstrate the results for the prediction of smoking events in this section. For the prediction of smoking events, we selected data from only before a smoking event. We used 10-, 15- and 20-minute time windows before each smoking event with sequence neural network and non-neural network models.

Table 3 shows the accuracies of the machine learning models in predicting smoking events using WPCV with 10-, 15- and 20-minute time windows. Combining the results from Table 2 and Table 3, the accuracy averaged across all models rounds to 62% for each of the 10-, 15- and 20-minute time windows for smoking prediction. Moreover, the average accuracy across all models is 62% with the LOOCV method and 63% with the WPCV method. The best performance for smoking event prediction is 67%, achieved by LSTM with a 20-minute time window before a smoking event. Sequence neural models achieved higher accuracy than the other algorithms for prediction, with average accuracies of 66% and 61%, respectively.

We again calculated the correlation between the number of smoking events and the average accuracies from RF, KNN, SVM, NB, LR, BiLSTM, and LSTM. The correlations are 0.41, 0.44, 0.49, 0.38, 0.34, 0.47, and 0.47, respectively, all with p-values smaller than 0.05. These correlations are smaller than those for the detection of smoking events using a 20-minute window. We again segmented the participants into two groups by the median number of smoking events. For participants with more than the median number of smoking events, the models achieved 5% higher accuracy with WPCV than with LOOCV, whereas for participants with fewer smoking events, the models performed 2% better on average with LOOCV than with WPCV (see Figure 2 for an illustration of the difference).

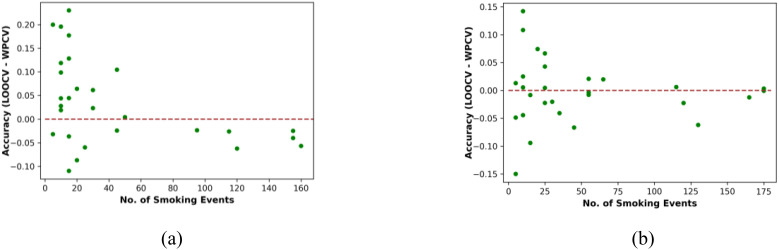

Figure 2.

Difference between accuracy of LOOCV and WPCV for all participants for (a) smoking detection, (b) smoking prediction.

The quality and the amount of data collected from each participant varied. To investigate the impact of data quality and quantity on model accuracy for prediction, we further ranked our participants by both the number of smoking events and the percentage of ‘No Contact’ values reported by the Polar H10 sensor. The percentage of ‘No Contact’ values represents the percentage of data collected from the H10 while it had poor contact with the skin. We segmented participants into top 25%, top 50%, and top 75% groups by these two criteria. The sequence neural network models (20-minute time window) using WPCV achieved 73% accuracy for the top 25% group, which had the most data and the highest data quality. The accuracy was 70% and 67% for the top 50% and top 75% groups, respectively.

Difference between Accuracy of LOOCV and WPCV

For each participant, we calculated the difference in accuracy between LOOCV and WPCV to investigate whether the amount of data influences this difference, for both smoking event detection and prediction. Figure 2 illustrates the differences in performance measured with the LOOCV and WPCV methods. The x-axis represents the number of smoking events, and the y-axis represents the difference between the accuracy with the LOOCV method and the accuracy with WPCV for each participant. Each dot in Figure 2 represents one participant.

Feature Permutation Importance (FPI) Results

We analyzed FPI from the BiLSTM model for predicting smoking events to obtain the most important features. We chose to use BiLSTM for this analysis because it has stable and high accuracy for most participants as shown in Table 2 and Table 3. We calculated the FPI with WPCV method and the FPI with the initial training part of LOOCV method. As shown in Figure 3, we categorized the features into 9 feature domains. Acc. X Axis, Acc. Y Axis and Acc. Z Axis represent accelerometer features in 3 dimensions. G-value refers to features related to the overall magnitude of the acceleration from X, Y, and Z axes. HRV (Time Domain), HRV (Freq. Domain), and HRV (Nonlinear Domain) refer to time, frequency, and nonlinear domain features calculated from ECG signal, respectively. HRV (Other) represents other relevant features produced by Kubios software such as stress index and activity intensity. Lastly, Location represents the features related to GPS coordinates.

Figure 3.

(a) The average FPI across all participants using WPCV with BiLSTM. (b) The average FPI using LOOCV method with BiLSTM.

Feature importance across all individual participants with WPCV: We calculated the average FPI across our participants based on the BiLSTM with WPCV. We observed that FPI varies considerably among our participants. For a few participants, location features are the most influential. For others, the HRV nonlinear domain features are the most important. By calculating the average, we can determine which feature domains contributed most overall to the accuracy of BiLSTM on individual participants’ data. Figure 3 (a) shows the bar plot of the average FPI by feature domain across all individual participants. The x-axis shows the difference between the MAE and the baseline error. As shown in Figure 3 (a), the HRV (Other) features influence the accuracy more than the other HRV features. Figure 3 (a) also reveals that location features play the most significant role in predicting smoking events across individual participants.

Generalized Feature Permutation Importance with LOOCV: We further investigated the FPI from the LOOCV training folds to understand which features generalize best across all of our participants for prediction. Figure 3 (b) shows the feature importance ranking using the BiLSTM model with LOOCV. Figure 3 (b) shows that the HRV features are more important than the location and accelerometer features.

Discussion

In this paper, we explored using sequence neural network models and non-neural network models for smoking event detection and prediction in naturalistic settings. We computed the accuracies and their weighted averages for different models and time windows under two evaluation methods, WPCV and LOOCV. Additionally, to understand the relationship between the amount of available data (number of smoking events) and accuracy, we calculated the Pearson correlation and analyzed model performance by grouping our participants based on a ranking measurement. Finally, we used FPI analysis to identify the most generalizable features with LOOCV and the most important features for individual participants with WPCV.

Figure 2 (a) shows that, for smoking detection, most participants with fewer smoking events obtain higher accuracy from models trained on all the other participants' data with LOOCV than from models trained on their own data with WPCV. For these participants, it is difficult to learn useful patterns from their own limited data; however, the LOOCV method still allows useful patterns to be extracted from the other participants' data. In contrast, for participants with more smoking events, models trained on their own data with WPCV have higher accuracy than models trained on all the other participants' data with LOOCV. This indicates that for participants with more data, the patterns learned from their own data are more useful. Figure 2 (b) shows no significant relationship between the LOOCV-WPCV accuracy difference and the number of smoking events.
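To make the distinction between the two evaluation schemes concrete, the following is a minimal sketch of how the splits could be generated. It assumes that LOOCV holds out one participant at a time and that WPCV divides a single participant's time-ordered windows into contiguous folds; the function names, the fold count, and the data layout are hypothetical and do not describe the study's actual pipeline.

```python
def loocv_splits(data_by_participant):
    """Yield (held_out_id, train, test), one fold per participant.

    data_by_participant: {participant id: list of windows}
    The training set pools the windows of every other participant.
    """
    for held_out in data_by_participant:
        train = [
            window
            for pid, windows in data_by_participant.items()
            if pid != held_out
            for window in windows
        ]
        yield held_out, train, list(data_by_participant[held_out])

def wpcv_splits(windows, n_folds=5):
    """Yield (train, test) folds using one participant's windows only.

    Folds are contiguous blocks, so each test fold covers one stretch
    of the participant's time-ordered data.
    """
    n = len(windows)
    n_folds = min(n_folds, n)
    bounds = [k * n // n_folds for k in range(n_folds + 1)]
    for k in range(n_folds):
        lo, hi = bounds[k], bounds[k + 1]
        yield windows[:lo] + windows[hi:], windows[lo:hi]

# Toy example with integer placeholders standing in for feature windows.
toy = {"p1": [1, 2, 3], "p2": [4, 5], "p3": [6]}
for pid, train, test in loocv_splits(toy):
    print(pid, "train:", train, "test:", test)
```

With this layout, a participant with few windows (such as `p3` above) contributes little to their own WPCV folds but is still evaluated against a model trained on everyone else's data under LOOCV.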

We observed a moderate Pearson correlation between model accuracy and the number of smoking events. Given this positive correlation, we would expect accuracy to increase for both detection and prediction as the amount of available data increases. It is noteworthy that the correlation of accuracy with the number of smoking events is lower for prediction than for detection. This is likely because prediction is a harder problem than detection. The data used for detection include the actual smoking behavior, which is easier to detect with more available data. In contrast, the data used for prediction do not include any actual smoking behavior, such as puffs, and having more available data does not guarantee high accuracy because of people's behavioral and physiological differences before smoking. In other words, detection is a more readily solvable problem, given sufficient data.
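For reference, the Pearson correlation coefficient between per-participant accuracy and the number of smoking events can be computed as in the sketch below. The numbers are made up purely for illustration and are not results from this study.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Hypothetical per-participant values (illustrative only, not study data).
n_smoking_events = [12, 25, 40, 58, 73, 90]
accuracy = [0.58, 0.61, 0.60, 0.66, 0.64, 0.70]

r = pearson_r(n_smoking_events, accuracy)
print(f"Pearson r = {r:.2f}")
```

In practice, a library routine such as `scipy.stats.pearsonr` would typically be used, since it also returns a p-value for testing significance.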

For both the detection and prediction tasks, LSTM and BiLSTM outperform the non-sequence and non-neural models in most cases, by 5 percentage points on average for prediction (66% vs. 61%) and by 4 percentage points for detection (65% vs. 61%). Sequence neural models likely perform best because they take temporal information into account. Smoking events involve changes in an individual's physiological and behavioral characteristics over time, which LSTM and BiLSTM can capture. The non-sequence and non-neural network models use only the average of the features over each time window and therefore cannot account for these temporal changes. This gives sequence neural models a key advantage.
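The loss of temporal information under averaging can be illustrated with a small sketch. The two heart-rate windows below are hypothetical values chosen for illustration: they have the same mean, so a model that sees only window averages cannot tell them apart, while their trends over time point in opposite directions.

```python
def window_mean(window):
    return sum(window) / len(window)

def window_slope(window):
    """Least-squares slope of the values against their time index."""
    n = len(window)
    t_mean = (n - 1) / 2
    v_mean = window_mean(window)
    num = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(window))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den

# Hypothetical heart-rate samples (beats per minute), not study data.
rising = [60, 65, 70, 75, 80]
falling = [80, 75, 70, 65, 60]

print(window_mean(rising), window_mean(falling))    # 70.0 70.0
print(window_slope(rising), window_slope(falling))  # 5.0 -5.0
```

A sequence model receives the ordered samples themselves, so it can in principle learn from such trends without a hand-crafted slope feature.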

By comparing the accuracy for each individual participant under WPCV and LOOCV, we observed that the LOOCV method yields approximately 3% better accuracy for participants with less than the median amount of data. We may infer from this that there are generalizable patterns for smoking event detection among most participants, which can be hard to extract from a single participant's data. It is noteworthy that WPCV (trained on a single participant) and LOOCV (trained on all other participants) show markedly different results in the feature importance analysis. Location and accelerometer features, which have high importance with WPCV, have low importance with LOOCV. This indicates that location and accelerometer features are highly dependent on the individual and are not as generalizable. In contrast, HRV features are important for both WPCV and LOOCV. Notably, HRV features are the most important features for LOOCV, which shows that they are more generalizable. This suggests that physiological patterns are similar across participants and can be used to create robust predictions of smoking events.

The results presented in this paper should be interpreted in light of several limitations. First, the data sample is relatively small, necessitating further confirmation of the results in larger studies. Second, this feasibility study revealed several challenges with remotely collecting continuous physiological data in naturalistic environments. For example, one major challenge that resulted in substantial data loss for several participants is the variability in how battery management standards are implemented by different manufacturers using the Android operating system. While PhysiAware was implemented to run constantly in the background, it was stopped on some Android smartphone models each time the phone entered the locked screen mode. This could be avoided by changing the battery management configuration; however, the configuration could not be set programmatically and had to be changed by the participant. Third, the timestamps of smoking events were self-reported by the participants using their smartphones. These timestamps may not be precisely aligned with the exact timing of the smoking event, and some smoking events may not have been reported at all.

Conclusion

The study presented here resulted in several key findings. First, we found that the BiLSTM and LSTM models show good performance for predicting and detecting smoking events in naturalistic settings. Sequence neural models outperformed non-sequence and non-neural models (62% - 69% vs. 56% - 66%), by 4 to 5 percentage points on average. There is no substantial difference in model performance across time windows for prediction, while a 20-minute window (10 minutes before and 10 minutes after the smoking event) is optimal for detection. Second, our analysis of feature importance revealed that location and accelerometer features are important when training on each individual participant's data (WPCV), but less important when using LOOCV. Conversely, the HRV features extracted from ECG data proved to be more generalizable, showing high FPI with LOOCV. This shows that there are shared patterns in participants' ECG signals leading up to smoking events, while location and accelerometer features are not as generalizable. Hence, we conclude that physiological features extracted from ECG are good indicators of imminent smoking.

Finally, the positive correlation between the number of available smoking events and the models' performance in both detection and prediction scenarios has an important practical implication. If we were to deploy, for example, the prediction model to help smokers quit by optimizing the timing of nicotine replacement therapy, then, similarly to the design of the current study, we would ask the smoker to go through an "enrollment" period during which they would smoke ad libitum and report each smoking event as accurately as possible. The data collected during enrollment would then be used to train an individualized predictive model. In the current study, we simulated this enrollment period as the first week of the 2-week study; however, enrollment could reasonably be extended from 7 days to 10 or 14 days to increase the number of smoking events and the resulting model's accuracy. The app that the smoker would use to report events could also alert them to hardware or software issues, such as poor skin contact and data loss, and provide suggestions for mitigation.

In this work, we investigated the feasibility of using machine learning models to detect and predict tobacco usage (smoking events) in naturalistic settings. A unique strength of our study is that it was conducted completely remotely, without a single in-person visit. We found that, in general, it is indeed feasible to both detect and predict smoking events, which suggests that there are patterns obtainable from wearable and mobile devices that coincide with or precede tobacco usage. Identifying the features that are the best indicators of smoking and using different time windows will allow us to gain deeper insight into craving, substance use, and dependence.

Acknowledgements

The research was supported by the National Institutes of Health National Center for Advancing Translational Sciences, grant UL1 TR002494 and the National Institute on Drug Abuse, grant R21DA049446. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- 1.CDCTobaccoFree. Centers for Disease Control and Prevention. Fast Facts. 2022 [cited 2023 Feb 16]. Available from: https://www.cdc.gov/chronicdisease/resources/publications/factsheets/tobacco.htm.

- 2.CDCTobaccoFree. Centers for Disease Control and Prevention. Health Effects of Smoking and Tobacco Use. 2022 [cited 2023 Feb 16]. Available from: https://www.cdc.gov/tobacco/basic_information/health_effects/index.htm.

- 3.Quitting Smoking Among Adults — United States, 2000–2015 | MMWR [Internet] [cited 2023 Mar 20]. Available from: https://www.cdc.gov/mmwr/volumes/65/wr/mm6552a1.htm.

- 4.Hébert ET, Stevens EM, Frank SG, Kendzor DE, Wetter DW, Zvolensky MJ, et al. An ecological momentary intervention for smoking cessation: The associations of just-in-time, tailored messages with lapse risk factors. Addict Behav. 2018 Mar;78:30–5. doi: 10.1016/j.addbeh.2017.10.026.

- 5.Businelle MS, Ma P, Kendzor DE, Frank SG, Vidrine DJ, Wetter DW. An Ecological Momentary Intervention for Smoking Cessation: Evaluation of Feasibility and Effectiveness. J Med Internet Res. 2016 Dec 12;18(12):e321. doi: 10.2196/jmir.6058.

- 6.Dar R. Effect of Real-Time Monitoring and Notification of Smoking Episodes on Smoking Reduction: A Pilot Study of a Novel Smoking Cessation App. Nicotine Tob Res Off J Soc Res Nicotine Tob. 2018 Nov 15;20(12):1515–8. doi: 10.1093/ntr/ntx223.

- 7.Cohen S, Lichtenstein E. Perceived stress, quitting smoking, and smoking relapse. Health Psychol. 1990;9(4):466–78. doi: 10.1037//0278-6133.9.4.466.

- 8.Alberdi A, Aztiria A, Basarab A. Towards an automatic early stress recognition system for office environments based on multimodal measurements: A review. J Biomed Inform. 2016 Feb;59:49–75. doi: 10.1016/j.jbi.2015.11.007.

- 9.Pakhomov SVS, Thuras PD, Finzel R, Eppel J, Kotlyar M. Using consumer-wearable technology for remote assessment of physiological response to stress in the naturalistic environment. PLOS ONE. 2020 Mar 25;15(3):e0229942. doi: 10.1371/journal.pone.0229942.

- 10.Ramakrishnan S, Bhatt K, Dubey AK, Roy A, Singh S, Naik N, et al. Acute electrocardiographic changes during smoking: an observational study. BMJ Open. 2013;3(4):e002486. doi: 10.1136/bmjopen-2012-002486.

- 11.Devi MRR, Arvind T, Kumar PS. ECG Changes in Smokers and Non Smokers-A Comparative Study. J Clin Diagn Res JCDR. 2013 May;7(5):824–6. doi: 10.7860/JCDR/2013/5180.2950.

- 12.Can YS, Chalabianloo N, Ekiz D, Ersoy C. Continuous Stress Detection Using Wearable Sensors in Real Life: Algorithmic Programming Contest Case Study. Sensors. 2019 Apr 18;19(8):E1849. doi: 10.3390/s19081849.

- 13.Speer KE, Semple S, Naumovski N, McKune AJ. Measuring Heart Rate Variability Using Commercially Available Devices in Healthy Children: A Validity and Reliability Study. Eur J Investig Health Psychol Educ. 2020 Mar;10(1):390–404. doi: 10.3390/ejihpe10010029.

- 14.Espinosa HG, Thiel DV, Sorell M, Rowlands D. Can We Trust Inertial and Heart Rate Sensor Data from an APPLE Watch Device? Proceedings. 2020;49(1):128.

- 15.Dumortier A, Beckjord E, Shiffman S, Sejdić E. Classifying smoking urges via machine learning. Comput Methods Programs Biomed. 2016 Dec 1;137:203–13. doi: 10.1016/j.cmpb.2016.09.016.

- 16.Abo-Tabik M, Costen N, Darby J, Benn Y. Towards a Smart Smoking Cessation App: A 1D-CNN Model Predicting Smoking Events. Sensors. 2020 Jan;20(4):1099. doi: 10.3390/s20041099.

- 17.Fan C, Gao F. A New Approach for Smoking Event Detection Using a Variational Autoencoder and Neural Decision Forest. IEEE Access. 2020;8:120835–49.

- 18.Senyurek V, Imtiaz M, Belsare P, Tiffany S, Sazonov E. Cigarette Smoking Detection with An Inertial Sensor and A Smart Lighter. Sensors. 2019 Jan;19(3):570. doi: 10.3390/s19030570.

- 19.Yu H, Kotlyar M, Dufresne S, Thuras P, Pakhomov S. Feasibility of Using an Armband Optical Heart Rate Sensor in Naturalistic Environment. Pac Symp Biocomput Pac Symp Biocomput. 2023;28:43–54.

- 20.Tarvainen MP, Niskanen JP, Lipponen JA, Ranta-aho PO, Karjalainen PA. Kubios HRV – Heart rate variability analysis software. Comput Methods Programs Biomed. 2014 Jan 1;113(1):210–20. doi: 10.1016/j.cmpb.2013.07.024.

- 21.Chatterjee S, Moreno A, Lizotte SL, et al. SmokingOpp: Detecting the Smoking “Opportunity” Context Using Mobile Sensors. Proc ACM Interact Mob Wearable Ubiquitous Technol. 2020;4(1):4:1–4:26. doi: 10.1145/3380987.

- 22.Hoffmann D, Hoffmann I. The changing cigarette, 1950-1995. J Toxicol Environ Health. 1997 Mar;50(4):307–64. doi: 10.1080/009841097160393.

- 23.Tang Q. Automated detection of puffing and smoking with wrist accelerometers. Northeastern University. 2014.

- 24.Scikit-learn: Machine Learning in Python, Pedregosa, et al. JMLR 12. 2011. pp. 2825–2830.