Abstract

Multi-hazard risk assessment has long been centered on small-scale needs, whereby the exposures of a single community or group of communities are assessed to determine potential mitigation strategies. While this approach has advanced the understanding of hazard interactions, it is limiting on larger scales or when significantly different hazard types are present. To address some of these issues, an approach is developed in which multiple hazards are combined with losses into an index representing the risk landscape. Exposures are assessed as a proportion of land area, allowing multiple hazards to be combined in a single calculation. Risk calculations are weighted by land-use types (built, dual-benefit, natural) in each county. This allows for a more detailed analysis of land impacts and removes some of the bias introduced by monetary losses in heavily urbanized counties. The results of the quantitative analysis show a landscape where the risk to natural systems is high and the western United States is exposed to the bulk of the risk. Land-use and temporal profiles exemplify a dynamic risk-scape. The calculation of risk is meant to inform community decisions based on the unique set of hazards in that area over time.

Keywords: Climate, spatial, exposures, multi-hazard, index, land-use

1. INTRODUCTION

The goal of reducing impacts from hazard exposure has never been more important. According to the United Nations 2012 Report on Development Agenda, the global increase in hazard exposures from 1970 to 2010 was between 114 and 192 percent, depending on the hazard. These exposures resulted in over 1.1 million deaths and $1.3 trillion in property losses, with an additional 2.7 billion people adversely impacted in that same time (UN 2012). As populations continue to grow, urbanize and shift into areas with greater exposure potential, the impact will only continue to grow (Chester et al. 2000, Lall and Deichmann 2010, Garschagen and Romero-Lankao 2015, Güneralp et al. 2015). On top of this, the severity and location of hazards continue to change, yielding a dynamic landscape requiring continual reassessment and long-term mitigation solutions (Miletti 1999).

The hazard landscape or “hazard-scape” is complex and difficult to represent comprehensively without some form of model by which exposures are tallied and assigned to locations (Cutter, Burton, & Emrich, 2010). In order to effectively reduce risks, communities must assess their unique hazard-scape by using integrated, holistic, multi-hazard assessments intended to represent the full range of past, present and future exposures. In this context, the Intergovernmental Panel on Climate Change (IPCC) considers disaster risk to be “the possibility of adverse effects in the future” (Fields 2012). The starting point is a hazard, defined as a phenomenon caused by the onset of events, whether acute or chronic, that are perceived to cause harm (Burton and Kates 1964). Hazards can be natural (geophysical, hydrological, climatological, or biological) or technological (environmental degradation, pollution, or accidents). An exposure occurs when a hazard intersects with the landscape, whether a living population or the natural or built environment. In the event of an exposure, there is a potential for loss, defined here as a risk. Losses are incorporated as a measure of the consequences associated with hazard exposure. They serve as a proxy for vulnerability in the calculation of risk.

While integration of multiple hazards is not a new concept, there has been a push to include more refined multi-hazard models in community planning for risk reduction. Hewitt and Burton first discussed the concept of “hazardousness of place”, whereby a specified geographic unit is assessed for all hazards impacting it (1971). Their theoretical framework suggests a need for more location-specific work, as opposed to hazard-specific analysis, to inform policy decisions. Cutter takes this a step further with a “hazards of place” conceptual model through which location-dependent risk, or vulnerability, is defined by the complex interaction of hazards caused by natural, societal, and technological events (Cutter and Solecki 1989, Cutter 1996). The place-based approach allows for the combination of multiple hazards and mitigation activities. More recent research in multi-hazard risk assessments has attempted such analyses, either focusing on small geographic areas or looking at a cascade of hazards resulting from a single source (e.g. volcano) (Bell and Glade 2011, Thierry 2008). There are also examples of hazard integration using spatial tools for loss estimation, planning and mitigation purposes (FEMA 2013, Schmidt et al. 2011, Tate et al. 2011). Other attempts at multi-hazard integration use spatial assessment with the assignment of ordinal classification schemes for intensity (Batista et al. 2004, Schmidy-Thome 2005, Greives et al. 2006). This is necessary due to the vast array of impacts stemming from natural and technological hazards, but forces decisions on weighting and lacks consideration of outcomes. While these methods present a robust way to integrate and assess multiple hazards, they do not lend themselves as well to the construction of an index value for comparison across larger spaces.

In addition to simply combining hazards, temporal interactions between hazards are a significant consideration, where the potential exists for hazards to interact and amplify one another through both proximity and timing of occurrences. In the event that multiple hazards occur close in proximity and timeframe, disaster fatigue can occur, leaving the landscape more susceptible to harm from subsequent exposures (Kappes et al. 2012). There are additional psychological impacts from repeated exposures and exacerbating effects stemming from hazardous facilities via chemical releases, explosions, or epidemics (Reser 2007, Watson et al. 2007). Consideration of these “cascading” impacts is important, and a primary reason for the use of multi-hazard assessment (Miletti 1999, Marzocchi et al. 2012). The goal is to operationalize an approach that will enable the merger of multiple hazards into a meaningful measure for communities to assess strategies and limit future vulnerabilities. This effort is complicated by patchwork landscapes, differences in hazard size and intensity, and the enormity of data that must be processed to retain a useful level of resolution. Despite the difficulty, developing a scalable multi-hazard assessment for the entire U.S. at the county scale is important and necessary to establish a baseline risk profile for all areas of the country.

2. METHODS

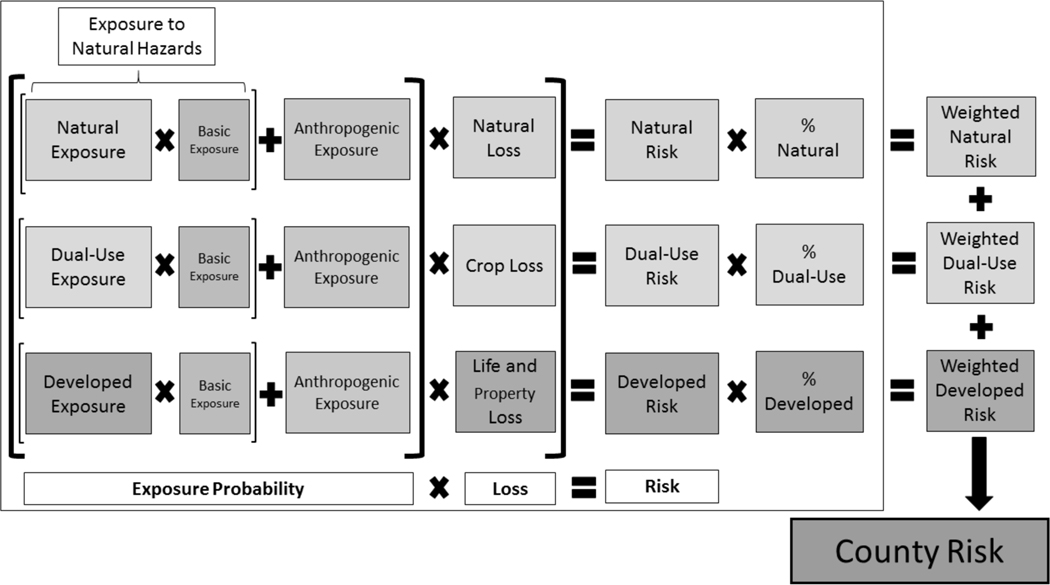

The Patterns of Risk using an Integrated Spatial Multi-Hazard (PRISM) approach allows risk to be assessed at the county level across states or regions and facilitates the integration of any hazard type provided it has an associated spatial component. Risk, in this approach, is represented as the probability of a hazard exposure (identified as proportional land area) multiplied by the degree of vulnerability (identified hazard losses). Exposure probability entails both historic and proximity-based measures of hazards responsible for risk. Losses provide a measure of the lives lost or impacted, monetary losses in crops and property, and losses of natural land integrity. Data inclusion, conceptual development and processing of the risk parameters are described in this section. A conceptual overview, provided in Fig. (1), demonstrates the breakdown of each risk component.

Fig. (1).

PRISM Approach conceptual overview. Showing breakdown of exposures and land categories along with the inclusion of land-type weighting values used to derive a final risk value for all hazards in a county. Components of risk are identified in top and bottom bars to demonstrate steps in calculation of final score. Color codes: Green = Natural Risk components, Blue = Dual-Use components, and Grey = Developed components. Basic exposure and anthropogenic exposures are the same across all land-type categories.

To address the spatial extent of hazard exposure and cope with the varied landscapes (e.g. hazard proximities and level of development) across the country, counties are used as the foundation for risk calculation. Though use of smaller spatial units is possible, the utility of the method better aligns with a larger aggregation for the purposes of county and regional governance. In addition, there is a lack of certain metrics at finer scales, making calculation of risk based on land extent challenging. Loss data, for example, uses counties as its finest level of data aggregation, and in 15 years of data, only 3 major boundary changes have occurred (US Census 2010). Assessment of land-type distribution within each county is used to weight exposure and loss values and account for differences in development and economic drivers. Proportional county exposure values allow different hazard types to be combined in a consistent manner, regardless of intensity or temporal variation.

2.1. Data Refinement

In the formulation of a hazard-scape, three inputs are utilized: a land-use layer, a natural hazard layer, and a technological hazard layer. The land-use layer is used to weight hazard exposures according to the landscape types present in a county: Natural, Dual-Benefit, or Developed (Table 1). The natural hazard layer is used to define historic and proximity-based exposure probabilities. The technological hazard layer is used to add potential exposures resulting from man-made facilities. Each of these is calculated in units of total acreage and assigned to county boundaries using ArcGIS 10.3, where exposure size is calculated as a proportion of the county acreage in each land-use class.

Table 1.

Compression of 2011 NLCD categories from 20 down to 3 for use in exposure calculations.

| NLCD Classification (20 Categories) | PRISM Classification (3 Categories) |
|---|---|
| Developed, Open Space | Developed Land |
| Developed, Low Intensity | |
| Developed, Medium Intensity | |
| Developed, High Intensity | |
| Deciduous Forest | Dual-Benefit Environment |
| Evergreen Forest | |
| Mixed Forest | |
| Pasture/Hay | |
| Cultivated Crops | |
| Woody Wetlands | |
| Emergent Herbaceous Wetlands | |
| Open Water | Natural Environment (non-human) |
| Perennial Ice/Snow | |
| Barren Land (Rock/Sand/Clay) | |
| Dwarf Scrub* | |
| Shrub/Scrub | |
| Grassland/Herbaceous | |
| Sedge/Herbaceous* | |
| Lichens* | |
| Moss* | |

\* Data only available in Alaska. The left column represents the original classification of land and the right column represents the new classification.

2.1.1. Land Use Classifications

The land-use surface is developed using data from the 2011 version of the Multi-Resolution Land Characteristics Consortium (MRLC) National Land Cover Database (NLCD), which is based on Landsat imagery covering the entirety of the conterminous U.S. (Homer et al. 2015). The reason for this choice is two-fold. First, the data are satellite-derived, spatially referenced, and available at a 30 × 30-meter resolution for the entire U.S. Although higher resolution alternatives existed, using those would have meant gathering multiple, disparate data sources and would have yielded a surface with high levels of inconsistency. Second, the NLCD is well documented and regularly collected, being compiled every 5 years and closely corresponding to the social data.

Land coverage classification in the NLCD falls into one of 20 different categories, making the data highly detailed. For the purposes of this approach, however, the original 20 classifications are compressed into 3 categories: developed lands, dual-benefit lands, and natural lands (Table 1). Exposure extents are assigned to each land type and calculated as a proportion. This allows exposure to be calculated for a specific land type or aggregated up to a land-type weighted county value.
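The compression in Table 1 can be sketched as a simple lookup followed by a proportion calculation. This is a minimal illustration only: the dictionary, the function name, and the per-class pixel counts are hypothetical and are not taken from the PRISM processing workflow, which was carried out in ArcGIS.

```python
from collections import Counter

# Class groupings follow Table 1; the names below are illustrative assumptions.
NLCD_TO_PRISM = {
    "Developed, Open Space": "developed",
    "Developed, Low Intensity": "developed",
    "Developed, Medium Intensity": "developed",
    "Developed, High Intensity": "developed",
    "Deciduous Forest": "dual_benefit",
    "Evergreen Forest": "dual_benefit",
    "Mixed Forest": "dual_benefit",
    "Pasture/Hay": "dual_benefit",
    "Cultivated Crops": "dual_benefit",
    "Woody Wetlands": "dual_benefit",
    "Emergent Herbaceous Wetlands": "dual_benefit",
    "Open Water": "natural",
    "Perennial Ice/Snow": "natural",
    "Barren Land (Rock/Sand/Clay)": "natural",
    "Dwarf Scrub": "natural",
    "Shrub/Scrub": "natural",
    "Grassland/Herbaceous": "natural",
    "Sedge/Herbaceous": "natural",
    "Lichens": "natural",
    "Moss": "natural",
}

PRISM_TYPES = ("developed", "dual_benefit", "natural")


def land_type_proportions(pixel_counts):
    """Collapse per-class pixel counts for one county into three land-type shares."""
    totals = Counter()
    for nlcd_class, count in pixel_counts.items():
        totals[NLCD_TO_PRISM[nlcd_class]] += count
    area = sum(totals.values())
    if area == 0:
        raise ValueError("county has no classified pixels")
    return {land_type: totals[land_type] / area for land_type in PRISM_TYPES}


# Hypothetical county with 100 classified pixels.
example = land_type_proportions(
    {"Developed, Low Intensity": 20, "Cultivated Crops": 50, "Open Water": 30}
)
```

Because every pixel has the same 30 × 30-meter footprint, pixel shares and area shares are equivalent in this sketch.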

2.1.2. Natural Hazards Exposure

There are a total of 8 (9 if high and low temperature extremes are counted separately) natural hazard types assessed using historical events along with an additional 4 proximity-based exposure potentials that use modeled data or location to approximate exposure probability (Table 2). Points, areas or tracks are collected for each hazard and geo-referenced. Depending on the hazard, further processing is carried out to estimate the spatial extent of impacts. These extents are converted to proportional land-type areas by county and hazard type to represent exposure. In the case of droughts, for instance, the data are represented as an areal extent. Other hazards, such as hurricanes and tornadoes, are represented by path data only. In these instances, a buffer is used to estimate exposure extent. Table 2 provides a summary of all 11 natural hazards and the methods utilized for determination of spatial extent.

Table 2.

Summary of Natural Hazard Calculations. For each natural hazard type, the class of data is either historic (based on previous exposures to land area) or modeled (some combination of historic or land-characteristic data used to create exposure probabilities). Spatial extent explains the methods used to create data for the exposure calculations.

| Natural Hazard Type | Class | Spatial Extent | Exposure Calculation |
|---|---|---|---|
| Hurricane | Historic and Modeled | Buffer of 100 miles surrounding tracks for storms > Category 1. | Proportion of each land-use classification used to estimate exposure extent. |
| Tornado | Historic and Modeled | Adaptive buffer based on path width data and mapped tornado tracks. | Proportion of each land-use classification used to estimate exposure extent. |
| Earthquake | Modeled w/Historic | USGS modeled data: historic events and probability of event exceeding 0.1 g PGA. | Proportion of each land-use classification used to estimate exposure extent. |
| Fire | Historic | Ignition sites of historic fires with adaptive buffer using total burn acreage. | Proportion of each land-use classification used to estimate exposure extent. |
| Drought | Historic | U.S. Drought Monitor database extents for 5-year period in four categories (D1-D4). | Proportion of each land-use classification used to estimate exposure extent. |
| Wind | Historic | Entire county used as extent. | Avg. number of damaging wind events (>50 mph) per year within the county. |
| Hail | Historic | Entire county used as extent. | Avg. number of damaging hail events (0.75”) per year within the county. |
| Landslide | Modeled w/Historic | USGS historic landslide incidence and susceptibility based on slope and terrain types. | Proportion of each land-use classification used to estimate exposure extent. |
| Temperature Extremes (high and low temperature deviations) | Historic | Entire county used as extent. | The avg. deviation of annual min and max val. from the 32-year average high and low temps. |
| Inland Flood | Modeled | 0.5-mile buffer around inland waterways (rivers, streams, lakes, ponds, etc.). | Proportion of each land-use classification used to estimate exposure extent. |
| Coastal Flood (SLR/Storm) | Modeled | Buffer along all coastal counties for land within 2 feet of current sea level at mean high tide. | Proportion of each land-use classification used to estimate exposure extent. |

For each hazard type, the impact of the event is dependent on land type and extent. Measuring hazards is typically accomplished through some form of index based on features of the hazard. The challenge in combining hazards thus centers on how to make the diverse indices numerically equivalent for combination in a summary index. Hurricanes and tornadoes illustrate this challenge. Hurricanes are measured based on maximum wind speed. While related, this does not consider the accompanying threats of flooding or tornadoes that typically cause damage in the wake of a storm. Tornadoes are similarly measured using wind speed as a baseline for index development. Though both are wind-based storms, the indices for hurricanes and tornadoes are not directly relatable and do not capture the differences in other characteristics. Tornadoes are much more focused in their paths and intensity, while hurricanes are vaster in extent. The distinction between these two storm types is clear in the characteristics of impact. Hurricanes, because of their extent and necessity for water, have a more probable impact on larger populations and fragile coastal ecosystems. Tornadoes, with narrower path widths and higher probability inland, do not possess the same population threat as hurricanes. Due to the differential impacts and methods of measurement for these storm types, along with many other hazards, combining them through measures of intensity scale is not very informative. Measuring the exact location and extent of exposure provides a much better basis for assessing impact.

Extent decisions are established for each hazard based on data and physical characteristics. Hurricane wind exposure calculations use a buffer distance of 100 miles for all storms exceeding the Category 1 (winds > 74 mph) rating. Previous research has shown this cut point to be the most robust in relation to damages experienced, and it corresponds to additional research on hurricane and typhoon eye wall structure (Simpson and Riehl 1981, Weatherford and Gray 1988, Zhu 2008).

Data from the National Oceanic and Atmospheric Administration (NOAA) Storm Events Database were used to estimate tornado extents (NOAA 2010). Spatial information related to each tornado, including the path coordinates, length, and width, was used to create an adaptive buffer around every tornado occurrence between 2000 and 2015. Since wider and longer paths are typically associated with more severe storms, this method translates well as a severity proxy (Brooks 2004).
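As a rough illustration of how an adaptive buffer scales with path dimensions, the sketch below approximates the footprint of a straight tornado track buffered by half its width, with rounded ends. The function name, the straight-path simplification, and the assumed units (length in miles, width in yards) are illustrative assumptions; the actual buffers were generated around mapped tracks in GIS.

```python
import math

YARDS_PER_MILE = 1760.0


def tornado_buffer_area_sq_mi(path_length_mi, path_width_yd):
    """Approximate buffered footprint of a straight tornado path, in square miles.

    The buffer distance is half the reported path width, so the footprint is a
    rectangle (length x width) plus two half-circle end caps of radius width/2.
    """
    if path_length_mi < 0 or path_width_yd < 0:
        raise ValueError("path dimensions must be non-negative")
    width_mi = path_width_yd / YARDS_PER_MILE
    rectangle = path_length_mi * width_mi
    end_caps = math.pi * (width_mi / 2.0) ** 2
    return rectangle + end_caps


# Hypothetical track: 10 miles long and 1,760 yards (1 mile) wide.
area = tornado_buffer_area_sq_mi(10.0, 1760.0)
```

Dividing such a footprint by county area, after intersecting it with each land-use class, would give the proportional exposure described in Table 2.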

Earthquake exposure is assessed using data available from the USGS that models and maps earthquake magnitude probability based on proximity to fault lines, the substrate existing in the area, and historic events. In order to predict ground movement for a specified ground composition, the USGS Earthquake Hazards Program uses the Peak Ground Acceleration (PGA), presented as a percentage of gravity (USGS 2003). Forecast models are created by USGS for areas with historic or potential exposure to earthquakes exceeding the 0.1 g PGA threshold. According to research conducted by FEMA, a PGA of greater than 10% of gravity (0.1 g) is considered sufficient to cause damage to buildings that are older or not up to code (FEMA 2009). While all three land-use types are calculated for PRISM, only developed land factors into the final calculations for the index because of the limited or non-existent damage to predominantly natural systems exposed to earthquakes.

Fire data from 2000–2010 were collected from the U.S. Geological Survey (USGS) Wildland Fire Information database (Hawbaker et al. 2017). Each fire occurrence has a point of ignition, identified with a latitude and longitude, as well as the total acreage burned. The acreage is used to create an adaptive buffer by back-calculating a buffer radius around each point. The circular burn buffers are then used to estimate land proportions exposed to fire in each county.
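The back-calculation of a circular buffer from burned acreage follows from the area of a circle, r = sqrt(A / π). A minimal sketch is given below; the function name and the choice of meters as the output unit are assumptions made for illustration.

```python
import math

SQ_METERS_PER_ACRE = 4046.8564224  # international acre


def burn_buffer_radius_m(acres_burned):
    """Radius (meters) of a circle whose area equals the reported burn acreage."""
    if acres_burned < 0:
        raise ValueError("burned acreage must be non-negative")
    area_sq_m = acres_burned * SQ_METERS_PER_ACRE
    return math.sqrt(area_sq_m / math.pi)


# Hypothetical fire of 1,000 acres centered on its ignition point.
radius = burn_buffer_radius_m(1000.0)
```

The circle is only an approximation of the burn footprint, since real fire perimeters are irregular and rarely centered on the ignition point.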

Drought exposure is based on data from the U.S. Drought Monitor Database (NDMC 2017). This dataset contains shapefiles of drought extent collected on a weekly basis since 2000, categorized into 5 levels of severity ranging from D0 (abnormally dry) to D4 (exceptional drought). For the purposes of this research, only categories D1-D4 were used. Due to the frequency of data collection, four samples, one representing drought conditions in each season (winter: first week of January; spring: first week of April; summer: first week of July; fall: first week of October), were taken from each year between 2005 and 2010. Annual and 5-year averages were tabulated for each county within each of the 4 drought categories (D1-D4) to represent total exposures as well as the severity of exposures.

Exposures to damaging hail and wind events are assessed for the entire county using data from the NOAA Storm Prediction Center Historic Climatic Database (NWS 2016). A spatial buffer could not be used due to the lack of specific information on the spatial extent of hail and wind events. To calculate risk for the hail and wind events, the annual numbers of events within each county boundary are averaged over a 10-year span, from 2000–2010. The thresholds for damage-inducing events, a hail diameter of greater than 0.75 inches and wind gusts exceeding 50 miles per hour, are based on NOAA research (NWS 2007).

Landslide data come from USGS incidence and susceptibility data, modeled and mapped based on locations of past landslides along with data on slope and terrain types that cause higher landslide risk (Radbruch-Hall et al. 1982; King and Beikman 1974). For the purposes of exposure likelihood, only the first four classes are used: high landslide incidence (>15% area involved in land-sliding), moderate landslide incidence (1.5–15% area involved in land-sliding), high susceptibility to land-sliding with low incidence, and moderate susceptibility with low incidence. The percentage of land falling into each of these four categories is summed to determine a probability extent for exposure to landslides.

Temperature extremes are assessed based on a 32-year record of maximum and minimum annual temperatures recorded in each county. Using this record, the annual average high and low temperatures are compared with the long-term means to establish an average deviation value above or below what is considered “normal” for the 3 decades. This is done by subtracting the annual high and low average temperatures from the 32-year mean values and inverting the result. Similar to the wind and hail data, the values for this metric represent the entire county and are not split into three land-type classifications. Both a deviation from annual low temperatures and a deviation from annual high temperatures are calculated and used as individual metrics.

2.1.3. Proximity-Based Hazard Exposure

While tornadoes and hurricanes both have a documented historic risk based on events of the past 15 years in this study, it is important to also consider the inherent, or “basic”, risk to locations based on their proximity to hazardous areas. This is very similar to the probability or susceptibility models used for earthquakes and landslides. Coastal counties along the Atlantic and Gulf of Mexico are at risk during any given hurricane season. Lack of occurrence data over the past 15 years does not exclude these counties from possessing future hurricane risk. The same applies to counties located in areas of higher tornado probability. Lack of impact in the past does not preclude future incidents. Both hurricanes and tornadoes were assigned to counties as a basic risk established by location.

A proximity-based hurricane exposure probability score of 0.15% was assigned to all counties within 75 miles of the Atlantic or Gulf of Mexico coastlines. This percentage is created using the average probability of hurricane landfall in each non-El Niño year (48%), divided by 305, which is the number of coastal counties where hurricanes could strike (Bove et al. 1998). Proximity-based tornado exposure probability was assigned using historic tornado data to select all counties with any tornadic activity in the past 15 years. Each of these counties was assigned a basic risk score of 0.06% based on past climatological models (Schaefer et al. 1986).
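The arithmetic behind the hurricane score can be checked directly. In the sketch below the variable names are illustrative; the quotient works out to roughly 0.157%, which is in line with the reported score of 0.15%, although the rounding convention used is not stated in the text.

```python
# Values taken from the text: 48% landfall probability in a non-El Niño year,
# spread evenly across 305 coastal counties.
landfall_probability = 0.48
coastal_counties = 305

# Per-county basic exposure probability, as a fraction and as a percentage.
per_county_probability = landfall_probability / coastal_counties
per_county_percent = per_county_probability * 100.0
```

Spreading the landfall probability evenly treats every coastal county as equally likely to be struck, which is a simplification inherent in a basic, location-only score.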

Flood exposure is also quantified as a basic exposure in this research due to its calculation and the inherent risk present as a result of proximity to defined features. It is measured within two categories, inland exposure and coastal exposure. For the inland category, all rivers, streams, lakes and ponds were mapped using a ½-mile buffer to represent a potential exposure to flooding from these sources. Coastal flooding calculations use the NOAA Sea Level Rise predictions (Parris et al. 2012). These are based on height (mean high water) above sea level and derived from digital elevation maps (DEMs) along the coast. All land 2 feet or less above sea level is categorized as at risk.

2.1.4. Technological Hazards

In addition to natural hazards, a place may have additional exposure potential because of proximity to technological hazards. Technological hazards, such as nuclear power plants, Superfund sites, or toxic release sites, can have the potential to impact nearby populations regardless of natural exposure; however, natural hazards can exacerbate the technological risk. Table 3 shows the technological hazards used in this approach.

Table 3.

Technological Hazards metrics used in the PRISM approach and the methods used to collect and assess.

| Technological Hazard Type | Spatial Extent | Exposure Calculation |
|---|---|---|
| Nuclear Facilities | All nuclear reactors and storage facilities in US identified and given a 10-mile buffer. | Proportion of each land-use classification used to estimate exposure extent. |
| Superfund Sites | Superfund sites - proposed or active in the US identified and given a 5-mile buffer. | Proportion of each land-use classification used to estimate exposure extent. |
| Toxic Release Inventory (TRI) Sites | TRI facilities identified when threshold chemical release criteria are met and given a ¼-mile buffer. | Proportion of each land-use classification used to estimate exposure extent. |
| Resource Conservation and Recovery (RCRA) Sites | All RCRA Site (LQGs, TSDs, and TRANSs) point data from EPA FRS Geodatabase are given a ¼-mile buffer. | Proportion of each land-use classification used to estimate exposure extent. |

The Federal Emergency Management Agency (FEMA) tracks limited technological hazards through the Radiological Emergency Preparedness Program (REPP), for commercial power plants, and the Chemical Stockpile Emergency Preparedness Program (CSEPP), for Army chemical weapons stockpiles. In each of these preparedness programs, the concern is focused on potential human exposure to these hazards. However, many of the technological hazards could have a serious negative impact on plants and animals, suggesting this definition of technological risk needs to be expanded to encompass non-human ecosystems as well. In the case of crop land, even if human settlements are not directly impacted, an exposure could still directly impact the food supply. For this reason, the point data for nuclear and chemical sites are assessed here for their impact on all land-use types and the data are expanded to include Superfund sites and sites on the Toxic Release Inventory (TRI).

Nuclear reactors or storage facilities in an area present additional hazards in the event of a leak. While most of these facilities are designed and built to withstand geologic and meteorological events common to their area, accidents are still possible and the underlying risk is still elevated because of their presence. The U.S. Nuclear Regulatory Commission (USNRC) designates an official Emergency Planning Zone (EPZ) based on the plume exposure pathway distance of 10-miles. This distance is designed to limit exposure to radioactive materials through sheltering, evacuation, or the use of potassium iodide, if necessary (USNRC 2014). Nuclear facilities are mapped by point location and given a 10-mile buffer to designate their EPZ. Using methods described for natural hazards, risk is assigned to each county with percentages of risk distributed across each of the three land-use categories.

Superfund sites are slightly more complicated than nuclear facilities since they are not all the same. The sites can be in one of four conditions: proposed, active, completed, or deleted, depending on how far along the site is in the clean-up process. In addition, the chemicals present at a site can vary in their toxicity and range of potential travel. Generally, the impact of these sites will be localized, regardless of the chemical(s) present. Therefore, a buffer of one mile is created around each site that has been active during the past ten years and percentages of land covered are calculated for each of the three land-use types.

Toxic Release Inventory (TRI) and Resource Conservation and Recovery (RCRA) sites are by far the most numerous and varied of the technological hazards. Their potential to increase risk in the event of another hazard makes their inclusion important. TRI and RCRA sites have the capacity to impact air, water, and land quality and exist within both highly populated and more sparsely populated areas. TRI data were gathered from the TRI Explorer, which catalogs over 53,000 facilities that have been tracked in the past 20 years. To remain temporally consistent, only TRI sites logged within the 2000–2010 timeframe were used here. Data for RCRA sites were obtained from the EPA's Facility Registry Service (FRS) and only include sites with greater exposure potential than TRIs, classified as Large Quantity Generators (LQGs), Hazardous Waste Transportation Services (TRANS) and Treatment, Storage and Disposal facilities (TSDs). To calculate the risk to each land type, a simple count of TRI and RCRA sites without a buffer in each county is used. TRI and RCRA density (count per area) within each land type in a county represents the potential exposure.

2.1.5. Losses

Historic losses give exposure metrics the context needed to become a measure of intensity and impact across the landscape. In this approach, losses are combined into three categories, property, crop, and human, using data compiled from the Hazard and Vulnerability Research Institute’s (HVRI) Spatial Hazard Events and Losses in the U.S. (SHELDUS) database (HVRI 2017). Property and crop losses both serve as severity measures in built and managed ecosystems, while human losses, encompassing both fatalities and injuries, represent the social severity of the hazards. Losses are reported per capita in 5-year segments. Losses are assigned to specific land classifications based on the type. Human and property losses are assigned to the built environment and crop losses are assigned to the dual-benefit environment. Natural losses are assessed using additional data from the NLCD on land cover change. This dataset identifies land-use pixels that changed to impervious surfaces (indicating built environment) between 2006 and 2011. The percentage of total county land converted to the built environment is used as a proxy for natural lands lost. The damage posed to natural systems resulting from population stresses or other anthropogenic influences, represented by the natural land loss, foreshadows potential reductions in ecosystem services (Hey and Philippi 1995).

2.2. Risk Calculation

While the specific issues related to collection and development of each risk exposure layer have been described, the primary challenge comes in combining these to represent an overall risk landscape. In other words, how do the risks, both natural and technological, interact to create exposure likelihoods? A simple additive calculation cannot adequately answer this question. Instead, the calculation requires a more nuanced structure that accounts not only for each exposure, but also for the likelihood of loss that stems from occurrence frequencies and hazard severity.

Combining hazards in a meaningful way requires blending of spatial exposures and losses. Using the methods described, every hazard can be addressed by calculating a proportion of county land that has been impacted historically and a proportion that could be impacted based on proximity to hazard zones (e.g. coastal Atlantic counties and hurricanes).

The complexity of the hazard-scape is represented in the final calculations, where the interplay of natural hazards, technological hazards, losses, and county landscape are used to create a risk score. This process, as shown in Fig. (1), is much more nuanced than a simple additive index. The combination of exposure extents, land-type distributions, and the associated losses are demonstrated in detail within section 3.3 with a regional analysis and break out of calculations for the high, medium, and low-risk counties in that area. Hazards are combined in steps based on the spatial extents, characteristics and land type first, then hazard type second. The first step modifies the historical exposures by weighting them with proximity-based hazards. Spatial extents for each hazard are based on the proportion of land area and added within land-types before modification. Proximity-based hazards are proportional as well, based on hazard probability, and added to one prior to being multiplied by the historic exposure.

The combined historic and proximity-based exposure value is added to the land-type proportion of technological hazards to create the final exposure probability. There will be three of these probability scores for each county, one for each land type. The exposure probability is then multiplied by the land specific loss (e.g. crop and structural loss for dual-benefit lands) to create a land-type risk score. Each county will have three risk scores following this step along with proportional land area types. These values are used to weight the risk scores and create a single risk value for the county. By weighting the historic exposure scores using both land types and proximity measures, the resulting PRISM score can capture the changes in risk resulting from locational and landscape-derived differences. The final score is thus dependent not only on direct hazard impacts, but also the changes occurring to the surrounding landscape.
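The sequence of steps described in this section can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the implementation used for PRISM: the class, function, and field names are hypothetical, all input values are invented, and the final county value is assumed to be a land-share-weighted average of the three land-type risk scores.

```python
from dataclasses import dataclass


@dataclass
class LandTypeInputs:
    historic_exposure: float       # summed land-area proportions of historic hazards
    proximity_probability: float   # summed proximity-based hazard probabilities
    technological_exposure: float  # land-type proportion of technological hazards
    loss: float                    # land-specific loss value
    land_share: float              # proportion of county land in this land type


def exposure_probability(x: LandTypeInputs) -> float:
    # Proximity-based hazards are added to one and multiplied by the historic
    # exposure; the technological proportion is then added.
    return x.historic_exposure * (1.0 + x.proximity_probability) + x.technological_exposure


def land_type_risk(x: LandTypeInputs) -> float:
    # Exposure probability multiplied by the land-specific loss.
    return exposure_probability(x) * x.loss


def county_risk(inputs: dict) -> float:
    # Assumption: land-type scores are combined as a land-share-weighted average.
    total_share = sum(x.land_share for x in inputs.values())
    return sum(land_type_risk(x) * x.land_share for x in inputs.values()) / total_share


# Hypothetical county (values are illustrative only).
county = {
    "built": LandTypeInputs(0.5, 0.2, 0.1, 1.5, 0.2),
    "dual_benefit": LandTypeInputs(1.0, 0.5, 0.0, 2.0, 0.5),
    "natural": LandTypeInputs(0.8, 0.25, 0.0, 0.5, 0.3),
}
for name, x in county.items():
    print(name, round(exposure_probability(x), 3), round(land_type_risk(x), 3))
print("county risk:", round(county_risk(county), 3))
```

Because the loss value multiplies the exposure probability, a land type with modest exposure can still dominate the county value when its losses or its share of county land are large.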

3. RESULTS

The primary goal of the PRISM approach is to create a national landscape that accounts for a full suite of hazard exposures spatially. Along with losses, the data created from PRISM form the risk domain of the Climate Resilience Screening Index (CRSI). However, the potential applications of the PRISM approach are much broader than inclusion in CRSI. In addition to creating an integrated risk value, the results can also be broken out into many categories for analysis. Some of the potential analyses enabled by PRISM include the examination of (1) temporal trends, (2) natural versus anthropogenic risks, (3) separate risk values for major land categories, and (4) multiple spatial scales ranging from county up to states and EPA regions. Prior to these types of specific analyses, a summary of the data is presented here to provide an overview of the results from the CRSI Risk domain as well as some specific spatial results.

3.1. National Results

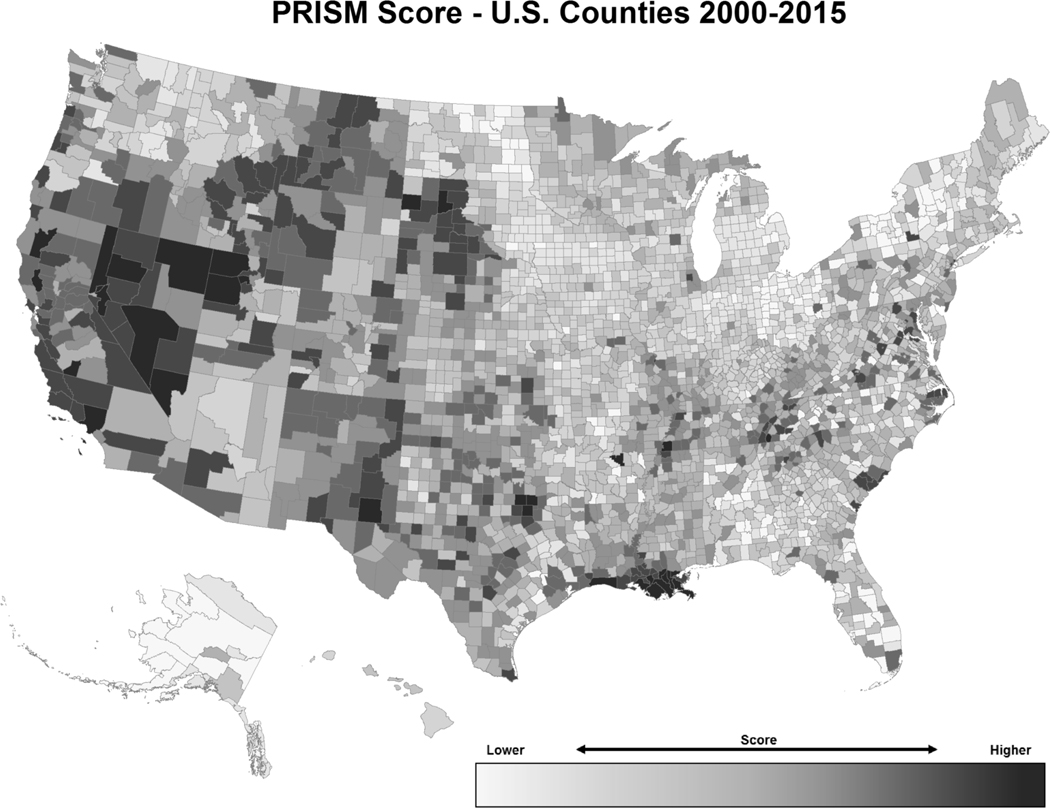

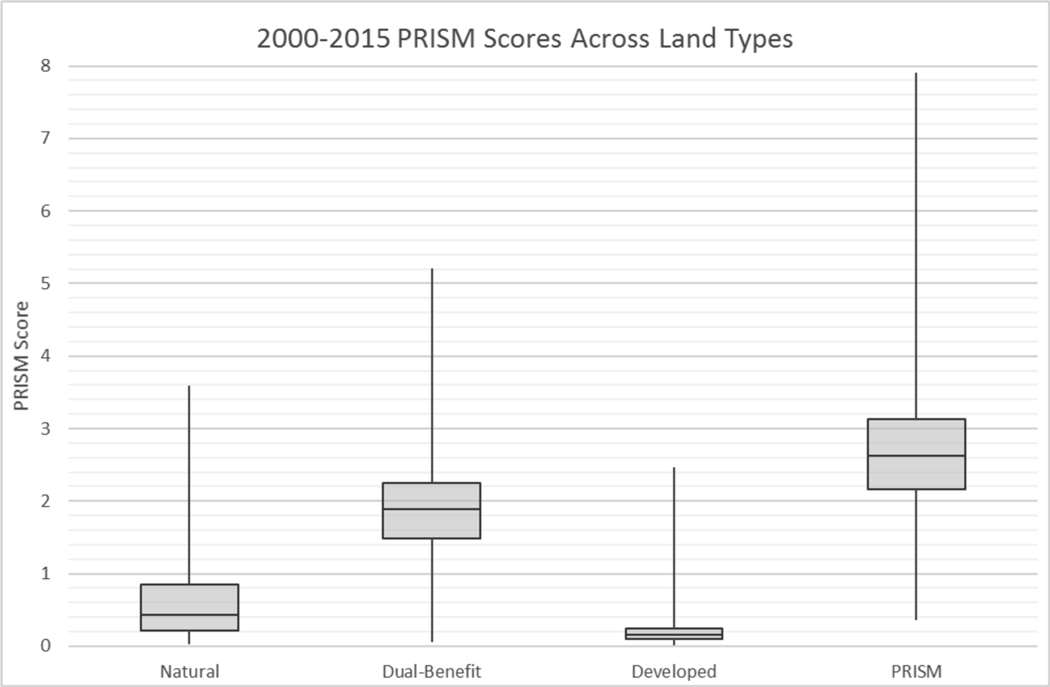

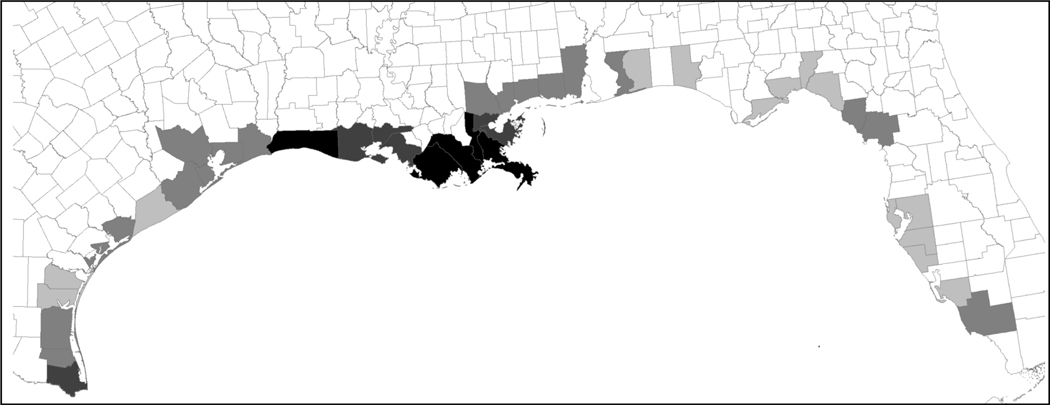

The overall results are shown as a map in Fig. (2), and their variation across each land type is compared using a boxplot in Fig. (3). There are clear regional patterns in the data, with much of the Southwest, Louisiana, and the plains states exhibiting higher risk levels. In addition, areas in the Appalachians and along the Eastern coastline display higher risk values. The Southeast, despite exposure to tornadoes and hurricanes, exhibits a comparatively low overall risk score. This could be due to the comparatively high exposure to drought and wildfires in the Western U.S. Interestingly, dual-benefit lands seem to drive much of the risk across the U.S. over the 15-year span. Risks to these lands are significantly higher than those in natural or developed lands, and the median falls closer to that of the overall score.

Fig. (2).

PRISM Overall County Scores for all land types and entire 16-year period of measurement.

Fig. (3).

Box Plots of Overall PRISM Score along with Land-type scores. Data represented in this graph are from 2000 – 2015.

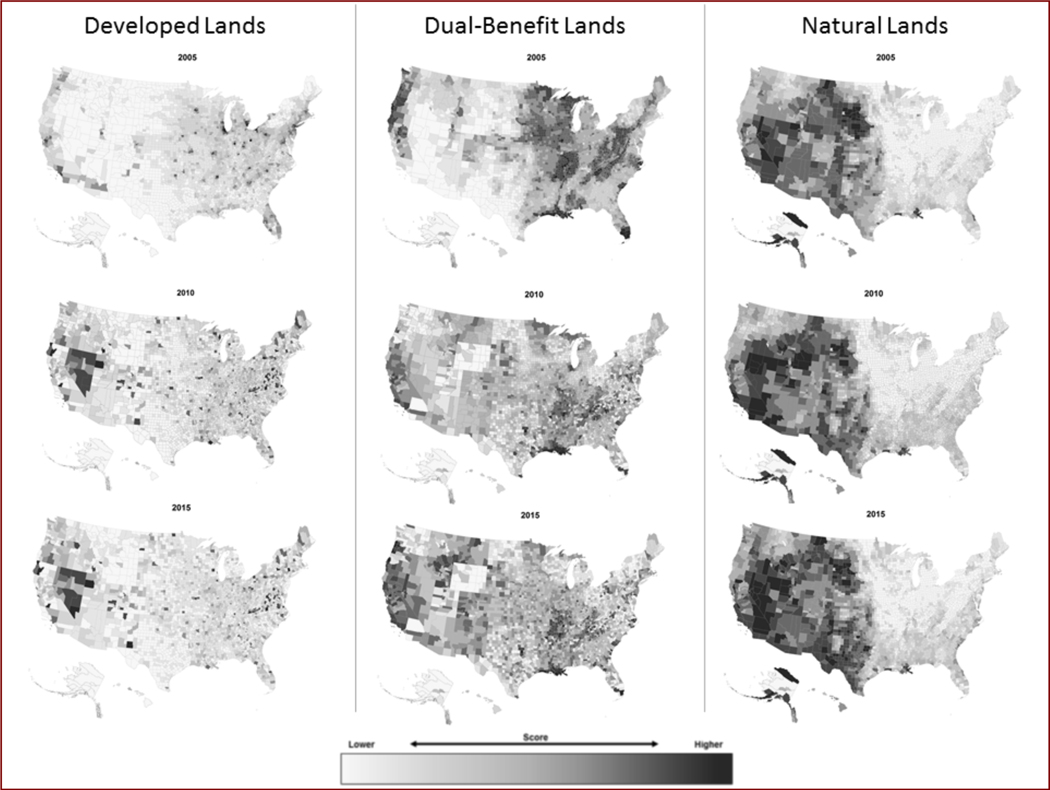

Analyzing the distribution of land types in the U.S., it is evident that much of the weight attributable to dual-benefit lands is due to the percentage of land in this category. Over 50 percent of U.S. lands are classified as dual-benefit, with the bulk of those falling into either crop land in the middle of the country or wetlands along the coast. Each of these land types provides a major service to urban communities, and higher risk values for these lands are a significant concern. In addition to explaining the overall weighting as a function of percent coverage, this overall land distribution also highlights the importance of county-specific calculations for each category. While most of the U.S. is either natural or dual-benefit land, there are many counties that have a very small amount of these land types. Each county, state and region will have a very different land coverage, and thus a very different risk profile that must be managed. This distribution is shown in Fig. (5).

Fig. (5).

Land-type Risk values break-down by time span. Each panel represents a specific land type within the counties over the same 5-year blocks of time. The left column represents risk to developed lands alone, the middle column represents risk to dual-benefit lands alone, and the right column represents risk to natural lands alone. In each map, darker colors indicate higher risk values, or a combination of exposure and losses for each land-type.

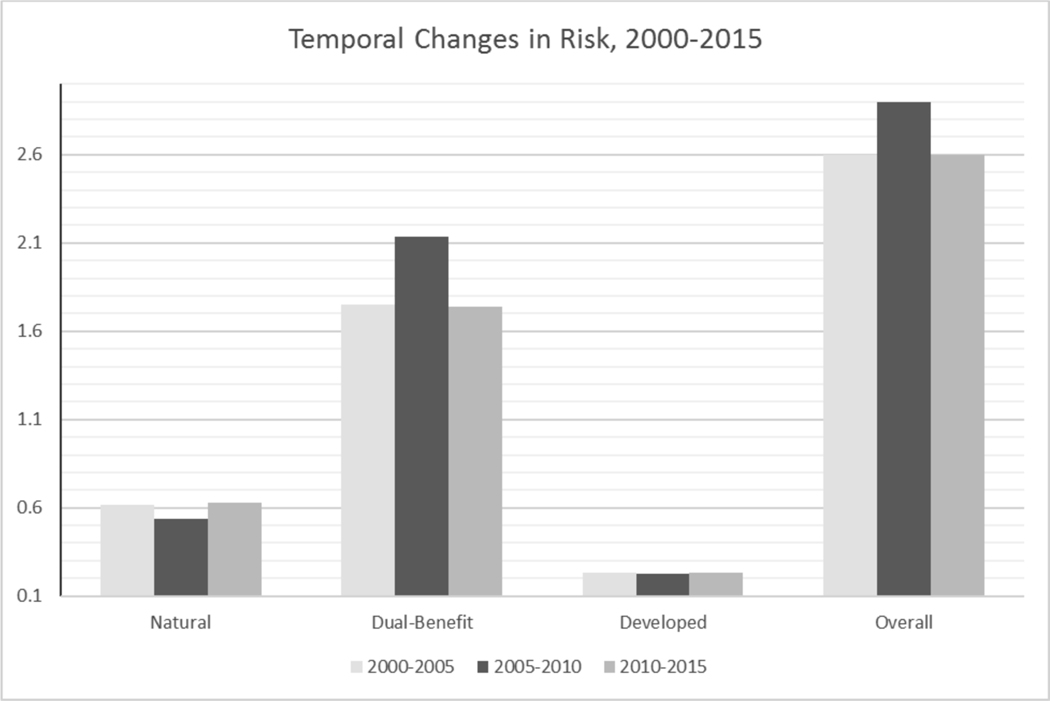

Temporal trends for PRISM across the three 5-year time periods (Fig. 4) show that risk to dual-benefit lands consistently encompasses a majority of the overall score. Through each time period, both natural and developed land risk scores remain relatively low and unchanged. This is relatively surprising given the rise in sprawl and increased population in urban areas. Dual-benefit, however, experiences a spike in the 2005–2010 period. This spike is also evident in the overall score, despite both natural and developed lands experiencing a slight risk decrease over the same period. Reasons for this increase could be tied to changes in extent and severity of drought and wildfires, which impact much of the dual-benefit lands during this time period.

Fig. (4).

Graph of Temporal Risk Changes across each land type and the overall PRISM Score.

In addition to temporal patterns, there are clear patterns of risk distribution among the three land types at both a regional and a county level. Regionally, dual-benefit and natural lands dominate different parts of the country. Most of the Western U.S. is predominantly natural, while the Midwest and up into portions of the Northeast is primarily dual-benefit. As suspected, the developed lands, except for a few pockets in the interior, exist almost entirely along the coastline. Few counties are homogeneous; most instead have a mix of the three land-types.

Using a risk measure weighted by land-type distribution it is possible to adjust for county and regional patterns and better assess the extent of threat without exaggerating the impact of hazards on developed areas where monetary losses may be higher. The two forms of analysis, hazard-exposures and land-use types, are examined here using exposure and loss layers for the U.S. between 2000 and 2015. First, we will examine land-type changes and the accompanying shift in risk profiles that occur as the result of development and exposure types. Second, we conduct a temporal analysis that examines changes in the natural and technological risks. In each of these examples, the goal is to demonstrate flexibility and display the unique data produced for the analysis of multiple hazards.

The effects of county land-type composition are demonstrated through a series of maps (Fig. 5), showing risk scores by land-use type for each of the three time-periods. Comparing these maps to the data in Fig. (3) can be used to address the distribution of risk versus the overall risk values, especially in the case of dual-benefit lands. While some increases in the spatial distribution of risk to developed lands occur over the fifteen years, natural risk seems unchanged. While this matches up with the overall scores through time, the dual-benefit lands seem to exhibit a drastic spatial shift in risk from Eastern counties to Western counties. In the first time-period, risk in this category is primarily observed in the Eastern portions of the country. As time progresses, the areas of higher risk move Westward and appear to increase substantially along the West coast by the final time period.

Large spatial shifts like this are important to look at in more detail. Despite a relatively unchanged overall risk profile for the country, the land-type specific burden does appear to have shifted regionally. With regard to planning, a shift of this magnitude could also indicate that areas not normally exposed to hazards are now experiencing impacts and could be unprepared.

This national analysis is important from the perspective of regional and temporal changes in risk; however, it is a compilation of multiple values across many geographies and years. To showcase the value of an integrated multi-hazard approach, a more in-depth investigation of the risk components is also conducted using smaller geographic areas with higher risk scores. Two areas stand out in the overall approach and are analyzed in more detail: segments of the Southwest and the Gulf Coast of Louisiana. This breakdown of risk components from the final score will call out specific hazards and risk areas and demonstrate why single risk values are valuable, yet require decomposition. Using a smaller number of counties, the top 100 in overall risk, allows for better analysis of their unique risk profiles.

3.2. High-Risk County Analysis

The top 100 risk counties in the U.S. represent twenty-three states, yet four states account for over fifty percent of this group. These four are, in order from most to fewest counties, Louisiana, Nevada, California, and Texas. Despite the similar overall risk scores, the two highest risk states, Nevada and Louisiana, have considerably different exposure profiles. In Louisiana, exposures from anthropogenic hazards are considerably higher than in Nevada, with an average score of 0.067 versus 0.005. This is especially true in dual-benefit lands, where the exposure is more than 30 times greater (0.003 in NV and 0.103 in LA). Basic exposure in Louisiana (2.47) is also more than double that of Nevada (1.20). Driving the anthropogenic hazards in Louisiana is a significantly higher exposure to Superfund sites along the coast, along with higher exposures to nuclear and RCRA sites. Elevated basic exposure in Louisiana is based on low-lying land threatened by both coastal and inland flooding. This produces a potentially dangerous combination in which natural hazard exposures could exacerbate risk through chemical or other toxic contamination related to these sites. In Nevada, the exposure from natural hazards (2.68) is larger than in Louisiana (2.08), driven largely by fire and drought, which are closely related and cover a majority of the state’s land area.
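The comparisons quoted above can be checked with a few lines of Python. The dictionary keys are labels introduced here for illustration; the values are the state averages reported in this section.

```python
# Average exposure scores reported for the high-risk counties in each state.
profiles = {
    "LA": {"anthropogenic": 0.067, "anthropogenic_dual_benefit": 0.103,
           "basic": 2.47, "natural": 2.08},
    "NV": {"anthropogenic": 0.005, "anthropogenic_dual_benefit": 0.003,
           "basic": 1.20, "natural": 2.68},
}

# Ratio of the Louisiana value to the Nevada value for each exposure measure.
ratios = {key: profiles["LA"][key] / profiles["NV"][key] for key in profiles["LA"]}

for key, ratio in ratios.items():
    print(f"{key}: LA/NV = {ratio:.2f}")
```

Ratios above one indicate measures where Louisiana exceeds Nevada; only the natural hazard exposure falls below one.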

While the counties examined in these two states are similar in overall risk, it is helpful to examine the exposure metrics driving this risk. Changing patterns in risk drivers may require considerably different strategies when it comes to mitigation efforts. In Nevada, for instance, wildfires and drought would be handled through efforts such as prescribed burns, removal of undergrowth and potential fuel sources, and treatment of surfaces to minimize combustion. Louisiana would take a very different approach to mitigation by cleaning up hazardous waste sites, building flood control structures, and managing development in flood zones. In each instance, the end goal is the same, yet funding and policy would be handled in a different manner.

3.3. Regional and Hazard Specific Applications

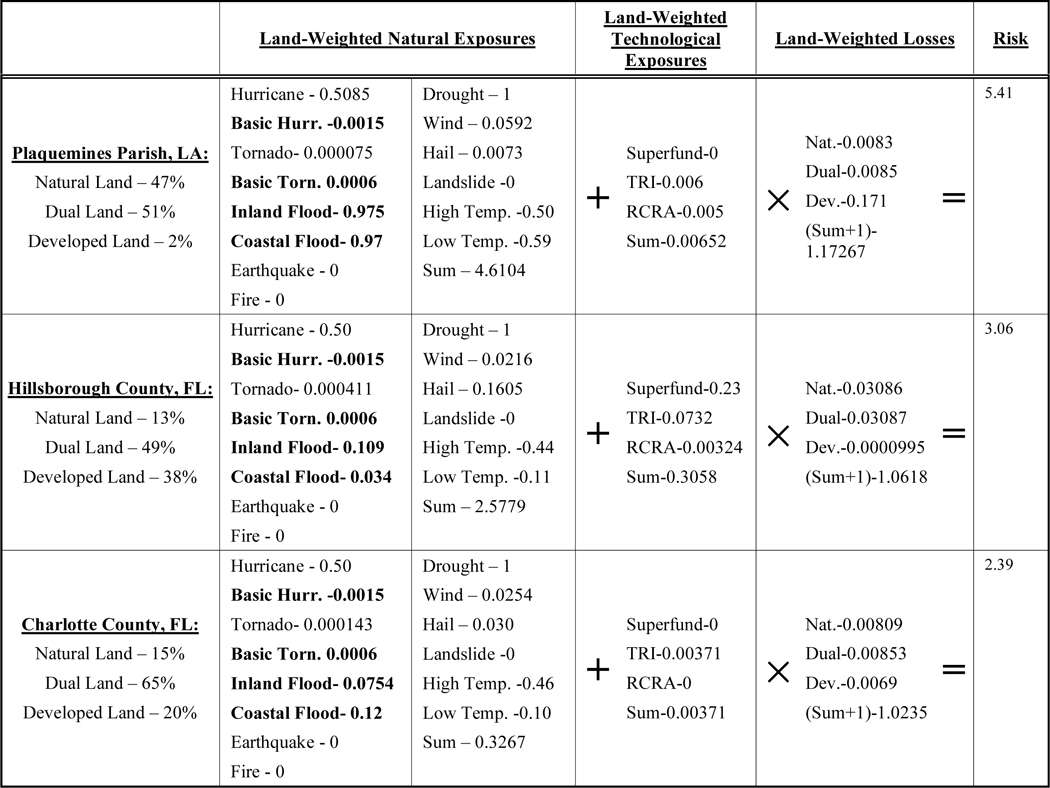

In addition to the inspection of the broader risk profiles, PRISM can also be used to analyze regional patterns or investigate hazard-specific spatio-temporal changes. A subset consisting of Gulf Coast counties is analyzed first to demonstrate the regional utility of PRISM. In this example, the representative subset consists of fifty-four coastal counties from five states bordering the Gulf of Mexico. Over the sixteen-year study period, the average PRISM score of these counties is 3.21, which is considerably higher than the national average of 2.7. From this set of counties, Plaquemines Parish, LA has the highest PRISM score (5.41) of the subset (second highest of the entire U.S.), while Charlotte County, FL has the lowest (2.39).

The PRISM scores in this example are deconstructed by land-type and risk domain (exposure and loss) to better analyze and compare patterns of risk. Looking first at the mean land-type risk, distinctions between counties become apparent. Developed land risk is low (mean = 0.22) apart from a few counties with significantly higher scores. Dual-benefit land appears to drive much of the overall risk, with a mean of 2.21 and a maximum of 5.21. Natural lands fall in between the two, with a mean risk score of 0.76. From an exposure perspective, the dual-benefit risk is likely driven by a reliance on saltwater marshes and other land-types associated with flood and storm protection in these coastal counties. With urban development and sea level rise threatening the hazard mitigation function of natural and dual-benefit lands, the risk of loss resulting from future exposures to spreading urban environments should be expected to increase. The trend toward higher risk in heavily urbanizing counties is already evident (Figure along the Gulf coast, where rapidly developing counties from the past decade also possess high risk values across all three land-type categories.

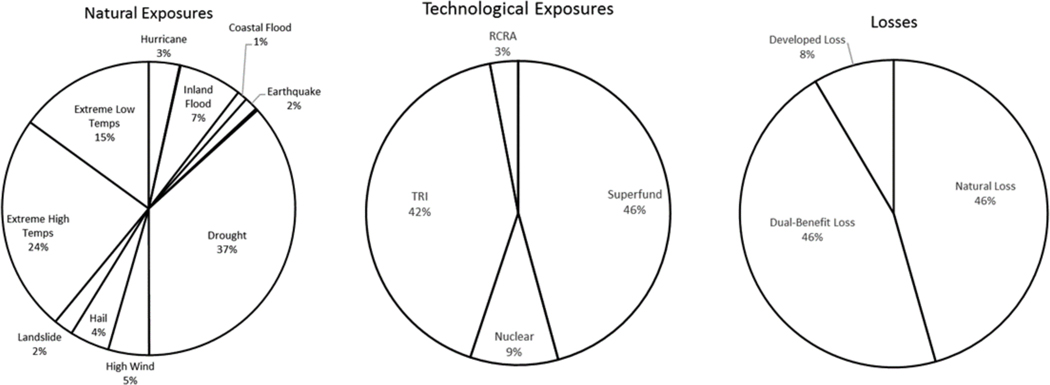

A deconstruction of the risk domains, exposure and loss, reveals yet more detail and another layer on top of the land-type analysis. Exposure measures are assessed independently to determine the extent of impact in an area and assess the relative distribution of exposure probabilities. An example of this can be seen in Fig. (7), with three pie charts that show the distribution of exposure and loss across each of the hazards and how these translate to losses by land type. What is striking about this graphic is both the sizeable amount of natural hazard exposure to drought and temperature extremes and the comparatively small amount of loss to developed lands. Despite these counties having higher populations and more infrastructure, the primary drivers of risk are related to temperature and rainfall events that impact crops, forests, marshes, and other natural lands not primarily related to the built environment. This is contrary to the standard measures of risk that focus on built environments and report monetary losses.

Fig. (6).

Gulf Coast Counties overall PRISM score. 54 counties in 5 states with risk estimate calculated from 16-year period (2000–2015). Darker colors indicate higher risk probability across all land-types for all hazard types.

Putting the two analyses together paints a more holistic picture of risk in a region presented with a number of potential exposures. Risk location is explained through closer analysis of exposure types along with land-type characteristics. Many areas of the Gulf coast are sparsely populated, yet have numerous vulnerable natural resources. This vulnerability shows up in the deconstruction of PRISM exposure where a bulk of the losses occur in dual-benefit lands as a result of temperature and rainfall events. In a location where hurricanes are what most people would consider the primary risk concern, the actual exposure threats are quite different when compared alongside the full spectrum of exposures and losses.

To best capture the influence of exposure extents, land types, and losses, Table 4 shows the land-weighted, scaled values associated with three of the Gulf Coast counties: Plaquemines Parish, LA (highest), Charlotte County, FL (lowest), and Hillsborough County, FL (middle). The numbers represent exposure and loss values already weighted by land-use type. Land-use percentages are shown under the county names to provide some insight into the differences between the counties. Interestingly, the county with the least developed land, Plaquemines, has the highest level of exposure to natural hazards and the highest loss values.

Table 4.

Example of PRISM calculation for Plaquemines Parish, Hillsborough County, and Charlotte County to display the influence of exposure and loss changes on the final score. Land-use percentages are shown in left column with county names. Bolded natural exposures represent basic exposures in the model. All exposures and losses are land-weighted prior to entry in this example.

There are a few key measures easily identifiable and responsible for the differences in the final risk scores between these three examples. What separates Plaquemines Parish from the other two counties is both the flood risk and losses in developed lands. Although Hillsborough and Charlotte counties have similarly intermediate levels of exposure, these are relatively small in comparison. This is largely the result of Plaquemines’ situation along the Mississippi River delta and the Gulf of Mexico. The parish encompasses most of the delta and is experiencing significant land losses due to both rising sea levels and land subsidence (Zou et al. 2016). These losses put most land in the parish at great risk from both riverine and coastal flooding as a result of decreased buffers provided by the marsh. Developed loss values for Plaquemines Parish also stand out as significant compared to the other two counties. This is remarkable given the small land percentage designated as developed. Losses to both human life and property are significantly higher than in most other counties. Knowing this helps focus efforts by highlighting the intersections of exposure and loss.

The other key difference, and a feature that distinguishes the PRISM method, is the handling of losses as a function of exposure. High exposure potential can be tempered by low losses, just as lower exposure potential can be greatly inflated by high losses. For example, if losses comparable to Charlotte County (1.02 instead of 1.17) were experienced in Plaquemines Parish, the resulting risk would be 4.7. Similarly, if Charlotte County were to increase losses to a level comparable to Plaquemines, the overall risk score would be 2.72. These examples provide some evidence of the utility of PRISM in the identification of highest exposure risks and, more importantly, in the determination of causal relationships driving the losses. Information gleaned from this analysis can be applied to more targeted mitigation actions.
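The loss substitution described above can be approximated with a short Python sketch. It assumes, as a simplification, that the overall score is the product of a single county-level exposure value and a single loss value; the actual calculation is carried out per land type and then weighted, so the function name and the scaling shortcut are illustrative only.

```python
def rescale_risk(score: float, observed_loss: float, substituted_loss: float) -> float:
    """Swap the loss value while holding the implied exposure constant."""
    implied_exposure = score / observed_loss  # assumes score = exposure * loss
    return implied_exposure * substituted_loss


# PRISM scores and loss values quoted in the text.
plaquemines = rescale_risk(score=5.41, observed_loss=1.17, substituted_loss=1.02)
charlotte = rescale_risk(score=2.39, observed_loss=1.02, substituted_loss=1.17)

print(round(plaquemines, 2))  # 4.72
print(round(charlotte, 2))    # 2.74
```

Under this simplification the Plaquemines value agrees with the 4.7 reported above, while the Charlotte value (2.74) is close to, but not identical with, the reported 2.72; the small difference may reflect the land-type weighting that the shortcut ignores.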

4. DISCUSSION

While there are existing multi-hazard approaches, a surface-based exposure approach melding multiple natural and technological hazards is novel and produces results that are highly adaptive and scalable. In a decision-making context, this makes the data useful for planning and analysis at the county, state and regional level. Resilience is defined through a regional ecology approach, where external forces act on a community, and the interactions with neighboring places for support are significant in recovery (Holling 1986). In this context, it makes sense to expand the reach of hazards integration across the entirety of the United States. From a policy standpoint as well, it is logical to analyze hazards at the minimum of a regional scale. Many disasters far exceed local capacity to rebound, and regional or national resources are needed to aid recovery in both the short and long terms. As development in areas of high exposure probability increases (e.g. coastal areas), the losses will continue to rise and strain development goals and the ability to mitigate (Miletti 1999).

In addition to policy concerns, there is also the consideration of rapidly changing landscapes and shifting hazard-scapes, making it critical to have an approach that can be updated on a regular basis.

A good example of this would be through examination of county losses. Knowing that per capita human or property loss numbers increase over time only tells part of the story. The other part is told by the change in land-use or hazard exposure. Without a more detailed analysis, there is no way to know whether the increased losses are the result of expanding urban areas where hazards occur, increased exposure to hazards due to a change in patterns, or simply the existence of a new hazard. By accounting for each of these factors, the PRISM approach allows for a more detailed examination of all exposure types, and is capable of answering these types of questions.

PRISM results can also facilitate decisions at the county, state or regional level through use of a comparative value system that is consistent across the United States. For a large-scale multi-hazard approach, this is an incredibly important step that removes conflicts arising from qualitative or region-specific assessments of hazard severity. In PRISM, exposure is calculated along with other hazard parameters, where applicable (e.g. wind speed, earthquake magnitude, etc.), to assess magnitude, which allows for comparability across hazard types. Because of this uniform exposure assessment, regional disparities in exposure type do not impact the analysis. Impact in the form of spatial extent and loss, not severity of the event, matters. It does not matter if a category 2 or a category 5 hurricane hits a county. What matters is how many people, structures, and other environments are in the path, along with the losses sustained as a result of the exposure. If a county is well prepared for the hazard, it would be expected that losses are lower. It is very possible, following this logic, for a county exposed to a category 3 storm to have greater losses than another county exposed to a category 5 storm. In the end, the strength of the storm is of secondary concern to the extent and type of losses endured. For this reason, the storm severity is only used as a threshold value to determine risk. It is not used to categorize the exposures.

While the probability of hazard recurrence is not solely based on historical frequencies, historical frequency does aid in the discussion both from an exposure standpoint and in the determination of disaster fatigue. The impact of multiple events over a relatively short period of time can also have a major impact on a community’s recovery and future resilience (Bobrowski 2013). People are likely to be worn down, resulting in loss of business and potential emigration from the area. Long-term impacts to the economy and social networks may take years to recover from, if recovery occurs at all.

While the PRISM model was developed with a specific purpose, the final product can yield much greater use in general hazards planning. Aside from the consideration of potential hazard-type exposure to an area, it is equally important to consider the land types being impacted. All places are different, and this makes for differences in their vulnerabilities. In most cases, risk is assessed solely with regard to developed lands. People worry primarily about impacts to lives, jobs, and structures, while risk models fail to separate out the landscape to account for different land uses. The PRISM model uses three land classification categories to look at risk in the context of management decisions. The three choices for land use were meant to distinguish and call attention to places where conflicts of interest may arise. Dual-benefit lands, in particular, are defined by their combined value to both humans and ecosystem health. These lands typically do not hold many people and are neglected in most discussions of risk, whether natural or anthropogenic. Much of this land type is threatened, however, and this threat appears to be on the increase. Looking at the descriptive statistics for each land type, it is also clear that dual-benefit lands are the driver behind the overall risk value.

Given the challenges discussed here, a new approach to multi-hazard assessment is outlined with an intent to create a single measure of hazard risk for a location to be incorporated into further assessments of resilience, namely the Climate Resilience Screening Index (CRSI; Summers et al. 2017). This model uses an integrated spatial approach to assess multi-hazard exposure of a landscape to distill a single value representing a cross-section of this exposure with the vulnerabilities across counties. This is a novel approach meant to streamline the assessment of multi-hazard risks via the removal of both subjective impact measures and complicated hazard maps.

Fig. (7).

Three pie charts showing distribution of exposure and loss across fifty-four counties bordering the Gulf of Mexico. The percentages represent the distribution of average exposure probability for each hazard type between 2000 – 2015.

ACKNOWLEDGEMENTS

Declared none.

Footnotes

ETHICS APPROVAL AND CONSENT TO PARTICIPATE

Not applicable.

HUMAN AND ANIMAL RIGHTS

No Animals/Humans were used for studies that are the basis of this research.

CONSENT FOR PUBLICATION

Not applicable.

CONFLICT OF INTEREST

The authors declare no conflict of interest, financial or otherwise.

REFERENCES

- Batista MJ, Martins L, Costa C, Relv AM, Schmidt-Thome P, Greiving S, Fleishauer M, Peltonen L. 2004. Preliminary results of a risk assessment study for uranium contamination in central Portugal. Proceedings of the International Workshop on Environmental Contamination from Uranium Production Facilities and Remediation Measures, ITN/DPRSN, Lisboa. February 11–13, 2004.

- Bell R, and Glade T. 2011. Multi-hazard analysis in natural risk assessments. WIT Transactions on State of the Art in Science and Engineering 53: 1–10. [Google Scholar]

- Bobrowski P, ed. 2013. Encyclopedia of Natural Hazards. 1135 p. Springer. [Google Scholar]

- Bove M, O’Brien J, Eisner J, Landsea C, and Niu X. 1998. Effect of El Nino on U.S. Landfalling Hurricanes, Revisited. Bulletin of the American Meteorological Society 79(11): 2477–2482. [Google Scholar]

- Brooks H. 2004. On the Relationship of Tornado Path Length and Width to Intensity. Weather and Forecasting 19: 310–319. [Google Scholar]

- Burton I, and Kates RW. 1964. The Perception of Natural Hazards in Resource Management. Natural Resources Journal 3(3): 412–441. [Google Scholar]

- Chester DK, Degg M, Duncan AM, Guest JE. 2000. The increasing exposure of cities to the effects of volcanic eruptions: a global survey. Global Environmental Change Part B: Environmental Hazards 2(3): 89–103. [Google Scholar]

- Cutter SL, and Solecki WD. 1989. The national pattern of airborne toxic releases. Professional Geographer 41(2): 149–161. [Google Scholar]

- Cutter SL. 1996. Vulnerability to environmental hazards. Progress in Human Geography 20(4): 529–539. [Google Scholar]

- Cutter S, Burton C, and Emrich C. 2010. Disaster Resilience Indicators for Benchmarking Baseline Conditions. Journal of Homeland Security and Emergency Management 7(1): 51-51. [Google Scholar]

- Federal Emergency Management Agency (FEMA). 2009. National Earthquake Hazards Reduction Program Recommended Seismic Provisions for New Buildings and Other Structures (FEMA P-750). [Google Scholar]

- Federal Emergency Management Agency (FEMA). 2013. Multi-hazard Loss Estimation Methodology: Flood Model Hazus-MH Technical Manual. Department of Homeland Security, Federal Emergency Management Agency Mitigation Division. Washington, D.C. [Google Scholar]

- Garschagen M, and Romero-Lankao P. 2015. Exploring the relationships between urbanization trends and climate change vulnerability. Climate Change 133(1): 37–52. [Google Scholar]

- Greiving S, Fleischhauer M, Lückenkötter J. 2006. A methodology for an integrated risk assessment of spatially relevant hazards. Journal of Environmental Planning and Management 49(1): 1–19. [Google Scholar]

- Güneralp B, Güneralp I, Liu Y. 2015. Changing global patterns of urban exposure to flood and drought hazards. Global Environmental Change 31: 217–225. [Google Scholar]

- Hawbaker T, Vanderhoof M, Beal Y, Takacs J, Schmidt G, Falgout J, Williams B, Brunner N, Caldwell M, Picotte J, Howard S, Stitt S, and Dwyer JL. 2017. Landsat Burned Area Essential Climate Variable products for the conterminous United States (1984 – 2015): U.S. Geological Survey data release, 10.5066/F73B5X76. [DOI] [Google Scholar]

- Hazards and Vulnerability Research Institute (HVRI). 2016. Spatial Hazard Events and Losses Database for the United States, Version 15.2. [Online Database]. Columbia, SC: Hazards and Vulnerability Research Institute, University of South Carolina. [Google Scholar]

- Hewitt K, and Burton I. 1971. The Hazardousness of a Place: A Regional Ecology of Damaging Events. University of Toronto Press. [Google Scholar]

- Hey D, and Philippi N. 1995. Flood Reduction through Wetland Restoration: The Upper Mississippi River Basin as a Case History. Restoration Ecology 3(1): 4–17. [Google Scholar]

- Holling CS. 1986. The resilience of terrestrial ecosystems; local surprise and global change. eds. Clark WC and Munn RE. Sustainable Development of the Biosphere. Cambridge University Press, Cambridge, U.K. [Google Scholar]

- Homer C, Dewitz J, Yang L, Jin S, Danielson P, Xian G, Coulston J, Herold N, Wickham J, and Megown K. 2015. Completion of the 2011 National Land Cover Database for the conterminous United States-Representing a decade of land cover change information. Photogrammetric Engineering and Remote Sensing 81(5): 345–354. [Google Scholar]

- Field C, Barros V, Stocker B, Qin D, Dokken K, Mastrandrea M, Mach K, Plattner S, Allen M, Tignor M, and Midgley P eds. 2012. Managing the Risks of Extreme Events and Disasters to Advance Climate Change Adaptation. A Special Report of Working Groups I and II of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, UK, and New York, NY, USA. [Google Scholar]

- Kappes M, Keiler M, Elverfeldt K, and Glade T. 2012. Challenges of analyzing multi-hazard risk: a review. Natural Hazards 64: 1925–1958. [Google Scholar]

- King P, and Beikman H. 1974. Geologic map of the United States (exclusive of Alaska and Hawaii) U.S. Geological Survey, scale 1:2,500,000, 2 sheets. [Google Scholar]

- Lall SV, Deichmann W. 2010. Density and Disasters: Economics of Urban Hazard Risk. The World Bank Research Observer 27(1): 74–105. [Google Scholar]

- Marzocchi W, Garcia-Aristizabal A, Gasparini P, Mastellone M, and Di Ruocco A. 2012. Basic principles of multi-risk assessment: a case study in Italy. Natural Hazards 62: 551–573. [Google Scholar]

- Miletti D. 1999. Disasters by design: A reassessment of Natural Hazards in the United States. Joseph Henry Press. Washington, D.C. [Google Scholar]

- National Drought Mitigation Center (NDMC), U.S. Department of Agriculture (USDA), National Oceanic and Atmospheric Administration (NOAA). 2017. United States Drought Monitor Database. http://droughtmonitor.unl.edu/MapsAndData/GISData.aspx. [Google Scholar]

- National Weather Service (NWS). 2007. Storm Data Preparation. Directive National Weather Service Instruction 10–1605. National Weather Service. [Google Scholar]

- National Weather Service (NWS). 2016. Severe Weather Database Files (1950–2016). NOAA NWS. http://www.spc.noaa.gov/wcm/#data . [Google Scholar]

- Parris A, Bromirski P, Burkett V, Cayan D, Culver M, Hall J, Horton R, Knuuti K, Moss R, Obeysekera J, Sallenger A, and Weiss J. 2012. Global Sea Level Rise Scenarios for the US National Climate Assessment. NOAA Tech Memo OAR CPO-1. http://cpo.noaa.gov/sites/cpo/Reports/2012/NOAA_SLR_r3.pdf. [Google Scholar]

- Radbruch-Hall D, Colton R, Davies W, Lucchitta I, Skipp B, and Varnes D. 1982. Landslide Overview Map of the Conterminous United States, Geological Survey Professional Paper 1183. U.S. Geological Survey, Washington.

- Reser JP. 2007. The Experience of Natural Disasters: Psychological Perspectives and Understandings. In: Lidstone J, Dechano LM, and Stoltman JP eds. International Perspectives on Natural Disasters: Occurrence, Mitigation, and Consequences. Advances in Natural and Technological Hazards Research vol 21. Springer, Dordrecht.

- Schaefer J, Kelly D, and Abbey R. 1986. A minimum assumption tornado-hazard probability model. Journal of Climate and Applied Meteorology 25: 1934–1945.

- Schmidt-Thome P (Ed.) 2006. ESPON Project 1.3.1 – Natural and technological hazards and risks affecting the spatial development of European regions. Geological Survey of Finland.

- Simpson R, and Riehl H. 1981. The Hurricane and Its Impact. Louisiana State University Press: Baton Rouge and London.

- Tate E, Burton C, Berry M, Emrich C, and Cutter S. 2011. Integrated Hazards Mapping Tool. Transactions in GIS 15(5): 689–706.

- Thierry P. 2008. Multi-hazard risk mapping and assessment on an active volcano: the GRINP project at Mount Cameroon. Natural Hazards 45(3): 429–456.

- U.S. Geological Survey (USGS). 2003. Earthquake Hazards Program: U.S. Seismic Design Maps. U.S. Geological Survey. doi: 10.5066/F7MC8X8S.

- U.S. Nuclear Regulatory Commission (USNRC). 2014. Emergency Preparedness at Nuclear Power Plants. www.nrc.gov.

- Watson JT, Gayer M, and Connolly MA. 2007. Epidemics after Natural Disasters. Emerging Infectious Diseases 13(1): 1–5.

- Weatherford C, and Gray W. 1988. Typhoon structure as revealed by aircraft reconnaissance. Part II: Structural variability. Monthly Weather Review 116: 1044–1056.

- Zhu P. 2008. A multiple scale modeling system for coastal hurricane wind damage mitigation. Natural Hazards 47: 577. doi: 10.1007/s11069-008-9240-8.

- Zou L, Kent J, Lam NS, Cai H, Qiang Y, and Li K. 2016. Evaluating Land Subsidence Rates and Their Implications for Land Loss in the Lower Mississippi River Basin. Water 8(1): 10–25.