Abstract

Background

Although surveillance systems used to mitigate disasters serve essential public health functions, communities of color have experienced disproportionate harms (eg, criminalization) as a result of historic and enhanced surveillance.

Methods

To address this, we developed and piloted a novel, equity-based scoring system to evaluate surveillance systems regarding their potential and actual risk of adverse effects on communities made vulnerable through increased exposure to policing, detention/incarceration, deportation, and disruption of access to social services or public resources. To develop the scoring system, we reviewed the literature and surveyed an expert panel on surveillance to identify specific harms (eg, increased policing) that occur through surveillance approaches.

Results

Scores were based on type of information collected (individual and/or neighborhood level) and evidence of sharing information with law enforcement. Scores were 0 (no risk of harm identified), 1 (potential for risk), 2 (evidence of risk), and U (data not publicly accessible). To pilot the scoring system, 44 surveillance systems were identified between June 2020 and October 2020 through an environmental scan of systems directly related to COVID-19 (n=21), behavioral and health-related services (n=11), and racism and racism-related factors (n=12). A score of 0-2 was assigned to 91% (n=40) of the systems; 9% were scored U; 30% (n=13) scored a 0. Half scored a 1 (n=22), indicating a “potential for the types of harm of concern in this analysis.” “Evidence of harm,” a score of 2, was found for 12% (n=5).

Conclusions

The potential for surveillance systems to compromise the health and well-being of racialized and/or vulnerable populations has been understudied. This project developed and piloted a scoring system to address this equity-based imperative. The nobler pursuits of public health to improve health for all must be reconciled with these potential harms.

Keywords: COVID, Policing, Big Data, Health Inequities/Health Equity, Racism

Introduction

In the United States, securing and managing land, resources, and populations to the benefit of white supremacy occurs through policing.1–4 The public health field has recently recognized that policing harms the well-being of Black, Indigenous, and People of Color (BIPOC) communities in myriad ways: it increases exposure to violence (eg, shootings), mental distress (eg, from the consistent threat of harassment), and the risk of incarceration,5–7 detention, deportation, and denial or disruption of services. Surveillance is central to mediating these harms, yet the role of surveillance in policing is underappreciated within public health.8–10 Surveillance is widely used within public health and integrates many if not all public health functions, in particular those needed during disasters such as COVID-19.11–14 Within health sciences, public health surveillance has been an essential state function for preventing and mitigating adverse health outcomes among groups (at the community, local, state, and national levels).

Efforts to monitor and mitigate public health disasters that intersect with policing can harm BIPOC communities by stigmatizing them and encouraging law enforcement to intervene (eg, incarceration, deportation). Given these connections, what then is the relationship between public health, surveillance, and policing? As public health data systems are expanding and being integrated into other surveillance systems (and as pandemics emerge), it is important for the public health workforce to be aware of these connections: specifically, to know the role of data in policing and its points of intersection with public health. Though the need exists, we are aware of no systematic approach within the field to assess these connections and their associated harm of increased exposure to incarceration, detention, deportation, and denial/disruption of services (eg, access to housing). This article explains how a novel scoring system was developed to guide the assessment of these potential harms and presents the results of a pilot study that applied the scoring system to COVID surveillance systems and other surveillance tools used to track health inequities. The scoring system assesses the potential for public health systems to contribute to harm in BIPOC communities through information use by and sharing with law enforcement.

Below, we define surveillance-related harm due to policing, discuss its history and its relationship to public health crises, and present the novel scoring system development and pilot results.

Surveillance-Related Harm: History and Intersections of Policing, Racism, and Public Health

In 2018, the American Public Health Association declared that policing harms BIPOC communities in myriad ways. It increases mental distress due to, for instance, the persistent threat of police harassment (eg, stop and frisk), exposure to violence from law enforcement, and increased risk of incarceration, detention, deportation, and denial/disruption of services.5–7 Surveillance plays a key role in enabling these harms, yet little evidence exists of the effects of such surveillance on public health crises, in particular inequities in infectious disease disasters. Surveillance, as the systematic monitoring of groups for a wide array of factors, is used within public health (public health surveillance) as well as in policing. Surveillance-related harm attributable to policing occurs in a number of ways, including physical violence (eg, beatings and shootings); mental distress (eg, due to perpetual risk of being stopped and frisked by police); incarceration or detention by local or federal authorities (eg, US Immigration and Customs Enforcement [ICE]); and denial or disruption of access to services (eg, public housing). The literature summarized below indicates it is important to consider (1) the historical relationship between surveillance, racism, and public health; (2) the contemporary relationships between surveillance, policing, and the technology industry; and (3) recent examples of surveillance and criminalization specific to COVID-19.

Historically, the forms of state-sanctioned violence on which the United States was founded, which include settler-colonialism, chattel slavery, and segregation,3,8,15 relied heavily upon the surveillance technologies available at the time. Table 1 includes 3 historical examples of the relationship between surveillance, public health, and racism and describes the harms on both the individual and community level associated with the public health and law enforcement partnerships and sharing of information. Historically, elected officials and others believed that infectious conditions originated among or were exacerbated within racially stigmatized populations.16 Health surveillance and research provided the tools and data needed for other agencies, such as law enforcement, to take actions to protect the health and interests of more privileged, “desirable,” and white populations and corporations.

Table 1.

The intersection of public health surveillance, policing, and racism: historical and contemporary examples

| Community | Disease | Context | Public health surveillance | Surveillance-related harm |

| Historical cases | ||||

| Chinese residents of San Francisco late 1800s | Cholera | Chinese people stigmatized as diseased, culturally inferior, and risk to white communities | Public health maps produced “evidence” that city officials used to target, cite Chinese residents | Maps were used in the policing of Chinese residents, and data provided rationale for their economic and social exclusion |

| Mexican communities, Los Angeles 1930s | Tuberculosis and syphilis | Mexican communities were marked both ill and illegal, therefore a risk of disease transmission for “innocent,” “clean” residents | Health organizations tracked Mexican patients to determine whether they discontinued their syphilis and/or tuberculosis treatment regimens | Mexican patients not completing treatment were deported. This rationale also resulted in Mexican patients without tuberculosis being deported |

| Black Panther Party and Coalition activism early 1970s | Violence associated with “urban” centers | Government agencies sought to surveil and incarcerate actual and potential activists that were protesting and organizing against racial and other social injustices | UCLA sought to establish a Center whose framework was that violence is treatable through medical intervention by investing in data (ie, behavioral) for the study and treatment of “violent” persons | While activists challenged the development of the Center, medicalization of violence increases criminalization of BIPOC communities as it locates violence within them and not structures |

| Contemporary cases | ||||

| BIPOC community, predominantly Black; Detroit, MI37 | COVID-19 | Police already use facial recognition technology and cameras to surveil residents for actual or potential crime | Emergency stay-at-home orders issued to reduce COVID spread. Police used technology to identify noncompliance with COVID emergency orders | BIPOC disproportionately identified using police surveillance data for noncompliance, and fined (eg, $1000) and/or incarcerated |

| BIPOC community, predominantly Black; New York City, NY38 | COVID-19 | Before the pandemic, stop and frisk tactics used by New York police documented as racist and causing harm | Police enforce COVID social-distancing orders | Of 40 persons arrested for noncompliance with the orders, 35 were Black |

| Residents of Louisville, KY39 | COVID-19 | The GPS monitors were described as a better alternative to arresting and detaining persons | GPS ankle monitors were being used to enforce orders of isolation on individuals who tested positive for COVID-19 and were allegedly noncompliant with stay-at-home orders | Electronic monitoring has been known to have adverse effects for those who are shackled to it |

BIPOC, Black, Indigenous, and People of Color

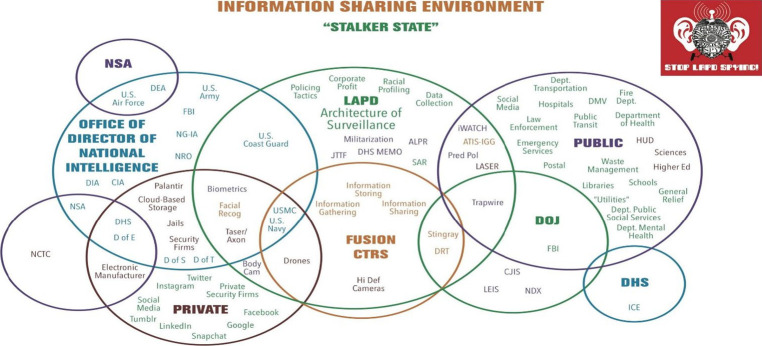

Surveillance strategies and rationales reflect the particular contexts of state crises and emergencies.17,18 In the last 3 decades, the “War on Terror” has bolstered the unprecedented expansion of the surveillance industry, and this is directly connected to policing in the United States.9,19,20 Since the passage of the 2004 Intelligence Reform and Terrorism Prevention Act, the technology industry has established the infrastructure needed to work across the public and private sectors in the United States, including policing. These changes have resulted in considerable and disproportionate surveillance of BIPOC communities, borders, and nations, as their land and movement are believed to be simultaneously desirable (eg, for gentrification or resources) and threatening (eg, needing policing or military intervention).9,21–23 For example, Palantir, a US-based software company founded in 2003 with multiple defense, law enforcement, and enforcement-related contracts, has drawn concern from both its own employees and the general public over the use of its software in the apprehension and detention of migrants at the US-Mexico border.24,25 US Immigration and Customs Enforcement (ICE) has become the largest domestic surveillance agency in the nation.26 Securitization is historical and racially complex, connecting histories of colonialism and imperialism. While it is not new, it has scaled up considerably, resulting in a complex “information-sharing environment” with many public health and equity-relevant consequences. The mechanisms by which it operates are typically obscured, with abolitionist organizations such as Stop LAPD Spying Coalition seeking to illuminate these mechanisms. Figure 1 describes the surveillance architecture by which such data sharing occurs in Los Angeles County and connects this to national and global institutions. In this diagram, “private” and “public” refer to how these entities are funded and do not describe data availability.

Figure 1.

Information-sharing environment: the Stalker State

Source: Developed by StopLAPDspying.org and reproduced with permission from Stop LAPD Spying Coalition

As with historical epidemics, surveillance efforts to mitigate the COVID pandemic continue to lead to heightened surveillance of BIPOC communities owing to racist assumptions about disease and criminality among them. The implications are exacerbated by the dominance of the surveillance industry, which criminalizes diverse populations more efficiently. The industry has been expanding its focus to manage public health disasters.27 For example, Palantir has been awarded COVID-19–related contracts and has sought pandemic management contracts in other countries as well.28,29 There are many reasons why adherence to COVID-19 mitigation and containment strategies has been challenging for racially marginalized communities.30–32 These challenges include overrepresentation in essential workforces that had to work in person and underrepresentation in workforces that allowed working from home or remotely.33,34 In addition, communities of color were overrepresented in dense and overcrowded housing and in settings where disease transmission risk was high, such as prisons, jails, and detention centers.35,36 In BIPOC communities, residents who do not comply with COVID-19 public health strategies experience heightened risk of being cited or arrested for the violations (Table 1).37–39

Development of the Novel Scoring System

To develop the scoring system and establish the criteria for each score, we conducted a review of the literature on the intersections between race and risk assessments and convened an expert panel. The scores were developed to assess public health surveillance systems' potential to facilitate harm through increased exposure to policing, detention/incarceration, deportation, and disruption of access to social services or public resources. The scores assess harm by the type of data collected and whether the data are used by or shared with law enforcement or law enforcement–like agencies. We consider a public health surveillance system to be any technology (eg, a database) that systematically collects health or health-related information on population well-being and well-being–related factors. Given our focus on equity-based work, this includes systems that examine structural racism or racism-related factors (such as discrimination).

The purpose of our literature review was to focus on race and risk assessments (including predictive tools such as algorithms). We reviewed and went beyond typical databases (such as PubMed, JSTOR, and LexisNexis) and identified publications produced by nonprofits and community organizations, ranging from mainstream reformist criminal justice entities to abolitionist ones that are referenced below. We focused on historic case studies of public health surveillance, racialization, and racism and identified contemporary examples of increased criminalization, stigmatization, and harm associated with COVID-19 surveillance and the expansion of the surveillance industry. Across all of these explorations were considerations regarding (1) de-identified data, confidentiality, and anonymity; (2) public versus private use datasets; (3) reproduction of racism within databases and analytic techniques; and (4) data used to develop predictive analytics and assessments.

We examined ideas raised in the literature with an expert panel on surveillance with whom we conducted three 2-hour sessions between December 2020 and January 2021. Expert panel members (N=8) were public health practitioners, epidemiologists, and community-based organizers. The members of the panel had extensive and wide-ranging expertise on surveillance: different members had developed public health surveillance systems, used surveillance systems to address health inequities or public health crises, and/or organized political education and community-based actions around police and surveillance abolition. Between 4 and 6 members of the expert panel participated in each session. Two members of the research team facilitated each session. The research assistants recorded panel discussions using Zoom and transcribed them verbatim. Two independent reviewers used NVivo, a qualitative analysis software, to code, identify themes, and discuss recurring points, which were later shared and clarified with the panel in subsequent meetings.

Prior evidence40–42 indicates widespread agreement that the growth of the surveillance industry is forming a new “data environment” characterized by (1) the development and adoption of new technologies; (2) the reliance on systems and strategies to increase data collection, sharing, and operability; (3) algorithms and other analytic strategies to use the data to “prevent” terrorism and/or crime; (4) a focus on infrastructure that allows for information to be digitized, shared, and communicated more rapidly; and (5) automation of racial profiling. The role of police officers here, then, is to aid in obtaining data to be used in this data environment.43 In other words, the police departments themselves are a source of data for surveillance.

In the development of the scoring system, we reviewed the literature to explore whether de-identified data within existing surveillance systems used by public health, in particular during crises, can aid law enforcement in finding specific individuals. Debates about whether the de-identification of surveillance data guarantees the anonymity of individuals exist in the literature; re-identification may be possible whether the data included are samples or entire populations.44–52 Additionally, we reviewed the growing development of and reliance on predictive tools in policing and prosecution for the type of data they use and whether they exacerbate the potential for harm. These tools purport to be able to assess future criminality by profiling individuals and their communities based on such information as the ZIP code and demographic characteristics (eg, employment history) and use data on neighborhoods and networks (social network analysis) to build associations across groups of people.53–56 Evidence on the effect of gang injunctions shows how these databases can have collateral consequences, such as longer sentences and denial of resources, and increase racial stigmatization.57 Finally, the review also raised concerns about an emerging practice among public health units: the adoption of policing-like applications (such as Citizen App) in COVID-19 contact tracing. These policing tools are known to racially profile neighborhoods. While contact tracing is an important public health strategy for disease prevention and mitigation, if COVID-19 data generated by this app were shared with law or immigration enforcers, could it in turn support large-scale criminalization of groups of people through network associations and impede long-term public health goals of community well-being?

The expert panel interviews shed light on the tension between public health needing to scale up and integrate surveillance systems, so that data can be readily available for use in real time during crises, and the uncertainty as to how law enforcement and social service institutions would use COVID-19 data, or public health data more broadly, to police, incarcerate/detain, deport, or deny access to housing and social services. Particularly salient to the development of the score are the potential implications of the increased value of data of all types for the development and implementation of tools and algorithms that automate risk profiling of individuals, neighborhoods, and communities. The literature supports these insights from the expert panel as well.22,58,59

Scoring System

Based on the literature review and expert panel results, the scoring system focused on operationalizing 2 characteristics that may contribute to harm when they co-occur. First, racial profiling occurs when data and algorithms are used to make decisions about the level of “criminality” or “eligibility” for social services of an individual or of members of a community. Therefore, the first criterion was whether the data are reported and therefore publicly available, at both the individual and neighborhood levels, which has the “potential” to harm either through data being associated with the individual or being used to predict how other individuals or neighborhoods would behave. The second criterion was whether the data are either used by or shared with law enforcement. Racial profiling occurs through the use of data and algorithms to make decisions about an individual's or neighborhood's “criminality” or “eligibility” for needed services. Law enforcement and others (eg, social service agencies such as those granting government subsidies) have used both neighborhood- (less than county-level) and individual-level data to assess risk.22,58,60 During COVID-19, we found evidence of police labelling neighborhoods as COVID-19 “hotspots” on the basis of COVID-19 data.61,62 There is the potential then for these marked neighborhoods to either attract more surveillance through policing or to result in police feeling more threatened by “hotspot” residents and therefore increasing police violence.

To score the evidence of risk associated with each system, we conducted searches of general and academic audience publications (such as newspapers and investigative-reporting sources) for reports of the specific data within that surveillance system being used or shared in a way that caused increased exposure to policing, incarceration, detention, deportation, and/or disruption/denial of social services. We also reviewed readily available documentation on each surveillance system's website for published information on data sharing and data use histories or policies. The risks are inherently greater than the information available would suggest because, for instance, future technologies may be able to exploit the data in ways we cannot currently anticipate. It is not possible to fully quantify the level of risk of any particular system based on the information that is publicly available. Therefore, our goal was to use publicly available information to identify the specific potential or actual harms of each surveillance tool being examined. The focus on publicly available data sources and evidence is important because it renders this approach readily adaptable by community-based organizations that may have limited access to academic and other sources of information.

The final scoring system is an ordinal measure of 3 levels of potential harm as indicated by the scores 0, 1, and 2: 0=the data are aggregated at the county level or greater; 1=a potential for harm exists because the system reports information about individuals or neighborhoods and shares this information with other agencies, though no evidence was found of data use by or sharing with law enforcement and/or resulting denial or disruption of social services; 2=both individual- and neighborhood-level information is reported and the search retrieved evidence that the data the system collected about individuals were used by or shared with law enforcement and/or resulted in the denial or disruption of social services. A fourth score, U, which is not included in the ranked levels, is also part of the measure; U was assigned to systems for which publicly accessible information was insufficient to determine how the data are reported.
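The decision rule described above can be sketched in code. This is a minimal illustration, not the authors' instrument: the class `SystemReview`, its field names, and the function `equity_score` are hypothetical, and the handling of a system that reports only county-level data yet has evidence of harmful use is an assumption, since the text does not address that combination.

```python
from dataclasses import dataclass
from typing import Union

Score = Union[int, str]  # 0, 1, 2, or the unranked code "U"


@dataclass(frozen=True)
class SystemReview:
    """Hypothetical record of what a reviewer found for one system."""
    name: str
    info_publicly_accessible: bool            # enough public documentation to score?
    reports_individual_or_neighborhood: bool  # data finer than county level?
    evidence_of_harmful_use: bool             # law enforcement use/sharing or service denial found?


def equity_score(review: SystemReview) -> Score:
    """Apply the 0/1/2/U rule as described in the text."""
    if not review.info_publicly_accessible:
        return "U"  # cannot be assessed from public information
    if not review.reports_individual_or_neighborhood:
        # Assumption: county-level-or-greater data scores 0 regardless of the
        # evidence flag; the source does not define this combination.
        return 0
    return 2 if review.evidence_of_harmful_use else 1


# Flags below follow the Yes/No columns reported in Table 3.
examples = [
    SystemReview("CDC COVID Data Tracker", True, False, False),
    SystemReview("CDC BRFSS", True, True, False),
    SystemReview("FBI Hate Crimes", True, True, True),
    SystemReview("CDC INFO Query", False, False, False),
]
scores = {r.name: equity_score(r) for r in examples}
```

Keeping U outside the integer range mirrors the description of U as a code that is not part of the ranked levels, so it cannot be averaged or sorted with the ordinal scores by accident.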

Applying and Piloting the Scoring System

We piloted the equity-based scoring system on 3 different types of public health surveillance systems: (1) COVID-19 monitoring systems (n=21), (2) non-COVID behavioral and health service surveillance systems (n=11), and (3) databases used in tracking racism and/or discrimination (n=12). Systems in this last category are not traditionally classified as public health systems. In recent years, however, public health scientists have been using nontraditional health surveillance systems, such as the Google Search Terms database by Google or Twitter Search by Twitter, to do public health equity–related work.63,64 We therefore included them in this pilot, confident that their functions and algorithms have been used heavily for these purposes.

For the purposes of the pilot, we applied the scoring system to the 44 public health surveillance systems identified via a series of environmental scans conducted between June and October 2020 to inform Centers for Disease Control and Prevention (CDC) COVID response and we excluded those with insufficient information available publicly to conduct the review. The environmental scan occurred as part of a larger project. For this larger project, a multidisciplinary team of experts in epidemiology, community health, critical race theory, policy, and data science were conducting a rapid environmental scan of existing surveillance systems on which to build a future COVID-19 surveillance system. This team of experts included technical monitors from the CDC. Expert input was intended to quickly assess the resources available for conducting public health surveillance at the time the environmental scan was undertaken. More detail about the methods used to conduct the environmental scans and the results are published elsewhere.65 Three members of the research team independently scored each system. The final scores were reached through iterative reviews of any discrepancies to achieve consensus. The section below summarizes the findings upon application of the scoring system to the surveillance systems identified through the series of environmental scans.

Results

The results of the pilot test are presented in Tables 2 and 3. The environmental scan identified 44 surveillance systems with sufficient information about them available publicly so that they could be scored (COVID-19 [n=21]; other behavioral and health service surveillance systems [n=11]; systems that track racism, discrimination, or stigma [n=12]). Overall, a score of 0-2, which indicates having enough minimal information to evaluate how the data are reported, was assigned to 91% (n=40) of the systems. Approximately one-third (30%) (n=13) scored a 0. Half were assigned a score of 1 (n=22), indicating a “potential for the types of harm of concern in this analysis.” “Evidence of harm” was found for 12% (n=5). Table 2 summarizes the results by type of surveillance system (ie, COVID, non–COVID-19, racism-based system), for those systems with sufficient information available; it excludes the n=4 (Amerispeak/NORC General Social Survey, CDC INFO Query, CDC National Syndromic Surveillance Program, and Porter Novelli/Summer Styles) that were scored U owing to not having easily accessible public-facing information.
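The percentages in this paragraph can be recomputed from the counts it reports. The sketch below assumes two candidate denominators, all 44 reviewed systems and the 40 systems that received a score of 0-2, because the text does not always state which one a given percentage uses; the variable and function names are illustrative only.

```python
# Counts as reported in the Results text (not recomputed from Tables 2 and 3).
reported_counts = {"0": 13, "1": 22, "2": 5, "U": 4}


def percent(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to 1 decimal."""
    return round(100 * part / whole, 1)


total_reviewed = sum(reported_counts.values())        # all systems in the scan
total_scored = total_reviewed - reported_counts["U"]  # systems scored 0-2

# Share of each score among all reviewed systems.
share_of_all = {s: percent(n, total_reviewed) for s, n in reported_counts.items()}

# Share of each ranked score among the systems that could be scored.
share_of_scored = {
    s: percent(n, total_scored) for s, n in reported_counts.items() if s != "U"
}

for score in reported_counts:
    print(score, share_of_all[score], share_of_scored.get(score, "n/a"))
```

Under these assumptions, the 5 systems scored 2 are 11.4% of all 44 systems and 12.5% of the 40 scored systems, so the choice of denominator matters when reading the reported 12%.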

Table 2.

Pilot of the Equity-Based Scoring System, N=44

| Score | COVID-19, n (%) | Non–COVID-19, n (%) | Racism, n (%) | Total, N |

| Number of reviewed systems | 21 (47.7) | 11 (25.0) | 12 (27.2) | 44 |

| Evidence of information-sharing risk<sup>a</sup> | ||||

| 0 - No risk was identified | 9 (69.2) | 2 (15.4) | 2 (15.4) | 13 |

| 1 - Potential for risk, no evidence | 10 (47.6) | 5 (23.8) | 6 (28.5) | 21 |

| 2 - Potential for risk, evidence | 2 (40.0) | 0 (0.0) | 3 (60.0) | 5 |

| U – Not scored | 0 (0) | 4 (80.0) | 1 (20.0) | 5 |

<sup>a</sup> Scores mean the following: 0=county-level or greater data reported; 1=potential for harm from individual or neighborhood data reporting, but no evidence found of law enforcement data use or sharing and/or denial or disruption of social services; 2=evidence of harm as individual/neighborhood data reported and evidence found of law enforcement data use or sharing and/or denial or disruption of social services. Four systems scored a U, indicating data were not publicly available for assessment.

Table 3.

Scores of the public health surveillance systems, N=39

| Surveillance system | System name, source (n=44) | Data reported on individual, neighborhood | Evidence of harm | Score<sup>a</sup> |

| COVID-19 (n=21) | 1point3acres | No | No | 0 |

| | CDC COVID Data Tracker | No | No | 0 |

| | CORD-19 | No | No | 0 |

| | COVID Tracking Project | No | No | 0 |

| | COVID-19 Demographic and Economic Analysis | No | No | 0 |

| | Google COVID-19 Public Forecast | No | No | 0 |

| | Johns Hopkins University - State Policy Tracker | No | No | 0 |

| | Johns Hopkins University Dashboard | No | No | 0 |

| | American Hospital Assoc. Hospital Capacity Dataset | Yes | No | 1 |

| | CDC Lab Confirmed COVID-19 Hospitalizations | Yes | No | 1 |

| | COVID-19 UCLA Law Behind Bars Tracking Project | Yes | No | 1 |

| | COVID-19 Case Surveillance | Yes | No | 1 |

| | COVID-19 In Virginia | Yes | No | 1 |

| | Harvard/Monash | Yes | No | 1 |

| | LA County Dept. of Public Health | Yes | No | 1 |

| | National Vital Statistics Program | Yes | No | 1 |

| | NCDHHS COVID-19 Response | Yes | No | 1 |

| | Tennessee Epidemiology and Surveillance Data | Yes | No | 1 |

| | US Census Household Pulse Survey | Yes | No | 1 |

| | Google, COVID-19 Community Mobility Reports | Yes | Yes | 2 |

| | Google, COVID-19 Search Symptoms Trends | Yes | Yes | 2 |

| Non–COVID-19, behavioral and health service (n=7) | CDC Influenza Surveillance | No | No | 0 |

| | Current Population Survey | No | No | 0 |

| | California Health Interview Survey | Yes | No | 1 |

| | CDC BRFSS | Yes | No | 1 |

| | Health & Retirement Study | Yes | No | 1 |

| | Nat. Longitudinal Study of Adolescent to Adult Health | Yes | No | 1 |

| | National Hospital Ambulatory Medical Care Survey | Yes | No | 1 |

| Racism, stigma, and discrimination (n=11) | Equal Employment Opportunity Commission | No | No | 0 |

| | STOP AAPI Hate | No | No | 0 |

| | American Community Survey | Yes | No | 1 |

| | Decennial Census | Yes | No | 1 |

| | Home Mortgage Disclosure Act | Yes | No | 1 |

| | Pew Research Center | Yes | No | 1 |

| | Project Implicit | Yes | No | 1 |

| | Youth Risk Behavior Surveillance Survey | Yes | No | 1 |

| | FBI Hate Crimes | Yes | Yes | 2 |

| | Google Search Terms | Yes | Yes | 2 |

| | Twitter Search | Yes | Yes | 2 |

<sup>a</sup> Scores mean the following: 0=county-level or greater data reported; 1=potential for harm from individual or neighborhood data reporting, but no evidence found of law enforcement data use or sharing and/or denial or disruption of social services; 2=evidence of harm as individual/neighborhood data reported and evidence found of law enforcement data use or sharing and/or denial or disruption of social services. Four systems scored a U, indicating data were not publicly available for assessment.

Table 3 lists those surveillance systems that received a 0-2 score (n=40). Of the 5 surveillance systems that scored a 2, indicating the highest level of risk, n=3 were owned by Google (COVID19 Search Symptoms by Google,66 COVID-19 Community Mobility by Google, and Google Search Terms), n=1 was owned by Twitter (Twitter Search), and n=1 was owned by US law enforcement (FBI Hate Crimes).67 While Google and Twitter received scores of 2 owing to evidence of their data being used or shared with law enforcement,68–71 the FBI Hate Crimes database is created and maintained by the nation's highest law enforcement agency, a criterion for this score.

Of the n=22 (55%) systems scored “1,” n=5 were connected to COVID dashboards developed and used by local jurisdictions to track the pandemic in those communities. The score of 1 indicated the availability of the information at the neighborhood level. Another COVID-related database scoring 1 was the COVID-19 UCLA Law Behind Bars Project, a database tracking the epidemiology of COVID-19 in incarcerated settings including prisons, jails, and detention centers. This database was given a 1 owing to the potential for prison and jail staff to treat and stigmatize incarcerated populations because of COVID-19 disease case rates and to label facilities themselves as “hotspots.”

US Census data reported at the individual/neighborhood level (such as the US Census Household Pulse Survey, American Community Survey, and Decennial Census) also scored a 1, even though the Census Bureau states clearly that it does not share its data with law enforcement.72,73 These systems received a 1 because Census data can be used to describe neighborhoods and can therefore be used to create risk profiles of groups of people by location. Databases collecting data on service utilization, such as the National Hospital Ambulatory Medical Care Survey, also scored a 1 because this type of data has the potential to create a neighborhood “risk” profile that can be used for decision-making algorithms predicting crime or recidivism based on emergency department use and for studies correlating emergency department use with crime.7,74 No evidence could be found of this information being used or shared for this purpose.
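The proportions reported for the pilot depend on the denominator used. As a worked check, the sketch below recomputes the shares from the counts stated in the article (13 systems scored 0, 22 scored 1, 5 scored 2, and 4 scored U), once over all 44 systems and once over the 40 systems that received a 0-2 score. The function and variable names are hypothetical.

```python
# Counts as reported in the article: scores 0, 1, 2, and U (not assessable).
counts = {"0": 13, "1": 22, "2": 5, "U": 4}


def shares(counts, exclude=()):
    """Percentage of systems per score, optionally dropping some score categories."""
    kept = {score: n for score, n in counts.items() if score not in exclude}
    total = sum(kept.values())
    return {score: round(100 * n / total, 1) for score, n in kept.items()}


all_systems = shares(counts)                   # denominator: all 44 systems
scored_only = shares(counts, exclude=("U",))   # denominator: the 40 systems scored 0-2

print(all_systems)   # {'0': 29.5, '1': 50.0, '2': 11.4, 'U': 9.1}
print(scored_only)   # {'0': 32.5, '1': 55.0, '2': 12.5}
```

Under these counts, the 55% reported for systems scoring 1 corresponds to the 40 scored systems, whereas "half" corresponds to all 44 systems.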

Discussion

The ways and extent to which public health surveillance systems are associated with increasing community harm, through sharing with and/or use in law enforcement policing strategies, are understudied within public health. This article presents pilot findings on the development and application of a novel scoring system to address this equity-based need, which we believe community advocates and public health professionals should discuss and consider. Our findings indicate a substantial potential for surveillance systems used in public health to harm BIPOC communities by exposing them to police-associated harms because of the types of data being collected (individual/neighborhood-level data) and through use/sharing with law enforcement agencies. Because the approach relies on publicly available data sources and evidence, community-based organizations with limited access to academic and other sources of information may adopt it.

A strength of this project is the consideration given to distinguishing between potential for and evidence of surveillance-mediated, policing-associated harms. We identified a few surveillance systems used within public health with evidence of the data being used or shared with law enforcement, resulting in increased criminalization of BIPOC communities. These systems are not traditional public health surveillance tools, but their use within public health for disease prevention and mitigation purposes has grown. For example, location data gathered through Google's platform were used to track the movements of protestors during the George Floyd uprisings; Twitter data were shared with Dataminr, an organization that claims “to integrate all publicly available data signals to create the dominant information discovery platform,” to track Black Lives Matter organizers and activists.68,70,75 That these are not traditional public health systems, which require substantial buy-in to collect health and health-related data, may play a role here: these corporations are not beholden to, or expected to maintain, community-level trust.

Yet, several widely used public health surveillance systems have the potential to perpetuate policing-associated harms through their data. The types of data used to conduct health equity work may also be used by law enforcement to monitor and predict the behaviors of BIPOC individuals and communities. In the development of our scoring system, we found extant evidence of behavioral data being useful in the generation of risk assessments to predict a person's criminality or being used to describe the likelihood that an environment itself is associated with the resources needed to protect against criminality. Assessing the degree of this potential is beyond the scope of this pilot study. Future studies could explore whether it can be assessed and contribute to the literature investigating the extent to which these types of harms can be addressed.

This pilot found that surveillance systems with community benefits could potentially criminalize and harm communities because of the type of information collected. The potential exists for these marked neighborhoods either to attract more surveillance through policing or to result in police feeling more threatened by the members of the “hotspot,” thereby increasing the risk of police violence.76 For example, community-level indicators could be used by law enforcement to decide which neighborhoods to patrol. This finding is troubling because, for decades if not centuries, researchers have argued that a socioecologic model of examining health was needed to replace traditional behavioral models of disease causation. Following Public Health Critical Race Praxis,77 we are also concerned with the roots of these inequities and with why we must be vigilant about how our systems for managing the health of populations can be used to further criminalize them.1–4 This is particularly concerning given the growing inequity produced by the pandemic and pandemic-related decisions and policies that have disproportionately affected BIPOC. The targeted attacks on pregnant women and people with the capacity to give birth, through criminalizing their access to reproductive health care, also highlight the fight against weaponization of data (eg, menstrual data) and the sophisticated ways people and their behaviors are being tracked and monitored (eg, through apps that track menstruation and whose data are shared with police in ways that may identify people who have recently had an abortion).78,79

In this vein, the next steps include implementing the pilot on a larger scale to identify and compare the potential for harm through public health surveillance across systems, agencies, and institutions. The information-sharing environment of public health surveillance systems remains unclear and needs to be better understood, as the results from the pilot raise the following questions: (1) what is the relationship between public health agencies (eg, health departments and CDC) and other agencies (eg, law enforcement) within the “Information-Sharing Environment”? and (2) what are the cross-institutional practices and policies with regard to how public health data are investigated, collected, shared, used, and analyzed?

While data-sharing practices are publicly available for public health systems that explicitly collect sensitive data with deductive disclosure risk for participants (such as Add Health) or that use technologies and platforms developed for and used by enforcement agencies (like Palantir), it remains unclear whether there are explicit prohibitions on using data within these systems for research into algorithms or technologies that medicalize political and social ills, such as the research that created PredPol.80–85 PredPol, created through academic and law enforcement partnerships, is a predictive policing technology that uses machine-learning algorithms to predict crime patterns from historic and daily reported crime data. Taking racially biased crime data collected for more than a decade and combining it with current local data, the technology generates neighborhood “hotspots.” What is considered crime cannot be divorced from what and who is determined to be criminal, with criminality deeply tied to who and what in the sociopolitical (ie, racial) order needs to be controlled. Also, what produces crime is deeply connected to social determinants of health. Community rejection and academic pushback against PredPol have highlighted the need to better understand this information-sharing pathway as research expands in these areas. While there are attempts to protect research participant data from being subpoenaed or used by law enforcement (through Certificates of Confidentiality, for example), the use of public health data, strategies, and tools to predict crime and safety, as opposed to addressing the root causes of crime, is also important to review.86

This is particularly salient, as there is an established precedent of medicalizing social phenomena to the detriment of BIPOC communities. Given the community call to action to replace criminalization strategies of population management with care, public health approaches to addressing issues of violence may unwittingly reproduce the same structural racism. Because racialized communities are more likely to be in surveillance systems, the databases disproportionately increase the opportunity to generate “negative” risk profiles for some groups over others. In addition to the increased harm from racial profiling, the use of public health data could also increase distrust of public health surveillance to the detriment of COVID-19 mitigation and containment.

To examine these tensions further, organizations are exploring the role of data, surveillance, racism, health, and equity by spreading awareness about the tensions between surveillance and community well-being, encouraging research about the role of technology in pandemic management, and exploring the role of data in furthering social justice goals.87–89 For example, there is community-based resistance to the use of social media data, whether publicly available or not, to criminalize BIPOC communities through sharing with law enforcement.90 Additionally, organizations such as Stop LAPD Spying Coalition are exploring these issues in partnership with abolitionist organizations, drawing connections between historic state-sanctioned violence, in particular violence against unhoused communities, and shifts in debates about community-based care and the role of public health within it.

The following should be noted when interpreting the findings. The relative statistics (percentages) cannot be used to generalize and compare trends by surveillance system types, as our sample may not be representative and/or exhaustive. For several of the surveillance systems, data can be downloaded by any/all users. Thus, evidence of how the data are used is difficult to establish and cannot be determined at our level of access. State-level data, albeit de-identified and aggregated across communities, can still perpetuate harm and stigma at the regional level (eg, South versus Northeast). Additionally, systems assigned a U score because data were not easily accessible or publicly available for our team to report on confidently should be followed up in future assessments.

This pilot therefore has limitations that require careful consideration. First, the surveillance systems included in the analyses were selected on the basis of public access to both the system and information about each system's information-sharing practices. Thus, the study does not represent a comprehensive sample of all systems, and those that are not available publicly may engage in greater levels of data collection and sharing. Second, while the scoring schema is guided by extant literature and guidance from an expert panel, surveillance strategies are always evolving, and emerging strategies that are not yet recognized may be overlooked or the scoring system may not readily apply to them. This is particularly important given how all systems, including surveillance, evolve to reinforce white supremacy.8

Finally, while we focused on sharing with law enforcement agencies, other agencies perform law enforcement–like actions, such as those that execute government actions for child and family services and housing. These other agencies also use or share data in ways that increase BIPOC communities' proximity to risks such as policing and associated harms.58,91

As the communities most criminalized by race, place, and poverty are simultaneously heavily surveilled by police and other law enforcement–like agencies during and beyond pandemics, the interconnections between public health surveillance, data, and policing need to be urgently evaluated. Without an explicit social justice approach (both equity driven and antiracist), traditional public health surveillance strategies may place the communities most vulnerable during pandemics at an increased risk for surveillance-related harms during and beyond disasters.

Conclusions

As an essential state function, public health is charged with protecting and promoting population-level health both within and beyond disasters such as the COVID-19 pandemic. Public health surveillance can also play a key role in advancing goals of equity and social justice by understanding the effect of disease, such as COVID-19, and disease-related health determinants, such as evictions, on BIPOC communities. A tension exists though between this goal and the harm caused through surveillance, as it increases exposure to policing and the subsequent risk of harm from incarceration, deportation, detention, and denial/disruption of services. The same communities most affected by this surveillance-mediated harm are also those most affected during public health emergencies. As the COVID-19 pandemic is not the last disaster of its type, the concerns raised here will continue to be relevant in future public health emergencies and beyond.

Acknowledgments

The formative work was unfunded. Development of the manuscript was supported by the National Institute on Minority Health and Health Disparities (NIMHD) of the National Institutes of Health (NIH) under award number 5S21MD000103.

Development and piloting of the scoring system were supported in part by funding from the CDC (Centers for Disease Control and Prevention) Foundation. The source of this information is the COVID-19 Stigma Project, a joint project of the CDC Foundation and the Howard University/UCLA Center for the Study of Racism, Social Justice & Health. This project is also supported in part by the CDC of the US Department of Health and Human Services (HHS) as part of a financial assistance award totaling $6,827,718, with 98% funded by CDC/HHS and $135,000 (2%) funded by a COVID-19 Research Award from the UCLA David Geffen School of Medicine.

We thank the staff at the Center for the Study of Racism, Social Justice & Health and UCLA FSPH Department of Community Health Sciences for administrative and technical support; and members of the COVID-19 Task Force on Racism & Equity for feedback on earlier versions of this work.

Footnotes

Disclaimer: The contents are those of the authors and do not necessarily represent the official views of, or an endorsement by, the CDC/HHS, NIH/NIMHD, or the US government.

Conflict of Interest: No conflicts of interest reported by authors.

Author Contributions: Research concept and design: Amani; Acquisition of data: Amani, Garcia, Khan, McAndrew; Data analysis and interpretation: Amani, McAndrew, Sharif, Garcia, Nwankwo; Manuscript draft: Amani, McAndrew, Sharif, Garcia, Nwankwo, Cabral, Abotsi-Kowu, Khan, Le, Ponder, Ford; Acquisition of funding: Ford, Ponder; Administrative: Amani, McAndrew, Cabral; Supervision: Amani.

References

- 1.Muhammad KG. The Condemnation of Blackness: Race, Crime, and the Making of Modern Urban America. Harvard University Press; 2010.

- 2.Vitale A. The End of Policing. Verso. 2017.

- 3.Hernandez KL. City of Inmates: Conquest, Rebellion, and the Rise of Human Caging in Los Angeles, 1771-1965. UNC Press Books; 2017.

- 4.Schrader S. Badges without Borders: How Global Counterinsurgency Transformed American Policing. University of California Press; 2019.

- 5.American Public Health Association. Addressing Law Enforcement Violence as a Public Health Issue. 2018. Last accessed July 18, 2022 from https://www.apha.org/policies-and-advocacy/public-health-policy-statements/policy-database/2019/01/29/law-enforcement-violence.

- 6.COVID Racism Study. Policing: the pandemic that endures. Medium. 2021. Last accessed July 18, 2022 from https://covidracism.medium.com/policing-the-pandemic-that-endures-c59d0ca5d81c.

- 7.Theall KP, Francois S, Bell CN, Anderson A, Chae D, Laveist TA. Neighborhood police encounters, health, and violence in a southern city. Health Aff. 2022;41(2):228–236. doi: 10.1377/HLTHAFF.2021.01428.

- 8.Browne S. Dark Matters: On the Surveillance of Blackness. Duke University Press; 2015.

- 9.Camp J, Heatherton C. Total policing and the global surveillance empire today: an interview with Arun Kundnani. In: Camp JT, Heatherton C, editors. Policing the Planet: Why the Policing Crisis Led to Black Lives Matter. Verso Books; 2016. p. 320.

- 10.Davis M, Wiener J. Set the Night on Fire: L.A. in the Sixties. Verso Books; 2020.

- 11.Rasmussen SA, Goodman RA; Centers for Disease Control and Prevention. The CDC Field Epidemiology Manual. Oxford University Press; 2019.

- 12.Berkelman RL, Sullivan PS, Buehler JW. Public health surveillance. In: Detels R, Beaglehole R, Lansang MA, Gulliford M, editors. Oxford Textbook of Public Health. 5th ed. Oxford University Press; 2009. p. 2044. https://doi.org/10.1093/MED/9780199218707.003.0042.

- 13.Lyerla R, Stroup DF. Toward a public health surveillance system for behavioral health. Public Health Rep. 2018;133(4):360. doi: 10.1177/0033354918772547.

- 14.Ibrahim NK. Epidemiologic surveillance for controlling Covid-19 pandemic: types, challenges and implications. J Infect Public Health. 2020;13(11):1630–1638. doi: 10.1016/J.JIPH.2020.07.019.

- 15.Dunbar-Ortiz R. Not “A Nation of Immigrants”: Settler Colonialism, White Supremacy, and a History of Erasure and Exclusion. Beacon Press; 2021.

- 16.Conrad P. The Medicalization of Society: On the Transformation of Human Conditions into Treatable Disorders. Johns Hopkins University Press; 2007.

- 17.Klein N. The Shock Doctrine: The Rise of Disaster Capitalism. Henry Holt and Company; 2007.

- 18.French MA. Woven of War-Time Fabrics: the globalization of public health surveillance. Surveill Soc. 2009;6(2):101–115. doi: 10.24908/ss.v6i2.3251.

- 19.Swaine J, Ackerman S, Siddiqui S. Ferguson forced to return Humvees as US military gear still flows to local police. The Guardian. Aug 11, 2015. Last accessed September 2, 2022 from https://www.theguardian.com/us-news/2015/aug/11/ferguson-protests-police-militarization-humvees.

- 20.Price M. National Security and Local Police. 2013. Last accessed September 2, 2022 from https://www.brennancenter.org/our-work/research-reports/national-security-and-local-police.

- 21.Kelley RDG. Thug nation: on state violence and disposability. In: Camp JT, Heatherton C, editors. Policing the Planet: Why the Policing Crisis Led to Black Lives Matter. Verso Books; 2016. p. 320.

- 22.McElroy E, Vergerio M. Automating gentrification: landlord technologies and housing justice organizing in New York City homes. Environ Plan D Soc Space. 2022;40(4):026377582210888. doi: 10.1177/02637758221088868.

- 23.Anti-Eviction Mapping Project. UCLA Luskin Institute on Inequality and Democracy. Last accessed July 19, 2022 from https://antievictionmappingproject.github.io/cnap-story-map/

- 24.MacMillan D, Dwoskin E. The war inside Palantir: Data-mining firm's ties to ICE under attack by employees. The Washington Post. Aug 22, 2019. Last accessed July 9, 2022 from https://www.washingtonpost.com/business/2019/08/22/war-inside-palantir-data-mining-firms-ties-ice-under-attack-by-employees/

- 25.Jacobsen A. First Platoon: A Story of Modern War in the Age of Identity Dominance. Dutton; 2022.

- 26.Georgetown Law Center on Privacy & Technology. American Dragnet: Data-Driven Deportation in the 21st Century. Last accessed July 19, 2022 from https://americandragnet.org/

- 27.Khan AS, Fleischauer A, Casani J, Groseclose SL. The next public health revolution: public health information fusion and social networks. Am J Public Health. 2010;100(7):1237. doi: 10.2105/AJPH.2009.180489.

- 28.Palantir Technologies Inc. Coronavirus Contracts. Last accessed July 19, 2022 from https://projects.propublica.org/coronavirus-contracts/vendors/palantir-technologies-inc.

- 29.NHS England. NHS COVID-19 Data Store. Last accessed July 19, 2022 from https://www.england.nhs.uk/contact-us/privacy-notice/how-we-use-your-information/covid-19-response/nhs-covid-19-data-store/

- 30.Krieger N. ENOUGH. COVID-19, structural racism, police brutality, plutocracy, climate change-and time for health justice, democratic governance, and an equitable, sustainable future. Am J Public Health. 2020;110(11):1620–1623. doi: 10.2105/AJPH.2020.305886.

- 31.Tan SB, deSouza P, Raifman M. Structural racism and COVID-19 in the USA: a county-level empirical analysis. J Racial Ethn Health Disparities. 2022;9(1):236–246. doi: 10.1007/s40615-020-00948-8.

- 32.Bailey ZD, Moon JR. Racism and the political economy of COVID-19: will we continue to resurrect the past? J Health Polit Policy Law. 2020;45(6):937–950. doi: 10.1215/03616878-8641481.

- 33.McClure ES, Vasudevan P, Bailey Z, Patel S, Robinson WR. Racial capitalism within public health—how occupational settings drive COVID-19 disparities. Am J Epidemiol. 2020;189(11):1244–1253. doi: 10.1093/AJE/KWAA126.

- 34.Peretz PJ, Islam N, Matiz LA. Community health workers and Covid-19—addressing social determinants of health in times of crisis and beyond. N Engl J Med. 2020;383(19):e108. doi: 10.1056/nejmp2022641.

- 35.Fortuna LR, Tolou-Shams M, Robles-Ramamurthy B, Porche MV. Inequity and the disproportionate impact of COVID-19 on communities of color in the United States: the need for a trauma-informed social justice response. Psychol Trauma. 2020;12(5):443–445. doi: 10.1037/TRA0000889.

- 36.Saloner B, Parish K, Ward JA, Dilaura G, Dolovich S. COVID-19 cases and deaths in federal and state prisons. JAMA. 2020;324(6):602–603. doi: 10.1001/JAMA.2020.12528.

- 37.Emmer P, Woods E, Purnell D, Ritchie A, Wang T. Unmasked: Impacts of Pandemic Policing. 2020. Last accessed July 19, 2022 from https://communityresourcehub.org/resources/unmasked-impacts-of-pandemic-policing/

- 38.Southall A. Scrutiny of social-distance policing as 35 of 40 arrested are Black. The New York Times. May 7, 2020. Last accessed July 19, 2022 from https://www.nytimes.com/2020/05/07/nyregion/nypd-social-distancing-race-coronavirus.html.

- 39.Lerner K. Government Enforcement of Quarantine Raises Concerns About Increased Surveillance. 2020. Last accessed July 19, 2022 from https://theappeal.org/coronavirus-quarantine-surveillance-electronic-monitoring/

- 40.Noble SU. Algorithms of Oppression: How Search Engines Reinforce Racism. New York University Press; 2018.

- 41.Brayne S. Predict and Surveil: Data, Discretion, and the Future of Policing. Oxford University Press; 2020.

- 42.Benjamin R. Assessing risk, automating racism. Science. 2019;366(6464):421–422. doi: 10.1126/SCIENCE.AAZ3873.

- 43.Ferguson AG. The Rise of Big Data Policing: Surveillance, Race, and the Future of Law Enforcement. NYU Press; 2017.

- 44.Rocher L, Hendrickx JM, de Montjoye YA. Estimating the success of re-identifications in incomplete datasets using generative models. Nat Commun. 2019;10(1):1–9. doi: 10.1038/s41467-019-10933-3.

- 45.Ohm P. Broken promises of privacy: responding to the surprising failure of anonymization. UCLA Law Rev. 2010;57:1701. Last accessed July 20, 2022 from https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1450006.

- 46.Culnane C, Rubinstein BIP, Teague V. Health Data in an Open World. 2017.

- 47.Loukides G, Denny JC, Malin B. The disclosure of diagnosis codes can breach research participants' privacy. J Am Med Informatics Assoc. 2010;17(3):322–327. doi: 10.1136/JAMIA.2009.002725.

- 48.Douriez M, Doraiswamy H, Freire J, Silva CT. Anonymizing NYC taxi data: does it matter? In: 2016 IEEE International Conference on Data Science and Advanced Analytics (DSAA); Montreal, Canada; 2016.

- 49.Lavrenovs A, Podins K. Privacy violations in Riga open data public transport system. In: 2016 IEEE 4th Workshop on Advances in Information, Electronic and Electrical Engineering (AIEEE); Vilnius, Lithuania; 2016.

- 50.El Emam K, Arbuckle L. De-Identification: A Critical Debate - Future of Privacy Forum. 2014. Last accessed July 19, 2022 from https://fpf.org/blog/de-identification-a-critical-debate/

- 51.Barth-Jones DC. The “re-Identification” of Governor William Weld's medical information: a critical re-examination of health data identification risks and privacy protections, then and now. SSRN Electron J. July 2012.

- 52.Keeling K. Looking for M—Queer temporality, Black political possibility, and poetry from the future. GLQ A J Lesbian Gay Stud. 2009;15(4):565–582. doi: 10.1215/10642684-2009-002.

- 53.Schenwar M, Law V, Alexander M. Prison by Any Other Name: The Harmful Consequences of Popular Reforms. The New Press; 2021.

- 54.Pt 36 - Police state & data driven prosecution [Video] YouTube. November 25, 2020. Last accessed July 20, 2022 from https://www.youtube.com/watch?v=QNW0-tA_qAg.

- 55.Moore MR, Arcos RN, Rimkunas MP, et al. Data-Informed Community-Focused Policing. 2020. Last accessed July 20, 2022 from https://lapdonlinestrgeacc.blob.core.usgovcloudapi.net/lapdonlinemedia/2021/12/data-informed-guidebook-042020.pdf.

- 56.Gascón G. Transformative Justice Prosecution Strategies to Reform the Justice System and Enhance Community Safety. 2019. Last accessed July 20, 2022 from https://www.georgegascon.org/wp-content/uploads/2020/09/SFDA_Transformative-Justice_George-Gascon_2019.pdf.

- 57.Muñiz A. Police, Power, and the Production of Racial Boundaries. Rutgers University Press; 2015.

- 58.Eubanks V. Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor. St. Martin's Press; 2018.

- 59.Zuboff S. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. PublicAffairs. 2019.

- 60.Chohlas-Wood A. Understanding Risk Assessment Instruments in Criminal Justice. 2020. Last accessed July 19, 2022 from https://www.brookings.edu/research/understanding-risk-assessment-instruments-in-criminal-justice/#footnote-14.

- 61.PredPol. Using Custom PredPol Boxes for COVID-19 Patrols. Apr 8 20, 2020. Jul 8 20, 2022. Last accessed. from https://blog.predpol.com/using-custom-predpol-boxes-for-covid-19-patrols.

- 62.Rubinstein D, Slotnik D, Shapiro E, Stack L. Fearing 2nd wave, city will adopt restrictions in hard-hit areas. The New York Times. October 8, 2020. Last accessed July 20, 2022 from https://www.nytimes.com/2020/10/04/nyregion/nyc-covid-shutdown-zip-codes.html.

- 63.Bradford NJ, Amani B, Walker V, Sharif MZ, Ford CL. Barely Tweeting and rarely about racism: assessing US State Health Department Twitter use during the COVID-19 vaccine rollout. Ethn Dis. 2022;32(2):151–164. doi: 10.18865/ed.32.3.257.

- 64.Criss S, Nguyen TT, Norton S, et al. Advocacy, hesitancy, and equity: exploring U.S. race-related discussions of the COVID-19 vaccine on Twitter. Int J Environ Res Public Health. 2021;18(11):5693. doi: 10.3390/IJERPH18115693.

- 65.Ford CL, Amani B, Harawa NT, et al. Adequacy of existing surveillance systems to monitor racism, social stigma and COVID inequities: a detailed assessment and recommendations. Int J Environ Res Public Health. 2021;18(24):13099. doi: 10.3390/IJERPH182413099.

- 66.COVID-19 Community Mobility Reports. Last accessed July 19, 2022 from https://www.google.com/covid19/mobility/

- 67.Federal Bureau of Investigation. Hate Crimes. Last accessed July 19, 2022 from https://www.fbi.gov/investigate/civil-rights/hate-crimes.

- 68.Biddle S. Police surveilled George Floyd protests with help from Twitter-affiliated startup Dataminr. The Intercept. Jul 9, 2020. Last accessed July 20, 2022 from https://theintercept.com/2020/07/09/twitter-dataminr-police-spy-surveillance-black-lives-matter-protests/

- 69.Whittaker Z. Minneapolis police tapped Google to identify George Floyd protesters. TechCrunch. Feb 6, 2021. Last accessed July 20, 2022 from https://techcrunch.com/2021/02/06/minneapolis-protests-geofence-warrant/

- 70.Ng A. Google is giving data to police based on search keywords, court docs show. CNET. Oct 8, 2020. Last accessed July 20, 2022 from https://www.cnet.com/news/privacy/google-is-giving-data-to-police-based-on-search-keywords-court-docs-show/

- 71.Bhuiyan J. What happens when ICE asks Google for your user information. Los Angeles Times. Mar 24, 2021. Last accessed July 20, 2022 from https://www.latimes.com/business/technology/story/2021-03-24/federal-agencies-subpoena-google-personal-information.

- 72.United States Census Bureau. How We Protect Your Information. Last accessed July 19, 2022 from https://www.census.gov/programs-surveys/surveyhelp/protect-information.html.

- 73.United States Census Bureau. Title 13, US Code - History. Last accessed July 19, 2022 from https://www.census.gov/history/www/reference/privacy_confidentiality/title_13_us_code.html.

- 74.Bellis MA, Leckenby N, Hughes K, Luke C, Wyke S, Quigg Z. Nighttime assaults: using a national emergency department monitoring system to predict occurrence, target prevention and plan services. BMC Public Health. 2012;12(1):1–13. doi: 10.1186/1471-2458-12-746.

- 75.Biddle S. Dataminr targets communities of color for police. The Intercept. Oct 21, 2020. Last accessed July 20, 2022 from https://theintercept.com/2020/10/21/dataminr-twitter-surveillance-racial-profiling/

- 76.United States Court of Appeals for the Fourth Circuit. No. 20-1495. Leaders of a Beautiful Struggle; Erricka Bridgeford; Kevin James, Plaintiffs – Appellants, v Baltimore Police Department; Michael S. Harrison, in his official capacity as Baltimore Police Commissioner, Defendants – Appellees. 2021. Last accessed September 2, 2022 from https://www.eff.org/files/2021/06/24/201495a.p.pdf.

- 77.Ford CL, Airhihenbuwa CO. The public health critical race methodology: praxis for antiracism research. Soc Sci Med. 2010;71(8):1390–1398. doi: 10.1016/J.SOCSCIMED.2010.07.030.

- 78.Kaltheuner F. How data targets people seeking abortion. Human Rights Watch. May 5, 2022. Last accessed July 19, 2022 from https://www.hrw.org/news/2022/05/05/how-data-targets-people-seeking-abortion.

- 79.Hatmaker T. Congress probes period tracking apps and data brokers over abortion privacy concerns. TechCrunch. Jul 8, 2022. Last accessed September 2, 2022 from https://techcrunch.com/2022/07/08/house-oversight-letter-abortion-period-apps-data-brokers/

- 80.National Center for Advancing Translational Sciences. N3C Frequently Asked Questions. Last accessed July 9, 2022 from https://ncats.nih.gov/n3c/about/program-faq#privacy-and-security.

- 81.Add Health. Frequently Asked Questions. Last accessed July 19, 2022 from https://addhealth.cpc.unc.edu/documentation/frequently-asked-questions/

- 82.Mohler GO, Short MB, Malinowski S, et al. Randomized controlled field trials of predictive policing. J Am Stat Assoc. 2016;110(512):1399–1411. doi: 10.1080/01621459.2015.1077710.

- 83.Guariglia M. Technology can't predict crime, it can only weaponize proximity to policing. Electronic Frontier Foundation. 2020. Last accessed July 19, 2022 from https://www.eff.org/deeplinks/2020/09/technology-cant-predict-crime-it-can-only-weaponize-proximity-policing.

- 84.Stop LAPD Spying. Over 450 academics reject Predpol. Medium. 2019. Last accessed July 19, 2022 from https://stoplapdspying.medium.com/over-450-academics-reject-predpol-790e1d1b0d50.

- 85.Benjamin R. Race After Technology: Abolitionist Tools for the New Jim Code. Polity Press; 2021.

- 86.Wolf LE, Patel MJ, Williams Tarver BA, Austin JL, Dame LA, Beskow LM. Certificates of confidentiality: protecting human subject research data in law and practice. J Law Med Ethics. 2015;43(3):594–609. doi: 10.1111/jlme.12302.

- 87.Data For Black Lives. About D4BL. Last accessed July 19, 2022 from https://d4bl.org/about.

- 88.Community Justice Exchange. From Data Criminalization to Prison Abolition. Last accessed July 19, 2022 from https://abolishdatacrim.org/en.

- 89.Social Science Research Council (SSRC) Public Health, Surveillance, and Human Rights Network. Covid-19 and the Social Sciences. Last accessed July 19, 2022 from https://covid19research.ssrc.org/public-health-surveillance-and-human-rights-network/

- 90.American Civil Liberties Union. Letter to Twitter regarding Dataminr and Surveillance. July 9, 2020. Last accessed July 19, 2022 from https://mediajustice.org/wp-content/uploads/2020/07/Letter-to-Twitter-re-Dataminr-and-surveillance.pdf.

- 91.Roberts DE. Torn Apart: How the Child Welfare System Destroys Black Families—and How Abolition Can Build a Safer World. Basic Books; 2022.