Abstract

Background

Growing evidence suggests that increasing opportunities for social engagement has the potential to support successful aging. However, many older adults may have limited access to in-person social engagement opportunities due to barriers such as transportation. We outline the development, design, methodology, and baseline characteristics of a randomized controlled trial that assessed the benefits of a social engagement intervention delivered through the OneClick video conferencing platform to older adults with varying levels of cognitive functioning.

Methods

Community-dwelling older adults with and without cognitive challenges were randomly assigned to a social engagement intervention group or a waitlist control group. Participants were asked to attend twice-weekly social engagement events for 8 weeks via OneClick. Outcomes included social engagement and technology acceptance for both groups at baseline, week-4, and week-8 assessments. As an extension, the waitlist control group had an opportunity to participate in the intervention, with outcomes assessed at weeks 12 and 16.

Results

We randomly assigned 99 participants (mean age = 74.1 ± 6.7, range: 65–99), with 50 in the immediate intervention group and 49 in the waitlist control group. About half of the participants reported living alone (53.5%), with a third (31%) falling into the cognitively impaired range on global cognitive screening. The groups did not differ at baseline on any of the outcome measures.

Conclusions

Outcomes from this study will provide important information regarding the feasibility and efficacy of providing technology-based social engagement interventions to older adults with a range of cognitive abilities.

Keywords: Randomized controlled trial, Social engagement, Technology, Older adults, Cognitive impairment, Videoconferencing

1. Introduction

As the population of older adults continues to grow, addressing modifiable risk factors such as social isolation and loneliness, which are known to negatively impact physical, emotional, and cognitive health, is critical [[1], [2], [3], [4], [5]]. Older adults are at an increased risk of experiencing social isolation and loneliness due to biological and psychosocial factors, including age-related changes in mobility [[6], [7], [8]], sensory functions such as vision and hearing loss [[9], [10], [11], [12]], cognitive challenges such as mild memory impairment [[13], [14], [15], [16], [17]], and shrinking social networks due to loss of family and friends [18,19]. Social engagement interventions provide a potential approach to mitigate the negative impacts of social isolation and loneliness in older adults.

Intervention studies in aging offer evidence supporting the benefits of in-person social engagement on health outcomes [[20], [21], [22]]. However, many older adults have limited opportunities for in-person socializing due to factors such as illness, reduced mobility, low social capital, transportation barriers, and caregiving responsibilities. Communication technologies thus serve as a valuable tool to facilitate social interactions without needing to leave the home environment. In particular, video technologies offer the benefits of real-time, face-to-face interactions while reducing the barriers related to in-person activities. Video technology-based interventions such as I-CONECT [[23], [24], [25]] have shown the feasibility and benefits of such interventions in older adults. These interventions have primarily focused on enhancing cognitive health by offering one-on-one web-based conversations with trained research staff.

Informal, peer-driven conversations play an important role in fostering social connectedness and well-being [[26], [27], [28]]. To explore the potential benefits of connecting older adult peers over shared interests, we developed an online social engagement intervention aimed at improving social health and quality of life by providing opportunities for casual, small-group discussions around various topics (e.g., gardening, space exploration, healthy foods). Our intervention was specifically developed to be accessible to a wide range of older adults, including individuals with varying levels of cognitive abilities and computer proficiency. To deliver the intervention, we utilized a video technology platform called OneClick, which is a browser-based platform specifically designed for and tested by a range of older adult users, including those with mild cognitive impairment [29]. OneClick was developed as an alternative to existing videoconferencing platforms and online social media/meet-up sites. It connects people virtually without the need to exchange private information, download an application, or create a username and password. Herein, we report the study design, sample baseline demographics, and characteristics of our randomized controlled trial (RCT). This trial was registered on ClinicalTrials.gov (NCT05380180).

The goal of this RCT was to rigorously assess the efficacy of our 8-week social engagement intervention. Participants randomized to the intervention group received the social engagement OneClick intervention for 8 weeks, whereas participants randomized to the waitlist group received no intervention for the first 8 weeks. We hypothesized that participants in the intervention group would demonstrate improvements in primary outcome measures, including social isolation, loneliness, and quality of life. Additionally, we expected to observe improvements in secondary outcome measures, including social networks and social activity frequency.

2. Study design

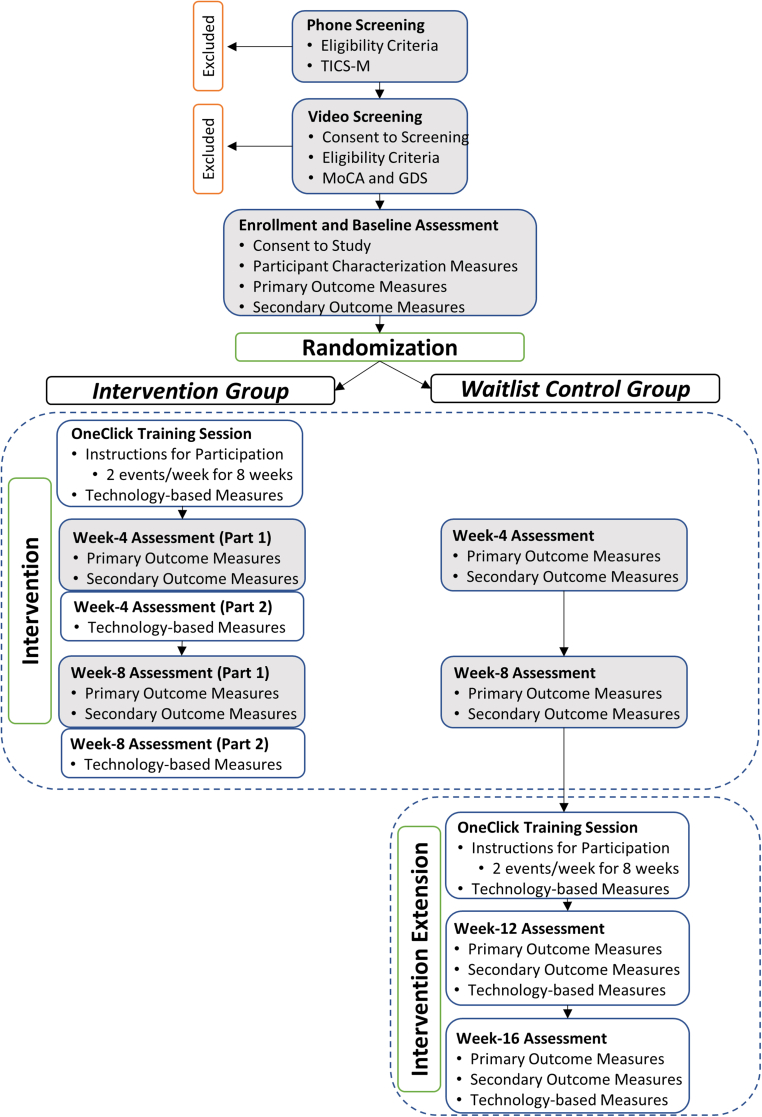

The RCT was designed with a waitlist control condition to evaluate the benefits of the social engagement intervention. The protocol was approved by the Institutional Review Board at the University of Illinois Urbana-Champaign (IRB #22212). An overview of the study design is depicted in Fig. 1. Details are provided in the following sections.

Fig. 1. Overview of Study Design. TICS-M: Telephone Interview for Cognitive Status – Modified; MoCA: Montreal Cognitive Assessment; GDS: Geriatric Depression Scale. Filled gray boxes represent masked sessions.

2.1. Study population

We recruited community-dwelling older adults with a range of cognitive abilities. Participants were included if they were 65 years or older, were fluent in English, had adequate vision and hearing for video conferencing, had access to a device with a webcam (computer, laptop, or tablet), had internet access, and were able and available to actively participate in the study for up to 16 weeks. Participants were excluded if they (1) reported a diagnosis of dementia, scored less than 22 on the Telephone Interview for Cognitive Status – Modified (TICS-M [30]), or had an education-adjusted score less than 20 on the Montreal Cognitive Assessment (MoCA [31]); (2) reported a diagnosis of major depressive disorder or other major psychiatric illness, or exhibited elevated depressive symptoms, defined as a score higher than nine on the Geriatric Depression Scale (GDS) – Short Form [32]; or (3) lived in an assisted living or skilled nursing facility. Participants were recruited across the United States by word of mouth, participant registries, and outreach through local and national organizations that serve older adults.

2.2. Eligibility screening

A two-stage screening process was employed. First, a pre-screening was completed over the phone, wherein initial eligibility criteria were assessed via self-report. In addition, the TICS-M [30] was administered to screen for severe cognitive impairment. Pre-screening failures were defined as participants who indicated an interest in the study and completed the phone pre-screening but were ineligible due to an inclusion/exclusion factor, as outlined in Section 2.1. Individuals who did not meet the criteria for participation due to dynamic factors such as travel plans or access to technology (e.g., computer not in working condition) were placed on a participant reserve list. If their availability and/or access to technology changed, they were invited to continue in the next screening step (i.e., video screening) if the pre-screening was completed less than three months prior. If more than three months had passed, pre-screening was performed again to capture any relevant interval history.

If determined eligible at pre-screening, potential participants were scheduled for the video screening session. Participants provided written informed consent via electronic signature. During video screening, an additional self-report measure was administered to cross-check previously reported eligibility criteria and capture background demographics (TechSAge Background Questionnaire, modified from Ref. [33]). The MoCA [31] was administered to screen for advanced cognitive impairment (excluded if score <20), and the GDS [32] was administered to screen for elevated depressive symptoms (excluded if score >9).

Technology compatibility was tested during video screening, which took place over the OneClick platform. If the participant was unable to connect over OneClick, screening was completed over Zoom, enrollment was postponed, and a troubleshooting session was scheduled with a technical support research team member. If the participant was still unable to connect to the platform due to poor internet or an outdated device (more than ten years old), they were excluded from the study. Eligibility screening failures were defined as participants who provided initial consent but subsequently failed to meet the additional eligibility criteria (e.g., MoCA score, GDS score) and thus were not enrolled in the study. Participants were paid for their time during the video screening, regardless of eligibility status.
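The score-based exclusion rules used across the two screening stages can be summarized in a short sketch. The `Screening` record and the `exclusion_reasons` function are hypothetical names introduced here for illustration only. The thresholds are those stated in the text (age under 65, TICS-M < 22, education-adjusted MoCA < 20, and GDS > 9 as given in this section). Self-reported criteria such as diagnoses, living situation, and technology access are deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class Screening:
    """Hypothetical record of scores collected across both screening stages."""
    age: int
    tics_m: int  # TICS-M, administered during the phone pre-screening
    moca: int    # education-adjusted MoCA, administered during video screening
    gds: int     # GDS Short Form (0-15), administered during video screening


def exclusion_reasons(s: Screening) -> list[str]:
    """Return every score-based exclusion reason that applies (empty list = none)."""
    reasons = []
    if s.age < 65:
        reasons.append("age < 65")
    if s.tics_m < 22:
        reasons.append("TICS-M < 22")
    if s.moca < 20:
        reasons.append("MoCA < 20")
    if s.gds > 9:
        reasons.append("GDS > 9")
    return reasons
```

Returning a list of reasons, rather than a single pass/fail flag, mirrors the way the study distinguishes pre-screening failures from eligibility screening failures.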

2.3. Enrollment and baseline assessment

Following the video screening, participants deemed eligible were provided with two options: 1) stay on the video call and complete the baseline assessment, or 2) schedule the baseline assessment for another day. At the start of the baseline assessment, participants provided written informed consent to complete the study procedures. A battery of measures was administered, including participant characterization measures, primary outcome measures, and secondary outcome measures (see Section 2.7 for details regarding assessment procedures). Upon completion of these measures, participants were randomized into the intervention or waitlist control group. Participants assigned to the intervention group participated in the OneClick social engagement intervention for 8 weeks, with outcomes assessed at week-4 and week-8. Participants assigned to the waitlist control group received no intervention for the first 8 weeks, with outcomes assessed at week-4 and week-8. Subsequently, as an extension to the trial, participants assigned to the waitlist control group had an opportunity to participate in the OneClick intervention, with intervention effects assessed at week-12 and week-16 (see Fig. 1).

The University of Illinois Urbana-Champaign was the coordinating site, with recruitment, screening, and assessments also administered by a community-based partner, CJE SeniorLife, based in Chicago, IL. To maintain trial fidelity, procedures were formalized in a Manual of Operating Procedures and followed by both sites. A first wave of participants was enrolled for the intervention kickoff in May 2022 to ensure there was a sufficient pool of participants to attend the events. After that, recruitment and enrollment occurred on a rolling basis until January 2023.

2.4. Randomization

We used a blocked randomization design with global cognition as a stratification variable to ensure an equal distribution of participants with varying cognitive status in the intervention and control groups. Global cognitive status was defined by MoCA score (no cognitive impairment: score >25; mild cognitive challenges: score 20–25). The allocation table consisted of permuted blocks, varying between four and six assignments per block. The table was created by a statistician and uploaded to the randomization module in REDCap [34]. The randomization module in REDCap was then used to allocate participants as they were enrolled, only after all the baseline assessment measures were completed (see Fig. 1). By using the randomization module, the allocation table remained hidden from all study personnel for the study duration, and the next assignment was concealed from the assessor during allocation.
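To make the allocation scheme concrete, the following is a minimal sketch of stratified permuted-block randomization. It is not the REDCap randomization module and not the statistician's actual table. It assumes that block sizes are either four or six so that every block is balanced 1:1, that each stratum receives its own table, and that the seed is arbitrary. The function names are hypothetical.

```python
import random


def moca_stratum(moca: int) -> str:
    """Map a MoCA score to a stratum using the cut points given in the text."""
    if moca > 25:
        return "no_impairment"
    if 20 <= moca <= 25:
        return "mild_challenges"
    raise ValueError("MoCA below 20 is an exclusion criterion")


def permuted_block_table(n_blocks: int, rng: random.Random) -> list[str]:
    """Build one stratum's allocation list from balanced blocks of size 4 or 6."""
    table = []
    for _ in range(n_blocks):
        size = rng.choice([4, 6])  # assumed block sizes
        block = ["intervention"] * (size // 2) + ["waitlist"] * (size // 2)
        rng.shuffle(block)  # permute assignments within the block
        table.extend(block)
    return table


rng = random.Random(2022)  # arbitrary seed, for reproducibility of the sketch only
tables = {
    stratum: permuted_block_table(10, rng)
    for stratum in ("no_impairment", "mild_challenges")
}
```

Because every block contains equal numbers of both arms, group sizes within a stratum can differ by at most half a block at any point during rolling enrollment.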

2.5. Social engagement technology intervention

2.5.1. OneClick platform

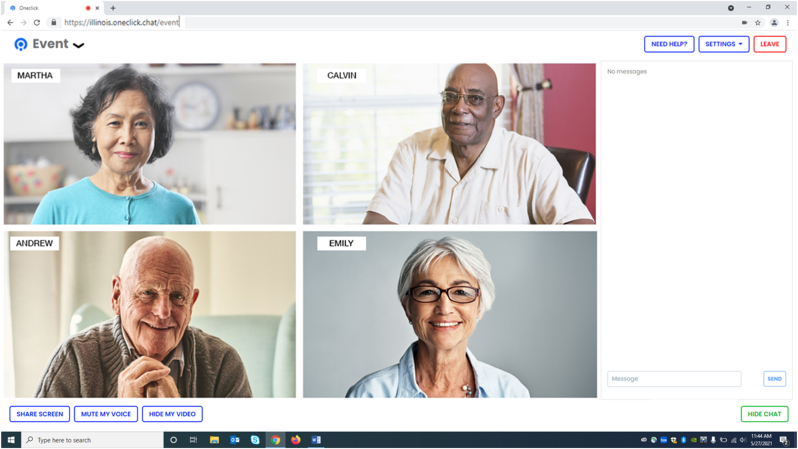

OneClick is a web-based videoconferencing platform that connects people over shared interests in live, small-group conversations (see Fig. 2). In distinct contrast to existing videoconferencing platforms and online social media/meet-up sites, OneClick connects people virtually without the need to exchange private information, download an application, or create a username and password. To optimize this platform for use by older adults with a range of cognitive abilities, we used an iterative design process that included needs assessment, preference understanding, heuristic evaluation, and user testing [29]. Changes to the platform were made to accommodate the needs of users with cognitive impairment, thus improving the usability, ease of use, and enjoyment of the system.

Fig. 2. Screenshot of the OneClick platform with pseudo participants.

We then completed an experiential field trial [35] to assess OneClick's usability, specifically in the context of conducting social engagement events for older adults with (n = 5) and without (n = 8) cognitive challenges. Participants reported an overall positive experience using the OneClick platform for social engagement events and provided valuable feedback to improve the event structure (e.g., breakout room size, length of discussion) and content (e.g., preferences for topics of conversation). We used this feedback to update the event structure to include a maximum breakout room size of five participants and a discussion length of 30 min. We developed 60 unique content topics for discussion (detailed in Section 2.5.3). After these changes were made, we conducted two additional pilot trials with older adults (n = 10), after which minor changes were made to the intervention delivery procedures (e.g., order of assessments, data management, event reminders) and platform (e.g., improved visibility of icons). These changes improved clarity and minimized administrative and participant burden.

2.5.2. Pre-intervention training session

Participants were not required to have any prior experience with video technology to participate in this study. All participants first received a one-on-one training session completed remotely using the OneClick platform. This session allowed for individualized instruction regarding features of the platform and event logistics. Some information about the participant's specific device was gathered to better assist them if they were to encounter technical difficulties during the intervention (e.g., using a PC desktop versus an iPad). Prior to this session, a packet of instructional materials was mailed to the participant so they could follow along during the training session and reference it as needed throughout the intervention. A single-page quick-reference guide was provided for participants to use during the events as a visual reminder of useful actions (e.g., muting, hiding video, refreshing the page). For more detailed information about the implementation of participant training and technology support, see Ref. [36].

2.5.3. Social engagement events

During the 8-week study intervention period, participants were asked to attend remote social engagement events hosted via the OneClick platform. We developed these events to encourage social engagement among participants through live, small-group discussions focused on topics of shared interest. Each event covered one of 60 topics across five topic categories: Arts and Culture; Nature, Health, and Wellness; Life Experiences; Science and Technology; and Recreation and Sports. Events were offered five times per week, and participants were asked to attend a minimum of two per week for a total of 16 events. Participants had the flexibility to choose the events that were most interesting to them and worked with their schedule. Participants were allowed to attend more than two events per week if they desired. Events were attended by study participants, as well as a pool of cognitively healthy older adult volunteers serving as conversational partners. Volunteers were recruited from the community by word of mouth. They were required to be over the age of 50, have access to a device with reliable internet, and complete a virtual training session. During the training, volunteers were instructed on etiquette, confidentiality, and event participation. The volunteer conversation partners did not serve as facilitators but instead received the same event instructions as consented participants.

During an event, participants clicked on an event link provided to them via email to join the session without the need to enter a username and password. Participants then gathered in a main “welcome” room with the other participants and the event host. The host was a trained research staff member from the study team. The host welcomed everyone and then started a short 4 to 5 min presentation. The presentation comprised a series of pictures relating to the topic to provide a jumping-off point for the conversation. After this, the host moved everyone to a breakout room. Participants were assigned to rooms randomly. The host determined the number of breakout rooms by considering the total number of participants attending an event, with a possible range of 2–5 participants per room. Participants were given 30 min to discuss the topic, and conversation starters were provided via the chat feature to help support the conversation as needed. Breakout room sessions were not facilitated; the goal was to allow participants to have a non-intrusive conversation with their peers. However, if needed, a participant could request that the host join the room at any time by clicking the ‘request host’ button on the OneClick platform. A technical support telephone line was provided, with one to two research staff available at each event to help with any technical issues. At the end of the event, participants were asked to complete a brief survey where they had an opportunity to rate the topic and conversation and share any additional feedback in an open-ended comment field.
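The breakout-room step can be illustrated with a small sketch. The text does not describe the exact rule the host used to choose the number of rooms, so this example assumes the smallest number of rooms that keeps every room at five or fewer attendees, followed by a random shuffle. The function name `assign_breakout_rooms` is hypothetical.

```python
import math
import random


def assign_breakout_rooms(attendees, rng, max_size=5):
    """Randomly split attendees into rooms of at most `max_size` people.

    Uses the fewest rooms possible (an assumption), then deals a shuffled
    list round-robin so room sizes differ by at most one.
    """
    if len(attendees) < 2:
        raise ValueError("at least two attendees are needed for a conversation")
    n_rooms = math.ceil(len(attendees) / max_size)
    shuffled = list(attendees)
    rng.shuffle(shuffled)
    return [shuffled[i::n_rooms] for i in range(n_rooms)]


rooms = assign_breakout_rooms([f"P{i}" for i in range(11)], random.Random(0))
```

With eleven attendees this yields three rooms of four, four, and three people, which stays within the 2–5 range described above.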

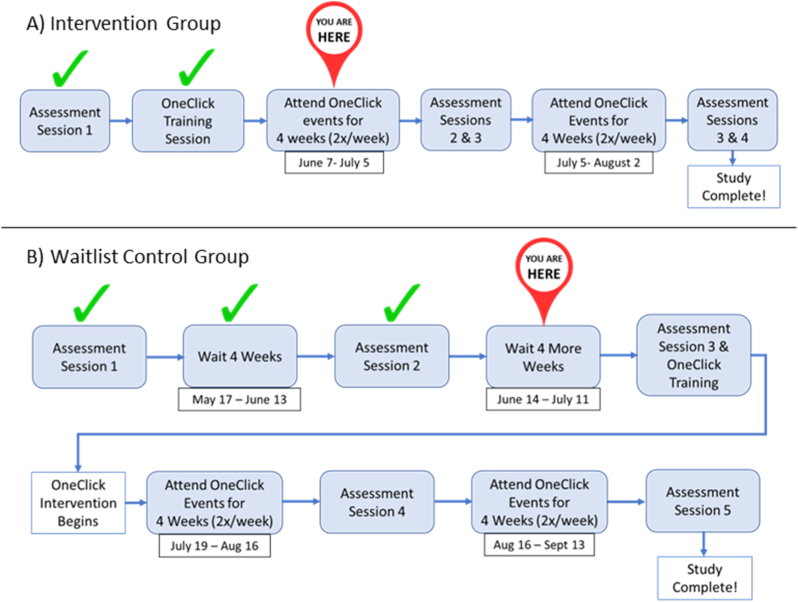

2.6. Participant retention

We employed several retention strategies to minimize participant attrition. General strategies included responding promptly to emails and phone calls, immediately addressing issues that arose during the intervention (e.g., poor audio quality) to ensure participant satisfaction, and providing participants with an easy-to-understand document, referred to as a “Participant Roadmap” (Fig. 3). This roadmap was updated after each study assessment and illustrated where participants were in the study process, along with important dates. The dates were unique to each participant, depending on their intervention start date, which was defined as the day they completed their OneClick training session.

Fig. 3. Participant roadmap examples.

2.7. Study assessment procedures

2.7.1. Measures

A battery of measures was administered over the course of the study, including 1) participant characterization measures, 2) primary outcome measures, 3) secondary outcome measures, and 4) technology-based measures. A description of each measure is in Table 1.

Table 1. Description of measures.

| Participant Characterization Measures | | | |
|---|---|---|---|
| Measure | Construct | Description | Scoring |
| TechSAge Background Questionnaire [33] – Modified | General history and demographics | Includes questions related to demographic information, housing and transportation, occupational status, health information, perceptual abilities, and physical mobility. | Mix of multiple-choice, yes/no, and open-ended questions. |
| Montreal Cognitive Assessment [31] | Cognitive status | Standardized cognitive screening measure used to evaluate global cognitive status. Cognitive functioning is assessed across various domains, including visuospatial/executive, naming, memory, attention, language, abstraction, and orientation. | Types of responses vary. Scores range from 0 to 30, with higher scores indicating better cognition. Education-adjusted cutoff score for exclusion is < 20. |
| Geriatric Depression Scale-Short Form [32] | Depressive symptoms | Questions probe for any depressive symptoms the respondent may be experiencing, specifically reflecting over the past week. | Yes/no questions. Scores range from 0 to 15, with higher scores indicating elevated depressive symptoms. Cutoff for exclusion is > 9. |
| Logical Memory – Delayed Story Recall (Story A) [37] | Episodic memory | Well-established measure to assess memory. Individuals are read a short passage (Anna Thompson story) and asked to recall as many details as possible both immediately and after a delay. | Scores range from 0 to 25 points, with higher scores indicating better verbal episodic memory. |
| Category Fluency [38] | Language and executive functioning | Participants are asked to name as many words as possible that belong to a given category (e.g., animals) in 1 min. | Score is the total number of correct words named, with higher scores indicating better language/executive skills. |

| Primary Outcome Measures | | | |
|---|---|---|---|
| Measure | Construct | Description | Scoring |
| Friendship Scale [40] | Perceived social connectedness | A short scale measuring six dimensions that contribute to social isolation. | 5-point Likert scale (0 = not at all, 1 = occasionally, 2 = about half the time, 3 = most of the time, 4 = almost always). Scores range from 0 to 24, with higher scores indicating better social connectedness. |
| UCLA Loneliness Scale-Version 3 [39] | Subjective feelings of loneliness | Measures how often a person feels disconnected from others. | 4-point Likert scale (1 = never, 2 = rarely, 3 = sometimes, 4 = always). Scores range from 20 to 80, with higher scores indicating greater degrees of loneliness. |
| Quality of Life [41] | Perceived quality of life | Respondents rate the quality of various aspects of their life, including physical health, energy, family, money, and others. This measure was developed specifically for individuals with cognitive impairment. One item pertaining to marriage was removed due to a high number of not-applicable responses. | 4-point Likert scale (1 = poor, 2 = fair, 3 = good, 4 = excellent). With the marriage item removed, total scores across the remaining 12 items range from 12 to 48, with higher scores indicating higher quality of life. |

| Secondary Outcome Measures | | | |
|---|---|---|---|
| Measure | Construct | Description | Scoring |
| Lubben Social Network Scale [43] | Social network structure | Respondents provide the size of their social networks for family and friends and the frequency of their interactions with them. | Each response is scored from 0 to 5, with total scores ranging from 0 to 30. Higher scores indicate larger social networks and more frequent interactions. |
| Social Activity Frequency (adapted from Refs. [26,44]) | Social activity engagement | Respondents rate how often during the past year they have engaged in nine different activities that involve social interaction (see the note below the table). | 5-point Likert scale (1 = once a year or less, 2 = several times a year, 3 = several times a month, 4 = several times a week, 5 = every day or almost every day). Scores range from 9 to 45, with higher scores indicating more frequent social participation. |

| Technology-Based Measures | | | |
|---|---|---|---|
| Measure | Construct | Description | Scoring |
| Perceived Ease of Use (adapted from Ref. [46]) | Ease of use of the OneClick system | Scale to measure ease of use of a system. | 7-point Likert scale (1 = extremely unlikely, 2 = quite unlikely, 3 = slightly unlikely, 4 = neither, 5 = slightly likely, 6 = quite likely, 7 = extremely likely). Scores are averaged across 6 items, ranging from 1 to 7. Higher scores indicate better ease of use. |
| Perceived Usefulness (adapted from Ref. [46]) | Usefulness of the OneClick system | Scale to measure the usefulness of a system. | Uses the same 7-point Likert scale as above. Scores are averaged across 6 items, ranging from 1 to 7. Higher scores indicate better usefulness. |
| Perceived Enjoyment (adapted from Ref. [47]) | Enjoyment of the OneClick system | Scale to measure one's enjoyment of a system. | Uses the same 7-point Likert scale as above. Scores are averaged across 4 items, ranging from 1 to 7. Higher scores indicate greater enjoyment. |
| Intention to Use (adapted from Ref. [48]) | Intention to use the OneClick system | Scale to measure one's intention to adopt a system. | Uses the same 7-point Likert scale as above. The score is from one item ranging from 1 to 7. Higher scores indicate a greater likelihood of adoption. |
| System Usability Scale [45] | Usability of the OneClick system | Scale to measure one's perceived usability of a system. | 5-point Likert scale (1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, 5 = strongly agree). Scores range from 0 to 100. A score of 68 is considered average. |
| Computer Proficiency Questionnaire – 12 [49] | Aptitude for using computers | Assesses an individual's experience level with computers as well as how comfortable and successful they feel while using them. | 5-point Likert scale (1 = never tried, 2 = not at all, 3 = not very easily, 4 = somewhat easily, 5 = very easily). Total scores range from 6 to 30, with higher scores indicating higher computer proficiency. |
| Post-Engagement Interview | Opinions regarding OneClick and the social engagement intervention | Locally developed qualitative semi-structured interview. | Interviews will be transcribed and analyzed using thematic analysis. |

Note: The Social Activity Frequency measure originally included 10 items, but 1 item was dropped due to numerous “Do not wish to respond” answers (see Section 3.2).

Participant characterization measures were chosen to capture important demographic and background information (TechSAge Background Questionnaire [33] – Modified) and to characterize mood (Geriatric Depression Scale [32]), episodic memory (Logical Memory [37]), and language (category fluency [38]). These measures may also serve as potential moderating variables during analysis. With the exception of category fluency, participant characterization measures were collected at only one time point, during the baseline assessment. Given that a previous social engagement intervention found changes in category fluency [23], this measure was also collected during the technology-based measure assessments.

The primary and secondary outcome measures were focused on capturing potential changes in social health. Primary outcome measures included two measures of social engagement, the UCLA Loneliness Scale [39] and the Friendship Scale [40], as well as a global measure of quality of life [41]. These measures are widely used in the context of social engagement and were utilized during our field trial [35]. Secondary outcome measures were guided by a published framework of social engagement in older adults with cognitive impairment [42], which outlined the importance of considering both social network and social activity aspects of social engagement. We used the well-established Lubben Social Network Scale [43] to measure social networks. Few validated scales exist to measure social activity participation, so we developed a measure of social activity by adapting items from the Social Engagement Scale [44] and by considering activities in which older adults with and without cognitive impairment engage [26].

The technology-based measures focused on evaluating the platform and the use of the technology for the purpose of social engagement. The platform was assessed using the System Usability Scale [45], an industry standard for measuring usability. To characterize additional factors that impact one's likelihood of adopting a new technology, we included a subset of questionnaires to measure aspects of technology acceptance, including perceived ease of use and usefulness (modified from Ref. [46]), perceived enjoyment (modified from Ref. [47]) and intention to use (modified from Ref. [48]). We included the Computer Proficiency Questionnaire [49] to assess participants' ability to complete various tasks on the computer (sending email, using a calendar, etc.).
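For readers unfamiliar with how the System Usability Scale arrives at a 0 to 100 score from ten 5-point items, the sketch below applies the scale's standard scoring rule. The helper name `sus_score` is hypothetical, and this is not taken from the study's data pipeline.

```python
def sus_score(responses):
    """Score ten SUS items (each 1-5) on the standard 0-100 scale.

    Odd-numbered items are positively worded and contribute (response - 1).
    Even-numbered items are negatively worded and contribute (5 - response).
    The summed contributions (0-40) are multiplied by 2.5.
    """
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("expected ten responses, each an integer from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1
        else:
            total += 5 - response
    return total * 2.5
```

A respondent who answers "neutral" on every item scores 50, which sits below the benchmark of 68 noted in Table 1.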

2.7.2. Assessment schedule

Primary and secondary outcome measures were administered to both groups at baseline, week-4, and week-8, and to those in the waitlist control group at week-12 and week-16. Technology-based measures were first administered to both groups following their OneClick training session, thus serving as their baseline for these measures. These measures were administered again to those in the intervention group at week-4 and week-8 and to those in the waitlist control group during their extension at week-12 and week-16.
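The assessment schedule can be written down as a small lookup structure, which may help when checking which measure sets are due at a given visit. The dictionary layout, the timepoint labels, and the function name `measure_sets_due` are illustrative assumptions, and "post-training" stands for the assessment that follows the OneClick training session.

```python
# Timepoints at which each set of measures is administered, by group.
SCHEDULE = {
    "intervention": {
        "outcomes": ["baseline", "week-4", "week-8"],
        "technology": ["post-training", "week-4", "week-8"],
    },
    "waitlist": {
        "outcomes": ["baseline", "week-4", "week-8", "week-12", "week-16"],
        "technology": ["post-training", "week-12", "week-16"],
    },
}


def measure_sets_due(group: str, timepoint: str) -> list[str]:
    """Return the measure sets administered to `group` at `timepoint`."""
    return [
        name
        for name, timepoints in SCHEDULE[group].items()
        if timepoint in timepoints
    ]
```

For example, `measure_sets_due("waitlist", "week-4")` returns only the outcome measures, because the waitlist group has not yet used OneClick at that point.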

2.7.3. Masking

All assessors administering primary and secondary outcome measures at week-4 and week-8 assessments were masked to group assignment. Given the nature of behavioral interventions, participants and intervention personnel delivering the social engagement events and providing technological support were not masked to group assignment. To mitigate the risk of unmasking during outcomes assessments, participants were asked to keep their group assignment to themselves and were reminded by the assessor to do so at the start of the assessment session. Any accidental unmasking was documented. The technology-based measures required the assessor to know the group assignment because these measures pertained to participants' experience with OneClick during the social engagement events. Thus, all technology-based measures were administered by an unmasked assessor at a separate time. Similarly, it was not feasible to maintain masking during the extension period, so all assessments completed at week-12 and week-16 were unmasked.

2.7.4. Assessor training and fidelity

All assessments were completed by trained research staff following manualized protocols. Assessors were trained and overseen by clinicians and researchers with expertise working with older adults. In addition, special efforts were made to train all staff in best practices for communicating with individuals with cognitive challenges. All baseline assessment sessions were audio recorded, and fidelity was checked for 20% of all assessments to evaluate the consistency of administration [50].

2.7.5. Data collection and management

Study data were collected and managed using REDCap [34] electronic data capture tools hosted at the University of Illinois Urbana-Champaign. All surveys were completed online using REDCap with the assessor via video chat or phone.

2.8. Statistical analysis

To measure the main effects of the intervention on primary and secondary outcomes, we will compare the intervention group to the waitlist control group. Dependent variables will include change scores in primary and secondary outcomes from baseline to week-4 and from baseline to week-8. In addition, we will perform a separate analysis of covariance with age and cognitive ability (MoCA and Logical Memory - Delayed Recall) as covariates. We plan to implement an intent-to-treat analysis where all participants who were allocated to either the intervention or waitlist group will be included in the analysis, regardless of the number of sessions attended or their completion of assessments.

Power analyses based on independent two-sample t-tests were conducted to detect a medium-sized intervention effect (Cohen's d = 0.5), based on past research on similar behavioral interventions [5]. An initial sample size of 100 (50 per group) was estimated to provide sufficient power (0.8) to conduct the proposed non-exploratory analyses. Accounting for an attrition rate of up to 20%, the final intended sample size was 120 participants (60 per group).
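The sample-size arithmetic can be sketched with the standard normal approximation for comparing two group means. The significance level (0.05) and the sidedness of the test are not stated in the text and are assumptions here, as are the function names. Exact t-based calculations give slightly larger values than this approximation.

```python
import math
from statistics import NormalDist


def n_per_group(d, power=0.8, alpha=0.05, two_sided=True):
    """Approximate participants per group needed to detect effect size d.

    Normal approximation: n = 2 * ((z_alpha + z_power) / d) ** 2.
    alpha and two_sided are assumptions, not values taken from the study.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2) if two_sided else z.inv_cdf(1 - alpha)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


def inflate_for_attrition(total, attrition):
    """Add the expected attrition fraction on top of the required total."""
    return total + round(total * attrition)


print(n_per_group(0.5, two_sided=False))  # 50 per group under a one-sided test
print(n_per_group(0.5, two_sided=True))   # 63 per group under a two-sided test
print(inflate_for_attrition(100, 0.20))   # 120
```

Under these assumptions, a one-sided test yields 50 per group, while a two-sided test would require about 63 per group. Adding 20% to a total of 100 gives the stated target of 120.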

3. Results

3.1. Sample and baseline characteristics

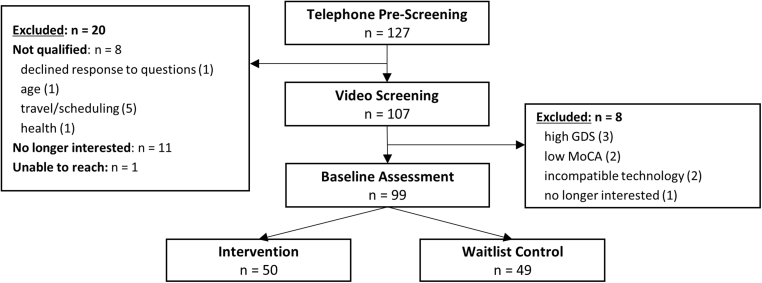

Consistent with the Consolidated Standards of Reporting Trials (CONSORT) [51], a diagram outlining the participant flow from recruitment to allocation is presented in Fig. 4. We recruited and pre-screened a total of 127 potential participants. Of these, 20 individuals were excluded prior to the video screening. The primary reasons for exclusion at this point were that they were no longer interested (55%) or had difficulty with the time commitment and scheduling (25%). Following the video screening, another eight potential participants were excluded. A total of 99 participants were enrolled; 68 were categorized as having normal cognition, and 31 were classified as presenting with mild cognitive challenges based on MoCA scores. Demographics and baseline measures for both the intervention and waitlist control groups are reported in Table 2. Most participants were female (83.9%), identified as White (86.9%), were retired (77.8%), and lived alone (53.5%). Eighty-seven percent wore corrective visual aids, 19.2% wore hearing aids, and 15.2% used a walking aid.

Fig. 4.

CONSORT diagram.

Table 2.

Baseline characteristics.

| Participant Characterization Measures | Intervention (n = 50) | Waitlist Control (n = 49) | Statistic |
|---|---|---|---|
| Age | 73.5 (6.3), 65-90 | 74.6 (7.1), 65-99 | t(97) = −0.82; p = 0.41 |
| Education | | | χ²(6) = 6.54; p = 0.36 |
| High School | 0.0 % | 2.0 % | |
| Vocational | 1.0 % | 0.0 % | |
| Some College | 6.1 % | 4.0 % | |
| Bachelor's | 10.1 % | 16.2 % | |
| Master's | 24.2 % | 22.2 % | |
| Doctorate | 8.8 % | 5.1 % | |
| Do not wish to respond | 1.0 % | 0.0 % | |
| Sex | | | χ²(1) = 2.30; p = 0.13 |
| Female | 38.4 % | 43.4 % | |
| Male | 12.1 % | 6.1 % | |
| Race | | | χ²(4) = 5.70; p = 0.22 |
| Black | 2.0 % | 5.1 % | |
| White | 46.5 % | 40.4 % | |
| More than one | 1.0 % | 1.0 % | |
| Other (write-in) | 0.0 % | 3.0 % | |
| Do not wish to respond | 1.0 % | 0.0 % | |
| Living Situation | | | χ²(4) = 2.17; p = 0.71 |
| Alone | 25.3 % | 28.3 % | |
| Friend | 2.0 % | 1.0 % | |
| Spouse | 21.2 % | 16.2 % | |
| Roommate/Family | 1.0 % | 3.0 % | |
| More than one | 1.0 % | 1.0 % | |
| Self-Reported Health (rated 1–5, from poor to excellent) | 3.4 (0.9), 1-5 | 3.3 (0.8), 2-5 | t(97) = 0.30; p = 0.76 |
| Self-Reported Memory (rated 1–5, from poor to excellent) | 3.3 (0.8), 2-5 | 3.4 (0.7), 2-5 | t(97) = −0.71; p = 0.48 |
| Geriatric Depression Scale (possible range: 0–15) | 1.7 (1.9), 0-8 | 1.5 (1.7), 0-7 | t(97) = 0.53; p = 0.60 |
| MoCA (possible range: 0–30) | 26.7 (2.5), 20-30 | 26.6 (2.3), 22-30 | t(97) = −0.60; p = 0.55 |
| Logical Memory Delayed (possible range: 0–25) | 12.8 (4.0), 3-22 | 13.7 (4.2), 0-21 | t(97) = −0.50; p = 0.62 |
| Category Fluency - Animals | 21.0 (6.5), 9-35 | 21.6 (4.1), 8-30 | t(97) = −0.48; p = 0.63 |

| Primary and Secondary Outcome Measures | Intervention (n = 50) | Waitlist Control (n = 49) | Statistic |
|---|---|---|---|
| Friendship Scale (possible range: 0–24) | 20.4 (3.3), 10-24 | 20.6 (3.3), 10-24 | t(97) = −0.27; p = 0.79 |
| UCLA Loneliness Scale (possible range: 20–80) | 37.9 (8.9), 22-56 | 37.1 (9.4), 20-57 | t(97) = 0.46; p = 0.65 |
| Quality of Life (possible range: 13–48) | 37.5 (5.2), 22-48 | 37.8 (5.1), 27-48 | t(97) = −0.28; p = 0.78 |
| Lubben Social Network Index (possible range: 0–30) | 18.7 (4.4), 8-30 | 19.1 (4.5), 6-27 | t(97) = −0.37; p = 0.71 |
| Social Activity Questionnaire (possible range: 9–45) | 26.8 (4.0), 17-33 | 28.0 (4.0), 17-35 | t(97) = −1.88; p = 0.06 |

| Technology-Based Measuresᵃ | Intervention (n = 48) | Waitlist Control (n = 41) | Statistic |
|---|---|---|---|
| Perceived Ease of Use (possible range: 1–7) | 5.7 (0.8), 4-7 | 5.9 (0.7), 4.7–7 | t(87) = 0.43; p = 0.67 |
| Perceived Usefulness (possible range: 1–7) | 5.0 (0.9), 2.8–6.3 | 4.7 (1.3), 1-7 | t(87) = 1.13; p = 0.26 |
| Perceived Enjoyment (possible range: 1–7) | 5.8 (0.7), 4-7 | 5.7 (1.2), 1-7 | t(87) = 0.59; p = 0.56 |
| Intention to Use (possible range: 1–7) | 5.7 (1.1), 2-7 | 5.6 (1.5), 1-7 | t(87) = 0.37; p = 0.71 |
| Computer Proficiency Questionnaire (possible range: 6–30) | 27.0 (3.6), 16.5–30 | 27.9 (2.8), 20.5–30 | t(87) = −1.30; p = 0.20 |
| System Usability Scale (possible range: 0–100) | 73.96 (13.9), 50–97.5 | 70.24 (14.9), 42.5–100 | t(87) = 1.22; p = 0.23 |

Note: For quantitative variables, group cells represent mean (standard deviation), range; statistics were derived from independent sample t-tests. For categorical variables, group cells represent percentage of the entire sample (n = 99); statistics were derived from Pearson Chi-square tests.
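To make the two test types named in the note concrete, the sketch below recomputes one statistic of each kind from the summary values in Table 2: the pooled-variance t statistic for Age and the Pearson chi-square statistic for Sex. The function names are hypothetical. The Sex counts are an assumption, obtained by converting the reported percentages of the full sample (n = 99) to whole participants.

```python
from math import sqrt


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Independent-samples t statistic with pooled variance; df = n1 + n2 - 2."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df
    standard_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / standard_error, df


def pearson_chi2(table):
    """Pearson chi-square statistic and df for a contingency table (list of rows)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = sum(
        (observed - rt * ct / total) ** 2 / (rt * ct / total)
        for row, rt in zip(table, row_totals)
        for observed, ct in zip(row, col_totals)
    )
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, df


# Age row: mean (SD) and n for intervention vs. waitlist control.
t_age, df_age = pooled_t(73.5, 6.3, 50, 74.6, 7.1, 49)

# Sex rows as counts [female, male] per group (assumed from percentages of n = 99).
sex_counts = [[38, 12], [43, 6]]
chi2_sex, df_sex = pearson_chi2(sex_counts)

print(round(t_age, 2), df_age)     # -0.82 97
print(round(chi2_sex, 2), df_sex)  # 2.3 1
```

Under these assumptions, the recomputed values agree with the reported t(97) = −0.82 for Age and χ²(1) = 2.30 for Sex. Because the inputs are rounded summary values, the sketch yields only approximate statistics.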

ᵃ The technology-based measures do not reflect the entire enrollment sample, as they were completed not during the initial baseline assessment but following the OneClick training session.

3.2. Missing data

For the outcome and technology-based measures, participants could choose “Do not wish to respond” for any item on the questionnaires and surveys, which allowed them to decline to answer a question. Other than instances where this choice was made, there were no missing responses. If fewer than 5% of participants responded “Do not wish to respond” to a particular item, missing item scores were imputed with the mean response for that item from the entire sample. Only one measure, the locally developed Social Activity Questionnaire, included an item to which more than 5% of participants declined to respond ("How often do you do paid work in the community?"). In this case, the item was dropped from the measure across the entire sample.
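The item-level rule described above can be sketched as follows. The data layout, the use of `None` for a declined response, and the function name are assumptions made for illustration. The text does not say how an item declined by exactly 5% of participants was handled, so the sketch imputes in that case.

```python
from statistics import mean

DECLINED = None    # stand-in for a "Do not wish to respond" answer (assumption)
THRESHOLD = 0.05   # 5% of the entire sample


def handle_declined(responses):
    """Apply the item-level rule to a list of {item: score or None} dicts.

    Items declined by more than THRESHOLD of participants are dropped for
    everyone; otherwise declined scores are replaced by the item's sample mean.
    Returns (cleaned_responses, dropped_items).
    """
    n = len(responses)
    cleaned = [dict(r) for r in responses]  # leave the input untouched
    dropped = []
    for item in list(responses[0].keys()):
        answered = [r[item] for r in responses if r[item] is not DECLINED]
        declined_rate = (n - len(answered)) / n
        if declined_rate > THRESHOLD:
            for r in cleaned:
                del r[item]
            dropped.append(item)
        elif len(answered) < n:
            item_mean = mean(answered)
            for r in cleaned:
                if r[item] is DECLINED:
                    r[item] = item_mean
    return cleaned, dropped


# Hypothetical sample of 40 participants and two items.
sample = [{"q1": 3, "q2": 2} for _ in range(40)]
sample[0]["q1"] = DECLINED          # 1 of 40 declined (2.5%): impute
for person in sample[:4]:
    person["q2"] = DECLINED         # 4 of 40 declined (10%): drop the item

cleaned, dropped = handle_declined(sample)
print(cleaned[0], dropped)
```

In this hypothetical sample, the single declined `q1` response is replaced by the item mean, while `q2` is removed from every record.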

4. Discussion and project challenges

Results from this RCT will inform our understanding of the benefits of a technology-based social engagement intervention for older adults with and without cognitive challenges. In addition, findings will aid in refining the content and delivery of our social engagement intervention, as well as the OneClick platform, so that the intervention can be delivered to large groups of older adults living in geographically distant locations.

Our intended sample size was 120 participants; we enrolled 99 older adults, distributed approximately equally across our two groups and stratified by cognitive status. Recruitment challenges prevented us from reaching our intended sample size. The primary challenge was potential participants' ability to commit to the study for 8–16 weeks and their availability to attend two social engagement events per week for 8 weeks. Given that the events were synchronous, we needed to find a variety of days and times that worked for a range of older adults (e.g., both working and retired) while maintaining feasible staffing requirements in the context of a research setting. We recruited individuals from all over the country, so four time zones needed to be accommodated. We scheduled events five days a week, including one weekend day, and varied event offerings from morning to evening. To reduce potential attrition due to scheduling conflicts, we first sent an FAQ sheet outlining the study requirements and event schedule to interested participants before they signed up for screening. Although our carefully planned schedule worked for many, there were still a number of potential participants who later reported they were unable to participate due to the timing. If this intervention is adopted by home and community-based organizations, the schedules of the clientele should be carefully considered before finalizing an event schedule.

The COVID-19 pandemic required us to move our baseline assessment and OneClick training session to remote administration. We had planned to assess individuals in their homes or in our research facilities and conduct in-person training to use the OneClick platform. This approach was not safe at the time of the study kickoff, so all procedures were completed online. Many hours were spent training staff on how to talk through technological challenges over the phone with individuals who were just developing experience with video technology or who had cognitive impairment. Over time, this process was refined [36], and we enrolled several individuals with lower levels of technology experience, as indicated by the range on the Computer Proficiency Questionnaire [49] (Table 2). Despite some initial challenges, the move to remote administration of assessment and training provided us the opportunity to reach participants from around the country, improving the geographical representation of our sample and supporting the potential scalability of such interventions.

Reflecting the success of our randomization process, the two groups did not differ at baseline on any measure, including demographics, primary and secondary outcome measures, and technology-based measures. It is, however, worth noting that our enrolled sample had a relatively high baseline level of social engagement, with many participants already performing at ceiling on some of our social engagement measures. The outcomes from this trial will inform the development and refinement of technology-based social engagement interventions to support meaningful social interactions in older adults.

Clinical trial registration

This trial was registered on ClinicalTrials.gov (NCT05380180).

Funding statement

This research was supported by the National Institutes of Health through the National Institute on Aging Small Business Innovation Research program [R43AG059450; R44AG059450].

CRediT authorship contribution statement

Elizabeth A. Lydon: Writing – review & editing, Writing – original draft, Visualization, Methodology, Investigation, Formal analysis. George Mois: Writing – review & editing, Project administration. Shraddha A. Shende: Writing – review & editing, Methodology, Investigation. Dillon Myers: Software, Resources, Methodology, Funding acquisition, Conceptualization. Margaret K. Danilovich: Writing – review & editing, Resources, Methodology, Investigation. Wendy A. Rogers: Writing – review & editing, Supervision, Resources, Methodology, Funding acquisition, Conceptualization. Raksha A. Mudar: Writing – review & editing, Writing – original draft, Supervision, Resources, Methodology, Funding acquisition, Conceptualization.

Declaration of competing interest

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests:

Dillon Myers is a cofounder and member of the Board of Advisors for OneClick. He was not involved in any activities related to recruitment, data collection, or analysis.

Acknowledgments

The authors thank LifeLoop (formerly iN2L) for their assistance in developing event content; James Graumlich for his insights regarding the RCT design; and Alan Gibson for his contribution to the development and optimization of the OneClick platform. We would also like to thank the post-doctoral researchers, staff, and students involved in the development and implementation of the project, including Xia Yu S. Chen, Saahithya Gowrishankar, Sarah E. Jones, Madina Khamzina, Allura Lothary, Vincent Mathias, John Marendes, Noah Olivero, Kori Trotter, Michael Varzino, Vinh Vo, Teresa S. Warren, and Sharbel Yako. We also appreciate the technical support of the OneClick staff, including Gabby Hersey, Mac Dziedziela, and Manish Gautam.

References

- 1. National Academies of Sciences, Engineering, and Medicine. The National Academies Press; Washington, DC: 2020. Social Isolation and Loneliness in Older Adults: Opportunities for the Health Care System.

- 2. Perissinotto C.M., Stijacic Cenzer I., Covinsky K.E. Loneliness in older persons: a predictor of functional decline and death. Arch. Intern. Med. 2012;172:1078–1083. doi: 10.1001/archinternmed.2012.1993.

- 3. Marioni R.E., Proust-Lima C., Amieva H., Brayne C., Matthews F.E., Dartigues J.F., Jacqmin-Gadda H. Social activity, cognitive decline and dementia risk: a 20-year prospective cohort study chronic disease epidemiology. BMC Publ. Health. 2015;15:1–8. doi: 10.1186/s12889-015-2426-6.

- 4. Pantell M., Rehkopf D., Jutte D., Syme S.L., Balmes J., Adler N. Social isolation: a predictor of mortality comparable to traditional clinical risk factors. Am. J. Publ. Health. 2013;103:2056–2062. doi: 10.2105/AJPH.2013.301261.

- 5. Czaja S.J., Moxley J.H., Rogers W.A. Social support, isolation, loneliness, and health among older adults in the PRISM randomized controlled trial. Front. Psychol. 2021;12 doi: 10.3389/FPSYG.2021.728658.

- 6. Merchant R.A., Liu S.G., Lim J.Y., Fu X., Chan Y.H. Factors associated with social isolation in community-dwelling older adults: a cross-sectional study. Qual. Life Res. 2020;29:2375–2381. doi: 10.1007/S11136-020-02493-7.

- 7. Pettigrew S., Donovan R., Boldy D., Newton R. Older people's perceived causes of and strategies for dealing with social isolation. Aging Ment. Health. 2014;18:914–920. doi: 10.1080/13607863.2014.899970.

- 8. Smith T. “On their own”: social isolation, loneliness and chronic musculoskeletal pain in older adults. Qual. Ageing. 2017;18:87–92. doi: 10.1108/QAOA-03-2017-0010.

- 9. Shukla A., Harper M., Pedersen E., Goman A., Suen J.J., Price C., Applebaum J., Hoyer M., Lin F.R., Reed N.S. Hearing loss, loneliness, and social isolation: a systematic review. Otolaryngology-Head Neck Surg. (Tokyo) 2020;162:622–633. doi: 10.1177/0194599820910377.

- 10. Mick P., Kawachi I., Lin F.R. The association between hearing loss and social isolation in older adults. Otolaryngology-Head Neck Surg. (Tokyo) 2014;150:378–384. doi: 10.1177/0194599813518021.

- 11. Bott A., Saunders G. A scoping review of studies investigating hearing loss, social isolation and/or loneliness in adults. Int. J. Audiol. 2021;60:30–46. doi: 10.1080/14992027.2021.1915506.

- 12. Coyle C.E., Steinman B.A., Chen J. Visual acuity and self-reported vision status: their associations with social isolation in older adults. J. Aging Health. 2016;29:128–148. doi: 10.1177/0898264315624909.

- 13. Parikh P.K., Troyer A.K., Maione A.M., Murphy K.J. The impact of memory change on daily life in normal aging and mild cognitive impairment. Gerontol. 2016;56:877–885. doi: 10.1093/geront/gnv030.

- 14. Zhaoyang R., Sliwinski M.J., Martire L.M., Katz M.J., Scott S.B. Features of daily social interactions that discriminate between older adults with and without mild cognitive impairment. J. Gerontol.: Ser. Bibliogr. 2021 doi: 10.1093/geronb/gbab019.

- 15. Kotwal A.A., Kim J., Waite L., Dale W. Social function and cognitive status: results from a US nationally representative survey of older adults. J. Gen. Intern. Med. 2016;31:854–862. doi: 10.1007/s11606-016-3696-0.

- 16. Deng J.-H., Huang K.-Y., Hu X.-X., Huang X.-W., Tang X.-Y., Wei X., Feng L., Lu G.-D. Midlife long-hour working and later-life social engagement are associated with reduced risks of mild cognitive impairment among community-living Singapore elderly. J. Alzheim. Dis. 2019;67:1067–1077. doi: 10.3233/JAD-180605.

- 17. Nygård L., Kottorp A. Engagement in instrumental activities of daily living, social activities, and use of everyday technology in older adults with and without cognitive impairment. Br. J. Occup. Ther. 2014;77:565–573. doi: 10.4276/030802214X14151078348512.

- 18. de Vries B., Johnson C. The death of friends in later life. Adv. Life Course Res. 2002;7:299–324. doi: 10.1016/S1040-2608(02)80038-7.

- 19. Stein J., Bär J.M., König H.H., Angermeyer M., Riedel-Heller S.G. Social loss experiences and their association with depression in old age-results of the leipzig longitudinal study of the aged (LEILA 75+) Psychiatr. Prax. 2019;46:141–147. doi: 10.1055/A-0596-9701/ID/JR774-35.

- 20. Kelly M.E., Duff H., Kelly S., McHugh Power J.E., Brennan S., Lawlor B.A., Loughrey D.G. The impact of social activities, social networks, social support and social relationships on the cognitive functioning of healthy older adults: a systematic review. Syst. Rev. 2017;6:259. doi: 10.1186/s13643-017-0632-2.

- 21. Poscia A., Stojanovic J., La Milia D.I., Duplaga M., Grysztar M., Moscato U., Onder G., Collamati A., Ricciardi W., Magnavita N. Interventions targeting loneliness and social isolation among the older people: an update systematic review. Exp. Gerontol. 2018;102:133–144. doi: 10.1016/j.exger.2017.11.017.

- 22. Dickens A.P., Richards S.H., Greaves C.J., Campbell J.L. Interventions targeting social isolation in older people: a systematic review. BMC Publ. Health. 2011;11:647. doi: 10.1186/1471-2458-11-647.

- 23. Dodge H.H., Zhu J., Mattek N.C., Bowman M., Ybarra O., Wild K.V., Loewenstein D.A., Kaye J.A. Web-enabled conversational interactions as a method to improve cognitive functions: results of a 6-week randomized controlled trial. Alzheimer's Dementia: Transl. Res. Clin. Interv. 2015;1:1–12. doi: 10.1016/j.trci.2015.01.001.

- 24. Yu K., Wild K., Potempa K., Hampstead B.M., Lichtenberg P.A., Struble L.M., Pruitt P., Alfaro E.L., Lindsley J., MacDonald M., Kaye J.A., Silbert L.C., Dodge H.H. The internet-based conversational engagement clinical trial (I-CONECT) in socially isolated adults 75+ years old: randomized controlled trial protocol and COVID-19 related study modifications. Front Digit Health. 2021;3 doi: 10.3389/fdgth.2021.714813.

- 25. Dodge H.H., Yu K., Wu C.-Y., Pruitt P.J., Asgari M., Kaye J.A., Hampstead B.M., Struble L., Potempa K., Lichtenberg P., Croff R., Albin R.L., Silbert L.C. Internet-based conversational engagement randomized controlled clinical trial (I-CONECT) among socially isolated adults 75+ years old with normal cognition or MCI: topline results. Gerontol. 2023 doi: 10.1093/geront/gnad147.

- 26. Amano T., Morrow-Howell N., Park S. Patterns of social engagement among older adults with mild cognitive impairment. J. Gerontol. B Psychol. Sci. Soc. Sci. 2020;75:1361–1371. doi: 10.1093/geronb/gbz051.

- 27. Park M.J., Park N.S., Chiriboga D.A. A latent class analysis of social activities and health among community-dwelling older adults in Korea. Aging Ment. Health. 2018;22:625–630. doi: 10.1080/13607863.2017.1288198.

- 28. Morrow-Howell N., Putnam M., Lee Y.S., Greenfield J.C., Inoue M., Chen H. An investigation of activity profiles of older adults. J. Gerontol. B Psychol. Sci. Soc. Sci. 2014;69:809–821. doi: 10.1093/geronb/gbu002.

- 29. Nie Q., Nguyen L.T., Myers D., Gibson A., Kerssens C., Mudar R.A., Rogers W.A. Design guidance for video chat system to support social engagement for older adults with and without mild cognitive impairment. Gerontechnology. 2020;20:1–15. doi: 10.4017/gt.2020.20.1.398.08.

- 30. Welsh K.A., Breitner J.C.S., Magruder-Habib K.M. Detection of dementia in the elderly using telephone screening of cognitive status. Neuropsychiatry Neuropsychol. Behav. Neurol. 1993;6:103–110.

- 31. Nasreddine Z.S., Phillips N.A., Bédirian V., Charbonneau S., Whitehead V., Collin I., Cummings J.L., Chertkow H. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 2005;53:695–699. doi: 10.1111/j.1532-5415.2005.53221.x.

- 32. Sheikh J.I., Yesavage J.A. 9/Geriatric depression scale (GDS) Clin. Gerontol. 1986;5:165–173. doi: 10.1300/J018V05N01_09.

- 33. Remillard E.T., Griffiths P.C., Mitzner T.L., Sanford J.A., Jones B.D., Rogers W.A. The TechSAge Minimum Battery: a multidimensional and holistic assessment of individuals aging with long-term disabilities. Disabil Health J. 2020;13 doi: 10.1016/j.dhjo.2019.100884.

- 34. Harris P.A., Taylor R., Thielke R., Payne J., Gonzalez N., Conde J.G. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J. Biomed. Inf. 2009;42:377–381. doi: 10.1016/J.JBI.2008.08.010.

- 35. Nguyen L.T., Nie Q., Lydon E., Myers D., Gibson A., Kerssens C., Mudar R.A., Rogers W.A. Exploring video chat for social engagement in older adults with and without cognitive impairment. Innov Aging. 2019;3:S932. doi: 10.1093/geroni/igz038.3391.

- 36. Mois G., Lydon E.A., Mathias V.F., Jones S.E., Mudar R.A., Rogers W.A. Best practices for implementing a technology-based intervention protocol: participant and researcher considerations. Arch. Gerontol. Geriatr. 2024;122 doi: 10.1016/j.archger.2024.105373.

- 37. Wechsler D. The Psychological Corporation; San Antonio, TX: 1997. WMS-III Administration and Scoring Manual.

- 38. Goodglass H., Kaplan E. The assessment of aphasia and related disorders. Contemp. Psychol.: Journal. Rev. 1972;17:614–614. doi: 10.1037/0010768.

- 39. Russell D.W. UCLA loneliness scale (version 3): reliability, validity, and factor structure. J. Pers. Assess. 1996;66:20–40. doi: 10.1207/s15327752jpa6601_2.

- 40. Hawthorne G. Measuring social isolation in older adults: development and initial validation of the friendship scale. Soc. Indicat. Res. 2006;77:521–548. doi: 10.1007/s11205-005-7746-y.

- 41. Logsdon R.G., Gibbons L.E., McCurry S.M., Teri L. Assessing quality of life in older adults with cognitive impairment. Psychosom. Med. 2002;64:510–519. doi: 10.1097/00006842-200205000-00016.

- 42. Lydon E.A., Nguyen L.T., Nie Q., Rogers W.A., Mudar R.A. An integrative framework to guide social engagement interventions and technology design for persons with mild cognitive impairment. Front. Public Health. 2022;9:2278. doi: 10.3389/FPUBH.2021.750340.

- 43. Lubben J.E. Assessing social networks among elderly populations. Fam. Community Health. 1988;11:42–52. doi: 10.1097/00003727-198811000-00008.

- 44. Morgan K., Dallosso H.M., Arie T., Byrne E.J., Jones R., Waite J. Mental health and psychological well-being among the old and the very old living at home. Br. J. Psychiatr. 1987;150:801–807. doi: 10.1192/bjp.150.6.801.

- 45. Brooke J. SUS: a quick and dirty usability scale. Usability Eval. Ind. 1995;189

- 46. Davis F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989;13:319–340. doi: 10.2307/249008.

- 47. Pikkarainen T., Pikkarainen K., Karjaluoto H., Pahnila S. Consumer acceptance of online banking: an extension of the Technology Acceptance Model. Internet Res. 2004;14:224–235. doi: 10.1108/10662240410542652.

- 48. Venkatesh V., Thong J.Y.L., Xu X. Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Q. 2012;36:157–178. doi: 10.2307/41410412.

- 49. Boot W.R., Charness N., Czaja S.J., Sharit J., Rogers W.A., Fisk A.D., Mitzner T., Lee C.C., Nair S. Computer proficiency questionnaire: assessing low and high computer proficient seniors. Gerontol. 2015;55:404–411. doi: 10.1093/geront/gnt117.

- 50. Walton H., Spector A., Tombor I., Michie S. Measures of fidelity of delivery of, and engagement with, complex, face-to-face health behaviour change interventions: a systematic review of measure quality. Br. J. Health Psychol. 2017;22:872–903. doi: 10.1111/BJHP.12260.

- 51. Schulz K.F., Altman D.G., Moher D., Group the C. CONSORT 2010 Statement: updated guidelines for reporting parallel group randomised trials. BMC Med. 2010;8:18. doi: 10.1186/1741-7015-8-18.