Abstract

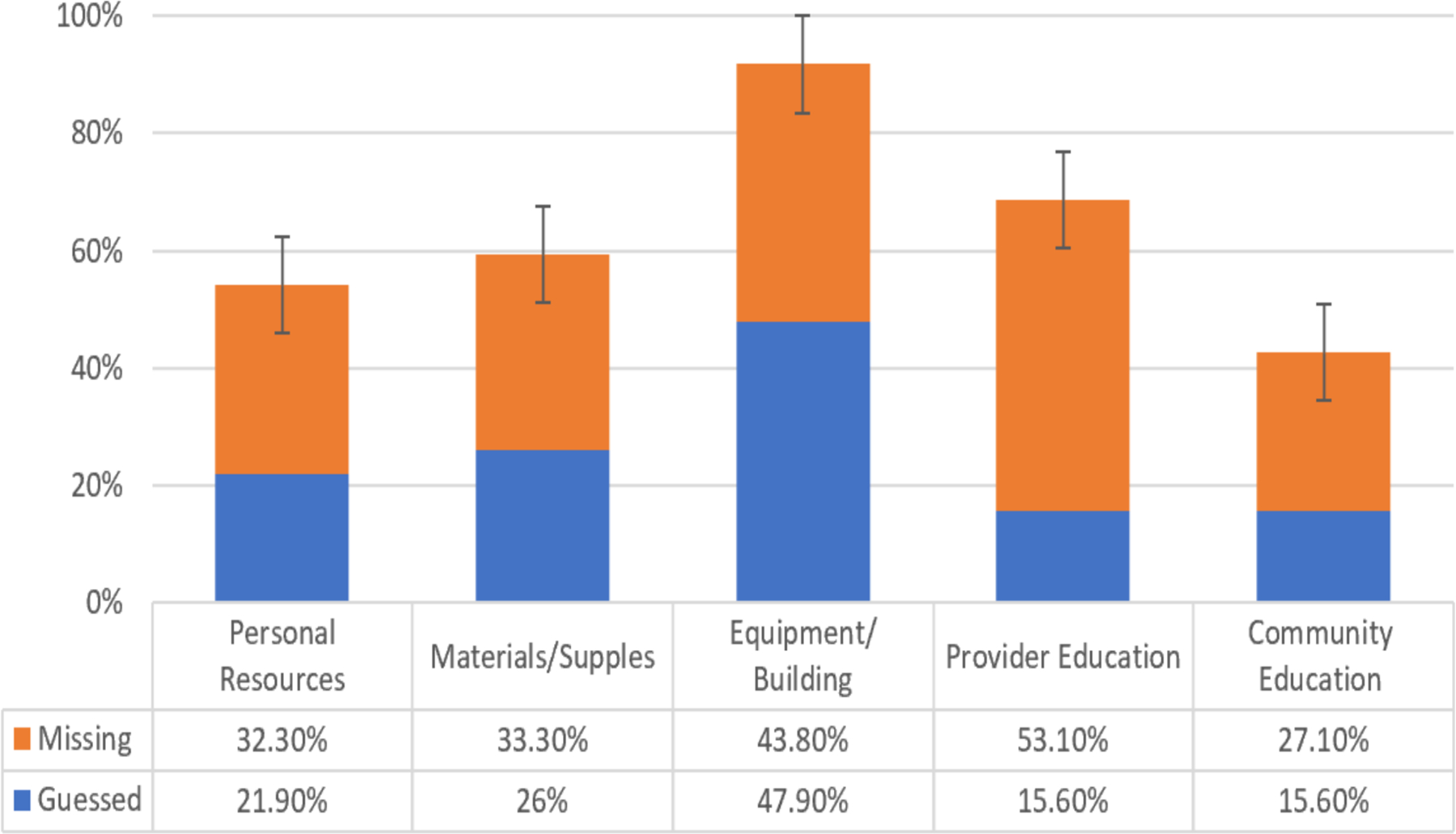

The number of clinical trials is growing rapidly, and automation of literature processing is becoming desirable but remains unresolved. Our purpose was to assess and increase the readiness of clinical trial reports for supporting automated retrieval and implementation in public health practice. We searched the Medline database for a random sample of clinical trials of HIV/AIDS management with likely relevance to public health in Africa. Five authors assessed trial reports for inclusion, extracted data, and assessed quality based on the FAIR principles of scientific data management (findable, accessible, interoperable, and reusable). Subsequently, we categorized reported results in terms of outcomes and essentials of implementation. A sample of 96 trial reports was selected. Information about the tested intervention that is essential for practical implementation was largely missing, including personnel resources needed, 32·3% (95% CI: 22·9–41·6); material/supplies needed, 33·3% (95% CI: 23·9–42·8); major equipment/building investment, 42·8% (95% CI: 33·8–53·7); methods of educating providers, 53·1% (95% CI: 43·1–63·4); and methods of educating the community, 27·1% (95% CI: 18·2–36·0). Overall, 65% of studies measured health/biologic outcomes, and only a fraction of these showed any positive effects. Several specific design elements were identified that frequently make clinical trials unrealistic and their results unusable. To make sorting and interpreting clinical trial results easier and faster, a new reporting structure, the practice- and retrieval-oriented trial outline with numeric outcomes (PROTON) table, was developed and illustrated. Many clinical trials are either inconsequential by design or report incomprehensible results. According to the latest expectations of FAIR scientific data management, all clinical trial reports should include a consistent and practical impact-oriented table of clinical trial results.

Keywords: clinical trials, public health practice

Introduction

The rapid growth of scientific literature represents a formidable challenge to practitioners and policymakers. In particular, randomized controlled clinical trials (RCTs) are increasingly sought after, as such trials are the cornerstone of drug approval, evidence-based medicine, clinical practice guidelines, and strategic decisions in public health improvement. The number of registered trials in clinicaltrials.gov more than tripled over the past 10 years.1 Practitioner readers are further challenged by the variable and inconsistent reporting of clinical trial outcomes.2 A pertinent article famously asked in its title: “Seventy-five trials and eleven systematic reviews a day: how will we ever keep up?”3

Meanwhile, outcome-oriented, numeric, and normative target setting is becoming common in public health strategic plans. The US program for ending the HIV epidemic defines specific health outcome expectations and rigorous timelines.4 The UNAIDS Program sets the 90–90–90 worldwide targets to help end the AIDS epidemic.5 In Egypt, HCV elimination is targeted by 2030.6 Tanzania set the goal of national elimination of mother-to-child HIV transmission.7 Achieving such exactly engineered public health targets demands specific, up-to-date, effective, and well-substantiated actions based on evidence from well-developed and documented clinical research studies.

Over the years, many standards have been developed, but they have achieved only limited improvement in the reporting of RCTs. Among them, the most popular is the CONSORT statement, a 25-item checklist.8 Over 50% of the core medical journals support the CONSORT statement. However, the mean proportion of adherence to it was only 68·11% among double-blind RCTs for ischemic stroke,9 48% for RCTs in trauma surgery,10 and 61·84% for RCTs evaluating oral anticoagulants.11 Meanwhile, checklists tend to mix practical messages with research quality attributes. Furthermore, the checklist requires only page identification for specific items at the time of submission, but such manuscript pagination is obsolete and invisible in the printed article.

These formidable challenges lead to a need for automation or use of artificial intelligence in literature searches and processing. Most recently, the emergent need to process COVID-related information led to the creation of CORD-19, the COVID-19 Open Research Dataset, and associated AI searches.12 However, it turned out that free-text PDF, the primary distribution format of scientific papers, is not amenable to text processing. Simultaneously, the increasingly embraced FAIR (Findable, Accessible, Interoperable, Reusable) Principles of scientific data management emphasize better access to research results by enhancing the ability of machines to automatically find and use the data, thereby better supporting the synthesis and application of research results.13

The rapid expansion of literature demands improved processing automation, but the barriers are insurmountable with the current technologies. Artificial intelligence cannot find information that is problematic to locate or entirely missing in clinical trial reports. The goal of this study was to identify practical challenges of filtering clinical trial results for targeted public health improvement and to facilitate retrieval and implementation of substantiated interventions with structural recommendations for reporting practically relevant clinical trial information.

Search Methods

Based on a randomly selected, illustrative pool of HIV/AIDS prevention and management trial reports, this study examined trial reports for retrieval characteristics and practical applicability. Prevention and treatment of HIV/AIDS is a field that illustrates the public health challenge of absorbing the latest research results in many parts of the world, among them sub-Saharan Africa. Our collaborative team of researchers and public health practitioners from Augusta University, Kilimanjaro Christian Medical University College (KCMUCo), Cairo University, and the Military College of Medical Sciences of the Tanzania People’s Defense Force collected a pool of randomized clinical trial reports and abstracted practically relevant information.

Sample

Research reports were collected based on the following eligibility criteria: i) public health intervention for the prevention or treatment of HIV/AIDS; ii) randomized controlled clinical trials; iii) completed trial with published results; and iv) published in the last 20 years. Eligible studies were selected and included randomly. This study did not intend to obtain a complete or comprehensive collection of all eligible trials in this field.

Model construct

Following a series of discussions and consensus development, our team created a practice-oriented abstraction structure for gathering essential information from clinical trial reports for presentation to clinicians. The following minimum necessary elements were identified as essential for practical application of interventions and results obtained from randomized clinical trials:

Trial identification: Literature reference and indexing as clinical trial.

Patient group: What type of patients would benefit from the change?

Old practice: What is the current or prior practice that should be discontinued?

New practice: What is the new intervention/practice? What supplies are needed for implementation? What major equipment/building investment is needed? What are the methods of educating providers? What are the methods of educating patients and community?

Outcome benefits: What is the primary between-group, numeric health impact of changing to the new practice? What is the secondary between-group, numeric health impact of changing to the new practice?
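The abstraction structure above can be sketched as a small record type. This is only an illustrative sketch, not the spreadsheet form the team used: the class name `TrialAbstraction`, its field names, and the convention that `None` marks information not found in the report are all assumptions introduced here.

```python
from dataclasses import dataclass, asdict
from typing import List, Optional


@dataclass
class TrialAbstraction:
    """One abstracted trial report (hypothetical structure).

    Field names mirror the minimum elements listed above;
    None means the report did not provide the information.
    """
    reference: str                      # trial identification: literature reference
    indexed_as_clinical_trial: bool     # trial identification: indexing
    patient_group: Optional[str] = None
    old_practice: Optional[str] = None
    new_practice: Optional[str] = None
    supplies_needed: Optional[str] = None
    equipment_or_building: Optional[str] = None
    provider_education: Optional[str] = None
    patient_community_education: Optional[str] = None
    primary_outcome: Optional[str] = None
    secondary_outcome: Optional[str] = None

    def missing_items(self) -> List[str]:
        """Names of the elements that could not be abstracted from the report."""
        return [name for name, value in asdict(self).items() if value is None]


# Hypothetical record, for illustration only (not taken from the study data).
record = TrialAbstraction(
    reference="Hypothetical trial report",
    indexed_as_clinical_trial=True,
    patient_group="HIV-positive adults",
    new_practice="Community-based adherence counseling",
)
print(record.missing_items())
```

Counting `missing_items()` across all abstracted records would give the kind of missing-information ratios the study reports, under the assumptions stated above.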

Abstraction

After identification of the selected trial reports, a PDF copy of each was obtained. Members of the research team abstracted practical information from the retrieved full trial reports in the pool. The relevant statements were copied from the trial reports into an Excel spreadsheet form (i.e., raw data). When a particular piece of information was not explicitly stated in the report but could be reasonably deduced, such information was noted accordingly (e.g., not stated but apparent use of patient education materials). Subsequently, abstracted raw data were coded in the adjacent column for easy comparison with the original statement and for formula-based counting of frequencies.

Data quality

The quality of abstraction was crosschecked by another team member in the study. Two co-authors (CA and EAB) cross-checked all data for precision throughout the following stages:

Verification of studies regarding eligibility criteria, including PubMed indexed Publication type Randomized Controlled Trial and completeness of the trial (i.e., the report is not about a partial or planned trial).

Crosschecking and elimination of duplicates; consolidation of all information into one spreadsheet; rechecking the abstraction of numeric and factual information regarding intervention resource needs and numeric biologic outcome differences (i.e., confirming that each outcome was calculated as a percentage difference between the intervention and control groups, not as a before-and-after comparison); and verifying that outcome variables, biologic or process, were categorized correctly.

Team discussion and resolution of all discrepancies through a consensus process. Any disagreements in the data extraction process were resolved by discussion between the two authors performing the quality check and, if necessary, shared with other co-authors until a consensus was achieved. Ultimately, consensus was reached on the classification of outcome measures, including biological and process parameters.

Data availability

The complete list of eligible studies, including trial reports, raw trial abstraction data, and also curated, coded data are deposited to the Augusta University Scholarly Commons: https://augusta.openrepository.com/augusta

Analyses

Initially, the collected sample of studies was described using frequency calculations. Factors impeding the practical use of clinical trials were identified through analyses of missing information ratios and reported outcome data. Ratios were calculated with 95% confidence intervals. Abstracted results were subsequently analyzed and ranked based on demonstrated biologic outcome improvement. In addition to estimating and testing the distribution of biologic outcomes, statistics were prepared regarding the number of missing and guessed items as appropriate.
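As an illustration of a ratio with its confidence interval, the sketch below uses the normal-approximation (Wald) interval. The report does not state which interval method was used, so the method is an assumption; it does appear to reproduce the interval given in the abstract for personnel resources. The count of 31 out of 96 is likewise back-calculated from the reported 32·3% and is not stated in the text, and the function name `proportion_ci` is hypothetical.

```python
import math
from typing import Tuple


def proportion_ci(events: int, total: int, z: float = 1.96) -> Tuple[float, float, float]:
    """Return (proportion, lower, upper) using the Wald (normal-approximation) interval.

    z = 1.96 corresponds to a two-sided 95% confidence level.
    """
    if total <= 0 or not 0 <= events <= total:
        raise ValueError("need 0 <= events <= total and total > 0")
    p = events / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    # Clamp to [0, 1] because the Wald interval can overshoot near the boundaries.
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


# Assumed count: 31 of 96 reports (inferred from the reported 32.3%).
p, lower, upper = proportion_ci(31, 96)
print(f"{100 * p:.1f}% (95% CI: {100 * lower:.1f}-{100 * upper:.1f})")
# prints: 32.3% (95% CI: 22.9-41.6)
```

The Wald interval is simple but can be inaccurate for small samples or proportions near 0 or 1; alternatives such as the Wilson interval are often preferred in those cases.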

Reporting design

Based on the analyses, a Practice- and Retrieval-Oriented Trial Outline with Numeric outcomes (PROTON) reporting template was developed. It was designed to facilitate retrieval, filtering for beneficial results, locating actionable information, and supporting practical implementation. Such improved reporting of randomized controlled clinical trials was expected to meet the criteria for FAIR data management: (F)indable by designating a purpose-built publication table; (A)ccessible by communicating essential information in a searchable structure; (I)nteroperable by having numeric results in a standardized structure for knowledge representation; and (R)eusable by having well-described attributes for replication.

Results

We collected 107 randomly selected clinical trials involving HIV prevention and treatment interventions. In the selection process, 11 were initially eliminated as they were indexed as controlled clinical trials but did not report any results. The resulting study sample of HIV/AIDS clinical trial reports is described in Table 1. The average age of the sampled trial reports was 7.69 years (± 4.48).

Table 1.

Description of the sample of HIV/AIDS clinical trial reports (N=96).

| Characteristic | N (%) | Characteristic | N (%) |
|---|---|---|---|
| Trial design | | Intervention | |
| RCT | 88 (90.7) | Prevention | 33 (34.0) |
| cRCT | 9 (9.3) | Treatment | 39 (40.2) |
| | | Education | 12 (12.4) |
| | | General support | 13 (13.4) |
| Site continent | | Year of publication | |
| Africa | 54 (55.7) | 1995–2005 | 6 (6.2) |
| Asia | 8 (8.2) | 2006–2010 | 10 (10.3) |
| Europe | 3 (3.1) | 2011–2015 | 42 (43.3) |
| South America | 2 (2.1) | 2016–2018 | 39 (40.2) |
| USA | 30 (30.9) | | |
| Target population | | Number of participating patients | |
| Children (exposed) | 8 (8.2) | <100 | 21 (21.7) |
| Adolescents (exposed) | 12 (12.4) | 100–1000 | 49 (50.5) |
| Women only (HIV positive) | 11 (11.3) | >1000 | 27 (27.8) |
| HIV negative adults | 16 (16.5) | | |
| Men and women (HIV positive) | 50 (51.6) | | |
| Number of centers | | Funding source | |
| Single center | 27 (28.1) | US | 73 (75.3) |
| Multi center | 57 (59.4) | UK | 11 (11.3) |
| | | Other | 13 (13.4) |

The ratios of missing practice-relevant information in the clinical trial report sample are shown in Figure 1, which portrays the availability of information deemed essential for practitioners introducing an intervention into public health practice. Most of the clinical trials mentioned some aspect of community education, but other practice-relevant information was mostly missing. In the majority of randomized clinical trial reports, essential information about the resources needed to implement the intervention, and about other aspects of its realization, was routinely missing.

Figure 1.

Ratio of missing practice relevant resource information in the clinical trial pool.

The health outcome benefits (biologic improvement) of the evaluated new interventions/practices are summarized in Table 2. A total of 62 studies measured health/biologic outcomes. Among them, 30 studies reported improved health outcomes. The remaining studies did not even attempt to measure and demonstrate health outcome improvements.

Table 2.

Tested health outcome benefits of changing to a new practice.

| Health outcome assessment (primary or secondary) | No. of studies |
|---|---|
| Drug resistance | 2 |
| Drug toxicity | 2 |
| HIV related morbidity reduction | 6 |
| HIV transmission reduction | 12 |
| Maternal CD4 changes | 1 |
| Mental health improvement | 5 |
| Nutrition/physical status improvement | 5 |
| PMTCT reduction | 5 |
| Viral suppression | 19 |
| WHO staging | 1 |
| Drug adherence | 4 |
| Behavioral benefits | 18 |
| Missing health outcome assessment | 34 |

A substantial number of studies contained biases that made them unsuitable for introduction into practice. Some reports provided multivariate analyses and graphic illustrations of differences that appear to be statistically non-significant or clinically irrelevant, without clearly stating that the differences are non-significant and therefore quite likely non-existent.

Many randomized clinical trials appear to be based on an unrealistic design. For example, when the “control arm” of a study is not the current mainstream practice, assessing the practical improvement resulting from the new intervention can become very difficult. Similarly, reporting the average viral load change resulting from an intervention is less informative than reporting the number of people who reach a specified viral load threshold. Table 3 provides a more comprehensive list of deficiencies that can make clinical trials unrealistic.

Table 3.

Design elements that can make clinical trials unrealistic.

| Domain | Design element |
|---|---|
| Population | Patient eligibility criteria too strict or esoteric |
| Process | Tested intervention is complex, not real-life |
| | Chosen control not the current mainstream practice |
| | Unrealistic compliance expectations |
| | Apparent lack of community partners |
| Outcome | Failure to measure health status outcomes |
| | Outcome measures are irrelevant in actual care |
| | Assessment is limited to short-term outcomes |
| Reporting | Between-groups result comparisons unreported |
| | Difference between averages instead of percent benefiting |
| | Non-practical, overly complex statistics, confusing analyses |

Based on the observations listed above and various pertinent recommendations, the Practice- and Retrieval-Oriented Trial Outline with Numeric outcomes (PROTON) template was developed for FAIR reporting of randomized controlled clinical trials. Table 4 presents the PROTON template together with data from an illustrative clinical trial. Such improved structural reporting of randomized controlled clinical trials meets nearly all criteria for FAIR data management. The only exception is that the descriptions of eligible patients and interventions do not rely on standard vocabularies, mainly to avoid forcing innovative interventions into potentially ill-fitting existing vocabularies.

Table 4.

Example of Practice- and Retrieval-Oriented Trial Outline with Numeric Outcomes (PROTON).

| Field | Content |
|---|---|
| Title | Improving feeding and growth of HIV-positive children through nutrition training of frontline health workers in Tanga, Tanzania |
| Reference | Sunguya BF, Mlunde LB, Urassa DP, Poudel KC, Ubuguyu OS, Mkopi NP, et al. (2017). Improving feeding and growth of HIV-positive children through nutrition training of frontline health workers in Tanga, Tanzania. BMC Pediatr 17:94. |
| Trial registration and indexing | Registration: ISRCTN65346364 and PubMed Clinical Trial |
| Target group of patients | HIV-positive children attending HIV care and treatment centers; caregivers of such children, who accompany them to the care and treatment centers and supervise their medical and nutritional care at home; and the midlevel providers who provide nutrition care to the HIV-positive children. |
| Old practice (control) | Clinical HIV-staging, adherence counseling, provision of ART, and management of opportunistic infections |
| New practice (intervention) | Nutrition training intervention; training for midlevel providers, nutrition counseling and care to caregivers |
| Supplies needed for implementation | TBD |
| Major equipment/building needed | Guessed - None |
| Methods of educating providers | Midlevel providers in the intervention arm received the 13 h and 40 min nutrition training conducted for two consecutive days. The training was organized into a total of 18 sessions, based on the standard Guidelines for an Integrated Approach to the Nutritional Care of HIV-infected Children (6 months to 14 years) by the World Health Organization. |
| Methods of patient and community education | TBD |

NUMERIC HEALTH OUTCOMES

Binary outcome events: Underweight (age 6–120 months). Point of measuring: 6 months after the start.

| Group | Subjects | Events | Percentage | C.I. | Odds ratio | Significance |
|---|---|---|---|---|---|---|
| A. Intervention | 242 | 55 | 22.7% | 17.6–28.5 | | |
| B. Control | 229 | 104 | 45.4% | 38.8–52.1 | | |
| A vs. B | | | | | 0.3535 | 0.0001 |

Continuous measure (unit): Weight (kg). Point of measuring: 6 months after the start.

| Group | Subjects | Average | SD | C.I. | *Difference | Significance |
|---|---|---|---|---|---|---|
| A. Intervention | 383 | 22 | 7.1 | 21.3 to 22.7 | | |
| B. Control | 362 | 20.5 | 7.4 | 19.7 to 21.3 | | |
| A vs. B | | | | | 1.5 | 0.0048 |
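The between-group comparisons in Table 4 can be recomputed from the group-level counts and summary statistics, which is the practical point of reporting them. The sketch below is illustrative only: the function names are hypothetical, and the normal-approximation interval for the mean is an assumption that happens to agree with the tabulated intervals for weight. The intervals for the binary percentages and the significance values are not recomputed, because the table does not state which methods produced them.

```python
import math
from typing import Tuple


def odds_ratio(events_a: int, n_a: int, events_b: int, n_b: int) -> float:
    """Odds ratio of the event in group A relative to group B."""
    non_events_a = n_a - events_a
    non_events_b = n_b - events_b
    if min(events_b, non_events_a, non_events_b) <= 0:
        raise ValueError("odds ratio undefined when a required cell count is zero")
    return (events_a / non_events_a) / (events_b / non_events_b)


def mean_ci(mean: float, sd: float, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Normal-approximation 95% interval for a group mean (assumed method)."""
    half_width = z * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


# Binary outcome (underweight): intervention 55/242, control 104/229.
or_ab = odds_ratio(55, 242, 104, 229)
print(f"Intervention: {100 * 55 / 242:.1f}%  Control: {100 * 104 / 229:.1f}%")
print(f"Odds ratio (A vs. B): {or_ab:.4f}")  # prints 0.3535

# Continuous outcome (weight, kg): mean, SD, and group size per arm.
low_a, high_a = mean_ci(22.0, 7.1, 383)
low_b, high_b = mean_ci(20.5, 7.4, 362)
print(f"Intervention mean CI: {low_a:.1f} to {high_a:.1f}")  # 21.3 to 22.7
print(f"Control mean CI: {low_b:.1f} to {high_b:.1f}")       # 19.7 to 21.3
print(f"Difference in means: {22.0 - 20.5:.1f} kg")
```

None of these quantities could be derived if the report omitted the group sizes, event counts, or standard deviations.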

Discussion

In general, many clinical trials do not contain the necessary practical information for successful implementation in non-research clinical health care settings. Abstracting information about intervention and numeric outcomes from traditional trial reports is exceedingly difficult. Traditional methods sections tend to mix intervention description with details of trial methodology. Furthermore, only a few trials demonstrate biologic outcome improvement substantiating introduction in patient care. In the absence of publication standards, essential information is often missing and no amount of automation or artificial intelligence can find what is not in the clinical trial report.

Practitioners need to be supported in searching, selecting, quality filtering, and practically abstracting essential information from clinical trial reports. Introducing a streamlined, structured communication form, like the PROTON template we recommend, appears to be essential for more effective retrieval and implementation. With current publication practices, it is very difficult to abstract practically applicable information from published clinical trials, which places an excessive burden on readers and practitioners in interpreting and ultimately implementing the results. If researcher-authors of clinical trials cannot provide essential information with the necessary level of clarity, then readers and practitioners will have no chance to reproduce the results.

In describing the novel intervention evaluated in a clinical trial, the only meaningful way to make sure vitally important comparisons are clearly and unambiguously available is to put them in a standardized table that can be easily located in any manuscript. It is a natural expectation that the intervention evaluated in a clinical trial is clearly described in an identifiable location of the manuscript. It should not be dispersed across different sections or be hard to find when reading and evaluating the manuscript. Similarly, the numerical outcomes and endpoint measurements should be in a clearly identifiable location within the manuscript. Research and clinical trials have a high degree of variability, but some basic information should never be missing. It is never enough to communicate binary outcomes without the group size or without the number of observed outcomes in both the intervention and control groups. Results of continuous outcome variables are useless without complete information about sample size, average, and standard deviation in both intervention and control groups.

It is important to note that this study is entirely focused on the practical message, not the quality of published clinical trial reports. Historically, quality assessment of research publications has been focused on research technicalities (e.g., sampling power, accuracy of measurement, soundness of statistical analysis, and others). In contrast, we hypothesized that clinical publications with a marginal or unsatisfactory practical message do not merit further scrutiny of research quality in considerations for application. When a publication appears to have a practically valuable message, then quality assessment based on research technicalities can follow.

We observed a very high ratio of deficiencies and missing data in many of the clinical trial reports. Moreover, it is well known that many clinical trial outcomes are not reported, mainly due to negative results. According to Dwan et al.14 and Ioannidis,15 51·8% and 55% of clinical trials yielding negative results remain unpublished, respectively. The combination of the ratio of non-reported trials and ratio of missing data in the reported trials shows an overall high frequency of non-reproducibility in clinical research. Such a ratio of non-reproducible clinical research appears to be in the same range as the 89% ratio of non-reproducible preclinical studies reported by pharmaceutical companies.16

The high ratio of non-reproducible clinical trials also calls into question the ethical participation of large numbers of patients in essentially useless studies. The ethics concerns of ineffective patient participation further emphasize the need to create quality reporting thresholds in the publication of clinical trials.

This study looked at a randomly selected group of trial reports that have great potential to improve public health. Searches for such reports cannot be limited to high impact or high circulation journals. Impact factor is about journal citation and not necessarily about practice-oriented structuring of the information. Regardless of the journal’s impact factor, proper structuring is a pressing need.

Conclusions

The results of this study urge authors of clinical trial manuscripts and journal editors to implement standards for the location and structuring of the intervention description and of numeric endpoint comparisons between control and intervention groups. Decades after many standard recommendations, clinical trial reporting remains woefully inadequate for automated processing and easy interpretation by practitioners. The current “patchy”, dispersed, and often incomplete trial reporting practices are no longer sustainable.

The proposed minimalist reporting standard for clinical trials should become a general threshold for scientific publishing. Clinical trials should not be published without a clearly identifiable description of the intervention being evaluated and without numeric outcome results for all tested groups, ready for comparison. FAIR data management (findability, accessibility, interoperability, and reusability) should become the norm in clinical research as well. Improved structuring practices are vital for clear communication, automated retrieval, and unambiguous interpretation of clinical trial results in public health practice. Ultimately, this should lead to improved implementation of important clinical trial results in clinical practice.

Acknowledgements

The authors thank the team members of the Biomedical Research Innovation laboratory for helpful discussions and recognize partial funding support from the US National Institutes of Health (R01 GM146338-01). The authors also thank Maria Zulfiqar for her work as a graduate research assistant in preparing the manuscript for publication.

Role of the funding source statement

The funder of the study had no role in study design, data collection, data analysis, data interpretation, or writing of this report.

Footnotes

Conflict of interest statement

All authors declare no competing interests.

Data availability statement

Data are available upon request. Data from our study will be made available upon request to the Augusta University Scholarly Commons data repository. https://augusta.openrepository.com/

References

- 1. National Library of Medicine. ClinicalTrials.gov: Trends, Charts, and Maps. 2021. Available from: https://classic.clinicaltrials.gov/ct2/resources/trends
- 2. Ioannidis JP, Caplan AL, Dal-Ré R. Outcome reporting bias in clinical trials: why monitoring matters. BMJ 2017;356:j405.
- 3. Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med 2010;7:e1000326.
- 4. Fauci AS, Redfield RR, Sigounas G, Weahkee MD, Giroir BP. Ending the HIV epidemic: a plan for the United States. JAMA 2019;321:844–5.
- 5. Alleyne G, Beaglehole R, Bonita R. Quantifying targets for the SDG health goal. Lancet 2015;385:208–9.
- 6. Omran D, Alboraie M, Zayed RA, et al. Towards hepatitis C virus elimination: Egyptian experience, achievements and limitations. World J Gastroenterol 2018;24:4330.
- 7. Ministry of Health of the United Republic of Tanzania. National AIDS Control Programme National Guidelines for the Management of HIV and AIDS. 2017. Available from: https://platform.who.int/docs/default-source/mca-documents/policy-documents/guideline/TZA-RH-43-02-GUIDELINE-2017-eng-National-Guidelines-for-Management-of-HIV-and-AIDS-2017-AR.pdf
- 8. Schulz KF, Altman DG, Moher D. CONSORT 2010 statement: updated guidelines for reporting parallel group randomized trials. Ann Intern Med 2010;152:726–32.
- 9. Kodounis M, Liampas IN, Constantinidis TS, et al. Assessment of the reporting quality of double-blind RCTs for ischemic stroke based on the CONSORT statement. J Neurol Sci 2020;415:116938.
- 10. Lee SY, Teoh PJ, Camm CF, Agha RA. Compliance of randomized controlled trials in trauma surgery with the CONSORT statement. J Trauma Acute Care Surg 2013;75:562–72.
- 11. Liampas I, Chlinos A, Siokas V, Brotis A, Dardiotis E. Assessment of the reporting quality of RCTs for novel oral anticoagulants in venous thromboembolic disease based on the CONSORT statement. J Thromb Thrombolysis 2019;48:542–53.
- 12. Wang LL, Lo K, Chandrasekhar Y, et al. CORD-19: The COVID-19 Open Research Dataset. arXiv 2004.10706v4.
- 13. Wilkinson MD, Dumontier M, Aalbersberg IJ, et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data 2016;3:160018.
- 14. Dwan K, Gamble C, Williamson PR, Kirkham JJ, Group RB. Systematic review of the empirical evidence of study publication bias and outcome reporting bias—an updated review. PLoS One 2013;8:e66844.
- 15. Ioannidis JP. Clinical trials: what a waste. BMJ 2014;349:g7089.
- 16. Begley CG, Ellis LM. Raise standards for preclinical cancer research. Nature 2012;483:531–3.
