Abstract

Purpose:

The purpose of this study was to understand the role of implicit racial bias in auditory-perceptual evaluations of dysphonic voices by determining if a biasing effect exists for novice listeners in their auditory-perceptual ratings of Black and White speakers.

Method:

Thirty speech-language pathology graduate students at Boston University listened to audio files of 20 Black speakers and 20 White speakers of General American English with voice disorders. Listeners rated the overall severity of dysphonia of each voice heard using a 100-unit visual analog scale and completed the Harvard Implicit Association Test (IAT) to measure their implicit racial bias.

Results:

Both Black and White speakers were rated as less severely dysphonic when their race was labeled as Black. No significant relationship was found between Harvard IAT scores and differences in severity ratings by race-labeling condition.

Conclusions:

These findings suggest a minimizing bias in the evaluation of dysphonia for Black patients with voice disorders. These results contribute to the understanding of how a patient’s race may impact their visit with a clinician. Further research is needed to determine the most effective interventions for implicit bias retraining and the additional ways that implicit racial bias impacts comprehensive voice evaluations.

Keywords: voice assessment, perceptual bias, voice disorder

1. Introduction

Auditory-perceptual assessment is a critical component of a comprehensive voice assessment and voice screening aimed to detect the presence of dysphonia. A voice disorder is defined as when an individual expresses concern about having an atypical voice that does not meet their daily needs given differences in vocal quality, pitch, loudness, resonance, and/or duration.1 A voice disorder occurs when these differences are inappropriate for an individual’s age, gender, cultural background, or geographic location.2-4 The term dysphonia is a diagnosis of impaired voice production as recognized by a clinician that encompasses the auditory-perceptual symptoms of voice disorders, including altered vocal quality, pitch, or loudness.5

Auditory-perceptual methods of voice evaluation are subject to bias, defined as a systematic deviation from a normative response.6 Explicit bias is within an individual's conscious awareness whereas implicit bias is an automatic and subconscious bias.7 Implicit perceptual biases in voice evaluations can arise when any information beyond acoustic data is presented. In a study examining whether diagnostic information impacted auditory-perceptual evaluations of voice completed by graduate student clinicians, researchers found that listeners consistently rated voices as more severe on the measures of roughness and breathiness when they knew the speaker’s medical diagnosis.8 Sauder and Eadie9 later found that including a diagnosis impacted how listeners assessed dysphonia, whether the diagnosis was accurate or not. They found that inaccurate diagnoses showed a greater effect on listeners’ perceptions of roughness and breathiness than did accurate diagnoses. This study found evidence for both an overpathologizing bias as well as a minimizing bias that was contingent upon the feature being assessed (roughness or breathiness) and the accuracy of the diagnosis.9 A minimizing bias is defined as when symptoms are perceived as more normative for one group over another due to subconscious practitioner error (not due to actual differences between groups). An overpathologizing bias is defined as when individuals within a group are perceived as requiring more treatment due to subconscious practitioner error (not due to actual differences between groups).9,10 This study found that, overall, speech-language pathology graduate students are susceptible to bias in voice evaluations. This evidence suggests that perceptual bias exists in auditory-perceptual evaluations of voice when patient information is given, though there is little research on racial bias specifically.

Studies of implicit bias in healthcare have spotlighted that patients of color are evaluated, diagnosed, and ultimately treated differently from White patients. A systematic review examining implicit bias in healthcare professionals found that 35 out of 42 eligible articles reported notable levels of implicit bias in healthcare professionals.11 All eligible articles that compared implicit bias to quality of care found a significant negative relationship between the two.11 Another systematic review focused specifically on implicit racial/ethnic bias in healthcare providers found that providers’ implicit bias consistently correlated with worse interactions between patients and providers.12 Even when physicians reported no explicit racial bias, implicit measures still found a significant preference for White Americans amongst these physicians, along with implicit stereotypes that Black Americans are less cooperative during medical visits and in general.13

To address how implicit racial bias (a subconscious attitude against a specific social group11) may influence voice evaluations, we must first establish whether race creates inherent differences in voice. Race is a social construct;14 therefore, it should have no relationship to anatomical or physiological differences in the vocal mechanism. In a study that paired vowel productions of Black and White adults based on age, height, and weight, the authors failed to find significant differences between races on nearly every1 measure.15 This finding was supported in another study that compared the vowel productions of Black and White elderly speakers and found no acoustic differences between races.16 This suggests that Black speakers and White speakers, when paired on demographics (e.g., age, sex), should sound comparable during vowel production. Although acoustic characteristics may be influenced by sex and age, no acoustic characteristics of voice should imply a speaker’s race. Any difference in auditory-perceptual ratings of White and Black speakers when paired on other factors may therefore be the result of the listener's implicit racial biases.

Racial disparities in medical evaluations have been well-documented for decades, with examples such as Black patients being less likely to receive commonly prescribed medications and angiograms when in the hospital after a heart attack.17 Rathore et al.18 found that medical students were less likely to diagnose a Black woman with angina compared to a White man, even when presenting with identical symptoms. Studies like this suggest that nonmedical factors such as implicit racial biases may impact diagnostic evaluations specifically by activating a minimizing bias in which Black patients’ conditions are not viewed as severely or taken as seriously as those of White patients.18-21 However, research is needed to see if this minimizing bias transfers to the field of speech-language pathology.

Within the field of speech-language pathology, clinician racial bias has been broadly investigated, though there is a lack of research assessing its presence and impact within the specialty field of voice disorders. One study reported that Black students were less likely to receive services for specific language impairments when examining disparities in special education.22 Another study revealed that Black clients with aphasia were recommended for a limited frequency of speech therapy services even though they had large communicative impairments.23 This study also reported that 80% of the Black clients with aphasia surveyed had at least one negative treatment experience with a speech-language pathologist.23

There is no outline for how to address implicit racial bias in the field of speech-language pathology, though it has a large impact on clinical decision-making. The field also lacks racial diversity with 91.7% of ASHA members being White.24 This indicates a mismatch from the racial composition of the United States population25 and is likely a source of considerable bias in the field. ASHA’s Issues in Ethics statement26 requires that clinicians approach all client relationships with “awareness, knowledge, and skills about their own culture and cultural biases, strengths, and limitations.” This requires an understanding of one’s own implicit racial biases and how they may impact one’s clinical practice. One of the most common measures used to quantify implicit racial bias is the Harvard Implicit Association Test (IAT), which asks participants to pair pictures of Black and White individuals to words with positive and negative connotations as fast as they can.27

The purpose of the current study was to understand the role of implicit racial bias in auditory-perceptual evaluations of dysphonic voices. This was assessed by determining if a biasing effect exists for novice listeners in their auditory-perceptual ratings of dysphonia of Black and White speakers of General American English. Novice participants were asked to rate the overall severity of dysphonia of voice samples using a 100-unit visual analog scale, with their only knowledge of each voice being sex, age, and race of the speaker. Sex and age were accurately labeled 100% of the time. However, race was labeled accurately 50% of the time and inaccurately for the remaining 50% to determine how race labeling influenced severity ratings. We anticipated a main effect of race labeling, regardless of underlying speaker race. Specifically, we hypothesized that speakers would be rated as less severely dysphonic when their race was labeled as Black, consistent with Black patients' presenting conditions being perceived as less severe in other areas of healthcare literature. This study also tested for an interaction effect to see if this was true only for speakers of one underlying race, though we did not anticipate a significant interaction given our hypothesis that race labeling would influence ratings regardless of true speaker race. Additionally, we hypothesized that the difference in mean ratings according to race labeling (labeled Black - labeled White) would negatively correlate with listeners’ implicit racial biases, as measured by the IAT. The implications of these findings may provide insight into the role of implicit racial bias in clinical settings.

2. Methods

2.1. Voice Recordings

Audio files consisted of two sustained vowels (/a/ and /i/) and the following two sentences from The Rainbow Passage: “The rainbow is a division of white light into many beautiful colors. These take the shape of a long round arch, with its path high above and its two ends apparently beyond the horizon”.28 All audio files were recorded in either a sound-treated booth at Boston University or in a quiet room at Boston Medical Center with a headset microphone (Shure Model SM35XLR, Shure Model WH20XLR, or Sennheiser Model PC131) sampled at 44.1 kHz with a 16-bit resolution. All microphones were placed 7 cm from the mouth at a 45-degree angle.29 These files were concatenated to make a single speech sample per speaker that was then normalized for peak intensity using a MATLAB script (version 9.10.0.1613233 [R2021a]; The MathWorks, Inc., Natick, MA).
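The concatenation and peak-normalization step can be illustrated with a minimal sketch. The study's MATLAB script is not reproduced in this article, so the Python function below, its name, and the synthetic sine-wave segments are assumptions used only to show the general technique: the segments are joined end to end and the result is scaled so that its largest absolute sample reaches a common target value.

```python
import numpy as np

def concatenate_and_peak_normalize(segments, target_peak=1.0):
    """Join mono segments end to end, then scale so the largest |sample| equals target_peak."""
    signal = np.concatenate([np.asarray(s, dtype=np.float64) for s in segments])
    peak = np.max(np.abs(signal))
    if peak == 0.0:
        return signal  # an all-silent input cannot be rescaled
    return signal * (target_peak / peak)

# Hypothetical stand-ins for the recorded segments (sine bursts at 44.1 kHz).
fs = 44100
t = np.arange(fs // 10) / fs
vowel_a = 0.20 * np.sin(2 * np.pi * 220 * t)
vowel_i = 0.35 * np.sin(2 * np.pi * 240 * t)
sentences = 0.10 * np.sin(2 * np.pi * 200 * t)

sample = concatenate_and_peak_normalize([vowel_a, vowel_i, sentences])
peak_after = float(np.max(np.abs(sample)))
```

Because every speaker's sample is scaled to the same peak, differences in recording gain across microphones and rooms are less likely to influence listeners' ratings.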

2.2. Speakers

All speakers were selected retrospectively from an existing research database at the Stepp Lab for Sensorimotor Rehabilitation Engineering at Boston University. The current study included only speakers of General American English in order to eliminate dialect or accented speech as a confound. Language classifications were made based on samples of spontaneous speech. African American English was identified using specific linguistic characteristics outlined in research.30 After the first author classified the language variety of all eligible speakers, a trained research assistant performed the same task, blinded to the classifications of the first listener. Where there were discrepancies between both raters, a third classification by the second author determined the final decision. Speech samples from a total of 65 Black speakers were evaluated, and 45 were excluded from the study due to the use of African American English or foreign-accented speech.

A list of 60 White speakers of the same sex and aged within ±5 years of each of the 20 Black speakers was generated to create three potential speaker pairings. A voice-specialized speech-language pathologist rated the overall severity of dysphonia of each sample using a 100-unit visual analog scale. The first author selected the White speaker that was closest in overall severity of dysphonia to each age- and sex-matched Black speaker as their counterpart for the study. This matching process was intended to minimize differences between voices, allowing for interpretation of differences in ratings to be an effect of race labeling instead of alternative factors.
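The severity-matching rule described above amounts to a nearest-value selection among the three age- and sex-matched candidates. The sketch below is illustrative only: the function name, speaker identifiers, and severity values are hypothetical, and the study's selection was made by the first author rather than by code.

```python
def pick_closest_match(target_severity, candidates):
    """Return the (speaker_id, severity) candidate whose severity is nearest the target."""
    return min(candidates, key=lambda c: abs(c[1] - target_severity))

# Hypothetical example: one Black speaker rated 20.0 and three candidate White speakers.
candidates = [("W_a", 31.5), ("W_b", 18.2), ("W_c", 9.0)]
match = pick_closest_match(20.0, candidates)
```

Here `match` is `("W_b", 18.2)`, the candidate with the smallest absolute difference in expert-rated severity.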

The final sample included 20 Black speakers (17 females, 3 males, M = 40.1 years, SD = 16.7 years, range = 18 - 67 years) and 20 White speakers (17 females, 3 males, M = 39.6 years, SD = 16.3 years, range = 19 - 66 years). Only speaker sex is reported as gender information was not available for all speakers2. White and Black speakers all had been diagnosed with a voice disorder by a laryngologist (Table 1). The average overall severity of dysphonia of Black speakers was 20.9 (SD = 12.6, range = 4.9 - 50.7), and the average severity of White speakers was 17.6 (SD = 10.0, range = 3.4 - 33.3).

Table 1.

Laryngeal Diagnoses of Black and White speakers.

| Diagnosis | N |
|---|---|
| Vocal hyperfunction: phonotraumatic | 12 |
| Vocal hyperfunction: non-phonotraumatic | 24 |
| Vocal fold scarring | 1 |
| Paradoxical vocal fold movement | 1 |
| Reinke’s edema | 1 |
| Vocal fold atrophy | 1 |

Total n = 40

2.3. Listeners

Thirty individuals (29 cisgender women, 1 cisgender man, M = 24.4 years, SD = 4.3, range = 22 – 46; 21 White, 3 Asian, 3 White Hispanic, 2 Black, 1 mixed race) participated as listeners in the current study. Listeners had all taken a graduate-level voice disorders class at Boston University within 14 months of the study. All listeners were in the process of obtaining their master’s degree in speech-language pathology (MS-SLP) from Boston University. The study listeners were all speakers of English who presented without neurological impairment and without speech, voice, language, or hearing disorders. Listeners were financially compensated for their time. They were presented with the opportunity to participate in a paid research study but were otherwise blinded to the study purpose. Informed consent was obtained from all participants prior to the study, in compliance with the Boston University Institutional Review Board.

2.4. Experimental Conditions

Session 1 Ratings.

In the first study session, listeners were randomly assigned to one of two conditions. Fifteen listeners were provided with entirely accurate race labels for each voice heard and 15 listeners were provided with entirely inaccurate race labels for each voice heard. Each listener heard all 40 speakers in Session 1, with 20% of audio samples repeated to measure intrarater reliability. The order of accurate-inaccurate sessions was counterbalanced such that 50% of listeners heard 48 voices with accurate race labels in their first session.

Session 2 Ratings.

Four to seven days after their first study session, both groups of listeners returned to complete the same evaluations but with the opposite accuracy condition of their first session. Each listener once again heard all 40 speakers with 20% (8/40) repeated for intrarater reliability. Participants who first received 48 accurate race labels now received 48 inaccurate race labels and vice versa.

2.5. Listening Procedure

The listening procedure was completed remotely by all participants using the online behavioral research platform Gorilla Experiment Builder (gorilla.sc). Listeners were instructed to complete both study sessions in a quiet environment on their device of choice using their own headphones for improved audition. Listening sessions were supervised remotely by a study author in order to solve technological problems and answer listener questions. Each listening session began with a volume adjustment and headphone screening task from the Gorilla open materials repository.31 The volume adjustment allowed listeners to adjust their device volume to a comfortable level during the presentation of a 4-second clip of white noise. The headphone screening consisted of a Huggins pitch task in which listeners identified which of three segments of white noise contained a tone. This confirmed that all listeners used headphones for the listening procedure.31

Once listeners passed the volume adjustment and the headphone screening, they began the primary listening task in which they were presented with the voice recordings. Participants rated 48 voices during each listening session: the 20 unique audio files of Black speakers, the 20 unique audio files of White speakers, and eight of the 40 audio files that were repeated to measure intrarater reliability. Ratings for all 40 unique audio files were used to measure interrater reliability as all listeners heard the same set of recordings. Each voice recording was presented with the speaker's sex, age, and their labeled race.
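The structure of a single listening session (40 unique files, eight repeats, and a session-wide accurate or inaccurate race label) can be sketched as follows. This is not the Gorilla task itself: the function, the speaker identifiers, and the random choice of which eight files are repeated are assumptions, since the article does not state how the repeated files were selected.

```python
import random

def build_session(speakers, accurate, n_repeats=8, seed=0):
    """Return a shuffled trial list: one trial per speaker plus n_repeats repeated trials."""
    rng = random.Random(seed)
    flip = {"Black": "White", "White": "Black"}
    trials = []
    for spk in speakers:
        # Accurate sessions show the true race; inaccurate sessions show the other label.
        label = spk["race"] if accurate else flip[spk["race"]]
        trials.append({"id": spk["id"], "labeled_race": label, "repeat": False})
    # Assumption: repeated files are drawn at random from the 40 unique files.
    for trial in rng.sample(trials, n_repeats):
        trials.append({**trial, "repeat": True})
    rng.shuffle(trials)  # presentation order is randomized
    return trials

# Hypothetical speaker list: 20 Black and 20 White speakers.
speakers = [{"id": f"S{i:02d}", "race": "Black" if i <= 20 else "White"} for i in range(1, 41)]
true_race = {s["id"]: s["race"] for s in speakers}

session_accurate = build_session(speakers, accurate=True, seed=1)
session_inaccurate = build_session(speakers, accurate=False, seed=2)
```

Each session contains 48 trials, and a listener assigned to the accurate condition in Session 1 would receive the inaccurate list in Session 2, and vice versa.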

Listeners rated the overall severity of dysphonia of each speaker's voice using an online visual analog scale (VAS). The VAS ranged from 0-100 with an anchor at 10 for mild, 35 for moderate, and 72 for severe to align with the anchor positions on the current printable version of the Consensus Auditory-Perceptual Evaluation of Voice (CAPE-V) form available from ASHA (asha.org/form/cape-v/). The CAPE-V is an instrument commonly used by clinicians to standardize evaluations32 that is both valid and reliable for those trained in voice disorders.33,34 Once each listener had entered their overall severity of dysphonia rating for one speaker, they were presented with the next speaker's audio file until they had rated all 48 samples. Audio files were presented in a randomized order. Listeners were instructed to listen to the entire audio file at least once before rating the speaker's severity. Listeners were able to play each audio file for a second time in case they experienced a distraction in their environment during initial presentation of the recording. Each listening session took approximately one hour. Interrater reliability was calculated using an intraclass correlation coefficient (ICC(C,1)) and intrarater reliability was calculated using a Pearson correlation (r).
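For readers unfamiliar with the two reliability statistics, the sketch below shows one common way to compute them. It assumes that ICC(C,1) denotes the two-way, consistency, single-rater intraclass correlation, computed as (MS_rows - MS_error) / (MS_rows + (k - 1) MS_error); the rating values are hypothetical and the code is not the analysis used in the study.

```python
import numpy as np

def icc_c1(ratings):
    """Two-way consistency, single-rater ICC for an (n_speakers, k_listeners) array."""
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * np.sum((x.mean(axis=1) - grand) ** 2)   # between-speaker variation
    ss_cols = n * np.sum((x.mean(axis=0) - grand) ** 2)   # between-listener variation
    ss_error = np.sum((x - grand) ** 2) - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return float((ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error))

def intrarater_r(first_ratings, second_ratings):
    """Pearson correlation between a listener's first and repeated ratings."""
    return float(np.corrcoef(first_ratings, second_ratings)[0, 1])

# Hypothetical ratings: four speakers (rows) rated by three listeners (columns).
# Listeners differ only by a constant offset, so consistency is perfect.
offset_only = np.array([[10.0, 15.0, 12.0],
                        [20.0, 25.0, 22.0],
                        [40.0, 45.0, 42.0],
                        [5.0, 10.0, 7.0]])
icc_perfect = icc_c1(offset_only)

# Hypothetical first and repeated ratings of eight files by one listener.
r_example = intrarater_r([12, 30, 45, 8, 22, 60, 15, 38],
                         [10, 34, 41, 12, 25, 55, 18, 35])
```

A consistency ICC ignores constant offsets between listeners, which is why the offset-only example yields a value of 1 even though the listeners never give identical ratings.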

2.6. Implicit Bias Task

As the final task of the second session, participants completed the Harvard Implicit Association Test (IAT) in Gorilla to measure their implicit racial bias.35 This is a commonly used measure that asks participants to sort pictures and words into groups as fast as they can. Pictures include those of Black and White men and women, and words include those with positive and negative connotations. Completion of the IAT took approximately 5 minutes. The study collected the amount of time in milliseconds that it took participants to pair stimuli and analyzed response times to quantify implicit bias using the central tendency measures algorithm outlined in Greenwald et al.27 The range of potential Harvard IAT scores using this algorithm is −1.0 to +1.0, with negative numerical values indicating an anti-White bias and positive numerical values indicating an anti-Black bias.

2.7. Statistical Analyses

To test our hypotheses regarding the effects of labeled and actual race, we constructed a mixed-design analysis of variance (ANOVA) to measure the main effects of labeled race and speaker race and their interaction on the outcome of ratings of overall severity of dysphonia. For the audio files heard twice to measure reliability, the first individual rating was used in the model. Listener and speaker were entered into the mixed-design ANOVA as random variables. The effect size for each significant ANOVA factor was calculated as a squared partial curvilinear correlation (ηp²). Effect sizes of ~0.01 were classified as small, ~0.09 as medium, and >0.25 as large.36 All statistical analyses were completed in Minitab (version 19.2020.1; Minitab LLC, State College, PA), with the criterion for statistical significance set a priori at α = 0.05.

3. Results

3.1. Descriptive statistics

Thirty listeners completed 40 ratings per study session (not including repeated trials for reliability), resulting in 80 ratings per listener and 2400 ratings total. As shown in Table 2, these ratings were evenly distributed among four conditions: Black speakers labeled Black, Black speakers labeled White, White speakers labeled White, and White speakers labeled Black.

Table 2.

Ratings of overall severity of dysphonia across race label conditions.

| Condition | N | Mean | SD | Range |
|---|---|---|---|---|
| Black speakers, labeled Black | 600 | 21.39 | 19.35 | 0 - 78 |
| Black speakers, labeled White | 600 | 24.48 | 20.20 | 0 - 92 |
| White speakers, labeled White | 600 | 16.81 | 16.63 | 0 - 84 |
| White speakers, labeled Black | 600 | 14.74 | 15.21 | 0 - 75 |

SD = standard deviation

3.2. Reliability

Both interrater and intrarater reliability were interpreted such that values less than 0.5 indicated poor reliability, values between 0.50 and 0.75 indicated moderate reliability, values between 0.75 and 0.90 indicated good reliability, and values greater than 0.90 indicated excellent reliability.37 Interrater reliability amongst the 30 listeners was calculated to be moderate at ICC = .54 (95% CI [0.43, 0.66]). This is consistent with interrater reliability values found in previous studies for both novice listeners and experienced listeners completing auditory-perceptual voice evaluations.38-41 Intrarater reliability was calculated to be good on the ratings completed twice by each listener, with the average reliability being r = .78 (SD = .15, range: .48 - .99).

3.3. Mixed-design ANOVA

We hypothesized a main effect of race labeling, regardless of underlying speaker race. Specifically, we hypothesized that speakers would be rated as less severely dysphonic by novice listeners when their race was labeled as Black. The mixed-design ANOVA revealed that there was no significant effect of speaker race (F(1, 2367) = 3.78, p = .06), such that Black speaker ratings (M = 22.93, SDpool = 19.78) were not significantly different from White speaker ratings (M = 15.78, SDpool = 15.94) across conditions. There were large and significant effects of listener (F(29, 2367) = 53.73, p < .001, ηp² = .40) and speaker (F(38, 2367) = 68.02, p < .001, ηp² = .53), such that average severity ratings were different for each speaker and each listener performed differently on the experiment, both as expected for these random variables.
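The speaker-race means and pooled standard deviations above can be recovered from the cell statistics in Table 2. The sketch below assumes that SDpool denotes the pooled within-condition standard deviation (the article does not define the term); the function name is illustrative.

```python
import math

def pooled_mean_sd(cells):
    """cells: list of (n, mean, sd). Returns the n-weighted mean and pooled within-cell SD."""
    total_n = sum(n for n, _, _ in cells)
    mean = sum(n * m for n, m, _ in cells) / total_n
    pooled_var = sum((n - 1) * sd ** 2 for n, _, sd in cells) / (total_n - len(cells))
    return mean, math.sqrt(pooled_var)

# (N, mean, SD) for each labeling condition, copied from Table 2.
black_mean, black_sd = pooled_mean_sd([(600, 21.39, 19.35), (600, 24.48, 20.20)])
white_mean, white_sd = pooled_mean_sd([(600, 16.81, 16.63), (600, 14.74, 15.21)])
```

Under that assumption the computation gives pooled SDs of approximately 19.78 and 15.94 and means of approximately 22.9 and 15.8, in line with the values reported in the text.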

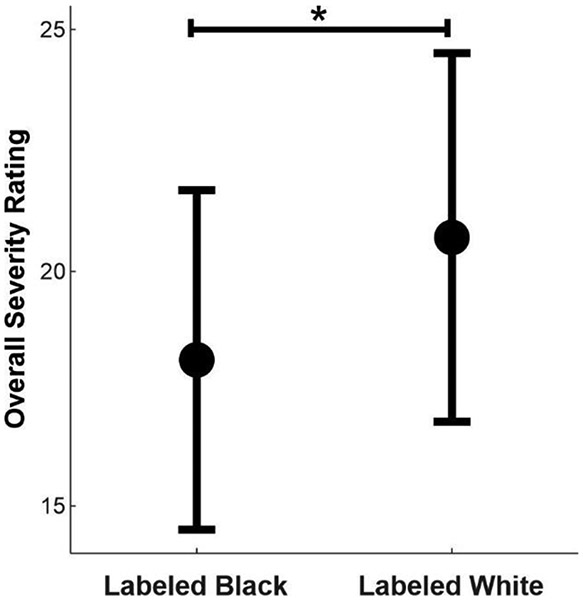

As hypothesized, there was a small but significant main effect of race labeling (F(1, 2367) = 33.41, p < .001, ηp² = .01), such that labeling a speaker as Black resulted in less severe dysphonia ratings (Figure 1). Black speakers were rated as significantly less severe when labeled Black (M = 21.39, SD = 19.35) compared to when labeled White (M = 24.48, SD = 20.20), and White speakers were also rated as significantly less severe when labeled Black (M = 14.74, SD = 15.21) compared to when labeled White (M = 16.81, SD = 16.63).

Figure 1.

Mean overall severity of dysphonia ratings of all speakers across labeling conditions. Error bars are 95% confidence intervals. The y-axis is truncated for improved visualization; the full visual analog scale ranges from 0 - 100.

There was no significant interaction between labeled race and speaker race (F(1, 2367) = 1.32, p = .25), meaning the main effect of race labeling was true regardless of underlying speaker race. These findings support the hypothesis that race labeling would influence ratings of overall severity of dysphonia regardless of true speaker race, as this result was true for both White and Black speakers.
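As a rough cross-check, a partial eta squared can be recovered from an F statistic and its degrees of freedom using the identity ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below assumes each F ratio was formed against the residual term with 2367 degrees of freedom; in a mixed design with random factors this may not hold for every term, so small discrepancies from the reported values are possible.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F ratio tested against a single error term."""
    ss_ratio = f_value * df_effect  # equals SS_effect / MS_error
    return ss_ratio / (ss_ratio + df_error)

# F statistics and degrees of freedom as reported in Section 3.3.
eta_labeling = partial_eta_squared(33.41, 1, 2367)
eta_listener = partial_eta_squared(53.73, 29, 2367)
```

These evaluate to roughly .014 for race labeling and .40 for listener, which fall in the small and large ranges, respectively, under the thresholds given in Section 2.7.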

3.4. Pearson correlation

The hypothesis predicting that the difference in mean ratings (rating when labeled Black - rating when labeled White) would negatively correlate with IAT scores was tested using a Pearson correlation. A negative difference in mean ratings indicated that speakers labeled Black were rated as less dysphonic. Positive IAT scores indicated anti-Black implicit bias. Contrary to the hypothesis, these variables were not significantly correlated (r(28) = −.05, p = .78; Figure 2).

Figure 2.

Listener scores on the Harvard IAT and mean differences of speaker ratings of overall severity of dysphonia (Labeled Black - Labeled White). Negative rating differences indicate Black race labeling resulting in decreased perceived overall severity of dysphonia.
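The two quantities entering this correlation can be sketched as follows. All listener data shown are hypothetical and serve only to illustrate the sign convention (Labeled Black minus Labeled White) and the degrees of freedom (number of listeners minus two); the study's analysis was run in Minitab.

```python
import numpy as np

def labeling_difference(ratings_labeled_black, ratings_labeled_white):
    """Mean rating when labeled Black minus mean rating when labeled White, for one listener."""
    return float(np.mean(ratings_labeled_black) - np.mean(ratings_labeled_white))

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float(np.sum(xc * yc) / np.sqrt(np.sum(xc ** 2) * np.sum(yc ** 2)))

# Hypothetical per-listener rating differences and IAT scores for five listeners.
differences = [-3.1, -0.8, -4.5, 1.2, -2.0]
iat_scores = [0.35, 0.10, 0.20, 0.45, -0.05]

r = pearson_r(differences, iat_scores)
df = len(differences) - 2  # with the study's 30 listeners, df = 28
```

Under the study's hypothesis, listeners with higher (more anti-Black) IAT scores would show more negative rating differences, producing a negative r.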

4. Discussion

The aim of the current study was to further understand the role of implicit racial bias in auditory-perceptual evaluations of dysphonic voices. This was assessed by determining if a biasing effect existed for novice listeners in their auditory-perceptual ratings of Black and White speakers of General American English who have voice disorders.

4.1. Impact of Race Labeling

We hypothesized that speakers would be rated as less severely dysphonic by novice listeners when their race was labeled as Black, consistent with Black patients' presenting conditions being perceived as less severe in other areas of healthcare literature. The results from the mixed-design ANOVA support this hypothesis. Black and White speakers were both rated as significantly less dysphonic when their race was labeled as Black. There is no biological basis for race-based differences in voice,15,16 and this study found no significant effect of speaker race. This allows us to interpret the significant effect of race labeling as a result of implicit bias, not as a result of differences between Black and White speaker groups. This aligns with the minimizing bias for Black individuals presenting with various health conditions that has been shown throughout healthcare literature.17-21 Minimizing bias refers to the implicit tendency for providers to judge symptoms as normative for marginalized individuals that they would judge as abnormal for non-marginalized individuals.10 This finding suggests that knowing a patient's race will impact a novice speech-language pathologist's perception of their overall severity of dysphonia. This finding provides evidence of another way that auditory-perceptual evaluations are subject to perceptual bias, in addition to previous findings supporting that medical diagnosis knowledge and accuracy of listed diagnosis can bias auditory-perceptual evaluations.8,9

Although this finding is significant and concerning for the care of Black patients with voice disorders, it is important to recognize the difference between statistical and clinical significance. The aforementioned results are statistically significant, but the mean difference between Black speakers labeled Black and Black speakers labeled White was only 3.09 points on a 100-point scale, and the mean difference between White speakers labeled White and White speakers labeled Black was only 2.07 points. A rating difference of 2-3 points is unlikely to alter the interpretation of a patient's overall severity of dysphonia for those with moderate or severe voice impairment, which is reassuring as it applies to the clinical care of Black patients with this degree of impairment. However, for patients with mild dysphonia, such as many of the participants in this study, a rating difference of 2-3 points could perhaps be clinically significant for determining whether a dysphonia diagnosis is given or whether a patient is referred for a comprehensive voice assessment. A complete chart outlining how race labeling impacted severity interpretation for each of the 40 individual speakers is included in Table 3.

Table 3.

Mean overall severity ratings of each speaker across race labeling conditions.

| Speaker ID | Age | Sex | Race | Rating Labeled White | Rating Labeled Black |
|---|---|---|---|---|---|
| 01 | 55 | Female | Black | 22.87 | 15.97 |
| 02 | 40 | Female | Black | 12.00 | 7.73 |
| 03 | 27 | Female | Black | 17.13 | 14.97 |
| 04 | 61 | Female | Black | 32.13 | 23.23 |
| 05 | 49 | Female | Black | 36.33 | 28.77 |
| 06 | 43 | Female | Black | 11.77 | 10.67 |
| 07 | 30 | Female | Black | 9.80 | 9.30 |
| 08 | 18 | Female | Black | 41.40 | 40.70 |
| 09 | 62 | Female | Black | 16.50 | 13.10 |
| 10 | 19 | Female | Black | 33.67 | 28.33 |
| 11 | 27 | Female | Black | 37.20 | 33.10 |
| 12 | 63 | Female | Black | 39.00 | 32.13 |
| 13 | 50 | Female | Black | 44.03 | 43.97 |
| 14 | 67 | Male | Black | 12.63 | 14.27 |
| 15 | 35 | Female | Black | 13.40 | 11.50 |
| 16 | 32 | Female | Black | 26.67 | 27.30 |
| 17 | 61 | Male | Black | 50.93 | 43.43 |
| 18 | 20 | Female | Black | 3.20 | 3.33 |
| 19 | 19 | Female | Black | 20.20 | 18.53 |
| 20 | 24 | Male | Black | 8.67 | 7.36 |
| 21 | 65 | Male | White | 25.43 | 24.70 |
| 22 | 39 | Female | White | 6.50 | 5.70 |
| 23 | 52 | Female | White | 19.27 | 18.80 |
| 24 | 57 | Female | White | 32.80 | 31.23 |
| 25 | 49 | Female | White | 33.03 | 27.67 |
| 26 | 20 | Female | White | 21.77 | 17.27 |
| 27 | 52 | Female | White | 32.93 | 28.53 |
| 28 | 28 | Female | White | 5.40 | 2.80 |
| 29 | 57 | Female | White | 18.77 | 16.47 |
| 30 | 66 | Female | White | 29.37 | 28.00 |
| 31 | 20 | Female | White | 4.50 | 2.80 |
| 32 | 20 | Female | White | 6.50 | 3.80 |
| 33 | 19 | Female | White | 11.03 | 5.50 |
| 34 | 37 | Female | White | 25.40 | 21.87 |
| 35 | 63 | Male | White | 12.40 | 13.27 |
| 36 | 35 | Female | White | 11.73 | 11.80 |
| 37 | 31 | Female | White | 8.63 | 8.70 |
| 38 | 26 | Female | White | 16.77 | 14.73 |
| 39 | 37 | Female | White | 7.87 | 6.53 |
| 40 | 19 | Male | White | 6.10 | 4.70 |
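The per-speaker values in Table 3 can be summarized with a short calculation. The sketch below copies the table's mean ratings and computes, for each speaker group, the mean difference (Labeled Black minus Labeled White) and the number of speakers who received a lower mean rating when labeled Black. The function and variable names are illustrative.

```python
# (rating labeled White, rating labeled Black) per speaker, copied from Table 3 in ID order.
BLACK_SPEAKERS = [
    (22.87, 15.97), (12.00, 7.73), (17.13, 14.97), (32.13, 23.23), (36.33, 28.77),
    (11.77, 10.67), (9.80, 9.30), (41.40, 40.70), (16.50, 13.10), (33.67, 28.33),
    (37.20, 33.10), (39.00, 32.13), (44.03, 43.97), (12.63, 14.27), (13.40, 11.50),
    (26.67, 27.30), (50.93, 43.43), (3.20, 3.33), (20.20, 18.53), (8.67, 7.36),
]
WHITE_SPEAKERS = [
    (25.43, 24.70), (6.50, 5.70), (19.27, 18.80), (32.80, 31.23), (33.03, 27.67),
    (21.77, 17.27), (32.93, 28.53), (5.40, 2.80), (18.77, 16.47), (29.37, 28.00),
    (4.50, 2.80), (6.50, 3.80), (11.03, 5.50), (25.40, 21.87), (12.40, 13.27),
    (11.73, 11.80), (8.63, 8.70), (16.77, 14.73), (7.87, 6.53), (6.10, 4.70),
]

def summarize(pairs):
    """Return (mean difference, count of speakers rated lower when labeled Black)."""
    diffs = [labeled_black - labeled_white for labeled_white, labeled_black in pairs]
    return sum(diffs) / len(diffs), sum(d < 0 for d in diffs)

black_mean_diff, black_lower = summarize(BLACK_SPEAKERS)
white_mean_diff, white_lower = summarize(WHITE_SPEAKERS)
```

The mean differences are approximately -3.09 for Black speakers and -2.07 for White speakers, matching the differences discussed in Section 4.1, and 17 of the 20 speakers in each group (34 of 40 overall) received a lower mean rating when labeled Black.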

4.2. Implicit Racial Bias and Ratings of Overall Severity of Dysphonia

A negative correlation between difference in mean ratings of overall severity of dysphonia (Labeled Black - Labeled White) and Harvard IAT scores was hypothesized and tested using a Pearson correlation. No significant relationship was found between these two variables. Nearly all participants (26/30) showed a minimizing bias for Black speakers (mean ratings difference < 0), yet this was not related to Harvard IAT scores. Multiple explanations could contribute to this finding.

First, it is possible that the Harvard IAT was not a representative measure of these listeners' implicit biases. Although the Harvard IAT has been used in research for over two decades and was initially found both valid and reliable, recent studies have suggested weak predictive validity42 and weak construct validity43 of the measure. Second, activation of implicit biases may differ situationally. Research shows that cognitive load (e.g., mental fatigue) can increase the likelihood of activating implicit biases that would alter task performance44,45 and that increased subjectivity or ambiguity may increase our reliance on implicit associations.46,47 Based on this, it is possible that the IAT imposed a lower cognitive load or was less subjective for some listeners than the auditory-perceptual rating task, which may explain why performance on the two measures did not follow the predicted trend. Third, the IAT is a well-known measure given its frequent use since 1998. As listeners began the task, they may have been aware that the IAT would be measuring implicit racial biases, whereas they were not aware that race labeling was being manipulated in the auditory-perceptual rating task. This task awareness may explain why implicit racial biases were activated differently on the IAT than on the auditory-perceptual ratings, and it may have led listeners to attempt to alter their performance on what was intended to be an automatic measure.

Finally, all participants in this study underwent anti-racist/anti-bias training throughout their two years in the Boston University MS-SLP program. In the program's training, students attended three lectures on intersectionality, bias, and privilege that highlighted action steps towards becoming an anti-racist/anti-bias speech-language pathologist. Students each received a workbook with written reinforcement of lecture content and additional resources for continuing education. Boston University's MS-SLP program also has a student-run anti-racist focus group that has collaborated with faculty to discuss ways to be anti-racist and anti-biased across course content areas (e.g., evaluation and diagnosis, speech sound disorders, aphasia). Questions students were tasked with answering during their training included "Have you ever caught yourself in a thought that was driven by implicit bias? What happened?" and "How is my privilege showing up in the assumptions that I make about the individuals with whom I work?" Similarly, all students were required to read a text on racial disparities in healthcare for the program's Healthcare Seminar course. This consistent emphasis on implicit bias retraining woven throughout the program may have influenced this particular group of listeners' performance in the study, as humans have the capacity to alter their implicit biases with intentional retraining efforts after gaining awareness of biases.48-50

Ultimately, implicit racial bias was present in the severity ratings task (shown by the significant effect of race labeling), and we ruled out this being due to any underlying differences between the Black and White speakers. The Harvard IAT may not have effectively captured these biases (shown by the lack of association between IAT scores and severity ratings) as implicit racial bias could have been activated differently on the severity ratings task than it was on the Harvard IAT.

4.3. Future Directions for Clinical Practice

Implicit biases permeate all aspects of our social interactions, but they can be altered through conscious efforts to unlearn the associations our brains have automatically built over time. An initial step would likely be taking implicit bias tests to increase awareness of one's automatic beliefs, especially those that contradict one's outward beliefs. There is a growing body of evidence suggesting that implicit bias reduction strategies are successful. Research has shown significant reductions in implicit racial bias specifically as a result of a 12-week intervention.50 Given the evidence that cognitive load (e.g., distractions and time pressures) increases implicit bias activation, it has been recommended that healthcare settings increase staffing to decrease productivity demands, decrease unnecessary information in electronic medical records, and increase the use of cognitive aids such as algorithms, checklists, and clinical decision support tools.45 Mindfulness has been suggested as an implicit bias retraining intervention for clinicians to increase provider awareness of and control over implicit biases towards patients.51 Other studies have suggested that intergroup exposure, or even just imagining intergroup contact, can increase positive implicit attitudes towards that group.52 When our exposure to a group is limited, we rely on stereotypes, but increased exposure to examples that counter those stereotypes may help as well. A concern many individuals have with taking the IAT is discomfort and fear of what their responses may reveal; therefore, increased discussion of the inevitable nature of implicit biases may help reduce stigma around this topic.

It is important to train graduate students and new voice clinicians completing auditory-perceptual evaluations using diverse voices that are representative of the racial composition of the United States population. Using heterogeneous voices in training will better represent the caseload of a voice clinician. Similarly, clinicians are often trained in auditory-perceptual measures without knowing a patient’s race and without seeing images of the patient. During a clinical voice evaluation, access to this information likely does bias clinicians; therefore, providing images and demographic information during auditory-perceptual trainings may better reflect the environment in which clinicians see patients. Auditory-perceptual evaluation trainings using diverse voices with race information provided may simulate chart reviews and patient visits while allowing for increased recognition and discussion of implicit biases as part of auditory-perceptual training.

Finally, it is recommended that voice-specialized clinicians recognize that auditory-perceptual evaluations, even with standardized instruments like the CAPE-V, are subjective tools. Cross-checking evaluations with colleagues and performing team calibrations may help prioritize patient care and reduce discrepancies between providers and between patients. Ultimately, improved patient care is facilitated through recognition that we cannot be fully objective in evaluating patients. Implicit biases are pervasive, but making conscious efforts to retrain our negative biases may improve clinical judgment and decision-making.

4.4. Limitations and Future Study Directions

A limitation of this study is that all listeners were novice clinicians. Beyond this, most intended to be generalist clinicians or specialists in content areas other than voice disorders upon completion of their graduate training. Given that these listeners were less experienced with auditory-perceptual ratings and less likely to use them in future clinical settings, a future direction for this research would be conducting this study with experienced voice clinicians who are more likely to conduct auditory-perceptual evaluations of dysphonia on a regular basis. Additionally, the majority of study listeners were White, which reflects both the MS-SLP program and the current racial composition of the field of speech-language pathology. Another future direction would be conducting this study with a more racially heterogeneous listener sample. This would allow for analyzing IAT data by race of listener, though it is important to note that minoritized individuals completing the IAT often show anti-minoritized group biases. Specifically, 29% of 370 Black individuals completing the IAT demonstrated an automatic preference for White individuals over Black individuals.53

Another limitation is that the speakers of this study had overall severity of dysphonia ratings that were skewed, with more individuals with mild dysphonia than moderate and severe. As mentioned previously, the visual analog scale ranged from 0-100 with anchors at 10 for mild, 35 for moderate, and 72 for severe.32 Based on the voice-specialized clinician ratings, the average overall severity of dysphonia of all Black speakers was 20.9 and the average overall severity of dysphonia of all White speakers was 17.6. Although speakers with moderate and moderate-to-severe dysphonia were included in the sample, the majority of speakers in the study had mild dysphonia. This was a result of the previous study database being biased towards hyperfunctional voice disorders, including referrals from a specialty clinic for professional voice users who are more likely to seek care even with mild hyperfunction (Table 1). A future direction may include replicating the study with more moderate and severe voices to see if increasing the range of dysphonia severity will influence the findings.

Additionally, the average intrarater reliability (r = .78, SD = .15, range: .48 - .99) on ratings completed twice by each listener is interpreted as good.37 The lower values in this range may be attributed to the novice experience level of participants in completing auditory-perceptual voice evaluations, yet the large number of listeners in this study lends credibility to the mean differences and statistical findings.
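To make the interpretation of these reliability values concrete, the sketch below maps the three reported figures (mean .78, lowest .48, highest .99) onto the descriptive bands from the cited guideline.37 Two assumptions apply: the guideline's bands were written for intraclass correlation coefficients, so applying them to r values simply follows the interpretation used in the text, and the handling of exact boundary values (.50, .75, .90) is a choice made here. The function name `interpret_reliability` is hypothetical.

```python
def interpret_reliability(value):
    """Map a reliability coefficient to the guideline's descriptive bands.

    Bands: below .50 poor, .50 to .75 moderate, .75 to .90 good,
    above .90 excellent. Assigning exact boundary values to the
    higher (or, at .90, the lower) band is an assumption made here.
    """
    if value < 0.50:
        return "poor"
    if value < 0.75:
        return "moderate"
    if value <= 0.90:
        return "good"
    return "excellent"


# Values taken from the text: the mean and the two ends of the reported range.
reported = [("mean", 0.78), ("lowest", 0.48), ("highest", 0.99)]
for label, value in reported:
    print(f"{label}: r = {value:.2f} -> {interpret_reliability(value)}")
```

Under these bands the mean falls in the "good" range, while the lowest individual value falls just inside "poor", which is the spread the paragraph above attributes to listener inexperience.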

It is important to note the narrowness of this study's scope as it focused exclusively on auditory-perceptual evaluations of voice. Although these particular findings may not be clinically significant for all patients, implicit racial bias is pervasive in all aspects of healthcare practice. Auditory-perceptual evaluations such as the CAPE-V are a frequently used tool in clinical voice evaluations, but they are used in combination with other measures, including (but not limited to) case history interviews, patient-reported measures, acoustic and aerodynamic data, and stroboscopic findings to comprehensively evaluate and diagnose voice disorder presence and severity. Future research is needed to address the impact of implicit racial bias on these measures given the existing evidence that implicit racial bias may cause Black patients to be viewed as less cooperative during medical visits, more susceptible to negative interactions with providers, and overall less likely to receive standard quality of medical care.11-13,17,18,54

4.5. Conclusion

This study found that both Black and White speakers were rated as having less severe dysphonia when their race was labeled as Black. This suggests a minimizing bias for Black patients with voice disorders who present to speech-language pathologists for evaluation. These results contribute to the understanding of how demographic information unrelated to a voice disorder may impact a patient's visit with a clinician. Future directions include replicating this study with more experienced clinicians and with more severely dysphonic speakers. Further research is needed to determine the most effective interventions for implicit bias retraining and the additional ways that implicit racial bias impacts comprehensive voice evaluations.

Acknowledgments

This work was supported by grants DC015570 (CES), DC013017 (CES), DC021080 (KLD), and DC015446 (REH) from the National Institute on Deafness and Other Communication Disorders (NIDCD); an ASHFoundation New Century Scholars Doctoral Scholarship (KLD); and a PhD Scholarship from the Council of Academic Programs in Communication Sciences and Disorders (KLD). The authors thank Jen Weston and Daniel Buckley for their contributions.

Footnotes

Declarations of Interest:

C.E. Stepp has received consulting fees from Altec, Inc. and Delsys, Inc., companies focused on developing and commercializing technologies related to human movement. Stepp's interests were reviewed and are managed by Boston University in accordance with their conflict of interest policies.

A significant difference was found for only one aerodynamic measure, such that White speakers produced higher maximum-flow declination rates. One can interpret this finding as spurious given the relatively small sample size (20 in each group) and large number of tests (12 one-way analyses of variance).
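The arithmetic behind treating a single significant result among 12 tests as plausibly spurious can be sketched as follows. The per-test significance level of .05 is an assumption (it is not stated in this note), and the family-wise formula further assumes the 12 tests are independent, which is unlikely to hold exactly for related voice measures. The function name is hypothetical.

```python
def familywise_error_rate(alpha, n_tests):
    """Probability of at least one false positive across independent tests."""
    return 1.0 - (1.0 - alpha) ** n_tests


ALPHA = 0.05   # ASSUMED per-test significance level
N_TESTS = 12   # number of one-way analyses of variance, from the text

fwer = familywise_error_rate(ALPHA, N_TESTS)
expected_false_positives = ALPHA * N_TESTS
bonferroni_alpha = ALPHA / N_TESTS

print(f"Chance of at least one false positive: {fwer:.2f}")
print(f"Expected number of false positives:    {expected_false_positives:.1f}")
print(f"Bonferroni-adjusted threshold:         {bonferroni_alpha:.4f}")
```

Under these assumptions there is roughly a 46% chance of at least one false positive when no true group differences exist, so one significant result out of 12 is about what chance alone would produce.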

Gender information was available for 23/40 speakers (18 cisgender women, 5 cisgender men). Gender information was not available for the remaining 17 speakers as it was not collected at the time of previous study enrollment.

References

- 1. American Speech-Language-Hearing Association. Definitions of communication disorders and variants. 1993. Available from www.asha.org/policy

- 2. Aronson AE, Bless DM. Clinical Voice Disorders. Thieme; 2009.

- 3. Boone DR, McFarlane SC, Von Berg SL, Zraick RN. The Voice and Voice Therapy. Allyn & Bacon; 2010.

- 4. Lee L, Stemple J, Glaze L, Kelchner L. Quick Screen for Voice and Supplementary Documents for Identifying Pediatric Voice Disorders. Language, Speech, and Hearing Services in Schools. 2004;35:308–19. doi: 10.1044/0161-1461(2004/030)

- 5. Stachler RJ, Francis DO, Schwartz SR, et al. Clinical Practice Guideline: Hoarseness (Dysphonia) (Update). Otolaryngology–Head and Neck Surgery. 2018;158(S1):S1–S42. doi: 10.1177/0194599817751030

- 6. Kahneman D, Slovic P, Tversky A. Judgment under Uncertainty: Heuristics and Biases. Cambridge University Press; 1982.

- 7. Reihl KM, Hurley RA, Taber KH. Neurobiology of Implicit and Explicit Bias: Implications for Clinicians. The Journal of Neuropsychiatry and Clinical Neurosciences. 2015;27(4):A6–253. doi: 10.1176/appi.neuropsych.15080212

- 8. Eadie T, Sroka A, Wright DR, Merati A. Does knowledge of medical diagnosis bias auditory-perceptual judgments of dysphonia? J Voice. Jul 2011;25(4):420–9. doi: 10.1016/j.jvoice.2009.12.009

- 9. Sauder C, Eadie T. Does the Accuracy of Medical Diagnoses Affect Novice Listeners' Auditory-Perceptual Judgments of Dysphonia Severity? J Voice. Mar 2020;34(2):197–207. doi: 10.1016/j.jvoice.2018.08.001

- 10. López SR. Patient variable biases in clinical judgment: conceptual overview and methodological considerations. Psychol Bull. Sep 1989;106(2):184–203. doi: 10.1037/0033-2909.106.2.184

- 11. FitzGerald C, Hurst S. Implicit bias in healthcare professionals: a systematic review. BMC Medical Ethics. 2017;18(1):19. doi: 10.1186/s12910-017-0179-8

- 12. Maina IW, Belton TD, Ginzberg S, Singh A, Johnson TJ. A decade of studying implicit racial/ethnic bias in healthcare providers using the implicit association test. Soc Sci Med. Feb 2018;199:219–229. doi: 10.1016/j.socscimed.2017.05.009

- 13. Green AR, Carney DR, Pallin DJ, et al. Implicit bias among physicians and its prediction of thrombolysis decisions for black and white patients. J Gen Intern Med. Sep 2007;22(9):1231–8. doi: 10.1007/s11606-007-0258-5

- 14. Bryant BE, Jordan A, Clark US. Race as a Social Construct in Psychiatry Research and Practice. JAMA Psychiatry. Feb 1 2022;79(2):93–94. doi: 10.1001/jamapsychiatry.2021.2877

- 15. Sapienza CM. Aerodynamic and acoustic characteristics of the adult African American voice. Journal of Voice. 1997;11(4):410–6.

- 16. Xue SA, Fucci D. Effects of Race and Sex on Acoustic Features of Voice Analysis. Perceptual and Motor Skills. 2000;91(3):951–958. doi: 10.2466/pms.2000.91.3.951

- 17. Arora S, Stouffer GA, Kucharska-Newton A, et al. Fifteen-Year Trends in Management and Outcomes of Non–ST-Segment–Elevation Myocardial Infarction Among Black and White Patients: The ARIC Community Surveillance Study, 2000–2014. Journal of the American Heart Association. 2018;7(19):e010203. doi: 10.1161/JAHA.118.010203

- 18. Rathore SS, Lenert LA, Weinfurt KP, et al. The effects of patient sex and race on medical students ratings of quality of life. The American Journal of Medicine. 2000;108(7):561–566. doi: 10.1016/S0002-9343(00)00352-1

- 19. Johnson-Jennings M, Duran B, Hakes J, Paffrath A, Little MM. The influence of undertreated chronic pain in a national survey: Prescription medication misuse among American indians, Asian Pacific Islanders, Blacks, Hispanics and whites. SSM - Population Health. 2020;11:100563. doi: 10.1016/j.ssmph.2020.100563

- 20. Javitt JC, McBean AM, Nicholson GA, Babish JD, Warren JL, Krakauer H. Undertreatment of Glaucoma among Black Americans. New England Journal of Medicine. 1991;325(20):1418–1422. doi: 10.1056/nejm199111143252005

- 21. Odonkor CA, Esparza R, Flores LE, et al. Disparities in Health Care for Black Patients in Physical Medicine and Rehabilitation in the United States: A Narrative Review. PM&R. Feb 2021;13(2):180–203. doi: 10.1002/pmrj.12509

- 22. Morgan PL, Farkas G, Hillemeier MM, Li H, Pun WH, Cook M. Cross-Cohort Evidence of Disparities in Service Receipt for Speech or Language Impairments. Exceptional Children. 2017;84(1):27–41. doi: 10.1177/0014402917718341

- 23. Mahendra N, Spicer J. Access to Speech-Language Pathology Services for African-American Clients with Aphasia: A Qualitative Study. Perspectives on Communication Disorders and Sciences in Culturally and Linguistically Diverse (CLD) Populations. 2014;21(2):53–62. doi: 10.1044/cds21.2.53

- 24. American Speech-Language-Hearing Association. Highlights and trends: Member and affiliate counts, year end 2018. 2019. https://www.asha.org/siteassets/surveys/2010-2019-member-and-affiliate-profiles.pdf

- 25. Bureau of Professional Licensing. FAQs for Implicit Bias Training. 2021. https://www.michigan.gov/-/media/Project/Websites/lara/bpl/Shared-Files/Implicit-Bias-Training-FAQs.pdf?rev=3bc940b9f585408db605070baf61c83e

- 26. American Speech-Language-Hearing Association. Issues in Ethics: Cultural and Linguistic Competence. https://www.asha.org/practice/ethics/cultural-and-linguistic-competence/

- 27. Greenwald AG, Nosek BA, Banaji MR. Understanding and using the Implicit Association Test: I. An improved scoring algorithm. Journal of Personality and Social Psychology. 2003;85(2):197–216. doi: 10.1037/0022-3514.85.2.197

- 28. Fairbanks G. Voice and articulation drillbook, 2nd edn. New York: Harper & Row; 1960.

- 29. Patel RR, Awan SN, Barkmeier-Kraemer J, et al. Recommended Protocols for Instrumental Assessment of Voice: American Speech-Language-Hearing Association Expert Panel to Develop a Protocol for Instrumental Assessment of Vocal Function. American Journal of Speech-Language Pathology. 2018;27(3):887–905. doi: 10.1044/2018_AJSLP-17-0009

- 30. Charity AH. African American English: An Overview. Perspectives on Communication Disorders and Sciences in Culturally and Linguistically Diverse (CLD) Populations. 2008;15(2):33–42. doi: 10.1044/cds15.2.33

- 31. Milne AE, Bianco R, Poole KC, et al. An online headphone screening test based on dichotic pitch. Behavior Research Methods. 2021;53(4):1551–1562. doi: 10.3758/s13428-020-01514-0

- 32. Kempster GB, Gerratt BR, Verdolini Abbott K, Barkmeier-Kraemer J, Hillman RE. Consensus auditory-perceptual evaluation of voice: development of a standardized clinical protocol. Am J Speech Lang Pathol. May 2009;18(2):124–32. doi: 10.1044/1058-0360(2008/08-0017)

- 33. Helou LB, Solomon NP, Henry LR, Coppit GL, Howard RS, Stojadinovic A. The Role of Listener Experience on Consensus Auditory-Perceptual Evaluation of Voice (CAPE-V) Ratings of Postthyroidectomy Voice. American Journal of Speech-Language Pathology. 2010;19(3):248–258. doi: 10.1044/1058-0360(2010/09-0012)

- 34. Zraick RI, Kempster GB, Connor NP, et al. Establishing validity of the Consensus Auditory-Perceptual Evaluation of Voice (CAPE-V). Am J Speech Lang Pathol. Feb 2011;20(1):14–22. doi: 10.1044/1058-0360(2010/09-0105)

- 35. Greenwald AG, Banaji MR, Nosek BA. Race Implicit Association Test. Project Implicit. https://implicit.harvard.edu/implicit/

- 36. Witte RS, Witte JS. Statistics (9th ed.). Wiley; 2009.

- 37. Koo TK, Li MY. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J Chiropr Med. Jun 2016;15(2):155–63. doi: 10.1016/j.jcm.2016.02.012

- 38. Kelchner LN, Brehm SB, Weinrich B, et al. Perceptual evaluation of severe pediatric voice disorders: rater reliability using the consensus auditory perceptual evaluation of voice. J Voice. Jul 2010;24(4):441–9. doi: 10.1016/j.jvoice.2008.09.004

- 39. Wong DW-M, Chan RW, Wu C-H. Effect of Training With Anchors on Auditory-Perceptual Evaluation of Dysphonia in Speech-Language Pathology Students. Journal of Speech, Language, and Hearing Research. 2021;64(4):1136–1156. doi: 10.1044/2020_JSLHR-20-00214

- 40. Law T, Kim JH, Lee KY, et al. Comparison of Rater’s Reliability on Perceptual Evaluation of Different Types of Voice Sample. Journal of Voice. 2012;26(5):666.e13–666.e21. doi: 10.1016/j.jvoice.2011.08.003

- 41. Nagle KF. Clinical Use of the CAPE-V Scales: Agreement, Reliability and Notes on Voice Quality. Journal of Voice. 2022. doi: 10.1016/j.jvoice.2022.11.014

- 42. Meissner F, Grigutsch LA, Koranyi N, Müller F, Rothermund K. Predicting Behavior With Implicit Measures: Disillusioning Findings, Reasonable Explanations, and Sophisticated Solutions. Frontiers in Psychology. 2019;10. doi: 10.3389/fpsyg.2019.02483

- 43. Schimmack U. Invalid Claims About the Validity of Implicit Association Tests by Prisoners of the Implicit Social-Cognition Paradigm. Perspect Psychol Sci. Mar 2021;16(2):435–442. doi: 10.1177/1745691621991860

- 44. Johnson TJ, Hickey RW, Switzer GE, et al. The Impact of Cognitive Stressors in the Emergency Department on Physician Implicit Racial Bias. Acad Emerg Med. Mar 2016;23(3):297–305. doi: 10.1111/acem.12901

- 45. Burgess DJ. Are providers more likely to contribute to healthcare disparities under high levels of cognitive load? How features of the healthcare setting may lead to biases in medical decision making. Med Decis Making. Mar-Apr 2010;30(2):246–57. doi: 10.1177/0272989x09341751

- 46. Fridell LA. The Science of Implicit Bias and Implications for Policing. In: Fridell LA, ed. Producing Bias-Free Policing: A Science-Based Approach. Springer International Publishing; 2017:7–30.

- 47. Hirsh AT, Hollingshead NA, Ashburn-Nardo L, Kroenke K. The Interaction of Patient Race, Provider Bias, and Clinical Ambiguity on Pain Management Decisions. The Journal of Pain. 2015;16(6):558–568. doi: 10.1016/j.jpain.2015.03.003

- 48. FitzGerald C, Martin A, Berner D, Hurst S. Interventions designed to reduce implicit prejudices and implicit stereotypes in real world contexts: a systematic review. BMC Psychology. 2019;7(1):29. doi: 10.1186/s40359-019-0299-7

- 49. Liu FF, Coifman J, McRee E, et al. A Brief Online Implicit Bias Intervention for School Mental Health Clinicians. International Journal of Environmental Research and Public Health. 2022;19(2):679.

- 50. Devine PG, Forscher PS, Austin AJ, Cox WT. Long-term reduction in implicit race bias: A prejudice habit-breaking intervention. J Exp Soc Psychol. Nov 2012;48(6):1267–1278. doi: 10.1016/j.jesp.2012.06.003

- 51. Burgess DJ, Beach MC, Saha S. Mindfulness practice: A promising approach to reducing the effects of clinician implicit bias on patients. Patient Education and Counseling. 2017;100(2):372–376. doi: 10.1016/j.pec.2016.09.005

- 52. Turner RN, Crisp RJ. Imagining intergroup contact reduces implicit prejudice. British Journal of Social Psychology. 2010;49(1):129–142. doi: 10.1348/014466609X419901

- 53. Rich M. Exploring Racial Bias Among Biracial and Single-Race Adults: The IAT. 2015. https://policycommons.net/artifacts/618935/exploring-racial-bias-among-biracial-and-single-race-adults/

- 54. Sabin JA, Greenwald AG. The influence of implicit bias on treatment recommendations for 4 common pediatric conditions: pain, urinary tract infection, attention deficit hyperactivity disorder, and asthma. Am J Public Health. May 2012;102(5):988–95. doi: 10.2105/ajph.2011.300621