Abstract

Survival analysis is a valuable tool for estimating the time until specific events, such as death or cancer recurrence, based on baseline observations. This is particularly useful in healthcare to prognostically predict clinically important events based on patient data. However, existing approaches often have limitations; some focus only on ranking patients by survivability, neglecting to estimate the actual event time, while others treat the problem as a classification task, ignoring the inherent time-ordered structure of the events. Additionally, the effective utilisation of censored samples (data points where the event time is unknown) is essential for enhancing the model’s predictive accuracy. In this paper, we introduce CenTime, a novel approach to survival analysis that directly estimates the time to event. Our method features an innovative event-conditional censoring mechanism that performs robustly even when uncensored data is scarce. We demonstrate that our approach forms a consistent estimator for the event model parameters, even in the absence of uncensored data. Furthermore, CenTime is easily integrated with deep learning models with no restrictions on batch size or the number of uncensored samples. We compare our approach to standard survival analysis methods, including the Cox proportional-hazard model and DeepHit. Our results indicate that CenTime offers state-of-the-art performance in predicting time-to-death while maintaining comparable ranking performance. Our implementation is publicly available at https://github.com/ahmedhshahin/CenTime.

MSC: 41A05, 41A10, 65D05, 65D17

Keywords: Survival Analysis, Deep Learning, Censoring

Highlights

- CenTime offers a novel approach to modelling censoring in survival analysis tasks.
- Directly estimates time-to-event rather than ranking patients by survivability.
- Delivers robust performance even with limited uncensored training data.
- Excels in precise time-to-event predictions with comparable ranking performance.

1. Introduction

Survival analysis has been applied in many areas, including genomics (Lee and Lim, 2019), healthcare (Lee et al., 2018, Zhao et al., 2022, Shahin et al., 2022, Lu et al., 2023), manufacturing (Richardeau and Pham, 2012), marketing (Kim and Suk Kim, 2014), and social sciences (Emmert-Streib and Dehmer, 2019). To keep the language concrete, we will discuss and evaluate the healthcare scenario of patient survival, bearing in mind that our methods are generally applicable.

Survival analysis has a long research history, from traditional statistical methods to modern machine learning methods (Wang et al., 2019). Kaplan and Meier proposed an early method that models the proportions of patients at risk at given times (Kaplan and Meier, 1958). The main constraint of the K-M approach is that it cannot model the influence of covariates. The later developed Cox proportional hazards model (Cox, 1972) overcomes some of the limitations of the K-M approach, but cannot directly estimate survival times. Rather, it estimates the relative likelihood of death for one patient compared to another.

A key challenge in survival analysis is dealing with censored data. In right censoring, we know that the patient was alive up to the censoring time, but we do not know when they died or indeed if they are still alive. Naively disregarding censored samples negatively impacts the performance of survival models and leads to statistically biased results (Buckley and James, 1979). As a result, a large body of the literature has focused on leveraging censored training samples to improve survival models’ performance and make more accurate predictions.

Our main contribution is to directly model the time of death of a patient, for which we introduce a novel censoring model. We compare our approach with a standard Cox and classical censoring model and apply these methods to predicting the survival of Idiopathic Pulmonary Fibrosis (IPF) patients based on volumetric Computerised Tomography (CT) images and associated clinical data. IPF is a progressive fibrotic lung disease with a variable and unpredictable progression rate, making it an ideal test case for our proposed approaches. For completeness we include comparisons with the Cox model, using standard techniques to estimate actual survival time from a ranking. Implementing the Cox model is also computationally demanding and we introduce an approximation to make this tractable.

1.1. Preliminaries

We aim to learn the distribution $p_\theta(t \mid x)$, where $t$ is a random variable associated with the death time, $t \in \{1, \dots, T_{\max}\}$; $\theta$ represents the model parameters and $x$ is a set of covariates (e.g. CT scan and clinical data). For simplicity, we assume that the death time is discrete and refers to the number of months that a patient survives after the CT scan.

Our training data is a collection of uncensored and right-censored observations. The observation for an uncensored sample is represented as $(x, t, \delta = 1)$, where $\delta = 1$ indicates that the death time is known. For a right-censored sample, the observation is represented as $(x, c, \delta = 0)$, where $\delta = 0$ indicates that the death time is unknown, and only the censoring time is known, $c < t$. The data index set is $\mathcal{D}$, the uncensored observation index set is $\mathcal{D}_{\text{uncens}}$, and the censored observation index set is $\mathcal{D}_{\text{cens}}$. The approaches described below extend naturally to other forms of censoring, such as left censoring and interval censoring; see Appendix A.

2. CenTime: an event-conditional censoring model

We introduce CenTime which enables the direct learning of a death time distribution from either censored or uncensored data. CenTime uses a novel censoring mechanism that we believe is more representative of censoring in some clinical situations. The method is generally applicable to other forms of censoring (left, interval), see Appendix A. Here we concentrate on right censoring. Specifically, we first sample the death time and then generate a censoring time from a distribution up to the death time. This results in the censored time model

$$p_\theta(c \mid x) = \sum_{t=c+1}^{T_{\max}} p(c \mid t)\, p_\theta(t \mid x) \tag{1}$$

The objective then is to maximise the log likelihood

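$$\mathcal{L}(\theta) = \sum_{n \in \mathcal{D}_{\text{uncens}}} \log p_\theta(t_n \mid x_n) + \sum_{n \in \mathcal{D}_{\text{cens}}} \log p_\theta(c_n \mid x_n) \tag{2}$$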

The objective in (2) is the likelihood of a mixture model containing contributions from the uncensored data and censored data, with each term being a consistent objective for the event model parameters (i.e. estimators based on either contribution converge to the true parameters as the number of samples increases). This implies that even in the scenario where we only have censored training data, the model can learn the underlying event model.

The model also has the advantage that, if needed, we can easily sample data from it with a given proportion of censored to uncensored data: one simply samples the required number of censored datapoints from $p_\theta(c \mid x)$ and the required number of uncensored datapoints from $p_\theta(t \mid x)$. This feature is absent in classical censoring models, in which it is not possible to sample data with a required proportion of censored to uncensored data.
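The sampling procedure described above can be sketched in a few lines. This is only a minimal illustration under stated assumptions: the event distribution is a toy probability vector over months 1 to `len(pmf)`, the censoring time is uniform on the months strictly before the death time, and the helper name `sample_dataset` is hypothetical rather than part of the CenTime implementation.

```python
import random


def sample_dataset(pmf, n, frac_censored, seed=0):
    """Draw n observations with an exact censored/uncensored split.

    pmf[i] is an assumed event probability for death time t = i + 1.
    Returns a list of (time, delta) pairs: delta = 1 for an uncensored
    sample (time is the death time), delta = 0 for a censored sample
    (time is the censoring time).
    """
    rng = random.Random(seed)
    times = list(range(1, len(pmf) + 1))
    n_cens = round(frac_censored * n)
    data = []
    while len(data) < n:
        # Step 1: sample a death time from the event distribution.
        t = rng.choices(times, weights=pmf)[0]
        if len(data) < n_cens:
            if t == 1:
                # Assumption: c < t, so t = 1 admits no censoring time; redraw.
                continue
            # Step 2 (censored only): sample c uniformly before the death time.
            c = rng.randint(1, t - 1)
            data.append((c, 0))
        else:
            data.append((t, 1))
    return data


# Example: 100 samples, half of them censored by construction.
toy_data = sample_dataset([0.1, 0.2, 0.3, 0.4], n=100, frac_censored=0.5)
```

Because the censoring time is generated after the death time, the number of censored samples is chosen by the caller rather than emerging from a comparison of two random draws.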

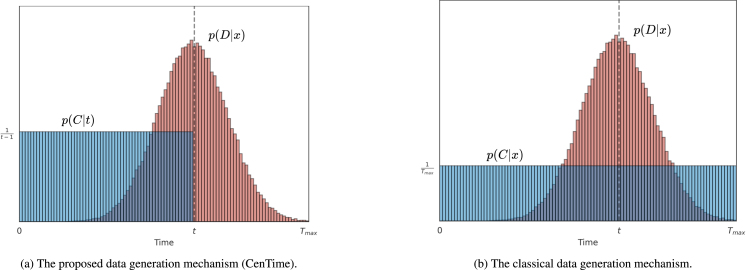

We still need to make two assumptions — the censoring distribution and the event distribution . We define the event distribution below in Section 2.1 and here we define the censoring distribution . In principle, this can also be learned from the data but for simplicity we assume a uniform censoring distribution for and 0 elsewhere (see Fig. 1(a)), giving

| (3) |

For any event distribution model the likelihood objective to maximise is

| (4) |
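As a worked illustration of how a uniform censoring distribution enters the objective, suppose that death times lie in $\{1, \dots, T_{\max}\}$ and that the censoring time is drawn uniformly from the $t-1$ months strictly before the death time $t$. Both the support and the symbols $p_\theta(t \mid x)$, $\mathcal{D}_{\text{uncens}}$ and $\mathcal{D}_{\text{cens}}$ are assumptions made here for concreteness; a different support convention would change only the normaliser.

```latex
% Assumed support: c \in \{1, \dots, t-1\}.
\begin{align*}
p(c \mid t) &=
  \begin{cases}
    \dfrac{1}{t-1}, & 1 \le c < t, \\[4pt]
    0, & \text{otherwise},
  \end{cases} \\
p_\theta(c \mid x) &= \sum_{t=c+1}^{T_{\max}} \frac{p_\theta(t \mid x)}{t-1}, \\
\mathcal{L}(\theta) &= \sum_{n \in \mathcal{D}_{\text{uncens}}} \log p_\theta(t_n \mid x_n)
  + \sum_{n \in \mathcal{D}_{\text{cens}}} \log \sum_{t=c_n+1}^{T_{\max}} \frac{p_\theta(t \mid x_n)}{t-1}.
\end{align*}
```

Under this assumption, each candidate death time in the censored term is down-weighted by the number of censoring times it could have produced.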

Fig. 1.

Distributional survival analysis data generation mechanisms. (a) In the proposed event-conditional censoring model (CenTime), $t$ is drawn from the death time distribution and $c$ is uniformly sampled up to $t$. (b) In the classical model, $t$ and $c$ represent randomly drawn death and censoring times from the corresponding distributions. If $c < t$, the patient is censored and the observation is the censoring time. Otherwise, the patient is uncensored and the observation is the death time.

2.1. Event time distribution

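We need to make an appropriate choice for the event time distribution $p_\theta(t \mid x)$. We employ a discretised form of the Gaussian distribution

$$p_\theta(t \mid x) = \frac{1}{Z} \exp\left(-\frac{(t - \mu)^2}{2\sigma^2}\right) \tag{5}$$

In this formulation, $\mu$ and $\sigma$ are parameters of the distribution that are predicted by the model (a neural network parameterized by $\theta$), and $Z$ is a normalisation factor, defined as

$$Z = \sum_{t'=1}^{T_{\max}} \exp\left(-\frac{(t' - \mu)^2}{2\sigma^2}\right) \tag{6}$$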

This formulation has the following advantages:

- The term $(t - \mu)^2$ ensures a heavier penalty for predictions that deviate significantly from the true death time, promoting closer predictions. This stands in contrast to approaches that treat death times as independent categories (Lee et al., 2018), which do not fully capture this relationship.
- The model only outputs two quantities, $\mu$ and $\sigma$. This keeps the number of parameters low, reducing the risk of overfitting compared to treating this as a classification task with a category for each time point (Lee et al., 2018).

In principle, the form of the distribution is also learnable, but we found that the discrete Gaussian performed well in our experiments.
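To make the discretised Gaussian and its use in the likelihood concrete, the following sketch evaluates the event distribution and the per-sample negative log-likelihood in plain Python. It is not the authors' implementation: the function names are hypothetical, death times are assumed to lie in 1 to `t_max`, and the censoring time is assumed to be uniform on the months strictly before the death time.

```python
import math


def discretised_gaussian(mu, sigma, t_max):
    """Return [p(t=1), ..., p(t=t_max)] for a Gaussian restricted to integer months."""
    weights = [
        math.exp(-((t - mu) ** 2) / (2.0 * sigma ** 2))
        for t in range(1, t_max + 1)
    ]
    z = sum(weights)  # normalisation factor Z
    return [w / z for w in weights]


def centime_nll(mu, sigma, t_max, time, delta):
    """Negative log-likelihood of one observation under the event-conditional model.

    Assumption: p(c | t) = 1 / (t - 1) for 1 <= c < t, so a censored
    observation requires time < t_max.
    """
    pmf = discretised_gaussian(mu, sigma, t_max)
    if delta == 1:
        # Uncensored: `time` is the observed death time.
        return -math.log(pmf[time - 1])
    # Censored: marginalise over every death time after the censoring time.
    lik = sum(pmf[t - 1] / (t - 1) for t in range(time + 1, t_max + 1))
    return -math.log(lik)


# Example with sigma fixed at 12 months and a 156-month horizon.
loss_uncensored = centime_nll(mu=30.0, sigma=12.0, t_max=156, time=30, delta=1)
loss_censored = centime_nll(mu=30.0, sigma=12.0, t_max=156, time=10, delta=0)
```

In a deep learning setting, `mu` would be the network output and the loss would be averaged over a minibatch; since each sample contributes its own term, no pairing of samples within a batch is needed.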

3. Previous works

3.1. Classical censoring model

One common approach in the literature is to assume that censoring times follow a distribution and death times follow a distribution . These times are independently sampled and then compared: if the censoring time is less than the death time, the observation is the censoring time; otherwise, it is the death time (Lee et al., 2018, Klein and Moeschberger, 2003), see Fig. 1(b). This leads to the following model

| (7) |

where if and if . For a uniform censoring distribution a (right) censored observation then has the following likelihood

| (8) |

and the likelihood of an uncensored observation is given by

| (9) |

Omitting additive constants, the objective then is to maximise

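$$\mathcal{L}(\theta) = \sum_{n \in \mathcal{D}_{\text{uncens}}} \log p_\theta(t_n \mid x_n) + \sum_{n \in \mathcal{D}_{\text{cens}}} \log \sum_{t=c_n+1}^{T_{\max}} p_\theta(t \mid x_n) \tag{10}$$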

Comparing this with the CenTime censoring mechanism (4), the difference is the factor in the censored summation term. Also, for this classical approach, if one wanted to generate data from the model, one cannot a priori decide on how many samples are censored or uncensored. The generation process (10) generates either a censored or uncensored datapoint, with the probability of this happening being a function of .
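The steps from the classical generative model to its objective can be sketched as follows. The sketch assumes, for illustration only, that the censoring time is uniform on $\{1, \dots, T_{\max}\}$, independent of the death time, and that a sample is censored exactly when $c < t$; the symbols are assumed notation.

```latex
% Assumption: p(c) = 1 / T_max on {1, ..., T_max}, independent of t.
\begin{align*}
p_\theta(c, \delta = 0 \mid x) &= p(c) \sum_{t=c+1}^{T_{\max}} p_\theta(t \mid x)
  = \frac{1}{T_{\max}} \sum_{t=c+1}^{T_{\max}} p_\theta(t \mid x), \\
p_\theta(t, \delta = 1 \mid x) &= p_\theta(t \mid x) \sum_{c=t}^{T_{\max}} p(c)
  = \frac{T_{\max} - t + 1}{T_{\max}}\, p_\theta(t \mid x), \\
\log p_\theta(c, \delta = 0 \mid x) &= \log \sum_{t=c+1}^{T_{\max}} p_\theta(t \mid x) + \text{const}, \\
\log p_\theta(t, \delta = 1 \mid x) &= \log p_\theta(t \mid x) + \text{const}.
\end{align*}
```

The constants do not depend on $\theta$, so they drop out of the maximisation. The censored term is then the survival probability beyond the censoring time, with no per-death-time weighting inside the sum.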

3.2. Cox model

We briefly review the standard Cox proportional hazards approach (Cox, 1972). The Cox model is ubiquitous in survival analysis; however, it cannot directly predict the death time, nor can it easily deal with censored data. Both of these issues are, we believe, vital for modern survival analysis applications. Whilst the Cox model does not directly produce a prediction for the death time, there are standard approaches to estimate the death time (Breslow, 1974), and as such it is an important baseline comparison method. The hazard function models the chance that a patient will die in an infinitesimal time interval, given that death has not occurred before

$$h(t) = \lim_{\Delta t \to 0} \frac{P(t \le T < t + \Delta t \mid T \ge t)}{\Delta t} \tag{11}$$

where $T$ denotes the death time as a continuous random variable.

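The Cox model (Cox, 1972) constrains the hazard function (conditioned on the patient covariates $x$) to the form

$$h(t \mid x) = h_0(t) \exp\left(f_\theta(x)\right) \tag{12}$$

Here $h_0(t)$ is the baseline hazard function, which depends only on $t$, while $f_\theta(x)$ depends on the patient covariates and $\theta$ are the model parameters. The standard Cox model (Cox, 1972) further constrains $f_\theta$ to the linear form $f_\theta(x) = \theta^\top x$. DeepSurv (Katzman et al., 2018) and other deep neural networks extend it to non-linear $f_\theta(x)$. For each patient $n$ we define the risk set $\mathcal{R}_n$ as all those patients that have not died before patient $n$ and define the relative death risk as

$$r_n = \frac{\exp\left(f_\theta(x_n)\right)}{\sum_{m \in \mathcal{R}_n} \exp\left(f_\theta(x_m)\right)} \tag{13}$$

The partial log-likelihood is then defined as the sum of $\log r_n$ for the set of uncensored patients

$$\mathcal{L}(\theta) = \sum_{n \in \mathcal{D}_{\text{uncens}}} \left[ f_\theta(x_n) - \log \sum_{m \in \mathcal{R}_n} \exp\left(f_\theta(x_m)\right) \right] \tag{14}$$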

As can be seen from (14), Cox-based methods utilise the censored data only in constructing the risk set $\mathcal{R}_n$ and maximise the likelihood that the uncensored patients die before patients in the risk set. In our experiments, $x$ is a high-dimensional CT scan, and the function $f_\theta$ is a deep neural network that is costly to compute in both time and memory. A typical approach to optimising (14) is stochastic gradient descent, which involves selecting minibatches of training observations at each iteration $k$, where $\mathcal{B}_k$ denotes the minibatch index set at iteration $k$ (Katzman et al., 2018). The objective then is to maximise

$$\mathcal{L}_k(\theta_k) = \sum_{n \in \mathcal{B}_k^{\text{uncens}}} \left[ f_{\theta_k}(x_n) - \log \sum_{m \in \mathcal{R}_n^{k}} \exp\left(f_{\theta_k}(x_m)\right) \right] \tag{15}$$

where $\theta_k$ represents the model parameters at iteration $k$, $\mathcal{B}_k^{\text{uncens}}$ is the uncensored observation index set for samples in minibatch $\mathcal{B}_k$, and $\mathcal{R}_n^{k}$ is the risk set for patient $n$ in the same minibatch.

However, (15) is a ranking objective that compares patients within the minibatch based on their predicted mortality risk. This requires large minibatch sizes for robust training; however, for high-resolution input (e.g. 3D CT scans), we are limited by GPU memory to small minibatch sizes. Consequently, the minibatches often contain only censored patients, i.e. $\mathcal{B}_k^{\text{uncens}} = \emptyset$. In such cases, (15) is undefined, and these minibatches are excluded from the training process, resulting in a significant reduction in the training data. To overcome this, we use a memory bank (Wu et al., 2018, He et al., 2016) to store neural network predictions for later iterations (Shahin et al., 2022); see Appendix B for details. We call this approach CoxMB and compare it with the standard Cox model in our experiments.
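The minibatch issue described above can be illustrated with a small sketch of the partial log-likelihood. This is a simplified stand-in, not the DeepSurv or CoxMB code: the function name is hypothetical, the risk set is assumed to contain every patient in the minibatch whose observed time is at least that of the reference patient, and the memory bank of Appendix B is not modelled.

```python
import math


def cox_minibatch_objective(risks, times, deltas):
    """Partial log-likelihood for one minibatch, or None if it is undefined.

    risks[i]  : scalar network output for patient i (higher means higher risk)
    times[i]  : observed time (death time if deltas[i] == 1, else censoring time)
    deltas[i] : 1 if uncensored, 0 if censored
    """
    uncensored = [i for i, d in enumerate(deltas) if d == 1]
    if not uncensored:
        # Only censored patients: the sum in the objective is empty.
        return None
    total = 0.0
    for i in uncensored:
        # Assumed risk set: patients still at risk at the death time of patient i.
        risk_set = [j for j in range(len(times)) if times[j] >= times[i]]
        log_denominator = math.log(sum(math.exp(risks[j]) for j in risk_set))
        total += risks[i] - log_denominator
    return total


# A batch of two censored patients yields no training signal.
skipped = cox_minibatch_objective([0.3, -0.1], [12, 40], [0, 0])
# One uncensored patient ranked against one censored patient.
value = cox_minibatch_objective([1.0, 0.0], [5, 10], [1, 0])
```

With a batch size of 2 and roughly two thirds of the samples censored, a sizeable share of minibatches would return `None` here, which is the loss of training data that the memory bank is meant to avoid.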

3.3. DeepSurv

DeepSurv (Katzman et al., 2018) is a deep neural network trained using the Cox objective function (14); it outputs a single scalar value that represents the risk of death. We compare it with our CoxMB model, which uses a memory bank to store the risk of death for each patient during training, using this information to penalise the model for inaccuracies in predicting the ranking of patients’ survival times.

3.4. DeepHit

Lee et al. (2018) approach survival analysis as a classification task with $T_{\max}$ categories. Specifically, a neural network predicts a vector of $T_{\max}$ values, which a softmax function then transforms into a death distribution, $p_\theta(t \mid x)$. This approach, however, has a few challenges: (1) the ordinal nature of the death time is not directly captured because the softmax function regards different death times as separate classes; (2) if $T_{\max}$ is large, the model requires more parameters, heightening the risk of overfitting; (3) some death times might not be represented in the training data, which could reduce softmax probabilities to zero, yielding no gradient and impeding the learning process for these times. All of these issues are addressed by our alternative formulation in Section 2.1.

To leverage the censored data, DeepHit uses a combination of the classical censoring model (10) and a ranking objective. Specifically, the objective function is the sum of two terms: a likelihood term, which is the classical likelihood (10) with a softmax function to model the death time distribution, and a ranking term that penalises the model for inaccuracies in predicting the ranking of patients’ survival times, mirroring the Cox objective

| (16) |

where , with being a hyperparameter set to 0.1, following the official implementation. represents the cumulative distribution function of the predicted distribution .

3.5. DeepHit (likelihood only)

To evaluate the contribution of the likelihood term in DeepHit, we train another model with the same architecture but without the ranking term.

4. Experiments

We evaluate our methods on a practical and challenging real data problem. IPF is a chronic fibrotic interstitial lung disease of unknown cause, associated with progressive fibrosis (stiffening and scarring of lung tissue), deterioration of lung function, and shortened survival (Lederer and Martinez, 2018, Barratt et al., 2018). Survival analysis of patients with IPF is fundamental for studies that evaluate factors associated with disease progression and is part of the analysis of clinical drug trials. However, it is a challenging task due to the heterogeneous progression trajectories of IPF and the lack of available mortality predictors and survival models. Cox models are often used in these studies to identify associations with mortality (Jacob et al., 2017, Gao et al., 2021). Despite its popularity, the Cox model has several limitations. Primarily, it relies on the assumption of proportional hazards, which states that the relative hazard remains constant over time between different patients. This assumption is not always accurate, particularly in progressive diseases such as IPF. Furthermore, the Cox model estimates the relative hazard, rather than the actual death time, which is often more useful and easier to interpret.

More similar to our method, other approaches train models to predict death time, rather than ranking patients according to their death risk. One notable example of this approach is DeepHit (Lee et al., 2018), which uses a fully connected layer in a deep network to output the probability of death at every possible time. This approach treats death-time prediction as a one-of-$T_{\max}$ classification problem and does not encode the natural assumption that a small error in the time of death should be penalised less than a large error.

4.1. Dataset and preprocessing

We use the Open Source Imaging Consortium (OSIC)1 dataset which encompasses lung CT scans along with contemporaneous clinical data in addition to mortality labels in months ( and if , otherwise ). We examine the performance of different methods using exclusively CT images or a combination of CT images and clinical data, as each contains pertinent information related to disease progression in IPF. The dataset consists of 728 samples, which we randomly divided into training (70%), validation (15%), and test (15%) sets. The mean and standard deviation of the metrics are reported over five runs with different random splits. Approximately 65% (470 samples) of the dataset are right-censored.

For the imaging data, only CT scans with a slice thickness of 3 mm are considered. All scans are cropped to the lung area using the lung segmentation model trained by Hofmanninger et al. (2020). These scans are then resampled to achieve an isotropic pixel spacing of via linear interpolation. Following this, the scans are resized to dimensions of 256 × 256 × 256 voxels using bicubic interpolation. Later, we apply histogram matching and a windowing operation within the range [−1024, 150] Hounsfield Units to remove irrelevant information. Finally, we normalise the scans to have zero mean and unit variance based on the statistics drawn from the training set. We apply random rotation (up to 15 degrees) and translation (up to 20 pixels) to augment the training data.

In experiments involving clinical data, we incorporate six clinical features: age, sex, smoking history (categorised as never-smoked, ex-smoker, or current smoker), antifibrotic treatment (yes or no), Forced Vital Capacity (FVC) percent, and carbon monoxide diffusion capacity (DLCO). To ensure the correspondence between the imaging and clinical data, we only include patients whose lung function tests were performed within 3 months of the CT scan. Continuous features (age, FVC percent and DLCO) are normalised to have zero mean and unit variance, while categorical features are transformed via one-hot encoding. Missing values are sampled using a latent variable model following (Shahin et al., 2022). During testing, we use the most probable value from the missing data imputation model.

Table 1.

Comparison of the test performance of the different methods on the OSIC dataset when trained on imaging data only, as well as combined imaging and clinical data. The mean and standard deviation are reported over five runs with different random train/val/test splits. The best results are highlighted in bold.

| Data | Method | C-Index | MAE (months) | RAE |
|---|---|---|---|---|
| Imaging | DeepSurv (Cox) | 67.441 ± 4.572 | 44.898 ± 19.505 | 2.286 ± 1.414 |
| | CoxMB | **71.067 ± 5.572** | 28.887 ± 2.315 | 1.762 ± 0.807 |
| | DeepHit | 53.165 ± 8.313 | 31.074 ± 7.765 | 1.830 ± 0.522 |
| | DeepHit (likelihood only) | 57.607 ± 4.813 | 29.862 ± 3.742 | 1.926 ± 0.869 |
| | Classical censoring | 68.844 ± 5.313 | 20.448 ± 4.787 | 1.407 ± 0.853 |
| | CenTime | 69.273 ± 0.946 | **19.319 ± 1.613** | **1.338 ± 0.665** |
| Imaging + Clinical | DeepSurv (Cox) | **72.100 ± 2.186** | 27.603 ± 3.345 | 1.718 ± 0.742 |
| | CoxMB | 68.877 ± 2.413 | 24.413 ± 2.548 | 1.892 ± 0.868 |
| | DeepHit | 54.980 ± 3.490 | 31.246 ± 4.599 | 2.240 ± 0.862 |
| | DeepHit (likelihood only) | 52.882 ± 3.843 | 28.718 ± 2.077 | 2.059 ± 0.722 |
| | Classical censoring | 70.350 ± 2.947 | 20.476 ± 1.85 | 1.546 ± 0.611 |
| | CenTime | 70.957 ± 3.048 | **19.178 ± 0.795** | **1.480 ± 0.671** |

4.2. Implementation details

In our experimental setup, the event distribution models parameterize the distribution using $\mu$ and $\sigma$. A deep learning model parameterized by $\theta$ is used to learn $\mu$, while $\sigma$ is fixed at 12 months. This helps to stabilise the training process and mitigate overfitting (see Nix and Weigend, 1994 for a similar observation). For DeepHit, the output of the model is a vector of size $T_{\max}$, representing the logits of the 1-of-$T_{\max}$ classification labels. Finally, the DeepSurv and CoxMB models output a single scalar that represents the predicted risk of death, $f_\theta(x)$ in (14). We evaluate the performance of the models when trained on imaging data exclusively, as well as combined imaging and clinical data.

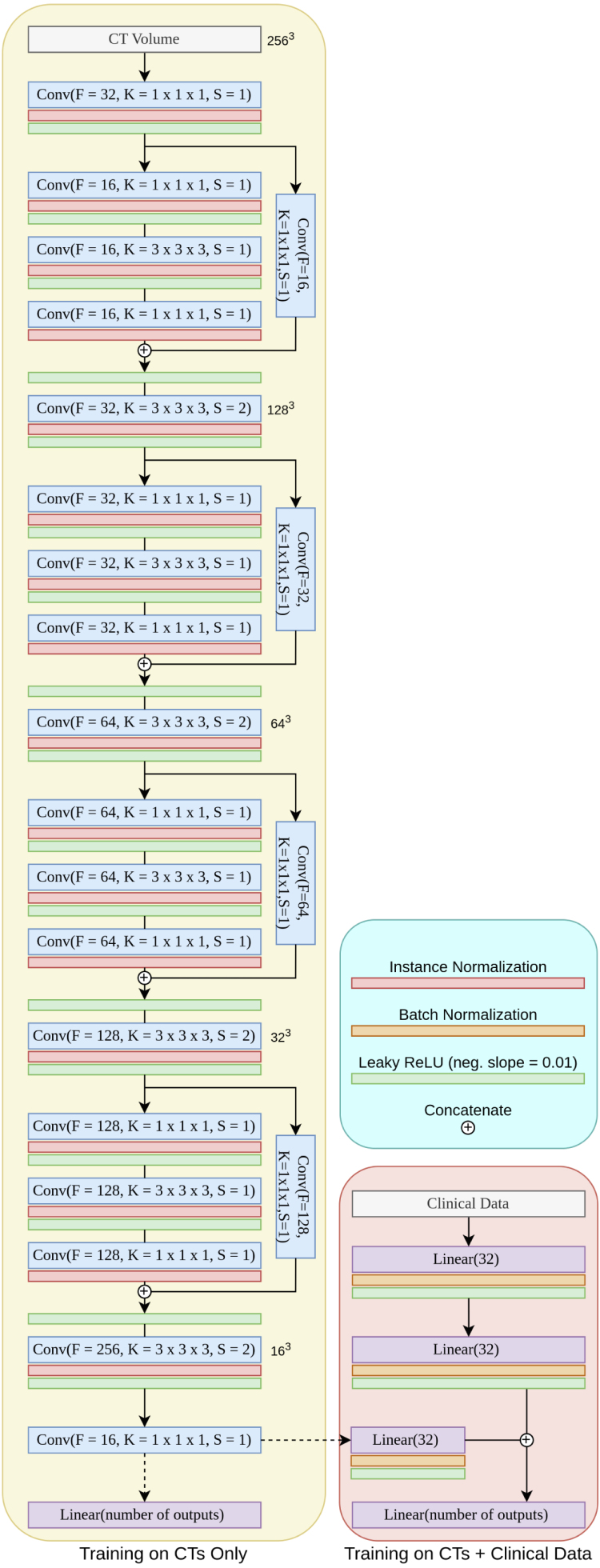

To process HRCT scans, we use a 3D Convolutional Neural Network (CNN), as illustrated in Appendix C (left). The network initiates with a 3D convolutional layer, which is followed by an instance normalisation layer and a leaky ReLU activation function. We then stack four residual blocks, each comprising three 3D convolutional layers (He et al., 2016). After each convolutional layer, we use instance normalisation (Ulyanov et al., 2016) and leaky ReLU (Maas et al., 2013) layers. We utilised 1 × 1 × 1 kernels for the first and last convolutional layers, while the middle layer used a 3 × 3 × 3 kernel. In a parallel branch, we use a single convolutional layer, and the outputs of the two branches are concatenated. The output of this series of layers is then passed through another convolutional layer, designed with a stride of 2, to halve the spatial dimension. Finally, we use a convolutional layer with 16 filters and a 1 × 1 × 1 kernel to produce a compact feature representation. We flatten this representation and input it into the final fully connected layer. In designing this network, we were aware that the progression of IPF manifests itself in fine pulmonary patterns, such as honeycombing, reticulation, and ground glass opacities. To capture these nuances, we opt for small kernels and deliberately avoid pooling layers, as this could result in the loss of fine image details.

When we incorporate clinical data, we use a Multi-Layer Perceptron (MLP) that consists of two fully connected layers with 32 neurones each, each followed by batch normalisation (Ioffe and Szegedy, 2015) and leaky ReLU activation (Maas et al., 2013), as detailed in Appendix C (right). The MLP output is concatenated with the CNN output. The CNN output, which represents imaging data, is projected to a 32-element vector to balance the contributions from both imaging and clinical data. The combined output is subsequently propagated through a final fully connected layer.

For optimisation, we use the AdamW optimiser (Loshchilov and Hutter, 2018) with a learning rate of $10^{-4}$ for the classical and event-conditional censoring models and for DeepHit, DeepSurv, and CoxMB. The learning rate was tuned via a random search based on the performance on the validation set. Additionally, we apply a cosine annealing learning rate scheduler and gradient clipping. Due to the high resolution of the imaging data (256 × 256 × 256), we use a batch size of 2 for all models. We train the models for up to 300 epochs, halting training early if there is no improvement in validation performance for 50 consecutive epochs. In CoxMB, we use a value of 1.0. We use a $T_{\max}$ of 156 months for all models, which is the maximum observed time in the dataset. The models are implemented using PyTorch and trained on a single NVIDIA A6000 GPU.

4.3. Evaluation metrics

Concordance index.

The C-Index estimates the probability that the predicted risks or survival times of a randomly chosen pair of patients will have the same ordering as their actual survival times (Harrell et al., 1996)

$$\text{C-Index} = \frac{\#\,\text{concordant pairs}}{\#\,\text{concordant pairs} + \#\,\text{discordant pairs}} \tag{17}$$

A pair is considered concordant if the ranking predicted by the model matches the true ranking, and discordant if it does not. A perfect model will have a C-Index of 1. It is worth noting that the C-Index is a ranking metric, which only assesses the order in which the predicted values should be ranked compared to the true ranking.

Mean absolute error.

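The MAE assesses the difference between death times predicted by the model and the true death times

$$\text{MAE} = \frac{1}{N} \sum_{n=1}^{N} \left| \hat{t}_n - t_n \right| \tag{18}$$

where $\hat{t}_n$ is the predicted death time for patient $n$, $t_n$ is the true death time, and $N$ is the number of patients evaluated.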

Relative absolute error.

We also report the RAE, which quantifies the relative deviation of the predicted time from the true death time

$$\text{RAE} = \frac{1}{N} \sum_{n=1}^{N} \frac{\left| \hat{t}_n - t_n \right|}{t_n} \tag{19}$$
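The three metrics can be sketched in plain Python as below. The function names are hypothetical and several conventions are assumptions rather than details taken from the paper: the MAE and RAE are computed over patients with a known death time, a pair is treated as comparable for the C-Index only when the patient with the shorter observed time is uncensored, and tied predictions count as half concordant.

```python
def mean_absolute_error(pred, true):
    """Average absolute gap between predicted and true death times."""
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


def relative_absolute_error(pred, true):
    """Average absolute gap expressed as a fraction of the true death time."""
    return sum(abs(p - t) / t for p, t in zip(pred, true)) / len(true)


def concordance_index(pred, times, deltas):
    """Fraction of comparable pairs whose predicted times are correctly ordered.

    pred[i]   : predicted survival time for patient i
    times[i]  : observed time (death or censoring)
    deltas[i] : 1 if uncensored, 0 if censored
    """
    concordant = 0.0
    comparable = 0
    for i in range(len(times)):
        if deltas[i] != 1:
            continue  # the earlier time of a comparable pair must be a death
        for j in range(len(times)):
            if times[i] < times[j]:
                comparable += 1
                if pred[i] < pred[j]:
                    concordant += 1.0
                elif pred[i] == pred[j]:
                    concordant += 0.5  # assumed tie convention
    return concordant / comparable


# Toy example: two uncensored patients and one censored patient.
c_index = concordance_index([10, 20, 30], [12, 24, 36], [1, 1, 0])
mae = mean_absolute_error([10, 20], [12, 24])
rae = relative_absolute_error([10, 20], [12, 24])
```

A model that outputs a risk score rather than a survival time can be evaluated with the same C-Index routine by negating the score, since higher risk corresponds to shorter survival.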

4.4. Results

The evaluation of survival analysis performance depends on the particular clinical objective. For instance, if the aim is to stratify patients into high and low-risk groups, the C-Index is a suitable metric. In contrast, if the objective is a precise prediction of the time of death for each patient, metrics such as MAE and RAE are more appropriate.

In Table 1, we report the test performance of the different methods on the OSIC dataset. For the Cox-based methods trained on imaging data, we notice that the introduction of memory banks during training (CoxMB) leads to a significant performance improvement compared to the DeepSurv model, which employs the standard Cox objective function (Cox, 1972, Katzman et al., 2018). This improvement can be seen through the increase in C-Index by 3.63, a reduction of the MAE by 16 months, and a decrease in the RAE by 0.524.

Upon inclusion of clinical data, CoxMB upholds superior performance on MAE in contrast to DeepSurv, whereas DeepSurv excels in ranking performance. This performance divergence, particularly with respect to the decline of the C-Index in the CoxMB case, can likely be attributed to the high noise level and the presence of missing values in clinical data. In general, DeepSurv seems to benefit more from the inclusion of clinical data than CoxMB, where the improvements are marginal. CoxMB already performs well on the imaging data, and the clinical data do not provide much additional information.

For distribution-based methods, CenTime outperforms all other distribution-based baselines in C-Index, MAE, and RAE metrics, whether trained solely on imaging data or a combination of imaging and clinical data. The superiority of our method is particularly noticeable in the hybrid case, where the MAE decreases by 12.07 and 1.30 months compared to the DeepHit and the classical censoring models, respectively. Similarly, the C-Index improves by 15.98 and 0.61 compared to these models. Compared to DeepSurv and CoxMB, CenTime offers a remarkable improvement in MAE (8.43 and 5.23 months, respectively) and a comparable ranking performance. This demonstrates the effectiveness of CenTime in efficiently capturing the censoring process. Interestingly, CenTime significantly outperforms DeepHit. In addition to the different modelling of the censoring process, this can be attributed to the different ways each model handles the event distribution. CenTime applies a discretised version of the Gaussian distribution (as per (5)), whereas DeepHit treats it as a classification problem comprising $T_{\max}$ classes, implemented using a fully-connected layer followed by a softmax function. By disregarding the ordinal nature of the time variable and facing the potentially large number of classes, $T_{\max}$, DeepHit is more susceptible to overfitting.

In summary, CenTime outperforms all the baselines in predicting the time of death for IPF patients, whether trained solely on imaging data or a combination of imaging and clinical data. Additionally, it delivers competitive C-Index performance despite not incorporating a ranking objective. This makes it a more appropriate choice for clinical scenarios where the precise prediction of the time of death takes precedence over the ranking of patients’ survival times. On the other hand, if the ranking of survival times is of paramount importance, the CoxMB model offers a more robust training strategy by employing memory banks, which is especially beneficial when training on high-resolution imaging data.

4.4.1. Performance under limited uncensored training data

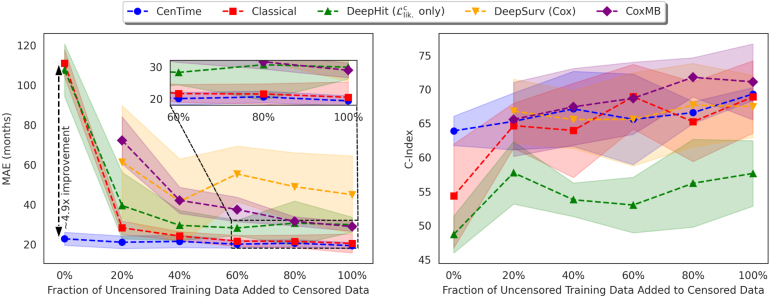

The amount of uncensored data available for training survival models is typically limited. Therefore, it is critical for learning algorithms to use the available censored data effectively to improve performance. In this subsection, we examine the performance of the different methods when trained on a limited amount of uncensored data, in addition to the censored data (imaging only). We randomly sample 0% (purely censored), 20%, 40%, 60%, 80%, and 100% of the uncensored data. In each scenario, all the censored data is added to compose the training set. The results are presented in Fig. 2.

Fig. 2.

Performance of the different methods when trained on gradually increasing percentages of uncensored data added to the censored data. 0% corresponds to training on purely censored data, while 100% corresponds to training on the full training set. The mean and standard deviation are reported over five runs with different random train/val/test splits.

The initial observation is that Cox-based models (DeepSurv and CoxMB) are only trainable when uncensored examples are available during training. This is because the objective function is defined solely for uncensored examples (see (14)). Second, when utilising purely censored data, CenTime shows a significant improvement (x in terms of MAE) over the classical and DeepHit models. This is because CenTime forms a consistent estimator of the model parameter even with purely censored data, a feature not shared by the classical and DeepHit models. As the amount of uncensored data included in the training data increases, we generally observe an improvement in the performance of all models, and the differences between the various methods diminish. However, CenTime continues to outperform the other methods in terms of MAE and offers competitive performance in terms of the C-Index. These findings underscore the effectiveness of our proposed approach in modelling the censoring process and utilising it efficiently.

Furthermore, we observe that the performance of the CoxMB model, when trained with a limited amount of uncensored data, is comparable to that of the DeepSurv model. This can be attributed to the lessened effectiveness of the memory bank when the amount of uncensored data is limited. However, as the amount of uncensored data increases, the memory bank efficacy improves and the performance of CoxMB consistently surpasses that of the DeepSurv model. This is evident in both the C-Index and the MAE metrics. Intriguingly, the C-Index performance of CenTime is comparable to that of DeepSurv, despite the fact that it does not use a ranking objective. This further underlines the robustness and versatility of our proposed event-conditional censoring model.

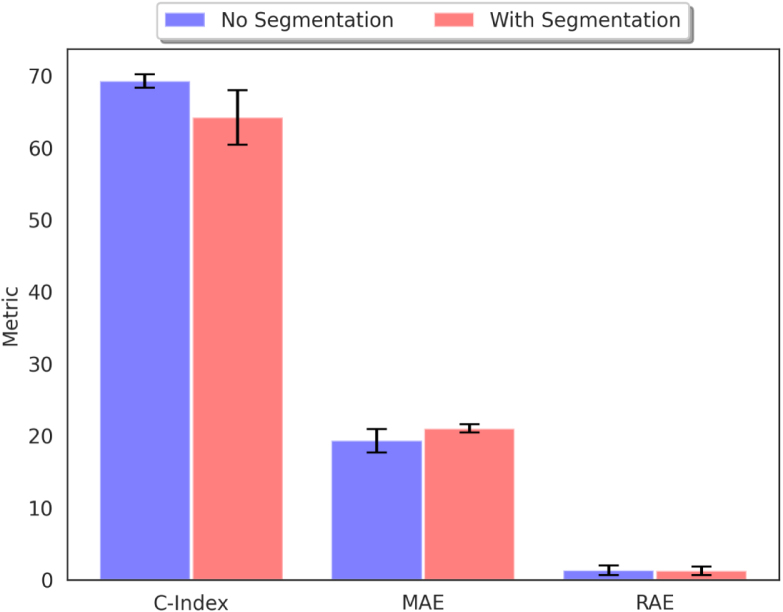

4.4.2. Effect of lung segmentation

Idiopathic Pulmonary Fibrosis predominantly affects the lungs, making this area the most relevant in CT scans. However, there is some evidence suggesting that the disease can also affect other organs, such as the heart (Agrawal et al., 2016). Therefore, we examine the effect of lung segmentation on the performance of CenTime, when trained on imaging data. We train the model with and without lung segmentation (using Hofmanninger et al. (2020)) and report the results in Fig. 3. We do not observe a significant difference in the performance, which suggests that the model is able to learn the relevant features from the lung area without the need for explicit segmentation. This also allows the model to benefit from information in the non-lung area (e.g. heart) if it is relevant to the survival prediction task.

Fig. 3.

Effect of lung segmentation on the performance of CenTime.

5. Conclusions

Our work demonstrates the limitations of existing survival methods and addresses them. Traditional Cox-based methods (i) rely on the strong proportional hazards assumption, which does not always hold, (ii) estimate the relative hazard rather than the actual death time, which is often more useful and easier to interpret, and (iii) are ranking methods and therefore require a large batch size, which is not always feasible. DeepHit (iv) does not encode the ordinal nature of the target survival time variable and (v) approaches the problem as a classification task, which becomes prone to overfitting with a large number of classes. Our CenTime model addresses all these limitations. By modelling the death and censoring likelihoods, it circumvents the proportional hazards assumption (i), directly estimates the death time (ii), and imposes no batch size restrictions (iii). Furthermore, because it adopts the discretised Gaussian distribution, our model naturally encodes the ordinal nature of the target survival time variable (iv) and, by outputting only the discretised Gaussian distribution parameters, is less susceptible to overfitting (v). Finally, CenTime offers a convenient alternative to the classical censoring model: it provides a consistent estimator even with purely censored data and should be particularly useful when only very few uncensored entries are available.

Our results underscore the effectiveness of CenTime in predicting the time of death, while also offering competitive performance in terms of ranking, even without a ranking objective. This makes CenTime a compelling choice for clinical scenarios where accurate prediction of the time of death takes precedence over the ranking of patients’ survival times, particularly when dealing with limited observed death time data.

Declaration of competing interest

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests: This work was supported by the Open Source Imaging Consortium (OSIC) https://www.osicild.org. The Wellcome Trust (221915/Z/20/Z) supports Daniel C. Alexander’s work on this topic. This research was funded in whole or in part by the Wellcome Trust (209553/Z/17/Z). For the purpose of open access, the author has applied a CC-BY public copyright licence to any author accepted manuscript version arising from this submission. This project was also supported by the NIHR UCLH Biomedical Research Centre, UK. Joseph Jacob reports fees from Boehringer Ingelheim, Roche, NHSX, Takeda, Gilead, Microsoft Research and GlaxoSmithKline unrelated to the submitted work. Joseph Jacob reports UK patent application numbers 2113765.8 and GB2211487.0 unrelated to the submitted work.

Acknowledgements

This work was supported by the Open Source Imaging Consortium (OSIC) https://www.osicild.org. The Wellcome Trust (221915/Z/20/Z) supports Daniel C. Alexander’s work on this topic. This research was funded in whole or in part by the Wellcome Trust, United Kingdom (209553/Z/17/Z). For the purpose of open access, the author has applied a CC-BY public copyright licence to any author accepted manuscript version arising from this submission. This project was also supported by the NIHR UCLH Biomedical Research Centre, UK. Joseph Jacob reports fees from Boehringer Ingelheim, Roche, NHSX, Takeda, Gilead, Microsoft Research and GlaxoSmithKline unrelated to the submitted work. Joseph Jacob reports UK patent application numbers 2113765.8 and GB2211487.0 unrelated to the submitted work.

Appendix A. Additional forms of censoring

In the main text, we focused on right-censoring, which is the most common form of censoring in survival analysis. Nevertheless, the versatility of CenTime enables its application to the other variants of censoring: left-censoring and interval-censoring. In this section, we discuss these additional types of censoring and delineate how our approach can be naturally adapted to handle them.

A.1. Left-censoring

In left-censoring, the event is known to have occurred before a specific time. Take, for example, a patient who is reported dead at a given time, and this is all the information we have. We do not know the exact time of death, only that it occurred before the reporting time. This is in contrast to right-censoring, where the event is known to occur after a particular time. According to CenTime, we first sample a death time from the event distribution, then sample a censoring time from a distribution whose support lies after the death time. If we assume a uniform censoring distribution over these states, and using (1), a left-censored observation has the following likelihood

| (A.1) |

The likelihood for the uncensored observations remains unchanged. Left-censored observations are then incorporated into the objective function as follows

| (A.2) |

Here, the added term is taken over the set of left-censored observations.
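As a minimal numerical sketch of the left-censoring model described above, the following assumes that death times live on a discrete grid 1, ..., t_max, that `p[t - 1]` holds the modelled probability of death at time `t`, and that, given a death at `t`, the reported time is uniform on the states strictly after `t`. These boundary conventions and the function name `left_censored_likelihood` are assumptions of this sketch and may differ from the exact form of (A.1).

```python
def left_censored_likelihood(p, c):
    """Likelihood of a left-censored observation at time c (sketch).

    p: list of death-time probabilities, p[t - 1] = P(death at t), t = 1..t_max.
    c: time at which the patient is reported dead (death happened earlier).

    Assumption: given death at t, the reported time is uniform on
    {t + 1, ..., t_max}, i.e. each state has probability 1 / (t_max - t).
    """
    t_max = len(p)
    if not 2 <= c <= t_max:
        raise ValueError("c must satisfy 2 <= c <= t_max")
    # Marginalise over every death time that is compatible with the report.
    return sum(p[t - 1] / (t_max - t) for t in range(1, c))


# Toy death-time distribution on t = 1..4 (illustrative values only).
p = [0.1, 0.2, 0.3, 0.4]
likelihood_at_3 = left_censored_likelihood(p, 3)
```

Under these assumptions, summing the likelihood over all admissible reported times recovers the total probability of dying before t_max, because a death at t_max leaves no later state in which it could be reported.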

A.2. Interval-censoring

Interval-censoring arises when the event is known to have occurred within a specific time interval. For instance, a patient is reported to be alive at one time and subsequently reported dead at a later time. Although the exact time of death is unknown, we know that it occurred between these two times. According to our conditional censoring model, we first sample a death time from the event distribution, then sample a lower censoring time from a distribution whose support lies before the death time and an upper censoring time from a distribution whose support lies after it. As with the other forms of censoring, we assume uniform distributions over the corresponding states for the two censoring distributions. The likelihood for an interval-censored observation is then

| (A.3) |

The objective function is then

| (A.4) |

Here, the added term is taken over the set of interval-censored observations.
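The interval-censored case can be sketched numerically in the same spirit. The code below assumes a simple discretised Gaussian (Gaussian density values normalised on the grid 1, ..., t_max), a lower censoring time uniform on the states strictly before the death time, and an upper censoring time uniform on the states strictly after it. The discretisation, the boundary conventions, and the names `discretised_gaussian` and `interval_censored_likelihood` are assumptions of this sketch, not the exact form of (A.3).

```python
import math


def discretised_gaussian(mu, sigma, t_max):
    """Gaussian density values on t = 1..t_max, normalised to sum to one."""
    weights = [math.exp(-0.5 * ((t - mu) / sigma) ** 2) for t in range(1, t_max + 1)]
    total = sum(weights)
    return [w / total for w in weights]  # entry t - 1 is P(death at t)


def interval_censored_likelihood(p, c_lo, c_hi):
    """Likelihood of 'alive at c_lo, reported dead at c_hi' (sketch).

    Assumptions: given death at t, the lower time is uniform on {1..t-1}
    and the upper time is uniform on {t+1..t_max}, independently.
    """
    t_max = len(p)
    if not 1 <= c_lo < c_hi <= t_max:
        raise ValueError("need 1 <= c_lo < c_hi <= t_max")
    # Only death times strictly inside (c_lo, c_hi) are compatible.
    return sum(
        p[t - 1] / ((t - 1) * (t_max - t))
        for t in range(c_lo + 1, c_hi)
    )


# Illustrative parameters only: two numbers describe the whole distribution.
p = discretised_gaussian(mu=3.2, sigma=1.5, t_max=6)
likelihood = interval_censored_likelihood(p, c_lo=2, c_hi=5)
```

With these conventions, only death times that have at least one state on each side can produce an interval-censored observation, so the likelihoods over all admissible intervals sum to one minus the probability mass at the two ends of the grid.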

Appendix B. Cox with memory bank

To overcome the batch-size limitation of the Cox objective, we use a memory bank (Wu et al., 2018, He et al., 2020) to store neural network predictions for later iterations. The memory bank is a queue whose size is the floor of K times the number of training samples, where K is the fraction of the training dataset stored. A value of K = 1 corresponds to the storage of the entire training set in the memory bank, while K = 0 means that no samples are stored, which is equivalent to the standard Cox objective. At every training iteration, we calculate predictions for the current minibatch and store them in the memory bank, along with the corresponding event indicators and death times (or censoring times for censored samples). The memory bank is updated as

| (B.1) |

Here, the update concatenates the new minibatch entries to the memory bank. If the memory bank is full, the oldest samples are removed and new samples are added. After a number of iterations, the memory bank will contain tuples of predictions, event indicators and times. At each iteration, we calculate the risk set for each uncensored patient in the memory bank using the stored event indicators and times. The Cox loss for samples in the memory bank is then calculated using the risk set and the available predictions in the memory bank as

| (B.2) |

where the loss is taken over the set of uncensored samples in the memory bank at the current iteration, and the predictions for each patient and for the members of its risk set are functions of the model parameters at the current iteration or any previous iteration. The loss is used to update the current parameters of the model. By updating the memory bank at each iteration and using it to calculate the loss, we can effectively approximate the Cox loss on a sample size larger than allowed by the standard Cox objective. We refer to this method as CoxMB and compare its performance to the standard Cox objective in our experiments.
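The memory-bank idea can be illustrated with a small, framework-free sketch. It is not the authors' implementation: the class `MemoryBank`, the function `cox_loss`, the averaging over events, and the tie handling (every sample with time greater than or equal to the event time is in the risk set) are all assumptions made for illustration, and the loss is the standard negative Cox partial log-likelihood rather than a reproduction of (B.2).

```python
import math
from collections import deque


class MemoryBank:
    """FIFO queue of (prediction, event, time) tuples (hypothetical helper)."""

    def __init__(self, k, n_train):
        # Capacity is floor(k * n_train); k is the stored fraction of the data.
        self.items = deque(maxlen=math.floor(k * n_train))

    def update(self, preds, events, times):
        # A deque with maxlen silently drops the oldest entries when full.
        self.items.extend(zip(preds, events, times))


def cox_loss(samples):
    """Negative Cox partial log-likelihood, averaged over uncensored samples."""
    loss, n_events = 0.0, 0
    for pred_i, event_i, time_i in samples:
        if not event_i:
            continue  # censored samples contribute only through risk sets
        # Risk set: all stored samples still at risk at time_i.
        risk = [pred_j for pred_j, _, time_j in samples if time_j >= time_i]
        loss -= pred_i - math.log(sum(math.exp(r) for r in risk))
        n_events += 1
    return loss / max(n_events, 1)


# Two minibatches of three patients each, bank capacity floor(0.5 * 8) = 4.
bank = MemoryBank(k=0.5, n_train=8)
bank.update([0.2, -0.1, 0.4], [1, 0, 1], [5, 7, 3])
bank.update([0.0, 0.3, -0.2], [1, 1, 0], [2, 6, 4])
loss = cox_loss(list(bank.items))
```

In an automatic-differentiation framework, predictions stored in earlier iterations would typically be treated as constants so that gradients flow only through the current minibatch; that detail is an assumption here and is not shown in the sketch. Note also that the loss is zero when the stored samples contain no uncensored patient, since the objective has no term for them.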

Appendix C. Model architecture

See Fig. C.4.

Fig. C.4.

Model architecture. Left: 3D CNN for processing HRCT scans. Right: MLP to process clinical data. The labels on the convolutional layers give the number of filters, the kernel size, and the stride. In the case of using HRCTs only, the top architecture is used. In the case of using HRCT and clinical data, the outputs of CNN and MLP are concatenated.

Appendix D. Effect of memory bank size in CoxMB

We examine the effect of the size of the memory bank in the CoxMB model, trained on imaging data. K is the fraction of training samples stored in the memory bank during training. We train the CoxMB model with different values of K and report the results in Table D.2. We observe that the performance of the CoxMB model generally improves as the memory bank size increases. This is expected, as a larger memory bank allows the model to store more information about the ranking of patients’ survival times, which is then used to penalise the model for inaccuracies in predicting the ranking. We anticipate that this depends on the size of the training set and thus requires tuning for each dataset. However, we observe that the performance of the CoxMB model is relatively stable for a wide range of K values (0.4 to 1.0), suggesting that the model is not very sensitive to the choice of K.

Table D.2.

Effect of memory bank size on the performance of CoxMB model.

| K | C-Index |
|---|---|
| 0.0 | 67.441 ± 4.572 |
| 0.2 | 67.968 ± 2.712 |
| 0.4 | 70.884 ± 3.844 |
| 0.6 | 70.154 ± 0.975 |
| 0.8 | 73.294 ± 4.056 |
| 1.0 | 71.067 ± 5.572 |

Data availability

The OSIC dataset is online and available for OSIC members.

References

- Agrawal Abhinav, Verma Isha, Shah Varun, Agarwal Abhishek, Sikachi Rutuja R. Cardiac manifestations of idiopathic pulmonary fibrosis. Intractable Rare Dis. Res. 2016;5(2):70–75. doi: 10.5582/irdr.2016.01023.

- Barratt Shaney L., Creamer Andrew, Hayton Conal, Chaudhuri Nazia. Idiopathic pulmonary fibrosis (IPF): an overview. J. Clinical Med. 2018;7(8):201. doi: 10.3390/jcm7080201.

- Breslow Norman. Covariance analysis of censored survival data. Biometrics. 1974:89–99.

- Buckley Jonathan, James Ian. Linear regression with censored data. Biometrika. 1979;66(3):429–436.

- Cox David. Regression models and life-tables. J. R. Stat. Soc. Ser. B Stat. Methodol. 1972;34(2):187–202.

- Emmert-Streib Frank, Dehmer Matthias. Introduction to survival analysis in practice. Mach. Learn. Knowl. Extraction. 2019;1(3):1013–1038.

- Gao Jing, Kalafatis Dimitrios, Carlson Lisa, Pesonen Ida HA, Li Chuan-Xing, Wheelock Åsa, Magnusson Jesper M., Sköld C. Magnus. Baseline characteristics and survival of patients of idiopathic pulmonary fibrosis: a longitudinal analysis of the Swedish IPF Registry. Respir. Res. 2021;22(1):1–13. doi: 10.1186/s12931-021-01634-x.

- Harrell Frank E., Lee Kerry L., Mark Daniel B. Multivariable prognostic models: issues in developing models, evaluating assumptions and adequacy, and measuring and reducing errors. Stat. Med. 1996;15(4):361–387. doi: 10.1002/(SICI)1097-0258(19960229)15:4<361::AID-SIM168>3.0.CO;2-4.

- He, Kaiming, Fan, Haoqi, Wu, Yuxin, Xie, Saining, Girshick, Ross, 2020. Momentum contrast for unsupervised visual representation learning. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 9726–9735.

- He, Kaiming, Zhang, Xiangyu, Ren, Shaoqing, Sun, Jian, 2016. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 770–778.

- Hofmanninger Johannes, Prayer Florian, Pan Jeanny, Röhrich Sebastian, Prosch Helmut, Langs Georg. Automatic lung segmentation in routine imaging is primarily a data diversity problem, not a methodology problem. Eur. Radiol. Exp. 2020;4(1):1–13. doi: 10.1186/s41747-020-00173-2.

- Ioffe Sergey, Szegedy Christian. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In: International Conference on Machine Learning. PMLR; 2015. pp. 448–456.

- Jacob Joseph, Bartholmai Brian J., Rajagopalan Srinivasan, Kokosi Maria, Nair Arjun, Karwoski Ronald, Walsh Simon L.F., Wells Athol U., Hansell David M. Mortality prediction in idiopathic pulmonary fibrosis: evaluation of computer-based CT analysis with conventional severity measures. Eur. Respir. J. 2017;49(1). doi: 10.1183/13993003.01011-2016.

- Kaplan Edward L., Meier Paul. Nonparametric estimation from incomplete observations. J. Am. Stat. Assoc. 1958;53(282):457–481.

- Katzman Jared L., Shaham Uri, Cloninger Alexander, Bates Jonathan, Jiang Tingting, Kluger Yuval. DeepSurv: personalized treatment recommender system using a Cox proportional hazards deep neural network. BMC Med. Res. Methodol. 2018;18(1):1–12. doi: 10.1186/s12874-018-0482-1.

- Kim Juyoung, Suk Kim Myung. Analysis of automobile repeat-purchase behaviour on CRM. Ind. Manag. Data Syst. 2014;114(7):994–1006.

- Klein John P., Moeschberger Melvin L. Survival Analysis: Techniques for Censored and Truncated Data. Springer; 2003. p. 74.

- Lederer David J., Martinez Fernando J. Idiopathic pulmonary fibrosis. N. Engl. J. Med. 2018;378(19):1811–1823. doi: 10.1056/NEJMra1705751.

- Lee Seungyeoun, Lim Heeju. Review of statistical methods for survival analysis using genomic data. Genom. Inform. 2019;17(4). doi: 10.5808/GI.2019.17.4.e41.

- Lee, Changhee, Zame, William, Yoon, Jinsung, Van Der Schaar, Mihaela, 2018. Deephit: A deep learning approach to survival analysis with competing risks. In: Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 32, (1).

- Loshchilov, Ilya, Hutter, Frank, 2018. Decoupled weight decay regularization. In: International Conference on Learning Representations.

- Lu Yaozhi, Aslani Shahab, Zhao An, Shahin Ahmed H., Barber David, Emberton Mark, Alexander Daniel C., Jacob Joseph. 2023. A hybrid CNN-RNN approach for survival analysis in a Lung Cancer Screening study. arXiv preprint arXiv:2303.10789.

- Maas Andrew L., Hannun Awni Y., Ng Andrew Y., et al. Rectifier nonlinearities improve neural network acoustic models. In: International Conference on Machine Learning. Vol. 30. PMLR; 2013.

- Nix, D.A., Weigend, A.S., 1994. Estimating the mean and variance of the target probability distribution. In: Proceedings of 1994 IEEE International Conference on Neural Networks (ICNN’94). Vol. 1, pp. 55–60.

- Richardeau Frederic, Pham Thi Thuy Linh. Reliability calculation of multilevel converters: Theory and applications. IEEE Trans. Ind. Electron. 2012;60(10):4225–4233.

- Shahin Ahmed H., Jacob Joseph, Alexander Daniel, Barber David. Survival analysis for idiopathic pulmonary fibrosis using CT images and incomplete clinical data. In: Proceedings of the 5th International Conference on Medical Imaging with Deep Learning. Vol. 172. 2022. pp. 1057–1074. (Proceedings of Machine Learning Research).

- Ulyanov Dmitry, Vedaldi Andrea, Lempitsky Victor. 2016. Instance normalization: The missing ingredient for fast stylization. arXiv preprint arXiv:1607.08022.

- Wang Ping, Li Yan, Reddy Chandan K. Machine learning for survival analysis: A survey. ACM Comput. Surv. 2019;51(6):1–36.

- Wu, Zhirong, Xiong, Yuanjun, Yu, Stella X., Lin, Dahua, 2018. Unsupervised feature learning via non-parametric instance discrimination. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 3733–3742.

- Zhao, An, Shahin, Ahmed H., Zhou, Yukun, Gudmundsson, Eyjolfur, Szmul, Adam, Mogulkoc, Nesrin, van Beek, Frouke, Brereton, Christopher J., van Es, Hendrik W., Pontoppidan, Katarina, Savas, Recep, Wallis, Timothy, Unat, Omer, Veltkamp, Marcel, Jones, Mark G., van Moorsel, Coline H. M., Barber, David, Jacob, Joseph, Alexander, Daniel C., 2022. Prognostic imaging biomarker discovery in survival analysis for idiopathic pulmonary fibrosis. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 223–233.
