Abstract

Generalized linear models (GLMs) are very widely used, but formal goodness-of-fit (GOF) tests for the overall fit of the model seem to be in wide use only for certain classes of GLMs. We develop and apply a new goodness-of-fit test, similar to the well-known and commonly used Hosmer–Lemeshow (HL) test, that can be used with a wide variety of GLMs. The test statistic is a variant of the HL statistic, but we rigorously derive an asymptotically correct sampling distribution using methods of Stute and Zhu (Scand J Stat 29(3):535–545, 2002) and demonstrate its consistency. We compare the performance of our new test with other GOF tests for GLMs, including a naive direct application of the HL test to the Poisson problem. Our test provides competitive or comparable power in various simulation settings and we identify a situation where a naive version of the test fails to hold its size. Our generalized HL test is straightforward to implement and interpret and an R package is publicly available.

Supplementary Information

The online version contains supplementary material available at 10.1007/s11749-023-00912-8.

Keywords: Empirical regression process, Exponential dispersion family, Generalized linear model, Goodness-of-fit test, Hosmer–Lemeshow test

Introduction

Generalized linear models (GLMs), including among others the linear, logistic, and Poisson regression models, have been used in a vast number of application domains and are extremely popular in medical and biological applications.

Naturally, it is desirable to have a model that fits the observed data well. Hosmer and Lemeshow (1980) constructed a GOF test for the logistic regression model, the HL test, which applies a Pearson test statistic to differences of observed and expected event counts from data grouped based on the ordered fitted values from the model. As a result, their test is very easy to interpret and is extremely popular, particularly in medical applications. Due to the simplicity of the test, it is tempting to naively apply it to other GLMs with minimal modification, as has occasionally been suggested in the literature (Bilder and Loughin 2014; Agresti 1996, p. 90). However, the HL test has not been rigorously justified outside of the binomial setting, and hence, its validity is unknown. Indeed, there are indications that its limiting distribution is incorrect in the non-binomial setting, as simulation results in Sect. 5 suggest.

A considerable number of GOF tests available for GLMs with a non-binomial or non-normal response (or other regression models beyond GLMs) involve kernel density estimation or some other form of smoothing, for example, the test of Cheng and Wu (1994). However, González-Manteiga and Crujeiras (2013) mention that the selection of the smoothing parameter used in some tests is “a broadly studied problem in regression estimation but with serious gaps for testing problems”. Also, use of continuous covariates in a GLM can render certain basic tests, such as the Pearson chi-squared test, invalid (Pulkstenis and Robinson 2002).

In this paper, we work towards two goals. First, we explore some of the properties of the “naive” application of the HL test to other GLM distributions. Second, we derive a more appropriate modification to the HL test statistic and determine its correct limiting null sampling distribution. The modification is based on an application of theory developed by Stute and Zhu (2002). We show that the test statistic has an asymptotic chi-squared distribution for many GLMs in the exponential dispersion family, adding to the appealing simplicity of the test. We investigate both the new and the naive tests’ small-sample performances in a series of carefully designed computational experiments.

Section 2 gives an overview of previously developed GOF tests. Section 3 has a more detailed description of certain GOF tests, including the naive HL test and our new test. We present our main theorems concerning the asymptotic distribution of our proposed test under the null hypothesis as well as consistency results. The design for our simulation study comparing these tests is laid out in Sect. 4, and the results are provided in Sect. 5. We find that our test provides competitive or comparable power to other available tests in various simulation settings, is computationally efficient, and avoids the use of kernel-based estimators. Finally, we discuss the results and potential future work in Sect. 6. Proofs of several results are in the supplementary material and an R package implementing the generalized Hosmer–Lemeshow test is available at https://github.com/nikola-sur/goodGLM.

Background and notation

We let Y be a response variable that is associated with a covariate vector, X, where . We write , , to denote a random sample where each has the same distribution as (X, Y), and provides observed data . Limits are taken with the sample size n tending to infinity.

The HL GOF test assesses departures between observed and expected event counts from data grouped based on the fitted values from the GLM. For instance, in the binary case for logistic regression, we assume

for some . The likelihood function is then given by

from which a maximum likelihood estimate (MLE), , of can be obtained. Computing the HL test statistic starts with partitioning the data into G groups. This is often done in a way so that the groups are of approximately equal size and fitted values within each group are similar. The partition fixes interval endpoints, for , and . The are often set to be equal to the logit of equally spaced quantiles of the fitted values, . We define , , , , and for , where is the indicator function on a set A. Here, represents the number of observations in the gth group, and represents the average of the fitted values within the gth group. The HL test statistic is

| 1 |

The theory behind this test, based on work by Moore and Spruill (1975), suggests that the asymptotic null distribution of the test statistic follows the distribution of a weighted sum of chi-squared random variables. Hosmer and Lemeshow (1980) approximated this distribution with a single chi-squared distribution, where the degrees of freedom were determined partly by simulation.
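As an illustration of the grouping and Pearson-type construction described above, the following is a minimal Python sketch of the classical HL statistic in its standard textbook form: the sum over groups of (observed − expected)² divided by the group size times p̄(1 − p̄), where p̄ is the mean fitted probability in the group. The function name `hl_statistic`, the equal-count grouping rule, and the toy data are assumptions made for illustration; this is not the code of the R package mentioned in this paper.

```python
def hl_statistic(y, p_hat, n_groups=10):
    """Classical Hosmer-Lemeshow statistic for binary responses y
    and fitted probabilities p_hat (illustrative sketch only)."""
    # Order observations by fitted value; ties keep their input order.
    order = sorted(range(len(y)), key=lambda i: p_hat[i])
    n = len(order)
    stat = 0.0
    for g in range(n_groups):
        # Groups of near-equal size over the ordered fitted values.
        idx = order[g * n // n_groups:(g + 1) * n // n_groups]
        n_g = len(idx)                          # observations in group g
        observed = sum(y[i] for i in idx)       # observed event count
        expected = sum(p_hat[i] for i in idx)   # expected event count
        p_bar = expected / n_g                  # mean fitted probability
        stat += (observed - expected) ** 2 / (n_g * p_bar * (1.0 - p_bar))
    return stat


# Toy data (hypothetical): four observations split into two groups.
example = hl_statistic([0, 1, 1, 1], [0.2, 0.2, 0.8, 0.8], n_groups=2)
```

The sketch assumes at least one observation per group and fitted probabilities strictly between 0 and 1; otherwise a denominator is zero.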

To generalize the HL test to other GLMs, we follow Stute and Zhu (2002). We assume that under the null hypothesis, given by (4) below. As a consequence, , and we may define , and . We also assume that for all x in the support of X. The GLM that we fit and test assumes that the conditional density of Y given is an exponential family member with inverse link function, m. Specifically,

| 2 |

where the vector , , and x are related by , and is a suitable dominating measure. The parameter must belong to and we must have for all x in the support of X. In such a model, the variance is a function of the mean and we may write , for a smooth function v determined by the function b. In some cases it is of interest to add an (unknown) dispersion parameter, , to the model and assume further that there is a scalar such that the density of Y given has the form

| 3 |

where is a (possibly different) dominating measure, and , , and x are related as before. With as in (3), we can write . The parameter space for is for a suitable .

Let . We test the null hypothesis

| 4 |

for a pre-specified inverse link function . Our alternative hypothesis is

| 5 |

That is, we simultaneously test for a misspecification of the link function and of the conditional response distribution. We denote the maximum likelihood estimate of by and let be some consistent estimate of ; if there is no dispersion parameter, we take .

For many popular link functions , it is automatic that almost surely for all and any X distribution. For other link functions and some distributions for the predictor X this may not hold for all ; this motivates our definition of . Similar comments apply to models with an unknown dispersion parameter as in (3); see the supplementary material.

Related work

A grouped GOF test for logistic regression models similar to the HL test was introduced by Tsiatis (1980), which uses a data-independent partitioning scheme (i.e., not random). Canary et al. (2016) constructed a generalized Tsiatis test statistic for binary regression models with a non-canonical link, and then used a data-dependent partitioning scheme. We note that the theoretical validity of such a data-dependent partitioning method for the generalized Tsiatis test should be verified, similar to what was done by Halteman (1980) for logistic regression. Our work focuses on proving the validity of a large class of data-dependent partitioning schemes, also allowing for extensions to a much wider class of GLMs, as Canary (2013) briefly suggested. Our test also extends the statistic with all weights equal to one, from Hosmer and Hjort (2002), to a broader class of problems. There are some other versions of the HL test for specific models other than logistic regression, including binomial regression models with a log link (Blizzard and Hosmer 2006; Quinn et al. 2015) and other non-canonical links (Canary et al. 2016). Other models include the multinomial regression model (Fagerland et al. 2008) and the proportional odds and other ordinal logistic regression models (Fagerland and Hosmer 2013, 2016).

GOF tests not based on the HL test have been constructed that can be used with broader classes of GLMs (Su and Wei 1991; Stute and Zhu 2002; Cheng and Wu 1994; Lin et al. 2002; Liu et al. 2004; Rodríguez-Campos et al. 1998; Xiang and Wahba 1995). A review of GOF tests for regression models is given by González-Manteiga and Crujeiras (2013). While many of these tests appear to have merit, they do not seem to have been widely adopted in practice. For instance, the p-values accompanying some tests need to be obtained through simulation and calculations may be time consuming in the presence of several explanatory variables (Christensen and Lin 2015).

Methods and test statistics

Tests for GOF require statistics measuring departures from the null hypothesis. We use the residual process defined in Stute and Zhu (2002) for by:

| 6 |

For the special case of logistic regression, the HL test can be rewritten as a quadratic form, in terms of . Define the (length G) vector

| 7 |

Then, the HL test statistic can be rewritten as , where

| 8 |

Provided that —a requirement not always cited in references to the HL test—and using Theorem 5.1 in Moore and Spruill (1975), Hosmer and Lemeshow (1980) show that their test statistic is asymptotically distributed under the null hypothesis as a weighted sum of chi-squared random variables, with

where each is a chi-squared random variable with 1 degree of freedom, and each is an eigenvalue of a particular matrix that depends on and the distribution of X. Then, through simulations, they conclude that the term can be approximated in various settings by a distribution, leading to the recommended degrees of freedom. In other words, However, in certain settings with a finite sample size this does not serve as a good approximation, as we discuss in Sect. 5.

Naive generalization of the Hosmer–Lemeshow test

The HL test statistic depends on the binomial assumption only through D in (8), with the gth diagonal element representing an estimate of the variance of the counts in the gth group, divided by n. To extend this test to other GLMs, it is tempting to define a “naive” HL test statistic

| 9 |

where

for , similar to the estimates of the variances of group counts given in the original HL test through D. For example, for Poisson regression models,

since the conditional variance of the response is equal to the conditional mean. This idea is very briefly suggested in Agresti (1996) on p. 90, and in Bilder and Loughin (2014), but has not been developed further or assessed in the literature. The limiting distribution of this test statistic has not been determined, although one might naively assume that it retains the same limit as the original HL test. Implementing this test for Poisson regression models, our simulation results suggest that as the number of estimated parameters in the model increases, the mean and variance of the test statistic tend to decrease for a fixed sample size. Thus, it is apparent that the naive limiting distribution is not correct. Further properties of this test are discussed in Sect. 5.
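The naive construction can be sketched in Python as follows. The sketch assumes that the variance of the count in each group is estimated by the group size times the variance function evaluated at the mean fitted value in that group, mirroring the binomial form; this choice, the function name `naive_hl_statistic`, and the toy data are illustrative assumptions rather than the exact definition in (9). For Poisson responses the variance function is the identity, so each denominator reduces to the expected count in the group.

```python
def naive_hl_statistic(y, mu_hat, variance, n_groups=10):
    """Naive HL-type statistic for a GLM with fitted means mu_hat and
    variance function `variance` (illustrative sketch only)."""
    order = sorted(range(len(y)), key=lambda i: mu_hat[i])
    n = len(order)
    stat = 0.0
    for g in range(n_groups):
        # Groups of near-equal size over the ordered fitted means.
        idx = order[g * n // n_groups:(g + 1) * n // n_groups]
        n_g = len(idx)
        observed = sum(y[i] for i in idx)
        expected = sum(mu_hat[i] for i in idx)
        # Assumed variance estimate: n_g * v(mean fitted value in group).
        group_var = n_g * variance(expected / n_g)
        stat += (observed - expected) ** 2 / group_var
    return stat


# Poisson case (hypothetical toy data): variance equals the mean.
poisson_stat = naive_hl_statistic(
    [1, 3, 5, 5], [1.0, 1.0, 4.0, 4.0], variance=lambda m: m, n_groups=2
)
```

Passing `variance=lambda m: m * (1 - m)` recovers the usual binomial form of the HL statistic, which makes clear that only the variance estimate changes in the naive extension.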

The generalized HL test statistic

The HL test uses a limiting distribution that is only partially supported mathematically and does not seem to be appropriate for finite samples for GLMs outside of logistic regression. Rather than try to fix the flaws in the naive HL test, we propose a test statistic whose limiting law is demonstrated by appropriate techniques to be chi-squared with a determined number of degrees of freedom (less than or equal to G). We allow cell boundaries that may depend on the data but must be distinct and properly ordered for each n. We assume each converges in probability to some , that these limits are all distinct and that they satisfy for all g. In our simulation study, described in Sect. 4, we use random interval endpoints so that is approximately equal across groups. Implementation details are in the supplementary material.

We follow Stute and Zhu (2002) to directly develop our generalized HL test and rigorously determine its correct limiting distribution. We first define

for , and . Note that in the above definition of , we write to denote the derivative of m(u) with respect to u. Also, let be the matrix whose ith row is given by , for . Define and the same as and , respectively, but evaluated at instead of .

Let be the identity matrix, and denote the generalized hat matrix by . Define

| 10 |

Denote the Moore–Penrose pseudoinverse of a matrix A by . Our “generalized HL” (GHL) test statistic is then given as

| 11 |

We note that without the term in , the GHL test statistic reduces to the naive GHL statistic. Under some conditions described next, we have under the null hypothesis that

| 12 |

with , where is specified below in (13).

In this work, we focus on the GHL test with G = 10 groups. However, the impact of the choice of the number of groups is a well-known limitation of group-based test statistics. In the long history of the HL test, most sources use G = 10, and in our limited investigation we found no evidence that this is a bad choice. As informal guidance, we remark that Bilder and Loughin (2014) suggest trying a few different values of G to make sure that a single result is not overly influenced by unfortunate grouping.

GHL test statistic limiting distribution

We first state conditions used in our main theorem on the limiting distribution of under the null hypothesis (4). The theorem describes sufficient conditions for the convergence in distribution given in (12). Conditions (A), (C), and the first inequality in (B) below come from Stute and Zhu (2002). We assume the conditional density of Y given X is given by (2) or (3), with respect to some dominating measure, say . For models without a dispersion parameter, the score function from observation i is , given by , and the Fisher information matrix is .

Condition (A)

exists and is positive definite.

- Let . Under the null hypothesis, we have

Condition (B): The function m is twice continuously differentiable and the function v is continuously differentiable. For some we have

where

Condition (C): Define . Then, is uniformly continuous in u at (and ). This condition requires that have a continuous distribution; in particular, .

Condition (D): Under the null hypothesis, with as defined below,

, and

.

We specify when is as in (2) or (3). Define the column vector

Define the vector-valued function by

The matrix is defined by

| 13 |

where, for ,

In the supplementary material, we prove our main theorem.

Theorem 1

Suppose that , conditions (A), (B), and (C) hold, and that . Assume the cell boundaries satisfy for and that the are distinct. Then, under the null hypothesis given by (4), with as in (2) or (3), we have

where is as defined in (7), and is given by (13).

If there exists any sequence of matrices that satisfies condition (D), then, putting ,

If conditions (A), (B), and (C) hold, then the particular matrix in (10) converges almost surely to , so (D1) holds under the null hypothesis. Finally, if conditions (i) and (ii) of Sect. 3.4 are satisfied, then conditions (B) and (C) hold.

GLMs for which the GHL test is valid

Formally, conditions (A), (B), (C), and (D) should be verified before using the generalized HL test statistic, . From Theorem 1, the conditions below are sufficient for the validity of conditions (B) and (C) provided that is of the form presented in (2) or (3):

(i) One of the distribution/link function combinations from Table 1 is used.

(ii) The joint probability distribution of the explanatory variables, X, has compact support. For all in an open neighbourhood of the variable has a bounded continuous Lebesgue density, the support, , of the linear predictor is an interval, and .

The supplementary material outlines how to verify conditions (A), (B), (C), and (D), weaken the compactness assumption in (ii), and extend our test to other GLMs.

Table 1.

Several possible distribution and link function combinations. Distributions are parameterized so that or represents the mean of the distribution. *Gamma distribution with variance . **Inverse Gaussian distribution with variance . ***Negative binomial distribution with variance . For the negative binomial distribution, k is assumed to be known

| Distribution | Example possible links |
|---|---|
| Normal | identity |
| Bernoulli | logit, probit, cauchit, cloglog |
| Poisson | log, square root |
| Gamma()* | log |
| IG()** | log |
| NB()*** | log |

Consistency of the GHL test

We discuss power in terms of consistency; we outline one possible set of conditions on the alternative distribution, the specific model, and the choice of cell boundaries that will ensure that our test is consistent. Precise versions of conditions (K1-5) that follow are in the supplementary material.

Our conclusions are affected by the presence or absence of an intercept term. Here, we present results for models that do not have an intercept. For such models, we assume that the rows of the design matrix are i.i.d. and have a Lebesgue density. Models with intercepts are discussed in the supplementary material. We let denote the linear predictor of our GLM.

Behaviour of coefficient estimator under the alternative

In the supplementary material, we give conditions (K1), used to check conditions in White (1982), which guarantee that the estimate has a limit under the alternative being considered; we denote this limit by . The most important additional components are a restriction to a compact parameter set not containing , say , uniformity over in some of our conditions, and the assumption that X has a density. From White (1982), conditions (K1) are enough to ensure the existence of a (possibly not unique) maximizer of the GLM likelihood.

Also in the supplementary material, we give conditions (K2) on the joint density of X and Y which come, essentially, from White (1982). The two conditions (K1) and (K2) now imply Assumptions A1, A2, and A3 of White (1982). In turn, these imply almost sure convergence of to a unique . We write for the linear predictor evaluated at . That is, .

Behaviour of interval endpoints under the alternative

We consider both fixed and random interval endpoints. Our consistency results need assumptions about the probability that belongs to each limiting interval; these probabilities depend on and the assumptions will be false for . For some choices of intervals, there can be intervals that have no observations almost surely. We need to assume that this does not happen for the predictor . In the supplementary material, we present condition (K3), which strengthens our main condition (C); this condition requires .

There may be such that is bounded; in that case some methods of choosing boundaries (like fixed boundaries) will not satisfy (K3). However, the method used in our simulations chooses cell boundaries using the estimate so as to make all the cells have approximately the same sum of variances of the responses. For this method, (K3) holds.
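One simple way to form cells with approximately equal sums of estimated variances is sketched below in Python. It orders observations by the estimated linear predictor and assigns each one to a cell according to the cumulative estimated variance accumulated before it. This is a hypothetical heuristic written for illustration; the function name `variance_balanced_groups` and its arguments are assumptions, and the procedure actually used in the simulations is the one documented in the supplementary material.

```python
def variance_balanced_groups(eta_hat, var_hat, n_groups):
    """Return a cell label in 0..n_groups-1 for each observation so that
    cells, ordered by linear predictor, carry roughly equal total
    estimated variance (illustrative heuristic only)."""
    order = sorted(range(len(eta_hat)), key=lambda i: eta_hat[i])
    total = sum(var_hat)
    labels = [0] * len(eta_hat)
    running = 0.0
    for i in order:
        # Cell index from the share of total variance accumulated so far.
        g = min(int(n_groups * running / total), n_groups - 1)
        labels[i] = g
        running += var_hat[i]
    return labels


# Toy data (hypothetical): the first observation carries half the variance.
labels = variance_balanced_groups([0.0, 1.0, 2.0, 3.0], [3.0, 1.0, 1.0, 1.0], 2)
```

Because cells are contiguous in the ordered linear predictor, the implied cell boundaries are data dependent, which is the situation covered by the assumptions on random interval endpoints.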

Behaviour of covariance estimate under the alternative

We consider our estimate of . Let and be the matrices in (10) and (13) evaluated at a general ; as before, . Condition (B) imposes moment conditions in a neighbourhood implying our estimate is consistent for . Assumption (K4) extends these conditions to every so that they apply to the unknown value . Under conditions (K1–4), our arguments show that, with probability equal to 1, uniformly in ; moreover, is a continuous function of so converges to .

In the supplementary material, we show that under reasonable conditions the matrix has rank G or and that when n is large has the same rank with high probability. We delineate the special cases where rank arises. Here we give one common special case of our results; a more general version is in the supplementary material.

Theorem 2

Fix . Assume conditions (K1), (K3), and (K4) and that we are not fitting an intercept. Then, unless there is a constant c such that

| 14 |

for all . If (14) holds for all , then .

We turn to the rank of . Unless identity (14) holds on , the rank of is G under our conditions. Since converges to and is in , we find that converges to 1 in this case.

Our consistency theorem

Consistency requires that we have modelled the mean incorrectly in a fairly strong sense. For , define

Also define .

Condition (K5): The model fitted does not have an intercept and either:

For every c the set where identity (14) does not hold has positive Lebesgue measure. For all , ; or

Identity (14) holds for all u, and for all .

Theorem 3

Under conditions (K1), (K2), (K3), (K4), and (K5),

We note that in the above theorem, the test based on is consistent against the alternative in question. That is, for any , under we have as , where .

Simulation study design

We perform a simulation study to assess the performance of our proposed test. The purpose of this paper is to extend the HL test to a wide array of new distribution models. We therefore focus on applications to data generated from Poisson distributions, since Poisson appears to be a common non-binomial GLM, but we include other response distributions such as the gamma and inverse Gaussian distributions. While this paper does not study the performance of the GHL test in binomial models, we do compare the performance of the HL test and the binomial version of the GHL test in Surjanovic and Loughin (2021). That work arose because we discovered that certain plausible data structures cause the regular HL test to fail, whereas the binomial version of the GHL test appropriately resolves the issue. We carefully document that problem and discuss it in Surjanovic and Loughin (2021) as a warning to practitioners who routinely apply HL to binomial models.

The binomial version of the GHL test is also studied in Hosmer and Hjort (2002), where it is equivalent to their test when all weights are set to 1. Full details of simulation settings are in the following sections and the supplementary material. We first give an overview and describe features common to all experiments.

Unless otherwise specified, the mean is taken to be where is the log link, and the null hypothesis is that the Poisson distribution with this structure is correct. We compare rejection rates under different null and alternative hypothesis settings for four different GOF tests: the naive generalized HL test, our new generalized HL test (GHL), the Stute-Zhu (SZ) test, and the Su-Wei (SW) test. For HL and GHL, we use unless specified otherwise. The naive generalized HL test is included to demonstrate that a test without proper theoretical justification may fail. We believe that the SZ test has an appealing construction, but is perhaps not as well known as the SW test, which can be found in other simulation studies. These two tests are included because they do not rely heavily on kernel-based density estimation and are relatively straightforward to implement.

For let be 1 if and only if for all . Define

Using our notation, the SW test statistic is defined as

| 15 |

The SZ test statistic has a more complicated form as a Cramér–von Mises statistic applied to a specially transformed version of the process. We also slightly modify the SZ test statistic to detect overdispersion in the Poisson case. These test statistics, including the modification to SZ, are described in greater detail in the supplementary material.

We use sample sizes of 100 and 500 throughout this simulation study, representing moderate and large sample sizes in many studies in medical and other disciplines where GLMs are used. Unless otherwise stated, only the results for the sample size of 100 are reported for the null and power simulation settings; important differences between the two sample sizes are summarized in Sect. 5. For each simulation setting, we produce 2500 realizations. On each realization, we record a binary value for each test indicating whether the test rejects the null hypothesis for that data set. The proportion of the 2500 realizations for which a test rejects the null hypothesis estimates the test’s true probability of type I error or power in that setting. In the null simulations, approximate 95% confidence intervals for the binomial probability of rejection can be obtained from the observed rejection rates by adding and subtracting 0.009 (1.96 × √(0.05 × 0.95/2500) ≈ 0.009). Conservative 95% confidence intervals for power can be obtained from the observed rejection rates by adding and subtracting 0.02, accounting for the widest interval, when a proportion is equal to 0.5 (1.96 × √(0.5 × 0.5/2500) ≈ 0.02). The simulations are performed using R.
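The two margins of error quoted above can be checked with a short Python calculation. It assumes the usual normal approximation to a binomial proportion with critical value 1.96; the helper name `half_width` is introduced here only for illustration.

```python
import math


def half_width(p, n_rep, z=1.96):
    """Half-width of a normal-approximation confidence interval for a
    proportion p estimated from n_rep independent realizations."""
    return z * math.sqrt(p * (1.0 - p) / n_rep)


null_hw = half_width(0.05, 2500)   # nominal level 0.05: about 0.0085
power_hw = half_width(0.5, 2500)   # worst case p = 0.5: about 0.0196
```

Rounded to three and two decimal places respectively, these give 0.009 and 0.02, and 0.05 ± 0.009 gives the interval (0.041, 0.059) used to flag rejection rates in the null simulations.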

Null distribution

Under the null hypothesis described by (4), where is a Poisson distribution, we consider six settings with a log link and three with a square root link, varying the distribution of explanatory variables and the true parameter values in each case. In the first three null settings with a log link, a model with a single covariate, X, and an intercept term is used. These settings serve to examine the effect of small and large fitted values on the null distribution of the test statistics. The distribution of X and values of and are chosen so that the fitted values take on a wide range of values (approximately 0.1 to 100) in the first setting, are moderate in size (approximately 1 to 10) for the second setting, and are very small (approximately 0.1 to 1) for the third setting. Specifics on these and other settings are given in the supplementary material.

For settings 4, 5, and 6, coefficients are chosen so that the fitted values are moderate in size (rarely less than 1, with an average of approximately 4 or 5), so that other sources of potential problems for the GOF tests can be explored. The fourth setting examines a model including two continuous covariates and one dichotomous covariate (the “Normal-Bernoulli” model), and is similar to the one used in Hosmer and Hjort (2002). The fifth and sixth simulation settings examine the effects of correlated and right-skewed covariates, respectively. It is well known that in the presence of multicollinearity the variance of regression parameter estimates can become inflated. The correlated covariates setting is included to assess the impact of multicollinearity on the GOF tests, since the estimated covariance matrix of the regression coefficients is used in the calculation of the GHL test statistic. The right-skewed covariate in setting 6 is included in order to assess its potential impact on the SZ test, since this test makes use of kernel-based density estimation as a part of the calculation of the test statistic, albeit in a one-dimensional case.

We next create three settings labelled 1b, 2b, and 3b, that are the same as settings 1, 2, and 3, respectively, except the true and fitted models use a square root link, rather than a log link. We also use simulations to verify that the proposed GHL test maintains its size in models where there are unknown dispersion parameters that must be estimated. We consider several settings with gamma, inverse Gaussian, and negative binomial responses with a single covariate, log link, and moderate-sized means. We fix the true dispersion parameter to be , so that the variance of the response distribution given its mean, , is , , and , for the gamma, inverse Gaussian, and negative binomial responses, respectively. The dispersion parameter for the gamma and inverse Gaussian models is estimated using a weighted average of the squared residuals, which is the default in summary.glm(). The negative binomial distribution with an unknown dispersion parameter is a popular alternative to Poisson regression that does not fall within the exponential dispersion family framework presented. In this case, the parameter that controls the variance is estimated using maximum likelihood, which is the default estimation procedure in MASS::glm.nb() in R.

Finally, we consider simulation settings that examine the performance of the GHL and naive generalized HL tests when only discrete covariates are present. We repeat setting 2 above, except that X is sampled uniformly from 30 or 50 possible values on a grid. These numbers of points are chosen so that the data can be split into at least groups. All model coefficients and further details of the simulation study, such as the implementation of the GHL test for the negative binomial model, are given in the supplementary material.

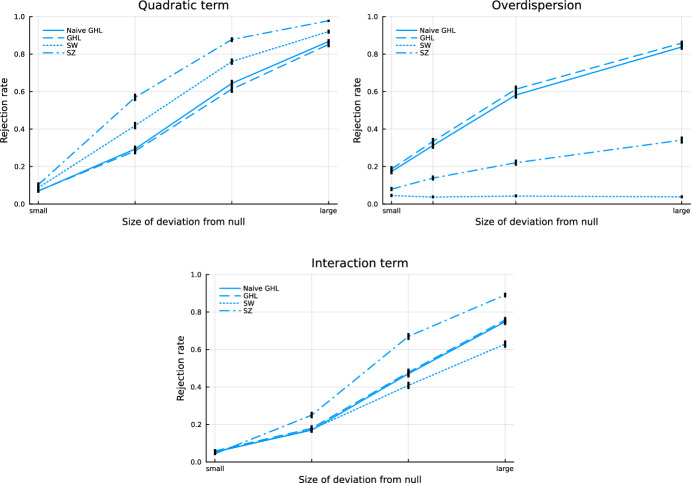

Power

To examine the power of the GOF tests, we consider four types of deviations from the null model: a missing quadratic term, overdispersion, a missing interaction term, and an incorrectly specified link function. These settings are similar to those used in Hosmer and Hjort (2002) and are realistic model misspecifications. In the first three settings, the severity of deviation between the true model and the fitted model is controlled by regression parameters to represent four levels ranging from “small” to “large” deviations from the assumed additive linear model. In the incorrect link setting, the true model uses a square root link, but the fitted model assumes a log link. In all four settings, we use a Poisson GLM and choose appropriate regression coefficients so that the fitted values are moderate in size, rarely less than 1 and often smaller than 10, to ensure that a large rejection rate is not simply due to small fitted values in the Pearson-like test statistics. All four power simulation settings are described in detail in the supplementary material.

Performance with larger models

We additionally assess the performance of each of the tests with larger models. The theoretical results presented in this work assume a constant dimension, d, and consider limiting distributions as the sample size tends to infinity. Here, we again consider fixed d, but as a factor worth studying on its own.

Realizations of Y are again drawn from a Poisson distribution with a log link and parameters. We use sample sizes of 100 and 500. To keep the distribution of the fitted values approximately constant as d is varied, we set , and . This gives a distribution of fitted values mostly within the interval [1, 10], ensuring that expected counts within each group used in the calculation of the Pearson statistic are sufficiently large. The SW test is omitted due to computational challenges that arise with this test with large models and because we observe that a large proportion of the data needs to be omitted when d is large and for the test statistic to be computed (see the supplementary material).

Simulation results

Null distribution

From the null simulation results in Table 2, we see that the estimated type I error rate for the GHL test does not significantly differ from the nominal level, since all values are in the interval (0.041, 0.059). However, the naive generalized HL test falls out of this interval in three settings, whereas the SW and SZ tests fall out of this interval in two and four settings, respectively. Interestingly, even with in setting 4, we begin to see a decreased type I error rate for the naive generalized HL test, a phenomenon discussed in more detail later in this section. However, with a sample size of 500, the naive generalized HL test and the SZ test generally have better empirical rejection rates, whereas the SW test has similar poor performance even with a larger sample size. Table 3 summarizes type I error rates for the GHL test in the presence of a dispersion parameter. A larger sample size is sometimes needed to ensure that the finite sampling distribution of the test statistic is well approximated by its limiting chi-squared distribution. In our simulation settings, the estimated type I error rate is closer to the nominal rate for the larger sample size of 500. Type I error rates for settings with discrete covariates are in Table 4. The naive generalized HL test and the GHL test hold their size for the considered sub-settings.

Table 2.

Estimated type I error rates (null setting simulation results)

| Statistic/setting | 1 | 1b | 2 | 2b | 3 | 3b |
|---|---|---|---|---|---|---|
| | 0.058 | 0.061 | 0.042 | 0.051 | 0.051 | 0.047 |
| | 0.053 | 0.056 | 0.049 | 0.052 | 0.052 | 0.050 |
| | 0.043 | 0.062 | 0.041 | 0.052 | 0.042 | 0.048 |
| | 0.032 | 0.045 | 0.039 | 0.039 | 0.044 | 0.041 |

| Statistic/setting | 4 | 5 | 6 |
|---|---|---|---|
| | 0.037 | 0.055 | 0.059 |
| | 0.048 | 0.056 | 0.054 |
| | 0.051 | 0.048 | 0.053 |
| | – | 0.045 | 0.049 |

Numbers in italics represent cases where the estimated rejection rate is significantly different from 0.05 (i.e., outside of the interval (0.041, 0.059))
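The interval used to flag rejection rates can be related to the number of simulation replications through a normal approximation to the binomial distribution. The following is a minimal sketch under that assumption; the replication count of 2500 is hypothetical and not taken from the paper, and the function names are illustrative only.

```python
import math


def acceptance_interval(alpha, n_reps, z=1.959963984540054):
    """Normal-approximation 95% interval for an estimated rejection rate
    when the true rejection rate equals alpha."""
    se = math.sqrt(alpha * (1.0 - alpha) / n_reps)
    return alpha - z * se, alpha + z * se


def flag_outside(rates, interval):
    """Return the rates that fall strictly outside the open interval."""
    lo, hi = interval
    return [r for r in rates if not (lo < r < hi)]


# Hypothetical replication count (an assumption, not stated in the text).
lo, hi = acceptance_interval(0.05, 2500)
print(round(lo, 4), round(hi, 4))

# First row of Table 2 (settings 1 to 3b), checked against the
# interval quoted in the table note.
first_row = [0.058, 0.061, 0.042, 0.051, 0.051, 0.047]
print(flag_outside(first_row, (0.041, 0.059)))
```

Values that sit exactly on an endpoint are sensitive to floating-point comparison and to whether the interval is read as open or closed, so such cases are best judged by hand.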

Table 3.

Estimated type I error rates for the GHL test in the presence of a dispersion parameter for samples of size and . For the negative binomial response with , approximately 3% of simulation draws were discarded due to GLM convergence warnings

| Distribution | | |
|---|---|---|
| Gamma | 0.041 | 0.042 |
| Inverse Gaussian | 0.064 | 0.055 |
| Negative binomial | 0.042 | 0.053 |

Table 4.

Estimated type I error rates for the naive generalized HL and GHL tests when all covariates are discrete. Sample sizes and are considered, with the discrete covariate being a random sample of size n from a uniformly spaced sequence of length on

| Statistic | | |
|---|---|---|
| () | 0.051 | 0.051 |
| () | 0.053 | 0.042 |
| () | 0.052 | 0.052 |
| () | 0.050 | 0.044 |

Power

The power simulation results displayed in Fig. 1 show our new test does have power to detect each of the violated model assumptions we tested. However, for those model flaws that are detectable by the SZ and SW tests, these two tests generally have better power than the tests based on grouped residuals. In the simulation settings we explored, our test had power to detect overdispersion while the SW and SZ tests had little or no power, although these two competitors were not necessarily designed to detect overdispersion.

Fig. 1.

Power simulation results for the first three settings. Solid red lines are 95% Wilson CIs for the mean rejection rate (colour figure online)
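For readers who wish to reproduce intervals of the kind shown in Fig. 1, the Wilson score interval for a binomial proportion can be computed directly. This is a minimal sketch of the standard formula; the function name and the example counts are illustrative assumptions and do not come from the paper's simulations.

```python
import math


def wilson_interval(successes, n, z=1.959963984540054):
    """Wilson score interval for a binomial proportion (default: 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1.0 + z * z / n
    centre = (p_hat + z * z / (2.0 * n)) / denom
    half_width = z * math.sqrt(
        p_hat * (1.0 - p_hat) / n + z * z / (4.0 * n * n)
    ) / denom
    return centre - half_width, centre + half_width


# Hypothetical example: 640 rejections in 1000 simulated data sets.
lower, upper = wilson_interval(640, 1000)
print(lower, upper)
```

Unlike the simple normal-approximation interval, the Wilson interval stays within [0, 1] and behaves sensibly when the estimated rejection rate is close to 0 or 1, which is relevant for power curves that approach either extreme.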

Larger models

The null distribution of the naive generalized HL test statistic is not well approximated by the usual chi-squared distribution in this setting with a finite sample size. Table 5 shows the impact of the number of parameters on the estimated mean and level of the naive generalized HL test statistic: the degrees-of-freedom approximation for this test deteriorates as the model size grows relative to the sample size. This adverse effect is less pronounced with a larger sample size. With a sample size of 100, the estimated type I error rate of the naive generalized HL test steadily decreases from about 0.050 to 0.001 as d grows from 2 to 50; with a sample size of 500, it decreases only to 0.030. Our proposed test does not seem to be affected by the number of parameters in the model. Similar results were obtained for both tests with , used to ensure that , as is required by the traditional HL test on which the naive generalized HL test is based.

Table 5.

Estimated means (top) and levels (bottom) of the naive HL and generalized HL statistics for Poisson regression models with and

| Statistic | n | | | | | | |
|---|---|---|---|---|---|---|---|
| Naive HL | 100 | 8.05 | 7.24 | 6.48 | 5.61 | 4.76 | 3.85 |
| Generalized HL | 100 | 8.99 | 8.89 | 9.03 | 9.09 | 9.20 | 9.13 |
| Naive HL | 500 | 8.02 | 7.86 | 7.81 | 7.57 | 7.34 | 7.23 |
| Generalized HL | 500 | 8.98 | 8.95 | 9.09 | 9.09 | 9.02 | 9.02 |

| Statistic | n | | | | | | |
|---|---|---|---|---|---|---|---|
| Naive HL | 100 | 0.050 | 0.030 | 0.012 | 0.005 | 0.002 | 0.001 |
| Generalized HL | 100 | 0.044 | 0.049 | 0.048 | 0.053 | 0.054 | 0.056 |
| Naive HL | 500 | 0.049 | 0.047 | 0.045 | 0.036 | 0.030 | 0.030 |
| Generalized HL | 500 | 0.050 | 0.043 | 0.056 | 0.054 | 0.051 | 0.054 |

For the naive HL and generalized HL test statistics, the means should be approximately 8 and 9, respectively. In all cases, the type I error rate should be 0.05.

Discussion

The simulation results of Sect. 5 show that the GHL test provides competitive or comparable power in various simulation settings. Our test is also computationally efficient, straightforward to implement, and works in a variety of scenarios. There is no need for a choice of a kernel bandwidth (although the choice of number of groups, G, can play a role in determining the outcome of the test), and the output can be interpreted in a meaningful way by assessing differences between observed and expected counts in each of the groups. The naive generalization of the HL test does not work well under certain settings, but use of the GHL test resolves these issues.
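The group-wise comparison of observed and expected counts mentioned above can be sketched as follows. This is only an illustration of the grouping idea for a Poisson response: it forms equal-size groups from the ordered fitted values (an assumption about the grouping rule) and computes a naive Pearson-type sum. It does not reproduce the standardization used by the GHL statistic, and the function names and data are hypothetical.

```python
def grouped_counts(y, fitted, n_groups=10):
    """Sort observations by fitted value, split them into n_groups groups of
    (nearly) equal size, and return (observed, expected) totals per group."""
    order = sorted(range(len(y)), key=lambda i: fitted[i])
    n = len(order)
    groups = []
    for g in range(n_groups):
        start = g * n // n_groups
        stop = (g + 1) * n // n_groups
        idx = order[start:stop]
        observed = sum(y[i] for i in idx)
        expected = sum(fitted[i] for i in idx)
        groups.append((observed, expected))
    return groups


def naive_pearson_statistic(groups):
    """Naive Pearson-type sum of (O - E)^2 / E over groups.
    Assumes every group has a positive expected count."""
    return sum((o - e) ** 2 / e for o, e in groups)


# Hypothetical counts and fitted means, for illustration only.
y = [2, 2, 3, 5]
fitted = [1.0, 2.0, 3.0, 4.0]
groups = grouped_counts(y, fitted, n_groups=2)
for observed, expected in groups:
    print(observed, expected, observed - expected)
print(naive_pearson_statistic(groups))
```

Inspecting the per-group differences shows where along the range of fitted values the model under- or over-predicts, which is the interpretive advantage of grouped tests.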

Acknowledgements

We acknowledge the support of the Natural Sciences and Engineering Research Council of Canada (NSERC). N.S. acknowledges the support of a Vanier Canada Graduate Scholarship.

Declarations

Conflict of interest

The authors declare no conflict of interest.

References

- Agresti A. An introduction to categorical data analysis. New York: Wiley; 1996.

- Bilder CR, Loughin TM. Analysis of categorical data with R. Boston: Chapman and Hall/CRC; 2014.

- Blizzard L, Hosmer DW. Parameter estimation and goodness-of-fit in log binomial regression. Biom J. 2006;48(1):5–22. doi: 10.1002/bimj.200410165.

- Canary JD. Grouped goodness-of-fit tests for binary regression models. PhD thesis, University of Tasmania; 2013.

- Canary JD, Blizzard L, Barry RP, Hosmer DW, Quinn SJ. Summary goodness-of-fit statistics for binary generalized linear models with noncanonical link functions. Biom J. 2016;58(3):674–690. doi: 10.1002/bimj.201400079.

- Cheng KF, Wu JW. Testing goodness of fit for a parametric family of link functions. J Am Stat Assoc. 1994;89(426):657–664. doi: 10.1080/01621459.1994.10476790.

- Christensen R, Lin Y. Lack-of-fit tests based on partial sums of residuals. Commun Stat Theory Methods. 2015;44(13):2862–2880. doi: 10.1080/03610926.2013.844256.

- Fagerland MW, Hosmer DW. A goodness-of-fit test for the proportional odds regression model. Stat Med. 2013;32(13):2235–2249. doi: 10.1002/sim.5645.

- Fagerland MW, Hosmer DW. Tests for goodness of fit in ordinal logistic regression models. J Stat Comput Simul. 2016;86(17):3398–3418. doi: 10.1080/00949655.2016.1156682.

- Fagerland MW, Hosmer DW, Bofin AM. Multinomial goodness-of-fit tests for logistic regression models. Stat Med. 2008;27(21):4238–4253. doi: 10.1002/sim.3202.

- González-Manteiga W, Crujeiras RM. An updated review of goodness-of-fit tests for regression models. TEST. 2013;22(3):361–411. doi: 10.1007/s11749-013-0327-5.

- Halteman WA. A goodness of fit test for binary logistic regression. Unpublished doctoral dissertation, Department of Biostatistics, University of Washington, Seattle, WA; 1980.

- Hosmer DW, Hjort NL. Goodness-of-fit processes for logistic regression: simulation results. Stat Med. 2002;21(18):2723–2738. doi: 10.1002/sim.1200.

- Hosmer DW, Lemeshow S. Goodness of fit tests for the multiple logistic regression model. Commun Stat Theory Methods. 1980;9(10):1043–1069. doi: 10.1080/03610928008827941.

- Lin DY, Wei LJ, Ying Z. Model-checking techniques based on cumulative residuals. Biometrics. 2002;58(1):1–12. doi: 10.1111/j.0006-341X.2002.00001.x.

- Liu A, Meiring W, Wang Y. Testing generalized linear models using smoothing spline methods. Stat Sin. 2004;15:235–256.

- Moore DS, Spruill MC. Unified large-sample theory of general chi-squared statistics for tests of fit. Ann Stat. 1975;3:599–616. doi: 10.1214/aos/1176343125.

- Pulkstenis E, Robinson TJ. Two goodness-of-fit tests for logistic regression models with continuous covariates. Stat Med. 2002;21(1):79–93. doi: 10.1002/sim.943.

- Quinn SJ, Hosmer DW, Blizzard CL. Goodness-of-fit statistics for log-link regression models. J Stat Comput Simul. 2015;85(12):2533–2545. doi: 10.1080/00949655.2014.940953.

- Rodríguez-Campos MC, González-Manteiga W, Cao R. Testing the hypothesis of a generalized linear regression model using nonparametric regression estimation. J Stat Plan Inference. 1998;67(1):99–122. doi: 10.1016/S0378-3758(97)00098-0.

- Stute W, Zhu L-X. Model checks for generalized linear models. Scand J Stat. 2002;29(3):535–545. doi: 10.1111/1467-9469.00304.

- Su JQ, Wei LJ. A lack-of-fit test for the mean function in a generalized linear model. J Am Stat Assoc. 1991;86(414):420–426. doi: 10.1080/01621459.1991.10475059.

- Surjanovic N, Loughin TM. Improving the Hosmer–Lemeshow goodness-of-fit test in large models with replicated trials. arXiv preprint arXiv:2102.12698; 2021.

- Tsiatis AA. A note on a goodness-of-fit test for the logistic regression model. Biometrika. 1980;67(1):250–251. doi: 10.1093/biomet/67.1.250.

- White H. Maximum likelihood estimation of misspecified models. Econometrica. 1982;50(1):1–25. doi: 10.2307/1912526.

- Xiang D, Wahba G. Testing the generalized linear model null hypothesis versus ‘smooth’ alternatives. Technical Report 953, Department of Statistics, University of Wisconsin; 1995.
