The constant introduction of new health technologies, coupled with limited healthcare resources, has engendered a growing interest in economic evaluation as a way of guiding decision makers towards interventions that are likely to offer maximum health gain. In particular, cost effectiveness analyses—which compare interventions in terms of the extra or incremental cost per unit of health outcome obtained—have become increasingly familiar in many medical and health service journals.

Considerable uncertainty surrounds the conduct of valid economic evaluations. Firstly, several aspects of the underlying methodological framework are still being debated among health economists. Secondly, there is often considerable uncertainty surrounding the data, the assumptions that may have been used, and how to handle and express this uncertainty. In the absence of data at the patient level, sensitivity analysis is commonly used; however, a number of alternative methods of sensitivity analysis exist, with different implications for the interval estimates generated (see box 1). Finally, there is a substantial amount of subjectivity in presenting and interpreting the results of economic evaluations.

The aim of this paper is to give an overview of the handling of uncertainty in economic evaluations of healthcare interventions.3 It examines how analysts have handled uncertainty in economic evaluation, assembled data on the distribution and variance of healthcare costs, and proposed guidelines to improve current practice. It is intended as a contribution towards the development of agreed guidelines for analysts, reviewers, editors, and decision makers.4–7

Summary points

Economic evaluations are beset by uncertainty concerning methodology and data

A review of 492 articles published up to December 1996 found that a fifth did not attempt any analysis to examine uncertainty

Only 5% of these studies reported some measure of cost variance

Closer adherence to published guidelines would greatly improve the current position

Use of a methodological reference case will improve comparability

Nature of the evidence

A structured review examined the methods used to handle uncertainty in the empirical literature, and this was supplemented by a review of methodological articles on the specific topic of confidence interval estimation for cost effectiveness ratios. The first step in the empirical review was a search of the literature to identify published economic evaluations that reported results in terms of cost per life year or cost per quality adjusted life year (QALY). This form of study was chosen as the results of these studies are commonly considered to be sufficiently comparable to be grouped together and reported in cost effectiveness league tables.

Searches were conducted for all such studies published up to the end of 1996 using Medline, CINAHL, Econlit, Embase, the Social Science Citation Index, and the economic evaluation databases of the Centre for Reviews and Dissemination at York University and the Office of Health Economics and International Federation of Pharmaceutical Manufacturers’ Association. Articles identified as meeting the search criteria were reviewed by using a form designed to collect summary information on each study, including the disease area, type of intervention, nature of the data, nature of the results, study design, and the methods used to handle uncertainty. This information was entered as keywords into a database to allow interrogation and cross referencing of the database by category.

This overall dataset was then used to focus on two specific areas of interest, using subsets of articles to perform more detailed reviews. Firstly, all British studies were identified and reviewed in detail, and information on the baseline results, the methods underlying those results, the range of results representing uncertainty, and the number of previously published results quoted for purposes of comparison was entered on to a relational database. By matching results by the methods used in a retrospective application of a methodological “reference case” (box),5 a subset of results with improved comparability was identified, and a rank ordering of these results was then attempted. Where a range of values accompanied the baseline results, the implications of this uncertainty for the rank ordering were also examined.

The “reference case”

The Panel on Cost-Effectiveness in Health and Medicine, an expert committee convened by the US Public Health Service in 1993, proposed that all published cost effectiveness studies contain at least one set of results based on a standardised set of methods and conventions (a reference case analysis), which would aid comparability between studies. The features of this reference case were set out in detail in the panel’s report.5

The current review used this concept retrospectively, selecting for comparison a subset of results which conformed to the following conditions:

An incremental analysis was undertaken;

A health service perspective was employed; and

Both costs and health outcomes were discounted at the UK Treasury approved rate of 6% per annum.
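As a minimal sketch of the third condition, discounting at an annual rate r converts a cost or health outcome accruing t years ahead to its present value by dividing by (1 + r)^t. The function name, the example cost stream, and the convention that year 0 is left undiscounted are illustrative assumptions, not details taken from the reviewed studies.

```python
def present_value(stream, rate=0.06):
    """Discount a yearly stream (year 0 first) to its present value.

    Assumption: amounts in year 0 are not discounted.
    """
    return sum(amount / (1.0 + rate) ** year for year, amount in enumerate(stream))


# Hypothetical example: a cost of 1000 in each of years 0, 1 and 2.
costs = [1000.0, 1000.0, 1000.0]
pv = present_value(costs)
print(round(pv, 2))
```

The same function applies to a stream of life years or QALYs, which is how both sides of the ratio would be discounted at the same rate.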

Secondly, all studies that reported cost data at the patient level were identified and reviewed in detail with respect to how they had reported the distribution and variance of healthcare costs. Thirdly, and in parallel with the structured review, five datasets of patient level cost data were obtained and examined to show how the healthcare costs in those data were distributed and to elucidate issues surrounding the analysis and presentation of differences in healthcare cost.

Economic analyses are not simply concerned with costs, but also with effects, with the incremental cost effectiveness ratio being the outcome of interest in most economic evaluations. Unfortunately, ratio statistics pose particular problems for standard statistical methods. The review examines a number of proposed methods that have appeared in the recent literature for estimating confidence limits for cost effectiveness ratios (when patient level data are available).
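For reference, the incremental cost effectiveness ratio can be written as follows. The notation is an assumption introduced here for illustration: subscript 1 denotes the new intervention, subscript 0 the comparator, and bars denote sample means of cost (C) and effect (E).

```latex
\[
\mathrm{ICER} \;=\; \frac{\Delta C}{\Delta E}
\;=\; \frac{\bar{C}_1 - \bar{C}_0}{\bar{E}_1 - \bar{E}_0}
\]
```

Because the denominator is itself an estimate that may lie close to zero, the sampling distribution of the ratio can be poorly behaved, which is one reason why standard interval methods struggle.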

Findings

Trends in economic evaluations

A total of 492 articles published up to December 1996 matched the search criteria and were fully reviewed. The review found an exponential rate of increase in published economic evaluations over time and an increasing proportion reporting cost per QALY results. Analysis of the articles by the method used to handle uncertainty shows that most studies (just over 70%) used one way sensitivity analysis to quantify uncertainty (see box 1). Of some concern is that almost 20% of studies did not attempt any analysis to examine uncertainty, although there is weak evidence that this situation has improved over time.

Box 1: Sensitivity analysis

Sensitivity analysis involves systematically examining the influence of uncertainties in the variables and assumptions employed in an evaluation on the estimated results. It encompasses at least three alternative approaches.1

- One way sensitivity analysis systematically examines the impact of each variable in the study by varying it across a plausible range of values while holding all other variables in the analysis constant at their “best estimate” or baseline value.

- Extreme scenario analysis involves setting each variable to simultaneously take the most optimistic (pessimistic) value from the point of view of the intervention under evaluation in order to generate a best (worst) case scenario. Of course, in real life the components of an evaluation do not vary in isolation nor are they perfectly correlated, hence it is likely that one way sensitivity analysis will underestimate, and extreme scenario analysis overestimate, the uncertainty associated with the results of economic evaluation.

- Probabilistic sensitivity analysis, which is based on a large number of Monte Carlo simulations, examines the effect on the results of an evaluation when the underlying variables are allowed to vary simultaneously across a plausible range according to predefined distributions. These probabilistic analyses are likely to produce results that lie between the ranges implied by one way sensitivity analysis and extreme scenario analysis, and therefore may produce a more realistic estimate of uncertainty.2
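The three approaches can be sketched on a toy model. Everything in the example is an assumption made for illustration: the two variables, their plausible ranges, and the choice of triangular distributions for the probabilistic analysis are hypothetical and are not drawn from any reviewed study.

```python
import random

# Hypothetical variables: (low, baseline, high) plausible values.
PARAMS = {
    "extra_cost": (8000.0, 10000.0, 14000.0),  # incremental cost
    "extra_effect": (0.4, 0.5, 0.8),           # incremental life years
}
BASELINE = {name: values[1] for name, values in PARAMS.items()}


def icer(extra_cost, extra_effect):
    """Cost per life year gained."""
    return extra_cost / extra_effect


def one_way():
    """Vary one variable at a time, holding the others at baseline."""
    ranges = {}
    for name, (low, _, high) in PARAMS.items():
        results = [icer(**{**BASELINE, name: value}) for value in (low, high)]
        ranges[name] = (min(results), max(results))
    return ranges


def extreme_scenarios():
    """Set every variable to its most (least) favourable value at once."""
    best = icer(PARAMS["extra_cost"][0], PARAMS["extra_effect"][2])
    worst = icer(PARAMS["extra_cost"][2], PARAMS["extra_effect"][0])
    return best, worst


def probabilistic(n=5000, seed=1):
    """Monte Carlo: draw all variables together from assumed distributions."""
    rng = random.Random(seed)
    draws = sorted(
        icer(*(rng.triangular(low, high, mode)
               for low, mode, high in PARAMS.values()))
        for _ in range(n)
    )
    # Central 95% of the simulated ratios.
    return draws[int(0.025 * n)], draws[int(0.975 * n) - 1]


print("one way:", one_way())
print("extreme scenarios:", extreme_scenarios())
print("probabilistic 95% range:", probabilistic())
```

In this sketch the variables are drawn independently, which is itself an assumption; a fuller analysis would need to consider correlation between variables.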

Handling of uncertainty

Of the 492 studies, 60 reported results for the United Kingdom. From these, 548 baseline results were extracted for different subgroups. The importance of separate baselines for different subgroups of patients is shown in the results of an evaluation of an implantable cardioverter defibrillator where the average cost per life year saved across the whole patient group—£57 000—masks important differences between patients with different clinical characteristics.8 For patients with a low ejection fraction and inducible arrhythmia that is not controlled by drugs, the cost effectiveness of the device is £22 000 per year of life saved. By contrast, the use of the device in patients with high ejection fraction and inducible arrhythmia that is controlled by drugs is associated with an incremental cost effectiveness of around £700 000 per year of life saved.

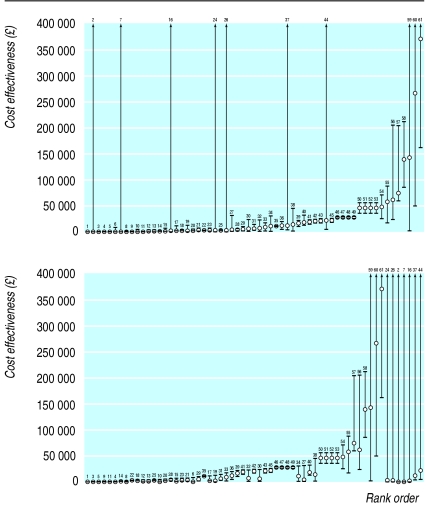

The 548 baseline results used no fewer than 106 different methodological scenarios, and consequently a “reference case” methodological scenario was applied retrospectively to each article; this resulted in a total of 333 methodologically comparable baseline results. These results were converted to a common cost base year and ranked to give a comprehensive “league table” of results for the United Kingdom. Of the 333 results, 61 reported an associated range of high and low values to represent uncertainty. Alternative rankings based on the high or low values from this range showed that there could be considerable disruption to the ranked order based on the baseline point estimates only. This is illustrated by figure 1, which shows the rank ordering of these 61 results by their baseline values and by the highest value from their range. This analysis of UK studies reporting the ranges of sensitivity analyses raises the further concern that the median number of variables included in the sensitivity analysis was just two. Therefore, the ranges of values shown in figure 1 are likely to be narrower than they would have been had a comprehensive analysis of all uncertain variables been conducted. Clearly, wider ranges would further increase the potential for the rank order to vary depending on the value chosen from the overall range.
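The effect of uncertainty on a league table can be shown with a small sketch. The four results below are hypothetical values chosen only to show how a ranking based on baseline estimates can be reordered when the highest value from each range is used instead.

```python
# Hypothetical results: (label, baseline cost per life year, highest value in range).
results = [
    ("A", 5000, 60000),
    ("B", 12000, 15000),
    ("C", 20000, 22000),
    ("D", 30000, 31000),
]


def ranking(rows, column):
    """Return labels ordered from lowest to highest value in the given column."""
    return [row[0] for row in sorted(rows, key=lambda row: row[column])]


by_baseline = ranking(results, 1)
by_high = ranking(results, 2)
print(by_baseline)  # ['A', 'B', 'C', 'D']
print(by_high)      # ['B', 'C', 'D', 'A']
```

Intervention A heads the table on its baseline value but falls to last place on its highest value, because its range is far wider than the others.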

Figure 1. Alternative rank orderings of 61 British cost effectiveness results by baseline value (above) and highest sensitivity analysis value (below)

Cost data at patient level

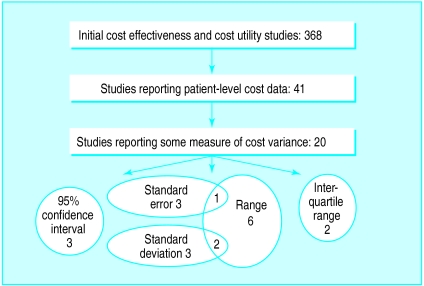

Of the 492 studies on the database, only 53 had patient level cost data and just 25 of these reported some measure of cost variance. Eleven reported only ranges, which are of limited usefulness in quantifying variance. Five articles gave a standard error, seven a standard deviation, and only four studies (<1%) had calculated 95% confidence intervals for cost.
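The measures of variance mentioned here are simple to compute from patient level data. The following sketch uses a hypothetical set of ten costs and a normal approximation (z = 1.96) for the 95% confidence interval; both are assumptions for illustration, and with a sample this small a t distribution or a bootstrap interval may be more appropriate.

```python
import math
import statistics


def summarise_costs(costs, z=1.96):
    """Mean, standard deviation, standard error and approximate 95% CI."""
    n = len(costs)
    mean = statistics.mean(costs)
    sd = statistics.stdev(costs)  # sample standard deviation (n - 1 divisor)
    se = sd / math.sqrt(n)        # standard error of the mean
    return {
        "n": n,
        "mean": mean,
        "sd": sd,
        "se": se,
        "ci95": (mean - z * se, mean + z * se),
        "range": (min(costs), max(costs)),
    }


# Hypothetical, right skewed patient level costs.
costs = [120.0, 150.0, 180.0, 200.0, 250.0, 300.0, 450.0, 900.0, 1500.0, 4000.0]
summary = summarise_costs(costs)
for key, value in summary.items():
    print(key, value)
```

The range (120 to 4000) says little about the precision of the mean, whereas the standard error and confidence interval describe it directly.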

In the five datasets of cost at the patient level, analysis indicated that many cost data were substantially skewed in their distribution. This may cause problems for parametric statistical tests for the equality of two means. One method for dealing with this is to transform the data to an alternative scale of measurement—for example by means of log, square root, or reciprocal transformations. However, our analysis of these data indicated that although a transformation may modestly improve the statistical significance of observed cost differences or may reduce the sample size requirements to detect a specified difference, it is difficult to give the results of a transformed or back transformed scale a meaningful economic interpretation, especially if we intend to use the cost information as part of a cost effectiveness ratio. It would be appropriate to use non-parametric bootstrapping to test whether the sample size of a study’s cost data is sufficient for the central limit theorem to hold, and to base analyses on mean values from untransformed data.
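One way to carry out this check is sketched below, assuming a hypothetical set of skewed costs. Patients are resampled with replacement, the mean of each resample is recorded, and the skewness of the resulting bootstrap means is compared with that of the raw data. The function names, the number of replications, and the use of skewness as the diagnostic are illustrative choices rather than a prescribed procedure.

```python
import random
import statistics


def bootstrap_means(costs, n_boot=2000, seed=0):
    """Resample patients with replacement and record the mean of each resample."""
    rng = random.Random(seed)
    n = len(costs)
    return [statistics.fmean(rng.choices(costs, k=n)) for _ in range(n_boot)]


def skewness(values):
    """Moment coefficient of skewness (0 for a symmetric distribution)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum(((v - mean) / sd) ** 3 for v in values) / len(values)


# Hypothetical, right skewed patient level costs (not from the reviewed datasets).
costs = [120.0, 150.0, 180.0, 200.0, 250.0, 300.0, 450.0, 900.0, 1500.0, 4000.0]

means = sorted(bootstrap_means(costs))
interval = (means[int(0.025 * len(means))], means[int(0.975 * len(means)) - 1])

print("skewness of raw costs:      ", round(skewness(costs), 2))
print("skewness of bootstrap means:", round(skewness(means), 2))
print("95% percentile interval for the mean:", interval)
```

If the bootstrap means remain noticeably skewed, the sample is probably too small for normal theory intervals to be relied on, and the percentile interval on the untransformed scale offers an alternative.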

Estimating confidence intervals for cost effectiveness ratios

Finally, our review identified a number of different methods for estimating confidence intervals for cost effectiveness ratios that have appeared in the recent literature,9–14 and we applied each of these methods to one of the five datasets listed above.15 These different methods produced very different intervals. Examination of their statistical properties and evidence from recent Monte Carlo simulation studies14,16 suggests that many of these methods may not perform well in some circumstances. The parametric method based on Fieller’s theorem and the non-parametric approach of bootstrapping have been shown to produce consistently the best results in terms of the number of times, in repeated sampling, the true population parameter is contained within the interval.14,16
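The two better performing approaches can be sketched as follows for a two arm study with patient level (cost, effect) pairs. The simulated data, function names, and use of z = 1.96 are assumptions made for illustration. The Fieller limits are taken as the roots in R of (ΔC − RΔE)² = z²[var(ΔC) + R² var(ΔE) − 2R cov(ΔC, ΔE)], and the bootstrap interval uses the simple percentile method; neither is claimed to reproduce the exact implementations in the cited papers.

```python
import math
import random
import statistics


def mean_diff(treat, control, idx):
    """Difference in means between arms for cost (idx 0) or effect (idx 1)."""
    return (statistics.fmean(p[idx] for p in treat)
            - statistics.fmean(p[idx] for p in control))


def icer(treat, control):
    return mean_diff(treat, control, 0) / mean_diff(treat, control, 1)


def bootstrap_interval(treat, control, n_boot=2000, seed=0):
    """Percentile interval: resample (cost, effect) pairs within each arm."""
    rng = random.Random(seed)
    ratios = sorted(
        icer(rng.choices(treat, k=len(treat)), rng.choices(control, k=len(control)))
        for _ in range(n_boot)
    )
    return ratios[int(0.025 * n_boot)], ratios[int(0.975 * n_boot) - 1]


def fieller_interval(treat, control, z=1.96):
    """Solve the Fieller quadratic a*R^2 + b*R + c = 0 for the two limits."""
    dc, de = mean_diff(treat, control, 0), mean_diff(treat, control, 1)
    var_c = var_e = cov = 0.0
    for arm in (treat, control):
        n = len(arm)
        costs = [p[0] for p in arm]
        effects = [p[1] for p in arm]
        mc, me = statistics.fmean(costs), statistics.fmean(effects)
        var_c += statistics.variance(costs) / n
        var_e += statistics.variance(effects) / n
        cov += sum((c - mc) * (e - me) for c, e in arm) / (n - 1) / n
    a = de ** 2 - z ** 2 * var_e
    b = -2.0 * (dc * de - z ** 2 * cov)
    c = dc ** 2 - z ** 2 * var_c
    disc = b ** 2 - 4.0 * a * c
    if a <= 0 or disc < 0:
        raise ValueError("no bounded interval: effect difference too close to zero")
    root = math.sqrt(disc)
    return (-b - root) / (2 * a), (-b + root) / (2 * a)


def simulate_arm(rng, n, cost_mu, effect_mean):
    """Hypothetical patient level data; costs are right skewed (lognormal)."""
    return [(rng.lognormvariate(cost_mu, 0.5), rng.gauss(effect_mean, 0.5))
            for _ in range(n)]


rng = random.Random(42)
treat = simulate_arm(rng, 100, 8.5, 2.0)
control = simulate_arm(rng, 100, 8.0, 1.5)

point = icer(treat, control)
print("ICER:", round(point))
print("Fieller 95% interval:  ", fieller_interval(treat, control))
print("Bootstrap 95% interval:", bootstrap_interval(treat, control))
```

Both functions assume that the difference in effect is clearly different from zero. When it is not, the Fieller interval may be unbounded and the bootstrap replicates can fall in more than one quadrant of the cost effectiveness plane, so the percentile interval needs careful interpretation.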

Recommendations

Uncertainty in economic evaluation is often handled inconsistently and unsatisfactorily. Recently published guidelines should improve this situation, but we emphasise the following:

Ensure that the potential implications of uncertainty for the results are considered in all analyses;

When reporting cost and cost effectiveness information, make more use of descriptive statistics. Interval estimates should accompany each point estimate presented;

Sensitivity analyses should be comprehensive in their inclusion of all variables;

Cost and cost effectiveness data are often skewed. Significance tests may be more powerful on a transformed scale, but confidence intervals should be reported on the original scale. Even when data are skewed, economic analyses should be based on means of distributions;

Where patient level data on both cost and effect are available, the parametric approach based on Fieller’s theorem or the non-parametric approach of bootstrapping should be used to estimate a confidence interval for the cost effectiveness ratio;

When comparing results between studies, ensure that they are representative;

Using a methodological reference case when presenting results will increase the comparability of results between studies.

Figure 2. The handling of cost variance by studies reporting patient level cost data

Acknowledgments

This article is adapted from Health Services Research Methods: A Guide to Best Practice, edited by Nick Black, John Brazier, Ray Fitzpatrick, and Barnaby Reeves, published by BMJ Books.

Footnotes

Series editor: Nick Black

Competing interests: None declared.

References

- 1. Briggs AH. Handling uncertainty in the results of economic evaluation. London: Office of Health Economics; 1995. (OHE briefing paper No 32.)

- 2. Manning WG, Fryback DG, Weinstein MC. Reflecting uncertainty in cost-effectiveness analysis. In: Gold MR, Siegel JE, Russell LB, Weinstein MC, editors. Cost-effectiveness in health and medicine. New York: Oxford University Press; 1996. pp. 247–275.

- 3. Briggs AH, Gray AM. Handling uncertainty when performing economic evaluations of health care interventions: a systematic review with special reference to the variance and distributional form of cost data. Health Technol Assess 1999;3(2).

- 4. Drummond MF, Jefferson TO. Guidelines for authors and peer reviewers of economic submissions to the BMJ. BMJ. 1996;313:275–283. doi: 10.1136/bmj.313.7052.275.

- 5. Gold MR, Siegel JE, Russell LB, Weinstein MC, editors. Cost-effectiveness in health and medicine. New York: Oxford University Press; 1996.

- 6. Canadian Coordinating Office for Health Technology Assessment. Guidelines for the economic evaluation of pharmaceuticals: Canada. 2nd ed. Ottawa: CCOHTA; 1997.

- 7. Drummond MF, O’Brien B, Stoddart GL, Torrance GW. Methods for the economic evaluation of health care programmes. 2nd ed. Oxford: Oxford University Press; 1997.

- 8. Anderson MH, Camm AJ. Implications for present and future applications of the implantable cardioverter-defibrillator resulting from the use of a simple model of cost efficacy. Br Heart J. 1993;69:83–92. doi: 10.1136/hrt.69.1.83.

- 9. O’Brien BJ, Drummond MF, Labelle RJ, Willan A. In search of power and significance: issues in the design and analysis of stochastic cost-effectiveness studies in health care. Med Care. 1994;32:150–163. doi: 10.1097/00005650-199402000-00006.

- 10. Wakker P, Klaassen M. Confidence intervals for cost-effectiveness ratios. Health Econ. 1995;4:373–382. doi: 10.1002/hec.4730040503.

- 11. Van Hout BA, Al MJ, Gordon GS, Rutten FF. Costs, effects and C/E-ratios alongside a clinical trial. Health Econ. 1994;3:309–319. doi: 10.1002/hec.4730030505.

- 12. Chaudhary MA, Stearns SC. Estimating confidence intervals for cost-effectiveness ratios: an example from a randomized trial. Stat Med. 1996;15:1447–1458. doi: 10.1002/(SICI)1097-0258(19960715)15:13<1447::AID-SIM267>3.0.CO;2-V.

- 13. Briggs AH, Wonderling DE, Mooney CZ. Pulling cost-effectiveness analysis up by its bootstraps: a non-parametric approach to confidence interval estimation. Health Econ. 1997;6:327–340. doi: 10.1002/(sici)1099-1050(199707)6:4<327::aid-hec282>3.0.co;2-w.

- 14. Polsky D, Glick HA, Willke R, Schulman K. Confidence intervals for cost-effectiveness ratios: a comparison of four methods. Health Econ. 1997;6:243–252. doi: 10.1002/(sici)1099-1050(199705)6:3<243::aid-hec269>3.0.co;2-z.

- 15. Fenn P, McGuire A, Phillips V, Backhouse M, Jones D. The analysis of censored treatment cost data in economic evaluation. Med Care. 1995;33:851–863. doi: 10.1097/00005650-199508000-00009.

- 16. Briggs AH, Mooney CZ, Wonderling DE. Constructing confidence intervals around cost-effectiveness ratios: an evaluation of parametric and non-parametric methods using Monte Carlo simulation. Stat Med (in press).