Abstract

Background The integration of entrustable professional activities (EPAs) within objective structured clinical examinations (OSCEs) has yielded a valuable avenue for delivering timely feedback to residents. However, concerns about feedback quality persist.

Objective This study aimed to assess the quality and content alignment of verbal feedback provided by examiners during an entrustment-based OSCE.

Methods We conducted a progress test OSCE for internal medicine residents in 2022, assessing 7 EPAs. The immediate 2-minute feedback provided by examiners was recorded and analyzed using the Quality of Assessment of Learning (QuAL) score. We also analyzed the degree of alignment with EPA learning objectives: competency milestones and task-specific abilities. In a randomized crossover experiment, we compared the impact of 2 scoring methods used to assess residents’ clinical performance (3-point entrustability scales vs task-specific checklists) on feedback quality and alignment.

Results Twenty-one examiners provided feedback to 67 residents. The feedback was of high quality (mean QuAL score 4.3 of 5) and substantially aligned with the learning objectives of the EPAs. On average, examiners' feedback addressed 2.5 competency milestones (61%) and 1.2 task-specific abilities (46%). The scoring methods used had no significant impact on QuAL scores (95% CI -0.3, 0.1, P=.28), alignment with competency milestones (95% CI -0.4, 0.1, P=.13), or alignment with task-specific abilities (95% CI -0.3, 0.1, P=.29).

Conclusions In our entrustment-based OSCE, examiners consistently offered valuable feedback aligned with intended learning outcomes. Notably, we examined feedback quality and alignment as separate dimensions and found no significant impact of our 2 scoring methods on either.

Introduction

While a traditional objective structured clinical examination (OSCE) primarily focuses on assessing specific clinical skills, an entrustment-based OSCE assesses learners’ readiness to independently carry out essential professional activities.1 Beyond their primary purpose of assessing autonomy, entrustable professional activities (EPAs) included in OSCEs provide a solid framework for delivering timely verbal feedback to residents.1-3 Observation-based feedback that is delivered promptly after performance and closely aligned with learning objectives has the potential to enhance residents’ autonomy and professional growth.4-7 However, concerns have been raised about the quality and alignment of feedback in OSCEs, particularly as new scoring methods are introduced, underscoring the need for a comprehensive analysis.8,9

In a study by Martin et al,10 residents valued verbal feedback even more than their performance scores, particularly when the feedback was constructive and fostered their development as professionals. However, although numerous methods exist for assessing feedback quality, few have demonstrated strong psychometric properties, underscoring the need for validated instruments.11 Examiners’ self-assessments and students’ perceptions also have limitations as objective and reliable measures: physicians often lack self-awareness regarding their abilities,12 while students tend to misremember the content of feedback8 and to favor praise over constructive feedback.13

Another aspect of feedback is the coherence of its content with the principle of alignment, which stresses consistency between assessment, learning activities, and intended learning outcomes.14 If EPAs represent the expected learning outcomes and competency milestones are indicators of their achievement, feedback should be congruent with these elements.15 Feedback should also address task-specific abilities, which encompass the necessary knowledge and skills and constitute the fundamental criteria for entrusting autonomous practice.16 Failure to provide aligned feedback not only overlooks residents’ needs but also contradicts the purpose of a progress test within competency-based education.1

The scoring method used by the examiner to assess a resident’s clinical performance might influence the frequency, specificity, and timeliness of feedback.10,17 Previous OSCE studies have been limited in their ability to determine the impact of scoring methods on feedback because global scales and checklists were used simultaneously.8,18

To address these gaps and concerns, our study aims to conduct a comprehensive analysis of the verbal feedback provided by examiners in an entrustment-based OSCE. We will assess the quality of feedback and its alignment with EPAs’ learning objectives (competency milestones and task-specific abilities).16 To assess residents’ clinical performance, examiners will employ either a 3-point entrustability scale or a task-specific checklist. Through a randomized crossover experiment, we will explore the effect of these 2 scoring methods on feedback quality and alignment.

KEY POINTS

What Is Known

Educators in graduate medical education continue to strive for ways to improve the quality of feedback given to residents and identify potential barriers to providing quality feedback.

What Is New

This study of internal medicine objective structured clinical examination (OSCE) examiners showed that feedback quality was high, aligned with learning objectives, and not affected by the choice of scoring method (entrustability scale or task-specific checklist).

Bottom Line

Program directors incorporating OSCEs can be reassured, based on this evidence, that entrustability scales and task-specific checklists can produce equally valuable feedback, as measured by Quality of Assessment of Learning scores.

Methods

Setting

Our study was conducted at Laval University School of Medicine in an urban Canadian setting. Since 2019, the internal medicine residency program has embraced EPAs as part of its competency-based teaching and assessment approach. The OSCE serves as an annual mandatory progress test. While OSCE outcomes are primarily formative, contributing to ongoing learning without standalone consequences, they are incorporated into programmatic assessments by a competency committee. The 2022 OSCE was the institution’s first entrustment-based OSCE with immediate feedback. Sixty-seven of 76 postgraduate year (PGY) 2 and PGY-3 residents participated across 3 waves. The OSCE comprised 10 stations: 7 for EPA assessments, 2 for questionnaire completion, and 1 designated break station. After each EPA station, examiners provided residents with 2 minutes of immediate feedback. At that time, residents were unaware of the study’s focus, the scoring methods used, or their individual performance scores.

A committee of 4 clinician educators in internal medicine determined the clinical scenarios, task-specific abilities (2 or 3 per EPA), and checklist items (on average 20 per EPA). An example of the 2 scoring methods used in our study (3-point entrustability scale or task-specific checklist) is provided as online supplementary data. As shown in Table 1, the committee selected 7 core EPAs (3 complex medical situations, 2 acute care situations, and 2 shared decisions with patients and families) from the Royal College of Physicians and Surgeons of Canada framework, encompassing competency milestones in medical expertise, communication, collaboration, scholarship, and professionalism for PGY-2 and PGY-3 residents in internal medicine.19 These milestones were included because they are common to multiple competency frameworks.20

Table 1.

Design Overview of the 2022 Entrustment-Based Objective Structured Clinical Examination

| EPA | OSCE Stations | EPA Description | Competency Milestones (Numbers Refer to Table 4) | Task | Task-Specific Abilities |
| --- | --- | --- | --- | --- | --- |
| Complex medical situations | Cirrhosis | Assessing and managing patients with complex chronic conditions | Medical expertise (ME1, ME2, ME4); Communication (COM1); Professionalism (P1) | Perform a targeted physical examination of the liver and provide counseling for a patient with liver disease (outpatient) | |
| | Spondyloarthritis | Assessing and managing patients with complex chronic conditions | Medical expertise (ME1, ME2, ME4); Collaboration (COL1); Professionalism (P1) | Perform a targeted physical examination and propose a management plan for a patient with sacroiliitis (outpatient) | |
| | Diabetes | Assessing and managing patients with complex chronic conditions | Medical expertise (ME3, ME4, ME5); Communication (COM1); Professionalism (P1) | Initiate insulin therapy for a patient with decompensated diabetes mellitus and apprehension toward treatment (outpatient) | |
| Acute care situations | Stroke | Assessing, resuscitating, and managing unstable and critically ill patients | Medical expertise (ME2, ME6, ME7); Collaboration (COL2); Professionalism (P1) | Manage a patient in the emergency department with acute stroke and hypertension who requires systemic thrombolysis (inpatient) | |
| | Hyponatremia | Assessing, resuscitating, and managing unstable and critically ill patients | Medical expertise (ME2, ME4, ME6); Scholarship (S1); Professionalism (P1) | Evaluate and treat a patient with severe symptomatic hyponatremia while supervising a junior resident (inpatient) | |
| Shared decisions | Thrombolysis | Discussing serious and/or complex aspects of care with patients, families, and caregivers | Medical expertise (ME8); Communication (COM1, COM2, COM3); Professionalism (P1) | Obtain substituted consent for systemic thrombolysis from a family member of a patient with acute stroke and aphasia (inpatient) | |
| | COPD | Caring for patients at the end of life | Medical expertise (ME3, ME8); Communication (COM1, COM2); Professionalism (P1) | Establish goals of care with a patient hospitalized with terminal COPD and a pulmonary mass (inpatient) | |

Abbreviations: OSCE, objective structured clinical examination; EPA, entrustable professional activity; ME, medical expert role; COM, communicator role; P, professional role; US, ultrasound; CT, computed tomography; MRI, magnetic resonance imaging; COL, collaborator role; NSAID, nonsteroidal anti-inflammatory drug; TNF, tumor necrosis factor; S, scholar role; COPD, chronic obstructive pulmonary disease.

Participants

Clinical preceptors and PGY-4 and PGY-5 residents from the Department of Medicine volunteered as examiners and were assigned to stations based on their expertise. Throughout the OSCE, examiners had access to a modified version of Table 1 detailing EPAs, competency milestones, and task-specific abilities. A written questionnaire asked examiners to rate their familiarity with the clinical content and milestones on a 5-point Likert-type scale (5=very familiar; 4=sufficiently familiar; 3=moderately familiar; 2=somewhat familiar; 1=not familiar), to identify potential confounding factors influencing feedback provision. All examiners watched 2 videos explaining best feedback practices and proper use of entrustability scales (online supplementary data). Examiners were unaware of the study’s focus on feedback and of the instruments used to measure it.

Intervention

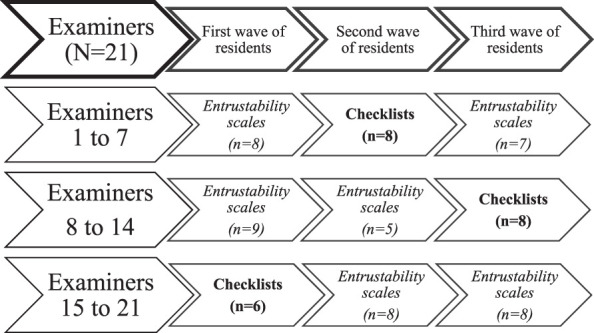

In a randomized crossover design detailed in the Figure, every examiner assessed two-thirds of residents using a 3-point entrustability scale (nonautonomous, partially autonomous, or autonomous) and one-third using a task-specific checklist. Examiners did not use both scoring methods simultaneously.

Figure.

Crossover Study Design: Examiners Assessed Residents With Entrustability Scales (N=45) or Checklists (N=22)

Outcome

We assessed feedback quality using the audio recordings, which were randomly distributed to 2 blinded educators in internal medicine (A.L., D.T.L.). Each educator listened to 60% of the recordings; the 20% of recordings rated by both educators were used to calculate interrater agreement. A.L. and D.T.L. rated feedback quality using the Quality of Assessment of Learning (QuAL) score,21 which comprises 3 questions for a total of 5 points:

Does the examiner comment on the performance? (0=no comment at all; 1=no comment on performance; 2=somewhat; 3=yes/full description)

Does the examiner provide a suggestion for improvement? (0=no; 1=yes)

Is the examiner’s suggestion linked to the behavior described? (0=no; 1=yes)

The QuAL score has validity evidence for rating brief workplace-based assessment comments and offers a promising framework for evaluating feedback quality based on best practices.21
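The 3 QuAL questions above sum to a 0-5 total per recording. The minimal Python sketch below shows that arithmetic and a mean across recordings. The function and parameter names are hypothetical labels introduced for illustration, the ratings are toy values rather than study data, and the sketch simply adds the item scores without enforcing any dependency between items (for example, whether the linkage item can be scored when no suggestion is given).

```python
# Hypothetical helpers: names are illustrative labels for the 3 QuAL questions.
def qual_score(performance: int, suggestion: int, linked: int) -> int:
    """Return the QuAL total (0-5) for one recorded feedback session.

    performance: 0-3, does the examiner comment on the performance?
    suggestion:  0/1, does the examiner provide a suggestion for improvement?
    linked:      0/1, is the suggestion linked to the behavior described?
    """
    if performance not in (0, 1, 2, 3):
        raise ValueError("performance item must be 0, 1, 2, or 3")
    if suggestion not in (0, 1) or linked not in (0, 1):
        raise ValueError("suggestion and linked items must be 0 or 1")
    return performance + suggestion + linked


def mean_qual(sessions: list[tuple[int, int, int]]) -> float:
    """Mean QuAL total across several rated sessions."""
    if not sessions:
        raise ValueError("at least one rated session is required")
    return sum(qual_score(*s) for s in sessions) / len(sessions)


# Toy ratings for illustration only (not study data).
print(qual_score(3, 1, 1))                           # 5
print(mean_qual([(3, 1, 1), (2, 1, 1), (3, 1, 0)]))  # 4.333333333333333
```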

To assess feedback quality through different lenses, examiners’ perspectives were collected through a single written question. Following each interaction with a resident, examiners were prompted with the question: “How confident are you that the feedback provided will enhance this student’s autonomy?” answered using a 5-point Likert-type scale (5=very confident; 4=sufficiently confident; 3=moderately confident; 2=somewhat confident; 1=not confident).

To assess feedback alignment, A.L. and D.T.L. counted the number of competency milestones and task-specific abilities (detailed in Table 1) mentioned in each examiner’s feedback. For example, the feedback “Among the exams to prescribe, what was not mentioned was the abdominal ultrasound” addressed the milestone “ME2: Select and interpret investigations based on clinical priorities” and the task-specific ability “Ask for imaging.”

Residents’ perspectives were collected through a single written question. Following their participation in both the spondyloarthritis and diabetes stations, residents were prompted to provide an answer to: “Following the feedback received in the previous station, your autonomy level to accomplish the same task in a clinical setting is…” using a 5-point Likert-type scale (5=clearly increased; 4=increased; 3=similar; 2=decreased; 1=clearly decreased).

Analysis

We used SPSS Statistics version 21 (IBM Corp) to perform paired t tests comparing the 2 scoring methods. We used intraclass correlation coefficients (2-way mixed-effects model) to calculate interrater absolute agreement.
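The study computed ICCs in SPSS. To illustrate what an absolute-agreement ICC measures, the minimal Python sketch below computes the single-measures, absolute-agreement form from a two-way ANOVA decomposition (for absolute agreement, this computational formula is the same whether raters are treated as fixed or random). Whether single or average measures were reported is not stated, so the single-measures form is an assumption; the function name is hypothetical and the ratings are toy values.

```python
def icc_absolute_agreement(ratings: list[list[float]]) -> float:
    """Single-measures, absolute-agreement ICC from a two-way ANOVA layout.

    ratings: one row per recording (target), one column per rater.
    """
    n = len(ratings)  # recordings rated by every rater
    k = len(ratings[0]) if ratings else 0  # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete n x k table with n, k >= 2")
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    # Sums of squares for recordings (rows), raters (columns), and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    # Absolute agreement penalizes systematic rater differences through ms_cols.
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Toy data: rater B scores every recording exactly 1 point higher than rater A.
print(icc_absolute_agreement([[1, 2], [2, 3], [3, 4]]))  # about 0.667
```

With these toy data, a consistency-type ICC would equal 1 because the raters differ only by a constant offset; the absolute-agreement form penalizes that offset, which is why it returns about 0.667.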

The Research Ethics Committee of Laval University approved this study (No. 2019-390).

Results

All 21 OSCE examiners agreed to take part in the study. They provided feedback to 67 residents, all of whom consented to take part in the study. All 2-minute feedback sessions were recorded (469 audio recordings), of which 4 were excluded due to technical issues. All 469 examiners’ questionnaires and 198 of 201 residents’ questionnaires were collected (3 printed questionnaires were lost during collection). Examiners’ characteristics are presented in Table 2. On average, across all cases and examiners, examiners reported a familiarity score of 4.6 of 5 (SD=0.7) with the clinical content (eg, initiating insulin) and 3.5 of 5 (SD=1.1) with the milestones (eg, developing patient-centered management plans) of the EPA.

Table 2.

Examiners’ Characteristics and Experience (N=21)

| Variable | n (%) |
| --- | --- |
| Status | |
| Preceptor | 17 (81.0) |
| Senior resident | 4 (19.0) |
| Sex | |
| Female | 10 (47.6) |
| Male | 11 (52.4) |
| Age (y) | |
| <30 | 3 (14.3) |
| 30-39 | 11 (52.4) |
| 40-49 | 3 (14.3) |
| ≥50 | 4 (19.0) |
| Formal training in medical education | |
| Graduate degree | 2 (9.5) |
| Certificate degree | 1 (4.8) |
| Single courses | 2 (9.5) |
| None | 16 (76.2) |
| Experience as preceptor (y) | |
| None | 4 (19.0) |
| <1 | 3 (14.3) |
| 1-5 | 6 (28.6) |
| 6-10 | 1 (4.8) |
| 11-20 | 4 (19.0) |
| >20 | 3 (14.3) |
| Experience as OSCE examiner (No. of OSCEs) | |
| None | 5 (23.8) |
| 1-2 | 5 (23.8) |
| 3-4 | 7 (33.3) |
| 5-6 | 1 (4.8) |
| ≥7 | 3 (14.3) |
| No. of EPAs assessed in a clinical setting | |
| None | 2 (9.5) |
| 1-5 | 2 (9.5) |
| 5-15 | 4 (19.0) |
| >15 | 13 (61.9) |

Abbreviations: OSCE, objective structured clinical examination; EPA, entrustable professional activity.

Study results pertaining to feedback quality and feedback alignment are detailed in Table 3. Regarding feedback quality, the mean QuAL score was 4.3 of 5 (SD=0.4), with an interrater agreement of 0.68 (substantial). Table 4 presents illustrative feedback quotes for each competency milestone, with their respective QuAL scores. Examiners’ mean self-assessment of their feedback quality was 4.2 of 5 (SD=0.4).

Table 3.

Examiners’ (N=21) Feedback Quality and Alignment in an Entrustment-Based OSCE Assessing Residents with Entrustability Scales or Checklists

| Methods | Complex Medical Situation EPAs | Acute Care EPAs | Shared Decision EPAs | Mean Results | 95% CI | P value |
| --- | --- | --- | --- | --- | --- | --- |
| Feedback quality: mean QuAL score/5 (SD) | | | | | -0.3, 0.1 | .28 |
| Entrustability scale | 4.4 (0.3) | 4.2 (0.5) | 4.0 (0.7) | 4.2 (0.5) | | |
| Checklist | 4.3 (0.3) | 4.6 (0.3) | 4.2 (0.6) | 4.4 (0.5) | | |
| Total | 4.3 (0.2) | 4.3 (0.3) | 4.1 (0.6) | 4.3 (0.4) | | |
| Feedback quality: mean score of examiners’ self-assessment of the quality of feedback they gave, 5-point Likert scale (SD) | | | | | 0.0, 0.3 | .04 |
| Entrustability scale | 4.2 (0.3) | 4.4 (0.6) | 4.1 (0.5) | 4.2 (0.4) | | |
| Checklist | 4.1 (0.2) | 4.4 (0.6) | 3.9 (0.5) | 4.1 (0.4) | | |
| Total | 4.2 (0.3) | 4.3 (0.6) | 4.1 (0.4) | 4.2 (0.4) | | |
| Feedback alignment with competency milestones: mean number of competency milestones discussed by the examiner (SD) | | | | | -0.4, 0.1 | .13 |
| Entrustability scale | 2.3 (0.6)/4.0a | 2.5 (0.6)/4.5a | 2.5 (0.4)/4.0a | 2.4 (0.5)/4.1 | | |
| Checklist | 2.4 (0.6) | 2.8 (0.6) | 2.7 (0.7) | 2.6 (0.6) | | |
| Total | 2.3 (0.5) | 2.6 (0.6) | 2.6 (0.4) | 2.5 (0.5) | | |
| Feedback alignment with task-specific abilities: mean number of task-specific abilities discussed by the examiner (SD) | | | | | -0.3, 0.1 | .29 |
| Entrustability scale | 1.1 (0.5)/2.7 | 1.0 (0.5)/2.5 | 1.6 (0.5)/2.5 | 1.2 (0.5)/2.6 | | |
| Checklist | 1.3 (0.2) | 1.3 (0.4) | 1.4 (0.7) | 1.3 (0.4) | | |
| Total | 1.1 (0.4) | 1.0 (0.4) | 1.6 (0.5) | 1.2 (0.5) | | |

Abbreviations: OSCE, objective structured clinical examination; EPA, entrustable professional activity; QuAL, Quality of Assessment of Learning score.

a Mean of 2 or 3 stations of the same type of EPA. For instance, acute care EPAs consist of 2 stations (hyponatremia and stroke), with an average of 4.5 milestones and 2.5 task-specific abilities per station.

Table 4.

Illustrative Feedback Quotes Aligned With Competency Milestones

| EPAs | Competency Milestones | Examiner’s Immediate Verbal Feedback: Illustrative Quotes From the Study | QuAL Score (/5) |
| --- | --- | --- | --- |
| Complex medical situations | ME1: Perform clinical assessments that address all relevant issues | Regarding physical exam, your assessment was good. I suggest you review the percussion of the liver, it was good but still needed a bit of improvement. Your maneuvers for liver palpation and ascites were well executed. You were systematic. (Examiner 15) | 5 |
| | ME2: Select and interpret investigations based on clinical priorities | Among the paraclinical exams, what was not mentioned was the abdominal ultrasound. It is a must in this type of case. (Examiner 8) | 4 |
| | ME3: Develop patient centered management plans that address multimorbidity, frailty, and/or complexity of patient presentations | Since the patient was catabolic, there is a contraindication to an SGLT2 inhibitor […] In this case with the A1C at 10% and the catabolic state, although non-pharmacological treatments are important, you need to focus your efforts on insulin. (Examiner 21) | 4 |
| | ME4: Establish plans for ongoing care | In fact, for the treatment for spondyloarthropathy, NSAID should be tried first then biological agents. DMARDS and prednisone are not used. (Examiner 16) | 1 |
| | ME5: Establish goals of care in collaboration with the patient and family | If you want to refer the patient to community resources for diabetes support, it would be preferable to clearly identify them for the patient. (Examiner 7) | 4 |
| | COM1: Provide information to patients and their families clearly and compassionately | Even though the patient has had diabetes for a long time, make sure that words such as hypoglycemia and glycated hemoglobin are understood. (Examiner 7) | 4 |
| | COL1: Use effective communication strategies with physicians and other colleagues in the health care professions | You were able to communicate well with me. You clearly elaborated the differential diagnosis and told me what you thought more versus less likely. (Examiner 9) | 3 |
| Acute care situations | ME2: Select and interpret investigations based on clinical priorities | The exam that was expected was an angio-CT scan since the patient is a candidate for thrombectomy. (Examiner 11) | 1 |
| | ME3: Develop patient centered management plans that address multimorbidity, frailty, and/or complexity of patient presentations | A trick to assess patient’s visual capacity you can use in patient who have difficulty collaborating like this aphasic patient, is to check for their eye movement when you are moving around the room or if they blink to rapid oncoming movement. (Examiner 18) | 1 |
| | ME6: Consider urgency, and potential for deterioration, in advocating for the timely execution of a procedure or therapy | Before the angio-CT scan, you did not start labetalol and that might delay thrombolysis. A patient with a blood pressure at 190-200 [mmHg] has a higher risk of bleeding. (Examiner 4) | 3 |
| | ME7: Focus the assessment, performing in a time-effective manner without excluding key elements in a patient with an unstable medical condition | You recognized that the patient was unstable and that you needed to act fast. You administered the right treatment and knew the target rate of correction for the natremia. (Examiner 10) | 3 |
| | S1: Balance supervision and graduated responsibility, ensuring the safety of patients and learners | When you are working with a student, you must not forget the medical situation comes first. You seemed more focused on the supervision and less on the medical management. You can say that you need to address the medical situation first and afterwards you will take time to explain how you managed the patient. (Examiner 3) | 5 |
| | COL2: Delegate tasks and responsibilities in an appropriate and respectful manner | A trick I would suggest especially when you have multiple things to do is use closed loop communication to make sure things are done. (Examiner 10) | 1 |
| Shared decisions | ME3: Develop patient centered management plans that address multimorbidity, frailty, and/or complexity of patient presentations | The most important thing is that the patient understands that his COPD is a chronic and terminal disease for which there is no cure so that he gets why palliative care is discussed […] He will consult again since his disease is incurable. (Examiner 13) | 1 |
| | ME8: Adapt care as the complexity, uncertainty, and ambiguity of the patient’s clinical situation evolves | His 7 recent hospitalizations are signs of an unfavorable evolution of his disease. That on top of a lung mass means the patient may not have the energy to stay at home. (Examiner 6) | 1 |
| | COM1: Provide information to patients and their families clearly and compassionately | I appreciated how you were empathic and listened to the patient’s wishes. When he mentioned his concerns, you responded. When he was uncertain, you were able to answer in terms he could easily understand. (Examiner 6) | 3 |
| | COM2: Use communication skills and strategies that help the patient and his family make informed decisions | I really liked your approach: it was orderly and organized. It was done at a pace that was respectful of the family even though it was an urgent decision. You informed the husband of the delays to give him a time frame to come to a decision. (Examiner 12) | 3 |
| | COM3: Recognize when strong emotions (such as anger, fear, anxiety, or sadness) are impacting an interaction and respond appropriately | I liked the fact that you noticed his reaction and said: “Have you ever been in a similar situation?” You recognized that he had already been through the same experience. It is a good demonstration of empathy. (Examiner 5) | 3 |
| All | P1: Exhibit appropriate professional behaviors | When you came in the room, you immediately asked the husband to leave so you could take care of his wife. In general, and even more in this situation where the treatment has associated risks and the patient cannot communicate, you need to involve the family. You need to be careful as to how your initial interaction might have been perceived. (Examiner 4) | 5 |

Abbreviations: EPA, entrustable professional activity; ME, medical expert role; COM, communicator role; COL, collaborator role; S, scholar role; P, professional role.

In terms of feedback alignment, examiners on average addressed 2.5 of 4.1 (SD=0.5) competency milestones and 1.2 of 2.6 (SD=0.5) task-specific abilities, with interrater agreement of 0.60 (moderate) and 0.71 (substantial), respectively. In other words, examiners gave feedback on 61% of the preestablished milestones and 46% of the task-specific abilities of each EPA. No notable differences in feedback quality or alignment were observed across EPA types (complex medical situations, acute care situations, and shared decisions).
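The percentages above follow from dividing the mean number of elements addressed by the mean number available per station. The minimal Python sketch below reproduces that arithmetic from the values reported in Table 3, assuming that the overall denominators (4.1 and 2.6) are station-weighted means of the per-EPA-type values (3 complex medical, 2 acute care, and 2 shared-decision stations); the weighting is not described in the text, and the function names are hypothetical.

```python
def station_weighted_mean(per_type_means: list[float], station_counts: list[int]) -> float:
    """Weight each EPA type's mean by its number of stations (assumed weighting)."""
    total = sum(m * n for m, n in zip(per_type_means, station_counts))
    return total / sum(station_counts)


def coverage_percent(mean_addressed: float, mean_available: float) -> int:
    """Share of available milestones or abilities addressed, as a whole percent."""
    return round(100 * mean_addressed / mean_available)


# Per-type denominators from Table 3; station counts from Table 1.
milestones_available = station_weighted_mean([4.0, 4.5, 4.0], [3, 2, 2])
abilities_available = station_weighted_mean([2.7, 2.5, 2.5], [3, 2, 2])
print(round(milestones_available, 1), round(abilities_available, 1))  # 4.1 2.6

# Rounded means reported in the Results text.
print(coverage_percent(2.5, 4.1), coverage_percent(1.2, 2.6))  # 61 46
```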

The 2 scoring methods used, 3-point entrustability scales vs task-specific checklists, had no significant impact on QuAL score (95% CI -0.3, 0.1, P=.28), alignment with competency milestones (95% CI -0.4, 0.1, P=.13), or alignment with task-specific abilities (95% CI -0.3, 0.1, P=.29).
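These comparisons used paired t tests in SPSS. The minimal Python sketch below shows the underlying calculation: the mean of paired differences, its standard error, the t statistic, and a 95% CI. The pairing unit (one mean score per examiner under each scoring method) is an assumption, since the text does not specify it; the function names and scores are hypothetical; and because the Python standard library has no t quantile function, the critical value is passed in (for example, from a t table).

```python
import math
import statistics


def paired_t(scale: list[float], checklist: list[float]) -> tuple[float, float, float, int]:
    """Return (mean difference, standard error, t statistic, df) for paired scores.

    Hypothetical pairing: the i-th entry of each list is one examiner's mean
    score under the entrustability scale and under the checklist.
    """
    if len(scale) != len(checklist) or len(scale) < 2:
        raise ValueError("need at least 2 complete pairs")
    diffs = [s - c for s, c in zip(scale, checklist)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # sample SD of differences / sqrt(n)
    if se == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean_diff, se, mean_diff / se, n - 1


def ci95(mean_diff: float, se: float, t_crit: float) -> tuple[float, float]:
    """95% CI for the mean difference; t_crit is the 0.975 quantile of t(df)."""
    return mean_diff - t_crit * se, mean_diff + t_crit * se


# Toy scores for 3 hypothetical examiners (not study data).
diff, se, t, df = paired_t([5.0, 6.0, 7.0], [4.0, 4.0, 4.0])
print(diff, df, round(t, 3))  # 2.0 2 3.464
print(ci95(diff, se, t_crit=4.303))  # 4.303 is about the 0.975 quantile of t(2)
```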

Residents rated the quality of feedback at an average of 3.4 of 5 (SD=1.0) when assessed using entrustability scales and 3.5 of 5 (SD=0.8) when assessed with checklists.

Discussion

In the transition from traditional to entrustment-based OSCEs, attention is directed not only toward their assessment characteristics but also toward the resulting feedback.7,10,22 This study yielded 3 key findings. First, examiners consistently provided high-quality feedback across various types of EPAs, offering practical suggestions based on direct observations. Second, examiners’ feedback content was strongly aligned with the intended learning outcomes of the OSCE, covering multiple competency milestones for each EPA assessed. Finally, the 2 scoring methods had no significant impact on feedback quality or alignment.

Prior studies assessing feedback quality have used different approaches. Moineau et al18 used a 5-point rating scale encompassing 7 questions, while Humphrey-Murto et al8 assessed feedback quality based on the examiner’s discussion of neutral, positive, or negative points. Well adapted to analyzing brief feedback, the QuAL score uses 3 objective questions (2 of which are dichotomous) and has demonstrated substantial interrater agreement.21 A high QuAL score requires not only a comprehensive performance description and suggestions for improvement but, more importantly, a link between suggestions and the observed performance, with the aim of fostering autonomy.1

Our study quantified alignment with intended learning outcomes (ie, competency milestones at 61% and task-specific abilities at 46%). These findings are consistent with an analysis of case discussions in our internal medicine residency program, which showed 56% alignment of supervisors’ feedback with intended learning outcomes.23 We believe that our OSCE’s constructive alignment with an entrustment-based curriculum, using EPAs as learning outcomes and milestones as indicators of achievement, contributed to these results.14,16 Given the importance of examiners’ backgrounds,24,25 it is noteworthy that our examiners reported considerable familiarity with the content and milestones of their respective stations.

Our crossover design allowed us to isolate the effect of the 2 scoring methods from other variables (clinical scenario, examiners’ ability, and examiners’ familiarity). We allocated two-thirds of the assessments to the entrustability scale because our principal objective was a thorough analysis of an entrustment-based OSCE. Renting et al26 suggested that a competency-based scoring method, used in the right clinical scenario, should improve the alignment of supervisors’ feedback. However, in most studies, including ours, the scoring methods tested had minimal impact on feedback quality, which primarily reflects the abilities of the examiners.27,28

Our study takes into account the limitations of relying solely on examiners’ self-assessments or residents’ perceptions of feedback, particularly in high-pressure assessment scenarios.12,13,29 Even when feedback is delivered effectively, various emotional and cognitive factors can affect its reception.22 We therefore chose not to compare residents’ and examiners’ perspectives directly, for 3 reasons: QuAL score results offer greater validity; the 2 questionnaires differed; and residents were surveyed after only 2 EPAs, both of which were complex medical situations.

The generalizability of our findings may be limited by the single-institution design, which yielded a relatively small, yet typical, group of examiners. The large number of recordings partially offsets this limitation. However, knowing they were being recorded may have influenced examiners’ feedback. Training videos and EPA descriptions conveyed our expectations and may have contributed to improving feedback; future OSCEs should include similar training interventions to yield comparable results. Because our examiners were volunteers, they may have been more motivated and more familiar with EPAs and feedback than the average clinician, which could explain why feedback quality was higher in our study than in the study by Marcotte et al27 and in a recent analysis of written EPA comments by Madrazo et al.30 This divergence might also reflect our use of audio recordings, which may capture the content of feedback more comprehensively than written comments. Finally, the QuAL score is a relatively new tool that has not previously been used to analyze feedback in OSCEs, and it may not capture all dimensions of feedback quality, especially when feedback is longer and more complex.

As part of a quality assurance process, or in future studies exploring the discrepancies noted above, students and/or examiners could use QuAL scores during the OSCE to self-assess feedback. Furthermore, qualitative data from resident focus groups could shed light on the complex factors that influence residents’ receptivity to feedback during OSCEs.22,31 Future studies on programmatic assessment could aim to promote residents’ progression by strategically selecting EPAs that target situations with few opportunities in clinical practice when designing an OSCE, with the expectation of delivering high-quality feedback aligned with specific learning objectives.28

Conclusions

In our entrustment-based OSCE, examiners consistently offered valuable feedback aligned with intended learning outcomes. Notably, we examined feedback quality and alignment as separate dimensions and found no significant impact of our 2 scoring methods on either.

Supplementary Material

Acknowledgments

The authors wish to thank all the examiners and residents for their contribution to this project; the OSCE expert committee: Gabriel Demchuk, Rémi Lajeunesse, Marie-Josée Dupuis, Rémi Savard-Dolbec, and Julie Bergeron; the Internal Medicine Residency Program (Dr. Isabelle Kirouac), the Department of Medicine (Dr. Jacques Couët), and the Assessment Unit Team at the Faculty of Medicine of Université Laval for supporting this project (Dr. Christine Drouin); Ms. Sarah-Caroline Poitras and Julie Bouchard for helping with many logistical aspects; Dr. Gabriel Demchuk, Dr. Marianne Bouffard-Côté, and Jérôme Bourgoin for helping with the training videos; and Douglas Michael Massing for copyediting the first version.

Editor’s Note

The online supplementary data contains a 3-point entrustability scale and task-specific checklist for the diabetes station and further descriptions of the videos used in the study.

Author Notes

Funding: The authors report no external funding source for this study.

Conflict of interest: The authors declare they have no competing interests.

This work was previously presented at the Canadian Society of Internal Medicine Annual Meeting, Quebec City, Quebec, Canada, October 11-14, 2023.

References

1. Halman S, Fu AYN, Pugh D. Entrustment within an objective structured clinical examination (OSCE) progress test: bridging the gap towards competency-based medical education. Med Teach. 2020;42(11):1283–1288. doi:10.1080/0142159X.2020.1803251

2. CarlLee S, Rowat J, Suneja M. Assessing entrustable professional activities using an orientation OSCE: identifying the gaps. J Grad Med Educ. 2019;11(2):214–220. doi:10.4300/JGME-D-18-00601.2

3. Holzhausen Y, Maaz A, Marz M, Sehy V, Peters H. Exploring the introduction of entrustment rating scales in an existing objective structured clinical examination. BMC Med Educ. 2019;19(1):319. doi:10.1186/s12909-019-1736-2

4. Harrison CJ, Molyneux AJ, Blackwell S, Wass VJ. How we give personalised audio feedback after summative OSCEs. Med Teach. 2015;37(4):323–326. doi:10.3109/0142159X.2014.932901

5. Rush S, Ooms A, Marks-Maran D, Firth T. Students’ perceptions of practice assessment in the skills laboratory: an evaluation study of OSCAs with immediate feedback. Nurse Educ Pract. 2014;14(6):627–634. doi:10.1016/j.nepr.2014.06.008

6. Allen R, Heard J, Savidge M, Bittergle J, Cantrell M, Huffmaster T. Surveying students’ attitudes during the OSCE. Adv Health Sci Educ Theory Pract. 1998;3(3):197–206. doi:10.1023/A:1009796201104

7. Hodder RV, Rivington R, Calcutt L, Hart I. The effectiveness of immediate feedback during the objective structured clinical examination. Med Educ. 1989;23(2):184–188. doi:10.1111/j.1365-2923.1989.tb00884.x

8. Humphrey-Murto S, Mihok M, Pugh D, Touchie C, Halman S, Wood TJ. Feedback in the OSCE: what do residents remember? Teach Learn Med. 2016;28(1):52–60. doi:10.1080/10401334.2015.1107487

9. Hollingsworth MA, Richards BF, Frye AW. Description of observer feedback in an objective structured clinical examination and effects on examinees. Teach Learn Med. 1994;6(1):49–53. doi:10.1080/10401339409539643

10. Martin L, Sibbald M, Brandt Vegas D, Russell D, Govaerts M. The impact of entrustment assessments on feedback and learning: trainee perspectives. Med Educ. 2020;54(4):328–336. doi:10.1111/medu.14047

11. Bing-You R, Hayes V, Varaklis K, Trowbridge R, Kemp H, McKelvy D. Feedback for learners in medical education: what is known? A scoping review. Acad Med. 2017;92(9):1346–1354. doi:10.1097/ACM.0000000000001578

12. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296(9):1094–1102. doi:10.1001/jama.296.9.1094

13. Boehler ML, Rogers DA, Schwind CJ, et al. An investigation of medical student reactions to feedback: a randomised controlled trial. Med Educ. 2006;40(8):746–749. doi:10.1111/j.1365-2929.2006.02503.x

14. Biggs J, Tang C. Teaching for Quality Learning at University. McGraw-Hill Education (UK); 2011.

15. Lockyer J, Carraccio C, Chan MK, et al. Core principles of assessment in competency-based medical education. Med Teach. 2017;39(6):609–616. doi:10.1080/0142159X.2017.1315082

16. ten Cate O, Chen HC. The ingredients of a rich entrustment decision. Med Teach. 2020;42(12):1413–1420. doi:10.1080/0142159X.2020.1817348

17. Kogan JR, Holmboe ES, Hauer KE. Tools for direct observation and assessment of clinical skills of medical trainees: a systematic review. JAMA. 2009;302(12):1316–1326. doi:10.1001/jama.2009.1365

18. Moineau G, Power B, Pion AM, Wood TJ, Humphrey-Murto S. Comparison of student examiner to faculty examiner scoring and feedback in an OSCE. Med Educ. 2011;45(2):183–191. doi:10.1111/j.1365-2923.2010.03800.x

19. The Royal College of Physicians and Surgeons of Canada. Entrustable professional activities for internal medicine. Accessed April 10, 2024. https://www.royalcollege.ca/content/dam/documents/ibd/general-internal-medicine/epa-guide-general-internal-medicine-e.pdf

20. Englander R, Cameron T, Ballard AJ, Dodge J, Bull J, Aschenbrener CA. Toward a common taxonomy of competency domains for the health professions and competencies for physicians. Acad Med. 2013;88(8):1088–1094. doi:10.1097/ACM.0b013e31829a3b2b

21. Chan TM, Sebok-Syer SS, Sampson C, Monteiro S. The Quality of Assessment of Learning (Qual) score: validity evidence for a scoring system aimed at rating short, workplace-based comments on trainee performance. Teach Learn Med. 2020;32(3):319–329. doi:10.1080/10401334.2019.1708365

22. Eva KW, Armson H, Holmboe E, et al. Factors influencing responsiveness to feedback: on the interplay between fear, confidence, and reasoning processes. Adv Health Sci Educ Theory Pract. 2012;17(1):15–26. doi:10.1007/s10459-011-9290-7

23. Lafleur A, Cote L, Witteman HO. Analysis of supervisors’ feedback to residents on communicator, collaborator, and professional roles during case discussions. J Grad Med Educ. 2021;13(2):246–256. doi:10.4300/JGME-D-20-00842.1

24. Kogan JR, Dine CJ, Conforti LN, Holmboe ES. Can rater training improve the quality and accuracy of workplace-based assessment narrative comments and entrustment ratings? A randomized controlled trial. Acad Med. 2023;98(2):237–247. doi:10.1097/ACM.0000000000004819

25. Junod Perron N, Louis-Simonet M, Cerutti B, Pfarrwaller E, Sommer J, Nendaz M. The quality of feedback during formative OSCEs depends on the tutors’ profile. BMC Med Educ. 2016;16(1):293. doi:10.1186/s12909-016-0815-x

26. Renting N, Gans RO, Borleffs JC, Van Der Wal MA, Jaarsma AD, Cohen-Schotanus J. A feedback system in residency to evaluate CanMEDS roles and provide high-quality feedback: exploring its application. Med Teach. 2016;38(7):738–745. doi:10.3109/0142159X.2015.1075649

27. Marcotte L, Egan R, Soleas E, Dalgarno N, Norris M, Smith C. Assessing the quality of feedback to general internal medicine residents in a competency-based environment. Can Med Educ J. 2019;10(4):e32–e47.

28. van der Vleuten C, Verhoeven B. In-training assessment developments in postgraduate education in Europe. ANZ J Surg. 2013;83(6):454–459. doi:10.1111/ans.12190

29. Yarris LM, Linden JA, Gene Hern H, et al. Attending and resident satisfaction with feedback in the emergency department. Acad Emerg Med. 2009;16(suppl 2):76–81. doi:10.1111/j.1553-2712.2009.00592.x

30. Madrazo L, DCruz J, Correa N, Puka K, Kane SL. Evaluating the quality of written feedback within entrustable professional activities in an internal medicine cohort. J Grad Med Educ. 2023;15(1):74–80. doi:10.4300/JGME-D-22-00222.1

31. Kornegay JG, Kraut A, Manthey D, et al. Feedback in medical education: a critical appraisal. AEM Educ Train. 2017;1(2):98–109. doi:10.1002/aet2.10024
