Abstract.

Significance

Widefield microscopy of the entire dorsal part of mouse cerebral cortex enables large-scale (“mesoscopic”) imaging of different aspects of neuronal activity with spectrally compatible fluorescent indicators as well as hemodynamics via oxy- and deoxyhemoglobin absorption. Versatile and cost-effective imaging systems are needed for large-scale, color-multiplexed imaging of multiple fluorescent and intrinsic contrasts.

Aim

We aim to develop a system for mesoscopic imaging of two fluorescent and two reflectance channels.

Approach

Excitation of red and green fluorescence is achieved through epi-illumination. Hemoglobin absorption imaging is achieved using 525- and 625-nm light-emitting diodes positioned around the objective lens. An aluminum hemisphere placed between objective and cranial window provides diffuse illumination of the brain. Signals are recorded sequentially by a single sCMOS detector.

Results

We demonstrate the performance of our imaging system by recording large-scale spontaneous and stimulus-evoked neuronal, cholinergic, and hemodynamic activity in awake, head-fixed mice implanted with a curved “crystal skull” window and expressing the red calcium indicator jRGECO1a and the green acetylcholine sensor . Shielding of illumination light by the aluminum hemisphere enables concurrent recording of pupil diameter changes.

Conclusions

Our widefield microscope design with a single camera can be used to acquire multiple aspects of brain physiology and is compatible with behavioral readouts of pupil diameter.

Keywords: mesoscope, mesoscale, cerebral cortex, fluorescence, hemoglobin absorption

1. Introduction

With the rapidly expanding list of fluorescent probes for neuronal activity and the development of large-scale measurements,1 widefield microscopy has become the measurement modality of choice for mesoscale studies of neuronal circuits and underlying behavior when cellular resolution is not required.2–7 Probes available in distinct spectral variants8 can be spectrally multiplexed for simultaneous imaging of different aspects of neuronal activity.9 Using multiplexed probes for in vivo widefield imaging of head-fixed mice with “crystal skull” cranial windows10 or transcranial imaging, optionally combined with thinned-skull preparations,11 provides a view of the entire dorsal part of the cerebral cortex including the primary sensory, motor, and higher association areas.12,13

In the healthy brain, increases in neuronal activity are often accompanied by increases in blood flow, volume, and oxygenation in the active region. The increase in blood flow and volume occurs, in large part, due to dilation of cerebral arterioles responding to vasoactive messengers released by active neurons.14,15 This hemodynamic response increases oxygen supply beyond the actual demand, leading to a net increase in blood oxygenation. Both oxy- and deoxyhemoglobin (HbO and HbR, respectively) strongly absorb light in the blue-green spectrum. Therefore, the hemodynamic response results in time-variant modulation of fluorescence signals within that spectral range. The methods developed to correct fluorescent signals for this hemodynamic artifact fall into two categories. In the first, enhanced green fluorescent protein (EGFP)-based indicators, including GCaMP, iGluSnFR, and GRAB indicators, are excited off-peak at their isosbestic point in the 390- to 420-nm range. When excited at these wavelengths, fluorescence emission is independent of the analyte concentration (i.e., [Ca²⁺], [glutamate], etc.) but still affected by hemodynamic changes.16,17 This signal can then be regressed out of the analyte-dependent signal to remove hemodynamic artifacts. In the second, changes in hemoglobin absorption are measured directly, using either a single reflectance measurement at 520 to 530 nm or estimates of the changes in HbO and HbR concentrations (Δ[HbO] and Δ[HbR]) from simultaneous acquisition of light absorption at two or more wavelengths.18 Furthermore, quantitative estimates of Δ[HbO] and Δ[HbR] are important measurables on their own; they aid in understanding cerebrovascular physiology and in interpreting noninvasive imaging signals generated through, for example, functional magnetic resonance imaging.19
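As a rough illustration of the two-wavelength approach, the sketch below solves a modified Beer-Lambert relation for the changes in HbO and HbR concentration from reflectance measured at 525 and 625 nm. The extinction coefficients and optical path lengths are placeholder numbers chosen only so the example runs; they are not values from this study, and a real analysis would take them from tabulated hemoglobin spectra and a light transport model. The function name `delta_hb` is likewise hypothetical.

```python
import math

# Placeholder optical constants for illustration only (NOT values from this study).
# Each entry is (HbO, HbR) absorption per unit concentration and path length,
# expressed on a natural-log basis.
PLACEHOLDER_EPS = {"525": (3.0e4, 3.5e4), "625": (7.0e2, 6.0e3)}
# Assumed mean optical path lengths in cm at each wavelength.
PLACEHOLDER_PATH_CM = {"525": 0.03, "625": 0.3}


def delta_hb(i_525, i0_525, i_625, i0_625,
             eps=PLACEHOLDER_EPS, path_cm=PLACEHOLDER_PATH_CM):
    """Return (dHbO, dHbR) from reflectance at two wavelengths.

    Modified Beer-Lambert model at each wavelength:
        -ln(I / I0) = (eps_HbO * dHbO + eps_HbR * dHbR) * L
    """
    # Change in attenuation relative to the baseline intensity I0.
    da_525 = -math.log(i_525 / i0_525)
    da_625 = -math.log(i_625 / i0_625)
    # Coefficients of the 2x2 linear system.
    a11 = eps["525"][0] * path_cm["525"]
    a12 = eps["525"][1] * path_cm["525"]
    a21 = eps["625"][0] * path_cm["625"]
    a22 = eps["625"][1] * path_cm["625"]
    det = a11 * a22 - a12 * a21
    # Cramer's rule.
    d_hbo = (da_525 * a22 - a12 * da_625) / det
    d_hbr = (a11 * da_625 - a21 * da_525) / det
    return d_hbo, d_hbr
```

In practice this would be applied per pixel and per time point, with the baseline intensity taken from a time average of each reflectance channel.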

Current stationary widefield imaging setups typically use light-emitting diodes (LEDs) with sequential illumination, one or two low-noise, high-sensitivity scientific CMOS cameras, and low (smaller than 2×) magnification optics with a large field of view.20 Experimental demands led to various adaptations of this basic blueprint. In systems dedicated to widefield calcium imaging with EGFP-based indicators, blue LEDs (450 to 480 nm) are used for fluorescence excitation, with light delivered through the objective lens or, in some cases, through side-illumination. Hemodynamic correction of green fluorescence signals either uses off-peak (390 to 420 nm) fluorescence excitation3,5,21–28 or is based on reflectance measurements at 520 to 530 nm.6,7,29–31 Several groups incorporated a second fluorescence channel with excitation at 560 to 590 nm to enable two-color fluorescence imaging of red fluorescent calcium indicators like jRCaMP1b or jRGECO1a in combination with either EGFP-derived indicators, such as GCaMP6,32 ,33 or flavoprotein autofluorescence.34,35 Widefield imaging systems dedicated to the investigation of neurovascular interactions typically combine imaging of green4,7,30,36–38 or red fluorescent indicators,34,39,40 or green and red fluorescent indicators35 with reflectance imaging for estimation of Δ[HbO] and Δ[HbR] at two or three wavelengths, typically in the range of 525 to 565 nm and 590 to 625 nm. Light for reflectance imaging is delivered directly from one or more multicolor light engines,7,30,40 through a single light guide,39 through an illumination ring,22,41,42 or through several optical fibers connected to a single light source38 to illuminate the sample surface at an angle. 
Some researchers report the use of linear polarizers in front of the light sources to minimize specular reflection from the glass window.7,30,42 For experiments involving behavioral tasks or visual stimulation, different solutions to block illumination light from reaching the animal’s eyes have been reported, for example, through implanted light shielding,38,43 custom light-shielding between objective and headplate,3,44 or imaging stage design.45

Here, we describe a versatile widefield imaging system that enables two-color fluorescence imaging in addition to concurrent acquisition of two reflectance channels for quantitative estimation of Δ[HbO] and Δ[HbR] with a single sCMOS detector. Specular reflections from curved glass cranial windows, which interfere with reflectance imaging, are minimized by diffuse illumination with 525- and 625-nm light, achieved by combining a custom-built ring illuminator with an aluminum hemisphere between the objective and the cranial window. In addition, the aluminum hemisphere prevents illumination light from reaching the animal’s eyes and thereby avoids unwanted visual stimulation. We provide optical design blueprints, a wiring diagram, a timing chart, data acquisition software, and an inventory of all parts. We demonstrate the performance of our new widefield microscope in awake mice expressing the mApple-based calcium indicator jRGECO1a46 and the EGFP-based acetylcholine (ACh) probe .17

2. Results

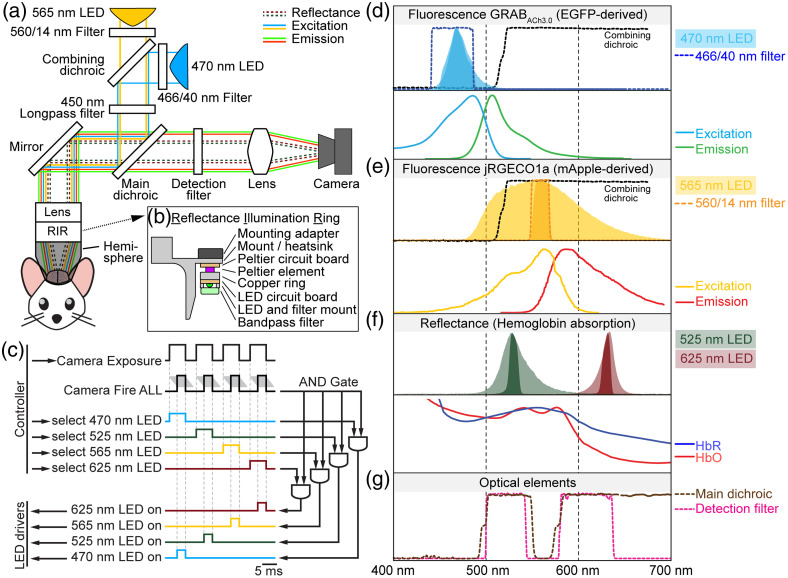

We designed and manufactured a widefield imaging system with a field of view of covering the dorsal cortex of a mouse. We use a single sCMOS detector for sequential recording of two fluorescent indicators (here EGFP-derived and mApple-derived jRGECO1a) as well as hemoglobin absorption at 525 and 625 nm at an effective frame rate of 10 Hz or better without changes in the configuration of the emission path. Figure 1 and Fig. S1 in the Supplementary Material provide an overview of the system layout and illustrate the spectral properties of the light sources and filter elements together with HbO and HbR absorption spectra as well as jRGECO1a and excitation and emission spectra. For fluorescence excitation, we use epi-illumination through the objective lens. A 470-nm LED with a maximal power of 3.7 W provides light for excitation, which is filtered through a 466/40 nm excitation bandpass filter, resulting in a maximal power of 403 mW at the sample surface. For jRGECO1a excitation, we use light from a 565-nm LED with a maximal power of 8.6 W, which is filtered through a 560/14 nm excitation bandpass filter reaching a maximal power of 75 mW at the sample surface. A secondary peak in the 565-nm LED spectrum at is removed by an additional 450-nm longpass filter in the excitation path [Fig. 1(a)]. The collimated and filtered light is coupled into the infinity space using a multiband dichroic mirror (488/561 nm, “main dichroic”). This, in combination with a multiband “detection filter” (523/610 nm), allows and jRGECO1a fluorescence at 500 to 540 nm and 580 to 640 nm, respectively, to reach the sCMOS detector while blocking excitation light at 440 to 480 nm and 550 to 570 nm from reaching the camera [Figs. 1(d), 1(e), and 1(g)]. Typical powers at the sample surface during image acquisition were 90 to 177 and 68 to 75 mW for 470 and 565 nm, respectively, at illumination times of 3 to 6 ms. We observed no or minimal bleaching over continuous acquisition periods of up to 30 min. 
The wavelengths for hemoglobin absorption imaging are within the respective emission bands of the green (525 nm) and red (625 nm) fluorophore [Fig. 1(f)]. The LEDs used for hemoglobin absorption imaging are placed in a custom-designed reflectance illumination ring (RIR) located between the objective lens and the sample surface [Fig. 1(b) and Fig. S1(D) in the Supplementary Material]. The RIR contains six 525-nm LEDs and six 625-nm LEDs positioned at equal distances with maximal powers of 170 mW and 371 mW per LED, respectively. To enable a simple procedure for estimation of Δ[HbO] and Δ[HbR], we placed 10-nm bandpass filters in front of each LED. This leads to a maximal power of 39.5 mW (525 nm) and 51 mW (625 nm) at the sample surface. During image acquisition, typical powers at the sample surface were 10.5 and 4.4 mW for the 525- and 625-nm LEDs, respectively, at 3-ms illumination times. Between the RIR and the sample surface, we placed a hollow aluminum hemisphere that internally reflects the light of the 525- and 625-nm LEDs. For imaging, the opening of the hemisphere is brought close to the sample surface, which provides diffuse illumination and eliminates most reflections from the curved glass window covering the brain surface.

Fig. 1.

Schematics of the widefield imaging system and fluorescence and reflectance spectra. (a) A single sCMOS camera is used to measure emission of green and red fluorophores and two hemoglobin absorption wavelengths. A 565-nm LED excites mApple-based jRGECO1a and a 470-nm LED excites EGFP-based ; both LEDs are filtered through excitation filters. The main dichroic mirror reflects excitation light toward a 45-deg mirror and then a 0.63× objective lens to focus the excitation light onto the sample. Below the objective lens is the reflectance illumination ring (RIR) further detailed in panel (b). An aluminum hemisphere between the RIR and the sample surface internally reflects the light of the 525- and 625-nm LEDs for diffuse illumination and prevents specular reflections and illumination light from reaching the animal’s eyes. Fluorescence emission and reflectance pass the main dichroic and the detection filter before being focused by a tube lens onto the camera. (b) Cross section of the RIR setup. The six 525-nm and six 625-nm LEDs are arranged on a ring-shaped board at equal distances. Peltier elements actively cool the LEDs to prevent temperature changes that would result in unstable illumination intensities. 10-nm wide excitation filters are mounted in front of each LED. (c) Timing diagram to trigger LEDs and camera acquisition: MATLAB controls the DAQ system via digital triggers controlling camera and LED drivers. A different LED illuminates the sample during each frame for near-simultaneous acquisition. The effective frame rate is determined by the length of the four-frame cycle (typically 100 ms). Grey lines next to the camera Fire ALL trigger represent exposure of pixel rows in rolling shutter mode. The Fire ALL trigger is sent when exposure overlaps for all rows. Each LED driver (bottom left) is controlled by the output of a separate AND gate. 
The Fire ALL trigger is the common input to every AND gate, whereas the individual LED selection triggers are the second input for each AND gate. (d) Top: light-blue and dark-blue shaded areas represent the 470-nm LED spectrum before and after passing the excitation filter (BP466/40, blue dotted line). Bottom: excitation (solid blue line) and emission (solid green line) spectrum of EGFP-derived .17 (e) Top: light-orange and dark-orange areas represent the 565-nm LED spectrum before and after passing the excitation filter (BP560/14, orange dotted line). Bottom: excitation (solid yellow line) and emission (solid red line) spectrum of mApple-derived jRGECO1a.16 (f) Top: LED spectra are confined by excitation filters (525 nm in light and dark green; 625 nm in light and dark red before and after passing through the respective excitation filters). Bottom: absorption spectra of oxyhemoglobin (HbO, red line) and deoxyhemoglobin (HbR, blue line) from Prahl.47 (g) Spectra of the main dichroic (brown) and detection filter (pink). Light must pass through both filters to reach the camera.

With the chosen sCMOS camera working in rolling shutter mode, we ensure that the LEDs emit light only when all rows of the sCMOS chip are simultaneously exposed. To this end, we control each LED driver with two digital trigger signals combined through a logical AND gate integrated circuit (IC) [Fig. 1(c)]. The first input to the AND gate IC is generated by the camera and is in the “high” state when all rows are being exposed; the second input is generated by the microscope control computer and selects the LED wavelength to be switched on [Fig. 1(c)]. Only when both signals are in the high state does the LED driver receive a trigger signal to switch the respective LED on. Timing delays of the AND gate IC and the LED driver are and , respectively, enabling virtually delay-free LED power switches for typical exposure times of 2 to 8 ms per individual LED.
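The gating logic can be illustrated with a small software simulation. This is only a sketch of the logical behavior of the hardware AND gates described above, with signals represented as lists of 0/1 samples; the function names, the sample-based time axis, and the channel labels are assumptions made for the example and are not part of the acquisition software.

```python
def led_gate(fire_all, select):
    """Per-sample LED driver trigger: high only when both inputs are high."""
    return [int(bool(f) and bool(s)) for f, s in zip(fire_all, select)]


def simulate_frame(n_samples, fire_start, fire_stop, selected, channels):
    """Simulate one camera frame.

    fire_all is high for samples fire_start <= t < fire_stop, i.e., while all
    sensor rows are exposed. Only the channel named `selected` has its
    selection line held high for the whole frame.
    """
    fire_all = [1 if fire_start <= t < fire_stop else 0 for t in range(n_samples)]
    triggers = {}
    for channel in channels:
        select = [1 if channel == selected else 0] * n_samples
        triggers[channel] = led_gate(fire_all, select)
    return triggers


triggers = simulate_frame(10, 3, 7, "470", ["470", "565", "525", "625"])
print(triggers["470"])  # LED is on only during the all-rows-exposed window
print(triggers["565"])  # unselected LED stays off for the whole frame
```

Because the camera-generated signal is shared by all gates, the selection lines can be switched at any time between frames without risking illumination during row readout.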

The imaging setup is controlled through a DAQ interface programmed via MATLAB. The main acquisition script generates digital trigger signals controlling the imaging setup based on exposure time, which can be different for every wavelength, acquisition rate, and total acquisition time. Additional triggers are generated to synchronize or control external devices, such as stimulus apparatuses, behavioral cameras, electrophysiology recording equipment, and behavioral control devices. The currents of the individual LEDs are set in MATLAB and sent via a USB-based remote-control interface to the LED drivers. The sCMOS camera is controlled through the manufacturer’s software, where parameters, such as field of view and on-camera pixel binning are set. This software also drives image acquisition; however, the camera is set to respond to exposure triggers generated by the DAQ interface, with the (variable) trigger length determining the exposure time. During acquisition, the DAQ interface records feedback signals from camera, microscope control, and external devices allowing synchronization of data streams during off-line postprocessing and analysis.
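To make the timing arithmetic concrete, the following sketch lays out one four-channel acquisition cycle and derives the resulting rates. It is written in Python for illustration and is not the MATLAB acquisition script; the equal-slot layout, the function names, and the example exposure times are assumptions, and rolling-shutter readout time is not modeled.

```python
def build_cycle(exposures_ms, cycle_ms=100.0):
    """Return [(channel, led_on_ms, led_off_ms), ...] for one acquisition cycle.

    Assumption: the cycle is split into equal slots, one camera frame per
    channel, and each LED is on for its exposure time from the start of its slot.
    """
    slot_ms = cycle_ms / len(exposures_ms)
    schedule = []
    for k, (channel, exposure) in enumerate(exposures_ms.items()):
        if exposure > slot_ms:
            raise ValueError(
                f"{channel}: exposure {exposure} ms exceeds slot {slot_ms} ms"
            )
        start = k * slot_ms
        schedule.append((channel, start, start + exposure))
    return schedule


def cycle_rates(n_channels, cycle_ms=100.0):
    """Return (effective frame rate per channel, LED flash rate) in Hz."""
    effective_hz = 1000.0 / cycle_ms
    return effective_hz, effective_hz * n_channels


# Example exposure times in ms (illustrative values, one per wavelength).
schedule = build_cycle({"470": 4.0, "565": 6.0, "525": 3.0, "625": 3.0})
effective_hz, flash_hz = cycle_rates(len(schedule))
```

With a 100-ms cycle and four channels, each channel is sampled at 10 Hz while the sample is illuminated by some LED at 40 Hz.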

Although our single-camera approach reduces complexity and cost, we use multiband filters to image two fluorophores present in the sample at the same time. The lack of spectral separation on the emission side can potentially create crosstalk when fluorophores are excited off-resonance. Note that both fluorophores undergo dynamic changes in fluorescence intensity, so the degree of crosstalk will vary in time and space. Published spectra show that (or EGFP) is essentially not excited at 565 nm, whereas jRGECO1a (or mApple) fluorescence is excited at 470 nm. To quantify the amount of crosstalk under well-controlled conditions, we collected images of purified EGFP and mApple proteins with various combinations of illumination power and exposure time for both the 470- and 565-nm LEDs. In line with the published spectra, we observed that the 565-nm LED practically does not excite purified EGFP while mApple shows fluorescence when excited with the 470-nm LED [Figs. S2(A) and S2(B) in the Supplementary Material]. Based on this in vitro calibration with EGFP and mApple, and the typical acquisition parameters we used for in vivo imaging in - and jRGECO1a-expressing animals, we determined that the level of crosstalk of to fluorescence measured upon 565-nm excitation is , whereas fluorescence from jRGECO1a contributes about 3% to 9% to the fluorescence intensity measured upon 470-nm excitation (Fig. S2 in the Supplementary Material; see Appendix C for a detailed discussion of the crosstalk analysis). To verify that the degree of crosstalk is similar in recordings from awake mice expressing and jRGECO1a, we applied a simplified correction approach that does not incorporate dynamic changes of hemoglobin absorption on fluorescence excitation and emission (details in Appendix C). After applying the correction, we used cross-correlation analysis to verify that the degree of crosstalk is within the same range as established under in vitro conditions (Fig. 
S3 in the Supplementary Material). Here, it should be noted that high correlation between and jRGECO1a signals is expected primarily due to their underlying physiological covariance33 and is only influenced to a small degree by optical crosstalk, as shown in the analysis. Although the in vitro calibration provides the parameters necessary to perform crosstalk correction of in vivo data, we decided not to apply crosstalk correction to the recorded in vivo data because it would likely add noise without sufficient benefit, at least under the experimental conditions of this study (see Appendix C for further discussion).
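For readers who want to see what a linear crosstalk correction could look like, the sketch below inverts a simple two-channel mixing model. It is a generic illustration and not the procedure of Appendix C: the model, the function name `unmix`, and the coefficient values are assumptions, and the sketch ignores hemoglobin absorption in the same way as the simplified correction described above.

```python
def unmix(m470, m565, k_red_into_470, k_green_into_565=0.0):
    """Recover (green, red) signals from measurements at 470- and 565-nm excitation.

    Assumed linear mixing model (hypothetical coefficients):
        m470 = green + k_red_into_470 * red
        m565 = red   + k_green_into_565 * green
    """
    det = 1.0 - k_red_into_470 * k_green_into_565
    if det == 0.0:
        raise ValueError("mixing model is singular")
    green = (m470 - k_red_into_470 * m565) / det
    red = (m565 - k_green_into_565 * m470) / det
    return green, red


# Forward-simulate a pixel with known signals, then recover them.
true_green, true_red = 100.0, 50.0
k_red = 0.1  # hypothetical coefficient, not a calibrated value
measured_470 = true_green + k_red * true_red
measured_565 = true_red
green, red = unmix(measured_470, measured_565, k_red)
```

Because the correction subtracts a scaled copy of one noisy measurement from another, it also propagates noise between channels, which is the trade-off noted above.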

In addition, it has been reported for mApple-based calcium indicators, such as jRGECO1a, that illumination with blue light leads to photoswitching, which causes a reversible, calcium-independent increase in fluorescence intensity.46 In a control experiment with an awake jRGECO1a-expressing mouse, we did not observe jRGECO1a photoswitching under 470-nm illumination conditions typical for two-color fluorescence imaging in - and jRGECO1a-expressing mice. However, when the 470-nm illumination intensity was increased by an order of magnitude compared to typical imaging conditions, we observed photoswitching of jRGECO1a (Fig. S4 in the Supplementary Material).

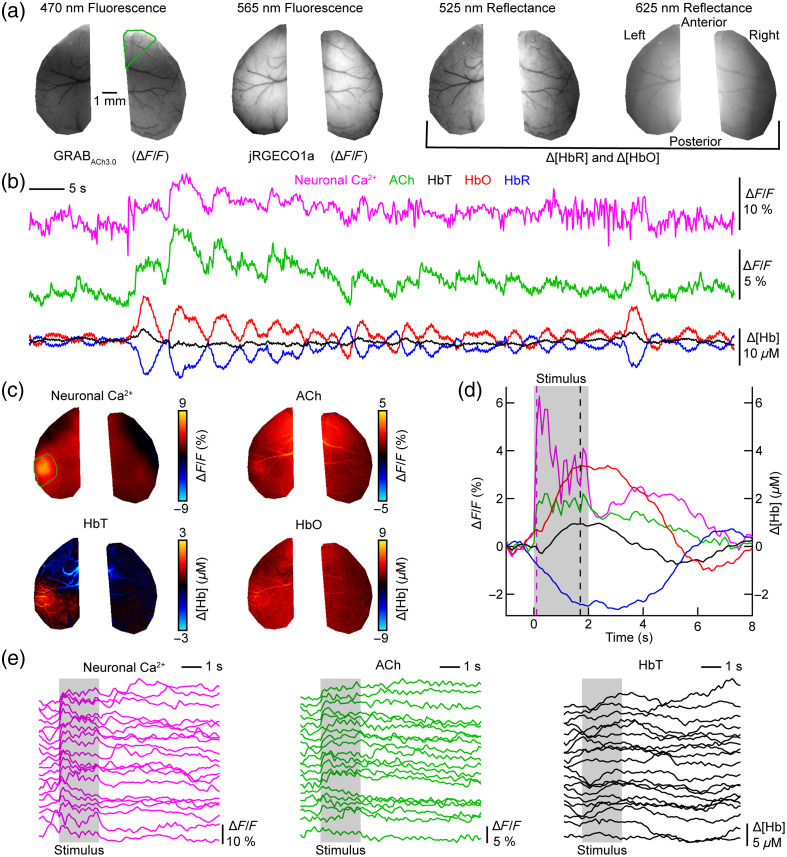

To demonstrate the capabilities of our new imaging system design under in vivo conditions, we recorded spontaneous and stimulus-induced changes in neuronal calcium, ACh, and hemodynamic signals in awake head-fixed mice expressing jRGECO1a and (Fig. 2). Mice were implanted with a curved glass cranial window exposing both hemispheres of the dorsal cortex while leaving the bone in-between the two hemispheres above the superior sagittal sinus intact48 [Fig. 2(a)]. Images were registered to the Allen Mouse Common Coordinate Framework49 to generate time courses from predefined regions of the dorsal cortex. Measurements of spontaneous activity show a high correlation between neuronal calcium and ACh time courses [Fig. 2(b) shows right secondary motor cortex], which is consistent with published results.33 We then recorded the response to a sensory stimulus consisting of a 2-s, 3-Hz air puff sequence (20 repetitions, 25-s interstimulus interval) delivered to the right whisker pad [Figs. 2(c)–2(e)]. Ratio maps show neuronal activation and functional hyperemia in the contralateral barrel cortex [green ROI in calcium map, Fig. 2(c)]. The stimulus-averaged time course calculated from the contralateral barrel cortex shows that the imaging system operates at a sufficient signal-to-noise ratio to record the neuronal calcium response to the six individual air puffs comprising one stimulus train [Fig. 2(d)]. Despite high trial-to-trial variability [Fig. 2(e)], which is consistent with published widefield imaging studies in awake mice,26 responses to individual air puffs are still discernible in individual trial recordings. The time course of the hemodynamic response shows a broad peak and poststimulus undershoot. The hemodynamic correction we apply to the green fluorophore compensates for darkening that occurs when hemoglobin levels increase. Figure S5 in the Supplementary Material compares the trial-averaged ACh time courses with and without correction for hemodynamic artifacts.
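The stimulus-averaged time courses described above can be approximated by the following sketch, which normalizes each trial to its prestimulus baseline and then averages across trials. The function names, the choice of baseline window, and the toy trace are assumptions for illustration; the actual analysis pipeline, including hemodynamic correction and atlas registration, is more involved.

```python
def delta_f_over_f(trace, baseline_idx):
    """Relative change (F - F0) / F0, with F0 the mean over the baseline samples."""
    f0 = sum(trace[i] for i in baseline_idx) / len(baseline_idx)
    return [(f - f0) / f0 for f in trace]


def trial_average(trace, onsets, pre, post):
    """Average the baseline-normalized response across stimulus trials.

    trace  : region-of-interest time course (one value per frame)
    onsets : frame indices of stimulus onset
    pre    : number of frames before onset (used as baseline)
    post   : number of frames from onset onward
    """
    trials = []
    for onset in onsets:
        segment = trace[onset - pre:onset + post]
        trials.append(delta_f_over_f(segment, range(pre)))
    n_trials = len(trials)
    return [sum(trial[i] for trial in trials) / n_trials for i in range(pre + post)]


# Toy trace: baseline of 100 with a 10% step after each of two stimulus onsets.
toy_trace = [100.0] * 5 + [110.0] * 5 + [100.0] * 5 + [110.0] * 5
average = trial_average(toy_trace, onsets=[5, 15], pre=5, post=5)
```

At a 10-Hz effective frame rate, a 2-s stimulus spans 20 frames, so `pre` and `post` would be chosen in multiples of 0.1 s.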

Fig. 2.

Spontaneous and stimulus-evoked activity in the somatosensory cortex. (a) Average of the first ten images collected during a 10-min acquisition period for each illumination wavelength. The two hemispheres were manually masked around the cranial window. A green polygon was drawn around the right secondary motor cortex, which was determined by registration to the Allen Atlas.49 (b) Spontaneous activity in the right secondary motor cortex [green polygon in panel (a)] for calcium, acetylcholine (ACh), and oxygenated, deoxygenated, and total hemoglobin (HbO, HbR, and HbT). (c) Ratio maps averaged across 20 trials showing the response to a 2-s, 3-Hz train of air puffs to the right whisker pad. Maps show data from 1.7 s after stimulus onset except for the calcium map, which shows data from 0.1 s after stimulus onset. (d) Average time course of the stimulus-evoked response in the contralateral barrel cortex [green region in panel (c)]. The pink dashed line indicates when the calcium ratio map in panel (c) is shown, whereas the black dashed line indicates when ACh, HbT, and HbO ratio maps in panel (c) are shown. The gray-shaded area indicates the duration of the stimulus. (e) Response of the contralateral barrel cortex to individual stimulus trains. The trials are sorted according to the magnitude of the calcium response.

With a unit price of >$20,000, the sCMOS camera is the most expensive component of the imaging system. To show how the system performance is affected by camera choice, we repeated the experiment shown in Fig. 2 with a CMOS camera costing around $750 (Fig. S6 in the Supplementary Material). Time courses of signals averaged from spatially connected pixels in a region of interest show stimulus-evoked and spontaneous changes in neuronal calcium, ACh, and hemodynamic signals [Figs. S6(B), S6(D), and S6(E) in the Supplementary Material]. On the other hand, we observed spatially structured noise across the field of view [compare Fig. 2(c) with Fig. S6(C) in the Supplementary Material] that could impact pixel-based analysis methods.

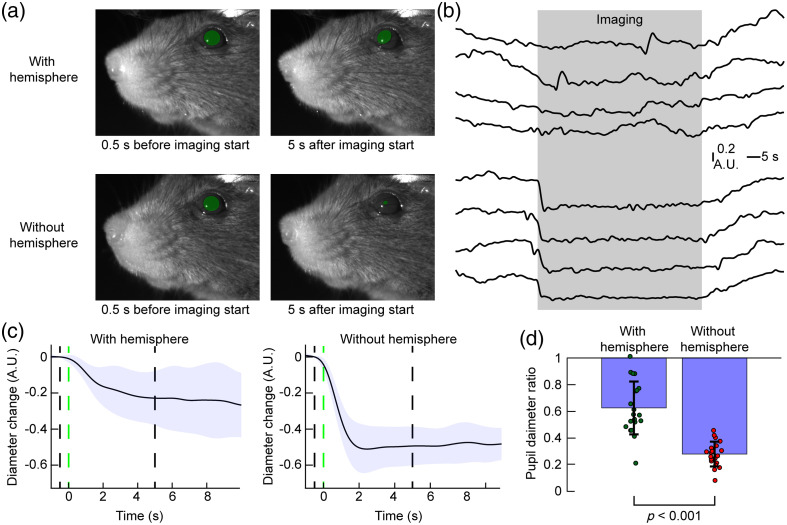

The aluminum hemisphere between the cranial window and the imaging system reflects 525- and 625-nm light and provides diffuse illumination, minimizing specular reflections at the curved glass window that interfere with reflectance imaging. Inhomogeneities of signal intensity across the sample surface likely occur due to the curvature of the “crystal skull” glass window [Fig. 2(a)]. While enabling diffuse illumination, the aluminum hemisphere also acts as a shield preventing the strobing illumination light from reaching the mouse’s eyes. For example, four-color imaging with an effective acquisition rate of 10 Hz would produce an undesired visual stimulus at 40 Hz. To illustrate the shielding effect, we recorded changes of the animal’s pupil diameter during mesoscopic imaging in the presence or absence of the aluminum hemisphere (Fig. 3). For this experiment, a behavioral camera recorded the pupil diameter continuously while widefield illumination across the four wavelengths at 40 Hz was switched on and off at 60-s intervals; we recorded 20 off-to-on and on-to-off transitions per test. Figure 3(a) shows the pupil before and after turning the illumination on; Video 1 shows a recording of the off-to-on transition with and without the hemisphere. Figure 3(b) shows representative 2-min time series covering the off-to-on and on-to-off transitions in the presence or absence of the hemisphere. Without the hemisphere, pupil size measurements are largely dominated by the state of widefield illumination. In the presence of the hemisphere, pupil size changes are largely independent of widefield illumination [Fig. 3(c)]. We then compared the relative change in pupil size from 1.5 to 0.5 s before versus 2 to 3 s after the start of widefield illumination, averaged over 20 transitions, and observed a significantly larger drop in pupil size without the hemisphere [; t-test; Fig. 3(d)]. 
Pupil tracking is often used as an important variable to define behavioral states50,51 and it is therefore desirable to combine pupil recordings and mesoscale imaging.40 In our system, the hemisphere eliminates interference of widefield illumination on behavioral recordings and enables high-quality measurements of pupil dynamics during mesoscale imaging.
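The pupil comparison can be sketched as follows: for each off-to-on transition, the mean pupil size 2 to 3 s after illumination onset is divided by the mean 1.5 to 0.5 s before onset, and the two sets of ratios (with and without hemisphere) are then compared. The function names are hypothetical, and the use of Welch's unequal-variance form of the t statistic is an assumption, since the variant of the t-test is not specified here; a p-value would additionally require the t distribution from a statistics library.

```python
import math
from statistics import mean, variance


def window_mean(times_s, values, t_start, t_stop):
    """Mean of values whose time stamps fall within [t_start, t_stop]."""
    return mean(v for t, v in zip(times_s, values) if t_start <= t <= t_stop)


def pupil_ratio(times_s, pupil_size):
    """Pupil size 2 to 3 s after onset relative to 1.5 to 0.5 s before onset.

    times_s is given relative to the start of widefield illumination (t = 0).
    """
    after = window_mean(times_s, pupil_size, 2.0, 3.0)
    before = window_mean(times_s, pupil_size, -1.5, -0.5)
    return after / before


def welch_t(sample_a, sample_b):
    """Welch's t statistic for two independent samples (assumed test variant)."""
    se = math.sqrt(variance(sample_a) / len(sample_a)
                   + variance(sample_b) / len(sample_b))
    return (mean(sample_a) - mean(sample_b)) / se


# Toy transition in which the pupil shrinks to half its baseline size.
times = [-1.5, -1.0, -0.5, 2.0, 2.5, 3.0]
sizes = [10.0, 10.0, 10.0, 5.0, 5.0, 5.0]
ratio = pupil_ratio(times, sizes)
```

A ratio close to 1 indicates that turning on the illumination did not change pupil size, which is the behavior expected with the hemisphere in place.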

Fig. 3.

Aluminum hemisphere shields illumination and reduces pupil constriction during mesoscale imaging. (a) Images of the mouse face 0.5 s before and 5 s after widefield imaging acquisition begins. Green circles highlight the pupils. (b) Example 2-min pupil diameter time courses showing the off-to-on and on-to-off transition with (top) and without (bottom) hemisphere. The gray-shaded area indicates the period when widefield acquisition was performed. (c) Average pupil diameter changes upon beginning of widefield imaging (20 trials; traces show average ± standard deviation). Pupil diameter 1.5 to 0.5 s before imaging was defined as baseline for each trial. The green dashed line indicates the start of widefield imaging; the black dashed lines indicate time points of the images shown in panel (a). (d) Comparison of the ratio of pupil size 2 to 3 s after start of imaging to pupil size 1.5 to 0.5 s before imaging. Significance was tested with a Student’s t-test (Video 1, MP4, 5.93 MB [URL: https://doi.org/10.1117/1.NPh.11.3.034310.s1]).

3. Discussion and Conclusion

Here, we describe a new widefield microscope design for mesoscopic imaging of dorsal mouse cortex. Several groups describe widefield calcium imaging with a single fluorescent indicator and concurrent hemodynamic imaging, whereas other groups describe two-color fluorescence widefield imaging without hemodynamic imaging; we identified only one report describing a system for combined two-color fluorescence and hemodynamic imaging, which uses two cameras.35 Compared to previous publications, our system has several unique features:

First, the system enables quasi-simultaneous imaging of two spectrally distinct fluorophores (here: EGFP-based and mApple-based jRGECO1a) together with quantitative estimates of Δ[HbO] and Δ[HbR] via two-color absorption imaging on a single detector. Changes in [HbO] and [HbR] are used to correct hemodynamic artifacts in the recorded fluorescence signals. Further, we can use them to study the relationship between cortical hemodynamics and different aspects of brain activity, such as neuronal firing and neuromodulation, thereby gaining further insights into brain state-dependent regulation of cerebral blood flow and metabolism.52

Second, we use a single detector. Using one camera drastically reduces the overall cost to build the system but requires sequential image acquisition for every wavelength (here: four wavelengths). We achieve sufficient SNR with negligible spectral crosstalk at a 10-Hz acquisition rate, which is within the range used in widefield imaging studies in awake animals.23,33

Finally, the illumination light is contained within an aluminum hemisphere. In addition to providing diffuse illumination for light from the 525- and 625-nm LEDs to minimize reflections at the glass window, the hemisphere blocks most of the illumination light from the animal’s view. This prevents interference with behavioral measurements of pupil diameter as well as unwanted stimulation of the visual system. Pupil size measurements are often used as a noninvasive, indirect readout of alertness.50,53 We expect that our shielded illumination design will enable seamless integration of mesoscale imaging with behavioral experiments based on visual stimulation27,51 and virtual reality.54

The overall cost to build our widefield imaging system with the components used is around $45,000, with the camera comprising the bulk of the cost (∼$25,000). Although this is a lower price than for typical commercially available widefield imaging systems, modifications could further reduce the overall cost of the system. Depending on the brightness of the fluorophores used, their expression level, and experimental demands, sufficient imaging performance could be achieved with a less expensive camera (see Fig. S6 in the Supplementary Material). Furthermore, we used commercially available LED drivers as well as commercially available LEDs for fluorescence excitation. Using a custom design or already available open-source components with similar performance characteristics, especially regarding their response time to digital triggers, could further reduce the overall cost (see, e.g., Ref. 41).

Our imaging system can easily be modified or expanded to suit the requirements of different experimental studies; changing an LED and the corresponding optical filters will allow combining other fluorescent indicators if they are spectrally separated. This would, for example, allow integration of calcium indicators excited with near-infrared light.55 As the spectral diversity of genetically encoded molecular probes expands, widefield imaging with three spectrally separated fluorescent indicators might soon become a feasible approach. Furthermore, we and others combined mesoscale imaging with extracellular electrophysiology, providing simultaneous access to cortical network activity as well as single- or multiunit activity in targeted cortical and subcortical areas.21,56,57

Our current system design has the following limitations. First, despite aiming to minimize specular reflections and illumination inhomogeneity of the 525- and 625-nm LEDs through diffuse illumination, we still observe inhomogeneous levels of absolute image brightness across the field of view. In practice, however, some variations of absolute brightness will not affect the readout of relative (i.e., intensity-normalized) changes in fluorescence or absorption, except for potentially reduced signal-to-noise levels in darker regions of the image. A possible way to further improve illumination homogeneity of both reflectance and fluorescence illumination is the incorporation of an integration device with uniformity correction, such as EUCLID,58 into our imaging system. Further, uneven signal intensity in the medial-to-lateral direction is due to the curvature of the glass window covering the cranial exposure [see Fig. 2(a)]. Use of curved glass also causes different cortical depths to match the focal plane of the imaging system, which potentially introduces spatially heterogeneous sampling bias. Here, the use of adaptive lens optics such as metalenses59 could improve uniformity of illumination and could be used to match the focal plane across the field of view. Designing an imaging system with a single camera reduces overall system cost. However, the use of two cameras would allow for higher acquisition rates through spectral multiplexing. Further, the dual-band dichroic mirror and emission filter cut the available spectral bandwidth of the emission band (500 to 540 nm) and the mApple/jRGECO1a excitation band (550 to 570 nm). This potentially enables crosstalk due to off-peak excitation of the red fluorophore (see further discussion in Appendix C). 
However, with the fluorophores at the expression levels used, we achieve sufficient SNR with negligible spectral crosstalk at a 10-Hz acquisition rate, which is within the range of appropriate temporal resolution for widefield imaging studies in awake animals.23,33 Furthermore, the extension of the imaging system by a second camera requires minimal modifications to the overall layout, allowing for higher acquisition speeds, if desired. Another consideration during the design of our system was the use of lasers instead of LEDs for excitation of the green and red fluorescent proteins. While less expensive, LEDs have a broad emission spectrum that requires clean-up through excitation filters. This limits the emitted light to the usable spectral range [see, e.g., Fig. 1(e), 565-nm LED] leading to an overall reduction in the available power compared to the nominal output (75 mW versus 8.6 W for the 565-nm LED), potentially requiring longer integration times to achieve sufficient SNRs. Here, the use of a laser would enable excitation at higher power levels, if required. A drawback of using lasers instead of LEDs would be a substantial increase in the cost of the system.

In summary, given the versatility of our system and the comparatively low cost in contrast to commercial intravital imaging setups, we expect our widefield imaging system design to become a useful platform for neurovascular studies in the neuroscience and neurophotonics communities.

4. Appendix A: Methods

4.1. Experimental Animals

All procedures were conducted with approval from the Boston University Institutional Animal Care and Use Committee and in accordance with the National Institutes of Health Guide for the Care and Use of Laboratory Animals. Standard rodent chow and water were provided ad libitum. Mice were housed in a 12-h light cycle with lights turning on at 7:30 am. We used three female mice of the Thy1-jRGECO1a GP8.20 line16,46; cortex-wide expression of the green acetylcholine sensor was induced by injection of AAV9-hSyn- (WZ Biosciences, ) into each transverse sinus ( per animal) of 1-day-old neonates according to procedures described by Lohani et al.33

Headpost implantation and craniotomy in adult (8- to 16-week-old) animals followed procedures described by Kilic et al.48 Briefly, a custom-designed headpost machined from titanium was attached to the cranium and a modified crystal skull curved glass window was used to replace the dorsal cranium on both hemispheres.10 Prior to surgery, the original curved glass (12 mm width, labmaker.org) was cut in half to obtain separate glass pieces for each hemisphere. Following the implantation procedure, a silicone plug was placed on top of the glass window and a 3D-printed cap was fixed to the headpost to prevent heat loss. Mice were allowed to recover for at least 1 week following surgery before behavioral training commenced.

To adapt mice to be comfortable under head fixation, animals were handled until they remained calm in the gloved hand of the investigator. Mice were then head-fixed for increasing periods while they received a reward of sweetened condensed milk. Data acquisition was started when animals tolerated 1 h of head fixation.

4.2. Imaging System Optical Components

The imaging system is assembled from standard optomechanical components compatible with 2-inch lens tubes. The imaging system is placed on an elevated platform (, Thorlabs, MB2424) on a vibration-isolating table.

A 470-nm LED (Thorlabs, SOLIS-470C) is used to excite the green acetylcholine sensor, and a 565-nm LED (Thorlabs, SOLIS-565C) is used to excite jRGECO1a. A 466/40-nm excitation filter (Semrock, FF01-466/40, diameter 50 mm) is placed in front of the 470-nm LED and a 560/14-nm excitation filter (Semrock, FF01-560/14, diameter 50 mm) is placed in front of the 565-nm LED. A dichroic mirror combines excitation light from 565- and 470-nm LEDs (Semrock, FF520-Di02, ). The combined output of these LEDs is filtered through a 450-nm longpass filter (Chroma, AT450lp) to remove short-wavelength light from the 565-nm LED that leaks through the primary excitation filter. A dual-band “main dichroic” beam splitter (Semrock, Di03-R488/461, ) reflects excitation light toward a 45-deg mirror and transmits emitted light toward the camera. The 45-deg mirror (Thorlabs, PF20-03-P01, diameter 50.8 mm) reflects excitation light down toward the mouse and reflects emitted light into the plane of the camera. A dual-band emission filter (Semrock, FF01-523/610, diameter 50 mm) is placed between the main dichroic and the camera. A tube lens (Olympus, MVX-TLU-MVX10) projects the image onto the sCMOS camera (Andor, Zyla 4.2P-CL10 or Sona 4.2B-6).

An objective lens (Olympus, ZDM-1-MVX063) focuses the fluorescence excitation light onto the brain for epi-illumination and projects the emitted light toward the camera. The objective lens is surrounded by the RIR assembly, which contains six equally spaced 525-nm LEDs (CREE LED, XBDGRN-00-0000-000000C02) and six equally spaced 625-nm LEDs (CREE LED, XBDRED-00-0000-000000701) for reflectance imaging. Bandpass filters were cut to 9.4-mm diameter and placed in front of the reflectance LEDs: 532/10-nm bandpass filters (Edmund Optics, 65094) for the 525-nm LEDs and bandpass filters (Edmund Optics, 65105) for the 625-nm LEDs. Six Peltier elements (Ferrotec, 81044) driven by a feedback temperature controller (Thorlabs, TED200C) actively cool the LEDs in the RIR to maintain them at room temperature during data acquisition. Temperature is measured on the LED ring with a 10-kΩ thermistor (TDK, NTCG163JF103FT1). Heat from the Peltier elements is transferred to a custom heat sink made of aluminum. The RIR also holds the custom bead-blasted aluminum hemisphere, which has a 15-mm opening located directly above the cranial window.

To perform imaging tests with a different camera model (Basler, acA1920-150uc, with a PYTHON 2000 CMOS sensor from ON Semiconductor in global shutter mode), a 45-deg mirror (Edmund Optics, 83-538) behind the detection filter was used to divert the light path to a second camera arm with an alternative tube lens (Thorlabs, TTL180-A). The camera was controlled through the manufacturer’s software (Pylon Viewer); binning (sum) with analog gain disabled was used with exposure times set to 6 ms for all frames.

4.3. Control of Imaging Acquisition

We use a computer-controlled DAQ device (National Instruments, PCIe-6363) to operate the imaging system. The DAQ device is connected to two I/O connector boards (National Instruments, CB-68LPR) inside a control interface box. The control interface relays a DAQ-generated exposure trigger to the camera. An IC with an OR gate (Texas Instruments, SN74AHC32N) within the control interface receives the “Fire ALL” trigger from the camera as one input. The Fire ALL trigger is high while the exposure periods of all rows overlap in rolling shutter mode. The other input to the OR gate is a camera bypass trigger that allows the LEDs to be triggered while the camera is off. The output of the OR gate is the common input to an IC with four AND gates (Texas Instruments, SN74AC08N). The second, wavelength-specific input to each individual AND gate is the LED selection trigger generated by the DAQ system. The output of each AND gate connects to one LED driver (Thorlabs, DC2200) controlling one type of LED. During normal operation of the imaging system, the Fire ALL trigger therefore controls when the selected LED turns on. We use a custom MATLAB script to control the DAQ system and image capture software (Andor, SOLIS) to control the camera and image acquisition.
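The gating logic described above can be summarized as a small truth function. The following is only an illustrative Python sketch of the OR/AND arrangement (the actual system implements it in hardware); the function name, argument names, and LED labels are hypothetical.

```python
def led_drive_signals(fire_all, bypass, led_select):
    """Return the on/off state of each LED driver for one instant in time.

    fire_all   -- camera "Fire ALL" output (True while all rows are exposing)
    bypass     -- camera bypass trigger (lets LEDs fire while the camera is off)
    led_select -- dict mapping an LED label to its DAQ-generated selection trigger
    """
    # OR gate: either the camera or the bypass line can open the gate.
    gate = fire_all or bypass
    # One AND gate per LED type: common gate signal AND wavelength-specific line.
    return {name: gate and selected for name, selected in led_select.items()}


# Example: the DAQ selects the 470-nm LED for the current frame.
select_470 = {"470": True, "565": False, "525": False, "625": False}
during_exposure = led_drive_signals(True, False, select_470)
between_frames = led_drive_signals(False, False, select_470)
camera_off_test = led_drive_signals(False, True, select_470)
```

In this sketch, only the selected LED is on, and only while either the Fire ALL line or the bypass line is high.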

The animal is positioned under 525-nm illumination and the platform carrying the animal is adjusted to bring the brain surface into focus. Then the aluminum dome is lowered to minimize the distance between its opening and the brain surface. Illumination intensities (i.e., LED currents) are adjusted to bring image intensities into the dynamic range of the camera at 16-bit image depth. The acquisition window is cropped to the size of the field of view. Image height (number of rows) is a variable entered into the MATLAB script controlling image acquisition because the length of the period when all rows are exposed (“Fire ALL”) depends on image height and requires adjustment of the camera exposure trigger generated by the DAQ system. Typical image sizes were with a field of view and resolution at binning. Based on selected exposure times and frame rate, the MATLAB script calculates the total number of frames per acquisition run; this number is entered into the image capture software. The camera is set to “External Exposure” mode and image acquisition is started. Then execution of the MATLAB script is started, causing the DAQ interface to send LED selection and camera triggers to the control interface.

4.4. Sensory Stimulation and Behavioral Recordings

The MATLAB acquisition script generates additional triggers for synchronization with behavioral apparatuses, such as to deliver stimuli by puffing compressed air to the animals’ whiskers. Stimuli are defined by duration, frequency, repetitions, interstimulus interval for individual stimuli and sequences of prestimulus baseline, stimulus, and poststimulus observation period. Based on these parameters, the MATLAB script calculates the required imaging time and the number of images. For air puff stimuli, the 5-V trigger is sent to a picopump (WPI, PV830) connected to a 2-mm diameter plastic tube that terminates in a glass capillary to deliver pressurized air to the mouse whiskers.
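As a rough illustration of how the required imaging time and frame count follow from the stimulus parameters, consider the Python sketch below. It is not the authors' MATLAB script: the function and parameter names are hypothetical, and it assumes that one acquisition cycle contains one frame per channel (four channels) and that every trial has the same prestimulus, stimulus, and poststimulus durations.

```python
import math


def acquisition_plan(pre_s, stim_s, post_s, n_trials, cycle_rate_hz,
                     channels_per_cycle=4):
    """Return (total time in s, number of cycles, number of camera frames)."""
    trial_s = pre_s + stim_s + post_s          # duration of one trial
    total_s = trial_s * n_trials               # duration of the whole run
    # Round up so the last trial is fully covered; the small offset guards
    # against floating-point results such as 2000.0000000001.
    n_cycles = math.ceil(total_s * cycle_rate_hz - 1e-9)
    n_frames = n_cycles * channels_per_cycle   # one frame per channel per cycle
    return total_s, n_cycles, n_frames


# Example with assumed values: 5 s baseline, 2 s stimulus, 13 s observation,
# 10 trials, 10 acquisition cycles per second.
total_s, n_cycles, n_frames = acquisition_plan(5.0, 2.0, 13.0, 10, 10.0)
```

With these assumed values the run lasts 200 s and comprises 2000 cycles, i.e., 8000 camera frames, which is the kind of number that would be entered into the image capture software.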

The animal’s face and whiskers are recorded during imaging with a CMOS camera (Basler, acA1920-150uc) through a variable zoom lens (Edmund Optics, 67715). A 940-nm LED (Thorlabs, M940L3) continuously illuminates the animal, and a 920-nm longpass filter prevents light from the microscope from contaminating the camera recording. The mesoscope control system triggers the CMOS camera to acquire a single frame during each acquisition cycle.

An accelerometer (Analog Devices, ADXL335) is placed underneath the mouse bed to record the animal’s motion. The accelerometer generates analog signals for motion in the x, y, and z directions, which are recorded by a separate DAQ system (NI, USB-6363) receiving a synchronization signal from the mesoscope control system.

4.5. Image Processing

The camera control software (Andor, Solis) stores the acquired images as raw data (“.dat”) together with metadata files (“.ini” and “.sifx”). For all subsequent import and processing steps, we use MATLAB. Raw image data are loaded into MATLAB, bypassing conversion into image (.tif) files by the camera software and enabling multistream data import from high-performance solid-state drives with MATLAB’s multiprocessor environment. For all channels, a baseline image representing the temporal average of all images for that channel is generated. For fluorescence channels, ΔF/F images are computed on a pixel-by-pixel basis by dividing each image by the baseline image. After calculating ΔF/F, linear trends are removed from the time course of each pixel using the MATLAB function “detrend.”

The modified Beer–Lambert law is used to calculate changes in HbO and HbR concentrations from 525- and 625-nm reflectance (see implementation for MATLAB’s multiprocessor environment in Appendix B). Estimated Δ[HbO] and Δ[HbR] are used to correct green fluorescence measurements for artificial intensity changes caused by dynamic changes in excitation and emission light absorption by hemoglobin. The correction method has been described by Ma et al.4 and its implementation is further detailed in Appendix B. In this correction approach, the measured fluorescence is equal to the artifact-free change in fluorescence multiplied by a correction factor containing the pathlength in tissue and the tissue absorption coefficient. Pathlengths have been previously estimated based on Monte-Carlo simulations.4 Since hemoglobin accounts for most of the light absorption in brain tissue, light absorption depends largely on [HbO] and [HbR] and the known HbO and HbR extinction coefficients at the respective wavelengths of fluorescence excitation and emission. We calculate the light absorption coefficient for every pixel at every time point based on our estimations of Δ[HbO] and Δ[HbR] and apply it to correct for hemodynamic darkening in the green fluorescence channel.
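The per-pixel operations described above (baseline normalization, linear detrending, and an exponential hemoglobin correction factor of the kind described by Ma et al.) can be sketched for a single pixel time course as follows. This is a minimal Python illustration, not the authors' MATLAB code; all function names are hypothetical, the extinction coefficients and pathlengths passed in are placeholders to be supplied by the user, and the exact order in which the authors combine these steps is not specified here.

```python
import math


def baseline_normalize(trace):
    """Divide a pixel time course by its temporal mean (the baseline image value)."""
    baseline = sum(trace) / len(trace)
    return [value / baseline for value in trace]


def remove_linear_trend(trace):
    """Subtract the least-squares straight line, similar in spirit to MATLAB's detrend."""
    n = len(trace)
    t_mean = (n - 1) / 2
    y_mean = sum(trace) / n
    s_tt = sum((t - t_mean) ** 2 for t in range(n))
    s_ty = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(trace))
    slope = s_ty / s_tt
    return [y - (y_mean + slope * (t - t_mean)) for t, y in enumerate(trace)]


def correct_for_hemoglobin(f_ratio, d_hbo, d_hbr, eps_ex, eps_em, x_ex, x_em):
    """Scale a baseline-normalized fluorescence value by the absorption factor.

    eps_ex, eps_em -- (eps_HbO, eps_HbR) at the excitation and emission wavelengths
    x_ex, x_em     -- assumed pathlengths at the excitation and emission wavelengths
    """
    d_mu_ex = eps_ex[0] * d_hbo + eps_ex[1] * d_hbr   # absorption change, excitation
    d_mu_em = eps_em[0] * d_hbo + eps_em[1] * d_hbr   # absorption change, emission
    # Increased absorption darkens the measured signal, so the correction
    # multiplies by the inverse of the attenuation factor.
    return f_ratio * math.exp(d_mu_ex * x_ex + d_mu_em * x_em)
```

For example, `remove_linear_trend(baseline_normalize(trace))` yields a zero-mean, trend-free relative time course for one pixel, whereas `correct_for_hemoglobin` is applied to the baseline-normalized (not yet detrended) ratio.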

Measurements with several consecutive trials are interpolated at 100-ms intervals using a time vector relative to the stimulus onset. Trial-averaged ratio maps are calculated by averaging individual time courses across trials.
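A minimal Python sketch of this resampling and averaging step is given below. It assumes linear interpolation (the interpolation method is not stated in the text) and a single pixel or region time course; the function names and the `pre_s`/`post_s` window parameters are hypothetical.

```python
def interpolate_linear(times, values, query_times):
    """Piecewise-linear interpolation; times and query_times must be increasing."""
    result = []
    j = 0
    for q in query_times:
        # Advance to the sample interval that contains the query time.
        while j < len(times) - 2 and times[j + 1] < q:
            j += 1
        t0, t1 = times[j], times[j + 1]
        w = (q - t0) / (t1 - t0)
        result.append(values[j] + w * (values[j + 1] - values[j]))
    return result


def trial_average(frame_times, trace, stim_onsets, pre_s, post_s, step_s=0.1):
    """Resample each trial on a common time vector relative to stimulus onset
    (100-ms steps by default) and average across trials."""
    n_steps = int(round((pre_s + post_s) / step_s)) + 1
    rel_t = [-pre_s + k * step_s for k in range(n_steps)]
    trials = [
        interpolate_linear(frame_times, trace, [onset + t for t in rel_t])
        for onset in stim_onsets
    ]
    mean = [sum(column) / len(trials) for column in zip(*trials)]
    return rel_t, mean
```

Applying `trial_average` to every pixel (or to a region-averaged trace) gives the trial-averaged time course on a 100-ms grid aligned to stimulus onset.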

Behavioral video recordings are stored as image sequences and imported into MATLAB. To calculate pupil size, an elliptical region is drawn defining the eye and an intensity threshold is manually defined. The pupil size is calculated as the number of pixels below the threshold. The resulting time series is normalized to its maximal and minimal pupil size within the recording.
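The pupil-size readout can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' MATLAB code: frames are represented as nested lists of gray values, the elliptical region is defined by a hypothetical center and radii rather than drawn interactively, and the normalization is assumed to map the smallest and largest pupil sizes of the recording to 0 and 1.

```python
def elliptical_mask(height, width, center_row, center_col, radius_row, radius_col):
    """Boolean mask that is True inside an axis-aligned ellipse."""
    return [
        [((r - center_row) / radius_row) ** 2
         + ((c - center_col) / radius_col) ** 2 <= 1.0
         for c in range(width)]
        for r in range(height)
    ]


def pupil_pixel_count(frame, mask, threshold):
    """Count pixels inside the mask whose intensity is below the threshold."""
    return sum(
        1
        for frame_row, mask_row in zip(frame, mask)
        for value, inside in zip(frame_row, mask_row)
        if inside and value < threshold
    )


def normalize_to_range(series):
    """Rescale a time series so that its minimum is 0 and its maximum is 1."""
    smallest, largest = min(series), max(series)
    return [(v - smallest) / (largest - smallest) for v in series]
```

Calling `pupil_pixel_count` on every video frame and passing the resulting list to `normalize_to_range` gives a relative pupil-size trace for the recording.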

4.6. In Vitro Crosstalk Calibration

Solutions of purified EGFP (Abcam, ab84191) and mApple (kindly provided by Ahmed Abdelfattah) in phosphate-buffered saline (PBS) were prepared prior to imaging. samples of EGFP, mApple, or PBS-only control solution were each injected into one channel of separate 6-channel slides (ibidi, μ-Slide VI-Flat). The center of the fluorophore-filled channel was focused under the widefield imaging system before starting image acquisition for each slide. Illumination during an imaging sequence alternated between the 470- and 565-nm LEDs while stepping through a series of camera exposure times and increasing LED currents. Three trials were conducted for each sample, with the position of the slide under the objective being shifted between trials to account for intensity variations. To calculate the fluorescence intensity, pixel values were averaged over a region of interest covering the center of the fluorophore-containing channel. Then we took the mean of the three trials per condition and subtracted the fluorescence intensity of the PBS control. Further analysis of crosstalk is detailed in Appendix C.
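The intensity calculation for one illumination condition can be illustrated with a short Python sketch. It assumes a rectangular region of interest and images stored as nested lists; the function names and the ROI parameters are hypothetical and not taken from the authors' code.

```python
def roi_mean(image, rows, cols):
    """Average the pixel values inside a rectangular region of interest."""
    values = [image[r][c] for r in rows for c in cols]
    return sum(values) / len(values)


def background_subtracted_intensity(sample_trials, pbs_trials, rows, cols):
    """Mean ROI intensity across trials minus the PBS-only control intensity."""
    sample = sum(roi_mean(img, rows, cols) for img in sample_trials) / len(sample_trials)
    control = sum(roi_mean(img, rows, cols) for img in pbs_trials) / len(pbs_trials)
    return sample - control
```

In this sketch, `sample_trials` and `pbs_trials` would each hold the three images acquired for one combination of LED current and exposure time.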

4.7. Photoswitching of jRGECO1a

A variant of the MATLAB acquisition script was used to create two types of acquisition cycles: one cycle includes 470-nm illumination but disables image acquisition during 470-nm illumination (no camera exposure trigger is sent and the Fire ALL trigger is bypassed), whereas the other cycle does not include 470-nm illumination and has normal image acquisition. The combination of both cycles enables intermittent 470-nm illumination cycles at variable frequencies. Image analysis is performed on baseline-normalized time courses averaged from all pixels across the brain surface.

5. Appendix B: Optimization of Δ[HbO] and Δ[HbR] Estimation Process for MATLAB Using an Explicit Solution of HbR and HbO with Measured Reflectance at 525 and 625 nm

Concentration changes of deoxy- and oxyhemoglobin ([HbR] and [HbO]) can be estimated using a system of linear equations (here, we use intensity measurements at two wavelengths (525 and 625 nm) and two unknowns ([HbR] and [HbO]). For further discussion, see Ma et al. (2016).18

The molar extinction coefficients of Hb and HbO at 525 and 625 nm are known variables:

| (1) |

| (2) |

We estimate the optical density in every pixel for every time point from the measured intensities and as follows:

| (3) |

| (4) |

The pathlengths and are known variables; the “baseline” intensities and are estimated using the temporal average of the intensities at the given pixel.

When we solve Eqs. (1) and (2) explicitly, combine them with Eqs. (3) and (4), and combine all variables that are constant over space and/or time, we enable an efficient (i.e., fast) approach for estimating [Hb] and [HbO].

To estimate , we first solve Eqs. (1) and (2) for :

| (5a) |

| (5b) |

and combine Eqs. (5a) and (5b):

| (5c) |

Then we solve Eq. (5c) for :

| (5d) |

| (5e) |

| (5f) |

| (5g) |

Then we combine all constant variables in Eq. (5g) to define and :

| (5h) |

Now, we insert the definition of and from Eqs. (3) and (4):

| (6a) |

and combine again all variables that are constant over time:

| (6b) |

| (6c) |

Finally, we combine all constant variables into three parameters , , and :

| (7a) |

with

| (7b) |

| (7c) |

and

| (7d) |

Note that and are invariant in time and space, whereas is only time-invariant—its value changes for every pixel.

In the same way, we can estimate [Hb]. First, we solve Eqs. (1) and (2) for [HbO]:

| (8a) |

| (8b) |

and combine Eqs. (8a) and (8b):

| (8c) |

We solve Eq. (8c) for :

| (8d) |

| (8e) |

| (8f) |

| (8g) |

We combine all constant variables in Eq. (8g) to define and

| (8h) |

and insert the definition of and from Eqs. (3) and (4):

| (9a) |

Then, we combine again all variables that are constant over time:

| (9b) |

| (9c) |

Finally, we combine all constant variables into three parameters , , and :

| (10a) |

with

| (10b) |

| (10c) |

and

| (10d) |

Note that and are invariant in time and space, whereas is only time-invariant—its value changes for every pixel.

The implementation in MATLAB is as follows:

-

(1)

calculate , , , and [dim: 1];

-

(2)

calculate and [dim: ];

-

(3)

calculate and [dim: ];

-

(4)

calculate and [dim: ];

-

(5)

estimate , [dim: ];

-

(6)

combine [dim: ];

-

(7)

estimate , and [dim: ];

-

(8)

combine [dim: ].
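The steps above can be sketched for a single pixel in Python (the authors' implementation is in MATLAB and operates on whole image stacks). The sketch assumes the modified Beer–Lambert relation between intensity and absorption described in this appendix, writes each concentration change as a weighted sum of the log intensities at 525 and 625 nm plus a per-pixel constant, and uses hypothetical function names. The extinction coefficients and pathlengths in the usage example are arbitrary placeholders chosen for illustration, not physical values.

```python
import math


def hb_coefficients(eps, pathlength):
    """Scalar weights for the log intensities (constant over space and time).

    eps        -- dict keyed by (species, wavelength), e.g. eps["HbO", 525]
    pathlength -- dict keyed by wavelength
    """
    det = eps["HbO", 525] * eps["HbR", 625] - eps["HbR", 525] * eps["HbO", 625]
    return {
        "A_HbO": -eps["HbR", 625] / (det * pathlength[525]),
        "B_HbO": eps["HbR", 525] / (det * pathlength[625]),
        "A_HbR": eps["HbO", 625] / (det * pathlength[525]),
        "B_HbR": -eps["HbO", 525] / (det * pathlength[625]),
    }


def estimate_hb(i525, i625, coeff):
    """Return (dHbO, dHbR) time courses for one pixel."""
    # Baseline intensities: temporal average at this pixel.
    log_base525 = math.log(sum(i525) / len(i525))
    log_base625 = math.log(sum(i625) / len(i625))
    # Per-pixel constants (time-invariant, but different for every pixel).
    c_hbo = -coeff["A_HbO"] * log_base525 - coeff["B_HbO"] * log_base625
    c_hbr = -coeff["A_HbR"] * log_base525 - coeff["B_HbR"] * log_base625
    d_hbo = [coeff["A_HbO"] * math.log(u) + coeff["B_HbO"] * math.log(v) + c_hbo
             for u, v in zip(i525, i625)]
    d_hbr = [coeff["A_HbR"] * math.log(u) + coeff["B_HbR"] * math.log(v) + c_hbr
             for u, v in zip(i525, i625)]
    return d_hbo, d_hbr


# Usage with placeholder values (NOT physical constants).
eps = {("HbO", 525): 3.0, ("HbR", 525): 3.5, ("HbO", 625): 0.1, ("HbR", 625): 0.6}
path = {525: 0.05, 625: 0.4}
true_hbo = [0.0, 0.02, -0.01, 0.03]
true_hbr = [0.0, -0.01, 0.005, -0.02]
# Forward model: simulate reflectance intensities from the known changes.
sim525 = [1000.0 * math.exp(-path[525] * (eps["HbO", 525] * o + eps["HbR", 525] * r))
          for o, r in zip(true_hbo, true_hbr)]
sim625 = [800.0 * math.exp(-path[625] * (eps["HbO", 625] * o + eps["HbR", 625] * r))
          for o, r in zip(true_hbo, true_hbr)]
est_hbo, est_hbr = estimate_hb(sim525, sim625, hb_coefficients(eps, path))
```

Because the baseline is the temporal average, the estimates are changes relative to that average; differences between time points reproduce the simulated changes.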

6. Appendix C: Evaluation of Potential Crosstalk Between EGFP- and mApple-Derived Fluorophores

6.1. In-Vitro Calibration with Purified EGFP and mApple Fluorescent Proteins

We performed fluorescence measurements of purified EGFP and mApple that were excited at 470 nm and 565 nm using series of increasing exposure times and optical powers. For further evaluation, we calculated the radiant energy $Q$ for each measurement as the product of illumination power $P$, estimated from the LED current using a separately measured power calibration curve, and exposure time $t$.

Then we defined the illumination ratio $IR$ for EGFP and mApple as radiant energy at off-peak illumination divided by radiant energy at on-peak illumination, combining different illumination conditions for on- and off-peak excitation into a single variable:

$$IR_{\mathrm{EGFP}} = \frac{Q_{565}}{Q_{470}} = \frac{P_{565}\,t_{565}}{P_{470}\,t_{470}} \tag{11}$$

and

$$IR_{\mathrm{mApple}} = \frac{Q_{470}}{Q_{565}} = \frac{P_{470}\,t_{470}}{P_{565}\,t_{565}} \tag{12}$$

Note that every illumination ratio can be achieved by different combinations of optical powers and exposure times. This means that pairs of fluorescence measurements are performed with different combinations of optical power and exposure time for off- and on-peak excitation but match the chosen illumination ratio. The corresponding individual on- and off-peak fluorescence intensities $F$ have different values. For each fluorophore, we define the crosstalk ratio $CR$ as the fluorescence intensity measured at a certain LED power and exposure time at off-peak excitation relative to the fluorescence intensity at a certain LED power and exposure time at on-peak excitation:

$$CR_{\mathrm{EGFP}} = \frac{F_{\mathrm{EGFP}}(P_{565}, t_{565})}{F_{\mathrm{EGFP}}(P_{470}, t_{470})} \tag{13}$$

and

$$CR_{\mathrm{mApple}} = \frac{F_{\mathrm{mApple}}(P_{470}, t_{470})}{F_{\mathrm{mApple}}(P_{565}, t_{565})} \tag{14}$$

We again substitute LED power and exposure time by radiant energy $Q$:

$$CR_{\mathrm{EGFP}} = \frac{F_{\mathrm{EGFP}}(Q_{565})}{F_{\mathrm{EGFP}}(Q_{470})} \tag{15}$$

and

$$CR_{\mathrm{mApple}} = \frac{F_{\mathrm{mApple}}(Q_{470})}{F_{\mathrm{mApple}}(Q_{565})} \tag{16}$$

To estimate the crosstalk ratio $CR$, we chose 20 pairs of measurements matching a given illumination ratio $IR$. The pairs of fluorescence intensities from off-peak excitation versus on-peak excitation, when plotted against each other, follow a linear relationship [Figs. S2(C) and S2(D) in the Supplementary Material], which is expected given the underlying linear relationship between fluorescence intensity and radiant energy [shown in Figs. S2(A) and S2(B) in the Supplementary Material]. Accordingly, we can estimate the crosstalk ratio from the slope of the linear fit between fluorescence from off- and on-peak excitation for the 20 pairs of values for each given illumination ratio [Figs. S2(C) and S2(D) in the Supplementary Material]. Finally, for each fluorophore, we plot the crosstalk ratio versus illumination ratio for EGFP and mApple (i.e., $CR_{\mathrm{EGFP}}$ versus $IR_{\mathrm{EGFP}}$ and $CR_{\mathrm{mApple}}$ versus $IR_{\mathrm{mApple}}$; Fig. S2(E) in the Supplementary Material). In the resulting graph, a small value of $IR$ indicates weak off-peak excitation compared to on-peak excitation, and the resulting crosstalk ratio $CR$ is small. For increasing values of $IR$, off-peak excitation becomes increasingly stronger compared to on-peak excitation, leading to a higher crosstalk ratio $CR$. Given that EGFP shows only very weak off-peak excitation at 565 nm, its crosstalk ratio $CR_{\mathrm{EGFP}}$ increases only modestly with $IR_{\mathrm{EGFP}}$. In contrast, as mApple is more efficiently excited off-peak at 470 nm, the crosstalk ratio $CR_{\mathrm{mApple}}$ rises faster with increasing $IR_{\mathrm{mApple}}$.
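Estimating a crosstalk ratio as the slope of a linear fit can be illustrated with a few lines of Python. The sketch uses ordinary least squares with an intercept; whether the authors' fit includes an intercept is not stated, so this is an assumption, and the function name and example numbers are hypothetical.

```python
def linear_fit(on_peak, off_peak):
    """Ordinary least-squares fit off_peak = slope * on_peak + intercept."""
    n = len(on_peak)
    mean_x = sum(on_peak) / n
    mean_y = sum(off_peak) / n
    s_xx = sum((x - mean_x) ** 2 for x in on_peak)
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(on_peak, off_peak))
    slope = s_xy / s_xx
    return slope, mean_y - slope * mean_x


# Synthetic example: off-peak fluorescence is 5% of on-peak plus a small offset.
on_peak = [100.0 * k for k in range(1, 21)]      # 20 measurement pairs
off_peak = [0.05 * x + 2.0 for x in on_peak]
crosstalk_ratio, offset = linear_fit(on_peak, off_peak)
```

Repeating this fit for each illumination ratio gives one crosstalk ratio per illumination ratio, which is the relationship plotted in the calibration curve.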

6.2. In-Vivo Correction of jRGECO1a Crosstalk Upon 470-nm Excitation in ACh Sensor- and jRGECO1a-Expressing Animals

In experiments with mice expressing jRGECO1a and the green ACh sensor (Fig. 2), the LED powers and exposure times used for fluorescence excitation at 470 and 565 nm define a range of illumination ratios. Based on the in vitro calibration with EGFP and mApple, we estimated that for the EGFP-derived ACh sensor, only a minor fraction of its fluorescence present at 470-nm excitation contributes to fluorescence measured at 565-nm excitation, whereas for mApple-derived jRGECO1a, 3% to 9% of its fluorescence present at 565-nm excitation contributes to fluorescence measured at 470-nm excitation [highlighted areas in Fig. S2(E) in the Supplementary Material].

Calibration of the crosstalk ratio $CR_{\mathrm{mApple}}$ enables us to estimate the level of fluorescence present at 470-nm excitation due to off-peak excitation of jRGECO1a for a given combination of illumination power and exposure times, i.e., radiant energies $Q_{470}$ and $Q_{565}$:

$$F_{\mathrm{jRGECO1a}}(Q_{470}) = CR_{\mathrm{mApple}} \cdot F_{\mathrm{jRGECO1a}}(Q_{565}) \tag{17}$$

We assume here that the fluorescence measured at 565-nm excitation, $F_{565}$, is solely due to jRGECO1a fluorescence with negligible contribution from the ACh sensor, given the minor contribution of EGFP fluorescence at 565-nm excitation. This enables us to define the following correction term to remove jRGECO1a crosstalk from the fluorescence measured at 470-nm excitation, $F_{470}$:

$$F_{\mathrm{ACh}}(Q_{470}) = F_{470} - F_{\mathrm{jRGECO1a}}(Q_{470}) \tag{18}$$

or

$$F_{\mathrm{ACh}}(Q_{470}) = F_{470} - CR_{\mathrm{mApple}} \cdot F_{565} \tag{19}$$

To apply this correction approach based on in vitro determined crosstalk ratios to in vivo data, we make the following assumptions: (a) fluorescence acquired at 565 nm is solely caused by jRGECO1a and (b) dynamic changes in jRGECO1a fluorescence between consecutive acquisition of images at 470- and 565-nm excitation are negligible. Further, we do not consider wavelength-dependent absorption of light for fluorescence excitation and emission through hemoglobin. Incorporating [HbO] and [HbR] estimates into the correction would result in combining measurements from all four wavelengths to correct for crosstalk in fluorescence measurements at 470-nm excitation, potentially confounding this measurement, i.e., ACh sensor fluorescence, by the sum of measurement noise from all measured channels.

To verify that the degree of in vivo crosstalk matches our in vitro estimation, following Eq. (19), we assume that we can estimate the “true” ACh sensor signal by subtracting a proportion of the 565-nm fluorescence image intensity from the 470-nm fluorescence image on a pixel-by-pixel and frame-by-frame basis. Here we subtracted three different crosstalk ratios (we tested $CR_{\mathrm{mApple}}$ values of 3%, 5%, and 10%) and estimated the cross correlation of the resulting, corrected ACh sensor timeseries with the jRGECO1a timeseries (Fig. S3 in the Supplementary Material). For $CR_{\mathrm{mApple}}$ of 5% and 10%, we observe clear, negative zero-lag cross-correlation indicative of overcorrection. This result suggests that the degree of crosstalk established under in vitro conditions is a reasonable estimate of the crosstalk we observe in vivo. Based on this evaluation, we suggest that under the illumination conditions for in vivo imaging used here, the benefits of spectral crosstalk correction are minimal. Therefore, we did not perform crosstalk correction for the data shown in Fig. 2.
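The subtraction and the overcorrection check described above can be sketched for a single pixel time course as follows. This is an illustrative Python example with synthetic numbers, not the authors' analysis code; the function names are hypothetical, and the zero-lag cross-correlation is computed here as a Pearson correlation coefficient, which is an assumption about the normalization.

```python
import math


def correct_crosstalk(f470, f565, crosstalk_ratio):
    """Subtract a fixed proportion of the 565-nm signal from the 470-nm signal."""
    return [g - crosstalk_ratio * r for g, r in zip(f470, f565)]


def zero_lag_correlation(a, b):
    """Pearson correlation coefficient of two equally long time courses."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


# Synthetic data: a green signal that is uncorrelated with the red signal,
# contaminated by 3% of the red signal.
red = [1.0, 2.0, 3.0, 4.0, 5.0]
green_true = [1.0, 0.0, 2.0, 0.0, 1.0]
measured_470 = [g + 0.03 * r for g, r in zip(green_true, red)]

matched = zero_lag_correlation(correct_crosstalk(measured_470, red, 0.03), red)
overcorrected = zero_lag_correlation(correct_crosstalk(measured_470, red, 0.10), red)
```

In this toy example, subtracting the matching ratio removes the correlation with the red channel, whereas subtracting too large a ratio produces a negative zero-lag correlation, which mirrors the signature of overcorrection described in the text.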

However, it is possible that levels of crosstalk increase, particularly when fluorescence from on-peak excitation of one fluorophore approaches levels of the other fluorophore due to off-peak excitation at the same wavelength. First, this could happen due to low expression of one fluorescent indicator versus the other. Here, using AAV delivery into newborn pups, we achieved relatively high levels of ACh sensor expression, diminishing the potential effect of jRGECO1a crosstalk on measurements. Second, this situation could also arise when one fluorescent indicator has low levels of basal fluorescence in virtual absence of its analyte, i.e., ACh for the green ACh sensor in this study. When ACh levels are low (low ACh sensor fluorescence) and neuronal calcium levels are high (high jRGECO1a fluorescence), the measurement at 470-nm excitation could potentially show a higher contribution from jRGECO1a fluorescence compared to periods where ACh levels are high. Addressing and quantifying this effect further, however, is very difficult with the animals used under the given imaging conditions, as jRGECO1a and ACh sensor signals show high correlation due to their underlying physiological covariance. If further examination of jRGECO1a crosstalk into the green fluorescence channel is required, animals expressing jRGECO1a and EGFP, or a mutated version of a green-fluorescent indicator that does not show activity- or ligand-dependent changes in fluorescence, could be used.


Acknowledgments

We would like to thank Ahmed Abdelfattah for generously providing purified mApple fluorescent protein for calibration of our imaging system. We gratefully acknowledge the support from the NIH BRAIN Initiative (Grant Nos. R01MH111359, U19NS123717, R01DA050159, and R01NS108472). Patrick Doran was supported by the Ruth L. Kirschstein Predoctoral Fellowship (Grant No. F31NS118949). Rockwell P. Tang was supported by NIGMS (Grant No. T32GM145455). The authors acknowledge that data analysis was performed on the Shared Computing Cluster, which is administered by Boston University’s Research Computing Services. We also would like to thank the members of the Neurovascular Imaging Laboratory for helpful discussions.

Biography

Biographies of the authors are not available.

Contributor Information

Patrick R. Doran, Email: pdoran@bu.edu.

Natalie Fomin-Thunemann, Email: nfominth@bu.edu.

Rockwell P. Tang, Email: rptang@bu.edu.

Dora Balog, Email: dbalog@bu.edu.

Bernhard Zimmermann, Email: bzim@bu.edu.

Kıvılcım Kılıç, Email: kilickivilcim@gmail.com.

Emily A. Martin, Email: emart@bu.edu.

Sreekanth Kura, Email: skura@bu.edu.

Harrison P. Fisher, Email: hfisher@bu.edu.

Grace Chabbott, Email: gracec00@bu.edu.

Joel Herbert, Email: jherb@bu.edu.

Bradley C. Rauscher, Email: bcraus@bu.edu.

John X. Jiang, Email: jxjiang@bu.edu.

Sava Sakadzic, Email: sava@nmr.mgh.harvard.edu.

David A. Boas, Email: dboas@bu.edu.

Anna Devor, Email: adevor@bu.edu.

Ichun Anderson Chen, Email: a.chen@prisma-tx.com.

Martin Thunemann, Email: martinth@bu.edu.

Disclosures

The authors declare that they have no conflicts of interest.

Code and Data Availability

Design blueprints, parts inventory, data acquisition and analysis software are available on GitHub (https://github.com/NIL-NeuroScience/WidefieldImagingAnalysis); raw and processed data are available on Zenodo (for Fig. 2, resting state: https://doi.org/10.5281/zenodo.10798934; for Fig. 2 stimulus: https://doi.org/10.5281/zenodo.10798996; for Fig. 3: https://doi.org/10.5281/zenodo.10798658; for Fig. S3 in the Supplementary Material: https://doi.org/10.5281/zenodo.10798091; for Fig. S6 resting state in the Supplementary Material: https://doi.org/10.5281/zenodo.10805179; and for Fig. S6 stimulus in the Supplementary Material: https://doi.org/10.5281/zenodo.10805381).

Author Contributions

Patrick R. Doran performed investigation, formal analysis, software, visualization, writing of the original draft, review, and editing. Natalie Fomin-Thunemann performed investigation, review, and editing. Rockwell P. Tang performed investigation, visualization, writing of the original draft, review, and editing. Dora Balog performed software, review, and editing. Bernhard Zimmermann, Kıvılcım Kılıç, Joel D. Herbert, Grace Chabbott, Bradley C. Rauscher, and John X. Jiang performed resources, review, and editing. Sreekanth Kura and Harrison P. Fisher performed software, review, and editing. Sava Sakadzic and David A. Boas performed conceptualization, review, and editing. Anna Devor performed conceptualization, funding acquisition, supervision, writing of the original draft, review, and editing. Ichun Anderson Chen performed conceptualization, methodology, supervision, review, and editing. Martin Thunemann performed conceptualization, software, supervision, visualization, writing of the original draft, review, and editing.

References

- 1.Abdelfattah A. S., et al. , “Neurophotonic tools for microscopic measurements and manipulation: status report,” Neurophotonics 9(S1), 013001 (2022). 10.1117/1.NPh.9.S1.013001 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Bouchard M. B., et al. , “Ultra-fast multispectral optical imaging of cortical oxygenation, blood flow, and intracellular calcium dynamics,” Opt. Express 17(18), 15670–15678 (2009). 10.1364/OE.17.015670 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Couto J., et al. , “Chronic, cortex-wide imaging of specific cell populations during behavior,” Nat. Protoc. 16(7), 3241–3263 (2021). 10.1038/s41596-021-00527-z [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Ma Y., et al. , “Resting-state hemodynamics are spatiotemporally coupled to synchronized and symmetric neural activity in excitatory neurons,” Proc. Natl. Acad. Sci. U. S. A. 113(52), E8463–E8471 (2016. b). 10.1073/pnas.1525369113 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Mohajerani M. H., et al. , “Spontaneous cortical activity alternates between motifs defined by regional axonal projections,” Nat. Neurosci. 16(10), 1426–1435 (2013). 10.1038/nn.3499 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Vanni M. P., et al. , “Mesoscale mapping of mouse cortex reveals frequency-dependent cycling between distinct macroscale functional modules,” J. Neurosci. 37(31), 7513–7533 (2017). 10.1523/JNEUROSCI.3560-16.2017 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Wright P. W., et al. , “Functional connectivity structure of cortical calcium dynamics in anesthetized and awake mice,” PLoS One 12(10), e0185759 (2017). 10.1371/journal.pone.0185759 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Zhao Y., et al. , “An expanded palette of genetically encoded indicators,” Science 333(6051), 1888–1891 (2011). 10.1126/science.1208592 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Lin M. Z., Schnitzer M. J., “Genetically encoded indicators of neuronal activity,” Nat. Neurosci. 19(9), 1142–1153 (2016). 10.1038/nn.4359 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Kim T. H., et al. , “Long-term optical access to an estimated one million neurons in the live mouse cortex,” Cell Rep. 17(12). 3385–3394 (2016). 10.1016/j.celrep.2016.12.004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Silasi G., et al. , “Intact skull chronic windows for mesoscopic wide-field imaging in awake mice,” J. Neurosci. Methods 267, 141–149 (2016). 10.1016/j.jneumeth.2016.04.012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Cramer S. W., et al. , “Through the looking glass: a review of cranial window technology for optical access to the brain,” J. Neurosci. Methods 354, 109100 (2021). 10.1016/j.jneumeth.2021.109100 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Higley M. J., Cardin J. A., “Spatiotemporal dynamics in large-scale cortical networks,” Curr. Opin. Neurobiol. 77, 102627 (2022). 10.1016/j.conb.2022.102627 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Echagarruga C. T., et al. , “nNOS-expressing interneurons control basal and behaviorally evoked arterial dilation in somatosensory cortex of mice,” Elife 9, e60533 (2020). 10.7554/eLife.60533 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Uhlirova H., et al. , “Cell type specificity of neurovascular coupling in cerebral cortex,” Elife 5, e14315 (2016. b). 10.7554/eLife.14315 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Dana H., et al. , “High-performance calcium sensors for imaging activity in neuronal populations and microcompartments,” Nat. Methods 16(7), 649–657 (2019). 10.1038/s41592-019-0435-6 [DOI] [PubMed] [Google Scholar]

- 17. Jing M., et al., “An optimized acetylcholine sensor for monitoring in vivo cholinergic activity,” Nat. Methods 17(11), 1139–1146 (2020). 10.1038/s41592-020-0953-2

- 18. Ma Y., et al., “Wide-field optical mapping of neural activity and brain haemodynamics: considerations and novel approaches,” Philos. Trans. R. Soc., B 371(1705), 20150360 (2016a). 10.1098/rstb.2015.0360

- 19. Uhlirova H., et al., “The roadmap for estimation of cell-type-specific neuronal activity from non-invasive measurements,” Philos. Trans. R. Soc., B 371(1705), 20150356 (2016a). 10.1098/rstb.2015.0356

- 20. Cardin J. A., Crair M. C., Higley M. J., “Mesoscopic imaging: shining a wide light on large-scale neural dynamics,” Neuron 108(1), 33–43 (2020). 10.1016/j.neuron.2020.09.031

- 21. Allen W. E., et al., “Global representations of goal-directed behavior in distinct cell types of mouse neocortex,” Neuron 94(4), 891–907.e6 (2017). 10.1016/j.neuron.2017.04.017

- 22. Jacobs E. A. K., et al., “Cortical state fluctuations during sensory decision making,” Curr. Biol. 30(24), 4944–4955.e7 (2020). 10.1016/j.cub.2020.09.067

- 23. Lake E. M. R., et al., “Simultaneous cortex-wide fluorescence imaging and whole-brain fMRI,” Nat. Methods 17(12), 1262–1271 (2020). 10.1038/s41592-020-00984-6

- 24. Lerner T. N., et al., “Intact-brain analyses reveal distinct information carried by SNc dopamine subcircuits,” Cell 162(3), 635–647 (2015). 10.1016/j.cell.2015.07.014

- 25. MacDowell C. J., Buschman T. J., “Low-dimensional spatiotemporal dynamics underlie cortex-wide neural activity,” Curr. Biol. 30(14), 2665–2680.e8 (2020). 10.1016/j.cub.2020.04.090

- 26. Musall S., et al., “Single-trial neural dynamics are dominated by richly varied movements,” Nat. Neurosci. 22(10), 1677–1686 (2019). 10.1038/s41593-019-0502-4

- 27. Peters A. J., et al., “Striatal activity topographically reflects cortical activity,” Nature 591(7850), 420–425 (2021). 10.1038/s41586-020-03166-8

- 28. Zatka-Haas P., et al., “Sensory coding and the causal impact of mouse cortex in a visual decision,” Elife 10, e63163 (2021). 10.7554/eLife.63163

- 29. Gilad A., et al., “Behavioral strategy determines frontal or posterior location of short-term memory in neocortex,” Neuron 99(4), 814–828.e7 (2018). 10.1016/j.neuron.2018.07.029

- 30. Mitra A., et al., “Spontaneous infra-slow brain activity has unique spatiotemporal dynamics and laminar structure,” Neuron 98(2), 297–305.e6 (2018). 10.1016/j.neuron.2018.03.015

- 31. Wekselblatt J. B., et al., “Large-scale imaging of cortical dynamics during sensory perception and behavior,” J. Neurophysiol. 115(6), 2852–2866 (2016). 10.1152/jn.01056.2015

- 32. Gribizis A., et al., “Visual cortex gains independence from peripheral drive before eye opening,” Neuron 104(4), 711–723.e3 (2019). 10.1016/j.neuron.2019.08.015

- 33. Lohani S., et al., “Spatiotemporally heterogeneous coordination of cholinergic and neocortical activity,” Nat. Neurosci. 25(12), 1706–1713 (2022). 10.1038/s41593-022-01202-6

- 34. Raut R. V., et al., “Arousal as a universal embedding for spatiotemporal brain dynamics,” bioRxiv 2023.11.06.565918 (2023).

- 35. Wang X., et al., “Dual fluorophore imaging combined with optical intrinsic signal to acquire neural, metabolic and hemodynamic activity,” Proc. SPIE PC11946, PC119460L (2022). 10.1117/12.2609860

- 36. Matsui T., Murakami T., Ohki K., “Transient neuronal coactivations embedded in globally propagating waves underlie resting-state functional connectivity,” Proc. Natl. Acad. Sci. U. S. A. 113(23), 6556–6561 (2016). 10.1073/pnas.1521299113

- 37. Sunil S., et al., “Neurovascular coupling is preserved in chronic stroke recovery after targeted photothrombosis,” Neuroimage Clin. 38, 103377 (2023). 10.1016/j.nicl.2023.103377

- 38. Valley M. T., et al., “Separation of hemodynamic signals from GCaMP fluorescence measured with wide-field imaging,” J. Neurophysiol. 123(1), 356–366 (2020). 10.1152/jn.00304.2019

- 39. Park K., et al., “Optical imaging of stimulation-evoked cortical activity using GCaMP6f and jRGECO1a,” Quant. Imaging Med. Surg. 11(3), 998–1009 (2020). 10.21037/qims-20-921

- 40. Shahsavarani S., et al., “Cortex-wide neural dynamics predict behavioral states and provide a neural basis for resting-state dynamic functional connectivity,” Cell Rep. 42(6), 112527 (2023). 10.1016/j.celrep.2023.112527

- 41. Harrison T. C., Sigler A., Murphy T. H., “Simple and cost-effective hardware and software for functional brain mapping using intrinsic optical signal imaging,” J. Neurosci. Methods 182(2), 211–218 (2009). 10.1016/j.jneumeth.2009.06.021

- 42. White B. R., et al., “Imaging of functional connectivity in the mouse brain,” PLoS One 6(1), e16322 (2011). 10.1371/journal.pone.0016322

- 43. Makino H., et al., “Transformation of cortex-wide emergent properties during motor learning,” Neuron 94(4), 880–890.e8 (2017). 10.1016/j.neuron.2017.04.015

- 44. Nietz A. K., et al., “To be and not to be: wide-field Ca2+ imaging reveals neocortical functional segmentation combines stability and flexibility,” Cereb. Cortex 33(11), 6543–6558 (2023). 10.1093/cercor/bhac523

- 45. Padawer-Curry J. A., et al., “Wide-field optical imaging in mouse models of ischemic stroke,” in Neural Repair: Methods and Protocols, Karamyan V. T., Stowe A. M., Eds., pp. 113–151, Springer, New York.

- 46. Dana H., et al., “Sensitive red protein calcium indicators for imaging neural activity,” Elife 5, e12727 (2016). 10.7554/eLife.12727

- 47. Prahl S., “Optical absorption of hemoglobin,” 1999, https://omlc.org/spectra/hemoglobin/ (accessed 11/3/2023).

- 48. Kilic K., et al., “Chronic cranial windows for long term multimodal neurovascular imaging in mice,” Front. Physiol. 11, 612678 (2020). 10.3389/fphys.2020.612678

- 49. Wang Q., et al., “The Allen mouse brain common coordinate framework: a 3D reference Atlas,” Cell 181(4), 936–953.e20 (2020). 10.1016/j.cell.2020.04.007

- 50. Reimer J., et al., “Pupil fluctuations track rapid changes in adrenergic and cholinergic activity in cortex,” Nat. Commun. 7, 13289 (2016). 10.1038/ncomms13289

- 51. Vinck M., et al., “Arousal and locomotion make distinct contributions to cortical activity patterns and visual encoding,” Neuron 86(3), 740–754 (2015). 10.1016/j.neuron.2015.03.028

- 52. Machler P., et al., “A suite of neurophotonic tools to underpin the contribution of internal brain states in fMRI,” Curr. Opin. Biomed. Eng. 18, 100273 (2021). 10.1016/j.cobme.2021.100273

- 53. Joshi S., et al., “Relationships between pupil diameter and neuronal activity in the locus coeruleus, colliculi, and cingulate cortex,” Neuron 89(1), 221–234 (2016). 10.1016/j.neuron.2015.11.028

- 54. Pinto L., et al., “Task-dependent changes in the large-scale dynamics and necessity of cortical regions,” Neuron 104(4), 810–824 (2019). 10.1016/j.neuron.2019.08.025

- 55. Qian Y., et al., “Improved genetically encoded near-infrared fluorescent calcium ion indicators for in vivo imaging,” PLoS Biol. 18(11), e3000965 (2020). 10.1371/journal.pbio.3000965

- 56. Lu X., et al., “Widefield imaging of rapid pan-cortical voltage dynamics with an indicator evolved for one-photon microscopy,” Nat. Commun. 14(1), 6423 (2023). 10.1038/s41467-023-41975-3

- 57. Thunemann M., et al., “Deep 2-photon imaging and artifact-free optogenetics through transparent graphene microelectrode arrays,” Nat. Commun. 9(1), 2035 (2018). 10.1038/s41467-018-04457-5

- 58. Celebi I., Aslan M., Unlu M. S., “A spatially uniform illumination source for widefield multi-spectral optical microscopy,” PLoS One 18(10), e0286988 (2023). 10.1371/journal.pone.0286988

- 59. Yang F., et al., “Wide field-of-view metalens: a tutorial,” Adv. Photonics 5(3), 033001 (2023). 10.1117/1.AP.5.3.033001

Data Availability Statement

Design blueprints, a parts inventory, and the data acquisition and analysis software are available on GitHub (https://github.com/NIL-NeuroScience/WidefieldImagingAnalysis); raw and processed data are available on Zenodo (for Fig. 2, resting state: https://doi.org/10.5281/zenodo.10798934; for Fig. 2, stimulus: https://doi.org/10.5281/zenodo.10798996; for Fig. 3: https://doi.org/10.5281/zenodo.10798658; for Fig. S3 in the Supplementary Material: https://doi.org/10.5281/zenodo.10798091; for Fig. S6, resting state, in the Supplementary Material: https://doi.org/10.5281/zenodo.10805179; and for Fig. S6, stimulus, in the Supplementary Material: https://doi.org/10.5281/zenodo.10805381).