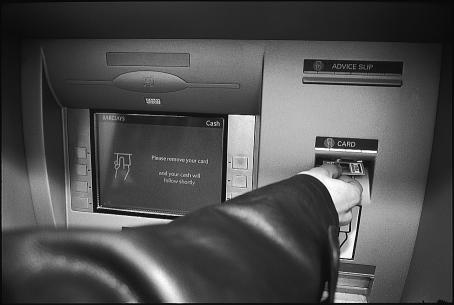

The automated teller machine, which dispenses cash and handles other banking transactions, has become ubiquitous in many parts of the world. Most machines follow one of two sequences to complete a transaction. Some dispense the money first and then return the card. Others reverse these two steps. Since the aim of the transaction is to obtain the money, common sense and research in human factors predict that the person using the machine is more likely to forget the card if it is returned after the money is dispensed.1 The order is designed into the system and produces a predictable risk of error.
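The effect of the ordering can be illustrated in code. The following is a minimal sketch of a hypothetical cash machine session in which cash is released only after the card has been taken, so that the easily forgotten step becomes a precondition of the goal. The class and method names are illustrative assumptions and do not describe any real machine.

```python
class CardFirstSession:
    """Hypothetical cash machine session that returns the card before the cash."""

    def __init__(self):
        self.card_taken = False
        self.cash_dispensed = False

    def take_card(self):
        # The user removes the card from the slot.
        self.card_taken = True

    def dispense_cash(self):
        # Forcing function: cash stays locked until the card has been removed.
        if not self.card_taken:
            raise RuntimeError("Remove your card before cash is dispensed")
        self.cash_dispensed = True
        return "cash"


session = CardFirstSession()
try:
    session.dispense_cash()
except RuntimeError as err:
    print(err)  # the machine waits; no cash has been released yet
session.take_card()
print(session.dispense_cash())
```

With this ordering the user cannot reach the goal (the money) while the card is still in the machine. The reverse ordering would need no such check, which is why forgetting the card becomes possible.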

Researchers have documented the extent of errors and their effect on patient safety.2,3 Like the card forgotten at the automated teller machine, many of the adverse events resulted from an error made by a person who was capable of performing the task safely, had done so many times in the past, and faced significant personal consequences for the error. Although we cannot change the aspects of human cognition that cause us to err, we can design systems that reduce error and make them safer for patients.4 My aim here is to outline an approach to designing safe systems of care based on the work of human factors experts and reliability engineers.

Summary points

- Many errors are attributable to characteristics of human cognition, and their risk is predictable
- Systems can be designed to help prevent errors, to make them detectable so they can be intercepted, and to provide means of mitigation if they are not intercepted
- Tactics to reduce errors and mitigate their adverse effects include reducing complexity, optimising information processing, using automation and constraints, and mitigating unwanted effects of change

Strategies for the design of safe systems of care

Designers of systems of care can make them safer by attending to three tasks: designing the system to prevent errors; designing procedures to make errors visible when they do occur so that they may be intercepted; and designing procedures for mitigating the adverse effects of errors when they are not detected and intercepted.

Preventing errors

In complex systems such as those that deliver health care many factors influence the rate of errors. Vincent et al have suggested a nested hierarchy of factors that determine the safety of a healthcare system. The factors relate to: institutional context, organisation and management, work environment, care team, individual team member, task, and patient.5 The proximal causes of error and adverse events are usually associated with some combination of the care team, one of its members, the task performed, and the patient. Cook and Woods have called this part of Vincent's hierarchy the “sharp end” of the healthcare system.6 To prevent errors these factors must be considered in the design of the care system. However, the less obvious factors—institutional context, organisation and management, and work environment, the “blunt end” of the system—must also be addressed.

Making errors visible

Although errors cannot be reduced to zero, the aim of the system should be to reduce to zero the instances in which an error harms a patient. A safe system has procedures and attributes that make errors visible to those working in the system so that they can be corrected before causing harm.

Inspection or “double checking” makes errors visible. Examples include the inspection of physician medication orders (prescriptions) by the pharmacist and the checking of a nurse's dose calculations by another nurse or by a computer. If patients are educated about the course of their treatment and their medications in the context of a trusting relationship, they can also be effective at identifying errors. They should be encouraged to ask questions and to speak up when unusual circumstances arise.

Mitigating the effects of errors

Not all errors will be intercepted before reaching the patient. When errors go undetected, processes are needed that quickly reverse or halt the harm caused to the patient by the error. For example, antidotes for high hazard drugs, when they exist, should be readily available at the point of administration. Lessons learnt from other more frequently used emergency procedures, such as resuscitation of a patient in cardiac arrest, will be helpful to develop these procedures.

Tactics for reducing errors and adverse events

Many tactics are available to make system changes to reduce errors and adverse events; they fall into five categories1,7–10:

- Reduce complexity
- Optimise information processing
- Automate wisely
- Use constraints
- Mitigate the unwanted side effects of change.

These tactics can be deployed to support any of the three strategic components of error prevention, detection, and mitigation.

Reduce complexity

Complexity causes errors.11–15 Researchers who have studied this relationship have developed operational definitions of complexity of a task using measures that include: steps in the task, number of choices, duration of execution, information content, and patterns of intervening, distracting tasks. These measures provide a convenient list of factors to consider when simplifying individual tasks or multitask processes.

Some medical treatments, diagnostic processes, or individual cases are inherently complex given the current state of scientific knowledge.16 However, many sources of complexity are readily removed. Leape provides some examples of choices that proliferate through personal preference and so add complexity. These include non-therapeutic differences in drug doses and times of administration, different locations for resuscitation equipment on different units, and different methods for the same surgical dressings.17 Complexity is also reduced by eliminating delays, missing information, and other defects in operations.

Optimise information processing

Human interaction and the associated processing of information are at the heart of health care. The aim of the optimisation should be to increase understanding, to reduce reliance on memory, and to preserve short term memory for essential tasks for which it is needed.

Use of checklists, protocols, and other reminders for patient and clinician interactions is a basic requirement of a safe system. These aids have the additional benefit of preserving the limited capacity of short term memory for problem solving and decision making. Norman suggests “putting information in the world” as a design principle for improving information processing.7 Examples of tools to help adhere to this principle include colour coding, size or shape differentiation, and the elimination of names (as in drugs) that sound alike.

Automate wisely

Types of automation include those that support tasks, for example, medication order entry; those that perform tasks, such as robotic packaging of medications in the pharmacy and automatic set up of radiation therapy equipment; and those that collect and present information such as disease registries and computer reminder systems.

Automation affects the performance of a system in ways that are often more complex than anticipated and often introduces new types of errors.15,18–20 For example, automated drug infusion devices allow the concentrations of drugs in the patient's blood to remain relatively constant—but errors in setting up the devices can cause overdoses and underdoses.

Principles for the wise application of automation include:

- Keep the aim focused on system improvement rather than automating what is technologically feasible
- Use technology to support, not supplant, the human operator
- During the design and implementation phases, recognise and reduce the new cognitive demands required by the technology, especially those demands that occur at busy or critical times.

Use constraints

A constraint restricts certain actions. When used to restrict actions that result in error, constraints become one of the most reliable forms of error proofing. Constraints are of various types: physical, procedural, and cultural. Physical constraints take advantage of the properties of the physical world—for example, it is impossible to insert a three pronged, grounded plug in a two holed electrical outlet. They are often used to prevent error associated with the set up and use of equipment—for example in connecting anaesthetic equipment.

Procedural constraints increase the difficulty of performing an action that results in error. Removing concentrated electrolytes from patient care units in a hospital is an example of a procedural constraint. Other examples of procedural constraints include order forms that list only limited sets of actions, standardising the prostheses used in joint replacement surgery, and computer medication order systems that prevent abnormally high doses from being ordered.
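A computer order system of this kind can be sketched as follows. This is a minimal illustration under stated assumptions: the drug names, the dose ceilings, and the function `place_order` are hypothetical and carry no clinical meaning. The sketch combines two of the procedural constraints mentioned above, a limited list of orderable items and a refusal of abnormally high doses.

```python
from dataclasses import dataclass

# Hypothetical ceilings for illustration only; not clinical guidance.
MAX_SINGLE_DOSE_MG = {"drug_a": 50.0, "drug_b": 5.0}


class DoseLimitExceeded(ValueError):
    """Raised when an order falls outside the configured dose range."""


@dataclass(frozen=True)
class Order:
    drug: str
    dose_mg: float


def place_order(drug: str, dose_mg: float) -> Order:
    # Only drugs on the limited list can be ordered at all.
    if drug not in MAX_SINGLE_DOSE_MG:
        raise KeyError(f"{drug} is not on the order list")
    limit = MAX_SINGLE_DOSE_MG[drug]
    # The constraint: a non-positive or abnormally high dose cannot be entered.
    if dose_mg <= 0 or dose_mg > limit:
        raise DoseLimitExceeded(f"{dose_mg} mg of {drug} is outside 0-{limit} mg")
    return Order(drug, dose_mg)


print(place_order("drug_a", 25.0))
try:
    place_order("drug_b", 50.0)  # a tenfold slip is rejected at entry
except DoseLimitExceeded as err:
    print(err)
```

The check rejects the order at the point of entry rather than warning afterwards, which is what makes it a constraint and not a reminder.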

Any society has strong cultural conventions that define acceptable behaviour. These cultural constraints can be quite strong: try entering a crowded elevator in the United States and facing the rear rather than the door. Many industrial safety programmes rely on cultural constraints by defining unsafe acts and rigidly censuring those who perform them. In a culture of safety, using non-standard abbreviations in prescriptions and scheduling nurses to work back to back shifts would be considered unacceptable. Regardless of whether physical and procedural constraints can be designed into the system, in a labour intensive industry such as health care cultural constraints will be an essential part of an advanced safety initiative.

Mitigate the unwanted side effects of change

Advances aimed at improving care, such as new medical procedures, surgical techniques, or monitoring equipment, often introduce unwanted side effects. Clinicians must go through a learning curve and alter their familiar routines. During this period chances for error and harm are increased. Precautions can be taken to mitigate the unwanted effects of change:

- Use a formal process to predict opportunities for error and harm before making the change and eliminate them if possible or mitigate their effect with standardised contingency plans and training
- Test the changes on a small scale with minimum risk and devote resources to redesign the procedure as problems are identified
- Monitor the clinical outcomes, errors, and adverse events over time during testing and implementation.

Setting aims

System change requires will. The will for change can be articulated by setting aims that specify the level of system performance with respect to errors and adverse events. Because of the gravity of an adverse event, improvements should be thought of in terms of orders of magnitude: reducing error rates from 1 per 100 to 1 per 1000 to 1 per 10 000.

Error rates in any system must ultimately be determined empirically. However, some first approximations of current system performance and potential performance can be developed using nominal error rates developed by specialists in human factors supplemented by internal data at check points or from research studies.21 Table 1 contains examples of these error rates.

Table 1. Nominal human error rates of selected activities (adapted from Park21)

| Activity* | Probability of human error (No of errors/No of opportunities for the error) |
|---|---|
| General error of commission (for example, misreading a label) | 0.003 |
| General error of omission in the absence of reminders | 0.01 |
| General error of omission when items are embedded in a procedure (for example, cash card is returned from cash machine before money is dispensed) | 0.003 |
| Simple arithmetic errors with self checking but without repeating the calculation on another sheet of paper | 0.03 |
| Monitor or inspector fails to recognise an error | 0.1 |
| Staff on different shifts fail to check hardware condition unless required by checklist or written directive | 0.1 |
| General error rate given very high stress levels where dangerous activities are occurring rapidly | 0.25 |

*Unless otherwise indicated, assumes the activities are performed under no undue time pressures or stress.
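As a worked illustration of how such nominal rates might be combined into a first approximation, the sketch below estimates how often an arithmetic error would both occur and slip past an inspector. It assumes that the two events are independent, which is a simplification introduced here and not a claim made in the source; the function name is hypothetical.

```python
def undetected_error_rate(task_error_rate: float, inspector_miss_rate: float) -> float:
    """Probability that an error is made and the check also fails to catch it.

    Assumes the two events are independent, which is a simplification.
    """
    return task_error_rate * inspector_miss_rate


# Nominal rates taken from table 1.
arithmetic_error = 0.03  # simple arithmetic error with self checking
inspector_miss = 0.1     # monitor or inspector fails to recognise an error

print(round(undetected_error_rate(arithmetic_error, inspector_miss), 4))  # 0.003
```

Under these assumptions a single inspection step lowers the rate of errors reaching the patient by one order of magnitude, from 3 per 100 to 3 per 1000.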

The error rates in table 1 are for a single task. Most processes in health care contain many steps and tasks. The error rate for a process increases as the steps in the process, and therefore its complexity, increase. Table 2 contains the error rates for a process given the number of steps in the process and the error rate at each step.

Table 2. Error rates for processes with multiple steps

| No of steps | Base error rate of each step: 0.05 | Base error rate of each step: 0.01 | Base error rate of each step: 0.001 | Base error rate of each step: 0.0001 |
|---|---|---|---|---|
| 1 | 0.05 | 0.01 | 0.001 | 0.0001 |
| 5 | 0.33 | 0.05 | 0.005 | 0.002 |
| 25 | 0.72 | 0.22 | 0.02 | 0.003 |
| 50 | 0.92 | 0.39 | 0.05 | 0.005 |
| 100 | 0.99 | 0.63 | 0.1 | 0.01 |
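One common way to compute figures of this kind is to assume that every step has the same error rate p, that errors at different steps are independent, and that the process fails if any one of its n steps fails, giving a process error rate of 1 − (1 − p)^n. The sketch below applies that formula. The formula is an assumption made here, not a method stated in the article, although the values it gives closely match the 25, 50, and 100 step rows of table 2.

```python
def process_error_rate(step_error_rate: float, steps: int) -> float:
    """Probability of at least one error in `steps` independent, identical steps."""
    return 1.0 - (1.0 - step_error_rate) ** steps


# Print one row per process length, one column per base error rate.
for steps in (1, 25, 50, 100):
    row = [round(process_error_rate(p, steps), 4) for p in (0.05, 0.01, 0.001, 0.0001)]
    print(steps, row)
```

The calculation makes the link between complexity and risk concrete: at a base rate of 0.01 per step, a process of 100 steps is more likely than not to contain at least one error.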

In reality error rates at each step will vary and there may be hundreds of steps. However, in practice a few of the steps will be most hazardous or have error rates that are substantially worse than the rest, so the computation can be done using these steps.22 Since the commission and omission error rates for simple tasks in a relatively stress free environment are in the parts per thousand, empirically developed rates that are in the parts per hundred indicate that rudimentary application of the system changes described in this paper will produce substantial improvements in safety.
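The shortcut of computing with only the most hazardous steps can be checked with a small sketch. The process below is hypothetical: 97 routine steps at a low error rate and three hazardous steps with much higher rates, all chosen purely for illustration. As before, the steps are assumed to be independent.

```python
from math import prod


def process_error_rate_from_steps(step_error_rates):
    """Probability of at least one error, assuming the steps are independent."""
    return 1.0 - prod(1.0 - p for p in step_error_rates)


# Hypothetical process: 97 routine steps and 3 hazardous ones.
routine = [0.0001] * 97
hazardous = [0.05, 0.03, 0.01]

full = process_error_rate_from_steps(routine + hazardous)
approx = process_error_rate_from_steps(hazardous)
print(f"all steps: {full:.3f}, hazardous steps only: {approx:.3f}")
```

In this example the three hazardous steps account for most of the overall error rate, so an estimate based on them alone is a reasonable first approximation and shows where redesign effort is best spent.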

Improvements to a system with empirical error rates in the parts per thousand will necessitate more sophisticated system changes including substantial use of all three types of constraints and substantial levels of automation.

Much is yet to be learnt about how to design healthcare systems that are effective and safe. However, a solid foundation of knowledge and methods exists on which to build the healthcare systems that both patients and clinicians deserve.

Figure. Design of the system ensures that he won't forget to take his card (credit: ANETTE HAUG)

Footnotes

Competing interests: None declared.

References

- 1. Salvendy G. Handbook of human factors and ergonomics. New York: Wiley; 1997.
- 2. Brennan T, Leape L, Laird N, Hebert L, Localio AR, Lawthers AG, et al. Incidence of adverse events and negligence in hospitalized patients. N Engl J Med. 1991;324:370–376. doi: 10.1056/NEJM199102073240604.
- 3. Bates D, Cullen D, Laird N, Petersen LA, Small SD, Servi D, et al. Incidence of adverse drug events and potential adverse drug events. JAMA. 1995;274:29–34.
- 4. Reason JT. Human error. New York: Cambridge University Press; 1990.
- 5. Vincent C, Taylor-Adams S, Stanhope N. Framework for analysing risk and safety in clinical medicine. BMJ. 1998;316:1154–1157. doi: 10.1136/bmj.316.7138.1154.
- 6. Cook RI, Woods DD. Operating at the sharp end: the complexity of human error. In: Bogner MS, editor. Human error in medicine. Hillsdale, NJ: Erlbaum; 1994. pp. 255–310.
- 7. Norman DA. The psychology of everyday things. New York: Basic Books; 1988.
- 8. Norman DA. Things that make us smart. New York: Addison-Wesley; 1993.
- 9. Leveson NG. Safeware, systems safety and computers. New York: Addison-Wesley; 1993.
- 10. Leape L, Bates D, Cullen D, Cooper J, Demonaco HJ, Gallivan T, et al. Systems analysis of adverse drug events. JAMA. 1995;274:35–43.
- 11. Wichman H, Oyasato A. Effects of locus of control and task complexity on prospective remembering. Human Factors. 1983;25:583–591. doi: 10.1177/001872088302500512.
- 12. Maylor EA, Rabbitt GH, James GH, Kerr SA. Effects of alcohol, practice, and task complexity on reaction time distributions. Q J Experimental Psychol. 1992;44A:119–139. doi: 10.1080/14640749208401286.
- 13. Hinckley CM, Barkman P. The role of variation, mistakes, and complexity in producing nonconformities. J Qual Technol. 1995;27:242–249.
- 14. Murray S, Caldwell B. Human performance and control of multiple systems. Human Factors. 1996;38:323–329. doi: 10.1177/001872089606380212.
- 15. Fraass B, Lash K, Matrone G, Volkman SK, McShan DL, Kessler ML, et al. The impact of treatment complexity and computer-control delivery technology on treatment delivery errors. Int J Radiation Oncol Biol Phys. 1998;42:651–659. doi: 10.1016/s0360-3016(98)00244-2.
- 16. Strong M. Simplifying the approach: what can we do? Neurology. 1999;53(suppl 5):S31–S34.
- 17. Leape L. Error in medicine. JAMA. 1994;272:1851–1857.
- 18. Sarter NB, Woods DD. Pilot interaction with cockpit automation: operational experiences with the flight management system. Int J Aviation Psychol. 1992;4:1–28.
- 19. Leveson NG, Turner CS. An investigation of the Therac-25 accidents. IEEE Computer. 1993;26:18–41.
- 20. Bates DW, Teich JM, Lee J. The impact of computerized physician order entry on medication error prevention. J Am Med Information Soc. 1999;6:13–21. doi: 10.1136/jamia.1999.00660313.
- 21. Park K. Human error. In: Salvendy G, editor. Handbook of human factors and ergonomics. New York: Wiley; 1997. pp. 150–173.
- 22. Ushakov I. Handbook of reliability engineering. New York: Wiley; 1994.