Abstract

Validation metrics are key for tracking scientific progress and bridging the current chasm between artificial intelligence (AI) research and its translation into practice. However, increasing evidence shows that particularly in image analysis, metrics are often chosen inadequately. While taking into account the individual strengths, weaknesses, and limitations of validation metrics is a critical prerequisite to making educated choices, the relevant knowledge is currently scattered and poorly accessible to individual researchers. Based on a multi-stage Delphi process conducted by a multidisciplinary expert consortium as well as extensive community feedback, the present work provides the first reliable and comprehensive common point of access to information on pitfalls related to validation metrics in image analysis. While focused on biomedical image analysis, the addressed pitfalls generalize across application domains and are categorized according to a newly created, domain-agnostic taxonomy. The work serves to enhance global comprehension of a key topic in image analysis validation.

Measuring performance and progress in any given field critically depends on the availability of meaningful outcome metrics. In a field such as athletics, this process is straightforward because the performance measurements (e.g., the time it takes an athlete to run a given distance) exactly reflect the underlying interest (e.g., which athlete runs a given distance the fastest?). In image analysis, the situation is much more complex. Depending on the underlying research question, vastly different aspects of an algorithm’s performance might be of interest (Fig. 1) and meaningful in determining its future practical (for example, clinical) applicability. If the performance of an image analysis algorithm is not measured with relevant validation metrics, no reliable statement can be made about the suitability of this algorithm for solving the proposed task, and the algorithm is unlikely to ever reach the stage of real-life application. Moreover, unsuitable algorithms could be wrongly regarded as the best-performing ones, sparking entirely futile resource investment and follow-up research while obscuring true scientific advances. By determining new state-of-the-art methods and informing future directions, validation metrics actively shape the evolution of research. In summary, validation metrics are key both to measuring and informing scientific progress and to bridging the current chasm between image analysis research and its translation into practice.

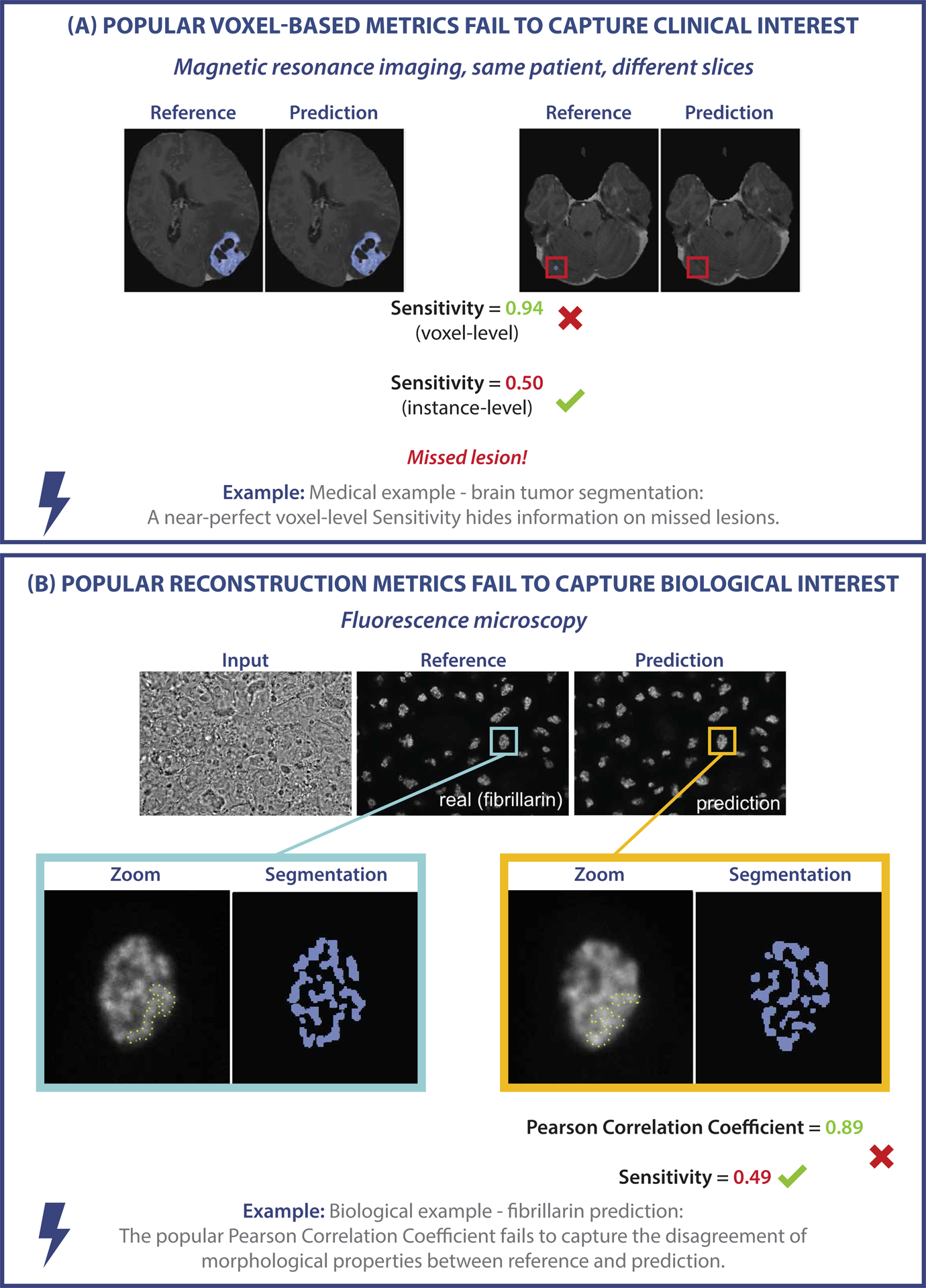

Figure 1:

Examples of metric-related pitfalls in image analysis validation. (A) Medical image analysis example: Voxel-based metrics are not appropriate for detection problems. Measuring the voxel-level performance of a prediction yields a near-perfect Sensitivity. However, the Sensitivity at the instance level reveals that lesions are actually missed by the algorithm. (B) Biological image analysis example: The task of predicting fibrillarin in the dense fibrillary component of the nucleolus should be phrased as a segmentation task, for which segmentation metrics reveal the low quality of the prediction. Phrasing the task as image reconstruction instead and validating it using metrics such as the Pearson Correlation Coefficient yields misleadingly high metric scores [4, 26, 29, 36].

In image analysis, while for some applications it might, for instance, be sufficient to draw a box around the structure of interest (e.g., detecting individual mitotic cells or regions with apoptotic cell debris) and optionally associate that region with a classification (e.g., a mitotic vs an interphase cell), other applications (e.g., cell tracing for fluorescent signal quantification) could require determining the exact structure boundaries. The suitability of any individual validation metric thus depends crucially on the properties of the driving image analysis problem. As a result, numerous metrics have so far been proposed in the field of image processing. In our previous work, we analyzed all biomedical image analysis competitions conducted within a period of about 15 years [21]. We found a total of 97 different metrics reported in the field of biomedicine alone, each with its own individual strengths, weaknesses, and limitations, and hence varying degrees of suitability for meaningfully measuring algorithm performance on any given research problem. Such a vast range of options makes tracking all related information impossible for any individual researcher and consequently renders the process of metric selection error-prone. Thus, the frequent reliance on flawed, historically grown validation practices in current literature comes as no surprise. To make matters worse, there is currently no comprehensive resource that can provide an overview of the relevant definitions, (mathematical) properties, limitations, and pitfalls pertaining to a metric of interest. While taking into account the individual properties and limitations of metrics is imperative for choosing adequate validation metrics, the required knowledge is thus largely inaccessible.

As a result, numerous flaws and pitfalls are prevalent in image analysis validation, with researchers often being unaware of them due to a lack of knowledge of intricate metric properties and limitations. Accordingly, increasing evidence shows that metrics are often selected inadequately in image analysis (e.g., [11, 17, 35]). In the absence of a central information resource, it is common for researchers to resort to popular validation metrics, which, however, can be entirely unsuitable, for instance due to a mismatch of the metric’s inherent mathematical properties with the underlying research question and specifications of the data set at hand (see Fig. 1).

The present work addresses this important roadblock in image analysis research with a crowdsourcing-based approach that involved both a Delphi process conducted by a multidisciplinary expert consortium and a social media campaign. It represents the first comprehensive collection, visualization, and detailed discussion of pitfalls, drawbacks, and limitations regarding validation metrics commonly used in image analysis. Our work provides researchers with a reliable, single point of access to this critical information. Owing to the enormous complexity of the matter, the metric properties and pitfalls are discussed in the specific context of classification problems, i.e., image analysis problems that can be considered classification tasks at either the image, object, or pixel level. Specifically, these encompass the four problem categories of image-level classification, semantic segmentation, object detection, and instance segmentation. Our contribution includes a dedicated profile for each metric (Suppl. Note 3) as well as the creation of a new common taxonomy that categorizes pitfalls in a domain-agnostic manner (Fig. 2). The taxonomy is depicted for individual metrics in provided tables (see Extended Data Tabs. 1–5) and enables researchers to quickly grasp whether using a certain metric comes with pitfalls in a given use case.

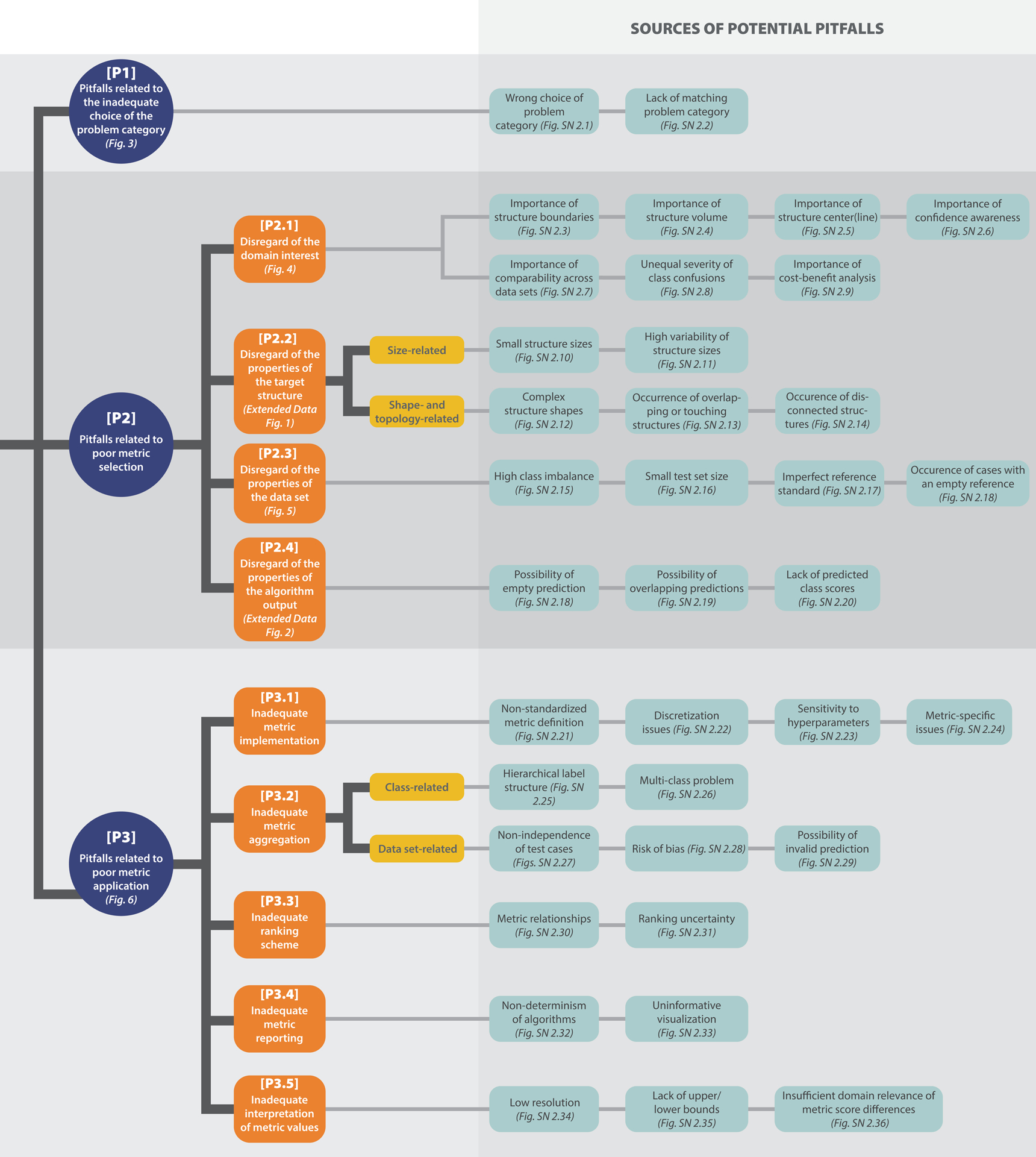

Figure 2:

Overview of the taxonomy for metric-related pitfalls. Pitfalls can be grouped into three main categories: [P1] Pitfalls related to the inadequate choice of the problem category, [P2] pitfalls related to poor metric selection, and [P3] pitfalls related to poor metric application. [P2] and [P3] are further split into subcategories. For all categories, pitfall sources are presented (green), with references to corresponding illustrations of representative examples. Note that the order in which the pitfall sources are presented does not correlate with importance.

While our work grew out of image analysis research and practice in the field of biomedicine, a field of high complexity and particularly high stakes due to its direct impact on human health, we believe the identified pitfalls to be transferable to other application areas of imaging research. It should be noted that this work focuses on identifying, categorizing, and illustrating metric pitfalls, while the sister publication of this work gives specific recommendations on which metrics to apply under which circumstances [22].

Information on metric pitfalls is largely inaccessible

Researchers and algorithm developers seeking to validate image analysis algorithms frequently face the problem of choosing adequate validation metrics while at the same time navigating a range of potential pitfalls. Following common practice is often not the best option, as evidenced by a number of recent publications [11, 17, 21, 35]. Making an educated choice is notably complicated by the absence of any comprehensive databases or reviews covering the topic and thus the lack of a central resource for reliable information on validation metrics.

This lack of accessibility is considered by experts to be a major bottleneck in image analysis validation [21]. To illustrate this point, we searched the literature for available information on commonly used validation metrics. The search was conducted on the platform Google Scholar using search strings that combined different notations of the metric name, including synonyms and acronyms, with search terms indicating problems, such as “pitfall” or “limitation”. The mean and median number of hits for the metrics addressed in the present work were 159,329 and 22,100, respectively, and ranged between 49 for centerline Dice Similarity Coefficient (clDice) and 962,000 for Sensitivity. Moreover, despite valuable literature on individual relevant aspects (e.g., [5, 6, 13, 17, 32, 33, 35]), we did not find a common point of entry to metric-related pitfalls in image analysis in the form of a review paper or other credible source. We conclude that the key knowledge required for making educated decisions and avoiding pitfalls related to the use of validation metrics is highly scattered and not readily accessible to individual researchers.

Historically grown practices are not always justified

To obtain an initial insight into current common practice regarding validation metrics, we prospectively captured the designs of challenges organized by the IEEE Society of the International Symposium of Biomedical Imaging (ISBI), the Medical Image Computing and Computer Assisted Interventions (MICCAI) Society and the Medical Imaging with Deep Learning (MIDL) foundation. The organizers of the respective competitions were asked to provide a rationale for their choice of metrics. An analysis of a total of 138 competitions conducted between 2018 and 2022 revealed that the choice of metrics was frequently (in 24% of the competitions) based on common practice in the community. We found, however, that common practices are often not well justified, and poor practices may even be propagated from one generation to the next.

One remarkable example of this issue is the widespread adoption of an incorrect name and an inconsistent mathematical formulation for a metric proposed for cell instance segmentation. The term “mean Average Precision (mAP)” usually refers to one of the most common metrics in object detection (object-level classification) [20, 28]. Here, Precision denotes the Positive Predictive Value (PPV), which is “averaged” over varying thresholds on the predicted class scores of an object detection algorithm. The mAP is then obtained by taking the mean of the Average Precision (AP) over classes [10, 28]. Despite the popularity of mAP, a widely known challenge on cell instance segmentation¹ introduced a new “Mean Average Precision” in 2018. Although the task matches that of the original mAP (object detection), all terms in the newly proposed metric (mean, average, and precision) refer to entirely different concepts. For instance, the common definition of Precision from the literature, TP/(TP + FP), was altered to TP/(TP + FP + FN), where TP, FP, and FN denote the cardinalities of the respective confusion matrix entries (i.e., the numbers of true positives, false positives, and false negatives). The latter formula actually defines the Intersection over Union (IoU) metric. Despite these problems, the terminology was adopted by subsequent influential works [16, 30, 31, 39], indicating widespread propagation and usage within the community.
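To make the difference concrete, the following minimal Python sketch computes both ratios for two hypothetical sets of items (e.g., pixels or matched objects); the sets are illustrative and not taken from the challenge’s evaluation code. Because TP + FP + FN is the size of the union of prediction and reference, the altered “precision” coincides with the IoU of the two sets.

```python
# Hypothetical prediction and reference, given as sets of item indices.
prediction = {1, 2, 3, 4, 5}
reference = {4, 5, 6, 7}

tp = len(prediction & reference)  # items in both sets
fp = len(prediction - reference)  # predicted but absent from the reference
fn = len(reference - prediction)  # in the reference but not predicted

precision = tp / (tp + fp)               # common definition (PPV)
altered_precision = tp / (tp + fp + fn)  # altered formula described above
iou = len(prediction & reference) / len(prediction | reference)

print(f"Precision:           {precision:.3f}")
print(f"Altered 'precision': {altered_precision:.3f}")
print(f"IoU:                 {iou:.3f}")
```

The last two values are identical by construction, whereas the common Precision ignores the false negatives entirely.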

A multidisciplinary Delphi process reveals numerous pitfalls in biomedical image analysis validation

With the aim of creating a comprehensive, reliable collection and future point of access to biomedical image analysis metric definitions and limitations, we formed an international multidisciplinary consortium of 62 experts from various biomedical image analysis-related fields that engaged in a multi-stage Delphi process [2] for consensus building. The Delphi process comprised multiple surveys, developed by a coordinating team and filled out by the remaining members of the consortium. Based on the survey results, the list of pitfalls was iteratively refined by collecting pitfall sources, specific feedback and suggestions on pitfalls, and final agreement on which pitfalls to include and how to illustrate them. Further pitfalls were crowdsourced through the publication of a dynamic preprint of this work [28] as well as a social media campaign, both of which asked the scientific community for contributions. This approach allowed us to integrate distributed, cross-domain knowledge on metric-related pitfalls within a single resource. In total, the process revealed 37 distinct sources of pitfalls (see Fig. 2). Notably, these pitfall sources (e.g., class imbalances, uncertainties in the reference, or poor image resolution) can occur irrespective of a specific imaging modality or application. As a result, many pitfalls generalize across different problem categories in image processing (image-level classification, semantic segmentation, object detection, and instance segmentation), as well as imaging modalities and domains. A detailed discussion of all pitfalls can be found in Suppl. Note 2.

A common taxonomy enables domain-agnostic categorization of pitfalls

One of our key objectives was to facilitate information retrieval and provide structure within this vast topic. Specifically, we wanted to enable researchers to identify at a glance which metrics are affected by which types of pitfalls. To this end, we created a comprehensive taxonomy that categorizes the different pitfalls in a semantic fashion. The taxonomy was created in a domain-agnostic manner to reflect the generalization of pitfalls across different imaging domains and modalities. An overview of the taxonomy is presented in Fig. 2, and the relations between the pitfall categories and individual metrics can be found in Extended Data Tabs. 1–5. We distinguish the following three main categories:

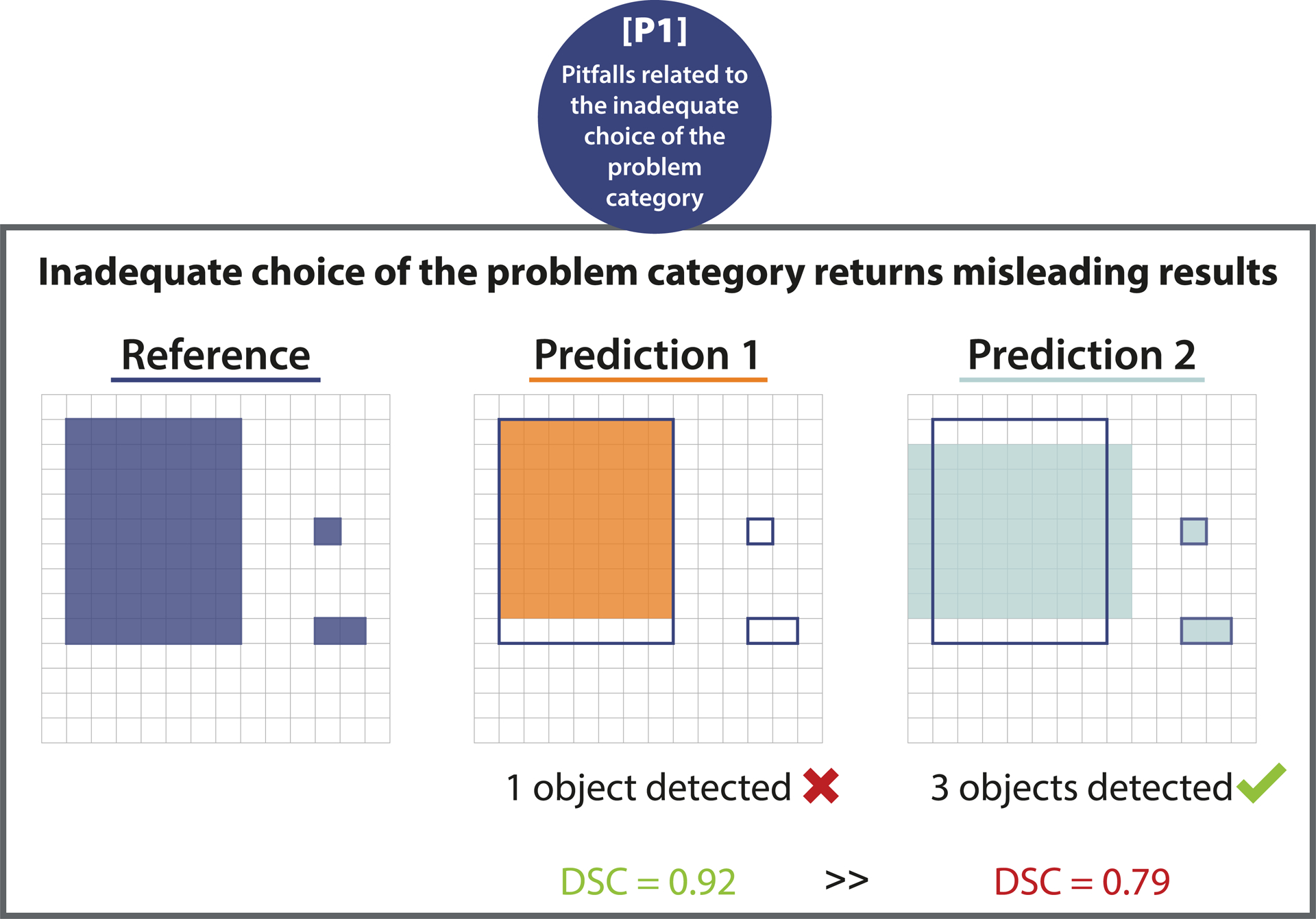

[P1] Pitfalls related to the inadequate choice of the problem category.

A common pitfall lies in the use of metrics for a problem category they are not suited for because they fail to fulfill crucial requirements of that problem category and hence do not reflect the domain interest (Fig. 1). For instance, popular voxel-based metrics, such as the Dice Similarity Coefficient (DSC) or Sensitivity, are widely used in image analysis problems, although they do not fulfill the critical requirement of detecting all objects in a data set. In a cancer monitoring application, for example, they fail to measure instance progress, i.e., a potential increase in the number of lesions (Fig. 1), which can have serious consequences for the patient. For some problems, there may even be no matching problem category at all (Fig. SN 2.2), rendering common metrics inadequate. We present further examples of pitfalls in this category in Suppl. Note 2.1.
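The voxel- vs instance-level discrepancy from Fig. 1(A) can be reproduced with a few lines of NumPy. The sketch below assumes a hypothetical 2D image with one large and two small lesions, a prediction that captures only the large lesion, and a deliberately simple detection criterion (any overlap counts as a hit); real detection validation would use a properly chosen localization criterion and matching strategy.

```python
import numpy as np

SHAPE = (32, 32)

def box(r0, r1, c0, c1):
    """Boolean mask containing one rectangular (hypothetical) lesion."""
    mask = np.zeros(SHAPE, dtype=bool)
    mask[r0:r1, c0:c1] = True
    return mask

def voxel_sensitivity(reference, prediction):
    # Fraction of reference voxels covered by the prediction.
    return np.logical_and(reference, prediction).sum() / reference.sum()

def instance_sensitivity(lesions, prediction):
    # Fraction of lesions hit by the prediction; "any overlap" is a simplistic criterion.
    detected = sum(bool(np.logical_and(lesion, prediction).any()) for lesion in lesions)
    return detected / len(lesions)

# One large (100-voxel) and two small (4-voxel) lesions; only the large one is predicted.
lesions = [box(2, 12, 2, 12), box(20, 22, 20, 22), box(26, 28, 5, 7)]
reference = np.logical_or.reduce(lesions)
prediction = lesions[0].copy()

voxel_sens = voxel_sensitivity(reference, prediction)
inst_sens = instance_sensitivity(lesions, prediction)
print(f"Voxel-level Sensitivity:    {voxel_sens:.3f}")
print(f"Instance-level Sensitivity: {inst_sens:.3f}")
```

The voxel-level score is dominated by the large lesion, while the instance-level score exposes that two of three lesions were missed.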

[P2] Pitfalls related to poor metric selection.

Pitfalls of this category occur when a validation metric is selected while disregarding specific properties of the given research problem or method used that make this metric unsuitable in the particular context. [P2] can be further divided into the following four subcategories:

[P2.1] Disregard of the domain interest.

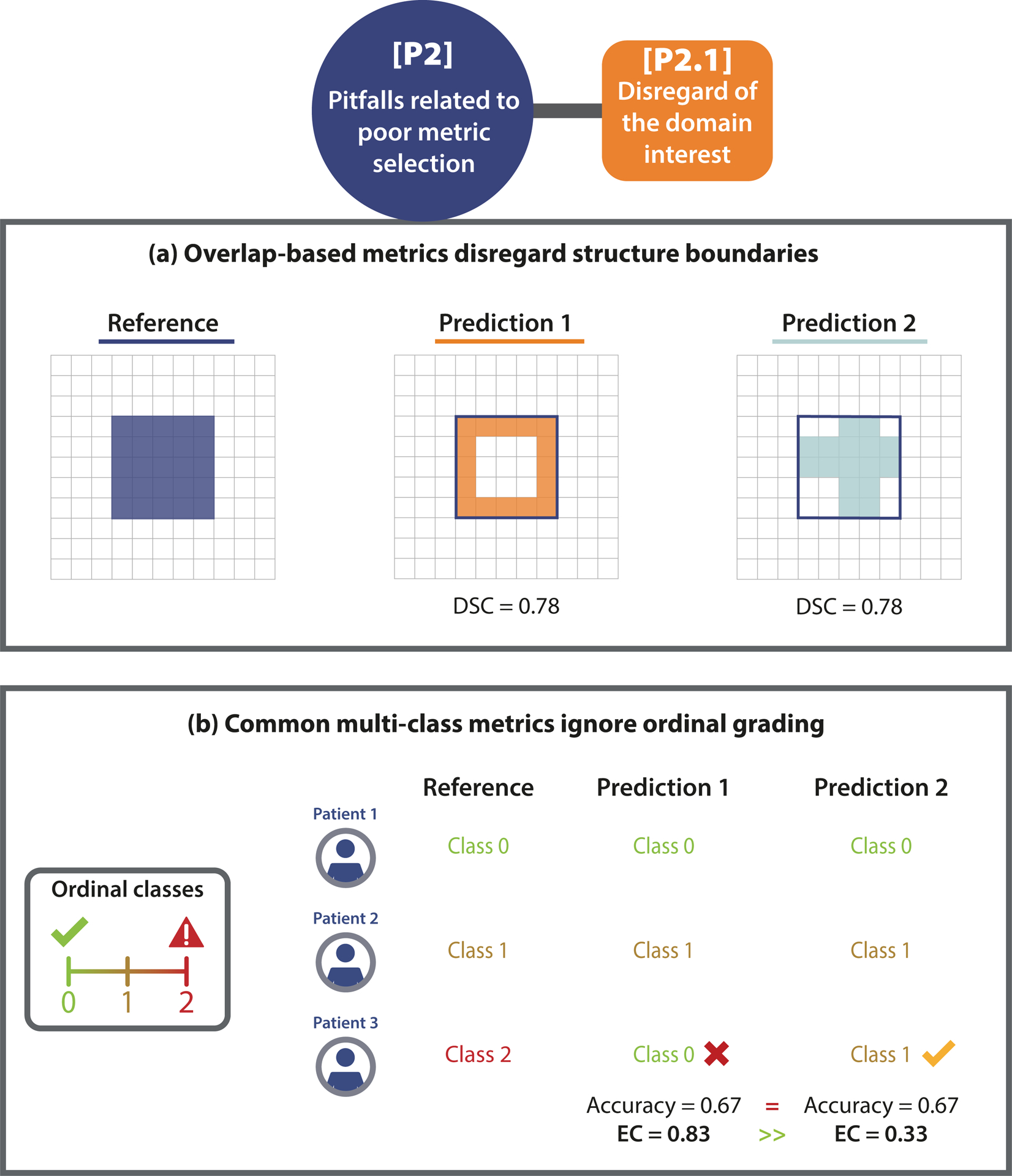

Commonly, several requirements arise from the domain interest of the underlying research problem that may clash with particular metric limitations. For example, if there is particular interest in the structure boundaries, it is important to know that overlap-based metrics such as the DSC do not take the correctness of an object’s boundaries into account, as shown in Fig. 4(a). Similar issues may arise if the structure volume (Fig. SN 2.4) or center(line) (Fig. SN 2.5) is of particular interest. Other domain interest-related properties may include an unequal severity of class confusions. This may be important in an ordinal grading use case, in which the severity of a disease is categorized by different scores. Predicting a low severity for a patient who actually suffers from a severe disease should be penalized substantially. Common classification metrics do not fulfill this requirement. An example is provided in Fig. 4(b). At the pixel level, this property relates to an unequal severity of over- vs. undersegmentation. In applications such as radiotherapy, it may be highly relevant whether an algorithm tends to over- or undersegment the target structure. Common overlap-based metrics, however, do not represent over- and undersegmentation equally [38]. Further pitfalls may occur if confidence awareness (Fig. SN 2.6), comparability across data sets (Fig. SN 2.7), or a cost-benefit analysis (Fig. SN 2.9) is of particular importance, as illustrated in Suppl. Note 2.2.1.

Figure 4: [P2.1] Disregard of the domain interest.

(a) Importance of structure boundaries. The predictions of two algorithms (Prediction 1/2) capture the boundary of the given structure substantially differently, but lead to the exact same Dice Similarity Coefficient (DSC), due to its boundary unawareness. This pitfall is also relevant for other overlap-based metrics such as centerline Dice Similarity Coefficient (clDice), pixel-level Fβ Score, and Intersection over Union (IoU), as well as localization criteria such as Box/Approx/Mask IoU, Center Distance, Mask IoU > 0, Point inside Mask/Box/Approx, and Intersection over Reference (IoR). (b) Unequal severity of class confusions. When predicting the severity of a disease for three patients in an ordinal classification problem, Prediction 1 assumes a much lower severity for Patient 3 than actually observed. This critical issue is overlooked by common metrics (here: Accuracy), which measure no difference to Prediction 2, which assesses the severity much better. Metrics with pre-defined weights (here: Expected Cost (EC)) correctly penalize Prediction 1 much more than Prediction 2. This pitfall is also relevant for other counting metrics, such as Balanced Accuracy (BA), Fβ Score, Positive Likelihood Ratio (LR+), Matthews Correlation Coefficient (MCC), Net Benefit (NB), Negative Predictive Value (NPV), Positive Predictive Value (PPV), Sensitivity, and Specificity.
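The contrast between Accuracy and EC in Fig. 4(b) can be sketched as follows. The severity labels (0 = mild, 2 = severe), the three-patient data, and the linear cost matrix c(r, p) = |r − p| are illustrative assumptions; EC is computed here as the mean cost per case, which corresponds to weighting each reference class by its observed frequency.

```python
import numpy as np

def accuracy(reference, prediction):
    return float(np.mean(np.asarray(reference) == np.asarray(prediction)))

def expected_cost(reference, prediction, cost):
    # Mean of cost[reference_class][predicted_class] over all cases.
    return float(np.mean([cost[r][p] for r, p in zip(reference, prediction)]))

n_classes = 3
cost = [[abs(r - p) for p in range(n_classes)] for r in range(n_classes)]  # hypothetical weights

reference = [0, 1, 2]    # Patient 3 has the most severe grade
prediction_1 = [0, 1, 0]  # Patient 3 predicted as mild (two grades off)
prediction_2 = [0, 1, 1]  # Patient 3 predicted one grade too low

acc1, acc2 = accuracy(reference, prediction_1), accuracy(reference, prediction_2)
ec1 = expected_cost(reference, prediction_1, cost)
ec2 = expected_cost(reference, prediction_2, cost)
print(f"Accuracy: {acc1:.3f} vs {acc2:.3f}")
print(f"EC (lower is better): {ec1:.3f} vs {ec2:.3f}")
```

Accuracy is identical for both predictions, whereas EC assigns Prediction 1 twice the cost of Prediction 2.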

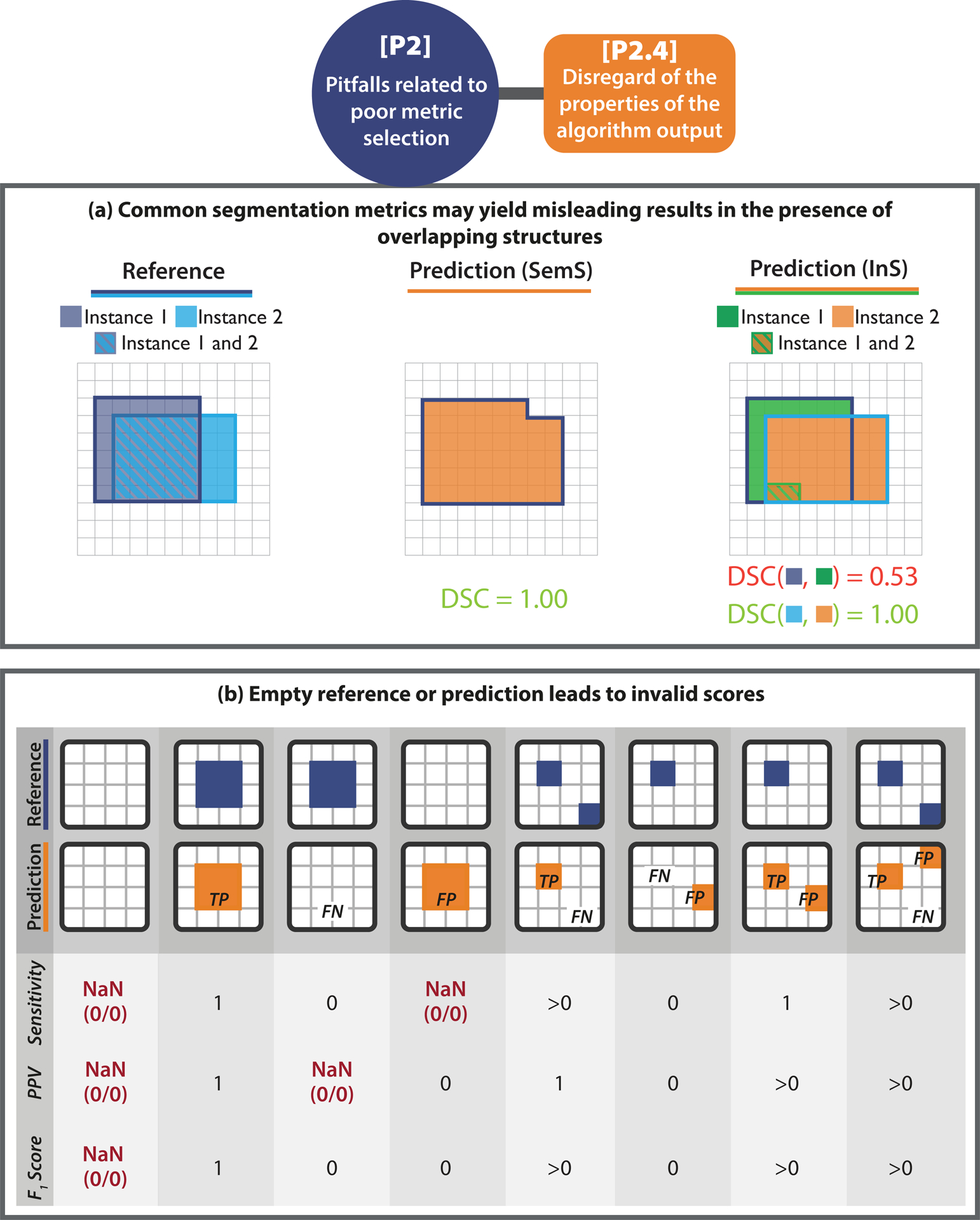

[P2.2] Disregard of the properties of the target structures.

For problems that require capturing local properties (object detection, semantic or instance segmentation), the properties of the target structures to be localized and/or segmented may have important implications for the choice of metrics. Here, we distinguish between size-related and shape- and topology-related pitfalls. Common metrics, for example, are sensitive to structure sizes, such that single-pixel differences may hugely impact the metric scores, as shown in Extended Data Fig. 1(a). Shape- and topology-related pitfalls may relate to the fact that common metrics disregard complex shapes (Extended Data Fig. 1(b)) or that bounding boxes do not capture the disconnectedness of structures (Fig. SN 2.14). A high variability of structure sizes (Fig. SN 2.11) and overlapping or touching structures (Fig. SN 2.13) may also influence metric values. We present further examples of [P2.2] pitfalls in Suppl. Note 2.2.2.
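A minimal sketch of this size dependence: the same one-pixel error is applied to a hypothetical 2 × 2 structure and a hypothetical 20 × 20 structure, and the DSC is computed from boolean masks.

```python
import numpy as np

def dsc(reference, prediction):
    intersection = np.logical_and(reference, prediction).sum()
    return 2.0 * intersection / (reference.sum() + prediction.sum())

def one_pixel_miss(size):
    """Square structure of side `size` and a prediction missing exactly one of its pixels."""
    reference = np.zeros((32, 32), dtype=bool)
    reference[:size, :size] = True
    prediction = reference.copy()
    prediction[0, 0] = False
    return reference, prediction

dsc_small = dsc(*one_pixel_miss(2))   # 4-pixel structure
dsc_large = dsc(*one_pixel_miss(20))  # 400-pixel structure
print(f"small structure: {dsc_small:.3f}, large structure: {dsc_large:.3f}")
```

An identical error costs the small structure roughly 14 DSC points but the large one barely 0.1.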

[P2.3] Disregard of the properties of the data set.

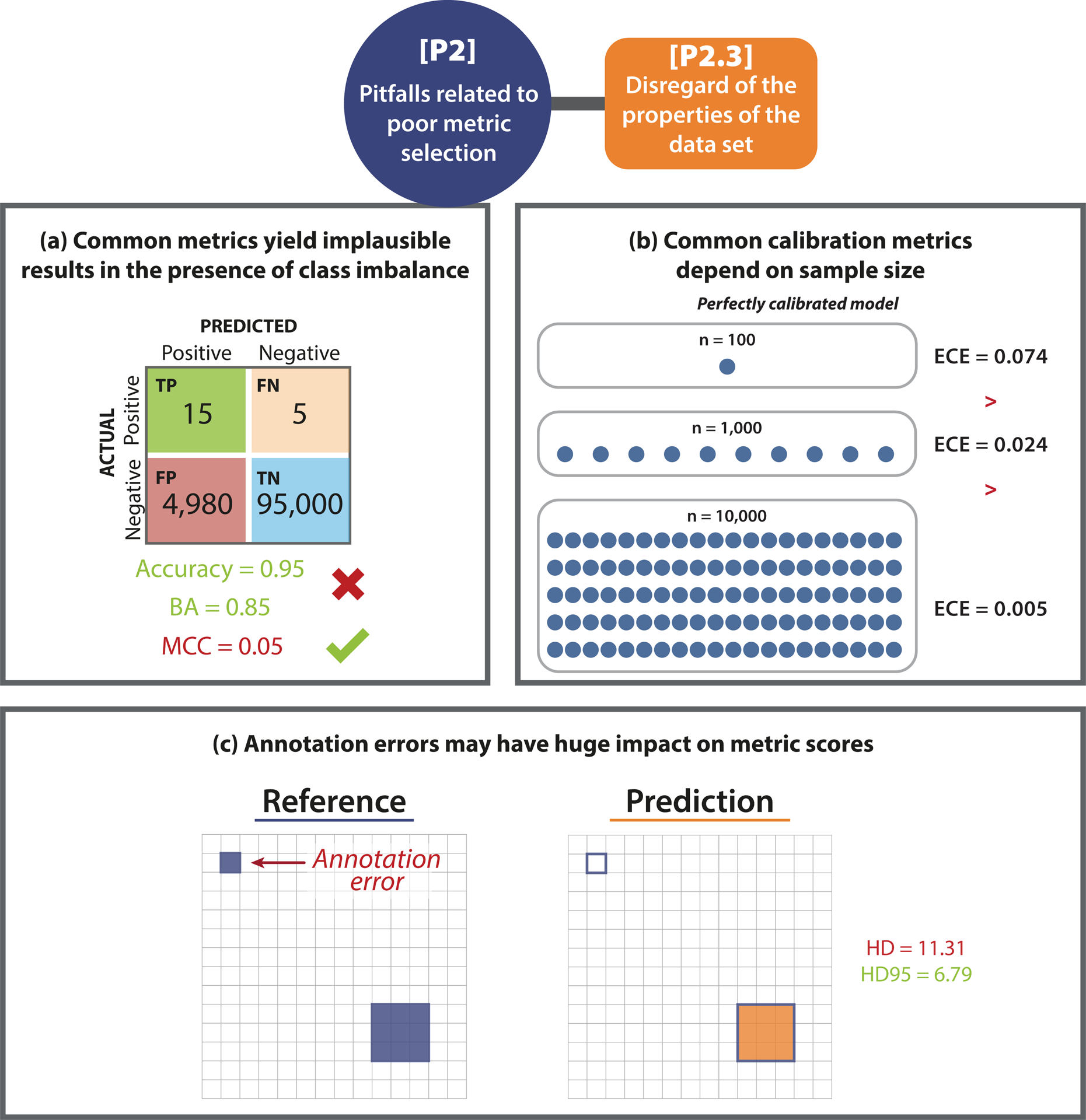

Various properties of the data set, such as class imbalances (Fig. 5(a)), small sample sizes (Fig. 5(b)), or the quality of the reference annotations, may directly affect metric values. Common metrics such as the Balanced Accuracy (BA), for instance, may yield a very high score for a model that predicts many False Positive (FP) samples in an imbalanced setting (see Fig. 5(a)). When only small test data sets are used, common calibration metrics (which are typically biased estimators) either underestimate or overestimate the true calibration error of a model (Fig. 5(b)) [14]. Furthermore, metric values may be affected by imperfect reference annotations (Fig. SN 2.17). Spatial outliers in the reference, for example, may have a huge impact on distance-based metrics such as the Hausdorff Distance (HD) (Fig. 5(c)). Additional pitfalls may arise from cases with an empty reference (Extended Data Fig. 2(b)), which can cause division-by-zero errors. We present further examples of [P2.3] pitfalls in Suppl. Note 2.2.3.
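The effect of class imbalance can be checked directly from confusion matrix counts. The counts below are hypothetical (10 positive and 990 negative cases, all positives found, 90 false positives); the formulas are the standard definitions of Accuracy, BA, and MCC.

```python
import math

def imbalance_metrics(tp, fp, fn, tn):
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    balanced_accuracy = (sensitivity + specificity) / 2
    # MCC also involves the predictive values, so the many FPs are penalized.
    mcc = (tp * tn - fp * fn) / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return accuracy, balanced_accuracy, mcc

acc, ba, mcc = imbalance_metrics(tp=10, fp=90, fn=0, tn=900)
print(f"Accuracy={acc:.3f}, BA={ba:.3f}, MCC={mcc:.3f}")
```

Accuracy and BA both exceed 0.9, although only one in ten positive predictions is correct; the MCC stays at roughly 0.3.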

Figure 5: [P2.3] Disregard of the properties of the data set.

(a) High class imbalance. In the case of underrepresented classes, common metrics may yield misleading values. In the given example, Accuracy and Balanced Accuracy (BA) have a high score despite the high number of False Positive (FP) samples. The class imbalance is only uncovered by metrics considering predictive values (here: Matthews Correlation Coefficient (MCC)). This pitfall is also relevant for other counting and multi-threshold metrics such as Area under the Receiver Operating Characteristic Curve (AUROC), Expected Cost (EC) (depending on the chosen costs), Positive Likelihood Ratio (LR+), Net Benefit (NB), Sensitivity, Specificity, and Weighted Cohen’s Kappa (WCK). (b) Small test set size. The values of the Expected Calibration Error (ECE) depend on the sample size. Even for a simulated perfectly calibrated model, the ECE will be substantially greater than zero for small sample sizes [14]. (c) Imperfect reference standard. A single erroneously annotated pixel may lead to a large decrease in performance, especially in the case of the Hausdorff Distance (HD) when applied to small structures. The Hausdorff Distance 95th Percentile (HD95), on the other hand, was designed to deal with spatial outliers. This pitfall is also relevant for localization criteria such as Box/Approx Intersection over Union (IoU) and Point inside Box/Approx. Further abbreviations: True Positive (TP), False Negative (FN), True Negative (TN).
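The outlier sensitivity of the HD, and the robustness of the HD95, can be illustrated with point sets. This sketch uses brute-force pairwise distances and defines the HD95 as the maximum of the two directed 95th-percentile distances; implementations differ in this detail (see [P3.1]), so it is one possible variant rather than a reference implementation. The point sets are hypothetical.

```python
import numpy as np

def directed_distances(a, b):
    # For each point in a, the Euclidean distance to the closest point in b.
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)

def hausdorff(a, b, percentile=100.0):
    # percentile=100 gives the classical HD; percentile=95 gives one HD95 variant.
    return max(np.percentile(directed_distances(a, b), percentile),
               np.percentile(directed_distances(b, a), percentile))

# A 5 x 5 block of pixels, plus one erroneously annotated reference pixel far away.
square = np.array([(i, j) for i in range(5) for j in range(5)], dtype=float)
reference = np.vstack([square, [[20.0, 20.0]]])
prediction = square

hd = hausdorff(prediction, reference)
hd95 = hausdorff(prediction, reference, percentile=95.0)
print(f"HD = {hd:.2f}, HD95 = {hd95:.2f}")
```

The single outlier fully determines the HD, whereas the HD95 ignores it.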

[P2.4] Disregard of the properties of the algorithm output.

Reference-based metrics compare the algorithm output to a reference annotation to compute a metric score. Thus, the content and format of the prediction are of high importance when choosing a metric. Overlapping predictions in segmentation problems, for instance, may return misleading results. In Extended Data Fig. 2(a), the predictions overlap only to a certain extent, failing to represent that the reference instances actually overlap substantially. This is not detected by common metrics. Further examples are empty predictions, which may cause division-by-zero errors in metric calculations, as illustrated in Extended Data Fig. 2(b), and the lack of predicted class scores (Fig. SN 2.20). We present further examples of [P2.4] pitfalls in Suppl. Note 2.2.4.
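Empty masks make the DSC denominator zero. One way to avoid silent errors, sketched below under the assumption that masks are NumPy boolean arrays, is to handle the both-empty case explicitly and expose the chosen convention as a parameter (the name `empty_value` is hypothetical). Whether such a case should score 1, score 0, or be excluded is a design decision that should be reported.

```python
import numpy as np

def dsc(reference, prediction, empty_value=float("nan")):
    reference = np.asarray(reference, dtype=bool)
    prediction = np.asarray(prediction, dtype=bool)
    denominator = reference.sum() + prediction.sum()
    if denominator == 0:
        # Both masks empty: 2 * 0 / 0 is undefined, so return the explicitly chosen convention.
        return empty_value
    return 2.0 * np.logical_and(reference, prediction).sum() / denominator

empty = np.zeros((4, 4), dtype=bool)
lesion = empty.copy()
lesion[1:3, 1:3] = True

print(dsc(empty, empty))                   # undefined case, reported as nan
print(dsc(empty, empty, empty_value=1.0))  # convention: a correct empty prediction scores 1
print(dsc(lesion, empty))                  # empty prediction, non-empty reference
```

Note that only the both-empty case is undefined; an empty prediction for a non-empty reference yields a well-defined DSC of 0.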

[P3] Pitfalls related to poor metric application.

Once selected, the metrics need to be applied to an image or an entire data set. This step is not straightforward and comes with several pitfalls. For instance, when aggregating metric values over multiple images or patients, a common mistake is to ignore the hierarchical data structure, such as data from several hospitals or a varying number of images per patient. We present three examples of [P3] pitfalls in Fig. 6; for more pitfalls in this category, please refer to Suppl. Note 2.3. [P3] can further be divided into five subcategories, which are presented in the following paragraphs.
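The effect of ignoring the hierarchical structure can be sketched with hypothetical per-image DSC values, where one patient contributes eight images and four other patients contribute one each. Averaging over images lets the over-represented patient dominate; averaging first within and then across patients gives each patient equal weight.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (patient_id, DSC) pairs: Patient 1 contributes 8 of 12 images.
records = [("P1", 0.9)] * 8 + [("P2", 0.5), ("P3", 0.5), ("P4", 0.5), ("P5", 0.5)]

def image_level_mean(records):
    # Every image counts equally, regardless of which patient it comes from.
    return mean(score for _, score in records)

def patient_level_mean(records):
    # Aggregate within each patient first, then across patients.
    per_patient = defaultdict(list)
    for patient_id, score in records:
        per_patient[patient_id].append(score)
    return mean(mean(scores) for scores in per_patient.values())

print(f"Image-level mean DSC:   {image_level_mean(records):.3f}")
print(f"Patient-level mean DSC: {patient_level_mean(records):.3f}")
```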

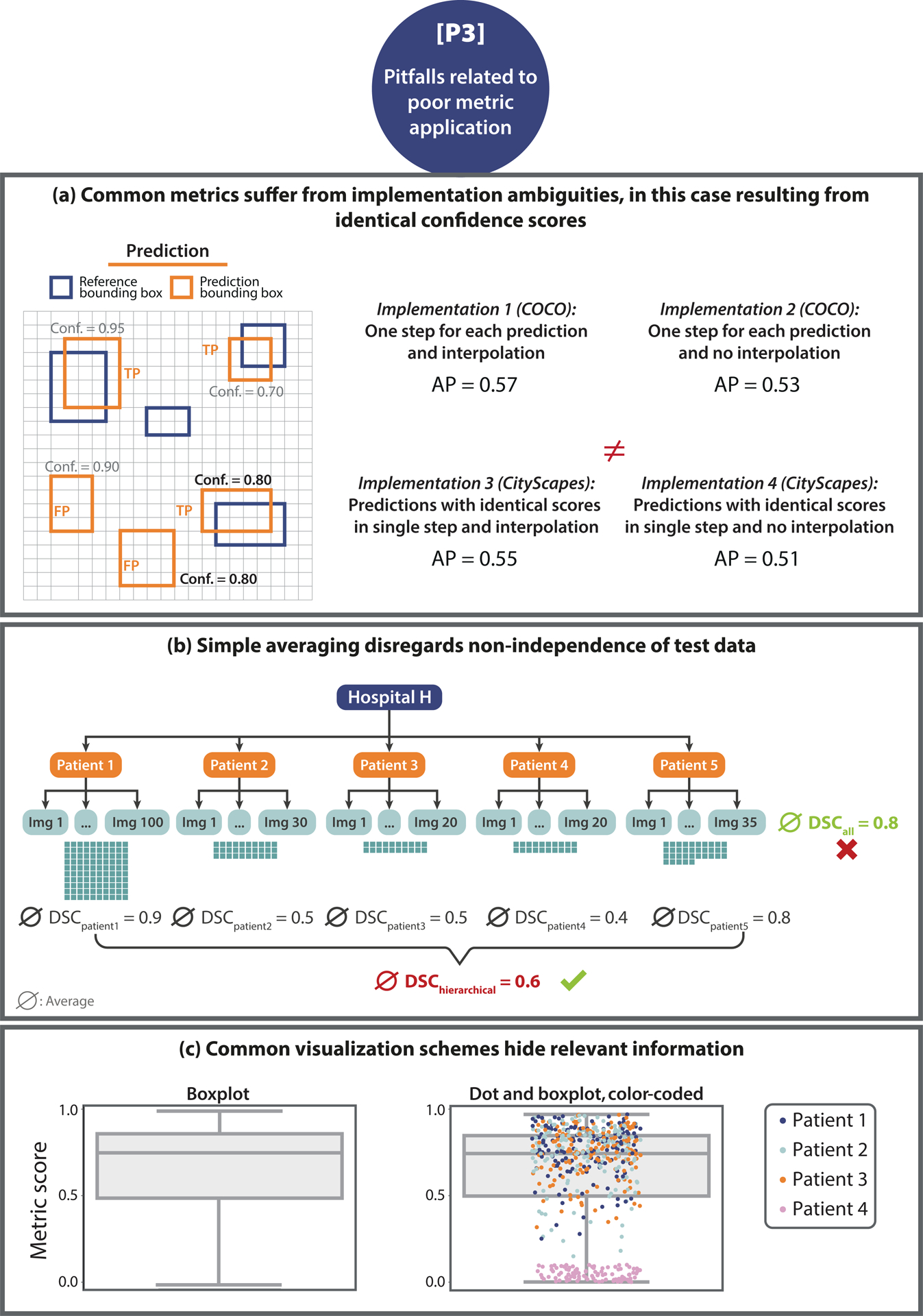

Figure 6: [P3] Pitfalls related to poor metric application.

(a) Non-standardized metric implementation. In the case of the Average Precision (AP) metric and the construction of the Precision-Recall (PR) curve, the strategy of how identical scores (here: confidence score of 0.80 is present twice) are treated has a substantial impact on the metric scores. Microsoft Common Objects in Context (COCO) [20] and CityScapes [7] are used as examples. (b) Non-independence of test cases. The number of images taken from Patient 1 is much higher compared to that acquired from Patients 2–5. Averaging over all Dice Similarity Coefficient (DSC) values, denoted by ∅, results in a high averaged score. Aggregating metric values per patient reveals much higher scores for Patient 1 compared to the others, which would have been hidden by simple aggregation. (c) Uninformative visualization. A single box plot (left) does not give sufficient information about the raw metric value distribution. Adding the raw metric values as jittered dots on top (right) adds important information (here: on clusters). In the case of non-independent validation data, color/shape-coding helps reveal data clusters.

[P3.1] Inadequate metric implementation.

Metric implementation is, unfortunately, not standardized. As shown by [12], different researchers typically employ different implementations of the same metric, which may yield substantial variation in the metric scores. While some metrics are straightforward to implement, others require more advanced techniques and leave room for different implementation choices. In the following, we provide some examples of inadequate metric implementation:

The way identical confidence scores are handled in the computation of the AP metric may lead to substantial differences in the metric scores. Microsoft Common Objects in Context (COCO) [20], for instance, processes each prediction individually, while CityScapes [7] processes all predictions with the same score in one joint step. Fig. 6(a) provides an example with two predictions having the same confidence score, in which the final metric scores differ depending on the chosen handling strategy for identical confidence scores. Similar issues may arise with other curve-based metrics, such as Area under the Receiver Operating Characteristic Curve (AUROC), AP, or Free-Response Receiver Operating Characteristic (FROC) scores (see e.g., [24]).
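The effect of tie handling can be reproduced with a simplified AP, computed as the step-wise (non-interpolated) area under the PR curve. The two strategies below mirror the distinction described above (tied predictions processed one at a time vs jointly), but they are not the COCO or CityScapes code, which additionally apply their own interpolation and matching rules. The predictions and their TP/FP status are hypothetical.

```python
from itertools import groupby

def average_precision(scores, is_tp, n_reference, joint_ties):
    """Step-wise AP: sum of (recall increase) x (precision) over the PR points."""
    # Sort by descending score; Python's sort is stable, so ties keep their input order.
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    if joint_ties:
        # One PR point per distinct score: tied predictions are added together.
        groups = [list(g) for _, g in groupby(order, key=lambda i: scores[i])]
    else:
        # One PR point per prediction: ties are processed individually.
        groups = [[i] for i in order]

    tp = fp = 0
    ap, previous_recall = 0.0, 0.0
    for group in groups:
        for i in group:
            if is_tp[i]:
                tp += 1
            else:
                fp += 1
        recall = tp / n_reference
        precision = tp / (tp + fp)
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap

# Two reference objects; two predictions share the confidence score 0.8.
scores = [0.9, 0.8, 0.8]
order_a = [True, False, True]  # tied FP listed before tied TP
order_b = [True, True, False]  # same predictions, tied TP listed first

for name, is_tp in [("order A", order_a), ("order B", order_b)]:
    individual = average_precision(scores, is_tp, n_reference=2, joint_ties=False)
    joint = average_precision(scores, is_tp, n_reference=2, joint_ties=True)
    print(f"{name}: individual={individual:.3f}, joint={joint:.3f}")
```

With individual processing, the score depends on the arbitrary input order of the tied predictions; joint processing yields the same value for both orders.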

Metric implementation may be subject to discretization issues, such as the chosen discretization of continuous variables, which may cause differences in the metric scores, as illustrated by example in Fig. SN 2.22.

For metrics assessing structure boundaries, such as the Average Symmetric Surface Distance (ASSD), the exact boundary extraction method is not standardized. Thus, for example, the boundary extraction method implemented by the Liver Tumor Segmentation (LiTS) challenge [1] and that implemented by Google DeepMind² may produce different metric scores for the ASSD. This is especially critical for metrics that are sensitive to small contour changes, such as the HD.

Suboptimal choices of hyperparameters may also lead to metric scores that do not reflect the domain interest. For example, the choice of a threshold on a localization criterion (see Fig. SN 2.23) or the chosen hyperparameter for the Fβ Score will heavily influence the subsequent metric scores [34].
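To illustrate how strongly the hyperparameter β matters, the sketch below evaluates the standard formula Fβ = (1 + β²)·P·R / (β²·P + R) for two hypothetical algorithms with mirrored Precision (P) and Recall (R). Both have the same F1 Score, but their order flips depending on whether β emphasizes Recall (β > 1) or Precision (β < 1).

```python
def f_beta(precision, recall, beta):
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)

algorithms = {"A": (0.9, 0.6), "B": (0.6, 0.9)}  # hypothetical (Precision, Recall)

for beta in (0.5, 1.0, 2.0):
    scores = {name: round(f_beta(p, r, beta), 3) for name, (p, r) in algorithms.items()}
    print(f"beta={beta}: {scores}")
```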

More [P3.1] pitfalls can be found in Suppl. Note 2.3.1.

[P3.2] Inadequate metric aggregation.

A common pitfall with respect to metric application is to simply aggregate metric values over the entire data set and/or all classes. As detailed in Fig. 6(b) and Suppl. Note 2.3.2, important information may get lost in this process, and metric results can be misleading. For example, the popular TorchMetrics framework calculates the DSC metric by default as a global average over all pixels in the data set without considering their image or class of origin³. Such a calculation eliminates the possibility of interpreting the final metric score with respect to individual images and classes. Errors in small structures, for instance, may be suppressed by correctly segmented larger structures in other images (see, e.g., Fig. SN 2.26). An adequate aggregation scheme is also crucial for handling hierarchical class structures (Fig. SN 2.27), missing values (Fig. SN 2.29), and potential biases (Fig. SN 2.28) of the algorithm. Further [P3.2] pitfalls are shown in Suppl. Note 2.3.2.
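The difference between pooling over the data set and averaging per image can be sketched from hypothetical per-image counts: one image with a large, perfectly segmented structure and one with a small structure that is missed entirely. The pooled variant sums TP, FP, and FN over all images before computing the DSC, analogous to the global averaging described above; the sketch does not reproduce any specific library’s code.

```python
def dsc_from_counts(tp, fp, fn):
    return 2 * tp / (2 * tp + fp + fn)

# Hypothetical per-image counts (TP, FP, FN).
images = {
    "large structure, correct": (1000, 0, 0),
    "small structure, missed": (0, 0, 10),
}

per_image = [dsc_from_counts(*counts) for counts in images.values()]
mean_per_image = sum(per_image) / len(per_image)

# Pool the counts over all images first, then compute a single DSC.
tp, fp, fn = (sum(counts[k] for counts in images.values()) for k in range(3))
pooled = dsc_from_counts(tp, fp, fn)

print(f"Mean of per-image DSC: {mean_per_image:.3f}")
print(f"Pooled (global) DSC:   {pooled:.3f}")
```

The pooled score is close to 1 and hides the completely missed small structure, which the per-image view makes obvious.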

[P3.3] Inadequate ranking scheme.

Rankings are often created to compare algorithm performances. In this context, several pitfalls pertain to either metric relationships or ranking uncertainty. To assess different properties of an algorithm, for example, it is advisable to select multiple metrics and determine their values. However, the chosen metrics should assess complementary properties and should not be mathematically related. The DSC and IoU, for instance, are closely related, so using both in combination would not provide any additional information over using either of them individually (Fig. SN 2.30). Note in this context that unawareness of metric synonyms can equally mislead. Metrics can be known under different names; for instance, Sensitivity and Recall refer to the same mathematical formula. Although this may seem trivial, an analysis of 138 biomedical image analysis challenges [22] found three challenges that unknowingly used two versions of the same metric to calculate their rankings. Moreover, rankings themselves may be unstable (Fig. SN 2.31). Prior work [21, 37] demonstrated that rankings are highly sensitive to changes in the metric aggregation operators, the underlying data set, or the general ranking method. Thus, if the robustness of rankings is disregarded, the winning algorithm may be identified by chance rather than true superiority.
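The redundancy of DSC and IoU follows from a simple identity: both are computed from the same counts, and IoU = DSC / (2 − DSC), which is strictly increasing in the DSC on [0, 1]. The sketch below checks this identity on hypothetical counts and confirms that both metrics rank the hypothetical algorithms identically.

```python
def dsc(tp, fp, fn):
    return 2 * tp / (2 * tp + fp + fn)

def iou(tp, fp, fn):
    return tp / (tp + fp + fn)

# Hypothetical (TP, FP, FN) counts for four algorithms.
results = {"alg1": (90, 10, 5), "alg2": (70, 5, 30), "alg3": (95, 40, 2), "alg4": (50, 50, 50)}

for name, counts in results.items():
    d, j = dsc(*counts), iou(*counts)
    assert abs(j - d / (2 - d)) < 1e-12  # IoU is a monotonic transform of the DSC

rank_by_dsc = sorted(results, key=lambda n: dsc(*results[n]), reverse=True)
rank_by_iou = sorted(results, key=lambda n: iou(*results[n]), reverse=True)
print(rank_by_dsc)
print(rank_by_iou)
```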

[P3.4] Inadequate metric reporting.

A thorough reporting of metric values and aggregates is important both in terms of transparency and interpretability. However, several pitfalls are to be avoided in this regard. Notably, different types of visualization may vary substantially in terms of interpretability, as shown in Fig. 6(c). For example, while a box plot provides basic information, it does not show the full distribution of the individual metric values. This may conceal important information, such as specific images on which an algorithm performed poorly. Other pitfalls in this category relate to the non-determinism of algorithms, which introduces a natural variability to the results of a neural network, even with fixed seeds (Fig. SN 2.32). This issue is aggravated by inadequate reporting, for instance, reporting only the results of the best run instead of performing proper cross-validation and reporting the variability across runs. Generally, shortcomings in reporting, such as providing no standard deviation or confidence intervals in the presented results, are common. Concrete examples of [P3.4] pitfalls can be found in Suppl. Note 2.3.4.
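As a minimal illustration of reporting variability alongside a point estimate, the sketch below reports the mean, the standard deviation, and a 95% percentile-bootstrap confidence interval for hypothetical per-case DSC values. It assumes independent cases; for hierarchical data (see [P3.2]), resampling should instead happen at the level of the independent unit, e.g., the patient.

```python
import numpy as np

def summarize(scores, n_boot=10_000, alpha=0.05, seed=0):
    scores = np.asarray(scores, dtype=float)
    rng = np.random.default_rng(seed)
    # Resample cases with replacement and recompute the mean for each bootstrap sample.
    idx = rng.integers(0, len(scores), size=(n_boot, len(scores)))
    boot_means = scores[idx].mean(axis=1)
    lower, upper = np.quantile(boot_means, [alpha / 2, 1 - alpha / 2])
    return scores.mean(), scores.std(ddof=1), (lower, upper)

per_case_dsc = [0.91, 0.88, 0.93, 0.52, 0.90, 0.87, 0.95, 0.89]  # hypothetical values
mean_dsc, sd_dsc, (lo, hi) = summarize(per_case_dsc)
print(f"DSC = {mean_dsc:.3f} ± {sd_dsc:.3f} (SD), 95% CI [{lo:.3f}, {hi:.3f}]")
```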

[P3.5] Inadequate interpretation of metric values.

Interpreting metric scores and aggregates is an important step in the analysis of algorithm performances. However, several pitfalls can arise during interpretation. In rankings, for example, minor differences in metric scores may not be relevant from an application perspective but may still yield better ranks (Fig. SN 2.36). Furthermore, some metrics do not have upper or lower bounds, or the theoretical bounds may not be achievable in practice, rendering interpretation difficult (Fig. SN 2.35). More information on interpretation-related pitfalls can be found in Suppl. Note 2.3.5.

The first illustrated common access point to metric definitions and pitfalls

To underline the importance of a common access point to metric pitfalls, we searched Google Scholar and Google for individual metric-related pitfalls, with the purpose of determining how many of the pitfalls identified through our work could be located in existing resources. We were able to locate only a portion of the pitfalls identified by our approach in existing research literature (68%) or in online resources such as blog posts (11%; 8% were found in both). Only 27% of the located pitfalls were presented visually.

Our work now provides this key resource in a highly structured and easily understandable form. Suppl. Note 2 contains a dedicated illustration for each of the pitfalls discussed, thus facilitating reader comprehension and making the information accessible to everyone regardless of their level of expertise. A further core contribution of our work is the set of metric profiles presented in Suppl. Note 3, which summarize, for each metric, the most important information deemed of particular relevance by the Metrics Reloaded consortium of the sister work to this publication [22]. The profiles provide the reader with a compact, at-a-glance overview of each metric and an enumeration of the limitations and pitfalls identified in the Delphi process conducted for this work.

DISCUSSION

Flaws in the validation of biomedical image analysis algorithms significantly impede the translation of methods into (clinical) practice and undermine the assessment of scientific progress in the field [19]. They are frequently caused by poor choices due to disregarding the specific properties and limitations of individual validation metrics. The present work represents the first comprehensive collection of pitfalls and limitations to be considered when using validation metrics in image-level classification, semantic segmentation, instance segmentation, and object detection tasks. Our work enables researchers to gain a deep understanding of and familiarity with both the overall topic and individual metrics by providing a common access point to previously largely scattered and inaccessible information, key knowledge they can draw on when validating image analysis algorithms. In this way, our work aims to disrupt the current common practice of choosing metrics based on their popularity rather than their suitability to the underlying research problem. This practice, which often manifests itself in the uncritical and inadequate use of the DSC, for instance, is concerningly prevalent even among prestigious, high-quality biomedical image analysis competitions (challenges) [8, 11, 15, 17, 18, 21, 23, 35]. The educational aspect of our work is complemented by dedicated ‘metric profiles’ which detail the definitions and properties of all metrics discussed. Notably, our work pioneers the examination of artificial intelligence (AI) validation pitfalls in the biomedical domain, a domain in which they are arguably more critical than in many others, as flaws in biomedical algorithm validation can directly affect patient wellbeing and safety.

We posited that shortcomings in current common practice are linked to the low accessibility of information on the pitfalls and limitations of commonly used validation metrics. A literature search conducted from the point of view of a researcher seeking information on individual metrics confirmed both that the number of search results far exceeds what could be reviewed with reasonable time and effort and that a common point of entry to reliable metric information is lacking. Even when the specific pitfalls and related keywords uncovered by our consortium were known, only a fraction of those pitfalls could be found in existing literature, indicating the novelty and added value of our work.

For transparency, several constraints regarding our literature search must be noted. First, the remarkably high numbers of search results inevitably include duplicate papers (e.g., the same work published as a conference paper and on arXiv) as well as results that are out of scope (e.g., [3], [9]). In the cited examples, this is because a metric acronym (AUC) also denotes another entity (a trinucleotide) in a different domain, or because the word “sensitivity” is used in its common, non-metric meaning. Moreover, common words used to describe pitfalls, such as “problem” or “issue”, are by nature present in many publications discussing any kind of research, rendering them unusable for a dedicated search; this could, in turn, account for missing publications that do discuss pitfalls in these terms. Similarly, when searching for specific pitfalls, many of the returned results containing the appropriate keywords did not actually refer to metrics or algorithm validation but to other parts of a model or biomedical problem (e.g., the need for stratification is commonly discussed with regard to the design of clinical studies but not with regard to their validation). Character limits in the Google Scholar search bar further complicate or prevent the use of comprehensive search strings. Finally, our literature search quite probably did not retrieve all publications or non-peer-reviewed online resources that mention a particular pitfall, since even extensive search strings might not cover the particular words used to describe a pitfall.

None of these observations, however, detracts from our hypothesis. In fact, all of the above observations reinforce our finding that, for any individual researcher, retrieving information on metrics of interest is difficult to impossible. In many cases, finding information on pitfalls only appears feasible if the specific pitfall and its related keywords are exactly known, which, of course, is not the situation most researchers realistically find themselves in. Overall accessibility of such vital information, therefore, currently leaves much to be desired.

Compiling this information through a multi-stage Delphi process allowed us to leverage distributed knowledge from experts across different biomedical imaging domains and thus ensure that the resulting illustrated collection of metric pitfalls and limitations is both comprehensive and of maximum practical relevance. Continued proximity of our work to issues occurring in practical application was achieved through sharing the first results of this process as a dynamic preprint [27] with dedicated calls for feedback, as well as crowdsourcing further suggestions on social media.

Although their severity and practical consequences might differ between applications, we found that the pitfalls generalize across different imaging modalities and application domains. By categorizing them solely according to their underlying sources, we were able to create an overarching taxonomy that goes beyond domain-specific concerns and thus enjoys broad applicability. Given the large number of identified pitfalls, our taxonomy crucially establishes structure in the topic. Moreover, by relating types of pitfalls to the respective metrics they apply to and illustrating them, it enables researchers to gain a deeper, systemic understanding of the causes of metric failure.

Our complementary Metrics Reloaded recommendation framework, which guides researchers towards the selection of appropriate validation metrics for their specific tasks and is introduced in a sister publication to this work [22], shares the same principle of domain independence. Its recommendations are based on the creation of a ‘problem fingerprint’ that abstracts from specific domain knowledge and, informed by the pitfalls discussed here, captures all properties relevant to metric selection for a specific biomedical problem. In this sister publication, we present recommendations to avoid the pitfalls presented in this work. Importantly, the finding that pitfalls generalize and can be categorized in a domain-independent manner opens up avenues for future expansion of our work to other fields of ML-based imaging, such as general computer vision (see below), thus freeing it from its major constraint of exclusively focusing on biomedical problems.

It is worth mentioning that we only examined pitfalls related to the tasks of image-level classification, semantic segmentation, instance segmentation, and object detection, as these can all be considered classification tasks at different levels (image/object/pixel) and hence share similarities in their validation. While including a wider range of biomedical problems not considered classification tasks, such as regression or registration, would have gone beyond the scope of the present work, we envision this expansion in future work. Moreover, our work focused on pitfalls related to reference-based metrics. Including pitfalls pertaining to non-reference-based metrics, such as metrics that assess speed, memory consumption, or carbon footprint, could be a future direction to take. Finally, while we aspired to be as comprehensive as possible in our compilation, we cannot exclude that there are further pitfalls to be taken into account that the consortium and the participating community have so far failed to recognize. Should this be the case, our dynamic Metrics Reloaded online platform, which is currently under development and will continuously be updated after release, will allow us to easily and transparently append missed pitfalls. This way, our work can remain a reliable point of access, reflecting the state of the art at any given moment in the future. In this context, we note that we explicitly welcome feedback and further suggestions from the readership of Nature Methods.

The expert consortium was compiled primarily to cover the required expertise from various fields, but it also comprised researchers of different countries, (academic) ages, roles, and backgrounds (details can be found in the Suppl. Methods). It mainly focused on biomedical applications. The pitfalls presented here are therefore of the highest relevance for biological and clinical use cases. Their clear generalization across different biomedical imaging domains, however, indicates broader generalizability to fields such as general computer vision. Future work could thus see a major expansion of our scope to AI validation well beyond biomedical research. Regardless of this possibility, we strongly believe that by raising awareness of metric-related pitfalls, our work will kick off a necessary scientific debate. Specifically, we see its potential to induce the scientific communities in other areas of AI research to follow suit and investigate pitfalls and common practices impairing progress in their specific domains.

In conclusion, our work presents the first comprehensive and illustrated access point to information on validation metric properties and their pitfalls. We envision it not only to improve the quality of algorithm validation in biomedical imaging and ultimately catalyze faster translation into practice, but also to raise awareness of common issues and call into question flawed AI validation practice far beyond the boundaries of the field.

Extended Data

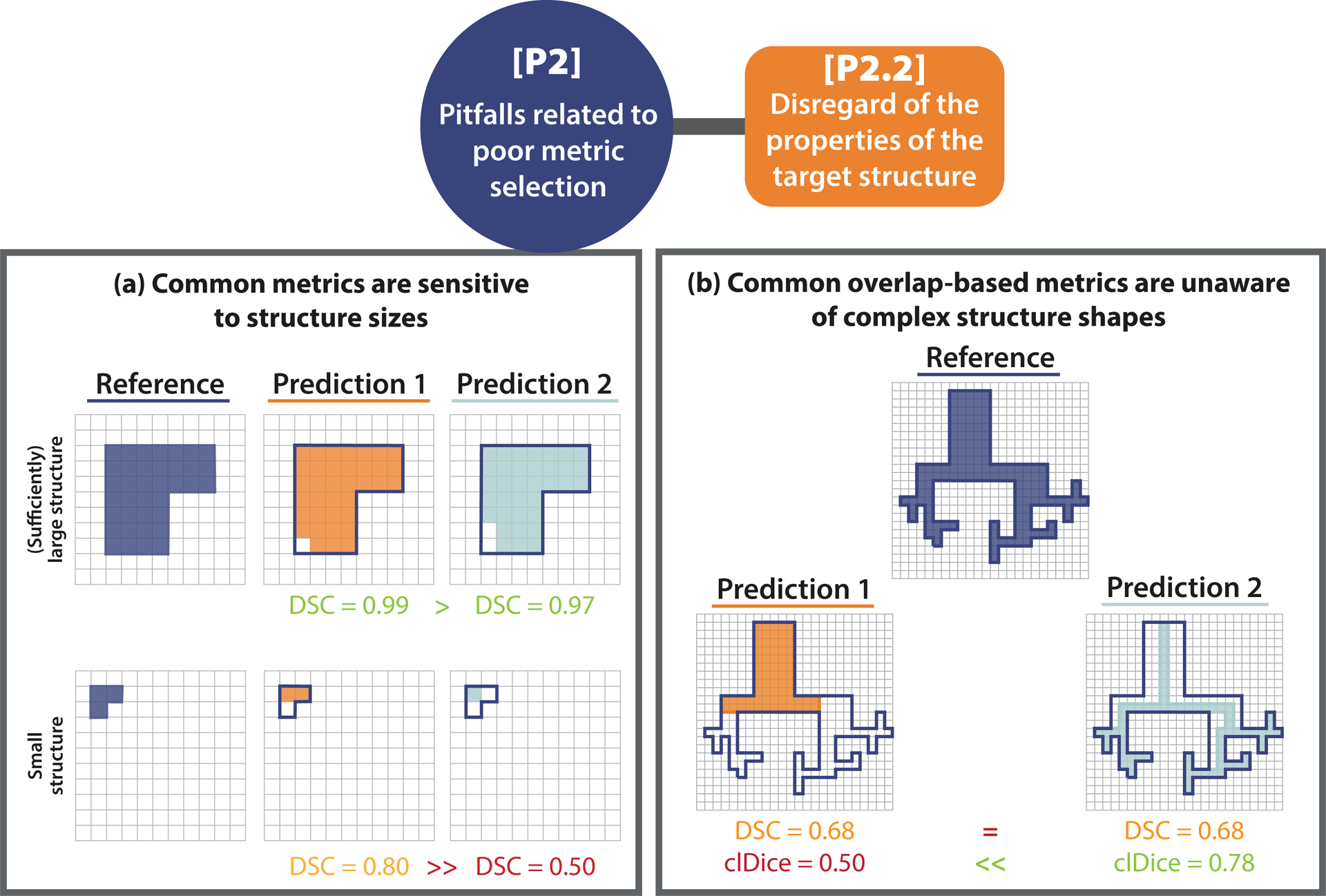

Extended Data Fig. 1. [P2.2] Disregard of the properties of the target structures.

[P2.2] Disregard of the properties of the target structures. (a) Small structure sizes. The predictions of two algorithms (Prediction 1/2) differ in only a single pixel. In the case of the small structure (bottom row), this has a substantial effect on the corresponding Dice Similarity Coefficient (DSC) metric value (similar for the Intersection over Union (IoU)). This pitfall is also relevant for other overlap-based metrics such as the centerline Dice Similarity Coefficient (clDice), as well as for localization criteria such as Box/Approx/Mask IoU and Intersection over Reference (IoR). (b) Complex structure shapes. Common overlap-based metrics (here: DSC) are unaware of complex structure shapes and treat Predictions 1 and 2 equally. The clDice uncovers the fact that Prediction 1 misses the fine-granular branches of the reference and favors Prediction 2, which focuses on the center line of the object. This pitfall is also relevant for other overlap-based metrics such as the IoU and pixel-level Fβ Score as well as for localization criteria such as Box/Approx/Mask IoU, Center Distance, Mask IoU > 0, Point inside Mask/Box/Approx, and IoR.
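The effect of structure size in panel (a) can be reproduced with a few lines of Python. In this minimal sketch, which uses hypothetical square structures rather than the exact shapes in the figure, a prediction that misses a single pixel is compared against a 2 x 2 and a 20 x 20 reference: the same one-pixel error lowers the DSC to about 0.857 for the small structure but only to about 0.999 for the large one.

```python
def dsc(pred: set, ref: set) -> float:
    """Dice Similarity Coefficient between two sets of pixel coordinates."""
    return 2 * len(pred & ref) / (len(pred) + len(ref))


def square(size: int) -> set:
    """Pixel coordinates of a size x size square anchored at the origin."""
    return {(x, y) for x in range(size) for y in range(size)}


for size in (2, 20):  # hypothetical small and large structures
    ref = square(size)
    missing_one = ref - {(0, 0)}  # prediction that misses a single pixel
    print(f"{size}x{size} structure: DSC = {dsc(missing_one, ref):.3f}")
```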

Extended Data Fig. 2. [P2.4] Disregard of the properties of the algorithm output.

[P2.4] Disregard of the properties of the algorithm output. (a) Possibility of overlapping predictions. If multiple structures of the same type can be seen within the same image (here: reference objects R1 and R2), it is generally advisable to phrase the problem as instance segmentation (InS; right) rather than semantic segmentation (SemS; left). This way, issues with boundary-based metrics resulting from comparing a given structure boundary to the boundary of the wrong instance in the reference can be avoided. In the provided example, the distance of the red boundary pixel to the reference, as measured by a boundary-based metric in SemS problems, would be zero, because different instances of the same structure cannot be distinguished. This problem is overcome by phrasing the problem as InS. In this case, (only) the boundary of the matched instance (here: R2) is considered for distance computation. (b) Possibility of empty prediction or reference. Each column represents a potential scenario for per-image validation of objects, categorized by whether True Positives (TPs), False Negatives (FNs), and False Positives (FPs) are present (n > 0) or not (n = 0) after matching/assignment. The sketches on the top showcase each scenario when setting “n > 0” to “n = 1”. For each scenario, Sensitivity, Positive Predictive Value (PPV), and the F1 Score are calculated. Some scenarios yield undefined values (Not a Number (NaN)).
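Panel (b) can be mirrored in a minimal Python sketch that enumerates all eight combinations of zero or one TP, FN, and FP and returns NaN whenever a denominator is zero. The sketch computes the F1 Score from counts as 2TP / (2TP + FN + FP); this is an assumption, and computing it instead as the harmonic mean of Sensitivity and PPV would yield NaN in additional scenarios, so the convention used should be stated when reporting.

```python
import math
from itertools import product


def safe_div(num: float, den: float) -> float:
    """Return num / den, or NaN when the ratio is undefined (den == 0)."""
    return num / den if den else math.nan


def per_image_scores(tp: int, fn: int, fp: int) -> dict:
    """Sensitivity, PPV and F1 Score from per-image counts after matching."""
    return {
        "Sensitivity": safe_div(tp, tp + fn),
        "PPV": safe_div(tp, tp + fp),
        "F1": safe_div(2 * tp, 2 * tp + fn + fp),
    }


# All scenarios with each count either absent (0) or present (set to 1).
for tp, fn, fp in product((0, 1), repeat=3):
    print(f"TP={tp} FN={fn} FP={fp}:", per_image_scores(tp, fn, fp))
```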

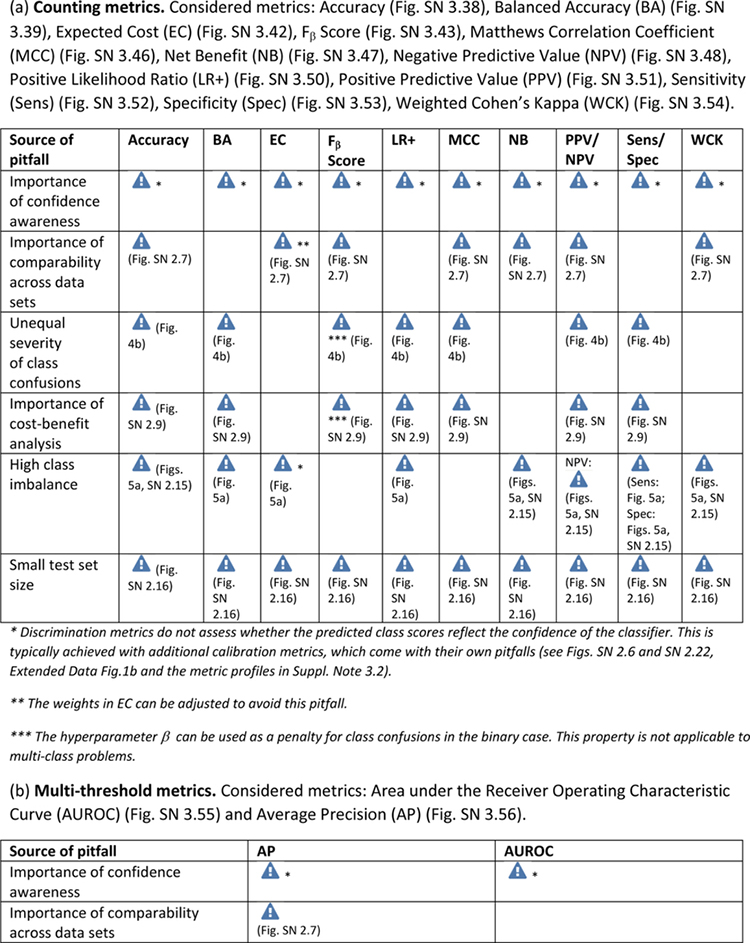

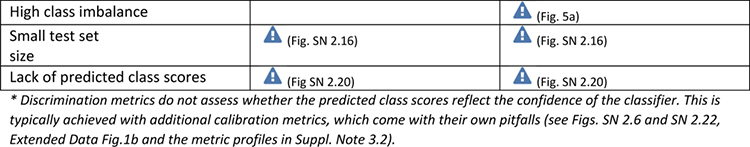

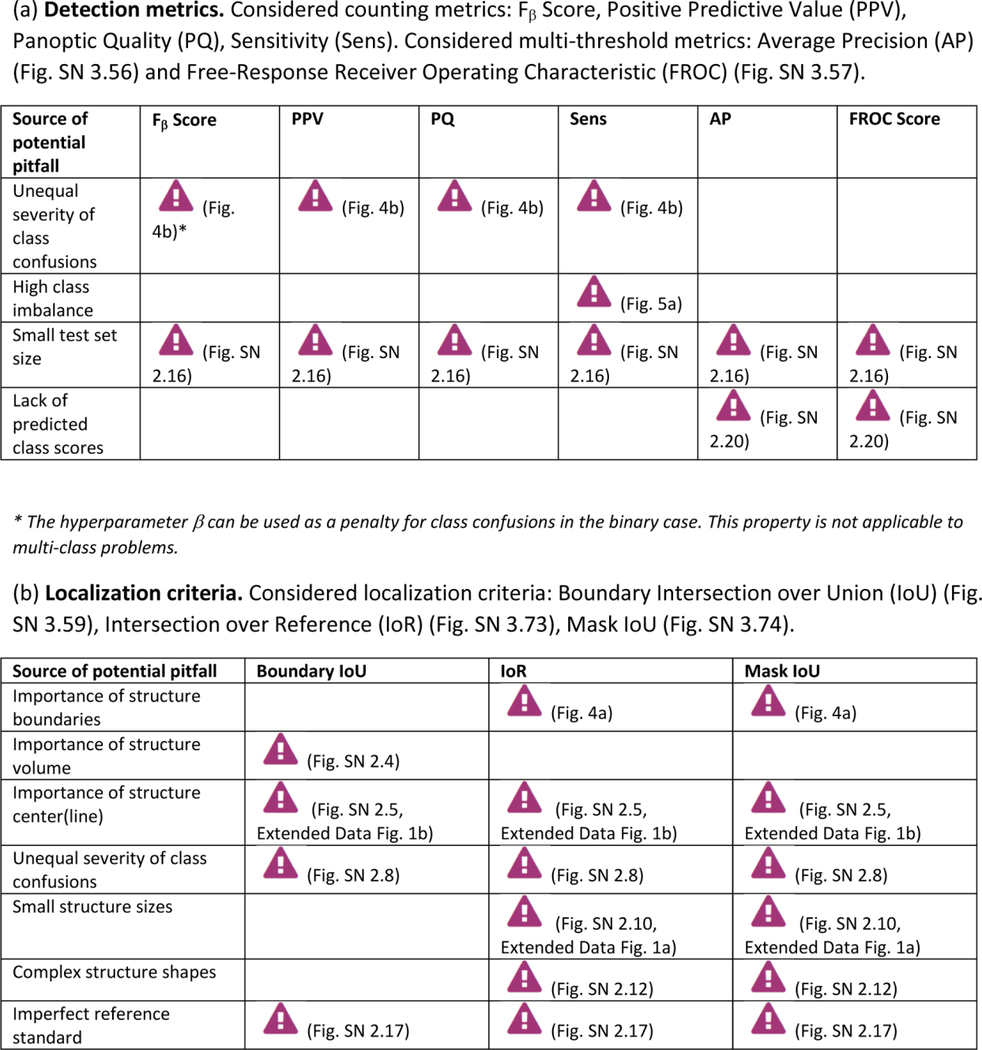

Extended Data Tab. 1. Overview of pitfall sources for image-level classification metrics.

Overview of pitfall sources for image-level classification metrics ((a): counting metrics, (b): multi-threshold metrics) related to poor metric selection [P2]. Pitfalls for semantic segmentation, object detection, and instance segmentation are provided in Extended Data Tabs. 2–5, respectively. A warning sign indicates a potential pitfall for the metric in the corresponding column in case the property represented by the respective row holds true. Comprehensive illustrations of pitfalls are available in Suppl. Note 2. A comprehensive list of pitfalls is provided separately for each metric in the metrics cheat sheets (Suppl. Note 3). Note that we only list sources of pitfalls relevant to the considered metrics; other sources of pitfalls are not considered in this table.

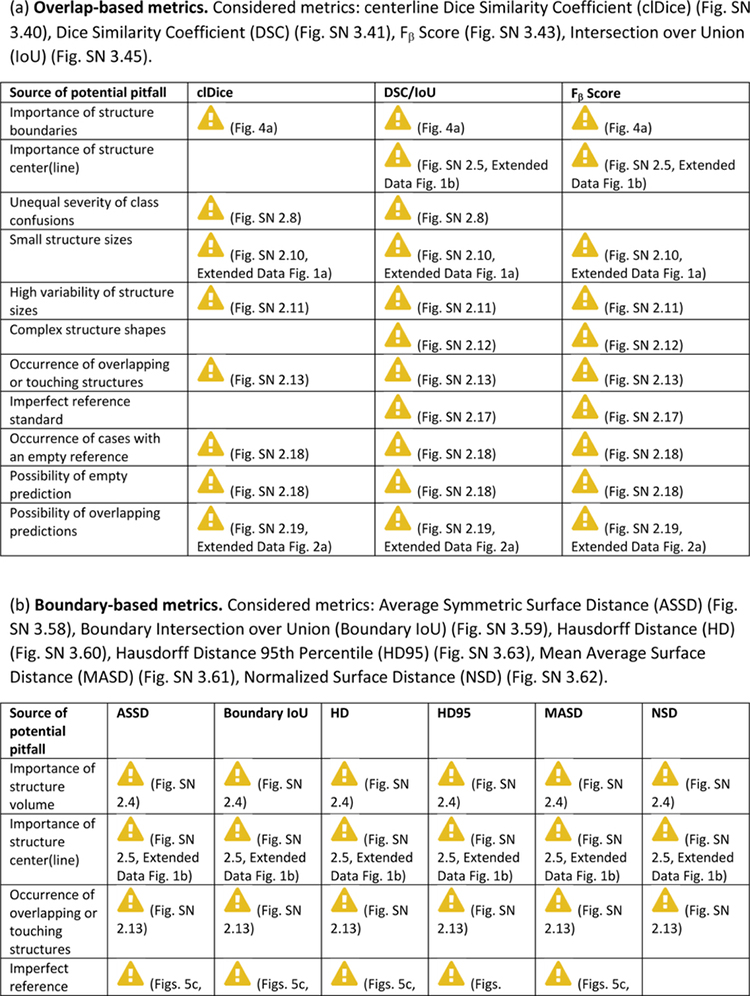

Extended Data Tab. 2. Overview of pitfall sources for semantic segmentation metrics.

Overview of pitfall sources for semantic segmentation metrics ((a): overlap-based metrics, (b): boundary-based metrics) related to poor metric selection [P2]. A warning sign indicates a potential pitfall for the metric in the corresponding column in case the property represented by the respective row holds true. Comprehensive illustrations of pitfalls are available in Suppl. Note 2. A comprehensive list of pitfalls is provided separately for each metric in the metrics cheat sheets (Suppl. Note 3). Note that we only list sources of pitfalls relevant to the considered metrics; other sources of pitfalls are not considered in this table.

Can be mitigated by the choice of the percentile

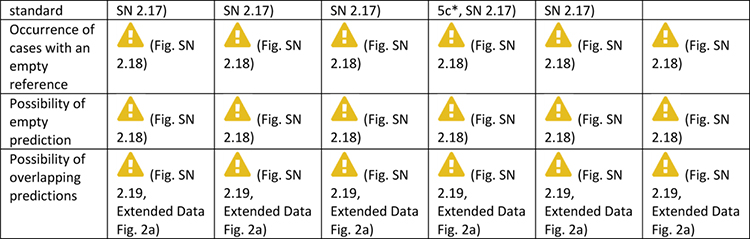

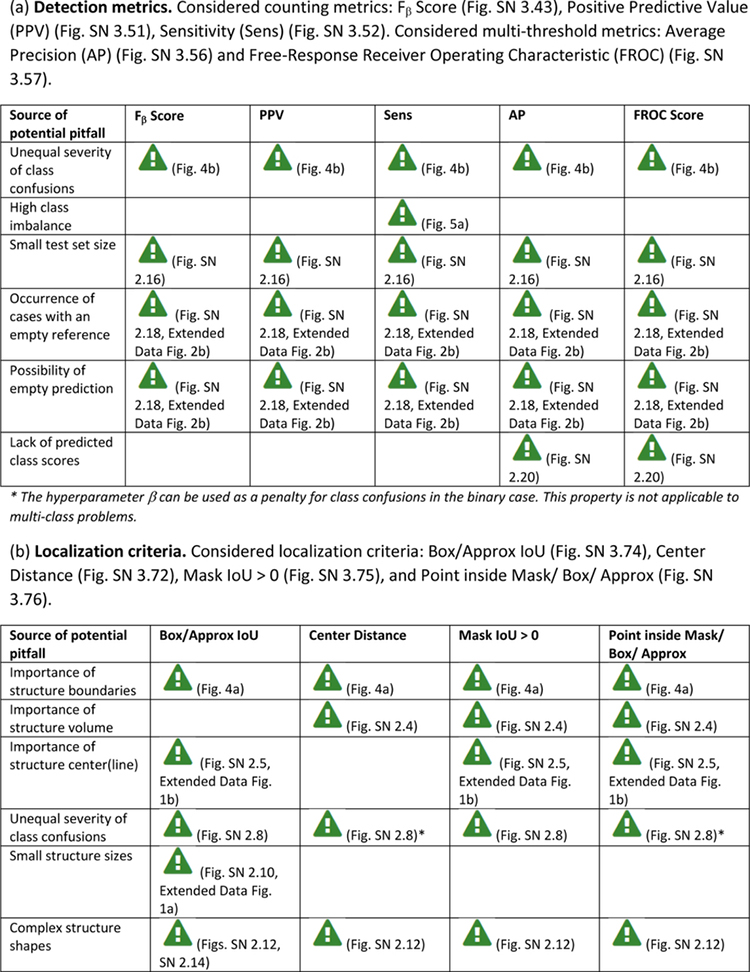

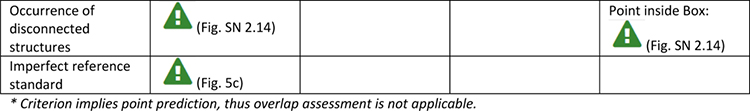

Extended Data Tab. 3. Overview of sources of pitfalls for object detection metrics.

Overview of sources of pitfalls for object detection metrics ((a): detection metrics, (b): localization criteria) related to poor metric selection [P2]. A warning sign indicates a potential pitfall for the metric in the corresponding column in case the property represented by the respective row holds true. Comprehensive illustrations of pitfalls are available in Suppl. Note 2. A comprehensive list of pitfalls is provided separately for each metric in the metrics cheat sheets (Suppl. Note 3). Note that we only list sources of pitfalls relevant to the considered metrics; other sources of pitfalls are not considered in this table.

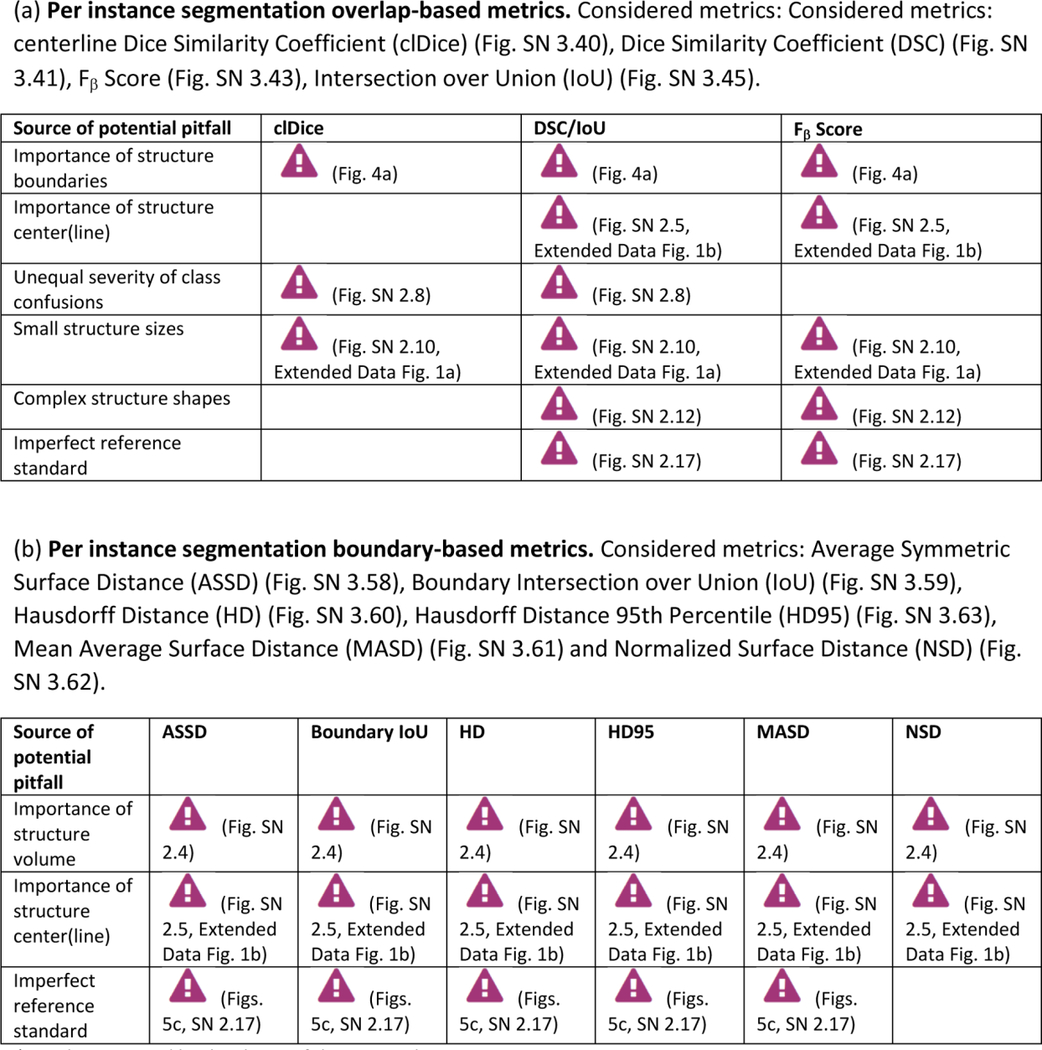

Extended Data Tab. 4. Overview of sources of pitfalls for instance segmentation metrics (Part 1).

Overview of sources of pitfalls for instance segmentation metrics, Part 1 ((a): detection metrics, (b): localization criteria) related to poor metric selection [P2]. A warning sign indicates a potential pitfall for the metric in the corresponding column in case the property represented by the respective row holds true. Comprehensive illustrations of pitfalls are available in Suppl. Note 2. A comprehensive list of pitfalls is provided separately for each metric in the metrics cheat sheets (Suppl. Note 3). Note that we only list sources of pitfalls relevant to the considered metrics; other sources of pitfalls are not considered in this table.

Extended Data Tab. 5. Overview of sources of pitfalls for instance segmentation metrics (Part 2).

Overview of sources of pitfalls for instance segmentation metrics, Part 2 ((a): per-instance segmentation overlap-based metrics, (b): per-instance segmentation boundary-based metrics) related to poor metric selection [P2]. A warning sign indicates a potential pitfall for the metric in the corresponding column in case the property represented by the respective row holds true. Comprehensive illustrations of pitfalls are available in Suppl. Note 2. Note that we only list sources of pitfalls relevant to the considered metrics; other sources of pitfalls are not considered in this table.

Can be mitigated by the choice of the percentile

Supplementary Material

Figure 3:

[P1] Pitfalls related to the inadequate choice of the problem category. Wrong choice of problem category. Effect of using segmentation metrics for object detection problems. The pixel-level Dice Similarity Coefficient (DSC) of a prediction recognizing every structure (Prediction 2) is lower than that of a prediction that only recognizes one of the three structures (Prediction 1).
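The effect can be reproduced in a minimal Python sketch with one large (10 x 10 pixel) and two small (2 x 2 pixel) hypothetical rectangular structures. Prediction 1 segments only the large structure perfectly; Prediction 2 finds all three, but its large object is shifted by two pixels. The pixel-level DSC prefers Prediction 1 (about 0.962 versus 0.815), whereas an object-level F1 Score prefers Prediction 2 (1.00 versus 0.50). The matching rule (greedy one-to-one matching at IoU > 0.5) and the helper `detection_f1` are illustrative assumptions, not a prescribed matching strategy.

```python
def rect(x0: int, y0: int, w: int, h: int) -> set:
    """Pixel coordinates of a w x h rectangle with top-left corner (x0, y0)."""
    return {(x, y) for x in range(x0, x0 + w) for y in range(y0, y0 + h)}


def dsc(a: set, b: set) -> float:
    """Pixel-level Dice Similarity Coefficient."""
    return 2 * len(a & b) / (len(a) + len(b))


def iou(a: set, b: set) -> float:
    """Intersection over Union of two pixel sets."""
    return len(a & b) / len(a | b)


def detection_f1(preds, refs, thr=0.5) -> float:
    """Hypothetical object-level F1 with greedy one-to-one matching at IoU > thr."""
    unmatched = list(preds)
    tp = 0
    for r in refs:
        match = next((p for p in unmatched if iou(p, r) > thr), None)
        if match is not None:
            unmatched.remove(match)  # each prediction may be matched only once
            tp += 1
    fn, fp = len(refs) - tp, len(unmatched)
    return 2 * tp / (2 * tp + fn + fp)


# Hypothetical reference: one large and two small structures.
refs = [rect(0, 0, 10, 10), rect(20, 0, 2, 2), rect(30, 0, 2, 2)]
pred1 = [refs[0]]                               # only the large structure
pred2 = [rect(2, 0, 10, 10), refs[1], refs[2]]  # all three, large one shifted

for name, preds in [("Prediction 1", pred1), ("Prediction 2", pred2)]:
    pixel_dsc = dsc(set().union(*preds), set().union(*refs))
    print(f"{name}: pixel DSC = {pixel_dsc:.3f}, "
          f"detection F1 = {detection_f1(preds, refs):.2f}")
```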

ACKNOWLEDGEMENTS

This work was initiated by the Helmholtz Association of German Research Centers in the scope of the Helmholtz Imaging Incubator (HI), the MICCAI Special Interest Group for biomedical image analysis challenges, and the benchmarking working group of the MONAI initiative. It has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No. [101002198], NEURAL SPICING) and the Surgical Oncology Program of the National Center for Tumor Diseases (NCT) Heidelberg. It was further supported in part by the Intramural Research Program of the National Institutes of Health Clinical Center as well as by the National Cancer Institute (NCI) and the National Institute of Neurological Disorders and Stroke (NINDS) of the National Institutes of Health (NIH), under award numbers NCI:U01CA242871 and NINDS:R01NS042645. The content of this publication is solely the responsibility of the authors and does not represent the official views of the NIH. T.A. acknowledges the Canadian Institute for Advanced Research (CIFAR) AI Chairs program and the Natural Sciences and Engineering Research Council of Canada. F.B. was co-funded by the European Union (ERC, TAIPO, 101088594). Views and opinions expressed are however those of the authors only and do not necessarily reflect those of the European Union or the European Research Council. Neither the European Union nor the granting authority can be held responsible for them. M.J.C. acknowledges funding from Wellcome/EPSRC Centre for Medical Engineering (WT203148/Z/16/Z), the Wellcome Trust (WT213038/Z/18/Z), and the InnovateUK-funded London AI Centre for Value-Based Healthcare. J.C. is supported by the Federal Ministry of Education and Research (BMBF) under the funding reference 161L0272. V.C. acknowledges funding from NovoNordisk Foundation (NNF21OC0068816) and Independent Research Council Denmark (1134-00017B). B.A.C. was supported by NIH grant P41 GM135019 and grant 2020-225720 from the Chan Zuckerberg Initiative DAF, an advised fund of the Silicon Valley Community Foundation. G.S.C. was supported by Cancer Research UK (programme grant: C49297/A27294). M.M.H. is supported by the Natural Sciences and Engineering Research Council of Canada (RGPIN-2022-05134). A.Ka. is supported by French State Funds managed by the “Agence Nationale de la Recherche (ANR)” - “Investissements d’Avenir” (Investments for the Future), Grant ANR-10-IAHU-02 (IHU Strasbourg). M.K. was funded by the Ministry of Education, Youth and Sports of the Czech Republic (Project LM2018129). Ta.K. was supported in part by 4UH3-CA225021-03, 1U24CA180924-01A1, 3U24CA215109-02, and 1UG3-CA225-021-01 grants from the National Institutes of Health. G.L. receives research funding from the Dutch Research Council, the Dutch Cancer Association, HealthHolland, the European Research Council, the European Union, and the Innovative Medicine Initiative. S.M.R. wishes to acknowledge the Allen Institute for Cell Science founder Paul G. Allen for his vision, encouragement and support. M.R. is supported by Innosuisse grant number 31274.1 and Swiss National Science Foundation Grant Number 205320_212939. C.H.S. is supported by an Alzheimer’s Society Junior Fellowship (AS-JF-17-011). R.M.S. is supported by the Intramural Research Program of the NIH Clinical Center. A.T. acknowledges support from Academy of Finland (Profi6 336449 funding program), University of Oulu strategic funding, Finnish Foundation for Cardiovascular Research, Wellbeing Services County of North Ostrobothnia (VTR project K62716), and Terttu foundation. S.A.T. acknowledges the support of Canon Medical and the Royal Academy of Engineering and the Research Chairs and Senior Research Fellowships scheme (grant RCSRF1819\8\25).

We would like to thank Peter Bankhead, Gary S. Collins, Robert Haase, Fred Hamprecht, Alan Karthikesalingam, Hannes Kenngott, Peter Mattson, David Moher, Bram Stieltjes, and Manuel Wiesenfarth for the fruitful discussions on this work.

We would like to thank Sandy Engelhardt, Sven Koehler, M. Alican Noyan, Gorkem Polat, Hassan Rivaz, Julian Schroeter, Anindo Saha, Lalith Sharan, Peter Hirsch, and Matheus Viana for suggesting additional illustrations that can be found in [27].

COMPETING INTERESTS

The authors declare the following competing interests: F.B. is an employee of Siemens AG (Munich, Germany). B.v.G. is a shareholder of Thirona (Nijmegen, NL). B.G. is an employee of HeartFlow Inc (California, USA) and Kheiron Medical Technologies Ltd (London, UK). M.M.H. received an Nvidia GPU Grant. Th. K. is an employee of Lunit (Seoul, South Korea). G.L. is on the advisory board of Canon Healthcare IT (Minnetonka, USA) and is a shareholder of Aiosyn BV (Nijmegen, NL). Na.R. is the founder and CSO of Histofy (New York, USA). Ni.R. is an employee of Nvidia GmbH (Munich, Germany). J.S.-R. reports funding from GSK (Heidelberg, Germany), Pfizer (New York, USA) and Sanofi (Paris, France) and fees from Travere Therapeutics (California, USA), Stadapharm (Bad Vilbel, Germany), Astex Therapeutics (Cambridge, UK), Pfizer (New York, USA), and Grunenthal (Aachen, Germany). R.M.S. receives patent royalties from iCAD (New Hampshire, USA), ScanMed (Nebraska, USA), Philips (Amsterdam, NL), Translation Holdings (Alabama, USA) and PingAn (Shenzhen, China); his lab received research support from PingAn through a Cooperative Research and Development Agreement. S.A.T. receives financial support from Canon Medical Research Europe (Edinburgh, Scotland). The remaining authors declare no competing interests.

Footnotes

CODE AVAILABILITY STATEMENT

We provide reference implementations for all Metrics Reloaded metrics within the MONAI open-source framework. They are accessible at https://github.com/Project-MONAI/MetricsReloaded.

DATA AVAILABILITY STATEMENT

No data was used in this study.

REFERENCES

- [1] Bilic Patrick, Christ Patrick, Li Hongwei Bran, Vorontsov Eugene, Ben-Cohen Avi, Kaissis Georgios, Szeskin Adi, Jacobs Colin, Mamani Gabriel Efrain Humpire, Chartrand Gabriel, et al. The liver tumor segmentation benchmark (LiTS). Medical Image Analysis, 84:102680, 2023.

- [2] Brown Bernice B. Delphi process: a methodology used for the elicitation of opinions of experts. Technical report, Rand Corp Santa Monica CA, 1968.

- [3] Carbonell Alberto, De la Pena Marcos, Flores Ricardo, and Gago Selma. Effects of the trinucleotide preceding the self-cleavage site on eggplant latent viroid hammerheads: differences in co- and post-transcriptional self-cleavage may explain the lack of trinucleotide AUC in most natural hammerheads. Nucleic Acids Research, 34(19):5613–5622, 2006.

- [4] Chen Jianxu, Ding Liya, Viana Matheus P, Lee HyeonWoo, Sluezwski M Filip, Morris Benjamin, Hendershott Melissa C, Yang Ruian, Mueller Irina A, and Rafelski Susanne M. The Allen Cell and Structure Segmenter: a new open source toolkit for segmenting 3D intracellular structures in fluorescence microscopy images. bioRxiv, page 491035, 2020.

- [5] Chicco Davide and Jurman Giuseppe. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genomics, 21(1):1–13, 2020.

- [6] Chicco Davide, Tötsch Niklas, and Jurman Giuseppe. The Matthews correlation coefficient (MCC) is more reliable than balanced accuracy, bookmaker informedness, and markedness in two-class confusion matrix evaluation. BioData Mining, 14(1):1–22, 2021. The manuscript addresses the challenge of evaluating binary classifications. It compares MCC to other metrics, explaining their mathematical relationships and providing use cases where MCC offers more informative results.

- [7] Cordts Marius, Omran Mohamed, Ramos Sebastian, Scharwächter Timo, Enzweiler Markus, Benenson Rodrigo, Franke Uwe, Roth Stefan, and Schiele Bernt. The Cityscapes dataset. In CVPR Workshop on The Future of Datasets in Vision, 2015.

- [8] Correia Paulo and Pereira Fernando. Video object relevance metrics for overall segmentation quality evaluation. EURASIP Journal on Advances in Signal Processing, 2006:1–11, 2006.

- [9] Di Sabatino Antonio and Corazza Gino Roberto. Nonceliac gluten sensitivity: sense or sensibility?, 2012.

- [10] Everingham Mark, Van Gool Luc, Williams Christopher KI, Winn John, and Zisserman Andrew. The PASCAL Visual Object Classes (VOC) challenge. International Journal of Computer Vision, 88(2):303–338, 2010.

- [11] Gooding Mark J, Smith Annamarie J, Tariq Maira, Aljabar Paul, Peressutti Devis, van der Stoep Judith, Reymen Bart, Emans Daisy, Hattu Djoya, van Loon Judith, et al. Comparative evaluation of autocontouring in clinical practice: a practical method using the Turing test. Medical Physics, 45(11):5105–5115, 2018.

- [12] Gooding Mark J, Boukerroui Djamal, Osorio Eliana Vasquez, Monshouwer René, and Brunenberg Ellen. Multicenter comparison of measures for quantitative evaluation of contouring in radiotherapy. Physics and Imaging in Radiation Oncology, 24:152–158, 2022.

- [13] Grandini Margherita, Bagli Enrico, and Visani Giorgio. Metrics for multi-class classification: an overview. arXiv preprint arXiv:2008.05756, 2020.

- [14] Gruber Sebastian and Buettner Florian. Trustworthy deep learning via proper calibration errors: A unifying approach for quantifying the reliability of predictive uncertainty. arXiv preprint arXiv:2203.07835, 2022.

- [15] Honauer Katrin, Maier-Hein Lena, and Kondermann Daniel. The HCI stereo metrics: Geometry-aware performance analysis of stereo algorithms. In Proceedings of the IEEE International Conference on Computer Vision, pages 2120–2128, 2015.

- [16] Kaggle. Sartorius Cell Instance Segmentation 2021. https://www.kaggle.com/c/sartorius-cell-instance-segmentation, 2021. [Online; accessed 25-April-2022].

- [17] Kofler Florian, Ezhov Ivan, Isensee Fabian, Berger Christoph, Korner Maximilian, Paetzold Johannes, Li Hongwei, Shit Suprosanna, McKinley Richard, Bakas Spyridon, et al. Are we using appropriate segmentation metrics? Identifying correlates of human expert perception for CNN training beyond rolling the DICE coefficient. arXiv preprint arXiv:2103.06205v1, 2021.

- [18] Konukoglu Ender, Glocker Ben, Ye Dong Hye, Criminisi Antonio, and Pohl Kilian M. Discriminative segmentation-based evaluation through shape dissimilarity. IEEE Transactions on Medical Imaging, 31(12):2278–2289, 2012.

- [19] Lennerz Jochen K, Green Ursula, Williamson Drew FK, and Mahmood Faisal. A unifying force for the realization of medical AI. npj Digital Medicine, 5(1):1–3, 2022.

- [20] Lin Tsung-Yi, Maire Michael, Belongie Serge, Hays James, Perona Pietro, Ramanan Deva, Dollár Piotr, and Zitnick C Lawrence. Microsoft COCO: Common objects in context. In European Conference on Computer Vision, pages 740–755. Springer, 2014.

- [21] Maier-Hein Lena, Eisenmann Matthias, Reinke Annika, Onogur Sinan, Stankovic Marko, Scholz Patrick, Arbel Tal, Bogunovic Hrvoje, Bradley Andrew P, Carass Aaron, et al. Why rankings of biomedical image analysis competitions should be interpreted with care. Nature Communications, 9(1):1–13, 2018. With this comprehensive analysis of biomedical image analysis competitions (challenges), the authors initiated a shift in how such challenges are designed, performed, and reported in the biomedical domain. Its concepts and guidelines have been adopted by reputed organizations such as MICCAI.

- [22] Maier-Hein Lena, Reinke Annika, Christodoulou Evangelia, Glocker Ben, Godau Patrick, Isensee Fabian, Kleesiek Jens, Kozubek Michal, Reyes Mauricio, Riegler Michael A, et al. Metrics reloaded: Pitfalls and recommendations for image analysis validation. arXiv preprint arXiv:2206.01653, 2022.

- [23] Margolin Ran, Zelnik-Manor Lihi, and Tal Ayellet. How to evaluate foreground maps? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 248–255, 2014.

- [24] Muschelli John. ROC and AUC with a binary predictor: a potentially misleading metric. Journal of Classification, 37(3):696–708, 2020.

- [25] Nasa Prashant, Jain Ravi, and Juneja Deven. Delphi methodology in healthcare research: how to decide its appropriateness. World Journal of Methodology, 11(4):116, 2021.

- [26] Ounkomol Chawin, Seshamani Sharmishtaa, Maleckar Mary M, Collman Forrest, and Johnson Gregory R. Label-free prediction of three-dimensional fluorescence images from transmitted-light microscopy. Nature Methods, 15(11):917–920, 2018.

- [27] Reinke Annika, Eisenmann Matthias, Tizabi Minu D, Sudre Carole H, Rädsch Tim, Antonelli Michela, Arbel Tal, Bakas Spyridon, Cardoso M Jorge, Cheplygina Veronika, Farahani Keyvan, Glocker Ben, Heckmann-Nötzel Doreen, Isensee Fabian, Jannin Pierre, Kahn Charles, Kleesiek Jens, Kurc Tahsin, Kozubek Michal, Landman Bennett A, Litjens Geert, Maier-Hein Klaus, Martel Anne L, Müller Henning, Petersen Jens, Reyes Mauricio, Rieke Nicola, Stieltjes Bram, Summers Ronald M, Tsaftaris Sotirios A, van Ginneken Bram, Kopp-Schneider Annette, Jäger Paul, and Maier-Hein Lena. Common limitations of image processing metrics: A picture story. arXiv preprint arXiv:2104.05642, 2021.

- [28].Reinke Annika, Eisenmann Matthias, Tizabi Minu D, Sudre Carole H, Rädsch Tim, Antonelli Michela, Arbel Tal, Bakas Spyridon, Cardoso M Jorge, Cheplygina Veronika, et al. Common limitations of image processing metrics: A picture story. arXiv preprint arXiv:2104.05642, 2021.

- [29].Roberts Brock, Haupt Amanda, Tucker Andrew, Grancharova Tanya, Arakaki Joy, Fuqua Margaret A, Nelson Angelique, Hookway Caroline, Ludmann Susan A, Mueller Irina A, et al. Systematic gene tagging using CRISPR/Cas9 in human stem cells to illuminate cell organization. Molecular Biology of the Cell, 28(21):2854–2874, 2017.

- [30].Schmidt Uwe, Weigert Martin, Broaddus Coleman, and Myers Gene. Cell detection with star-convex polygons. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 265–273. Springer, 2018.

- [31].Stringer Carsen, Wang Tim, Michaelos Michalis, and Pachitariu Marius. Cellpose: a generalist algorithm for cellular segmentation. Nature Methods, 18(1):100–106, 2021.

- [32].Taha Abdel Aziz and Hanbury Allan. Metrics for evaluating 3D medical image segmentation: analysis, selection, and tool. BMC Medical Imaging, 15(1):1–28, 2015. The paper discusses the importance of effective metrics for evaluating the accuracy of 3D medical image segmentation algorithms. The authors analyze existing metrics, propose a selection methodology, and develop a tool to aid researchers in choosing appropriate evaluation metrics based on the specific characteristics of the segmentation task.

- [33].Taha Abdel Aziz, Hanbury Allan, and Jimenez del Toro Oscar A. A formal method for selecting evaluation metrics for image segmentation. In 2014 IEEE International Conference on Image Processing (ICIP), pages 932–936. IEEE, 2014.

- [34].Tran Thuy Nuong, Adler Tim, Yamlahi Amine, Christodoulou Evangelia, Godau Patrick, Reinke Annika, Tizabi Minu Dietlinde, Sauer Peter, Persicke Tillmann, Albert Jörg Gerhard, et al. Sources of performance variability in deep learning-based polyp detection. arXiv preprint arXiv:2211.09708, 2022.

- [35].Vaassen Femke, Hazelaar Colien, Vaniqui Ana, Gooding Mark, van der Heyden Brent, Canters Richard, and van Elmpt Wouter. Evaluation of measures for assessing time-saving of automatic organ-at-risk segmentation in radiotherapy. Physics and Imaging in Radiation Oncology, 13:1–6, 2020.

- [36].Viana Matheus P, Chen Jianxu, Knijnenburg Theo A, Vasan Ritvik, Yan Calysta, Arakaki Joy E, Bailey Matte, Berry Ben, Borensztejn Antoine, Brown Eva M, et al. Integrated intracellular organization and its variations in human iPS cells. Nature, pages 1–10, 2023.

- [37].Wiesenfarth Manuel, Reinke Annika, Landman Bennett A, Eisenmann Matthias, Saiz Laura Aguilera, Cardoso M Jorge, Maier-Hein Lena, and Kopp-Schneider Annette. Methods and open-source toolkit for analyzing and visualizing challenge results. Scientific Reports, 11(1):1–15, 2021.

- [38].Yeghiazaryan Varduhi and Voiculescu Irina D. Family of boundary overlap metrics for the evaluation of medical image segmentation. Journal of Medical Imaging, 5(1):015006, 2018.

- [39].Hirling Dominik, Tasnadi Ervin, Caicedo Juan, Caroprese Maria V, Sjögren Rickard, Aubreville Marc, Koos Krisztian, and Horvath Peter. Segmentation metric misinterpretations in bioimage analysis. Nature Methods, pages 1–4, 2023.

Data Availability Statement

No data was used in this study.