Randomised controlled trials are widely accepted as the most reliable method of determining effectiveness, but most trials have evaluated the effects of a single intervention such as a drug. Recognition is increasing that other, non-pharmacological interventions should also be rigorously evaluated.1–3 This paper examines the design and execution of research required to address the additional problems resulting from evaluation of complex interventions—that is, those “made up of various interconnecting parts.”4 The issues dealt with are discussed in a longer Medical Research Council paper (www.mrc.ac.uk/complex_packages.html). We focus on randomised trials but believe that this approach could be adapted to other designs when they are more appropriate.

Summary points

Complex interventions are those that include several components

The evaluation of complex interventions is difficult because of problems of developing, identifying, documenting, and reproducing the intervention

A phased approach to the development and evaluation of complex interventions is proposed to help researchers define clearly where they are in the research process

Evaluation of complex interventions requires use of qualitative and quantitative evidence

Challenges of trials of complex interventions

There are specific difficulties in defining, developing, documenting, and reproducing complex interventions that are subject to more variation than a drug. A typical example would be the design of a trial to evaluate the benefits of specialist stroke units. Such a trial would have to consider the expertise of various health professionals as well as investigations, drugs, treatment guidelines, and arrangements for discharge and follow up. Stroke units may also vary in terms of organisation, management, and skill mix. The active components of the stroke unit may be difficult to specify, making it difficult to replicate the intervention. The box gives other examples of complex interventions.

Examples of complex interventions

Service delivery and organisation:

- Stroke units
- Hospital at home

Interventions directed at health professionals' behaviour:

- Strategies for implementing guidelines
- Computerised decision support

Community interventions:

- Community based programmes to prevent heart disease
- Community development approaches to improve health

Group interventions:

- Group psychotherapies or behavioural change strategies
- School based interventions, for example to reduce smoking or teenage pregnancy

Interventions directed at individual patients:

- Cognitive behavioural therapy for depression
- Health promotion interventions to reduce alcohol consumption or support dietary change

Framework for trials of complex interventions

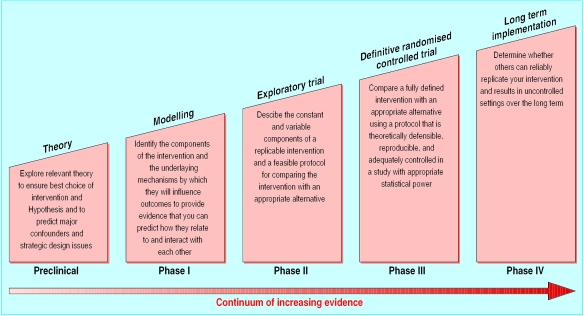

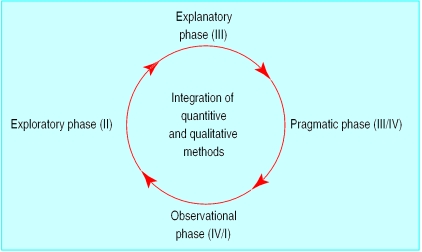

Problems often arise in the evaluation of complex interventions because researchers have not fully defined and developed the intervention. It is useful to consider the process of development and evaluation of such interventions as having several distinct phases. These can be compared with the sequential phases of drug development (fig 1) or may be seen as more iterative (fig 2). Either way a phased approach separates the different questions being asked.

Figure 1.

Sequential phases of developing randomised controlled trials of complex interventions

Figure 2.

Iterative view of development of randomised controlled trials of complex interventions

Progression from one phase to another may not be linear. In many cases an iterative process occurs—for example, if an exploratory trial finds that a complex intervention is unacceptable to potential recipients, the theoretical basis and components of the intervention may have to be re-examined. Preliminary work is often essential to establish the probable active components of the intervention so that they can be delivered effectively during the trial. Identifying which stage of development has been reached in specifying the intervention and outcome measures will give researchers and funding bodies reasonable confidence that an appropriately designed and relevant study is being proposed.

Preclinical or theoretical phase

The first step is to identify the evidence that the intervention might have the desired effect. This may come from disciplines outside the health sciences (such as theories of organisational change). Review of the theoretical basis for an intervention may lead to changes in the hypothesis and improved specification of potentially active ingredients. In addition, previous studies may have provided some empirical evidence—for example, an intervention may have been found effective for a closely related condition or in another country with a different organisation of health care.5

Phase I: defining components of the intervention

Modelling or simulation techniques can improve understanding of the components of an intervention and their interrelationships. Qualitative testing through focus groups, preliminary surveys, or case studies can also help define relevant components. Descriptive studies may help to delineate variants of a service. For example, hospital at home schemes vary in purpose. Some are designed to hasten hospital discharge, others to avoid hospital admissions, and yet others to provide palliative care in the home.6

Qualitative research can also be used to show how the intervention works and to find potential barriers to change in trials that seek to alter patient or professional behaviour.7 For example, if health professionals see the main barrier to changing their practice as being lack of time or resources, an intervention that focuses only on improving their knowledge will not work.

Phase II: defining trial and intervention design

Acceptability and feasibility

In phase II the information gathered in phase I is used to develop the optimum intervention and study design. This often involves testing the feasibility of delivering the intervention and acceptability to providers and patients. Different versions of the intervention may need to be tested or the intervention may have to be adapted to achieve optimal effectiveness—for example, if the proposed intensity and duration of the intervention are found to be unacceptable to participants.

It is also important to test for evidence of a learning curve, leading to improved performance of the intervention over time. If a learning curve exists a run-in period might be needed before formal recruitment to the trial to ensure that the intervention is provided effectively.

The exploratory trial is also an opportunity to determine the consistency with which the intervention is delivered. Consultations could be audio or video taped so that providers receive feedback on their performance, together with training to promote consistency.

Defining the control intervention

The content of the comparative arm (control group) of the main trial will be decided during the preparatory phase. It may be an alternative package of care, standard care, or placebo. Although standard practice is often an appropriate control, it can be as complex as the intervention being evaluated and may change with time. It is thus important to monitor the care that is being delivered to the control group. The use of a no treatment control group may be unacceptable to patients. One possible solution is a randomised waiting list study in which all participants ultimately receive the intervention.

Designing the main trial

The exploratory phase should ideally be randomised to allow assessment of the size of the effect. This initial assessment will provide a sound basis for calculating sample sizes for the main trial. Other design variables can also be established in an exploratory trial.
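As a rough illustration of how an effect size estimated in an exploratory trial could feed into a sample size calculation, the sketch below uses the standard normal approximation for comparing two means, n per arm = 2(z₁₋α/₂ + z₁₋β)²/d², where d is the standardised difference (difference in means divided by the standard deviation). The function name, the 5% two sided significance level, and the 80% power are assumptions chosen for illustration and are not taken from this paper; a real calculation would also allow for dropout and for the outcome type.

```python
import math
from statistics import NormalDist


def n_per_arm(effect_size, alpha=0.05, power=0.80):
    """Approximate participants per arm for a two-arm comparison of means.

    effect_size is the standardised mean difference (difference / SD),
    for example as estimated in an exploratory trial.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)


print(n_per_arm(0.5))  # moderate standardised effect
print(n_per_arm(0.2))  # small standardised effect
```

Because effect estimates from small exploratory trials are imprecise, it may be sensible to run such a calculation over a range of plausible effect sizes rather than a single point estimate.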

Outcomes

Outcome measures for the main trial will also generally be piloted during the exploratory phase. Investigators should include outcomes that not only are relevant to patients with the disease or condition being studied but also encompass measures of wider relevance to the health system, including economic measures.8 Collection of data to assess a full range of costs to patients, carers, and society adds considerably to the workload and costs of researchers and may challenge the feasibility of a trial. Strategic choice of outcomes is therefore needed.9

An important decision in trials of complex interventions is whether health outcomes need to be assessed. For studies such as those evaluating strategies to change professional behaviour, it may be sufficient to show that the intervention changed behaviour, provided that clear evidence exists that the changed behaviour (for example, prescribing particular treatments) is effective.

Phase III: methodological issues for main trial

The main trial will need to address the issues normally posed by randomised controlled trials, such as sample size, inclusion and exclusion criteria, and methods of randomisation, as well as the challenges of complex interventions. Individual randomisation may not always be feasible or appropriate. For example, cluster randomisation is often used for trials of interventions directed at a practice or hospital team.10,11 Randomised incomplete block designs have also been used to evaluate different approaches to promoting change in professional behaviour.12
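One practical consequence of cluster randomisation is that outcomes of patients within the same practice or team tend to be correlated, so more participants are needed than under individual randomisation. A common way to approximate this is the design effect, 1 + (m − 1)ρ, where m is the cluster size and ρ is the intracluster correlation coefficient. The sketch below assumes equal cluster sizes; the function names and the example inputs are hypothetical and are not taken from this paper.

```python
import math


def design_effect(cluster_size, icc):
    """Variance inflation from randomising clusters instead of individuals."""
    if cluster_size < 1 or not 0 <= icc <= 1:
        raise ValueError("need cluster_size >= 1 and 0 <= icc <= 1")
    return 1 + (cluster_size - 1) * icc


def clusters_per_arm(n_individual, cluster_size, icc):
    """Clusters needed per arm, given the per-arm sample size that an
    individually randomised trial would require."""
    n_inflated = n_individual * design_effect(cluster_size, icc)
    return math.ceil(n_inflated / cluster_size)


# Hypothetical inputs: 63 patients per arm under individual randomisation,
# practices of 20 patients each, intracluster correlation of 0.05.
print(design_effect(20, 0.05))
print(clusters_per_arm(63, 20, 0.05))
```

Even a small intracluster correlation can roughly double the required sample when clusters are large, which is why an estimate of ρ from earlier phases or from published studies is useful when planning the main trial.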

It is often not possible to blind the patient, practitioner, and researcher to the allocated treatment in complex intervention trials. The potential biases of unblinded trials therefore have to be taken into account. Dissimilar levels of patient commitment between intervention and control groups may cause differential dropout, making interpretation of results difficult. When patients have strong preferences, a preference trial design may be used; patients without strong preferences are randomised as usual but those with strong preferences receive their preferred treatment.13 The results of such trials can, however, be difficult to interpret.
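The allocation rule of a preference trial can be sketched in a few lines. This is a minimal illustration only: the arm names, the function name, and the use of simple (unblocked) randomisation with a seeded generator are assumptions made for the example, and a real trial would use a concealed allocation procedure run independently of the recruiting clinicians.

```python
import random

ARMS = ("intervention", "control")


def allocate(preference, rng):
    """Return (arm, how) for one patient.

    preference is None for patients without a strong preference,
    otherwise the name of the arm they prefer.
    """
    if preference is None:
        return rng.choice(ARMS), "randomised"
    if preference not in ARMS:
        raise ValueError(f"unknown arm: {preference}")
    return preference, "preference"


rng = random.Random(2000)  # fixed seed so the illustration is repeatable
patients = [None, "intervention", None, "control", None]
for pref in patients:
    print(pref, allocate(pref, rng))
```

Recording how each patient reached their arm keeps the randomised and the self-selected groups distinguishable at analysis, since only the randomised comparison is protected against selection bias.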

The findings of trials of complex interventions are more generalisable if they are performed in the setting in which they are most likely to be implemented. Eligibility criteria must not lead to the exclusion of patients—for example, on the grounds of age—who constitute a substantial portion of those to whom the intervention is likely to be offered when implemented in the health system. Poor recruitment to a trial can also raise doubts about generalisability.

Qualitative study of how the interventions are implemented in each arm of the main trial can provide further evidence of the validity of the findings.14

Phase IV: promoting effective implementation

The purpose of the final phase is to examine the implementation of the intervention into practice, paying particular attention to the rate of uptake, the stability of the intervention, any broadening of subject groups, and the possible existence of adverse effects. As in the case of drug trials, this might be carried out by long term surveillance, although currently there is no established mechanism for funding such activities.

Conclusions

Trials of complex interventions are of increasing importance because of the drive to provide the most cost effective health care. Although these trials pose substantial challenges to investigators, the use of an iterative phased approach that harnesses qualitative and quantitative methods should lead to improved study design, execution, and generalisability of results.

Acknowledgments

We thank the participants at an MRC workshop on complex interventions for their contribution. This article represents the views of the authors and does not represent the official view of the Medical Research Council.

Footnotes

Competing interests: None declared.

References

- 1. Friedli K, King MB. Psychological treatments and their evaluation. Int Rev Psychiatry. 1998;10:123–126.

- 2. Stephenson J, Imrie J. Why do we need randomised controlled trials to assess behavioural interventions? BMJ. 1998;316:611–613. doi: 10.1136/bmj.316.7131.611.

- 3. Buchwald H. Surgical procedures and devices should be evaluated in the same way as medical therapy. Controlled Clinical Trials. 1997;18:478–487. doi: 10.1016/s0197-2456(96)00114-6.

- 4. Collins English dictionary. London: Collins; 1979.

- 5. Haines A, Iliffe S. Innovations in services and the appliance of science. BMJ. 1995;310:815–816. doi: 10.1136/bmj.310.6983.815.

- 6. Shepperd S, Iliffe S. The effectiveness of hospital at home compared with in-patient hospital care: a systematic review. J Pub Health Med. 1998;20:344–350. doi: 10.1093/oxfordjournals.pubmed.a024778.

- 7. Haynes RB, Haines A. Barriers and bridges to evidence-based practice. BMJ. 1998;317:273–276. doi: 10.1136/bmj.317.7153.273.

- 8. Fitzpatrick R, Davey C, Buxton MJ, Jones DR. Evaluating patient based outcome measures for use in clinical trials. Health Technology Assessment. 1998;2(14):1–74.

- 9. Mannheim L. Health services research clinical trials: issues in the evaluation of economic cost and benefits. Controlled Clinical Trials. 1998;19:149–158. doi: 10.1016/s0197-2456(97)00146-3.

- 10. Edwards S, Braunholtz D, Stevens A, Lilford R. Ethical issues in the design and conduct of cluster RCTs. BMJ. 1999;318:1407–1409. doi: 10.1136/bmj.318.7195.1407.

- 11. Ukoumunne OC, Gulliford MC, Chinn S, Sterne JAC, Burney PGJ. Methods for evaluating area-wide and organisation-based interventions in health and health care: a systematic review. Health Technology Assessment. 1999;3(5):1–110.

- 12. Grimshaw J, Freemantle N, Wallace S, Russell I, Hurwitz B, Watt I, et al. Developing and implementing clinical practice guidelines. Qual Health Care. 1995;4:55–64. doi: 10.1136/qshc.4.1.55.

- 13. Brewin CR, Bradley C. Patient preferences and randomised controlled trials. BMJ. 1989;299:313–315. doi: 10.1136/bmj.299.6694.313.

- 14. Bradley F, Wiles R, Kinmonth A, Mant D, Gantley M. Development and evaluation of complex interventions in health services research: case study of the Southampton heart integrated care project (SHIP). BMJ. 1999;318:711–715. doi: 10.1136/bmj.318.7185.711.