Abstract

Background

Physician variability in preoperative planning of endovascular implant deployment and the associated inaccuracies have not been documented. This study aimed to quantify the variability in accuracy of physician flow diverter (FD) planning and to compare it directly with PreSize Neurovascular (Oxford Heartbeat Ltd) software simulations.

Methods

Eight experienced neurointerventionalists (NIs), blinded to procedural details, were provided with preoperative 3D rotational angiography (3D-RA) volumetric data along with images annotated with the distal landing location of a deployed Surpass Evolve (Stryker Neurovascular) FD from 51 patient cases. NIs were asked to perform a planning routine reflecting their normal practice and estimate the stent’s proximal landing using volumetric data and the labeled dimensions of the FD used. Equivalent deployed length estimation was performed using PreSize software. NI- and software-estimated lengths were compared with postprocedural observed deployed stent length (control) using Bland–Altman plots. Agreement among NI assessments was evaluated with the intraclass correlation coefficient (ICC).

Results

The mean accuracy of NI-estimated deployed FD length was 81% (±15%) versus PreSize’s accuracy of 95% (±4%), demonstrating significantly higher accuracy for the software (p<0.001). The mean absolute error between estimated and control lengths was 4 mm (±3.5 mm, range 0.03–30.2 mm) for NIs and 1 mm (±0.9 mm, range 0.01–3.9 mm) for PreSize. No discernible trends in accuracy among NIs or across vasculature and aneurysm morphology (size, vessel diameter, tortuosity) were found.

Conclusions

The study quantified experienced physicians’ significant variability in predicting an FD deployment with current planning approaches. In comparison, PreSize-simulated FD deployment was consistently more accurate and reliable, demonstrating its potential to improve standard of practice.

Keywords: Aneurysm, Flow Diverter

WHAT IS ALREADY KNOWN ON THIS TOPIC

Modeling device behavior in the cerebral vasculature is complex, considering heterogeneous vascular and aneurysm morphology, and variability in device design. Studies of virtual planning software packages often compare the observed deployed length of an implant to the predicted deployed length for a given patient-specific neurovascular anatomy. However, no studies have documented the variability in current practices and quantitatively compared this variability against predictive accuracy from virtual planning. Such a comparison could provide a meaningful assessment of the potential of such simulation tools to optimize the current clinical standard.

WHAT THIS STUDY ADDS

This study quantitatively demonstrates that current sizing approaches are variable and unpredictable regardless of operator or aneurysm morphology. In comparison, the PreSize Neurovascular virtual simulation was significantly more consistent and quantitatively accurate in predicting deployed length and location of flow diverters for the same cases.

HOW THIS STUDY MIGHT AFFECT RESEARCH, PRACTICE OR POLICY

Considering the field of medical simulation and decision-support software is a relatively new one, this study could standardize evaluation requirements for software to quantitatively demonstrate (1) predictive reliability and (2) improvement of current practice standards.

Introduction

There is a clinical trend favoring single endovascular implants for treating cerebral aneurysms.1–4 The efficacy of an implant in achieving aneurysm closure is dependent on its design, as well as appropriate sizing and positioning. For current standard flow diverter (FD) planning and sizing, physicians usually make manual line measurements on 2D angiograms or 3D virtual models to evaluate the length of the optimal landing zone, as well as proximal and distal landing diameters. Physicians then assess which stent, being deployed from distal to proximal landing zones, might foreshorten or elongate in the particular anatomy to meet the optimal landing. This assessment is based on their understanding of the device’s specifications, the patient-specific anatomy, and the manufacturer’s instructions for use. As such, it is operator-dependent and intrinsically prone to errors.

Inaccuracies in preoperative planning can not only result in the use of more than one device (discarded or additionally deployed), but also in the coverage of lateral branches and poor wall apposition, especially at the proximal end, which can in turn cause persistent aneurysm filling and stent-induced stenosis.5–8 Given the complexity of device behavior and patient vasculature, precision of physician predictions of FD deployment is expected to be variable, but such variability has never been previously documented.

Software that aids in selecting an appropriately sized device and in predicting deployment may reduce deployment inaccuracies, standardize clinical practice, and improve outcomes, but clear evidence to demonstrate such improvements is needed. A key step preceding use of such software in clinical care is validation of its accuracy in predicting device behavior in real clinical case scenarios. In the case of flow diversion, this is often conducted by comparing parameters of an observed deployed device to the software-predicted deployment for a given patient-specific neurovascular anatomy.9–12 Typically, the deployed FD length is compared with the predicted length from the software, with length being a critical nonlinear parameter which arises based on the specific morphology of the patient’s vasculature, and crucially contributes to FD sizing decisions.

This study aimed to quantitatively assess for the first time the precision of current FD sizing methods in clinical cases. For the same clinical cases, standard-of-practice predictions were directly compared with predictions obtained with real-time PreSize Neurovascular simulation software (Oxford Heartbeat Ltd, London, UK). The hypothesis was that FD deployed length predictions with the PreSize software would be more accurate and consistent than predictions from neurointerventionalists (NIs) using conventional sizing methods.

Methods

A group of eight experienced neurointerventionalists (NIs) from around the world were asked to predict the proximal landing site of an FD with their conventional planning method after providing them with the device specifications, vascular morphology volumetric data, and the distal landing site of that device from previously treated cases, blinded to the outcome. These predictions were compared against those generated by the PreSize Neurovascular software given the same information. Predictions of deployed length from the two groups (NI and PreSize) were compared against the control (measured post-deployment length) to assess and compare the accuracy of the two groups.

Study population

Fifty-one consecutive cases with intracranial aneurysms treated with a Surpass Evolve FD (Stryker Neurovascular, Fremont, CA, USA) in the following participating centers were enrolled for this study: Leeds General Infirmary (UK) (n=21), Queen Elizabeth University Hospital (Glasgow, UK) (n=11), Turku University Hospital (Finland) (n=9), West Virginia University Hospitals (US) (n=6), and Royal Infirmary of Edinburgh (UK) (n=4).

Angiographic data

Preprocedural 3D volumetric rotational angiography (3D-RA) data and either postprocedural 2D digital subtraction angiography (2D-DSA) (n=42) or 3D-RA (n=9) images were collected for each case as part of local routine practice. Imaging was performed using a Siemens Artis System (Siemens Healthineers AG, Germany) (n=20) or a Philips Allura Xper System (Philips GmbH, The Netherlands) (n=31). The voxel size of the 3D-RA volumes ranged between 0.15 and 0.50 mm. Labeled diameter and labeled length of the implanted FDs were also collected for each case.

Aneurysm and parent vessel features

Aneurysms were classified according to the dome size, as either small (largest dimension <5 mm), medium (5–10 mm), or large (>10 mm), and according to the neck width as either wide (width >4 mm or dome-to-neck ratio <2) or, otherwise, narrow. Length and diameter range, as well as tortuosity index, were determined for the parent vessels, defined as the vessel segments covered by the implanted stents. Neck regions were not considered in calculations of diameter range, and the tortuosity index was defined as the ratio of the parent vessel length to the straight-line distance between its start and endpoint.
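The classification rules and the tortuosity index described above can be sketched in Python. This is a minimal illustration and not the authors' implementation: the function names, the choice to pass the dome-to-neck ratio in directly, the inclusive handling of the 5 mm and 10 mm boundaries, and the representation of the parent vessel centerline as a list of 3D points (in mm) are all assumptions.

```python
import math


def classify_dome(largest_dimension_mm):
    """Dome size class: <5 mm small, 5-10 mm medium, >10 mm large."""
    if largest_dimension_mm < 5:
        return "small"
    if largest_dimension_mm <= 10:
        return "medium"
    return "large"


def classify_neck(neck_width_mm, dome_to_neck_ratio):
    """Wide if neck width > 4 mm or dome-to-neck ratio < 2, otherwise narrow."""
    if neck_width_mm > 4 or dome_to_neck_ratio < 2:
        return "wide"
    return "narrow"


def tortuosity_index(centerline):
    """Path length of the vessel divided by the straight-line distance
    between its first and last centerline points (assumed polyline)."""
    path = sum(math.dist(p, q) for p, q in zip(centerline, centerline[1:]))
    chord = math.dist(centerline[0], centerline[-1])
    return path / chord
```

With this definition a perfectly straight vessel has a tortuosity index of 1, and the index grows as the vessel path lengthens relative to the distance between its endpoints.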

Measured deployed length (control group)

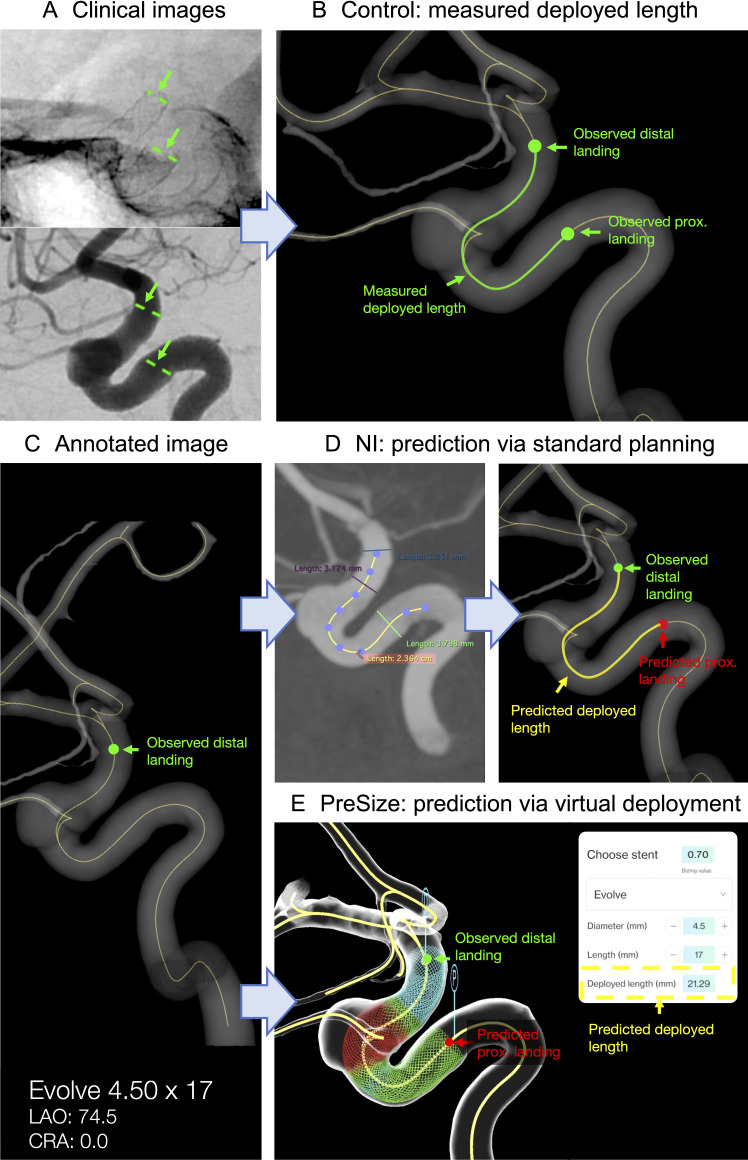

Figure 1 illustrates this study’s methodology. The deployed stent’s proximal and distal ends for each case were marked on the postprocedural images as shown in figure 1A. The positions of the stent ends were transferred to the rendered vessel centerline of the 3D reconstructed vasculature (figure 1B). The centerline path length between the two observed endpoints defined the measured stent deployed length (Lmeas).

Figure 1.

Schematic of study protocol. (A) The deployed stent’s distal and proximal landings are marked on postprocedural clinical images. (B) The locations of the stent landings are transferred to the vessel centerline of the 3D reconstructed vasculature. The length of the centerline between the stent’s observed distal and proximal landings is the measured deployed length Lmeas (control). (C) Example of annotated image with the stent’s observed distal landing, the stent’s labeled diameter and length, and view angles as provided to the neurointerventionalists (NIs). (D) Example of NI prediction via standard sizing methods. The length of the centerline between the stent’s observed distal landing and predicted proximal landing is the predicted deployed length Lpred. (E) PreSize prediction via virtual deployment. The stent’s predicted deployed length Lpred is automatically calculated by the software.
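The centerline path length between two marked stent ends, as used for Lmeas, might be computed along the following lines. This is a sketch under stated assumptions: the centerline is taken to be an ordered list of 3D points in mm, and each marked end is snapped to its nearest centerline vertex. The study does not describe how the markers were transferred to the centerline, so the snapping step and both function names are hypothetical.

```python
import math


def nearest_index(centerline, point):
    """Index of the centerline vertex closest to a marked 3D point."""
    return min(range(len(centerline)),
               key=lambda i: math.dist(centerline[i], point))


def deployed_length(centerline, distal_mark, proximal_mark):
    """Path length (mm) along the centerline between two marked stent ends."""
    i = nearest_index(centerline, distal_mark)
    j = nearest_index(centerline, proximal_mark)
    lo, hi = sorted((i, j))
    segment = centerline[lo:hi + 1]
    # Sum the lengths of the straight pieces between consecutive vertices.
    return sum(math.dist(p, q) for p, q in zip(segment, segment[1:]))
```

The same routine would apply to a predicted length by passing the observed distal landing and a predicted proximal landing. Its resolution is limited by the spacing of the centerline points.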

Physician predictions

Eight NIs (n=2 from the UK, n=2 from Europe, n=3 from the USA, and n=1 from Australia) participated in this study. All were experienced in flow diversion (n=3 having performed 50–100 FD cases by the time of the study, n=2 having performed 100–200 cases, and n=3 >200 cases) and were currently planning and performing procedures with the Evolve FD (n=4 having performed 5–10 Evolve cases by the time of the study, and n=4 having performed 10–50 cases). Blinded to procedural details, NIs were provided with preimplantation volumetric data from 3D-RA for each of the 51 cases. The NIs could use this to reconstruct a 3D model and measure target vessel length and diameters reflecting their normal clinical practice: two physicians used an in-built interface at a Philips workstation, one physician used an in-built interface at a Siemens workstation, and five used the software OsiriX (Pixmeo, Geneva, Switzerland). The distal landing zone of the implanted FD was annotated on reconstructed images of the target vessel with centerlines projected on six anatomical planes plus a view aligned with the postprocedural 2D-DSA working view (figure 1C). The diameter and length of the implanted FD were also provided for each case. No post-deployment images or follow-up outcomes were provided.

The NIs were instructed to predict the implanted FD’s proximal landing zone employing conventional sizing techniques as currently used in their own clinical practice, including (where relevant to individual practice) the vendor-provided sizing chart for guidance, and annotate it on one of the provided images. No time limits were set for processing each case. The estimated proximal landing zone positions were then transferred to the vessel centerline of the 3D reconstructed vasculatures, and the length of the centerline path between the (observed) distal and (predicted) proximal ends (Lpred) defined the NI-predicted deployed length (figure 1D).

Virtual deployment

Stent deployment simulations for each of the 51 cases were performed with the software PreSize Neurovascular, which provides real-time planning support, reconstruction of patient-specific anatomy in 3D, and simulation of fitting of implantable devices in a patient’s anatomy in a safe, virtual environment. The same information that was provided to NIs was used for PreSize software simulation. In particular, a stent of the same model and size as the one implanted during the real procedure was virtually deployed, agnostic to deployment technique, in the PreSize reconstructed case-specific vasculature starting from the implanted stent’s known observed distal landing zone. The predicted deployed FD length (Lpred) was automatically calculated by the software (figure 1E). Details of the software’s workflow and validation methodologies can be found in a previous publication.11

Statistical analysis

The accuracy of the NIs and software’s predictions was calculated by comparing Lpred against Lmeas as follows:
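The expression itself is not reproduced in this text. A relative-error definition that is roughly consistent with the reported results (mean absolute errors of about 4 mm and 1 mm against a mean covered vessel length of 21.65 mm, giving accuracies near 81% and 95%) would be the following. It is an assumption, not a confirmed transcription of the original equation:

$$
\text{Accuracy}\ [\%] = \left(1 - \frac{\lvert L_{pred} - L_{meas} \rvert}{L_{meas}}\right) \times 100
$$

Under this assumed form, a 3 mm error on a 20 mm measured length corresponds to an accuracy of 85%.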

NIs’ and software’s accuracies were compared via paired t-tests and the one-way repeated measures ANOVA test to check for significant differences. One-sample t-tests were used to check for over- or underestimation bias. Bland–Altman plots with 95% limits of agreement were used to compare NIs’ and software’s predicted length against the measured length. The intraclass correlation coefficient ICC(2,1) (two-way random effects, absolute agreement, single rater)13 was used to assess the agreement among the NI assessments. Values of the ICC can range from 0 to 1, with 0 indicating no agreement and 1 perfect agreement among raters.
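For readers unfamiliar with ICC(2,1), the coefficient can be obtained from the mean squares of a two-way ANOVA without replication. The sketch below uses the standard two-way random effects, absolute agreement, single rater formula; the function name and the input layout (one row per case, one column per rater) are assumptions for illustration, and the study does not state which software was used for this calculation.

```python
def icc_2_1(ratings):
    """ICC(2,1) for ratings[i][j] = value given to case i by rater j."""
    n = len(ratings)        # number of cases (rows)
    k = len(ratings[0])     # number of raters (columns)
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares for cases, raters, and the residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_err = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)               # between-case mean square
    msc = ss_cols / (k - 1)               # between-rater mean square
    mse = ss_err / ((n - 1) * (k - 1))    # residual mean square

    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)
```

Because the between-rater mean square appears in the denominator, a rater who is systematically high or low reduces the coefficient, which is what "absolute agreement" refers to.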

Pearson’s correlation coefficients were calculated to detect correlation between accuracy (NIs and software) and the following metrics: labeled diameter of the deployed FD, labeled length of the deployed FD, aneurysm size, aneurysm neck width, deployed length within the parent vessel, diameter range of parent vessel along the deployed length, and tortuosity index of the parent vessel. Pearson’s coefficient ranges from 0 for no correlation to ±1 for perfect correlation (positive or negative, respectively), with an absolute value of at least 0.5 taken to indicate a potential trend. Statistical significance was set at p<0.01.
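As an illustration of the correlation screen described above, the following sketch computes Pearson's coefficient for one accuracy series against one morphological metric and applies the 0.5 threshold. The function names and the threshold argument are hypothetical, and the significance test is omitted.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def suggests_trend(r, threshold=0.5):
    """True when the absolute correlation reaches the trend threshold."""
    return abs(r) >= threshold
```

In practice `pearson_r` would be evaluated once per rater (and once for the software) against each of the seven metrics listed above.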

The study received Health Research Authority approval (legal compliance and ethics review for research projects in the UK’s National Health System). Due to the study’s retrospective nature and use of anonymized data, the requirement for informed patient consent was waived.

Results

Case characteristics

The labeled diameter of the 51 implanted FDs ranged from 3.25 to 5.00 mm (mean±SD 4.21±0.50 mm). The labeled length ranged from 12.00 to 30.00 mm (mean±SD 17.59±4.98 mm). Some 31.4% of the aneurysms were classified as small, 35.3% as medium, and 33.3% as large; 39.2% of the aneurysms were classified as wide-necked.

The length of the parent vessel covered by the implanted FD was 21.65±6.73 mm on average (range 11.96–45.51 mm), with a diameter variation along its length of 1.22±0.57 mm on average (range 0.59–3.22 mm) and a tortuosity index of 1.72±0.66 on average (range 1.02–3.68).

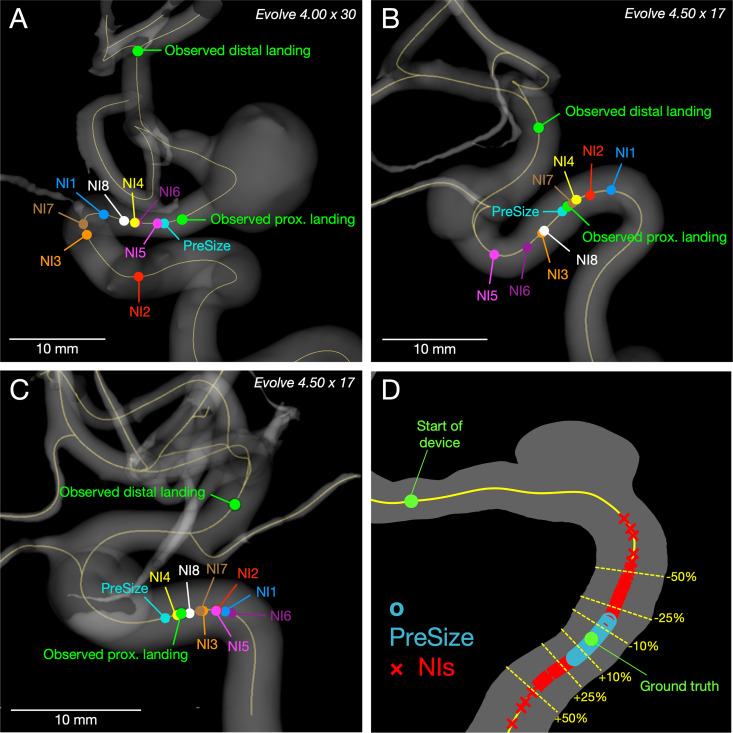

Prediction accuracy

Figure 2 demonstrates the observed proximal landing in three representative cases versus proximal landing predictions by NIs and PreSize and illustrates the deployed length predictions by NIs versus PreSize across the study’s dataset. The NI group predicted deployed lengths using conventional sizing methods at an accuracy of 81.3% (±15%, range 6.7–99.8%). The individual mean accuracy among the physicians ranged between 73.1% and 87.6%, while the SD ranged between 9.2% and 20.6%.

Figure 2.

Observed landing zone and predicted positions of stents’ proximal landing from neurointerventionalists (NIs) and PreSize within 3D reconstructions of patient vasculature. (A–C) Study cases based on standard deviation (SD) of NI predictive accuracy as a measure of interrater variability: (A) Case 4 (maximum SD) - Evolve 4.00×30, NIs mean accuracy 66% (±31%) vs PreSize’s accuracy 96%. (B) Case 39 (median SD) - Evolve 4.50×17, NIs mean accuracy 86% (±11%) vs PreSize’s accuracy 98%. (C) Case 36 (minimum SD) - Evolve 4.50×17, NIs mean accuracy 92% (±5%) vs PreSize’s accuracy 93%. (D) Plot of the spread of all predictions of deployed length from NIs (red markers) and PreSize (blue markers). Predictions are presented as percentage of measured length, plotted on an arbitrary length of centerline from an illustrative aneurysm case. The upper green marker indicates the start of the device, and the lower green marker indicates 100% of the measured length (ground truth). Overall, predictions from PreSize were clustered more tightly around the ground truth than were the predictions from NIs.

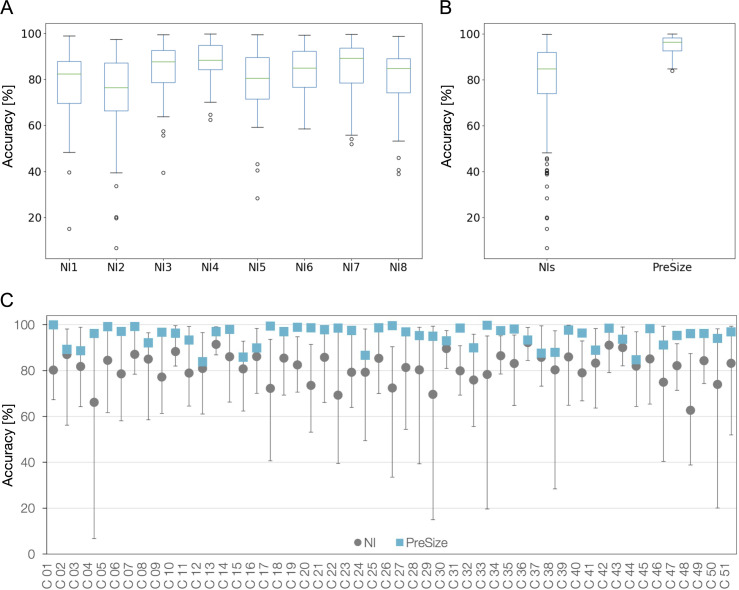

PreSize predicted the FD deployed length with a mean accuracy of 94.9% (±4.4%, range 83.9–99.9%). PreSize mean accuracy was significantly higher than that of the NI cohort, both taken as a group and individually (p<0.001). Boxplots for the individual and grouped physician accuracies as well as those for PreSize are shown in figure 3A,B. Across the patient cases, the lowest accuracy among NIs ranged between 6.7% and 62.4% (average of 37.6%). The lowest PreSize accuracy was 83.9%.

Figure 3.

Accuracy of predictions from neurointerventionalists (NIs) and PreSize. (A) Boxplots for the accuracies of each individual NI and (B) boxplots for the accuracies of the NIs grouped together and for PreSize. The boxes extend from the first (Q1) to the third (Q3) quartiles, with the line indicating the median (Q2). The whiskers extend to show the range of data, but no more than 1.5⋅(Q3–Q1) from the edges of the box. Outliers are indicated with circles. (C) Mean accuracy among NIs and PreSize for all 51 cases in the study. Bars indicate the NIs’ accuracy range for each case.

Figure 3C shows PreSize’s accuracy along with NIs’ prediction accuracy range and mean for all cases. Accuracy of NI predictions varied from case to case, but there was no particular case that all NIs performed poorly on, nor an NI who performed consistently better or worse than the rest of the group. PreSize’s accuracy was better than the mean accuracy of conventional sizing predictions in all 51 cases, by 13.5% (±8.1%) on average (mean±SD). The accuracy was better by 10% or more in 34/51 cases (66.7%) and by 20% or more in 9/51 cases (17.6%).

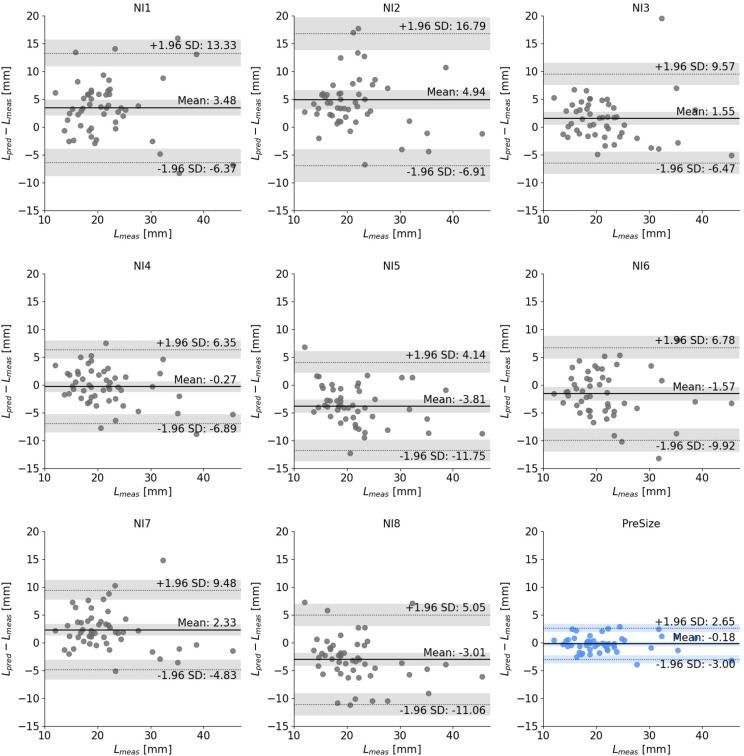

Predicted versus measured length

Bland–Altman plots comparing the predicted versus measured lengths for each NI and PreSize are shown in figure 4. The 95% limits of agreement (LoA) for the difference between Lpred and Lmeas for all the NIs pooled together were (−9.85, 10.76) mm, while PreSize’s LoA were (−3.00, 2.65) mm. Predictions of NIs 1, 2, 3, and 7 were biased toward overestimating the deployed length, while those of NIs 5 and 8 were biased toward underestimating it (p<0.01). NIs 4 and 6 and PreSize showed no over- or underestimation bias. The ICC(2,1) was 0.64 (95% CI 0.49 to 0.75) for the NIs, indicating poor to moderate interrater agreement and variability in the NI deployed length predictions using conventional sizing methods.13

Figure 4.

Bland–Altman plots for each individual neurointerventionalist (NI) (grey) and PreSize (blue). The solid line indicates the average difference between predicted deployed length (Lpred) and measured deployed length (Lmeas), while the dotted lines indicate the 95% limits of agreement (defined as average±1.96 standard deviations of the difference). The shaded bands indicate the 95% confidence interval for the average difference and both limits of agreement.
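The bias and 95% limits of agreement defined in the caption can be computed as in the following minimal sketch. The function name is hypothetical and the use of the sample standard deviation (n−1 denominator) is an assumption; the confidence bands shown in the figure are not computed here.

```python
import statistics


def bland_altman(predicted, measured):
    """Return (bias, lower LoA, upper LoA) for paired lengths in mm."""
    diffs = [p - m for p, m in zip(predicted, measured)]
    bias = statistics.mean(diffs)      # average of Lpred - Lmeas
    sd = statistics.stdev(diffs)       # sample SD of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd
```

A positive bias indicates that predictions overestimate the measured deployed length on average, and a negative bias indicates underestimation.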

Correlation with stent and vascular morphology

Across NIs, no correlation was shown between NI accuracy and vessel diameter range (absolute values below 0.10). Only small correlations (absolute values between 0.10 and 0.30) were detected between some of the NI accuracies and the other parameters, but no consistent (positive/negative correlation) trend was shown. Medium positive correlations (values between 0.30 and 0.50) were detected between NI3 and NI7 performance and the neck-width classification and tortuosity index, respectively. No correlation was found between PreSize accuracy and any of the features, apart from a small negative correlation with aneurysm size.

Discussion

Endovascular treatment of cerebral aneurysms with single implants requires deployment precision for optimum outcomes. Current FD sizing methods use a combination of measurements on DSA or 3D imaging across the distal to proximal landing zones. NIs select the FD that will deploy in the target landing zone, based on an understanding of device specifications and anatomy of a given patient. This approach is approximate, with variability in predicting device behavior arising from the complex behavior of braided devices during deployment, which exhibits nonlinearity and depends on a patient’s vascular morphology. Elongation or shortening of braided devices across diseased internal carotid arteries that variably taper from proximal to distal end14 is difficult to account for when choosing an optimal device. Additionally, there is no standard method or tool among NIs to make consistent, accurate, and standardized sizing decisions. The extent to which sizing difficulties impact inaccuracies in deployment prediction has not been previously quantified.

This study created a controlled environment in which the current conventional sizing methods could be evaluated for accuracy in predicting stent deployment, with a participating group of experienced NIs evaluating the same clinical dataset. The study demonstrated that physicians’ predictions of an FD’s deployed length vary significantly (both among NIs and across patient cases) and have relatively low accuracy (eg, 17.6% of NI predictions had an accuracy of <70%, which translates into a predictive error of 9.10 mm on average). There were no discernible trends in accuracy in relation to morphological features of the patient’s vasculature or aneurysm (morphology, size, vessel diameter, tortuosity). It is important to note that the NIs who participated in this study were experienced operators from different parts of the world, which further highlights the need for tools that can standardize preoperative planning and stent size selection for their patients. While some NIs were more accurate at predicting FD deployed lengths on average, no one NI was consistently more accurate than the others regardless of individual (overall or device-specific) experience or the tool used to process the cases (Philips workstation, Siemens workstation, OsiriX software). Interestingly, this observation appears to contradict a common assumption that increased experience can significantly improve FD planning and sizing accuracy. It is also interesting to note that some of the cases included in the study were performed at the same center where some of the evaluators practice. However, no discernible trends in accuracy were noted among those cases and evaluators.

The potential clinical implications of incorrectly predicting the proximal landing zone are illustrated in figure 2. If a particular FD size is chosen by a physician on the erroneous prediction that it will ‘land’ in the straight portion of a cavernous internal carotid artery, but after deployment ends up in the curved segment with malapposed proximal tines, such a deployment could lead to stenosis at the proximal site or prevent aneurysm closure due to persistent inflow.

The last few years have seen an emergence of studies examining virtual simulation software for cerebrovascular implants.15–17 However, this study is unique in quantitatively comparing current FD sizing methods against the predictive accuracy of a virtual simulation tool. This direct comparison of current FD sizing methods against software simulation can contribute to standardization of medical software evaluations. PreSize simulations represent ‘neutral’ deployment (agnostic to deployment technique) and demonstrated consistently higher FD deployment accuracy than the NI group across cases, which were collected from multiple operators representing a variety of deployment techniques. A virtual simulation software that can reliably predict FDs’ deployment can improve the sizing accuracy for both new and experienced operators, and thus optimize the device selection decision-making process for optimal outcomes.

Limitations

First, this was a retrospective analysis that collected NI predictions of the proximal landing site of a given device in a particular anatomy, even if the operator might have chosen a different device size in some cases. This was the only protocol whereby the same scenario (aneurysm morphology, deployed device) could be presented to all NIs, using the postoperative imaging as the control against which NI and software accuracy could be compared. Second, NI-specific device deployment techniques (that could impact device deployed length) were not accounted for in this study. The study’s cases were collected from various operators across a number of centers with potentially diverse deployment techniques; any impact on accuracy would have contributed equally to both groups (NIs and PreSize), offsetting the implications on overall comparison. PreSize’s consistently high accuracy predictions indicate that FD deployed lengths are more affected by the patient-specific anatomy and device’s mechanical properties than a particular deployment technique. Third, allowing NIs to use their preferred reconstruction method without restrictions on approach or timing likely improved the performance of the individual NIs, but at the same time might have decreased the interrater agreement, further demonstrating the variability of predictions based on current methods.

This study compared the software against NI predictions for the Surpass Evolve device only. Future studies could similarly investigate other available devices, also potentially revealing differences in behaviors for different devices.

Conclusions

The study demonstrates a very high accuracy for the PreSize software in predicting flow diverter behavior compared with experienced operators across different geographic backgrounds. Such software can enhance the accuracy of preoperative planning by aiding device selection and mitigating the variability of patient-specific anatomy, device design, and physician experience or deployment technique. Optimal device selection can reduce complication-associated costs and optimize outcomes.

Acknowledgments

The manuscript acknowledges the work of Mirko Bonfanti, Lowell Taylor Edgar and Aikaterini Mandaltsi for image processing, statistical analysis and manuscript preparation.

Footnotes

@Ansaar_Rai, @carrarovini

Correction notice: Since this paper first published, an acknowledgements section has been added.

Contributors: ATR contributed to the study design. ATR, SB, JD, JDP, RR, MS, JP, VCdN, CG, and TP contributed to data collection, analysis, and manuscript preparation. ATR is the guarantor of the study.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: ATR, SB: consulting agreement Stryker, Cerenovus, Microvention. JD: PI for Oxford Heartbeat Multicentre Prospective Oxford Heartbeat PreSize study; consulting agreement Stryker, Phenox, Microvention, Oxford Endovascular. VCdN, CG: consulting agreement Stryker, Medtronic. RR: consulting agreement Stryker, Balt, Medtronic, Microvention, Acandis. JP, TP, MS, JDP: no disclosures.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement

All data relevant to the study are included in the article or uploaded as supplementary information.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

This study involved human participants but the retrospective analysis involved only selected de-identified imaging datasets/images and was exempted by the IRB (IRB#1904536497).

References

- 1. Shehata MA, Ibrahim MK, Ghozy S, et al. Long-term outcomes of flow diversion for unruptured intracranial aneurysms: a systematic review and meta-analysis. J Neurointerv Surg 2023;15:898–902.

- 2. Hanel RA, Cortez GM, Coon AL, et al. Surpass intracranial aneurysm embolization system pivotal trial to treat large or giant wide-neck aneurysms - SCENT: 3-year outcomes. J Neurointerv Surg 2023;15:1084–9.

- 3. Hanel RA, Cortez GM, Lopes DK, et al. Prospective study on embolization of intracranial aneurysms with the pipeline device (PREMIER study): 3-year results with the application of a flow diverter specific occlusion classification. J Neurointerv Surg 2023;15:248–54. doi:10.1136/neurintsurg-2021-018501

- 4. Martínez-Galdámez M, Onal Y, Cohen JE, et al. First multicenter experience using the Silk Vista flow diverter in 60 consecutive intracranial aneurysms: technical aspects. J Neurointerv Surg 2021;13:1145–51. doi:10.1136/neurintsurg-2021-017421

- 5. Cohen JE, Gomori JM, Moscovici S, et al. Delayed complications after flow-diverter stenting: reactive in-stent stenosis and creeping stents. J Clin Neurosci 2014;21:1116–22. doi:10.1016/j.jocn.2013.11.010

- 6. Gao H, You W, Wei D, et al. Tortuosity of parent artery predicts in-stent stenosis after pipeline flow-diverter stenting for internal carotid artery aneurysms. Front Neurol 2022;13:1034402. doi:10.3389/fneur.2022.1034402

- 7. John S, Bain MD, Hui FK, et al. Long-term follow-up of in-stent stenosis after pipeline flow diversion treatment of intracranial aneurysms. Neurosurgery 2016;78:862–7. doi:10.1227/NEU.0000000000001146

- 8. Wang T, Richard SA, Jiao H, et al. Institutional experience of in-stent stenosis after pipeline flow diverter implantation: a retrospective analysis of 6 isolated cases out of 118 patients. Medicine (Baltimore) 2021;100:e25149. doi:10.1097/MD.0000000000025149

- 9. Narata AP, Blasco J, Roman LS, et al. Early results in flow diverter sizing by computational simulation: quantification of size change and simulation error assessment. Oper Neurosurg (Hagerstown) 2018;15:557–66. doi:10.1093/ons/opx288

- 10. Nishimura K, Otani K, Mohamed A, et al. Accuracy of length of virtual stents in treatment of intracranial wide-necked aneurysms. Cardiovasc Intervent Radiol 2019;42:1168–74. doi:10.1007/s00270-019-02230-9

- 11. Patankar T, Madigan J, Downer J, et al. How precise is PreSize Neurovascular? Accuracy evaluation of flow diverter deployed-length prediction. J Neurosurg 2022:1–9. doi:10.3171/2021.12.JNS211687

- 12. Tong X, Shan Y, Leng X, et al. Predicting flow diverter sizing using the AneuGuide(TM) software: a validation study. J Neurointerv Surg 2023;15:57–62. doi:10.1136/neurintsurg-2021-018353

- 13. Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiropr Med 2016;15:155–63. doi:10.1016/j.jcm.2016.02.012

- 14. Rai AT, Hogg JP, Cline B, et al. Cerebrovascular geometry in the anterior circulation: an analysis of diameter, length and the vessel taper. J Neurointerv Surg 2013;5:371–5. doi:10.1136/neurintsurg-2012-010314

- 15. Ospel JM, Gascou G, Costalat V, et al. Comparison of pipeline embolization device sizing based on conventional 2D measurements and virtual simulation using the Sim&Size software: an agreement study. AJNR Am J Neuroradiol 2019;40:524–30. doi:10.3174/ajnr.A5973

- 16. Rai AT, Brotman RG, Hobbs GR, et al. Semi-automated cerebral aneurysm segmentation and geometric analysis for WEB sizing utilizing a cloud-based computational platform. Interv Neuroradiol 2021;27:828–36. doi:10.1177/15910199211009111

- 17. Spranger K, Capelli C, Bosi GM, et al. Comparison and calibration of a real-time virtual stenting algorithm using finite element analysis and genetic algorithms. Comput Methods Appl Mech Eng 2015;293:462–80. doi:10.1016/j.cma.2015.03.022
