Abstract

Objectives

Medical research faces substantial challenges from noisy labels attributed to factors like inter-expert variability and machine-extracted labels. Despite this, the adoption of label noise management remains limited, and label noise is largely ignored. There is therefore a critical need for a scoping review of the problem space. This scoping review comprehensively surveys label noise management in deep learning-based medical prediction problems, covering label noise detection, label noise handling, and evaluation. Research involving label uncertainty is also included.

Methods

Our scoping review follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines. We searched 4 databases, including PubMed, IEEE Xplore, Google Scholar, and Semantic Scholar. Our search terms include “noisy label AND medical/healthcare/clinical,” “uncertainty AND medical/healthcare/clinical,” and “noise AND medical/healthcare/clinical.”

Results

A total of 60 papers published between 2016 and 2023 met the inclusion criteria. A series of practical questions in medical research are investigated. These include the sources of label noise, the impact of label noise, the detection of label noise, label noise handling techniques, and their evaluation. Categorizations of both label noise detection methods and handling techniques are provided.

Discussion

From a methodological perspective, we observe that the medical community has been up to date with the broader deep-learning community, given that most techniques have been evaluated on medical data. We recommend considering label noise as a standard element in medical research, even if it is not dedicated to handling noisy labels. Initial experiments can start with easy-to-implement methods, such as noise-robust loss functions, weighting, and curriculum learning.

Keywords: deep learning, noisy label, label uncertainty

Introduction

Numerous studies have shown the extensive use of deep learning (DL) across various medical domains. Remarkably, DL has achieved performance close to domain experts in specific applications.1 However, the performance of DL models depends heavily on the quality of the underlying data, which can be heavily impacted by data noise. This noise can be divided into 2 categories: feature noise and label noise. In this review, we focus on the latter. Label noise occurs when the labels in the training data are not completely accurate, such as incorrect disease diagnoses recorded in medical data. It is also sometimes referred to as label uncertainty.

The medical research landscape presents unique characteristics related to label noise. First, many disease diagnoses are challenging even for experts, leading to diverse outcomes.2 Moreover, expert-annotated labels are scarce, owing to the costly and time-consuming nature of the annotation process. To mitigate this, many researchers use labels generated through natural language processing (NLP), crowd-sourcing, or semi-supervised pseudo-label approaches. These alternatives, however, often introduce considerable label noise. Despite the significant presence of noisy labels in medical data, this issue has not received much attention in studies focused on medical machine learning.

To bridge this gap, this scoping review is intended to address several pertinent questions: the sources of label noise, the consequential effects of label noise, methods for identifying and handling label noise, as well as their evaluation. Our target readers are researchers and practitioners engaged in applying deep learning within the medical domain. Through examining these questions, we aim to highlight the challenge posed by noisy labels and encourage the adoption of suitable measures to mitigate this issue.

There are several reviews regarding learning from noisy labels in the general machine-learning domain. For example, Frénay and Kabán covered studies before the advent of deep learning.3 Song et al focused on model architecture change to handle label noise.4 Algan and Ulusoy comprehensively reviewed noisy label handling methodology.5 Liang et al summarized methods regarding sample selection and loss correction.6 Among all the reviews currently available, we only came across 1 survey in the medical domain.7

Our review offers several extensions to previous studies. First, previous studies focused on methodologies before 2018 within the broader deep-learning community and cited limited references in the medical domain.7 Our study offers a comprehensive review of the recent advancements in this field. Second, instead of focusing only on modeling techniques to handle label noise, our approach adopts a problem-centric perspective and takes a deeper look into the entire process of noisy label management, including noise detection, noise handling, and evaluation. Typical scenarios where label noise may happen are also audited. The detection of label noise is separately surveyed due to its broad range of applications, which is not covered in any previous reviews. Through our survey, we hope to draw greater attention to this underexplored challenge and suggest potential avenues for future research.

Methods

Our scoping review follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.8

Eligibility criteria

We survey research on noisy label detection and handling in disease diagnosis and prognosis using deep learning methods. Segmentation problems are excluded from the scope of this study. The inclusion criteria for our review consisted of English-language articles published between 2016 and 2023, including both conference papers and journal articles. We chose this time frame to capture the most up-to-date research in this rapidly evolving field. Additionally, we refer to relevant preprint articles to ensure we consider cutting-edge research that has yet to be published in peer-reviewed venues.

Information sources

A search of multiple databases was carried out, including PubMed (https://pubmed.ncbi.nlm.nih.gov), the Institute of Electrical and Electronics Engineers (IEEE) Xplore Digital Library (https://ieeexplore.ieee.org), Google Scholar (https://scholar.google.com), and Semantic Scholar (https://www.semanticscholar.org). Important references are also tracked accordingly. The most recent search was executed on December 15, 2023.

Search strategy

The search started with “noisy label AND medical” and “noise AND medical.” After screening the retrieved work, we found that research on label uncertainty handling overlaps heavily with noisy label handling, so “uncertainty” was added as a search term. In addition, the keywords healthcare and clinical were added for completeness. The final search terms include “noisy label AND medical/healthcare/clinical,” “uncertainty AND medical/healthcare/clinical,” and “noise AND medical/healthcare/clinical.” All the studies collected in this research were confined to the medical field.

Study selection

Each article was reviewed and summarized by the source of label noise, problem space (eg, computer vision and Natural Language Processing [NLP]), medical problem (eg, skin cancer and chest image), label noise detection method, label noise handling method, whether a clean dataset is needed, backbone model, evaluation method, and publishing year. During the screening and the full-text review stages, we excluded review articles, non-medical articles, image segmentation-related problems, data denoising, and feature denoising problems.

Results

Included studies and datasets

Summary of included literature

A total of 172 articles were retrieved from the 4 databases, of which 60 met the inclusion criteria for our review (Figure 1). Additionally, 21 supplementary articles partially addressed the problems of interest and were selectively referenced in the relevant sections. Details and a summary of the included papers are listed in Table 1.

Figure 1.

Flowchart of the search strategy.

Table 1.

Summary of all articles.

| Ref. | ML scope | Modality | Disease | Noise source | Detection method | Handling method | Evaluation method | Need clean data | Backbone NN | Year | Dataset (size) | Is dataset public |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 9 | CV | MRI | Brain disease | Inherent | Loss | Reweight, robust loss | Inject | N | MLP | 2016 | Yale-B (2048), COIL-100 (7200), Scene-15 (4485) | Y |

| 10 | CV | X-ray | Mammography | Inherent | – | Noise adaption layer | Inject | N | MLP | 2018 | DDSM (55 890) | Y |

| 11 | CV | Dermoscopy | Melanoma | Inherent | Loss | Reweight, curriculum | Inject | N | ResNet | 2019 | ISIC-2019 (3582) | Y |

| 12 | CV | Dermoscopy, WSI, fundus photographs | Skin lesion, prostate cancer, retinal disease | Inherent, disagreement | Disagreement, uncertainty | Reweight, filter, curriculum | Clean | Y | ResNet | 2021 | ISIC-2019 (3582), Gleason 2019 (331), Retinal disease Kaggle (88 702) | Y |

| 13 | CV | Dermoscopy, WSI, X-ray | Melanoma, lymph node, lung disease, prostate cancer | Inherent | Co-training | Contrastive loss | Inject, clean | N | ResNet | 2022 | ISIC-2017 (2600), ISIC archive (1582), Kaggle histopathologic (220 025), Gleason 2019 (331) | Y |

| 14 | CV | OCT, WSI | Retinal disease, blood cell, colon pathology | Inherent | – | Contrastive loss, mixup | Inject | N | ResNet | 2023 | OCT (2100), Blood cell (4200), Colon pathology (10 250) | Y |

| 15 | CV | CT | Covid | Inherent | – | Robust loss | Same | N | VGG | 2021 | 6982 | N |

| 16 | CV | OCT | Fibrocalcific plaques | Disagreement | F1 score | Active learning, label refinement | – | N | CNN | 2020 | 6556 | Need request |

| 17 | CV | WSI | Cervical cancer | Pseudo label | Known | Label refinement, regularization | Clean | Y | Efficient Net | 2023 | 56 996 | N |

| 18 | CV | MRI | Aortic valve malformations | Pseudo label | Explicit | Robust noise | Clean | N | CNN-LSTM | 2019 | UKbiobank (4000) | Need request |

| 19 | CV | WSI | AD | Inherent, disagreement | Disagreement | Label refinement | Clean | N | CNN | 2022 | 29 WSI (153 050) | Y |

| 20 | CV | WSI | Stomach cancer | Inherent | Loss | Label removal | Inject | N | Dense Net | 2022 | 905 WSI (139 650) | Y |

| 21 | CV | X-ray | Covid | Inherent | – | Label refinement, weighting | Inject | N | CNN | 2023 | Tawsifur (931), Skytells (1071), CRs (818) | Y |

| 22 | CV | WSI | Tumor, stroma, and immune infiltrates | Crowdsourcing | – | Label refinement | Clean | N | Gaussian process | 2021 | 151 WSI | N |

| 23 | CV | MRI | Brain lesion | Inherent | Uncertainty | Label refinement | Clean | N | CNN | 2020 | 165 | N |

| 24 | CV | MRI | Brain metastases | Pseudo label | Known | Label refinement | Clean | Y | Cropnet | 2022 | 217 | N |

| 25 | CV | X-ray | Lung disease | Inherent | – | Label refinement | – | N | AlexNet, ResNet | 2021 | 1248 | Y |

| 26 | CV | Dermoscopy, X-ray | Skin lesion, lung disease | Inherent, disagreement, machine label | – | Robustness | – | N | ResNet | 2022 | Skin (25 331), NIH Chest X-ray (112 120) | Y |

| 27 | CV | WSI | Histology | Pseudo label | Known | Model robustness | Clean | Y | ResNet | 2022 | 387 | N |

| 28 | CV | X-ray | Lung disease | Machine label | Known | Label refinement | Clean | N | MVSE | 2021 | NIH-900 (11 014), Open-i (508 531), PMC (103), CheXpert (4236) | Y |

| 29 | CV | WSI | Pathology | Pseudo label | Loss | Label refinement, curriculum | Clean | Y | Efficient Net | 2022 | Kaggle PANDA (11 000) | Y |

| 30 | CV | Endomicroscopy | Gastrointestinal disease | Pseudo label | Uncertainty | Active learning, label refinement | Inject | Y | ResNet | 2019 | CLE (1366) | Y |

| 31 | CV | WSI | Pathology | Pseudo label | Loss | Label refinement, reweight | Inject | N | Large NN | 2021 | MICCAI DigestPath2019 (455) | Y |

| 32 | CV | X-ray | Lung disease | Machine label | Model performance | Reweight | Clean | Y | DenseNet | 2021 | 297 541 | Y |

| 33 | CV | Retina image | Retinopathy of Prematurity | Disagreement | Meta-learning | Label refinement | Clean | Y | ResNet | 2021 | 1947 | N |

| 34 | CV | X-ray | Lung disease | Inherent | Uncertainty | Reweight, label filter | Clean | N | DenseNet | 2019 | NIH Chest X-ray (112 120), PLCO (185 421) | Y |

| 35 | CV | X-ray | Lung disease | Explicit | – | Label smoothing | Clean | Y | DenseNet | 2021 | CheXpert (224 316) | Y |

| 36 | CV | X-ray | Lung disease | Explicit | – | Label filter, label refinement, model as separate class | Clean | N | DenseNet, ResNet | 2019 | CheXpert (224 316) | Y |

| 37 | CV | Dermoscopy, WSI | Skin lesion, histopathology | Disagreement | – | Label filter | Inject | N | ResNet | 2023 | Skin (10 000), histopathology (3000) | Y |

| 38 | CV | CT | Otosclerosis | Inherent, disagreement | – | Robust loss | Clean | N | W-Net | 2022 | 902 | N |

| 39 | CV | WSI | Prostate cancer | Disagreement | Loss | Filter label | Clean | N | ResNet | 2023 | PANDA (110 000) | Y |

| 40 | CV | X-ray | Lung disease | Expertise | Explicit | Robust architecture | Clean | N | VGG | 2023 | 271 | N |

| 41 | CV | X-ray | Lung disease | Noisy dataset | Loss, uncertainty | – | Inject, clean | N | ResNet | 2019 | NIH Chest X-ray (112 120) | Y |

| 42 | CV | MRI | Lumbar spine stenosis | Disagreement | Influence score | Reweight | Clean | N | MLP | 2022 | 26 728 | N |

| 43 | CV | X-ray | Proximal femur fractures | Expertise, disagreement | Known, uncertainty | Reweight, curriculum | Same | N | CNN | 2022 | 780 | N |

| 44 | CV | Dermoscopy, WSI | Skin cancer, lymph node | Crowdsourcing | Loss | Label refinement, curriculum, gradient based, label update | Inject | N | ResNet | 2021 | ISIC-Archive (23 906), PatchCamelyon (327 680) | Y |

| 45 | CV | Fundus image | Fundus disease | Inherent | Loss | Label filter, robust loss | Inject | N | EfficientNet | 2023 | Private data (8999), ODIR (9144) | Y/N |

| 46 | CV | OCT | Vulnerable plaque | Pseudo label | Known | Gradient based, label update | Clean | N | ResNet | 2021 | IVOCT-2017 (3000) | Y |

| 47 | CV | WSI | Thyroid nodule | Disagreement | Model performance | Reweight | Clean | N | Small CNN | 2023 | 5232 | N |

| 48 | CV | OCT | Retina disease | Inherent | Loss | Label refinement | Inject | N | VGG, inception, ResNet | 2022 | OCT (4000), Messidor (1187), ANIMAL-10N (55 000) | Y |

| 49 | CV | Dermoscopy | Skin lesion | Pseudo label | Explicit | Label smoothing, regularization | Clean | Y | DenseNet | 2023 | ISIC-2018 (10 015), ISIC-2019 (25 531) | Y |

| 50 | CV | Dermoscopy | Skin cancer | Disagreement | Loss | Label refinement | Clean | N | ResNet | 2023 | | |

| 51 | CV | Ultrasound | Prostate cancer | Inherent | Uncertainty | Label refinement | Same | N | DNN | 2022 | 353 | N |

| 52 | CV | Dermoscopy | Skin cancer | Pseudo label | Loss | Robust loss, mixup | Inject | N | ResNet | 2023 | 24 691 | Y |

| 53 | CV | X-ray | Lung disease | Machine label | Cluster based | Label refinement | Inject | N | DenseNet | 2023 | NIH Chest X-ray14 (112 120), CheXpert (224 316), PadChest (160 861), NIH-Google (1962) | Y |

| 54 | NLP | EHR | Preterm birth | De-identification | Known | Loss correction | Clean | Y | LSTM | 2018 | 23 578 | N |

| 55 | NLP | EHR | Covid | Data entry error, system error, diagnose error | Known | Robust loss, label smoothing | Inject | N | MLP | 2023 | 37 996 | Y |

| 56 | NLP | Medical data, clinical notes | Lupus erythematosus | Machine label | Explicit | Improve robustness | Clean | N | LR | 2019 | Chest X-ray14 (112 120), CheXpert (224 316), OpenI (7470), PadChest (160 861) | Y |

| 57 | NLP | EHR | PICO entity extraction | Machine label | Explicit | Robust loss | Clean | N | PubMedBERT | 2023 | 23 172 | N |

| 58 | NLP | EHR | Mix of disease | Disagreement | Others | Adversarial training | Same | N | BiLSTM | 2021 | 2500 | Y |

| 59 | Serial | ECG | Cardiac abnormalities | Experience level | Cluster based | Label correction | Clean | N | 1-d CNN | 2022 | CURIAL (37 896) | Need request |

| 60 | Serial | ECG | Cardiac abnormalities | Disagreement | Co-training | Co-training | Clean | N | Small CNN | 2023 | UCSF EHR (499 855) | N |

| 61 | Serial | PCG | Cardiac diseases | Inherent | – | Label refinement | Inject | N | CNN | 2020 | PICO (4081) | Y |

| 62 | Serial | ECG | Cardiac diseases | Machine label | Cluster based | Label refinement | – | N | 1-d CNN | 2021 | CCKS 2020 (2050) | Y |

| 63 | Serial | PPG | Atrial Fibrillation | Pseudo label | Cluster based | Robust loss | Clean | Y | ResNet | 2022 | CINC 2021 (101 149) | Y |

| 64 | Survival | EHR | Lung cancer, covid | Pseudo label | Explicit | Label refinement | Clean | Y | ML | 2023 | PTB-XL (21 837), MRI Sunnybrook challenge (153) | Y |

| 65 | Sequential model | Genome | Chromatin region detection | Pseudo label | Loss function, uncertainty | Robust loss | Clean | N | CNN-LSTM | 2023 | 1000 | Y |

| 66 | ML | Regular data | Acute respiratory failure, shock in ICU | Inherent | NN to estimate, transition prob | Reweight | Inject | Y | MLP | 2023 | CINC 2021 (101 149) | Y |

| 67 | ML | Regular data | Diabetic | Inherent | – | Label refinement | Clean | Y | MLP | 2022 | 24 100 | N |

| 68 | ML | Regular data | Mix of disease | Inherent | Cluster based | Label filtering | Inject | N | ML | 2020 | Mix of datasets, each 1000 | Y |

Clean, the training dataset is noisy and the evaluation dataset is verified to be high quality; Inject, noise injected into training data; Same, training and testing are done on the same dataset; Inherent, the underlying problem is very hard to label; Disagreement, disagreement among experts or inter-expert variability; Curriculum, curriculum learning.

CV - Computer Vision

MRI - Magnetic Resonance Imaging

MLP - Multilayer perceptron

EHR - Electronic health record

ECG - Electrocardiogram

PCG - Phonocardiogram

PPG - Photoplethysmogram

MVSE - Multi-view Semantic Embedding

CNN - Convolutional neural network

WSI - Whole slide images

OCT - Optical coherence tomography

CT - Computed tomography

NLP - Natural Language Processing

ML - Machine learning

AD - Alzheimer's disease

DNN - Deep neural network

LR - Logistic regression

Overview of problem space

Most of the prediction problems in the included studies are disease diagnosis problems. Due to the wide availability of data, chest X-ray and skin cancer are among the most extensively explored medical domains. Some conditions are hard to diagnose by nature, which in turn makes label noise prevalent. For instance, Xue et al presented example images showing that malignant histology lymph node images have colors and structures quite similar to benign ones.13 The same situation can be observed for skin lesion images as well.

Table 1 lists “disease” and “noise source” for detailed information. Since most of the problems are diagnosis problems, “labels” are usually the disease of interest. The “Noise source” column explains what causes the label noise. The “Modality,” “ML scope,” and “Backbone NN” columns summarize the problem space from a deep learning perspective. The selection of the backbone model usually depends on the domain and data size. Since most problems are image-based, ResNet and DenseNet are widely adopted. If the dataset is small, a customized small CNN can be an alternative. The rest of the columns are elaborated in the following sections.

Source, impact, and detection of label noise

Sources of label noise

The primary source of label noise is the inherent complexity of the medical problem being examined. Multiple factors such as annotators’ fatigue or distraction69,70 or varying levels of expertise among annotators may contribute to label noise.20,26,38,40,43,70 Furthermore, there are inherent challenges in specific medical domains, such as the similarity between skin cancer and benign conditions.11–13,26,37,71 Such complexity of medical diagnosis is usually reflected by intra-observer and inter-observer variability, seen across multiple areas from Alzheimer’s Disease (AD) to retinopathy.12,16,19,26,33,47,50,59,60 Some studies have attempted to explicitly quantify the proportion of intra- and inter-observer variability, finding a range of 5% to 40%, depending on the specific specialties and datasets.2,16,37,38,42,72

Another significant contributor to label noise is the generation of labels by non-experts or non-humans. These labels may be extracted by an automated system,56,57 generated as pseudo labels in semi-supervised learning,18,65 obtained from crowdsourcing,22,44 or be self-reported or non-verified.71,73,74 It is worth noting that although crowdsourcing has proven highly influential within the traditional computer vision (CV) community, its efficacy in medical research remains limited by the specialized expertise required.22

Besides the above-mentioned 2 types of contributors, label noise can also be attributed to other factors such as the de-identification process,54 data entry errors,11,75 incomplete information,11 as well as data acquisition, transmission, and storage errors.21,61,62 Notably, some popular datasets, like ChestX-ray14, are widely known to be noisy.34,36

Impact of label noise

The impact of label noise is reflected by its detriment to classification performance, which is usually measured by accuracy, AUC, PRAUC, and F1. For multi-class prediction problems, the macro average of metrics is usually used. Many studies aim to evaluate the impact of label noise on the performance of deep learning models. While some studies argued that deep learning is resilient to corrupted labels,76 they failed to assess the scenario where noisy labels were properly handled, which could potentially yield even better outcomes. In contrast, most studies have demonstrated a substantial detrimental effect when label noise is not addressed appropriately.50,71,74,77–80 For example, research by Samala et al highlights that as little as 10% of injected label noise can greatly increase DNN’s generalization error.78 Jang et al found that even a 2% random flip of labels significantly affects chest X-ray prediction accuracy.80 Collectively, these findings suggest that the impact of label noise is indeed remarkable, particularly in cases with relatively small sample sizes.

Apart from its effect on prediction accuracy, label noise could also influence fairness, especially when the noise varies across different patient subgroups.66,81 For instance, Tjandra et al pointed out that females are more frequently underdiagnosed for cardiovascular diseases.66

Detection of noisy labels

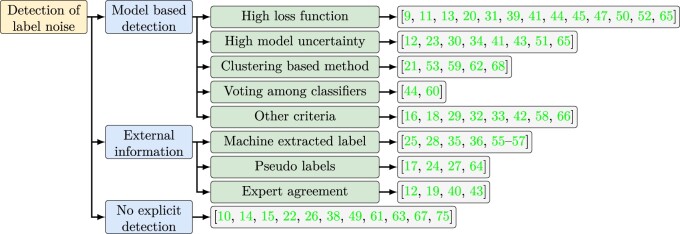

Many strategies adopt a 2-step or iterative approach: label noise detection and handling. In this section, we summarize the detection methods (Figure 2). Not all label noise requires detection; some are evident by nature. In semi-supervised learning, pseudo-labels are known to be noisy. In supervised learning, expert disagreements on disease diagnosis also indicate label noise, which can be quantified by Cohen’s Kappa coefficient.12,19,40

Figure 2.

Summary of noisy label detection methods. Terminologies in the figure are explained in the Glossary.
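As an illustration of how expert disagreement can be quantified, the following minimal sketch computes Cohen’s Kappa for 2 raters using only the Python standard library. The function name `cohen_kappa` and the example annotations are hypothetical and are not taken from any of the reviewed studies.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: fraction of cases with identical labels.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical annotations from two experts on six images.
expert_1 = ["benign", "malignant", "benign", "benign", "malignant", "benign"]
expert_2 = ["benign", "malignant", "malignant", "benign", "malignant", "benign"]
kappa = cohen_kappa(expert_1, expert_2)
```

A kappa close to 1 indicates near-perfect agreement, while values near 0 indicate agreement no better than chance, which suggests that the labels for that task are likely to be noisy.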

When the degree of label noisiness is unknown, machine learning based detection methods can be applied. The most widely used criteria are first-order information (eg, prediction magnitude or loss function) and second-order information (model or epistemic uncertainty). The study by Calli et al shows that both are valid for assessing label noisiness.41 The difficulty in using them lies in selecting a threshold when the noise rate is unknown.39,44,50,65,82 Despite the popularity of these 2 criteria, other studies indicate that a high loss could also reflect a correct but hard-to-learn instance. Therefore, they try to disentangle the effects of noisy labels, hard instances, and class imbalance.29,37
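The loss-based criterion can be sketched as follows. This is a minimal illustration, not the procedure of any specific reviewed study: it ranks samples by per-sample cross-entropy and flags the highest-loss fraction as suspect. The function names and the `assumed_noise_rate` parameter are hypothetical, and the latter stands in for the threshold that, as noted above, is difficult to choose in practice.

```python
import math


def cross_entropy(probs, label):
    """Per-sample cross-entropy for one predicted probability vector."""
    return -math.log(max(probs[label], 1e-12))


def flag_high_loss(pred_probs, labels, assumed_noise_rate):
    """Return the indices of the samples with the largest loss.

    assumed_noise_rate is a guess supplied by the user; the true noise
    rate is usually unknown.
    """
    losses = [cross_entropy(p, y) for p, y in zip(pred_probs, labels)]
    n_flag = int(round(assumed_noise_rate * len(labels)))
    ranked = sorted(range(len(labels)), key=lambda i: losses[i], reverse=True)
    return sorted(ranked[:n_flag])


# Hypothetical model outputs for four samples of a binary problem.
pred_probs = [[0.9, 0.1], [0.2, 0.8], [0.95, 0.05], [0.6, 0.4]]
labels = [0, 1, 1, 0]
suspects = flag_high_loss(pred_probs, labels, assumed_noise_rate=0.25)
```

In this toy example the third sample is flagged because the model confidently predicts class 0 while the recorded label is 1. A flagged sample may still be a correct but hard instance, so the output is best treated as a list of candidates for review.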

Besides the loss function and model uncertainty, other machine-learning approaches are used to detect noisy labels. Ying et al used a clustering-based method to identify prototypes or clusters within the feature space, assuming that instances in the same cluster share similar labels.21 The same strategy can also be used in label refinement (“Label filtering and refinement” section). Braun et al developed another method that detects noise by evaluating the self-influence of a sample. This is defined by the change in the test sample loss function due to the inclusion or exclusion of the candidate instance in the training set.42 The underlying logic is that a noisy sample can decrease its own training error, but not the error of a test sample. Algan et al employed a meta-learning criterion to search for optimal soft labels that contribute to the most noise-robust training.33

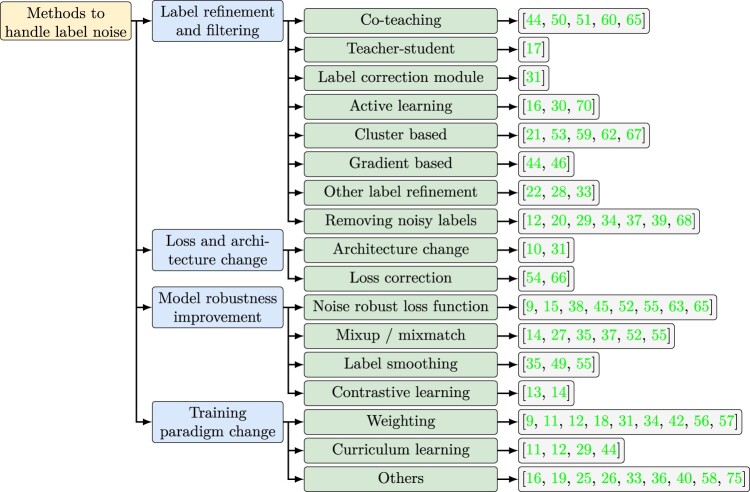

Deep learning methodologies

Techniques for addressing noisy labels in medical research can be organized into 4 main categories based on different aspects of deep learning techniques (Figure 3). “Label filtering and refinement” improves the training data, while “Loss and architecture change” and “model robustness improvement” adopt a modeling approach. “Training paradigm change,” on the other hand, primarily focuses on better optimization. Some studies only utilize 1 technique, while others leverage multiple techniques in the same study. Further categorization of these methods can be done based on whether they require a clean dataset for training or if they are applied iteratively.

Figure 3.

Methods applied to handle label noise.

Label filtering and refinement

Label filtering is the most straightforward approach, which involves directly discarding data points with noisy labels. It usually starts with applying the noise detection methods discussed in the “Detection of noisy labels” section. One disadvantage of discarding noisy instances is the potential removal of genuinely complex but valuable data. Hence, the weighting method (“Training paradigm change” section) or label refinement are better alternatives.42 Another notable challenge in the label removal approach lies in the choice of an appropriate threshold, ie, how noisy is considered “noisy.” Some studies use prior information to decide the percentage of noisy instances. Others propose machine-learning-based solutions to decide the threshold. Ren et al.65 proposed a dynamic threshold solution. Xiang et al.39 determined the threshold based on the learning epoch and adjusted it progressively during the training process.

Label refinement is another approach that takes a more conservative standpoint by revising noisy labels rather than discarding them entirely. It can be categorized into the teacher-student framework, co-teaching, active learning, cluster-based label correction, and gradient-based label update. It is important to note that original labels may still be employed in model training following the label refinement phase.59

The teacher-student and co-teaching methods both employ another classifier to correct the label. In the teacher-student framework, predictions from a larger and more accurate model are used to correct labels for a smaller model. Comparatively, co-teaching involves training 2 classifiers on different parts of the training data and feature sets, with labels refined iteratively and each classifier using the other’s most confident predictions for refinement.

Active learning interactively queries a human (or another information source) to label new data points, typically selecting those that offer maximal information. Cluster-based methods group instances in the feature space, with each cluster’s label determined by ground truth data or majority voting. Labels can be corrected if they disagree with the cluster label. Gradient-based label update uses stochastic gradient descent to adjust labels,44,46 where the gradient is calculated with respect to labels instead of parameters.
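Cluster-based label correction by majority voting can be sketched as below. This is a simplified illustration under stated assumptions: cluster assignments are taken as given (eg, from any clustering of learned features), and the `min_majority` threshold is a hypothetical safeguard that leaves a cluster untouched when no label clearly dominates.

```python
from collections import Counter, defaultdict


def refine_by_cluster_vote(cluster_ids, labels, min_majority=0.7):
    """Relabel each cluster with its majority label when the majority is clear."""
    by_cluster = defaultdict(list)
    for idx, cid in enumerate(cluster_ids):
        by_cluster[cid].append(idx)

    refined = list(labels)
    for members in by_cluster.values():
        votes = Counter(labels[i] for i in members)
        top_label, top_count = votes.most_common(1)[0]
        # Only overwrite labels if the majority share reaches the threshold.
        if top_count / len(members) >= min_majority:
            for i in members:
                refined[i] = top_label
    return refined


# Hypothetical cluster assignments and noisy labels for seven samples.
cluster_ids = [0, 0, 0, 0, 1, 1, 1]
labels = [1, 1, 0, 1, 0, 0, 1]
refined = refine_by_cluster_vote(cluster_ids, labels)
```

Here the single dissenting label in cluster 0 is corrected (3 of 4 votes), whereas cluster 1 is left unchanged because its majority share (2 of 3) falls below the assumed threshold.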

Loss and architecture change

Loss and architecture change explicitly models the transition between latent ground-truth labels and observed noisy labels through dedicated layers. However, it is less common in medical research due to the constraint of a limited sample size. The most common changes to architecture involve employing loss correction or adaptation layers.

Loss correction explicitly estimates the transition matrix between the observed label and the latent ground truth label, denoted as $T(\tilde{y} \mid y)$,83 where $\tilde{y}$ and $y$ are the noisy and true labels. The estimated transition matrix is then used to weight the loss function. It has been proved that the corrected loss function is unbiased.
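To make the idea of weighting the loss with the transition matrix concrete, the sketch below implements a backward-style correction for the binary case, in which the vector of per-class losses is multiplied by the inverse of the transition matrix. It assumes that the 2 flip rates are known or have already been estimated; the function name and parameter names are hypothetical, and this is one possible instance of loss correction rather than the exact formulation of the cited work.

```python
import math


def backward_corrected_loss(probs, noisy_label, flip_0_to_1, flip_1_to_0):
    """Backward-corrected cross-entropy for a binary problem.

    The transition matrix is T = [[1 - a, a], [b, 1 - b]], where
    a = p(noisy = 1 | true = 0) and b = p(noisy = 0 | true = 1).
    """
    a, b = flip_0_to_1, flip_1_to_0
    det = 1.0 - a - b
    if det <= 0.0:
        raise ValueError("flip rates must satisfy a + b < 1")
    # Closed-form inverse of the 2 x 2 transition matrix.
    t_inv = [[(1.0 - b) / det, -a / det],
             [-b / det, (1.0 - a) / det]]
    # Cross-entropy the sample would incur if its true label were 0 or 1.
    losses = [-math.log(max(p, 1e-12)) for p in probs]
    return sum(t_inv[noisy_label][j] * losses[j] for j in range(2))


# Hypothetical prediction and flip rates.
probs = [0.7, 0.3]
a, b = 0.2, 0.1
loss_if_noisy_0 = backward_corrected_loss(probs, 0, a, b)
loss_if_noisy_1 = backward_corrected_loss(probs, 1, a, b)
```

Unbiasedness can be checked numerically: for a sample whose true label is 0, the expectation of the corrected loss over the noisy label, `(1 - a) * loss_if_noisy_0 + a * loss_if_noisy_1`, equals the clean cross-entropy `-log(0.7)`.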

In contrast, the noise adaptation layer integrates this noise transition process directly into the neural network by adding an extra softmax layer after the original one.84 However, this additional layer makes the neural network non-identifiable, which poses challenges to optimization, and a careful initialization is needed.

Model robustness improvement

Instead of directly addressing noisy labels, an alternative workstream tackles the problem implicitly by enhancing model robustness and reducing overfitting.

Designing new loss functions is the most popular approach to improve robustness. The issue with the cross-entropy loss in regular classification applications is that its gradient can sharply increase as the predicted probability approaches the opposite of the corresponding label. While this accelerates convergence, it leads to overfitting when the label is wrong. Loss functions that suppress the gradient can mitigate this issue. For instance, Chen et al.38 proposed a weighted combination of regular cross-entropy loss and reverse cross-entropy loss (RCE). Interestingly, mean square error loss, the default loss function in regression, has demonstrated resilience to label noise in classification problems. For this reason, some studies prefer using mean square error on soft labels instead of cross-entropy loss on predicted hard labels (0-1 labels).33,65
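A weighted combination of cross-entropy and reverse cross-entropy can be sketched as follows. This is a generic illustration in the spirit of symmetric cross-entropy, not the exact loss of Chen et al.; the weights `alpha` and `beta` and the constant `log_zero`, which replaces the undefined log(0) of the one-hot label, are assumptions chosen for the example.

```python
import math


def symmetric_cross_entropy(probs, label, alpha=1.0, beta=1.0, log_zero=-4.0):
    """Weighted sum of cross-entropy (CE) and reverse cross-entropy (RCE).

    RCE swaps the roles of prediction and one-hot label; log(0) on the
    label side is replaced by the constant log_zero, so RCE is bounded.
    """
    ce = -math.log(max(probs[label], 1e-12))
    # Only the non-labelled classes contribute, each weighted by its probability.
    rce = -log_zero * sum(p for k, p in enumerate(probs) if k != label)
    return alpha * ce + beta * rce


# A confidently wrong prediction: CE grows without bound, RCE stays below 4.
loss_wrong = symmetric_cross_entropy([0.99, 0.01], label=1)
loss_right = symmetric_cross_entropy([0.01, 0.99], label=1)
```

Because the RCE term is bounded by `-log_zero`, a mislabeled sample that the model confidently contradicts contributes a limited penalty through that term, which is the gradient-suppressing behavior described above.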

Besides suppressing gradients, the second line of research enforces that instances close in latent feature space share similar labels, despite potentially noisy labels.9,55,63 This resonates with the clustering-based label correction method (Label filtering and refinement section). An example of this is Neighbor Consistency Regularization (NCR) loss,55 which initially clusters examples through KNN and subsequently ensures logits for instances within the same cluster are close. Similarly, Ding et al.63 applied an auto-encoder to compress the feature space and cluster samples in that space. Within each batch, an additional loss is added to minimize intra-cluster distance while maximizing inter-cluster distance.

The aforementioned loss functions are intentionally constructed to be noise-robust. On the other hand, contrastive learning provides a somewhat noise-resilient loss function since it does not require any labels. It first creates augmented views of each data instance through perturbation or transformation. Then, a loss function minimizes distances between these augmented views and the original data while maximizing distances between different original instances (and their respective augmented views). Xue et al.13 have implemented this approach within each batch to cope with label noise.

In addition to robust loss functions, regularization methods such as label smoothing and mix-up are also used. Label smoothing involves softening binary labels (0-1) by setting label 0 to be $\epsilon/K$ and making label 1 to be $1 - \epsilon + \epsilon/K$. Here, $\epsilon$ is the level we want to smooth the labels and $K$ is the number of classes. Pham et al.35 used label smoothing to address uncertain examples. The mix-up strategy augments training data by computing a weighted average of both the features and labels of 2 instances. Yang et al.55 used both label smoothing and mix-up within a batch. Pulido et al.27 evaluated to what extent mix-up can improve model robustness toward noisy labels. Regularization can also be achieved by customizing the optimization strategy. For instance, Hu et al. used sharpness-aware minimization to find a “flat minimum” whose neighbors have uniformly low training loss values.45
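Label smoothing and mix-up can be sketched in a few lines. The smoothing variant below assumes the commonly used form in which a mass of epsilon is spread uniformly over all K classes; other variants exist. The function names and the example values are hypothetical. In practice the mixing weight `lam` is often sampled from a Beta distribution, whereas it is fixed here for clarity.

```python
def smooth_label(label, num_classes, epsilon=0.1):
    """Soft target: epsilon / K on every class plus 1 - epsilon on the labelled class."""
    return [(1.0 - epsilon) * (1.0 if k == label else 0.0) + epsilon / num_classes
            for k in range(num_classes)]


def mixup(features_a, target_a, features_b, target_b, lam):
    """Convex combination of two (features, soft target) pairs with weight lam."""
    def mix(u, v):
        return [lam * x + (1.0 - lam) * y for x, y in zip(u, v)]
    return mix(features_a, features_b), mix(target_a, target_b)


# Two hypothetical instances of a 4-class problem with 2 features each.
target_a = smooth_label(1, num_classes=4, epsilon=0.2)
target_b = smooth_label(3, num_classes=4, epsilon=0.2)
mixed_x, mixed_y = mixup([1.0, 0.0], target_a, [0.0, 2.0], target_b, lam=0.75)
```

Both operations keep the targets on the probability simplex, so the resulting soft labels can be used directly with a cross-entropy or mean square error loss.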

Training paradigm change

Training paradigm changes mostly aim to improve optimization. Reweighting and curriculum learning are the most widely used.

Drawing from education, curriculum learning starts by training neural networks on easy samples (easy curriculum) and gradually progresses to more complex ones (hard curriculum). It aims to smooth the warm-up process during optimization and reach better local minima. In the context of label denoising, training can start with the clean labels identified as described in the Detection of noisy labels section.
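The easy-to-hard progression can be sketched as a simple schedule over a per-sample difficulty score. The score itself is an assumption here: it could be, for example, an early-epoch loss or an estimated probability that the label is noisy. The function name and the equal-sized stages are hypothetical choices for illustration.

```python
def curriculum_schedule(difficulty, num_stages):
    """Yield, for each stage, the indices of the samples to train on.

    Samples are ordered from easiest (lowest score) to hardest, and each
    stage admits a larger prefix of that ordering until all are included.
    """
    order = sorted(range(len(difficulty)), key=lambda i: difficulty[i])
    for stage in range(1, num_stages + 1):
        cutoff = max(1, (len(order) * stage) // num_stages)
        yield order[:cutoff]


if __name__ == "__main__":
    # Hypothetical difficulty scores, e.g. per-sample loss after warm-up.
    scores = [0.9, 0.1, 0.5, 0.3]
    for stage, indices in enumerate(curriculum_schedule(scores, 2), start=1):
        print(stage, indices)
```

A training loop would run one or more epochs on each yielded subset before moving to the next stage.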

The reweighting strategy (sometimes phrased as noise-aware loss functions18,57) increases the weights of clean data points and downweights noisy ones. Deng et al.9 weighted the instances by estimated label probability, while Ghesu et al.34 used estimated uncertainty as the weight. There are 2 concerns with this approach. First, the adjustment of weights can introduce an artificial train-test distribution mismatch. Second, while low loss or low uncertainty is usually used as the criterion for label cleanliness, instances meeting this criterion are also the “easy examples” of hard example mining. This potential contradiction with hard example mining raises questions about the impact of reweighting on model performance.
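Reweighting can be sketched as a weighted cross-entropy in which each instance's weight reflects how likely its label is to be clean. How the weights are obtained (estimated label probability, uncertainty, or another detector) is left open; the function name and the normalization by the sum of weights are assumptions for illustration.

```python
import math


def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of per-sample negative log-likelihoods.

    probs[i] is the predicted class distribution for sample i, labels[i]
    its (possibly noisy) class index, and weights[i] a non-negative weight
    that is larger for samples believed to be clean. Assumes the weights
    do not all equal zero.
    """
    total = 0.0
    for p, y, w in zip(probs, labels, weights):
        total += w * -math.log(max(p[y], 1e-12))
    return total / sum(weights)


if __name__ == "__main__":
    probs = [[0.8, 0.2], [0.3, 0.7]]
    labels = [0, 0]  # the second label disagrees with the prediction
    print(weighted_cross_entropy(probs, labels, [1.0, 1.0]))
    print(weighted_cross_entropy(probs, labels, [1.0, 0.1]))
```

Downweighting the suspicious second sample lowers its contribution to the loss, which is also why such instances, if they are in fact hard but correctly labeled, end up underemphasized.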

There are other paradigm changes in addition to curriculum learning and reweighting. Irvin et al. treated noisy labels as a distinct class rather than assigning a pseudo label.36 Algan et al.33 introduced a meta-learning strategy to generate soft labels. The goal is to produce optimal soft labels for noisy instances so that a base classifier trained on such instances performs best on a separate clean meta-dataset.

Evaluation

Evaluation is crucial yet challenging when studying noisy label handling, primarily due to the necessity of a clean dataset. Two common evaluation methods were identified from the reviewed studies (Figure 4): (1) using data with ground truth for evaluation and (2) injecting noise into training data (with the original data being used for evaluation). Some studies evaluate using the original noisy dataset, which should be discouraged since the metrics are unreliable with noisy labels.

Figure 4.

Evaluation method summary.

In the first approach, ground truth data could come from expert annotation or from the labeled portion of the data in a semi-supervised learning setting.13 In the second approach, where noise is simulated, 3 different underlying assumptions exist:3 (1) random noise, where the noise probability is independent of both features and latent true labels; (2) class-dependent noise, where the noise probability depends only on the class labels; and (3) instance-based noise,4 which assumes the noise mechanism depends on both labels and features.
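The 3 noise assumptions can be made concrete with a small simulation sketch. All function names are hypothetical, the class-dependent case is expressed through a row-stochastic transition matrix, and the instance-based case takes a user-supplied `flip_prob(x, y)` function whose form is an assumption for illustration.

```python
import random


def _other_class(y, num_classes, rng):
    """Pick a class different from y uniformly at random."""
    return rng.choice([c for c in range(num_classes) if c != y])


def inject_random_noise(labels, num_classes, rate, rng):
    """Random noise: every label flips with the same probability `rate`."""
    return [
        _other_class(y, num_classes, rng) if rng.random() < rate else y
        for y in labels
    ]


def inject_class_dependent_noise(labels, transition, rng):
    """Class-dependent noise: transition[i][j] = P(observed j | true i)."""
    classes = list(range(len(transition)))
    return [rng.choices(classes, weights=transition[y])[0] for y in labels]


def inject_instance_dependent_noise(features, labels, num_classes, flip_prob, rng):
    """Instance-based noise: flip probability depends on features and label."""
    return [
        _other_class(y, num_classes, rng) if rng.random() < flip_prob(x, y) else y
        for x, y in zip(features, labels)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    labels = [0, 1, 1, 0, 1, 0]
    feats = [[0.1], [2.0], [0.2], [3.0], [0.0], [1.5]]
    print(inject_random_noise(labels, 2, 0.2, rng))
    # Positives are mislabeled more often than negatives.
    print(inject_class_dependent_noise(labels, [[0.95, 0.05], [0.3, 0.7]], rng))
    # Hypothetical rule: samples with a small first feature are ambiguous.
    ambiguous = lambda x, y: 0.4 if abs(x[0]) < 0.5 else 0.05
    print(inject_instance_dependent_noise(feats, labels, 2, ambiguous, rng))
```

The clean labels are kept aside so that evaluation can be performed against them after training on the noisy copy.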

We suggest using ground truth data for evaluation whenever possible. While instance-based noise injection is arguably the most compelling simulation of label noise, we find it is rarely applied in practice. Moreover, the positive ratio should be kept the same after noise injection to mitigate the confounding impact of class imbalance,55 and noise may be simulated at various levels of severity.48
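One simple way to keep the positive ratio unchanged in a binary task is to flip the same number of positives and negatives, and to repeat the injection at several severities. This is only one possible design, chosen here for illustration; the function name and the severity grid are hypothetical.

```python
import random


def flip_preserving_positive_ratio(labels, flips_per_class, rng):
    """Flip an equal number of positives and negatives in a binary label list.

    Because as many 1s become 0s as 0s become 1s, the positive ratio of the
    noisy labels equals that of the original labels.
    """
    positives = [i for i, y in enumerate(labels) if y == 1]
    negatives = [i for i, y in enumerate(labels) if y == 0]
    k = min(flips_per_class, len(positives), len(negatives))
    noisy = list(labels)
    for i in rng.sample(positives, k):
        noisy[i] = 0
    for i in rng.sample(negatives, k):
        noisy[i] = 1
    return noisy


if __name__ == "__main__":
    rng = random.Random(0)
    clean = [1] * 20 + [0] * 80
    for flips in (2, 5, 10):  # increasing noise severity
        noisy = flip_preserving_positive_ratio(clean, flips, rng)
        changed = sum(a != b for a, b in zip(clean, noisy))
        print(flips, changed / len(clean), sum(noisy) / len(noisy))
```

Note that with imbalanced classes this scheme corrupts a larger share of the minority class than of the majority class, which should be kept in mind when interpreting results.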

Discussion

Medical research is intrinsically prone to noisy labels stemming from its inherent ambiguity, variations in label sources relating to data quality, dependence on labels generated by automated systems, and more. A considerable number of the papers we reviewed report substantial deterioration in model performance due to label noise. In this review, we comprehensively examine different aspects of label noise management. The adoption of noisy label handling techniques remains limited in general medical research practice; these techniques are discussed predominantly in works specifically dedicated to addressing noisy labels. Yet many of the methodologies covered in this review do not entail extensive effort and can be seamlessly integrated into existing research workflows.

How label noise is handled depends on factors such as the availability of clean data, sample size, and noise level. Approaches like label refinement and training paradigm optimization, which rely heavily on noise detection, typically require a clean dataset. In contrast, architecture change and robustness improvement usually do not have this requirement. Applying noise-handling techniques iteratively often leads to improved results, with the noise level tending to decrease with each iteration. Even though many studies filter out noisy instances entirely, this should be discouraged due to the uncertainty in noise detection. Priority should be given to reweighting or label refinement over filtering out noisy labels.42 To evaluate how well the noisy label issue is addressed, simulations should be conducted using instance-based noise. Evaluating on the original noisy dataset is not appropriate since metrics computed with noisy labels are not reliable. In real-world applications where addressing noisy labels is not the primary objective, the quality of a clean evaluation dataset becomes crucial. Although most studies use only one handling method, many of the techniques are not mutually exclusive and can be used together. We suggest treating the selection of noisy label handling techniques as a “hyperparameter” and selecting the best combination based on validation results.

Several directions deserve attention in future research. The first is to further distinguish between label noise and genuinely hard examples. Instances with a high loss or high model uncertainty have a dual interpretation: they can be perceived either as “noisy instances” in our context or as valuable “hard examples” in hard example mining. In the papers we reviewed, they are deprioritized, whereas in the hard example mining literature, they are emphasized. While some studies have attempted to address this issue, their suggested approaches are often difficult to implement and lack broad applicability. Second, learning from noisy labels is closely linked to other domains, such as semi-supervised learning, active learning, uncertainty-aware classification, ensemble learning, and regularization. Cross-disciplinary investigation could provide another promising direction for future research. Take semi-supervised learning as an example. Braun et al.42 highlighted that even state-of-the-art techniques such as unsupervised data augmentation, consistency regularization, and unsupervised contrastive learning cannot guarantee the quality of pseudo-labels. However, most of the semi-supervised learning literature pays little attention to the noisiness of pseudo-labels. Third, few studies compare the sensitivity and robustness of different backbone models to label noise. Such comparisons would help guide the selection of model architecture. Fourth, even in the broader deep learning community, there are limited studies comparing the performance of different methods and examining how to combine them more effectively. How each succeeding work addresses the weaknesses of previous studies also needs further clarification. A systematic review or benchmark study would be helpful. Lastly, label noise detection methods have not been thoroughly discussed in the existing literature. Detection methods could not only help to handle noise but also be useful in assessing the quality of evaluation data and estimating the noise ratio.

Conclusion

In this scoping review, we systematically examined the current state of research on noisy label detection and management in the medical domain. Our findings suggest that the techniques for handling noisy labels in the medical community are currently up to date with the general deep-learning community. Despite their utility, the adoption of label noise management in mainstream medical research remains limited. These methodologies are predominantly discussed in works specifically targeting the issue of noisy labels. We recommend considering label noise as a standard element in medical research. Many of these noisy label-handling methodologies (eg, reweighting, noise-robust loss, and curriculum learning) do not require extensive effort and can be seamlessly integrated into existing research workflows.

Contributor Information

Yishu Wei, Department of Population Health Sciences, Weill Cornell Medicine, New York, NY 10065, United States; Reddit Inc., San Francisco, CA 16093, United States.

Yu Deng, Center for Health Information Partnerships, Northwestern University, Chicago, IL 10611, United States.

Cong Sun, Department of Population Health Sciences, Weill Cornell Medicine, New York, NY 10065, United States.

Mingquan Lin, Department of Population Health Sciences, Weill Cornell Medicine, New York, NY 10065, United States; Department of Surgery, University of Minnesota, Minneapolis, MN 55455, United States.

Hongmei Jiang, Department of Statistics and Data Science, Northwestern University, Evanston, IL 60208, United States.

Yifan Peng, Department of Population Health Sciences, Weill Cornell Medicine, New York, NY 10065, United States.

Author contributions

Study concepts/study design: Yishu Wei, Hongmei Jiang, Yifan Peng; manuscript drafting or manuscript revision for important intellectual content: all authors; approval of the final version of the submitted manuscript: all authors; agrees to ensure any questions related to the work are appropriately resolved: all authors; literature research: Yishu Wei, Yu Deng, Cong Sun, Mingquan Lin; data interpretation: Yishu Wei, Yu Deng; and manuscript editing: all authors.

Supplementary material

Supplementary material is available at Journal of the American Medical Informatics Association online.

Funding

This work was supported by National Science Foundation CAREER Award No. 2145640 and the National Library of Medicine under Award No. R01LM014306.

Conflicts of interest

There are no competing interests to declare.

Data availability

The data underlying this article are available in the article and in its online supplementary material.

References

- 1. Salahuddin Z, Woodruff HC, Chatterjee A, Lambin P. Transparency of deep neural networks for medical image analysis: a review of interpretability methods. Comput Biol Med. 2022;140:105111.

- 2. Wallace DK, Quinn GE, Freedman SF, Chiang MF. Agreement among pediatric ophthalmologists in diagnosing plus and pre-plus disease in retinopathy of prematurity. J AAPOS. 2008;12(4):352-356.

- 3. Frénay B, Kabán A. A comprehensive introduction to label noise. In: The European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning. i6doc.com publ; 2014:23-25.

- 4. Song H, Kim M, Park D, Shin Y, Lee JG. Learning from noisy labels with deep neural networks: a survey. IEEE Trans Neural Netw Learn Syst. 2023;34(11):8135-8153.

- 5. Algan G, Ulusoy I. Image classification with deep learning in the presence of noisy labels: a survey. Knowledge-Based Syst. 2021;215:106771.

- 6. Liang X, Liu X, Yao L. Review—a survey of learning from noisy labels. ECS Sens Plus. 2022;1(2):021401.

- 7. Karimi D, Dou H, Warfield SK, Gholipour A. Deep learning with noisy labels: exploring techniques and remedies in medical image analysis. Med Image Anal. 2020;65:101759.

- 8. Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467-473.

- 9. Deng Y, Bao F, Deng X, Wang R, Kong Y, Dai Q. Deep and structured robust information theoretic learning for image analysis. IEEE Trans on Image Process. 2016;25(9):1-21.

- 10. Dgani Y, Greenspan H, Goldberger J. Training a neural network based on unreliable human annotation of medical images. In: 2018 IEEE 15th International symposium on biomedical imaging (ISBI 2018). IEEE; 2018:39-42.

- 11. Xue C, Dou Q, Shi X, Chen H, Heng PA. Robust learning at noisy labeled medical images: applied to skin lesion classification. In: 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019). IEEE; 2019:1280-1283.

- 12. Ju L, Wang X, Wang L, et al. Improving medical images classification with label noise using dual-uncertainty estimation. IEEE Trans Med Imaging. 2022;41(6):1533-1546.

- 13. Xue C, Yu L, Chen P, Dou Q, Heng PA. Robust medical image classification from noisy labeled data with global and local representation guided co-training. IEEE Trans Med Imaging. 2022;41(6):1371-1382.

- 14. Jiang H, Gao M, Hu Y, Ren Q, Xie Z, Liu J. 2023. Label-noise-tolerant medical image classification via self-attention and self-supervised learning. arXiv, arXiv:2306.09718, preprint: not peer reviewed.

- 15. Hu K, Huang Y, Huang W, et al. Deep supervised learning using self-adaptive auxiliary loss for COVID-19 diagnosis from imbalanced CT images. Neurocomputing (Amst). 2021;458:232-245.

- 16. Lee J, Prabhu D, Kolluru C, et al. Fully automated plaque characterization in intravascular OCT images using hybrid convolutional and lumen morphology features. Sci Rep. 2020;10(1):2596.

- 17. Kurita Y, Meguro S, Tsuyama N, et al. Accurate deep learning model using semi-supervised learning and noisy student for cervical cancer screening in low magnification images. PLoS One. 2023;18(5):e0285996.

- 18. Fries JA, Varma P, Chen VS, et al. Weakly supervised classification of aortic valve malformations using unlabeled cardiac MRI sequences. Nat Commun. 2019;10(1):3111.

- 19. Wong DR, Tang Z, Mew NC, et al. Deep learning from multiple experts improves identification of amyloid neuropathologies. Acta Neuropathol Commun. 2022;10(1):66.

- 20. Ashraf M, Robles WRQ, Kim M, Ko YS, Yi MY. A loss-based patch label denoising method for improving whole-slide image analysis using a convolutional neural network. Sci Rep. 2022;12(1):1392.

- 21. Ying X, Liu H, Huang R. COVID-19 chest X-ray image classification in the presence of noisy labels. Displays. 2023;77:102370.

- 22. López-Pérez M, Amgad M, Morales-Álvarez P, et al. Learning from crowds in digital pathology using scalable variational Gaussian processes. Sci Rep. 2021;11(1):11612.

- 23. Karimi D, Peters JM, Ouaalam A, et al. Learning to detect brain lesions from noisy annotations. In: 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI). IEEE; 2020:1910-1914.

- 24. Dikici E, Nguyen XV, Bigelow M, Ryu JL, Prevedello LM. Advancing brain metastases detection in T1-weighted contrast-enhanced 3D MRI using noisy student-based training. Diagnostics. 2022;12(8):2023.

- 25. Momeny M, Neshat AA, Hussain MA, et al. Learning-to-augment strategy using noisy and denoised data: improving generalizability of deep CNN for the detection of COVID-19 in X-ray images. Comput Biol Med. 2021;136:104704.

- 26. Jaiswal A, Ashutosh K, Rousseau JF, Peng Y, Wang Z, Ding Y. Ros-kd: a robust stochastic knowledge distillation approach for noisy medical imaging. In: 2022 IEEE International Conference on Data Mining (ICDM). IEEE; 2022:981-986.

- 27. Pulido JV, Guleria S, Ehsan L, et al. Semi-supervised classification of noisy, gigapixel histology images. In: 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE). IEEE; 2020:563-568.

- 28. Paul A, Shen TC, Lee S, et al. Generalized zero-shot chest x-ray diagnosis through trait-guided multi-view semantic embedding with self-training. IEEE Trans Med Imaging. 2021;40(10):2642-2655.

- 29. Li W, Li J, Wang Z, et al. Pathal: an active learning framework for histopathology image analysis. IEEE Trans Med Imaging. 2021;41(5):1176-1187.

- 30. Gu Y, Shen M, Yang J, Yang GZ. Reliable label-efficient learning for biomedical image recognition. IEEE Trans Biomed Eng. 2018;66(9):2423-2432.

- 31. Zhang S, Yuan Z, Wang Y, Bai Y, Chen B, Wang H. REUR: a unified deep framework for signet ring cell detection in low-resolution pathological images. Comput Biol Med. 2021;136:104711.

- 32. Gündel S, Setio AAA, Ghesu FC, et al. Robust classification from noisy labels: integrating additional knowledge for chest radiography abnormality assessment. Med Image Anal. 2021;72:102087.

- 33. Algan G, Ulusoy I, Gönül Ş, Turgut B, Bakbak B. 2020. Deep learning from small amount of medical data with noisy labels: a meta-learning approach. arXiv, arXiv:2010.06939, preprint: not peer reviewed.

- 34. Ghesu FC, Georgescu B, Gibson E, et al. Quantifying and leveraging classification uncertainty for chest radiograph assessment. In: Medical Image Computing and Computer Assisted Intervention-MICCAI 2019: 22nd International Conference, Shenzhen, China, October 13-17, 2019, Proceedings, Part VI 22. Springer; 2019:676-684.

- 35. Pham HH, Le TT, Tran DQ, Ngo DT, Nguyen HQ. Interpreting chest X-rays via CNNs that exploit hierarchical disease dependencies and uncertainty labels. Neurocomputing. 2021;437:186-194.

- 36. Irvin J, Rajpurkar P, Ko M, et al. Chexpert: a large chest radiograph dataset with uncertainty labels and expert comparison. In: Proceedings of the AAAI conference on artificial intelligence. Vol. 33. Association for the Advancement of Artificial Intelligence; 2019:590-597.

- 37. Li J, Cao H, Wang J, et al. Learning robust classifier for imbalanced medical image dataset with noisy labels by minimizing invariant Risk. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2023:306-316.

- 38. Chen H, Tan W, Li J, et al. Adaptive cross entropy for ultrasmall object detection in computed tomography with noisy labels. Comput Biol Med. 2022;147:105763.

- 39. Xiang J, Wang X, Wang X, et al. Automatic diagnosis and grading of prostate cancer with weakly supervised learning on whole slide images. Comput Biol Med. 2023;152:106340.

- 40. Del Amor R, Silva-Rodríguez J, Naranjo V. Labeling confidence for uncertainty-aware histology image classification. Comput Med Imaging Graph. 2023;107:102231.

- 41. Calli E, Sogancioglu E, Scholten ET, Murphy K, van Ginneken B. Handling label noise through model confidence and uncertainty: application to chest radiograph classification. In: Medical Imaging 2019: Computer-Aided Diagnosis. Vol. 10950. SPIE; 2019:289-296.

- 42. Braun J, Kornreich M, Park J, et al. Influence based re-weighing for labeling noise in medical imaging. In: 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI). IEEE; 2022:1-5.

- 43. Jiménez-Sánchez A, Mateus D, Kirchhoff S, et al. Curriculum learning for improved femur fracture classification: scheduling data with prior knowledge and uncertainty. Med Image Anal. 2022;75:102273.

- 44. Liu J, Li R, Sun C. Co-correcting: noise-tolerant medical image classification via mutual label correction. IEEE Trans Med Imaging. 2021;40(12):3580-3592.

- 45. Hu T, Yang B, Guo J, et al. A fundus image classification framework for learning with noisy labels. Comput Med Imaging Graph. 2023;108:102278.

- 46. Shi P, Xin J, Zheng N. Correcting pseudo labels with label distribution for unsupervised domain adaptive vulnerable plaque detection. In: 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC). IEEE; 2021:3225-3228.

- 47. Gao Z, Chen Y, Sun P, Liu H, Lu Y. Clinical knowledge embedded method based on multi-task learning for thyroid nodule classification with ultrasound images. Phys Med Biol. 2023;68(4):045018.

- 48. Gao M, Feng X, Geng M, et al. Bayesian statistics-guided label refurbishment mechanism: mitigating label noise in medical image classification. Med Phys. 2022;49(9):5899-5913.

- 49. Zhou S, Tian S, Yu L, et al. ReFixMatch-LS: reusing pseudo-labels for semi-supervised skin lesion classification. Med Biol Eng Comput. 2023;61(5):1033-1045.

- 50. Zhu M, Zhang L, Wang L, Li D, Zhang J, Yi Z. Robust co-teaching learning with consistency-based noisy label correction for medical image classification. Int J Comput Assist Radiol Surg. 2023;18(4):675-683.

- 51. Javadi G, Samadi S, Bayat S, et al. Training deep neural networks with noisy clinical labels: toward accurate detection of prostate cancer in US data. Int J Comput Assist Radiol Surg. 2022;17(9):1697-1705.

- 52. Chen B, Ye Z, Liu Y, et al. Combating medical label noise via robust semi-supervised contrastive learning. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2023:562-572.

- 53. Chen Y, Liu F, Wang H, et al. Bomd: bag of multi-label descriptors for noisy chest x-ray classification. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. IEEE; 2023:21284-21295.

- 54. Boughorbel S, Jarray F, Venugopal N, Elhadi H. 2018. Alternating loss correction for preterm-birth prediction from EHR data with noisy labels. arXiv, arXiv:1811.09782, preprint: not peer reviewed.

- 55. Yang J, Triendl H, Soltan AA, Prakash M, Clifton DA. Addressing label noise for electronic health records: insights from computer vision for tabular data. medRxiv. 2023:2023-10.

- 56. Murray SG, Avati A, Schmajuk G, Yazdany J. Automated and flexible identification of complex disease: building a model for systemic lupus erythematosus using noisy labeling. J Am Med Inform Assoc. 2019;26(1):61-65.

- 57. Dhrangadhariya A, Müller H. Not so weak PICO: leveraging weak supervision for participants, interventions, and outcomes recognition for systematic review automation. JAMIA Open. 2023;6(1):ooac107.

- 58. Li Z, Gan Z, Zhang B, et al. Semi-supervised noisy label learning for Chinese clinical named entity recognition. Data Intelligence. 2021;3(3):389-401.

- 59. Vázquez CG, Breuss A, Gnarra O, Portmann J, Madaffari A, Da Poian G. Label noise and self-learning label correction in cardiac abnormalities classification. Physiol Meas. 2022;43(9):094001.

- 60. de Vos BD, Jansen GE, Išgum I. Stochastic co-teaching for training neural networks with unknown levels of label noise. Sci Rep. 2023;13(1):16875.

- 61. Baghel N, Dutta MK, Burget R. Automatic diagnosis of multiple cardiac diseases from PCG signals using convolutional neural network. Comput Methods Programs Biomed. 2020;197:105750.

- 62. Vázquez CG, Breuss A, Gnarra O, Portmann J, Da Poian G. Two will do: CNN with asymmetric loss, self-learning label correction, and hand-crafted features for imbalanced multi-label ECG data classification. In: 2021 Computing in Cardiology (CinC). Vol. 48. IEEE; 2021:1-4.

- 63. Ding C, Guo Z, Shah A, Clifford G, Rudin C, Hu X. 2022. Learning from alarms: a robust learning approach for accurate photoplethysmography-based atrial fibrillation detection using eight million samples labeled with imprecise arrhythmia alarms. arXiv, arXiv:2211.03333, preprint: not peer reviewed.

- 64. Hong C, Liang L, Yuan Q, et al. Semi-Supervised Calibration of Noisy Event Risk (SCANER) with electronic health records. J Biomed Inform. 2023;144:104425.

- 65. Ren J, Liu Y, Zhu X, et al. OCRFinder: a noise-tolerance machine learning method for accurately estimating open chromatin regions. Front Genet. 2023;14:1184744.

- 66. Tjandra D, Wiens J. Leveraging an alignment set in tackling instance-dependent label noise. In: Conference on Health, Inference, and Learning. PMLR; 2023:477-497.

- 67. Vernekar S, Ayyar A, Rajagopalan A, Kumar B, Mishra VK. Improving medical predictions with label noise tolerant classification. In: 2022 4th International Conference on Advances in Computing, Communication Control and Networking (ICAC3N). IEEE; 2022:765-769.

- 68. Xu J, Yang Y, Yang P. Hybrid label noise correction algorithm for medical auxiliary diagnosis. In: 2020 IEEE 18th International Conference on Industrial Informatics (INDIN). Vol. 1. IEEE; 2020:567-572.

- 69. Brady AP. Error and discrepancy in radiology: inevitable or avoidable? Insights Imaging. 2017;8(1):171-182.

- 70. Lu H, Lee J, Ray S, et al. Automated stent coverage analysis in intravascular OCT (IVOCT) image volumes using a support vector machine and mesh growing. Biomed Opt Express. 2019;10(6):2809-2828.

- 71. Hekler A, Kather JN, Krieghoff-Henning E, et al. Effects of label noise on deep learning-based skin cancer classification. Front Med (Lausanne). 2020;7:177.

- 72. Campbell JP, Kalpathy-Cramer J, Erdogmus D, et al. Plus disease in ROP: why do experts disagree, and how can we improve diagnosis? J Am Assoc Pediatr Ophthalmol Strabismus. 2017;21(4):e5-e6.

- 73. Cosentino J, Behsaz B, Alipanahi B, et al. Inference of chronic obstructive pulmonary disease with deep learning on raw spirograms identifies new genetic loci and improves risk models. Nat Genet. 2023;55(5):787-795.

- 74. Ding C, Pereira T, Xiao R, Lee RJ, Hu X. Impact of label noise on the learning based models for a binary classification of physiological signal. Sensors. 2022;22(19):7166.

- 75. Pechenizkiy M, Tsymbal A, Puuronen S, Pechenizkiy O. Class noise and supervised learning in medical domains: the effect of feature extraction. In: 19th IEEE symposium on computer-based medical systems (CBMS’06). IEEE; 2006:708-713.

- 76. Potapenko I, Kristensen M, Thiesson B, et al. Detection of oedema on optical coherence tomography images using deep learning model trained on noisy clinical data. Acta Ophthalmol. 2022;100(1):103-110.

- 77. Khanal B, Hasan SK, Khanal B, Linte CA. Investigating the impact of class-dependent label noise in medical image classification. In: Medical Imaging 2023: Image Processing. Vol. 12464. SPIE; 2023:728-733.

- 78. Samala RK, Chan HP, Hadjiiski LM, Helvie MA, Richter CD. Generalization error analysis for deep convolutional neural network with transfer learning in breast cancer diagnosis. Phys Med Biol. 2020;65(10):105002.

- 79. Büttner M, Schneider L, Krasowski A, Krois J, Feldberg B, Schwendicke F. Impact of noisy labels on dental deep learning—calculus detection on bitewing radiographs. J Clin Med. 2023;12(9):3058.

- 80. Jang R, Kim N, Jang M, et al. Assessment of the robustness of convolutional neural networks in labeling noise by using chest X-ray images from multiple centers. JMIR Med Inform. 2020;8(8):e18089.

- 81. Petersen E, Holm S, Ganz M, Feragen A. The path toward equal performance in medical machine learning. Patterns. 2023;4(7):100790.

- 82. Liu T, Tao D. Classification with noisy labels by importance reweighting. IEEE Trans Pattern Anal Mach Intell. 2015;38(3):447-461.

- 83. Patrini G, Rozza A, Krishna Menon A, Nock R, Qu L. Making deep neural networks robust to label noise: a loss correction approach. In: Proceedings of the IEEE conference on computer vision and pattern recognition. IEEE; 2017:1944-1952.

- 84. Goldberger J, Ben-Reuven E. Training deep neural-networks using a noise adaptation layer. In: International Conference on Learning Representations. ICLR; 2016:1-9.
