Abstract

Objective

ModelDB (https://modeldb.science) is a discovery platform for computational neuroscience, containing over 1850 published model codes with standardized metadata. These codes were mainly supplied through unsolicited submissions from model authors, but this approach is inherently limited. For example, we estimate we have captured only around one-third of NEURON models, the most common type of model in ModelDB. To more completely characterize the state of computational neuroscience modeling work, we aim to identify works containing results derived from computational neuroscience approaches, together with their associated standardized metadata (eg, cell types, research topics).

Materials and Methods

Known computational neuroscience work from ModelDB and neuroscience work identified by querying PubMed were included in our study. After pre-screening with SPECTER2 (a free document embedding method), GPT-3.5 and GPT-4 were used to identify likely computational neuroscience work and relevant metadata.

Results

SPECTER2, GPT-4, and GPT-3.5 showed varied but generally high ability to identify computational neuroscience work. GPT-4 achieved 96.9% accuracy, and GPT-3.5 improved from 54.2% to 85.5% through instruction-tuning and Chain of Thought. GPT-4 also showed high potential for identifying relevant metadata annotations.

Discussion

Accuracy in identification and extraction might be further improved by resolving ambiguity about what counts as a computational element, including more information from papers (eg, the Methods section), and improving prompts.

Conclusion

Natural language processing and large language model techniques can be added to ModelDB to facilitate further model discovery, and will contribute to a more standardized and comprehensive framework for establishing domain-specific resources.

Keywords: natural language processing, large language model, computational neuroscience, automated curation

Introduction

Over the years, numerous informatics resources have been developed to aggregate human knowledge, from generalist resources like Wikidata1 to domain-specific scientific resources like GenBank2 for nucleotide sequences and NeuroMorpho.Org3 for neuron morphologies. Researchers are often obligated to submit nucleotide sequences to GenBank, but for many other types of scientific products researchers have no such obligation, so these products are sometimes not shared at all or not shared in a consistent manner (eg, with standardized metadata). The broader scientific community, however, benefits most when scientific products are widely available, as this allows researchers to readily build on prior work, especially when the scientific products are available in a standardized form.4–8

Domain-specific knowledge-bases attempt to address this need but face at least 3 major challenges: (i) identifying relevant publications; (ii) identifying relevant metadata; (iii) obtaining additional needed details not present in the corresponding publication. Monitoring the literature is non-trivial; PubMed lists over 1.7 million publications for 2022. Even once the literature is filtered to a specific field, the scientific product and relevant metadata desired by knowledge-bases are often not explicitly mentioned in the title or abstract, so determining the relevance of a given paper may require carefully reading the full text. Traditionally, these challenges are addressed by human curators, but this requires both significant domain knowledge and time. Established repositories with community support may receive community contributions. Individual researchers know their work best and are, for that reason, in the best position to identify relevant scientific products and metadata. However, contributing researchers are unlikely to be experts on the ontologies used by a specific repository. To streamline operations, some knowledge-bases have turned to rule-based approaches (eg,9) or Natural Language Processing (NLP) techniques such as a custom BERT-based model (eg,10) to partly automate metadata curation, but these approaches often do not generalize well between knowledge-bases.

We describe a generalizable, cost-effective approach for identifying papers containing or using a given type of scientific product and assigning associated metadata. Our approach uses document-embeddings of core pre-identified papers to systematically pre-screen publications. We use a large-language model (LLM) to confirm inclusion criteria and identify the presence-or-absence of categories of metadata in the abstract. For identified categories, the LLM is used to identify relevant metadata annotations from a list of terms specified by the knowledge-base. We demonstrate the utility of this approach by applying it to identify papers and metadata for inclusion in ModelDB, a discovery tool for computational neuroscience research.11

Materials and methods

Corpus acquisition

Our corpus includes 1564 abstracts (more precisely, titles and abstracts, referred to as “abstracts” for convenience) of computational neuroscience models from ModelDB, as well as generic neuroscience abstracts from 2022 extracted from PubMed with MeSH terms under either C10 (“Nervous System Diseases”) or A08 (“Nervous System”). Our final corpus included a total of 105 502 neuroscience-related abstracts from 2022.

PCA general separability

We applied Principal Component Analysis (PCA) to SPECTER2 embeddings12 of a set of 3264 abstracts: 1564 describing known computational models from ModelDB and 1700 generic neuroscience works (identified from PubMed by C10 or A08 MeSH terms).12 SPECTER2 is a BERT-based13 document-embedding method that transforms paper titles and abstracts into a 768-dimensional vector; it was trained using citation information, which allowed it to learn to map related papers to nearby vectors. As with any vector-based data, PCA can be used to reduce the dimensionality of SPECTER2 embeddings while preserving the relationships between embeddings and most of their variability. PCA yields a denser, lower-dimensional dataset that can then be analyzed with tools like k-nearest neighbors (KNN), which work best in low dimensions.
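As an illustration of the projection step only, the sketch below computes PCA through a singular value decomposition of the centered embedding matrix using NumPy. It is a minimal sketch under stated assumptions, not the authors' code: the 768-dimensional vectors are synthetic stand-ins for SPECTER2 output, and the function name `pca_project` is hypothetical.

```python
import numpy as np


def pca_project(embeddings: np.ndarray, n_components: int = 2) -> np.ndarray:
    """Project row vectors onto their top `n_components` principal components."""
    # Center each dimension so that the components describe variance about the mean.
    centered = embeddings - embeddings.mean(axis=0)
    # Rows of `vt` are the principal axes, ordered by decreasing singular value
    # (equivalently, by decreasing explained variance).
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


# Synthetic stand-in for SPECTER2 output: two groups of 768-d vectors whose means
# differ, loosely mimicking "ModelDB model" vs "generic neuroscience" abstracts.
rng = np.random.default_rng(0)
models = rng.normal(0.5, 1.0, size=(50, 768))
generic = rng.normal(-0.5, 1.0, size=(50, 768))
projected = pca_project(np.vstack([models, generic]))

# Group means along the first (index 0) component should be well separated.
gap = projected[:50, 0].mean() - projected[50:, 0].mean()
print(projected.shape, round(abs(gap), 1))
```

The sign of a principal component is arbitrary, so separation is judged by the absolute gap between group means rather than by which group lies on which side.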

KNN-based approach with SPECTER2 embeddings

We explored the vector space of SPECTER2 embeddings of the 1564 known computational works and 1700 generic neuroscience works by examining each abstract's nearest neighbors among the known computational works from ModelDB. Specifically, for each k we calculated 2 values as functions of a distance d: the fraction of models whose kth nearest model is within distance d in the set of models, and the fraction of generic neuroscience works whose kth nearest model is within distance d. The distance at which the difference between these 2 fractions is maximal was taken as the “optimal” distance for that k. Accordingly, if an unknown abstract’s kth nearest model was within the corresponding “optimal” distance, the abstract was deemed likely to include computational work.
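The threshold search described above can be sketched in a few lines. This is a minimal sketch under assumptions not stated in the text: distances are Euclidean (`math.dist`; a cosine distance could be passed via `dist` instead), each known model is compared only against the other models so that it is not its own neighbor, and the candidate distances form a caller-supplied grid. The function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]
Distance = Callable[[Vector, Vector], float]


def kth_nearest(query: Vector, models: Sequence[Vector], k: int, dist: Distance = math.dist) -> float:
    """Distance from `query` to its kth nearest known model (k is 1-based)."""
    return sorted(dist(query, m) for m in models)[k - 1]


def optimal_threshold(models: List[Vector], generic: List[Vector], k: int,
                      candidates: Sequence[float], dist: Distance = math.dist) -> Tuple[float, float]:
    """Return (d, gap) maximizing frac(models within d) - frac(generic within d)."""
    # For each known model, search among the *other* models (assumption: self excluded).
    model_d = [kth_nearest(m, models[:i] + models[i + 1:], k, dist) for i, m in enumerate(models)]
    generic_d = [kth_nearest(g, models, k, dist) for g in generic]
    best_d, best_gap = candidates[0], -math.inf
    for d in candidates:
        frac_models = sum(x <= d for x in model_d) / len(model_d)
        frac_generic = sum(x <= d for x in generic_d) / len(generic_d)
        if frac_models - frac_generic > best_gap:
            best_d, best_gap = d, frac_models - frac_generic
    return best_d, best_gap


# Toy 2-D example: one "generic" point sits inside the model cluster.
models = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1)]
generic = [(1.0, 1.0), (1.1, 1.0), (0.05, 0.05)]
d, gap = optimal_threshold(models, generic, k=2, candidates=[0.05, 0.15, 0.5, 1.5])
```

In the toy data, the generic point inside the model cluster keeps the best gap below 1, echoing the observation that the generic set may itself contain some computational work.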

With our test dataset, we recorded the distance to the kth nearest model for each abstract for different k values (we considered k = 5, 10, 50, and 100), and sorted the abstracts by this distance. We then explored 2 hypotheses: first, abstracts with shorter distances are more likely to be computational; second, as k increases, more non-computational work may appear among the abstracts with the smallest distances. To assess these hypotheses, for each k, the 100 test abstracts with the smallest distances were annotated by 2 authors with backgrounds in health informatics (S.G., Z.J.) to establish a gold standard for whether or not they include computational work.
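The ranking step can be sketched as follows, assuming Euclidean distance and a dictionary of hypothetical paper identifiers mapped to embedding vectors. It is an illustrative sketch, not the study's implementation; `rank_by_kth_model` is a hypothetical name.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def rank_by_kth_model(test: Dict[str, Sequence[float]], models: List[Sequence[float]],
                      k: int, top_n: int = 100) -> List[Tuple[str, float]]:
    """Sort test abstracts by distance to their kth nearest known model; keep the closest `top_n`."""
    scored = []
    for paper_id, vec in test.items():
        # heapq.nsmallest returns the k smallest distances in ascending order.
        kth = heapq.nsmallest(k, (math.dist(vec, m) for m in models))[-1]
        scored.append((paper_id, kth))
    scored.sort(key=lambda item: item[1])
    return scored[:top_n]


models = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0)]
test = {"a": (0.1, 0.1), "b": (5.0, 5.0), "c": (0.5, 0.5)}
print([pid for pid, _ in rank_by_kth_model(test, models, k=1, top_n=2)])  # ['a', 'c']
print([pid for pid, _ in rank_by_kth_model(test, models, k=2, top_n=2)])  # ['c', 'a']
```

The toy example shows why k matters: abstract "a" is closest to a single model, whereas "c" sits at a moderate distance from several, so the ordering flips between k = 1 and k = 2.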

Prompt engineering with GPT-3.5 and GPT-4

Works identified by the SPECTER2 embeddings as likely to use computational neuroscience were further screened by GPT-3.5 and GPT-4. Prompts were written to account for the distinction between computational neuroscience and models in other categories (ie, statistical models and biophysics models), as shown below:

You are an expert in computational neuroscience, reviewing papers for possible inclusion in a repository of computational neuroscience. This database includes papers that use computational models written in any programming language for any tool, but they all must have a mechanistic component for getting insight into the function of individual neurons, networks of neurons, or of the nervous system in health or disease. Suppose that a paper has a title and abstract as indicated below. Respond with “yes” if the paper likely uses computational neuroscience approaches (eg, simulation with a mechanistic model), and “no” otherwise. In particular, respond “yes” for a paper that uses both computational neuroscience and other approaches. Respond “no” for a paper that uses machine learning to make predictions about the nervous system but does not include a mechanistic model. Respond “no” for purely experimental papers. Provide no other output.

Title: {title}

Abstract: {abstract}
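Filling this template and interpreting the one-word reply can be sketched as below. This is a minimal sketch: the instruction string is abbreviated, the API request itself (model name, temperature) is deliberately omitted, and `build_prompt` and `parse_yes_no` are hypothetical helpers rather than part of any library.

```python
from typing import Optional

# Abbreviated: in practice this string would hold the full instruction text quoted above.
INSTRUCTIONS = (
    "You are an expert in computational neuroscience, reviewing papers for possible "
    "inclusion in a repository of computational neuroscience. ..."
)
TEMPLATE = INSTRUCTIONS + "\n\nTitle: {title}\n\nAbstract: {abstract}"


def build_prompt(title: str, abstract: str) -> str:
    """Fill the template; braces inside the title/abstract are safe because only TEMPLATE is parsed."""
    return TEMPLATE.format(title=title, abstract=abstract)


def parse_yes_no(reply: str) -> Optional[bool]:
    """Map a one-word reply to True ("yes") or False ("no"); None for anything else."""
    word = reply.strip().strip('"“”.').lower()
    if word == "yes":
        return True
    if word == "no":
        return False
    return None
```

Returning `None` for unexpected replies keeps malformed outputs visible instead of silently counting them as negatives.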

GPT-4 was queried for each title and abstract in our corpus using this prompt. We varied the temperature (randomness) used by GPT-3.5 and GPT-4 and used voting across repeated queries to assess how this parameter affected the classification of computational versus non-computational papers. We later used an expanded prompt for GPT-3.5 that steps through the classification in a version of Chain-of-Thought (CoT)14 reasoning.
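Voting over repeated queries at a fixed temperature can be sketched as below. Here `ask` is a hypothetical callable standing in for whatever wrapper sends a prompt at a given temperature and returns the reply text; how the study broke ties (possible once “unsure” is allowed) is not stated, so returning `None` on a tie is an assumption.

```python
from collections import Counter
from typing import Callable, Optional, Sequence


def majority_vote(answers: Sequence[str]) -> Optional[str]:
    """Most common normalized answer, or None when the top answers tie."""
    counts = Counter(a.strip().lower() for a in answers).most_common()
    if not counts:
        return None
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None  # e.g. "yes", "no", "unsure" from three calls
    return counts[0][0]


def classify_with_votes(ask: Callable[[str, float], str], prompt: str,
                        temperature: float, n_calls: int = 3) -> Optional[str]:
    """Query `ask` (hypothetical API wrapper) n_calls times and combine replies by majority vote."""
    return majority_vote([ask(prompt, temperature) for _ in range(n_calls)])
```

With only “yes” and “no” as possible answers, an odd number of calls can never tie.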

Result evaluation through annotation agreement

SPECTER2, GPT-3.5, and GPT-4 performance was evaluated against a ground truth established through inter-annotator agreement (measured with Cohen’s kappa coefficient). Annotation followed a systematic protocol, with the criteria for classifying work as computational or non-computational agreed on in advance to minimize annotator bias:

Annotators read the title and abstract of each paper to check whether any keywords or concepts related to computational neuroscience are present;

When computational keywords are present, annotators consider the context of the paper to determine whether those keywords and concepts are relevant or are mentioned only for comparison;

To support their decisions, annotators also consult other sections of the paper, especially the Methods section, where they can identify methods that may appear computational on the surface but should not be counted, such as statistical models, meta-analyses, and reviews;

After completing this process, annotators verify each other’s results to catch any discrepancies in detail that could affect annotation quality;

The ground truth used to evaluate model performance is then established from each annotator’s independent output.
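For reference, Cohen’s kappa compares observed agreement with the agreement expected by chance from each rater’s label frequencies. The pure-Python sketch below illustrates the formula; scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same quantity, and the function name here is only illustrative.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labeling the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(a)
    # Observed agreement: fraction of items given the same label by both raters.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    ca, cb = Counter(a), Counter(b)
    p_e = sum(ca[label] * cb[label] for label in ca.keys() | cb.keys()) / (n * n)
    if p_e == 1.0:
        return math.nan  # both raters used one identical label throughout; kappa is undefined
    return (p_o - p_e) / (1 - p_e)
```

For example, two raters agreeing on 3 of 4 items, with label frequencies of 2/2 and 1/3, have observed agreement 0.75, chance agreement 0.5, and kappa 0.5.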

Metadata identification

In addition to classifying papers by academic field, we assessed the positive predictive value (PPV) of metadata identification as follows: GPT-4 identified metadata for 115 PubMed papers drawn from the k = 5 group with the smallest distances, using queries containing ModelDB terminology lists for paper concepts, regions of interest, ion channels, cell types, receptors, and transmitters. These prompts stressed that answers should come only from the supplied terminology lists, which GPT-4 mostly respected. (Attempts to use GPT-3.5 led to high numbers of off-list suggestions and were not pursued further.)
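Because off-list suggestions must be caught, a reply can be checked against the supplied terminology before use. The sketch below assumes, without confirmation from the text, that the model is asked to return one term per line, possibly with bullets or numbering. Splitting on commas would be unsafe, since some ModelDB terms contain them (eg, “I Na,t”). `split_on_list` is a hypothetical helper.

```python
import re
from typing import Iterable, List, Tuple

# Leading bullet or list number, e.g. "- ", "* ", "• ", "2. ", "3) ".
_BULLET = re.compile(r"^\s*(?:[-*•]|\d+[.)])\s*")


def split_on_list(reply: str, vocabulary: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split a one-term-per-line reply into vocabulary terms and off-list suggestions."""
    canonical = {term.casefold(): term for term in vocabulary}
    on_list: List[str] = []
    off_list: List[str] = []
    for line in reply.splitlines():
        term = _BULLET.sub("", line).strip().strip('"“”')
        if not term:
            continue
        match = canonical.get(term.casefold())
        if match is None:
            off_list.append(term)
        elif match not in on_list:
            on_list.append(match)  # keep the vocabulary's own spelling, once
    return on_list, off_list
```

Counting the off-list entries per reply gives a simple measure of how well a model respects the terminology constraint.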

To assess the accuracy of GPT-4’s metadata identification, 2 annotators with health informatics (AG) and biostatistics (YQ) backgrounds manually validated the GPT-4 identifications, disregarding imprecision in certain keywords that resulted from having to select terms from ModelDB’s existing terminology.

This validation process assigned 1 of 3 ratings to each metadata tag: “Correct,” “Incorrect,” and “Borderline.” While “Correct” and “Incorrect” directly determine the performance of the model, “Borderline” captures keywords that appear in the text but are not directly relevant to the paper, as well as terms that match concepts in ModelDB’s terminology but are not entirely correct at the given level of granularity. For instance, in “Dopamine depletion can be predicted by the aperiodic component of subthalamic local field potentials,” the cell type “Dopaminergic substantia nigra neuron” is not explicitly mentioned in the abstract but is referenced to introduce the focus of the paper.15
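Given these three ratings, PPV per category can be computed as the share of predicted tags judged correct. The text does not say how “Borderline” tags enter the PPV, so the sketch below treats that choice as an explicit, assumed switch; the function and constant names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

RATINGS = ("Correct", "Borderline", "Incorrect")


def ppv_by_category(rated_tags: Iterable[Tuple[str, str]],
                    borderline_as_correct: bool = False) -> Dict[str, float]:
    """PPV per metadata category from (category, rating) pairs."""
    tallies: Dict[str, Counter] = defaultdict(Counter)
    for category, rating in rated_tags:
        if rating not in RATINGS:
            raise ValueError(f"unexpected rating: {rating!r}")
        tallies[category][rating] += 1
    result = {}
    for category, c in tallies.items():
        # Assumption: Borderline tags count as hits only when the switch is set.
        hits = c["Correct"] + (c["Borderline"] if borderline_as_correct else 0)
        result[category] = hits / sum(c.values())
    return result
```

Reporting both settings brackets the PPV between a strict and a lenient reading of “Borderline.”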

Results

For this study, we restricted our attention to neuroscience papers published in 2022, because this period falls after the training cutoff dates of GPT-3.5 and GPT-4 and has a defined end date, allowing a complete set of papers.

Identification of candidate papers

As described previously, we included computational neuroscience models from ModelDB and generic neuroscience papers from PubMed published in 2022. PubMed lists 1 771 881 papers published between January 1, 2022 and December 31, 2022. Because 84.5% of ModelDB models with MeSH terms had entries in the C10 or A08 subtrees, we used the presence of these subtrees as a proxy for an article being about neuroscience. Using this criterion, we found 105 202 neuroscience-related papers in PubMed from 2022.
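The subtree criterion reduces to a prefix test on MeSH tree numbers. This sketch assumes each article’s MeSH descriptors have already been mapped to tree numbers using the MeSH descriptor data (a step not shown); the tree numbers in the usage lines are illustrative only.

```python
from typing import Iterable, Sequence

NEURO_ROOTS = ("C10", "A08")  # Nervous System Diseases, Nervous System


def in_neuro_subtrees(tree_numbers: Iterable[str], roots: Sequence[str] = NEURO_ROOTS) -> bool:
    """True if any MeSH tree number is one of the roots or lies beneath one of them."""
    # Matching on "root." avoids false hits on a hypothetical sibling such as "C100".
    return any(tn == root or tn.startswith(root + ".")
               for tn in tree_numbers for root in roots)


print(in_neuro_subtrees(["C14.280", "C10.228.140"]))  # True
print(in_neuro_subtrees(["C14.280", "D12.776"]))      # False
```

Because tree numbers are hierarchical, a single prefix check captures every descendant term of the two subtrees.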

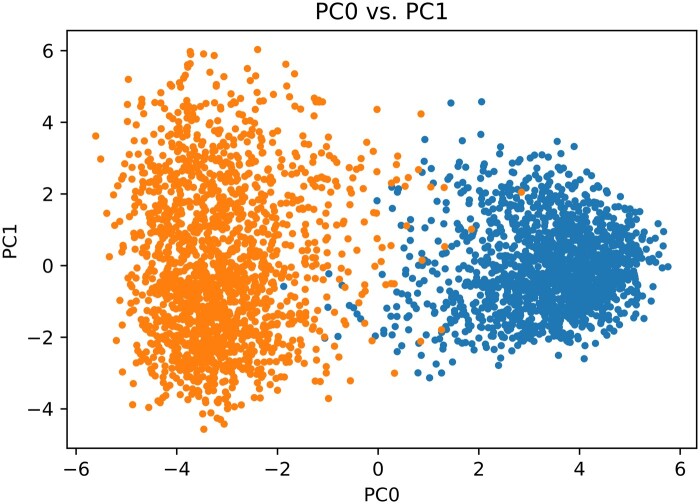

PCA results

Applying PCA to the high-dimensional SPECTER2 embeddings of our 2 datasets (1700 generic neuroscience abstracts and 1564 computational neuroscience abstracts) revealed notable separation between the 2 datasets (Figure 1). This suggests that SPECTER2 embeddings can capture information relevant to the computational aspects of scientific abstracts within the broader neuroscience corpus.

Figure 1.

PCA analysis of the SPECTER2 embeddings exhibits clear separation between modeling (blue) and non-modeling (orange) along the 0th principal component.

SPECTER2 vector space exploration

Based on the SPECTER2 embeddings of 1564 known models from ModelDB and 1700 generic neuroscience abstracts, we implemented a KNN-based approach in the vector space containing all the embeddings, to examine patterns in their distribution and to determine how likely a given neuroscience abstract is to be computational.

Given our hypothesis that computational modeling papers embed near other computational modeling work, we examined the difference between the percentages of models (Figure 2A) and non-models (Figure 2B) whose kth nearest known model lies within a certain distance. We used this difference (Figure 2C) to determine a cutoff threshold for when a paper should be considered likely to involve computational neuroscience work (ie, if its kth nearest model is within the threshold). From this, we obtained a plausible range for the threshold distance. For example, for k = 5, a neuroscience work is likely to be computational when its fifth nearest neighbor among the known models is within a distance of approximately 0.08. As k increases, the threshold distance also increases because more neighbors are taken into consideration; this can bias results and produce false positives or false negatives.

Figure 2.

SPECTER2 analysis. (A) The calculated percentages of known models that have their kth nearest neighbor (a known model) within a certain distance. (B) The percentages of generic neuroscience abstracts that have their kth nearest model within a certain distance. (C) The difference between A and B. (D) The number of computational works found going down the list of SPECTER2 results (k = 5), compared with the case in which identification is fully accurate (dotted line). The first 20 papers by similarity to ModelDB papers were all computational neuroscience papers, and the odds of a paper containing computational neuroscience broadly decreased as similarity decreased.

With these candidate thresholds, we explored the vector space of our test dataset, containing 105 502 neuroscience-related abstracts published in 2022. For each value of k, likely computational abstracts were identified and sorted by the distance to their kth nearest computational neighbor. Two annotators manually annotated the top 100 abstracts for k = 5, 20, and 50 to determine accuracy. Smaller values of k produced more accurate predictions of the use of computational techniques. As shown in Figure 2D, the top 20 abstracts identified by SPECTER2 with k = 5 all describe computational models; further down the list, more non-computational works begin to appear.
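A curve like the one in Figure 2D can be derived from the annotated ranked list by accumulating confirmed computational papers. This sketch assumes the dotted reference line is the diagonal obtained if every ranked paper were computational; the labels and the function name are hypothetical.

```python
from itertools import accumulate
from typing import List, Sequence, Tuple


def running_hits(is_computational: Sequence[bool]) -> Tuple[List[int], List[int]]:
    """Cumulative count of confirmed computational papers down a ranked list,
    alongside the diagonal expected if every ranked paper were computational."""
    found = list(accumulate(int(x) for x in is_computational))
    ideal = list(range(1, len(is_computational) + 1))
    return found, ideal


found, ideal = running_hits([True, True, False, True])
```

The point where `found` first falls below `ideal` marks the first non-computational paper in the ranking; in the k = 5 list this occurred after rank 20.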

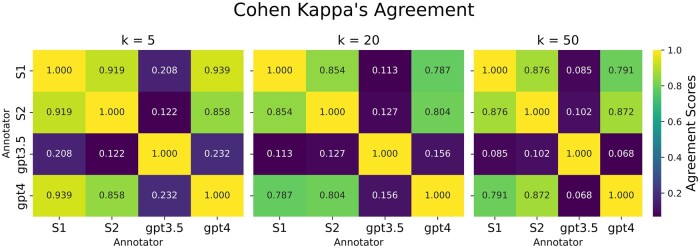

GPT-3.5 and GPT-4 results

We queried both GPT-3.5 and GPT-4 to determine whether they would classify each paper, given its title and abstract, as using computational neuroscience. This check used the prompt described in Methods, without examples, on the top 100 abstracts from the SPECTER2 analysis with k = 5, 20, and 50. These prompts were initially run with temperature = 0 for near-deterministic results. Cohen’s kappa between the 2 annotators, and between the annotators and GPT-3.5 and GPT-4, is shown in Figure 3. GPT-3.5 had much lower agreement with the human annotators than GPT-4 did, often misclassifying non-computational work as computational (false positives).

Figure 3.

Cohen’s kappa (an agreement measure that accounts for chance agreement) for the identification of a paper as including computational neuroscience work or not, among 2 human annotators (S1 and S2), GPT-3.5, and GPT-4. The k = 5, 20, and 50 matrices denote 3 overlapping corpora of papers, selected using SPECTER2 embeddings as described in the text. Agreement is broadly comparable in all 3 sets, with k = 5 showing the highest overall values, so k = 5 was used for the remainder of the analyses.

Improving GPT-3.5 results

We employed 3 strategies to improve the performance of the relatively low-cost GPT-3.5 model. Using annotator S1’s labels as the reference, we calculated F1 scores (the harmonic mean of precision and recall; an F1 score near 1 requires both few false positives and few false negatives) to evaluate how much each strategy improved GPT-3.5’s performance.
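The F1 computation against a reference annotator can be sketched as below, with `True` meaning “computational.” This is an illustrative helper, not the study’s code; scikit-learn’s `sklearn.metrics.f1_score` is a standard alternative.

```python
from typing import Sequence


def f1_score(reference: Sequence[bool], predicted: Sequence[bool]) -> float:
    """F1 of `predicted` against `reference` labels, treating True as the positive class."""
    if len(reference) != len(predicted):
        raise ValueError("label sequences must have equal length")
    pairs = list(zip(reference, predicted))
    tp = sum(r and p for r, p in pairs)          # both say computational
    fp = sum((not r) and p for r, p in pairs)    # model says computational, reference does not
    fn = sum(r and (not p) for r, p in pairs)    # model misses a computational paper
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

Equivalently, F1 = 2TP / (2TP + FP + FN); for example, 2 true positives, 1 false positive, and 1 false negative give 4/6 ≈ 0.67.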

1. Instruction fine-tuning: allowing outputs of uncertainty

Because GPT-3.5 returned a large number of false positives, we modified the prompt to allow GPT-3.5 to output “unsure” when it was not certain about the computational characteristics of an abstract. According to GPT-4’s outputs, all instances classified as “unsure” were in fact non-computational. Counting the “unsure” instances as negatives increased GPT-3.5’s F1 score to 0.617.

2. Role of temperature

Using the modified prompt from the previous step, we further explored the role of temperature (randomness) with values of 0, 0.5, 1, 1.5, and 2. Based on a majority vote of 3 calls to the API, the best-performing temperatures as measured by F1 score were 0.5 and 1 (Figure 4).

Figure 4.

(A) Role of temperature (randomness). GPT-3.5 was queried 3 times on whether or not an abstract implied use of a computational model. After combining the answers by voting, low-to-moderate temperatures achieved the highest F1-1 scores, while high temperatures performed poorly. (B) GPT-3.5 F1-1 scores before and after Chain-of-Thought (CoT), compared with GPT-4.

3. Chain-of-thought prompting

Following prior work14 showing that incorporating reasoning steps (known as CoT) into LLM prompts can improve performance, we added reasoning steps reflecting how a human would approach the task of classifying computational work. Rather than the few-shot examples used in the original CoT prompting, we took a zero-shot approach that specifies a general reasoning path. Our prompt is shown below.

You are an expert in computational neuroscience, reviewing papers for possible inclusion in a repository of computational neuroscience. This database includes papers that use computational models written in any programming language for any tool, but they all must have a mechanistic component for getting insight into the function individual neurons, networks of neurons, or of the nervous system in health or disease.

Suppose that a paper has title and abstract as indicated below. Perform the following steps, numbering each answer as follows.

1. Identify evidence that this paper addresses a problem related to neuroscience or neurology.

2. Identify specific evidence in the abstract that directly suggests the paper uses computational neuroscience approaches (eg, simulation with a mechanistic model). Do not speculate beyond the methods explicitly mentioned in the abstract.

3. Identify evidence that this paper uses machine learning or any other computational methods.

4. Provide a one-word final assessment of either “yes,” “no,” or “unsure” (include the quotes but provide no other output) as follows: Respond with “yes” if the paper likely uses computational neuroscience approaches (eg, simulation with a mechanistic model), and “no” otherwise. Respond “unsure” if it is unclear if the paper uses a computational model or not. In particular, respond “yes” for a paper that uses both computational neuroscience and other approaches. Respond “no” for a paper that uses machine learning to make predictions about the nervous system but does not include a mechanistic model. Respond “no” for purely experimental papers. Provide no other output.

Title: “{title}”

Abstract: “{abstract}”
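Because the CoT reply contains several numbered answers, only the final one-word assessment should be scored. The sketch below assumes that this assessment appears on the last non-empty line of the reply; it also applies the rule described earlier of counting “unsure” as negative. Both helpers are hypothetical.

```python
import re
from typing import Optional

_LABEL = re.compile(r"\b(yes|no|unsure)\b", re.IGNORECASE)


def final_assessment(reply: str) -> Optional[str]:
    """Return the last yes/no/unsure label on the final non-empty line of a CoT reply."""
    lines = [line for line in reply.splitlines() if line.strip()]
    if not lines:
        return None
    labels = _LABEL.findall(lines[-1])
    return labels[-1].lower() if labels else None


def is_positive(label: Optional[str]) -> bool:
    """Binary call used for scoring: "unsure" and unparseable replies count as negative."""
    return label == "yes"
```

Restricting the search to the last line avoids picking up words like “no” from the intermediate reasoning steps.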

Although still lower than GPT-4’s, GPT-3.5’s F1 score increased markedly to 85.5% after CoT was applied (Figure 4).

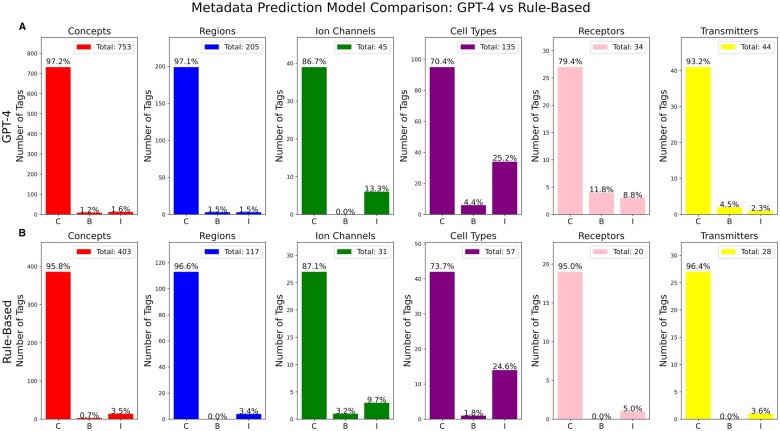

Metadata identification

We used repeated queries to GPT-4 to identify relevant computational neuroscience metadata annotations for each remaining abstract. Specifically, for each abstract we performed a separate query for each of ModelDB’s 6 long-standing broad categories of metadata: “brain regions/organisms,” “cell types,” “ion channels,” “receptors,” “transmitters,” and “model concepts.” Each category may have hundreds of possible values, and a model may have multiple relevant tags in a given category; for example, a model that examines the concept “Aging/Alzheimer’s” may also study the concept “synaptic plasticity.” Each category was predicted by 2 methods: the GPT-4 approach and, for comparison, an older rule-based approach.9 The 2 methods had broadly comparable PPVs, except that the rule-based approach had a lower false positive rate for receptors. Our new GPT-4 approach, however, identified 85% more metadata tags in total than the rule-based approach. In exploratory studies, GPT-3.5 was relatively prone to suggesting new terms or rewording existing ones, so we did not apply it here.
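Issuing one query per category can be sketched as a loop that embeds each category’s term list in its own prompt. The prompt wording below is hypothetical, not the study’s actual metadata prompt, and the vocabularies are assumed to be supplied as a dictionary keyed by category name.

```python
from typing import Dict, List

CATEGORIES = ("brain regions/organisms", "cell types", "ion channels",
              "receptors", "transmitters", "model concepts")


def category_prompts(title: str, abstract: str,
                     vocabularies: Dict[str, List[str]]) -> Dict[str, str]:
    """Build one prompt per metadata category (hypothetical wording)."""
    prompts = {}
    for category in CATEGORIES:
        terms = "\n".join(vocabularies[category])
        prompts[category] = (
            f"From the list of {category} below, select every term that applies to the paper. "
            "Use only terms from the list, one per line, or reply NONE.\n\n"
            f"Terms:\n{terms}\n\nTitle: {title}\n\nAbstract: {abstract}"
        )
    return prompts
```

Separate queries keep each prompt limited to one category’s list, which matters when a category has hundreds of possible values.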

Figure 5 illustrates the distribution of metadata tags assigned by the rule-based method and by GPT-4 to 115 abstracts, totaling 636 tags from the rule-based method and 1154 tags from GPT-4 across the various categories. Notably, the “model concepts” category received the most tags, 403 and 753, respectively. Since paper titles and abstracts do not capture every detail of a model, our evaluation focused solely on the relevance of the predicted metadata tags rather than on how comprehensively they describe the models.

Figure 5.

(A) Comparison of the performance of GPT-4 (top) vs ModelDB’s legacy rule-based predictor (bottom) on an analysis of 115 selected neuroscience abstracts. Each color/column represents a different broad category of metadata. Both raw counts (y-axis) and percentages are shown. Bars C, B, and I denote “correct,” “borderline” (see text), and “incorrect,” respectively. In all categories except receptors (where the rule-based approach had a very low error rate), positive predictive value was broadly comparable between the 2 methods; however, GPT-4 showed greater recall, predicting 85% more metadata tags in total. (B) Metadata identification accuracy across categories via GPT-4, with accuracy calculated by comparison with human annotations. The “Total” legend entry gives the total number of metadata tags in each category.

Upon manual review, we found that “model regions” obtained the highest PPV from the rule-based method (96.6%) and “model concepts” the highest PPV from GPT-4 (97.2%), while “cell types” recorded the lowest for both (73.7% and 70.4%, respectively). These findings indicate that our approach, which leverages GPT-4 for metadata identification, achieves PPV comparable to the rule-based approach,9 with more total matches and without the need to develop an explicit set of rules.

Discussion

Despite ongoing and previous efforts (eg, NIFSTD16; EBRAINS Knowledge Graph17; INCF18) there remains a lack of widely used robust and consistent ontologies in neuroscience.19,20 MeSH terms used to index publications in PubMed are focused on medically relevant terms, missing the cellular-level details essential for characterizing neuroscience. Therefore, establishing an automated process for identifying and annotating neuroscience work and building efficient knowledge repositories will enhance the ability to find and reuse computational models. In the future, integrating NLP techniques and LLMs may play an essential role in determining a publication’s computational aspects and metadata extraction.

Why use only ModelDB data and a KNN-based approach?

We initially used entries from ModelDB11 to generate SPECTER2 embeddings and establish an initial vector space of computational neuroscience work. However, ModelDB is not necessarily representative of computational neuroscience as a whole.21 An alternative approach for creating this initial set is to collect abstracts from the journals deemed most relevant, such as the Journal of Computational Neuroscience. However, only a small fraction of ModelDB models appear in these journals, as computational models are often paired with experimental studies. We used KNN to measure similarity to embeddings of known model papers because our generic neuroscience set was randomly selected and may thus contain some computational work, so it could not serve as a true set of negative examples. Furthermore, unlike many other algorithms (eg, Support Vector Machines), KNN does not require us to make assumptions about the shape or contiguity of the region of computational neuroscience work in the embedding space.

SPECTER2 embeddings are only from title and abstract

We used SPECTER212 document-level embeddings to determine whether a publication counts as computational neuroscience work. A distinctive feature of SPECTER2 is that it was trained on citations but does not need them to produce an embedding. In our case, if the publications that cite or are cited by a publication are computational, that publication is more likely to be computational as well. Citation links provide additional information about a publication’s content, and using them explicitly could produce more representative embeddings. Another challenge is that the abstract does not necessarily focus on key model details (eg, model 87284,22 which uses CA1 pyramidal cells that are not mentioned in its abstract). While full text and citations contain additional information that future studies could use to detect computational neuroscience models, we did not use them here in order to keep the model simple, because considering more text requires more tokens (and hence higher cost), and because of licensing restrictions (for full text). Furthermore, including too much information in embeddings could lead to false positives in the results.9

What counts as computational neuroscience work?

Computational neuroscience23 is not sharply defined, which poses a challenge for both manual and automated paper screening. Neuroscience itself is an interdisciplinary field that often makes heavy use of computation, creating a fuzzy boundary between analysis of experimental data and computational neuroscience. We therefore defined a scope, based on existing ModelDB entries, for prompting GPT-4 and GPT-3.5, and the 2 annotators used the same scope during manual annotation.

Metadata

When evaluating metadata predictions, annotators mainly considered the relevance of the provided tags to the papers themselves, recognizing that some terms may be mentioned only as a reference or contrast. For example, “Gamma oscillation” was identified by the rule-based model as 1 of the tags for “model concepts” in15; however, gamma oscillation was mentioned only as context for the article and was not a main theme of the paper. In this scenario, annotators rated the tag “Gamma oscillation” as “borderline.” In computational neuroscience, authors know with certainty whether or not their models include, eg, specific ion channels; when generalizing to other fields, the use of hedging (see eg,24) around uncertainties may affect prediction accuracy.

Limitations

Using one-shot/few-shot learning

As the analysis of GPT-3.5 results shows, subtle changes in prompts may yield large differences in results. In this study, prompts given to GPT-3.5 and GPT-4 did not include concrete examples and expected results. Research on Few-Shot Learning (FSL) shows that models can learn to generalize from only a limited number of examples and perform with high accuracy on a new task.25 For example, in our study, providing a few example abstracts with their associated metadata might improve the results from GPT-3.5 and GPT-4. Future work could experiment with One-Shot Learning (OSL) or FSL and measure the resulting improvement.
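Such an experiment would mainly change how the prompt is assembled: labeled examples are placed between the instructions and the paper to classify. The sketch below shows one possible layout; the separator, the “Answer:” field, and the example triples are hypothetical choices, not something evaluated in this study.

```python
from typing import Sequence, Tuple


def few_shot_prompt(instructions: str, examples: Sequence[Tuple[str, str, str]],
                    title: str, abstract: str) -> str:
    """Instructions, then (title, abstract, answer) demonstrations, then the unanswered query."""
    parts = [instructions]
    for ex_title, ex_abstract, ex_answer in examples:
        parts.append(f"Title: {ex_title}\n\nAbstract: {ex_abstract}\n\nAnswer: {ex_answer}")
    parts.append(f"Title: {title}\n\nAbstract: {abstract}\n\nAnswer:")
    return "\n\n---\n\n".join(parts)
```

Passing a single example gives the one-shot variant; an empty list reproduces a zero-shot prompt like the ones used here.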

Fine-tuning LLMs for metadata extraction

Achieving state-of-the-art results in extracting metadata in computational neuroscience might require further fine-tuning an LLM. Fine-tuning an LLM on a specific task can markedly improve performance,26 but employing this in the curation context will require developing a large, high-quality dataset of examples.

Conclusion

Recognizing the increasing importance of repository curation and how this could benefit future relevant work in neuroscience, we explored the feasibility of leveraging NLP techniques and LLMs to enhance identification and metadata extraction of computational neuroscience work.

SPECTER2 embeddings yield promising outcomes, proving highly effective for screening large numbers of neuroscience papers for the use of computational models without incurring API charges. GPT-4 shows relatively high accuracy in identifying metadata, but further exploration of metrics is needed to improve performance. Automating the process of collecting computational work and systematically storing information related to computational models paves the way for future research to reuse, update, or improve existing models.

Continued efforts should focus on making LLM results more accurate and contextually aware. Using alternative metrics to improve LLM accuracy, or establishing a new dataset of manually labeled computational information and metadata to fine-tune current LLMs, could help reach state-of-the-art results. Overall, this study marks a significant step forward in exploring the potential of NLP techniques and LLMs to automate the identification and curation of computational neuroscience work.

Contributor Information

Ziqing Ji, Biostatistics, Yale School of Public Health, Yale University, New Haven, CT 06510, United States.

Siyan Guo, Biostatistics, Yale School of Public Health, Yale University, New Haven, CT 06510, United States.

Yujie Qiao, Biostatistics, Yale School of Public Health, Yale University, New Haven, CT 06510, United States; Integrative Genomics, Princeton University, Princeton, NJ 08540, United States.

Robert A McDougal, Biostatistics, Yale School of Public Health, Yale University, New Haven, CT 06510, United States; Biomedical Informatics and Data Science, Yale School of Medicine, Yale University, New Haven, CT 06510, United States; Program in Computational Biology and Bioinformatics, Yale University, New Haven, CT 06510, United States; Wu Tsai Institute, Yale University, New Haven, CT 06510, United States.

Author contributions

All authors contributed to the design of the study; Robert A. McDougal, Ziqing Ji, and Siyan Guo implemented the code; and all authors contributed to analyzing the results, writing and editing the manuscript, and approving the final version.

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Conflicts of interest

The authors have no competing interests to declare.

Data availability

The source code and data underlying this study are available in a GitHub repository at https://github.com/mcdougallab/gpt-curation.

References

1. Vrandečić D, Krötzsch M. Wikidata: a free collaborative knowledgebase. Commun ACM. 2014;57(10):78-85.

2. Benson DA, Cavanaugh M, Clark K, et al. GenBank. Nucleic Acids Res. 2013;41(Database issue):D36-D42.

3. Ascoli GA, Donohue DE, Halavi M. NeuroMorpho.Org: a central resource for neuronal morphologies. J Neurosci. 2007;27(35):9247-9251.

4. Ascoli GA. Sharing neuron data: carrots, sticks, and digital records. PLoS Biol. 2015;13(10):e1002275.

5. McDougal RA, Bulanova AS, Lytton WW. Reproducibility in computational neuroscience models and simulations. IEEE Trans Biomed Eng. 2016;63(10):2021-2035.

6. Crook SM, Davison AP, McDougal RA, et al. Editorial: reproducibility and rigour in computational neuroscience. Front Neuroinform. 2020;14:23.

7. Abrams MB, Bjaalie JG, Das S, et al. A standards organization for open and FAIR neuroscience: the international neuroinformatics coordinating facility. Neuroinformatics. 2022;20(1):25-36.

8. Poline J-B, Kennedy DN, Sommer FT, et al. Is neuroscience FAIR? a call for collaborative standardisation of neuroscience data. Neuroinformatics. 2022;20(2):507-512.

9. McDougal RA, Dalal I, Morse TM, et al. Automated metadata suggestion during repository submission. Neuroinformatics. 2019;17(3):361-371.

10. Bijari K, Zoubi Y, Ascoli GA. Assisted neuroscience knowledge extraction via machine learning applied to neural reconstruction metadata on NeuroMorpho.Org. Brain Inform. 2022;9(1):26.

11. McDougal RA, Morse TM, Carnevale T, et al. Twenty years of ModelDB and beyond: building essential modeling tools for the future of neuroscience. J Comput Neurosci. 2017;42(1):1-10.

12. Singh A, D'Arcy M, Cohan A, et al. SciRepEval: a multi-format benchmark for scientific document representations. In: Bouamor H, Pino J, Bali K, eds. Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing. Association for Computational Linguistics; 2023:5548-5566.

13. Devlin J, Chang M-W, Lee K, et al. BERT: pre-training of deep bidirectional transformers for language understanding. arXiv [cs.CL]. http://arxiv.org/abs/1810.04805, 2018, preprint: not peer reviewed.

14. Wei J, Wang X, Schuurmans D, et al. Chain-of-thought prompting elicits reasoning in large language models. Adv Neural Inf Process Syst. 2022;35:24824-24837. https://proceedings.neurips.cc/paper_files/paper/2022/file/9d5609613524ecf4f15af0f7b31abca4-Paper-Conference.pdf

15. Kim J, Lee J, Kim E, et al. Dopamine depletion can be predicted by the aperiodic component of subthalamic local field potentials. Neurobiol Dis. 2022;168:105692.

16. Bug WJ, Ascoli GA, Grethe JS, et al. The NIFSTD and BIRNLex vocabularies: building comprehensive ontologies for neuroscience. Neuroinformatics. 2008;6(3):175-194.

17. Appukuttan S, Bologna LL, Schürmann F, et al. EBRAINS live papers—interactive resource sheets for computational studies in neuroscience. Neuroinformatics. 2023;21(1):101-113.

18. Abrams MB, Bjaalie JG, Das S, et al. Correction to: a standards organization for open and FAIR neuroscience: the international neuroinformatics coordinating facility. Neuroinformatics. 2022;20(1):37-38.

19. Hamilton DJ, Wheeler DW, White CM, et al. Name-calling in the hippocampus (and beyond): coming to terms with neuron types and properties. Brain Inform. 2017;4(1):1-12.

20. Shepherd GM, Marenco L, Hines ML, et al. Neuron names: a gene- and property-based name format, with special reference to cortical neurons. Front Neuroanat. 2019;13:25.

21. Tikidji-Hamburyan RA, Narayana V, Bozkus Z, et al. Software for brain network simulations: a comparative study. Front Neuroinform. 2017;11:46.

22. Morse TM, Carnevale NT, Mutalik PG, et al. Abnormal excitability of oblique dendrites implicated in early Alzheimer's: a computational study. Front Neural Circuits. 2010;4:16.

23. Sejnowski TJ, Koch C, Churchland PS. Computational neuroscience. Science. 1988;241(4871):1299-1306.

24. Kilicoglu H, Bergler S. A high-precision approach to detecting hedges and their scopes. In: Farkas R, Vincze V, Szarvas G, et al, eds. Proceedings of the Fourteenth Conference on Computational Natural Language Learning—Shared Task. Association for Computational Linguistics; 2010:70-77.

25. Wang Y, Yao Q, Kwok JT, et al. Generalizing from a few examples: a survey on few-shot learning. ACM Comput Surv. 2020;53(3):1-34.

26. Howard J, Ruder S. Universal language model fine-tuning for text classification. arXiv [cs.CL]. doi:10.48550/ARXIV.1801.06146, 2018, preprint: not peer reviewed.
