Abstract

Background

Investment in mobile devices to support primary or elementary education is increasing and must be informed by robust evidence to demonstrate impact. This systematic review of randomised controlled trials sought to identify the overall impact of mobile devices to support literacy and numeracy outcomes in mainstream primary classrooms.

Objectives

The aim of this systematic review was to understand how mobile devices are used in primary/elementary education around the world, and in particular, determine how activities undertaken using mobile devices in the primary classroom might impact literacy and numeracy attainment for the pupils involved. Within this context, mobile devices are defined as tablets (including iPads and other branded devices), smartphones (usually those with a touchscreen interface and internet connectivity) and handheld games consoles (again usually with touchscreen and internet‐enabled). The interventions of interest were those aimed at improving literacy and/or numeracy for children aged 4–12 within the primary/elementary school (or equivalent) classroom.

Specifically, the review aimed to answer the following research questions:

- What is the effect of mobile device integration in the primary school classroom on children's literacy and numeracy outcomes?
- Are there specific devices which are more effective in supporting literacy and numeracy? (Tablets, smartphones, or handheld games consoles)
- Are there specific classroom integration activities which moderate effectiveness in supporting literacy and numeracy?
- Are there specific groups of children for whom mobile devices are more effective in supporting literacy and numeracy? (Across age group and gender).
- Do the benefits of mobile devices for learning last for any time beyond the study?
- What is the quality of available evidence on the use of mobile devices in primary/elementary education, and where is further research needed in this regard?

An Expert Advisory Group supported the review process at key stages to ensure relevance to current practice.

Search Methods

The search strategy was designed to retrieve both published and unpublished literature, and incorporated relevant journal and other databases with a focus on education and social sciences. Robust electronic database searches were undertaken (12 databases, including APA PsychInfo, Web of Science, ERIC, British Education Index and others, and relevant government and other websites), as well as a hand‐search of relevant journals and conference proceedings. Contact was also made with prominent authors in the field to identify any ongoing or unpublished research. All searches and author contact took place between October and November 2020. The review team acknowledges that new studies will likely have emerged since and are not captured at this time. A further update to the review in the future is important and would build on the evidence reflected here.

Selection Criteria

The review included children within mainstream primary/elementary/kindergarten education settings in any country (aged 4–12), and interventions or activities initiated within the primary school classroom (or global equivalent) that used mobile devices (including tablets, smartphones, or hand‐held gaming devices) to intentionally support literacy or numeracy learning. In terms of study design, only Randomised Controlled Trials were included in the review.

Data Collection and Analysis

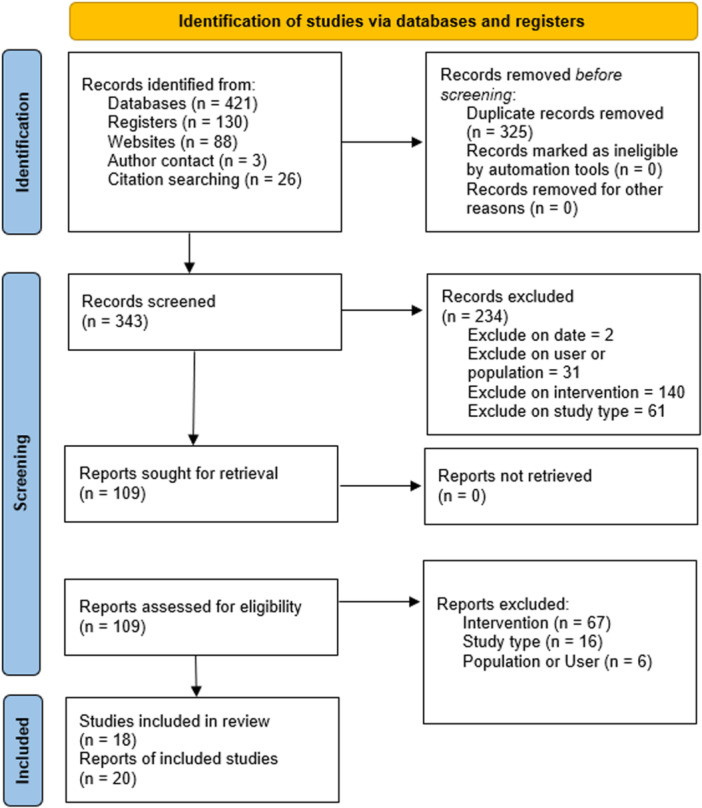

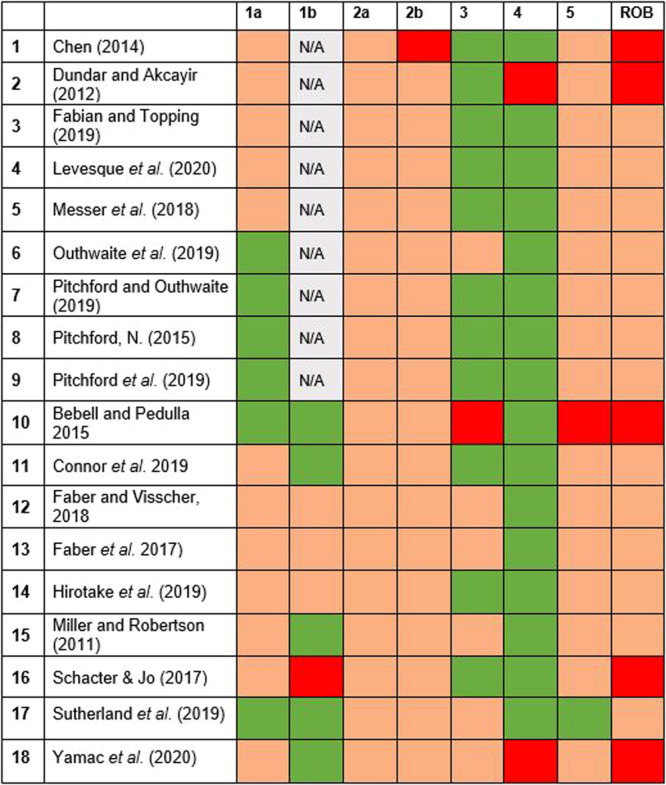

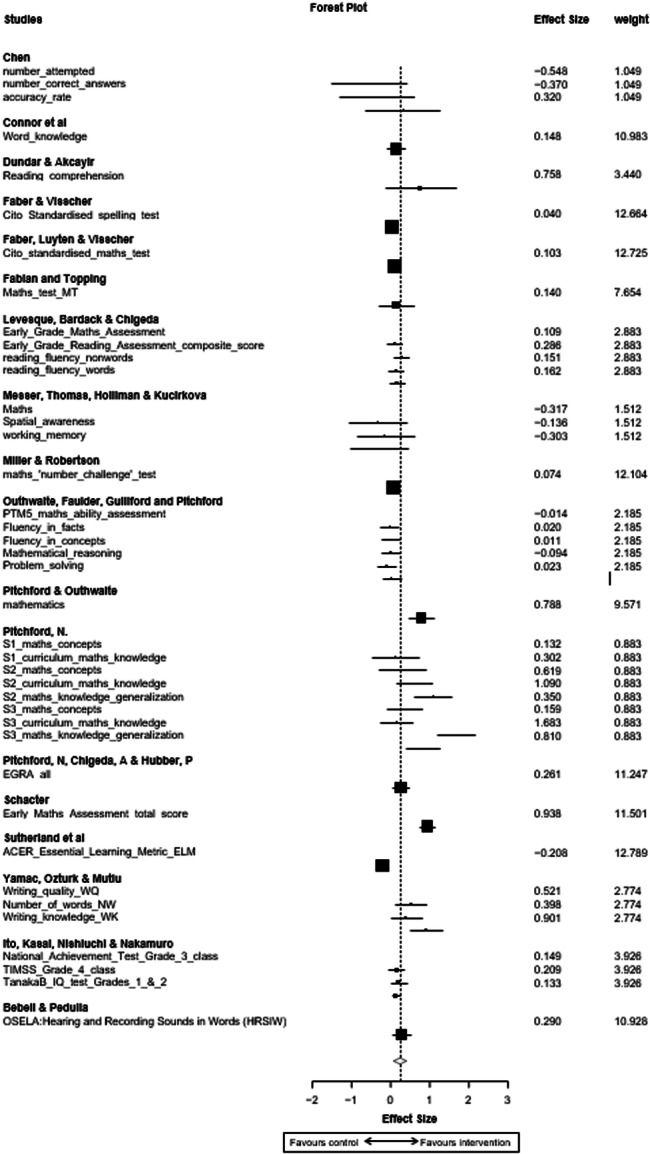

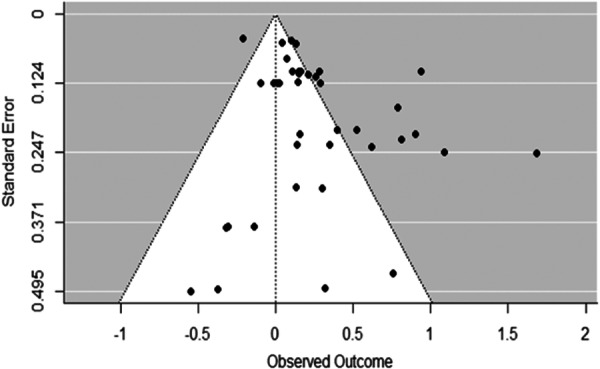

A total of 668 references were identified through a robust search strategy including published and unpublished literature. Following duplicate screening, 18 relevant studies, including 11,126 participants, 14 unique interventions, and 46 relevant outcome measures were synthesised using Robust Variance Estimation and a random effects meta‐analysis model. Risk of Bias assessment was undertaken by three reviewers using the ROB2 tool to assess the quality of studies, with 13 studies rated as having some concerns, and 5 as having high risk of bias. Qualitative data was also extracted and analysed in relation to the types of interventions included to allow a comparison of the key elements of each.

Main Results

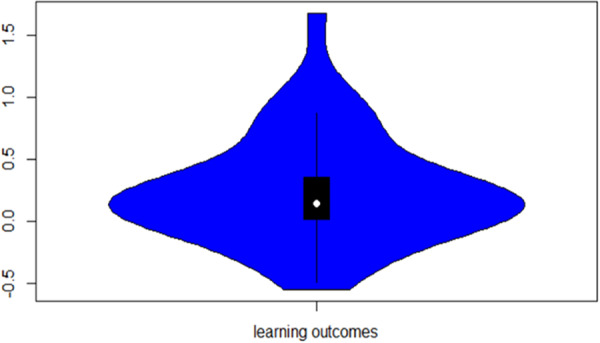

A positive, statistically significant combined effect was found (Cohen's d = 0.24, CI 0.0707 to 0.409, p < 0.01), demonstrating that in the studies and interventions included, children undertaking maths or literacy interventions using mobile devices achieved higher numeracy or literacy outcomes than those using an alternative device (e.g., a laptop or desktop computer) or no device (class activities as usual). However, these results should be interpreted with caution given the risk of bias assessment noted above (5 studies rated high risk of bias and 13 rated as having some concerns). As the interventions and classroom circumstances differed quite widely, further research is needed to understand any potential impact more fully.
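As a rough plausibility check on the figures above, the reported confidence interval can be used to back out an approximate standard error and test statistic. The sketch below assumes a symmetric 95% interval built from a standard normal critical value of 1.96; this is an assumption made for illustration only, since Robust Variance Estimation usually relies on a t‐distribution with small‐sample adjusted degrees of freedom, which the abstract does not report. The function names are hypothetical and are not taken from the review.

```python
import math


def se_from_ci(lower, upper, z_crit=1.96):
    """Approximate standard error implied by a symmetric confidence interval.

    Assumes the interval is estimate +/- z_crit * SE (normal approximation).
    """
    return (upper - lower) / (2 * z_crit)


def two_sided_p(z):
    """Two-sided p-value for a z statistic under a standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# Values reported in the abstract.
d = 0.24
ci_lower, ci_upper = 0.0707, 0.409

se = se_from_ci(ci_lower, ci_upper)   # implied standard error
z = d / se                            # implied z statistic
p = two_sided_p(z)                    # implied two-sided p-value

print(f"implied SE = {se:.4f}, z = {z:.2f}, p = {p:.4f}")
```

Under these assumptions the implied p‐value falls below 0.01, which is consistent with the reported significance level. The check says nothing about the risk of bias concerns, which still apply to the interpretation of the estimate.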

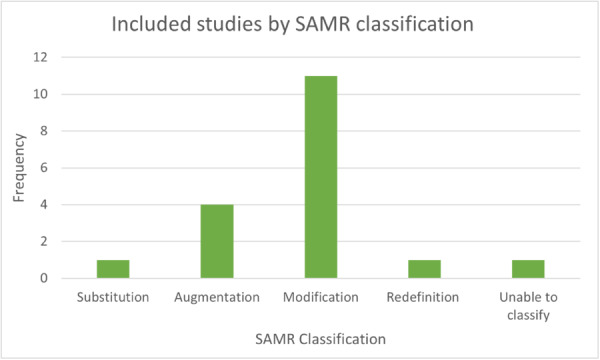

Sensitivity analysis aimed to identify moderating factors including age or gender, screen size, frequency/dosage of intervention exposure, and programme implementation features/activities (based on Puentedura's [2009] SAMR model of technology integration). There were too few studies identified to support quantitative analysis of sufficient power to draw robust conclusions on moderating factors, and insufficient data to determine impact beyond the immediate post‐test period. Sensitivity analysis was also undertaken to exclude the five studies identified as having a high risk of bias, to identify any impact they may have on overall findings.

Authors' Conclusions

Overall, this review demonstrates that for the specific interventions and studies included, mobile device use in the classroom led to a significant, positive effect on literacy and numeracy outcomes for the children involved, bringing positive implications for their continued use in primary education. However, given the concerns on risk of bias assessment reported above, and the differing circumstances, interventions, treatment conditions and intensities, the findings must be interpreted with caution. The review also supports the need for further robust research to better understand what works, under what circumstances, and for whom, in the use of mobile devices to support learning.

Keywords: children, curriculum, digital technology, education, educational technology, handheld devices, iPads, learning, literacy, meta‐analysis, mobile devices, mobile technology, numeracy, primary classroom, primary education, systematic review, teacher skills, technology integration

1. PLAIN LANGUAGE SUMMARY

Mobile devices in the primary school classroom may improve literacy and numeracy learning, though concerns about risk of bias and uncertainty about modes of effect limit conclusions.

1.1. What is this review about?

This review gathered evidence on how mobile devices (including tablets, mobile phones, and handheld digital games) are used in primary school classrooms to help children's literacy and numeracy skills. An Expert Advisory Group supported the process to help findings relate to everyday practice.

1.2. What did the review hope to find out?

The review aimed to assess the impact of mobile devices in primary school classrooms on children's literacy and numeracy achievement, and its methodological quality.

The secondary objectives were to assess whether some devices or classroom activities were more effective than others in supporting literacy and numeracy; whether some children benefitted more from these devices (e.g., across age or gender); and whether effects lasted beyond the duration of the studies.

1.3. How were studies identified for inclusion in the review?

Between October and November, 2020, searches were conducted across electronic databases, journals, web pages and other sources to identify all relevant studies. Randomised Controlled Trials (a robust experimental design) were included. Children in the studies must have been between the age of 4 and 12, with research taking place in a primary/elementary school classroom (or equivalent grade in other countries). The children must have used the mobile devices themselves, rather than the teacher, to support their learning. The studies must have measured literacy or numeracy learning outcomes.

1.4. What studies are included in the review?

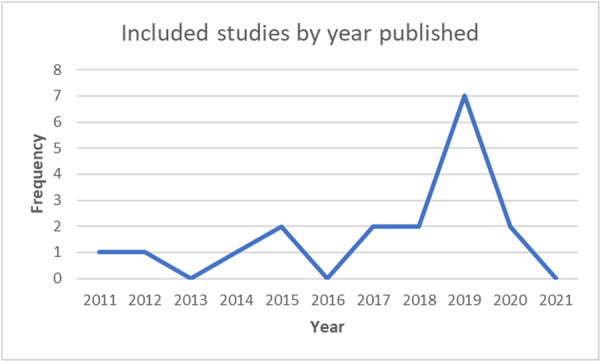

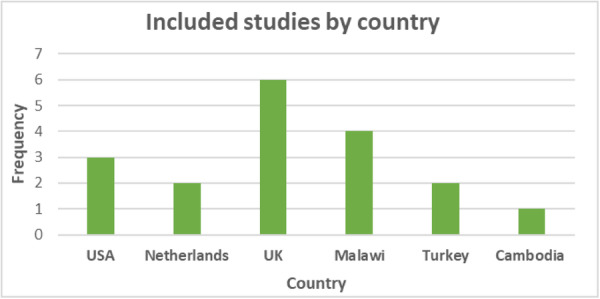

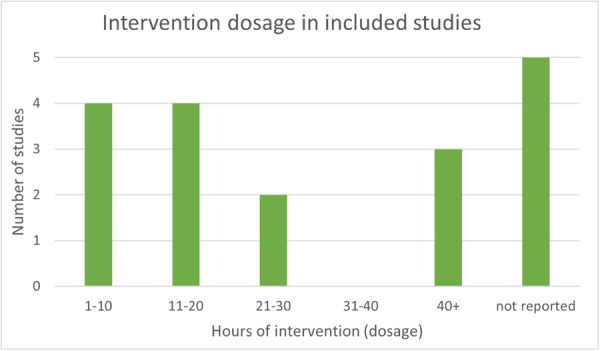

A total of 18 relevant studies, incorporating 14 unique mobile device interventions, and 46 relevant outcome measures were included in the review. The studies were from across six countries (USA, Netherlands, UK, Malawi, Turkey, and Cambodia), and included 11,126 participants. Five studies considered literacy, 11 considered numeracy, and 2 included both subjects. The duration of interventions ranged from 1 to 120 h.

1.5. What did the review find out?

Children had better results in maths or literacy tests when they used mobile devices for their lessons compared to children who used an alternative device (e.g., a laptop or desktop computer) or no device (class activities as usual). These results must be interpreted in light of overall concerns about methodological quality, since 5 studies were at high risk of bias and the remaining 13 studies raised some concerns about bias.

There were too few studies to answer the secondary questions about why the mobile devices worked and for whom, and insufficient information provided to identify whether the benefits of mobile devices lasted for any time beyond the study.

1.6. What do the findings of the review mean?

For researchers: This review highlights that while mobile devices can support literacy and numeracy learning, we do not currently know enough about how they work best, what makes them effective, and how teachers can best use them in lessons. Further research will help to better understand this. Future research should also pay closer attention to minimising risk of bias and to how and when mobile devices are actually used in real life, so that their research reflects real activities.

For teachers and other educational professionals: We know that mobile devices overall can help children to learn better; however, we are uncertain about what types of devices or strategies work better. Teachers should think carefully about the ways in which they are using mobile devices, how they are using them alongside other teaching activities and approaches, and how they might add value to children's learning experience.

For the design of educational interventions: The design and development of educational interventions should be based on evidence. Those designing such interventions should therefore pay close attention to existing research, while also investing in new research. Any new interventions should be evaluated with rigorous methods to minimise risk of bias and show that they are making a difference to learning.

1.7. How up to date is this review?

The review includes research up to November 2020. It is important to repeat this review as new research emerges, as practice is changing quickly.

2. BACKGROUND

The world is changing rapidly, in part due to the advances in technology that once amazed and now are often taken for granted. Perhaps the most significant advancement is the emergence and development of the internet, or World Wide Web. The depth of global impact that the internet has had right across our lives would have been difficult to predict, including the impact on consumer behaviours (Voramontri & Klieb, 2019), teaching and learning (Dockerty, 2019; Gamliel, 2014; Wastiau et al., 2013), and social networking (Van Deursen & Helsper, 2018; Webster et al., 2021). As adults, it has changed our lives, with infinite knowledge and opportunity just a click of our smartphone away. Yet today's generation of children have no experience of a pre‐technology world. Our vocabulary has expanded in response; Prensky (2001) calls these children Digital Natives; Twenge (2017) describes post‐millennials as the iGeneration; while Leathers et al. (2013) coined the term Digitods for toddlers who can navigate a swipe‐screen before they can talk.

As technology has advanced, its applicability to education has been explored and new devices and interventions developed. This has brought a world of possibility for creative and innovative educational experiences for the iGeneration, inside and outside the classroom (Hsin et al., 2014) and forced a reimagining of pedagogical practices (Fleer, 2011). Mobile devices are commonplace in the classroom in developed countries, and emerging in less developed countries. Yet this is an area where research has not kept pace with the development and adoption of technology (see e.g., Crompton et al., 2017; Herodotou, 2018), and while the potential for learning is evident, the impact that mobile devices actually have on educational outcomes remains unclear. Set within a backdrop of wider austerity, and with the unprecedented challenges for education that a global pandemic has brought, it is critical that investment is made in the most effective tools and approaches to best support educational outcomes. This is not to suggest that technology can or should replace traditional teaching methods, or that it will be relevant to every child, subject or setting. This review is therefore undertaken with this acknowledgement in mind. The assessment of theories and evidence below considers potential application, as and when appropriate, to supplement educational practice. A strong evidence base to demonstrate when technology can be of benefit, and indeed when it is not relevant or beneficial, should further inform any investment.

2.1. Description of the condition

This review is focused specifically on primary or elementary school education (generally including children between the ages of 4 and 12), rather than the full educational age spectrum, and considers literacy and numeracy education rather than the wider primary curriculum. This decision was informed by the differences between primary and post‐primary education, and the use of technology within these in terms of the subjects studied, the approach to pedagogy, and activities undertaken. There is also a central focus on literacy and numeracy. These are core skills which equip a child to engage in the wider curriculum and have far‐reaching implications across the life‐course. Research by the National Literacy Trust (Clark & Teravainen‐Goff, 2018) shows that children who are more engaged with literacy have better mental wellbeing, while Gross et al. (2009) note the long‐term costs to the public purse to address the impact of poor literacy and numeracy. The 2018 PISA tests (OECD, 2019), designed to assess reading, science, and maths skills of 15‐year‐olds across the globe, show the UK moving up the rankings in maths (18th, up from 27th in 2016) and in reading (14th, up from 22nd in 2016), yet still falling below many other countries. A focus on effective literacy and numeracy education is therefore a priority for primary age children and a valuable focus of this review.

The review was also informed by a child rights perspective. While the UN Convention on the Rights of the Child (UNCRC, 1989) was conceived before the digital world as we know it, existing Articles must be reinterpreted to reflect the changing circumstances of children's lives. Livingstone et al. (2016, p. 18) provide a useful summary of children's rights in a digital world, highlighting the ‘three Ps’ which include a right to protection from threats; a right to provision of the resources necessary for development to full potential, and a right to participation in processes which support development and engagement as an active part in society. Stoilova et al. (2020) reflect that the onus is now on governments and organisations to develop legislation and safeguards to ensure that all children can realise their rights in the online world, not just to stay safe, but to explore and actively engage with the opportunities that the digital world brings. Any discussion about the increased use of technology must be viewed alongside a wider discussion on online safety and the challenges faced in keeping children and young people safe online, while supporting their rights to make use of technology. Many have written on this comprehensively and in more detail than is possible in this review, and a range of resources is available to support teaching and learning with digital devices. The UK Council for Internet Safety (2020) has also developed ‘Education for a connected world’, a guidance document for anyone working with children and young people, featuring key messages and responsibilities for safe use of technology. While online safety is not presented in detail in this review, nor the safety implications of individual interventions considered, it is implicit that schools must consider online safety within wider policies and procedures, alongside increased technology use in the classroom.

In summary, this systematic review and meta‐analysis sought to understand how activities undertaken using mobile devices in the primary classroom might impact literacy and numeracy attainment for the pupils involved. The interventions of interest are those aimed at improving literacy and/or numeracy for children aged 4–12 within the primary/elementary school (or equivalent) classroom. This is further expanded on below.

2.2. Description of the intervention

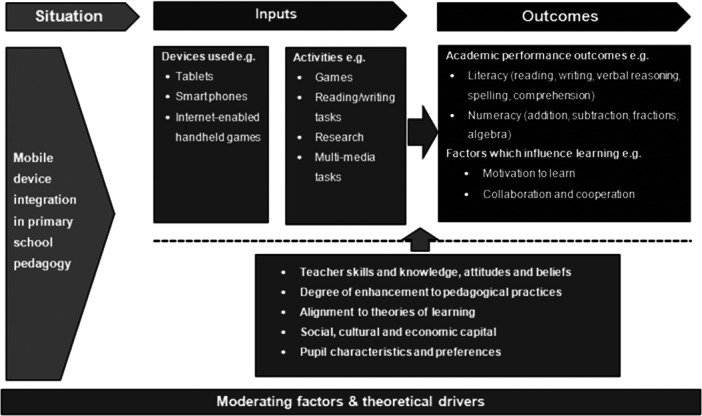

In developing this systematic review, it was useful to represent the emerging themes as a theory of change, demonstrating how the elements work together to contribute to improved outcomes for children. Within this theory of change (Figure 1), four elements are considered in how mobile technology is used in the primary school classroom. These are (a) devices; (b) activities and interventions; (c) outcomes; and (d) moderating factors and theoretical drivers.

Figure 1.

Logic model.

2.2.1. Mobile devices

Mobile devices commonly used by children include tablets, smart‐phones, and gaming devices. In 2018, the Office for National Statistics reported that for the first time, 100% of households with children across England, Scotland and Wales had internet access. OFCOM (2022) presents a picture of everyday technology usage for children across the UK. Device ownership begins early; 17% of 3‐ to 4‐year‐olds are reported to own a mobile phone, rising to 91% by the age of 11 and 100% by the age of 15. Children spend a significant amount of time online, with 97% of 5‐ to 15‐year‐olds averaging 20.5 h per week (OFCOM, 2021). Activities include game‐playing, uploading/viewing media, social networking and listening to music. For most children in the developed world, the incredible opportunities of the digital world are no further than an arm's length away, day or night, with Weinstein (2018, p. 3598) noting that social media is ‘intertwined with daily life’.

Despite the widespread ownership of and engagement with technology, patterns of usage differ from child to child, and also change with time. OFCOM (2022) reported girls spending on average longer on their phones than boys, while boys spent longer playing games online; however, the gap is closing year on year, with online games becoming increasingly popular among girls. Boys were also more likely to report using online games as a means of connecting with their friends. Mascheroni and Olafsson (2014) noted that the more creative and skilled activities, such as blogs, publishing videos or photos (content creation), were undertaken by fewer children overall; however, the latest OFCOM (2022) report found that content creation is now a daily activity for most young people. This represents a shift from consumer to contributor of information online.

Since March 2020, a pandemic has caused unprecedented disruption worldwide, necessitating behavioural changes as we adapt to new ways of communicating, working, and playing. Over the past 2 years there has been an increase in the amount of time spent online, and the types of activities undertaken, and research on the implications will be emerging for some time to come. The OFCOM (2022) report notes an overall increase in online activity for children in the UK during lockdown, but found that primary‐aged children were less likely to have adequate access to an appropriate device to undertake online schooling activities than secondary‐aged children.

Devices in education: Various sources suggest mobile devices are rapidly increasing in popularity in schools, with significant investment due to the potential for transformative pedagogy that they represent (Crompton et al., 2017; Nikolić et al., 2019). Research by the British Educational Suppliers Association (BESA, 2015) found that from a sample of 335 UK primary schools, 71% reported using tablets in the classroom, representing a significant increase from the previous year. Mobile devices have many benefits, including portability, the ability to customise, and comparative affordability (Haßler et al., 2015). Emerging research is beginning to show how they can be used to change classroom practice, including increasing motivation (Ciampa, 2014), classroom interaction (Campbell & Jane, 2012), and improving educational outcomes (Herodotou, 2018; Looi et al., 2015). The intuitive design of tablets and smartphones, coupled with affordability and the potential to ‘bring your own’, make them ideally placed to influence traditional teaching methods, and this undoubtedly presents an opportunity for schools. The NMC Horizon Report K‐12 Edition (Johnson et al., 2014) identified such ‘intuitive technology’ as having the potential to significantly impact educational practice over the coming years.

In the classroom, mobile devices may be accessed individually (a ‘one to one’ approach) or shared between groups of pupils. Burke and Hughes (2018) argue that due to the personalised nature of mobile devices in particular, one device per child maximises their effectiveness in the classroom. While one tablet per child in every school, even in developed countries, is currently far from possible, another option has emerged. As ownership of smartphones and tablets increases among children, schools are now considering how they can use these in class (see e.g., Rae et al., 2017). Such ‘bring your own device’ approaches also bring challenges, with online safety, appropriate behaviour policies, infrastructure capacity and ensuring equality of access, only some of the necessary considerations.

2.2.2. Activities and interventions

Digital technology alone does not improve learning or transform education (Facer & Selwyn, 2021). Rather, the important elements are the activities or interventions, and how they complement traditional learning. Mobile devices can be used across all subjects, and indeed, many countries have technology embedded as a requirement within their curricula across all stages of schooling, both to support the development of specific technology skills, and to support wider learning. Activities undertaken via mobile devices either directly access the internet or use device Applications (‘Apps’) or inbuilt device functions. Educational Apps are one of the more popular categories across the main App stores, with downloads reaching millions and growing, particularly since lockdown (Papadakis, 2021). Table 1 below summarises some popular educational Apps and educational websites (hereafter referred to as interventions) available, demonstrating the wide variety of activities involved.

Table 1.

Examples of mobile device interventions.

| Intervention | Area of study | Summary of intervention |
|---|---|---|
| Motion Math | Maths | The Motion Math model includes multiple levels of mathematics content, aimed at children aged 4–11 and covering general arithmetic concepts aligned to the school curriculum, including fractions, addition, subtraction, and percentages. Pupils can work independently at their own pace. The ‘tilt’ facility on mobile devices allows children to physically manipulate on‐screen graphics, e.g., directing a falling star to a slot. The game‐based intervention facilitates formative assessment through tracking performance, direct feedback to the child, and increasing difficulty when answers are correct. Teachers receive feedback on pupil usage and performance. |
| Mathletics | Maths | A learning platform designed for use in schools and aligned to the UK primary school curriculum, although it can also be used at home. Activities can be accessed via tablet or desktop computer and include a range of tutorials and interactive games. A test option is available, and pupil activities are marked automatically with detailed reports provided for the teacher. There is also a facility to assign homework. Activities incorporate challenges to motivate individual pupils, with points awarded for completion. Mathletics also includes scheduling and customisation facilities for teachers to support planning. |
| Learning Bug Club | Literacy | A phonics‐based reading programme which can be accessed online on all devices. eBooks, aligned learning activities and comprehension quizzes are matched to the curriculum and to individual children's skill level, allowing them to work at their own pace. Books and reading materials cover a range of fiction and non‐fiction topics to meet pupil interests, and a ‘read aloud’ function supports those who are struggling. Children can continue with their reading at home or school by logging in to their ‘My Stuff’ area, and rewards can be earned for completed activities. |

The types of activities undertaken via digital interventions vary widely, for example, basic reading and writing activities, playing games, watching instructional videos, researching topics of interest, completing online tests, or taking photographs or videos. The range of activities is as vast as the subjects covered. However, there are several evident commonalities. Play has a central role in the early years and primary/elementary education curricula, with a widely established body of research showing the effectiveness of play‐based learning, from free play through to instructive games (Whitebread et al., 2012). Most educational digital interventions aim to make learning more fun, creating a positive attitude towards a subject and aiming to enhance enjoyment of learning.

The screen size is an important difference between tablets (usually between 7 and 13 inches) and mobile phones or games consoles (usually smaller). There is limited research on the differences in educational value for each; however, a recent study by Haverkamp et al. (2023) found that the reading experience of university students using a tablet was more positive than when using a mobile phone. Given the discussion above on growing mobile phone ownership in children, and their potential use in education, it is useful to consider if screen size makes interventions more effective, or changes the learning experience in any way. Screen size is therefore considered in the meta‐analysis below. However, any comparison is undertaken with the assumption that a much more detailed understanding of the differences in functionality is necessary to draw conclusions.

Within literacy and numeracy, the range of mobile device interventions is also broad, reflecting the core subject elements, for example, phonics, spelling, grammar or comprehension. Table 2 provides further examples of how digital interventions may support learning. The next section also considers the literacy and numeracy learning outcomes which these interventions seek to support.

Table 2.

Examples of how elements of literacy and numeracy are addressed in mobile device interventions.

| Subject | Components | Activity examples in the research |
|---|---|---|
| Literacy | Sounds, words and reading | eBook: standard ereader with audio narration to support vocabulary recognition (Lee, 2017). Letter Works: a tablet app replicating magnetic letters on a virtual board, which children can manipulate to spell words (D'Agostino et al., 2016). |
| Literacy | Comprehension | Comprehension Booster: an online reading app with accompanying explanatory images, the option to have words read aloud, followed by comprehension questions to assess understanding (Horne, 2017). eBook reader with option to add notes to summarise the key points & demonstrate understanding (Union et al., 2015). |
| Literacy | Writing | Comics Head Lite: a ‘create your own comic strip’ app to create stories (Moon, Wold and Francom, 2017). Popplet: an online concept mapping tool to plan and develop essay ideas (Kervin & Mantei, 2016). |
| Numeracy | Number recognition & simple counting | Building Blocks Programme: 200 games to introduce shapes, patterns and numbers (Foster et al., 2016). Knowledge Battle: an educational videogame‐style intervention, presenting basic maths knowledge through fun characters and storylines (Hieftje et al., 2017). |
| Numeracy | Mathematical operators | Sacar10: An online maths programme presenting sums and mathematical challenges in game format (Zaldívar‐Colado et al., 2017). Catch the Monster: Online game‐based activities to support understanding of fractions, featuring digital number lines (Fazio et al., 2016). |
| Numeracy | Mathematical reasoning | OpenSim: An opportunity to trial maths concepts that come up in everyday life via a Virtual Reality environment (Kim & Ke, 2017). CoSy_World: A 3D virtual environment with maths problems and challenges to undertake to move from scene to scene (Bouta & Retalis, 2013). |

2.3. How the intervention might work

2.3.1. Aligning mobile device use in education with theories of learning

In considering how such educational interventions might work, it is useful to consider how their features may align with traditional pedagogy, and in particular theories of learning. A summary of key learning theories and reflection on how new educational technologies, and in particular mobile devices, align to these theories, is set out in Table 3 below.

Table 3.

Summary of relevant theories of learning and their application to mobile interventions.

| Theory | Notable influencers | Key features | Influence in mobile device interventions |
|---|---|---|---|
| Behaviourism | John B. Watson, B.F. Skinner, Ivan Pavlov | Classical and operant conditioning, where learning is encouraged through reward and punishment, repetitive learning and stimulus feedback. | Rote‐learning and repetitive activities, practice games, basic feedback through right/wrong sounds or symbols, advancing to the next level when a required standard or score is achieved. |
| Cognitive Learning Theory and Constructivism | Jean Piaget, Carl Rogers | Active rather than passive learning, involving interaction with surroundings, physical manipulation of objects to learn about their properties, and interpretation of observations based on existing knowledge. Child‐centred, hands‐on and creative learning, with the teacher as facilitator rather than instructor. Learning through development of relationships and engagement with others, collectively making sense of the surroundings. | Manipulative learning through touchscreen function, allowing children to explore shapes and object properties; child‐centred learning with the child working through activities at own pace; engaging technology providing opportunities for creativity and investigation, either alone or in groups. |
| Social constructivism (or socio‐cultural approach) | Lev Vygotsky, Jerome Bruner | As above, active learning and teaching, however learning is a two‐way process involving collaboration with peers and teachers. Modelling behaviour supports learning (scaffolding), and positioning learning within the Zone of Proximal Development. | Interventions which include instructional elements (virtual scaffolding), the opportunity to review these and repeat until competent, collaborative opportunities, detailed feedback to teacher to allow appropriate intervention. |

Modern pedagogy reflects a range of learning theories and approaches, each of which incorporates elements that can be integrated within mobile device intervention design to better support learning. The independent and self‐directed nature of learning, which well‐designed mobile devices can offer, places the child at the centre of their own learning, empowering them to think critically in decision‐making (Woloshyn et al., 2017; Wong & Lung‐Hsaing, 2013). Mobile devices can also provide individualised learning experiences to support each child's unique approach to learning. However, the role of social learning, collaboration and shared enquiry is less obvious within existing mobile interventions, particularly where mobile devices are used alone, and should be supported through opportunities to engage with peers during the activities.

Mobile device interventions also provide opportunity for formative assessment (Mitten et al., 2017), which Higgins (2014) proposes should lead to action or change, either for the teacher or the learner. However, effective feedback must move beyond ‘right or wrong’, instead supporting understanding of why and what lessons can be learned. If used appropriately, digital devices in the classroom can provide individualised and specific feedback on progress directly to pupils, while bringing additional opportunities for teachers to review and assess pupil progress in real time and offer targeted feedback to support learning (Dalby & Swan, 2019).

Hirsh‐Pasek et al. (2015), Kucirkova (2017) and others have highlighted the need to develop a framework to guide the quality, design and content of commercially available educational Apps, as these are currently largely unregulated. Kucirkova (2017) also highlights the lack of input from teachers and other educational professionals in their design. Coproduction, she notes, would increase both the quality and applicability of Apps, and their uptake by teachers.

2.3.2. Outcomes for children

Within education, research on the impact of technology on pupil outcomes usually focuses on either academic achievement (primary outcomes) or factors that influence learning (moderating factors). Within literacy and numeracy, primary outcomes may include reading or writing fluency (e.g., Wu & Gadke, 2017), or accuracy (number of sums correct) in a maths test (Musti‐Rao & Plati, 2015). Moderating factors may impact the teaching environment or pupils' learning experience, for example, motivation to learn (Turan & Seker, 2018), enjoyment of lessons (Moon, Wold, & Francom, 2017) or opportunities to better collaborate with classmates (Davidsen & Vanderlinde, 2016).

Primary outcomes

Within education, primary outcomes for children refer primarily to their academic achievement across subjects studied. Examples include reading fluency and comprehension in literacy; number recognition or application of operators (addition, subtraction, multiplication, division) in maths; or ability to recognise patterns and classifications in science. Primary outcome measures generally include standardised tests, or researcher‐developed assessments. Standardised measures are available for many common academic subjects, and benefit from the availability of a ‘norm’ against which individual scores can be compared, as well as standardised procedures for administration and scoring (Morris, 2011). Examples include the Stanford‐Binet Intelligence Test (and other similar tests) which provides an overall measure of ability; or the New Group Reading Test (GL Assessment) which provides a reading age and ‘standard age’ score. The statutory educational assessments of a country are usually standardised and often used in research of this nature.

Where a standardised measure does not exist, is expensive or requires expertise to administer, the researcher may develop their own tool, such as a test based on curriculum content, or a marking scheme developed to assess the content of a piece of work. Researcher‐developed measures can lack the validity of standardised tests; however, validity can be assessed, for example, by examining correlation with a standardised tool (as demonstrated by Proctor, 2011). Often, a combination of standardised and researcher‐developed measures may be most appropriate.

The Education Endowment Foundation (2019) reports extensive evidence of ‘moderate learning gains’ when technology is integrated in teaching across a wide range of subjects and age groups, resulting in an additional 4 months' progress on average (EEF ‘toolkit for teaching and learning’ calculations based on impact, cost and strength of evidence). However, EEF conclusions suggest that the type of technology, and the way in which it is integrated within the classroom, vary widely. A brief look at studies on the impact of technology on literacy or numeracy reveals a broad spectrum of interventions and findings, significant and non‐significant. Simms et al. (2019) undertook a systematic review of interventions to support mathematical achievement in primary school children. While the scope of the review incorporated all maths interventions, the authors identified 42 (from a total of 80) interventions which required technology to engage children in numeracy learning. The format of these activities varied widely; in some cases, children were engaged directly in virtual environments, others involved playing online games, while others used technology only as a small part of the activity, for example, using a digital pen to undertake maths exercises. Individually, the studies identified showed a range of significant and non‐significant effects, and due to the variety of interventions, Simms et al. (2019) were unable to undertake meta‐analysis to demonstrate an overall effect size. However, there are clear lessons from the overall review, notably that while the delivery mechanism plays a role, we must also look beyond this to understand the theory and strategies at play within the intervention. Meanwhile, Talan (2020) undertook meta‐analysis of studies using mobile learning across all subjects and grade levels and found an effect size of −0.015 for maths interventions, representing the smallest impact across all subjects. The findings therefore reveal a lack of consistency.

Cheung and Slavin (2012) also conducted a systematic review and meta‐analysis of education technology to support reading. From a total of 85 studies, they found that technology had a small, positive effect on literacy in comparison to ‘normal’ activities. However, as with their study above, this was undertaken before mobile device development and therefore represents only traditional technology (computers and interactive whiteboards). The study included pupils from 5 to 18 years, and reports differing effects, with higher learning gains for older pupils, in contrast to the same authors' study on maths achievement above. The authors note the wide range of interventions and varying effects between studies, concluding that more research is needed to better understand the overall impact and how the interventions can be most effectively used.

More recently, Tingir et al. (2017) undertook a meta‐analysis of mobile device use across grades K‐12 (aged 5–18), incorporating all subject areas. Due to limited search scope, only 14 studies were identified, 3 of which included reading interventions. Sub‐group analysis revealed interventions for reading to be significantly more effective than those for other subjects; however, results should be interpreted with caution given the small number of studies included. Most recently, Savva et al. (2022) undertook meta‐analysis to examine the effects of electronic storybooks on language and literacy outcomes for children aged 3 to 8. While reporting a small, positive effect, the authors also discuss the inextricability of the device from learning theory and teaching approaches and features. In particular, they reflect on the role of adult scaffolding and the potential effectiveness of device features which seek to replicate this. Commonly across all such studies, blanket conclusions on the effectiveness of technology versus traditional teaching methods are not prudent due to the complex nature of both the subject area and the intervention features.

As already noted, the use of technology is not always going to be relevant to a class, subject or situation, and other teaching approaches, tools and methods will be more appropriate. However, where relevant, technology has the potential to impact on a wide‐ranging set of primary outcomes, depending on how it is used. As demonstrated above, the actual impact can also vary widely; in relation to literacy and numeracy, this is explored further through this systematic review.

Moderating factors

A moderating factor or variable refers to the situation when the relationship between two variables (in this case, mobile device use and academic outcome) is influenced (moderated) by a third variable (e.g., motivation to learn). The teaching environment across each school differs, as do individual child interests, abilities and behaviours – each of these introduces a wide range of potential moderating variables, which may have positive or negative impact on the desired primary outcome. OECD (2015) found that those countries reporting heavy investment in technology in schools demonstrated no significant improvement in reading, writing or maths. While mobile device usage in the classroom continues to grow, effectiveness remains unclear. Therefore, a closer look at the factors which may moderate impact is prudent. These include increased collaboration, inclusion and motivation to learn, as well as teacher skills, attitudes and approaches.
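The definition of moderation above is commonly formalised as a regression with an interaction term. The following is a generic textbook sketch rather than a model specified in this review; the symbols ($Y$ for the attainment outcome, $X$ for mobile device use, $M$ for the moderating variable) are illustrative assumptions.

```latex
% Illustrative moderation model (symbols are assumptions, not taken from the review):
% Y = attainment outcome, X = mobile device use, M = moderator (e.g., motivation to learn)
Y = \beta_0 + \beta_1 X + \beta_2 M + \beta_3 (X \times M) + \varepsilon
% Conditional effect of X on Y at a given level of M:
\frac{\partial Y}{\partial X} = \beta_1 + \beta_3 M
```

Under this sketch, a non‐zero $\beta_3$ would indicate that the relationship between device use and the outcome depends on the level of the moderator.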

Collaboration and inclusion: Clark and Abbott (2015) evaluated iPad implementation in a primary school in Northern Ireland, situated in the 10th most deprived area, and the first school in the region to provide one‐to‐one tablets for pupils. They found increased inclusion and collaboration, observing that children with additional needs were able to join in with activities on the tablets, and previously observed gender differences in subject areas decreased. Overall, children's interest was sustained, and ownership of learning increased.

Burke and Hughes (2018) studied the integration of touchscreen technology in the curriculum in Canada, with a particular focus on students with diverse abilities, and found that such technology in the classroom can be transformative where children have previously had difficulty engaging with traditional teaching methods. Technology has also been shown to support a reduction in gender inequalities in education. Clark and Abbott (2015) reported that boys' motivation often increased with the use of iPads in lessons, while McQuillan and O'Neill (2009) discuss how the embedding of technology in the curriculum from an early age has narrowed the gap in technology skills between boys and girls and has had a positive impact on girls' participation in STEM subjects.

Motivation to learn: Self Determination Theory (Deci & Ryan, 1985) offers a distinction between extrinsic and intrinsic motivation and has been influential in pedagogy. Extrinsic motivation is driven by external factors, such as a fear of getting into trouble, or the promise of a reward. Intrinsic motivation stems from internal factors, such as a sense of achievement, personal challenge, or ‘purely for the enjoyment of the activity in itself’ (Ryan & Deci, 2000). Researchers have shown that intrinsic motivation is a stronger determinant of engagement in the classroom (see e.g., Richter, 2016; Taylor et al., 2014). Malone and Lepper (1987) propose a ‘taxonomy of intrinsic motivations for learning’. The task should be challenging in a way that is neither too boring nor too difficult, allowing learners to select their own level of ability and work at their own pace. Tasks should stimulate curiosity, in both sensory and cognitive ways, for example, through sounds, pictures, and actions. The learner should feel in control of the task, with the ability to make independent choices and control the direction of activities themselves. Opportunities for cooperation and collaboration increase intrinsic motivation through increased social competence, the realisation of common goals and the opportunity to learn from and support one another. Finally, the task should provide an element of competition, with others or with oneself. These elements have important implications for the design of educational interventions.

Teacher attitudes and beliefs: Kagan (1992) proposed that teachers screen any new knowledge through a filter of existing pedagogical beliefs. Those who do not feel adequately skilled in the use of mobile devices and their applicability to pedagogy, or do not feel positively towards the potential of educational technology, may be unwilling to use them. A systematic review by Tondeur et al. (2017) found that pre‐existing pedagogical beliefs can be a barrier to technology integration. Choy and Ng (2015) further support this view, citing studies which demonstrate that despite availability of technology in many schools, teachers who view technology less favourably are less likely to use it in a transformative manner, when transformation is desirable and relevant in the context. However, Matzen and Edmunds (2007) found that the relationship between pedagogical beliefs and technology use is bi‐directional, with technology also having the power to change pedagogical beliefs over time. Indeed, Burden et al. (2012) found that mobile devices forced teachers to rethink their role in the classroom, changing the way they relate to their students and helping them work more collaboratively. Long‐held beliefs are the hardest to change, while more recently formed beliefs can be more easily influenced. Professional teacher development can therefore support behaviour change in this regard if effort and focus are placed on understanding and changing these beliefs.

Positive leadership is also critical. Before mobile technology, Matzen and Edmunds (2007) reflected on how the wider school context, culture and resources could support or hinder technology integration, positing that a whole school approach is necessary for technology implementation to be transformative. Choy and Ng (2015) note that the school culture and infrastructure can impact individual teacher attitudes and suggest that a school principal with a positive attitude to technology, coupled with a clear school vision, strong communication and, of course, access to technological tools, increases the chances of teacher ‘buy‐in’.

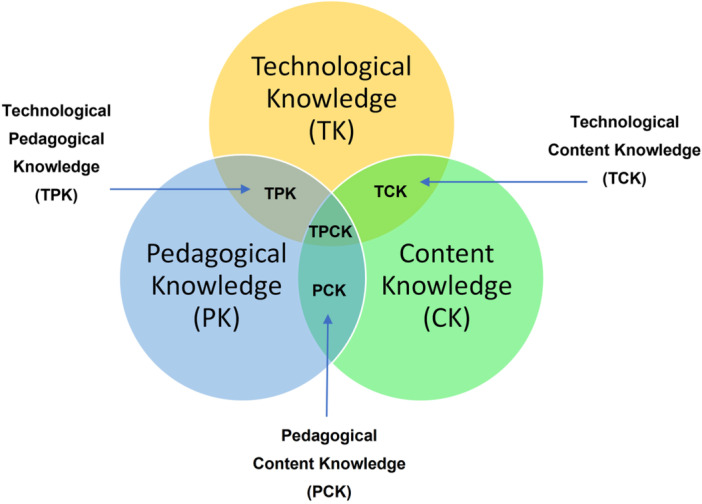

Teacher skills and knowledge: Access to mobile devices, and availability of well‐designed applications which mirror the theories of learning and motivation discussed above, do not automatically translate to improved outcomes for pupils. When their use has been identified as relevant, the way in which these devices are used to support learning is key. The TPACK Framework (Technological, Pedagogical and Content Knowledge) (Mishra & Koehler, 2006) is a commonly cited model within the literature on teachers' implementation of technology in the classroom (see e.g., Dewi et al., 2021; Santos & Castro, 2021; Voogt & McKenney, 2017) and is therefore useful to consider in further detail. The TPACK Framework (Figure 2) adds a technology filter to the Shulman (1986) theory of pedagogical content knowledge, which has been influential in teacher education and development (Berry, 2008).

Figure 2.

The TPACK framework.

The TPACK Framework identifies the knowledge components necessary for a teacher to effectively integrate technology in the classroom. These include content knowledge of their specific subject area; pedagogical knowledge of general approaches to teaching; and technological knowledge of the equipment and applications. The model considers the intersection of these three elements of knowledge, theorising that for successful and effective implementation, all three must be present and, importantly, combined in the classroom.

Many researchers have identified teachers' lack of appropriate technological knowledge, and/or their understanding of how to combine it with their content and pedagogical knowledge, as a barrier to technology implementation (Kearney et al., 2018; Voogt & McKenney, 2017). Indeed, Burke and Hughes (2018) suggest the biggest barrier to technology implementation lies in a lack of teacher training and support to keep up to date with advancements. Building and sustaining teacher skills and knowledge through continued professional development, and supporting a positive attitude to technology, must therefore be prioritised when embedding mobile technology in the classroom (Zipke, 2018).

Degree of enhancement to pedagogical practices: A further critical implementation theory focuses on the degree to which mobile devices are used to enhance, rather than duplicate, current practices. The SAMR model (Substitution, Augmentation, Modification, and Redefinition) (Puentedura, 2006) receives prominent focus (e.g., Crompton & Burke, 2020; Keane et al., 2016; Savignano, 2018). The model considers the degree to which technology can change what was previously possible in the classroom. Table 4 below describes the four levels of technology integration defined by Puentedura, and additionally provides an example of classroom activities at each level.

Table 4.

Stages of the SAMR Model, Puentedura (2006).

| Implementation stage | Description | Example of activity |

|---|---|---|

| Substitution | Technology acts as a direct substitute, with no functional change | An online book is used in place of a paper copy of the same book. There are no additional pictures or content included in the online version. |

| Augmentation | Technology acts as a direct substitute, with functional improvement | A computer word processor is used to write an assignment, allowing for a more creative presentation such as the inclusion of pictures or diagrams, while the ability to edit documents makes the drafting process easier. |

| Modification | Technology allows for significant task redesign | Pupils use the internet to undertake independent research to inform their assignment. This has the potential to expand their knowledge, while also supporting the development of new skills. |

| Redefinition | Technology allows for the creation of new tasks, previously inconceivable | A multi‐media assignment is undertaken, with pupils using video, audio recordings and other creative tools to develop their assignment, and then share this with peers via a class blog. |

Substitution and augmentation are both considered to enhance pedagogy; the usual activities are undertaken, although there may be some additional function. Modification and redefinition are both considered to transform pedagogy; technology makes it possible for new and creative activities to be undertaken, thereby adding to the existing learning experience. Puentedura (2006) proposed that for true transformation of learning, implementation must aspire towards redefinition of pedagogy, rather than simply substituting one tool for another. However, in practical terms, the model is a spectrum of technology integration. As the potential application of technology in the classroom grows, the SAMR model is increasingly being used to influence good practice in classroom settings, and to support teaching professionals in their efforts to transform the pupil experience.

Researchers have also attempted to use the SAMR framework to categorise practice across schools, with varying success. Geer et al. (2017) found it difficult to distinguish between the four stages when assessing the extent of implementation, but resolved this by classifying technology use as either enhancing or transformative. They found most teachers using technology to enhance rather than transform their practice, potentially due to the relatively recent adoption of such technology. The SAMR tool has its limitations, not least that it focuses on the technology itself and ignores wider modifiers such as teacher and pupil knowledge and attitudes, and the wider dynamics within the classroom (Hamilton et al., 2016). Hamilton et al. also note that Puentedura provides limited information on the theoretical background or supporting evidence for the development of the model, and that there is no peer‐reviewed literature underpinning it. However, as a model by which to categorise the extent of implementation, it is a useful one, for both educators and researchers.

TPACK and SAMR are of course not the only implementation models, but they are prominent in research and practice discussions. A simplified version of SAMR, RAT (Replacement, Amplification, Transformation), developed by Hughes et al. (2013), makes practical application clearer by generalising augmentation and modification, which have been criticised as being difficult to distinguish between. Puentedura also drew parallels with ‘Bloom's Taxonomy’ (Bloom, 1956), a long‐established pedagogical model of the learning journey, from remembering and understanding new knowledge; then applying and analysing the knowledge; through to creating or generating new knowledge.

Overall, it is clear that simply providing mobile devices to all primary school classrooms is not enough to improve child educational outcomes. The ways that these are used to motivate learners, the types of activities undertaken, the skills and knowledge of the teacher and the added value to existing practice, are key. While these implementation models have been criticised as being too simplistic (see e.g., Choy & Ng, 2015), given the dynamic natures of both pedagogy and technology, and the number of factors that may impact implementation in the classroom, they make a valuable contribution to our understanding of how technology may enhance educational outcomes, and the factors for consideration in practical implementation in the classroom. Importantly, both theories remain relevant despite the rapidly changing nature of technology and its application in the classroom. While the meta‐analysis in this review considers only primary outcomes, these implementation considerations are critical to the qualitative analysis, and the interpretation of findings.

2.4. Why it is important to do this review

There are existing meta‐analyses and reviews on a similar theme, but with limitations. A systematic review by Haßler et al. (2015) is most similar; however, it is not a registered systematic review, which may have implications for its robustness. The searches were undertaken in 2014, following which there has been rapid growth and evolution of the use of mobile technologies in the classroom. The current review therefore draws on more recent research. Furthermore, Haßler et al.'s review considered both primary and secondary school use, did not include smartphones, and focused on wider learning outcomes. No sub‐analysis was completed, either across age groups or specific learning outcomes. Given the differences in curriculum content and teaching approaches in primary and post‐primary schools (or equivalent), a closer look at primary school practice is merited.

A protocol is currently registered with the Campbell Collaboration (Liabo et al., 2016) with a focus on the impact on academic achievement (including literacy, numeracy, and wider knowledge) and on school engagement (as measured by attendance patterns and school enjoyment) of schemes which primarily seek to increase pupils' wider access to technology, such as discounted laptop schemes or Internet access. These devices are not necessarily for use within the classroom, rather may be used at home or within the community. Additionally, the final review has not yet been completed.

Most recently, Dietrichson (2020) undertook a systematic review of school‐based interventions to improve reading and mathematics for students with or at risk of academic difficulties in grades Kindergarten to six (primary school equivalent). While there is some crossover, this review included all interventions rather than those specifically using mobile devices, and focused on targeted interventions for those experiencing educational delays, rather than on those for the class as a whole.

A full list of further reviews identified is included in Supporting Information: Appendix 1 along with details of their area of focus and limitations in relation to this review. In summary, existing reviews differ from the proposed review in a number of important ways. They tend to be focused on older or younger age groups of children (pre‐school, post‐primary, higher education) without sub‐analysis on the age‐group of interest, or focused specifically on pupils requiring additional support or with special educational needs, rather than general usage of mobile devices in the classroom. They are often inclusive of all technologies (including e.g., interactive white boards and desktop computers) rather than focused specifically on mobile devices, or more narrowly focused (e.g., on iPad branded tablets only). Given the speed of development of technology and the rapid evolution of technology applicability in the classroom, the searches are quickly outdated. Finally, they may not meet the standards set out by the Campbell Collaboration in terms of systematic review methodology, for example, by including peer reviewed journals only, or excluding grey or unpublished literature. For this reason, the current review usefully adds to existing knowledge.

Any innovation in the classroom has the capacity to impact all pupils and must be implemented by professionals who are equipped with the skills and knowledge to use it appropriately and effectively to support pupil attainment. This review will provide an accessible resource for policy makers, educational practitioners, and technology developers in the world of primary or elementary education. It has important policy and practice implications across several areas, including curriculum development and delivery; technical provision in schools; school policies and infrastructure, and teacher training and professional development.

3. OBJECTIVES

This systematic review sought to understand how mobile devices are used in primary/elementary education around the world. The study aimed to identify and synthesise high quality research (published and unpublished) to determine how activities undertaken using these mobile devices in the primary classroom might impact literacy and numeracy attainment for the pupils involved. Within this context, mobile devices are defined as tablets (including iPads and other branded devices), smartphones (usually those with a touchscreen interface and internet connectivity) and handheld games consoles (again usually with touchscreen and internet‐enabled). The interventions of interest are those aimed at improving literacy and/or numeracy for children aged 4–12 within the primary/elementary school (or equivalent) classroom.

Specifically, the review aimed to answer the following primary research question and five supplementary questions:

1. What is the effect of mobile device integration in the primary school classroom on children's literacy and numeracy attainment outcomes?

2. Are there specific devices which are more effective in supporting literacy and numeracy? (Tablets, smartphones, or handheld games consoles)

3. Are there specific classroom integration activities which moderate effectiveness in supporting literacy and numeracy?

4. Are there specific groups of children for whom mobile devices are more effective in supporting literacy and numeracy? (Across age group and gender).

5. Do the benefits of mobile devices for learning last for any time beyond the study?

6. What is the quality of available evidence on the use of mobile devices in primary/elementary education, and where is further research needed in this regard?

3.1. Stakeholder engagement

Chapter 2 of the Cochrane Handbook (Thomas et al., 2019) highlights the importance of stakeholder engagement throughout the review process, from defining priority topic and review questions through to interpreting review findings in relation to everyday practice. A participatory approach has therefore been incorporated to ensure stakeholder engagement throughout this review process. Cottrell et al. (2015) identified several benefits of stakeholder engagement in systematic reviews, including increased credibility; the ability to anticipate controversy; transparency and accountability; improved relevance; enhanced quality; and increased opportunity for dissemination and uptake of findings. They also identified several challenges, including the time required to engage stakeholders; training and resources needed; and the process of engaging appropriate people at the appropriate time.

An Expert Advisory Group was established early in the process to shape the review focus and bring expert knowledge about everyday practice, as practical primary school teaching experience was not amongst the skills of the core systematic review team. The group comprised four members: a primary school vice‐principal and a primary school senior teacher (both technology leads within their schools); a parent of three primary‐aged children; and an educational policy professional with expertise in the use of technology within the primary school curriculum. The advisory group was small to align with reviewer capacity, but brought key knowledge and skills in terms of practical application of technology in education, from the perspective of teacher, parent, and policy developer. To recruit advisors, emails were sent to pre‐existing contacts, and recommendations were followed up. A summary paper and Terms of Reference for the Expert Advisory Group were developed and shared with proposed members to ensure informed consent (see Supporting Information: Appendix 2).

The group met on three occasions during the review process (one face to face meeting and two online meetings, due to COVID‐19 pandemic restrictions), with further engagement via email between meetings. The first meeting took place in the early stages of the review process, before title registration. In addition to introducing the review, discussion focused on the types of technology used in classrooms, with the advisory group supporting a narrowed focus from technology more broadly to the specific use of mobile devices, in line with their experience of current practice. The proposed focus of literacy and numeracy was also discussed at the meeting and the group agreed this was a common area of interest for all primary school teachers and therefore of practical relevance.

Following the first meeting, group members were engaged in several email discussions on the common devices and applications used in primary schools to support literacy and numeracy learning, which helped to refine the interventions of interest and inclusion/exclusion criteria for use in the search process. A follow up online meeting then took place to discuss the feedback and propose the focus and approach to be taken in the review. This feedback contributed to protocol development and submission, and to the final methodology employed.

A further email activity took place following identification of the final set of included studies, to support the classification of included interventions under the SAMR framework. A summary paper (Supporting Information: Appendix 3) was shared with group members, describing the SAMR framework and stages, the interventions identified in the included studies, and the key features of each. Group members were invited to use their professional experience to classify each intervention as substitution, augmentation, modification or redefinition of ‘normal’ practice. Following email feedback, an online focus group was held with the Expert Advisors to discuss their conclusions and finalise classifications for each included intervention. At this stage, a wider discussion was also held with the group on how the research interventions compared to ‘real life’ practice, and where they felt the benefits of technology lay from their personal experience. This focus group was recorded and transcribed, and the reflections used to support interpretation of the findings. Finally, a draft Plain English Summary was shared with Advisors for review and comment to ensure accessibility to non‐researchers.

4. METHODS

4.1. Criteria for considering studies for this review

4.1.1. Types of studies

This systematic review, and the method described below, is based on a pre‐published protocol (Dorris et al., 2021). The search criteria used to identify studies for inclusion focused on the participants studied, the intervention undertaken (including outcome/s of interest, delivery method and venue in which the intervention was delivered), and the primary research methodology adopted (types of studies).

Connolly et al. (2018) found the use of RCTs in educational research to have increased significantly, and their applicability to have been demonstrated. RCTs are considered amongst the highest quality standards of evidence; therefore, only studies which reported effect sizes through the comparison of intervention and control groups, through either RCTs or cluster RCTs, were eligible for inclusion in this review. Control groups could include either traditional teaching methods which did not incorporate technology (no intervention), or an alternative technology (e.g., desktop computers). Included interventions must have been time‐equivalent; interventions that resulted in pupils receiving additional tuition beyond standard class time were therefore excluded. Quasi‐experimental, non‐experimental, or qualitative studies were excluded. Qualitative data was extracted from the final selected studies to provide some background to the differing interventions and to support subgroup analysis; however, wider analysis of the content/approach of interventions and their theories of change was not possible within the review scope.

4.1.2. Types of participants

The included population for this review was children within mainstream primary, elementary or kindergarten education settings in any country (with ‘mainstream’ referring to the dominant statutory educational provision of the country). These children are usually in the age range 4 to 11; however, on occasion some children aged 12 were included, as it was not possible to isolate the effects across different age groups. There were no cases in which both primary and post‐primary aged pupils were included within a study. Studies which assessed the use of mobile devices in special schools, educational provision other than at school, informal preschool settings or home schooling were excluded. Additionally, interventions targeted at a sub‐group of low‐performing students, rather than the class as a whole, were excluded. Eligible studies from all countries were included if they were returned by the search; however, it is important to acknowledge that searches were conducted in English across databases which overrepresent English‐language research.

4.1.3. Types of interventions

Included in the review were interventions initiated within the primary school classroom (or global equivalent) that used mobile devices (including tablets, smartphones, or handheld gaming devices) to intentionally support learning for the class as a whole. Interventions were considered where delivery was by the classroom teacher or a researcher, as long as it took place within the usual day‐to‐day class time. In all interventions, the device must have been used directly and primarily by the child, although some use by the teacher alongside this was acceptable. The decision on which devices to include was discussed with the Expert Advisory Group and informed by the earlier literature review. Tablets were considered the most likely device used in classrooms; however, smartphones and handheld games consoles were mentioned in the literature and are cheaper and more accessible, and were therefore important to capture. Laptops, Chromebooks and similar devices were excluded from the study as they lack the portability, dexterity and manipulation that tablets and smaller devices bring, and were therefore felt to provide a different overall experience. Table 5 summarises these criteria.

Table 5.

Inclusion and exclusion criteria for interventions.

| Eligible interventions included | Ineligible interventions were those which |
|---|---|
| | |

4.1.4. Types of outcome measures

While the literature on technology integration in primary school classrooms considers a wide range of influencing factors, including enhanced motivation and engagement with peers (see e.g., Ciampa, 2014), only academic performance outcome measures were included in this study. Studies which focused on improvement in any element of literacy or numeracy were considered for inclusion.

Primary outcomes

In planning for this review, a range of source material was read, and a list compiled of the types of outcome measures used or reflected in papers and studies. The primary school curricula from across the four UK Nations were also reviewed to identify the elements of literacy and numeracy taught.

The wide range of potential literacy outcomes reflects the complexity of the subject and the multiple skills that effective literacy requires. These can be classified under three categories. Listening outcomes focus on hearing sounds, correctly combining sounds into words, and identifying the sound or word on the screen. Reading and writing outcomes include the accurate and fluent identification of written words, and accurate spelling and grammar when writing. Comprehension outcomes measure the understanding of what has been read and decision‐making ability based on the information available. These skills are usually learned in order of complexity; therefore, measures assessing comprehension were more likely to be used with older children.

Similarly, several common numeracy elements are assessed in studies. These can be grouped in three categories. Mathematical knowledge includes number recognition, identification of operators (subtraction, addition, multiplication, division) and how to use them (incorporating accuracy and fluency). Mathematical thinking covers problem solving, reasoning, spatial awareness and working memory, while complex operations include geometrical concepts and number manipulation. Again, children progress through these skills; therefore, older children are more likely to be assessed in the use of complex operations.

Within the scope of literacy and numeracy, many outcome measures are used, including standardised assessments, bespoke tools and the statutory academic assessment of the country. The inclusion of specific outcome measures was not used as a criterion for study inclusion; however, the measures used across a range of studies were used to identify search terms.

Secondary outcomes

No secondary outcomes were considered in this meta‐analysis.

4.2. Search methods for identification of studies

A sensitive and comprehensive search strategy was designed, including electronic and other sources. This is summarised below.

4.2.1. Electronic searches

Electronic databases and other search sources were identified in advance and stated in the published protocol (Dorris et al., 2021), in line with best practice in systematic reviews (see Supporting Information: Appendix 4). In compiling the final list of databases, a combination of professional experience, library subject guides and pilot searches was used to identify the most relevant for inclusion. The final search strategy incorporated relevant journal and other databases with a particular focus on education and social sciences; however, as recommended by the Campbell Collaboration (Kugley et al., 2016), both field‐specific and multidisciplinary databases were searched. The search strategy was designed to retrieve both published and unpublished literature, including government research or studies by non‐governmental organisations, conference papers and reports on proceedings, technical reports, dissertations and theses, white papers, and other relevant unpublished literature. Searches took place between October and November 2020, with databases accessed through Queen's University, Belfast, and via the Internet where relevant. As noted above, an update of the searches would be relevant in terms of identifying newly published studies to build on this work.

To conduct searches in the databases identified, a broad set of relevant search term groupings was first developed, as described in Table 6. An initial list of search terms was then compiled within each grouping by reviewing keywords and subject headings from a sample of randomly selected, relevant articles, and subject terms used in ERIC and British Education Abstracts. Careful consideration was given to synonyms, country‐specific spelling, and alternative names for devices, for example, primary school/elementary school; mobile phone/cell phone; randomised/randomized. The final search terms and keywords are included in Supporting Information: Appendix 5.

Table 6.

Search term groupings.

| Search term grouping | Details |
|---|---|
| 1. Population of interest | Combining broad terms for appropriate age with class/classroom/school. |
| 2. Setting | Mainstream primary school setting, or global equivalents. |
| 3. Intervention of interest | (a) Type of mobile device used (tablets, smartphones, handheld games consoles; all touchscreen and internet‐enabled) and (b) curricular topic addressed (i. literacy OR ii. numeracy and associated concepts). |
| 4. Study design | Randomised controlled trials only. |

Using Boolean operators, a sample search string was then developed (see Supporting Information: Appendix 6) by combining each grouping within ERIC as a trial database using the equation 1 AND 2 AND (3a OR 3b) AND 4. This exhaustive process is what sets a systematic review apart from other forms of literature review, lending both robustness and transparency to the work.
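As a rough illustration of how the groupings combine, the following Python sketch assembles a search string following the equation 1 AND 2 AND (3a OR 3b) AND 4. The terms shown are placeholders loosely based on the synonym examples given earlier in this section; they are not the search terms actually used, which are reported in Supporting Information: Appendices 5 and 6. The function names `or_group` and `build_search_string` are hypothetical, and the quoting and wildcard conventions are assumptions that would need adapting to each database.

```python
def or_group(terms):
    """Join synonyms with OR, quote multi-word phrases, wrap in parentheses."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_search_string(population, setting, devices, topics, design):
    """Combine the groupings as 1 AND 2 AND (3a OR 3b) AND 4."""
    # Grouping 3 joins its two sub-groupings (3a devices, 3b topics) with OR.
    intervention = "(" + or_group(devices) + " OR " + or_group(topics) + ")"
    return " AND ".join(
        [or_group(population), or_group(setting), intervention, or_group(design)]
    )


# Placeholder terms only (assumed for illustration, not the review's terms).
search_string = build_search_string(
    population=["child*", "pupil*"],
    setting=["primary school", "elementary school"],
    devices=["tablet*", "mobile phone", "cell phone"],
    topics=["literacy", "numeracy"],
    design=["randomised", "randomized"],
)
print(search_string)
```

Keeping each grouping as a separate list makes it straightforward to swap in database‐specific thesaurus terms while leaving the overall Boolean structure unchanged.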

As databases vary, the final search terms were adapted to suit each database by reviewing the database thesaurus, and again piloting the search string. Where available, database limiter functions were used for ‘school setting’ (education level), rather than inputting a search string. A record of each search completed was documented, including date of search, specific combination of keywords used, and total numbers of studies identified and retrieved (see Supporting Information: Appendix 7).

In undertaking searches in Google, a potential source of bias is introduced by inbuilt algorithms which track user data to provide personalised search results. ‘Secure’ search engines designed without tracking, such as DuckDuckGo, are available and can be used by systematic reviewers to avoid algorithm bias. However, various sources (Landerdahl, 2022; Rethlefsen et al., 2021) indicate that using ‘incognito mode’ within Google or Google Scholar will give the same results. Google Scholar was used in this review, with search history, location services and other personalisation options switched off so that tailored search results were not returned. The Google Scholar search function is limited to 256 characters (including operators); therefore, a smaller, more targeted search string was developed, and the first 500 hits were screened for relevance.
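The length constraint and screening cut‐off described here can be sketched in a few lines of Python. This is a minimal sketch, assuming the 256‐character limit counts every character in the query, including operators, spaces and quotation marks. The constant names, function names and shortened example query are hypothetical and do not reproduce the string used in the review.

```python
GOOGLE_SCHOLAR_CHAR_LIMIT = 256  # limit as stated in the text (includes operators)
MAX_HITS_SCREENED = 500          # number of hits screened for relevance


def fits_google_scholar(query, limit=GOOGLE_SCHOLAR_CHAR_LIMIT):
    """Return True if the query length is within the character limit."""
    return len(query) <= limit


def hits_to_screen(ranked_hits, max_hits=MAX_HITS_SCREENED):
    """Keep only the first `max_hits` results, preserving ranked order."""
    return ranked_hits[:max_hits]


# Shortened placeholder query (assumed for illustration only).
example_query = (
    '("primary school" OR "elementary school") AND (tablet OR smartphone) '
    "AND (literacy OR numeracy) AND (randomised OR randomized)"
)
print(len(example_query), fits_google_scholar(example_query))
```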

Grey literature and thesis/dissertation searching were important elements of this search strategy. Searches were undertaken via a range of relevant sources, including OpenGrey, Microsoft Academic Search, and ProQuest Dissertations and Theses. Additionally, government education websites were searched (across England, Scotland, Ireland, Northern Ireland and Wales) alongside websites of charities and funding organisations, including the Education Endowment Foundation, National Literacy Trust, National Numeracy Trust, and the British Educational Research Association.

In the pre‐published protocol to this study (Dorris et al., 2021), a date of 1990 onwards was proposed as a date limiter, given that devices such as the Delaware Fingerworks or Palm Pilot were in existence. On closer consideration, however, these devices did not have comparable functionality to tablets and smartphones, or educational applicability as considered in this study. The decision was therefore made to focus only on ‘new technologies’. As iPads and similar tablets only emerged from 2010 onwards, this was considered an appropriate limiter.

4.2.2. Searching other resources

Contact was made via email with authors prominent in the subject area, including the first and second authors of included studies and any others appearing regularly in excluded but relevant studies. Authors were asked to share details of any unpublished studies or work in progress, either of their own or known to them. Additionally, two relevant journals, the British Journal of Educational Technology, and Computers and Education, were identified through triangulation of information gleaned from identified studies, journal metrics and professional experience, and 5 years of editions were reviewed for relevant studies.

Alongside any conference proceedings identified through the grey literature searches above, several conferences were identified as being of high relevance, including the International Society for Technology in Education; BETT; British Educational Research Conference and the European Conference on Education. These were selected given their global reach, relevance to primary/elementary education and technology, and focus on research and pedagogy rather than marketing opportunities for technological products. This decision was also informed by the Expert Advisory Group, several of whom had personal experience of attending EdTech conferences and were familiar with the focus of each. The conference proceedings from 2015 onwards were searched by hand to identify those not yet indexed in the commercial databases.

Reference lists of included studies were reviewed, relevant studies identified, and articles retrieved online (via QUB database). Bibliographies of other relevant systematic reviews or meta‐analyses were also reviewed, and relevant studies identified and retrieved. Finally, a citation index search of relevant databases identified any more recent studies citing the already identified studies.

4.3. Data collection and analysis

4.3.1. Selection of studies