Abstract

In recognition memory, retrieval is thought to occur by computing the global similarity of the probe to each of the studied items. However, to date, very few global similarity models have employed perceptual representations of words despite the fact that false recognition errors for perceptually similar words have consistently been observed. In this work, we integrate representations of letter strings from the reading literature with global similarity models. Specifically, we employed models of absolute letter position (slot codes and overlap models) and relative letter position (closed and open bigrams). Each of the representations was used to construct a global similarity model that made contact with responses and RTs at the individual word level using the linear ballistic accumulator (LBA) model (Brown & Heathcote Cognitive Psychology, 57 , 153–178, 2008). Relative position models were favored in three of the four datasets and parameter estimates suggested additional influence of the initial letters in the words. When semantic representations from the word2vec model were incorporated into the models, results indicated that orthographic representations were almost equally consequential as semantic representations in determining inter-item similarity and false recognition errors, which undermines previous suggestions that long-term memory is primarily driven by semantic representations. The model was able to modestly capture individual word variability in the false alarm rates, but there were limitations in capturing variability in the hit rates that suggest that the underlying representations require extension.

Supplementary Information

The online version contains supplementary material available at 10.3758/s13423-023-02402-2.

Keywords: Recognition memory, Orthographic representations, Semantic space models, Linear ballistic accumulator

Possibly the single most important cornerstone of theories of episodic memory is the encoding specificity principle (Tulving & Thomson, 1973), which states that retrieval of a given memory is proportional to the similarity between the memory-in-question and the cues present at the time of retrieval. This principle is contrary to early notions of forgetting, which placed considerably greater emphasis on the strength of the encoded memories or the amount of time between the learning and retrieval events. Since its inception, virtually all successful memory models embody this concept at the core of the theory, including models of recognition memory (Cox & Shiffrin, 2017; Dennis & Humphreys, 2001; Osth & Dennis, 2015; Shiffrin & Steyvers, 1997), free recall (Howard & Kahana, 2002a; Lehman & Malmberg, 2013; Polyn et al., 2009; Raaijmakers & Shiffrin, 1981), and serial recall (Farrell & Lewandowsky, 2002; Brown et al., 2000; Henson, 1998). In these models, both the successes and errors of retrieval are direct consequences of similarity – the test cues may be sufficiently dissimilar to a learned representation to prevent remembering, or spurious similarity between the test cues and learned information can result in a false memory of an item having been studied.

Placing similarity at the heart of retrieval does, however, invite a further question – what defines the similarity between cues and memories? Without a definition of similarity, such models risk falling prey to the circularity problem – the memory judgments themselves define the similarity between cues and studied representations with no recourse to an independent standard. While the precise definition of similarity is a very large question (e.g., Medin, Goldstone, & Gentner, 1993; Tversky, 1977), models of episodic memory often define similarity as the overlap between the representation of a probe and a stored representation in memory. However, precisely defining the content of such representations is challenging, as finding "true" psychological representations might be considered the holy grail of cognitive science more generally. Many models circumvent the problem by randomly generating stimulus representations – similarity effects can be captured by assuming that items from a common category share features (e.g., Criss & Shiffrin, 2004; Hintzman, 1988). While this allows models to make predictions about different categories or list conditions, there are no tractable means for making predictions about individual items in an experiment.

Fortunately, there has been progress in defining stimulus representations that allow models to make predictions with fewer parameters, and even make predictions on an item-by-item basis. In recent years, memory models have been able to capitalize on the successes of the semantic space models (see Günther, Rinaldi, & Marelli, 2019; Jones, Willits, & Dennis, 2015 for reviews). Using a large corpus of natural text, semantic space models learn representations of words from their co-occurrence with other words in the text. The resulting representations bear similarity relationships that resemble human judgments – the similarity between the representations of "boy" and "girl" will be higher than the similarity between unrelated concepts such as "truck" and "pool." Memory models have enjoyed some success in using semantic representations from such models as word representations in both recognition memory (Johns et al., 2012; Monaco et al., 2007; Osth et al., 2020; Reid & Jamieson, 2023; Steyvers, 2000) and recall tasks (Kimball et al., 2007; Mewhort et al., 2018; Morton & Polyn, 2016; Polyn et al., 2009). A distinct advantage of this approach is that false memory errors as a consequence of semantic similarity emerge "for free" – when a list of highly similar items is studied, the high degree of overlap between the representations of unstudied probe words and the list words naturally leads to the prediction of false memory errors.

However, few models have further specified perceptual representations of words, despite the fact that perceptual similarity among words produces the same types of false memory errors as semantic similarity (e.g., Shiffrin, Huber, & Marinelli, 1995; Sommers & Lewis, 1999; Steyvers, 2000; Watson, Balota, & Roediger, 2003). One notable exception is the dissertation of Steyvers (2000), who specified a variant of the retrieving effectively from memory (REM) model that employed semantic representations derived from word association spaces in addition to orthographic representations of the letter strings. Specifically, Steyvers employed what is referred to as a slot code – letters are coded with respect to their absolute position of occurrence, meaning that the letters in the word "cat" would be encoded as "c" in the first position, "a" in the second position, and "t" in the third position.

As we will elaborate below, there are a number of other representational schemes besides slot codes for representing letter position, each with their own consequences for similarity between letter strings. An additional class of representations are models that code for the relative position within a letter string, such as coding for whether letters are adjacent to other letters in the string or alternatively coding for the distances between letters in the string, without regard to their absolute position.

The present work aims to explore the consequences of such representations on both the accuracy and latency of episodic recognition memory decisions. While many models and analyses only consider the accuracy of recognition memory, the latency of recognition memory decisions often co-varies with accuracy such that more accurate stimuli or conditions often exhibit shorter response times (Murdock & Dufty, 1972; Ratcliff & Murdock, 1976). In the first part of the article, we will describe a set of perceptual representations of words that define string similarity solely in terms of letter position. While the similarity among the letters undoubtedly would play a role, the positional schemes we pursue in this work are relatively simple and can capture similarity effects in recognition memory, where orthographic similarity is often manipulated by the number of shared letters rather than the similarity of the letters themselves (e.g., Sommers & Lewis, 1999). These representations of letter order are quite common in the psycholinguistics literature and have often been used either as the core representations of models of reading (Coltheart et al., 2001; McClelland & Rumelhart, 1981; Snell et al., 2018) or to explain phenomena such as priming effects and response times in same-different judgments among pairs of letter strings (Davis & Bowers, 2006; Gomez et al., 2008). In this work, we will factorially explore representations that a.) code for absolute or relative position of the letters within the string and b.) code with respect to one other element or multiple elements within the string, and, for the class of absolute position models, c.) whether letter position is purely forward-ordered or whether there are both forward- and backward-ordered representations. To date, such representations have not been used to explore perceptual confusions between words in episodic memory tasks, although they do bear a resemblance to the representational schemes in models of serial order memory (Caplan, 2015; Osth & Hurlstone, 2023).

These orthographic representations will make contact with recognition memory data by calculation of the global similarity between the probe word and each of the words in memory – each probe-item similarity will be averaged together to produce an index of how similar the probe is to the contents of memory, as in global matching models of recognition memory (e.g., Clark & Gronlund, 1996; Osth & Dennis, 2020). The global similarity values will make contact with both choice and latency data from individual participants via the linear ballistic accumulator model (Brown & Heathcote, 2008), which enables the models to make contact with both choice and response time data simultaneously.

In the second part of the article, we will explore how orthographic representations combine with semantic representations, specifically those from Word2Vec (Mikolov et al., 2013). When both orthographic and semantic representations are included, we can measure the respective weights of both representations in determining the similarity between the probe and each memory, which allows us to measure how these weights vary across shallow and deep processing tasks in a depth of processing manipulation. In addition, the models will also be tested to evaluate how well they capture memory performance on an item-by-item basis in a recognition memory megastudy of individual words (Cortese et al., 2015).

Orthographic representations

Orthographic representations are representations of the word form, specifically how the letters are arranged together. The similarity of such representations has clear consequences for episodic memory – when categories are constructed of orthographically similar words, such as mate, late, and date, one often finds the same patterns of performance as with semantic categories, namely an increase in the false alarm rate (FAR) as the category size on the study list is increased (Heathcote, 2003; Shiffrin et al., 1995; Steyvers, 2000), as well as high FAR to a non-presented prototype (Chang & Brainerd, 2021; Coane et al., 2021; Sommers & Lewis, 1999; Watson et al., 2003) when lists of orthographic/phonemic categories are constructed using the Deese-Roediger-McDermott (DRM) paradigm (Deese, 1959; Roediger & McDermott, 1995). In addition, lure probes that are orthographically similar to even a single target item often elicit higher rates of false recognition than lure probes than semantically similar lures (Cramer & Eagle, 1972; Gillund & Shiffrin, 1984). Finally, lure probes containing letters that were not present in any of the study list words are rejected substantially more easily than words that contain matching letters (Mewhort & Johns, 2000).

We will detail below a number of representational schemes for letter strings that can be used for each individual word in a recognition memory experiment. For each representational scheme, the matches between the memory and the probe string are summed together and divided by the alignment length, which is defined as the number of letters in the longer of the two letter strings, to produce a measure of similarity between 0 and 1.

In addition, each scheme contained weighting parameters that allowed for extra weight of the beginning and end letter of each string. This is on the basis of previous work in word identification that suggests that the exterior letters are more consequential for identification than the interior letters (Grainger & Jacobs, 1993; Jordan et al., 2003; Scaltritti et al., 2018; Whitney, 2001), which can be considered analogs of the primacy and recency effects at the within-word level.

Absolute position codes

In the absolute position scheme, similarity between letter strings is highest when the letters occur in the same absolute position across the two strings. Absolute position can be coded relative to either the beginning or the end of the letter string. In the simplest schemes, a match is only considered if the letter occurs in the exact same position across the two strings. In variants based on the overlap model (Gomez et al., 2008), partial matches are allowed when a letter occurs in a similar position across the two strings. We will elaborate on these schemes below.

Slot codes

Slot codes involve the association of each letter within the word to its position relative to the start of the string. Words such as "candy" and "carton" can be recoded as and , which both share "c" in the first position and "a" in the second position. The similarity between the two strings is proportional to the number of these matches. Slot coding has traditionally been a very popular form of word-form representation. It was the representational scheme used by the interative activation model (McClelland & Rumelhart, 1981), the multiple readout model (MROM: Grainger & Jacobs, 1996), the dual-route cascade (DRC) model (Coltheart et al., 2001), as well as the connectionist dual process (CDP++: Perry, Ziegler, & Zorzi, 2010) model. In episodic memory, slot coding strongly resembles (Conrad, 1965)’s box model of serial order memory and was the representation of word-form used in (Steyvers, 2000)’s variant of the REM model.

Formally, we can express a match m on position k between two letter strings i and j as:

| 1 |

where W refers to the length of the word, is a parameter for the weight of the match on the start letter, and refers to the weight of the final letter. The and parameters are common to all of the models and are freely estimated to capture the importance of exterior letter effects. Here, we assumed that the parameter only applies to the final letter of the studied word j and not the probe word. This assumption is inconsequential if both words are of the same length, but if the words are of different lengths, any changes in the weight of the terminal letter apply only to studied words and not to probed words.

The number of matches is simply the sum m across all letter positions:

| 2 |

The similarity s between the two letter strings is:

| 3 |

where is the alignment length of i and j, which is the length W of the longer of the two words. If the exterior letters are weighted the same as the other letters (e.g., and ), then the entire equation is simply the number of matches M divided by the alignment length a. The inclusion of and in the equation ensures that the similarity remains bounded between 0 and 1 as the weights of the exterior letters diverge from one.

According to the slot code scheme, the strings "baseball" and "based" would result in due to "b", "a", "s", and "e" occurring in the same positions and due to the division of M by 8 (the number of letters in "baseball") if all letters are equally weighted. The strings "raided" and "hamburger", in contrast, would result in as there is only a single letter that occurs in the same position across the two strings ("a" in the second position).

Consequently, slot coding yields similar representations when words share the same stems. For instance, the words "breakfast" and "breaks" will exhibit high similarity due to sharing the first five letters in the same positions across the two words. However, while slot coding succeeds at aligning words relative to the beginning of the word, it is unable to align words that share the same suffixes or endings if the two words are of different lengths. For instance, the words "vacation" and "representation" share the same "-ation" ending, but the two words will exhibit little similarity under a slot coding representation due to the fact that the common letters are not aligned.

Both-edges slot codes

The fact that words of different lengths cannot be easily aligned within a slot code can be remedied by augmenting the representation with representations that are aligned to the ends of the words. This is referred to as both-edges coding (Fischer–Baum et al., 2010; Jacobs et al., 1998). In this scheme, letters are represented relative to not just the beginning of the word, but the end of the word as well. Both-edges schemes have been employed in competitive queuing models of spelling (Glasspool & Houghton, 2005) as well as the MROM-p model (Jacobs et al., 1998). In episodic memory, the start-end model (Henson, 1998) employs a both-edges scheme in that each item in a list is encoded relative to the beginning and end of the list.

We use the slot codes defined in the previous section to define the start-based code. The end-based code can be done in a similar manner by coding each position in the word relative to the end of the word. As an example, we could define the word "kitten" as and "smitten" as . Using this positional scoring, we can calculate m, M, and s according to Eqs. 1, 2, and 3 above. These two strings share four letters, resulting in a similarity of 4/7 or .571. The start-based similarity, in contrast, is zero (0/7 matches). For the end-based slot code, the and parameters still correspond to the start and end of the initial string.

Overall similarity between two letter strings i and j in the both-edges code is a weighted combination of start-based and end-based similarities:

| 4 |

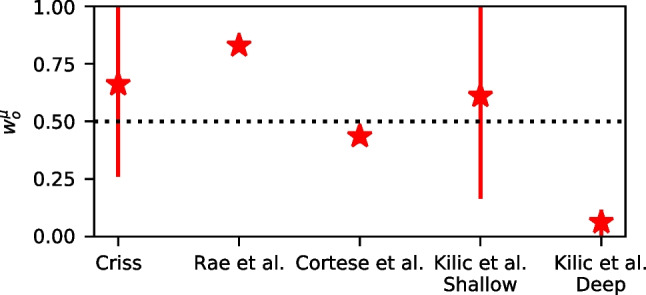

where w is estimated as a free parameter, but can reasonably be expected to be greater than .5 in most applications to data.

The overlap model

An alternative to both slot coding and both-edges representation is the overlap model (Gomez et al., 2008; Ratcliff, 1981). In the overlap model, rather than having letters associated with only a single position, letters are represented using positional uncertainty functions from the beginning of the string. The overlap model is often referred to as a "noisy" slot code because it is as if each letter occupies multiple letter position slots, but the strength is different for each position and the letter is most strongly associated to its true position. That is, consider the letter "d" in the word "badge" – the overlap model states that the "d" occupies all five letter slots, but is most strongly represented in the third position and is the weakest in the first and fifth positions. The positional uncertainty functions in the overlap model are analogous to the representations in many positional models of serial recall, where representations of within-list position overlap with each other (Brown et al., 2000; Burgess & Hitch, 1999; Lee & Estes, 1981), and also bear a resemblance to the CODE model of visual attention. (Logan, 1996).

The overlap model is formalized using Gaussian uncertainty functions – each letter is associated with a Gaussian distribution centered on its true position where the standard deviation of the Gaussian distribution is a free parameter and varies by serial position. In the overlap model, by convention the uncertainty is only present in one of the letter strings, which is usually either a prime or a briefly presented target. In the case of recognition memory, we define the uncertainty in the studied word, as this is only in memory and no longer presented to the participant. We will define k as the position of the letter in the probe string and l as the position of the same letter in the encoded string in memory:

| 5 |

where is the cumulative distribution function of the normal distribution.

is maximal when a letter occupies the same position in each word () and gets progressively weaker as l deviates from k. It is also important to note that m crucially depends on the standard deviation . As approaches zero, the model reverts to the slot code as all of the probability density in the encoded letter is centered on its true position. However, as increases, even if because the probability density of the letter’s position is distributed across multiple letters. In other words, the overlap model as implemented by Gomez et al. (2008) makes no distinction between strength and uncertainty (Davis, 2010). To correct for this and to make the model comparable to the other models, we have introduced the and parameters, which allow for flexibly weighting the exterior letters without affecting the precision of the letter representations. These were applied in the same manner as the forward slot code model.

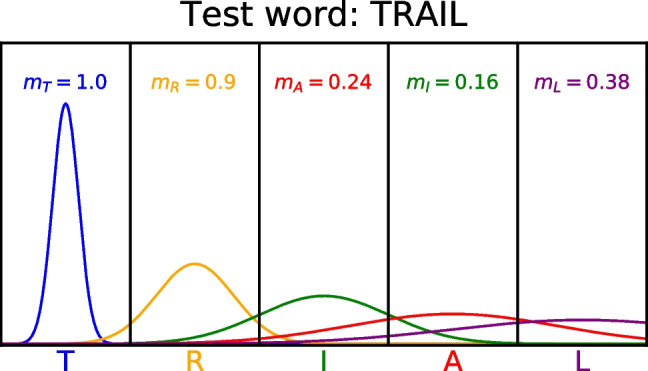

An illustration of the comparison between the studied string "trial" and the test word "trail" can be seen in Fig. 1. Each letter in the studied string is represented with uncertainty, which varies with letter position, and the higher uncertainty means that the letter’s identity "spills over" into nearby positions. The letter "T" is represented with high precision such that it is almost entirely concentrated in the first position, whereas the letter "L" is represented with low precision, such that there is a reasonable degree of probability mass in positions 3 and 4 of the string.

Fig. 1.

Illustration of the match calculation for two letter strings: a studied string “trial" in which each letter has uncertainty in its position coding, and a test string “trail." The match values m for each letter in the test string are also depicted. See the text for more details

When the string "trail" is matched against the representation of "trial", the match values depend on both the displacement of the letters in the test string from the studied string and the degree of uncertainty in the studied letter. The match for the letter "T" is 1.0 because the probability density for "T" is entirely in the first position. If another test word was matched instead that contained the letter "T" in the second position instead, such as "atlas", the match on the letter "T" would instead be approximately zero because there is very little probability density of "T" in the second position.

One can also see that for the other matching letters, the match values are a function of the uncertainty of each letter position. The match value of "L" is quite low because the uncertainty is sufficiently high that there is little probability mass in its true position. Nonetheless, this match value is still higher than the mismatched letters "A" and "I", which have lower match values because each of these letters are displaced by one position and the probability mass for mismatching letters is lower than for matching letters.

Conventionally, the standard deviation parameter varies across each letter in the word, which can result in a very large number of free parameters, especially in recognition memory where words can be ten letters or longer. Fortunately, Gomez et al. (2008) found that the standard deviations could be described more simply as a two-parameter exponential function in which the standard deviation increases with letter position:

| 6 |

where l is the serial position of a letter in the word, r is the rate of growth, and d is the asymptote – both r and d are estimated as free parameters in all of our fits to data below.

Both-edges overlap model

While the overlap model can be considered a noisy slot code, it inherits some of the limitations of the slot code in that positions are represented relative to the start of the string. For this reason, we developed a both-edges overlap model that can be considered a noisy version of the both-edges slot code model described above. Equation 5 is used to calculate similarity, with the exception that backward similarity within the overlap model is calculated relative to the end of the string. The forward and backward similarities are combined using Eq. 4, which introduces the weighting parameter w.

Relative position codes

In relative position codes, a letter is coded relative to other letters in the word rather than its position within the letter string. The most common implementation – and the one we pursue in this work – is coding the string in terms of adjacent letter bigrams. That is, a word such as "cat" can be decomposed into the bigrams CA and AT. The open bigrams allow for bigrams separated by other letters to be represented, such that an additional CT bigram would be represented in the string. While there are some models of word recognition that have considered letter trigrams (Seidenberg & McClelland, 1989), we know of little work exploring the consequences of these representations and have not pursued them in order to preserve a manageable number of representational schemes to explore.

Closed bigrams

The simplest relative position code involves coding the string into adjacent letter bigrams. Adjacent letter bigrams illustrate the advantage of relative position codes – similarity can be preserved if the absolute positions of substrings are perturbed across the two strings. For instance, consider the two words "members" and "remembering" – "member" is an embedded substring in both of these words, but there will be little alignment between the two strings according to absolute position schemes. If we break up the word into adjacent letter bigrams and use shared bigrams as the basis of similarity, we can see that both strings share the bigrams me, em, mb, be, and er. Closed bigrams resemble associative chaining models of serial recall, where items within a study list are directly associated to each other (Lewandowsky & Murdock, 1989). Direct associations between items are also common to address paired associate memory tasks and free recall (Criss & Shiffrin, 2005; Gillund & Shiffrin, 1984; Lehman & Malmberg, 2013; Osth, A. F., & Dennis, 2014; Osth & Dennis, 2015; Raaijmakers & Shiffrin, 1981). A disadvantage of all bigram schemes is that they do not have a natural way of capturing the importance of the exterior letters. For this reason, we augment the bigrams with representations of the beginning and end of the word. That is, the word "member" would also include and , where _ indicates the letter is an exterior letter, with the first position indicating that it is the first letter and the second position indicating that it’s the final letter. This is consistent with previous bigram representations (e.g., Hannagan & Grainger, 2012; Seidenberg & McClelland, 1989) and is also consistent with chaining models of serial order memory that additionally include representations of the start- and end-of-the-list (e.g., Lewandowsky & Murdock, 1989; Solway, Murdock, & Kahana, 2012).

A match m on a bigram k between strings i and j is scored as:

| 7 |

where the subscript refers to the set of bigrams in the string. A complication with bigram coding that we found is that a bigram may be repeated in one word but not another. That is, consider if the string "ababab" is compared against the string "ab" – a similarity value of .50 can be returned if each "ab" in the first string can be matched against the bigram in the second. For this reason, we made it such that each bigram can only match another bigram in a comparison string once. This was accomplished by removing each matched bigram from the set of possible bigrams during the comparison process1. Evidence for this assumption comes from the fact that strings with matching repeated letters do not show any priming advantage over strings with non-repeated letters (Schooenbaert & Grainger, 2004).

The overall similarity M is calculated in the same manner as the other schemes (according to Eq. 3) but with the exception that the alignment length a refers to the number of bigrams in the longest of the two strings.

Open bigrams

Open bigrams make allowance for adjacent letters that are separated by other letters. To prevent an explosion of possible bigrams as word length increases, we follow Grainger and van Heuven (2003) and only allow bigrams between pairs of letters that have at most two letters between them. To give an example, if we considered all bigrams that could be constructed from the letter "a" in the word about, the closed bigram representation would only include the bigram "ab", while the open bigram representation would additionally include "ao" and "au." Open bigrams can be considered the relative position analog of the overlap model – just as the overlap model could be considered a "noisy" slot code, open bigrams could be considered a "noisy" bigram representation. However, open bigrams differ from the overlap model because the longer-range bigrams are conventionally weighted the same as the bigrams formed from adjacent pairs of letters. Open bigrams are employed in models of word recognition such as the SERIOL model (Whitney, 2001) as well as the OB1-Reader (Snell et al., 2018). In episodic memory, open bigram representations strongly resemble associations from models that employ remote associations, where associations are formed between items that are more than one item apart (Logan, 2021; Murdock, 1995; Solway et al., 2012).

The match m on a bigram k is calculated according to Eq. 7 except that the set of bigrams in each string is larger, which likewise affects the alignment length a.

Levenshtein distance

We will be comparing each of the above representations to string similarity based on Levenshstein distance (Levenshtein, 1966). Levenshtein distance is a measure of string edit distance and refers to the minimum number of transformations (substitutions, insertions, or deletions) between any two strings. For instance, there is a Levenshtein distance of 1 between "dog" and "dogs" – this is because only a single operation (insertion of "s" into "dog" or removal of "s" from "dogs") is required to transform one string into another. Levenshtein distance has been used in various psychological applications, including quantification of orthographic density in the lexicon (Yarkoni et al., 2008), orthographic similarity effects in recognition memory (Freeman et al., 2010; Zhou et al., 2023), and measuring the difference between recalled sequences and study lists (Logan, 2021).

We can convert the Levenshtein distance between two strings to similarity using the following equation:

| 8 |

where the alignment length a refers to the number of letters in the longer of the two strings.

While Levenshtein distance is an extremely useful measure for computing string similarity, it was not designed as a psychological measure of orthographic similarity. For instance, each of the transformations that it considers are all equally weighted in the similarity calculation. In practice, each possible transformation often exhibits different consequences for perceived string similarity in masked priming tasks (Davis & Bowers, 2006; Hannagan & Grainger, 2012). While it would be possible to estimate different weights of these transformations, the psychological representations not only produce different weights and consequences for each operation, but they also provide more principled explanations for these phenomena that can be linked to associative mechanisms.

Nearest neighbors

To illustrate the differences between the different orthographic representations, Table 1 shows the five nearest neighbors – the words with the highest similarity values – to three different words: ledge, sustain, and yourselves. Rather than calculate the similarity of these words to the entire lexicon, we used the word set from Cortese et al. (2015) – one of the datasets we fit – as a way to demonstrate similarity to potential memory set items. This dataset contained words that were between 3 and 10 letters long.

Table 1.

Nearest neighbors to a set of four words from each orthographic representation

| Rep. | Neighbors |

|---|---|

| ledge | |

| Slot | ledger (.83), ladle (.6), wedgie (.5), redeem (.5), midget (.5) |

| Both-edges slot | ledger (.62), ladle (.6), wedgie (.42), ladder (.42), venue (.4) |

| Overlap | ledger (0.3), lever (0.26), level (0.26), leper (0.26), legend (0.26) |

| Both-edges overlap | ledger (0.28), lever (0.24), level (0.24), leper (0.24), ladle (0.24) |

| Closed bigrams | ledger (.71), ladle (.5), knowledge (.5), wedgie (.43), peddle (.43) |

| Open bigrams | ledger (.71), legend (.5), ladle (.45), wedgie (.43), knowledge (.43) |

| Levenshtein | ledger (.83), wedgie (.67), ladle (.6), mileage (.57), knowledge (.56) |

| sustain | |

| Slot | curtain (0.71), sultan (0.57), suction (0.57), mustard (0.57), disdain (0.57) |

| Both-edges slot | curtain (0.71), suction (0.57), mustard (0.57), disdain (0.57), custard (0.57) |

| Overlap | session (0.25), sultan (0.24), suction (0.24), suspend (0.22), sushi (0.22) |

| Both-edges overlap | session (0.25), sultan (0.24), suction (0.24), station (0.23), suspend (0.21) |

| Closed bigrams | sultan (0.5), status (0.5), station (0.5), retain (0.5), obtain (0.5) |

| Open bigrams | station (0.59), satin (0.59), sultan (0.53), curtain (0.53), suction (0.47) |

| Levenshtein | sultan (0.71), curtain (0.71), restrain (0.62), mountain (0.62), fountain (0.62) |

| brittle | |

| Slot | wrinkle (0.57), wrestle (0.57), whistle (0.57), shuttle (0.57), scuttle (0.57) |

| Both-edges slot | wrinkle (0.57), wrestle (0.57), whistle (0.57), shuttle (0.57), scuttle (0.57) |

| Overlap | brothel (0.26), bottle (0.26), battle (0.26), critter (0.25), bitter (0.25) |

| Both-edges overlap | bottle (0.27), battle (0.27), critter (0.25), brothel (0.25), bitter (0.25) |

| Closed Bigrams | little (0.62), bottle (0.62), battle (0.62), title (0.5), shuttle (0.5) |

| Open bigrams | little (0.59), rattle (0.53), bottle (0.53), battle (0.53), title (0.47) |

| Levenshtein | rattle (0.71), little (0.71), bottle (0.71), battle (0.71), throttle (0.62) |

| yourself | |

| Slot | counsel (0.62), journey (0.5), journal (0.5), gourmet (0.5), voucher (0.38) |

| Both-edges Slot | counsel (0.47), journey (0.38), journal (0.38), gourmet (0.38), countess (0.38) |

| Overlap | yogurt (0.17), counsel (0.17), yonder (0.16), yodel (0.15), surreal (0.15) |

| Both-edges overlap | counsel (0.16), surreal (0.15), quarrel (0.15), yogurt (0.14), trousers (0.14) |

| Closed bigrams | recourse (0.44), myself (0.44), itself (0.44), yogurt (0.33), yodel (0.33) |

| Open bigrams | recourse (0.45), morsel (0.4), trousers (0.35), myself (0.35), itself (0.35) |

| Levenshtein | morsel (0.62), counsel (0.62), yodel (0.5), myself (0.5), journey (0.5) |

Notes: Rep. = representation

The similarity is contained in parentheses

The words were sampled from the word set in the experiments of Cortese et al. (2015)

One should note that the similarities in each of these schemes depend on the values of their parameters. We fixed the values of and to 1 such that the start and end letters exhibited the same degree of importance as the other letters. If such parameters are increased, mismatches on these letters become much more consequential, ensuring that the nearest neighbors are much more likely to include words that match on these letters. For the both-edges slot and overlap models, we fixed the weight of the forward representation to .75. For the overlap models, we fixed the parameters of the exponential function to the best fitting values from Gomez et al. (2008), namely and . In our fits to recognition memory data, we estimate the values of each of these parameters.

For the first word – "ledge" – each of the representations agree on the most similar word, which is "ledger." There is likely very broad agreement among the representations when two strings differ by an addition at the end of the word or a single substitution. One exception is that the bigram models predict lower values of similarity for this comparison. This is because while the absolute position schemes result in only a single letter difference between the two strings, the bigram models result in the mismatch of at least two bigrams – the "er" and "r_" bigrams.

Not all of the words have agreement among the nearest neighbors, however. For instance, the word "brittle" illustrates the difference between the absolute position and relative position schemes. The relative position schemes select the word "little" as their most similar word. The absolute position schemes selected different words – the slot-based models instead select "wrinkle", which is likely because "little" is one letter shorter than "brittle", which offsets the alignment of their absolute positions. The overlap model instead selects "brothel" while the both-edges overlap model selects "bottle" as the most similar word.

Global similarity of orthographic representations

Each of the discussed orthographic representation schemes were used to predict performance on individual trials in four different recognition memory datasets. As mentioned previously, in recognition memory it is believed that recognition operates by computation of the global similarity – the similarity between the probe item and each of the learned representations from the study list is computed and aggregated together. The global similarity value is then subjected to a decision process to produce an "old" or "new" decision. While models such as REM and Minerva 2 produce their similarities by randomly generating representations of each list word, in this work the similarities are supplied by each of the representational schemes. Specifically, we implement each representation as its own global similarity model.

The global similarity g for a probe word i can be computed as follows:

| 9 |

where L is the set of study list words, is the length of the study list, and p is a freely estimated non-linearity parameter. Similar to our previous investigation on semantic similarity (Osth et al., 2020), we omitted the target item from the global similarity computation. This is because in the majority of the orthographic representations, the self-similarity is always equal to 1. By omitting the self-similarity parameter, the investigation is more directly focused on how the inter-item similarity affects recognition memory decisions.

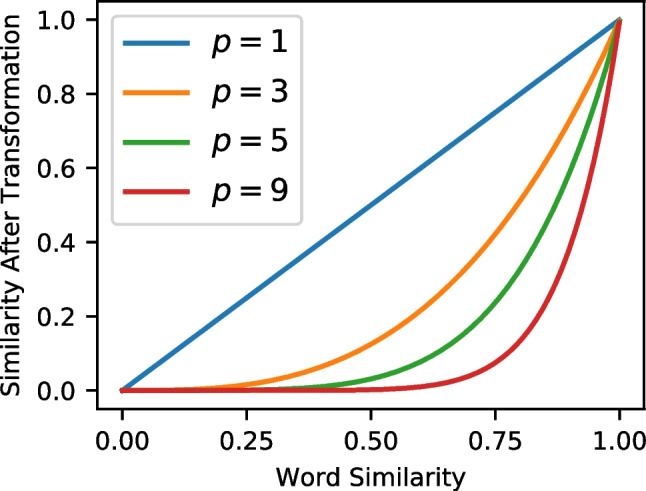

The non-linearity parameter p was directly inspired by the cubic transformation of similarities in the Minerva 2 model (Hintzman, 1988), which has the effect of punishing low similarity values before computing the global similarity. Because similarity is bounded between 0 and 1, there are no sign reversals for certain values of p (e.g., negative similarities becoming positive when p is an even number). The consequences of this transformation can be seen in Fig. 2. As the p parameter is increased, lower values of similarity are pushed to zero while higher values are more resistant, with similarity values of 1.0 being completely unaffected by the transformation. This non-linearity is also analogous to the c parameter in variants of the generalized context model (Nosofsky, 1986; Nosofsky et al., 2011). A psychological interpretation of this transformation is that it approximates auto-associative bindings, which are essentially bindings of stimulus features to each other – a mathematical analysis of the cubing process in the Minerva 2 model found that it was identical to a mode-4 autoassociative tensor (Kelly et al., 2017).

Fig. 2.

Similarity before (x-axis) and after (y-axis) the power transformation for four different values of p (1, 3, 5, and 9). See the text for details

Arndt and Hirshman (1998) demonstrated that the cubing process in Minerva 2 was essential in capturing many phenomena in the false memory literature. We will demonstrate below that it was quite crucial in our fits to recognition memory data with orthographic representations for two reasons. First, highly similar lures exhibited much higher rates of false recognition than lures of moderate or low similarity to a much larger degree than a linear model predicted (a model where ). Second, we will demonstrate that this parameter has the effect of reducing the similarity of the majority of memory set items to zero, such that only a small number of items that are similar to the probe contribute to the global similarity. In other words, this parameter functions to reduce the noise in the comparisons to the memory set.

Mapping global similarity to old-new decisions: the linear ballistic accumulator (LBA) model

To make contact with experimental data, global similarity has to be mapped to old-new decisions. Many models accomplish this by comparing the global similarity to a response criterion, with responses above the criterion eliciting "old" responses (e.g., Gillund & Shiffrin, 1984; Hintzman, 1988). However, it is becoming increasingly common to use global similarity to drive an evidence accumulation process of decision-making, which is able to produce a decision but can additionally make predictions about the latency with which decisions are made (Cox & Shiffrin, 2017; Fox et al., 2020; Nosofsky et al., 2011; Osth et al., 2018).

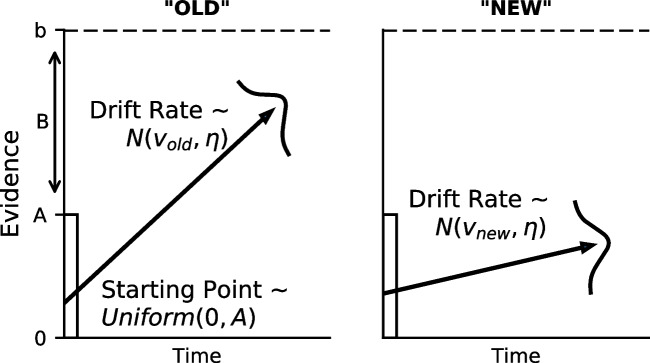

In this work, we followed the evidence accumulation approach and used the linear ballistic accumulator (LBA) model (Brown & Heathcote, 2008). In the LBA (depicted in Fig. 3), each response alternative is associated with its own accumulator, which race to produce a decision. Each accumulator has a mean drift rate v, which determines the average slope of the accumulator – higher drift rates produce steeper slopes, and hence faster evidence accumulation. The decision ends when an accumulator’s response threshold b is reached, which not only produces the corresponding decision, but also the response time, which is the sum of the time elapsed during evidence accumulation plus some additional time for non-decision processes, such as processing of the stimulus and response execution.

Fig. 3.

The linear ballistic accumulator (LBA). See the text for more details

A defining feature of the LBA is that evidence accumulation is both linear and noiseless. The stochastic aspects of the model instead come from between-trial variability in the model parameters. The starting point for evidence accumulation is sampled from a uniform distribution with height A, while the trial’s drift rate is sampled from a normal distribution with standard deviation 2. The between-trial variability parameters additionally function to allow for the prediction of fast errors under speed emphasis and slow errors under accuracy emphasis, similar to their roles in the diffusion decision model (Ratcliff & McKoon, 2008).

The threshold parameter b is responsible for the speed–accuracy tradeoff: increases in b slow decisions because the accumulators have to travel more distance to hit the threshold, but the decisions are more accurate because there is more time to overcome the noise in the starting point variation. In addition, bias can be accommodated in the model by allowing different thresholds for each response option. In this work, we estimate the B parameter, which is the distance from the top of the starting point distribution to the threshold b () and allow for bias by having two threshold parameters and .

In this work, we map the global similarity g for a probe stimulus i in experimental condition m and old-new status n of the probe stimulus (e.g., target or lure) mean drift rates and using the following equations:

| 10 |

| 11 |

where is a freely estimated shift parameter that helps ensure that the drift rates are positive (e.g., van Ravenzwaaij et al., 2020), V is a mean drift rate that can vary across experimental conditions m or old-new status n, and is a scale parameter that maps global similarity to drift rates. One can see from Eqs. 10 and 11 that increases in global similarity g serve to increase the mean drift rate and simultaneously decrease the drift rate , resulting in more frequent "old" decisions that are faster. This parameterization mirrors the changes in drift rates in the diffusion decision model, where changing the strength of evidence for one response necessarily decreases the strength of evidence for the other. To insure identifiability we fixed the parameter to 1 for lures but freely estimated the parameter for target items (), as previous investigations have found evidence of greater drift rate variability for target items (e.g., Osth et al., 2017; Starns & Ratcliff, 2014).

The purpose for including the V parameters is that in several datasets, we fit manipulations that alter performance – such as word frequency, depth of processing, and repetitions – for which global orthographic similarity may be able to partially explain but cannot provide a sufficient account of the changes of performance on its own. By allowing the V parameter to vary across conditions, it allows us to capture changes in performance across these conditions while being agnostic to the causes of these effects.

Essentially, Eqs. 10 and 11 can be considered regression equations where the global similarity is mapped onto the mean drift rates on a trial-by-trial basis. We allow the parameter to vary across targets and lures because it allows us to measure the extent to which global similarity may influence targets and lures in different ways. For instance, in previous work using a similar approach with global semantic similarity, Osth et al. (2020) found that global similarity substantially affected drift rates for lures while producing only minimal effects on targets. While process models are unable to map global similarity to targets and lures in different ways, they are often able to mimic the finding that inter-item similarity has less of an effect on targets. One such example is the REM model (Shiffrin & Steyvers, 1997), where the self-similarity of a probe to its own representation in memory is both large and highly skewed, allowing it to dominate the global similarity computation such that the similarity of the probe to other items in memory would not substantially affect the resulting global similarity.

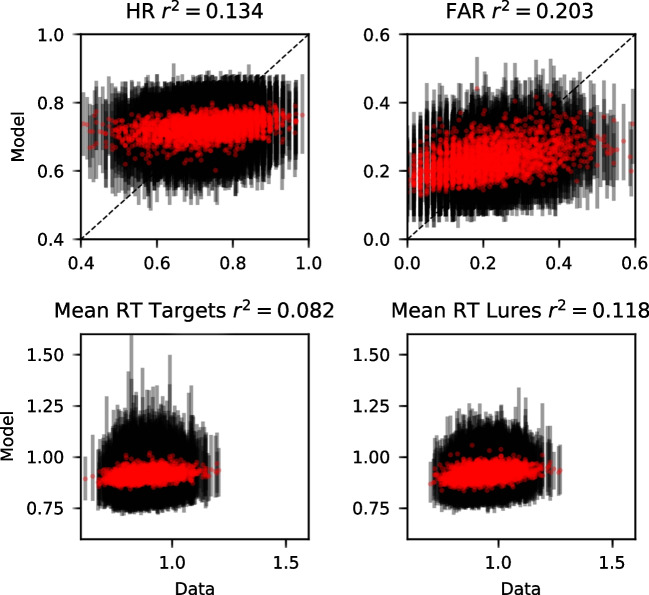

The model fit

Applying the models to data

Each representational scheme – the slot code, the both-edges slot code, the overlap model, the both-edges overlap model, closed bigrams, open bigrams, and Levenshtein distances – were all applied as separate global similarity LBA models to each of the four datasets. Within each dataset, we fit the models to each individual response and response time (RT) using hierarchical Bayesian methods (see Boehm et al. 2018), where parameters for individual participants and the group are jointly estimated. This avoids averaging artifacts associated with fitting group data (e.g., Estes & Maddox, 2005) but simultaneously allows for "pooling" information across individuals because each participant’s parameter estimates are influenced by the group-level parameters. Parameters were estimated using differential evolution Markov chain Monte Carlo (DE-MCMC), which is advantageous for fitting models like the LBA due to the presence of strong correlations between the parameters of the model (Turner et al., 2013).

For each response, the likelihood of the response and RT were computed according to the LBA’s likelihood function using the estimated parameters, including the mean drift rates for the individual trial that were computed based on the global similarity of the probe to each of the studied strings. Higher likelihoods reflect closer correspondences between the data and the model’s predictions. In hierarchical Bayesian models, the likelihood of a participant’s data under that participant’s model parameters is multiplied by the likelihood of those parameters under the group-level distribution. This is how the "pooling" occurs – participant parameters that are closer to the group level are more likely, and the resulting parameter estimates strike a balance between a good fit of the participant parameters and the correspondence with the group. Group-level distributions were either normal distributions or truncated normal distributions to capture the effects of boundaries, each with parameters and . Throughout the article, we denote the group-level parameters using superscripts (e.g., refers to the group mean of the A parameter). Additional details on the prior distributions and the MCMC sampling can be found in the Appendix.

Fitting these models to data was challenging and extremely lengthy in some cases. Each trial required aggregating the similarity of the probe to the items, where is the number of items on the study list. With the overlap model, the similarity between two words required calculating the match value on the individual letters. For the overlap model, this meant that each participant required the calculation of the number of observations O multiplied by list items multiplied by at most the longest length of the word. For our largest dataset, this meant that each participant required around 1.47 million calculations for the application of the overlap model, and this was doubled for the both-edges overlap model. Similarities to each item could not be pre-computed unless the parameters governing the representations and the non-linearity parameter p were fixed.

Each of the models varies in their number of parameters and their resulting complexity. For instance, the forward slot code can be considered a special case of the overlap model where all of the standard deviation parameters are set to zero, and also a special case of the both-edges slot code with the weight entirely on the start-based representation. Thus, the overlap model should inherently fit better than the slot code model. We make comparisons between each model using model selection techniques, which subtract a measure of model complexity from each model’s measure of goodness-of-fit. Specifically, we use the widely applicable information criterion (WAIC:Watanabe, 2010). WAIC is an approximation to leave-one-out cross validation. Because WAIC is on a deviance scale, lower values are preferred. In contrast to the deviance information criterion (DIC), WAIC is considered to be a "fully Bayesian" information criterion in the sense that its penalty term is determined by calculation of the variability in the likelihood of each data point across all of the parameters in the posterior distribution. In other words, a more complex model has more "ways" it can fit the data than a less complex model. WAIC has been recommended over DIC (Gelman et al., 2014).

Datasets

We specifically employed recognition memory datasets with lists composed of unrelated words that do not contain any obvious similarity structure, similar to past approaches exploring the consequences of semantic similarity in free recall (Howard & Kahana, 2002; Morton & Polyn, 2016) and recognition memory (Osth et al., 2020). While several studies have explored the consequences of studying categories of orthographically similar words, the majority of these studies have done so by constructing a set of words with only 1-2 letters or phonemes difference from each other (e.g., Shiffrin et al., 1995; Sommers & Lewis, 1999). We find lists composed of highly similar items undesirable for two reasons. First, when studying a list of highly similar words like "mate", "late", "date", etc., it is possible that participants adopt different encoding or retrieval strategies, such as placing more emphasis on encoding common features or evaluating test items to determine whether they contain the common features. Second, we have established in Table 1 that each of the orthographic representations would likely agree that such items are highly similar to each other. Instead, there was more disagreement among the moderate similarity items, which should be more prominent in lists of unrelated words.

A total of four datasets were included in our fits to data, which are summarized in Table 2. The datasets vary considerably in their list lengths (ranging from 40 to 150 words), word lengths (3–11 letters), and experimental manipulations (word frequency, speed–accuracy emphasis, numbers of presentations, and levels of processing). The differences in list length among the datasets are consequential when one considers that global similarity always involves an average of all similarities in the word set. With longer lists, more similarity values contribute to the global similarity calculation. Details on exclusions from the data can be found in the Appendix. The raw data can be found on our OSF repository (https://osf.io/hyt67/).

Table 2.

Summary of the datasets fit by the model

| Dataset | O | Manipulation | ||||

|---|---|---|---|---|---|---|

| Rae et al. (2014) | 47 | 757 | 40 | 1612 | 5.1 (4-7) | SA emphasis, WF (LF/HF mixed) |

| Criss (2010, E2) | 16 | 1412.9 | 50 | 1600 | 5.9 (4-11) | Pres. (1x/5x), WF (LF/HF crosslist) |

| Kiliç et al. (2017, E1) | 30 | 1736.8 | 150 | 2929 | 5.9 (4-8) | DOP (deep/shallow) |

| Cortese et al. (2015) | 119 | 2947.6 | 50 | 3000 | 6.2 (3-10) | None |

Notes: E = experiment, = number of participants, O = mean number of observations per participant, = study list length, = number of words in the word set, = word length (mean, min-max). WF = word frequency, SA = speed–accuracy, Pres. = number of presentations, LF = low frequency, HF = high frequency, DOP = depth of processing

The data from Cortese et al. (2015) are noteworthy because all participants in the sample were tested on the same set of 3000 words. The large number of participants () meant that there was a substantial number of observations for each individual item. Because global similarity varies on an item-by-item basis, it allows for us to test how each representational scheme is able to account for the variability in performance among the individual words.

For each of the datasets – with the exception of the Kiliç et al. dataset – the parameters governing the orthographic representations were all fixed across each of the conditions in the experiment. We did, however, allow several parameters within the LBA to vary across conditions. For the speed–accuracy emphasis manipulation in the Rae et al. (2014) dataset, we followed the original authors and allowed boundary separation B, starting point variability A, nondecision time and drift rate V to vary across the conditions. For the word frequency manipulation in the Criss and Rae et al. datasets, the drift rate V varied across conditions for both targets and lures. For the Criss dataset, drift rate V was varied across the repetition conditions for both targets and lures because it was a cross-list strength manipulation, which resulted in reduced FAR in the lists with repeated items. Indeed, the diffusion model analysis of Criss (2010) on the same dataset confirmed that the reduced FAR were due to changes in drift rates for the lure items. Each of these manipulations, however, were not the focus of the present work. A complete list of base LBA parameters common to all models can be found in the Appendix.

Allowances for depth of processing

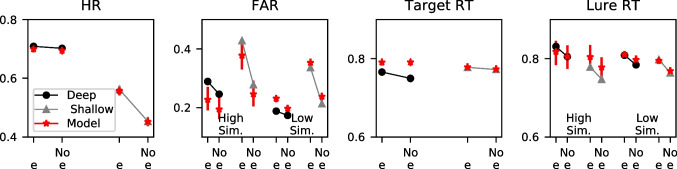

We made an exception on fixing the parameters governing the orthographic representations for the data of Kiliç et al. (2017), which used a depth of processing manipulation. In each study list, participants were either instructed to engage in shallow processing, which involved deciding whether an "e" was in the letter string, or were instructed to engage in deep processing, which involved deciding whether each word had a pleasant meaning. Their studies found that the deep processing task elicited better performance, including a reduced false alarm rate (FAR). An unexpected finding from this dataset was that hit and false alarm rates were both higher for probes containing the letter "e," a tendency that was especially pronounced in the shallow processing condition. These results can be seen in Fig. 4, which also separately show the results for high similarity lures (lures with a Levenshtein distance of 1 to one of the studied items) and lower similarity lures (lures with a minimum Levenshtein distance of 2 or higher to each of the studied items).

Fig. 4.

Group-averaged hit rates (HR), false alarm rates (FAR), mean RT to targets, and mean RT to lures, for probe items that both contain and lack the letter "e". Depicted are both the data and the posterior predictives of the selected model. Error bars depict the 95% highest density interval (HDI)

None of the representations could naturally capture this finding, so we used a modified inter-item similarity to take into account whether an "e" was present in both the probe and the stored representation:

| 12 |

| 13 |

where is the strength of the letter "e". One should note here that the presence of the letter "e" in both strings increases the string similarity regardless of the position of "e" in either of the two strings. We used this approach because the shallow processing condition only instructs the participant to find an "e" in the word and does not specify that the participant reports its position. Figure 4 shows the predictions of the selected model (see the next section for details), which reveal that the model was able to capture the higher tendency to respond "old" to items that contain the letter "e."

In addition, we found better performance of the models when other parameters relating to inter-item similarity changed across conditions, namely the , , p, and parameters. For the overlap model, however, we fixed the d and r parameters across conditions as allowing such parameters to vary compromised identifiability and did not appreciably improve the fit of the model.

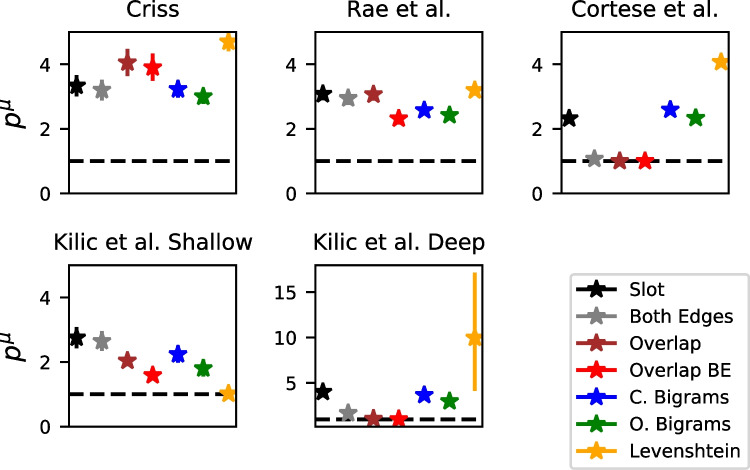

Model selection

WAIC values for each model in each dataset can be seen in Table 3. One can see that there is no clear winner across all of the datasets – closed bigrams are preferred for the Criss (2010) dataset while open bigrams are preferred for the Rae et al. and Cortese et al. datasets. While this may seem as if relative position representations should be preferred overall, the Kiliç et al. dataset shows a preference for the overlap model. While it is difficult to ascertain the causes of this discrepancy, one possibility is that the encoding tasks that are part of the depth-of-processing manipulation in the Kiliç et al. dataset changed the way in which participants represented the letter strings.

Table 3.

WAIC values for each model variant in each dataset

| Criss | Rae | Cortese | Kiliç | |||||

|---|---|---|---|---|---|---|---|---|

| Model | WAIC | P | WAIC | P | WAIC | P | WAIC | P |

| Slot | 101 | 21 | 32 | 23 | 508 | 13 | 136 | 24 |

| Both edges | 110 | 22 | 27 | 24 | 426 | 14 | 94 | 25 |

| Overlap | 79 | 23 | 33 | 25 | 828 | 15 | 0 | 26 |

| Both-edges overlap | 101 | 26 | 45 | 28 | 854 | 18 | 13 | 29 |

| Closed bigrams | 0 | 21 | 1 | 23 | 120 | 13 | 135 | 24 |

| Open bigrams | 74 | 21 | 0 | 23 | 0 | 13 | 137 | 24 |

| Levenshtein | 55 | 19 | 17 | 21 | 982 | 11 | 257 | 20 |

In addition, we would also like to mention that the data of Cortese et al. are especially diagnostic due to the especially large size of that dataset. Table 3 also reveals that the WAIC scores between the selected model (open bigrams) and the other models is very large. As we will later discuss, this dataset was also noteworthy because several of the absolute position models failed to capture the differences in the false alarm rate between high and low similarity lures, which was not a problem for the relative position models.

One clear consistency was that models based entirely on Levenshtein distances were not preferred in any of the datasets, suggesting that there are clear advantages for considering psychological representations in capturing orthographic similarity effects.

As we will discuss shortly, there are many difficulties in adjudicating between the models that come from the nature of the recognition memory paradigm – having items on the study list means there are comparisons on any given trial, so it can be unclear which words from the study list contributed to a given response on a given trial. This is made much simpler in a priming task, where it can be more safely assumed that the most recently presented item had the largest contribution to performance on a given trial.

In the coming sections, we will first give an example of the similarity values from each of the different representations. Subsequently, we will focus on the selected model for each of the datasets and focus on the relevant themes and predictions from the models. In the next section, we will discuss parameter estimates and predictions that suggest that global similarity of the orthographic representations generally exerts a large influence on lures but does not substantially affect target items. After that, we will discuss the non-linear effect of similarity, with similarity effects being largest for high similarity items.

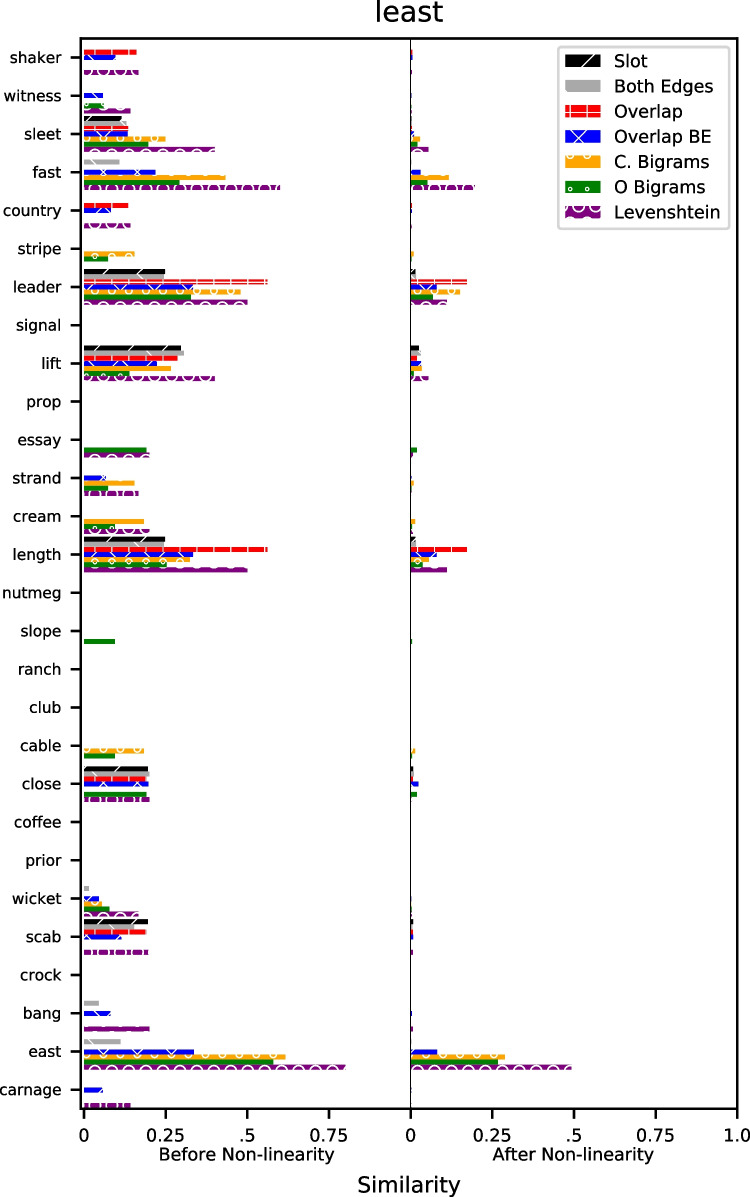

Global similarity examples

Figure 5 shows an illustration of an example trial from each of the models from the Rae et al. dataset. Each of the words are words from the study list and the word at the top ("least") is the probe word for a particular trial. The horizontal bars represent the similarity from each of the underlying representations – the left and right columns show the similarity before and after the non-linear transformation of similarity using the estimated value of the power parameter p from the fits to data.

Fig. 5.

Illustration of global similarity from each of the representations from a trial in the Rae et al. dataset. The word at the top is the probe word, the words on the left are the study list words, and the horizontal bars are the similarities between the list words and the probe word before (left) and after (right) the non-linear transformation

Two trends are evident in the figure. First, the non-linearity reduces similarities and ensures that only items that range from moderate to high similarity "survive" the non-linear transformation. Essentially, this reduces the memory set to a smaller number of "effective" items. Second, there is generally agreement among the two classes of representations – the absolute position models (slots, both edges, overlap, and both-edges overlap models) produce comparable similarity values, as do the relative position models (closed and open bigrams). While the absolute and relative position models agree on some words, there are some words that they critically disagree on. For instance, the word "east" is on the study list – one can see that both the closed and open bigram models continue to describe it as a similar item after the non-linear transformation. The absolute position models instead all describe this as an item with essentially zero similarity, which is likely due to the fact that the omission of the first letter caused a misalignment between the studied word and the probe word that heavily penalized the resulting similarity.

We found this example trial by converting the similarities to z-scores and finding example trials that maximally discriminated between the models. In each of the models that favored relative position models, we found a number of similar trials where there were one or more list words where there was misalignment between those words and the probe words, such as the addition of a prefix.

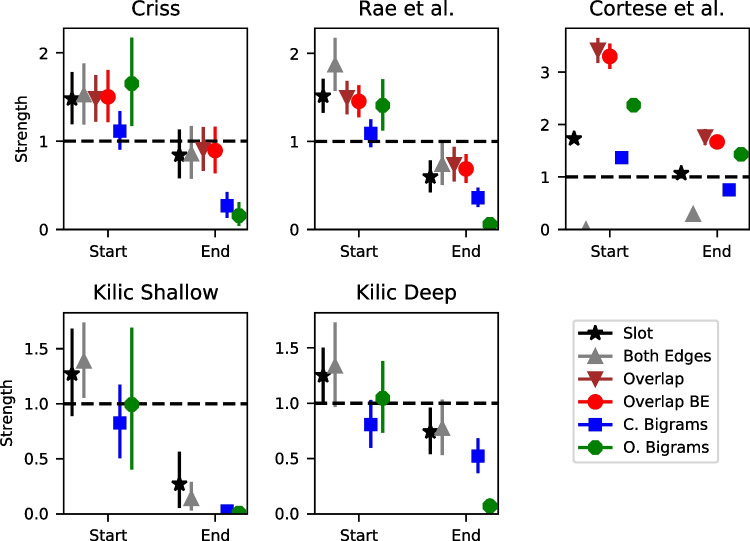

Global orthographic similarity affects lures more than targets: parameter estimates and model fits

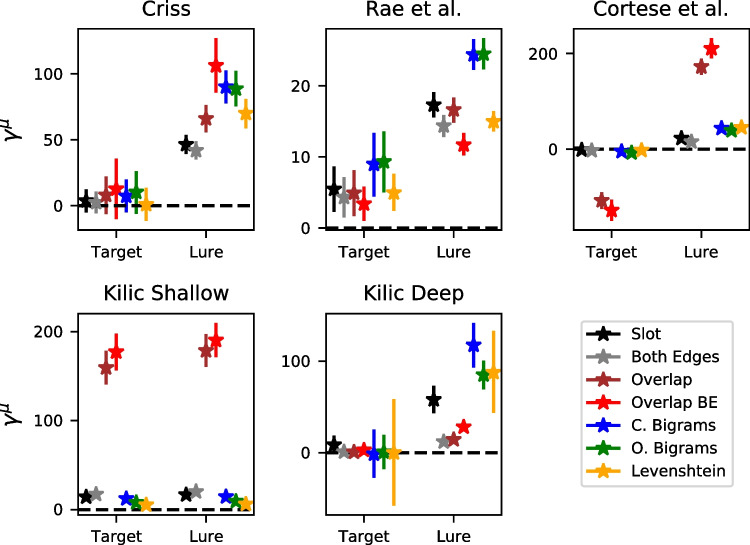

Both the parameter estimates and the model predictions suggest that global orthographic similarity affects lures to a much greater extent than targets. Figure 6 shows the group mean estimates of the scale parameter , which governs the direction and extent to which global similarity enters the drift rate for an individual trial. An important caveat about the parameter is its tradeoff with the power parameter p – as p increases, the inter-item similarities s decrease, which can be compensated by an increase in the scale parameter . For this reason, the parameter is not comparable across datasets or models, as there is no guarantee that p will be equated across them. However, is directly comparable across targets and lures because p is held constant across these item classes.

Fig. 6.

Group mean estimates of the scale parameter for each model and dataset

Figure 6 reveals that is higher for lures than targets for virtually every dataset and model. The one exception is the shallow processing condition of the Kiliç et al. dataset, is a condition where participants were instructed to response to a prompt about the letters in each of the words from the study list. However, the same was not true for the deep processing condition from the same dataset, which involves an orienting task that was not focused on orthography. For each of the other datasets, was close to zero for targets, implying that global similarity has no influence at all on the resulting hit rates or the accompanying latencies for target items.

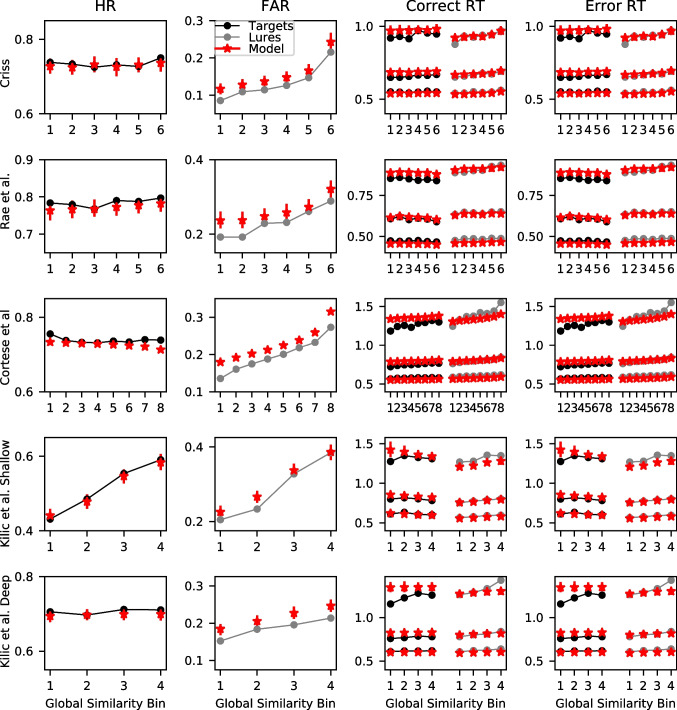

The model predictions reveal a similar pattern. For each of the winning models in each dataset, we calculated the global similarity g for each trial across the entire posterior distribution. Subsequently, we averaged the global similarity values across the posterior distribution. We then divided the global similarity into a number of equal area bins where the number of bins depended on the size of the dataset. We used six bins for the Criss and Rae et al. datasets, eight bins for the comparably larger Cortese et al. dataset, and four bins for the shallow and deep processing conditions of the Kiliç et al. dataset. Within each of the bins, we computed the hit and false alarm rates along with the .1, .5, and .9 quantiles of the response time (RT) distribution for correct and error responses, which are the 10th, 50th, and 90th percentiles of the RT distribution, respectively. These summary statistics were computed for both the data and the model’s predictions.

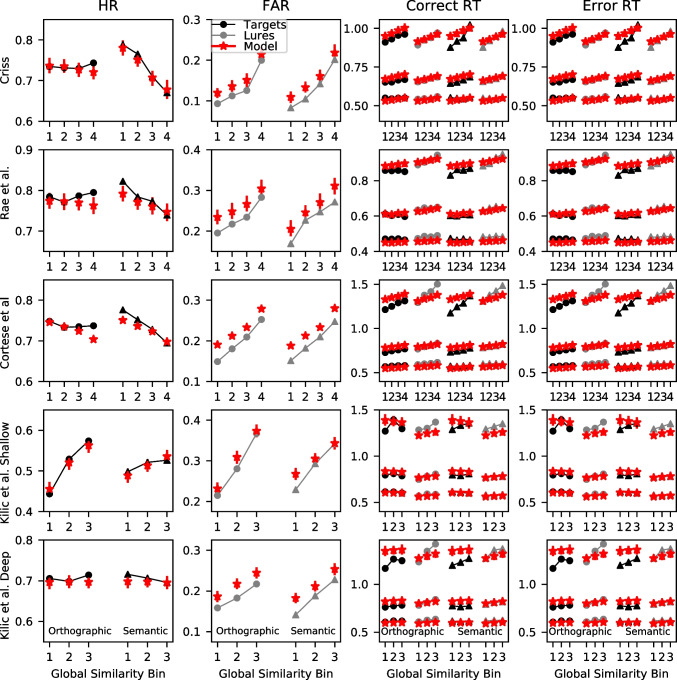

These results can be seen in Fig. 7, which reflects the patterns seen in Fig. 6. We focused on the selected model for each dataset in part because space precludes depiction of all of the models, but also because each of the models were surprisingly similar in their predictions here. This is not to suggest that the models cannot be distinguished – as we discussed previously, their predictions differ substantially for some words. In the majority of the datasets, increases in global similarity – which are reflected in the higher bin numbers – result in substantial increases in the false alarm rate (FAR) along with slowing of the correct responses to lure items. The FAR increase from the lowest to the highest similarity bin is often .10 or higher. For context, this result parallels the FAR difference reported in the orthographic-phonemic condition of Shiffrin et al. (1995)’s Experiment 1, where they found an FAR of .096 for categories of two items and an FAR of .207 for categories of nine items (difference of .10). The present results indicate that even lists of unrelated words can show substantial orthographic similarity effects that can be comparable to those from category length designs. The slowing of the RTs is most pronounced for the .9 quantile – the slowest RTs – whereas the fastest RTs (.1 quantile) and the median (.5 quantile) are relatively unaffected. This is broadly consistent with manipulations that selectively influence the drift rate (Ratcliff & McKoon, 2008) and also with our previous investigation focused on global semantic similarity (Osth et al., 2020).

Fig. 7.

Group-averaged hit rates (HR: first column), false alarm rates (FAR: second column), correct RTs (third column), and error RTs (fourth column) for each of the global similarity bins (ranked from lowest to highest) from the data (black) and the winning models (red) of each dataset. RTs are summarized using the .1, .5, and .9 quantile. Error bars depict the 95% highest density interval (HDI)

It should also be mentioned that the FAR plots show some evidence of the non-linear similarity function that is due to the influence of the p parameter. Recall that increases in the power parameter p make it such that high similarity items tend to dominate the global similarity computation, meaning that there is relatively little difference between low and moderate similarity items but a comparably larger difference between high and moderate similarity items. This can be seen in the FARs for the Criss and Cortese et al. datasets, where the increase in FAR for the highest global similarity bin is larger than the other bins. We will return to evidence for non-linearity in the next sub-section.

Target items, in contrast, show little effect – the hit rates (HR) are fairly constant across each of the global similarity bins and there are minimal effects on target RTs. The one exception is the shallow processing condition of Kiliç et al., which shows a very large increase in the HR with increases in global similarity – a difference of .20 between the smallest and largest global similarity bin. However, the same result was not found in the deep processing condition, where there was little effect of global similarity on the HR and only a small effect of global similarity on the FAR. These results are consistent with the idea that depth of processing changes the nature of the underlying word representations. We will be returning to this issue later in the article when semantic representations are incorporated into the models.

Figure 7 also reveals that the winning models are providing an excellent fit to the data. A caveat is that there is somewhat of a circularity problem in evaluating the goodness of fit in this way – we have used the representations from the model to group the data, meaning that the differences between the bins that can be seen do depend on the model under consideration. This does not guarantee a perfect fit, however. One area where the models are often missing is that the RTs often change to a somewhat greater degree than the models predict. In the coming sub-section, we remedy the circularity problem by comparing the model’s predictions to an independent standard of similarity.

Global similarity exerting a larger influence on lures than targets is broadly consistent with a number of existing global matching models. In global matching models, the memory signal for lures is composed entirely of the global similarity, whereas for targets, it consists of the global similarity between the probe and the other list items and is additionally composed of the self-match, or the similarity between the probe and its own representation in memory. If the self-match is significantly larger than the inter-item similarity, then increases in the number of similar items will not exert a large influence on target items. In the REM model (Shiffrin & Steyvers, 1997) this occurs because the self-match distribution is extremely skewed. In the generalized context model (GCM: Nosofsky, 1991a) and the Minerva 2 (Hintzman, 1988) model, the non-linear similarity functions punish low similarity items. Since the self-match has high similarity by definition, the self-match dominates the global similarity for targets.

Figure 7 also reveals that the FAR are overpredicted in each of the datasets. A reviewer inquired as to whether this was a consequence of the orthographic representations exhibiting similarities to the probe items that are slightly excessive, or whether this was a consequence of the LBA architecture itself. In the Supplementary Materials, we report on fits to data of a simple LBA model with no orthographic representations. This model overpredicted the FAR to a similar degree, implying that this is a consequence of the LBA architecture itself.

Lure similarity: the importance of non-linearity and exterior letters

Non-linear similarity functions enable the models to capture the difference in FAR between high and low similarity lures

In the previous sub-section, we depicted the model fits using the global similarity predictions from each of the models. To assess how well the models are performing in a way that circumvents the circularity problem, we used an independent standard from each of the selected models and classified each of the lures by their maximum similarity to the study list items. Specifically, we calculated the Levenshtein distance between the probe word and each of the studied words and selected the lowest distance as our measure of lure similarity. We defined words with Levenshtein distances of 1 (e.g., "dog" vs. "dogs"), 2 (e.g., "cargo" vs. "large"), 3 (e.g., "noodle" vs "nude"), or 4 or greater (e.g., "spider" vs. "shoe") as high, medium, low, and very low similarity, respectively. An alternative measure to the Levenshtein distance is the Damerau distance, which treats transpositions between adjacent letters as a single transformation (e.g., "trail" vs. "trial") while this would count as a distance of 2 according to the Levenshtein metric. However, we discovered in the process of analyzing these datasets that transposition pairs of words are quite rare in language and did not occur in any of our datasets, so there were no differences between the Levenshtein and Damerau distances.

In addition, as we previously mentioned, studies investigating priming effects in the lexical decision task have found evidence for the importance of the exterior letters of the words. To our knowledge, it has not been investigated in recognition memory as to whether exterior letters are more critical in orthographically similar lures. For this reason, in the high similarity lures, we investigated whether the missing letter was a start letter, an interior letter, or an end letter. Because high similarity lures were relatively rare in our datasets (ranging between 1 and 3% of all trials), the results are somewhat noisy for this comparison, but consistent enough across datasets to reveal a pattern.

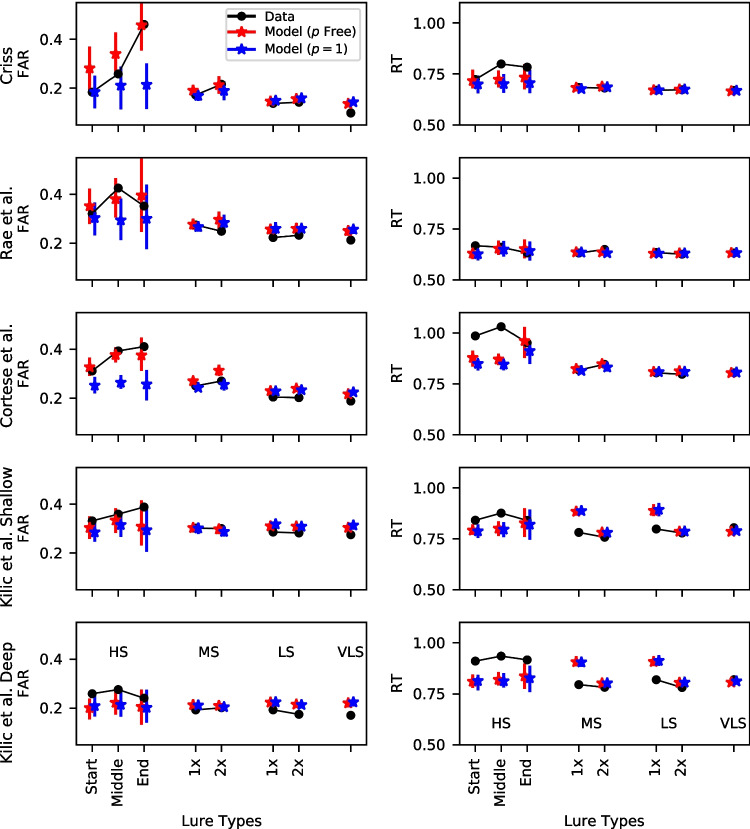

For moderate and low similarity lures, we also investigated how the number of similar items on the list affects performance. That is, for each of these lure types, we looked at whether there were one (1x) or two (2x) items on the list with the same Levenshtein distance. We were unable to separate the data in this fashion for high similarity lures because of their rarity, and because the number of trials where there were two high similarity items on the study list was close to zero in many of our datasets.

Group-averaged false alarm rates (FAR) and median RT to lures from the winning models and the data for the lure categories described above can be seen in Fig. 8. Median RTs were depicted instead of the complete distribution as there were too few observations for high similarity lures. To illustrate the importance of non-linearity in capturing these trends, we additionally fit the same models to data with a linear similarity function () – these models are depicted in blue. Note that we only fit linear versions of the winning models from Table 3 because fitting all of the models would result in a very large number of additional models. A model selection between the non-linear and linear models can be seen in Table 4, where it can be seen that the nonlinear models are selected decisively despite a larger number of parameters in three of the four datasets, with the exception of the Kiliç et al. dataset where the advantage of the nonlinear model is relatively small.

Fig. 8.

Group-averaged false alarm rates (FAR, left column) and median RTs (right column) to the lure types of varying degrees of similarity. The high similarity (HS) lures are sub-divided based on which letter in the word was missing (start = initial letter, middle = interior letter, end = terminal letter). Error bars depict the 95% highest density interval (HDI). Note: HS = high similarity, MS = medium similarity, LS = low similarity, VLS = very low similarity, 1x = one matching item on the study list, 2x = two matching items on the study list

Table 4.

WAIC values for the models with a nonlinearity similarity function (p free) and the models with a linear similarity function ()

| Criss | Rae | Cortese | Kiliç | |||||

|---|---|---|---|---|---|---|---|---|

| Model | WAIC | P | WAIC | P | WAIC | P | WAIC | P |

| p Free | 0 | 21 | 0 | 23 | 0 | 13 | 0 | 26 |

| 145 | 20 | 51 | 22 | 371 | 12 | 2 | 24 | |

See the text for details

What is apparent from Fig. 8 is the non-linear relationship between Levenshtein distance and the FAR – FAR are in some cases dramatically higher for high similarity (HS) than medium similarity (MS) lures, but the difference between medium similarity and low similarity (LS) is small, and the difference between low similarity and very low similarity lures (VLS) is barely noticeable. What is also interesting is that the difference between one and two medium similarity lures is fairly small – this difference is much smaller than the difference between medium and high similarity lures. While the models with non-linear similarity functions are able to capture these trends, the models with linear similarity functions are unable to capture the large difference in FAR between high and medium similarity lures in three of the four datasets. An exception is the Kiliç et al. dataset, where the difference between the linear and non-linear models is quite small, which explains why the linear model was favored slightly in the model selection.

Several of the models made very similar predictions for each of the datasets. One exception was for the Levenshtein model, which generally accounted for the data well but was unable to capture the differences between the high similarity (HS) lure types given that the model does not differentially weight transformations based on letter position. However, as it turns out, the Cortese et al. (2015) dataset revealed very different predictions for the absolute and relative position models. Specifically, all of the absolute position models were unable to capture the larger FAR for the HS lures, while both the closed and open bigram models were able to capture this difference. Figures comparing these models can be seen in the Supplementary Materials.