Abstract

Academic Abstract

In the wake of the replication crisis, social and personality psychologists have increased attention to power analysis and the adequacy of sample sizes. In this article, we analyze current controversies in this area, including choosing effect sizes, why and whether power analyses should be conducted on already-collected data, how to mitigate the negative effects of sample size criteria on specific kinds of research, and which power criterion to use. For novel research questions, we advocate that researchers base sample sizes on effects that are likely to be cost-effective for other people to implement (in applied settings) or to study (in basic research settings), given the limitations of interest-based minimums or field-wide effect sizes. We discuss two alternatives to power analysis, precision analysis and sequential analysis, and end with recommendations for improving the practices of researchers, reviewers, and journal editors in social-personality psychology.

Public Abstract

Recently, social-personality psychology has been criticized for basing some of its conclusions on studies with low numbers of participants. As a result, power analysis, a mathematical way to ensure that a study has enough participants to reliably “detect” a given size of psychological effect, has become popular. This article describes power analysis and discusses some controversies about it, including how researchers should derive assumptions about effect size, and how the requirements of power analysis can be applied without harming research on hard-to-reach and marginalized communities. For novel research questions, we advocate that researchers base sample sizes on effects that are likely to be cost-effective for other people to implement (in applied settings) or to study (in basic research settings). We discuss two alternatives to power analysis, precision analysis and sequential analysis, and end with recommendations for improving the practices of researchers, reviewers, and journal editors in social-personality psychology.

Keywords: research methods, statistics, power analysis, sample size

The recent movement toward reform in social-personality psychological research has revived interest in studies’ sample sizes and statistical power. Small sample sizes have been shown to jeopardize the accuracy and replicability of statistical conclusions (Open Science Collaboration, 2015). There has been a push to improve the usual methods of determining sample size, such as intuition or rules of thumb (e.g., 20 observations per cell; Simmons et al., 2011). For example, the Journal of Personality and Social Psychology now requires authors to address “justifiable power consideration” (American Psychological Association, 2022; see also Leach, 2021), while other journals such as Personality and Social Psychology Bulletin (Crandall et al., 2018), Social and Personality Psychological Science (Vazire, 2016), and Journal of Experimental Social Psychology (Giner-Sorolla, 2016) have requested authors to discuss sample size determination for some years now. With this emphasis has come an appreciation that further work is needed, because researchers sometimes show confusion or disagreement about the starting effect size needed to make decisions from a priori power analysis (see Blake & Gangestad, 2020; Farmus et al., 2023).

Power also comes into play when evaluating research post hoc. Although the sample size reported in a study is important, the power of its key tests is also tied to study design, analytic choices, and other features of the research setting that feed into effect size. For example, a repeated-measures analysis with few participants, but many data points per participant, can have far greater power than a between-subjects analysis with many participants each supplying one data point, because between-subjects error variance is controlled (see McClelland, 2000). Without understanding this point, relying on N-per-design-cell guidelines (e.g., van Voorhis & Morgan, 2007), which are often calibrated for between-subjects designs, can lead evaluators astray. For example, abstract submission guidelines for psychology articles and presentations often require reporting the N of studies but not the power of focal tests (American Psychological Association, 2020; Society for Personality and Social Psychology, 2022), implicitly promoting heuristic evaluation of robustness in terms of N. An evaluation of a given sample cannot be properly made without power analysis, as power functions are nonlinear and analysis-specific (Cohen, 1988). Therefore, any heuristic that deems a study inadequate based on a mere count of participants can underestimate designs that use participants efficiently.

Accordingly, researchers have turned to various forms of power analyses (Cohen, 1988) and precision-based approaches (e.g., Rothman & Greenland, 2018) to select and evaluate sample sizes. In this article, we review existing facts and controversies about power and sample size adequacy, review different kinds of sample size determination methods, discuss standards for reporting them, and review tools and approaches for power analysis. There are constraints to the generality of this coverage, which is intended for social-personality psychology researchers who are not statistics experts. Formulae and the like are downplayed in favor of conceptual explanations; the methods and analyses used in examples are those most typically used in social-personality research; and we do not cover sample size determination in qualitative and archival research, because these methods usually assume a different basis for inference than the population sampling that underpins most quantitative research with human participants.

The journals and books we cite are mainly published in the United States and Europe (e.g., outlets associated with the American Psychological Association and Association for Psychological Science, along with journals containing the term “European” in the title, e.g., European Journal of Social Psychology). Journals’ geographic locations are not a perfect reflection of authors’ nationality. For example, authors outside the United States can publish in APA journals; however, journals’ locations are probably a reasonable approximation of authors’ nationality. The scholars we most frequently cite are quantitative psychologists, based on the field in which they received their PhD and/or their faculty appointments. Some of the research we cite involves large-scale computer simulation studies, suggesting that some of our cited authors come from highly resourced nations and universities. Authors with interesting ideas on statistical power but who have not published in wealthy nations’ flagship journals may thus be underrepresented in our review.

Uncertainty about how to use power analysis in social/personality psychology led to a Power Analysis Working Group being convened at the 2019 meeting of the Society for Personality and Social Psychology, in response to a call from Executive Director Chad Rummel. This article’s authors are the members of that group. As for our positionality, we are based at various institutions in the United States and Western Europe and conduct quantitative research in social or personality psychology through a variety of approaches including laboratory experiments, analyses of survey data, and meta-analyses. Collectively, we are versed in many statistical techniques, and all have spent time over our careers thinking about issues of statistical power, effect sizes, and the practical importance of research findings. While we all see importance in the common goal of increasing the robustness of published findings, a particular concern for several of our authors has been avoiding roadblocks to research on novel issues and understudied populations, in which sample sizes may be considered small for statistical power purposes. It is from this standpoint that we make these recommendations.

To accompany this review and discussion of issues in power analysis, we have prepared a supplemental document that reviews recent developments, including online tools, in determining sample size (Aberson et al., 2023). Although that document was not part of this article’s peer review, by invitation of the Editor we are referencing it to give the reader access to a more detailed explanation of sample size determination procedures for specific statistical tests.

Sample Size Determination Methods

While most researchers in the field will be, at least superficially, familiar with power analysis as the most common method of sample size determination, there are a variety of others. In addition, power analysis encompasses more flexible methods than many researchers are currently aware of. In this section, we briefly review power analysis before addressing four current controversies: how to derive effect size, whether power analysis post hoc is informative, whether requiring power analysis is detrimental to certain topics of research, and which power level should be adopted. We then consider two of the alternatives to power analysis and finish with recommendations for best practices in sample size determination, reporting, and evaluation.

Power Analysis

Statistical power derives from the Neyman–Pearson approach to statistical hypothesis testing (Neyman & Pearson, 1933). Statistical power is defined as 1 − β (Cohen, 1988, 1992), where β is the false negative error rate (the probability of failing to detect an effect as significant if the alternative hypothesis is true and assumptions of the significance test are met). In other words, higher power means that true effects (if present) are detected more frequently. Importantly, power is a property of a statistical test, not strictly speaking of a study, because any given study may use more than one test of central and peripheral hypotheses. However, common usages of “power” such as “well-powered studies” can be interpreted reasonably as “minimum power of the key hypothesis-confirming test(s) within the study.”

In Cohen’s writings (e.g., Cohen, 1988), and in most current psychology research, the recommended level of power is conventionally 80%, yielding a false-negative error rate (β) of 20% if the alternative hypothesis is true and test assumptions are met. This arbitrary convention stems from Cohen’s (1965) intuition, made by his own admission “diffidently” (p. 99), that false positives are four times worse for science than false negatives. Hence, if “false positives” (α) are kept at 5%, 1 “false negatives” (β) should be no more than 20%. The value of the 80% blanket recommendation is explored more in Controversy #4.

Power analysis should not be conflated with one specific usage in which sample size is determined given a desired power level (and other features of the analysis). Common software (e.g., G*Power; Faul et al., 2007, 2009) refers to this kind of analysis as “a priori,” so researchers may be tempted to presume this is the best (or only) calculation that can be conducted before conducting a study. In fact, there are several values that can be computed as output using the power function. Given a specific research design and hypothesis testing procedure, and treating population parameters as fixed, four parameters are involved: α-level, population effect size, sample size, and power. When three of these are known or estimated, the fourth can be determined, creating four distinct types of analysis (Cohen, 1988). The first three examples below assume α = .05, two-tailed 2 —a common criterion in psychology. In all analyses below, effect size refers to the (unknown) population effect size, not the observed effect size in the sample.

Sample-size determination analysis, our preferred term for what is often called an “a priori” power analysis (Faul et al., 2007), inputs the desired power and an effect size, or distribution of effect sizes, for which power is desired. It returns a target sample size. For example, to detect a Pearson correlation test’s effect size of ρ ≥ .40 with at least 80% power, determination power analysis requires N = 44 observations. Even if the actual data have a fixed N, sample-size determination analysis after data collection can still yield an ideal sample size given certain input assumptions, to be compared against the actual N obtained.

An effect-size sensitivity analysis inputs the desired power and likely (or achieved) usable sample size. It returns the minimum population effect size detectable at this power. For example, with 100 observations, an effect-size sensitivity analysis identifies that 80% power will be achieved for correlations that have size ρ ≥ .27 in the population.

A power-determination analysis, sometimes referred to as “post-hoc power” in tools such as G*Power, inputs N and a population effect size, and it returns power. For example, given that N = 100 are collected, a power-determination analysis reveals power is terrible (16%) to detect a population correlation of ρ = .10 and excellent (87%) to detect a slightly larger population correlation of ρ = .30. Again, this analysis is not necessarily confined to post hoc timing, because N, effect size, and other parameters can be determined hypothetically.

The least well-known kind of power analysis, criterion analysis (Faul et al., 2007), inputs N, effect size, and a power level, and takes as output the α-level (significance criterion) required to reach that power level given the other parameters. For example, it can be used to see whether a power of 80% can be reached in a test of 75 people seeking ρ = .30 by increasing the α, reflecting a decision that in this case it is more important to risk a Type II than Type I error. In this case it is enough to raise α to .071 to reach 80% power. The technique can also be termed “compromise analysis” because it can be used to find an optimal point between minimizing alpha and maximizing beta. We will return to this method when discussing the optimal level of power (Controversy #4).

The appropriate analysis depends on the researcher’s goals. A sample size-determination power analysis will return the minimum necessary sample size given a particular effect size and power level. While this method may seem like the most intuitive for selecting a sample size for a study, it is difficult to use accurately. Primarily, power is influenced by the (unknown) population effect size. Thus, any sample size determination power analysis requires that researchers input some effect size they wish to detect, the value of which determines sample size. A researcher who wishes to detect “small” (d = .20) effects in an independent-samples t-test must collect N = 787 observations, whereas a researcher who is happy to detect slightly larger effects at the same power level (d = .35) need only collect one third that sample size—N = 258.

Elevating sample size determination power to a gold standard, moreover, comes with unintended consequences. First, it incentivizes misrepresentation of substandard procedures. For example, researchers may simply collect data until funding runs out or until an academic term ends, but then, to be published or funded, write up analyses as if sample size planning had been a priori. This questionable practice resembles HARKing (Hypothesizing After the Results are Known; Kerr, 1998), that is, presenting post hoc interpretations as having been generated a priori. Instead, researchers who are limited by practical sampling considerations should report an effect-size sensitivity analysis, which puts the selection of sample size first and leaves effect sizes as an output to be interpreted rather than a prior assumption.

Having effect-size sensitivity in the toolkit gives several advantages. First, it allows researchers to explicitly consider sample-size criteria other than power (e.g., resources) when planning research. These are often realities of research design, yet a sample size-determination analysis does not allow for their consideration. Second, if it is already the practice of researchers to use a given N deemed feasible for their lab or other typical labs, then reporting it as such is transparent and accurate. Third, even if a sample size-determination analysis is used, the final number of cases may differ from its recommendations. For example, researchers who collect reaction-time tests often reject some of their samples for fast, slow, or wrong responses (Ratcliff, 1993). An effect-size sensitivity analysis may be needed to evaluate this new usable sample size.

In practice, all three kinds of power analysis may be run in concert, even before a study begins. For example, a researcher might decide it best to be able to detect d ≥ .20. A sample-size-determination analysis can then be used to find a target N (e.g., finding that N = 787 for 80% power to detect d = 0.20 with an independent-samples t-test). Using this example, they might then realize that N = 787 is unrealistic because 500 is the maximum sample size achievable with current funding. Power-determination analysis could then be used to assess the adequacy of that sample size (e.g., revealing that only 61% power is achieved for N = 500 and d = 0.20). Begrudgingly, the researcher might give up on detecting such small effects and instead ask: What effect sizes can be detected with N = 500? An effect-size sensitivity analysis could then reveal that N = 500 can detect d = 0.25 with 80% power. The researcher might decide this is close enough to the original intended effect size and proceed to gather 500 participants. The researcher may also consider using other means to increase the potential effect size, particularly by improving components such as the strength of manipulation or the reliability of measures. Alternatively, if d = 0.25 seems inadequate, the researcher could seek additional resources to collect the initially recommended sample size for d = .20.

Table 1 summarizes each of these types of power analysis. Although the terms disseminated by G*Power software (Faul et al., 2009) are popular, we believe there are good reasons to prefer our scheme in which each term is defined by the output parameter rather than by assumptions about when the test is carried out. As our examples show, relying on time-based terms can lead to ignoring or unjustly dismissing potential applications of each type of analysis. Both N and effect size can be hypothesized either before or after data collection, and both can also be observed after data collection. Controversies about “post-hoc” power analysis have also hinged on demonstrating low additional value (over and beyond the p value) of a specific kind of power determination using observed effect size. While this use is not recommended, power-determination using effect sizes not derived from the data can be useful, and should be considered without the stigma from the overgeneralized term “post-hoc.” Finally, “sensitivity analysis” sometimes causes confusion because of the well-established use of the identical term to designate a completely different method in risk analysis (e.g., Iooss & Saltelli, 2017), so the addition of “effect-size” as a modifier both distinguishes the term and continues the system of mentioning the output parameter in the name.

Table 1.

Preferred Nomenclature for Four Types of Power Analysis With Brief Explanation.

| Our preferred name | Term used by G*Power software | Main input parameters | Output parameter | Main reason for change |

|---|---|---|---|---|

| Sample-size determination | A priori | Effect size, power, alpha | N | Avoid confusion with a priori use of other methods |

| Effect-size sensitivity | Sensitivity | Power, N, alpha | Effect size | Avoid confusion with the widely used procedure of sensitivity analysis in risk assessment |

| Power-determination | Post hoc | Effect size, N, alpha | Power | Avoid confusion with post hoc use of other methods; avoid stigma from inappropriate uses of power determination based on observed effect size |

| Criterion (compromise) | Criterion | Effect size, N, power | Alpha | No change |

Researchers may be tempted, or requested, to use evaluative terms to characterize “the” power level for a study—or more accurately, for its main hypothesis-confirming analysis. Indeed, a Google Scholar search identifies more than 700 articles since the start of 2018 reporting the phrase “high-powered” or “low-powered” in journals with “Psychology” in the title alone; “well-powered” gives a further 500 hits and “underpowered,” more than 2,000. These phrases usually describe the statistical power of a replication’s focal test, the power of the focal test in the present study, or the evidence base of existing literature. Although such evaluative phrases may seem handy, they are fundamentally ambiguous without further specification. If researchers end up agreeing on their meaning, it is only because they implicitly share some idea of the underlying parameters, that is, the standard Cohen (1988) recommendation of 80% power, and ideas about which effect sizes are typical or desirable in their literature. Also, it is obvious that (for example) a test with 200 participants per condition is better powered than one with 20, all else being equal. This relative sense of “better-powered” is more justifiable than the absolute sense of “well-powered.”

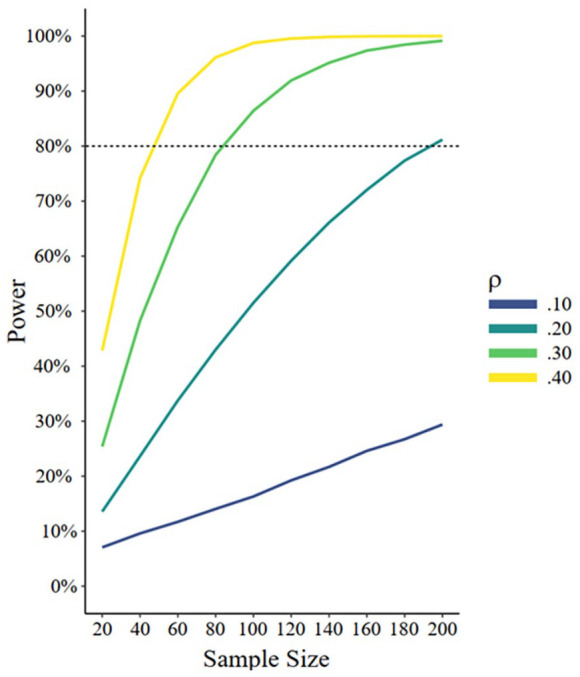

Because population effect sizes are unknown, researchers need to consider a range of possible effect sizes to accurately assess power for each analysis, whether through modeling an effect size distribution, inputting the raw parameters that makeup effect size (e.g., mean, SD) into a simulation, or just by inputting a point value of effect size. Although unknown, the population effect size is also crucial because it determines what analysis has the power to detect. All analyses have high power to detect some (large) effects and low power to detect some (small) effects. For example, a sample test with N = 100 has 99% power to detect a Pearson correlation test’s effect size of ρ = .50 yet only 52% power to detect ρ = .10. Similar calculations can be made for a range of all possible effect sizes. For this reason, power probabilities can be best envisioned as a curve across the range of possible effect sizes rather than a single value (Figure 1).

Figure 1.

Power Curves for a Simple Correlation Test, Given Different Population Values of the Correlation Coefficient Rho (r)

In addition, different tests within the same study can provide different levels of power. If a study is reported as having 80% power, it can only be reported as such in the context of a given effect size and a given analysis (e.g., “the final sample had at least 80% power to detect Pearson correlations ρ > .28 at α = .05”). But studies with multiple analyses within a single sample (e.g., independent-samples t-test and a mediation analysis) do not have a single level of power because the power for the different tests will differ.

Power Analysis: Controversies and Alternatives

As we can already see, using power analysis to determine and evaluate sample size is not always straightforward. Power analysis makes assumptions about effect size, timing, available resources, and desired power level. In the next section, we review and discuss four important controversies about these assumptions and offer guidelines for researchers. What effect size should we enter into our power calculations? Can power analysis be useful if it is conducted after data collection? How can the downsides of power-based requirements for difficult types of research be dealt with? And what level of power is appropriate?

Controversy # 1: How to Determine Effect Size in Power Analysis?

Interpreting the effect sizes used in power analysis is critical to its employment and interpretation, but it is not always obvious what kind of effect size to expect. It may seem paradoxical for a novel question. Why should we need to know how large an effect will be when our experiment will be the first to tell us how large the effect will be? Out of 614 social and personality psychology researchers surveyed by Washburn et al. (2018), 31% cited the indeterminacy of effect sizes as a reason why they sometimes avoid using power analysis. Against such resistance, it is easy to understand the temptation to propose a standard, conventional target effect size. Especially when funding, publication, or IRB approval might hinge upon study plans being seen as “well-powered” for the critical hypothesis test, agreeing on a fixed standard for effect size would present the appearance of objectivity and consensus. Instead of one clear criterion, there are many different approaches to determining the appropriate effect size, which we will review and evaluate. We then propose our own flexible but principled criteria based on two main kinds of practical interest: basic and applied.

In contemporary social-personality psychology, researchers often refer to Jacob Cohen’s benchmarks designating small, medium, and large effect sizes (e.g., Cohen, 1988). These benchmarks came from Cohen’s subjective perceptions of typical results in psychology, but they have been shown to be internally inconsistent between mathematically transformable sizes for r, d, and f2 (Correll et al., 2020). Even Cohen (1988, pp. 12–13) himself gave clear warnings against adopting these benchmarks too rigidly. We believe, likewise, that standards for effect size should depend on the purpose of the research if there is little existing knowledge about the phenomenon being studied.

Still, the standard benchmarks are popular not just as descriptions of observed effect sizes, but as criteria for power analysis. Correll et al. (2020) observed that most power analyses they found in a survey of the psychology literature started from these “t-shirt size” benchmarks rather than from prior data. Our own Google Scholar search for the exact phrases “detect a medium effect” and “detect a medium-sized effect,” often used in power justifications, yielded, as of October 19, 2021, 2,452 articles since January 1, 2020, mostly in psychology and related disciplines. Compared with similar results substituting “small-to-medium” (494) and “large” (619), it seems that conventional “medium” values prevail as a rationale for power analysis, following the strategy advocated by Hesiod and also by Goldilocks: “moderation in all things.” 3

In this section, we argue that determining the appropriate effect size to use for power analysis and related techniques is a complex process that defies easy solutions. Yet it is important for the advancement of science that we do not shy away from that complexity (Spurlock, 2019). Rather than offering a single criterion, we will first discuss general principles in considering effect sizes, and then review several determination methods in order, including the novel terminology of basic and applied practical effect size. We also note that these determination methods provide vital information both for traditional methods of power analysis in which point estimates for effect size are entered and for newer methods relying on distributions of effect sizes (e.g., S. F. Anderson et al., 2017; Chen et al., 2018; Pek & Park, 2019). Although the latter methods model effect sizes as distributions rather than points, their inputs still need to draw on realistic values for maximum, minimum, and mean parameters.

Principles of Effect Size Determination

Determining effect size a priori requires an understanding of what effect size is. We will assume that most psychology researchers are familiar with the concept of effect size reflecting a ratio of observed effect (e.g., difference between means) to noise surrounding it (e.g., pooled standard deviations). Cohen’s (1988) method of assigning a separate effect size metric to accompany each statistical test has resulted in a plethora of Latin and Greek letters, although there have been calls to adopt a broader standard, such as r-equivalent (Rosenthal & Rubin, 2003) or proportion-of-variance measures in the η2 family (Correll et al., 2020). There are also distinctions such as between population and sample effect size or between standardized and unstandardized effect size (Kelley & Preacher, 2012). In particular, thinking about effect sizes in terms of unstandardized components—raw units of the independent variable (e.g., years of education, temperature in degrees Fahrenheit), standard deviation of the dependent variable—helps to ground effect size estimates in reality and measurement method of the phenomenon being studied (Baguley, 2009; Pek & Flora, 2018). However, the main issue in determining effect sizes for power analysis is which source to use: the typical size of existing research, a size of minimal theoretical interest, a minimal size based on practical considerations, such as whether an effect can realistically be replicated in a basic research lab, or whether a psychological intervention “meaningfully changes something in the world” (Silan, 2019, p. 2).

Typical-Effect-Size Approaches

Because of arguments that Cohen’s sizing standards are arbitrary and inconsistent, which we have just reviewed, an evidence-based approach to effect sizes in research looks appealing. Indeed, benchmarking studies drawing on published results in various areas of behavioral science tend to yield estimates for the central tendency of effect sizes that are smaller than Cohen’s impressionistic “medium” (Bosco et al., 2015; Funder & Ozer, 2019; Richard et al., 2003). These benchmarks have often been proposed as replacements for Cohen’s criteria. However, before we too hastily substitute one heuristic for another, it would make sense to step back and consider the limitations of literature-based benchmarks.

In social and personality psychology, we sometimes see meta-analytic aggregations of study results across many different methods, and even many different topics (e.g., Richard et al., 2003 for social-personality psychology; Gignac & Szodorai, 2016, for personality psychology). Richard et al. (2003), for example, yield an average r of .23 across meta-analyses in social-personality psychology, but different topics yield average effect sizes ranging from near-zero to r = .70. Schäfer and Schwarz (2019) more recently have also found great heterogeneity in the effect sizes of published psychological research. Average effect sizes calculated from a collection of meta-analyses might thus depend critically and arbitrarily on the prevalence of certain kinds of research in their samples. However, it is doubtful that they represent an abstracted, tightly defined size that most research projects should expect to attain.

Method issues, too, are vital when interpreting effect sizes. Effect sizes can be difficult to compare across different models, manipulations, and measures if they even can be sensibly compared (Pek & Flora, 2018). An intervention that combines many different effective mechanisms (e.g., a mood manipulation that uses happy music, bright colors, and a comedy sketch video) may be conceptually imprecise, but it will give stronger effects than an intervention that tests one isolated mechanism. Statistically, effect size interpretation depends critically on the design and model used, so a raw beta coefficient of .20 in a bivariate model (equivalent to r = .20) represents something different than a beta coefficient of .20 in a model with four other intercorrelated predictors.

As an example of the importance of methodological choices, consider methods of manipulating interracial threats within social psychology experiments. At the subtle end of the spectrum, one can manipulate minimal features of vignettes, such as information about the year when the United States is expected to become a “majority-minority” nation (Craig & Richeson, 2014). That manipulation should produce a smaller effect size, all else equal, than a more vivid procedure in which, for example, White and Black men are paired to chat about racial profiling (Goff et al., 2008).

The design of a study, which is one aspect of method, also counts when estimating likely interaction effects based on literature for which only a main or simple effect is known. Even though interactions are statistically independent of main effects, the size of interactions that are built from a previously known effect as one of their simple effects can be estimated. Unless the interaction is a perfect cross-over (e.g., a complete reversal of the original effect in a new condition), its size will be a fraction of the original simple effect; closer to one half if the new condition completely eliminates the effect, or less if the new condition merely attenuates it (Giner-Sorolla, 2018; Simonsohn, 2015; Westfall, 2015). Blake and Gangestad (2020) present further simulations and guidelines supporting these observations. Also, the unreliability of continuous moderators obtained outside experimental control means that interaction effect sizes should be expected to be low (McClelland & Judd, 1993).

Low reliability of measures also attenuates effect sizes. In one example, reducing the reliability of a measure from .90 to .70 reduces the power for a repeated-measures t-test from over .99 to under .70 (Heo et al., 2015). This is equivalent to effectively halving the effect size detectable (from d = .67 to d = .33) if power is held constant at .80. Investing in steps to tighten construct measurement thus promises to pay dividends in statistical power, if it is done with regard for validity; for example, taking care that dropping items to increase reliability does not reduce construct coverage and thus reduce effect size (Cattell & Tsujioka, 1964).

The research literature drawn on when anticipating an effect size, then, should match as closely as possible the topic, statistical question, and method of the proposed study. Direct replication studies are most likely to achieve this match. Beyond replication, emerging meta-analytic tools such as the Cooperation Databank (Spadaro et al., 2022) and the MetaBUS database (Bosco et al., 2020) can be used to gain precise, up-to-date, and methods-sensitive estimates of effect sizes based on research literature, selecting for topic and methods specificity. Data from meta-analyses can also be drawn upon to derive distributions of effect sizes for input into power analyses and modeling uncertainty (Du & Wang, 2016).

No matter how tightly matched the research, however, effect sizes derived from the published literature are overestimated if nonsignificant results have difficulty being published (Dickersin, 1990; Friese & Frankenbach, 2020). Simply put, in a world where only large effects can be significant (as when the test has a high power level only for large effect sizes), then all significant effects will be large. This situation can help explain, among other things, why studies with smaller sample sizes are less likely to show significant independent replication compared with those having larger sample sizes (e.g., Open Science Collaboration, 2015). A useful simulation of the underlying process is provided by Pek et al. (2020), while Schäfer and Schwarz (2019) show relative inflation of effect sizes among non-preregistered compared with preregistered published studies in psychology, possibly due to publication bias.

Because publication bias can be assumed in most topics of psychology, some authors have proposed adjusting downward any effect sizes taken from published literature or otherwise treating them with skepticism (S. F. Anderson et al., 2017; McShane et al., 2016; Perugini et al., 2014; Simonsohn et al., 2014). Bias correction generally involves adjusting an observed distribution of published effect sizes, accounting for publication bias (e.g., a cutoff of p < .05 to be published) by modeling, in various ways, the body of results that did not meet the cutoff and therefore did not get published. Although these techniques only work well when their assumptions are met (N. A. Lewis & Michalak, 2019), it is vital to consider them because published effect sizes from biased literature will not reflect what a researcher starting their own study might find.

Unfortunately, currently available methods of correcting meta-analytic effect sizes for publication bias are complex and may still not be as effective as hoped. Sladekova et al. (2023) reanalyzed many meta-analytic databases using publication-bias-correction methods but found little change from the original unadjusted average effect sizes. Sladekova and colleagues argued that “although the effect size attenuation we found tended to be minimal, this should not be taken as an indication of low levels of publication bias in psychology” (p. 664). Inzlicht et al. (2015) simulated a variety of bias-correction techniques in a situation where heterogeneous effects are subject to publication bias and found little consistency among the results of techniques as well as an overall range of estimates that did little to resolve questions about the true state of the simulated literature in question.

If prior literature is biased or nonexistent, experimenters may want to take matters into their own hands and carry out pilot tests with a descriptive goal: to capture effect sizes. Pilot testing can be valuable in understanding how design choices affect the potency of the psychological experience produced, with implications for effect sizes in the envisioned study. For example, if one is interested in studying cross-race interactions, one could conduct a pilot study to determine whether participants react more strongly to having a face-to-face conversation about race (e.g., Goff et al., 2008) than to read a vignette about changing racial demographics (e.g., Craig & Richeson, 2014), with the intent of maximizing effect size through the final choice of method. However, this method can overestimate effects as well because pilot tests are also selected: We only follow-up on the ones whose results seem promising (Albers & Lakens, 2018). To compare different paradigms for strength, rather than to generate an absolute value, is thus a preferable use of pilots. All the same, uncertainty also surrounds the estimates taken from pilot testing, so firm decisions are best taken on a finding of statistically significant differences between two pilot tests, which may be difficult to achieve if the participant numbers put into pilot testing are relatively low. Essentially, pilot testing can be seen as a home-grown source of estimates, one which can exactly match the topic and methodology of the proposed study, but is subject to similar biases as consulting the prior literature.

Minimal-Effect-Size Approaches

The limitations of benchmarking according to the typical effect size might lead researchers to instead benchmark from the smallest effect size of interest as recommended by Lakens, Scheel, et al. (2018; see also Lakens, 2022). The interest in such criteria arises because no sample or population is likely to show that one variable is completely mathematically unrelated to another (e.g., a correlation coefficient of zero to 20+ decimal places). And yet psychologists would like to know at what point they can declare that two things are for all practical purposes unrelated. Are there ways to determine a minimum effect size of interest—a parameter that can be entered to evaluate whether a proposed or achieved sample size can decisively tell us if a meaningful directional effect exists?

In most social-personality psychology research, it is difficult to gauge the smallest effect size of interest in a way that can be agreed upon. The issue is clouded by purported instances of very small-looking effect sizes corresponding to sizable real-world impacts, as when Rosenthal (1990) identified an aspirin intervention that reduced heart-attack risks considerably as having an effect size of r = .03. But such examples are flawed because they include large numbers of participants who are irrelevant to the hypotheses, due to heart attacks being a very low-occurrence event. Calculating how well the treatment prevents heart attacks, but only considering those people who would be in line to suffer them in the first place, gives a much larger effect size, such as r = .52, in the aspirin example (Ferguson, 2009). This example and similar examples using inappropriate interpretations of 2 × 2 datasets with very uneven outcome numbers interfere with a more reasonable understanding of how an important-looking treatment effect ought to be described in effect size terms.

Other arguments for powering studies to detect small effect sizes are likewise shaky. 4 For example, it has been argued that a very small effect identified in the lab can add up over many repeated exposures, or many individual cases, to a potent real-world effect (Abelson, 1985) or that a small effect identified across the general population can have large effects at the tail ends of the distribution of one variable (Hyde, 1981). But none of these processes occur out of necessity. They rest on assumptions about the additivity of effects over time (as Funder & Ozer, 2019, argue), the transfer of small observed lab effects to large-scale field effects, and the normality of distributions in extreme ranges. Establishing the growth potential of effects from single-instance lab studies would require long-term multiple-exposure interventions (e.g., repeated priming of a concept across a year), large-scale interventions (e.g., across millions of people via mass media), or studies focusing on people at the thin end of a distribution (e.g., incidence of a personality trait among chess grandmasters, presumed extremely high in analytical ability). With all these alterations to design, the small effect could add up to a more considerable effect but also could fail to materialize. The value of small lab-grown effects when translated to a different scale is an empirical question, not an assumption.

If we define a useful finding as an effect size with a tight confidence limit around it, then it is difficult to insist on any smallest effect size of interest. That is, if the goal is to know the direction and size of any effect, it is just as good to know about the finding r = .03, ±.01 as to know about r = .53, ±.01. The theoretical demands for the smallest effect size come not from a science with point-estimation goals but from the framing of psychological hypotheses in a “weak” sense: stating only the positive or negative direction of a relationship, but without a theory-grounded prior estimate of how large it should be (Meehl, 1990). Merely directional hypotheses are nearly impossible to falsify empirically based on nonsignificant findings because it can always be argued that the population effect still points in that direction, but the findings just did not have enough power to detect it. Indeed, most theories in social-personality psychology do not make “strong” predictions that include an effect size (Klein, 2022; Lykken, 1968).

Others have attempted to calibrate effect sizes empirically by appealing to the size of psychological effects visible to the “naked eye” of research participants or observers (e.g., Anvari & Lakens, 2021; Ozer, 1993). To be sure, knowing these sizes is useful for understanding the phenomenology of effects, but they do not guard against the possibility that a meaningful effect on behavior can occur without the subject or casual observers being aware of it. This possibility is a cornerstone of many fields of psychological research, in particular social cognition (Nisbett & Wilson, 1977). If an effect exists below the threshold of observation, but has further consequences, basic science still has an interest in establishing its magnitude and direction.

Practical-Effect-Size Approaches

We argue for a change in focus. When there is little prior guidance for what size of effect to expect in a specific topic and methodology, the target should not be the smallest effect size of “interest,” because any effect size can be of interest to establish—even one close to zero. Nor should it be a typical effect size because of the many difficulties in establishing and generalizing such a parameter from a heterogeneous existing literature. Rather, benchmarks should target an effect size that is “practically meaningful.”

This phrase can be interpreted in two ways. First, to the extent that findings can be applied directly to a problem with a cost-benefit structure, it should be possible to approximate the cost-benefit tradeoff that would be useful for the problem—the minimum applied practical effect size. For example, if making a change that costs US$1,000 in employee time and resources annually would increase donations to a charitable organization, then any percentage increase in donations that would scale to higher than US$1,000 is cost-effective and therefore of interest to establish (for detailed examination of cost-effectiveness and scalability, see Kraft, 2020). Consulting with practitioners, using unstandardized descriptive parameters or other ways of expressing effect sizes that are accessible to non-statisticians (e.g., common language probabilities; Liu et al., 2019) can help researchers to power their analyses (McClelland, 1997). A social psychologist studying education, for instance, can consult users to see whether they see it as worthwhile to invest student time resources (say, 1 hr a week) into a growth mindset exercise that translates into a 0.10 GPA increase in student performance (cf. Yeager et al., 2019), and if so, at what minimal point in GPA improvement the intervention would cease to be seen as worthwhile.

In another example from educational psychology, research has documented that educational interventions should reach at least 60% fidelity for interventions to show their intended effects, but that it is rare for them to be implemented with greater than 80% fidelity (Durlak & DuPre, 2008). Therefore, the “sweet spot” to work toward is an intervention that can be implemented with somewhere between 60% and 80% fidelity, representing the range of effects that can produce a psychologically meaningful change (Durlak & DuPre, 2008; Horowitz et al., 2018; Premachandra & Lewis, 2022). Byrne (2019) further argues for stakeholder involvement in research planning as a way to “identify intervention outcomes that are considered important by stakeholders” (p. 291). Although the three examples Byrne (2019) gives for behavioral diabetes management were largely qualitative (i.e., identifying outcomes that matter to diabetes patients), one can imagine further asking, through sensitive and natural-language means, quantitative questions about how much a given improvement would matter. Psychological measures could take a cue from the concept of the minimally clinically important difference (MCID; Jaeschke et al., 1989), a patient-led measure of symptom relief that has not yet been widely applied to medical research (Brennan et al., 2023). Practically applied sizes will vary greatly with the context (Bakker et al., 2019) and the resources of a given applied setting, a strong argument for the involvement of stakeholders in research.

However, much basic research does not have a directly translatable application. We propose that in these cases, a basic practical effect size can depend on a paradigm’s ability to be replicated, extended, and inspire further research. Effect sizes much smaller than Cohen’s conventional “small” size of r = .10 may in theory be worthwhile to capture. But distinguishing those effects from zero, or effects in the other direction, can require enormous resources. A d = .05 in a two-sample t-test, for example, would require close to 10,000 participants to attain 80% power at two-tailed α = .05. By making sure that effect sizes do not escape the resource limits of a typical research lab or grant in their field while considering the possibility to achieve resource savings through methodological changes such as repeated-measures designs, basic researchers might ensure that other researchers can verify and follow-up their findings. This approach is also suggested by Lakens, Scheel, et al. (2018): “. . . researchers can justify their sample size on the basis of reasonable resource limitations, which are specific to scientific disciplines and research questions . . .” (p. 263). Our only clarification would draw a line between a resource-based practical minimum and the term “smallest effect size of interest.” The small effect size might still be valuable to establish, but the reason it undershoots the minimum is one of practicality, not scientific interest—hence our phrase, “practical effect size.”

We should note two things about the basic practical effect size. First, it is not intended to benchmark a typical effect size that labs must have the resources to achieve. It is an upper, not a lower, limit on resource expenditure. Because labs may split their resources among different lines of research, the basic practical size does not completely define what the typical sample size in a lab will be, although it does caution researchers that studies with lower power to detect it may miss some effects worthy of further study. Second, basing a minimal effect size on the maximum resources to be expected in a discipline is different from simply basing sample size on available resources in one’s own lab, one focus of Lakens’ (2022) recent guidance. If personal resources are the constraint on sample size, then power analysis to determine N through a minimal effect size derived from those resources is hardly necessary. However, either before or after the study, it would be useful to gauge the size of the effect that could be captured via effect-size sensitivity analysis. Lakens’s (2022) consideration of resource limitations in the individual research lab is relevant, and we also discuss how labs without extensive resources can address power concerns in Controversy #3, below.

But there is also a need, field by field, to discuss and establish overall standards. Lakens, Scheel, et al. (2018) suggest that peer reviewers of articles and grants could make such judgments case by case. We also see benefits for openly discussing the issue across an area of research, with the goal of establishing updateable parameters for effect sizes that can be practically replicated (cf. Lakens’ (2022) call for field-wise heuristics). A paradigm that is long, difficult, and lab-bound, for example, might use a practical effect size calibrated to gathering at most 50 participants (perhaps boosted in power by repeated-measures design); while a two-condition design that takes 5 min and can be given to a convenience sample online might use a practical effect size calibrated to 500 participants. Either limit, perhaps, could be determined using a willingness-to-pay survey among researchers interested in building on or replicating the study.

Like its applied effect counterpart, the effect size of basic practical interest will vary according to research paradigms and typical resources, so we are reluctant to suggest a single concrete figure. We acknowledge, too, that the kind of discourse that might lead to more concrete recommendations for both kinds of practical effect sizes has barely begun. The flow of information from basic to applied researchers and from applied research to practitioners tends to be one way (Giner-Sorolla, 2019). Applied practical effect sizes will need to be worked out on a very specific basis due to the differing importance of specific interventions and resources. Seeking general benchmarks would be less useful than working out techniques for surveying practitioners to establish the parameters of cost-effectiveness.

Conversations about what sizes of basic effects might be impractical for future research also need to happen across different fields. Techniques for power analysis assuming budgetary constraint, already developed to some extent in psychometrics (e.g., Marcoulides, 1995), are yet to be formally worked out in social and personality psychology. Effect-size sensitivity analysis can fill in some of the blanks; it suggests, for example, that someone with access to 200 participants per study can detect, with 80% power in a two-sample t-test, d = .40 in a between-participants experiment; someone who can recruit 2,000 participants can detect d = .13 under the same conditions; and a question that engages a worldwide research network putting together 20,000 participants can hope to detect d = .04. It might eventually be decided, for example, that this last level of focus should be reserved for extremely pressing or foundational questions in basic research, while the more moderate levels of expenditure are more appropriate for derivative or peripheral questions. Another source of very small effect sizes that are reliably different from zero might be a meta-analysis of studies in a single paradigm totaling thousands of data points. However, under those conditions, future researchers would be well advised to identify more specific methods and contexts within the meta-analysis that reliably yield higher effect sizes, which can be tested fruitfully with a more reasonable expenditure of resources.

The concept of basic practical effect size recognizes that larger effects are replicable by more labs and end up creating a larger knowledge base than smaller effects do. It does not preclude some questions from being deemed important enough to study at a very large scale, with high power to detect small effects. But although basic research in social-personality psychology is used for its applications, it is also given away to satisfy public curiosity about how the mind works—not just a behavioral technology, but an idea technology (Schwartz, 1997). If we see any effect size as newsworthy no matter how small, we risk giving the wrong impression when the public only sees headlines claiming a link between two constructs or a difference between two groups. Imagine that, on hearing of a scientifically confirmed gender difference between men and women, the average person presumes that men must be “from Mars” and women “from Venus” (or at least 0.8 standard deviations, if not astronomical units, away from each other). Such a belief may grossly exaggerate the actually confirmed difference which may be robust, and significant, but no more than 0.3 standard deviations in size. (For related evidence on overestimation of effects see Hanel & Mehler, 2019.) Until the applied results of a finding are clear, the responsibility of basic research is to communicate the size as well as direction of findings in a way that is understandable to laypeople and to discuss small-sized findings with caution (see Anvari et al., 2023, for discussion of ways to examine the implications of small effects).

Researchers should also explore ways of going beyond Cohen-type effect sizes to convey practical importance to research consumers who lack the foundational statistical training for evaluating effect sizes. As Spurlock (2019) notes, “effect sizes are expressed in technical terms unfamiliar to consumers of research, and further, most effect sizes are expressed in units with no clear real-world application, such as is the case with the correlation coefficient” (p. 624). Researchers and practitioners have come up with more intuitive metrics for conveying real-world importance, such as number needed to treat, defined as the “number of patients who need to be treated with an intervention before one patient benefits from the treatment” (Spurlock, 2019, p. 624). Many recent articles wrestling with the issue of defining practical significance in real-world terms arrive at the conclusion that subjectivity will never be removed from such determinations and that experts in the relevant content field will need to be consulted (e.g., Balkin & Lenz, 2021; Spurlock, 2019).

In bringing up difficulties with both the minimal and typical effect size approaches, we do not wish to elevate one set of difficulties over another. Indeed, both have difficult and variable solutions. Minimal sizes need to be benchmarked against their resource implications, whether for following through with basic research or for implementing applied research. Typical sizes need to be assessed carefully for accuracy, keeping in mind the similarity in method as well as in hypothesis between the existing and proposed research. A target size averaged from literature can be better than nothing, but a more contextually sensitive size is bound to be even better than that.

We conclude our discussion of effect size by listing four considerations researchers can use to determine which effect size to use in their power analyses. We start from a situation of maximum information about the typical effect size in the experimental paradigm and move to techniques that can be used when little is known about the effect under investigation.

The best option is to work from an accurately estimated literature. This is a literature that focuses on a single topic studied with highly similar and comparable methods, usually a single paradigm and outcome. Although finding accurate literature retrospectively may be difficult in the present moment, they can be recognized going forward by their use of results-neutral formats, such as Registered Reports or multistudy papers that affirm to be a complete account of the lab’s research using that paradigm. Such precedents are best used if the purpose of new research is to test the original effect in a new situation—for example, when removing a potential confound, moving to a different culture, or adding a new contextual factor. However, if the focus is on a novel moderation effect, the size of that effect is likely to be less than the original basis for one of its simple effects, so prior estimates usually should be revised downward, sometimes drastically.

Empirically determining an anticipated effect size from a biased precedent, which may draw on previous results, requires a set of studies with a comparable topic and methodology, but also a valid method for correcting bias in the estimate, which at present seems to be hard to achieve. Aggregations of effect sizes without a comparable methodology are also common but are likely to be even less accurate as a precedent for a given anticipated effect. Internal pilot testing as a source of precedent also has enough problems with accuracy and bias that it can only be recommended as a means to make relative decisions about, for example, the strength of different manipulations.

While they are rare in social-personality psychology, strong theories, or theories that yield effect size predictions, can be helpful if they exist (Navarro, 2019; Rohrer, 2018).

- Finally, for researchers who find no relevant prior literature or theory firmly establishing an effect size for what they want to study, we advocate considering the practical effect size for basic and applied research.

- A case for basic practical effect size can be made by assuming a maximal level of resources typically available for studying the question, aiming to calibrate a minimum effect size that most basic researchers can build on.

- A case for applied practical effect size is ideally made after consultation with practitioners. It can focus either on a cost-benefit analysis or on a pragmatic estimate of the lowest size of the outcome, in raw units, that would be meaningful.

Controversy #2: Is Power Useful After the Completion of a Study?

Some methodologists and statisticians have emphasized that power is only relevant before data are collected (see Levine & Ensom, 2001; Senn, 2002; Zhang et al., 2019). Similarly, Wilkinson and Task Force on Statistical Inference, American Psychological Association, Science Directorate (1999) state that “Once the study is analyzed, confidence intervals replace calculated power in describing results” (p. 596). We agree that, strictly speaking, the probabilistic process that determines power no longer applies after data is collected. However, power analysis and related tools can be useful for understanding and evaluating studies after their completion, particularly when a priori power analyses are not reported for the study. Complicating matters, the term “post-hoc power analysis” is often applied to a specific kind of analysis that is not useful: using the observed effect size from a sample to evaluate the same sample. Acceptable and unacceptable uses of power analysis for post hoc evaluation are discussed in this section.

Avoid Power Determination Using the Observed Effect Size

Within power-determination analyses (ones that output a test’s power), a common misstep is to input all parameters based on those observed in the sample (i.e., sample size and effect size). Proponents of this kind of analysis argue that for nonsignificant results, it provides useful information about the need for replication (e.g., Onwuegbuzie & Leech, 2004). This practice is also encouraged by the popular software package SPSS, which provides entirely sample-based power estimates (called “observed power”) as an option for many analyses (IBM Corp., 2017) suggesting to users that this kind of power determination must be useful. Reviewers also sometimes request “observed power,” particularly when effects are nonsignificant. After such requests, authors have an incentive to follow them, even if they know better.

In truth, it is incorrect to use the sample’s observed effect size to determine the power of that analysis (Cohen, 1988; Gelman, 2019). In that case, the output power estimate is a monotonic function of the p value calculated in the sample (see (Goodman & Berlin, 1994; Lenth, 2001, 2007), so no new information is gained from calculating power using the observed effect size if the p value is already known. When the p value is nonsignificant, observed-effect-size power is usually close to the unimpressive figure of 50% (with exceptions for F tests with high denominator degrees of freedom), but when results are strongly significant, it will reach 90% or higher (Hoenig & Heisey, 2001; Lenth, 2007; Nakagawa & Foster, 2004). However, the low information value of this specific practice should not be over-generalized to more useful practices by calling it simply “post-hoc power analysis.” This label unjustly maligns all forms of power-determination analysis that can be done after data are collected.

Observed Power and p Values in Plausibility Determination

There is also one case in which observed power based on data can be useful, compatible with the insight that this statistic is another way to express a p value. Because previous editorial practices have discouraged authors from submitting nonsignificant studies in a multistudy article, it has been observed that some articles whose studies all yield significant results are very unlikely to be a complete report of a research program (Schimmack, 2012). That is, if each study in a seven-study paper has some chance of obtaining a nonsignificant finding in its hypothesis-confirming test, given its observed effect size, what are the odds that all of seven straightforwardly analyzed and reported studies would be significant, and are these odds credible across a paper or a larger literature? To this end, it has been suggested that post hoc power for each key test using the observed effect size can be calculated and then combined to estimate just how much of a stroke of luck the all-significant article is. At some point, it should be possible to observe that an unbroken series of positive results from studies, given their power to capture a plausible effect size, is implausible. Such a series would suggest, if not definitively prove, some kind of selective reporting at work.

Numerous published analyses have attempted to test the credibility of published multistudy articles or even literature, using one of the specific methods developed for these purposes: for example, p-curve (Simonsohn et al., 2014) or Z-curve (Bartoš & Schimmack, 2022; Brunner & Schimmack, 2020). Using a precursor to these methods, an analysis of Bem’s (2011) controversial paper on precognition, for example, found the joint product of the observed power for a set of 10 studies yielding 19 tests to be <.001. Although among these studies, only 14 tests produced significant results, this figure still suggested an unlikely outcome for a world where the reported analyses represented all planned studies and analyses (Schimmack, 2012, pp. 558–559). The more likely explanation, later confirmed through interviews with those involved in the project, is that the research program involved many unreported studies, and many options to choose which analysis was declared consequential for the hypotheses (Engber, 2017). However, the plausibility of findings can also be assessed by testing how likely it is for a set of significant p values to arise from null versus alternative distributions (e.g., Gronau et al., 2017; Simonsohn et al., 2014).

The application of these methods has itself come under criticism, most strongly by Pek et al. (2022). Apart from definitional objections to calculating power post hoc, they also bring up cautions about the theoretical assumptions of power analysis that are likely to be violated by properties of aggregated actual studies. These include nonindependence of observations, heterogeneity of population characteristics, and heterogeneity of sample size. These assumptions render diagnostic analyses based on observed statistical power imprecise, and “at best, exploratory” (p. 261). Although Brunner and Schimmack (2020) assess the accuracy of different post hoc analytic methods under conditions of effect and sample size heterogeneity, this assessment is unlikely to allay Pek et al.’s, more broadly based doubts completely. However, there remains a compelling logic to the idea that some sets of studies with a dearth of negative results look unlikely as complete reports, regardless of whether statistical power is the best concept to use in testing it.

Power and the Evaluation of Significant Effects

In addition to reducing the likelihood of negative results, having good power before the study is conducted also improves the robustness of the eventual positive results (Szucs & Ioannidis, 2017). In a world where power to detect true effects is low, then any given positive result is less likely to be a true positive, and thus relatively more likely to be a false positive. Some people may intuitively admire a “heroic” significant effect found in an analysis with relatively low power, thinking that the effect must be inherently strong to emerge as significant under such difficult conditions. However, this evaluation is wrong (Loken & Gelman, 2017). Low power decreases, rather than increases, the credibility of a significant effect.

For example, assume that out of 100 effects, 30 are true in the population. If the power to detect a reasonable effect size across all studies is extremely low (e.g., 12%), then 3.6 true positives are expected. However, 3.5 false positives are also expected, so nearly half of all significant effects are not true in the population (Szucs & Ioannidis, 2017). At a power of 80%, 24 true positives are found versus 3.5 false positives, meaning we can be much more confident that any observed significant effect is true. 5 In this example, increasing the power from 12% to 80% is assumed to be achieved by increasing sample size, not by increasing the effect size of the alternative hypothesis, which as Mayo (2019) notes, would also have to increase the alpha criterion or else result in an internally incompatible model. Thus, having high power to detect an effect of interest is important in controlling the field-wide dissemination of false positive results as well as controlling study-level false-negative rates.

To further understand how power carries implications for the value of observed p values, one must understand what error rates do—and do not—say about research. If α = .05, many researchers and educators make the fallacious assumption that there is only a 5% risk of a false positive (for evidence see, e.g., Hubbard et al., 2011). Setting α to .05 does indeed allow only 5% of null effects to appear significant. However, it does not lead to the converse inference that 5% of all observed significant effects correspond to a null effect, any more than knowing that 95% of all dogs are pets entails that 95% of all pets are dogs. Researchers may wish to know the percentage of all observed significant results that are false positives, known as the false discovery rate (FDR; Ioannidis, 2005) or false-positive risk (FPR; Colquhoun, 2019), aiming to restrict this risk to 5% or some other low number (e.g., Colquhoun, 2019).

Using reasoning based on signal detection theory, as in the previous example about higher- and lower-powered studies, the FPR depends on the frequency of false positives (determined by α) and true positives (determined by power), as well as the odds of the effect being true in the population (prior odds). High power to detect a given effect size means the FPR is closer to an acceptable number. For example, in a test with a very low power of 10% to detect the population effect size, and uninformed prior odds of 1:1, the FPR is 33%, whereas an identical test with power of 80% has FPR of 5.9%, which is closer to the naive assumption that the FPR equals the conventional alpha value of 5%. A middling power of 40% leads to an FPR of 11.1%, meaning that the α level needed to reach the same FPR as the test with 80% power would have to be set closer to .025 than to .05. That is, p values close to .05 are particularly untrustworthy in lower-powered tests because they are unlikely to reach the α level required to achieve an intuitively acceptable risk of false positives. (All calculations were facilitated by the online resources at Zehetleitner & Schönbrodt, 2022.)

The contribution of power to false-positive rates should not be exaggerated. As Wegener et al. (2022) point out, power plays a limited role when the prior odds are close to 50% or higher, and increasing power has diminishing returns beyond the power of .50. A similar line of argument about the low importance of power compared with prior odds is taken by Mayo and Morey (2017), who additionally point out that prior odds for any given research question are indeterminate (see also Mayo, 2018). Still, it can be argued that in an ideal world, psychologists would choose research questions with mid-range prior odds: interesting because they are somewhat in doubt but grounded on plausible theory or real-world observations. Indeed, a recent selection of unbiased psychological results reporting via Registered Reports, which are published regardless of findings, suggests something close to 50% prior odds with attrition from less than perfect power (i.e., 44% of main hypotheses were confirmed; Scheel et al., 2021). It cannot be said conclusively that the research questions chosen for Registered Reports are representative of all research questions. Perhaps particularly risky or safe ones are chosen. However, the state of this literature would be a good model for a level of scientific risk going forward, in the absence of strong theories that can make confident predictions about effect sizes.

But given that some literature (including Bem’s (2011) paper) has been built on surprising findings rather than solid theoretical frameworks, lower prior odds are not out of the question. For example, Wilson and Wixted (2018) compared social to cognitive psychology articles within a large-scale replication project of studies published in 2008 (Open Science Collaboration, 2015). They estimated that, along with differences in power, cognitive experiments were likely to have higher prior odds (e.g., 25% assuming 80% power) than social (e.g., 10% even assuming 50% power; with much stronger differences if power is assumed to be equal in the two fields). Given that a recent summary placed the median effect size of 134 social psychology meta-analyses at d = .36 (Lovakov & Agadullina, 2021), pre-2011 studies with n = 20 to 30 per cell must have had power considerably lower than 50% to detect many presumed effects, even without adjusting for publication bias.

We should also keep in mind that concerns about “low-powered” findings often piggyback onto other features of those findings which may be just as important in casting doubt on the findings, if not more, as their probabilistic false-positive rate (Mayo & Morey, 2017). For example, an isolated p < .05 result should not be taken as definitive evidence, certainly not by Fisher’s original hypothesis testing guidelines which aimed to establish experimental procedures that would rarely fail to produce a significant result. And if the positive result occurs in a test that had low power to detect a reasonable effect, we might further investigate the selectivity of reporting and analysis that went into producing this result, even more so if multiple low-powered tests yield positive results in a relatively implausible way.

Options for Post Hoc Analysis

In conclusion, for analyses of completed studies where N, alpha, and desired power level are known or assumed, there are two options for meaningful evaluation of the analysis after the fact. First, one might enter the given sample size and desired power into an effect-size sensitivity analysis to determine whether the analysis was powered to detect meaningful effects. The question is how to evaluate the effect size output. We suggest that if analysis at 80% power can only detect effects much larger than those considered typically or practically useful from a basic or applied standpoint (see Controversy #1), then an analysis of more reasonable effect sizes would yield low power, and p values in the .01 to .05 range should be viewed with caution. The 80% power figure is chosen as being an acceptable but not overly restrictive level for power (see Controversy #4); the range for p values comes from our earlier observation that p values close to .05 are particularly untrustworthy in lower-powered tests because they are likely to have FPRs much greater than .05.

Second, one might choose to use power-determination analysis with one of the effect size derivation methods listed previously. The resulting power then can feed into decisions about the robustness of the finding, with special caution applying to power levels under 50%, especially when the hypothesis is deemed to be low probability. We emphasize, however, that the state of uncertainty about population effect sizes, theoretically predicted effect sizes, and prior hypothesis odds in social-personality psychology argues against trying to convert these impressionistic recommendations into hard-and-fast quantified criteria.

Despite having different outputs, both the effect-size sensitivity and power-determination methods, when applied correctly post hoc, require only one empirical parameter from the data (the test’s N). Assuming a fixed alpha, the other two parameters each require some kind of criterion value, with one being fixed at a criterion, and the other being evaluated against a criterion. That is, in effect-size sensitivity, power is fixed (say, at 80% or 90%) and the effect size output has to be evaluated—is this an unrealistically large effect size? Conversely, in properly conducted power determination, the effect size is fixed at some typical or minimal value, and the power of the test is then evaluated against the conventional criteria—is this much lower than the generally accepted 80%? If there is a reason to prefer sensitivity analysis here, it might have to do with the difficulty of deriving an agreed-upon criterion effect size to start with, so it would be better to end with an effect size for further discussion rather than presume an effect size in the first place.

Finally, additional cautions apply to both these methods, when aggregating multiple estimates of power from several studies in published literature. Specifically, the existence of heterogeneity, selection bias, and moderating factors means that accurate modeling is likely to require more sophisticated techniques than simply looking at the average (mean) power (McShane et al., 2020).

Controversy #3: Do Power Criteria Unjustly Disadvantage Some Kinds of Research?

While we have seen that power can be important in evaluating the strength of a study, simply excluding manuscripts deemed to have low power from publication and other forms of dissemination can limit a scientific field in undesirable ways. Because publication is a major metric of hiring, tenure, and promotion, these decisions will also influence scholars’ judgments about whether to pursue a particular type of research in the first place. Here we will address objections to the practice of using the perceived low power of a study’s key analyses as an argument against conducting or accepting it.

A policy of rejecting “low-powered” research could discourage work on hard-to-reach and diverse populations, for whom sample sizes would tend to be lower, as each participant is reached at additional cost and with a potential limit on numbers available. Increasing research power to increase confidence in conclusions is a value we welcome, but research exists in a context of multiple values. If discouraging low-powered research also discourages research focusing on underrepresented groups in society, then this trade-off in values needs to be carefully examined. Outright rejecting low-powered research would also perpetuate the long-standing file drawer problem, an issue that becomes particularly pernicious for groups that are already underrepresented in the literature. This includes underserved groups and ones that are simply more diverse or difficult to study than typical samples from relatively affluent Western citizens (WEIRD populations; Henrich et al., 2010).

Conversely, standards requiring high power to detect reasonable effects are most easily reached through samples such as undergraduate college students or online workers, who can be recruited relatively easily, quickly, and in large numbers. But these samples are simply not appropriate or possible for some research questions and methods. In addition, prioritizing undergraduate samples can systematically exclude scholars from institutions with smaller participant pools, decreasing the diversity of perspectives in our field. Researchers have thus recently turned to crowdsourced participant pools online for data collection (e.g., Buhrmester et al., 2011, 2018; Paolacci & Chandler, 2014; Sassenberg & Ditrich, 2019). However, online samples are not appropriate for some research needs, such as immersive face-to-face social environments or nondigital behavioral outcomes (C. A. Anderson et al., 2019). Well-funded labs are also privileged in the number of online workers they can recruit, assuming an ethical commitment to pay adequate wages for the work.