Abstract

The spiking activity of neocortical neurons exhibits a striking level of variability, even when these networks are driven by identical stimuli. The approximately Poisson firing of neurons has led to the hypothesis that these neural networks operate in the asynchronous state. In the asynchronous state, neurons fire independently from one another, so that the probability that a neuron experiences synchronous synaptic inputs is exceedingly low. While the models of asynchronous neurons lead to observed spiking variability, it is not clear whether the asynchronous state can also account for the level of subthreshold membrane potential variability. We propose a new analytical framework to rigorously quantify the subthreshold variability of a single conductance-based neuron in response to synaptic inputs with prescribed degrees of synchrony. Technically, we leverage the theory of exchangeability to model input synchrony via jump-process-based synaptic drives; we then perform a moment analysis of the stationary response of a neuronal model with all-or-none conductances that neglects postspiking reset. As a result, we produce exact, interpretable closed forms for the first two stationary moments of the membrane voltage, with explicit dependence on the input synaptic numbers, strengths, and synchrony. For biophysically relevant parameters, we find that the asynchronous regime yields realistic subthreshold variability (voltage variance ≃4–9 mV²) only when driven by a restricted number of large synapses, compatible with strong thalamic drive. By contrast, we find that achieving realistic subthreshold variability with dense cortico-cortical inputs requires including weak but nonzero input synchrony, consistent with measured pairwise spiking correlations. We also show that, without synchrony, the neural variability averages out to zero for all scaling limits with vanishing synaptic weights, independent of any balanced state hypothesis. This result challenges the theoretical basis for mean-field theories of the asynchronous state.

Subject Areas: Biological Physics, Complex Systems, Interdisciplinary Physics

I. INTRODUCTION

A common and striking feature of cortical activity is the high degree of neuronal spiking variability [1]. This high variability is notably present in sensory cortex and motor cortex, as well as in regions with intermediate representations [2–5]. The prevalence of this variability has led to it being a major constraint for modeling cortical networks. Cortical networks may operate in distinct regimes depending on species, cortical area, and brain states. In the asleep or anesthetized state, neurons tend to fire synchronously with strong correlations between the firing of distinct neurons [6–8]. In the awake state, although synchrony has been reported as well, stimulus drive, arousal, or attention tend to promote an irregular firing regime whereby neurons spike in a seemingly random manner, with decreased or little correlation [1,8,9]. This has led to the hypothesis that cortex primarily operates asynchronously [10–12]. In the asynchronous state, neurons fire independently from one another, so that the probability that a neuron experiences synchronous synaptic inputs is exceedingly low. That said, the asynchronous state hypothesis appears at odds with the high degree of observed spiking variability in cortex. Cortical neurons are thought to receive a large number of synaptic inputs (≃10⁴) [13]. Although the impact of these inputs may vary across synapses, the law of large numbers implies that variability should average out when integrated at the soma. In principle, this would lead to clock-like spiking responses, contrary to experimental observations [14].

A number of mechanisms have been proposed to explain how high spiking variability emerges in cortical networks [15]. The prevailing approach posits that excitatory and inhibitory inputs converge on cortical neurons in a balanced manner. In balanced models, the overall excitatory and inhibitory drives cancel each other so that transient imbalances in the drive can bring the neuron’s membrane voltage across the spike-initiation threshold. Such balanced models result in spiking statistics that match those found in the neocortex [16,17]. However, these statistics can emerge in distinct dynamical regimes depending on whether the balance between excitation and inhibition is tight or loose [18]. In tightly balanced networks, whereby the net neuronal drive is negligible compared to the antagonizing components, activity correlation is effectively zero, leading to a strictly asynchronous regime [19–21]. By contrast, in loosely balanced networks, the net neuronal drive remains of the same order as the antagonizing components, which allows for strong neuronal correlations during evoked activity, compatible with a synchronous regime [22–24].

While the high spiking variability is an important constraint for cortical network modeling, there are other biophysical signatures that may be employed. We now have access to the subthreshold membrane voltage fluctuations that underlie spikes in awake, behaving animals (see Fig. 1). Membrane voltage recordings reveal two main deviations from the asynchronous hypothesis: First, membrane voltage does not hover near the spiking threshold and is modulated by the synaptic drive; second, it exhibits state- or stimulus-dependent non-Gaussian fluctuation statistics with positive skewness [25–28]. In this work, we further argue that membrane voltage recordings reveal much larger voltage fluctuations than predicted by balanced cortical models [29,30].

FIG. 1.

Large trial-by-trial membrane voltage fluctuations. Membrane voltage responses are shown using whole cell recordings in awake behaving primates for both fixation alone trials (left) and visual stimulation trials (right). A drifting grating is presented for 1 s beginning at the arrow. Below, the membrane voltage traces are records of horizontal and vertical eye movements, illustrating that the animal was fixating during the stimulus. Red and green traces indicate different trials under the same conditions. Adapted from Ref. [27].

How could such large subthreshold variations in membrane voltage emerge? One way that fluctuations could emerge, even for large numbers of inputs, is if there is synchrony in the driving inputs [31]. In practice, input synchrony is revealed by the presence of positive spiking correlations, which quantify the propensity of distinct synaptic inputs to coactivate. Measurements of spiking correlations between pairs of neurons vary across reports but have generally been shown to be weak [10–12]. That said, even weak correlations can have a large impact when the population of correlated inputs is large [32,33]. Furthermore, the existence of input synchrony, supported by weak but persistent spiking correlations, is consistent with at least two other experimental observations. First, intracellular recordings from pairs of neurons in both anesthetized and awake animals reveal a high degree of membrane voltage correlations [7,34,35]. Second, excitatory and inhibitory conductance inputs are highly correlated with each other within the same neuron [35,36]. These observations suggest that input synchrony could explain the observed level of subthreshold variability.
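The leverage of weak correlations over large input populations can be made concrete with a short calculation. For K exchangeable binary inputs that each activate with probability p in a time bin and share a pairwise correlation ρ, the variance of the coactivation count is Kp(1 − p)[1 + (K − 1)ρ]. The symbols K, p, and ρ, the function name, and the numerical values below are illustrative assumptions, not quantities taken from the text. A minimal Python sketch:

```python
def count_variance(K, p, rho):
    """Variance of the number of coactive inputs among K exchangeable binary inputs.

    Each input is active with probability p, and any two distinct inputs have
    Pearson correlation rho (illustrative symbols, assumed for this sketch).
    """
    single = p * (1.0 - p)  # variance of one binary input
    return K * single * (1.0 + (K - 1) * rho)


# Ratio of correlated to independent count variance for K = 10**4 inputs.
K, p = 10_000, 0.01
amplification = count_variance(K, p, 0.01) / count_variance(K, p, 0.0)
```

With these illustrative numbers, a pairwise correlation of 0.01 multiplies the count variance by about one hundred, since the factor 1 + (K − 1)ρ grows with the population size while the independent variance grows only linearly in K.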

While our focus is on achieving realistic subthreshold variability, other challenges to asynchronous networks have been described. In particular, real neural networks exhibit distinct regimes of activity depending on the strength of their afferent drives. In that respect, Zerlaut et al. [37] showed that asynchronous networks can exhibit a spectrum of realistic regimes of activity if they have moderate recurrent connections and are driven by strong thalamic projections (see also Ref. [17]). Furthermore, it has been a challenge to identify the scaling rule that should apply to synaptic strengths for asynchrony to hold stably in idealized networks. Recently, Sanzeni, Histed, and Brunel [38] proposed that a realistic asynchronous regime is achieved for a particular large-coupling rule, whereby synaptic strengths scale in keeping with the logarithmic size of the network. Both studies consider balanced networks with conductance-based neuronal models, but neither focuses on the role of synchrony, consistent with the asynchronous state hypothesis. The asynchronous state hypothesis is theoretically attractive, because it represents a naturally stable regime of activity in infinite-size, balanced networks of current-based neuronal models [16,17,20,21]. Such neuronal models, however, neglect the voltage dependence of conductances, and it remains unclear whether the asynchronous regime is asymptotically stable for infinite-size, conductance-based network models.

Here, independent of the constraint of network stability, we ask whether biophysically relevant neuronal models can achieve the observed subthreshold variability under realistic levels of input synchrony. To answer this question, we derive exact analytical expressions for the stationary voltage variance of a single conductance-based neuron in response to synchronous shot-noise drives [39,40]. A benefit of shot-noise models compared to diffusion models is to allow for individual synaptic inputs to be temporally separated in distinct impulses, each corresponding to a transient positive conductance fluctuation [41–43]. We develop our shot-noise analysis for a variant of classically considered neuronal models. We call this variant the all-or-none-conductance-based model, for which synaptic activation occurs as an all-or-none process rather than as an exponentially relaxing process. To perform an exact treatment of these models, we develop original probabilistic techniques inspired by Marcus's work on shot-noise-driven dynamics [44,45]. To model shot-noise drives with synchrony, we develop a statistical framework based on the property of input exchangeability, which assumes that no synaptic inputs play a particular role. In this framework, we show that input drives with varying degrees of synchrony can be rigorously modeled via jump processes, while synchrony can be quantitatively related to measures of pairwise spiking correlations.

Our main results are biophysically interpretable formulas for the voltage mean and variance in the limit of instantaneous synapses. Crucially, these formulas explicitly depend on the input numbers, weights, and synchrony and hold without any form of diffusion approximation. This is in contrast with analytical treatments which elaborate on the diffusion and effective-time-constant approximations [37,38,46,47]. We leverage these exact, explicit formulas to determine under which synchrony conditions a neuron can achieve the experimentally observed subthreshold variability. For biophysically relevant synaptic numbers and weights, we find that achieving realistic variability is possible in response to a restricted number of large asynchronous connections, compatible with the dominance of thalamo-cortical projections in the input layers of the visual cortex. However, we find that achieving realistic variability in response to a large number of moderate cortical inputs, as in superficial cortical visual layers, necessitates nonzero input synchrony in amounts that are consistent with the weak levels of measured spiking correlations observed in vivo.

In practice, persistent synchrony may spontaneously emerge in large but finite neural networks, as nonzero correlations are the hallmark of finite-dimensional interacting dynamics. The network structural features responsible for the magnitude of such correlations remain unclear, and we do not address this question here (see Refs. [48,49] for review). The persistence of synchrony is also problematic for theoretical approaches that consider networks in the infinite-size limits. Indeed, our analysis supports that, in the absence of synchrony and for all scalings of the synaptic weights, subthreshold variability must vanish in the limit of arbitrarily large numbers of synapses. This suggests that, independent of any balanced condition, the mean-field dynamics that emerge in infinite-size networks of conductance-based neurons will not exhibit Poisson-like spiking variability, at least in the absence of additional constraints on the network structure or on the biophysical properties of the neurons. In current-based neuronal models, however, variability is not dampened by a conductance-dependent effective time constant. These findings, therefore, challenge the theoretical basis for the asynchronous state in conductance-based neuronal networks.

Our exact analysis, as well as its biophysical interpretations, is possible only at the cost of several caveats: First, we neglect the impact of the spike-generating mechanism (and of the postspiking reset) in shaping the subthreshold variability. Second, we quantify synchrony under the assumption of input exchangeability, that is, for synapses having a typical strength as opposed to being heterogeneous. Third, we consider input drives that implement an instantaneous form of synchrony with temporally precise synaptic coactivations. Fourth, we do not consider slow temporal fluctuations in the mean synaptic drive. Fifth, and perhaps most concerning, we do not account for the stable emergence of a synchronous regime in network models. We argue in the discussion that all the above caveats but the last one can be addressed without impacting our findings. Addressing the last caveat remains an open problem.

For reference, we list in Table I the main notations used in this work. These notations utilize the subscripts $e$ and $i$ to refer to excitation or inhibition, respectively. The notation $e/i$ means that the subscript can be either $e$ or $i$. The notation $ei$ is used to emphasize that a quantity depends jointly on excitation and inhibition.

TABLE I.

Main notations.

| Symbol | Description |
| --- | --- |
| $a_{e/i,1}$ | First-order synaptic efficacies |
| $a_{e/i,2}$ | Second-order synaptic efficacies |
| $a_{e/i,12}$ | Auxiliary second-order synaptic efficacies |
| $b$ | Rate of the driving Poisson process $N$ |
| $b_{e/i}$ | Rate of the excitatory or inhibitory Poisson process $N_{e/i}$ |
| $C$ | Membrane capacitance |
| $c_{ei}$ | Cross-correlation synaptic efficacy |
| $\mathbb{C}[\cdot]$ | Stationary covariance |
| $\mathbb{E}[\cdot]$ | Stationary expectation |
| $\mathbb{E}_{ei}[\cdot]$ | Expectation with respect to the joint distribution $p_{ei}$ or $p_{ei,kl}$ |
| $\mathbb{E}_{e/i}[\cdot]$ | Expectation with respect to the marginal distribution $p_{e/i}$ or $p_{e/i,k}$ |
| $\epsilon = \tau_s/\tau$ | Fast-conductance small parameter |
| $G$ | Passive leak conductance |
| $g_{e/i}$ | Overall excitatory or inhibitory conductance |
| $h_{e/i} = g_{e/i}/C$ | Reduced excitatory or inhibitory conductance |
| $k_{e/i}$ | Number of coactivating excitatory or inhibitory synaptic inputs |
| $K_{e/i}$ | Total number of excitatory or inhibitory synaptic inputs |
| $N$ | Driving Poisson process with rate $b$ |
| $N_{e/i}$ | Excitatory or inhibitory driving Poisson process with rate $b_{e/i}$ |
| $p_{ei}$ | Bivariate jump distribution of $(W_e, W_i)$ |
| $p_{e/i}$ | Marginal jump distribution of $W_{e/i}$ |
| $p_{ei,kl}$ | Bivariate distribution for the numbers of coactivating synapses $(k_e, k_i)$ |
| $p_{e/i,k}$ | Marginal synaptic count distribution |
| $r_{e/i}$ | Individual excitatory or inhibitory synaptic rate |
| $\rho_{ei}$ | Spiking correlation between excitatory and inhibitory inputs |
| $\rho_{e/i}$ | Spiking correlation within excitatory or inhibitory inputs |
| $\tau = C/G$ | Passive membrane time constant |
| $\tau_s$ | Synaptic time constant |
| $\mathbb{V}[\cdot]$ | Stationary variance |
| $W_{e/i}$ | Excitatory or inhibitory random jumps |
| $V_{e/i}$ | Excitatory or inhibitory reversal potentials |
| $w_{e/i}$ | Typical value for excitatory or inhibitory synaptic weights |
| $X_k$ | Binary variable indicating the activation of excitatory synapse $k$ |
| $Y_l$ | Binary variable indicating the activation of inhibitory synapse $l$ |
| $Z$ | Driving compound Poisson process with base rate $b$ and jump distribution $p_{ei}$ |

II. STOCHASTIC MODELING AND ANALYSIS

A. All-or-none-conductance-based neurons

We consider the subthreshold dynamics of an original neuronal model, which we call the all-or-none-conductance-based (AONCB) model. In this model, the membrane voltage $V$ obeys the first-order stochastic differential equation

$$C \dot{V} = G (V_L - V) + g_e (V_e - V) + g_i (V_i - V) + I, \tag{1}$$

where randomness arises from the stochastically activating excitatory and inhibitory conductances, respectively denoted by $g_e$ and $g_i$ [see Fig. 2(a)]. These conductances result from the action of $K_e$ excitatory and $K_i$ inhibitory synapses: $g_e(t) = \sum_{k=1}^{K_e} g_{e,k}(t)$ and $g_i(t) = \sum_{l=1}^{K_i} g_{i,l}(t)$. In the absence of synaptic inputs, i.e., when $g_e = g_i = 0$, and of external current $I$, the voltage exponentially relaxes toward its leak reversal potential $V_L$ with passive time constant $\tau = C/G$, where $C$ denotes the cell’s membrane capacitance and $G$ denotes the cellular passive conductance [50]. In the presence of synaptic inputs, the transient synaptic currents $I_e = g_e (V_e - V)$ and $I_i = g_i (V_i - V)$ cause the membrane voltage to fluctuate. Conductance-based models account for the voltage dependence of synaptic currents via the driving forces $V_e - V$ and $V_i - V$, where $V_e$ and $V_i$ denote the excitatory and inhibitory reversal potentials, respectively. Without loss of generality, we assume in the following that $V_L = 0$ and that $V_i < 0 < V_e$.

FIG. 2.

All-or-none-conductance-based models. (a) Electrical diagram of conductance-based model for which the neuronal voltage evolves in response to fluctuations of excitatory and inhibitory conductances and . (b) In all-or-none models, inputs delivered as Poisson processes transiently activate the excitatory and inhibitory conductances and during a finite, nonzero synaptic activation time . Simulation parameters: , and .

We model the spiking activity of the upstream neurons as shot noise [39,40], which can be generically modeled as a $(K_e + K_i)$-dimensional stochastic point process [51,52]. Let us denote by $\{N_{e,k}(t)\}_{1 \leq k \leq K_e}$ its excitatory component and by $\{N_{i,l}(t)\}_{1 \leq l \leq K_i}$ its inhibitory component, where $t$ denotes time and $k$ or $l$ is the neuron index. For each neuron $k$, the process $N_{e,k}(t)$ is specified as the counting process registering the spiking occurrences of neuron $k$ up to time $t$. In other words, $N_{e,k}(t) = \sum_{n \geq 1} \mathbb{1}_{\{T_{e,k,n} \leq t\}}$, where $\{T_{e,k,n}\}_{n \in \mathbb{Z}}$ denotes the full sequence of spiking times of neuron $k$ and where $\mathbb{1}_A$ denotes the indicator function of set $A$. Note that, by convention, we label spikes so that $T_{e,k,0} \leq 0 < T_{e,k,1}$ for all neurons $k$. Given a point-process model for the upstream spiking activity, classical conductance-based models consider that a single input to a synapse causes an instantaneous increase of its conductance, followed by an exponential decay with typical timescale $\tau_s > 0$. Here, we depart from this assumption and consider that the synaptic conductances operate all-or-none with a common activation time still referred to as $\tau_s$. Specifically, we assume that the dynamics of the conductance $g_{e,k}$ follows

$$\tau_s \frac{d g_{e,k}(t)}{dt} = C w_{e,k} \sum_{n} \left( \delta(t - T_{e,k,n}) - \delta(t - T_{e,k,n} - \tau_s) \right), \tag{2}$$

where $w_{e,k} \geq 0$ is the dimensionless synaptic weight. The above equation prescribes that the $n$th spike delivery to synapse $k$ at time $T_{e,k,n}$ is followed by an instantaneous increase of that synapse’s conductance by an amount $C w_{e,k}/\tau_s$ for a period $\tau_s$. Thus, the synaptic response prescribed by Eq. (2) is all-or-none as opposed to being graded as in classical conductance-based models. Moreover, just as in classical models, Eq. (2) allows synapses to multiactivate, thereby neglecting nonlinear synaptic saturation [see Fig. 2(b)].
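To make the all-or-none mechanism concrete, the following Python sketch simulates the subthreshold voltage of a single neuron driven by independent Poisson inputs. It is only an illustration under stated assumptions: the leak reversal potential is set to zero, there is no external current, each activation contributes a rectangular pulse of reduced conductance w/τ_s (conductance divided by capacitance) lasting τ_s, and all synapses of a given type share the same weight. Every parameter value and function name is hypothetical. The voltage is updated with an exponential-Euler step, which is exact while the conductances stay constant within a time step.

```python
import math
import random
from collections import deque


def poisson_times(rate, T, rng):
    """Event times of a homogeneous Poisson process with the given rate on [0, T)."""
    times, t = [], rng.expovariate(rate)
    while t < T:
        times.append(t)
        t += rng.expovariate(rate)
    return times


def simulate_aoncb(T=1.0, dt=1e-4, tau=0.015, tau_s=0.002,
                   K_e=100, K_i=25, r_e=10.0, r_i=10.0,
                   w_e=0.01, w_i=0.04, V_e=60.0, V_i=-10.0, seed=1):
    """Voltage trace (mV, relative to a leak potential of 0) of an all-or-none neuron.

    All default values are illustrative assumptions, not fitted parameters.
    """
    rng = random.Random(seed)
    # Independent Poisson synapses with equal weights pool into one stream of rate K * r.
    exc = deque(poisson_times(K_e * r_e, T, rng))
    inh = deque(poisson_times(K_i * r_i, T, rng))
    on_e, on_i = deque(), deque()  # switch-off times of currently active pulses
    V, trace = 0.0, []
    for step in range(int(round(T / dt))):
        t = step * dt
        for pending, active in ((exc, on_e), (inh, on_i)):
            while pending and pending[0] <= t:   # switch new pulses on
                active.append(pending.popleft() + tau_s)
            while active and active[0] <= t:     # switch expired pulses off
                active.popleft()
        h_e = len(on_e) * w_e / tau_s            # reduced conductances (1/s)
        h_i = len(on_i) * w_i / tau_s
        lam = 1.0 / tau + h_e + h_i              # total relaxation rate
        V_inf = (h_e * V_e + h_i * V_i) / lam    # instantaneous target voltage
        V = V_inf + (V - V_inf) * math.exp(-lam * dt)
        trace.append(V)
    return trace
```

Because each update is a convex combination of the current voltage and a target lying between the two reversal potentials, the simulated voltage remains within [V_i, V_e]. Overlapping pulses simply add up, which mirrors the multiactivation allowed by the model.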

To be complete, AONCB neurons must, in principle, include a spike-generating mechanism. A customary choice is the integrate-and-fire mechanism [53,54]: A neuron emits a spike whenever its voltage $V$ exceeds a threshold value $V_T$ and resets instantaneously to some value $V_R$ afterward. Such a mechanism impacts the neuronal subthreshold voltage dynamics via postspiking reset, which implements a nonlinear form of feedback. However, in this work, we focus on the variability that is generated by fluctuating, possibly synchronous, synaptic inputs. For this reason, we neglect the influence of the spiking reset in our analysis, and, actually, we ignore the spike-generating mechanism altogether. Finally, although our analysis of AONCB neurons applies to positive synaptic activation time $\tau_s > 0$, we discuss our results only in the limit of instantaneous synapses. This corresponds to taking $\tau_s \to 0^+$ while adopting the scaling $g_{e/i} \propto 1/\tau_s$ in order to maintain the charge transfer induced by a synaptic event. We will see that this limiting process preserves the response variability of AONCB neurons.

B. Quantifying the synchrony of exchangeable synaptic inputs

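Our goal here is to introduce a discrete model for synaptic inputs, whereby synchrony can be rigorously quantified. To this end, let us suppose that the neuron under consideration receives inputs from $K_e$ excitatory neurons and $K_i$ inhibitory neurons, chosen from arbitrarily large pools of $M_e \gg K_e$ excitatory neurons and $M_i \gg K_i$ inhibitory neurons. Adopting a discrete-time representation with elementary bin size $\Delta t$, we denote by $\{x_{1,n}, \ldots, x_{K_e,n}, y_{1,n}, \ldots, y_{K_i,n}\}$ in $\{0,1\}^{K_e + K_i}$ the input state within the $n$th bin. Our main simplifying assumption consists in modeling the $M_e$ excitatory inputs and the $M_i$ inhibitory inputs as separately exchangeable random variables $\{X_{1,n}, \ldots, X_{M_e,n}\}$ and $\{Y_{1,n}, \ldots, Y_{M_i,n}\}$ that are distributed identically over $\{0,1\}^{M_e}$ and $\{0,1\}^{M_i}$, respectively, and independently across time. This warrants dropping the dependence on time index $n$. By separately exchangeable, we mean that no subset of excitatory inputs or inhibitory inputs plays a distinct role so that, at all times, the respective distributions of $\{X_1, \ldots, X_{M_e}\}$ and $\{Y_1, \ldots, Y_{M_i}\}$ are independent of the input labeling. In other words, for all permutations $\sigma_e$ of $\{1, \ldots, M_e\}$ and $\sigma_i$ of $\{1, \ldots, M_i\}$, the joint distribution of $\{X_{\sigma_e(k)}\}$ and $\{Y_{\sigma_i(l)}\}$ is identical to that of $\{X_k\}$ and $\{Y_l\}$ [55,56]. By contrast with independent random spiking variables, exchangeable ones can exhibit nonzero correlation structure. By symmetry, this structure is specified by three correlation coefficients:

$$\rho_e = \frac{\mathbb{C}[X_k, X_l]}{\mathbb{V}[X_k]}, \qquad \rho_i = \frac{\mathbb{C}[Y_k, Y_l]}{\mathbb{V}[Y_k]}, \qquad \rho_{ei} = \frac{\mathbb{C}[X_k, Y_l]}{\sqrt{\mathbb{V}[X_k]\,\mathbb{V}[Y_l]}},$$

where $k \neq l$ in the first two coefficients and where $\mathbb{C}[\cdot,\cdot]$ and $\mathbb{V}[\cdot]$ denote the covariance and the variance of the binary variables $X_k$ and $Y_l$, respectively.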

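Interestingly, a more explicit form for $\rho_e$, $\rho_i$, and $\rho_{ei}$ can be obtained in the limit of an infinite-size pool $M_e, M_i \to \infty$. This follows from de Finetti’s theorem [57], which states that the probability of observing a given input configuration for $K_e$ excitatory neurons and $K_i$ inhibitory neurons is given by

$$\mathbb{P}[X_1, \ldots, X_{K_e}, Y_1, \ldots, Y_{K_i}] = \int \prod_{k=1}^{K_e} \theta_e^{X_k} (1 - \theta_e)^{1 - X_k} \prod_{l=1}^{K_i} \theta_i^{Y_l} (1 - \theta_i)^{1 - Y_l} \, dF_{ei}(\theta_e, \theta_i),$$

where $F_{ei}$ is the directing de Finetti measure, defined as a bivariate distribution over the unit square [0, 1] × [0, 1]. In the equation above, the numbers $\theta_e$ and $\theta_i$ represent the (jointly fluctuating) probabilities that an excitatory neuron and an inhibitory neuron spike in a given time bin, respectively. The core message of the de Finetti theorem is that the spiking activity of neurons from infinite exchangeable pools is obtained as a mixture of conditionally independent binomial laws. This mixture is specified by the directing measure $F_{ei}$, which fully parametrizes our synchronous input model. Independent spiking corresponds to choosing $F_{ei}$ as a point-mass measure concentrated on some probabilities $\pi_{e/i} = r_{e/i} \Delta t$, where $r_{e/i}$ denotes the individual spiking rate of a neuron: $dF_{ei}(\theta_e, \theta_i) = \delta(\theta_e - \pi_e)\, \delta(\theta_i - \pi_i)\, d\theta_e\, d\theta_i$ [see Fig. 3(a)]. By contrast, a dispersed directing measure $F_{ei}$ corresponds to the existence of correlations among the inputs [see Fig. 3(b)]. Accordingly, we show in Appendix A that the spiking pairwise correlation $\rho_{e/i}$ takes the explicit form

$$\rho_{e/i} = \frac{\mathbb{V}[\theta_{e/i}]}{\mathbb{E}[\theta_{e/i}] \left( 1 - \mathbb{E}[\theta_{e/i}] \right)}, \tag{3}$$

whereas $\rho_{ei}$, the correlation between excitation and inhibition, is given by

$$\rho_{ei} = \frac{\mathbb{C}[\theta_e, \theta_i]}{\sqrt{\mathbb{E}[\theta_e]\, \mathbb{E}[\theta_i] \left( 1 - \mathbb{E}[\theta_e] \right) \left( 1 - \mathbb{E}[\theta_i] \right)}}. \tag{4}$$

In the above formulas, $\mathbb{E}[\theta_{e/i}]$, $\mathbb{V}[\theta_{e/i}]$, and $\mathbb{C}[\theta_e, \theta_i]$ denote expectation, variance, and covariance of $(\theta_e, \theta_i) \sim F_{ei}$, respectively. Note that these formulas show that nonzero correlations correspond to nonzero variance, as is always the case for dispersed distributions. Independence between excitation and inhibition, for which $\rho_{ei} = 0$, corresponds to a directing measure $F_{ei}$ with product form, i.e., $F_{ei}(\theta_e, \theta_i) = F_e(\theta_e) F_i(\theta_i)$, where $F_e$ and $F_i$ denote the marginal distributions. Alternative forms of the directing measure $F_{ei}$ generally lead to nonzero cross correlation $\rho_{ei}$, which necessarily satisfies $|\rho_{ei}| \leq \sqrt{\rho_e \rho_i}$.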
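The mixture construction can be checked numerically. The sketch below assumes a single (excitatory) population and a beta-distributed spiking probability, draws one shared probability per time bin, lets K inputs spike independently given that probability, and compares the empirical pairwise correlation with the ratio of the variance of the spiking probability to the product mean × (1 − mean). The function names, sample sizes, and parameter values are illustrative assumptions.

```python
import random


def sample_counts(K, alpha, beta, n_bins, seed=0):
    """Number of coactive inputs per bin for K conditionally independent inputs."""
    rng = random.Random(seed)
    counts = []
    for _ in range(n_bins):
        theta = rng.betavariate(alpha, beta)  # spiking probability shared within the bin
        counts.append(sum(rng.random() < theta for _ in range(K)))
    return counts


def empirical_correlation(counts, K):
    """Pairwise correlation of exchangeable binary inputs, estimated from bin counts."""
    n = len(counts)
    p_hat = sum(counts) / (n * K)                                  # estimates E[X_k]
    pair = sum(k * (k - 1) for k in counts) / (n * K * (K - 1))    # estimates E[X_k X_l]
    return (pair - p_hat ** 2) / (p_hat * (1.0 - p_hat))


def theoretical_correlation(alpha, beta):
    """Var[theta] / (E[theta] (1 - E[theta])) for theta ~ Beta(alpha, beta)."""
    mean = alpha / (alpha + beta)
    var = alpha * beta / ((alpha + beta) ** 2 * (alpha + beta + 1.0))
    return var / (mean * (1.0 - mean))


counts = sample_counts(K=20, alpha=1.0, beta=9.0, n_bins=20000, seed=1)
rho_hat = empirical_correlation(counts, K=20)
rho = theoretical_correlation(1.0, 9.0)
```

The estimator relies on exchangeability: the fraction of coactive pairs k(k − 1)/[K(K − 1)] in a bin is an unbiased estimate of the probability that two distinct inputs spike together, so no input labels are needed.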

FIG. 3.

Parametrizing correlations via exchangeability. The activity of exchangeable synaptic inputs collected over consecutive time bins can be represented as {0, 1}-valued array , where if input activates in time bin . Under assumptions of exchangeability, the input spiking correlation is entirely captured by the count statistics of how many inputs coactivate within a given time bin. In the limit , the distribution of the fraction of coactivating inputs coincides with the directing de Finetti measure, which we consider as a parametric choice in our approach. In the absence of correlation, synapses tend to activate in isolation: in (a). In the presence of correlation, synapses tend to coactivate, yielding a disproportionately large synaptic activation event: in (b). Considering the associated cumulative counts specifies discrete-time jump processes that can be generalized to the continuous-time limit, i.e., for time bins of vanishing duration .

In this exchangeable setting, a reasonable parametric choice for the marginals $F_e$ and $F_i$ is given by beta distributions $\mathrm{Beta}(\alpha, \beta)$, where $\alpha$ and $\beta$ denote shape parameters [58]. Practically, this choice is motivated by the ability of beta distributions to efficiently fit correlated spiking data generated by existing algorithms [59]. Formally, this choice is motivated by the fact that beta distributions are conjugate priors for the binomial likelihood functions, so that the resulting probabilistic models can be studied analytically [60–62]. For instance, for $F_e \sim \mathrm{Beta}(\alpha_e, \beta_e)$, the probability that $k$ synapses among the $K_e$ inputs are jointly active within the same time bin follows the beta-binomial distribution

$$P_{e,k} = \binom{K_e}{k} \frac{B(\alpha_e + k, \beta_e + K_e - k)}{B(\alpha_e, \beta_e)}, \tag{5}$$

where $B$ denotes the beta function. Accordingly, the mean number of active excitatory inputs is $\mathbb{E}[k] = K_e \alpha_e / (\alpha_e + \beta_e)$. Utilizing Eq. (3), we also find that $\rho_e = 1/(1 + \alpha_e + \beta_e)$. Note that the above results show that, by changing de Finetti’s measure, one can modify not only the spiking correlation, but also the mean spiking rate.
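As a sanity check on the beta-binomial count statistics, the following sketch evaluates the standard beta-binomial probability mass function with log-gamma functions for numerical stability, then recovers the mean count and the pairwise correlation from the count moments. The helper names and the parameter values (K = 50 inputs, shape parameters 0.5 and 4.5) are illustrative assumptions.

```python
import math


def log_beta(a, b):
    """Logarithm of the beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def beta_binomial_pmf(k, K, alpha, beta):
    """Probability that k of K inputs are coactive for a Beta(alpha, beta) mixture."""
    log_choose = math.lgamma(K + 1) - math.lgamma(k + 1) - math.lgamma(K - k + 1)
    return math.exp(log_choose + log_beta(alpha + k, beta + K - k) - log_beta(alpha, beta))


K, alpha, beta = 50, 0.5, 4.5
pmf = [beta_binomial_pmf(k, K, alpha, beta) for k in range(K + 1)]

total = sum(pmf)
mean_count = sum(k * p for k, p in enumerate(pmf))
# E[k(k-1)] / (K(K-1)) equals the probability that two distinct inputs coactivate.
pair = sum(k * (k - 1) * p for k, p in enumerate(pmf)) / (K * (K - 1))
p_single = mean_count / K
rho_from_counts = (pair - p_single ** 2) / (p_single * (1.0 - p_single))
```

For these values, the mean count matches K α/(α + β) = 5 and the correlation recovered from the counts matches 1/(1 + α + β) = 1/6, which illustrates that the count distribution alone carries the pairwise correlation.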

In the following, we exploit the above analytical results to illustrate that taking the continuous-time limit $\Delta t \to 0^+$ specifies synchronous input drives as compound Poisson processes [51,52]. To do so, we consider both excitation and inhibition, which in a discrete setting corresponds to considering bivariate probability distributions $P_{ei,kl}$ defined over $\{0, \ldots, K_e\} \times \{0, \ldots, K_i\}$. Ideally, these distributions $P_{ei,kl}$ should be such that their marginals $P_{e,k}$ and $P_{i,l}$ are given by Eq. (5). Unfortunately, there does not seem to be a simple low-dimensional parametrization for such distributions $P_{ei,kl}$, except in particular cases. To address this point, at least numerically, one can resort to a variety of methods including copulas [63,64]. For analytical calculations, we consider only two particular cases for which the marginals of $F_{ei}$ are given by the beta distributions: (i) the case of maximum positive correlation for which $\theta_e = \theta_i$, i.e., $dF_{ei}(\theta_e, \theta_i) = \delta(\theta_e - \theta_i)\, dF(\theta_e)\, d\theta_i$ with $F_e = F_i = F$, and (ii) the case of zero correlation for which $\theta_e$ and $\theta_i$ are independent, i.e., $F_{ei}(\theta_e, \theta_i) = F_e(\theta_e) F_i(\theta_i)$.

C. Synchronous synaptic drives as compound Poisson processes

Under the assumption of input exchangeability and given typical excitatory and inhibitory synaptic weights $w_{e/i}$, the overall synaptic drive to a neuron is determined by $(k_e, k_i)$, the numbers of active excitatory and inhibitory inputs at each discrete time step. As AONCB dynamics unfolds in continuous time, we need to consider this discrete drive in the continuous-time limit as well, i.e., for vanishing time bins $\Delta t \to 0^+$. When $\Delta t \to 0^+$, we show in Appendix B that the overall synaptic drive specifies a compound Poisson process $Z$ with bivariate jumps $(W_e, W_i)$. Specifically, we have

$$Z(t) = \left( \sum_{n=1}^{N(t)} W_{e,n}, \; \sum_{n=1}^{N(t)} W_{i,n} \right), \tag{6}$$

where $(W_{e,n}, W_{i,n})$ are i.i.d. samples with bivariate distribution denoted by $p_{ei}$ and where the overall driving Poisson process $N$ registers the number of synaptic events without multiple counts (see Fig. 4). By synaptic events, we mean those times at which at least one excitatory synapse or one inhibitory synapse activates. We say that $N$ registers these events without multiple counts, as it counts one event independent of the number of possibly coactivating synapses. Similarly, we denote by $N_e$ and $N_i$ the counting processes registering synaptic excitatory events and synaptic inhibitory events alone, respectively. These processes $N_e$ and $N_i$ are Poisson processes that are correlated in the presence of synchrony, as both $N_e$ and $N_i$ may register the same event. Note that this implies that $\max(N_e(t), N_i(t)) \leq N(t) \leq N_e(t) + N_i(t)$. More generally, denoting by $b$ and $b_{e/i}$ the rates of $N$ and $N_{e/i}$, respectively, the presence of synchrony implies that $\max(b_e, b_i) \leq b \leq b_e + b_i$ and $r_{e/i} \leq b_{e/i} \leq K_{e/i} r_{e/i}$, where $r_{e/i}$ is the typical activation rate of a single synapse.

FIG. 4.

Limit compound Poisson process with excitation and inhibition. (a) Under assumption of partial exchangeability, synaptic inputs can be distinguished only by the fact that they are either excitatory or inhibitory, which is marked by being colored in red or blue, respectively, in the discrete representation of correlated synaptic inputs with bin size . Accordingly, considering excitation and inhibition separately specifies two associated input-count processes and two cumulative counting processes. For nonzero spiking correlation , these processes are themselves correlated as captured by the joint distribution of excitatory and inhibitory input counts (center) and by the joint distribution of excitatory and inhibitory jumps (right). (b) The input count distribution is a finite-size approximation of the bivariate directing de Finetti measure , which we consider as a parameter as usual. For a smaller bin size , this distribution concentrates in (0,0), as an increasing proportion of time bins does not register any synaptic events, be they excitatory or inhibitory. In the presence of correlation, however, the conditioned jump distribution remains correlated but also dispersed. (c) In the limit , the input-count distribution is concentrated in (0,0), consistent with the fact that the average number of synaptic activations remains constant while the number of bins diverges. By contrast, the distribution of synaptic event size conditioned to distinct from (0,0) converges toward a well-defined distribution: . This distribution characterizes the jumps of a bivariate compound Poisson process, obtained as the limit of the cumulative count process when considering .

For simplicity, we explain how to obtain such limit compound Poisson processes by reasoning on the excitatory inputs alone. To this end, let us denote the marginal jump distribution of $W_e$ as $p_e$. Given a fixed typical synaptic weight $w_e$, the jumps are quantized as $W_e = k w_e$, with $k$ distributed on $\{1, \ldots, K_e\}$, as by convention jumps cannot have zero size. These jumps are naturally defined in the discrete setting, i.e., with $\Delta t > 0$, and their discrete distribution is given via conditioning as $P_{e,k}/(1 - P_{e,0})$. For beta distributed marginals $F_e$, we show in Appendix B that considering $\Delta t \to 0^+$ yields the jump distribution

$$p_{e,k} = \lim_{\Delta t \to 0^+} \frac{P_{e,k}}{1 - P_{e,0}} = \binom{K_e}{k} \frac{B(k, \beta_e + K_e - k)}{\psi(\beta_e + K_e) - \psi(\beta_e)}, \tag{7}$$

where $\psi$ denotes the digamma function. In the following, we explicitly index discrete count distributions, e.g., $p_{e,k}$, to distinguish them from the corresponding jump distributions, i.e., $p_e$. Equation (7) follows from observing that the probability to find a spike within a bin is $\mathbb{E}[X_k] = \alpha_e/(\alpha_e + \beta_e) = r_e \Delta t$, so that for fixed excitatory spiking rate $r_e$, $\alpha_e \to 0^+$ when $\Delta t \to 0^+$. As a result, the continuous-time spiking correlation is $\rho_e = 1/(1 + \beta_e)$, so that we can interpret $\beta_e$ as a parameter controlling correlations. More generally, we show in Appendix C that the limit correlation $\rho_e$ depends only on the count distribution $p_{e,k}$ via

$$\rho_e = \frac{\mathbb{E}_e[k(k-1)]}{\mathbb{E}_e[k]\,(K_e - 1)}, \tag{8}$$

where $\mathbb{E}_e[\cdot]$ denotes expectations with respect to $p_{e,k}$. This shows that zero spiking correlation corresponds to single synaptic activations, i.e., to an input drive modeled as a Poisson process, as opposed to a compound Poisson process. For Poisson-process models, the overall rate of synaptic events is necessarily equal to the sum of the individual spiking rates: $b_e = K_e r_e$. This is no longer the case in the presence of synchronous spiking, when nonzero input correlation $\rho_e > 0$ arises from coincidental synaptic activations. Indeed, as the population spiking rate is conserved when $\Delta t \to 0^+$, the rate of excitatory synaptic events $b_e$ governing $N_e$ satisfies $K_e r_e = b_e \mathbb{E}_e[k]$ so that

$$b_e = r_e \beta_e \left( \psi(\beta_e + K_e) - \psi(\beta_e) \right). \tag{9}$$

Let us reiterate for clarity that, if $k$ synapses activate synchronously, this counts as only one synaptic event, which can come in variable size $k$. Consistently, we have, in general, $r_e \leq b_e \leq K_e r_e$. When $\beta_e \to 0$, we have perfect synchrony with $\rho_e = 1$ and $b_e \to r_e$, whereas the independent spiking regime with $\rho_e = 0$ is attained for $\beta_e \to \infty$, for which we have $b_e \to K_e r_e$.
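The limit jump statistics can be explored numerically. The sketch below assumes that, for beta-distributed marginals with vanishing first shape parameter, the probability of a synaptic event of size k among K inputs is proportional to binom(K, k) B(k, β + K − k), with normalization ψ(β + K) − ψ(β); this difference is evaluated exactly as a finite sum through the recurrence ψ(x + 1) = ψ(x) + 1/x. It then evaluates the limit correlation as E[k(k − 1)]/(E[k](K − 1)) and the event rate from conservation of the population rate, K r = b E[k]. The function names and the values K = 100, β = 9, r = 10 Hz are illustrative assumptions.

```python
import math


def digamma_difference(beta, K):
    """psi(beta + K) - psi(beta), via the recurrence psi(x + 1) = psi(x) + 1/x."""
    return sum(1.0 / (beta + j) for j in range(K))


def jump_count_pmf(K, beta):
    """Probability of an event of size k = 1..K in the vanishing-bin limit."""
    norm = digamma_difference(beta, K)
    pmf = {}
    for k in range(1, K + 1):
        log_choose = math.lgamma(K + 1) - math.lgamma(k + 1) - math.lgamma(K - k + 1)
        log_beta_fn = math.lgamma(k) + math.lgamma(beta + K - k) - math.lgamma(beta + K)
        pmf[k] = math.exp(log_choose + log_beta_fn) / norm
    return pmf


def limit_correlation(pmf, K):
    """Pairwise correlation E[k(k-1)] / (E[k] (K - 1)) computed from event sizes."""
    m1 = sum(k * p for k, p in pmf.items())
    m2 = sum(k * (k - 1) * p for k, p in pmf.items())
    return m2 / (m1 * (K - 1))


def event_rate(pmf, K, r):
    """Rate of synaptic events b such that K r = b E[k]."""
    return K * r / sum(k * p for k, p in pmf.items())


K, beta, r = 100, 9.0, 10.0
pmf = jump_count_pmf(K, beta)
rho = limit_correlation(pmf, K)   # expected to match 1 / (1 + beta)
b = event_rate(pmf, K, r)         # expected to match r * beta * (psi(beta + K) - psi(beta))
```

In this example, the event rate falls between the single-synapse rate r and the pooled rate K r, as expected when several synapses can share one event.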

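It is possible to generalize the above construction to mixed excitation and inhibition, but a closed-form treatment is possible only for special cases. For the independent case (ii), in the limit $\Delta t \to 0^+$, jumps are either excitatory alone or inhibitory alone; i.e., the jump distribution $p_{ei}$ has support on $\{1, \ldots, K_e\} \times \{0\} \cup \{0\} \times \{1, \ldots, K_i\}$. Accordingly, we show in Appendix D that

$$p_{ei,kl} = \frac{b_e}{b_e + b_i}\, p_{e,k}\, \mathbb{1}_{\{l = 0\}} + \frac{b_i}{b_e + b_i}\, p_{i,l}\, \mathbb{1}_{\{k = 0\}}, \tag{10}$$

where $p_{e/i,k}$ and $b_{e/i}$ are specified in Eqs. (7) and (9) by the parameters $\beta_{e/i}$ and $K_{e/i}$, respectively. This shows that, as expected, in the absence of synchrony between excitation and inhibition the driving compound Poisson process $Z$ with bidimensional jump is obtained as the direct sum of two independent compound Poisson processes. In particular, the driving processes are such that $N = N_e + N_i$, with rates satisfying $b = b_e + b_i$. By contrast, for the maximally correlated case (i), for which $\theta_e = \theta_i$ implies $r_e = r_i = r$ and $\beta_e = \beta_i = \beta$, we show in Appendix D that the jumps are given as $(W_e, W_i) = (k w_e, l w_i)$, with $(k, l)$ distributed on $\{0, \ldots, K_e\} \times \{0, \ldots, K_i\} \setminus \{(0,0)\}$ [see Figs. 4(b) and 4(c)] according to

$$p_{ei,kl} = \binom{K_e}{k} \binom{K_i}{l} \frac{B(k + l, \beta + K_e + K_i - k - l)}{\psi(\beta + K_e + K_i) - \psi(\beta)}. \tag{11}$$

Incidentally, the driving Poisson process $N$ has a rate $b$ determined by adapting Eq. (9):

$$b = r \beta \left( \psi(\beta + K_e + K_i) - \psi(\beta) \right),$$

for which one can check that $r \leq b \leq (K_e + K_i)\, r$.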

All the closed-form results so far have been derived for a synchrony parametrization in terms of beta distributions. There are other possible parametrizations, and these would lead to different count distributions but without known closed form. To address this limitation, all our results in the following hold for arbitrary distributions of the jump sizes on the positive orthant . In particular, our results are given in terms of expectations with respect to , still denoted by . Nonzero correlation between excitation and inhibition corresponds to those choices of for which with nonzero probability, which indicates the presence of synchronous excitatory and inhibitory inputs. Note that this modeling setting restricts nonzero correlations to be positive, which is an inherent limitation of our synchrony-based approach. When considering an arbitrary , the main caveat is understanding how such a distribution may correspond to given input numbers and spiking correlations and . For this reason, we always consider that and follow beta-distributed marginal distributions when discussing the roles of , and in shaping the voltage response of a neuron. In that respect, we show in Appendix C that the coefficient can always be deduced from the knowledge of a discrete count distribution on via

where the expectations are with respect to .

D. Instantaneous synapses and Marcus integrals

We are now in a position to formulate the mathematical problem at stake within the framework developed by Marcus to study shot-noise-driven systems [44,45]. Our goal is quantifying the subthreshold variability of an AONCB neuron subjected to synchronous inputs. Mathematically, this amounts to computing the first two moments of the stationary process solving the following stochastic dynamics:

| (12) |

where are constants and where the reduced conductances and follow stochastic processes defined in terms of a compound Poisson process with bivariate jumps. Formally, the compound Poisson process is specified by , the rate of its governing Poisson process , and by the joint distribution of its jumps . Each point of the Poisson process represents a synaptic activation time , where is in with the convention that . At all these times, the synaptic input sizes are drawn as i.i.d. random variables in with probability distribution .

At this point, it is important to observe that the driving process is distinct from the conductance process . The latter process is formally defined for AONCB neurons as

where the dimensionless parameter is the ratio of the duration of synaptic activation to the passive membrane time constant. Note that the amplitude of scales in inverse proportion to in order to maintain the overall charge transfer during synaptic events of varying durations. Such a scaling ensures that the voltage response of AONCB neurons has finite, nonzero variability for small or vanishing synaptic time constant, i.e., for (see Fig. 5). The simplifying limit of instantaneous synapses is obtained for , which corresponds to infinitely fast synaptic activation. By virtue of its construction, the conductance process becomes a shot noise in the limit , which can be formally identified with . This is consistent with the definition of shot-noise processes as temporal derivatives of compound Poisson processes, i.e., as collections of randomly weighted Dirac-delta masses.

FIG. 5.

Limit of instantaneous synapses. The voltage trace and the empirical voltage distribution are only marginally altered by taking the limit for short synaptic time constants: in (a) and in (b). In both (a) and (b), we consider the same compound Poisson-process drive with , and , and the resulting fluctuating voltage is simulated via a standard Euler discretization scheme. The corresponding empirical conductance and voltage distributions are shown on the right. The latter voltage distribution asymptotically determines the stationary moments of .

Because of their high degree of idealization, shot-noise models are often amenable to exact stochastic analysis, albeit with some caveats. For equations akin to Eq. (12) in the limit of instantaneous synapses, such a caveat follows from the multiplicative nature of the conductance shot noise . In principle, one might expect to solve Eq. (12) with shot-noise drive via stochastic calculus, as for diffusion-based drive. This would involve interpreting the stochastic integral representations of solutions in terms of Stratonovich representations [65]. However, Stratonovich calculus is not well defined for shot-noise drives [66]. To remedy this point, Marcus has proposed to study stochastic equations subjected to regularized versions of shot noises, whose regularity is controlled by a nonnegative parameter [44,45]. For , the dynamical equations admit classical solutions, whereas the shot-noise-driven regime is recovered in the limit . The hope is to be able to characterize analytically the shot-noise-driven solution, or at least some of its moments, by considering regular solutions in the limit . We choose to refer to the control parameter as by design in the above. This is because AONCB models represent Marcus-type regularizations that are amenable to analysis in the limit of instantaneous synapses, i.e., when , for which the conductance process converges toward a form of shot noise.

The Marcus interpretation of stochastic integration has practical implications for numerical simulations with shot noise [41]. According to this interpretation, shot-noise-driven solutions are conceived as limits of regularized solutions for which standard numerical schemes apply. Correspondingly, shot-noise-driven solutions to Eq. (12) can be simulated via a limit numerical scheme. We derive such a limit scheme in Appendix E. Specifically, we show that the voltage of shot-noise-driven AONCB neurons exponentially relaxes toward the leak reversal potential , except when subjected to synaptic impulses at times . At these times, the voltage updates discontinuously according to , where the jumps are given in Appendix E via the Marcus rule

| (13) |

Observe that the above Marcus rule directly implies that no jump can cause the voltage to exit , the allowed range of variation for . Moreover, note that this rule specifies an exact event-driven simulation scheme given knowledge of the synaptic activation times and sizes [67]. We adopt the above Marcus-type numerical scheme in all the simulations that involve instantaneous synapses.
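The event-driven scheme described above can be sketched in Python as follows. This is a minimal sketch under stated assumptions rather than the code used for our simulations: the function names are hypothetical, and the jump rule is the one obtained by integrating a constant conductance pulse of total dimensionless size `w_e + w_i`, namely a relaxation toward the conductance-weighted average of the excitatory and inhibitory reversal potentials by a fraction `1 - exp(-(w_e + w_i))`. This form is our assumption for the Marcus rule and is consistent with the confinement of the voltage noted above.

```python
import math
import random

def marcus_jump(v, w_e, w_i, v_e, v_i):
    """Assumed Marcus-type jump for an event of sizes (w_e, w_i) arriving at voltage v."""
    w = w_e + w_i
    if w == 0.0:
        return 0.0
    target = (w_e * v_e + w_i * v_i) / w      # weighted average of reversal potentials
    return (target - v) * (1.0 - math.exp(-w))

def simulate(rate, tau, v_e, v_i, draw_jump, t_end, v0=0.0, v_leak=0.0, seed=0):
    """Event-driven simulation: exact leak relaxation between Poisson events, jumps at events.

    rate      -- rate of the governing Poisson process (events per unit time)
    draw_jump -- callable taking a random.Random and returning a pair (w_e, w_i)
    Returns a list of (event time, voltage just after the event).
    """
    rng = random.Random(seed)
    t, v = 0.0, v0
    trace = []
    while True:
        dt = rng.expovariate(rate)            # exponential waiting time to next event
        t += dt
        if t > t_end:
            break
        v = v_leak + (v - v_leak) * math.exp(-dt / tau)   # passive relaxation toward leak
        w_e, w_i = draw_jump(rng)
        v += marcus_jump(v, w_e, w_i, v_e, v_i)
        trace.append((t, v))
    return trace

# Illustrative values only (not taken from the text): voltages in mV relative to rest,
# times in seconds, and a drive mixing excitatory-only and inhibitory-only events.
def example_jump(rng):
    return (0.01, 0.0) if rng.random() < 0.8 else (0.0, 0.04)

trace = simulate(rate=1250.0, tau=0.015, v_e=60.0, v_i=-10.0,
                 draw_jump=example_jump, t_end=2.0)
```

Because the relaxation between events is integrated exactly, the scheme has no time-step error; the only inputs are the event times and sizes.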

E. Moment calculations

When driven by stationary compound Poisson processes, AONCB neurons exhibit ergodic voltage dynamics. As a result, the typical voltage state, obtained by sampling the voltage at a random time, is captured by a unique stationary distribution. Our main analytical results, which we give here, consist in exact formulas for the first two voltage moments with respect to that stationary distribution. Specifically, we derive the stationary mean voltage Eq. (14) in Appendix F and the stationary voltage variance Eq. (16) in Appendix G. These results are obtained by a probabilistic treatment exploiting the properties of compound Poisson processes within Marcus’ framework. This treatment yields compact, interpretable formulas in the limit of instantaneous synapses . Readers who are interested in the method of derivation for these results are encouraged to go over the calculations presented in Appendixes F–L.

In the limit of instantaneous synapses, , we find that the stationary voltage mean is

| (14) |

where we define the first-order synaptic efficacies as

| (15) |

Note the refers to the expectation with respect to the jump distribution in Eq. (15), whereas refers to the stationary expectation in Eq. (14). Equation (14) has the same form as for deterministic dynamics with constant conductances, in the sense that the mean voltage is a weighted sum of the reversal potentials , and . One can check that, for such deterministic dynamics, the synaptic efficacies involved in the stationary mean simply read . Thus, the impact of synaptic variability, and, in particular, of synchrony, entirely lies in the definition of the efficacies in Eq. (15). In the absence of synchrony, one can check that accounting for the shot-noise nature of the synaptic conductances leads to synaptic efficacies under exponential form: . In turn, accounting for input synchrony leads to synaptic efficacies expressed as expectation of these exponential forms in Eq. (15), consistent with the stochastic nature of the conductance jumps (). Our other main result, the formula for the stationary voltage variance, involves synaptic efficacies of similar form. Specifically, we find that

| (16) |

where we define the second-order synaptic efficacies as

| (17) |

Equation (16) also prominently features auxiliary second-order efficacies defined by . Owing to their prominent role, we also mention their explicit form:

| (18) |

The other quantity of interest featuring in Eq. (16) is the cross-correlation coefficient

| (19) |

which entirely captures the (non-negative) correlation between excitatory and inhibitory inputs and shall be seen as an efficacy as well.

In conclusion, let us stress that, for AONCB models, establishing the above exact expressions does not require any approximation other than taking the limit of instantaneous synapses. In particular, we neither resort to any diffusion approximations [37,38] nor invoke the effective-time-constant approximation [41–43]. We give in Appendix L an alternative factorized form for to justify the non-negativity of expression (16). In Fig. 6, we illustrate the excellent agreement of the analytically derived expressions (14) and (16) with numerical estimates obtained via Monte Carlo simulations of the AONCB dynamics for various input synchrony conditions. Discussing and interpreting quantitatively Eqs. (14) and (16) within a biophysically relevant context is the main focus of the remainder of this work.
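For readers who wish to evaluate the stationary moments numerically, the following Python sketch computes a mean and a variance from a discrete jump distribution. It is a sketch under stated assumptions, not a definitive implementation: the function name and argument layout are hypothetical, and the expressions coded here are those obtained by balancing the passive relaxation against jumps that move the voltage toward the conductance-weighted average of the reversal potentials by a fraction `1 - exp(-(w_e + w_i))`. They are meant to mirror the structure of Eqs. (14)–(19) (first- and second-order efficacies, auxiliary efficacies as their difference, and a cross-correlation efficacy) and should be checked against those equations before use.

```python
import math

def stationary_moments(jumps, rate, tau, v_e, v_i, i_over_g=0.0):
    """Stationary voltage mean and variance for instantaneous synapses (assumed forms).

    jumps    -- list of (probability, w_e, w_i) describing the jump distribution
    rate     -- rate of the governing Poisson process
    tau      -- passive membrane time constant (same time unit as 1 / rate)
    i_over_g -- injected current divided by leak conductance (voltage units)
    """
    a_e1 = a_i1 = a_e2 = a_i2 = c_ei = 0.0
    for prob, w_e, w_i in jumps:
        w = w_e + w_i
        if w <= 0.0:
            continue                                  # empty events do not move the voltage
        x_e, x_i = w_e / w, w_i / w                   # excitatory and inhibitory fractions
        first = 1.0 - math.exp(-w)                    # first-order exponential form
        second = 0.5 * (1.0 - math.exp(-2.0 * w))     # second-order exponential form
        scale = prob * rate * tau
        a_e1 += scale * x_e * first
        a_i1 += scale * x_i * first
        a_e2 += scale * x_e * second
        a_i2 += scale * x_i * second
        c_ei += scale * 0.5 * x_e * x_i * first ** 2  # nonzero only for joint events
    mean = (a_e1 * v_e + a_i1 * v_i + i_over_g) / (1.0 + a_e1 + a_i1)
    a_e12, a_i12 = a_e1 - a_e2, a_i1 - a_i2           # auxiliary second-order efficacies
    var = (a_e12 * (v_e - mean) ** 2 + a_i12 * (v_i - mean) ** 2
           - c_ei * (v_e - v_i) ** 2) / (1.0 + a_e2 + a_i2)
    return mean, var

# Illustrative values only (not taken from the text): excitation alone, fixed jump size.
mean_v, var_v = stationary_moments([(1.0, 0.01, 0.0)], rate=1000.0, tau=0.015,
                                   v_e=60.0, v_i=-10.0)
```

Passing the empirical distribution of jump sizes used in a simulation makes it possible to compare these values with Monte Carlo estimates of the voltage mean and variance.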

FIG. 6.

Comparison of simulation and theory. (a) Examples of voltage traces obtained via Monte Carlo simulations of an AONCB neuron for various types of synchrony-based input correlations: uncorrelated (uncorr, yellow), within correlation and (within corr, cyan), and within and across correlation (across corr, magenta). (b) Comparison of the analytically derived expressions (14) and (16) with numerical estimates obtained via Monte Carlo simulations for the synchrony conditions considered in (a).

III. COMPARISON WITH EXPERIMENTAL DATA

A. Experimental measurements and parameter estimations

Cortical activity typically exhibits a high degree of variability in response to identical stimuli [68,69], with individual neuronal spiking exhibiting Poissonian characteristics [3,70]. Such variability is striking, because neurons are thought to typically receive a large number (≃10⁴) of synaptic contacts [13]. As a result, in the absence of correlations, neuronal variability should average out, leading to quasideterministic neuronal voltage dynamics [71]. To explain how variability seemingly defeats averaging in large neural networks, it has been proposed that neurons operate in a special regime, whereby inhibitory and excitatory drive nearly cancel one another [16,17,19–21]. In such balanced networks, the voltage fluctuations become the main determinant of the dynamics, yielding a Poisson-like spiking activity [16,17,19–21]. However, depending upon the tightness of this balance, networks can exhibit distinct dynamical regimes with varying degrees of synchrony [18].

In the following, we exploit the analytical framework of AONCB neurons to argue that the asynchronous picture predicts voltage fluctuations that are an order of magnitude smaller than experimental observations [1,26–28]. Such observations indicate that the variability of the neuronal membrane voltage exhibits typical variance values of ≃4–9 mV². Then, we claim that achieving such variability requires input synchrony within the setting of AONCB neurons. Experimental estimates of the spiking correlations are typically thought of as weak, with coefficients ranging from 0.01 to 0.04 [10–12]. Such weak values do not warrant the neglect of correlations owing to the typically high number of synaptic connections. Actually, if denotes the number of inputs, all assumed to play exchangeable roles, an empirical criterion to decide whether a correlation coefficient is weak is that [32,33]. Assuming the lower estimate of , this criterion is met for inputs, which is well below the typical number of excitatory synapses for cortical neurons. In the following, we consider only the response of AONCB neurons to synchronous drive with biophysically realistic spiking correlations ().

Two key parameters for our argument are the excitatory and inhibitory synaptic weights denoted by and , respectively. Typical values for these weights can be estimated via biophysical considerations within the framework of AONCB neurons. In order to develop these considerations, we assume the values for reversal potentials and for the passive membrane time constant. Given these assumptions, we set the upper range of excitatory synaptic weights so that, when delivered to a neuron close to its resting state, unitary excitatory inputs cause peak membrane fluctuations of ≃0.5 mV at the soma, attained after a peak time of ≃5 ms. Such fluctuations correspond to typically large in vivo synaptic activations of thalamo-cortical projections in rats [72]. Although activations of similar amplitude have been reported for cortico-cortical connections [73,74], recent large-scale in vivo studies have revealed that cortico-cortical excitatory connections are typically much weaker [75,76]. At the same time, these studies have shown that inhibitory synaptic conductances are about fourfold larger than excitatory ones but with similar time-scales. Fitting these values within the framework of AONCB neurons for reveals that the largest possible synaptic inputs correspond to dimensionless weights and . Following Refs. [75,76], we consider that the comparatively moderate cortico-cortical recurrent connections are an order of magnitude weaker than typical thalamo-cortical projections, i.e., and . Such a range is in keeping with estimates used in Ref. [38].

B. The effective-time-constant approximation holds in the asynchronous regime

Let us consider that neuronal inputs have zero (or negligible) correlation structure, which corresponds to assuming that all synapses are driven by independent Poisson processes. Incidentally, excitation and inhibition act independently. Within the framework of AONCB neurons, this latter assumption corresponds to choosing a joint jump distribution of the form

where denotes the Dirac delta function so that with probability one. Moreover, and are independently specified via Eq. (9), and the overall rate of synaptic events is purely additive: . Consequently, the cross-correlation efficacy in Eq. (16) vanishes, and the dimensionless efficacies simplify to

Further assuming that individual excitatory and inhibitory synapses act independently leads to considering that and depict the size of individual synaptic inputs, as opposed to aggregate events. This corresponds to taking and in our parametric model based on beta distributions. Then, as intuition suggests, the overall rates of excitation and inhibition activation are recovered as and , where and are the individual spiking rates.

Individual synaptic weights are small in the sense that , which warrants neglecting exponential corrections for the evaluation of the synaptic efficacies, at least in the absence of synchrony-based correlations. Accordingly, we have

as well as symmetric expressions for inhibitory efficacies. Plugging these values into Eq. (16) yields the classical mean-field estimate for the stationary variance:

which is exactly the same expression as that derived via the diffusion and effective-time-constant approximations in Refs. [46,47]. However, observe that the only approximation we made in obtaining the above expression is to neglect exponential corrections due to the relative weakness of biophysically relevant synaptic weights, which we hereafter refer to as the small-weight approximation.
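The small-weight estimate described above can be evaluated with a few lines of Python. This is a minimal sketch under stated assumptions: the function name is hypothetical, the expression coded is our assumed form of the estimate (each population contributes its input number times rate times time constant times squared weight times squared effective driving force, divided by twice the total dimensionless conductance), and all parameter values are illustrative placeholders rather than values quoted from the text.

```python
def async_small_weight_moments(K_e, K_i, r_e, r_i, w_e, w_i, tau, v_e, v_i):
    """Assumed small-weight mean and variance for independent Poisson inputs."""
    g_e = K_e * r_e * tau * w_e          # dimensionless mean excitatory conductance
    g_i = K_i * r_i * tau * w_i          # dimensionless mean inhibitory conductance
    mean = (g_e * v_e + g_i * v_i) / (1.0 + g_e + g_i)
    var = (g_e * w_e * (v_e - mean) ** 2
           + g_i * w_i * (v_i - mean) ** 2) / (2.0 * (1.0 + g_e + g_i))
    return mean, var

# Illustrative comparison at constant aggregate weights K * w (placeholder values):
# fewer large synapses versus ten times more synapses that are ten times weaker.
common = dict(r_e=10.0, r_i=10.0, tau=0.015, v_e=60.0, v_i=-10.0)
mean_large, var_large = async_small_weight_moments(100, 25, w_e=0.01, w_i=0.04, **common)
mean_moderate, var_moderate = async_small_weight_moments(1000, 250, w_e=0.001, w_i=0.004, **common)
```

In this form, holding the aggregate weights fixed leaves the mean unchanged, while the variance scales in proportion to the individual weights.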

C. Asynchronous inputs yield exceedingly small neural variability

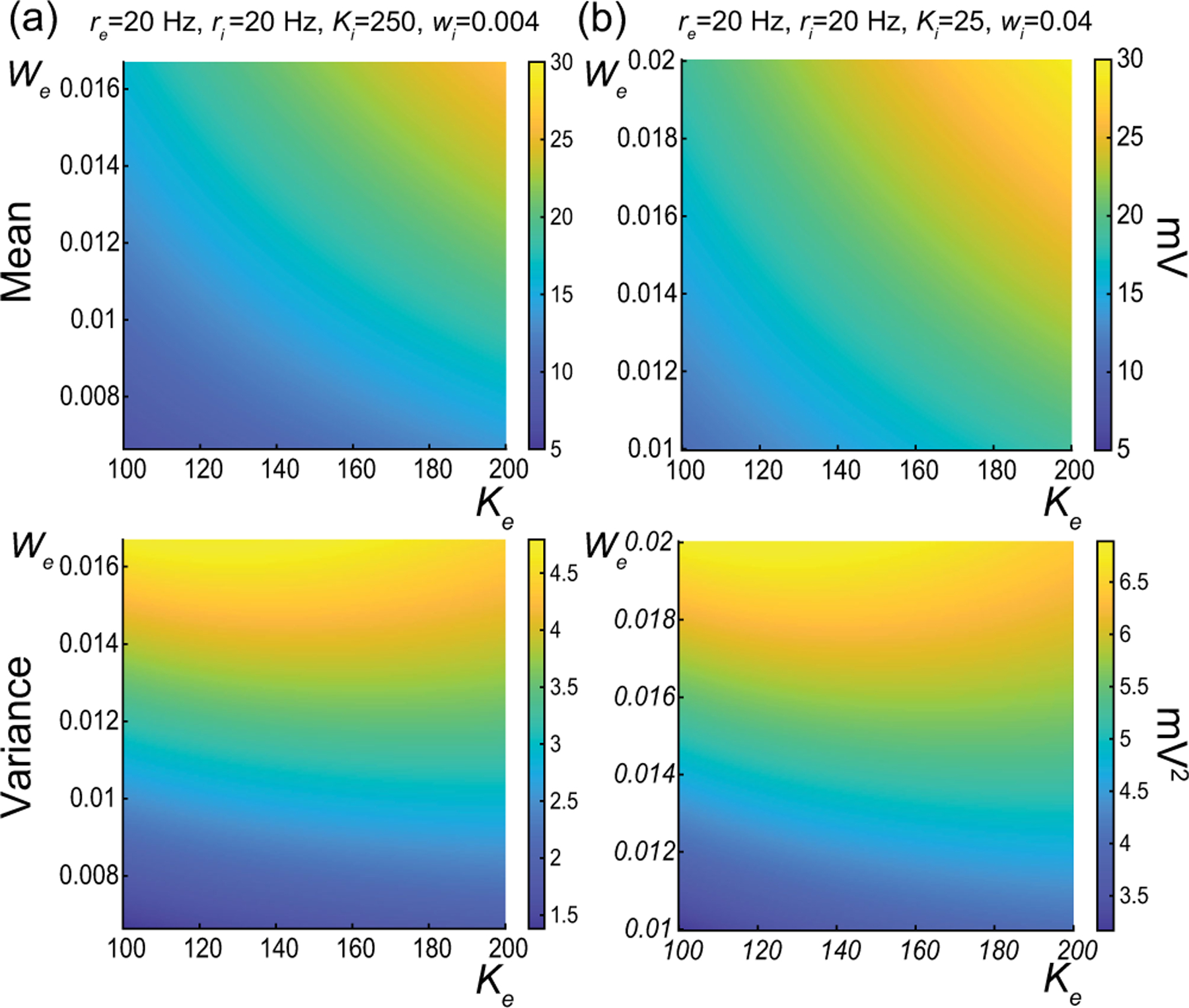

In Fig. 7, we represent the stationary mean and variance as a function of the neuronal spiking input rates and but for distinct values of synaptic weights and . In Fig. 7(a), we consider synaptic weights as large as biophysically admissible based on recent in vivo studies [75,76], i.e., and . By contrast, in Fig. 7(b), we consider moderate synaptic weights and , which yield somatic postsynaptic deflections of typical amplitudes. In both cases, we consider input numbers and such that the mean voltage covers the same biophysical range of values as and varies between 0 and 50 Hz. Given a zero resting potential, we set this biophysical range to be bounded by as typically observed experimentally in electrophysiological recordings. These conditions correspond to constant aggregate weights set to so that

This implies that the AONCB neurons under consideration do not reach the high-conductance regime for which the passive conductance can be neglected, i.e., [77]. Away from the high-conductance regime, the variance magnitude is controlled by the denominator in Eq. (20). Accordingly, the variance in both cases is primarily dependent on the excitatory rate , since, for , the effective excitatory driving force dominates the effective inhibitory driving force . This is because the neuronal voltage typically sits close to the inhibitory reversal potential but far from the excitatory reversal potential . For instance, when close to rest , the ratio of the effective driving forces is fold in favor of excitation. Importantly, the magnitude of the variance is distinct for moderate synapses and for large synapses. This is because, for constant aggregate weights , the ratio of effective driving forces for large and moderate synapses scales in keeping with the ratio of the weights, and so does the ratio of variances away from the high-conductance regime. Thus, we have

and the variance decreases by one order of magnitude from large weights in Fig. 7(a) to moderate weights in Fig. 7(b).

FIG. 7.

Voltage mean and variance in the absence of input correlations. Column (a) depicts the stationary subthreshold response of an AONCB neuron driven by and synapses with large weights and . Column (b) depicts the stationary subthreshold response of an AONCB neuron driven by and synapses with moderate weights and . For synaptic weights , the mean response is identical in (a) and (b). By contrast, for , the variance is at least an order of magnitude smaller than that experimentally observed (4–9 mV²) for moderate weights as shown in (b). Reaching the lower range of realistic neural variability requires driving the cell via large weights as shown in (a).

The above numerical analysis reveals that achieving realistic levels of subthreshold variability for a biophysical mean range of variation requires AONCB neurons to be exclusively driven by large synaptic weights. This is confirmed by considering the voltage mean and variance in Fig. 8 as a function of the number of inputs and of the synaptic weights for a given level of inhibition. We choose this level of inhibition to be set by moderate synapses with in Fig. 8(a) and by large synapses with in Fig. 8(b). As expected, assuming that in the absence of input correlations, the voltage mean depends only on the product , which yields a similar mean range of variations for varying up to 2000 in Fig. 8(a) and up to 200 in Fig. 8(b). Thus, it is possible to achieve the same range of variations as with moderate synaptic weights by using a smaller number of larger synaptic weights. By contrast, the voltage variance achieves realistic levels only for large synaptic weights in both conditions, with for moderate inhibitory background synapses in Fig. 8(a) and for large inhibitory background synapses in Fig. 8(b).

FIG. 8.

Dependence on the number of inputs and the synaptic weights in the absence of correlations. Column (a) depicts the stationary subthreshold response of an AONCB neuron driven by a varying number of excitatory synapses with varying weight at rate , with background inhibitory drive given by with moderate weights and . Column (b) depicts the same as in column (a) but for a background inhibitory drive given by with large weights and . For both conditions, achieving a realistic level of variance, i.e., , while ensuring a biophysically relevant mean range of variation, i.e., , is possible only for large weights: for moderate inhibitory weights in (a) and for large inhibitory weights in (b).

D. Including input correlations yields realistic subthreshold variability

Without synchrony, achieving the experimentally observed variability necessitates an excitatory drive mediated via synaptic weights , which corresponds to the upper bounds of the biophysically admissible range and is in agreement with numerical results presented in Ref. [38]. Albeit possible, this is unrealistic given the wide distribution of amplitudes observed experimentally, whereby the vast majority of synaptic events are small to moderate, at least for cortico-cortical connections [75,76]. In principle, one can remedy this issue by allowing for synchronous activation of, say, synapses with moderate weight , as it amounts to the activation of a single synapse with large weight . A weaker assumption that yields a similar increase in neural variability is to ask for synapses to only tend to synchronize probabilistically, which amounts to requiring to be a random variable with some distribution mass on . This exactly amounts to modeling the input drive via a jump process as presented in Sec. II, with a jump distribution that probabilistically captures this degree of input synchrony. In turn, this distribution corresponds to a precise input correlation via Eq. (8).

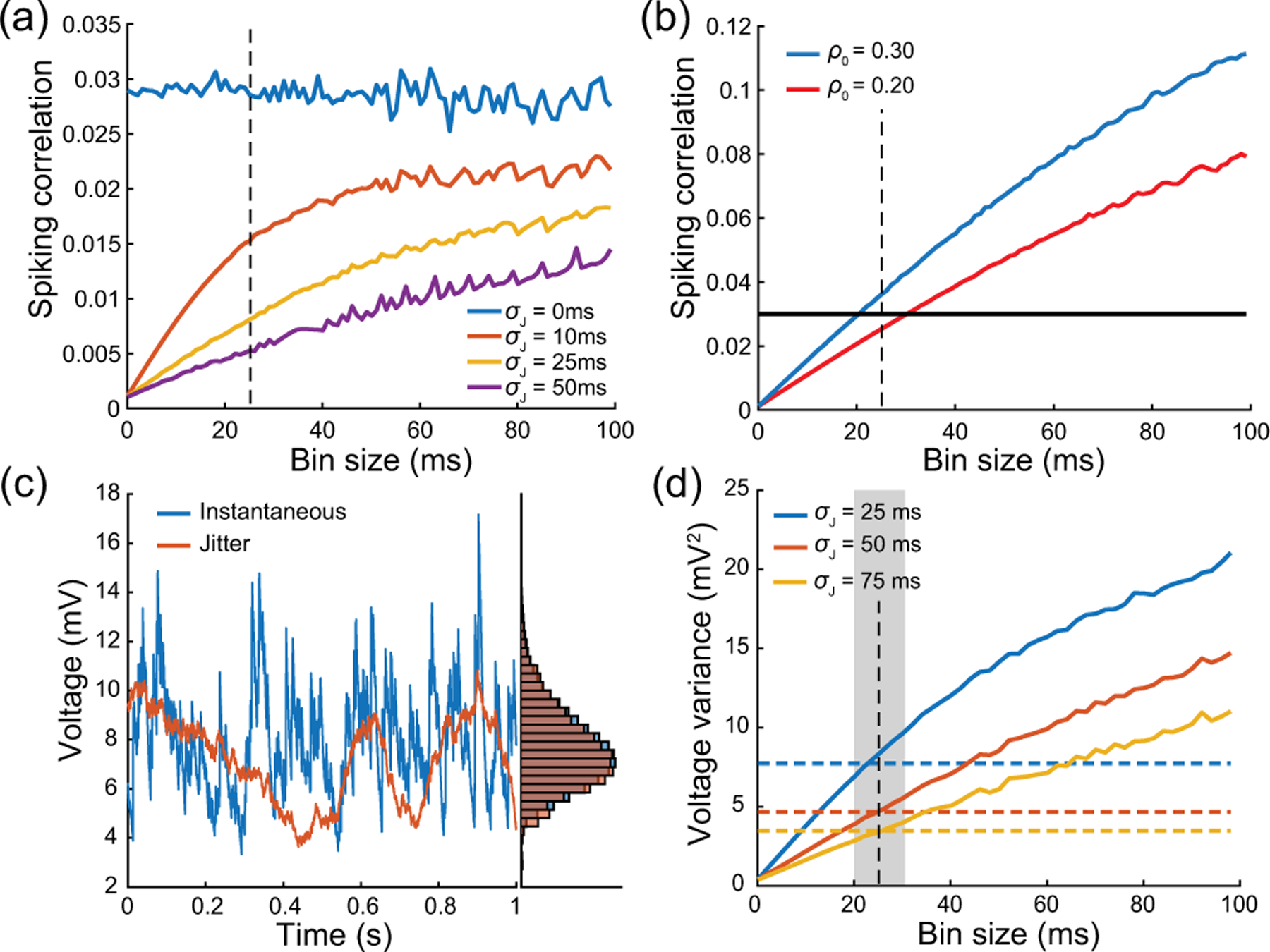

We quantify the impact of nonzero correlation in Fig. 9, where we consider the cases of moderate weights and and large weights and as in Fig. 7 but for . Specifically, we consider an AONCB neuron subjected to two independent beta-binomial-derived compound Poisson process drives with rate and , respectively. These rates and are obtained via Eq. (9) by setting and for given input numbers and and spiking rates and . This ensures that the mean number of synaptic activations and remains constant when compared with Fig. 7. As a result, the mean response of the AONCB neuron is essentially left unchanged by the presence of correlations, with virtually identical biophysical range of variations . This is because, for correlation , the aggregate weights still satisfy with probability close to one given that . Then, in the absence of cross-correlation, i.e., , we still have

as well as by symmetry. However, for both moderate and large synaptic weights, the voltage variance now exhibits slightly larger magnitudes than observed experimentally. This is because we show in Appendix M that in the small-weight approximation

where we recognize as the second-order efficacy in the absence of correlations from Fig. 7. A similar statement holds for . This shows that correlations increase neural variability whenever or , which coincides with our previously given criterion to assess the relative weakness of correlations. Accordingly, when excitation and inhibition act independently, i.e., , we find that the increase in variability due to input synchrony satisfies

| (20) |

The above relation follows from the fact that the small-weight approximation for is independent of correlations and from neglecting the exponential corrections due to the nonzero size of the synaptic weights. The above formula remains valid as long as the correlations and are weak enough so that the aggregate weights satisfy with probability close to one. To inspect the relevance of exponential corrections, we estimate in Appendix N the error incurred by neglecting exponential corrections. Focusing on the case of excitatory inputs, we find that, for correlation coefficients , neglecting exponential corrections incurs less than a 3% error if the number of inputs is smaller than for moderate synaptic weight or than for large synaptic weight .
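The effect of within-population synchrony in the small-weight approximation can be illustrated with the Python sketch below. It is a sketch under stated assumptions, not a definitive implementation: the function name is hypothetical, and the amplification factor `1 + rho * (K - 1)` applied to each second-order contribution is our assumed form, obtained from `E[k^2] = E[k] (1 + rho (K - 1))` together with conservation of the population spiking rate. Parameter values are illustrative placeholders.

```python
def small_weight_variance(K_e, K_i, r_e, r_i, w_e, w_i, rho_e, rho_i, tau, v_e, v_i):
    """Assumed small-weight variance with synchrony within, but not across, populations."""
    g_e = K_e * r_e * tau * w_e
    g_i = K_i * r_i * tau * w_i
    mean = (g_e * v_e + g_i * v_i) / (1.0 + g_e + g_i)   # unaffected by synchrony here
    boost_e = 1.0 + rho_e * (K_e - 1)                    # amplification from co-activations
    boost_i = 1.0 + rho_i * (K_i - 1)
    return (boost_e * g_e * w_e * (v_e - mean) ** 2
            + boost_i * g_i * w_i * (v_i - mean) ** 2) / (2.0 * (1.0 + g_e + g_i))

# Illustrative placeholder values: moderate weights with and without weak synchrony.
params = dict(K_e=1000, K_i=250, r_e=10.0, r_i=10.0, w_e=0.001, w_i=0.004,
              tau=0.015, v_e=60.0, v_i=-10.0)
var_async = small_weight_variance(rho_e=0.0, rho_i=0.0, **params)
var_sync = small_weight_variance(rho_e=0.03, rho_i=0.03, **params)
```

With these placeholder values, a correlation coefficient of a few percent already multiplies the excitatory contribution by a factor of several tens, because the factor grows with the number of inputs.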

FIG. 9.

Voltage mean and variance in the presence of excitatory and inhibitory input correlations but without correlation across excitation and inhibition: . Column (a) depicts the stationary subthreshold response of an AONCB neuron driven by and synapses with large weights and . Column (b) depicts the stationary subthreshold response of an AONCB neuron driven by and synapses with moderate dimensionless weights and . For synaptic weights , the mean response is identical in (a) and (b). By contrast with the case of no correlation in Fig. 7, for and , the variance achieves similar levels as experimentally observed (4–9 mV²) for moderate weights as shown in (b) but slightly larger levels for large weights as shown in (a).

E. Including correlations between excitation and inhibition reduces subthreshold variability

The voltage variance estimated for realistic excitatory and inhibitory correlations, e.g., and , exceeds the typical levels measured in vivo, i.e., 4–9 mV2, for large synaptic weights. The inclusion of correlations between excitation and inhibition, i.e., , can reduce the voltage variance to more realistic levels. We confirm this point in Fig. 10, where we consider the cases of moderate weights and and large weights and as in Fig. 9 but for . Positive cross-correlation between excitation and inhibition only marginally impacts the mean voltage response. This is due to the fact that exponential corrections become slightly more relevant as the presence of cross-correlation leads to larger aggregate weights: with and possibly being jointly positive. By contrast with this marginal impact on the mean response, the voltage variance is significantly reduced when excitation and inhibition are correlated. This is in keeping with the intuition that the net effect of such cross-correlation is to cancel excitatory and inhibitory synaptic inputs with one another, before they can cause voltage fluctuations. The amount by which the voltage variance is reduced can be quantified in the small-weight approximation. In this approximation, we show in Appendix M that the efficacy capturing the impact of cross-correlations simplifies to

Using the above simplified expression and invoking the fact that the small-weight approximation for is independent of correlations, we show a decrease in the amount with

| (21) |

Despite the above reduction in variance, we also show in Appendix M that positive input correlations always cause an overall increase of neural variability:

Note that the reduction of variability due to crucially depends on the instantaneous nature of correlations between excitation and inhibition. To see this, observe that Marcus rule Eq. (13) specifies instantaneous jumps via a weighted average of the reversal potentials and , which represent extreme values for voltage updates. Thus, perfectly synchronous excitation and inhibition updates the voltage toward an intermediary value rather than extreme ones, leading to smaller jumps on average. Such an effect can vanish or even reverse when synchrony breaks down, e.g., when inhibition substantially lags behind excitation.
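The variance reduction caused by synchronous excitation and inhibition can be checked numerically with the Python sketch below. It is a minimal sketch under stated assumptions: the function name is hypothetical, the moment expressions are the assumed forms obtained from jumps that move the voltage toward the conductance-weighted average of the reversal potentials by a fraction `1 - exp(-(w_e + w_i))`, and the parameter values are illustrative placeholders. The two scenarios deliver the same excitatory and inhibitory event rates, either as separate events or as perfectly coincident pairs.

```python
import math

def stationary_variance(jumps, rate, tau, v_e, v_i):
    """Assumed stationary variance; jumps is a list of (probability, w_e, w_i), w_e + w_i > 0."""
    a_e1 = a_i1 = a_e2 = a_i2 = c_ei = 0.0
    for prob, w_e, w_i in jumps:
        w = w_e + w_i
        x_e, x_i = w_e / w, w_i / w
        f1 = 1.0 - math.exp(-w)
        f2 = 0.5 * (1.0 - math.exp(-2.0 * w))
        s = prob * rate * tau
        a_e1 += s * x_e * f1
        a_i1 += s * x_i * f1
        a_e2 += s * x_e * f2
        a_i2 += s * x_i * f2
        c_ei += s * 0.5 * x_e * x_i * f1 ** 2        # cross term, zero unless both are positive
    mean = (a_e1 * v_e + a_i1 * v_i) / (1.0 + a_e1 + a_i1)
    return ((a_e1 - a_e2) * (v_e - mean) ** 2 + (a_i1 - a_i2) * (v_i - mean) ** 2
            - c_ei * (v_e - v_i) ** 2) / (1.0 + a_e2 + a_i2)

# Illustrative placeholder values: each input type arrives at rate B in both scenarios.
B, TAU, V_E, V_I = 250.0, 0.015, 60.0, -10.0
W_E, W_I = 0.01, 0.04
separate = [(0.5, W_E, 0.0), (0.5, 0.0, W_I)]        # events are either excitatory or inhibitory
coincident = [(1.0, W_E, W_I)]                       # every event carries both inputs
var_separate = stationary_variance(separate, 2.0 * B, TAU, V_E, V_I)
var_coincident = stationary_variance(coincident, B, TAU, V_E, V_I)
```

In the coincident scenario each jump targets an intermediate voltage rather than one of the two reversal potentials, which is why the resulting variance is smaller than in the separate scenario.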

FIG. 10.

Voltage mean and variance in the presence of excitatory and inhibitory input correlations and with correlation across excitation and inhibition: . Column (a) depicts the stationary subthreshold response of an AONCB neuron driven by and synapses with large weights and . Column (b) depicts the stationary subthreshold response of an AONCB neuron driven by and synapses with moderate dimensionless weights and . For synaptic weights , the mean response is identical in (a) and (b). Compared with the case of no cross-correlation in Fig. 9, for , the variance is reduced to a biophysical range similar to that experimentally observed (4–9 mV²) for moderate weights as shown in (b), as well as for large weights as shown in (a).

F. Asynchronous scaling limits require fixed-size synaptic weights

Our analysis reveals that the correlations must significantly impact the voltage variability whenever the number of inputs is such that or . Spiking correlations are typically measured in vivo to be larger than 0.01. Therefore, synchrony must shape the response of neurons that are driven by more than 100 active inputs, which is presumably allowed by the typically high number of synaptic contacts (≃10⁴) in the cortex [13]. In practice, we find that synchrony can explain the relatively high level of neural variability observed in the subthreshold neuronal responses. Beyond these practical findings, we predict that input synchrony also has significant theoretical implications with respect to modeling spiking networks. Analytically tractable models for cortical activity are generally obtained by considering spiking networks in the infinite-size limit. Such infinite-size networks are tractable, because the neurons they comprise interact only via population averages, erasing any role for nonzero correlation structure. Distinct mean-field models assume that synaptic weights vanish according to distinct scalings with respect to the number of synapses, i.e., as . In particular, classical mean-field limits consider the scaling , balanced mean-field limits consider the scaling , with , and strong coupling limits consider the scaling , with as well.

Our analysis of AONCB neurons shows that the neglect of synchrony-based correlations is incompatible with the maintenance of neural variability in the infinite-size limit. Indeed, Eq. (20) shows that for any scaling with and , as for all the mean-field limits mentioned above, we have

Thus, in the absence of correlation and independent of the synaptic weight scaling, the subthreshold voltage variance of AONCB neurons must vanish in the limit of arbitrarily large numbers of synapses. We expect such decay of the voltage variability to be characteristic of conductance-based models in the absence of input correlation. Indeed, dimensional analysis suggests that voltage variances for both current-based and conductance-based models are generically obtained via normalization by the reciprocal of the membrane time constant. However, by contrast with current-based models, the reciprocal of the membrane time constant for conductance-based models, i.e., , involves contributions from synaptic conductances. Thus, to ensure nonzero asymptotic variability, the denominator scaling must be balanced by the natural scaling of the Poissonian input drives, i.e., . In the absence of input correlations, this is possible only for fixed-size weights, which is incompatible with any scaling assumptions.
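The decay of the variance with the number of inputs can be illustrated numerically for excitation alone. The Python sketch below is a minimal sketch under stated assumptions: the function name is hypothetical, the variance is our assumed small-weight form for independent Poisson inputs, the weights scale as `omega / K**alpha` with a hypothetical aggregate constant `omega`, and the parameter values are illustrative placeholders.

```python
def async_variance(K, alpha, omega=1.0, r=10.0, tau=0.015, v_e=60.0):
    """Assumed small-weight variance for K independent excitatory inputs with w = omega / K**alpha."""
    w = omega / K ** alpha
    g = K * r * tau * w                       # dimensionless mean conductance
    mean = g * v_e / (1.0 + g)
    return g * w * (v_e - mean) ** 2 / (2.0 * (1.0 + g))

# Variance along increasing input numbers for two illustrative scaling exponents.
sizes = [10 ** 2, 10 ** 4, 10 ** 6]
classical = [async_variance(K, alpha=1.0) for K in sizes]   # weights scale as 1 / K
balanced = [async_variance(K, alpha=0.5) for K in sizes]    # weights scale as 1 / sqrt(K)
```

For the exponent 1 the numerator vanishes while the conductance stays bounded; for the exponent 1/2 the numerator prefactor stays fixed but the growing conductance shrinks both the driving force and the overall normalization. In both cases the variance decreases toward zero.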

G. Synchrony allows for variability-preserving scaling limits with vanishing weights

Infinite-size networks with fixed-size synaptic weights are problematic because they restrict modeled neurons to operate in the high-conductance regime, whereby the intrinsic conductance properties of the cell play no role. Such a regime is biophysically unrealistic, as it implies that the cell would respond to perturbations infinitely fast. We propose to address this issue by considering a new type of variability-preserving limit models obtained for the classical scaling but in the presence of synchrony-based correlations. For simplicity, let us consider our correlated input model with excitation alone in the limit of an arbitrarily large number of inputs . When , the small-weight approximation Eq. (20) suggests that adopting the scaling , where denotes the aggregate synaptic weight, yields a nonzero contribution when as the numerator scales as . It turns out that this choice can be shown to be valid without resorting to any approximations. Indeed, under the classical scaling assumption, we show in Appendix O that the discrete jump distribution weakly converges to the continuous density in the sense that

| (22) |

The above density has infinite mass over owing to its diverging behavior at zero and is referred to as a degenerate beta distribution. In spite of its degenerate nature, it is known that densities of the above form define well-posed processes, the so-called beta processes, which have been studied extensively in the field of nonparametric Bayesian inference [61,62]. These beta processes represent generalizations of our compound Poisson process drives insofar as they allow for a countable infinity of jumps to occur within a finite time window. This is a natural requirement to impose when considering an infinite pool of synchronous synaptic inputs, the overwhelming majority of which have nearly zero amplitude.

The above arguments show that one can define a generalized class of synchronous input models that can serve as the drive of AONCB neurons as well. Such generalizations are obtained as limits of compound Poisson processes and are specified via their Lévy-Khintchine measures, which formalize the role of [78,79]. Our results naturally extend to this generalized class. Concretely, for excitation alone, our results extend by replacing all expectations of the form by integrals with respect to the measure . One can easily check that these expectations, which feature prominently in the definition of the various synaptic efficacies, all remain finite for Lévy-Khintchine measures. In particular, the voltage mean and variance of AONCB neurons remain finite with

Thus, considering the classical scaling limit preserves nonzero subthreshold variability in the infinite-size limit as long as puts mass away from zero, i.e., for . Furthermore, we show in Appendix O that so that voltage variability consistently vanishes in the absence of spiking correlation, for which concentrates at zero, i.e., when .
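The persistence of variability under the classical scaling can be illustrated with a short numerical sketch. It rests on several assumptions that are not stated in this section and should be read as such: the rate of events in which exactly k of K inputs coactivate is taken as r β C(K,k) B(k, β+K−k) with β = 1/ρ − 1 (the continuous-time limit of a beta-binomial model), synapses are instantaneous and excitatory only, and an event involving k inputs of weight w = Ω/K maps V to V e^{-kw} + V_e (1 − e^{-kw}). All names and parameter values are hypothetical.

```python
import math

def jump_rates(K, r, rho):
    """Rates (Hz) of events with exactly k coactive inputs, k = 1..K.

    Assumption: rate_k = r * beta * C(K, k) * B(k, beta + K - k),
    with beta = 1/rho - 1 and 0 < rho < 1 the pairwise spiking correlation.
    """
    beta = 1.0 / rho - 1.0
    rates = []
    for k in range(1, K + 1):
        log_term = (math.lgamma(K + 1) - math.lgamma(k + 1)
                    - math.lgamma(K - k + 1)            # log C(K, k)
                    + math.lgamma(k) + math.lgamma(beta + K - k)
                    - math.lgamma(beta + K))            # log B(k, beta + K - k)
        rates.append(r * beta * math.exp(log_term))
    return rates

def stationary_variance(K, rho, omega=1.0, r=10.0, tau=0.015, V_e=60.0):
    """Stationary voltage variance for weights w = omega / K (classical scaling)."""
    w = omega / K
    rates = jump_rates(K, r, rho)
    # Event-rate-weighted sums of 1-exp(-W), (1-exp(-W))^2, 1-exp(-2W), W = k*w
    s1 = sum(q * -math.expm1(-k * w) for k, q in enumerate(rates, start=1))
    s2 = sum(q * math.expm1(-k * w) ** 2 for k, q in enumerate(rates, start=1))
    s3 = sum(q * -math.expm1(-2 * k * w) for k, q in enumerate(rates, start=1))
    mean = s1 * tau * V_e / (1.0 + s1 * tau)
    return s2 * (V_e - mean) ** 2 / (2.0 / tau + s3)

for rho in (0.001, 0.05):
    for K in (100, 1000, 10000):
        print(f"rho={rho:<6} K={K:>6d}  var={stationary_variance(K, rho):.3f} mV^2")
```

In this sketch, the variance settles to a nonzero value as K grows when ρ is held fixed, and that value shrinks as ρ is reduced, which is the qualitative behavior described above.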

IV. DISCUSSION

A. Synchrony modeling

We have presented a parametric representation of the neuronal drives resulting from a finite number of asynchronous or (weakly) synchronous synaptic inputs. Several parametric statistical models have been proposed for generating correlated spiking activities in a discrete setting [59,80–82]. Such models have been used to analyze the activity of neural populations via Bayesian inference methods [83–85], as well as maximum entropy methods [86,87]. Our approach is not to simulate or analyze complex neural dependencies but rather to derive from first principles the synchronous input models that could drive conductance-based neuronal models. This approach primarily relies on extending the definition of discrete-time correlated spiking models akin to Ref. [59] to the continuous-time setting. To do so, the main tenet of our approach is to realize that input synchrony and spiking correlation represent equivalent measures under the assumption of input exchangeability.

Input exchangeability posits that the driving inputs form a subset of an arbitrarily large pool of exchangeable random variables [55,56]. In particular, this implies that the main determinant of the neuronal drive is the number of active inputs, as opposed to the magnitude of these synaptic inputs. Then, the de Finetti theorem [57] states that the probability of observing a given input configuration can be represented in the discrete setting under an integral form [see Eq. (3)] involving a directing probability measure . Intuitively, represents the probability distribution of the fraction of coactivating inputs at any discrete time. Our approach identifies the directing measure as a free parameter that captures input synchrony. The more dispersed the distribution , the more synchronous the inputs, as previously noted in Refs. [88,89]. Our work elaborates on this observation to develop computationally tractable statistical models for synchronous spiking in the continuous-time limit, i.e., for vanishing discrete time step .

We derive our results using a discrete-time directing measure chosen as a beta distribution , where the parameters and can be related to the individual spiking rate and the spiking correlation via and . For this specific choice of distribution, we are able to construct statistical models of the correlated spiking activity as generalized beta-binomial processes [60], which play an important role in statistical Bayesian inference [61,62]. This construction allows us to fully parametrize the synchronous activity of a finite number of inputs via the jump distribution of a compound Poisson process, which depends explicitly on the spiking correlation. Being continuously indexed in time, stationary compound Poisson processes can naturally serve as the drive to biophysically relevant neuronal models. The idea to utilize compound Poisson processes to model input synchrony was originally proposed in Refs. [90–92] but without constructing these processes as limits of discrete spiking models and without providing an explicit functional form for their jump distributions. More generally, our synchrony modeling can be interpreted as a limit case of the formalism proposed in Refs. [93,94] to model correlated spiking activity via multidimensional Poisson processes.
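The link between a beta directing measure and the spiking correlation can be checked empirically in the discrete-time setting. The sketch below draws, in each time bin, a shared activation probability from a Beta(α, β) distribution and then activates two exchangeable inputs independently given that probability. It relies on the standard identities for beta-mixed Bernoulli variables, namely a per-bin activation probability α/(α+β) and a pairwise correlation 1/(1+α+β); these identities, the function names, and the parameter values are assumptions of this example.

```python
import random

def sample_pair(alpha, beta, n_bins, seed=0):
    """Draw two exchangeable binary inputs over n_bins discrete time bins."""
    rng = random.Random(seed)
    x, y = [], []
    for _ in range(n_bins):
        theta = rng.betavariate(alpha, beta)   # shared coactivation probability
        x.append(1 if rng.random() < theta else 0)
        y.append(1 if rng.random() < theta else 0)
    return x, y

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    return cov / (vx * vy) ** 0.5

alpha, beta = 0.2, 1.8                         # illustrative values only
x, y = sample_pair(alpha, beta, n_bins=200_000)
rate_hat = sum(x) / len(x)
rho_hat = pearson(x, y)
print(f"activation probability: {rate_hat:.3f} (expected {alpha / (alpha + beta):.3f})")
print(f"pairwise correlation:   {rho_hat:.3f} (expected {1 / (1 + alpha + beta):.3f})")
```

A more dispersed Beta(α, β), i.e., a smaller α + β at fixed mean, yields a larger pairwise correlation in this construction.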

B. Moment analysis

We analytically characterize the subthreshold variability of a tractable conductance-based neuronal model, the AONCB neurons, when driven by synchronous synaptic inputs. The analytical characterization of a neuron’s voltage fluctuations has been the focus of intense research [46,47,95–97]. These attempts have considered neuronal models that already incorporate some diffusion scaling hypotheses [98,99], formally obtained by assuming an infinite number of synaptic inputs. The primary benefit of these diffusion approximations is that one can treat the corresponding Fokker-Planck equations to quantify neuronal variability in conductance-based integrate-and-fire models while also including the effect of postspiking reset [37,38]. In practice, subthreshold variability is often estimated in the effective-time-constant approximation, while neglecting the multiplicative noise contributions due to voltage-dependent membrane fluctuations [46,95,96], although an exact treatment is also possible without this simplifying assumption [38]. By contrast, the analysis of conductance-based models has resisted exact treatments when driven by shot noise, as for compound Poisson input processes, rather than by Gaussian white noise, as in the diffusion approximation [41–43].

The exact treatment of shot-noise-driven neuronal dynamics is primarily hindered by the limitations of the Itô-Stratonovich integrals [65,100] to capture the effects of point-process-based noise sources, even without including a reset mechanism. These limitations were originally identified by Marcus, who proposed to approach the problem via a new type of stochastic equation [44,45]. The key to the Marcus equation is to define shot noise as limits of regularized, well-behaved approximations of that shot noise, for which classical calculus applies [66]. In practice, these approximations are canonically obtained as the solutions of shot-noise-driven Langevin equations with relaxation timescale , and shot noise is formally recovered in the limit . Our assertion here is that all-or-none conductances implement such a form of shot-noise regularization for which a natural limiting process can be defined when synapses operate instantaneously, i.e., . The main difference with the canonical Marcus approach is that our regularization is all-or-none, substituting each Dirac delta impulse with a finite steplike impulse of duration and magnitude , thereby introducing a synaptic timescale but without any relaxation mechanism.

The above assertion is the basis for introducing AONCB neurons, which is supported by our ability to obtain exact formulas for the first two moments of their stationary voltage dynamics [see Eqs. (14) and (16)]. For , these moments can be expressed in terms of synaptic efficacies that take exact but rather intricate integral forms. Fortunately, these efficacies drastically simplify in the instantaneous synapse limit , for which the canonical shot-noise drive is recovered. These resulting formulas mirror those obtained in the diffusion and effective-time-constant approximations [46,47], except that they involve synaptic efficacies whose expressions are original in three ways [see Eqs. (15), (G4), (G7), and (G8)]: First, independent of input synchrony, these efficacies all have exponential forms and saturate in the limit of large synaptic weights. Such saturation is a general characteristic of shot-noise-driven, continuously relaxing systems [101–103]. Second, these efficacies are defined as expectations with respect to the jump distribution of the driving compound Poisson process [see Eq. (11) and Appendix B]. A nonzero dispersion of , indicating that synaptic activation is truly modeled via random variables and , is the hallmark of input synchrony [91,92]. Third, these efficacies involve the overall rate of synaptic events [see Eq. (12)], which also depends on input synchrony. Such dependence can be naturally understood within the framework of Palm calculus [104], a form of calculus specially developed for stationary point processes.
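The all-or-none regularization and its instantaneous limit can be illustrated for a single synaptic event. The sketch below assumes simplified dynamics of the form dV/dt = −V/τ + (w/ε)(V_e − V) during a pulse of duration ε, so that the integrated dimensionless conductance equals w, and compares the exact end-of-pulse voltage with the limiting jump V e^{-w} + V_e (1 − e^{-w}). The equation form, the function names, and the parameter values are assumptions made for illustration.

```python
import math

def voltage_after_pulse(v0, w, eps, tau=0.015, V_e=60.0):
    """Exact voltage at the end of one steplike pulse of duration eps.

    Assumed dynamics during the pulse (constant conductance w/eps):
        dV/dt = -V/tau + (w/eps) * (V_e - V)
    """
    g = w / eps                     # pulse magnitude (1/s)
    k = 1.0 / tau + g               # total relaxation rate during the pulse
    v_inf = g * V_e / k             # fixed point of the dynamics during the pulse
    return v_inf + (v0 - v_inf) * math.exp(-k * eps)

def instantaneous_jump(v0, w, V_e=60.0):
    """Limiting update for eps -> 0: an exponentially saturating jump."""
    return v0 * math.exp(-w) - V_e * math.expm1(-w)

v0, w = 0.0, 0.5
eps_values = [1e-2, 1e-3, 1e-4, 1e-5, 1e-6]
errors = [abs(voltage_after_pulse(v0, w, eps) - instantaneous_jump(v0, w))
          for eps in eps_values]

for eps, err in zip(eps_values, errors):
    print(f"eps={eps:.0e} s  |V_pulse - V_jump| = {err:.2e} mV")
```

The limiting jump is bounded by V_e regardless of how large w becomes, which is one way to see the saturation of the exponential efficacies for large synaptic weights.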

C. Biophysical relevance

Our analysis allows us to investigate quantitatively how subthreshold variability depends on the number and strength of the synaptic contacts. This approach requires that we infer synaptic weights from the typical peak time and peak amplitude of the somatic membrane fluctuations caused by postsynaptic potentials [72,75,76]. Within our modeling framework, these weights are dimensionless quantities that we estimate by fitting the AONCB neuronal response to a single all-or-none synaptic activation at rest. For biophysically relevant parameters, this yields typically small synaptic weights in the sense that . These small values warrant adopting the small-weight approximation, for which expressions (14) and (16) simplify.

In the small-weight approximation, the mean voltage becomes independent of input synchrony, whereas the simplified voltage variance Eq. (20) depends on input synchrony only via the spiking correlation coefficients , and , as opposed to depending on a full jump distribution. Spike-count correlations have been experimentally shown to be weak in cortical circuits [10–12], and, for this reason, most theoretical approaches argued for asynchronous activity [17,105–109]. A putative role for synchrony in neural computations remains a matter of debate [110–112]. In modeled networks, although the tight balance regime implies asynchronous activity [19–21], the loosely balanced regime is compatible with the establishment of strong neuronal correlations [22–24]. When distributed over large networks, weak correlations can still give rise to precise synchrony, once information is pooled from a large enough number of synaptic inputs [32,33]. In this view, and assuming that distinct inputs play comparable roles, correlations measure the propensity of distinct synaptic inputs impinging on a neuron to coactivate, which represents a clear form of synchrony. Our analysis shows that considering synchrony in amounts consistent with the levels of observed spiking correlation is enough to account for the surprisingly large magnitude of subthreshold neuronal variability [1,26–28]. In contrast, the asynchronous regime yields unrealistically low variability, an observation that challenges the basis for the asynchronous state hypothesis.

Recent theoretical works [37,38] have also noted that the asynchronous state hypothesis seems at odds with certain features of the cortical activity such as the emergence of spontaneous activity or the maintenance of significant average polarization during evoked activity. Zerlaut et al. have analyzed the conditions under which conductance-based networks can achieve a spectrum of asynchronous states with realistic neural features. In their work, a key variable to achieve this spectrum is a strong afferent drive that modulates a balanced network with moderate recurrent connections. Moderate recurrent conductances are inferred from allowing for up to 2 mV somatic deflections at rest, whereas the afferent drive is provided via even stronger synaptic conductances that can activate synchronously. These inferred conductances appear large in light of recent in vivo measurements [72,75,76], and the corresponding synaptic weights all satisfy within our framework. Correspondingly, the typical connectivity numbers considered are small with for recurrent connections, and for the coactivating afferent projections. Thus, results from Ref. [37] appear consistent with our observation that realistic subthreshold variability can be achieved asynchronously only for a restricted number of large synaptic weights. Our findings, however, predict that these results follow from connectivity sparseness and will not hold in denser networks, for which the pairwise spiking correlation will exceed the empirical criteria for asynchrony, e.g., in Ref. [37]. Sanzeni et al. have pointed out that implementing the effective-time-constant approximation in conductance-based models suppresses subthreshold variability, especially in the high-conductance state [77]. As mentioned here, this suppression causes the voltage variability to decay as in any scaling limit with vanishing synaptic weights. Sanzeni et al. observe that such decay is too fast to yield realistic variability for the balanced scaling, which assumes and . To remedy this point, these authors propose to adopt a slower scaling of the weights, i.e., and , which can be derived from the principle of rate conservation in neural networks. Such a scaling is sufficiently slow for variability to persist in networks with large connectivity number (≃10^5). However, as with any scaling with vanishing weights, our exact analysis shows that such scaling must eventually lead to decaying variability, thereby challenging the basis for the asynchronous state hypothesis.