Abstract

Purpose:

AI modeling physicians’ clinical decision-making (CDM) can improve the efficiency and accuracy of clinical practice or serve as a surrogate to provide initial consultations to patients seeking secondary opinions. In this study, we developed an interpretable AI model that predicts dose fractionation for patients receiving radiation therapy for brain metastases with an interpretation of its decision-making process.

Materials/Methods:

Data from 152 patients with brain metastases treated by radiosurgery from 2017 to 2021 were obtained. CT images and target and organ-at-risk (OAR) contours were extracted. Eight non-image clinical parameters were also extracted and digitized, including age, the number of brain metastases, ECOG performance status, presence of symptoms, sequencing with surgery (pre- or post-operative radiation therapy), de novo vs. re-treatment, primary cancer type, and metastasis to other sites. 3D convolutional neural network (CNN) architectures with encoding paths were built based on the CT data and clinical parameters to capture three inputs: (1) tumor size, shape, and location; (2) the spatial relationship between tumors and OARs; (3) the clinical parameters. The models fuse the features extracted from these three inputs at the decision-making level, so that each input is learned independently, to predict dose prescription. Models with different independent paths were developed, including models combining two independent paths (IM-2), three independent paths (IM-3), and ten independent paths (IM-10) at the decision-making level. A class activation score and relative weighting were calculated for each input path during the model prediction to represent the role of each input in the decision-making process, providing an interpretation of the model prediction. The actual prescription in the record was used as ground truth for model training. The model performance was assessed by 19-fold cross-validation, with each fold consisting of randomly selected 128 training, 16 validation, and 8 testing subjects.

Results:

The dose prescriptions of 152 patient cases included 48 cases with 1×24Gy, 48 cases with 1×20–22Gy, 32 cases with 3×9Gy, and 24 cases with 5×6Gy prescribed by 8 physicians. IM-2 achieved slightly better performance than IM-3 and IM-10, with 131 (86%) patients classified correctly and 21 (14%) patients misclassified. IM-10 provided the most interpretability with a relative weighting for each input: target (34%), the relationship between target and OAR (35%), ECOG (6%), re-treatment (6%), metastasis to other sites (6%), number of brain metastases (3%), symptomatic (3%), pre/post-surgery (3%), primary cancer type (2%), age (2%), reflecting the importance of the inputs in decision making. The importance ranking of inputs interpreted from the model also closely matched a physician’s own ranking in the decision process.

Conclusion:

Interpretable CNN models were successfully developed to use CT images and non-image clinical parameters to predict dose prescriptions for brain metastases patients treated by radiosurgery. Models showed high prediction accuracy while providing an interpretation of the decision process, which was validated by the physician. Such interpretability makes the model more transparent, which is crucial for the future clinical adoption of the models in routine practice for CDM assistance.

Keywords: clinical decision-making, metastases, interpretable, deep learning

1. Introduction

Artificial intelligence (AI), especially deep learning, is transforming many fields of medicine and has the potential to address many of the challenges faced in clinical practice. For example, deep learning has seen an explosion of interest and application across medical image processing, particularly in image registration, detection, segmentation, regression, and classification. [1–3] AI has also been introduced into radiation therapy to enhance the quality and efficiency of a large variety of clinical tasks, such as image enhancement, treatment planning, organ segmentation, quality assurance, and treatment response prediction, as shown in many publications. [4–9] So far, AI applications in oncology primarily focus on diagnosis or treatment optimization, as well as outcome prediction and prognosis evaluation. Relatively few address treatment decision-making because clinical decision-making (CDM) in oncology is often complicated, lacks consensus, and contains uncertainties [10,11]. IBM has developed Watson to perform CDM in diagnosis and treatment. However, Watson aimed to outperform physicians by discovering new treatment paradigms through learning from various sources, including treatment records, medical literature, etc., which is overly ambitious and unrealistic due to the limitations of current AI models and various practical challenges. [12]

Recently, we reported a more practical approach by developing AI to follow the thought process of physicians to imitate their CDM. [13] The model was trained based on the actual treatment records with the incorporation of physicians’ logical decision processes in the model. Moreover, the model was trained to be institution-specific using the dataset from a specific institution, removing the impact of practice variations across institutions. We used dose prescription as an example to demonstrate the efficacy of the model. [13] Despite the promising results, the model was constructed in a way that made its decision process difficult to interpret. All the image and non-image information was input into the model, which then output the final prediction using a set of fully connected layers. This architecture made it impossible to dissect the impact of each input and analyze how the model weighed different inputs in making the final decision. Lack of interpretability can significantly impair the clinical adoption of the model for assistance in CDM.

Developing interpretable AI models has gained significant attention in recent years since interpretability and transparency make the models’ performance more understandable and predictable, which are crucial for improving the models’ robustness and generalizability and making the models more trustworthy for physicians to adopt in daily practice. [14] To the best of our knowledge, very little work has been done to interpret the results reported by a convolutional neural network. Zhou et al. proposed a technique called Class Activation Mapping (CAM) for identifying discriminative regions used by a restricted class of image classification networks that do not contain fully connected layers. Selvaraju et al. proposed Gradient-weighted Class Activation Mapping (Grad-CAM), which is a generalization of CAM. [15,16] Instead of generating CAM by using global average pooling, Chen et al. built a prototype part network (ProtoPNet) that computes squared distance to identify some latent training patches. [17] A potential limitation of the previous models is that the networks use only image-level labels for training without any non-image data involved. To date, it remains challenging to build an interpretable model that can account for both image and non-image information.

In this study, we propose an interpretable model for clinical decision-making (CDM) involving both image information (CT) and non-image information (clinical parameters). As illustrated in Fig 1, we developed a novel deep-learning network that fuses the features extracted from the three types of inputs at the decision-making level, allowing us to measure the contribution from each input path by the class activation score. This unique design provides insight into the importance of each input and how they were weighed together by the model to make the final decision. Another key contribution is that the model is constructed so that the parameters of the different paths for the input CT images and clinical parameters are independent, which provides the flexibility of adjusting the weights of each input path independently to improve the model performance. To our knowledge, this is the first time an interpretable AI model has been developed to use both image and non-image information to predict dose prescription in the CDM of radiotherapy. The interpretability allows physicians to compare the decision process of the AI model with their own knowledge, which provides a means for physicians to understand and validate the model, greatly facilitating its adoption in routine clinical usage. Such an interpretable CDM model can serve as a surrogate for physicians to address healthcare disparities by providing preliminary consultations to patients in underdeveloped areas with limited medical resources. It can also serve as a valuable training or QA tool representing a specific institution’s clinical practice for physicians to cross-check intra- and inter-institution practice variations.

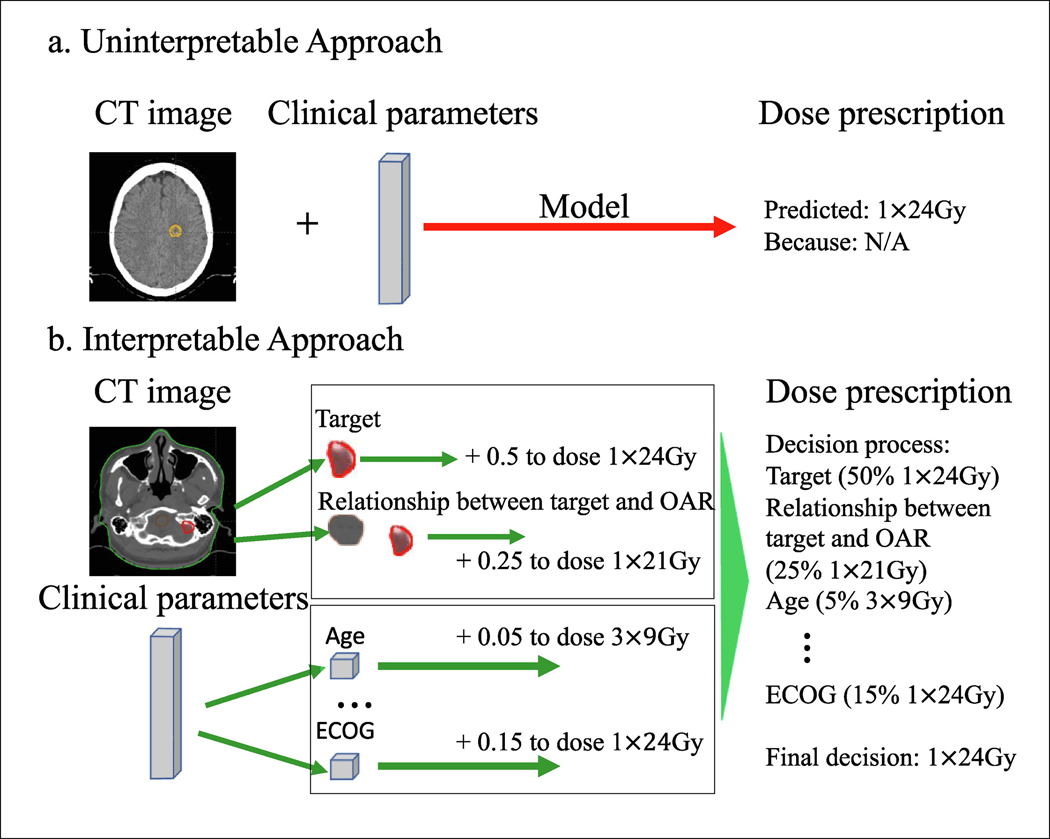

Figure 1.

(a) Uninterpretable approaches give no explanations for their output. (b) The interpretable model provides an explanatory framework that illustrates how the parameters are weighed in deciding dose prescription.

2. Materials and methods

2.1. Patient population, CT data, and clinical parameters processing

Under an institutional review board (IRB) approved protocol, we retrospectively extracted data from 152 patients with brain metastases treated with Stereotactic Radiosurgery (SRS) or Stereotactic Radiation Therapy (SRT) from 2017 to 2021. All methods for data extraction followed the relevant guidelines and regulations. [18]

3D CT images and radiation therapy (RT) structures, including target volume and organs at risk (OARs), were obtained from the patient record in the treatment planning system. The target volume used for dose prescription was either a gross tumor volume (GTV) or a planning target volume (PTV) with margins added to the GTV, depending on the location and size of the target and the preference of the physician. The primary OARs included the brainstem, optic nerves, chiasm, retina, and cochlea. In routine clinical practice, physicians typically evaluate the target volume’s size, shape, and location and the relative position between the target and OAR to decide the dose prescription. To imitate the physician’s thought process, we extracted the masks of the target volume and OAR, which carry the information physicians use to decide on dose prescription, and used them as inputs to the AI model. To differentiate the target and OAR masks in the model inputs, the mask values were set to 1 and 2 for OAR and target masks, respectively.
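
The mask labeling described above can be sketched as follows. This is a minimal illustration in plain Python, assuming binary masks stored as nested lists indexed `[z][y][x]`; the function name `combine_masks` and the rule that the target label wins where the two masks overlap are assumptions made for the example, not details given in this study.

```python
def combine_masks(target_mask, oar_mask, target_value=2, oar_value=1):
    """Merge two binary 3D masks (nested lists [z][y][x]) into one label volume.

    Target voxels get target_value (2), OAR voxels get oar_value (1),
    and all other voxels are 0. Where both masks overlap, the target label
    is kept (an assumption; overlap handling is not specified in the text).
    """
    combined = []
    for t_slice, o_slice in zip(target_mask, oar_mask):
        out_slice = []
        for t_row, o_row in zip(t_slice, o_slice):
            out_row = []
            for t, o in zip(t_row, o_row):
                if t:
                    out_row.append(target_value)
                elif o:
                    out_row.append(oar_value)
                else:
                    out_row.append(0)
            out_slice.append(out_row)
        combined.append(out_slice)
    return combined


# Tiny 1 x 2 x 3 volume with hypothetical mask data.
target = [[[0, 1, 1], [0, 0, 0]]]
oar = [[[0, 0, 1], [1, 0, 0]]]
labels = combine_masks(target, oar)
```

A single labeled volume of this kind lets one input path see both the target and its position relative to the OARs.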

Clinical parameters were selected and digitized to feed the CNN model as a supplement, as they are also important factors physicians consider when deciding the dose prescription. Instead of using all the clinical parameters, we asked physicians to select the relevant parameters based on what they typically use in their daily decision process for dose prescription. This approach allows us to incorporate physicians’ knowledge into the model to imitate their decision process. Table 1 shows the list of non-image clinical parameters selected by physicians based on their relevance to dose prescription design. Clinical parameters were codified as follows based on clinical guidelines and physicians’ suggestions: age (0 for <60 years; 1 for 60–75 years; 2 for >75 years), number of brain metastases (0 for 1 lesion; 1 for 2–5 lesions; 2 for >5 lesions), ECOG (0 for fully active; 1 for restricted in physically strenuous activity; 2 for ambulatory and capable of all self-care but unable to carry out any work activity; 3 for capable of only limited self-care; 4 for completely disabled; 5 for death), primary cancer type (1 for melanoma and sarcoma, which have radioresistant histology; 0 for others), re-treatment (0 for first treatment; 1 for a second treatment in a different region; 2 for a second treatment in the same region), metastases to other anatomical sites (0 for not found; 1 for found in other sites), presence of symptoms (0 for no; 1 for yes), pre/post-operative (0 for no; 1 for yes). Integer codes (0, 1, 2, 3, and 4) were chosen so that the AI model can distinguish the different levels of the same factor.
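
The codification rules above can be written as a short encoder. The sketch below is illustrative only: the record keys (`age`, `n_brain_mets`, `ecog`, and so on), the helper names, and the output ordering are hypothetical choices for the example, not the data schema used in this study.

```python
def encode_age(years):
    """0 for <60 years, 1 for 60-75 years, 2 for >75 years."""
    if years < 60:
        return 0
    return 1 if years <= 75 else 2


def encode_lesion_count(n_lesions):
    """0 for 1 lesion, 1 for 2-5 lesions, 2 for >5 lesions."""
    if n_lesions < 1:
        raise ValueError("at least one brain metastasis is expected")
    if n_lesions == 1:
        return 0
    return 1 if n_lesions <= 5 else 2


# Hypothetical labels for the three re-treatment situations in the text.
RETREATMENT_CODES = {"first": 0, "different_region": 1, "same_region": 2}


def encode_patient(record):
    """Return the eight integer codes for one patient record (a dict)."""
    ecog = int(record["ecog"])
    if not 0 <= ecog <= 5:
        raise ValueError("ECOG performance status must be between 0 and 5")
    return [
        encode_age(record["age"]),
        encode_lesion_count(record["n_brain_mets"]),
        ecog,
        int(bool(record["radioresistant_primary"])),  # melanoma or sarcoma
        RETREATMENT_CODES[record["retreatment"]],
        int(bool(record["other_site_mets"])),
        int(bool(record["symptomatic"])),
        int(bool(record["perioperative"])),  # pre- or post-operative RT
    ]


example = encode_patient({
    "age": 67,
    "n_brain_mets": 3,
    "ecog": 1,
    "radioresistant_primary": True,
    "retreatment": "first",
    "other_site_mets": False,
    "symptomatic": True,
    "perioperative": False,
})
```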

Table 1.

Summary of clinical parameters selected by physicians to decide dose prescription.

| Index | Age | Number of metastases | ECOG | Primary cancer type | Genetic | Re-treatment | Chemotherapy | Metastases to other sites | Presence of symptoms | Pre/post surgery | Current medication |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Selected | Y | Y | Y | Y | N | Y | N | Y | Y | Y | N |
| Codification | 2/1/0 | 2/1/0 | 4/3/2/1/0 | 1/0 | NA | 2/1/0 | NA | 1/0 | 1/0 | 1/0 | NA |
| Percentage of patients (%) | 13/60/27 | 9/36/55 | 0/2/13/65/20 | 11/89 | NA | 11/13/76 | NA | 36/64 | 29/71 | 22/78 | NA |

2.2. Network architecture

As shown in Fig 2, three 3D convolutional neural network (CNN) architectures with three encoding paths were built to capture three inputs from the CT data and clinical parameters: (1) target input: tumor size, shape, and location; (2) target and OAR input: the mask images of the target and OAR providing information on their spatial relationship, as described in section 2.1; (3) the clinical parameters. Both the first and second encoding paths have the same kernel with fixed filters to control the unique feature of each CT image input. The third encoding path can be divided into as many as eight sub-paths, one for each clinical parameter. Inside these encoding paths, the corresponding kernel convolution is applied twice with a rectified linear unit (ReLU), a dropout layer is included between the convolutions with a dropout rate of 0.4, and a 2×2×2 max-pooling operation is used in each layer. [19] The number of feature channels doubles after the max-pooling operation. Inside the third encoding path for clinical parameters, a dense layer is applied to increase the weighting of the sparse clinical parameters.

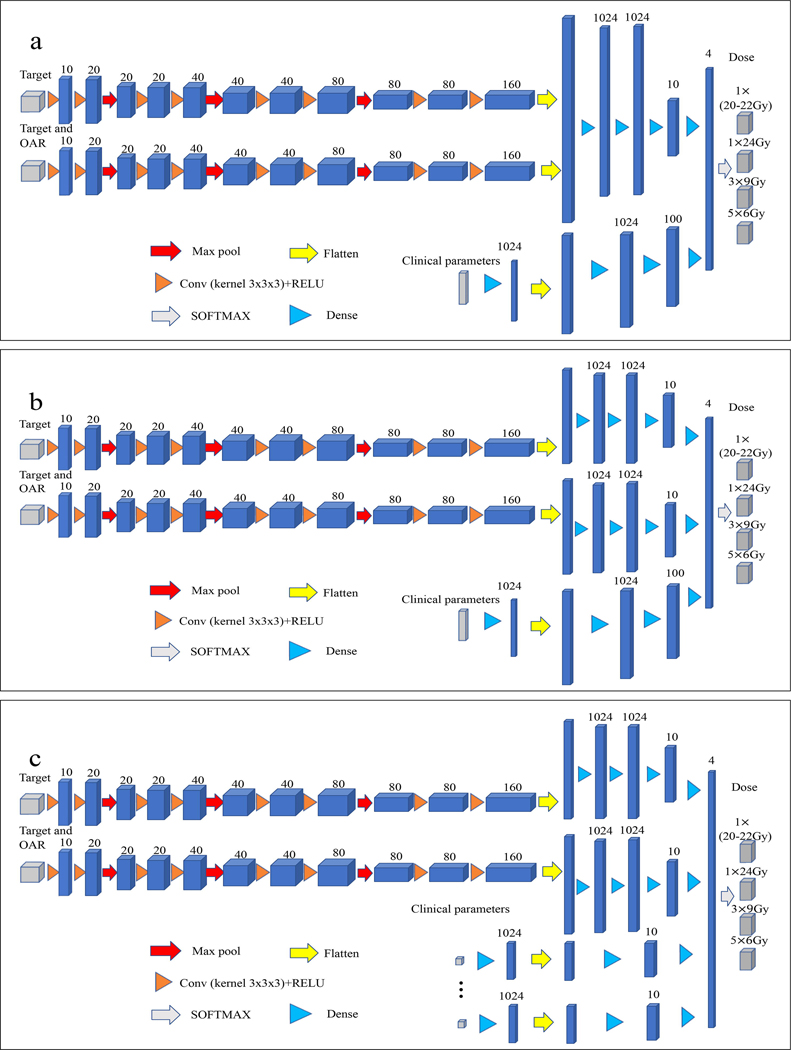

Figure 2.

(a) The architecture of the two-path three-dimensional CNN model fusing the two independent paths at the decision-making level. (b) The architecture of the three-path three-dimensional CNN model fusing the three independent paths at the decision-making level. (c) The architecture of the ten-path three-dimensional CNN model fusing the ten independent paths at the decision-making level. Each blue cuboid corresponds to a feature map. The number of channels is denoted on the top of the cuboid.

After encoding, the model fuses the features extracted by the encoding paths at the decision-making layer to learn the inputs and make the decision. The model was designed to split the encoded features into different paths at the decision-making layer so that the role of features in each path can be interpreted, making the model more transparent. As shown in Fig. 2, three interpretable models were developed, including models with two independent paths (IM-2) (Fig 2a), three independent paths (IM-3) (Fig 2b), and ten independent paths (IM-10) (Fig 2c) at the decision-making layer. IM-10 comprised ten independent paths, including 2 paths for image information (target, target and OAR) and 8 paths for the 8 non-image clinical parameters. Each clinical parameter has its own path in the network, and all paths combine at the final decision-making layer to make a prediction, as shown in Fig. 2c. Such architecture allows us to calculate the class activation score for each path to measure its weighting in the decision process. In the final step, the output from the last fully connected decision layer feeds a SoftMax, which maps the feature vector to the final classification of dose prescriptions. Four classes of dose prescriptions were used in our study, including 1×20–22Gy, 1×24Gy, 3×9Gy, and 5×6Gy. Note that 20–22Gy dose prescriptions were combined into one class in this initial study due to their similarity in clinical considerations. Categorical cross-entropy was used as a loss function in model training. Glorot (Xavier) normal initializer was used for this symmetric CNN based on CT images, [20] which drew samples from a truncated normal distribution centered on zero with standard deviation $\sigma = \sqrt{2/(n_{in} + n_{out})}$, where $n_{in}$ and $n_{out}$ were the numbers of input and output units, respectively, in the weight tensor. Adam optimizer was applied to train this model. [21] Learning rates ranging from $1\times10^{-6}$ to $1\times10^{-4}$ were tested, and a learning rate of $1\times10^{-5}$ with 3000 epochs was selected based on the model convergence.
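
As a small worked example of the Glorot (Xavier) normal initializer mentioned above, its standard deviation is the square root of 2 divided by the sum of the numbers of input and output units. The sketch below computes it for a 3D convolution kernel; the kernel size (3×3×3) and channel counts (1 input, 16 output) are hypothetical values chosen for illustration, and the fan computation follows the common convention of multiplying the receptive-field volume by the channel count.

```python
import math


def conv3d_fans(kernel_size, in_channels, out_channels):
    """Fan-in and fan-out of a 3D convolution weight tensor.

    Uses the common convention: receptive-field volume times channel count.
    """
    receptive_field = kernel_size[0] * kernel_size[1] * kernel_size[2]
    return receptive_field * in_channels, receptive_field * out_channels


def glorot_normal_stddev(fan_in, fan_out):
    """Standard deviation of the Glorot normal initializer."""
    return math.sqrt(2.0 / (fan_in + fan_out))


# Hypothetical first layer: 3x3x3 kernel, 1 input channel, 16 output channels.
fan_in, fan_out = conv3d_fans((3, 3, 3), in_channels=1, out_channels=16)
sigma = glorot_normal_stddev(fan_in, fan_out)
```

Wider layers therefore start with smaller weights, which keeps the variance of activations roughly stable across layers.
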
The actual prescription in the treatment record was used as ground truth for model training. 24 patients (out of 152) with medium target size and target-to-OAR distances were selected to perform data augmentation by rotating the images by 90, 180, and 270 degrees. Note that the augmented images were only used when the patient was selected for training data. We divided the patient cohort into training (128), validation (16), and testing subjects (8). 19-fold cross-validation was implemented in this study.
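
The data split and augmentation rule described above can be sketched as follows. Because 19 × 8 = 152, one way to realize the 19 folds is to give every patient exactly one turn in a test set; that reading, the random seed, and the function names are assumptions made for this sketch, since the text only states that subjects were randomly selected.

```python
import random


def cross_validation_folds(n_subjects=152, n_test=8, n_val=16, seed=0):
    """Split subject indices into folds of train/validation/test indices.

    Assumes disjoint test sets (each subject is tested once); the remaining
    subjects are randomly divided into validation and training sets.
    """
    if n_subjects % n_test != 0:
        raise ValueError("n_subjects must be a multiple of n_test")
    rng = random.Random(seed)
    order = list(range(n_subjects))
    rng.shuffle(order)
    folds = []
    for start in range(0, n_subjects, n_test):
        test = order[start:start + n_test]
        rest = order[:start] + order[start + n_test:]
        val = rng.sample(rest, n_val)
        val_set = set(val)
        train = [i for i in rest if i not in val_set]
        folds.append({"train": train, "val": val, "test": test})
    return folds


def training_samples(train_ids, augmentable_ids, angles=(90, 180, 270)):
    """List (subject, rotation angle) pairs used for training.

    Rotated copies are added only for augmentable subjects that are in the
    training set, so augmented images never reach validation or testing.
    """
    samples = []
    for subject in train_ids:
        samples.append((subject, 0))
        if subject in augmentable_ids:
            samples.extend((subject, angle) for angle in angles)
    return samples


folds = cross_validation_folds()
```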

2.3. Model performance evaluation and statistical analysis

The performance of the dose prediction model was evaluated using the following quantitative metrics: accuracy, sensitivity, specificity, receiver-operating characteristic (ROC) curve analysis, and the area under the curve (AUC). In addition, mean ± standard deviation (SD) was used to summarize the results. A confusion matrix was used to compare the predicted prescription with the actual prescription extracted from the patient record. [22]
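
For a multi-class problem such as this one, sensitivity and specificity are usually computed per class in a one-vs-rest fashion from the confusion matrix. The sketch below shows that calculation; the function name and the 3-class matrix are hypothetical and are not results from this study. Rows are predicted classes and columns are the classes in the record.

```python
def per_class_metrics(confusion):
    """Return (accuracy, per-class metrics) for a square confusion matrix.

    confusion[p][a] is the number of cases predicted as class p whose
    recorded (actual) class is a.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))
    metrics = []
    for k in range(n):
        tp = confusion[k][k]
        fp = sum(confusion[k]) - tp                        # predicted k, actually other
        fn = sum(confusion[p][k] for p in range(n)) - tp   # actually k, predicted other
        tn = total - tp - fp - fn
        metrics.append({
            "sensitivity": tp / (tp + fn),
            "specificity": tn / (tn + fp),
        })
    return correct / total, metrics


# Hypothetical 3-class confusion matrix (rows: predicted, columns: actual).
toy_confusion = [
    [8, 1, 0],
    [2, 7, 1],
    [0, 2, 9],
]
accuracy, class_metrics = per_class_metrics(toy_confusion)
```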

2.4. Model interpretation using class activation score (CAS)

Uninterpretable model:

In our previous uninterpretable model, [13] the different input paths are mixed together in the last dense decision layer $F_k$ for each dose fractionation category $k$ ($k$ = 1–4 for the 4 dose fractionations to be predicted). Then, the final dose classification score ($S_k$) for the dose fractionation category $k$ is obtained by

$$S_k = w_k F_k$$

where $w_k$ is the weight for the inputs $F_k$. The dose fractionation category with the highest $S_k$ is selected as the final dose fractionation predicted by the model.

Since all the different input paths are mixed together in the last decision layer $F_k$, it is impossible to separate different inputs to interpret their individual roles and weight in the decision process.

Interpretable model:

In this new interpretable model, we keep the input paths separated when connecting them at the last decision-making layer, which can be represented as $F_k^i$ for the $i$th input path and the dose fractionation category $k$. The final $S_k$ for the dose fractionation category $k$ can be calculated as:

$$S_k = \sum_{i=1}^{n} w_k^i F_k^i$$

where $w_k^i$ is the weight for the $i$th input path. Similar to the uninterpretable model, the dose fractionation category with the highest $S_k$ is selected as the final dose fractionation predicted by the model.

Since different input paths are separated with their own $w_k^i$ and $F_k^i$, we can define a class activation score (CAS) for input path $i$ and dose fractionation category $k$ as:

$$\mathrm{CAS}_k^i = w_k^i F_k^i$$

$\mathrm{CAS}_k^i$ represents how much the input path $i$ favors the selection of dose fractionation category $k$ in the final prediction. The higher the $\mathrm{CAS}_k^i$ value is, the more strongly the input path $i$ favors selecting the dose category $k$. Analyzing $\mathrm{CAS}_k^i$ will provide insight into how different inputs influence the selection of dose fractionations and how the model weighs consistent or conflicting suggestions from different inputs to make the final decision.

To measure the overall importance of each input path, a total class activation score (TCAS) is calculated for each input path as follows:

$$\mathrm{TCAS}^i = \sum_{k=1}^{4} \left| \mathrm{CAS}_k^i \right|$$

$\mathrm{TCAS}^i$ calculates the total magnitude of class activation scores of input path $i$ for different dose fractionation categories, which represents the total influence or weight the input path $i$ has in the model’s decision process. Based on $\mathrm{TCAS}^i$, we can calculate the relative percentage weight of each input path as:

$$W^i\% = \frac{\mathrm{TCAS}^i}{\sum_{i=1}^{n} \mathrm{TCAS}^i} \times 100\%$$

$W^i\%$ represents each input path’s relative weight in the model’s decision process. In this study, the number of input paths $n$ is 2 for IM-2, 3 for IM-3, and 10 for IM-10 models.

Note that the weight of an input variable changes with its variable values since variable values are inherently encoded in the calculation of the class activation score. This design is consistent with our clinical practice in that the same factor will be weighed differently in the decision process when its value changes for different patient situations.
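
The class activation score (CAS), total class activation score (TCAS), and relative weight W% defined in this section can be illustrated with a short sketch. It assumes that each path contributes to a class score through a dot product between its feature vector and a class-specific weight vector (a dense decision layer without bias); the function names and all numbers are hypothetical, and two paths with two classes are used only to keep the example small.

```python
def class_activation_scores(path_features, path_weights):
    """CAS[i][k]: contribution of input path i to the score of class k.

    path_features[i] is the feature vector of path i; path_weights[i][k]
    is the weight vector connecting path i to class k (assumed dot product).
    """
    cas = []
    for features, weights in zip(path_features, path_weights):
        cas.append([sum(w * f for w, f in zip(w_k, features)) for w_k in weights])
    return cas


def total_class_activation_scores(cas):
    """TCAS[i]: sum over classes of the absolute CAS of path i."""
    return [sum(abs(score) for score in row) for row in cas]


def relative_weights(tcas):
    """W%[i]: share of path i in the summed TCAS, in percent."""
    total = sum(tcas)
    return [100.0 * t / total for t in tcas]


def predict_class(cas):
    """Sum the CAS over paths per class and pick the highest-scoring class."""
    n_classes = len(cas[0])
    scores = [sum(row[k] for row in cas) for k in range(n_classes)]
    return scores.index(max(scores)), scores


# Hypothetical example: 2 input paths, 2 dose classes.
features = [[1.0, 2.0], [0.5]]
weights = [
    [[1.0, 0.5], [-1.0, 0.0]],  # path 0: weight vectors for class 0 and class 1
    [[2.0], [4.0]],             # path 1
]
cas = class_activation_scores(features, weights)
tcas = total_class_activation_scores(cas)
w_percent = relative_weights(tcas)
predicted, class_scores = predict_class(cas)
```

In this toy case the two paths disagree (path 0 favors class 0, path 1 favors class 1) yet carry equal total weight, which is the kind of conflict the per-path scores are meant to expose.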

2.5. Impact of physician practice variations on model training

To study the impact of physician practice variations on model training, we have asked a designated physician to review and prescribe doses to all the cases. We kept the target volume unchanged from the multi-physician study to the single-physician study, both using the target volumes (GTV or PTV) in the original plans. The updated dose prescription of 152 patient cases included 31 cases with 1×24Gy, 52 cases with 1×20–22Gy, 27 cases with 3×9Gy, and 42 cases with 5×6Gy. Among them, 17 cases of 1×24Gy were changed to 1×20–22Gy, 7 cases of 1×20–22Gy were changed to 1×24Gy, 12 single dose prescriptions were changed to multiple dose prescriptions 3×9Gy, and 6 single dose prescriptions were changed to multiple dose prescriptions 5×6Gy. For the multiple-dose prescription, 6 multiple-dose prescriptions were changed to single-dose prescriptions 1×20–22Gy, 16 cases of 3×9Gy were changed to 5×6Gy, and 4 cases of 5×6Gy were changed to 3×9Gy. The updated dose prescriptions by a single physician were used to train a physician-specific model.

3. Results

Of these 152 patients, 48 (31.5%) had a dose prescription of 1×20–22Gy, 48 (31.5%) had a dose prescription of 1×24Gy, 32 (21.0%) had a dose prescription of 3×9Gy, and 24 (16.0%) had a dose prescription of 5×6Gy. The mean and SD of the target volumes for dose prescriptions 1×24Gy, 1×20–22Gy, 3×9Gy, and 5×6Gy were 0.97 ± 0.87 cc, 1.48 ± 0.90 cc, 14.12 ± 11.22 cc, and 14.23 ± 13.12 cc, respectively. The dose was prescribed to the GTV for 44 patients and to the PTV for 108 patients.

We evaluated three models on the dataset: (1) an interpretable model fusing two independent paths at the decision-making level (IM-2); (2) an interpretable model fusing three independent paths at the decision-making level (IM-3); (3) an interpretable model fusing ten independent paths at the decision-making level (IM-10). Table 2 shows the detailed model validation results. IM-2 splits the input image and non-image clinical parameters into different paths so their roles in the decision process can be interpreted using TCAS and relative weight W% in each path, as defined in section 2.4. IM-2 achieved the best prediction accuracy, with 131 (86%) patients correctly classified. IM-3 provides more interpretation by further splitting the input image information, including the target and spatial relationship between OAR and target, into different paths to interpret their individual roles in the decision process. IM-3 predicted the dose prescription correctly for 130 (85.5%) patients, which was just slightly lower than IM-2. IM-10 provides the most interpretability among the three models by splitting both image and non-image clinical parameters into 10 different paths so TCAS and W% can be calculated for each input to analyze its role in the decision process. IM-10 predicted the dose prescription correctly for 123 (81%) patients, which was slightly lower than the other two models. As a benchmark comparison, the non-interpretable model we developed before predicted the dose prescription correctly for 124 (82%) patients, which was comparable to the interpretable models developed in this study. [13] This showed that adding interpretability did not necessarily degrade the prediction accuracy of the model.

Table 2:

Summary of dose prediction performance using different networks.

| Dose prescription | 1×24Gy | 1×24Gy | 1×24Gy | 1×20–22Gy | 1×20–22Gy | 1×20–22Gy | 3×9Gy | 3×9Gy | 3×9Gy | 5×6Gy | 5×6Gy | 5×6Gy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| In record | 48 | 48 | 48 | 48 | 48 | 48 | 32 | 32 | 32 | 24 | 24 | 24 |
| Model type (CNN) | IM-10 | IM-3 | IM-2 | IM-10 | IM-3 | IM-2 | IM-10 | IM-3 | IM-2 | IM-10 | IM-3 | IM-2 |
| Predicted 1×24Gy | 40 | 44 | 44 | 2 | 2 | 3 | 0 | 0 | 0 | 0 | 0 | 0 |
| Predicted 1×20–22Gy | 4 | 2 | 2 | 37 | 39 | 40 | 1 | 1 | 1 | 2 | 1 | 1 |
| Predicted 3×9Gy | 2 | 2 | 2 | 6 | 5 | 3 | 26 | 26 | 26 | 2 | 2 | 2 |
| Predicted 5×6Gy | 2 | 0 | 0 | 2 | 1 | 1 | 5 | 5 | 5 | 20 | 21 | 21 |

To validate the efficacy of the proposed CNN model against benchmark machine learning models, a random forest model was constructed using CT image features (volume, distance from the brainstem) and eight clinical features to predict dose prescription. [23] The random forest model misclassified 60 (39%) patients, compared with a misclassification rate of 14% for the IM-2 model, indicating the superiority of the proposed CNN model.

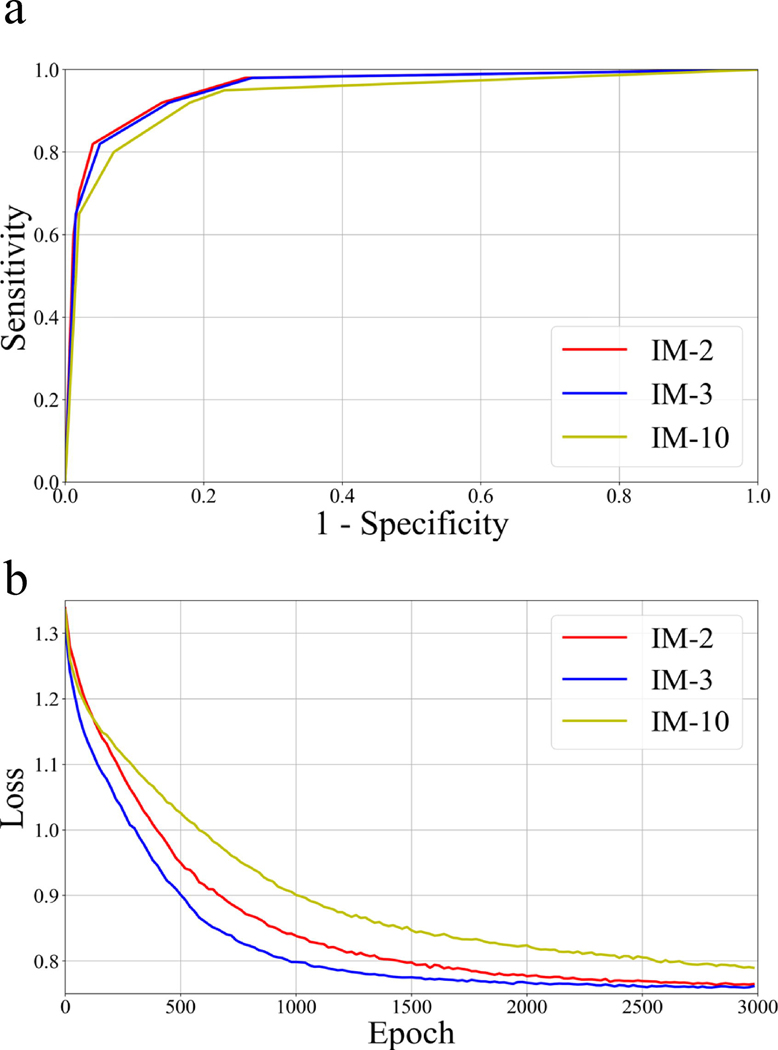

Fig. 3a and b show the three models’ receiver operating characteristic (ROC) curves and loss function convergence for all the patients tested, respectively. As shown in Fig. 3a, the proposed IM-2 achieved the best classification performance among the three models. The mean area under the curve (AUC) values were 0.96 (IM-2), 0.96 (IM-3), and 0.93 (IM-10), respectively.

Figure 3.

(a) ROC curves from the three models, including IM-2, IM-3, IM-10. (b) Convergence of the objective loss function along with the epochs for the three models.

IM-2 can show the respective contributions of CT images and clinical parameters in the decision process. Table 3 shows the TCAS and relative weight W% defined in section 2.4 for image and non-image inputs in the IM-2 model. For single fraction treatments, the mean TCAS from image and non-image clinical parameters were 8.62 (66%) and 4.43 (34%) for 1×24Gy and 8.96 (65%) and 4.79 (35%) for 1×20–22Gy. For multiple fraction treatments, the mean TCAS from image and non-image clinical parameters were 9.35 (74%) and 3.26 (26%) for 3×9Gy and 9.81 (76%) and 3.12 (24%) for 5×6Gy. These results showed that CT image information weighed more heavily in the decision process for cases prescribed with multi-fraction doses. This finding is consistent with clinical practice since multi-fraction doses are often used because of the close target-to-OAR distance in the images. The mean and SD of the distance between the target and OAR for dose prescriptions 1×24Gy, 1×20–22Gy, 3×9Gy, and 5×6Gy are 3.71 ± 1.61 cm, 3.05 ± 1.65 cm, 2.72 ± 1.47 cm, and 1.59 ± 1.85 cm, respectively.

Table 3:

Summary of the total class activation scores (TCAS) and their relative percentage weight W% for image and non-image (clinical parameters) inputs in the decision process of the IM-2 model.

| Input | 1×24Gy (in record: 48) | 1×20–22Gy (in record: 48) | 3×9Gy (in record: 32) | 5×6Gy (in record: 24) |
|---|---|---|---|---|
| Images: mean TCAS (% weight) | 8.62 (66%) | 8.96 (65%) | 9.35 (74%) | 9.81 (76%) |
| Images: (min, max) | (6.83, 10.27) | (6.96, 11.91) | (7.67, 11.74) | (7.93, 12.19) |
| Non-image clinical parameters: mean TCAS (% weight) | 4.43 (34%) | 4.79 (35%) | 3.26 (26%) | 3.12 (24%) |
| Non-image clinical parameters: (min, max) | (3.12, 5.78) | (3.45, 6.12) | (2.65, 5.34) | (2.31, 4.15) |
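
As a quick arithmetic check, the percentage weights in Table 3 can be reproduced from the mean TCAS values. The sketch below assumes that each percentage is computed from the two mean TCAS values of a prescription group (rather than averaged patient by patient); the dictionary keys and function name are illustrative.

```python
# Mean TCAS values from Table 3: (images, non-image clinical parameters).
mean_tcas = {
    "1x24Gy": (8.62, 4.43),
    "1x20-22Gy": (8.96, 4.79),
    "3x9Gy": (9.35, 3.26),
    "5x6Gy": (9.81, 3.12),
}


def percent_weights(image_tcas, non_image_tcas):
    """Relative weights (rounded to whole percent) of the two input paths."""
    total = image_tcas + non_image_tcas
    return round(100 * image_tcas / total), round(100 * non_image_tcas / total)


weights_by_group = {
    group: percent_weights(image, non_image)
    for group, (image, non_image) in mean_tcas.items()
}
```

For example, for 5×6Gy the image share is 9.81 / (9.81 + 3.12) ≈ 0.759, which rounds to 76%, with the remaining 24% attributed to the clinical parameters.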

Table 4 details the TCAS and the relative percentage weight W% for each input in the IM-3 model. The relative percentage weights of image and non-image inputs were comparable between IM-3 and IM-2 models for all dose prescriptions, showing the consistency of interpretation from different models. The relative percentage weights for target and OAR increased for cases prescribed with multi-fraction doses for the same reason explained for Table 3.

Table 4:

Summary of TCAS and their relative percentage weight W% for images (target, target and OAR) and non-images (clinical parameters) inputs in the IM-3 model.

| Input | 1×24Gy (in record: 48) | 1×20–22Gy (in record: 48) | 3×9Gy (in record: 32) | 5×6Gy (in record: 24) |
|---|---|---|---|---|
| Images, target: mean TCAS (% weight) | 4.51 (36%) | 4.28 (33%) | 4.63 (35%) | 4.26 (34%) |
| Images, target: (min, max) | (3.04, 6.23) | (3.34, 6.02) | (3.28, 6.78) | (3.61, 6.73) |
| Images, target and OAR: mean TCAS (% weight) | 4.27 (34%) | 4.43 (35%) | 5.25 (39%) | 5.47 (43%) |
| Images, target and OAR: (min, max) | (2.81, 5.67) | (3.32, 6.41) | (4.51, 6.89) | (4.61, 6.92) |
| Non-image clinical parameters: mean TCAS (% weight) | 3.89 (31%) | 4.13 (32%) | 3.27 (25%) | 2.86 (23%) |
| Non-image clinical parameters: (min, max) | (2.11, 5.23) | (2.54, 5.41) | (2.25, 4.87) | (2.03, 3.94) |

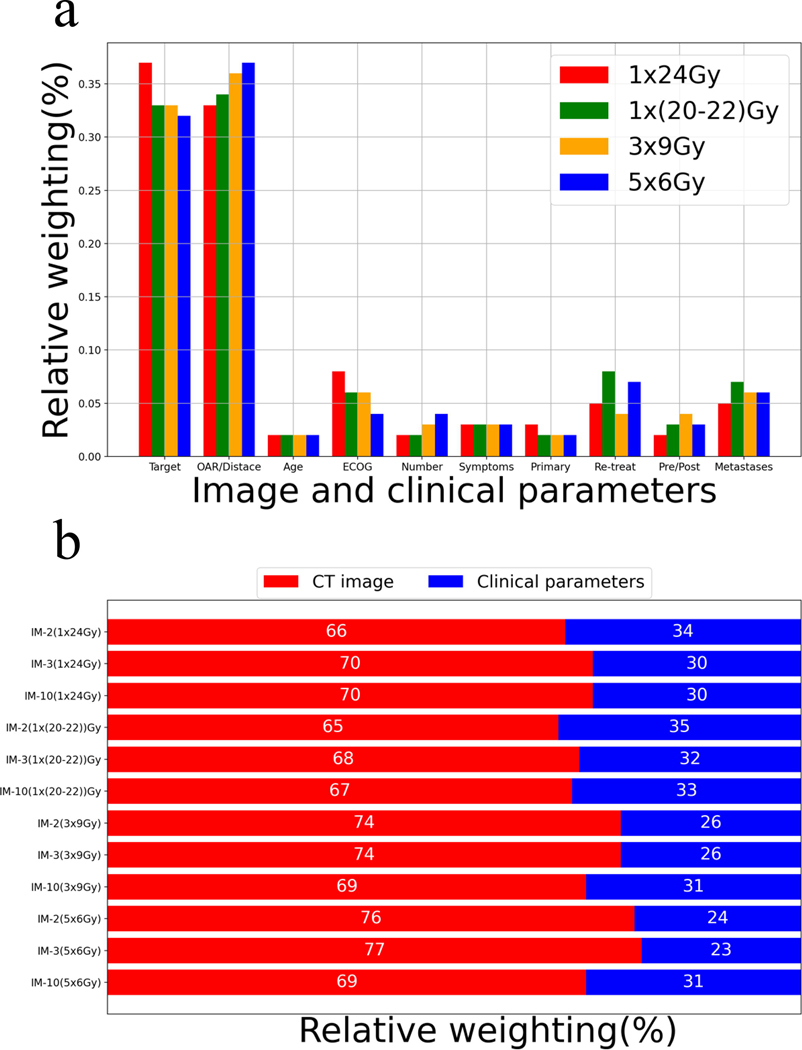

Table 5 shows the TCAS and the relative percentage weight W% for each input in the IM-10 model, including target, the relationship between target and OAR, age, ECOG, number of brain metastases, symptoms, primary cancer type, re-treatment, pre/post-surgery, and metastasis to other sites. Figure 4a further plots the relative percentage weights W% of each input for different dose prescriptions to better visualize the data in Table 5. The relative percentage weights for the target and target and OAR for all dose fractions were 32–37% and 33–37%, which were much higher than those for individual clinical parameters (2–8%) in the model decision process. Similar to IM-2 and IM-3 models, the relative percentage weights of target and OAR input increased from single fraction to multi-fraction cases. For clinical parameters, the ECOG, re-treatment, and metastasis to other sites had higher relative percentage weightings of 4–8%, 4–8% and 5–7% for all dose fractions than age (2%), number of brain metastases (2–4%), symptoms (3%), primary cancer type (2–3%), pre/post-surgery (2–4%). The relative percentage weights for ECOG and re-treatment decreased slightly from single-fraction to multiple-fraction cases, potentially due to the increasing weight of image inputs in fractionated cases.

Table 5:

Summary of TCAS and their relative percentage weight W% for images (target, target and OAR) and non-images clinical parameters (age, ECOG, number of brain metastases, symptoms, primary cancer type, re-treatment, pre/post-surgery, metastases to other site) in the IM-10 model.

| Input type | Input | 1×24Gy | 1×20–22Gy | 3×9Gy | 5×6Gy |
|---|---|---|---|---|---|
| | | In record (48) | In record (48) | In record (32) | In record (24) |
| | | Mean TCAS (% weight) (min, max) | Mean TCAS (% weight) (min, max) | Mean TCAS (% weight) (min, max) | Mean TCAS (% weight) (min, max) |
| Images | Target | 4.94 (37%) (3.24, 6.25) | 4.77 (33%) (3.54, 6.31) | 4.35 (33%) (3.45, 6.86) | 4.12 (32%) (3.45, 6.87) |
| Images | Target and OAR | 4.45 (33%) (3.21, 5.93) | 4.89 (34%) (3.68, 6.65) | 4.82 (36%) (4.56, 6.95) | 4.79 (37%) (4.34, 7.32) |
| Non-image clinical parameters | Age | 0.26 (2%) (0.11, 0.45) | 0.26 (2%) (0.14, 0.50) | 0.31 (2%) (0.16, 0.55) | 0.29 (2%) (0.17, 0.55) |
| Non-image clinical parameters | ECOG | 1.07 (8%) (0.46, 1.34) | 0.76 (6%) (0.44, 1.45) | 0.71 (6%) (0.38, 0.93) | 0.46 (4%) (0.34, 0.87) |
| Non-image clinical parameters | Number of brain metastases | 0.27 (2%) (0.16, 0.38) | 0.28 (2%) (0.14, 0.45) | 0.50 (3%) (0.23, 0.86) | 0.45 (4%) (0.21, 0.75) |
| Non-image clinical parameters | Symptoms | 0.41 (3%) (0.21, 0.75) | 0.41 (3%) (0.19, 0.68) | 0.42 (3%) (0.14, 0.73) | 0.41 (3%) (0.16, 0.63) |
| Non-image clinical parameters | Primary cancer type | 0.35 (3%) (0.15, 0.35) | 0.34 (2%) (0.14, 0.47) | 0.19 (2%) (0.07, 0.36) | 0.23 (2%) (0.12, 0.48) |
| Non-image clinical parameters | Re-treatment | 0.69 (5%) (0.32, 0.89) | 1.09 (8%) (0.45, 1.38) | 0.51 (4%) (0.27, 0.78) | 0.86 (7%) (0.36, 1.29) |
| Non-image clinical parameters | Pre/post-surgery | 0.28 (2%) (0.17, 0.43) | 0.45 (3%) (0.31, 0.65) | 0.54 (4%) (0.25, 0.72) | 0.40 (3%) (0.21, 0.68) |
| Non-image clinical parameters | Metastases to other sites | 0.69 (5%) (0.32, 0.86) | 1.01 (7%) (0.42, 1.35) | 0.72 (6%) (0.34, 0.96) | 0.72 (6%) (0.45, 0.94) |
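The percentage weights reported in Table 5 appear consistent with normalizing each input's mean TCAS by the sum of the mean TCAS over all ten inputs. The following minimal sketch checks this for the 1×24Gy group. The normalization formula is an assumption inferred from the tabulated values, not a definition quoted from the text, and the function name `percentage_weights` is illustrative only.

```python
# Mean TCAS values for the 1x24Gy group, copied from Table 5.
TCAS_1X24 = {
    "Target": 4.94,
    "Target and OAR": 4.45,
    "Age": 0.26,
    "ECOG": 1.07,
    "Number of brain metastases": 0.27,
    "Symptoms": 0.41,
    "Primary cancer type": 0.35,
    "Re-treatment": 0.69,
    "Pre/post-surgery": 0.28,
    "Metastases to other sites": 0.69,
}


def percentage_weights(tcas):
    """Assumed definition: W% = 100 * TCAS_i / (sum of TCAS over all inputs)."""
    total = sum(tcas.values())
    return {name: 100.0 * score / total for name, score in tcas.items()}


weights = percentage_weights(TCAS_1X24)
for name, weight in weights.items():
    print(f"{name}: {weight:.1f}%")
```

Under this assumption the rounded weights for this group agree with the tabulated percentages. Small rounding differences may appear in other groups if the published weights were averaged per patient rather than computed from the group means.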

Figure 4.

(a) The relative percentage weightings for all inputs in the IM-10 model. (b) Comparison of relative percentage weightings of images and non-image clinical parameter inputs among all models and different dose fractionations.

Figure 4b shows the relative weighting of CT image and non-image clinical parameters in the decision process of the IM-2, IM-3, and IM-10 models. Results showed consistency between the three models, with around 70% weighting for CT images and 30% weighting for clinical parameters in the decision process.
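As a rough check of the approximately 70%/30% split for the IM-10 model, the mean TCAS values in Table 5 can be aggregated into an image share per dose group. This is a sketch under the assumption that the share is the summed image TCAS divided by the summed TCAS of all inputs. The names `image_share` and `image_shares` are illustrative, and the IM-2 and IM-3 values are not reproduced here.

```python
# Mean TCAS per input from Table 5 (IM-10 model), ordered by dose group:
# 1x24Gy, 1x20-22Gy, 3x9Gy, 5x6Gy.
GROUPS = ["1x24Gy", "1x20-22Gy", "3x9Gy", "5x6Gy"]
IMAGE_TCAS = {
    "Target": [4.94, 4.77, 4.35, 4.12],
    "Target and OAR": [4.45, 4.89, 4.82, 4.79],
}
CLINICAL_TCAS = {
    "Age": [0.26, 0.26, 0.31, 0.29],
    "ECOG": [1.07, 0.76, 0.71, 0.46],
    "Number of brain metastases": [0.27, 0.28, 0.50, 0.45],
    "Symptoms": [0.41, 0.41, 0.42, 0.41],
    "Primary cancer type": [0.35, 0.34, 0.19, 0.23],
    "Re-treatment": [0.69, 1.09, 0.51, 0.86],
    "Pre/post-surgery": [0.28, 0.45, 0.54, 0.40],
    "Metastases to other sites": [0.69, 1.01, 0.72, 0.72],
}


def image_share(group_index):
    """Percentage of the total mean TCAS that comes from the two image inputs."""
    image = sum(values[group_index] for values in IMAGE_TCAS.values())
    clinical = sum(values[group_index] for values in CLINICAL_TCAS.values())
    return 100.0 * image / (image + clinical)


image_shares = {group: image_share(i) for i, group in enumerate(GROUPS)}
for group, share in image_shares.items():
    print(f"{group}: images {share:.1f}%, clinical parameters {100 - share:.1f}%")
```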

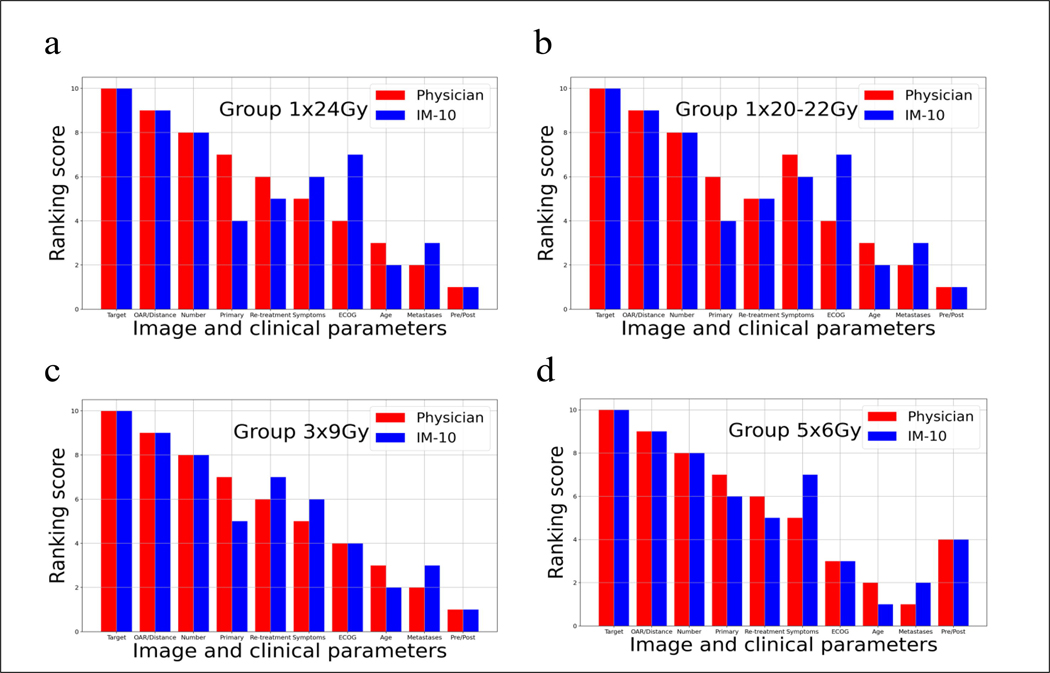

To further validate the interpretation from the IM-10 model, we asked a physician to rank the importance of image and clinical parameters for selecting dose prescriptions for 40 patients (10 in each prescription group) and compared the physician’s ranking with the ranking interpreted by the physician-specific IM-10 model. Ten inputs (target, target and OAR, and 8 clinical parameters) were ranked from 1 to 10, with 10 being the most important input. Figure 5 compares the average ranking scores of the image and clinical parameters provided by the physician (red) and the IM-10 model (blue) for each dose prescription group. The majority of the rankings agreed well between the physician and the IM-10 model, with target, target and OAR, and the number of metastases consistently ranked very high, and pre/post-surgery, metastases to other sites, and age consistently ranked very low for dose prescription selection. Some discrepancies exist for the inputs ranked in the middle, such as ECOG, likely due to uncertainties in physician ranking and AI modeling. A representative patient case showing in detail how the model makes its prediction is provided in the Supplementary Case Study.

Figure 5.

Comparison of ranking scores of the image and clinical parameters provided by the physician (Red) and IM-10 model (Blue) for each group. The score range is 1-10, with 10 being the most important input.
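One way such a comparison could be carried out is to convert the model's activation scores into ranks (1 = least important, N = most important) and compare them with the physician's ranks. The sketch below is illustrative only: the scores and physician ranks are hypothetical toy values rather than study data, and the mean absolute rank difference is an agreement measure chosen here for illustration, not one reported in the study.

```python
def rank_scores(scores):
    """Rank inputs from 1 (lowest score) to N (highest score).

    Tied scores share the average of the ranks they span.
    """
    ordered = sorted(scores, key=scores.get)
    ranks = {}
    i = 0
    while i < len(ordered):
        j = i
        # Extend j to cover every input tied with the input at position i.
        while j + 1 < len(ordered) and scores[ordered[j + 1]] == scores[ordered[i]]:
            j += 1
        average_rank = ((i + 1) + (j + 1)) / 2.0
        for name in ordered[i : j + 1]:
            ranks[name] = average_rank
        i = j + 1
    return ranks


def mean_abs_rank_difference(ranks_a, ranks_b):
    """Average absolute difference between two rankings of the same inputs."""
    return sum(abs(ranks_a[name] - ranks_b[name]) for name in ranks_a) / len(ranks_a)


# Hypothetical activation scores and physician ranks for four inputs.
model_scores = {"Target": 4.9, "Target and OAR": 4.5, "ECOG": 1.1, "Age": 0.3}
physician_ranks = {"Target": 4, "Target and OAR": 3, "ECOG": 1, "Age": 2}

model_ranks = rank_scores(model_scores)
disagreement = mean_abs_rank_difference(model_ranks, physician_ranks)
print(model_ranks, disagreement)
```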

In addition to cross-validation, we performed a further study to test the models with separate training and testing datasets. The entire dataset was divided into training (104), validation (16), and testing (32) datasets. The testing dataset was chosen randomly from each dose prescription group, including 9 cases from 1×24Gy, 9 cases from 1×20–22Gy, 8 cases from 3×9Gy, and 6 cases from 5×6Gy, for a total of 32 patients. The IM-2 and IM-3 models each classified 28 (88%) dose prescriptions correctly. IM-10 predicted the dose prescription correctly for 26 (81%) patients, slightly fewer than the other two models. Table 6 shows the detailed model validation results. This separate study demonstrated that the models achieved comparable performance in evaluations using cross-validation and separate training-testing datasets.
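The split described above can be sketched as a stratified random draw. The group sizes are the "in record" counts from Table 5 and the test counts are those stated in the text. How the 16 validation patients were chosen is not stated, so drawing them at random from the remaining patients is an assumption, as are the seed, the placeholder patient identifiers, and the function name `split_patients`.

```python
import random

GROUP_SIZES = {"1x24Gy": 48, "1x20-22Gy": 48, "3x9Gy": 32, "5x6Gy": 24}
TEST_PER_GROUP = {"1x24Gy": 9, "1x20-22Gy": 9, "3x9Gy": 8, "5x6Gy": 6}
N_VALIDATION = 16


def split_patients(seed=0):
    """Return (training, validation, test) lists of placeholder patient IDs."""
    rng = random.Random(seed)
    test, remaining = [], []
    for group, size in GROUP_SIZES.items():
        # Placeholder IDs: (dose group, index within the group).
        members = [(group, i) for i in range(size)]
        chosen = set(rng.sample(members, TEST_PER_GROUP[group]))
        test.extend(m for m in members if m in chosen)
        remaining.extend(m for m in members if m not in chosen)
    # Assumption: validation cases are drawn at random from the non-test cases.
    rng.shuffle(remaining)
    return remaining[N_VALIDATION:], remaining[:N_VALIDATION], test


training, validation, test = split_patients()
print(len(training), len(validation), len(test))
```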

Table 6:

Summary of dose prediction performance of the different networks using separate training and testing datasets.

| Dose prescription | 1×24Gy | | | 1×20–22Gy | | | 3×9Gy | | | 5×6Gy | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | In record (9) | | | In record (9) | | | In record (8) | | | In record (6) | | |
| Model type (CNN) | IM-10 | IM-3 | IM-2 | IM-10 | IM-3 | IM-2 | IM-10 | IM-3 | IM-2 | IM-10 | IM-3 | IM-2 |
| Predicted 1×24Gy | 8 | 9 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Predicted 1×20–22Gy | 1 | 0 | 0 | 8 | 8 | 8 | 2 | 1 | 1 | 0 | 0 | 0 |
| Predicted 3×9Gy | 0 | 0 | 0 | 1 | 1 | 1 | 5 | 6 | 6 | 1 | 1 | 1 |
| Predicted 5×6Gy | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 5 | 5 |
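The overall accuracies quoted in the text can be recovered from the correctly classified counts in Table 6 (the entries where the predicted prescription equals the prescription in record) and the stated test-group sizes. The following is a minimal sketch of that arithmetic; the function names are illustrative.

```python
GROUPS = ["1x24Gy", "1x20-22Gy", "3x9Gy", "5x6Gy"]
IN_RECORD = [9, 9, 8, 6]  # test patients per group, as stated in the text

# Correct predictions per group, read from Table 6.
CORRECT = {
    "IM-10": [8, 8, 5, 5],
    "IM-3": [9, 8, 6, 5],
    "IM-2": [9, 8, 6, 5],
}


def overall_accuracy(correct, totals):
    """Percentage of all test patients whose prescription was predicted correctly."""
    return 100.0 * sum(correct) / sum(totals)


def per_group_accuracy(correct, totals):
    """Percentage of correct predictions within each dose prescription group."""
    return [100.0 * c / t for c, t in zip(correct, totals)]


for model, correct in CORRECT.items():
    print(model, f"{overall_accuracy(correct, IN_RECORD):.2f}%",
          [f"{a:.0f}%" for a in per_group_accuracy(correct, IN_RECORD)])
```

The per-group values show that most of the misclassifications fall in the 3×9Gy group for all three models.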

To investigate the impact of physician practice variations on the AI modeling, we re-trained a physician-specific AI model using the dose prescriptions from a single physician, as explained in section 2.5. The IM-2, IM-3, and IM-10 models predicted correctly for 137 (90%), 137 (90%), and 132 (87%) patients, respectively. In contrast, the group-based IM-2, IM-3, and IM-10 models trained with dose prescriptions from 8 physicians predicted correctly for 131 (86%), 130 (85.5%), and 123 (81%) patients, respectively. Supplementary Table S1 shows the detailed model validation results. These results showed that removing the physician variations in the data further improved the prediction accuracy of the AI model.
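The gain from physician-specific training can be expressed in percentage points per model. The sketch below assumes that both sets of counts are out of the full cohort of 152 patients, which is consistent with the percentages quoted above; the variable and function names are illustrative.

```python
N_PATIENTS = 152  # assumed denominator for both model families

CORRECT_PHYSICIAN_SPECIFIC = {"IM-2": 137, "IM-3": 137, "IM-10": 132}
CORRECT_GROUP_BASED = {"IM-2": 131, "IM-3": 130, "IM-10": 123}


def accuracy(n_correct, n_total=N_PATIENTS):
    """Prediction accuracy in percent."""
    return 100.0 * n_correct / n_total


# Percentage-point gain of the physician-specific model over the group-based one.
gains = {
    model: accuracy(CORRECT_PHYSICIAN_SPECIFIC[model]) - accuracy(CORRECT_GROUP_BASED[model])
    for model in CORRECT_GROUP_BASED
}
for model, gain in gains.items():
    print(f"{model}: +{gain:.1f} percentage points")
```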

4. Discussion

In this study, we developed interpretable models for predicting dose prescription in CDM in radiation therapy. The models fuse the input features at the decision-making level to independently learn the features from each input for decision-making. We proposed a general technique called class activation score, which can be calculated at the last fully connected layer and backtracked to the corresponding inputs (CT images or clinical parameters) to represent their role and importance in the decision-making process. Typically, there exists a trade-off between accuracy and interpretability. In our study, the IM-2 model outperformed IM-3 and IM-10 in predicting all dose prescription categories. However, IM-2 has the lowest interpretability among the three models since it can only identify the roles of CT images and clinical parameters as a whole in decision-making. In contrast, IM-10 provided slightly lower prediction accuracy than other models but provided the most interpretability with interpreted roles for all image and non-image inputs separately. The IM-10 model also provides quantitative importance of each input using the activation score. Since the model was trained using actual dose records prescribed by the physicians, the quantitative interpretation provided by the model can provide valuable insight for the physician to understand his/her decision process quantitatively. The importance ranking of all the inputs matched well between the physician and the IM-10 model, showing the model was interpreting the physician’s decision process correctly. Results from the three models showed high consistency in interpreting the importance of image and non-image inputs. In all models, the image inputs (target, target and OAR) and non-image clinical parameter inputs had around 70% and 30% weightings, respectively. Among the clinical parameters, the ECOG, re-treatment, and number of brain metastases had more importance than other parameters. 
The importance of different inputs in the decision process varied among patients prescribed different dose prescriptions, which is consistent with our clinical practice, where we weigh various factors differently for different patients.
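To make the idea of a score computed at the last fully connected layer and traced back to each input path more concrete, the sketch below splits the predicted-class logit of a decision-level fusion layer into one contribution per path. This is only one plausible, CAM-inspired reading and should not be taken as the exact definition used in this study. The feature values, weights, and function names are hypothetical.

```python
def path_activation_scores(path_features, fc_weights, predicted_class):
    """Split the predicted-class logit into one contribution per input path.

    path_features: maps a path name to the feature list produced by that path.
    fc_weights: maps a path name to per-class weight rows, so that
        fc_weights[path][c][k] multiplies feature k of that path for class c.
    """
    scores = {}
    for path, features in path_features.items():
        class_weights = fc_weights[path][predicted_class]
        scores[path] = sum(w * f for w, f in zip(class_weights, features))
    return scores


def relative_weights(scores):
    """Express each path score as a percentage of the summed scores."""
    total = sum(scores.values())
    return {path: 100.0 * score / total for path, score in scores.items()}


# Hypothetical two-path example with two output classes.
features = {"images": [1.0, 2.0], "clinical": [0.5]}
weights = {
    "images": [[0.1, 0.2], [1.0, 1.5]],
    "clinical": [[0.3], [2.0]],
}
scores = path_activation_scores(features, weights, predicted_class=1)
shares = relative_weights(scores)
print(scores, shares)
```

In this toy case the image path contributes 4.0 and the clinical path 1.0 to the predicted-class logit, giving an 80%/20% split. Because each path keeps its own block of weights until the final layer, the contribution of each input remains separable, which is the property the decision-level fusion design relies on.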

Physician practice variation is an aspect to consider when modeling CDM. A clinical decision, such as the dose prescription in SRS, is a complex process involving the consideration of many factors, both image and non-image. Although there is a general clinical guideline for dose prescription design, it leaves flexibility for physicians to choose the prescription based on their individual judgment. Even given the same patient information, physicians can choose slightly different dose prescriptions depending on their personal experience and preferences (being aggressive or conservative). Such variations among physicians lead to inconsistency in the training data, which affects the prediction accuracy of an AI model trained to learn a consistent decision process. This is why the physician-specific model achieved higher prediction accuracy than the group-based model in our study. The remaining incorrectly predicted cases in the physician-specific modeling were mostly in the 1×20–22Gy and 3×9Gy groups, which are more challenging to prescribe due to the intermediate tumor sizes, locations, and clinical conditions of these patients. The remaining errors can be due to two reasons: (1) Physician prescription uncertainties. Even for the same physician, the decision can have inherent uncertainties. The physician’s decision process is complex, as physicians often need to balance different and often conflicting factors to reach a final optimal decision. This decision process cannot be described by a simple formula, and the decision can have uncertainties or inconsistencies that affect how accurately AI can model such a process. (2) Limitations in the modeling. The AI model itself can be imperfect in modeling the physician decision process for these challenging cases.

This study is limited by the relatively small patient sample size. As a result, 19-fold cross-validation was used for model training and testing. More patient data can be obtained in the future to further validate the model’s performance. The second limitation of our study is that we did not model the process of selecting GTV or PTV as the target volume, which is usually done before the dose prescription selection. The current model focuses on the dose prescription selection process. It uses the final target volume as an input to determine the dose prescription without needing to know whether the target was generated from GTV or PTV, since the final target volume determines the dose to the healthy tissues and the dose prescription that can be used. In the future, we can build a separate model for the GTV/PTV selection process. Since GTV/PTV selection is mainly a matter of physician preference, the modeling can be as simple as assigning each physician a fixed selection. The third limitation is that the group-physician data potentially included some cases where the initial dose prescription was changed after plan dosimetry review, which was not modeled by our AI network, designed to model only the initial prescription based on image and clinical parameters. This discrepancy potentially degraded the prediction accuracy. From our clinical experience, the dose prescription changes for only a very small percentage of our SRS patients, since our physicians are very experienced with estimating the OAR doses before treatment planning, so this will be less of an issue in our data than in small clinics with less experience in SRS. In the physician-specific data, we asked a physician to prescribe the dose solely based on the images and clinical factors, so the prescription data are not affected by dosimetry and are consistent with the AI modeling.
In the future, we could perform a prospective study to collect the physician’s dose prescription decisions before and after treatment planning, which can be used to train a model of the entire decision process.
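For reference, a 19-fold scheme with 128 training, 16 validation, and 8 testing subjects per fold can be sketched as follows. The sketch assumes that the 19 test sets partition the 152 patients (19 × 8 = 152) and that the validation subjects are drawn at random from the remaining patients in each fold. The text states only that the subjects were randomly selected, so both points, as well as the seed and the function name `make_folds`, are assumptions.

```python
import random

N_PATIENTS, N_FOLDS, N_TEST, N_VALIDATION = 152, 19, 8, 16


def make_folds(seed=0):
    """Build 19 folds of patient indices with 128/16/8 train/val/test subjects."""
    rng = random.Random(seed)
    order = list(range(N_PATIENTS))
    rng.shuffle(order)
    folds = []
    for i in range(N_FOLDS):
        # Assumption: each patient is tested in exactly one fold.
        test = order[i * N_TEST : (i + 1) * N_TEST]
        rest = order[: i * N_TEST] + order[(i + 1) * N_TEST :]
        validation = rng.sample(rest, N_VALIDATION)
        held_out = set(validation)
        training = [p for p in rest if p not in held_out]
        folds.append({"train": training, "val": validation, "test": test})
    return folds


folds = make_folds()
print(len(folds), len(folds[0]["train"]), len(folds[0]["val"]), len(folds[0]["test"]))
```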

In this study, we investigated both interpretable group-based and physician-specific models to assist CDM in radiation oncology. The interpretability of AI models is crucial for physicians’ and patients’ recognition and adoption of the models in daily clinical care. In this regard, developing a model capable of providing detailed insights into its decision process for dose prescription is the key to this study. Interpretable CDM can be valuable in the following aspects. (1) Improve the access and efficiency of secondary opinion consultation for cancer patients. With physician-specific or institution-specific modeling, CDM can provide preliminary consultations from a wide variety of physician experts modeled in the system, significantly improving efficiency and reducing the cost of “physician shopping” for patients. Based on this initial survey of secondary opinions, patients can choose favorable physicians for subsequent in-person consultations. (2) Improve the quality of care. CDM can serve as a quality assurance tool to improve the consistency of physician decisions and minimize human errors by providing the predicted decision as a reference to physicians. (3) Improve the efficiency of clinical practice. CDM can assist in providing preliminary analysis and clinical decisions for different tasks so physicians can quickly review and make adjustments as needed.

In summary, AI-based CDM models can alleviate healthcare disparities and improve the quality and efficiency of clinical practice, which may lead to improved patient outcomes. Interpretability of the clinical decision made by AI is critical for its clinical deployment, since the transparency of the AI decision is vital for patients and physicians to understand and build confidence in the model. This study marks an important step toward building an interpretable AI model for CDM in radiation oncology. Although we used dose prescription as an example and starting point to demonstrate the feasibility of the model, the AI model can be expanded to other clinical decisions in radiation oncology, such as initial consultation or treatment planning review, to model the entire clinical workflow. Furthermore, such modeling can be expanded to other medical specialties, such as surgery and medical oncology, to provide comprehensive CDM assistance to patients and clinicians.

5. Conclusion

Interpretable CNN models with encoding paths from CT images and non-image clinical parameters were successfully developed to predict dose prescriptions for brain metastases patients treated by radiotherapy. Such models allow us to interpret the roles and contributions of all the parameters in the decision-making process, which improves the model’s transparency and facilitates its future clinical adoption for CDM assistance. The interpretation from the model also provides insights into the thought process of a physician’s CDM with quantitative metrics to evaluate the consideration of each parameter in their decision.

Supplementary Material

Highlights.

We developed interpretable AI that solely follows the thought process of physicians to effectively model their clinical decision-making (CDM).

This is the first time an interpretable AI model has been developed to use both image and non-image information to predict dose prescription in CDM of radiotherapy.

The models trained based on the actual treatment records showed high prediction accuracy while providing an interpretation of the decision process.

Class activation scores are designed and calculated for the model to illustrate the roles and importance of different inputs in the decision-making process, which was validated by the physician’s interpretation of the decision process.

Acknowledgments

We would like to sincerely thank Dr. Cedric (Xinsheng) Yu for his valuable comments and suggestions on the manuscript. This work is supported by NIH R01EB028324 and R01EB032680.

Footnotes

Conflict of interest

The authors have no conflict of interest to disclose.



References

- [1] Frid-Adar M, Diamant I, Klang E, Amitai M, Goldberger J, Greenspan H. GAN-based synthetic medical image augmentation for increased CNN performance in liver lesion classification. Neurocomputing 2018;321:321–31.

- [2] Cao Y, Vassantachart A, Jason CY, Yu C, Ruan D, Sheng K, et al. Automatic detection and segmentation of multiple brain metastases on magnetic resonance image using asymmetric UNet architecture. Phys Med Biol 2021;66:015003.

- [3] Vassantachart A, Cao Y, Gribble M, Guzman S, Ye JC, Hurth K, et al. Automatic differentiation of Grade I and II meningiomas on magnetic resonance image using an asymmetric convolutional neural network. Sci Rep 2022;12:1–9.

- [4] Jiang Z, Yin F-F, Ge Y, Ren L. A multi-scale framework with unsupervised joint training of convolutional neural networks for pulmonary deformable image registration. Phys Med Biol 2020;65:015011.

- [5] Mohammadi R, Shokatian I, Salehi M, Arabi H, Shiri I, Zaidi H. Deep learning-based Auto-segmentation of Organs at Risk in High-Dose Rate Brachytherapy of Cervical Cancer. Radiother Oncol 2021.

- [6] Zhang Z, Huang M, Jiang Z, Chang Y, Lu K, Yin F-F, et al. Patient-specific deep learning model to enhance 4D-CBCT image for radiomics analysis. Phys Med Biol 2022.

- [7] Jiang Z, Zhang Z, Chang Y, Ge Y, Yin F-F, Ren L. Enhancement of Four-dimensional Cone-beam Computed Tomography (4D-CBCT) using a Dual-encoder Convolutional Neural Network (DeCNN). IEEE Trans Radiat Plasma Med Sci 2021.

- [8] Jiang Z, Yin F-F, Ge Y, Ren L. Enhancing digital tomosynthesis (DTS) for lung radiotherapy guidance using patient-specific deep learning model. Phys Med Biol 2021;66:035009.

- [9] Jiang Z, Chen Y, Zhang Y, Ge Y, Yin F-F, Ren L. Augmentation of CBCT reconstructed from under-sampled projections using deep learning. IEEE Trans Med Imaging 2019;38:2705–15.

- [10] Jiang F, Jiang Y, Zhi H, Dong Y, Li H, Ma S, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol 2017;2.

- [11] LeBlanc TW, Fish LJ, Bloom CT, El‐Jawahri A, Davis DM, Locke SC, et al. Patient experiences of acute myeloid leukemia: a qualitative study about diagnosis, illness understanding, and treatment decision‐making. Psycho‐Oncology 2017;26:2063–8.

- [12] Strickland E. IBM Watson, heal thyself: How IBM overpromised and underdelivered on AI health care. IEEE Spectr 2019;56:24–31.

- [13] Cao Y, Kunaprayoon D, Xu J, Ren L. AI-assisted Clinical Decision Making (CDM) for dose prescription in radiosurgery of brain metastases using three-path three-dimensional CNN. Clin Transl Radiat Oncol 2022:100565.

- [14] Samek W, Wiegand T, Müller K-R. Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models. ArXiv Prepr ArXiv170808296 2017.

- [15] Zhou B, Khosla A, Lapedriza A, Oliva A, Torralba A. Learning deep features for discriminative localization. Proc. IEEE Conf. Comput. Vis. Pattern Recognit, 2016, p. 2921–9.

- [16] Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-cam: Visual explanations from deep networks via gradient-based localization. Proc. IEEE Int. Conf. Comput. Vis, 2017, p. 618–26.

- [17] Chen C, Li O, Tao D, Barnett A, Rudin C, Su JK. This looks like that: deep learning for interpretable image recognition. Adv Neural Inf Process Syst 2019;32.

- [18] de Hond AA, Leeuwenberg AM, Hooft L, Kant IM, Nijman SW, van Os HJ, et al. Guidelines and quality criteria for artificial intelligence-based prediction models in healthcare: a scoping review. NPJ Digit Med 2022;5:2.

- [19] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. ArXiv Prepr ArXiv14091556 2014.

- [20] Glorot X, Bengio Y. Understanding the difficulty of training deep feedforward neural networks. Proc. Thirteen. Int. Conf. Artif. Intell. Stat, 2010, p. 249–56.

- [21] Kingma DP, Ba J. Adam: A method for stochastic optimization. ArXiv Prepr ArXiv14126980 2014.

- [22] Fawcett T. An introduction to ROC analysis. Pattern Recognit Lett 2006;27:861–74.

- [23] Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, et al. Scikit-learn: Machine learning in Python. J Mach Learn Res 2011;12:2825–30.
