The COVID-19 pandemic strained our health care delivery system and brought the severity of health and social disparities to the forefront. Black, Hispanic, and Asian populations had a 20% relative increase in heart disease deaths during the COVID-19 pandemic compared with White populations.1 The COVID-19 pandemic exacerbated county-level income inequality by itself and through its interaction with racial/ethnic composition, systematically disadvantaging Black and Hispanic communities.2

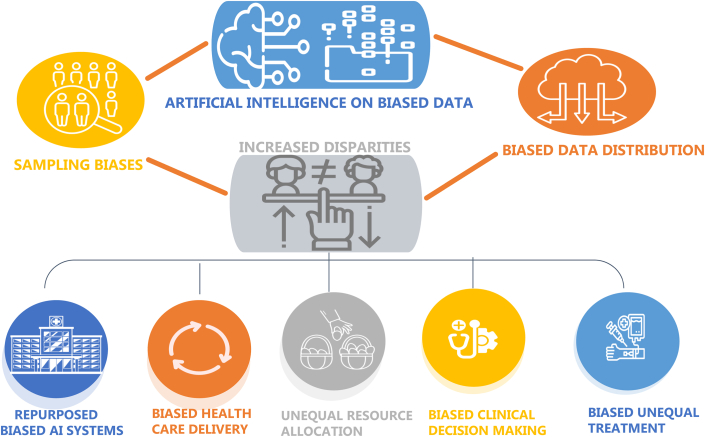

Because fewer outpatient blood pressure and cholesterol assessments took place during COVID-19, the need for health care delivery to shift to more remote, virtual, and non-face-to-face services was paramount.3 Artificial intelligence (AI) could help bridge this gap. However, AI could also lead to sampling bias if access to health care is inadequate. The computing resources and expertise required to train AI models may mean that fewer centers are able to contribute to research, potentially leading to less diversity in AI training data sets.4 Smaller centers may also have difficulty incorporating AI models into their workflow because of the cost of buying proprietary algorithms, leaving less well-resourced centers unable to access these tools to help their patients and contributing to data disparity (Figure 1).

Figure 1. AI and Health Disparities. AI = artificial intelligence.

AI methods

The potential benefits and pitfalls of AI are better understood by examining the methods used to develop machine learning (ML) algorithms. The purpose of ML training is to teach algorithms to recognize patterns in information. Training methods can be broadly divided into supervised and unsupervised approaches.

The supervised approach involves the ML algorithm learning patterns from data that have been labeled, such as electrocardiogram tracings, echocardiograms, or cross-sectional imaging.5 The algorithm learns the pertinent features of the labeled data set, which then allows it to interpret data on which it has not been trained. While the labeled approach allows precise training on a target of interest, it is less flexible in allowing the ML algorithm to discover unidentified patterns. A further limitation of supervised ML is that it requires labeled data sets, which can be time-consuming to create and may not be of sufficient size to create a robust, generalizable model.

The unsupervised ML method uses unlabeled data to train the algorithm. The algorithm searches for patterns on its own without human input and may identify features of interest that are unique and previously unnoticed. This approach saves the time that would otherwise be required to label data sets and can identify patterns that may be more predictive than traditional measures.6 Some examples of unsupervised ML include classifying arrhythmias, genetic data, and heart failure risk. ML methods can lead to novel and important contributions in patient diagnosis, risk prediction, and disease classification.5 However, the requirements for ML training can have unintended consequences. If certain populations are not well represented in the training data set, the ML algorithm may perform well in the lab setting but significantly worse in the real world.

Access

Access is key. Equity across digital health (apps, portals, telehealth) is essential to address health disparities. Without access, individuals from minority groups and people with lower socioeconomic status (SES) will not be included in health care data sets. Their data will not be used to train and validate AI algorithms, leading to sampling bias and entrenching existing health care disparities. AI algorithms cannot use data on historically marginalized individuals if those data are not available. Historically marginalized individuals may seek medical attention less frequently than nonmarginalized individuals, leading to their under-representation in clinical databases. This real-world disparity may then be reinforced algorithmically through underdiagnosis, worse risk prediction, and inaccurate disease classification in these individuals.4
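One way to make under-representation concrete is to compare each group's share of a data set with its share of the population the model is meant to serve. The following is a minimal sketch, not a method from the cited literature: the function name `representation_ratio`, the group labels, and all shares are hypothetical and chosen only for illustration. A ratio below 1 suggests that a group is under-represented relative to the reference population.

```python
from collections import Counter


def representation_ratio(sample_groups, reference_shares):
    """Return each group's share of the sample divided by its reference share.

    sample_groups: list of group labels, one per record in the data set.
    reference_shares: dict mapping group label -> expected population share.
    A ratio below 1.0 suggests under-representation in the data set.
    """
    if not sample_groups:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: (counts.get(group, 0) / total) / share
        for group, share in reference_shares.items()
    }


# Hypothetical data set of 100 records and hypothetical population shares.
sample = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}

ratios = representation_ratio(sample, reference)
for group, ratio in ratios.items():
    print(f"group {group}: representation ratio {ratio:.2f}")
```

A check like this only flags imbalance in who appears in the data; it does not show whether a model performs equally well across groups, which requires a separate evaluation.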

Insurance coverage for digital health: unequal resource allocation and treatment

Over the last decade, health insurance companies have significantly expanded coverage across the spectrum of digital health, ranging from more established tools such as data tracking to novel tools including virtual reality and AI-based programs. The tipping point came with the Centers for Medicare & Medicaid Services expansion of telehealth coverage, precipitated by the onset of the pandemic in March 2020. During the first year of the pandemic, telehealth use among Medicare enrollees increased from 840,000 visits in 2019 to 52 million in 2020.7 However, beyond telehealth, significant variation persists in insurance coverage for digital health tools such as monitoring devices (eg, continuous glucose, blood pressure, heart rate), behavioral change programs (eg, medication adherence apps), and exercise tools (eg, virtual reality gaming).

For tools that have been proven to impact health outcomes, variable insurance coverage threatens to widen disparities in care. For example, a report by the American Diabetes Association showed that, despite evidence that patients who use continuous glucose monitoring achieve lower HbA1c levels with less hypoglycemia, commercial health insurance is 2 to 5 times more likely than Medicaid to cover the costs of continuous glucose monitoring.8 Among those with Medicaid, Black and Hispanic patients were less likely than White patients to receive continuous glucose monitoring. In addition, large racial biases accrue when health insurers use health care costs rather than illness to predict who needs extra care, because unequal access to care means that less money is spent caring for Black patients than for White patients.9

While digital health tools may offer tremendous opportunities to bridge gaps in care, universal insurance reimbursement for all digital health tools is not necessarily the answer either. The selection of which programs and tools insurance reimburses has equity implications as well. For example, the language used, the intuition required, and the design elements of an app or digital device all influence the likelihood of adoption among different patient groups. Critical examination of the potential for equitable adoption is needed to ensure that increased insurance coverage does not itself widen disparities.

Impact of AI on data and digital inclusion

Addressing AI-induced health care inequity will require improved digital infrastructure, including larger data sets, and a reduction in the sampling biases and lack of representation in current data sets, which imbue patterns of bias and discrimination into data distributions. Current data suggest substantial disparities in the data sources used to develop clinical AI models, in the representation of specialties, and in authors’ gender, nationality, and expertise.4 Another potential confounder is the effect of disease prevalence on the negative predictive value, which should be kept in mind when evaluating new AI algorithms. Additionally, with AI algorithms, the validation cohort is key to applying the results to an intended use population. The accuracy of AI products and devices is validated by comparing their performance within nonrandomized groups of patients, unlike traditional randomized controlled trials.10 Biased AI design and deployment practices fuel imbalances that lead to biased AI systems. Biased clinical decision-making from unequal AI algorithms will exacerbate unequal access and resource allocation, further widening the digital divide and increasing health inequality and discrimination compounded by COVID-19.
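The dependence of predictive values on prevalence follows from Bayes’ rule and can be illustrated with a short calculation. The sketch below is illustrative only: the sensitivity and specificity of 0.90 and the three prevalence values are hypothetical and are not taken from any cited study. It shows that a test with fixed sensitivity and specificity yields a lower negative predictive value (and a higher positive predictive value) as the condition becomes more common in the population tested.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a binary test using Bayes' rule.

    All arguments are proportions between 0 and 1.
    """
    true_pos = sensitivity * prevalence
    false_neg = (1.0 - sensitivity) * prevalence
    true_neg = specificity * (1.0 - prevalence)
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Hypothetical test characteristics, held fixed across populations.
SENSITIVITY = 0.90
SPECIFICITY = 0.90

# Hypothetical prevalence values, e.g. a screening cohort vs. a referral cohort.
for prevalence in (0.01, 0.10, 0.40):
    ppv, npv = predictive_values(SENSITIVITY, SPECIFICITY, prevalence)
    print(f"prevalence={prevalence:.2f}  PPV={ppv:.3f}  NPV={npv:.3f}")
```

Under these assumptions, an algorithm validated in a low-prevalence cohort would report a negative predictive value that does not carry over to a higher-prevalence population, which is one reason the composition of the validation cohort matters.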

Biased health care delivery

Systemic inequalities are instilled in societies and health care systems, which has made generalized biases difficult to define. Rather than AI reducing biases over time, biased medical AI and biased medical practice are likely to interact and reinforce each other in the absence of coordinated intervention.

The first step in developing interventions is understanding which biases exist; some have been discussed above. There may be multiple biases within health care delivery, including racial, gender, SES, and language bias. Racial bias could occur when the data set for the AI algorithm covers only a specific racial or ethnic group. Different diseases and illnesses have different effects on women and men; if the AI algorithm does not incorporate these gender differences, its output can be inaccurate. Clinicians’ bias related to patients’ SES transfers into the training data for the AI model and results in inequalities in the output. People with low incomes have a higher health risk than people with high incomes, so if the data are collected only from an expensive private clinic, the AI model will become biased.11

Language bias can be introduced when AI models use audio data to diagnose chronic disease. If these models are not trained with a wide range of accents, their outputs can be biased. For example, an AI algorithm trained only on speech from people born and raised in the United States may disadvantage immigrants who speak English with other accents.

We may not be able to develop AI algorithms that are completely unbiased, but we can improve the range of data collection to reduce bias in health care delivery.

To ensure that data collection includes a diverse population, collaboration between the public and private sectors should be encouraged.

Future steps

The use of AI in health care has the potential to bring significant benefits to patients and clinicians. The rapid increase in the number of scientific publications and the volume of research makes keeping up with medical advances increasingly difficult, potentially leading to more practice variation between providers. AI tools that augment and assist clinical practice could help clinicians provide more efficient, higher-quality care to their patients.

The data used need to represent “what should be” and not “what is.” Data governance should be mandated and enforced to regulate AI algorithms and modeling processes and ensure that they are ethical. AI models should be evaluated to ensure that the true accuracy and false positive rate are consistent when comparing different groups by gender, ethnicity, or age. Analyzing the construction of validation cohorts is key when interpreting AI models, since the area under the receiver operating characteristic curve, a measure of model performance, can change with the controls selected yet can determine the clinical pathway chosen.
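A subgroup evaluation of this kind can be sketched in a few lines. The example below is a minimal illustration under stated assumptions, not a validated fairness audit: the function name `rates_by_group`, the group labels "A" and "B", and the eight toy records are all hypothetical. It computes accuracy and false positive rate separately for each group so that gaps between groups become visible.

```python
from collections import defaultdict


def rates_by_group(y_true, y_pred, groups):
    """Compute accuracy and false positive rate separately for each group.

    y_true, y_pred: sequences of 0/1 labels and 0/1 model predictions.
    groups: sequence of group labels (e.g. gender, ethnicity, or age band).
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1 and pred == 1:
            key = "tp"
        elif truth == 0 and pred == 1:
            key = "fp"
        elif truth == 0 and pred == 0:
            key = "tn"
        else:
            key = "fn"
        counts[group][key] += 1

    results = {}
    for group, c in counts.items():
        total = sum(c.values())
        negatives = c["fp"] + c["tn"]
        results[group] = {
            "accuracy": (c["tp"] + c["tn"]) / total,
            # Undefined when a group has no true negatives or false positives.
            "false_positive_rate": c["fp"] / negatives if negatives else float("nan"),
        }
    return results


# Hypothetical toy data: four patients in group "A", four in group "B".
y_true = [1, 0, 1, 0, 1, 0, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

metrics = rates_by_group(y_true, y_pred, groups)
for group, values in metrics.items():
    print(group, values)
```

In practice each subgroup would need enough patients for these rates to be estimated reliably; with small groups, apparent differences may reflect noise rather than bias.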

Funding support and author disclosures

The authors have reported that they have no relationships relevant to the contents of this paper to disclose.

Footnotes

The authors attest they are in compliance with human studies committees and animal welfare regulations of the authors’ institutions and Food and Drug Administration guidelines, including patient consent where appropriate.

References

- 1. Wadhera R.K., Figueroa J.F., Rodriguez F., et al. Racial and ethnic disparities in heart and cerebrovascular disease deaths during the COVID-19 pandemic in the United States. Circulation. 2021;143(24):2346–2354. doi: 10.1161/CIRCULATIONAHA.121.054378.

- 2. Liao T.F., De Maio F. Association of social and economic inequality with coronavirus disease 2019 incidence and mortality across US counties. JAMA Netw Open. 2021;4(1). doi: 10.1001/jamanetworkopen.2020.34578.

- 3. Alexander G.C., Tajanlangit M., Heyward J., et al. Use and content of primary care office-based vs telemedicine care visits during the COVID-19 pandemic in the US. JAMA Netw Open. 2020;3(10). doi: 10.1001/jamanetworkopen.2020.21476.

- 4. Celi L.A., Cellini J., Charpignon M.L., et al. Sources of bias in artificial intelligence that perpetuate healthcare disparities-a global review. PLoS Digit Health. 2022;1(3). doi: 10.1371/journal.pdig.0000022.

- 5. Quer G., Arnaout R., Henne M., Arnaout R. Machine learning and the future of cardiovascular care: JACC state-of-the-art review. J Am Coll Cardiol. 2021;77(3):300–313. doi: 10.1016/j.jacc.2020.11.030.

- 6. Currie G., Hawk K.E., Rohren E., Vial A., Klein R. Machine learning and deep learning in medical imaging: intelligent imaging. J Med Imaging Radiat Sci. 2019;50(4):477–487. doi: 10.1016/j.jmir.2019.09.005.

- 7. U.S. DHHS Report. https://aspe.hhs.gov/sites/default/files/documents/a1d5d810fe3433e18b192be42dbf2351/medicare-telehealth-report.pdf

- 8. American Diabetes Association. Health Management Associates, 2021. Health Equity and Diabetes Technology: A Study of Access to Continuous Glucose Monitors by Payer and Race Executive Summary.

- 9. Obermeyer Z., Powers B., Vogeli C., Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. 2019;366(6464):447–453. doi: 10.1126/science.aax2342.

- 10. Sanders W.E., Khedraki R., Rabbat M., et al. Machine learning algorithms: selection of appropriate validation populations for cardiology research—Be careful! JACC: Adv. 2023;2(1).

- 11. Gianfrancesco M.A., Tamang S., Yazdany J., Schmajuk G. Potential biases in machine learning algorithms using electronic health record data. JAMA Intern Med. 2018;178(11):1544–1547. doi: 10.1001/jamainternmed.2018.3763.