Studies that show a significant effect of treatment are more likely to be published, be published in English, be cited by other authors, and produce multiple publications than other studies.1–8 Such studies are therefore also more likely to be identified and included in systematic reviews, which may introduce bias.9 Low methodological quality of studies included in a systematic review is another important source of bias.10

All these biases are more likely to affect small studies than large ones. The smaller a study, the larger the treatment effect necessary for the results to be significant. The greater investment of time and money in larger studies means that they are more likely to be of high methodological quality and to be published even if their results are negative. Bias in a systematic review may therefore become evident through an association between the size of the treatment effect and study size. Such associations may be examined both graphically and statistically.
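The link between study size and the effect needed for significance can be made concrete with a short calculation. The sketch below uses the large-sample (Woolf) approximation to the standard error of a log odds ratio, evaluated from expected cell counts at the null (equal risks in both arms). The 20% event risk, the trial sizes and the function names are illustrative assumptions, not values from the article.

```python
import math


def se_log_or(n_per_arm, risk_treated, risk_control):
    """Approximate SE of the log odds ratio for a two-arm trial, using
    Woolf's formula with expected cell counts n*p and n*(1 - p) per arm."""
    var = (1.0 / (n_per_arm * risk_treated * (1.0 - risk_treated))
           + 1.0 / (n_per_arm * risk_control * (1.0 - risk_control)))
    return math.sqrt(var)


def significance_threshold_or(se, z_crit=1.96):
    """Largest odds ratio below 1 that reaches two-sided P < 0.05:
    the log odds ratio must lie below -z_crit * SE."""
    return math.exp(-z_crit * se)


# Hypothetical trials with a 20% event risk in both arms, differing only in size
for n in (50, 200, 800):
    se = se_log_or(n, 0.2, 0.2)
    print(f"{n} per arm: SE {se:.3f}, OR must fall below {significance_threshold_or(se):.2f}")
```

Each fourfold increase in size halves the standard error, so the odds ratio needed for significance moves from about 0.38 (50 per arm) to 0.61 (200) and 0.78 (800): small trials reach significance only when they happen to show large effects.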

Summary points

Asymmetrical funnel plots may indicate publication bias or be due to exaggeration of treatment effects in small studies of low quality

Bias is not the only explanation for funnel plot asymmetry; funnel plots should be seen as a means of examining “small study effects” (the tendency for the smaller studies in a meta-analysis to show larger treatment effects) rather than a tool for diagnosing specific types of bias

Statistical methods may be used to examine the evidence for bias and to examine the robustness of the conclusions of the meta-analysis in sensitivity analyses

“Correction” of treatment effect estimates for bias should be avoided as such corrections may depend heavily on the assumptions made

Multivariable models may be used, with caution, to examine the relative importance of different types of bias

Graphical methods for detecting bias

Funnel plots

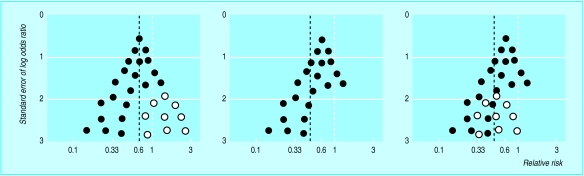

Funnel plots were first used in educational research and psychology.11 They are simple scatter plots of the treatment effects estimated from individual studies (horizontal axis) against some measure of study size (vertical axis). Because precision in estimating the underlying treatment effect increases as a study's sample size increases, effect estimates from small studies scatter more widely at the bottom of the graph, with the spread narrowing among larger studies. In the absence of bias the plot therefore resembles a symmetrical inverted funnel (fig 1 (left)).

Figure 1.

Hypothetical funnel plots: left, symmetrical plot in absence of bias (open circles are smaller studies showing no beneficial effects); centre, asymmetrical plot in presence of publication bias (smaller studies showing no beneficial effects are missing); right, asymmetrical plot in presence of bias due to low methodological quality of smaller studies (open circles are small studies of inadequate quality whose results are biased towards larger effects). Solid line is pooled odds ratio and dotted line is null effect (1). Pooled odds ratios exaggerate treatment effects in presence of bias
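The funnel outline described above is often drawn as pseudo 95% confidence limits: at each standard error, an unbiased study's estimate should fall within the pooled estimate plus or minus 1.96 standard errors about 95% of the time, so the limits narrow as studies get larger. The sketch below assumes effects on the log odds ratio scale, a known pooled estimate and hypothetical data; the function names are illustrative, not from any library.

```python
def funnel_limits(pooled, ses, z=1.96):
    """Pseudo 95% confidence limits (pooled +/- z * SE) at each standard
    error; plotted against SE they trace the outline of the funnel."""
    return [(se, pooled - z * se, pooled + z * se) for se in ses]


def outside_funnel(effects, ses, pooled, z=1.96):
    """Indices of studies whose estimate lies outside the pseudo limits."""
    return [i for i, (y, se) in enumerate(zip(effects, ses))
            if abs(y - pooled) > z * se]


# Hypothetical log odds ratios and standard errors, pooled log OR assumed -0.25
effects = [-0.25, -0.5, 0.0, -1.25]
ses = [0.125, 0.25, 0.25, 0.25]
for se, lower, upper in funnel_limits(-0.25, [0.1, 0.25, 0.5]):
    print(f"SE {se:.2f}: {lower:.2f} to {upper:.2f}")
print("outside limits:", outside_funnel(effects, ses, -0.25))
```

The limits ignore uncertainty in the pooled estimate and any between-study heterogeneity, which is why they are described as "pseudo" limits.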

Ratio measures of treatment effect (relative risk or odds ratio) are plotted on a logarithmic scale, so that effects of the same magnitude but in opposite directions, for example 0.5 and 2, are equidistant from 1.0.12 Treatment effects have generally been plotted against sample size or log sample size. However, the statistical power of a trial is determined by both the sample size and the number of participants developing the event of interest, so the standard error is generally a good choice of measure of study size. Plotting against precision (1/standard error) emphasises differences between larger studies, which may be useful in some situations. Guidelines on the choice of axis in funnel plots are presented elsewhere.13
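Why the standard error reflects both sample size and number of events is visible in Woolf's large-sample formula for the standard error of a log odds ratio, the square root of 1/a + 1/b + 1/c + 1/d, where a to d are the four cells of the 2×2 table: the smallest cells, usually the event counts, dominate. The sketch below applies it to two hypothetical trials of equal size. It assumes no zero cells; a continuity correction (for example adding 0.5 to each cell) is commonly used otherwise but is not implemented here.

```python
import math


def log_odds_ratio(events_t, n_t, events_c, n_c):
    """Log odds ratio and its standard error (Woolf's method) from a 2x2 table."""
    a, b = events_t, n_t - events_t      # treated: events, non-events
    c, d = events_c, n_c - events_c      # control: events, non-events
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, se


# Two hypothetical trials with 100 patients per arm but different event rates
common = log_odds_ratio(10, 100, 20, 100)   # 10% versus 20% events
rare = log_odds_ratio(2, 100, 4, 100)       # 2% versus 4% events
print("common events: log OR %.3f, SE %.3f" % common)
print("rare events:   log OR %.3f, SE %.3f" % rare)

# On the log scale OR 0.5 and OR 2 are equidistant from OR 1: log(0.5) == -log(2)
```

Both trials have 200 participants, but with fewer events the standard error roughly doubles (about 0.88 against 0.42), so the rare-event trial is plotted as a much less precise study.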

Reporting bias—for example, because smaller studies showing no statistically significant beneficial effect of the treatment (open circles in fig 1 (left)) remain unpublished—leads to an asymmetrical appearance with a gap in the bottom right of the funnel plot (fig 1 (centre)). In this situation the combined effect from meta-analysis overestimates the treatment's effect.14,15 Smaller studies are, on average, conducted and analysed with less methodological rigour than larger ones, so that asymmetry may also result from the overestimation of treatment effects in smaller studies of lower methodological quality (fig 1 (right)).
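The mechanism described above can be reproduced with a handful of hypothetical studies. In the sketch below the log odds ratios are invented and symmetric about -0.25, pooling uses fixed-effect inverse-variance weights, and the selection rule (studies with a standard error of 0.5 or more are published only if they show a significant benefit) is an assumption chosen to mimic fig 1 (centre).

```python
def pooled_fixed(effects, ses):
    """Fixed-effect (inverse-variance weighted) pooled estimate."""
    weights = [1.0 / se ** 2 for se in ses]
    return sum(w * y for w, y in zip(weights, effects)) / sum(weights)


def is_published(effect, se, small_se=0.5, z_crit=1.96):
    """Hypothetical selection rule: larger studies (SE below small_se) are
    always published; smaller ones only if they show a statistically
    significant benefit (log OR / SE below -1.96)."""
    return se < small_se or effect / se < -z_crit


# Hypothetical log odds ratios, symmetric about -0.25 before any selection
effects = [-0.25, -0.5, 0.0, -1.25, 0.75]
ses = [0.125, 0.25, 0.25, 0.5, 0.5]

published = [(y, se) for y, se in zip(effects, ses) if is_published(y, se)]
all_studies = pooled_fixed(effects, ses)
published_only = pooled_fixed([y for y, _ in published], [se for _, se in published])
print(f"all studies: {all_studies:.3f}, published only: {published_only:.3f}")
```

The small, non-significant study with a log odds ratio of 0.75 drops out, and the pooled log odds ratio moves from -0.25 (odds ratio about 0.78) to -0.29 (about 0.75), overstating the benefit.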

Alternative explanations of funnel plot asymmetry

It is important to realise that funnel plot asymmetry may have causes other than bias.14 Heterogeneity between trials leads to asymmetry if the true treatment effect is larger in the smaller trials. For example, if a combined outcome is considered then substantial benefit may be seen only in patients at high risk for the component of the combined outcome affected by the intervention.16,17 Trials conducted in patients at high risk also tend to be smaller because of the difficulty in recruiting such patients and because increased event rates mean that smaller sample sizes are required to detect a given effect. Some interventions may have been implemented less thoroughly in larger trials, which thus show decreased treatment effects. For example, an asymmetrical funnel plot was found in a meta-analysis of trials examining the effect of comprehensive geriatric assessment on mortality. An experienced consultant geriatrician was more likely to be actively involved in the smaller trials, and this may explain the larger treatment effects observed in these trials.14,18

Other sources of funnel plot asymmetry are discussed elsewhere.19 Because publication bias is only one of the possible reasons for asymmetry, the funnel plot should be seen as a means of examining “small study effects” (the tendency for the smaller studies in a meta-analysis to show larger treatment effects). The presence of funnel plot asymmetry should lead to consideration of possible explanations and may bring into question the interpretation of the overall estimate of treatment effect from a meta-analysis.

Examining biological plausibility

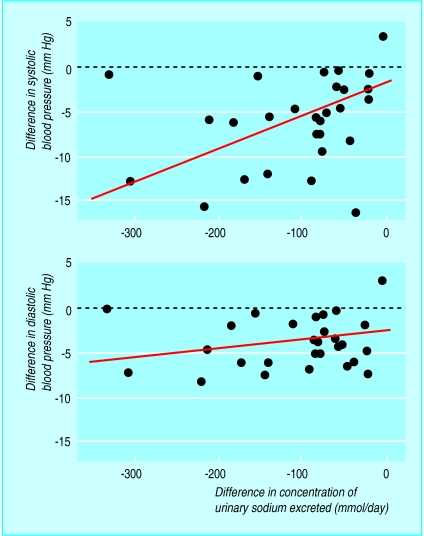

In some circumstances the possible presence of bias can be examined through markers of adherence to treatment, such as drug metabolites in patients' urine or markers of the biological effects of treatment such as the achieved reduction in cholesterol concentration in trials of cholesterol lowering drugs. If patients' adherence to an effective treatment varies across trials this should result in corresponding variation in treatment effects. Scatter plots of treatment effect against adherence should be compatible with there being no treatment effect at 0% adherence, and so a simple regression line should intercept the vertical axis at zero treatment effect. If a scatter plot indicates a treatment effect even when no patients adhere to treatment then bias is a possible explanation. Such plots provide an analysis that is independent of study size. For example, in a meta-analysis of trials examining the effect of reducing dietary sodium on blood pressure Midgley et al plotted the reduction in blood pressure against the reduction in urinary sodium concentration for each study and performed a linear regression analysis (fig 2).20 The results show a reduction in blood pressure even in the absence of a reduction in urinary sodium concentration, which may indicate the presence of bias.
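The regression described above can be sketched as a weighted least squares fit, with each trial weighted by the inverse variance of its treatment effect. The data below are hypothetical (not Midgley et al's), and the standard error of the intercept assumes the weights are exact inverse variances, with no residual heterogeneity and no error in the marker, which are simplifications.

```python
import math


def weighted_regression(x, y, weights):
    """Weighted least squares fit of y = intercept + slope * x.
    Returns (intercept, slope, se_intercept); the standard error treats the
    weights as known inverse variances (fixed-effect meta-regression)."""
    total = sum(weights)
    x_bar = sum(w * xi for w, xi in zip(weights, x)) / total
    y_bar = sum(w * yi for w, yi in zip(weights, y)) / total
    sxx = sum(w * (xi - x_bar) ** 2 for w, xi in zip(weights, x))
    sxy = sum(w * (xi - x_bar) * (yi - y_bar) for w, xi, yi in zip(weights, x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    se_intercept = math.sqrt(1 / total + x_bar ** 2 / sxx)
    return intercept, slope, se_intercept


# Hypothetical trials: reduction in urinary sodium (mmol/24 h), reduction in
# blood pressure (mm Hg) and the variance of each blood pressure effect
sodium_reduction = [20, 50, 80, 120]
bp_reduction = [2.1, 3.2, 5.3, 6.8]
variances = [4.0, 2.0, 1.0, 2.5]

b0, b1, se0 = weighted_regression(sodium_reduction, bp_reduction, [1 / v for v in variances])
print(f"intercept {b0:.2f} mm Hg (95% CI {b0 - 1.96 * se0:.2f} to {b0 + 1.96 * se0:.2f}), slope {b1:.3f}")
```

A confidence interval for the intercept that excludes zero corresponds to the pattern in fig 2: a predicted fall in blood pressure even with no change in sodium intake.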

Figure 2.

Regression lines, adjusted for number of measurements of urinary sodium concentration, of predicted change in blood pressure for change in concentration of urinary sodium from randomised controlled trials of reduction in dietary sodium. Intercepts indicate decline in blood pressure even if diets in intervention and control groups were identical, which may indicate presence of bias. Modified from Midgley et al20

Statistical methods for detecting and correcting for bias

Selection models

“Selection models” to detect publication bias model the selection process that determines which results are published, based on the assumption that the study's P value affects its probability of publication.21–23 The methods can be extended to estimate treatment effects, corrected for the estimated publication bias,24 but avoiding strong assumptions about the nature of the selection mechanism means that a large number of studies is required so that a sufficient range of P values is included. Published applications include a meta-analysis of trials of homoeopathy and correction of estimates of the association between passive smoking and lung cancer.25,26 The complexity of the methods and the large number of studies needed probably explain why selection models have not been widely used in practice.

Copas proposed a model in which the probability that a study is included in a meta-analysis depends on its standard error.27 Because there are not enough data to choose a single “best” model, he advocates sensitivity analyses in which the value of the estimated treatment effect is computed under a range of assumptions about the severity of the selection bias: these show how the estimated effect varies as the assumed amount of selection bias increases. Application of the method to epidemiological studies of environmental tobacco smoke and lung cancer suggests that publication bias may explain some of the association observed in meta-analyses of these studies.28
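Copas's model links the probability of selection to a study's standard error through a latent variable and is more involved than a short example allows. The sketch below instead illustrates the same sensitivity-analysis idea with a simpler, swapped-in model: a fixed-effect step-function selection model with a single cut-point at one-sided P=0.05, in which non-significant results are published with relative probability omega. Omega is not estimated but fixed at a range of values, and the treatment effect is re-estimated by maximum likelihood (a crude grid search) for each. The data and function names are hypothetical, and beneficial effects are assumed to be negative log odds ratios.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def log_likelihood(theta, effects, ses, omega, z_crit=1.645):
    """Log likelihood, up to terms not involving theta, of a fixed-effect
    step-function selection model. Results significant at one-sided P < 0.05
    in the beneficial (negative) direction are always published; others are
    published with relative probability omega (0 < omega <= 1). Each observed
    study's own weight (1 or omega) does not depend on theta and is dropped."""
    total = 0.0
    for y, se in zip(effects, ses):
        z = (y - theta) / se
        total -= 0.5 * z * z                               # normal log density
        p_sig = norm_cdf(-z_crit - theta / se)             # P(y / se < -z_crit | theta)
        total -= math.log(omega + (1.0 - omega) * p_sig)   # selection normaliser
    return total


def adjusted_estimate(effects, ses, omega, lo=-3.0, hi=3.0, step=0.001):
    """Maximum likelihood estimate of theta for a fixed omega (grid search)."""
    n_steps = int(round((hi - lo) / step))
    grid = [lo + i * step for i in range(n_steps + 1)]
    return max(grid, key=lambda t: log_likelihood(t, effects, ses, omega))


# Hypothetical published log odds ratios and their standard errors
effects = [-0.25, -0.5, 0.0, -1.25]
ses = [0.125, 0.25, 0.25, 0.5]

# Sensitivity analysis: re-estimate under increasingly severe assumed selection
for omega in (1.0, 0.5, 0.2):
    print(f"omega = {omega}: pooled log OR = {adjusted_estimate(effects, ses, omega):.3f}")
```

With omega = 1 (no selection) the estimate is the usual fixed-effect one; as omega falls, the estimate moves towards the null, showing how far the conclusion depends on the assumed severity of selection rather than providing a single "corrected" answer.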

The “correction” of effect estimates when publication bias is assumed to be present is problematic and a matter of ongoing debate. Results may depend heavily on the modelling assumptions used. Many factors may affect the probability of publication of a given set of results, and it is difficult, if not impossible, to model these adequately. Furthermore, publication bias is only one of the possible explanations for associations between treatment effects and study size. It is therefore prudent to restrict the use of statistical methods that model selection mechanisms to identifying bias rather than correcting for it.29

Trim and fill

Duval and Tweedie have proposed “trim and fill”, a method based on adding studies to a funnel plot so that it becomes symmetrical.30–32 Smaller studies are omitted until the funnel plot is symmetrical (trimming). The trimmed funnel plot is used to estimate the true “centre” of the funnel, and then the omitted studies and their missing “counterparts” around the centre are replaced (filling). This provides an estimate of the number of missing studies and an adjusted treatment effect that includes the “filled” studies. A recent study that applied the trim and fill method to 48 meta-analyses estimated that 56% of them had at least one missing study, and that in 10 the number of missing studies was statistically significant.33 However, simulation studies have found that the trim and fill method detects “missing” studies in a substantial proportion of meta-analyses, even in the absence of bias.34 Thus there is a danger that, in many meta-analyses, applying the method could mean adding and adjusting for non-existent studies in response to funnel plot asymmetry arising from nothing more than random variation.
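The trimming and filling steps can be sketched as follows. This is a minimal version under stated assumptions, not a reference implementation: it uses fixed-effect weights (the analysis in fig 3 used random effects), Duval and Tweedie's L0 estimator of the number of missing studies, and assumes that studies are missing on the right of the plot, as in fig 1 (centre), where benefit corresponds to negative log odds ratios. Published implementations differ in such details; the function names are hypothetical.

```python
def pooled_fixed(effects, ses):
    """Fixed-effect (inverse-variance weighted) pooled estimate."""
    weights = [1.0 / se ** 2 for se in ses]
    return sum(w * y for w, y in zip(weights, effects)) / sum(weights)


def average_ranks(values):
    """1-based ranks, with tied values given the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def l0_estimator(effects, centre):
    """L0 estimate of the number of missing studies, assuming the excess
    studies lie to the left of the centre (missing ones to the right)."""
    n = len(effects)
    deviations = [centre - y for y in effects]      # positive = left of centre
    ranks = average_ranks([abs(d) for d in deviations])
    t_n = sum(r for r, d in zip(ranks, deviations) if d > 0)
    return max(0, round((4 * t_n - n * (n + 1)) / (2 * n - 1)))


def trim_and_fill(effects, ses, max_iter=100):
    """Return (k0, original estimate, adjusted estimate) on the log OR scale."""
    n = len(effects)
    order = sorted(range(n), key=lambda i: effects[i])   # most negative first
    k0 = 0
    for _ in range(max_iter):
        kept = order[k0:]                                 # trim the k0 most extreme
        centre = pooled_fixed([effects[i] for i in kept], [ses[i] for i in kept])
        new_k0 = min(n - 1, l0_estimator(effects, centre))
        if new_k0 == k0:
            break
        k0 = new_k0
    kept = order[k0:]
    centre = pooled_fixed([effects[i] for i in kept], [ses[i] for i in kept])
    # Fill: mirror each trimmed study about the trimmed centre, same SE
    filled = [2 * centre - effects[i] for i in order[:k0]]
    filled_ses = [ses[i] for i in order[:k0]]
    adjusted = pooled_fixed(list(effects) + filled, list(ses) + filled_ses)
    return k0, pooled_fixed(effects, ses), adjusted


# Hypothetical log odds ratios in which small trials show larger benefits
print(trim_and_fill([-0.25, -0.5, 0.0, -1.25, -1.5], [0.125, 0.5, 0.5, 1.0, 1.0]))
```

Because the filled studies mirror the trimmed ones, the adjusted fixed-effect estimate equals the centre of the trimmed funnel; the method will also "fill" studies whenever chance alone makes the plot lopsided, which is the false positive problem noted above.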

Statistical methods for detecting funnel plot asymmetry

An alternative approach, which does not attempt to define the selection process leading to publication, is to examine associations between study size and estimated treatment effects. Begg and Mazumdar proposed a rank correlation method to examine the association between the effect estimates and their variances (or, equivalently, their standard errors),35 whereas Egger et al introduced a linear regression approach, which is equivalent to a weighted regression of treatment effect (for example, log odds ratio) on its standard error, with weights inversely proportional to the variance of the effect size.14 Because each of these approaches looks for an association between the study's treatment effect and its standard error, they can be seen as statistical analogues of funnel plots. The regression method is more sensitive than the rank correlation approach, but the sensitivity of both methods is generally low in meta-analyses based on fewer than 20 trials.36
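Both tests can be sketched in a few lines. The version of Egger et al's test below uses its original formulation, regressing the standard normal deviate (effect/SE) on precision (1/SE) by ordinary least squares, with the intercept measuring asymmetry; this is equivalent to the weighted regression of effect on standard error described above. The Begg and Mazumdar statistic is Kendall's tau between standardised deviates (each effect minus the pooled estimate, divided by the square root of its variance minus the variance of the pooled estimate) and the variances. P values are not computed here: Egger's intercept would be referred to a t distribution with n - 2 degrees of freedom, and tau to its usual normal approximation. Data and function names are hypothetical.

```python
import math


def egger_test(effects, ses):
    """Regress effect / SE on 1 / SE by ordinary least squares.
    Returns (intercept, SE of intercept, slope); an intercept away from
    zero suggests funnel plot asymmetry."""
    n = len(effects)
    x = [1 / se for se in ses]                           # precision
    z = [y / se for y, se in zip(effects, ses)]          # standard normal deviate
    x_bar, z_bar = sum(x) / n, sum(z) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z)) / sxx
    intercept = z_bar - slope * x_bar
    rss = sum((zi - intercept - slope * xi) ** 2 for xi, zi in zip(x, z))
    se_intercept = math.sqrt(rss / (n - 2) * (1 / n + x_bar ** 2 / sxx))
    return intercept, se_intercept, slope


def begg_tau(effects, ses):
    """Kendall's tau between standardised deviates and variances
    (tied pairs contribute zero)."""
    variances = [se ** 2 for se in ses]
    weights = [1 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    v_pooled = 1 / sum(weights)
    t_star = [(y - pooled) / math.sqrt(v - v_pooled) for y, v in zip(effects, variances)]
    n = len(effects)
    s = 0
    for i in range(n):
        for j in range(i + 1, n):
            product = (t_star[i] - t_star[j]) * (variances[i] - variances[j])
            s += (product > 0) - (product < 0)           # concordant minus discordant
    return s / (n * (n - 1) / 2)


# Hypothetical log odds ratios in which smaller trials show larger benefits
effects = [-0.25, -0.5, 0.0, -1.25, -1.5]
ses = [0.125, 0.5, 0.5, 1.0, 1.0]
b0, b0_se, b1 = egger_test(effects, ses)
print(f"Egger intercept {b0:.2f} (SE {b0_se:.2f}); Begg tau {begg_tau(effects, ses):.2f}")
```

With only five studies, as here, neither test has much power, which is the limitation noted in the text.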

An obvious extension is to consider study size as one of several different possible explanations for heterogeneity between studies in multivariable “meta-regression” models.37,38 For example, the effects of study size, adequacy of randomisation, and type of blinding might be examined simultaneously. Three notes of caution are necessary. Firstly, in standard regression models inclusion of large numbers of covariates (overfitting) is unwise, particularly if the sample size is small. In meta-regression the number of data points corresponds to the number of studies, which is often fewer than 10.36 Thus tests for an association between treatment effect and large numbers of study characteristics may lead to spurious claims of association. Secondly, all associations found in such analyses are observational and may be confounded by other factors. Thirdly, regression analyses using averages of patient characteristics from each trial (for example, patients' mean age) can give misleading impressions of the relation for individual patients: the “ecological fallacy.”39

Meta-regression can also be used to examine associations between clinical outcomes and markers of adherence to, or the biological effects of, treatment, weighting appropriately for study size. As discussed, a non-zero intercept may indicate bias or a treatment effect that is not mediated through the marker. The error in estimating the effect of treatment should be incorporated in such models: Daniels and Hughes discuss this and propose a bayesian estimation procedure, which has been applied in a study of CD4 cell count as a surrogate end point in clinical trials of HIV.40,41

Case study

Is the effect of homoeopathy due to the placebo effect?

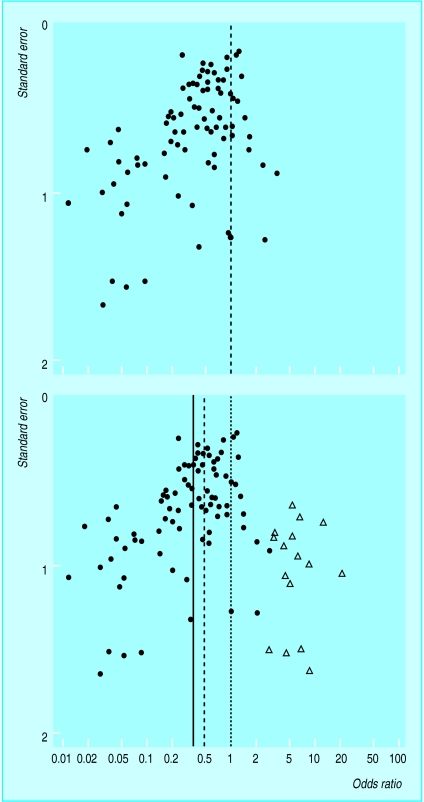

The placebo effect is a popular explanation for the apparent efficacy of homoeopathic remedies.42–44 Linde et al addressed this question in a systematic review and meta-analysis of 89 published and unpublished reports of randomised placebo controlled trials of homoeopathy.25 They did an extensive literature search and quality assessment that covered dimensions of internal validity known to be associated with treatment effects.10

The funnel plot of the 89 trials is clearly asymmetrical (fig 3 (top)), and both the rank correlation and the weighted regression tests indicated clear asymmetry (P<0.001). The authors used a selection model to correct for publication bias and found that the odds ratio was increased from 0.41 (95% confidence interval 0.34 to 0.49) to 0.56 (0.32 to 0.97, P=0.037).22,24 They concluded that the clinical effects of homoeopathy were unlikely to be due to placebo.25 Similar results are obtained with the trim and fill method (fig 3 (bottom)), which adds 16 studies to the funnel plot, leading to an adjusted odds ratio of 0.52 (0.43 to 0.63). These methods do not, however, allow simultaneously for other sources of bias. It may be more reasonable to conclude that methodological flaws led to exaggeration of treatment effects in the published trials than to assume that there are unpublished trials showing substantial harm caused by homoeopathy (fig 3 (bottom)).

Figure 3.

Asymmetrical funnel plot of 89 randomised controlled trials comparing homoeopathic medicine with placebo identified by Linde et al25 (top) and application of the “trim and fill” method (bottom). Solid circles represent the 89 trials and open diamonds “filled” studies. Solid line is original (random effects) estimate of pooled odds ratio (0.41), dashed line is adjusted estimate (0.52, including filled studies), and dotted line is null value (1)

The table shows the results from meta-regression analyses of associations between trial characteristics and the estimated effect of homoeopathy. Results are presented as ratios of odds ratios: ratios of less than 1 correspond to a smaller odds ratio for trials with the characteristic and hence a larger apparent benefit of homoeopathy. For example, in univariable analysis the ratio of odds ratios was 0.24 (95% confidence interval 0.12 to 0.46) if the assessment of outcome was not adequately blinded, implying that such trials showed much greater protective effects of homoeopathy. In the multivariable analysis shown in the table there was clear evidence from the asymmetry coefficient that treatment effects were larger in smaller studies and in studies with inadequate blinding of outcome assessment. There was also a tendency for larger treatment effects in trials published in languages other than English.

MARK OLDROYD

Summary recommendations on investigating and dealing with publication and other biases in a meta-analysis

Examining for bias

Check for funnel plot asymmetry with graphical and statistical methods

Use meta-regression to look for associations between key measures of trial quality and size of treatment effect

Use meta-regression to examine other possible explanations for heterogeneity

If available, examine associations between size of treatment effect and changes in biological markers or patients' adherence to treatment

Dealing with bias

If there is evidence of bias, report this with the same prominence as any combined estimate of treatment effect

Consider sensitivity analyses to establish whether the estimated treatment effect is robust to reasonable assumptions about the effect of bias

Consider excluding studies of lower quality

If sensitivity analyses show that a review's conclusions could be seriously affected by bias, then consider recommending that the evidence to date be disregarded

The largest trials of homoeopathy (those with the smallest standard error) that were also double blind and had adequate concealment of randomisation show no effect. The evidence is thus compatible with the hypothesis that the clinical effects of homoeopathy are completely due to placebo and that the effects observed in Linde et al's meta-analysis are explained by a combination of publication bias and inadequate methodological quality of trials. We emphasise, however, that these results cannot prove that the apparent benefits of homoeopathy are due to bias.

Conclusions

Prevention is better than cure. In conducting a systematic review and meta-analysis, investigators should make strenuous efforts to find all published studies and search for unpublished work. The quality of component studies should also be carefully assessed.10 The box shows summary recommendations on examining for, and dealing with, bias in meta-analysis. Selection models for publication bias are likely to be of most use in sensitivity analyses in which the robustness of a meta-analysis to possible publication bias is assessed. Funnel plots should be used in most meta-analyses to provide a visual assessment of whether the estimates of treatment effect are associated with study size. Statistical methods may be used to examine the evidence for funnel plot asymmetry and competing explanations for heterogeneity between studies. The power of these methods is, however, limited, particularly for meta-analyses based on a small number of small studies. The results of such meta-analyses should always be treated with caution.

Statistically combining data from new trials with a body of flawed evidence does not remove bias. However, there is currently no consensus to guide clinical practice or future research when a systematic review suggests that the evidence to date is unreliable for one or more of the reasons discussed here. If there is clear evidence of bias, and if sensitivity analyses show that this could seriously affect a review's conclusions, then reviewers should recommend that some or all of the evidence to date be disregarded. Future reviews could then be based on new, high quality evidence. Improvements in the conduct and reporting of trials, prospective registration, and easier access to data from published and unpublished studies45,46 mean that bias will hopefully be a diminishing problem in future systematic reviews and meta-analyses.

Table.

Multivariable meta-regression analysis of bias in 89 placebo controlled trials of homoeopathy

| Study characteristic | Ratio of odds ratios* (95% CI) | P value |
|---|---|---|
| Asymmetry coefficient† | 0.20 (0.11 to 0.37) | <0.001 |
| Language (non-English versus English) | 0.73 (0.55 to 0.98) | 0.038 |
| Study quality: | | |
| Allocation concealment (not adequate versus adequate) | 0.98 (0.73 to 1.30) | 0.87 |
| Blinding (not double blind versus double blind) | 0.35 (0.20 to 0.60) | <0.001 |
| Handling of withdrawals (not adequate versus adequate) | 1.10 (0.80 to 1.51) | 0.56 |
| Publication type (not indexed by Medline versus indexed by Medline) | 0.91 (0.67 to 1.25) | 0.57 |

*Odds ratio with characteristic divided by odds ratio without characteristic. Ratios below 1 correspond to smaller treatment odds ratio for trials with characteristic and hence larger apparent benefit of homoeopathic treatment.

†Ratio of odds ratios per unit increase in standard error of log odds ratio, which measures funnel plot asymmetry.
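The footnotes imply a model that is linear on the log odds ratio scale, so each coefficient acts multiplicatively on a trial's odds ratio. As a worked reading of two rows of the table (the standard error difference of 0.5 is chosen only for illustration):

$$
\log \mathrm{OR}_i = \beta_0 + \beta_{\mathrm{SE}}\,\mathrm{SE}_i + \beta_{\mathrm{blind}}\,x_{\mathrm{blind},i} + \cdots,
\qquad
\text{ratio of odds ratios} = e^{\beta}
$$

$$
\frac{\mathrm{OR}_{\text{not double blind}}}{\mathrm{OR}_{\text{double blind}}} = e^{\beta_{\mathrm{blind}}} = 0.35,
\qquad
\frac{\mathrm{OR}(\mathrm{SE}_i + 0.5)}{\mathrm{OR}(\mathrm{SE}_i)} = \left(e^{\beta_{\mathrm{SE}}}\right)^{0.5} = 0.20^{0.5} \approx 0.45
$$

With other characteristics held constant, a trial whose log odds ratio has a standard error 0.5 larger would be predicted to have an odds ratio about 0.45 times as large, that is, a considerably greater apparent benefit of homoeopathy.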

Acknowledgments

We thank Klaus Linde and Julian Midgley for unpublished data.

This is the second in a series of four articles

Footnotes

Series editor: Matthias Egger

Competing interests:

Systematic Reviews in Health Care: Meta-analysis in Context can be purchased through the BMJ Bookshop (www.bmjbookshop.com); further information and updates for the book are available (www.systematicreviews.com)

References

- 1.Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet. 1991;337:867–872. doi: 10.1016/0140-6736(91)90201-y.

- 2.Dickersin K, Min YI, Meinert CL. Factors influencing publication of research results: follow-up of applications submitted to two institutional review boards. JAMA. 1992;267:374–378.

- 3.Stern JM, Simes RJ. Publication bias: evidence of delayed publication in a cohort study of clinical research projects. BMJ. 1997;315:640–645. doi: 10.1136/bmj.315.7109.640.

- 4.Egger M, Zellweger-Zähner T, Schneider M, Junker C, Lengeler C, Antes G. Language bias in randomised controlled trials published in English and German. Lancet. 1997;350:326–329. doi: 10.1016/S0140-6736(97)02419-7.

- 5.Gøtzsche PC. Reference bias in reports of drug trials. BMJ. 1987;295:654–656.

- 6.Ravnskov U. Cholesterol lowering trials in coronary heart disease: frequency of citation and outcome. BMJ. 1992;305:15–19. doi: 10.1136/bmj.305.6844.15.

- 7.Tramèr MR, Reynolds DJ, Moore RA, McQuay HJ. Impact of covert duplicate publication on meta-analysis: a case study. BMJ. 1997;315:635–640. doi: 10.1136/bmj.315.7109.635.

- 8.Gøtzsche PC. Multiple publication of reports of drug trials. Eur J Clin Pharmacol. 1989;36:429–432. doi: 10.1007/BF00558064.

- 9.Egger M, Dickersin K, Davey Smith G. Problems and limitations in conducting systematic reviews. In: Egger M, Davey Smith G, Altman DG, editors. Systematic reviews in health care: meta-analysis in context. 2nd ed. London: BMJ Books; 2001.

- 10.Jüni P, Altman DG, Egger M. Assessing the quality of controlled clinical trials. BMJ. 2001;323:42–46. doi: 10.1136/bmj.323.7303.42.

- 11.Light RJ, Pillemer DB. Summing up: the science of reviewing research. Cambridge: Harvard University Press; 1984.

- 12.Galbraith RF. A note on graphical presentation of estimated odds ratios from several clinical trials. Stat Med. 1988;7:889–894. doi: 10.1002/sim.4780070807.

- 13.Sterne JAC, Egger M. Funnel plots for detecting bias in meta-analysis: guidelines on choice of axis. J Clin Epidemiol. 2001 (in press).

- 14.Egger M, Davey Smith G, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997;315:629–634. doi: 10.1136/bmj.315.7109.629.

- 15.Villar J, Piaggio G, Carroli G, Donner A. Factors affecting the comparability of meta-analyses and the largest trials results in perinatology. J Clin Epidemiol. 1997;50:997–1002. doi: 10.1016/s0895-4356(97)00148-0.

- 16.Davey Smith G, Egger M. Who benefits from medical interventions? Treating low risk patients can be a high risk strategy. BMJ. 1994;308:72–74. doi: 10.1136/bmj.308.6921.72.

- 17.Glasziou PP, Irwig LM. An evidence based approach to individualising treatment. BMJ. 1995;311:1356–1359. doi: 10.1136/bmj.311.7016.1356.

- 18.Stuck AE, Siu AL, Wieland GD, Adams J, Rubenstein LZ. Comprehensive geriatric assessment: a meta-analysis of controlled trials. Lancet. 1993;342:1032–1036. doi: 10.1016/0140-6736(93)92884-v.

- 19.Sterne JAC, Egger M, Davey Smith G. Investigating and dealing with publication and other biases. In: Egger M, Davey Smith G, Altman DG, editors. Systematic reviews in health care: meta-analysis in context. 2nd ed. London: BMJ Books; 2001.

- 20.Midgley JP, Matthew AG, Greenwood CMT, Logan AG. Effect of reduced dietary sodium on blood pressure. A meta-analysis of randomized controlled trials. JAMA. 1996;275:1590–1597. doi: 10.1001/jama.1996.03530440070039.

- 21.Dear KBG, Begg CB. An approach to assessing publication bias prior to performing a meta-analysis. Stat Sci. 1992;7:237–245.

- 22.Hedges LV. Modeling publication selection effects in meta-analysis. Stat Sci. 1992;7:246–255.

- 23.Iyengar S, Greenhouse JB. Selection models and the file drawer problem. Stat Sci. 1988;3:109–135.

- 24.Vevea JL, Hedges LV. A general linear model for estimating effect size in the presence of publication bias. Psychometrika. 1995;60:419–435.

- 25.Linde K, Clausius N, Ramirez G, Melchart D, Eitel F, Hedges LV, et al. Are the clinical effects of homoeopathy placebo effects? A meta-analysis of placebo-controlled trials. Lancet. 1997;350:834–843. doi: 10.1016/s0140-6736(97)02293-9.

- 26.Givens GH, Smith DD, Tweedie RL. Publication bias in meta-analysis: a Bayesian data-augmentation approach to account for issues exemplified in the passive smoking debate. Stat Sci. 1997;12:221–250.

- 27.Copas J. What works?: selectivity models and meta-analysis. J R Stat Soc A. 1999;162:95–109.

- 28.Copas JB, Shi JQ. Reanalysis of epidemiological evidence on lung cancer and passive smoking. BMJ. 2000;320:417–418. doi: 10.1136/bmj.320.7232.417.

- 29.Begg CB. Publication bias in meta-analysis. Stat Sci. 1997;12:241–244.

- 30.Taylor SJ, Tweedie RL. Practical estimates of the effect of publication bias in meta-analysis. Aust Epidemiol. 1998;5:14–17.

- 31.Duval SJ, Tweedie RL. Trim and fill: a simple funnel plot based method of testing and adjusting for publication bias in meta-analysis. Biometrics. 2001 (in press).

- 32.Duval SJ, Tweedie RL. A non-parametric “trim and fill” method of assessing publication bias in meta-analysis. J Am Stat Assoc. 2001 (in press).

- 33.Sutton AJ, Duval SJ, Tweedie RL, Abrams KR, Jones DR. Empirical assessment of effect of publication bias on meta-analyses. BMJ. 2000;320:1574–1577. doi: 10.1136/bmj.320.7249.1574.

- 34.Sterne JAC, Egger M. High false positive rate for trim and fill method [rapid response to (www.bmj.com/cgi/eletters/320/7249/1574#EL1)] (accessed 30 March 2001).

- 35.Begg CB, Mazumdar M. Operating characteristics of a rank correlation test for publication bias. Biometrics. 1994;50:1088–1101.

- 36.Sterne JAC, Gavaghan D, Egger M. Publication and related bias in meta-analysis: power of statistical tests and prevalence in the literature. J Clin Epidemiol. 2000;53:1119–1129. doi: 10.1016/s0895-4356(00)00242-0.

- 37.Thompson SG, Sharp SJ. Explaining heterogeneity in meta-analysis: a comparison of methods. Stat Med. 1999;18:2693–2708. doi: 10.1002/(sici)1097-0258(19991030)18:20<2693::aid-sim235>3.0.co;2-v.

- 38.Thompson SG. Why and how sources of heterogeneity should be investigated. In: Egger M, Davey Smith G, Altman DG, editors. Systematic reviews in health care: meta-analysis in context. 2nd ed. London: BMJ Books; 2001.

- 39.Morgenstern H. Uses of ecologic analysis in epidemiologic research. Am J Public Health. 1982;72:1336–1344. doi: 10.2105/ajph.72.12.1336.

- 40.Daniels MJ, Hughes MD. Meta-analysis for the evaluation of potential surrogate markers. Stat Med. 1997;16:1965–1982. doi: 10.1002/(sici)1097-0258(19970915)16:17<1965::aid-sim630>3.0.co;2-m.

- 41.Hughes MD, Daniels MJ, Fischl MA, Kim S, Schooley RT. CD4 cell count as a surrogate endpoint in HIV clinical trials: a meta-analysis of studies of the AIDS Clinical Trials Group. AIDS. 1998;12:1823–1832. doi: 10.1097/00002030-199814000-00014.

- 42.Maddox J, Randi J, Stewart WW. “High-dilution” experiments a delusion. Nature. 1988;334:287–291. doi: 10.1038/334287a0.

- 43.Gøtzsche PC. Trials of homeopathy. Lancet. 1993;341:1533.

- 44.Vandenbroucke JP. Homoeopathy trials: going nowhere. Lancet. 1997;350:824. doi: 10.1016/S0140-6736(97)22038-6.

- 45.Moher D, Schulz KF, Altman DG, for the CONSORT Group. The CONSORT statement: revised recommendations for improving the quality of reports of parallel group randomized trials. Lancet. 2001 (in press).

- 46.Lefebvre C, Clarke M. Identifying randomised trials. In: Egger M, Davey Smith G, Altman DG, editors. Systematic reviews in health care: meta-analysis in context. 2nd ed. London: BMJ Books; 2001.