Abstract

Syntax, the abstract structure of language, is a hallmark of human cognition. Despite its importance, its neural underpinnings remain obscured by inherent limitations of non-invasive brain measures and a near total focus on comprehension paradigms. Here, we address these limitations with high-resolution neurosurgical recordings (electrocorticography) and a controlled sentence production experiment. We uncover three syntactic networks that are broadly distributed across traditional language regions, but with focal concentrations in middle and inferior frontal gyri. In contrast to previous findings from comprehension studies, these networks process syntax mostly to the exclusion of words and meaning, supporting a cognitive architecture with a distinct syntactic system. Most strikingly, our data reveal an unexpected property of syntax: it is encoded independent of neural activity levels. We propose that this “low-activity coding” scheme represents a novel mechanism for encoding information, reserved for higher-order cognition more broadly.

Keywords: ECoG, syntax, language production, neural coding schemes

1. Introduction

Syntax, the abstract structure underlying uniquely human behaviors like language, music [1-5], and possibly math [6, 7], is the evolutionary adaptation that sets our species’ cognition apart. In language, syntactic representations take the form of abstract structural “rules” like Sentence = Subject + Verb + Object, which we use to communicate infinite meanings with finite words. Despite its centrality in human cognition, it continues to elude a satisfactory neural characterization, in large part due to a number of contradictory findings in the literature.

One area of disagreement is localization: where and how syntax is spatially encoded. Traditionally, syntax was approached from a localizationist perspective, and indeed certain anatomical regions are frequently associated with syntax, especially inferior frontal gyrus (IFG) [8-18] and posterior temporal lobe [8-15, 19-22]. However, a growing number of studies have identified syntactic processing throughout broad swaths of cortex outside these traditional hubs [17, 22-25], suggesting the localizationist view may be insufficient and pointing instead to a highly distributed code. Relatedly, there is significant disagreement regarding selectivity: the degree to which syntax overlaps with words and meaning, which has important theoretical implications (see [25, 26] for discussion). Findings of syntax-selective regions are abundant in the literature [11-13, 22, 27-34], although the precise locations and functions identified vary widely across studies. However, recent work using more advanced and targeted methods has reported extensive spatial overlap in these systems [22-25, 35-37].

These discrepancies likely reflect a number of factors. For one, different studies define syntax differently, and may in fact be studying different phenomena. While syntax is often treated as a monolith in neuroscience, it is in fact a complex system involving multiple types of representations (e.g., function words, hierarchical “tree” structures, word categories) and processes (e.g., agreement, sequencing, and binding). Some experimental designs target the depth of syntactic processing by manipulating the complexity of hierarchical structures [9, 17, 23]. Others have manipulated the presence or absence of syntactic structure by comparing sentences to unstructured lists of words [9, 35, 37-44], targeting the system as a whole. A second potential source of the discrepancies is confounding, a nearly inevitable problem when studying an inherently abstract system [45]. Complexity manipulations also systematically vary working memory demand, and sentence-list comparisons vary combinatorial semantics. While creative solutions have been employed to mitigate these concerns, any manipulation of syntax inevitably entails variation along some additional dimension.

Here, we take a focused approach, aiming to understand the neural underpinnings of hierarchical structural representations rather than, e.g., syntactic processing as a whole. We leverage an approach from lower-level sensory neuroscience, where considerable advances have been made in mapping sensory cortices by contrasting representations like phonemes [46] and visual gradients [47, 48]. We compare two syntactic representations: the active and the passive. These hierarchical tree structures can express the same meaning by arranging the same content words (e.g., Dracula, spray, chicken) in reverse orders (active: “Dracula sprayed the chicken”; passive: “The chicken was sprayed by Dracula”), thereby varying syntax while holding words and meaning constant. We account for potential differences in difficulty and articulation with signal processing and modeling techniques, mitigating concerns about confounds with frequency or difficulty.

We also fill a number of other critical gaps in the literature. First, with few exceptions [21, 49-51], the vast majority of prior research has studied comprehension. However, comprehension as a modality introduces a number of complications for studying syntax. Because comprehension is an ongoing process of building and assessing candidate syntactic structures, it is highly dynamic [11, 52, 53]. Fine temporal granularity is therefore very hard to achieve in comprehension, particularly when looking at individual representations whose activation may be relatively fleeting. Perhaps most problematically, syntax is often ignored altogether during comprehension in favor of less effortful strategies (e.g., relying on context) [54-57]. Consequently, the lack of selectivity reported by some studies may reflect a computational shortcut based on context rather than an inherent property of syntax. Second, experimental paradigms comparing sentences and lists have become a dominant approach to studying syntax [9, 35, 37-44]. However, processing even single words engages syntax [58, 59], calling into question the effectiveness of these comparisons in isolating syntax. Finally, previous research has relied primarily on non-invasive neuroimaging and electrophysiology, which provide either high spatial or high temporal resolution, but not both [60, 61].

Here we address these gaps by employing a controlled sentence production experiment while sampling neural data directly from cortex in ten neurosurgical patients. Electrocorticography (ECoG), which is virtually immune to motor artifacts, provides simultaneously high spatial and temporal resolution. In contrast to comprehension, the syntactic structure of an utterance is selected prior to speech onset in production [11, 53, 62, 63] and remains invariant over time. In our stimuli, this is logically ensured by the fact that the subject (i.e., first word) depends on the choice of structure, making it impossible to begin speaking without a priori structure assignment. Together, these methods afford unparalleled resolution, and enable us to introduce a fine-grained manipulation that varies syntactic frames while holding semantics and lexical elements largely constant. We directly contrast this manipulation with the traditional approach of comparing sentences and lists and show that the sentence-list comparison misses not just some, but the majority of electrodes that are truly sensitive to syntax. We demonstrate that this derives from a striking and previously uncharacterized property of higher-level cognitive representations, which we term “low-activity coding,” whereby processing is independent of the overall degree of neural activity. The combination of ECoG, a production paradigm, and a controlled syntactic manipulation allows us to capture a number of important and previously unknown properties of this hallmark aspect of human cognition.

2. Results

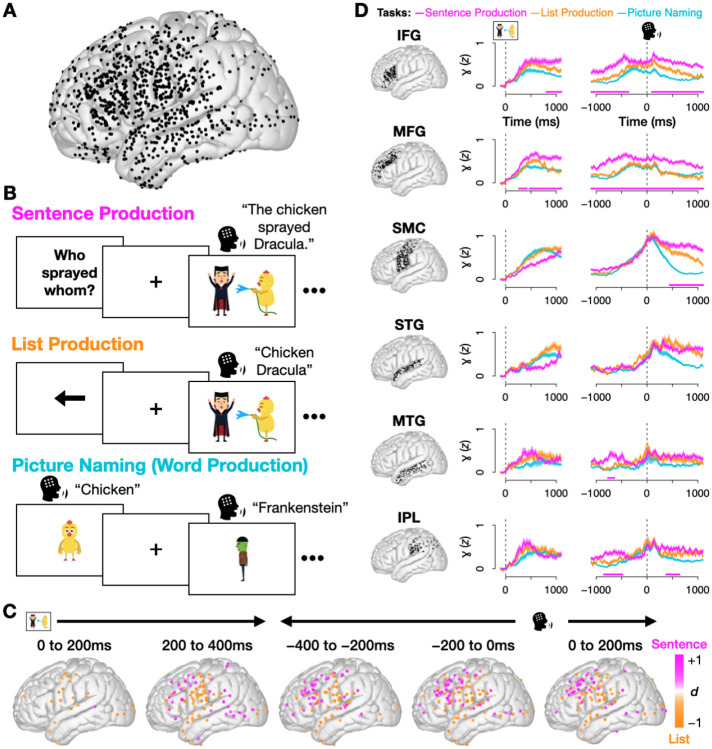

Ten neurosurgical patients with electrode coverage of left peri-Sylvian cortex (Fig. 1A) performed a sentence production task and two control tasks: list production and picture naming (Fig. 1B). During sentence production, patients overtly described cartoon vignettes depicting transitive actions (e.g., poke, scare) in response to a preceding question. Questions were manipulated to use either active (“Who poked whom?”) or passive syntax (“Who was poked by whom?”), implicitly priming patients to respond with the same structure (“The chicken poked Dracula” or “Dracula was poked by the chicken”) [64]. This manipulation varied syntactic structure, while holding semantic and lexical content largely constant. During the list production control task, participants saw the same vignettes as in the sentence production trials, but preceded by an arrow indicating the direction in which participants should list the characters: left-to-right (e.g., “chicken Dracula”) or right-to-left (“Dracula chicken”), mimicking the reverse order of nouns in active and passive sentences. We quantified neural activity as high gamma broadband activity (70–150 Hz), normalized (z-scored) to each trial’s 200 ms pre-stimulus baseline, because this measure correlates with underlying spiking and BOLD signal [65, 66] and has been widely employed in the ECoG literature. Parallel analyses of beta-band activity, which is also associated with cognition [67, 68], appear in Supplemental Information A.2.
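As a rough illustration of the normalization described above, the sketch below extracts a 70–150 Hz amplitude envelope and z-scores each trial against its own 200 ms pre-stimulus window. It is a minimal numpy-only sketch under stated assumptions: an idealized FFT-domain band-pass and analytic signal stand in for the actual filtering pipeline (not specified in this section), and the function names `band_envelope` and `high_gamma_z`, as well as the synthetic signal, are hypothetical.

```python
import numpy as np


def band_envelope(x, fs, lo, hi):
    """Amplitude envelope of x within [lo, hi] Hz (last axis = time).

    Idealized sketch: keep only positive frequencies inside the band,
    double them to form the analytic signal, and take its magnitude.
    """
    n = x.shape[-1]
    freqs = np.fft.fftfreq(n, d=1.0 / fs)
    spectrum = np.fft.fft(x, axis=-1)
    in_band = (freqs >= lo) & (freqs <= hi)  # positive-frequency band only
    analytic = np.fft.ifft(np.where(in_band, 2.0 * spectrum, 0.0), axis=-1)
    return np.abs(analytic)


def high_gamma_z(trials, fs, stim_idx, baseline_ms=200, band=(70.0, 150.0)):
    """Z-score each trial's high gamma envelope to its own pre-stimulus baseline.

    trials: (n_trials, n_samples) voltage for one electrode; stimulus onset at stim_idx.
    """
    env = band_envelope(trials, fs, *band)
    n_base = int(round(baseline_ms * fs / 1000))
    base = env[:, stim_idx - n_base:stim_idx]
    mu = base.mean(axis=1, keepdims=True)
    sd = base.std(axis=1, keepdims=True)
    return (env - mu) / sd


# Synthetic check: noise everywhere plus a 100 Hz burst after stimulus onset.
rng = np.random.default_rng(0)
fs, stim_idx = 1000, 500
t = np.arange(2000) / fs
trials = rng.normal(size=(5, t.size))
trials[:, stim_idx:] += 5.0 * np.sin(2 * np.pi * 100 * t[stim_idx:])
z = high_gamma_z(trials, fs, stim_idx)
```

A brick-wall filter like this one rings near abrupt onsets; in practice a smoother band-pass (often a bank of narrower bands followed by a Hilbert transform) would likely be preferred.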

Fig. 1.

(A) Coverage across all 10 patients (1256 electrodes after exclusions, see Methods Sec. 4.2). (B) Experimental design: Participants completed three overt production tasks. During Sentence Production trials, they produced sentences to respond to preceding questions. Questions appeared with either active (“Who sprayed whom?”) or passive syntax (“Who was sprayed by whom?”), implicitly priming patients to respond with active (“The chicken sprayed Dracula”) or passive syntax (“Dracula was sprayed by the chicken”). During List Production, a control task, participants saw the same stimuli from the Sentence Production block, but preceded by an arrow indicating the direction in which to order the two characters. During Picture Naming trials, they produced single words. (C) Magnitude of difference in neural activity (Cohen’s d) for all electrodes with a significant difference between sentences and lists, in 200 ms bins (p < .05, FDR-corrected across electrodes, Wilcoxon rank sum test). (D) Mean and standard error of high gamma activity for all three tasks, by region, locked to stimulus (i.e., picture) onset (left column) and production onset (right column). Pink bar at bottom indicates times when sentences had significantly greater activity than lists (p < .05 for at least 100 ms; one-tailed Wilcoxon rank sum test). Region abbreviations: inferior frontal gyrus (IFG), middle frontal gyrus (MFG), sensorimotor cortex (SMC), superior temporal gyrus (STG), middle temporal gyrus (MTG), inferior parietal lobule (IPL).

We began by comparing neural activity between sentence production and the list production control. We looked for differences during the planning period – the time between the onset of the cartoon vignette and speech onset, when syntactic and semantic representations are selected [62, 63]. This identified 60 broadly distributed electrodes with significantly higher activity for sentences (p < .05 for 100 ms, Wilcoxon rank sum test), with sentences recruiting higher activity in the 200 to 400 ms post-stimulus window in posterior temporal areas and the inferior parietal lobule (IPL), and increasing in IFG and middle frontal gyrus (MFG) leading up to production (Fig. 1C). A region-of-interest (ROI) analysis (Fig. 1D) found four regions with significantly higher activity for sentences during the planning period: IFG, MFG, IPL, and middle temporal gyrus (MTG) (p < .05 for 100 ms, permutation test; see Methods Sec. 4.7).
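Throughout, significance is defined as p < .05 sustained for at least 100 ms. A minimal sketch of that run-length criterion follows, assuming per-sample p-values have already been computed (for instance with `scipy.stats.ranksums` at each time sample); `significant_runs` is a hypothetical helper name.

```python
import numpy as np


def significant_runs(pvals, fs, alpha=0.05, min_ms=100):
    """Return (start, stop) sample pairs, stop exclusive, where p < alpha
    holds for at least min_ms consecutive milliseconds."""
    sig = np.asarray(pvals) < alpha
    min_len = int(np.ceil(min_ms * fs / 1000))
    # Pad with False so runs touching either edge still produce an on/off pair.
    padded = np.concatenate(([False], sig, [False])).astype(int)
    edges = np.diff(padded)
    starts = np.flatnonzero(edges == 1)
    stops = np.flatnonzero(edges == -1)
    return [(int(s), int(e)) for s, e in zip(starts, stops) if e - s >= min_len]


# Example at 100 Hz (10 ms per sample): a 50 ms run fails, a 120 ms run passes.
p = np.ones(40)
p[2:7] = 0.01
p[20:32] = 0.01
runs = significant_runs(p, fs=100)
```

How this criterion interacts with correction across electrodes (e.g., the FDR correction in Fig. 1C) is a separate choice that this sketch does not address.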

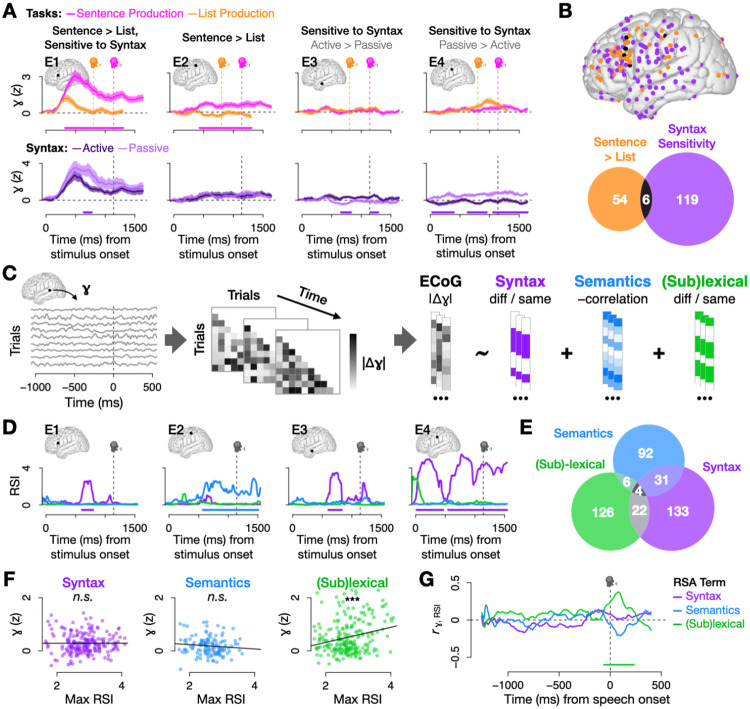

While comparisons of sentences and lists are common, they differ in multiple processes [37-39, 41-43]. In order to specifically test for syntax, we looked for sensitivity to syntactic structure by comparing active and passive sentences. Because active and passive trials had significantly different response times (see Sec. 4.8), we followed [69-71] and temporally warped the data to remove these differences, setting each trial’s response time to the median for that task (see Supplementary Fig. S1). Critically, this did not change the pattern of results in Fig. 1 (see Supplementary Fig. S2 for a replication using the warped data), and demonstrably increased the signal-to-noise ratio in the sentence and list production data (Supplementary Fig. S3). Figure 2A shows the sentence-list and active-passive comparisons for four sample electrodes. Electrodes E1 and E2 showed patterns assumed in the literature: E1 had significantly higher activity for sentences than lists (top plot; p < .05 for 100 ms, Wilcoxon rank sum test) and corresponding differences between active and passive syntax in the same time windows (bottom; p < .05 for 100 ms, Wilcoxon rank sum test). Electrode E2 also had significantly higher sentence activity (p < .05 for 100 ms, Wilcoxon rank sum test), but showed no syntax sensitivity, suggesting involvement in some other process that differs between sentences and lists, such as combinatorial semantics.
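The temporal warping step can be illustrated with a simple piecewise-linear scheme: each trial's stimulus-to-speech interval is resampled so that speech onset falls at the median response time, and samples after speech onset are shifted but not stretched. This is a sketch under that assumption only; the cited procedures [69-71] may differ in detail (for example, in how post-onset data are handled), and `warp_trial` and `warp_to_median` are hypothetical names.

```python
import numpy as np


def warp_trial(signal, fs, rt_ms, target_ms):
    """Stretch or compress the stimulus-to-speech segment of one trial.

    signal: 1-D array starting at stimulus onset.
    Samples after speech onset are appended unchanged (shifted in time only).
    """
    rt = int(round(rt_ms * fs / 1000))
    target = int(round(target_ms * fs / 1000))
    pre = signal[: rt + 1]  # stimulus onset through speech onset, inclusive
    # Sample the original pre-speech segment at target+1 evenly spaced points.
    new_positions = np.linspace(0, rt, target + 1)
    warped_pre = np.interp(new_positions, np.arange(rt + 1), pre)
    return np.concatenate([warped_pre, signal[rt + 1:]])


def warp_to_median(trials, fs, rts_ms):
    """Warp every trial to the median response time and crop to a common length."""
    target_ms = float(np.median(rts_ms))
    warped = [warp_trial(tr, fs, rt, target_ms) for tr, rt in zip(trials, rts_ms)]
    n = min(len(w) for w in warped)
    return np.stack([w[:n] for w in warped]), target_ms


# At 1 kHz, a 10 ms pre-speech segment is stretched to 20 ms.
out = warp_trial(np.arange(15, dtype=float), fs=1000, rt_ms=10, target_ms=20)
```

After warping, speech onset sits at the same sample in every trial, so trial averages and per-sample statistics compare equivalent planning stages.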

Fig. 2.

(A) Four sample electrodes. Top: mean and standard error of high gamma activity by task. Pink bars denote where sentences were significantly higher than lists (p < .05 for 100 ms, Wilcoxon rank sum test). Bottom: mean and standard error of sentence trials split by syntax condition; bars denote significant differences between active vs. passive trials (p < .05 for 100 ms, Wilcoxon rank sum test). (B) 60 electrodes had significantly higher activity for sentences than lists (p < .05 for 100 ms, Wilcoxon rank sum test) and 125 electrodes had significant differences between active and passive trials (p < .05 for 100 ms, Wilcoxon rank sum test). Only 6 electrodes (< 5%) had both. (C) RSA analysis pipeline: For each electrode at each time sample, we modeled the magnitude of differences in high gamma activity for each pair of sentence trials as a function of differences in syntax, event semantics, and (sub)lexical content. (D) Representational Similarity Indices (RSIs; derived from model coefficients) for the same four sample electrodes show evidence for syntax in E1, E3, and E4 and event semantics in E2 (all p < .05 for 100 ms; one-tailed permutation tests). (E) Significant electrodes (p < .05 for 100 ms, permutation test) by RSA term. Most significant electrodes were selective for just one representation. (F-G) Two approaches to assessing the relationship between high gamma activity and RSIs for significant electrodes. In both, syntax and semantics were not significantly related to high gamma, but (sub)lexical processing was. (F) Regressing activity on RSIs at the electrode- and RSI-specific time sample when RSIs peaked (syntax: p = .987, semantics: p = .248, (sub)lexical: p < .001, linear regression). (G) Correlating across electrodes at each time sample (significant (sub)lexical RSI: p < .05 for 100 ms, Spearman correlation).

However, the most prevalent pattern we observed among significant electrodes, exemplified by Electrodes E3 and E4, was unexpected. Despite no significant increase in sentence activity relative to lists, these electrodes showed significant differences between active and passive trials. This combination of sensitivity to syntax without elevated sentence activity violates one of the most fundamental assumptions in the field: that information processing corresponds to increased neural activity. Indeed, while 125 electrodes were in fact sensitive to syntax, only 6 of these (fewer than 5%) were identified by comparing sentences to lists (Fig. 2B). Thus, syntactic processing is not well captured by increased neural activity. In order to quantify and isolate syntax from other sources of variance, we leveraged an analytical technique that combines Representational Similarity Analysis (RSA) and multiple regression (Fig. 2C; Methods Sec. 4.9) [72-75]. We modeled neural dissimilarity as a linear combination of syntactic (active vs. passive), event-semantic (GPT-2 sentence embeddings [76]), and (sub)lexical (same vs. different sentence-initial word) dissimilarity. The (sub)lexical term accounts for variance associated with surface differences between active and passive sentences, such as the presence of the word “by”, although these differences did not appear until the third or fourth word of the sentences (relatively late compared to the period prior to sentence onset, where we concentrated our analyses). We also included response time as a covariate in the model to further account for potential differences in the frequency and/or difficulty of actives and passives. We derived Representational Similarity Indices (RSIs) from the model coefficients to approximate the amount of evidence for syntactic, event-semantic, and (sub)lexical information in each electrode (shown for the four sample electrodes in Fig. 2D).
The syntax RSI accurately captured the presence of syntactic information in E1, E3, and E4, and verified that E2’s higher activity for sentences than lists corresponded to the presence of event semantics (p < .05 for 100 ms, permutation test, for all four electrodes). Across electrodes with any significant RSIs, the majority of electrodes processed only one of the three types of information (Fig. 2E), contradicting claims of a fully interwoven system [24, 35, 36].
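A minimal sketch of the RSA-regression idea for one electrode at one time sample follows. It assumes that neural dissimilarity is the absolute difference in high gamma between two sentence trials (as in Fig. 2C), that semantic dissimilarity is cosine distance between precomputed sentence embeddings (GPT-2 itself is not run here), that the (sub)lexical term codes same vs. different first word, and that the response-time covariate enters as the absolute RT difference between trials. The mapping from coefficients to RSIs (Methods Sec. 4.9) is not reproduced; the standardized coefficients returned here are only a stand-in, and `rsa_coefficients` is a hypothetical name.

```python
import numpy as np


def rsa_coefficients(activity, syntax, embeddings, first_word, rt):
    """Regress pairwise neural dissimilarity on model dissimilarities.

    activity:   (n_trials,) high gamma at one electrode and time sample
    syntax:     (n_trials,) 0 = active, 1 = passive
    embeddings: (n_trials, d) precomputed sentence embeddings
    first_word: (n_trials,) sentence-initial word labels
    rt:         (n_trials,) response times
    Returns standardized coefficients for each predictor.
    """
    i, j = np.triu_indices(len(activity), k=1)  # every unordered trial pair
    neural = np.abs(activity[i] - activity[j])

    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    predictors = {
        "syntax": (syntax[i] != syntax[j]).astype(float),
        "semantics": 1.0 - np.sum(unit[i] * unit[j], axis=1),  # cosine distance
        "sublexical": (first_word[i] != first_word[j]).astype(float),
        "rt": np.abs(rt[i] - rt[j]),  # covariate (assumed pairwise form)
    }
    X = np.column_stack(list(predictors.values()))
    X = (X - X.mean(axis=0)) / X.std(axis=0)  # z-score so coefficients are comparable
    y = (neural - neural.mean()) / neural.std()
    X = np.column_stack([np.ones(len(y)), X])  # intercept
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return dict(zip(predictors, beta[1:]))


# Synthetic electrode whose activity depends only on syntax.
rng = np.random.default_rng(1)
n = 40
syntax = rng.integers(0, 2, n)
activity = 2.0 * syntax + rng.normal(scale=0.5, size=n)
coefs = rsa_coefficients(
    activity,
    syntax,
    embeddings=rng.normal(size=(n, 16)),
    first_word=rng.choice(np.array(["chicken", "Dracula", "ghost", "nun"]), n),
    rt=rng.normal(1100, 150, n),
)
```

Because all terms enter one regression, variance shared with the semantic, (sub)lexical, or RT predictors is not credited to syntax, which is the point of combining RSA with multiple regression.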

Next, to investigate the relationship between degree of neural activity and syntactic processing (or lack of such a relationship, as in Electrodes E3 and E4), we performed a series of analyses comparing each RSI to high gamma. Note that while RSIs index differences in high gamma activity in an electrode, such differences do not require that the electrode exhibit a high degree of activity. This, however, is the general assumption, and predicts that RSIs should be positively correlated with the magnitude of high gamma activity. However, in two analyses we found no evidence that syntactic processing was related to neural activity in this way: not across electrodes at the time when each electrode’s RSI reaches its maximum (Fig. 2F), nor across electrodes at each time sample (Fig. 2G). In contrast, (sub)lexical processing showed the expected result in both analyses: a positive relationship with degree of high gamma activity at the times when the (sub)lexical RSI peaked (p < .001, linear regression), as well as at each time sample (p < .05 for 100 ms, Spearman correlation). Intriguingly, event semantics shared the syntactic pattern of independence from the magnitude of high gamma activity in both analyses.
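The two analyses relating RSIs to high gamma could be sketched as follows, assuming RSIs and trial-averaged high gamma are stored as (electrodes × time) arrays for significant electrodes. The rank computation assumes no tied values, which is reasonable for continuous measures but would otherwise need average ranks (as `scipy.stats.spearmanr` uses); `spearman_by_time` and `values_at_peak` are hypothetical names.

```python
import numpy as np


def spearman_by_time(rsi, hg):
    """Spearman correlation across electrodes, separately at each time sample.

    rsi, hg: (n_electrodes, n_times). Assumes no ties within a time sample.
    """
    r = np.argsort(np.argsort(rsi, axis=0), axis=0).astype(float)
    h = np.argsort(np.argsort(hg, axis=0), axis=0).astype(float)
    r -= r.mean(axis=0)
    h -= h.mean(axis=0)
    return (r * h).sum(axis=0) / np.sqrt((r**2).sum(axis=0) * (h**2).sum(axis=0))


def values_at_peak(rsi, hg):
    """For each electrode, the RSI peak value and high gamma at that same sample."""
    peak = np.argmax(rsi, axis=1)
    rows = np.arange(rsi.shape[0])
    return rsi[rows, peak], hg[rows, peak]


rng = np.random.default_rng(2)
rsi = rng.random((20, 50))
rho_up = spearman_by_time(rsi, rsi**3)  # monotone increasing: rho = 1
rho_down = spearman_by_time(rsi, -rsi)  # monotone decreasing: rho = -1
peak_rsi, hg_at_peak = values_at_peak(rsi, 10 * rsi)
# Regress high gamma on peak RSI across electrodes (Fig. 2F-style fit).
slope, intercept = np.polyfit(peak_rsi, hg_at_peak, 1)
```

Under the "high activity carries information" assumption, both the per-sample correlations and the peak-time slope should be reliably positive; their absence for syntax is what the text reports.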

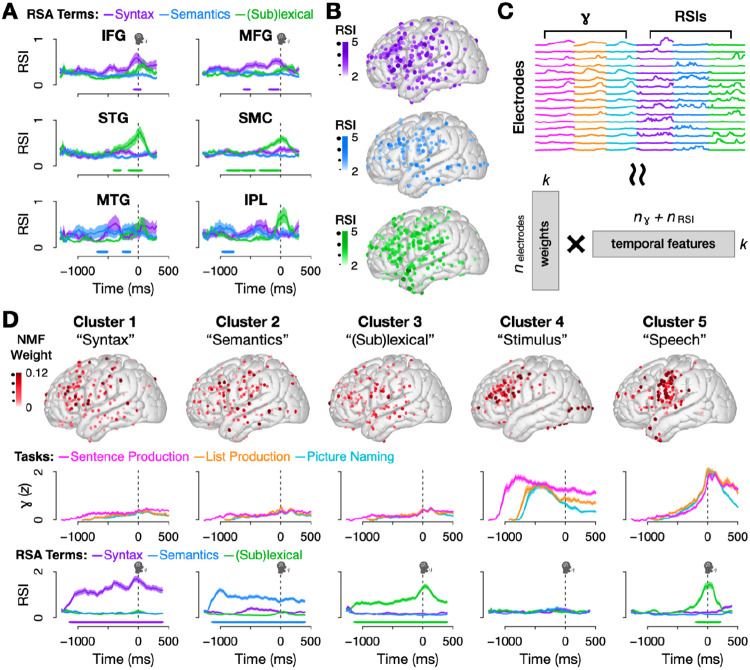

A traditional region-of-interest approach showed limited representational specificity for syntax across IFG and MFG and event-semantics in MTG and IPL (Fig. 3A), as well as (sub)lexical information in SMC and STG (p < .05 for 100 ms, permutation test). However, testing within each electrode revealed that these representations were widely distributed across cortex (see Fig. 3B and Supplementary Fig. S6 for beta band activity).
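Significance of the information indices is assessed with permutation tests. As a simplified, hypothetical illustration (a single correlation stands in for the full multiple regression), one can shuffle the active/passive labels across trials, recompute the association between neural and syntactic dissimilarity, and compare the observed value with the resulting null distribution:

```python
import numpy as np


def syntax_rdm_stat(activity, labels):
    """Pearson correlation between neural and syntactic pairwise dissimilarity."""
    i, j = np.triu_indices(len(activity), k=1)
    neural = np.abs(activity[i] - activity[j])
    model = (labels[i] != labels[j]).astype(float)
    return np.corrcoef(neural, model)[0, 1]


def permutation_pvalue(activity, labels, n_perm=1000, seed=0):
    """One-tailed p-value: how often shuffled labels match or beat the observed fit."""
    rng = np.random.default_rng(seed)
    observed = syntax_rdm_stat(activity, labels)
    null = np.array(
        [syntax_rdm_stat(activity, rng.permutation(labels)) for _ in range(n_perm)]
    )
    # The +1 terms count the observed labeling as one member of the null set.
    return observed, (1 + np.sum(null >= observed)) / (n_perm + 1)


rng = np.random.default_rng(3)
labels = np.repeat([0, 1], 15)
activity = 1.5 * labels + rng.normal(scale=0.5, size=30)
obs, p = permutation_pvalue(activity, labels)
```

In a time-resolved analysis this would be repeated at each time sample and combined with the 100 ms run-length criterion.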

Fig. 3.

(A) Mean and standard error of electrodes’ RSIs by ROI. (B) Significant electrodes (p < .05 for 100 ms; one-tailed permutation test) for all three RSIs; color and size correspond to peak RSI value. (C) Non-negative Matrix Factorization (NMF), a matrix decomposition technique particularly suited for identifying prototypical patterns, was used to cluster electrodes based on their concatenated high gamma activity (trial means for the three tasks) and RSIs. (D) Clustering results. Top: electrode localizations; color and size correspond to NMF weight. Middle: weighted mean and standard error of high gamma for all three experimental tasks. Bottom: weighted mean and standard error of RSIs. Bars denote significance (p < .001 for 100 ms, permutation test).

Given that our findings were not confined to particular cortical hubs, we aimed to characterize these networks without imposing anatomical assumptions on the neural data. We took a data-driven approach using an unsupervised machine learning technique, Non-negative Matrix Factorization (NMF, see Methods Sec. 4.10). This allowed us to cluster electrodes according to prototypical patterns in the combined high gamma and RSI dataset (Fig. 3C), identifying 5 major clusters (Fig. 3D).
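For readers unfamiliar with NMF, the sketch below factorizes a non-negative electrodes × features matrix V into electrode weights W and prototype patterns H using the classic Lee and Seung multiplicative updates, then assigns each electrode to its highest-weighted component. Assumptions: the features would be concatenated trial-mean high gamma and RSIs, which must first be made non-negative (e.g., by shifting; the actual preprocessing is in Methods Sec. 4.10), and the component count, iteration count, and function name `nmf` are illustrative.

```python
import numpy as np


def nmf(V, k, n_iter=2000, seed=0, eps=1e-9):
    """Factor V (n x m, non-negative) as W @ H, minimizing squared Frobenius error."""
    rng = np.random.default_rng(seed)
    n, m = V.shape
    W = rng.random((n, k)) + eps
    H = rng.random((k, m)) + eps
    for _ in range(n_iter):
        # Multiplicative updates keep every entry non-negative.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


# Toy data: 20 "electrodes" built from two prototypes with disjoint features.
rng = np.random.default_rng(4)
proto_a = np.r_[rng.random(5) + 0.5, np.zeros(5)]
proto_b = np.r_[np.zeros(5), rng.random(5) + 0.5]
amps = rng.random(20)[:, None] + 0.5
V = np.vstack([np.tile(proto_a, (10, 1)), np.tile(proto_b, (10, 1))]) * amps
W, H = nmf(V, k=2)
clusters = W.argmax(axis=1)  # hard assignment by largest weight
```

The rows of H play the role of the prototypical profiles plotted in Fig. 3D, and the rows of W give each electrode's weight on each profile.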

The first three clusters were characterized by high information content (RSI) accompanied by very low high gamma activity (Clusters 1-3 in Fig. 3D), replicating the dissociation between syntax and neural activity found at the electrode level (see Fig. 2B). Each of these three clusters was spatially distributed and contained significant information about a single representation type – syntax, event semantics, or (sub)lexical information (p < .05 for 100 ms, permutation test). Temporally, these profiles aligned with theoretical and computational models of sentence production, according to which semantics is processed first, then syntax, and finally (sub)lexical representations [62, 63, 77] (see Fig. 3D, bottom).

In contrast, the last two clusters were characterized by robust high gamma activity and high regional specificity, despite the fact that our unsupervised approach did not have access to electrode locations. Cluster 4 was focused in areas associated with visual processing and executive control, with activity peaking just after stimulus onset, and it did not contain above-chance information about syntax, event semantics, or (sub)lexical information (p > .05, permutation test across all time points after correction for multiple comparisons). Cluster 5 was concentrated in speech-motor cortex and superior temporal gyrus, with activity peaking just after speech onset. It contained above-chance information only for the (sub)lexical RSI (p < .05 for 100 ms, permutation test) at the time when activity peaked, consistent with the correlation between (sub)lexical processing and neural activity (Figs. 2F,G).

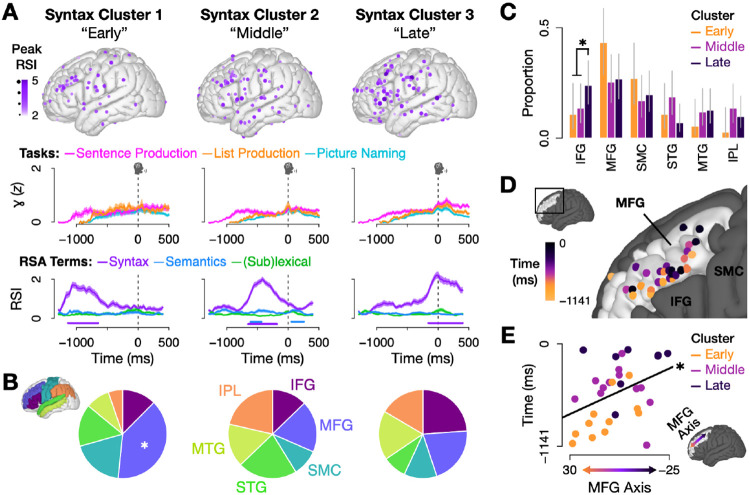

However, while syntax is often treated as a single entity, it in fact consists of multiple processing stages [62, 63, 77]. We asked whether the syntax network we identified might itself show evidence of these temporal stages. We clustered the syntax RSIs from all electrodes with a significant syntax RSI (regardless of neural activity level). This analysis revealed three subnetworks (Early, Middle, and Late) with peaks at 1062 ms, 422 ms, and 16 ms before speech onset, respectively (Fig. 4A). The Early and Late clusters were informationally selective, containing information about syntax (p < .05 for 100 ms, permutation test) but not words or event semantics. The Middle cluster additionally encoded event semantic information (p < .05 for 100 ms, permutation test), suggesting a role in mapping from semantics to syntax. We found two significant patterns in these clusters’ spatial distributions. First, the Early cluster contained more MFG electrodes (16 electrodes, or 43% of the cluster; Fig. 4B) than expected by chance (21%) based on the distribution of the original dataset (FDR-corrected p = .012, binomial test). Second, comparing across clusters, we found a significant increase in IFG electrodes in the Late cluster relative to the Early and Middle clusters (Fig. 4C; FDR-corrected p = .040, binomial test). While IFG is commonly associated with syntax [8-12, 14-19, 78-80], MFG is not. Some recent evidence suggests a role for posterior MFG in higher-order language [19, 81, 82]; however, posterior MFG came online primarily in our Late cluster. The highest concentration of syntax in our data was in anterior MFG in the Early cluster. A closer analysis of the syntactic processing timecourse (Fig. 4D) showed a rostral-caudal gradient within MFG. We verified this observation statistically (Fig. 4E) by regressing MFG electrodes’ peak syntax RSI times on their position along the principal axis of MFG (p = .027, linear regression). This finding suggests a cascade of syntactic information from more anterior areas starting at stimulus onset to more posterior areas just before speech onset.
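The gradient test could be sketched as follows: project MFG electrodes' MNI (y, z) coordinates onto their first principal component, orient that axis so larger values point anterior (larger y), and fit peak syntax RSI time as a linear function of position. The orientation convention and the name `mfg_gradient` are assumptions; `np.polyfit` returns only the fit, so a p-value would come from something like `scipy.stats.linregress`.

```python
import numpy as np


def mfg_gradient(yz, peak_ms):
    """Fit peak time against position along the principal (y, z) axis.

    yz: (n_electrodes, 2) MNI y and z coordinates; peak_ms: (n_electrodes,).
    Returns slope (ms per mm), intercept, and each electrode's projected position.
    """
    centered = yz - yz.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    axis = vt[0]  # first principal component (unit vector)
    if axis[0] < 0:  # SVD sign is arbitrary; make +position point anterior (+y)
        axis = -axis
    position = centered @ axis
    slope, intercept = np.polyfit(position, peak_ms, 1)
    return slope, intercept, position


# Hypothetical electrodes along an oblique line; peaks get earlier anteriorly.
t = np.linspace(-20, 20, 15)
yz = np.column_stack([t, 0.5 * t])
pos_true = t * np.sqrt(1.25)
slope, intercept, position = mfg_gradient(yz, 800 - 10 * pos_true)
```

Under this orientation, a negative slope corresponds to the anterior-to-posterior cascade described above (anterior electrodes peak earlier).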

Fig. 4.

(A) Temporal clusters of electrodes with significant syntax RSIs (p < .05 for 100 ms, permutation test). Columns contain data from only electrodes in each cluster. Top row: Electrode localizations in each of the three clusters; color and size correspond to each electrode’s peak syntax RSI value. Middle row: Weighted mean and standard error of high gamma activity by experimental task (weighted by NMF weights). Bottom row: Weighted mean and standard error of the three RSIs; bars denote significance per term (p < .05 for 100 ms, permutation test). (B) ROI representation by cluster, scaled by each ROI’s representation in the original dataset (i.e., the chance distribution is 6 equal slices). MFG was significantly overrepresented in the Early cluster compared to the original distribution (p = .012, binomial test); no other regions survived multiple comparisons corrections. (C) Proportion of electrodes in each region of interest by cluster (error bars: 95% confidence intervals, Wilson score). Comparing across clusters, there was a significant increase in IFG electrodes in the Late cluster relative to the Early and Middle clusters (padj. = .040, binomial test) and a marginally significant increase in STG electrodes in the Middle cluster relative to the Early and Late clusters (padj. = .053, binomial test). (D) Significant syntax electrodes in MFG, colored by the time when the syntax RSI peaked (median latency from stimulus to speech was 1141 ms). (E) Electrodes’ peak syntax RSI time was significantly predicted by position along the main axis of MFG (p = .027, linear regression) (only including MFG electrodes with significant syntax RSIs). Electrode position was calculated by projecting electrodes onto the main axis of MFG, which was the first principal component of the y and z MNI coordinates for MFG electrodes (see arrow on brain).
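The ROI enrichment results in Fig. 4B–C rest on binomial tests with FDR correction. A minimal standard-library sketch follows, assuming a one-sided test for over-representation and Benjamini–Hochberg as the FDR procedure (this section does not name the procedure); the counts and extra p-values in the usage lines are hypothetical.

```python
from math import comb


def binom_sf(k, n, p):
    """One-sided P(X >= k) for X ~ Binomial(n, p): chance of k or more ROI hits."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def benjamini_hochberg(pvals):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda idx: pvals[idx])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, pvals[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical: 10 of 30 cluster electrodes fall in an ROI whose base rate is 0.2,
# tested alongside two other (hypothetical) ROI p-values.
p_roi = binom_sf(10, 30, 0.2)
adjusted = benjamini_hochberg([p_roi, 0.20, 0.45])
```

The chance rate p is each ROI's share of the original electrode set, which is why Fig. 4B scales ROI counts by their representation in the full dataset.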

3. Discussion

Here, we leveraged the high spatial and temporal resolution of intracranial recordings in order to investigate the dynamics of syntax. We showed that one of the most common approaches, comparing sentences to lists, identified only a small fraction of electrodes that were in fact sensitive to syntax. We then used a representational similarity approach to identify syntactic, event semantic, and (sub)lexical processing in each electrode. In direct contradiction with one of the most widespread assumptions in the field, we found that syntactic and event-semantic processing were uncorrelated with the degree of neural activity measured. That is, while electrodes encoding syntax and event semantics showed differences in activity, those differences did not systematically correspond to times when the overall degree of activity in the electrode was high. This “low-activity coding” scheme explains why the sentence-list comparison failed to identify the majority of syntactic electrodes, and has critical implications for how the field should go about studying syntax. A clustering approach based on electrodes’ neural activity and representational content identified three broadly distributed networks that uniquely encoded either syntax, event semantics, or (sub)lexical information. Lastly, temporal clustering of just syntactic information revealed three syntactic subnetworks, and a cascade of syntactic processing through MFG over the course of sentence planning.

The striking dissociation between syntactic processing and degree of neural activity has profound implications. It suggests a need to reassess common assumptions about the relationship between information processing and the overall degree of neural activity. It also highlights the danger in relying on elevated activity as an indicator for information content. We argue for the importance of fine-grained statistical comparisons and of not excluding low-activity responses [e.g., 76, 83-88]. In terms of experimental design, an important consequence is that sentence-list comparisons may be misleading when it comes to identifying syntax. That is, sentence-list comparisons, which rely on the assumption that syntactic processing elevates neural activity, will only identify a small fraction of syntactic information – just 5% in our data (Fig. 2B). As for why this might be the case, there are a number of possibilities. It may be related to the nature of production, in which case our findings would not necessarily contradict those of previous studies. However, another possibility is that lists do not control for syntax as intended. Although lists differ from the stimuli used in those studies, previous research shows that single words engage syntactic processing [58, 59] and that comprehending apparently ungrammatical sequences of words triggers syntactic analysis [89-91], suggesting that lists might also engage syntax. Moreover, syntactic parsing might be necessary to recognize lists as lacking syntax. If so, then a reasonable alternative hypothesis is that lists engage syntax more than sentences, as the comprehension system struggles to identify a correct underlying parse. This would be consistent with our finding that sentence activity was only greater than list activity in 5% of syntactic electrodes. Regardless, our findings indicate that finer-grained manipulations are necessary to understand syntax more thoroughly (see [92] for further discussion).

The question of selectivity has implications for the cognitive architecture of language, where the degree of separation between syntax, semantics, and the lexicon is a matter of heated debate [13, 15, 25, 45, 93-103]. While various regions have been reported to be selective for syntax [11, 22, 27-34], recent studies taking individual subject anatomy into account have consistently failed to identify syntax selectivity in any region. This lack of evidence has been interpreted as support for so-called “lexicalist” models, where syntax is inextricably linked to particular words [23-25, 35-37]. But we argue that the question of whether any regions are selective for syntax is ill-posed. That is, the critical issue is whether syntax is dissociable from lexical and semantic information in the brain. Given that syntax is encoded in broadly distributed networks rather than regions, the relevant question is whether these networks are selective for syntax. By leveraging production and a direct comparison of syntactic structures, we show that selectivity is in fact the predominant pattern for syntax, both within syntactic clusters (Fig. 4A) and at the level of individual electrodes (Fig. 2E). This finding suggests that syntax is at some level represented independent of words and meaning.

Regarding the spatial distribution of syntax, our findings suggest a hybrid organization, with syntactic electrodes distributed across traditional language regions (Fig. 3B), but also concentrated in IFG and MFG (Figs. 4B,C). This hybrid pattern may explain the ongoing debate in the literature, which often considers only two possibilities: broad distribution or localized hubs. Our analyses also revealed a cascade of syntactic information from anterior to posterior MFG. Posterior MFG (pMFG), including area 55b [104], has previously been implicated in both low-level speech-motor planning [104-110] and high-level syntactic processing [19, 81], although the latter is also consistent with prosodic planning [82]. Interestingly, the highest concentration of syntactic information in our data was in fact in anterior MFG (aMFG), a region not typically associated with language. In contrast to the low-level functions of pMFG, aMFG is primarily associated with executive functions like attention [111-114] and working memory [115, 116], and to some degree lexical semantics [117-121]. One possibility is that the unexpected sensitivity of aMFG to the active/passive distinction reflects task demands. That is, passive sentences may have been harder to produce than actives, and signatures of this may have remained in the data despite our efforts to control for such effects (e.g., by informing patients that there were no right or wrong responses, temporally warping trials, and including response time covariates). However, even if this interpretation accounts for some of the electrodes we identified as syntactic, it would only deepen the mystery of why so few electrodes had higher activity for sentences than lists. Perhaps more so than for syntactic processing, one would expect that regions recruited to process difficult tasks should show elevated activity. Regardless, our analyses would also have identified truly syntactic electrodes.
Given the robustness of the lack of overlap between electrodes sensitive to syntax and those with higher activity for sentences than lists, an executive function interpretation does not undermine our conclusions in general. Another intriguing possibility, consistent with the functional and connectivity attributes of aMFG, is that MFG plays a role in syntactic planning. Specifically, aMFG is connected via white matter tracts to several key language regions including IFG, IPL, and posterior temporal lobe [111], supporting a role in language. Taken together with the known role of pMFG in language, this suggests a processing pipeline where disparate linguistic representations are integrated in anterior portions of MFG and propagated posteriorly to generate a speech-motor plan. The production-specific nature of this function may explain why previous research, predominantly focused on comprehension, has overlooked MFG’s role in syntax.

Finally, our data reveal a distinction in how the brain processes different kinds of information. While (sub)lexical processing was strongly correlated with degree of neural activity, syntax did not show such a relationship. This distinction may reflect a deeper taxonomic generalization. That is, (sub)lexical processing is not alone: low-level sensorimotor processes in general show a similar relationship to neural activity, including vision [47, 48], audition [122, 123], and motor movement [124, 125]. Similarly, syntax was not alone: we found that event-semantic processing also showed no systematic relationship with degree of neural activity. We hypothesize that just as low-level information shares encoding properties, higher-order processing may in general be encoded in low-activity neural populations. Such a divergence in coding schemes could derive from any number of differences between low- and high-level cognitive systems, including their spatial distribution, evolutionary age, or informational complexity. One possibility is that because higher-level representations are generally sustained over longer periods of time, they require a metabolically efficient neural code. In contrast, lower-level representations like phonemes are relatively short-lived [126, 127], so higher firing rates may be sustainable without depleting resources. Taken together, our findings constitute a significant advance in understanding syntax in the brain, providing evidence for widespread representational selectivity and a low-activity coding scheme that may underlie higher-order cognition in general.

4. Methods

4.1. Participants

Ten neurosurgical patients undergoing evaluation for refractory epilepsy participated in the experiment (3 female; mean age: 30 years, range: 20 to 45). All ten were implanted with electrocorticographic grids and strips and provided informed consent to participate. Consent was obtained in writing and then requested again orally before the experiment began. Electrode implantation and location were guided solely by clinical requirements. Eight of the participants were implanted with a standard clinical electrode grid with 10 mm spaced electrodes (Ad-Tech Medical Instrument, Racine, WI). Two participants consented to a research hybrid grid implant (PMT Corporation, Chanhassen, MN) that included 64 additional electrodes between the standard clinical contacts (with overall 10 mm spacing and interspersed 5 mm spaced electrodes over select regions), providing denser sampling but with positioning based solely on clinical needs. The study protocol was approved by the NYU Langone Medical Center Committee on Human Research.

4.2. Data collection and preprocessing

Participants were tested while resting in their hospital bed in the epilepsy monitoring unit. Stimuli were presented on a laptop screen positioned at a comfortable distance from the participant. Participants’ voices were recorded using a cardioid microphone (Shure MX424). Inaudible TTL pulses marking the onset of each stimulus were generated by the experiment computer, split, and recorded in auxiliary channels of both the clinical Neuroworks Quantum Amplifier (Natus Biomedical, Appleton, WI), which records ECoG, and the audio recorder (Zoom H1 Handy Recorder). The microphone signal was also fed to both the audio recorder and the ECoG amplifier. These redundant recordings were used to synchronize the speech, experiment, and neural signals.

The standard implanted ECoG arrays contained 64 macro-contacts (2 mm exposed, 10 mm spacing) in an 8×8 grid. Hybrid grids contained 128 electrode channels, including the standard 64 macro-contacts and 64 additional, smaller interspersed electrodes (1 mm exposed) between the macro-contacts (providing 10 mm center-to-center spacing between macro-contacts and 5 mm center-to-center spacing between micro- and macro-contacts; PMT Corporation, Chanhassen, MN). The FDA-approved hybrid grids were manufactured for research purposes, and a member of the research team explained this to patients during consent. The ECoG arrays were implanted on the left hemisphere for all ten participants. Placement location was dictated solely by clinical care.

ECoG was recorded at 2,048 Hz and decimated to 512 Hz prior to processing and analysis. After rejecting electrodes with artifacts (i.e., line noise, poor contact with the cortex, and high-amplitude shifts), we subtracted a common average reference (computed across all valid electrodes and time) from each individual electrode. Electrodes with interictal and epileptiform activity were removed from the analysis. We then extracted the envelope of the high gamma component (the average of three log-spaced frequency bands from 70 to 150 Hz) from the raw signal using the Hilbert transform. Beta activity was quantified as the envelope of the 12 to 30 Hz band.
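A minimal sketch of this preprocessing chain, assuming a NumPy array of already-cleaned channels (electrodes × samples) and SciPy's filtering tools. The filter family and order (4th-order zero-phase Butterworth), the exact band edges (geometric spacing from 70 to 150 Hz), and the per-sample common average are our assumptions; the text specifies only the sampling rates, the three log-spaced bands between 70 and 150 Hz, the Hilbert envelope, and the 12 to 30 Hz beta band. All function names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, decimate, hilbert, sosfiltfilt

FS_RAW = 2048  # acquisition rate (Hz)
FS = 512       # analysis rate after decimation (Hz)


def common_average_reference(data):
    """Subtract, at every sample, the mean over all valid electrodes.

    data: (n_electrodes, n_samples) with artifactual channels already removed.
    """
    return data - data.mean(axis=0, keepdims=True)


def band_envelope(data, lo, hi, fs=FS, order=4):
    """Zero-phase Butterworth band-pass, then the Hilbert amplitude envelope."""
    sos = butter(order, [lo, hi], btype="bandpass", fs=fs, output="sos")
    filtered = sosfiltfilt(sos, data, axis=-1)
    return np.abs(hilbert(filtered, axis=-1))


def high_gamma(data, fs=FS, lo=70.0, hi=150.0, n_bands=3):
    """Mean envelope over n_bands log-spaced sub-bands spanning lo..hi Hz."""
    edges = np.geomspace(lo, hi, n_bands + 1)  # ~70, 90, 116, 150 Hz
    envelopes = [band_envelope(data, a, b, fs) for a, b in zip(edges[:-1], edges[1:])]
    return np.mean(envelopes, axis=0)


def preprocess(raw):
    """raw: (n_electrodes, n_samples) at FS_RAW. Returns (high_gamma, beta) at FS."""
    x = decimate(raw, FS_RAW // FS, axis=-1)  # 2048 Hz -> 512 Hz with anti-aliasing
    x = common_average_reference(x)
    return high_gamma(x), band_envelope(x, 12.0, 30.0)
```

Computing each sub-band envelope separately before averaging, rather than filtering one wide 70 to 150 Hz band, keeps the lower-power upper frequencies from being swamped by the stronger lower ones.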

The signal was epoched separately, time-locked to stimulus onset (i.e., the cartoon images) and to production onset for each trial. The 200 ms silent period preceding stimulus onset (during which patients were not speaking and were fixating on a cross in the center of the screen) was used as a baseline, and each epoch for each electrode was normalized to this baseline’s mean and standard deviation (i.e., z-scored).
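The baseline normalization can be sketched as follows, assuming that each trial is z-scored against its own 200 ms pre-stimulus window and that the production-locked epoch of a trial reuses that same baseline. Both are our reading of the text; a baseline pooled across trials would be an equally plausible alternative. The function name `baseline_zscore` is hypothetical.

```python
import numpy as np

FS = 512          # Hz
BASELINE_MS = 200


def baseline_zscore(stim_epochs, stim_index, target=None, fs=FS, baseline_ms=BASELINE_MS):
    """Z-score epochs against each trial's own pre-stimulus baseline.

    stim_epochs: (n_trials, n_samples) stimulus-locked data for one electrode.
    stim_index:  sample index of stimulus onset within each stimulus-locked epoch.
    target:      epochs to normalize (e.g., production-locked data from the same
                 trials); defaults to the stimulus-locked epochs themselves.
    """
    n_base = int(round(baseline_ms * fs / 1000))  # 200 ms -> 102 samples at 512 Hz
    base = stim_epochs[:, stim_index - n_base:stim_index]
    mu = base.mean(axis=1, keepdims=True)
    sd = base.std(axis=1, keepdims=True)
    target = stim_epochs if target is None else target
    return (target - mu) / sd
```

After this step, a value of 2 means activity two baseline standard deviations above that trial's pre-stimulus mean, which is what makes activity comparable across electrodes and patients.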

4.3. Experimental Design

4.3.1. Procedure

The experiment was performed in one session that lasted approximately 40 minutes. Stimuli were presented in pseudo-random order using PsychoPy [128]. All stimuli were constructed using the same 6 cartoon characters (chicken, dog, Dracula, Frankenstein, ninja, nurse), chosen to vary along many dimensions (e.g., frequency, phonology, number of syllables, animacy, proper vs. common noun) to facilitate the identification of lexical information in the analyses.

The experiment began with two familiarization blocks. In the first block (6 trials), participants saw images of each of the six cartoon characters once, with labels (chicken, dog, Dracula, Frankenstein, ninja, nurse) written beneath the image. Participants were instructed to read the labels aloud, after which the experimenter pressed a button to go to the next trial. In the second block, participants saw the same six characters one at a time, twice each in pseudo-random order (12 trials), but without labels, and were instructed to name each character out loud. After the participant named the character, the experimenter pressed a button to reveal the target name, ensuring that the correct labels were learned. Participants then completed the picture naming block (96 trials). As before, characters were presented in the center of the screen, one at a time, but no labels were provided.

Next, participants performed a sentence production block (60 trials), starting with two practice trials. Participants were instructed that there were no right or wrong answers and that we wanted to know what their brain does when they speak naturally. On each trial, participants saw a 1 s fixation cross followed by a written question, which they were instructed to read aloud to ensure attention. After another 1 s fixation cross, a static cartoon vignette appeared in the center of the screen depicting two of the six characters engaged in a transitive event. Participants were instructed to respond to the question by describing the vignette. The image remained on the screen until the participant completed the sentence, at which point the experimenter pressed a button to proceed. After the first 12 trials, the target sentence (i.e., an active sentence after an active question or a passive sentence after a passive question) appeared in text on the screen, and participants were instructed to read aloud “the sentence we expected [them] to say” to implicitly reinforce the link between the syntax of the question and the target response. If participants appeared to interpret these as corrections, the experimenter reminded them that there were no right or wrong answers.

We interleaved two picture naming trials between each sentence production trial in order to reduce task difficulty and facilitate fluent sentence production. The picture naming trials involved the two characters that would be engaged in the subsequent vignette, presented in a counterbalanced order such that on half of trials they would appear in the same order as in the expected sentence response, and in the opposite order on the other half.

Next, participants performed the listing block. List production trials were designed to parallel sentence production trials. Each trial began with a 1 s fixation cross, after which an arrow pointing either left or right appeared for 1 s in the center of the screen. After another 1 s fixation cross, a cartoon vignette (the same stimuli used in the sentence production block) appeared on the screen. Participants named the two characters in the vignette from left to right or from right to left, according to the direction of the preceding arrow. As in sentence production trials, each list production trial was preceded by two picture naming trials with the two characters that would appear in the subsequent vignette, again in counterbalanced order.

Between each block, participants were offered the opportunity to stop the experiment if they did not wish to continue. One participant stopped before the list production block, providing only picture naming and sentence production data. The other nine participants completed all three blocks. These nine were offered the opportunity to complete another picture naming block and another sentence production block. Six consented to another picture naming block and two consented to another sentence production block.

4.3.2. Stimulus Design, Randomization, and Counterbalancing

Picture naming stimuli consisted of images of the 6 characters presented in pseudo-random order, such that each consecutive set of 6 trials contained all 6 characters in random order. This ensured a relatively even distribution of characters across the block, and that no character appeared more than twice in a row. Characters were pseudorandomly depicted in one of 8 orientations: facing forward, backward, left, right, or at the 45° angle between each of these.
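The constraint on consecutive repeats follows directly from building the block out of shuffled passes through all six characters. The sketch below, with hypothetical function names and a uniform random draw of orientations (the text says only that orientations were pseudorandom), shows why no character can appear more than twice in a row.

```python
import numpy as np

CHARACTERS = ["chicken", "dog", "Dracula", "Frankenstein", "ninja", "nurse"]
ORIENTATIONS_DEG = list(range(0, 360, 45))  # 8 facings in 45-degree steps


def naming_order(n_trials, rng):
    """Concatenate shuffled passes through all six characters.

    Every consecutive set of six trials contains each character once, so a
    character can repeat at most twice in a row (end of one set, start of next).
    """
    n_sets = -(-n_trials // len(CHARACTERS))  # ceiling division
    order = []
    for _ in range(n_sets):
        order.extend(rng.permutation(CHARACTERS).tolist())
    return order[:n_trials]


def naming_block(n_trials, rng):
    """Pair each trial's character with an orientation (hypothetical uniform draw)."""
    angles = rng.choice(ORIENTATIONS_DEG, size=n_trials)
    return list(zip(naming_order(n_trials, rng), angles.tolist()))


def longest_run(seq):
    """Length of the longest run of identical consecutive items."""
    best = run = 1
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best
```

For the 96-trial naming block this yields 16 complete passes, so every character appears exactly 16 times.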

Sentence production stimuli consisted of a written question and a static cartoon vignette. Questions were manipulated so half were constructed with passive syntax and half with active. All questions followed the scheme: “Who is [verb]-ing whom?” or “Who is being [verb]-ed by whom?”. There were 10 verbs: burn, hit, hypnotize, measure, poke, scare, scrub, spray, tickle, trip. Each verb was used to create 3 vignettes involving 3 characters, counterbalanced so each character was the agent (i.e., active subject) in one vignette and the non-agent (i.e., active object) in one vignette. Each of these three vignettes was shown twice in the sentence production block, once preceded by an active question and once by a passive question to prime active and passive responses, respectively [64]. Vignettes were flipped around the vertical axis the second time they appeared so that the character that was on the left in the first appearance was on the right in the second appearance and vice versa. This was counterbalanced so that on half of the trials in each syntax condition (active/passive) the subject was on the left. List production stimuli similarly consisted of the same 60 vignettes, also presented in pseudorandom order and counterbalanced across conditions (i.e., arrow direction).
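To make the counterbalancing concrete, the sketch below builds the 60-trial sentence block under stated assumptions: the three characters assigned to each verb are a hypothetical rotation through the six characters, agent and patient roles follow a cycle (A acts on B, B on C, C on A) so each character is agent once and non-agent once, and the unflipped orientation alternates across vignettes. Because a vignette's two appearances are mirror images, and the active subject is the agent while the passive subject is the patient, alternating the orientation places the subject on the left in exactly half of the trials of each syntax condition.

```python
from collections import Counter

VERBS = ["burn", "hit", "hypnotize", "measure", "poke",
         "scare", "scrub", "spray", "tickle", "trip"]
CHARACTERS = ["chicken", "dog", "Dracula", "Frankenstein", "ninja", "nurse"]


def build_sentence_design():
    """Return 60 trial dicts: 10 verbs x 3 vignettes x 2 primes (active/passive)."""
    trials = []
    vignette = 0
    for v, verb in enumerate(VERBS):
        # Hypothetical: rotate through the six characters to pick three per verb.
        trio = [CHARACTERS[(v + k) % len(CHARACTERS)] for k in range(3)]
        for k in range(3):
            # Cyclic roles: A acts on B, B on C, C on A.
            agent, patient = trio[k], trio[(k + 1) % 3]
            agent_left = vignette % 2 == 0  # alternate the unflipped orientation
            vignette += 1
            # The two appearances are mirror images, so the agent swaps sides.
            for prime, left in (("active", agent_left), ("passive", not agent_left)):
                subject = agent if prime == "active" else patient
                on_left = agent if left else patient
                trials.append({"verb": verb, "agent": agent, "patient": patient,
                               "prime": prime, "subject_left": subject == on_left})
    return trials


def roles_balanced(trials, verb):
    """True if, for this verb, every character is agent once and patient once."""
    rows = [t for t in trials if t["verb"] == verb and t["prime"] == "active"]
    agents = Counter(t["agent"] for t in rows)
    patients = Counter(t["patient"] for t in rows)
    return agents == patients and set(agents.values()) == {1}
```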

4.4. Data Coding and Inclusion

Speech was manually transcribed, and word onset times were manually recorded using Audacity [129] to visualize the audio waveform and spectrogram. Picture naming trials were excluded if the first word uttered was not the target word (e.g., “Frankenstein – I mean Dracula”). Sentence trials were excluded if the first word was incorrect (e.g., “Frankenstein” instead of “Dracula,” regardless of active/passive structure) or if the meaning of the sentence did not match the meaning of the depicted scene; no sentences were excluded because their syntax did not match that of the prime (i.e., the question). Sentences were coded as active or passive according to the structure the patient used, not the prime structure. Listing trials were excluded if the first word was incorrect or if the order did not match that indicated by the arrow.

All patients were included in the Figure 1 analyses; however, three patients who produced 6 or fewer passive sentences during the sentence production block were excluded from all subsequent analyses that involved an active/passive comparison (including the RSA and NMF analyses).

4.5. Electrode Localization

Electrode localization in subject space, as well as in MNI space, was based on coregistering a preoperative (no electrodes) and postoperative (with electrodes) structural MRI (in some cases, a postoperative CT was employed depending on clinical requirements) using a rigid-body transformation. Electrodes were then projected to the surface of the cortex (preoperative segmented surface) to correct for edema-induced shifts following previous procedures [130] (registration to MNI space was based on a nonlinear DARTEL algorithm). Based on the subject’s preoperative MRI, the automated FreeSurfer segmentation (Destrieux) was used to label the within-subject anatomical locations of electrodes.

4.6. Significance testing and corrections for multiple comparisons in time series data

Statistical tests on time series data were performed independently at each time sample, resulting in as many p-values as there are samples in the time series. To correct for multiple comparisons, we followed [131, 132] and established a conservative criterion for significance for all time series comparisons: an uncorrected p-value that remains below .05 for at least 100 consecutive milliseconds.
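The run-length criterion can be expressed as a small mask function. At the 512 Hz analysis rate, 100 ms corresponds to 51.2 samples; rounding up to 52 samples is our assumption, as the text does not say how fractional samples were handled. Function names are hypothetical.

```python
import numpy as np

FS = 512  # Hz


def min_run_samples(min_ms=100, fs=FS):
    """Samples needed to span min_ms; 100 ms at 512 Hz is 51.2, rounded up to 52."""
    return int(np.ceil(min_ms * fs / 1000))


def significant_mask(pvals, alpha=0.05, min_ms=100, fs=FS):
    """Flag samples inside runs where p < alpha lasts at least min_ms."""
    below = np.asarray(pvals) < alpha
    need = min_run_samples(min_ms, fs)
    mask = np.zeros(below.shape, dtype=bool)
    start = None
    for i, b in enumerate(np.append(below, False)):  # trailing False closes a final run
        if b and start is None:
            start = i
        elif not b and start is not None:
            if i - start >= need:
                mask[start:i] = True
            start = None
    return mask
```

Isolated sub-threshold samples break a run, so brief chance dips below .05 never count as significant on their own.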

4.7. Permutation tests for ROIs

To determine whether activity was significantly above chance for a given ROI (Figs. 1D and 3A), we randomized electrodes’ ROI labels and re-calculated ROI means 1000 times. We derived p-values by determining what proportion of these chance means were above the real mean value at each time sample. If the p-value was less than .05 for at least 100 consecutive milliseconds (see Sec. 4.6), it was considered significant, denoted by a bar at the bottom of the plot.
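A sketch of the label-shuffling test for a single ROI, assuming activity is stored as an electrodes × samples array with one ROI label per electrode. It returns a per-sample p-value as described (the fraction of shuffled ROI means exceeding the observed mean); these p-values would then be thresholded with the 100 ms criterion of Sec. 4.6. Names are hypothetical.

```python
import numpy as np


def roi_permutation_pvals(activity, labels, roi, n_perm=1000, rng=None):
    """Label-shuffling test for one ROI's mean time course.

    activity: (n_electrodes, n_samples); labels: one ROI label per electrode.
    Returns one p-value per sample: the fraction of shuffled ROI means that
    exceed the observed ROI mean.
    """
    rng = np.random.default_rng() if rng is None else rng
    labels = np.asarray(labels)
    observed = activity[labels == roi].mean(axis=0)
    exceed = np.zeros(activity.shape[1])
    for _ in range(n_perm):
        shuffled = rng.permutation(labels) == roi  # same ROI size, random members
        exceed += activity[shuffled].mean(axis=0) > observed
    return exceed / n_perm
```

Shuffling labels rather than values keeps the number of electrodes in the ROI fixed, so the null distribution reflects the mean of a same-sized random set of electrodes.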

4.8. Temporal warping

Our analyses focused on the planning period – the time between stimulus and speech onsets, when hierarchical syntactic structure is planned [11, 53, 62, 63]. The duration of the planning period varied considerably both across and within patients, meaning that cognitive processes become less temporally aligned across trials the farther one moves from stimulus onset in stimulus-locked epochs or speech onset in speech-locked epochs. This was potentially problematic for comparing syntactic structures, as passive trials took longer to plan (median RT: 1,424 ms) than active trials (median RT: 1,165 ms; p < .001, Wilcoxon rank sum test). The farther from the time lock, the more misaligned active and passive trials would be, and the more likely significant differences would be to reflect temporal misalignment rather than syntax.

Temporal warping reduces such misalignments [69-71, 133, 134]. Following [69], we linearly interpolated the data in the middle of the planning period (from 150 ms post-stimulus to 150 ms pre-speech) for each trial to set all trials’ planning periods to the same duration (Supplementary Fig. S1): the global median per task (1141 ms for sentences; 801 ms for lists; 758 ms for words). Specifically, for each task we started by excluding trials with outlier response times, which we defined as those in the bottom 2.5% or top 5% per participant. We then calculated the median response time per task across participants (1,142 ms for sentences, 800 ms for lists, and 758 ms for words), and for each electrode and each trial, concatenated (a) the stimulus-locked data from 150 ms post-stimulus to the median reaction time with (b) the production-locked data from median reaction time to 150 ms pre-speech. We then linearly interpolated this time series to the number of time samples corresponding to the median reaction time minus 300 ms (i.e., the two 150 ms periods following stimulus onset and preceding speech onset). Finally, we concatenated (a) the unwarped data leading up to 150 ms post-stimulus, (b) the warped data from the previous step, and (c) the unwarped data starting 150 ms before speech onset to form the final epochs used in the analyses in Figs. 2, 3, and 4.
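The core warping step can be sketched for one trial and one electrode. For simplicity, this assumes a single continuous trace that contains both stimulus and speech onsets, rather than the concatenation of stimulus- and production-locked epochs described above, and uses `np.interp` for the linear interpolation; the function names, including the outlier helper, are hypothetical.

```python
import numpy as np

FS = 512       # Hz
EDGE_MS = 150  # unwarped margins after stimulus onset and before speech onset


def ms_to_samples(ms, fs=FS):
    return int(round(ms * fs / 1000))


def keep_non_outliers(rts):
    """Drop the fastest 2.5% and slowest 5% of trials (apply per participant)."""
    rts = np.asarray(rts, dtype=float)
    lo, hi = np.percentile(rts, [2.5, 95])
    return (rts >= lo) & (rts <= hi)


def warp_trial(x, stim_idx, speech_idx, target_rt_ms, fs=FS, edge_ms=EDGE_MS):
    """Linearly resample the middle of one trial's planning period.

    x: 1-D trace (one electrode, one trial) containing stimulus onset at
       stim_idx and speech onset at speech_idx. The span from stim + edge_ms
       to speech - edge_ms is stretched or compressed to (target_rt_ms -
       2 * edge_ms); samples outside that span are left untouched.
    """
    edge = ms_to_samples(edge_ms, fs)
    a, b = stim_idx + edge, speech_idx - edge
    middle = x[a:b]
    n_out = ms_to_samples(target_rt_ms - 2 * edge_ms, fs)
    warped = np.interp(np.linspace(0.0, 1.0, n_out),
                       np.linspace(0.0, 1.0, middle.size), middle)
    return np.concatenate([x[:a], warped, x[b:]])
```

With `target_rt_ms` set to the task's median response time, every warped trial ends up with the same stimulus-to-speech length, while the 150 ms margins around each onset keep their original timing.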

Improved temporal alignment leads to a better signal-to-noise ratio, which can be seen in higher trial means [71]. We leveraged this fact as a diagnostic for whether temporal warping in fact improved alignment in our data. For electrodes whose mean high gamma peaked in the warped period (between 150 ms post-stimulus and 150 ms pre-speech), we compared the peak mean values in the unwarped and the warped data. Peak values were significantly higher for the warped data than for the unwarped data (Supplementary Fig. S3) for both the sentence and list production tasks (p = .003 and p = .008, respectively; Wilcoxon signed rank tests), indicating that warping significantly improved temporal alignment in the data. There was no significant difference between peak values in the picture naming data (p = .592), which likely reflects the fact that these trials were relatively short (median reaction time of just 758 ms), so warping made relatively minor changes to a smaller number of time samples.

For comparison, we reproduced Fig. 1 in Supplementary Fig. S2 using the warped data. The results of the statistical tests in these figures were qualitatively identical: The spatial distributions of significant electrodes were nearly identical over time (Fig. 1C and Supplementary Fig. S2C) and the same ROIs (IFG, MFG, MTG, and IPL) showed significantly higher activity for sentences than lists during the planning period (Fig. 1D and Supplementary Fig. S2D).

4.9. Representational Similarity Analysis (RSA) and Representational Similarity Indices (RSIs)

To uniquely identify variance associated with syntactic, event-semantic, and (sub)lexical processing, we used multiple linear regression to model the neural activity from each electrode and at each time sample as a linear combination of syntactic, event-semantic, and (sub)lexical properties of the sentence the patient was planning/producing. Multiple regression is ideal in this context because it fits coefficients (slopes/betas) that can be used to derive t-values and corresponding p-values which reflect the unique contribution of each independent variable, effectively partitioning variance that can be unambiguously ascribed to just one term [135].

However, event-semantic and (sub)lexical representations are highly complex and multidimensional, requiring a choice of which dimensions or features to include in a multiple regression model. To avoid this choice, we leveraged a Representational Similarity Analysis-style approach and modeled pairwise differences between trials [72-75]. This resulted in just one vector per construct, representing pairwise trial differences in syntax, event semantics, and word content.

4.9.1. Linguistic and Neural Dissimilarity Models

The syntax term was binary: 1 if one trial was active and the other was passive and 0 otherwise. We modeled event-semantic representations using outputs from GPT-2’s 8th hidden layer, where embeddings correlate most highly with neural activity [136]. Specifically, for each unique stimulus vignette, we fed the corresponding sentence with active syntax into GPT-2 and averaged the embeddings in layer 8 for all of the words in the sentence. By inputting only active sentences (rather than a combination of actives and passives depending on the structure of the specific trials), we ensured that the resulting vector contains just the semantic information from the trial, not the relevant syntactic information (i.e., whether it was active or passive).

To quantify event-semantic dissimilarity between trials, we correlated the vectors corresponding to each pair of trials’ stimuli. Prior to modeling, correlation coefficients (r) were centered, scaled, and multiplied by −1 so that a value of 1 corresponded to more dissimilar meanings, and −1 meant that the two trials had the same exact stimulus. (Each stimulus appeared twice in each sentence production block, once with an active prime and once with a passive prime.)

The (sub)lexical term was also binary: 1 if the first words of the two trials’ sentences differed, 0 if they were the same. This encoding scheme was meant to absorb variance associated with a host of extraneous linguistic features at the lexical and sub-lexical levels, including phonetics, phonology, articulatory/motor information, auditory feedback, lemma-level representations, and lexical semantics (the meaning of individual words rather than the global event meaning).

We also included differences in response time (ΔRT) as a covariate in the model, as response time is known to index other extraneous, non-linguistic factors, such as difficulty, that might be correlated with our syntactic manipulation. This term was quantified as the absolute value of the difference between the logs of the two trials’ response times, so that higher values corresponded to bigger differences in response times.
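The four predictor vectors can be assembled over all unordered trial pairs as in the sketch below. Two details are our assumptions: semantic similarity is the Pearson correlation between the trials' embedding vectors, and "centered and scaled" is read as z-scoring across trial pairs before the sign flip. The embeddings would come from the GPT-2 procedure above; here they are simply an input array, and the function name is hypothetical.

```python
import numpy as np


def pairwise_predictors(is_passive, embeddings, first_words, rts):
    """Dissimilarity predictors over all unordered trial pairs (i < j).

    is_passive:  (n,) bool, structure actually produced on each trial
    embeddings:  (n, d) per-trial semantic vectors
    first_words: (n,) first word of each response
    rts:         (n,) response times in ms
    Returns pair indices i, j and an (n_pairs, 4) matrix with columns
    [syntax, semantics, (sub)lexical, RT].
    """
    n = len(rts)
    i, j = np.triu_indices(n, k=1)
    passive = np.asarray(is_passive)
    syntax = (passive[i] != passive[j]).astype(float)      # 1 = active vs. passive
    r = np.corrcoef(embeddings)[i, j]                       # similarity of meanings
    semantics = -(r - r.mean()) / r.std()                   # centre, scale, flip sign
    words = np.asarray(first_words)
    lexical = (words[i] != words[j]).astype(float)          # 1 = different first word
    log_rt = np.log(np.asarray(rts, dtype=float))
    rt = np.abs(log_rt[i] - log_rt[j])                      # |difference of log RTs|
    return i, j, np.column_stack([syntax, semantics, lexical, rt])
```

Because every predictor is a property of a trial pair, no decision is needed about which individual dimensions of meaning or word form to enter into the regression.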

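We then modeled pairwise trial differences in neural activity (the magnitude of the difference between z-scored high gamma activity at a given sample for a given electrode) as a linear combination of these four dissimilarity (Δ) terms:

$$\Delta\mathrm{HG}_{ij} = \beta_0 + \beta_1\,\Delta\mathrm{Syntax}_{ij} + \beta_2\,\Delta\mathrm{Semantics}_{ij} + \beta_3\,\Delta\mathrm{Lexical}_{ij} + \beta_4\,\Delta\mathrm{RT}_{ij} + \varepsilon_{ij} \qquad (1)$$

where $\Delta\mathrm{HG}_{ij} = |\mathrm{HG}_i - \mathrm{HG}_j|$ for trials $i$ and $j$.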
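A sketch of fitting Eq. 1 by ordinary least squares at each time sample, returning one t-value per dissimilarity term. Pairwise observations are not independent, so these t-values should not be converted to parametric p-values; this is why the permutation procedure in Sec. 4.9.2 is used instead. Names are hypothetical.

```python
import numpy as np


def ols_tvalues(y, X):
    """OLS of y on [1, X]; returns t-values for the columns of X (no intercept)."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    dof = design.shape[0] - design.shape[1]
    sigma2 = resid @ resid / dof
    cov = sigma2 * np.linalg.inv(design.T @ design)
    return (beta / np.sqrt(np.diag(cov)))[1:]


def rsa_tvalues(hg, i, j, X):
    """hg: (n_trials, n_samples) z-scored high gamma for one electrode.

    Neural dissimilarity at each sample is |hg_i - hg_j| over the same pairs
    as X. Returns an (n_samples, n_predictors) array of t-values.
    """
    dissim = np.abs(hg[i] - hg[j])  # (n_pairs, n_samples)
    return np.array([ols_tvalues(dissim[:, s], X) for s in range(hg.shape[1])])
```

Each t-value measures a term's unique contribution with the other three held constant, which is what allows variance to be ascribed to syntax rather than to, for example, response time.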

4.9.2. Electrode Significance

The data used in the RSA regression did not clearly meet the assumptions of standard linear regressions: it was not clear that the residuals should be normally distributed (high gamma activity is gamma-distributed), and imbalances in the datasets due to trial exclusions and the varying effectiveness of priming across patients were exacerbated by the implementation of pairwise trial differences. To err on the side of caution, rather than directly interpreting model statistics, we ran a permutation analysis, shuffling the neural activity with respect to linguistic features in the original datasets, reconstructing the neural dissimilarity models, and re-running the linear regression at each time sample 1000 times.

To assess whether a given electrode was significant for the syntax, semantics, and (sub)lexical terms, we first calculated the t-value corresponding to each term’s coefficient, which reflects the amount of evidence against the null hypothesis. We then smoothed the real and shuffled t-values over time with a 100 ms boxcar function. Finally, we z-scored the real t-values at each time sample and electrode with respect to the 1000 t-values from shuffled models at the same time sample and electrode. The resulting z-values reflect an estimate of evidence against the null that is standardized across patients and electrodes and independent of the number of trials. Significant electrodes for each representation were defined as those that maintained a z-value of at least 1.645 (corresponding to a one-tailed p-value of less than .05) for at least 100 consecutive milliseconds.
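The smoothing, permutation z-scoring, and significance rule can be sketched as follows, assuming the real t-values for one electrode and term form a vector over time samples and the 1000 shuffled fits are stacked as rows. A 100 ms boxcar at 512 Hz is taken as 51 samples and the consecutive-run requirement as 52 samples; both roundings are our assumptions, and the names are hypothetical.

```python
import numpy as np

FS = 512  # Hz


def boxcar(x, width_ms=100, fs=FS):
    """Centered moving average along the last axis (time)."""
    w = int(round(width_ms * fs / 1000))
    kernel = np.ones(w) / w
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="same"), -1, x)


def permutation_z(t_real, t_null, fs=FS):
    """t_real: (n_samples,); t_null: (n_perm, n_samples) from shuffled fits.

    Smooth both, then z-score the real t-values against the null at each sample.
    """
    real = boxcar(t_real, fs=fs)
    null = boxcar(t_null, fs=fs)
    return (real - null.mean(axis=0)) / null.std(axis=0)


def is_significant(z, thresh=1.645, min_ms=100, fs=FS):
    """True if z stays >= thresh for at least min_ms consecutive milliseconds."""
    need = int(np.ceil(min_ms * fs / 1000))
    run = 0
    for above in z >= thresh:
        run = run + 1 if above else 0
        if run >= need:
            return True
    return False
```

Standardizing against a null built from the same electrode and time sample is what makes the resulting z-values comparable across patients with different trial counts.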

4.9.3. Representational Similarity Indices (RSIs)

To derive RSIs, we applied additional transformations to these z-values to make them more interpretable and conservative. First, we scaled the z-values by dividing them by 2.326, the z-value corresponding to a one-tailed p-value of .01. Consequently, values greater than 1 could be interpreted as very likely to reflect positive results, and values less than 1 as likely to reflect negative results. Next, to reduce the possibility of extremely high values having an undue influence on aggregate statistics and NMF, we applied a logarithmic penalty to values greater than 1, replacing them with log(x) + 1. Similarly, to reduce values more likely to correspond to negative results, we shrank low values toward 0 by replacing values less than 1 with e^(x−1) and then cubing the resulting values (i.e., (e^(x−1))^3) to impose an even more severe penalty on low values. Notably, this renders all values non-negative, naturally resolving issues related to the uninterpretability of negative relationships in RSA [72, 137] and facilitating the use of NMF for subsequent clustering. Finally, we re-scaled values by multiplying them by 2.326 (undoing the first step) so that the final RSI scale more closely matched the z scale in interpretation. Like z-scores, RSI values over 2.326 can be safely interpreted as significant at the α = .01 threshold.
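The four-step RSI transform maps onto a few vectorized NumPy operations. This sketch assumes the low-value rule is (e^(x−1))^3, which keeps the transform continuous at x = 1 and maps every input to a positive value; the function name is hypothetical.

```python
import numpy as np

Z_01 = 2.326  # one-tailed z for p = .01


def rsi(z):
    """Map permutation z-values to non-negative RSIs.

    Assumes low values (x <= 1 after scaling) are mapped to (e^(x-1))^3.
    """
    x = np.asarray(z, dtype=float) / Z_01           # step 1: 1.0 now means p = .01
    high = np.log(np.maximum(x, 1.0)) + 1.0         # step 2: compress values above 1
    low = np.exp(np.minimum(x, 1.0) - 1.0) ** 3     # step 3: shrink values below 1 toward 0
    return Z_01 * np.where(x > 1.0, high, low)      # step 4: back to a z-like scale
```

The transform is monotonic, so a z-value above 2.326 always yields an RSI above 2.326, consistent with the α = .01 interpretation. The same function could be applied to the z-scored high gamma before NMF, as described in Sec. 4.10.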

4.10. Non-negative Matrix Factorization (NMF)

We used NMF to cluster electrodes according to prototypical patterns in the data [138, 139]. In the first clustering analysis (Fig. 3), we analyzed RSIs and mean neural activity from all three tasks for all electrodes that were either “active” (non-zero neural activity; p < .05 for 100 ms, Wilcoxon signed rank test) or inactive, but with at least one significant RSI (p < .05 for 100 ms, permutation test).

High gamma activity was in units of standard deviations (i.e., z-scores), while RSIs were z-scores that had been smoothed and transformed to be non-negative, with extreme values in both directions shrunk (see Sec. 4.9.3). To ensure that NMF weighted the two types of information in the same way, we applied the same transformation used to create the RSIs (Sec. 4.9.3) to the neural data prior to performing NMF; this also rendered the neural data non-negative, as NMF requires.

We then concatenated these three time series (i.e., mean high gamma per task) and the three RSI time series (i.e., syntax, event semantics, and (sub)lexical), excluding pre-stimulus time samples and those after 500 ms post-speech onset. This resulted in a matrix of dimensionality 741 (electrodes) × 4364 (time samples from 3 high gamma and 3 RSI time series).

We fed this matrix to the nmf() function in R (NMF package v0.25 [140]) using the Brunet algorithm [141] with 50 runs to mitigate the risk of converging on local minima. We evaluated model ranks from 3 to 9 for the full analysis (Fig. 3) and from 2 to 7 for the syntax analysis (Fig. 4). The optimal rank (5 for the full analysis; 3 for the syntax analysis) was determined by finding the elbow in the scree plots.
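For readers working outside R, the sketch below approximates the idea in NumPy: multiplicative updates that minimize the generalized Kullback-Leibler divergence (Lee and Seung), the objective used by the Brunet method, with several random restarts keeping the best fit. It is not a port of the R `NMF` package: initialization, stopping rules, and consensus-based diagnostics differ, and the function names are hypothetical.

```python
import numpy as np


def nmf_kl(V, rank, n_iter=500, rng=None, eps=1e-10):
    """Multiplicative-update NMF minimizing generalized KL divergence, V ~= W @ H."""
    rng = np.random.default_rng() if rng is None else rng
    n, m = V.shape
    W = rng.random((n, rank)) + eps
    H = rng.random((rank, m)) + eps
    for _ in range(n_iter):
        WH = W @ H + eps
        H *= (W.T @ (V / WH)) / (W.sum(axis=0)[:, None] + eps)
        WH = W @ H + eps
        W *= ((V / WH) @ H.T) / (H.sum(axis=1)[None, :] + eps)
    return W, H


def kl_divergence(V, W, H, eps=1e-10):
    """Generalized KL divergence D(V || WH)."""
    WH = W @ H + eps
    return float(np.sum(V * np.log((V + eps) / WH) - V + WH))


def best_of_runs(V, rank, n_runs=50, seed=0, **kw):
    """Keep the factorization with the lowest divergence over random restarts."""
    rng = np.random.default_rng(seed)
    fits = [nmf_kl(V, rank, rng=rng, **kw) for _ in range(n_runs)]
    return min(fits, key=lambda f: kl_divergence(V, *f))
```

In this sketch, each row of `W` would give an electrode's loading on each cluster and each row of `H` a cluster's prototypical concatenated time course. A scree plot could be produced by calling `best_of_runs` for each candidate rank and plotting `kl_divergence` against rank, then looking for the elbow.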

Supplementary Material

Acknowledgments

This work was supported by National Institutes of Health grants F32DC019533 (A.M.), R01NS109367 (A.F.), R01NS115929 (A.F.), and R01DC018805 (A.F.).

Footnotes

Data and Code availability

The data set generated during the current study will be made available by the authors upon request, provided that documentation is supplied confirming that the data will be used strictly for research purposes and in compliance with the terms of our study IRB. The code will be made available upon publication at https://github.com/flinkerlab/low_activity_syntax.

Declarations

The authors declare that they have no competing interests.

References

- [1].Patel A.D., Iversen J.R., Wassenaar M., Hagoort P.: Musical syntactic processing in agrammatic broca’s aphasia. Aphasiology 22(7-8), 776–789 (2008) 10.1080/02687030701803804 [DOI] [Google Scholar]

- [2].Slevc L.R., Rosenberg J.C., Patel A.D.: Language, music and modularity: Evidence for shared processing of linguistic and musical syntax. In: Proceedings of the 10th International Conference on Music Perception & Cognition (2008) [Google Scholar]

- [3].Patel A.D.: Language, music, syntax and the brain. Nature neuroscience 6(7), 674–681 (2003) 10.1038/nn1082 [DOI] [PubMed] [Google Scholar]

- [4].Fedorenko E., Patel A., Casasanto D., Winawer J., Gibson E.: Structural integration in language and music: Evidence for a shared system. Memory & cognition 37, 1–9 (2009) 10.3758/MC.37.1.1 [DOI] [PubMed] [Google Scholar]

- [5].Kunert R., Willems R.M., Casasanto D., Patel A.D., Hagoort P.: Music and language syntax interact in broca’s area: An fMRI study. PloS one 10(11), 0141069 (2015) 10.1371/journal.pone.0141069 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Hoch L., Tillmann B.: Shared structural and temporal integration resources for music and arithmetic processing. Acta Psychologica 140(3), 230–235 (2012) 10.1016/j.actpsy.2012.03.008 [DOI] [PubMed] [Google Scholar]

- [7].Cavey J., Hartsuiker R.J.: Is there a domain-general cognitive structuring system? evidence from structural priming across music, math, action descriptions, and language. Cognition 146, 172–184 (2016) 10.1016/j.cognition.2015.09.013 [DOI] [PubMed] [Google Scholar]

- [8].Kaan E., Swaab T.Y.: The brain circuitry of syntactic comprehension. Trends in cognitive sciences 6(8), 350–356 (2002) 10.1016/S1364-6613(02)01947-2 [DOI] [PubMed] [Google Scholar]

- [9].Pallier C., Devauchelle A.-D., Dehaene S.: Cortical representation of the constituent structure of sentences. Proceedings of the National Academy of Sciences 108(6), 2522–2527 (2011) 10.1073/pnas.1018711108 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Nelson M.J., El Karoui I., Giber K., Yang X., Cohen L., Koopman H., Cash S.S., Naccache L., Hale J.T., Pallier C., et al. : Neurophysiological dynamics of phrase-structure building during sentence processing. Proceedings of the National Academy of Sciences 114(18), 3669–3678 (2017) 10.1073/pnas.1701590114 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [11].Hagoort P., Indefrey P.: The neurobiology of language beyond single words. Annual review of neuroscience 37, 347–362 (2014) 10.1146/annurev-neuro-071013-013847 [DOI] [PubMed] [Google Scholar]

- [12].Friederici A.D.: The brain basis of language processing: from structure to function. Physiological Reviews 91(4), 1357–1392 (2011) 10.1152/physrev.00006.2011 [DOI] [PubMed] [Google Scholar]

- [13].Hickok G., Poeppel D.: The cortical organization of speech processing. Nature reviews neuroscience 8(5), 393–402 (2007) 10.1038/nrn2113 [DOI] [PubMed] [Google Scholar]

- [14].Matchin W., Liao C.-H., Gaston P., Lau E.: Same words, different structures: An fMRI investigation of argument relations and the angular gyrus. Neuropsychologia 125, 116–128 (2019) 10.1016/j.neuropsychologia.2019.01.019 [DOI] [PubMed] [Google Scholar]

- [15].Matchin W., Hickok G.: The cortical organization of syntax. Cerebral Cortex 30(3), 1481–1498 (2020) 10.1093/cercor/bhz180 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [16].Menenti L., Segaert K., Hagoort P.: The neuronal infrastructure of speaking. Brain and language 122(2), 71–80 (2012) 10.1016/j.bandl.2012.04.012 [DOI] [PubMed] [Google Scholar]

- [17].Stromswold K., Caplan D., Alpert N., Rauch S.: Localization of syntactic comprehension by positron emission tomography. Brain and language 52(3), 452–473 (1996) 10.1006/brln.1996.0024 [DOI] [PubMed] [Google Scholar]

- [18].Zaccarella E., Friederici A.D.: Merge in the human brain: A sub-region based functional investigation in the left pars opercularis. Frontiers in psychology 6, 1818 (2015) 10.3389/fpsyg.2015.01818 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [19].Matchin W., Basilakos A., Stark B.C., Ouden D.-B., Fridriksson J., Hickok G.: Agrammatism and paragrammatism: a cortical double dissociation revealed by lesion-symptom mapping. Neurobiology of Language 1(2), 208–225 (2020) 10.1162/nol_a_00010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [20]. Flick G., Pylkkänen L.: Isolating syntax in natural language: MEG evidence for an early contribution of left posterior temporal cortex. Cortex 127, 42–57 (2020) 10.1016/j.cortex.2020.01.025

- [21]. Takashima A., Konopka A., Meyer A., Hagoort P., Weber K.: Speaking in the brain: the interaction between words and syntax in sentence production. Journal of Cognitive Neuroscience 32(8), 1466–1483 (2020) 10.1162/jocn_a_01563

- [22]. Shain C., Kean H., Casto C., Lipkin B., Affourtit J., Siegelman M., Mollica F., Fedorenko E.: Distributed sensitivity to syntax and semantics throughout the language network. Journal of Cognitive Neuroscience, 1–43 (2024) 10.1162/jocn_a_02164

- [23]. Ding N., Melloni L., Zhang H., Tian X., Poeppel D.: Cortical tracking of hierarchical linguistic structures in connected speech. Nature Neuroscience 19(1), 158–164 (2016) 10.1038/nn.4186

- [24]. Blank I., Balewski Z., Mahowald K., Fedorenko E.: Syntactic processing is distributed across the language system. NeuroImage 127, 307–323 (2016) 10.1016/j.neuroimage.2015.11.069

- [25]. Fedorenko E., Blank I.A., Siegelman M., Mineroff Z.: Lack of selectivity for syntax relative to word meanings throughout the language network. Cognition 203, 104348 (2020) 10.1016/j.cognition.2020.104348

- [26]. Krauska A., Lau E.: Moving away from lexicalism in psycho- and neurolinguistics. Frontiers in Language Sciences 2, 1125127 (2023) 10.3389/flang.2023.1125127

- [27]. Schell M., Zaccarella E., Friederici A.D.: Differential cortical contribution of syntax and semantics: An fMRI study on two-word phrasal processing. Cortex 96, 105–120 (2017) 10.1016/j.cortex.2017.09.002

- [28]. Friederici A.D., Kotz S.A., Scott S.K., Obleser J.: Disentangling syntax and intelligibility in auditory language comprehension. Human Brain Mapping 31(3), 448–457 (2010) 10.1002/hbm.20878

- [29]. Ni W., Constable R., Mencl W., Pugh K., Fulbright R., Shaywitz S., Shaywitz B., Gore J., Shankweiler D.: An event-related neuroimaging study distinguishing form and content in sentence processing. Journal of Cognitive Neuroscience 12(1), 120–133 (2000) 10.1162/08989290051137648

- [30]. Dapretto M., Bookheimer S.Y.: Form and content: dissociating syntax and semantics in sentence comprehension. Neuron 24(2), 427–432 (1999) 10.1016/S0896-6273(00)80855-7

- [31]. Embick D., Marantz A., Miyashita Y., O’Neil W., Sakai K.L.: A syntactic specialization for Broca’s area. Proceedings of the National Academy of Sciences 97(11), 6150–6154 (2000) 10.1073/pnas.100098897

- [32]. Kuperberg G.R., McGuire P.K., Bullmore E.T., Brammer M.J., Rabe-Hesketh S., Wright I.C., Lythgoe D.J., Williams S.C., David A.S.: Common and distinct neural substrates for pragmatic, semantic, and syntactic processing of spoken sentences: an fMRI study. Journal of Cognitive Neuroscience 12(2), 321–341 (2000) 10.1162/089892900562138

- [33]. Kuperberg G.R., Holcomb P.J., Sitnikova T., Greve D., Dale A.M., Caplan D.: Distinct patterns of neural modulation during the processing of conceptual and syntactic anomalies. Journal of Cognitive Neuroscience 15(2), 272–293 (2003) 10.1162/089892903321208204

- [34]. Wagley N., Hu X., Satterfield T., Bedore L.M., Booth J.R., Kovelman I.: Neural specificity for semantic and syntactic processing in Spanish-English bilingual children. Brain and Language 250, 105380 (2024) 10.1016/j.bandl.2024.105380

- [35]. Fedorenko E., Nieto-Castañón A., Kanwisher N.: Lexical and syntactic representations in the brain: an fMRI investigation with multi-voxel pattern analyses. Neuropsychologia 50(4), 499–513 (2012) 10.1016/j.neuropsychologia.2011.09.014

- [36]. Blank I.A., Fedorenko E.: No evidence for differences among language regions in their temporal receptive windows. NeuroImage 219, 116925 (2020) 10.1016/j.neuroimage.2020.116925

- [37]. Fedorenko E., Hsieh P.-J., Nieto-Castañón A., Whitfield-Gabrieli S., Kanwisher N.: New method for fMRI investigations of language: Defining ROIs functionally in individual subjects. Journal of Neurophysiology 104(2), 1177–1194 (2010) 10.1152/jn.00032.2010

- [38]. Mazoyer B.M., Tzourio N., Frak V., Syrota A., Murayama N., Levrier O., Salamon G., Dehaene S., Cohen L., Mehler J.: The cortical representation of speech. Journal of Cognitive Neuroscience 5(4), 467–479 (1993) 10.1162/jocn.1993.5.4.467

- [39]. Friederici A.D., Meyer M., von Cramon D.Y.: Auditory language comprehension: an event-related fMRI study on the processing of syntactic and lexical information. Brain and Language 74(2), 289–300 (2000) 10.1006/brln.2000.2313

- [40]. Matchin W., Hammerly C., Lau E.: The role of the IFG and pSTS in syntactic prediction: Evidence from a parametric study of hierarchical structure in fMRI. Cortex 88, 106–123 (2017)

- [41]. Rogalsky C., Hickok G.: Selective attention to semantic and syntactic features modulates sentence processing networks in anterior temporal cortex. Cerebral Cortex 19(4), 786–796 (2009) 10.1093/cercor/bhn126

- [42]. Stowe L.A., Broere C.A., Paans A.M., Wijers A.A., Mulder G., Vaalburg W., Zwarts F.: Localizing components of a complex task: sentence processing and working memory. NeuroReport 9(13), 2995–2999 (1998)

- [43]. Humphries C., Love T., Swinney D., Hickok G.: Response of anterior temporal cortex to syntactic and prosodic manipulations during sentence processing. Human Brain Mapping 26(2), 128–138 (2005) 10.1002/hbm.20148

- [44]. Humphries C., Binder J.R., Medler D.A., Liebenthal E.: Syntactic and semantic modulation of neural activity during auditory sentence comprehension. Journal of Cognitive Neuroscience 18(4), 665–679 (2006) 10.1162/jocn.2006.18.4.665

- [45]. Sprouse J., Lau E.F.: Syntax and the brain. In: The Cambridge Handbook of Generative Syntax, 971–1005 (2013)

- [46]. Mesgarani N., Cheung C., Johnson K., Chang E.F.: Phonetic feature encoding in human superior temporal gyrus. Science 343(6174), 1006–1010 (2014) 10.1126/science.1245994

- [47]. Hubel D.H., Wiesel T.N.: Receptive fields of single neurones in the cat’s striate cortex. The Journal of Physiology 148(3), 574 (1959)

- [48]. Daniel P., Whitteridge D.: The representation of the visual field on the cerebral cortex in monkeys. The Journal of Physiology 159(2), 203 (1961)

- [49]. Hu J., Small H., Kean H., Takahashi A., Zekelman L., Kleinman D., Ryan E., Nieto-Castañón A., Ferreira V., Fedorenko E.: Precision fMRI reveals that the language-selective network supports both phrase-structure building and lexical access during language production. Cerebral Cortex 33(8), 4384–4404 (2023) 10.1093/cercor/bhac350

- [50]. Giglio L., Ostarek M., Sharoh D., Hagoort P.: Diverging neural dynamics for syntactic structure building in naturalistic speaking and listening. Proceedings of the National Academy of Sciences 121(11), e2310766121 (2024) 10.1073/pnas.2310766121

- [51]. Segaert K., Menenti L., Weber K., Petersson K.M., Hagoort P.: Shared syntax in language production and language comprehension: an fMRI study. Cerebral Cortex 22(7), 1662–1670 (2012) 10.1093/cercor/bhr249

- [52]. Aoshima S., Yoshida M., Phillips C.: Incremental processing of coreference and binding in Japanese. Syntax 12(2), 93–134 (2009) 10.1111/j.1467-9612.2009.00123.x

- [53]. Garrett M.: Remarks on the architecture of language processing systems. In: Language and the Brain, 31–69 (2000) 10.1016/B978-012304260-6/50004-9

- [54]. Ferreira F., Bailey K.G., Ferraro V.: Good-enough representations in language comprehension. Current Directions in Psychological Science 11(1), 11–15 (2002) 10.1111/1467-8721.00158

- [55]. Ferreira F.: The misinterpretation of noncanonical sentences. Cognitive Psychology 47(2), 164–203 (2003) 10.1016/S0010-0285(03)00005-7

- [56]. Dwivedi V.D.: Interpreting quantifier scope ambiguity: Evidence of heuristic first, algorithmic second processing. PLoS ONE 8(11), e81461 (2013) 10.1371/journal.pone.0081461

- [57]. Christianson K., Hollingworth A., Halliwell J.F., Ferreira F.: Thematic roles assigned along the garden path linger. Cognitive Psychology 42(4), 368–407 (2001) 10.1006/cogp.2001.0752

- [58]. Trueswell J.C., Kim A.E.: How to prune a garden path by nipping it in the bud: Fast priming of verb argument structure. Journal of Memory and Language 39(1), 102–123 (1998) 10.1006/jmla.1998.2565

- [59]. Berkovitch L., Dehaene S.: Subliminal syntactic priming. Cognitive Psychology 109, 26–46 (2019) 10.1016/j.cogpsych.2018.12.001

- [60]. Flinker A., Piai V., Knight R.T.: Intracranial electrophysiology in language research. In: Rueschemeyer S.-A., Gaskell M.G. (eds.) The Oxford Handbook of Psycholinguistics (2nd Edn) vol. 1. Oxford University Press (2018) 10.1093/oxfordhb/9780198786825.013.43

- [61]. Llorens A., Trébuchon A., Liégeois-Chauvel C., Alario F.-X.: Intra-cranial recordings of brain activity during language production. Frontiers in Psychology 2, 375 (2011) 10.3389/fpsyg.2011.00375

- [62]. Ferreira V.S., Morgan A.M., Slevc L.R.: Grammatical encoding. In: The Oxford Handbook of Psycholinguistics, 453–469 (2018)

- [63]. Bock K., Ferreira V.S.: Syntactically speaking. In: The Oxford Handbook of Language Production, 21–46 (2014)

- [64]. Bock J.K.: Syntactic persistence in language production. Cognitive Psychology 18(3), 355–387 (1986) 10.1016/0010-0285(86)90004-6

- [65]. Mukamel R., Gelbard H., Arieli A., Hasson U., Fried I., Malach R.: Coupling between neuronal firing, field potentials, and fMRI in human auditory cortex. Science 309(5736), 951–954 (2005) 10.1126/science.1110913

- [66]. Nir Y., Fisch L., Mukamel R., Gelbard-Sagiv H., Arieli A., Fried I., Malach R.: Coupling between neuronal firing rate, gamma LFP, and BOLD fMRI is related to interneuronal correlations. Current Biology 17(15), 1275–1285 (2007) 10.1016/j.cub.2007.06.066

- [67]. Fries P.: Rhythms for cognition: communication through coherence. Neuron 88(1), 220–235 (2015) 10.1016/j.neuron.2015.09.034

- [68]. Richter C.G., Thompson W.H., Bosman C.A., Fries P.: Top-down beta enhances bottom-up gamma. Journal of Neuroscience 37(28), 6698–6711 (2017) 10.1523/JNEUROSCI.3771-16.2017

- [69]. Edwards E., Nagarajan S.S., Dalal S.S., Canolty R.T., Kirsch H.E., Barbaro N.M., Knight R.T.: Spatiotemporal imaging of cortical activation during verb generation and picture naming. NeuroImage 50(1), 291–301 (2010)

- [70]. Picton T.W., Lins O.G., Scherg M.: The recording and analysis of event-related potentials. Handbook of Neuropsychology 10, 3–3 (1995)

- [71]. Molina M., Tardón L.J., Barbancho A.M., De-Torres I., Barbancho I.: Enhanced average for event-related potential analysis using dynamic time warping. Biomedical Signal Processing and Control 87, 105531 (2024) 10.1016/j.bspc.2023.105531

- [72]. Jozwik K.M., Kriegeskorte N., Mur M.: Visual features as stepping stones toward semantics: Explaining object similarity in IT and perception with non-negative least squares. Neuropsychologia 83, 201–226 (2016) 10.1016/j.neuropsychologia.2015.10.023

- [73]. Kaiser D., Turini J., Cichy R.M.: A neural mechanism for contextualizing fragmented inputs during naturalistic vision. eLife 8, e48182 (2019) 10.7554/eLife.48182

- [74]. Proklova D., Kaiser D., Peelen M.V.: Disentangling representations of object shape and object category in human visual cortex: The animate–inanimate distinction. Journal of Cognitive Neuroscience 28(5), 680–692 (2016) 10.1162/jocn_a_00924

- [75]. Tucciarelli R., Wurm M., Baccolo E., Lingnau A.: The representational space of observed actions. eLife 8, e47686 (2019) 10.7554/eLife.47686

- [76]. Millet J., Caucheteux C., Boubenec Y., Gramfort A., Dunbar E., Pallier C., King J.-R., et al.: Toward a realistic model of speech processing in the brain with self-supervised learning. Advances in Neural Information Processing Systems 35, 33428–33443 (2022)

- [77]. Chang F., Dell G.S., Bock K.: Becoming syntactic. Psychological Review 113(2), 234 (2006) 10.1037/0033-295X.113.2.234