Abstract

Deep convolutional neural network approaches often assume that the feature response has a Gaussian distribution with a target-centered peak response, which can be used to guide target localization and classification. Nevertheless, such an assumption is implausible when there is progressive interference from other targets and/or background noise, which produces sub-peaks on the tracking response map and causes model drift. In this paper, we propose a feature response regularization approach for sub-peak response suppression and peak response enforcement, aiming to handle progressive interference systematically. Our approach, referred to as Peak Response Regularization (PRR), applies a simple yet efficient method to aggregate and align discriminative features. It converts local extremal responses in discrete feature space to extremal responses in continuous space, which enforces the localization and representation capability of convolutional features. Experiments on human pose detection, object detection, object tracking, and image classification demonstrate that PRR improves the performance of these tasks at a negligible computational cost.

Subject terms: Electrical and electronic engineering, Computer science, Information technology, Statistics

Introduction

Over the past decade, convolutional neural networks (CNNs) have developed rapidly and achieved breakthroughs in image classification1–3, object detection4,5, object tracking6–8, and autonomous driving9,10. By stacking and combining different convolutional layers, pooling operations and activation functions, CNNs can obtain features at different levels together with global semantic information11,12. Although CNNs have become the dominant model for object detection tasks, many problems remain in practical use. Their performance in precise target positioning is still limited for the following two reasons:

To achieve the invariance of target movement and deformation, and reduce the amount of computation, the CNNs use multiple pooling operations to reduce the spatial resolution, extract high-level semantic features, and confuse the spatial location of features, but also lose some information of network features13–15;

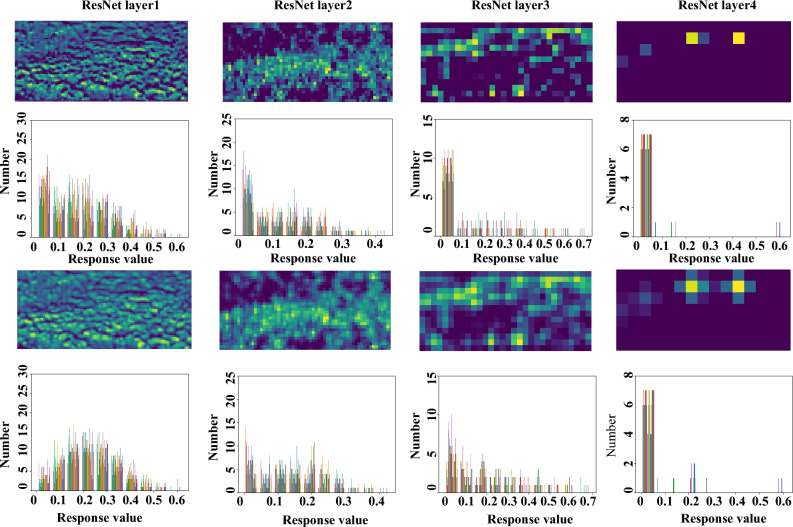

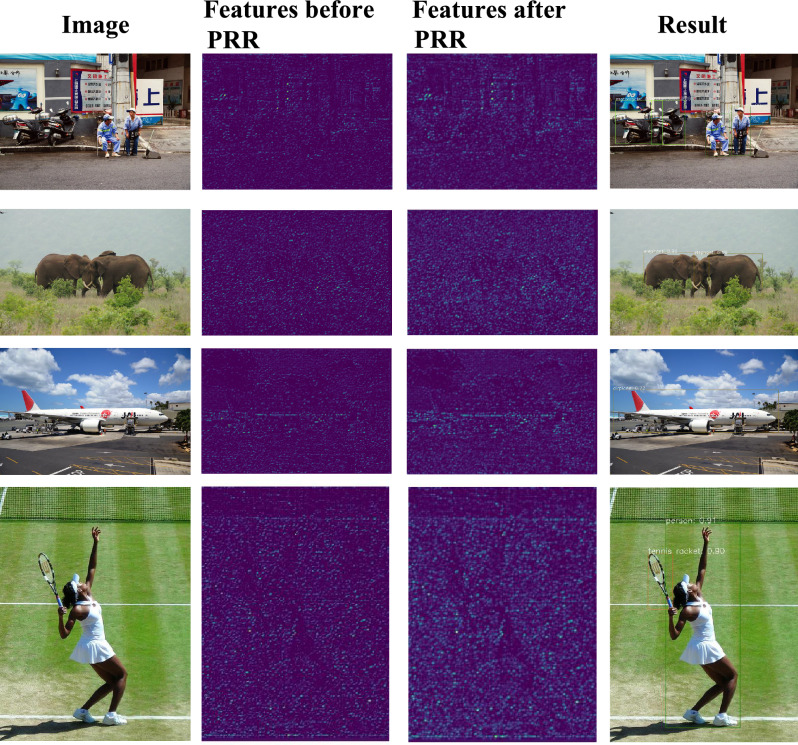

Many factors, including multi-target occlusion, appearance variance and/or background noise, remain challenging state-of-the-art CNN-based methods. As shown in Fig. 1(up), The intermediate features of visual tasks will be seriously lost with the deepening of features, and the target-centered assumption has been challenged16.

Figure 1.

Feature response maps (first and third rows) and histograms of feature response without (second row) and with (fourth row) PRR. (Best viewed in color and with zoom).

To address these issues, batch normalization (BN) is an effective method to normalize features16; it mitigates exploding and vanishing gradients and also improves the discriminative ability of network features. RetinaNet17 uses focal loss to alleviate the imbalance between positive and negative samples during training and thereby strengthen feature learning. SENet18 applies squeeze-and-excitation operations to the channels of a neural network, which automatically learn the importance of different channel features. Dilated convolution19 is designed to increase the receptive field and improve the positioning accuracy of small targets. Although these methods have achieved good results, inaccurate or even incorrect positioning still occurs because of the problems above. Existing methods usually apply specific post-processing to the feature map but neglect regularization of the feature space; the feature distribution is therefore still not an ideal Gaussian distribution, which affects the learning efficiency of the network. There are few studies on direct spatial regularization of convolutional features20–22.

Therefore, our aim is to enhance the localization and representation capability of convolutional features in a straightforward and efficient manner. We propose a simple yet effective cross maximization as the peak response regularization method to enforce and align the extremal response while suppressing the sub-extremal response. It works as a plug-and-play module in computer vision tasks to adjust the shallow and deep features of a neural network and improves the performance of multiple tasks with negligible additional computation. Finally, the effectiveness of our algorithm is verified on human pose detection, object detection, object tracking and image classification tasks.

The remainder of this paper is organized as follows. Related work is described in the “Related work” section, and the proposed PRR approach is presented in the “Peak response regularization (PRR)” section. Experimental results are given in the “Experiment” section. We conclude the paper in the “Conclusion” section.

Related work

By incorporating the spatial relation of features, CNNs have become effective models for spatial localization tasks including visual object tracking23–25, human pose detection26,27, object detection17,28 and image classification18,29. Nevertheless, CNNs developed for these tasks usually focus on finding discriminative representations through extensive offline learning and overlook the variance of feature response and interference from objects’ context areas.

Image classification

Image classification is a classic task. Dilated convolution19 is designed to increase the receptive field and improve the positioning accuracy of small targets. SENet18 leverages a squeeze-and-excitation operation to filter out local extremal features while enforcing feature representation. Nevertheless, it does not involve any spatial regularization of convolutional features. Although SENet can enforce the discrimination capability of channels, it has negligible impact on the spatial distribution of features.

Human pose detection

In human pose detection, most methods model keypoints as Gaussian distributions. OpenPose26 achieves accurate keypoint localization by using Part Affinity Fields. In subsequent work27, a Part Intensity Field (PIF) and a Part Association Field (PAF) are proposed to associate body parts into full human poses. High-resolution networks and hourglass networks are also used to achieve precise keypoint localization30.

Object detection

In object detection, point-based methods31,32 use corner points to detect objects while leveraging corner/center pooling to align features and improve object localization. FreeAnchor33 incorporates a learning-to-match mechanism to break IoU restriction, allowing objects to localize anchors/features in a flexible manner. Dilated convolution19 is designed to increase receptive fields while improving the localization precision of small objects.

Object tracking

Object tracking is formulated in a metric (similarity) learning framework, assuming that the object response has a Gaussian distribution with a target-centered peak response to facilitate state estimation. Classification and state estimation are integrated into a Siamese network34 to measure the similarity between the target and the candidates for tracking. Semantic branches and appearance branches are constructed in a dual Siamese network35, and saliency mechanisms are introduced in the attention-based Siamese network36. SiamRPN24,37 combines the Siamese network with region proposal network (RPN), allowing trackers to estimate target extent when positioned accurately. SiamRPN++24 introduces a deeper feature network into the SiamRPN37, which successfully enables the Siamese network to perform end-to-end offline pre-training on ResNet29.

Despite the effectiveness of various object/pose localization approaches, direct spatial regularization of convolutional features is seldom explored. Existing approaches usually use specific post-processing on feature maps but unfortunately ignore feature spatial regularization. In this paper, we propose Peak Response Regularization (PRR) and aim to enforce both the localization and representation capability of convolutional features in an efficient manner.

Peak response regularization (PRR)

Feature extraction, coding and fusion of visual features are important components of semantic image analysis methods. Convolutional neural networks combine these traditionally independent steps, and the effectiveness of visual tasks depends on the effectiveness of each step. Object detection, for example, uses a convolutional neural network to extract features and encode the target into a Gaussian feature model. Accurate object localization is crucial in many computer vision tasks including human pose detection, visual object detection, object tracking, and image recognition. Despite the unprecedented performance achieved by CNNs in object localization, they remain challenged by the variance of object appearance and interference from backgrounds.

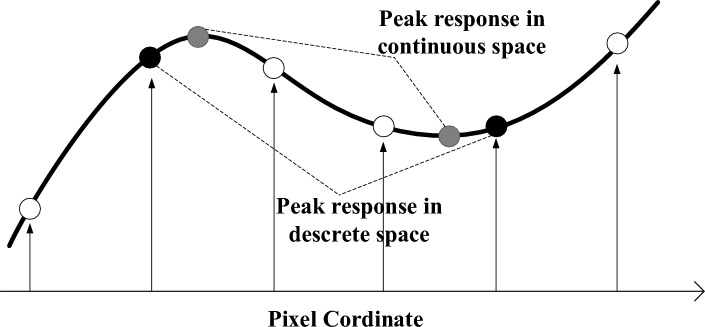

Peak response in continuous space

The peaks on a feature response map lie in discrete space rather than continuous space. Identifying the most stable features requires converting the peaks from discrete space to continuous space, as shown in Fig. 2. To this end, we first model the peak response from the perspective of extremal point detection in continuous space. Let z and f(z) denote a pixel location and the response value at that location on the feature response map, respectively. Based on the Taylor expansion, we have

| 1 |

To obtain the extreme value of f(z) with respect to the location variable z, we set the derivative with respect to z to zero, as

| 2 |

and we have

| 3 |

and the extremal response value is calculated as

| 4 |
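For reference, the standard second-order argument behind this kind of sub-pixel extremum localization can be sketched as follows. This is the textbook form and is not claimed to match the notation of Eqs. 1–4 exactly; the discrete peak location $z_0$, the offset $\Delta z$, the gradient $g$ and the Hessian $H$ are symbols assumed here for illustration.

```latex
\begin{align}
f(z_0 + \Delta z) &\approx f(z_0) + g^{\top}\Delta z
    + \tfrac{1}{2}\,\Delta z^{\top} H\,\Delta z,
  \qquad g = \left.\frac{\partial f}{\partial z}\right|_{z_0},\quad
         H = \left.\frac{\partial^{2} f}{\partial z^{2}}\right|_{z_0} \\
\frac{\partial f(z_0 + \Delta z)}{\partial \Delta z} &= g + H\,\Delta z = 0 \\
\Delta\hat{z} &= -H^{-1} g \\
f(z_0 + \Delta\hat{z}) &\approx f(z_0) + \tfrac{1}{2}\, g^{\top}\Delta\hat{z}
\end{align}
```

The last line follows by substituting $\Delta\hat{z}$ into the expansion: the linear term contributes $-g^{\top}H^{-1}g$ and the quadratic term contributes $+\tfrac{1}{2}g^{\top}H^{-1}g$ for a symmetric $H$.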

Figure 2.

Illustration of continuous and discrete extremal values (peak response).
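The conversion from a discrete peak to a continuous one can be illustrated with a small pure-Python sketch. It assumes the usual second-order Taylor refinement with central finite differences; the function name `refine_peak` and the test surface are hypothetical and are not taken from the paper's implementation.

```python
def refine_peak(fmap, r, c):
    """Refine an interior discrete peak (r, c) to a continuous location.

    Estimates the gradient g and Hessian H with central finite differences,
    then applies offset = -H^{-1} g and value = f + 0.5 * g . offset.
    Returns (row, col, value) in continuous coordinates.
    """
    f = fmap
    # First derivatives (x runs along columns, y along rows).
    gx = (f[r][c + 1] - f[r][c - 1]) / 2.0
    gy = (f[r + 1][c] - f[r - 1][c]) / 2.0
    # Second derivatives.
    fxx = f[r][c + 1] - 2.0 * f[r][c] + f[r][c - 1]
    fyy = f[r + 1][c] - 2.0 * f[r][c] + f[r - 1][c]
    fxy = (f[r + 1][c + 1] - f[r + 1][c - 1]
           - f[r - 1][c + 1] + f[r - 1][c - 1]) / 4.0
    det = fxx * fyy - fxy * fxy
    if abs(det) < 1e-12:
        # Degenerate Hessian: keep the discrete peak unchanged.
        return float(r), float(c), float(f[r][c])
    # offset = -H^{-1} g for the 2x2 symmetric Hessian.
    dx = -(fyy * gx - fxy * gy) / det
    dy = -(fxx * gy - fxy * gx) / det
    value = f[r][c] + 0.5 * (gx * dx + gy * dy)
    return r + dy, c + dx, value


# Hypothetical test surface: a quadratic whose true maximum (value 10)
# lies off the pixel grid at x = 2.3, y = 1.6.
grid = [[10.0 - (x - 2.3) ** 2 - 2.0 * (y - 1.6) ** 2 for x in range(5)]
        for y in range(5)]
row, col, val = refine_peak(grid, 2, 2)
print(row, col, val)
```

Because the test surface is exactly quadratic, the refinement recovers the off-grid maximum up to floating-point error; on real response maps the result is only an approximation.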

Response regularization

To identify the peak response in continuous space, we approximate it by maximizing response values in the horizontal and vertical (row and column) orientations, respectively, as shown in Fig. 3. On the predicted feature map, horizontal PRR is first performed to concentrate the feature map into a horizontal pooling map. This is done by finding the maximum feature in each row of the feature map and assigning that maximum to all pixels in the row. Similarly, vertical PRR is performed on each column of the feature map to obtain the vertical pooling map. The horizontal and vertical pooling maps are summed, as

| 5 |

where (p, q) is the location at which the response value is evaluated and i is the size of the sliding window. According to Eq. 4, the feature values are converted to

| 6 |

where the normalization factor is experimentally set to 0.5.

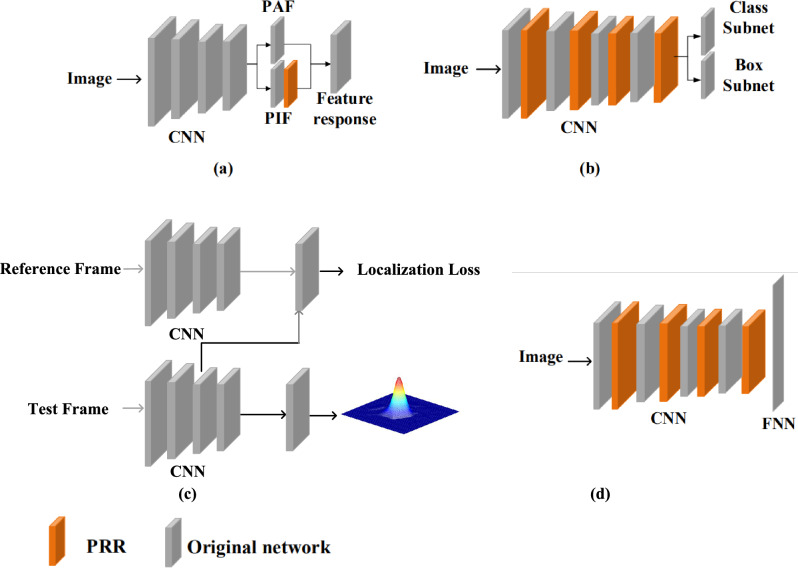

Figure 3.

Illustration of the proposed PRR approach: cross maximization enforces and aligns the extremal response while suppressing the sub-extremal response with a sliding window. (Best viewed in color).
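The row and column maximization described in this section can be sketched in a few lines of pure Python. The combination rule is an assumption made for illustration: each output value is the original response plus the normalization factor (0.5) times the sum of the windowed row maximum and the windowed column maximum. The function name `cross_max` and its parameters are hypothetical, and the actual forms of Eqs. 5 and 6 may differ.

```python
def cross_max(fmap, window, alpha=0.5):
    """Cross maximization over a sliding window (illustrative sketch).

    For each location (p, q), take the maximum response within `window`
    pixels along the row and along the column (both centered at (p, q) and
    clipped at the borders), sum the two maxima, and add the sum, scaled by
    `alpha`, to the original response. The additive rule is an assumption.
    """
    rows, cols = len(fmap), len(fmap[0])
    half = window // 2
    out = [[0.0] * cols for _ in range(rows)]
    for p in range(rows):
        for q in range(cols):
            # Horizontal pooling: maximum along row p inside the window.
            h = max(fmap[p][max(0, q - half):min(cols, q + half + 1)])
            # Vertical pooling: maximum along column q inside the window.
            v = max(fmap[r][q]
                    for r in range(max(0, p - half), min(rows, p + half + 1)))
            out[p][q] = fmap[p][q] + alpha * (h + v)
    return out


# A main peak (4) with a weaker sub-peak (1) in the corner.
demo = cross_max([[0, 0, 0],
                  [0, 4, 0],
                  [0, 0, 1]], window=3)
for line in demo:
    print(line)
```

Locations that share a row or column with a strong response inherit part of it, which produces the cross-shaped pattern in the figure. Larger windows spread each maximum further along its row and column.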

Object localization

The proposed PRR is applied to feature response maps for typical object localization tasks including human pose detection, visual object detection and image classification, as shown in Fig. 4. Different sliding window sizes are adopted for different tasks. For object tracking, we set the window size to the size of the feature map, which suppresses the sub-peaks of the response map and reduces model drift. In human pose detection, the sliding window is set to be small (5×5) to capture different local maxima (representing joints of different human bodies). In object detection, the sliding window is very small (3×3). Considering that in the single-stage object detection framework each single deep pixel is used to represent an object, a 3×3 region can cover both the object and its context area.

Figure 4.

Network architecture with Peak Response Regularization (PRR) for human pose detection, object detection and image classification.
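The task-dependent window sizes stated above can be captured in a small helper. This is only a configuration sketch: the function name `prr_window_size` and the task keys are hypothetical, and no window size is given in the text for image classification, so that case is deliberately left unsupported.

```python
def prr_window_size(task, fmap_height, fmap_width):
    """Return the sliding-window size used by PRR for a given task.

    Values follow the text: the whole feature map for tracking,
    5x5 for human pose detection, and 3x3 for object detection.
    """
    if task == "tracking":
        # Window as large as the feature map, to suppress every sub-peak.
        return max(fmap_height, fmap_width)
    if task == "pose":
        return 5
    if task == "detection":
        return 3
    raise ValueError(f"no window size stated for task: {task!r}")


print(prr_window_size("tracking", 18, 18))
print(prr_window_size("pose", 64, 48))
print(prr_window_size("detection", 100, 152))
```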

Human pose detection

Human pose detection defines a keypoint coding problem by using a Gaussian mixture distribution prior. The key to human pose detection lies in precise keypoint localization. Unlike object tracking, there are multiple local maxima on the keypoint response map. When performing pose detection, sub-peak responses around keypoints can produce interference and reduce the precision of keypoint localization. To enforce keypoint detection, PRR is applied to the response map to regularize the feature distribution, as shown in Fig. 4a. The response map is produced by a state-of-the-art human pose detection approach27, which uses a Part Intensity Field (PIF) to localize keypoints and a Part Association Field (PAF) to estimate human poses from the detected keypoints.
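To make the Gaussian prior concrete, the sketch below builds a keypoint supervision map with one Gaussian bump per keypoint. It is a generic illustration and not the PifPaf encoding: the function name `gaussian_heatmap`, the value of `sigma`, and the choice of combining bumps with a pixel-wise maximum are all assumptions.

```python
import math


def gaussian_heatmap(height, width, keypoints, sigma=1.0):
    """Build a heatmap with a unit-height Gaussian at each (row, col) keypoint.

    Overlapping Gaussians are merged with a pixel-wise maximum so that every
    keypoint keeps a peak value of exactly 1.0 (an assumed design choice).
    """
    heat = [[0.0] * width for _ in range(height)]
    for ky, kx in keypoints:
        for y in range(height):
            for x in range(width):
                d2 = (x - kx) ** 2 + (y - ky) ** 2
                g = math.exp(-d2 / (2.0 * sigma ** 2))
                heat[y][x] = max(heat[y][x], g)
    return heat


# Two hypothetical keypoints on a 7x7 map.
heat = gaussian_heatmap(7, 7, [(1, 1), (5, 4)])
print(heat[1][1], heat[5][4], round(heat[3][3], 4))
```

A response map that fits such a target well has one clean maximum per keypoint, which is the property the regularization is meant to encourage.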

Object detection

CNN-based object detection typically consists of an object classification module and an object localization module. While the classification module aims to classify a region of convolutional feature maps into object categories, the localization module predicts object extent via a bounding-box regression procedure17. During the procedure, object appearance and background noise lead to variance of peak response, which deteriorates object classification and/or localization. PRR is therefore applied after each convolutional layer to regularize peak response while enforcing feature representation, Fig. 4b.

Object tracking

Object tracking usually assumes that the feature response has a Gaussian distribution with target-centered peak response25,34. Nevertheless, such an assumption is implausible when there is progressive interference from other targets and/or background noise, which produces sub-peaks on the tracking response map and causes model drift. To mitigate the interference, PRR is applied to aggregate and align discriminative features to modify the tracking response to Gaussian distribution, as shown in Fig. 4c.
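One rough way to quantify the sub-peaks discussed above is to list the local maxima of a response map and compare the second-highest with the highest. The sketch below does this in pure Python; the names `local_peaks` and `sub_peak_ratio` and the toy response map are hypothetical, and the ratio is an illustrative diagnostic, not a metric used in the paper.

```python
def local_peaks(fmap):
    """Return (value, row, col) for every strict local maximum.

    A location is a peak if it is strictly greater than all of its
    8-connected neighbours. Peaks are sorted from highest to lowest.
    """
    rows, cols = len(fmap), len(fmap[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            v = fmap[r][c]
            neighbours = [fmap[rr][cc]
                          for rr in range(max(0, r - 1), min(rows, r + 2))
                          for cc in range(max(0, c - 1), min(cols, c + 2))
                          if (rr, cc) != (r, c)]
            if all(v > n for n in neighbours):
                peaks.append((v, r, c))
    return sorted(peaks, reverse=True)


def sub_peak_ratio(fmap):
    """Ratio of the second-highest peak to the highest (0.0 if no sub-peak)."""
    peaks = local_peaks(fmap)
    if len(peaks) < 2 or peaks[0][0] == 0:
        return 0.0
    return peaks[1][0] / peaks[0][0]


# Toy tracking response: target peak at (1, 1), distractor sub-peak at (3, 3).
response = [
    [0.0, 0.1, 0.0, 0.0, 0.0],
    [0.1, 1.0, 0.1, 0.0, 0.0],
    [0.0, 0.1, 0.0, 0.2, 0.0],
    [0.0, 0.0, 0.2, 0.6, 0.2],
    [0.0, 0.0, 0.0, 0.2, 0.0],
]
print(local_peaks(response))
print(sub_peak_ratio(response))
```

A ratio close to 1 means a distractor responds almost as strongly as the target, which is the situation in which a tracker is likely to drift.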

Image classification

Image classification is a fundamental problem in computer vision, which aims to classify images based on pre-trained network models18,29. Although image classification does not explicitly involve object localization, PRR on feature response maps can also benefit feature representation and improve classification performance. As in object detection, PRR is applied after each convolutional layer to align the most discriminative feature representation to local peaks, as shown in Fig. 4d.

Experiment

Implementation details

In this section, we evaluate the performance of object tracking, human pose estimation, object detection and classification networks with and without PRR. Experiments are carried out with PyTorch on an Intel Xeon E5-2678 v3 CPU (2.5 GHz × 48) and Nvidia RTX 2080 Ti GPU × 4 with 11 GB × 4 memory.

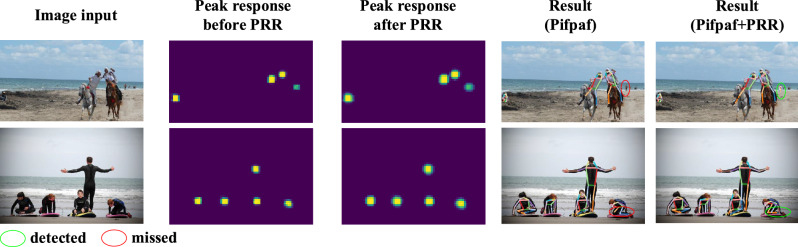

Peak response regularization

PRR targets regularizing the peak response from discrete space to continuous space. In Fig. 5, the peak response with/without PRR is compared. It can be seen that with PRR, the response map is smoothed and can better fit the Gaussian distribution priors (supervision), which facilitates keypoint detection. In the deep learning framework, better fitting of supervision usually means easier training of the network model and higher performance. In Fig. 1, the response feature maps for object detection are compared.

Figure 5.

Human pose detection with/without PRR on the MS-COCO 2017 dataset. With PRR, the response map is smoothed and can better fit the Gaussian distribution priors (supervision), which facilitates keypoint detection.

It can be seen that after PRR, the response feature maps are regularized to form distributions centered at local response peaks, particularly in the deeper fourth convolutional layer. Such a feature distribution can be more robust to object appearance variance and noise interference. By comparing feature histograms before and after PRR, we can see that PRR reduces the low-response features while enforcing the local peak response. After PRR, the global histogram is regularized to enforce the effective (larger) features while suppressing trivial ones.

Performance and comparison

Precision

For human pose detection, we test the proposed PRR approach on the MS-COCO 2017 dataset by adding PRR after the PIF module of PifPaf27. In Fig. 5, it can be seen that with PRR the local response peaks are regularized to be more continuous. The regularized peak response fits the Gaussian distribution prior (supervision) better and yields more accurate keypoint localization. As a result, our approach improves the average precision (AP) by 1.7 points (from 62.6% to 64.3%), as reported in Table 1, which is a significant margin for the challenging pose detection task. As shown in the first row of Fig. 5, the baseline PifPaf approach misses a small-scale person. In contrast, our approach detects the small person owing to the improved localization capability of the regularized feature response.

Table 1.

Comparison of pose detection performance and time cost on the MS-COCO 2017 dataset.

| Backbone | Detector | AP | Time [ms] | |
|---|---|---|---|---|
| ResNet-50 | PifPaf27 | 62.6 | 79 | 39 |
| ResNet-50 | PifPaf+PRR (ours) | 64.3 | 80 | 39 |

Significant values are in bold.

For object detection, RetinaNet17 with ResNet-50 is selected as the baseline, and the proposed PRR is added to each convolutional layer of the ResNet backbone. As shown in Table 2 and Fig. 6, on the MS-COCO 2017 dataset the introduction of PRR improves the AP by 1.6 points (from 35.7% to 37.3%), which again validates the effectiveness of the proposed approach.

Table 2.

Comparison of object detection performance and time cost on the MS-COCO 2017 dataset.

| Backbone | Detector | AP | AP50 | AP75 | AP_S | AP_M | AP_L | Time [ms] |
|---|---|---|---|---|---|---|---|---|
| ResNet-50 | RetinaNet17 | 35.7 | 55.0 | 38.5 | 18.9 | 38.9 | 46.3 | 153 |
| ResNet-50 | RetinaNet+PRR | 37.3 | 56.4 | 39.9 | 21.4 | 40.9 | 49.6 | 154 |

Significant values are in bold.

Figure 6.

Object detection with/without PRR on the MS-COCO 2017 dataset. With PRR, the response map is more salient, so that more features can be extracted to assist localization.

For object tracking, the ATOM tracker25, which has a target classification branch and a target localization branch, is used as the baseline. The classification branch converts the feature map into a response map and provides the coarse location of the target. On top of the classification branch, PRR is applied in a plug-and-play manner to regularize the response map toward a Gaussian distribution. On the VOT201838 dataset, PRR improves EAO by 0.019 (0.420 vs. 0.401), as reported in Table 3.

Table 3.

Tracking performance comparison on VOT-2018.

| Tracker | EAO | Accuracy | Robustness | FPS |
|---|---|---|---|---|
| ATOM+PRR | 0.420 | 0.609 | 0.191 | 39 |
| ATOM25 | 0.401 | 0.590 | 0.204 | 40 |

For classification, adding PRR to ResNet-50 improves the top-1 image classification accuracy by 0.9 points (from 76.2% to 77.1%) on the ImageNet39 dataset, as reported in Table 4.

Time cost

As reported in Table 1, our method takes only slightly longer than PifPaf to detect human poses: PifPaf takes 79 ms on average and our approach takes 80 ms. In Table 3, with a single GPU, the proposed PRR achieves a tracking speed of 39 fps, compared with 40 fps for the baseline ATOM. In Tables 2 and 4, we further validate that PRR achieves significant detection and classification performance gains with negligible computational cost.

Table 4.

Comparison of image classification performance and time cost on the ImageNet dataset.

| Backbone | Top-1 Acc. | Top-5 Acc. | Time [ms] |
|---|---|---|---|
| ResNet-5029 | 76.2 | 92.9 | 5 |
| ResNet-50+PRR | 77.1 | 93.4 | 5 |

Significant values are in bold.
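The overhead implied by the reported timings can be checked with a few lines of arithmetic. The sketch below uses only the latency and speed figures from Tables 1 to 4; the helper name `relative_change` is hypothetical.

```python
def relative_change(baseline, with_prr):
    """Signed relative change of `with_prr` against `baseline`, in percent."""
    return 100.0 * (with_prr - baseline) / baseline


# (baseline, with PRR) latency pairs in milliseconds, copied from the tables.
latency_ms = {
    "pose detection (Table 1)": (79, 80),
    "object detection (Table 2)": (153, 154),
    "classification (Table 4)": (5, 5),
}
# (baseline, with PRR) tracking speed in frames per second, from Table 3.
tracking_fps = (40, 39)

for name, (base, prr) in latency_ms.items():
    print(f"{name}: {relative_change(base, prr):+.2f}% latency")
print(f"tracking (Table 3): {relative_change(*tracking_fps):+.2f}% fps")
```

On these figures the added latency is roughly 1.3% for pose detection and 0.7% for object detection, with no measurable change for classification at millisecond resolution, and the tracking speed drops by 2.5%.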

Conclusion

Precise object localization is a primary problem in many computer vision tasks including object tracking, object detection, and human pose detection. Nevertheless, the localization of the target object remains challenged by interference from nearby objects, object appearance variation, and background noise. In this paper, we propose a peak response modeling approach that alleviates localization interference from the perspective of feature response regularization. A plug-and-play Peak Response Regularization (PRR) is proposed to convert local extremal responses in discrete feature space to continuous space in order to aggregate and align discriminative features. The proposed feature response regularization improves the performance of object tracking, image classification, pose detection and object detection, in striking contrast with the baseline approaches. The proposed approach can provide new insight into object localization with convolutional features.

Author contributions

Conceptualization, J.W.Y. and Q.T.H.; Methodology, J.W.Y., C.X.Z. and Q.T.H.; Collection, X.H.Z., and Q.T.H.; Writing–Original Draft Preparation, J.Z.Y. and Q.T.H.; Writing–Review & Editing, J.W.Y. and Q.T.H.

Data availability

Deidentified data analyzed in this study will be available to share upon request to the corresponding author.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Liu, Z. et al. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 11976–11986 (2022).

- 2.Yadav SS, Jadhav SM. Deep convolutional neural network based medical image classification for disease diagnosis. J. Big data. 2019;6:1–18. doi: 10.1186/s40537-019-0276-2.

- 3.He, T. et al. Bag of tricks for image classification with convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 558–567 (2019).

- 4.Diwan T, Anirudh G, Tembhurne JV. Object detection using yolo: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 2022;82:1–33. doi: 10.1007/s11042-022-13644-y.

- 5.Wang, C. -Y., Bochkovskiy, A. & Liao, H. -Y. M. Yolov7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv preprint arXiv:2207.02696 (2022).

- 6.Bhat, G., Danelljan, M., Gool, L. V. & Timofte, R. Learning discriminative model prediction for tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision 6182–6191 (2019).

- 7.Zhang, Y. et al. Bytetrack: Multi-object tracking by associating every detection box. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXII 1–21 (Springer, 2022).

- 8.Liang Y, Liu Y, Yan Y, Zhang L, Wang H. Robust visual tracking via spatio-temporal adaptive and channel selective correlation filters. Pattern Recognit. 2020;112:107738. doi: 10.1016/j.patcog.2020.107738.

- 9.Hossain MU, et al. Automatic driver distraction detection using deep convolutional neural networks. Intell. Syst. Appl. 2022;14:200075.

- 10.Zhang J, Xie Z, Sun J, Zou X, Wang J. A cascaded R-CNN with multiscale attention and imbalanced samples for traffic sign detection. IEEE Access. 2020;8:29742–29754. doi: 10.1109/ACCESS.2020.2972338.

- 11.Nirthika R, Manivannan S, Ramanan A, Wang R. Pooling in convolutional neural networks for medical image analysis: A survey and an empirical study. Neural Comput. Appl. 2022;34:5321–5347. doi: 10.1007/s00521-022-06953-8.

- 12.Dubey SR, Singh SK, Chaudhuri BB. Activation functions in deep learning: A comprehensive survey and benchmark. Neurocomputing. 2022;503:92–108. doi: 10.1016/j.neucom.2022.06.111.

- 13.Graham, B. Fractional Max-Pooling. arXiv preprint arXiv:1412.6071 (2014).

- 14.Liu, W., Liao, S., Ren, W., Hu, W. & Yu, Y. High-level semantic feature detection: A new perspective for pedestrian detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 5187–5196 (2019).

- 15.Dou, Q., Coelho de Castro, D., Kamnitsas, K. & Glocker, B. Domain generalization via model-agnostic learning of semantic features. Adv. Neural Inf. Process. Syst. 32 (2019).

- 16.Santurkar, S., Tsipras, D., Ilyas, A. & Madry, A. How does batch normalization help optimization?. Adv. Neural Inf. Process. Syst. 31 (2018).

- 17.Lin, T.-Y., Goyal, P., Girshick, R., He, K. & Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision 2980–2988 (2017).

- 18.Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 7132–7141 (2018).

- 19.Yu, F. & Koltun, V. Multi-scale Context Aggregation by Dilated Convolutions. arXiv preprint arXiv:1511.07122 (2015).

- 20.Wang, T., Huang, J., Zhang, H. & Sun, Q. Visual commonsense R-CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 10760–10770 (2020).

- 21.Akhtar N, Ragavendran U. Interpretation of intelligence in CNN-pooling processes: A methodological survey. Neural Comput. Appl. 2020;32:879–898. doi: 10.1007/s00521-019-04296-5.

- 22.Khumaidi, A., Yuniarno, E. M. & Purnomo, M. H. Welding defect classification based on convolution neural network (CNN) and gaussian kernel. In 2017 International Seminar on Intelligent Technology and Its Applications (ISITIA) 261–265 (IEEE, 2017).

- 23.Zhipeng, Z., Houwen, P. & Qiang, W. Deeper and wider Siamese networks for real-time visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4591–4600 (2019).

- 24.Li, B. et al. Siamrpn++: Evolution of Siamese visual tracking with very deep networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4282–4291 (2019).

- 25.Danelljan, M., Bhat, G., Khan, F. S. & Felsberg, M. Atom: Accurate tracking by overlap maximization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4660–4669 (2019).

- 26.Cao, Z., Hidalgo, G., Simon, T., Wei, S. -E. & Sheikh, Y. Openpose: Realtime Multi-person 2D Pose Estimation Using Part Affinity Fields. arXiv preprint arXiv:1812.08008 (2018).

- 27.Kreiss, S., Bertoni, L. & Alahi, A. Pifpaf: Composite fields for human pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 11977–11986 (2019).

- 28.Yuan J, et al. Gated CNN: Integrating multi-scale feature layers for object detection. Pattern Recognit. 2020;105:107131. doi: 10.1016/j.patcog.2019.107131.

- 29.He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

- 30.Newell, A., Yang, K. & Jia, D. Stacked hourglass networks for human pose estimation. In Proceedings of the European Conference on Computer Vision 483–499 (2016).

- 31.Duan, K. et al. Centernet: Keypoint Triplets for Object Detection. arXiv preprint arXiv:1904.08189 (2019).

- 32.Law H, Deng J. Cornernet: Detecting objects as paired keypoints. Int. J. Comput. Vis. 2020;128:642–656. doi: 10.1007/s11263-019-01204-1.

- 33.Zhang, X., Wan, F., Liu, C., Ji, R. & Ye, Q. Freeanchor: Learning to match anchors for visual object detection. Adv. Neural Inf. Process. Syst. 147–155 (2019).

- 34.Bertinetto, L., Valmadre, J., Henriques, J. F., Vedaldi, A. & Torr, P. H. S. Fully-convolutional Siamese networks for object tracking. In Proceedings of the European Conference on Computer Vision Workshop 850–865 (2016).

- 35.He, A., Chong, L., Tian, X. & Zeng, W. A twofold Siamese network for real-time object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4834–4843 (2018).

- 36.Wang, Q., Teng, Z., Xing, J., Gao, J. & Maybank, S. Learning attentions: Residual attentional Siamese network for high performance online visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4854–4863 (2018).

- 37.Li, B., Yan, J., Wu, W., Zhu, Z. & Hu, X. High performance visual tracking with Siamese region proposal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 8971–8980 (2018).

- 38.Kristan, M. et al. The sixth visual object tracking vot2018 challenge results. In Proceedings of the European Conference on Computer Vision Workshop 3–53 (2018).

- 39.Deng, J. et al. Imagenet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009).
