Abstract

Background

Machine learning (ML)-derived notifications for impending episodes of hemodynamic instability and respiratory failure events are interesting because they can alert physicians in time to intervene before these complications occur.

Research Question

Do ML alerts, telemedicine system (TS)-generated alerts, or biomedical monitors (BMs) have superior performance for predicting episodes of intubation or administration of vasopressors?

Study Design and Methods

An ML algorithm was trained to predict intubation and vasopressor initiation events among critically ill adults. Its performance was compared with BM alarms and TS alerts.

Results

ML notifications were substantially more accurate and precise, with 50-fold lower alarm burden than TS alerts for predicting vasopressor initiation and intubation events. ML notification performance in the internal validation cohorts was similar to that in an independent academic medical center external validation cohort and a COVID-19 cohort. Characteristics were also measured for a control group of recent patients that validated event detection methods and compared TS alert and BM alarm performance. The TS test characteristics were substantially better, with 10-fold less alarm burden than BM alarms. The accuracy of ML alerts (0.87-0.94) was in the range of other clinically actionable tests; the accuracy of TS (0.28-0.53) and BM (0.019-0.028) alerts was not. Overall test performance (F scores) for ML notifications was more than fivefold higher than for TS alerts, which was higher than that of BM alarms.

Interpretation

ML-derived notifications for clinically actioned hemodynamic instability and respiratory failure events represent an advance because the magnitude of the differences of accuracy, precision, misclassification rate, and pre-event lead time is large enough to allow more proactive care, and because they interrupt bedside physician workflows far less frequently.

Key Words: alarm, alert, critical care, patient monitoring, quality, safety

Take-home Points.

Study Question: How do machine learning alerts, physiologic trend-based telemedicine system-generated alerts, and biomedical monitor alarms compare regarding predicting episodes of intubation or administration of vasopressors?

Results: Machine learning notifications were superior to physiologic trend-based telemedicine system-generated alerts and biomedical monitor alarms regarding accuracy, precision, and alarm burden for predicting vasopressor initiation and intubation events.

Interpretation: Machine learning-derived notifications for clinically actioned vasopressor initiation and respiratory failure events represent an advance because the magnitude of the differences of accuracy, precision, misclassification rate, and longer pre-event lead time is large enough to allow more efficient monitoring and proactive care.

The biomedical monitors (BMs) that are considered essential tools for managing high-acuity critically ill, perioperative, ED, and cardiac patients are part of every modern hospital.1 These devices are designed to generate audible alarms at the time that abnormal heart rate, BP, oxygen saturation values, or arrhythmias are detected rather than to predict the future occurrence of clinical emergencies. The poor predictive performance and short pre-emergent event detection characteristics of BM alarms led to the development of telemedicine system (TS) alerts based on combinations of monitor signals and their trends over time. Although TS alerts have longer time to event characteristics than BM alarms, they also have high false alarm (false-positive) rates.1 The high frequency of BM alarms, when no clinical intervention is indicated, distracts providers. False alarms lead to alarm fatigue,2 which is a driver of burnout syndrome.3 Audible bedside alarms often awaken patients. ICU stays are associated with sleep disruption with up to 26% of audible alarms causing arousal from sleep. Excessive noise is the most common preventable cause of these awakenings, and ICU noise levels frequently approach and exceed 80 dB.4 Patients consistently identify staff conversations and BM alarms as the most disruptive noises affecting their sleep,5 and noise mapping studies localize the site of loudest noise generation to BMs.6 Unlike bedside BM alarms, TS and machine learning (ML) alerts are presented away from the bedside and do not awaken patients.

The value proposition for prediction of events that require emergent clinical intervention is that earlier recognition allows risk reduction and converts emergent to urgent provider tasks. Early warning allows time for risk reduction of the need for vasopressors or invasive mechanical ventilation by implementing countermeasures (eg, oxygen therapies, noninvasive ventilation, diuresis, antimicrobials, infection source control, administration of blood products before the onset of hypotension). Accurate prediction also allows better preparation of the patient and families when risk reduction is not achievable or wanted. Identifying predictive alerts with favorable performance characteristics justifies performing studies of interventions based on their clinical application.

We evaluated two novel ML models designed, respectively, to predict clinically actioned hemodynamic (HD) and respiratory failure (RF) events that we validated using independent cohorts, including a COVID-19 population that did not exist at the time of model derivation. This paper presents the findings of studies that test the hypothesis that ML-derived alerts are more accurate, have lower false-positive rates, are less frequent, and have longer pre-event lead times than TS alerts and BM system alarms.

Study Design and Methods

A retrospective cohort study was designed to develop and measure the ability of ML algorithms to predict HD and RF events and compare their accuracy to existing notification best practices. A derivation and two separate prospectively designated internal validation cohorts were selected from patients monitored by the UMass Memorial Health eICU program population (hereafter referred to as UMass). A prospectively collected WakeMed Health System eICU population (hereafter referred to as WakeMed) and a cohort of patients with COVID-19 that did not exist at the time of model derivation were used as external control groups. A recent prospectively collected UMass usual care non-COVID-19 control cohort was used to compare event rates and test characteristics of tuned TS alerts and BM alarms.

The staffing, scheduling, governance structure, and models of critical care delivery were not changed during the study. Identification of events and their time stamps were conducted per protocol and policy and were not under the control of the clinical investigator team. The study was approved in advance by the UMass Chan School of Medicine institutional review board (No. 19623). The paper is compliant with the 36 Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis elements that do not compromise intellectual property.

Study Population

UMass validation population data were derived from patient stays at seven ICUs of two hospitals that included 102 adult ICU beds, and were collected between July 17, 2006, and September 30, 2017 (Table 1). Patient-level BM alarm data could not be included because of vendor proprietary restrictions. UMass usual care control cohort patients were hospitalized between June 12, 2021, and July 22, 2021, at a time when ML notifications were not available, nearly all patients with COVID-19 had been discharged from the ICUs, after the ICU telemedicine alerting system had been tuned by masking low-acuity alerts that were identified as having high false-positive rates, and bedside nurses and telemedicine physicians had completed patient-level alert and alarm tuning training. The WakeMed population included patients with a stay in one of seven adult ICUs of two hospitals, collected from November 5, 2019, to July 6, 2020 (Table 1).

Table 1.

Demographic Characteristics of the Study Cohorts

| Characteristic | UMass Model Derivation Cohort | UMass Cohort 1 | UMass Cohort 2 | WakeMed Cohort | COVID-19 Cohort | UMass Usual Care Cohort |
|---|---|---|---|---|---|---|
| No. of valid stays | 59,573 | 6,098 | 6,116 | 3,191 | 513 | 362 |
| Sex, male | 34,002 (57.1) | 3,456 (56.7) | 3,472 (56.7) | 1,808 (56.7) | 289 (56.3) | 207 (57.2) |
| Age, y<sup>a</sup> | 64 (52-76) | 63 (51-75) | 63 (51-75) | 63 (50-74) | 66 (54-77) | 63 (49-77) |
| ICU type<sup>a</sup> | | | | | | |
| Medical | 25,200 (42.4) | 2,596 (42.6) | 2,619 (42.8) | 941 (30.8) | 316 (74.9) | 153 (42.2) |
| Surgical | 12,008 (20.2) | 1,261 (20.7) | 1,253 (20.5) | 818 (26.8) | 31 (7.3) | 73 (20.2) |
| Neurosciences | 11,288 (19.0) | 1,164 (19.1) | 1,167 (19.1) | 299 (9.8) | 71 (16.8) | 69 (19.1) |
| Cardiovascular | 10,991 (18.5) | 1,077 (17.6) | 1,077 (17.6) | 993 (32.5) | 4 (1.0) | 67 (18.5) |
| ICU LOS, h<sup>a</sup> | 62 (34-111) | 62 (36-112) | 61 (37-111) | 49 (26-102) | 168 (89-343) | 59 (33-112) |
| Mortality<sup>a</sup> | 7,100 (11.9) | 707 (11.6) | 709 (11.6) | 265 (8.3) | 110 (21.4) | 42 (11.6) |
| No. of events (RF and HD) | 8,892 | 909 | 907 | 1,026 | 1,078 | 77 |
| Vasopressor(s)<sup>a</sup> | 8,582 (14.4) | 841 (13.8) | 881 (14.4) | 905 (28.4) | 207 (40.4) | 51 (14.0) |
| MV<sup>a</sup> | 6,073 (10.2) | 645 (9.9) | 668 (10.2) | 509 (16.0) | 224 (43.7) | 36 (9.9) |
| Duration of MV, h<sup>a</sup> | 37 (13-97) | 34 (13-93) | 41 (14-107) | 36 (5-113) | 109 (26-242) | 33 (12-98) |
| MV and vasopressor<sup>a</sup> | 3,318 (5.6) | 329 (5.4) | 349 (5.7) | 339 (10.6) | 168 (32.8) | 20 (5.5) |
| Maximal SOFA score<sup>a</sup> | 6 (4-9) | 6 (4-9) | 6 (4-9) | 7 (4-13) | 11 (7-16) | 6 (4-9) |

Values are No. (%), median (interquartile range), or as otherwise indicated. P < .001 for all comparisons except age (P < .01). HD = hemodynamic; LOS = length of stay; MV = mechanical ventilation; RF = respiratory failure; SOFA = Sequential Organ Failure Assessment.

<sup>a</sup>The UMass cohort was significantly different than the other groups.

Study Design

The study inclusion criteria were as follows: a valid registration in a study ICU, aged ≥ 18 years at the time of ICU admission, and at least 6 h of ICU data. Exclusion criteria for a study visit were heart rate and oxygen saturation data frequency of less than one observation every 60 min or prior admission to an ICU during the same hospital stay.
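The inclusion and exclusion criteria can be read as a simple per-stay filter. The following is a minimal sketch of that reading; the `Stay` record and its field names are hypothetical and are not taken from the study database, and the data-frequency criterion is approximated here by the longest gap between observations.

```python
from dataclasses import dataclass


@dataclass
class Stay:
    # Hypothetical record layout; field names are illustrative only.
    age_years: float
    icu_data_hours: float        # hours of ICU data available
    max_gap_minutes: float       # longest gap between heart rate / SpO2 observations
    prior_icu_admission: bool    # earlier ICU admission in the same hospital stay
    valid_registration: bool = True


def is_eligible(stay: Stay) -> bool:
    """Apply the stated inclusion and exclusion criteria to one ICU stay."""
    included = (
        stay.valid_registration
        and stay.age_years >= 18
        and stay.icu_data_hours >= 6
    )
    # Assumption: "less than one observation every 60 min" is modeled as a
    # gap between observations that exceeds 60 min.
    excluded = stay.max_gap_minutes > 60 or stay.prior_icu_admission
    return included and not excluded
```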

Model Derivation

Deidentified data elements included ICU and hospital identifiers, ICU admission and discharge time stamps, age, sex, weight, admission diagnosis, nursing and respiratory flow sheet data, vital sign and oxygen saturation data, laboratory test results, and medication orders. After deidentification, interfaced data elements routinely used for telecritical care service delivery and extracted into telemedicine platforms were mapped using a standardized proprietary ontology protocol. The mapping process included translation from hospital-specific electronic feature code into a feature map, by transforming stay identifier, data element code, time, value, and unit values using a mapping procedure. After mapping, signal preprocessing was completed by removing redundant data, noninformative data, and values that were not within 3 SD of the values for that element, followed by median imputation of missing values.
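The outlier removal and imputation steps can be illustrated for a single data element. This is a minimal sketch only: it assumes the 3-SD band is centered on the element mean and that removed values are then imputed like other missing values, neither of which is specified in the text. The function name `preprocess` is hypothetical.

```python
from statistics import mean, median, stdev
from typing import Optional, Sequence


def preprocess(values: Sequence[Optional[float]], n_sd: float = 3.0) -> list:
    """Drop values more than n_sd SDs from the mean, then median-impute gaps."""
    observed = [v for v in values if v is not None]
    mu, sd = mean(observed), stdev(observed)
    # Assumption: out-of-range values are treated as missing and imputed,
    # so the series keeps its original length.
    cleaned = [
        v if v is not None and abs(v - mu) <= n_sd * sd else None
        for v in values
    ]
    fill = median(v for v in cleaned if v is not None)
    return [fill if v is None else v for v in cleaned]
```

For example, a heart rate series of values near 80 with one reading of 300 and one missing reading would have both positions replaced by the median of the remaining values.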

This processing allowed derivation of fixed element features and recording of their temporal trends (time series). Based on these transformed data sets, models were developed using both primary and derived features. The models received data and applied a temporal analytical procedure, gradually exposing data from the start of the stay through discharge. The models generated notifications for the prediction of RF and HD events using only data that were available up to the time point under consideration. Each notification was recorded with its time stamp (Fig 1).
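The temporal procedure amounts to replaying each stay and scoring it only on data recorded up to each evaluation time. A minimal sketch follows, assuming a 15-min evaluation step; `score` is a hypothetical stand-in for a trained model, and the sketch records every evaluation at or above the threshold rather than only the first crossing.

```python
from typing import Callable, Sequence, Tuple

Observation = Tuple[float, float]  # (minutes since ICU admission, value)


def replay_stay(
    observations: Sequence[Observation],
    score: Callable[[Sequence[Observation]], float],
    threshold: float,
    discharge_min: float,
    step_min: float = 15.0,
) -> list:
    """Return the time stamps (min) at which a notification would be issued."""
    notifications = []
    t = step_min
    while t <= discharge_min:
        # Expose only the data recorded up to the evaluation time t.
        visible = [obs for obs in observations if obs[0] <= t]
        if visible and score(visible) >= threshold:
            notifications.append(t)
        t += step_min
    return notifications
```

Filtering on `obs[0] <= t` is what keeps the evaluation free of look-ahead: an observation recorded at minute 40 cannot influence the prediction made at minute 30.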

Figure 1.

Methods of model development. Model features are collections of individual data points identified by critical care experts as carrying information regarding specific organ dysfunction. Temporal features are analyses of how features change over time. ADT = admission discharge and transfer; EMR = electronic medical record.

ML models for the prediction of RF and HD events were developed and tuned using hospital data from the UMass derivation cohort (59,573 stays; 80%), and their test characteristics were measured using two distinct and prospectively identified UMass internal validation cohorts (cohort 1: 6,098 stays [10%]; cohort 2: 6,116 stays [10%]) (Table 1). Cohorts were randomly generated, and the first set with approximately proportional sex, unit identifier, length of stay, and event frequency characteristics was selected. External validation was assessed by analyses of the WakeMed data set (3,191 stays; 100%) (Table 1).
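One way to picture the cohort selection is as repeated random 80/10/10 partitioning that stops at the first partition with balanced characteristics. The sketch below is an assumption about how such a procedure could work, not the authors' implementation; it checks only event frequency, and the `tolerance` value and the dictionary layout of a stay are hypothetical.

```python
import random
from typing import Sequence


def split_80_10_10(stays: Sequence[dict], tolerance: float = 0.02, max_tries: int = 1000):
    """Return the first random 80/10/10 split whose event rates are balanced.

    Each stay is a dict with a boolean "event" key (hypothetical layout).
    Sex, unit identifier, and length of stay could be checked the same way.
    """
    overall = sum(s["event"] for s in stays) / len(stays)
    n_val = len(stays) // 10
    for seed in range(max_tries):
        shuffled = list(stays)
        random.Random(seed).shuffle(shuffled)
        # (derivation, validation cohort 1, validation cohort 2)
        parts = (shuffled[2 * n_val:], shuffled[:n_val], shuffled[n_val:2 * n_val])
        rates = [sum(s["event"] for s in p) / len(p) for p in parts]
        if all(abs(r - overall) <= tolerance for r in rates):
            return parts
    raise RuntimeError("no balanced split found")
```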

Event predictions were made every 15 min for each type of alert and were available at the time that the model detected that the probability threshold had been crossed. An RF model event was defined as a physician-documented episode of invasive mechanical ventilation initiation that occurred ≥ 6 h after ICU admission, or any such event ≥ 12 h after termination of a prior episode. An HD event was defined as nurse-documented vasopressor initiation that occurred ≥ 6 h after ICU admission or reinitiated after a 6-h vasopressor-free interval.
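The event definitions can be sketched as a filter over documented episodes. This is one conservative reading, labeled as an assumption: an episode counts only if it starts at least 6 h after ICU admission and, when a prior episode exists, only after the required gap since that episode ended (12 h for RF, 6 h for HD). The function and parameter names are hypothetical.

```python
from typing import Sequence


def qualifying_events(
    starts_h: Sequence[float],
    ends_h: Sequence[float],
    min_after_admission_h: float = 6.0,
    min_gap_h: float = 12.0,
) -> list:
    """Filter episode start times (hours since ICU admission) to model events.

    starts_h and ends_h are parallel, time-ordered lists of episode start
    and end times. Defaults follow the RF definition; pass min_gap_h=6.0
    for the HD definition.
    """
    events = []
    previous_end = None
    for start, end in zip(starts_h, ends_h):
        late_enough = start >= min_after_admission_h
        gap_ok = previous_end is None or start - previous_end >= min_gap_h
        if late_enough and gap_ok:
            events.append(start)
        previous_end = end
    return events
```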

The models were developed using a gradient boosting algorithm, CatBoost (version 0.22; https://catboost.ai/), an ML technique commonly used for regression and classification problems, which produces a prediction model in the form of an ensemble of weak prediction decision trees. The base model with default hyperparameters was iteratively retrained according to the hyperparameter optimization process primarily for the parameters: learning rate, tree depth, and maximum number of trees. The hyperparameter optimization process also addressed data imbalance (low number of positive compared with negative predictions) by optimizing the scale_pos_weight parameter and oversampling positive events (HD or RF events). The complex final models consist of approximately 2,000 boosted trees with maximal depth of eight (number of edges/arc from the root node to the leaf node of the tree). The model features and their average contributions to a correct prediction are presented in Figure 2, and the key reporting elements are presented in e-Appendix 1.
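The reported model size can be written as a CatBoost parameter set. In this sketch only the tree count and depth come from the text; the learning rate, the loss function, and the class counts used for `scale_pos_weight` are placeholders, because the tuned values are not reported. The negatives-to-positives ratio is a common starting heuristic for that parameter, not a description of the authors' optimization.

```python
def scale_pos_weight(n_negative: int, n_positive: int) -> float:
    """Common heuristic for class imbalance: ratio of negatives to positives."""
    return n_negative / n_positive


catboost_params = {
    "iterations": 2000,          # from the text: approximately 2,000 boosted trees
    "depth": 8,                  # from the text: maximal tree depth of eight
    "learning_rate": 0.05,       # placeholder; tuned value not reported
    "loss_function": "Logloss",  # assumption: binary classification objective
    # Illustrative class counts only; not taken from the study data.
    "scale_pos_weight": scale_pos_weight(n_negative=9_000, n_positive=1_000),
}

# With the catboost package installed, these could be passed as
# catboost.CatBoostClassifier(**catboost_params).
```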

Figure 2.

SHAP plot portraying > 15 million model label comparisons made with and without inclusion of individual data points for the 10 most impactful features for the intubation and vasopressor initiation models. Features are listed in rank order of absolute differences in their mean SHAP values. ABG oxygenation = derived from the ratio of arterial and inspired oxygen levels; cns = central nervous system; GCS = Glasgow Coma Scale; Lowest BP = area under the noninvasive systolic BP by time curve; MEWS = modified Early Warning Score; NEWS = National Early Warning Score for sepsis; ns = nervous system; res = respiration; sat = saturation; SBP = systolic BP; SHAP = Shapley Additive exPlanation Plot; SOFA = Sequential Organ Failure Assessment score (subscale).

Outcomes and Measures

The main outcome is the prediction of RF and HD events identified using a published classification algorithm.7 Automated identification of RF or HD events was accomplished using a rule-based, internally validated tagging system for the derivation set and was verified by concordant independent medical record review by expert physicians (C. M. L. and E. W. C.) for the control group. The event time stamp was defined as the time recorded for intubation or vasopressor initiation. The number of model-generated alerts and the subset of TS heart rate, BP, respiratory rate, and oxygen saturation or trend alerts (eCareManager; Philips Healthcare) that were presented to monitoring personnel were captured electronically, and the number of monitor alarms (Philips, Spacelabs, Mindray, or Nihon Kohden) presented to bedside providers was counted during near real-time review of monitor summary display screens and entered into the study database. The alarm time was the time of alarm suppression by a physician, or the most proximal to the event time when this did not occur.

Event and Feature Windows

RF and HD event prediction models were evaluated separately and independently for each cohort. Alert or alarm performance characteristics were calculated from event occurrence and event nonoccurrence time windows for RF and HD events. The event prediction window was defined as the 8 h that preceded an RF or HD event time stamp. Event-type-specific nonevent windows commenced at the first time that was 6 h after ICU admission that was not during an event occurrence window or 8 h after a nonevent window when no corresponding-type event had occurred. Nonevent windows terminated at discharge, the start of a corresponding-type event occurrence window, or after 8 h. One or more corresponding-type (RF or HD) prediction(s) that occurred during an event window was recorded as a single true-positive prediction. Predictions that occurred during a nonevent window were classified as a false-positive prediction, event windows without predictions were classified as a single false-negative prediction, and each nonevent window without a prediction was classified as a single true-negative prediction. Event and nonevent time windows of < 4-h duration were excluded. Each feature had a distinct window duration that was inversely proportional to the frequency of the measurement of its components.
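The window-level scoring rules can be sketched as follows. The tuple layout is hypothetical, and the sketch assumes that a nonevent window with any number of predictions contributes a single false positive, which is consistent with the alert window counts reported in Table 2.

```python
def classify_window(is_event_window: bool, n_predictions: int) -> str:
    """Label one window as TP, FP, FN, or TN."""
    if is_event_window:
        # One or more predictions in an event window count as a single TP.
        return "TP" if n_predictions > 0 else "FN"
    return "FP" if n_predictions > 0 else "TN"


def tally(windows):
    """Count labels over (is_event_window, n_predictions, duration_h) tuples."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for is_event, n_pred, duration_h in windows:
        if duration_h < 4:
            continue  # windows shorter than 4 h are excluded
        counts[classify_window(is_event, n_pred)] += 1
    return counts
```

Scoring per window rather than per alert means that a burst of repeated alerts before a single event is not rewarded more than one well-timed alert.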

Statistical Analyses

Nonparametric data, including lead time (defined as the time between the event notification and event occurrence time stamps), were compared by analysis of variance based on ranks. Alert and alarm frequency data for the alternative types of alerts were compared by the Mann-Whitney rank sum test. Rates and proportion data were analyzed by the χ2 or Pearson product moment correlation tests for individual cohorts, and P < .05 was considered significant. These prespecified analyses were conducted using SAS version 9.4 (SAS Institute).

Results

The study cohorts were similar to one another regarding demographic characteristics and similar to those reported for US adult ICUs that use telemedicine monitoring.8 The cohorts used to develop and validate the ML notification models varied regarding acuity, in-hospital mortality, and rates of HD and RF events (Table 1).

The clustering of ML notifications around clinically actioned HD and RF events that was evident by visual inspection was more difficult to discern for TS alerts and was not appreciated for BM alarms. ML notifications were directly and tightly correlated with events across the cohorts with a regression coefficient of 0.98 (P < .001). TS alerts were significantly more frequent during event than no event time windows (median, 12; interquartile range [IQR], 5-46 vs median, 4; IQR, 0-12, respectively; P < .01). BM alarm rates during event windows were not significantly different from those of no event windows (median, 53; IQR, 4-90 vs median, 46; IQR, 22-77 alarms/window; P = .46).

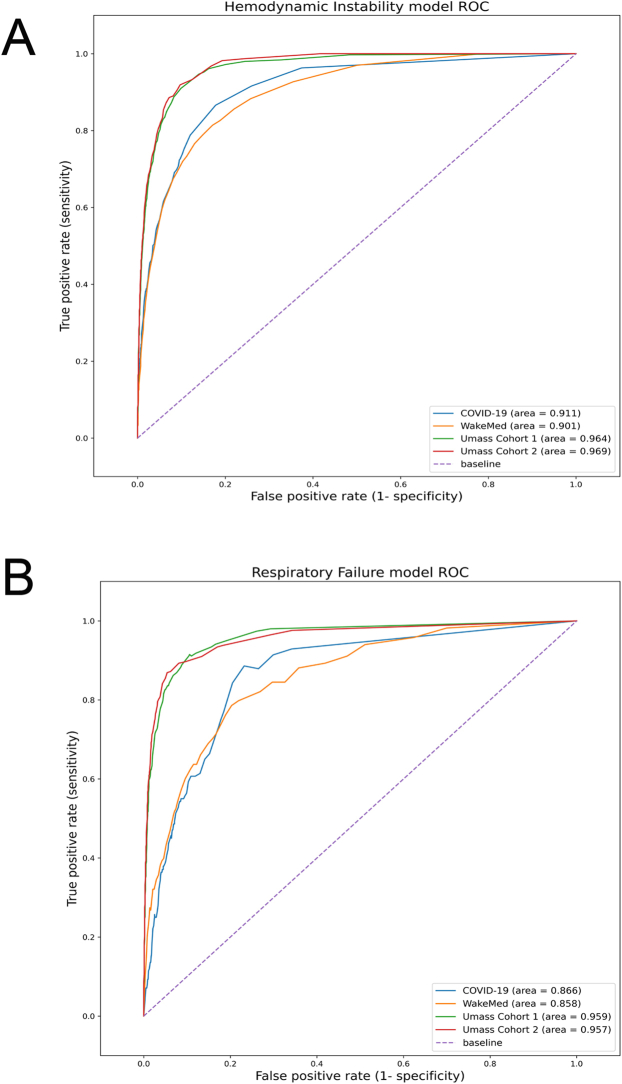

The area under the receiver operating characteristic curves for the ML HD models were 0.969 for the UMass cohort 1, 0.964 for the UMass cohort 2, 0.901 for the WakeMed cohort, and 0.911 for patients with COVID-19. The RF area under the receiver operating characteristic curves were 0.957 for the UMass cohort 1, 0.959 for the UMass cohort 2, 0.858 for the WakeMed cohort, and 0.966 for the COVID-19 cohort (e-Fig 1). The higher specificity of ML notifications translated to better summary performance characteristics for ML notifications than for TS alerts. The harmonic means of precision and recall (F scores) of ML were more than fivefold higher than those of TS alerts. The accuracy of ML notifications was 0.87 to 0.94, which is in the range of many clinical tests, whereas the 0.53 value for TS alerts is not. The misclassification rates for ML notifications were more than threefold lower than those of TS alerts (Table 2).
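The summary statistics can be recomputed from the window counts. The sketch below uses the hemodynamic model counts reported in Table 2 for UMass cohort 1 (ML notifications and TS alerts); the function name `summarize` is hypothetical, and the formulas are the standard confusion-matrix definitions.

```python
def summarize(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard confusion-matrix summaries for one alert type."""
    total = tp + fp + tn + fn
    sensitivity = tp / (tp + fn)   # TPR, recall
    specificity = tn / (tn + fp)   # TNR
    precision = tp / (tp + fp)     # PPV
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        # F score: harmonic mean of precision and recall.
        "f_score": 2 * precision * sensitivity / (precision + sensitivity),
        "accuracy": (tp + tn) / total,
        "misclassification": (fp + fn) / total,
    }


# Hemodynamic model, UMass cohort 1 (counts from Table 2).
ml_hd = summarize(tp=478, fp=3538, tn=53206, fn=186)
ts_hd = summarize(tp=443, fp=26770, tn=29974, fn=221)

f_score_ratio = ml_hd["f_score"] / ts_hd["f_score"]
misclassification_ratio = ts_hd["misclassification"] / ml_hd["misclassification"]
```

With these counts the ML F score rounds to 0.20 and the TS F score to 0.032, so the ratio exceeds fivefold, and the TS misclassification rate is more than threefold the ML rate.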

Table 2.

Validation Studies Model Performance Characteristics

| Characteristic | UMass Cohort 1 | UMass Cohort 2 | WakeMed Cohort | COVID-19 Cohort | Telemedicine System Alerts: UMass Cohort 1 | Telemedicine System Alerts: UMass Cohort 2 |
|---|---|---|---|---|---|---|
| CLEW hemodynamic instability model | | | | | | |
| Patients<sup>a</sup> | 6,098 | 6,116 | 3,191 | 513 | 6,098 | 6,116 |
| F score<sup>b</sup> | 0.20 | 0.21 | 0.33 | 0.36 | 0.032 | 0.032 |
| TPR (sensitivity) | 0.72 | 0.72 | 0.61 | 0.79 | 0.67 | 0.69 |
| TNR (specificity) | 0.94 | 0.94 | 0.94 | 0.88 | 0.53 | 0.53 |
| PPV (precision) | 0.12 | 0.12 | 0.22 | 0.23 | 0.016 | 0.016 |
| NPV | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 |
| Accuracy | 0.94 | 0.94 | 0.92 | 0.87 | 0.53 | 0.53 |
| Misclassification rate | 0.065 | 0.062 | 0.068 | 0.13 | 0.47 | 0.47 |
| TP | 478 | 462 | 492 | 426 | 443 | 441 |
| FP | 3,538 | 3,337 | 1,714 | 1,421 | 26,770 | 26,776 |
| TN | 53,206 | 53,082 | 27,545 | 10,005 | 29,974 | 29,643 |
| FN | 186 | 180 | 320 | 111 | 221 | 201 |
| No. of events | 664 | 642 | 812 | 537 | 664 | 642 |
| No. of alert windows | 4,016 | 3,799 | 2,206 | 1,847 | 27,213 | 27,212 |
| Mean total alerts per patient per day<sup>c</sup> | 0.21 | 0.20 | 0.22 | 0.46 | 12 | 11 |
| Median lead time (IQR), min | 240 (170-342) | 220 (160-340) | 180 (72-290) | 180 (72-290) | 200 (78-340) | 180 (72-330) |
| CLEW respiratory failure model | | | | | | |
| Patients<sup>a</sup> | 6,098 | 6,116 | 3,191 | 513 | 6,098 | 6,116 |
| F score<sup>b</sup> | 0.143 | 0.132 | 0.111 | 0.213 | 0.020 | 0.019 |
| TPR (sensitivity) | 0.75 | 0.70 | 0.58 | 0.59 | 0.49 | 0.47 |
| TNR (specificity) | 0.94 | 0.94 | 0.91 | 0.90 | 0.70 | 0.69 |
| PPV (precision) | 0.08 | 0.07 | 0.06 | 0.13 | 0.01 | 0.01 |
| NPV | 0.99 | 0.99 | 0.92 | 0.81 | 0.99 | 0.99 |
| Accuracy<sup>d</sup> | 0.94 | 0.94 | 0.91 | 0.89 | 0.70 | 0.69 |
| Misclassification rate | 0.057 | 0.061 | 0.088 | 0.11 | 0.30 | 0.31 |
| TP | 199 | 200 | 124 | 83 | 130 | 132 |
| FP | 2,325 | 2,535 | 1,900 | 558 | 12,401 | 13,132 |
| TN | 39,172 | 40,011 | 20,485 | 4,786 | 29,069 | 29,414 |
| FN | 65 | 84 | 90 | 57 | 134 | 152 |
| No. of events | 264 | 284 | 214 | 140 | 264 | 284 |
| No. of alert windows | 2,524 | 2,735 | 2,024 | 641 | 12,531 | 13,264 |
| Mean total alerts per patient per day<sup>c</sup> | 0.18 | 0.19 | 0.27 | 0.35 | 11 | 11 |
| Median lead time (IQR), min | 240 (160-350) | 210 (150-320) | 260 (180-370) | 250 (170-360) | 170 (54-340) | 190 (78-350) |

FN = false negative; FP = false positive; IQR = interquartile range; NPV = negative predictive value; PPV = positive predictive value; TN = true negative; TNR = true negative rate; TP = true positive; TPR = true positive rate.

<sup>a</sup>Number of ICU visits that contributed data to one or both models.

<sup>b</sup>Harmonic mean of precision and recall with a range of 0 to 1. TPR (true positives/[true positives + false negatives]) can also be termed model recall, and PPV (true positives/[true positives + false positives]) can also be termed model precision.

<sup>c</sup>Each 8-h alert window has one or more alerts.

<sup>d</sup>Accuracy is defined as (true positives + true negatives)/(all positives + all negatives).

ML notifications were significantly (more than 20-fold) less frequent than TS alerts (P < .01) (Table 2). The substantially longer time between notification and an HD or RF event by ML notifications or TS alerts than by BM alarms meaningfully increases the time for the implementation of countermeasures (Table 2, Table 3).

Table 3.

Control Study Tuned Alert and Alarm Performance Characteristics

| Characteristic | Hemodynamic Events: Biomedical Alarms | Hemodynamic Events: Telemedicine Alerts | Intubation Events: Biomedical Alarms | Intubation Events: Telemedicine Alerts |
|---|---|---|---|---|
| Patients | 362 | 362 | 362 | 362 |
| F score<sup>a</sup> | 0.023 | 0.040 | 0.016 | 0.024 |
| TPR (sensitivity) | 0.63 | 0.62 | 0.60 | 0.63 |
| TNR (specificity) | 0.017 | 0.46 | 0.011 | 0.28 |
| PPV (precision) | 0.012 | 0.020 | 0.008 | 0.012 |
| NPV | 0.71 | 0.98 | 0.66 | 0.98 |
| Accuracy | 0.028 | 0.46 | 0.019 | 0.28 |
| Misclassification rate | 0.37 | 0.38 | 0.40 | 0.37 |
| TP | 51 | 50 | 37 | 39 |
| FP | 4,346 | 2,391 | 4,389 | 3,217 |
| TN | 73 | 2,028 | 49 | 1,221 |
| FN | 30 | 31 | 25 | 23 |
| No. of events | 81 | 81 | 62 | 62 |
| No. of alert windows | 4,397 | 2,441 | 4,426 | 3,256 |
| Mean total alerts per patient per day<sup>b</sup> | 159 | 10 | 127 | 10 |
| Median lead time (IQR), min | 4.8 (0-7.2) | 170 (60-350) | 4.2 (0-7.8) | 240 (120-350) |

FN = false negative; FP = false positive; IQR = interquartile range; NPV = negative predictive value; PPV = positive predictive value; TN = true negative; TNR = true negative rate; TP = true positive; TPR = true positive rate.

<sup>a</sup>Harmonic mean of precision and recall with a range of 0 to 1. TPR can also be termed model recall, and PPV can also be termed model precision.

<sup>b</sup>Each 8-h alert window has one or more alerts or alarms.

Untuned (not adjusted) ML models produced stable performance characteristics across internal and independent external validation cohorts including a cohort of patients with COVID-19 that did not exist at the time of model derivation. Validation study of the area under the receiver operating characteristic curves is presented graphically as e-Figure 1.

The performance characteristics of tuned TS alerts and BM alarms are presented in Table 3. The accuracy of TS alerts was more than 10-fold higher than that of BM alarms, and the TS F scores were substantially higher than those of the BM alarms. The frequency of BM alarms was significantly higher (10-fold) than that of TS alerts (P < .001), with equivalent rates of false-negative alerts or alarms. The performance of the tuned TS alerts was qualitatively similar to that of the untuned alerts of the validation study cohorts.

A visual display of the substantial differences of accuracy, presented as a function of alarm burden characteristics for ML, TS notifications, and BM alarms, is displayed in Figure 3. ML notifications were more accurate with lower levels of alarm burden.

Figure 3.

Alarm, alert, or notification burden and accuracy characteristics for machine learning (ML), telemedicine system (TS), and biomedical monitor (BM) systems. The open circles represent the frequencies and test characteristics of the control study BM alarms for predicting respiratory failure and hemodynamic events; the gray circles present these characteristics of the TS alarms for the control cohort, UMass cohort 1, and UMass cohort 2; and the black circles present these characteristics for ML notifications for UMass cohort 1, UMass cohort 2, and WakeMed cohort.

Discussion

The term ML notifications is derived from the use of the analytical techniques applied to the data clusters of their features. However, they are better described as physician-guided ML alerts because individual feature development was guided by critical care specialist experts who identified groups of data elements as predictive of episodes of hypotension and RF that require emergent intervention. The most predictive features of both models are measures of oxygenation, with measures of BP supplementing the vasopressor initiation model (Fig 2). The models substantially enhance the established early warning system predictions, which they include,9,10 by using features processed from electronic medical records, laboratories, and BM feeds (Fig 1). Even without recalibration, the ML alerts were better predictors of emergent life-threatening RF and HD instability events than BM alarms or TS analyses of BM signal trends. ML notifications are more accurate, more specific, have lower rates of misclassification, are markedly less frequent, and have longer pre-event lead times than TS alerts and BM alarms. The magnitude of the differences for these performance characteristics materially affects the clinical utility and alarm burden aspects of BM technologies as displayed in Figure 3. The ability to predict clinically actioned HD and RF events hours in advance allows time for risk reduction interventions and clinical countermeasures. The transformation of even a small number of emergent responses to physiologic instability events into controlled, measured, protocol-guided best practice interventions would be expected to meaningfully improve ICU care delivery.

The differences of alarm frequency among the systems have important implications for provider-level alarm burden and fatigue11 and system-level costs of patient monitoring. The average daily number of auditory notifications delivered to the bedside caregivers by their BM alarm system was 150 alarms per patient. The unintended consequences of these high false-positive auditory alarms include the generation of anxiety and sleep disruption among patients who perceive them. Many providers have identified that the time required to respond to the 147 false-positive alarms does not contribute to a sense of professional fulfillment. Replacing these 147 burdensome, inefficient, interrupting alarms that annoy patients with one accurate notification that occurs once every 5 days is one way that health care systems can directly reduce burnout syndrome by impacting the personal accomplishment domain of the Maslach Burnout Inventory.3 Introducing an ML notification system reduces alarm fatigue by reducing the number of auditory interruptions, dramatically increases the percentage of notification responses that providers perceive as in time to help patients, and creates a more calm and peaceful ICU environment. This is one of the few available options that improves ICU burnout syndrome by reducing unnecessary workload.

The small differences of alarm frequency observed after BM manufacturer-recommended bedside tuning training suggest that this is not an effective solution for reducing alarm frequency. Despite optimization, the 150 daily auditory alarms we observed correspond to 13 awakening events during each 8 h of attempted sleep. The characteristics of ML notifications presented by this study allow an equipoise argument for a clinical trial of BM alarm silencing or reduction of volume to less than the 35- to 45-dB Environmental Protection Agency noise limit.12 The fear that changing or silencing BM alarms would result in missed events is based on the non-evidence-based notion that BMs do not miss clinically actioned events. The finding of this study, that ML, TS, and BM-based monitoring have similar false-negative characteristics for HD and RF events, provides a rationale for equipoise around the issue of bedside auditory alarm silencing.
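The figure of 13 awakening events per 8 h is not derived in the text. One reconstruction that reproduces it, offered here only as an assumption, combines the observed 150 daily alarms with the upper estimate cited in the introduction that 26% of audible alarms cause arousal from sleep.

```python
alarms_per_day = 150        # observed daily auditory alarms per patient
arousal_fraction = 0.26     # upper estimate of alarms causing arousal
sleep_hours = 8             # period of attempted sleep

# Alarms expected during the sleep period, assuming a uniform rate over 24 h.
alarms_during_sleep = alarms_per_day * sleep_hours / 24

# Expected awakenings: about 13 per 8 h of attempted sleep.
awakenings = alarms_during_sleep * arousal_fraction
```

The uniform alarm rate across the day is a simplifying assumption; alarm frequency may differ between day and night.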

The differences of alarm accuracy and burden presented in Figure 3 are large enough to encourage the development of effective monitoring strategies that have lower costs than alert curation by offsite monitoring teams. Such teams are common in the ICU, tele-ICU, and telemetry-based step-down units. The pre-event lead times that are made possible by ML notification technologies allow for targeted delivery of notifications directly to bedside physicians for intervention. Because the accuracy of ML alerts approaches that of many currently available clinical tests, the development of notification evaluation protocols, akin to those used for fever evaluation, appear to be justified. Alternatively, access to critical care specialist monitoring services for alarm management could be expanded at modest cost because the frequency of notification is such that all the 68,558 US nonfederal ICU beds could be theoretically covered by 76 critical care telemedicine specialists working simultaneously.13,14

The key limitations of this study include its retrospective design and observational nature that prevented conclusions regarding the effects of ML notifications on clinical interventions or outcomes. Although the models substantially reduced false-positive predictions, they did not lower them to a frequency that would eliminate the need for physician review before action. Further research will be required to develop models with higher positive predictive value that virtually eliminate false-positive alerts. The study also did not focus on individual patient alarms or patient-level comparisons because the study was designed to examine the potential impact on providers managing an ICU population. Despite expert verification, some events may not have been identified. In addition, some clinically important events such as augmentation of oxygen therapies and administration of IV fluids were not included. The study did not directly assess the extent to which model performance is dependent on the accuracy of bedside provider documentation; however, analyses of model performance across ICUs, hospitals, and health care systems suggest that their differences of documentation processes did not cause unacceptable deterioration of model performance. The effects of cohort-specific tuning for ML notifications are unknown because they were deployed without recalibration or customization.

Interpretation

ML notifications for HD and RF events are a technological advance with the potential to support more proactive and less burdensome care delivery processes for critically ill adults. When deployed without tuning, their accuracy, precision, misclassification, and frequency characteristics are several-fold more favorable than those of usual care methods of event prediction. The predictive ability of these ML alerts suggests that studies of their ability to affect event rates, and of the type of monitoring system that providers and patients prefer, would be of interest. One unusual feature of this report is the diversity of the populations for which model performance was stable without site-specific tuning. ML notifications for HD and RF events have clinically actionable accuracy and occur with frequencies far below the BM rate of 147 false-positive alarms per patient per day.

Funding/Support

The authors have reported to CHEST that no funding was received for this study.

Financial/Nonfinancial Disclosures

The authors have reported to CHEST the following: O. C., A. L., I. L., and G. C. are employees of CLEW Medical. I. M. P. and J. B. serve as consultants to CLEW Medical. None declared (D. K., G. L., E. C., C. L.).

Acknowledgments

Author contributions: C. M. L., J. M. B., and O. C. are responsible for the accuracy of this report. J. M. B., O. C., I. M. P., D. K., A. L., and C. M. L. conceived and designed the analyses. I. L., G. C., G. L., E. W. C., and C. M. L. collected the data. I. L., G. C., O. C., and G. L. contributed data or analysis tools. G. C., O. C., G. L., and C. M. L. performed the analyses. C. M. L., J. M. B., I. M. P., and O. C. wrote the paper.

Other contributions: We thank Mauricio Leitào, MD, who curated the automated event detection system. We also thank Gurudev Lotun, MCP, for creating the data deidentification module.

Additional information: The e-Appendix and e-Figure are available online under "Supplementary Data."

Footnotes

Drs Kirk and Pessach contributed equally to this manuscript.

Supplementary Data

e-Figure.

References

- 1. Femtosim Clinical Inc. History of physiologic monitors. Femtosim Clinical Inc website. http://www.femtosimclinical.com/History%20of%20Physiologic%20Monitors.htm

- 2. Andrade-Mendez B., Arias-Torres D.O., Gomez-Tovar L.O. Alarm fatigue in the intensive care unit: relevance and response time. Enferm Intensiva (Engl Ed) 2020;31(3):147–153. doi: 10.1016/j.enfi.2019.11.002.

- 3. Kleinpell R., Moss M., Good V.S., Gozal D., Sessler C.N. The critical nature of addressing burnout prevention: results from the Critical Care Societies Collaborative's National Summit and Survey on Prevention and Management of Burnout in the ICU. Crit Care Med. 2020;48(2):249–253. doi: 10.1097/CCM.0000000000003964.

- 4. Freedman N.S., Gazendam J., Levan L., Pack A.I., Schwab R.J. Abnormal sleep/wake cycles and the effect of environmental noise on sleep disruption in the intensive care unit. Am J Respir Crit Care Med. 2001;163(2):451–457. doi: 10.1164/ajrccm.163.2.9912128.

- 5. Freedman N.S., Kotzer N., Schwab R.J. Patient perception of sleep quality and etiology of sleep disruption in the intensive care unit. Am J Respir Crit Care Med. 1999;159(4 pt 1):1155–1162. doi: 10.1164/ajrccm.159.4.9806141.

- 6. Darbyshire J.L., Muller-Trapet M., Cheer J., Fazi F.M., Young J.D. Mapping sources of noise in an intensive care unit. Anaesthesia. 2019;74(8):1018–1025. doi: 10.1111/anae.14690.

- 7. Jeddah D., Chen O., Lipsky A.M., et al. Validation of an automatic tagging system for identifying respiratory and hemodynamic deterioration events in the intensive care unit. Healthc Inform Res. 2021;27(3):241–248. doi: 10.4258/hir.2021.27.3.241.

- 8. Lilly C.M., Swami S., Liu X., Riker R.R., Badawi O. Five-year trends of critical care practice and outcomes. Chest. 2017;152(4):723–735. doi: 10.1016/j.chest.2017.06.050.

- 9. Wong A.I., Kamaleswaran R., Tabaie A., et al. Prediction of acute respiratory failure requiring advanced respiratory support in advance of interventions and treatment: a multivariable prediction model from electronic medical record data. Crit Care Explor. 2021;3(5) doi: 10.1097/CCE.0000000000000402.

- 10. Liu V.X., Lu Y., Carey K.A., et al. Comparison of early warning scoring systems for hospitalized patients with and without infection at risk for in-hospital mortality and transfer to the intensive care unit. JAMA Netw Open. 2020;3(5) doi: 10.1001/jamanetworkopen.2020.5191.

- 11. Winters B.D., Cvach M.M., Bonafide C.P., et al. Technological distractions (part 2): a summary of approaches to manage clinical alarms with intent to reduce alarm fatigue. Crit Care Med. 2018;46(1):130–137. doi: 10.1097/CCM.0000000000002803.

- 12. Kamdar B.B., Needham D.M., Collop N.A. Sleep deprivation in critical illness: its role in physical and psychological recovery. J Intensive Care Med. 2012;27(2):97–111. doi: 10.1177/0885066610394322.

- 13. Halpern N.A., Tan K.S., DeWitt M., Pastores S.M. Intensivists in U.S. acute care hospitals. Crit Care Med. 2019;47(4):517–525. doi: 10.1097/CCM.0000000000003615.

- 14. American Hospital Association. Fast facts on U.S. hospitals, 2020. https://www.aha.org/statistics/fast-facts-us-hospitals
