Abstract

Postelection surveys regularly overestimate voter turnout by 10 points or more. This article provides the first comprehensive documentation of the turnout gap in three major ongoing surveys (the General Social Survey, Current Population Survey, and American National Election Studies), evaluates explanations for it, interprets its significance, and suggests means to continue evaluating and improving survey measurements of turnout. Accuracy was greater in face-to-face than telephone interviews, consistent with the notion that the former mode engages more respondent effort with less social desirability bias. Accuracy was greater when respondents were asked about the most recent election, consistent with the hypothesis that forgetting creates errors. Question wordings designed to minimize source confusion and social desirability bias improved accuracy. Rates of reported turnout were lower with proxy reports than with self-reports, which may suggest greater accuracy of proxy reports. People who do not vote are less likely to participate in surveys than voters are.

Keywords: survey methodology, voter turnout, general social survey, election studies, survey error

Introduction: The Turnout Gap

Researchers have known for decades that postelection survey measurements of voter turnout have routinely been notably inaccurate (e.g., Clausen 1968; Parry and Crossley 1950; Smith 1982). With all sorts of sample designs, data collection modes, and electoral environments, surveys have often produced higher rates of turnout than the rates reported by government elections officials. For example, 62 percent of the voting-eligible population (VEP) voted in the U.S. presidential election in 2008, according to government statistics, yet 77 percent of respondents in the 2008 American National Election Study reported having voted (DeBell and Cowden 2010). We use the term “turnout gap” to describe this discrepancy between the turnout rates computed from administrative records (which we call the “actual” turnout) and the self-reports in surveys.
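The definition of the turnout gap can be illustrated with a minimal sketch. The function name `turnout_gap` is a hypothetical helper introduced here for illustration only; the inputs are the 2008 figures quoted above.

```python
def turnout_gap(survey_turnout_pct: float, actual_turnout_pct: float) -> float:
    """Return the turnout gap in percentage points.

    A positive value means the survey estimate exceeds the turnout rate
    computed from administrative records (the "actual" turnout).
    """
    return survey_turnout_pct - actual_turnout_pct


# 2008 figures quoted in the text: 77 percent of ANES respondents reported
# voting, whereas 62 percent of the VEP voted according to government statistics.
gap_2008 = turnout_gap(77.0, 62.0)
print(f"2008 ANES turnout gap: {gap_2008:.0f} percentage points")
```

The gap is expressed in percentage points rather than as a percent of the actual rate, which matches how the discrepancy is reported throughout this article.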

Researchers have been concerned about the turnout gap for at least two major reasons. First, errors in measurements of turnout may call into question the scientific conclusions of the empirical literature on turnout. Research has sought to describe voters and nonvoters, to understand the policy and electoral consequences of the differences between them, and to understand why people do and do not vote. When turnout measurements are wrong, such that one-fifth of respondents who analysts think voted were actually nonvoters, then conclusions about the distinguishing characteristics of voters as well as the causes and consequences of turnout may be incorrect. For a time, such distortion was thought not to be substantial (Flanigan and Zingale 1983; Katosh and Traugott 1981; Sigelman 1982). However, differences between self-reported turnout and validated turnout (i.e., individuals’ turnout as indicated by official records maintained by elections officials) have been shown to lead to substantively different conclusions about the variables associated with turnout (Ansolabehere and Hersh 2012; Bernstein, Chadha, and Montjoy 2001; Silver, Anderson, and Abramson 1986), constituting more reason to worry about the gap.

A second reason for concern about erroneous turnout measurements is that errors call into question the conclusions of the even larger research literature on candidate choice and election outcomes. If many respondents who say they voted really did not, then parameter estimates in statistical analyses predicting candidate choices may be biased due to the inclusion of fictitious reports of candidate choices by nonvoters.

Despite turnout data’s importance for the study of elections, the turnout gap has vexed at least three generations of researchers. Yet a vast amount of data has accumulated from studies employing varied methods that afford new opportunities to explore the methodological factors associated with greater accuracy in these estimates. We do so in this article.

We begin by describing the size of the turnout gap in three major ongoing national surveys: the American National Election Studies (ANES), the General Social Survey (GSS), and the Current Population Survey (CPS). We specify appropriate data for the assay of the gap and offer a caveat regarding potentially misleading reports about the accuracy of data from the CPS. Then we turn to possible explanations for the turnout gap and evaluate, with univariate and then multivariate analyses, the characteristics of data collections that may be related to accuracy: survey mode, survey timing, question wording, proxy reporting, panel attrition, panel conditioning, and response rates.

Describing the Turnout Gap: Data and Method

Turnout statistics based on government records are provided by the U.S. Elections Project (McDonald 2015) for the voting-age population (VAP) and VEP for all national elections held between 1789 and 2012. The VAP turnout rate is the proportion of voting-age Americans who cast a ballot. The VEP turnout rate is the proportion of Americans eligible to register and vote who cast a ballot, which excludes noncitizens and citizens disenfranchised by certain criminal convictions.

We obtained survey estimates of voter turnout from the ANES, GSS, and CPS from the earliest year available through 2008, which is the last presidential election year for which turnout estimates were available from all three studies at the time we did these analyses. These are among the most widely used data sets in the social sciences generally and in the study of elections in particular. They are also well suited to a comparison of methodological details because of their varied research methods and their records spanning dozens of studies over several decades.

ANES

The ANES time series includes surveys of area probability samples of noninstitutionalized American citizens conducted approximately biennially since 1948. In presidential election years, respondents have been interviewed both before and after the election. In years without a presidential election, respondents have usually been interviewed only postelection. Data collection has almost always been exclusively via face-to-face interviewing.

The ANES has used several different question wordings to assess turnout and has implemented some split-half experiments to compare results obtained by different question wordings. Most common were the following wordings:

In [Election year] [Democratic candidate] ran on the Democratic ticket against [Republican candidate] for the Republicans. Do you remember for sure whether or not you voted in that election?

In talking to people about elections, we often find that a lot of people were not able to vote because they weren’t registered, they were sick, or they just didn’t have time. How about you—did you vote in the elections this November?

GSS

The GSS has collected data from area probability samples of noninstitutionalized American adults annually from 1972 to 1993 and biennially since 1994. Respondents were interviewed mostly face-to-face, though some respondents in recent years were interviewed by telephone.

The GSS measured turnout with the following question:

In [Election year], you remember that [Democratic candidate] ran for President on the Democratic ticket against [Republican candidate] for the Republicans. Do you remember for sure whether or not you voted in that election?

GSS interviews occur before November, so the questions refer to elections in past years. For example, GSS turnout reports for the 2004 election come from GSS interviews in 2006 or later because the 2004 GSS occurred before the 2004 election.

CPS

The CPS is a monthly survey of about 50,000 households conducted by the Bureau of the Census for the Bureau of Labor Statistics. The sample represents the U.S. civilian noninstitutional population. Every two years, a voting and registration supplement has been added to the CPS in November following national elections. In each household, at least one person served as the informant and provided self-descriptions, and in some cases, informants provided reports about other household members (i.e., proxy reports). The CPS has gauged turnout by asking the following question:

In any election, some people are not able to vote because they are sick or busy or have some other reason, and others do not want to vote. Did [you/name] vote in the election held on [date of election]?

More details about the data and methods used for the present project to study 67 ANES, GSS, and CPS data sets are described in Online Appendix 1, including the response rates and sample sizes for all surveys that were examined.

Describing the Turnout Gap

In comparison to the official government-reported turnout rates (VEP and VAP), the ANES, GSS, and CPS significantly overestimated turnout in the VEP in almost every survey year, with the CPS being more accurate on average (10 percentage point error) than the GSS (15 points) and the ANES (17 points; see Online Appendix Table A4).

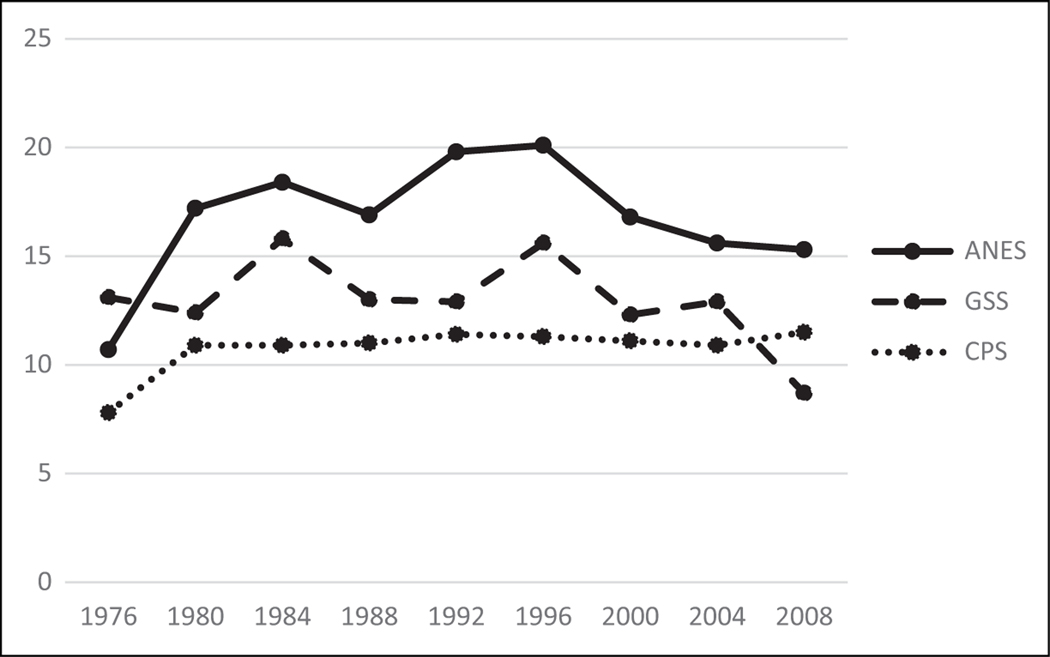

Comparison of these three figures is hampered by the fact that the surveys differed in which elections they asked about. Limiting the comparison to the elections for which all three surveys provided estimates and using only reports about the most recent election (i.e., the same year for the ANES and CPS and the election one or two years prior for the GSS), the gaps for the nine presidential election years 1976 through 2008 averaged 11 points for the CPS, 13 for GSS, and 17 for ANES (see Figure 1).

Figure 1.

Turnout gaps in ANES, GSS, and CPS: 1976–2008. ANES = American National Election Studies; CPS = Current Population Survey; GSS = General Social Survey.

A Correction to Census Estimates

These CPS turnout gaps are much larger than the gaps indicated in reports published by the U.S. Census Bureau on the same data (e.g., File and Crissey 2010). For example, the Census Bureau reported an estimated turnout rate of 63.6 percent of the voting-age citizen population in 2008, meaning a turnout gap of just 1 percentage point. However, this apparent accuracy is an artifact of a particular way of handling missing data, assuming that all respondents about whom no data were collected did not vote (see also Gera et al. 2010; Hur and Achen 2013).

In the CPS, for more than 10 percent of the household members, turnout data are missing because the self-reporter or the proxy reporter failed to answer the turnout question or because the interviewer did not administer the voting and registration supplement questionnaire at all.1 When the Census Bureau computed turnout rates using their survey data, these missing data points were all included in the denominator of the turnout rate but were excluded from the numerator, reflecting the assumption that none of these people voted. In 2008, this amounts to the assumption that 28.4 million Americans (about 14 percent of the adult citizen population in 2008) were nonvoters simply because no turnout data were collected about the survey sample’s representatives of those people.

The conventional default approach to handling missing data is listwise deletion. That means excluding from both the numerator and the denominator the cases with missing data and reporting an estimate for the cases for whom data are complete. This straightforward approach yields a 2008 CPS turnout estimate of 73.8 percent and a turnout gap of 11.5 percentage points (see Online Appendix Table A4; all the other estimates in Table A4 are based on the same method).
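The contrast between the two ways of handling missing data can be sketched in a few lines. This is an unweighted toy illustration, not the Census Bureau's or our estimation code: the function names are hypothetical, and the counts (636 voters, 226 nonvoters, and 138 missing reports per 1,000 household members) are made-up values chosen only so that the two rates land near the 63.6 and 73.8 percent figures discussed above.

```python
from typing import Optional, Sequence


def census_method_rate(reports: Sequence[Optional[bool]]) -> float:
    """Turnout rate (percent) with missing reports kept in the denominator.

    Missing reports (None) are thereby treated as if the person did not vote.
    """
    voters = sum(1 for r in reports if r is True)
    return 100.0 * voters / len(reports)


def listwise_rate(reports: Sequence[Optional[bool]]) -> float:
    """Turnout rate (percent) after dropping cases with missing reports."""
    complete = [r for r in reports if r is not None]
    voters = sum(1 for r in complete if r)
    return 100.0 * voters / len(complete)


# Hypothetical counts (assumption): True = reported voting,
# False = reported not voting, None = no turnout report collected.
reports = [True] * 636 + [False] * 226 + [None] * 138

print(f"Census method:     {census_method_rate(reports):.1f} percent")
print(f"Listwise deletion: {listwise_rate(reports):.1f} percent")
```

Both functions use the same numerator; only the denominator changes, which is why the Census method can produce an estimate close to the official rate while concealing the overreporting among cases with complete data.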

Instead of eliminating household members about whom turnout reports were not obtained, it is possible to implement model-based imputation to guess whether they voted or not. When we did this, it yielded a nearly identical turnout gap of 10.9 percentage points (see the Online Appendix for details).

This sort of correction to CPS estimates is important for two reasons. First, it shows that the turnout gap in the CPS is large rather than negligible. Second, listwise deletion of missing data and model-based imputation change the apparent characteristics of nonvoters. For example, if people with lower incomes are less likely to respond to the survey and are also less likely to vote (which they are), then this correlation between nonresponse and nonvoting biases the survey estimates of the characteristics of nonvoters. The Census Bureau’s method hides but does not correct some of that bias by making nonvoters look more similar to voters than they really are, as shown in Table 1.

Table 1.

Percentage of Voters and Percentage of Nonvoters With Selected Characteristics: 2008.

| Characteristics | Voters | Nonvoters: Census Method | Nonvoters: Listwise Method | Nonvoters: Method Difference |
|---|---|---|---|---|
| Age | | | | |
| 18–29 | 17.7 | 30.4 | 33.1 | 2.7 |
| | (0.19) | (0.31) | (0.40) | (0.50) |
| 30–69 | 69.2 | 59.7 | 57.1 | −2.6 |
| | (0.23) | (0.33) | (0.42) | (0.53) |
| 70+ | 13.1 | 9.9 | 9.8 | −0.1 |
| | (0.17) | (0.20) | (0.25) | (0.32) |
| Male | 46.3 | 51.2 | 52.8 | 1.6 |
| | (0.25) | (0.33) | (0.42) | (0.54) |
| Bachelor’s degree | 22.3 | 10.9 | 7.3 | −3.6 |
| | (0.21) | (0.21) | (0.22) | (0.30) |
| Advanced degree | 11.5 | 3.9 | 2.2 | −1.7 |
| | (0.16) | (0.13) | (0.12) | (0.18) |
| Black | 12.9 | 12.9 | 9.9 | −3.0 |
| | (0.20) | (0.27) | (0.31) | (0.41) |
| Hispanic | 5.2 | 9.9 | 11.1 | 1.2 |
| | (0.17) | (0.31) | (0.42) | (0.52) |
| Parent | 67.8 | 53.9 | 52.4 | −1.5 |
| | (0.23) | (0.33) | (0.42) | (0.54) |
| Disabled | 3.1 | 6.4 | 7.7 | 1.3 |
| | (0.09) | (0.16) | (0.23) | (0.28) |
| Moved in last year | 10.6 | 14.2 | 21.8 | 7.6 |
| | (0.15) | (0.23) | (0.35) | (0.42) |
| Not moved in last five years | 62.6 | 31.0 | 46.5 | 15.5 |
| | (0.24) | (0.31) | (0.42) | (0.52) |
| Employed or retired | 84.4 | 72.6 | 69.9 | −2.7 |
| | (0.18) | (0.30) | (0.39) | (0.49) |
| Veteran | 12.0 | 8.7 | 8.3 | −0.4 |
| | (0.16) | (0.19) | (0.23) | (0.30) |
| Southern | 34.2 | 37.2 | 39.1 | 1.9 |
| | (0.23) | (0.32) | (0.41) | (0.52) |
| Married | 58.9 | 42.2 | 39.5 | −2.7 |
| | (0.24) | (0.33) | (0.41) | (0.53) |
| Family income | | | | |
| Under US$20,000 | 11.7 | 22.2 | 24.2 | 2.0 |
| | (0.16) | (0.28) | (0.36) | (0.46) |
| US$20,000–US$34,999 | 14.9 | 21.3 | 22.5 | 1.2 |
| | (0.18) | (0.27) | (0.35) | (0.45) |
| US$35,000–US$59,999 | 23.2 | 24.6 | 24.8 | 0.2 |
| | (0.21) | (0.29) | (0.37) | (0.47) |
| US$60,000 or more | 50.2 | 32.0 | 28.5 | −3.5 |
| | (0.25) | (0.31) | (0.38) | (0.49) |

Source: Current Population Survey, Voting and registration supplement, November 2008. Note: n = 85,587. Sampling errors are in parentheses and are based on generalized variance parameters. “Listwise method” deletes missing data listwise. “Census method” includes missing data in denominator.

The final columns of Table 1 show the difference between the Census method and the listwise deletion method in terms of the proportion of nonvoters in each of various demographic categories.2 Many of these differences are small, but the differences tell a consistent story: The listwise deletion approach reveals bigger differences between voters and nonvoters. With listwise deletion, nonvoters are younger (33 compared to 30 percent aged 18–29), less educated (7 compared to 11 percent with a bachelor’s degree), more likely to have moved in the past year (22 compared to 14 percent), more likely to have not moved in the past five years (47 compared to 31 percent), and poorer (24 compared to 22 percent earning under US$20,000/year and 29 compared to 32 percent earning US$60,000 or more) than the Census method would indicate.3 Fewer nonvoters appear to be black than the Census method implies, although high turnout among blacks for Barack Obama’s election may make 2008 unusual in this respect.

Explaining the Turnout Gap: Likely Factors

Having described the magnitude of the turnout gap in the major surveys, we turn now to methodological explanations for it. We examined six factors that may cause the turnout gap: mode (face-to-face vs. telephone interviewing), elapsed time between the election and the survey interview, question wording, self-reporting versus proxy reporting, panel attrition and conditioning, and response rates. We begin by outlining our hypotheses and then report tests of all of them.

Mode

Answers to questions about political behavior are sometimes affected by social desirability bias: The tendency of some respondents to give answers that they think will be viewed favorably by others. Scholarship exploring the effect of interview mode on this tendency has revealed that face-to-face interviewing is less susceptible to social desirability bias than telephone interviewing (Holbrook, Green, and Krosnick 2003). With regard to turnout measurement, social desirability bias might be manifested through respondents claiming to have voted or claiming not to know whether they voted when they know they did not. Survey mode might also affect turnout reports by influencing survey satisficing: The tendency of some survey respondents to give the first answer that comes to mind that seems acceptable rather than thinking carefully to come up with the most accurate answer to a question. Satisficing is more common in surveys by telephone than face-to-face (Holbrook et al. 2003). Thus, satisficing may lead respondents to misreport that they voted when they did not. In order to explore the association of interview mode with the overstatement of turnout, we compared rates of reported turnout in the ANES, GSS, and CPS when collected via telephone interviewing versus face-to-face interviewing.

Elapsed Time

Another factor that might cause the turnout gap is recollection inaccuracy. The GSS has asked respondents exclusively about their voting behavior in elections held at least a year prior to the interview, whereas the ANES and CPS asked about elections held just days or weeks earlier, as well as elections in prior years. Interviewing sooner after Election Day may enhance respondents’ ability to accurately recall what they did on Election Day. Consistent with prior literature (e.g., Stocke 2007), we hypothesized that the turnout gap may increase when more time has passed between Election Day and the survey interview.

Question Wording

Differences between the ANES, GSS, and CPS in terms of the wordings of questions measuring turnout are confounded with other design differences between the surveys, so comparisons of these surveys with one another cannot reveal effects of question wording. And the GSS and CPS question wordings have not changed notably over the years, so comparisons within those data streams cannot be implemented to investigate question wording effects. Fortunately, however, the ANES has used three different wordings of turnout questions and conducted experiments involving random assignment of respondents to question wordings in 2002, 2004, and 2008. To provide a context for these experiments, we first review the question wordings that have been employed in all the surveys.

In 1952, 1956, 1962, 1966, 1968, 1970, 1972, 1976, 1980, 1984, 1988, 1992, 1994, 1998, and 2004, ANES respondents were asked:

In talking to people about elections [some years: “the election” in place of “elections”], we [1972 and later “often”] find that a lot of people were not [some years: “weren’t” in place of “were not”] able to vote because they weren’t registered, [some years “or”] they were sick, or they just didn’t have time.

(1952–1960: How about you, did you vote this time?)

(1964–1970: How about you, did you vote this time, or did something keep you from voting?)

(1972–1976: How about you, did you vote in the elections this fall?)

(1978 and later: How about you, did you vote in the elections this November?)

The preamble of this question was presumably intended to reduce social desirability bias by legitimating nonvoting. However, the invitation of a “yes or no” response (in all years except 1964–1970) makes the question subject to acquiescence bias (Cronbach 1950; Krosnick and Presser 2010), although one study challenges this assertion (Abelson, Loftus, and Greenwald 1992).

In 2002, 2004, and 2008, half of the ANES sample was randomly assigned to be asked the following question, and all respondents in the 2000 ANES were asked it:

In talking to people about elections, we often find that a lot of people were not able to vote because they weren’t registered, they were sick, or they just didn’t have time. Which of the following statements best describes you?

One, I did not vote (in the election this November)

Two, I thought about voting this time - but didn’t

Three, I usually vote, but didn’t this time; or

Four, I am sure I voted?

By avoiding yes/no response options, this question wording avoids inviting acquiescence, and the wording is also intended to reduce social desirability pressures by allowing nonvoters to express their identity as habitual voters (option three) or potential voters (option two).

In the 2008 ANES, a new series of turnout-related questions, with a new introduction, was asked to reduce social desirability bias and recall errors. Half of the sample was asked:

In asking people about elections, we often find that a lot of people were not able to vote because they weren’t registered, they were sick, they didn’t have time, or something else happened to prevent them from voting. And sometimes, people who usually vote or who planned to vote forget that something unusual happened on Election Day one year that prevented them from voting that time. So please think carefully for a minute about the recent elections, and other past elections in which you may have voted, and answer the following questions about your voting behavior.

During the past 6 years, did you USUALLY VOTE in national, state, and local elections, or did you USUALLY NOT VOTE?

During the months leading up to the elections that were held on November 4, did you ever plan to vote, or didn’t you plan to do that?

Which one of the following best describes what you did in the elections that were held November 4?

Definitely did not vote in the elections

Definitely voted in person at a polling place on election day

Definitely voted in person at a polling place before election day

Definitely voted by mailing a ballot to elections officials before the election

Definitely voted in some other way

Not completely sure whether you voted or not

This question sequence was designed to allow respondents to identify themselves as habitual voters, to express their intention to vote, to indicate that they voted only if they were sure, and to express uncertainty if they were not sure. Although some experiments have shown that similar questions failed to improve accuracy relative to simpler, shorter questions (Belli, Traugott, and Rosenstone 1994), other experiments have had greater success (Belli et al. 1999; Belli, Moore, and Van Hoewyk 2006; Hanmer, Banks, and White 2014; Holbrook and Krosnick 2013), and some scholars have noted the differing results from the two ANES versions asked in 2002 (Duff et al. 2007).

Self-reporting Versus Proxy Reporting

Although all turnout measurement in the ANES and GSS has been done via self-reporting (whereby the respondent reports information about himself or herself), CPS measurements are a mix of self-reports and proxy reports (whereby an informant gives information about someone else in his or her household). Proxy reports are thought to be reasonably accurate when measuring earnings in the CPS (Bollinger and Hirsch 2007) and in some medical measurement exercises as well (e.g., Cobb et al. 1956; Thompson and Tauber 1957). But study design limitations in the accumulated literature evaluating proxy reports mean that we do not generally know whether proxy reports are as accurate as self-reports (Cobb, Krosnick, and Pearson 2011). One might be inclined to presume that if anything, proxy reports will be less accurate than self-reports. But if people are reluctant to admit not voting when providing self-reports, it is possible that proxies might more readily report that a fellow household member did not vote, thereby revealing the truth. We explored this possibility with CPS data, which have previously been found to reveal a reduction in the turnout overestimation in the CPS in 1992, 1996, and 2000 (Highton 2005).

Panel Attrition and Conditioning

In the ANES preelection interviews during presidential election years, respondents were typically interviewed for an hour or more on the subject of politics and were asked to predict whether they would vote in the upcoming presidential election. Both could increase their propensity to vote because being interviewed about a topic can increase interest in that topic (Bridge et al. 1977) and because behavior predictions can become self-fulfilling prophecies (Greenwald et al. 1988; Spangenberg and Greenwald 1999), enhancing the turnout gap in postelection interviews. A number of studies yielded evidence consistent with the claim that interviews about politics or predictions of turnout can increase actual turnout (Anderson, Silver, and Abramson 1988; Clausen 1968; Granberg and Holmberg 1992; Greenwald et al. 1987; Kraut and McConahay 1973; Spangenberg and Greenwald 1999; Yalch 1976), though other studies did not find such effects (Mann 2005; Smith, Gerber, and Orlich 2003). Furthermore, about 10 percent of ANES preelection respondents were not interviewed again after the election, and if these individuals were disproportionately low in interest in politics and the propensity to vote, this, too, could enhance the turnout gap.

We examine panel attrition effects on reported turnout by taking advantage of a turnout question in preelection questionnaires. In most presidential election years between 1952 and 2008, preelection respondents were asked whether they voted in the last presidential election, four years before.4 If habitual nonvoters disproportionately fail to complete postelection interviews, then we should find an association between the amount of panel attrition that occurred in a year and reported retrospective turnout in the preelection interview. Specifically, respondents who reported having not voted in the previous election should be less likely to complete the postelection interview than respondents who reported having voted in the previous election. We test for such an association by comparing the retrospective turnout rate reported by preelection respondents to the retrospective turnout rate reported by the subset of preelection respondents who also completed the postelection interview. Although other confounds might affect this test (such as recall error possibly being correlated with attrition), such a test of panel attrition effects is unaffected by panel conditioning because the analysis uses only data from preelection survey respondents.
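The logic of this comparison can be sketched as follows. The sketch is unweighted and uses a hypothetical toy panel of 100 preelection respondents with 10 percent attrition; the class `PreRespondent` and the function names are illustrative assumptions, not ANES variable names or our analysis code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PreRespondent:
    voted_prior_election: bool  # retrospective report given in the preelection interview
    completed_post: bool        # whether the postelection interview was completed


def retrospective_rate(respondents: List[PreRespondent]) -> float:
    """Percent of respondents who reported voting in the prior election."""
    return 100.0 * sum(r.voted_prior_election for r in respondents) / len(respondents)


def attrition_shift(respondents: List[PreRespondent]) -> float:
    """Retrospective rate among postelection completers minus the rate among
    all preelection respondents, in percentage points."""
    completers = [r for r in respondents if r.completed_post]
    return retrospective_rate(completers) - retrospective_rate(respondents)


# Hypothetical toy panel (assumption): prior nonvoters drop out more often.
panel = (
    [PreRespondent(True, True)] * 68
    + [PreRespondent(True, False)] * 4
    + [PreRespondent(False, True)] * 22
    + [PreRespondent(False, False)] * 6
)

print(f"All preelection respondents: {retrospective_rate(panel):.1f} percent")
print(f"Shift among completers: {attrition_shift(panel):+.1f} points")
```

A positive shift would be consistent with prior nonvoters dropping out of the panel disproportionately. Because both rates come from the same preelection question, the comparison is not affected by anything that happens after the preelection interview.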

Response Rates

Response rates per se do not indicate survey accuracy (Groves 2006). However, response rates below 100 percent indicate the potential for nonresponse bias to reduce accuracy, and this potential increases as response rates fall.

Scholarly literature has explored the effect of declining response rates on the accuracy of national surveys’ turnout estimates, but the findings have been contradictory. Using ANES data, Burden (2000:389) concluded that “worsening presidential turnout estimates are the result mostly of declining response rates rather than instrumentation, question wording changes or other factors,” but Martinez (2003) showed that this finding is contingent upon using the less-appropriate VAP turnout rate and that when the VEP is examined, the relationship between response rate and turnout gap disappears. McDonald (2003:180) analyzed VEP turnout rates and showed that “the post-1976 rise in ANES response rates (until 2000) is rewarded in a lower turnout gap.” Meta-analysis involving other studies and variables has found that the relationship between unit nonresponse rates and nonresponse bias is usually weak at best (Groves and Peytcheva 2008).

Olson and Witt (2011) found that the likelihood of older respondents and of white respondents completing the ANES postelection interview was greater recently than a few decades ago. These groups usually have higher turnout rates than younger respondents and nonwhite respondents. So losing fewer of those people recently will cause turnout rates among respondents to be higher than they used to be. Peress (2010) reported evidence suggesting that nonresponse bias is a major contributor to the ANES turnout gap, but his method assumes that validated turnout reports are accurate, which may not be true (Berent, Krosnick, and Lupia 2011, 2016).

Reported CPS response rates have been exceptionally high, exceeding 90 percent, which makes it seem on first inspection that there is very little room for nonresponse error to contribute to CPS overestimates of turnout. But this is not the case for at least two reasons. First, the response rate for the voting and registration supplement is lower than 90 percent because interviewers are permitted to choose not to administer it.5 Second, the population coverage of the CPS sample is below 90 percent, as we explain next.

The Census Bureau reports a household-level response rate of 91.2 percent for the November 2008 basic monthly survey and a person-level noncumulative response rate of 89.7 percent for the voting and registration supplement (i.e., 89.7 percent of household members about whom supplement responses could have been collected in interviewed households; U.S. Census Bureau 2008). Because we cannot know the number of people about whom data should have been collected in households that were not interviewed, it is impossible to compute a person-level response rate for the main CPS survey or the supplement. However, if we assume that the nonresponding households were, on average, the same size as the responding households, this would imply a person-level overall response rate for the supplement of 81.8 percent (91.2 percent × 89.7 percent).

Whereas the response rate is the proportion of eligible sample members who complete the interview, the coverage rate is the proportion of population members who could have been included in the sample. Noncoverage occurs, for example, when housing units are not present on a list used for list-based sampling and when household members are not listed during household screening. The CPS coverage proportion in 2008 was about .88, and coverage rates were similar in other recent years (U.S. Census Bureau 2006a, 2008). Thus, about 12 percent of the population had no chance to be included in the CPS sample.6

Nonresponse and undercoverage combined suggest that the proportion of the population that is properly represented by the CPS voting and registration supplement file may be about 72 percent (.912 basic monthly survey response rate × .897 supplement response × .88 coverage = .72), which leaves considerably more room for sample composition bias to affect the turnout estimates than is implied by the basic response rate.
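The arithmetic behind these figures can be reproduced with a short sketch. The variable names are illustrative; the three input rates are the 2008 values cited above, and the multiplication rests on the stated assumption that nonresponding households were, on average, the same size as responding households.

```python
# 2008 rates cited in the text, expressed as proportions.
household_response = 0.912   # basic monthly survey, household level
supplement_response = 0.897  # voting and registration supplement, person level
coverage = 0.88              # share of the population with a chance of selection

# Person-level overall response rate for the supplement, assuming equal
# average household size among responding and nonresponding households.
person_level_response = household_response * supplement_response

# Approximate share of the population properly represented in the supplement file.
represented_share = person_level_response * coverage

print(f"Person-level overall response rate: {person_level_response:.3f}")
print(f"Share of population represented:    {represented_share:.2f}")
```

Multiplying the rates treats household nonresponse, supplement nonresponse, and noncoverage as successive losses, so the product is a rough summary rather than a formal response rate.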

We examined the relationship between the response rates and the amount of turnout overestimation. We did so, first, by looking at response rates across the set of ANES, CPS, and GSS studies presented here.7 Second, we exploited the statistical power and state-level representation afforded by the CPS data to examine the relationship between the turnout gap and the CPS response rates by state and by year. We use CPS data from each even-numbered year from 1980 through 2010 and treat each state and DC as a separate case, meaning 51 areas in 16 surveys or 816 cases for analysis (Bauman 2012).8

Explaining the Turnout Gap: Findings

Having outlined our hypotheses, we next describe findings regarding data collection mode, elapsed time between the election and the interview, question wording, self-reporting versus proxy reporting, panel attrition, panel conditioning, and response rates. Then we discuss limitations of that evidence due to confounds in the nonexperimental variation of methods across respondents, and we present the results of multivariate analyses that control for as many of those confounds as possible. These analyses will clarify which factors are more likely to contribute to the turnout gap.

Mode

In all three surveys, telephone interviews yielded a larger turnout gap than did face-to-face interviews. In the ANES, face-to-face interviewing led to an average turnout gap of 16 percentage points versus a 19-point average gap for telephone interviews (see Table 2). In the GSS, face-to-face interviewing yielded an average overestimation of 13 points versus 17 points by telephone. CPS face-to-face interviewing yielded an average turnout gap of 11 points versus 18 points by telephone interviewing. CPS results thus suggest that telephone interviewing had a larger distorting effect than did face-to-face interviewing by 7 percentage points.

Table 2.

Average Turnout Gap by Mode.

| Survey | Turnout Gap (Percent): Telephone | Turnout Gap (Percent): Face-to-Face |
|---|---|---|
| ANES | 19 | 16 |
| GSS | 17 | 13 |
| CPS | 18 | 11 |

Note: ANES data 1952–2008 for face-to-face; ANES data 1984–2002 for telephone; GSS data 2004–2008; CPS data 1974–2008. ANES = American National Election Studies; CPS = Current Population Survey; GSS = General Social Survey.

The 1996 ANES included an experiment with random assignment to mode for the postelection interview. As expected, respondents who completed the interview in person had a VEP turnout overreport of 18.4 points compared to 21.7 points on the telephone, but this difference is not statistically significant. This nonsignificant difference is of about the same magnitude as that observed in the observational comparisons of telephone and face-to-face data in the ANES and GSS: 2.5 to 4.0 percentage points.

Elapsed Time

The ANES yielded smaller turnout gaps when questions asked about elections held in the same year as the interview and larger gaps when respondents were asked about turnout in prior years. The average turnout gap (VEP) for “same year” surveys is 15 points compared to 19 points for elections in previous years (see Online Appendix Table A4). The CPS measured turnout in prior years only in the 1970s and 1980s, and in these years, the previous-year gaps were smaller than the same-year gaps: 7 points compared to 11 points. In recent years, the ANES overestimation of turnout in previous-year elections has increased, widening the turnout gap for elections held before the survey year.

Question Wording

Question wording experiments were included in the ANES in 2002, 2004, and 2008. The new version of the turnout question asked in 2002 and 2004 out-performed the traditional question, cutting the turnout gap from 24 to 16 points in 2002 and from 19 to 12 in 2004 (see Table 3). However, in 2008, the difference between the two forms of the question was nil (15.25 compared to 15.26).

Table 3.

ANES Question Wording Experiments: Turnout Gaps in 2002, 2004, and 2008.

| Question | 2002 | 2004 | 2008 |
|---|---|---|---|
| 2008 new question: “Which one of the following best describes what you did in the elections that were held November 4? 1. Definitely did not vote in the elections; 2. Definitely voted in person at a polling place on election day; 3. Definitely voted in person at a polling place before election day; 4. Definitely voted by mailing a ballot to elections officials before the election; 5. Definitely voted in some other way; 6. Not completely sure whether you voted or not” | — | — | 15 |
| 2002 and 2004 new question: “In talking to people about elections, we often find that a lot of people were not able to vote because they weren’t registered, they were sick, or they just didn’t have time. Which of the following statements best describes you? One, I did not vote (in the election this November); Two, I thought about voting this time - but didn’t; Three, I usually vote, but didn’t this time; or Four, I am sure I voted?” | 16 | 12 | 15 |
| Traditional question: “In talking to people about elections, we often find that a lot of people were not able to vote because they weren’t registered, they were sick, or they just didn’t have time. How about you, did you vote in the elections this November?” | 24 | 19 | — |

Note: ANES = American National Election Studies.

Reporter (Self vs. Proxy)

In the CPS, the largest differences in accuracy are seen when we examine interview mode and self- versus proxy reporting simultaneously. Face-to-face proxy reports yielded the smallest average overestimation (three points), whereas telephone self-reports yielded the largest (16 points; see Table 4). In both interview modes, proxy reports significantly and consistently yielded lower reported turnout rates than did self-reports: four points on average for face-to-face interviews and six points on average for telephone interviews. This finding is consistent with the hypothesis that the social desirability bias may be alleviated when people report the turnout of other household members because respondents may be less motivated to cultivate favorable presentations of others than of themselves.9

Table 4.

CPS Turnout Gap by Mode and Report.

| Year | VEP Turnout | Face-to-face Self-reports: Estimated Turnout | Face-to-face Self-reports: Gap | Face-to-face Proxy Reports: Estimated Turnout | Face-to-face Proxy Reports: Gap | Telephone Self-reports: Estimated Turnout | Telephone Self-reports: Gap | Telephone Proxy Reports: Estimated Turnout | Telephone Proxy Reports: Gap |
|---|---|---|---|---|---|---|---|---|---|
| 2008 | 62 | 70 | 8 | 65 | 3 | 79 | 16 | 72 | 10 |
| 2006 | 41 | 50 | 9 | 45 | 4 | 60 | 18 | 53 | 11 |
| 2004 | 61 | 68 | 7 | 63 | 3 | 76 | 16 | 71 | 10 |
| 2002 | 41 | 48 | 7 | 45 | 4 | 56 | 16 | 50 | 10 |
| 2000 | 55 | 63 | 8 | 58 | 2 | 71 | 16 | 65 | 10 |
| 1998 | 39 | 47 | 7 | 44 | 5 | 53 | 13 | 48 | 8 |
| 1996 | 52 | 59 | 7 | 54 | 3 | 68 | 16 | 62 | 10 |
| Average | | | 8 | | 3 | | 16 | | 10 |

Source: Current Population Survey, indicated years, November supplements.

Note: “VEP turnout” is the turnout rate among the voting eligible population based on government turnout data. Sampling errors are less than 1 percentage point using generalized variance estimation. CPS = Current Population Survey.
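As a check on the averages discussed above, the following sketch recomputes the mean gap in each mode-by-reporter cell from the rounded Gap columns of Table 4. Because the inputs are the published rounded values, the results are approximate; the dictionary layout and names are ours.

```python
from statistics import mean

# Gap columns transcribed from Table 4 (percentage points), 2008 back to 1996.
gaps = {
    ("face-to-face", "self"): [8, 9, 7, 7, 8, 7, 7],
    ("face-to-face", "proxy"): [3, 4, 3, 4, 2, 5, 3],
    ("telephone", "self"): [16, 18, 16, 16, 16, 13, 16],
    ("telephone", "proxy"): [10, 11, 10, 10, 10, 8, 10],
}

# Mean gap per cell, comparable to the "Average" row of the table.
average_gap = {cell: mean(values) for cell, values in gaps.items()}

# How much lower the gap is for proxy reports than self-reports, by mode.
proxy_reduction = {
    mode: average_gap[(mode, "self")] - average_gap[(mode, "proxy")]
    for mode in ("face-to-face", "telephone")
}

for (mode, reporter), value in average_gap.items():
    print(f"{mode:12s} {reporter:5s} {value:.1f}")
for mode, value in proxy_reduction.items():
    print(f"proxy reduction, {mode}: {value:.1f}")
```

Rounded to whole points, these means reproduce the Average row, and the self-minus-proxy differences are close to the four-point and six-point figures given in the text.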

Panel Attrition

In 12 ANES presidential-year surveys between 1952 and 2008, preelection respondents were asked whether they voted in the previous presidential election. If bias in turnout estimates is caused by biased panel attrition, then we should find that the turnout rate reported by the subset of those respondents who completed the postelection interview is significantly higher than that reported by respondents who did not complete the postelection interview. However, this difference ranged from 0.0 to 2.3 percentage points and averaged only 1.0 (see Table 5). This means that biased attrition contributed to the turnout gap but only to a small degree.

Table 5.

Effect of ANES Panel Attrition on Retrospective Turnout Estimates, 1952–2008.

| Year | Preelection Estimate of Turnout in Election Four Years Ago | Attrited Estimate | Attrition Effect (Difference) |
|---|---|---|---|
| 1952 | 69.8 | 70.7 | 0.8 |
| 1960 | 76.1 | 76.8 | 0.6 |
| 1964 | 80.6 | 80.9 | 0.3 |
| 1968 | 76.6 | 76.5 | 0.0 |
| 1972 | 74.4 | 75.9 | 1.5 |
| 1976 | 71.6 | 72.8 | 1.3 |
| 1980 | 72.7 | 73.3 | 0.6 |
| 1992 | 66.7 | 67.0 | 0.3 |
| 1996 | 75.6 | 77.4 | 1.8 |
| 2000 | 65.5 | 67.8 | 2.3 |
| 2004 | 65.5 | 66.9 | 1.5 |
| 2008 | 67.2 | 68.2 | 1.0 |
| Average | 71.9 | 72.8 | 1.0 |

Note: Differences may not add due to rounding error. ANES = American National Election Studies.
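The attrition effect in Table 5 is the difference between two retrospective turnout estimates. The sketch below recomputes it from the rounded estimates in the table. Individual differences can deviate from the published column by about 0.1 because of rounding, as the table note indicates, and the names used are illustrative.

```python
# (year, preelection estimate, estimate among postelection completers),
# transcribed from Table 5 in percent.
rows = [
    (1952, 69.8, 70.7), (1960, 76.1, 76.8), (1964, 80.6, 80.9),
    (1968, 76.6, 76.5), (1972, 74.4, 75.9), (1976, 71.6, 72.8),
    (1980, 72.7, 73.3), (1992, 66.7, 67.0), (1996, 75.6, 77.4),
    (2000, 65.5, 67.8), (2004, 65.5, 66.9), (2008, 67.2, 68.2),
]

# Attrition effect per year: completers' estimate minus full preelection estimate.
effects = {year: post - pre for year, pre, post in rows}
average_effect = sum(effects.values()) / len(effects)

print(f"average attrition effect: {average_effect:.1f} points")
```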

Response Rate

As expected, a higher response rate was associated with a smaller turnout gap. In a regression treating each ANES, CPS, and GSS estimate as the unit of analysis and predicting the survey’s turnout gap with its response rate, a 10-point improvement in response rate is associated with a reduction in the turnout gap of 1.7 percentage points (b = −.17, SE = .04, n = 104, p < .001).
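For readers who want to see the mechanics of such a bivariate regression, the sketch below fits an ordinary least squares line with the closed-form slope and intercept. The four data points are hypothetical and were constructed so that the slope equals −.17; they are not the article's estimates, and the function name is ours.

```python
from statistics import mean


def ols_slope_intercept(x, y):
    """Return (slope, intercept) of the least squares line of y on x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Hypothetical illustration data (NOT the article's data):
# response rates in percent and turnout gaps in percentage points.
response_rates = [60.0, 70.0, 80.0, 90.0]
turnout_gaps = [17.0, 15.5, 13.5, 12.0]

slope, intercept = ols_slope_intercept(response_rates, turnout_gaps)

# Implied change in the gap for a 10-point higher response rate.
change_per_10_points = 10 * slope
print(f"slope = {slope:.2f}, change per 10 points = {change_per_10_points:.1f}")
```

With a slope of −.17, a 10-point higher response rate corresponds to a gap about 1.7 points smaller, which is the interpretation given in the text.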

The CPS provides unique leverage to examine the relationship between nonresponse and the turnout gap at the state level because, unlike the ANES and GSS, the CPS provides subsamples that are representative of the states. Using a data set that separated results from each voting and registration supplement between 1980 and 2010 into results for individual states (Bauman 2012), we estimated regression coefficients predicting the turnout gap (treating nonresponses as missing data) with the government-reported turnout rate in the state, the proportion of respondents who were asked but did not answer the turnout question, the proportion of unit nonresponse on the voting and registration supplement, and dummy variables for years (see Table 6). The turnout gap was smaller when official turnout was higher, and the turnout gap grew larger as the amounts of missing data and unit nonresponse increased. A 10-point increase in actual turnout is associated with a 2.1-point reduction in the turnout gap. A 10-point increase in item missing data is associated with a 2.1-point increase in the turnout gap. And a 10-point increase in nonresponse to the whole survey is associated with a 2.4-point increase in the turnout gap.
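To illustrate how these coefficients translate into predicted gaps, the sketch below evaluates the linear predictor using the rounded coefficients reported for this model in Table 6. It assumes that all variables are expressed as proportions and that 1980 is the omitted reference year. The state profile passed to the function is hypothetical.

```python
# Rounded coefficients transcribed from Table 6 (proportion scale assumed).
CONSTANT = 0.17
ACTUAL_TURNOUT = -0.21
ITEM_MISSING = 0.21
UNIT_MISSING = 0.24
# Year dummies; 1980 is assumed to be the omitted reference category.
YEAR_EFFECTS = {
    1980: 0.00, 1982: 0.00, 1984: 0.01, 1986: -0.01, 1988: 0.01,
    1990: -0.01, 1992: 0.02, 1994: -0.03, 1996: 0.00, 1998: -0.03,
    2000: 0.00, 2002: -0.02, 2004: 0.01, 2006: -0.01, 2008: 0.01,
    2010: -0.02,
}


def predicted_gap(actual_turnout, item_missing, unit_missing, year):
    """Predicted state-level turnout gap (proportion) from the linear model."""
    return (
        CONSTANT
        + ACTUAL_TURNOUT * actual_turnout
        + ITEM_MISSING * item_missing
        + UNIT_MISSING * unit_missing
        + YEAR_EFFECTS[year]
    )


# Hypothetical state: 60 percent turnout, 10 percent item and unit nonresponse.
baseline = predicted_gap(0.60, 0.10, 0.10, 1980)
higher_turnout = predicted_gap(0.70, 0.10, 0.10, 1980)
print(round(baseline, 3), round(higher_turnout - baseline, 3))
```

Holding the other inputs fixed, raising actual turnout by 10 points lowers the predicted gap by about 2.1 points, matching the interpretation in the text.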

Table 6.

Predictors of CPS Turnout Gap by State.

| Variable | Coefficient |
|---|---|
| Actual turnout | −.21*** (.014) |
| Item missing data | .21*** (.049) |
| Unit missing data | .24*** (.056) |
| 1982 | .00 (.006) |
| 1984 | .01 (.006) |
| 1986 | −.01 (.007) |
| 1988 | .01 (.006) |
| 1990 | −.01 (.006) |
| 1992 | .02** (.006) |
| 1994 | −.03*** (.006) |
| 1996 | .00 (.006) |
| 1998 | −.03*** (.007) |
| 2000 | .00 (.006) |
| 2002 | −.02** (.007) |
| 2004 | .01 (.007) |
| 2006 | −.01 (.007) |
| 2008 | .01 (.007) |
| 2010 | −.02 (.008) |
| Constant | .17 (.010) |
| R² | .35 |
| n | 816 |

Note: CPS = Current Population Survey.

**p < .01.

***p < .001.

Confounds of Mode and Reporting

With the exception of the 1996 ANES mode experiment, none of the data discussed thus far involved random assignment to mode, to self-reporting versus proxy reporting, or to elapsed time between the election and the survey interview, nor did they involve experimental manipulation of response rate. Therefore, the comparisons on these factors are observational and subject to alternative explanations. For example, in the CPS and the GSS, telephone interviews were sometimes done with respondents who were too difficult to reach to complete a face-to-face interview. Thus, turnout differences by mode are confounded with turnout differences by accessibility to the interviewer. Similarly, in the CPS, household members decide who will be described by self-reports and who will be described by proxy reports, so differences between self-reports and proxy reports may be due to differences in the characteristics of self-selected household informants and other household members and not due to differences between self-reporting and proxy reporting per se.

To eliminate variation across surveys in respondent self-selection, mode, and attrition, we examined the turnout rates only for ANES and CPS respondents who lived in single-adult households (and therefore all provided self-reports) and who were interviewed face-to-face. Furthermore, in the CPS, we examined data only from the first of the eight waves of interviewing for each member of a single-adult household to eliminate panel attrition and panel conditioning.

In the 2008 ANES and CPS, turnout estimates were slightly lower in this subgroup of respondents, 76.3 percent and 71.5 percent, respectively (ANES n= 756, CPS n= 1,273), than in the entire survey samples: 77.4 percent and 73.8 percent. The slightly higher numbers for the full CPS samples (which include a mix of self-reports and proxy reports) are inconsistent with the conclusion that including the proxy reports reduces reported turnout rates, but we do not know the true turnout rates for these subgroups.

Multivariate Prediction of the Turnout Gap

To compare several results and control for more than one variable at a time, we present estimates of the parameters of ordinary least squares regression equations predicting the turnout gap (Table 7). Each column is a regression equation. The dependent variable is the VEP turnout gap, coded as the percentage point error (so a value of 10.5 represents a VEP turnout estimate that is 10.5 percentage points greater than the official VEP estimate based on government records). The predictors are as follows: telephone is a dummy variable indicating whether any data for the survey were collected by phone, with a reference category of exclusively face-to-face data collection; elapsed time is the difference in years between the year of the survey interview and the year of the election;10 response rate is the survey’s response rate as shown in Online Appendix Table A1; ANES and GSS data are dummy variables indicating these data sources, with the CPS as the reference category; presidential is a dummy variable indicating whether the election being asked about was in a presidential election year; and year is the year of the election being asked about (coded 1952, 1956, etc.).11
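The variable coding just described can be made concrete with a small sketch. The record type and function below are hypothetical names of ours, not part of any survey's software. The example case uses the 2008 ANES figures quoted in the Introduction (77 percent reported, 62 percent official), while the mode flag and the response rate value are placeholders rather than reported figures.

```python
from dataclasses import dataclass


@dataclass
class TurnoutEstimate:
    source: str                  # "ANES", "CPS", or "GSS"
    interview_year: int
    election_year: int
    survey_turnout: float        # survey estimate, percent
    official_vep_turnout: float  # government-based VEP turnout, percent
    any_telephone: bool          # True if any interviews were by phone
    response_rate: float         # percent


def code_case(e: TurnoutEstimate) -> dict:
    """Code one survey estimate into the regression variables."""
    return {
        "gap": e.survey_turnout - e.official_vep_turnout,
        "telephone": int(e.any_telephone),       # reference: face-to-face only
        "elapsed_time": e.interview_year - e.election_year,
        "response_rate": e.response_rate,
        "anes": int(e.source == "ANES"),         # reference: CPS
        "gss": int(e.source == "GSS"),
        # U.S. presidential elections fall in years divisible by four.
        "presidential": int(e.election_year % 4 == 0),
        "year": e.election_year,
    }


# Placeholder values for any_telephone and response_rate (not reported figures).
anes_2008 = TurnoutEstimate("ANES", 2008, 2008, 77.0, 62.0, False, 60.0)
case = code_case(anes_2008)
print(case["gap"], case["elapsed_time"], case["presidential"])
```

A 2008 interview asking about the 2004 election would be coded with an elapsed time of 4 under the same function.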

Table 7.

Predictors of Turnout Gap (OLS Regression).

| Variable | Model 1 | Model 2 | Model 3 | Model 4 | Model 5 | Model 6 | Model 7 | Model 8 | Model 9 |
|---|---|---|---|---|---|---|---|---|---|
| Data | ANES Off-years Only | ANES, All Same Year Estimates | ANES, All Same Year Estimates | CPS | GSS | All Studies | All Studies, Presidential Years | All Studies | All Studies |
| Telephone | | | | | | | | | −1.73 (1.43) |
| Elapsed time | | | | | | | | | 0.65* (0.27) |
| Response rate | −0.09 (.124) | −0.10 (0.08) | 0.01 (0.13) | −0.51 (0.32) | 0.21 (0.15) | −0.17** (0.05) | −0.17** (0.06) | −0.16** (0.05) | 0.00 (0.11) |
| ANES data | | | | | | | | | 4.46 (2.86) |
| GSS data | | | | | | | | | 2.20 (2.26) |
| Presidential | | 0.92 (1.25) | 1.71 (1.48) | | | | | 1.68 (1.34) | −0.56 (1.33) |
| Year | | | 0.06 (0.06) | | | | | | −0.07 (0.05) |
| Constant | 19.96† (8.56) | 20.89*** (5.35) | −109.30 (130.41) | 55.24† (27.86) | −0.079 (11.13) | 27.22*** (3.78) | 27.21*** (4.65) | 25.05*** (4.15) | 148.30 (101.90) |
| R² | .06 | .12 | .16 | .10 | .06 | .10 | .08 | .12 | .38 |
| n | 9 | 24 | 24 | 25 | 32 | 100 | 82 | 100 | 100 |

Note: “Telephone” is an indicator variable with a reference category of face-to-face. Results from “experimental” question wordings in ANES data are excluded. ANES = American National Election Studies; CPS = Current Population Survey; GSS = General Social Survey; OLS = ordinary least squares.

†p < .1.

*p < .05.

**p < .01.

***p < .001.

Models 1 to 3, using ANES data only, reveal no statistically significant association between the response rate and the turnout gap, whether or not we control for study year or presidential election year (see Table 7). Models 4, 5, 6, and 7 test for an association between response rate and the turnout gap in the CPS alone, the GSS alone, all studies combined, and all studies done in presidential election years, respectively. The estimates of the parameters of the models including all studies (6, 7, and 8) show that the turnout gap was a little smaller when the response rate was higher: about a one-point reduction in the turnout gap for a six-point increase in response rate. Model 9 uses data from all studies and includes all control variables. This model suggests that elapsed time after an election inflates the turnout gap by a fraction of a point per year. In Model 9, response rate was not a statistically significant predictor of the turnout gap.12

Discussion

Collectively, these findings support several conclusions:

- The turnout gap is substantial in all three studies and has remained so for decades. The CPS gap is larger than the Census Bureau has reported, but the CPS gap is smaller than the gaps in ANES and GSS estimates, and the ANES gap is largest.

- The turnout gap seems partly to be due to social desirability response bias, as evidenced by the fact that the gap is smaller in face-to-face interviews than in telephone interviews (consistent with prior literature such as Holbrook et al. 2003), smaller in (self-selected) proxy reports than in self-reports, and smaller when question wording allows respondents to say they usually voted and thought about voting in this election before acknowledging that they did not.

- The turnout gap seems also to be partly due to recall errors because the gap grows as more time passes between the election and the survey interview.

- Acquiescence response bias may also inflate the turnout gap. The gap is reduced when, instead of the conventional yes/no question format, respondents are offered balanced, construct-specific response options.

- Biased nonresponse appears partly responsible for the gap, in that people who do not vote are especially unlikely to participate in surveys.

- Panel attrition in the ANES from the preelection interview to the postelection interview seems to inflate the turnout gap, but only slightly (by about one point on average).

The conclusion about biased nonresponse is bolstered by other work. For example, Burden (2000) demonstrated that self-reported turnout in the ANES is higher among respondents who were easier to interview. Voogt and Van Kempen (2002) similarly found that nonrespondents in the Dutch National Election Study (who answered a nonresponse follow-up study) were less likely to report voting than respondents were. Likewise, findings reported by Berent et al. (2016) suggest that in a recent ANES survey done online, survey participants were more likely to vote than were nonrespondents. Peress (2010) also analyzed ANES data and reached the same conclusion.

Berent et al. (2016) found that the turnout gap was small in a recent online survey. Our results can be reconciled with theirs by noting that whereas the data we focus on were collected by interviewers, Berent et al.’s respondents completed self-administered questionnaires via the Internet. And whereas we focus on surveys that measured turnout using question wordings that are susceptible to acquiescence response bias, Berent et al.’s wording was designed to avoid this bias. And we have shown that turnout rates are reduced when reports are collected via a mode that minimizes social desirability bias and when wording avoids acquiescence as well. Thus, our findings help explain why the surveys we examine manifest more overreporting than Berent et al. observed.

The turnout gap is partly attributable to measurement error and partly attributable to nonresponse bias. To minimize measurement error among surveys employing interviewers, survey designers might choose from a tool kit that includes proxy reporting, nontelephone interviews, and questions designed to minimize satisficing (including acquiescence) and social desirability. To minimize nonresponse bias and its effects, survey researchers should favor methods that produce higher response rates, recruitment techniques that make the decision to participate less dependent on interest in politics, and weighting that accounts for the correlation between response propensity and turnout.

Funding

The author(s) received no financial support for the research, authorship, and/or publication of this article.

Author Biographies

Matthew DeBell is a senior research scholar at the Institute for Research in the Social Sciences at Stanford University. Since 2006 he has served as the Director of Stanford Operations for the American National Election Studies.

Jon A. Krosnick is Frederic O. Glover Professor in humanities and social sciences, and professor of communication, political science, and, by courtesy, psychology at Stanford University. He is the Director of Stanford’s Political Psychology Research Group, Research Psychologist at the U.S. Census Bureau, and former principal investigator of the American National Election Studies.

Katie Gera is the Director of Growth and Strategic Planning at Minted, an online marketplace for independent artists. She graduated from Stanford University in 2011 with a BA in public policy and returned to receive her MBA in 2016.

David S. Yeager is an Associate Professor of psychology at the University of Texas at Austin. His research focuses primarily on adolescent development.

Michael P. McDonald is an Associate Professor of political science at the University of Florida. He also directs the U.S. Elections Project, an information source for the United States electoral system.

Footnotes

1. Interviewers have been given the discretion to opt out of administering the supplement to a respondent if they believe that administering it might reduce the person’s willingness to be interviewed in future waves of the Current Population Survey (CPS).

2. What we refer to as the “CPS method” or “Census method” is the definition of nonvoters implied by the voter turnout calculation the Census Bureau has used in the past. To our knowledge, the Census Bureau has never presented descriptive statistics for nonvoters using this method.

3. Due to the enormous CPS sample size, all differences exceeding 1 percentage point are statistically significant at p < .05.

4. Data from 1956 respondents who did not complete the postelection study were not retained in the American National Election Studies (ANES) archives. This turnout question was not asked in 1984. This turnout question was asked postelection in 1988.

5. The Census Bureau does not report that response rate.

6. Undercoverage affects the ANES and General Social Survey (GSS), too.

7. We examine the effects of response rates across studies but not by mode. Response rates can be much higher in face-to-face interviews than on the telephone, and in principle, the turnout gap could be affected by mode-based differences in nonresponse. However, the current studies (with the one exception of the ANES in 2002) use the telephone as a second mode within studies also conducted face-to-face. As such, they do not report response rates separately by mode, so we cannot analyze how differential nonresponse rates across modes affect the turnout gap.

8. We thank Kurt Bauman for demonstrating this analysis and providing the data.

9. When the size of self-reports’ impact on overestimation is more precisely measured, the CPS may be able to design different weights for self-reports and proxy reports.

10. A 2008 interview asking about the 2008 election has an elapsed time of 0; a 2008 interview asking about the 2004 election has an elapsed time of 4.

11. Note that the model specifications for the analyses in Table 7 differ from the model specified in Table 6 of necessity. We cannot develop a model like that in Table 6 for the combined data sets of the three studies because the ANES and GSS are not representative at the state level, and we cannot develop an equivalent model for the three studies nationally because it would be overspecified for the small number of national data sets available.

12. Controlling for the government-reported actual turnout rate had no significant effect on the results.

Supplemental Material

Supplemental material for this article is available online.

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

References

- Abelson Robert P., Loftus Elizabeth F., and Greenwald Anthony G. 1992. “Attempts to Improve Accuracy of Self-reports of Voting.” Pp. 138–53 in Questions about Questions, edited by Tanur Judith M. New York: Russell Sage Foundation.

- Anderson Barbara A., Silver Brian D., and Abramson Paul R. 1988. “The Effects of Race of the Interviewer on Measures of Electoral Participation by Blacks in SRC National Election Studies.” Public Opinion Quarterly 52:53–83.

- Ansolabehere Stephen and Hersh Eitan. 2012. “Validation: What Big Data Reveal about Survey Misreporting and the Real Electorate.” Political Analysis 20:437–59.

- Bauman Kurt. 2012. “Consolidated Public Use CPS November 1980–2010 Data [data file].” U.S. Census Bureau, MD.

- Belli Robert F., Traugott Michael W., and Rosenstone Steven J. 1994. “Reducing Over-reporting of Voter Turnout: An Experiment Using a ‘Source Monitoring’ Framework.” ANES Technical Report No. 010153. Retrieved February 8, 2018 (http://ftp://ftp.electionstudies.org/ftp/nes/bibliography/documents/nes010153.pdf).

- Belli Robert F., Moore Sean E., and Van Hoewyk John. 2006. “An Experimental Comparison of Question Forms Used to Reduce Vote Overreporting.” Electoral Studies 25:751–59.

- Belli Robert F., Traugott Michael W., Young Margaret, and McGonagle Katherine A. 1999. “Reducing Vote Overreporting in Surveys: Social Desirability, Memory Failure, and Source Monitoring.” Public Opinion Quarterly 63:90–108.

- Berent Matthew K., Krosnick Jon A., and Lupia Arthur. 2011. “The Quality of Government Records and Over-estimation of Registration and Turnout in Surveys: Lessons from the 2008 Panel Study’s Registration and Turnout Validation Exercise.” ANES Technical Report Series, No. nes012554. Retrieved February 8, 2018 (http://electionstudies.org/resources/papers/nes012554.pdf).

- Berent Matthew K., Krosnick Jon A., and Lupia Arthur. 2016. “Measuring Voter Registration and Turnout in Surveys: Do Official Government Records Yield More Accurate Estimates?” Public Opinion Quarterly 80:597–621. doi: 10.1093/poq/nfw021.

- Bernstein Robert, Chadha Anita, and Montjoy Robert. 2001. “Overreporting Voting: Why It Happens and Why It Matters.” Public Opinion Quarterly 65:22–44.

- Bollinger Christopher R. and Hirsch Barry T. 2007. “How Well Are Earnings Measured in the Current Population Survey? Bias from Nonresponse and Proxy Respondents.” Paper presented at the North American Summer Meetings of the Econometric Society, June 21–24, Duke University, Durham, NC. Retrieved May 26, 2015 (http://www2.gsu.edu/*ecobth/ES_Bollinger-Hirsch_Response_Bias_Apr07.pdf).

- Bridge Gary, Reeder Leo G., Kanouse David, Kinder Donald R., and Nagy Vivian Tong. 1977. “Interviewing Changes Attitudes—Sometimes.” Public Opinion Quarterly 41:56–64.

- Burden Barry C. 2000. “Voter Turnout and the National Election Studies.” Political Analysis 8:389–98.

- Clausen Aage R. 1968. “Response Validity: Vote Report.” Public Opinion Quarterly 32:588–606.

- Cobb Curtiss L. III, Krosnick Jon A., and Pearson Jeremy. 2011. “The Accuracy of Self-reports and Proxy Reports in Surveys.” Stanford University, CA. Unpublished manuscript.

- Cobb Sidney, Thompson Donovan J., Rosenbaum Joseph, Warren Joseph E., and Merchant William R. 1956. “On the Measurement of Prevalence of Arthritis and Rheumatism from Interview Data.” Journal of Chronic Diseases 3:134–39.

- Cronbach Lee J. 1950. “Further Evidence on Response Sets and Test Design.” Educational and Psychological Measurement 10:3–31.

- DeBell Matthew and Cowden Jonathan. 2010. Benchmark Report for the 2008 American National Election Studies Time Series and Panel Study. ANES Technical Report Series, no. nes012493. Ann Arbor, MI, and Palo Alto, CA: American National Election Studies. Retrieved from (http://electionstudies.org/resources/papers/nes012493.pdf).

- Duff Brian, Hanmer Michael J., Park Won-Ho, and White Ismail K. 2007. “Good Excuses: Understanding Who Votes with an Improved Turnout Question.” Public Opinion Quarterly 71:67–90.

- File Thom and Crissey Sarah. 2010. Voting and Registration in the Election of November 2008. Current Population Reports, P20–562. Suitland, MD: U.S. Census Bureau.

- Flanigan William H. and Zingale Nancy H. 1983. Political Behavior of the American Electorate. 5th ed. Boston, MA: Allyn & Bacon.

- Gera Katie, Yeager David, Krosnick Jon A., DeBell Matthew, and McDonald Michael. 2010. “Overestimation of Voter Turnout in National Surveys.” Paper presented at the Annual Meeting of the American Political Science Association, Washington, DC. [Google Scholar]

- Granberg Donald and Holmberg Sören. 1992. “The Hawthorne Effect in Election Studies: The Impact of Survey Participation on Voting.” British Journal of Political Science 22:240–47. [Google Scholar]

- Greenwald Anthony G., Carnot Catherine G., Beach Rebecca, and Young Barbara. 1987. “Increasing Voting Behavior by Asking People If They Expect to Vote.” Journal of Applied Psychology 72:315–18. [Google Scholar]

- Greenwald Anthoony G., Klinger Mark R., Vande Kamp Mark E., and Kerr Katherine L. 1988. “The Self-Prophecy Effect: Increasing Voter Turnout by Vanity-assisted Consciousness Raising.” University of Washington, Seattle, WA. Unpublished manuscript. [Google Scholar]

- Groves Robert M. 2006. “Nonresponse Rates and Nonresponse Bias in Household Surveys.” Public Opinion Quarterly 70:646–75. [Google Scholar]

- Groves Robert M. and Peytcheva Emilia. 2008. “The Impact of Nonresponse Rates on Nonresponse Bias: A Meta-analysis.” Public Opinion Quarterly 72:167–89. [Google Scholar]

- Hanmer MichaelJ.,Banks AntoineJ.,andWhite IsmailK..2014.“ExperimentstoReduce the Over-reporting of Voting: A Pipeline to the Truth.” Political Analysis 22:130–41. [Google Scholar]

- Highton Benjamin. 2005. “Self-reported versus Proxy-reported Voter Turnout in the Current Population Survey.” Public Opinion Quarterly 69:113–23. [Google Scholar]

- Holbrook Allyson L., Green Melanie C., and Krosnick Jon A. 2003. “Telephone versus Face-to-face Interviewing of National Probability Samples with Long Questionnaires: Comparisons of Respondent Satisficing and Social Desirability Bias.” Public Opinion Quarterly 67:79–125. [Google Scholar]

- Holbrook Allyson L. and Krosnick Jon A. 2013. “A New Question Sequence to Measure Voter Turnout in Telephone Surveys: Results of an Experiment in the 2006 ANES Pilot Study.” Public Opinion Quarterly 77:106–23. [Google Scholar]

- Hur Aram and Achen Chris. 2013. “Coding Voter Turnout Responses in the Current Population Survey.” Public Opinion Quarterly 78:985–93. [Google Scholar]

- Katosh John P. and Traugott Michael W. 1981. “The Consequences of Validated and Self-reported Voting Measures.” Public Opinion Quarterly 45:519–35. [Google Scholar]

- Kraut Robert E. and McConohay John B. 1973. “How Being Interviewed Affects Voting: An Experiment.” Public Opinion Quarterly 37:398–406. [Google Scholar]

- Krosnick Jon A. and Presser Stanley. 2010. “Question and Questionnaire Design.” Pp. 263–314 in Handbook of Survey Research, edited by Marsden Peter and Wright James. Bradford Emerald Group. [Google Scholar]

- Mann Christopher B. 2005. “Unintentional Voter Mobilization: Does Participation in Preelection Surveys Increase Voter Turnout?” The ANNALS of the American Academy of Political and Social Science 601:155–68. [Google Scholar]

- Martinez Michael D. 2003. “Comment on “Voter Turnout and the National Election Studies.”” Political Analysis 11:187–92. [Google Scholar]

- McDonald Michael. 2015. “United States Elections Project.” Retrieved January 24, 2015 (http://www.electproject.org/national-1789-present).

- McDonald Michael P. 2003. “On the Overreport Bias of the National Election Study Turnout Rate.” Political Analysis 11:180–86. [Google Scholar]

- Olson Kristen and Witt Lindsey. 2011. “Are We Keeping the People Who Used to Stay? Changes in Correlates of Panel Survey Attrition over Time.” Social Science Research 40:1037–50. [Google Scholar]

- Parry Hugh J. and Crossley Helen M. 1950. “Validity of Responses to Survey Questions.” Public Opinion Quarterly 14:61–80. [Google Scholar]

- Peress Michael. 2010. “Correcting for Survey Nonresponse Using Variable Response Propensity.” Journal of the American Statistical Association 105:1418–30. [Google Scholar]

- Sigelman Lee. 1982. “The Nonvoting Voter in Voting Research.” American Journal of Political Science 26:47–56.

- Silver Brian D., Anderson Barbara A., and Abramson Paul R. 1986. “Who Over-reports Voting?” American Political Science Review 80:613–24.

- Smith Tom W. 1982. Discrepancies in Past Presidential Vote. GSS Methodological Report No. 21. Retrieved February 8, 2018 (http://gss.norc.org/Documents/reports/methodological-reports/MR021.pdf).

- Smith Jennifer K., Gerber Alan S., and Orlich Anton. 2003. “Self-prophecy Effects and Voter Turnout: An Experimental Replication.” Political Psychology 24:593–604.

- Spangenberg Eric R. and Greenwald Anthony G. 1999. “Social Influence by Requesting Self-prophecy.” Journal of Consumer Psychology 8:61–89.

- Stocke Volker. 2007. “Response Privacy and Elapsed Time Since Election Day as Determinants for Vote Overreporting.” International Journal of Public Opinion Research 19:237–46.

- Thompson Donovan J. and Tauber Joseph. 1957. “Household Survey, Individual Interview, and Clinical Examination to Determine Prevalence of Heart Disease.” American Journal of Public Health 47:1131–40.

- U.S. Census Bureau. 2006a. Current Population Survey Design and Methodology Technical Paper 66. Retrieved February 8, 2018 (http://www.census.gov/prod/2006pubs/tp-66.pdf).

- U.S. Census Bureau. 2008. Current Population Survey, November 2008, Voting and Registration Supplement File, Technical Documentation. Retrieved February 8, 2018 (http://www.census.gov/prod/techdoc/cps/cpsnov08.pdf).

- Voogt Robert J. J. and Van Kempen Hetty. 2002. “Nonresponse Bias and Stimulus Effects in the Dutch National Election Study.” Quality & Quantity 36:325–45.

- Yalch Richard F. 1976. “Pre-election Interview Effects on Voter Turnout.” Public Opinion Quarterly 40:331–36.

Associated Data
