Abstract

Gene regulatory networks (GRNs) govern many core developmental and biological processes underlying human complex traits. Even with broad-scale efforts to characterize the effects of molecular perturbations and interpret gene coexpression, it remains challenging to infer the architecture of gene regulation in a precise and efficient manner. Key properties of GRNs, like hierarchical structure, modular organization, and sparsity, provide both challenges and opportunities for this objective. Here, we seek to better understand properties of GRNs using a new approach to simulate their structure and model their function. We produce realistic network structures with a novel generating algorithm based on insights from small-world network theory, and we model gene expression regulation using stochastic differential equations formulated to accommodate modeling molecular perturbations. With these tools, we systematically describe the effects of gene knockouts within and across GRNs, finding a subset of networks that recapitulate features of a recent genome-scale perturbation study. With deeper analysis of these exemplar networks, we consider future avenues to map the architecture of gene expression regulation using data from cells in perturbed and unperturbed states, finding that while perturbation data are critical to discover specific regulatory interactions, data from unperturbed cells may be sufficient to reveal regulatory programs.

1. Introduction

In the past decade, single cell sequencing assays have been instrumental in enabling functional studies of gene regulatory networks (GRNs). Observational studies of single cells have revealed substantial diversity and heterogeneity in the cell types that comprise healthy and diseased tissues [1], and molecular models of transcriptional systems have been used to understand the developmental processes involved in maintaining cell state and cell cycle [2,3]. Meanwhile, recent advances in the design of interventional studies, including CRISPR-based molecular perturbation approaches like Perturb-seq [4,5], have been useful for learning the local structure of GRNs around a focal gene or pathway [6,7], discovering trait-relevant gene sets at scale [8], and determining novel functions for poorly characterized genes in a particular cell type [9]. The preponderance of single-cell data in multiple cell types, tissues, and contexts has also fueled a resurgence of interest in the wholesale inference of GRNs, capitalizing on new techniques from graph theory and causal inference [10,11].

In functional genomics, network inference and candidate gene prioritization are typical aims of experimental data analysis. In this setting, it is common to make assumptions about the structure and function of GRNs to enable convenient computation. In particular, linear models of gene expression on directed acyclic graphs (DAGs) have been foundational for studies of GRNs, and this approach to structure learning is well-described in the literature [12,13]. Many extensions based on this framework have been proposed, including additional sparsity constraints in the form of regression penalties or low-rank assumptions [14,15]. Analogous techniques have also been used in the algorithmic design of perturbation experiments [16].

Even though convenience assumptions like linearity and acyclicity are rarely seen as limiting in practice, it is important to note that they are not always biologically realistic. Gene regulation is known to contain extensive feedback mechanisms [6], and some regulatory structures (in particular, triangles, like the feed-forward motif [17,18]) are not captured well by low-rank representations of GRNs [19]. Furthermore, biological networks are thought to be well described by directed graphs with hierarchical organization and with a degree-distribution that follows an approximate power-law [20–22]. In network inference, it is less common to make explicit use of these properties, though there are notable exceptions [7,23].

With these practical considerations in mind, it is worth critically examining assumptions which are (or could be) made about the structure of GRNs. In network theory, there are well-established models of networks with group structure [24,25] and with scale-free topologies [26–28]. The defining feature of directed scale-free graphs is a power-law distribution of node in- and out-degrees: this yields emergent properties including group-like structure and enrichment for structural motifs [18]. Further, most nodes in these graphs are connected to one another by short paths, which is referred to as the “small-world” property of networks [29,30].

Here, we characterize in detail a set of structural properties that we consider to be highly relevant for the study of GRNs. We propose a new algorithm to generate synthetic networks with these properties and formulate a gene expression model to simulate data from them. We use this simulation framework to conduct an array of in silico functional genomic studies and characterize the parameter space of our model in light of a recent genome-wide Perturb-seq study [9]. Our results provide intuition about the effects of various graph properties and how they manifest in experimental data. We conclude by discussing implications for future efforts to map the architecture of gene regulation and complex traits, with particular emphasis on identifying pairwise regulatory relationships between genes and clustering genes into programs. Our analysis tools are available on github as a resource to the scientific community.

2. Main

2.1. Modeling approach

Inspired by previous work from network theory and systems biology, we list what we consider to be key properties of GRNs. We motivate these criteria in light of a recent genome-scale study of genetic perturbations, conducted in an erythroid progenitor cell line (K562) (Fig. 1) [9]. To date, this is one of the largest available single-cell and single-gene perturbation datasets in any cell type: the data contain measurements on the expression of 5,530 gene transcripts in 1,989,578 cells, which were subject to 11,258 CRISPR-based perturbations of 9,866 unique genes. Here, we subset these data to 5,247 perturbations that target genes whose expression is also measured in the data (Methods). Key network properties are as follow:

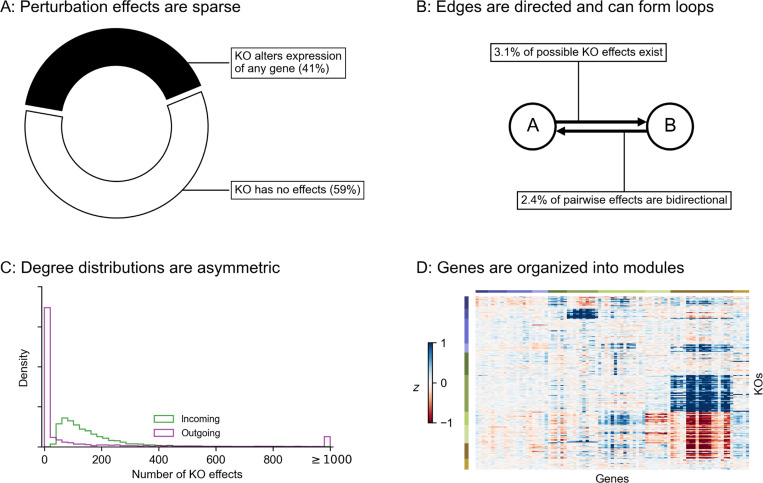

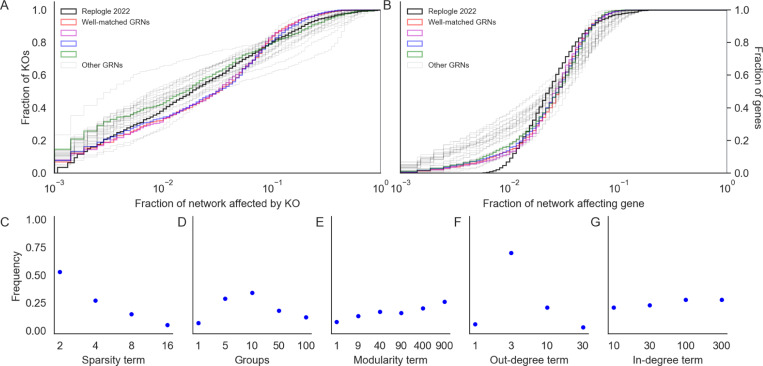

Figure 1: Key properties of gene regulatory networks.

Data from Replogle et. al., 2022. (A) Of the 5,247 perturbations in our analysis subset, 2,152 (41%) have a measurable effect on the transcriptional state of cells (energy-test p < 0.001). (B) Among all ordered pairs of genes, 3.1% (865,719 pairs) have a one-directional effect (FDR-corrected p < 0.05). Of these pairs with KO effects, 2.4% (20,621 pairs) further have bidirectional effects. (C) Summaries of the distribution of KO effects (Anderson-Darling p < 0.05) from the perspective of genes as subject to perturbation (outgoing effects) and as target genes when other genes are perturbed (incoming effects). (D) Subset of -normalized expression data corresponding to 10 gene modules, using labels as provided in the dataset - each modules is labeled by a color in the to bars above the x- and y-axes, and z-scores are clipped at ±1, for visualization.

GRNs are sparse: While gene expression is controlled by many variables, the typical gene is directly affected by a small number of regulators. We further expect the number of regulators of any single gene to be much smaller than the total number of regulators in the network. Also, not all genes participate in expression regulation: only 41% of perturbations that target a primary transcript have significant effects on the expression of any other gene (Fig. 1A).

GRNs have directed edges and feedback loops: Regulatory relationships between genes are directed, with one gene acting as a regulator and the other as a target gene: this means that “A regulates B” is distinct from “B regulates A”. Meanwhile, feedback loops are also thought to be pervasive in gene regulatory networks. A simple case of a feedback loop is bidirectional regulation, which is observed in data: 3.1% of ordered gene pairs have at least a one-directional perturbation effect (i.e., “A affects B”, Anderson-Darling FDR-corrected p < 0.05), and 2.4% of these pairs further have bi-directional effects (i.e., “B also affects A”) (Fig. 1B).

GRNs have asymmetric distributions of in- and out-degree: A further asymmetry between regulators and target genes arises from the existence of master regulators, which directly participate in the regulation of many other genes. The number of regulators per gene and genes per regulator are both thought to follow an approximate power-law distribution [20, 21], and indeed, the number of perturbation effects per regulator has a heavier-tailed distribution than the number of effects per target gene (Fig. 1C).

GRNs are modular: Genes in regulatory networks have different molecular functions that are executed in concert across various cell and tissue types. This grouping of genes by function also corresponds to a hierarchical organization of regulatory relationships that is revealed when these programs respond similarly to certain sets of perturbations (Fig. 1D).

While these criteria are not exhaustive, they do substantially constrain the space of plausible GRN structures. But from first principles, it is not obvious how these properties of GRNs manifest in an ever-growing body of experimental data. In other words, what does it matter that networks are sparse, or modular?

2.2. Network generating algorithm

To better understand these foundational questions about the architecture of gene expression regulation, we propose a two-step process to simulate synthetic GRNs. First, we produce realistic graph structures using a novel generating algorithm: we show that its parameters control key properties of the resulting graphs. Second, we describe a dynamical systems model of gene expression, which we use to generate synthetic data from arbitrary graph structures. With these tools, we conduct an array of simulated molecular perturbation studies, varying network properties of interest: an overview of our network generating algorithm is in Fig. 2A.

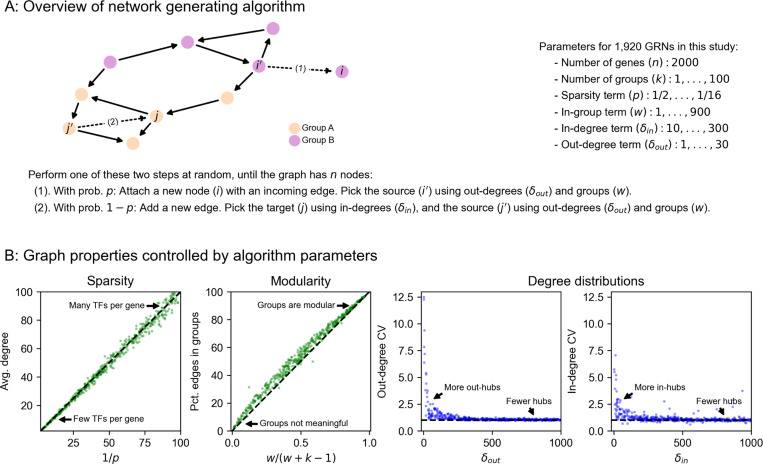

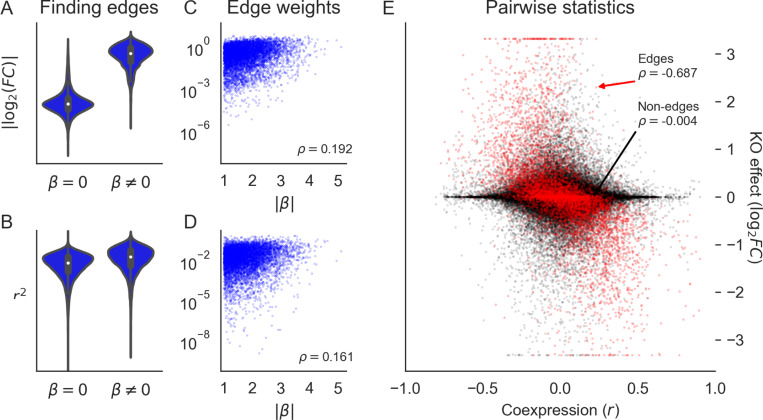

Figure 2: Modeling approach and network generating algorithm.

(A) Overview of network generating algorithm, based on a growth process with preferential attachment. At each step, randomly add either a node or an edge, with the source and target determined by the out- and in-degree distributions, and node membership in groups. (B) Key graph properties can be tuned by changing the parameters of the generating algorithm. We validate this in 1,000 synthetic graphs with 500 nodes each, produced with various generating parameters. The same networks are plotted in all four panels, indicating robustness across different background distributions of parameters.

Our algorithm is based on that of Bollobas et. al., 2003 [28], which models network growth with preferential attachment. This algorithm starts with a small initial graph, and randomly adds nodes or directed edges until the graph reaches a pre-specified size. When adding a node, the new node is selected to be the target of a new directed edge. When adding an edge between existing nodes, a node is selected to be the target with a probability that increases with the number of outgoing edges it already has. When selecting a node to be the source of a new edge (i.e., to be the regulator for a new gene, if we are adding a node, or for an existing gene, if we are adding an edge), we select with probability increasing in the number of incoming edges it already has. Our work extends this algorithm in two ways: first, by assigning each node in the network to one of a number of pre-specified groups, and second, by specifying a within-group affinity term which biases edges to be drawn between members of the same group. The full procedure, including pseudocode and a description of its parameters, is given by Algorithm 1.

The output of our algorithm is a directed scale-free network on nodes, each of which is assigned to one of groups. The parameters in our algorithm control specific network properties. To show this, we generated 1,000 synthetic graphs with genes using an array of randomly sampled parameters (Fig. 2B). We observe that the sparsity term adjusts the mean number of regulators per gene, which is approximately (Fig. 2B). The number of groups and the

Algorithm 1.

Directed scale-free network with groups

|

modularity term determine the fraction of edges which are drawn between members of the groups - this fraction is approximately (Fig. 2B). Finally, the bias terms and respectively control the coefficient of variation (CV) of the in- and out-degree distributions (Fig. 2B). CV is the standard deviation of a distribution over its mean, and for power-law distributions the CV is related to the power-law coefficient: a larger CV means the distribution has a heavier tail (i.e. there are hub regulators which have many target genes; or there are hub target genes which are directly affected by many regulators).

2.3. Expression model

In order to enable reasonable comparisons with experimental data, we use an expression model with quantitative (rather than binary) measurements, and with dynamics subject to a non-linearity that enforces realistic physical constraints: gene expression is non-negative and saturates near a maximum value. Given a graph structure generated using the algorithm above, we assign parameters to each gene (node) and regulatory interaction (edge) in the graph. Each gene has two rate parameters: one for innate RNA production in the absence of regulators (), and another for the decay of existing cellular RNAs (). Each regulatory relationship, between genes and , has one parameter: a magnitude () which describes the importance of the regulator for the expression of the target gene. We also enforce a constraint that interactions have a minimum strength . A full description of the strategy we use to sample these parameters for synthetic GRNs is in Methods.

Our expression model takes the form of a stochastic differential equation (SDE), and we produce expression values using forward simulation according to the Euler-Maruyama method (Fig. 3A). For gene with regulators having expression (likewise ) at time , the difference equation for expression at time is given by

where the terms on the right hand side of the equation, in order, correspond to transcriptional synthesis, degradation, and noise. Unless stated otherwise, we set as the magnitude of noise, which serves to scale the intrinsic biological noise in synthesis and degradation of RNAs (hence noise is also proportional to . We let be the step size, as in previous work [31], and take as the logistic sigmoid (expit) function . In practice, we conduct forward simulation in vectorized form with an update rule:

Throughout our experiments, we perform on the order of thousands of iterations and then check that the system of differential equations has reached an expression steady-state (Methods).

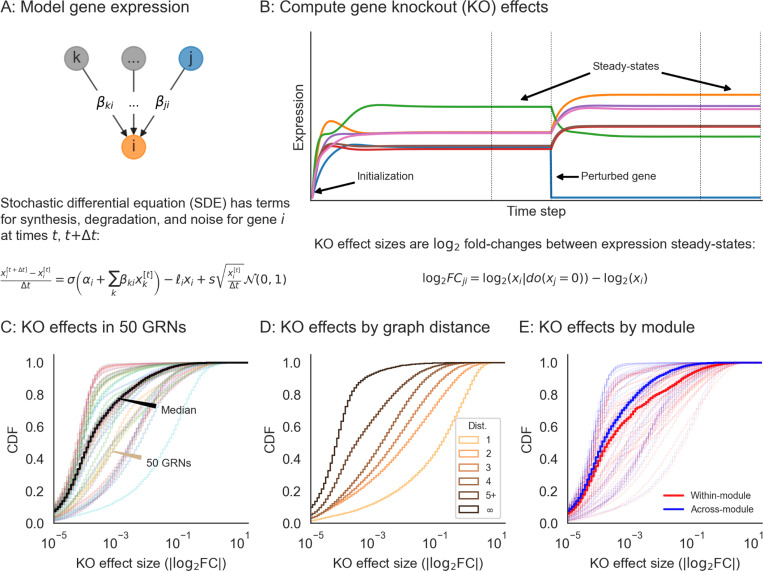

Figure 3: Perturbations and their effects within networks.

(A) Overview of gene expression model and its parameters. Here, is the logistic sigmoid . (B) Example forward simulation of the dynamical systems model. Trace lines show genes, whose expression values are initialized at zero. The system eventually reaches a steady-state, and is then subject to perturbation (knockout of gene , i.e. holding ). Further forward simulation leads to a new steady-state, from which we can compute perturbation effects (log2 FC for other genes ). (C) Distribution of knockout (KO) effects (i.e., log2 fold-changes in expression of a focal gene ) in 50 example GRNs, along with the median distribution (black line). (D) KO effects as a function of network distance between two genes, and (E) within and across modules given by the generating algorithm. Note that the solid lines in (D) and (E) are the median distributions over the 50 example GRNs, split respectively by distances and modules.

Our model can be used to quantify the effects of many types of perturbations. These include (1) gene knockouts (KOs), which we model by nullifying (or equivalently, setting for all ); (2) gene knockdown or overexpression, which can be modeled by decreasing or increasing , increasing or decreasing , or directly manipulating to a fixed value; (3) enhancer edits or transcriptional rewiring, modeled by changing specific and (4) changes to expression noise, modeled by altering , either globally or for specific genes. We further note that similarly formulated perturbations with small magnitudes could also make appropriate models of the effects of molecular quantitative trait loci (QTLs). Here, we focus solely on gene knockouts, which we consider for the remainder of this work.

2.4. Perturbation studies

We conducted synthetic perturbation studies in 1,920 GRNs with n = 2,000 genes - these GRNs were produced with a range of network generating parameters (Methods). For each GRN, we initialized gene expression values at zero and conducted a minimum 5,000 iterations of forward simulation, later verifying that the dynamical system reached equilibrium and assessing its stability (Fig. 3A,B, Methods). We then independently knocked out each gene in the network and let the system re-equilibrate after additional rounds of forward simulation (Fig. 3B). We computed the effect of perturbing gene as the log-fold change in expression of all other genes , which we refer to as the “perturbation effect” of gene on gene . Mathematically, this is

where denotes the expression of gene when gene has been knocked out. While the majority of perturbation effects are small in all GRNs, with 86.6% of all effects below , each network harbors a median 5,296 large effects on the order (Fig. 3C). We also note substantial variability in the distribution of perturbation effects across networks (Fig. 3C).

These effects are largely stratified by the distance between regulator and target (Fig. 3D), with distance here being the length of the shortest path between genes along edges in the network. Across GRNs, a majority of direct regulators of a gene confer at least a modest effect on average (77.3% of genes at distance 1 have when knocked out). Meanwhile, indirect effects of this magnitude also exist, but are less common on a per-interaction basis (mean 21.5% of gene pairs not connected by an edge). However, since mediation is much more common than direct regulation, mediated effects contribute a substantial fraction of perturbation effects at all but the largest magnitudes - for example, 98.5% of effects at across GRNs are mediated rather than direct (Fig. S1).

Since genes in the simulated GRNs belong to pre-defined groups, we further investigated the extent to which perturbation effects cluster within rather than across groups. On average, there is an enrichment of effects within groups - but as with the overall distribution, there is heterogeneity in the distributions of within- and across-group perturbation effects (Fig. 3E). This heterogeneity is driven largely by the modularity term: as increases, the distributions of within- and across- group effects become further separated, even across networks with different numbers of groups (Fig. S2). This effect is based on changes in network architecture: since the strongest perturbation effects are from direct regulators, an increased affinity for within-group regulation (i.e., larger ) means that these effects should also come from members of the same group.

2.5. Impact of network properties

Next, we turned our attention to the relationship between properties of networks (as determined by network generating parameters) and their distributions of perturbation effects. As a summary of this distribution, we compared the number of genes which are hub KOs and hub target genes in each of the 1,920 synthetic GRNs. We say a gene is a hub KO if it introduced a change of in at least 100 other genes when knocked out; analogously, we say a gene is a hub target gene if its expression was changed by upon knockout of at least 100 other genes. Genes whose equilibrium expression was below the magnitude of noise were removed from these counts, as their expression could vary widely across conditions solely due to noise. We find that these statistics behave consistently with respect to the network generating parameters across GRNs (Fig. 4), and that the directions of effect are also similar to the overall number of perturbation effects at this threshold in the network (Fig. S3).

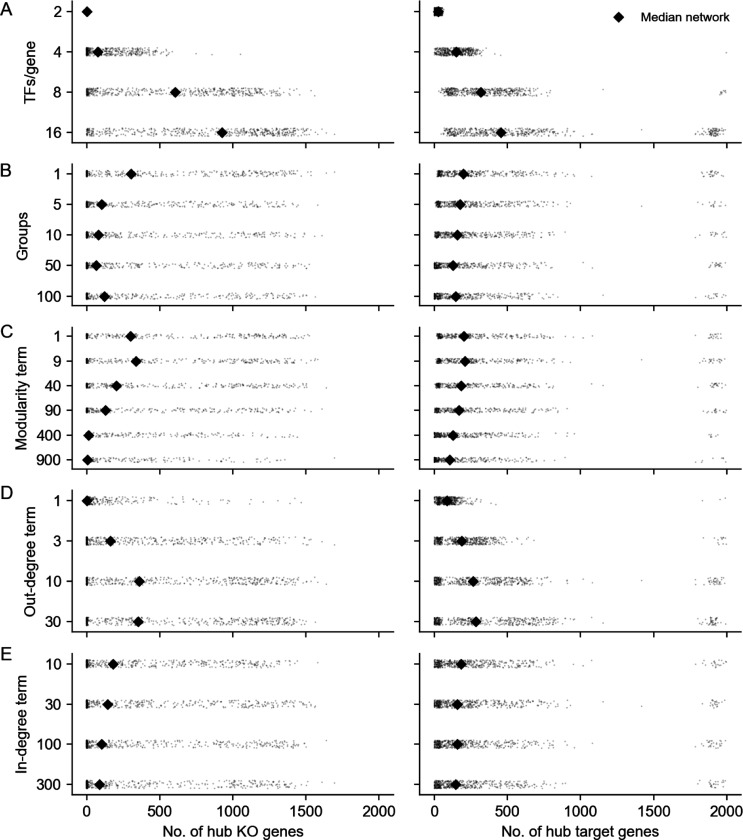

Figure 4: Network properties influence the distribution of perturbation effects.

Counts of genes that are hub knockouts (left) and hub target genes (right) in each synthetic GRN, as a function of network generating parameters. Each panel shows all 1,920 GRNs as individual points, stratified by parameter values. Each distribution is annotated with its mean over GRNs (diamond points).

Graph sparsity has the greatest influence on the number of hub KOs and hub target genes in the GRNs (Fig. 4A). More regulators per gene (large ) tends to translate to more perturbation effects overall, increasing the number of both hub knockouts and target genes. Notably, the effects on regulators and targets are not identical. In denser networks, the median number of hub KOs tends to be larger than the number of hub target genes. However, in a subset of dense networks, most genes in the network are identified as hub target genes. This is related to the absence of stable equilibrium dynamics in the low-noise limit of the gene expression model (Fig. S4), which suggests that as GRNs become more dense and genes are subject to regulation by larger fractions of the network, the system is less likely to be stable. Although this term has a large effect on perturbation effects, we find no obvious interaction between it and other terms in the generating algorithm (Fig. S5).

GRNs with fewer groups (small ) and higher modularity (large ) tend to have fewer hub KOs and hub target genes (Figs. 4B, 4C). The modularity term monotonically increases resilience to perturbation; the group term monotonically decreases it, with the exception of . From the perspective of the network generating algorithm, and are identical; they are equivalent to the algorithm from Bollobas et. al., 2003 [28] and correspond to the dissolution of modular structure with respect to the specified grouping. This is also equivalent to , in which each gene in the network has its own group - this intuition is supported by the remaining trend in (Fig. 4B). Meanwhile, in modular networks (large ), most edges are between members of the same group. This might serve to confine the downstream effects of perturbations to members of the same group, effectively dampening the transcriptional impact of altering the function of master regulators.

When the out-degree distribution of GRNs has a heavier tail (small ), there tend to be many fewer hub knockout genes (Fig. 4D). This relationship is non-linear, and in the most extreme case () there are only 89 hub KOs on average (median 1 hub KO) in the GRN. This effect is a consequence of preferential attachment; as more edges are drawn from master regulators, outgoing regulatory effects also concentrate there as well. Counterintuitively, this parameter exerts influence over the number of hub target genes in the network as well, and in the same direction. When effects are concentrated among a few key regulators, it may simply be less feasible for any gene to be affected by many knockouts since there are fewer genes that have many knockout effects at all. As with the sparsity term, we do not see obvious interactions between this term and others in the generating algorithm (Fig. S6). Meanwhile, when the in-degree distribution of GRNs has a less heavy tail (large ), there are modestly more hub target genes and hub KOs (Fig. 4E). The source of this trend is difficult to intuit, but the effect is very weak.

Looking across parameters, these results reflect a wide range of variation in the susceptibility of GRNs to perturbations as a function of their structural properties. While there is substantial overlap in the distributions of hub KO and target genes across network generating parameters, we find that all parameters except the in-degree term have statistically significant effects on both quantities (p < 0.001 for all tests, Fig. S7 - full results in Tables S1, S2). We estimate these effects with a multiple regression on the logit-transformed fraction of genes in each GRN which are hub KOs or hub target genes (Methods). In total, the network generating parameters explain just under half the variance in the fraction of the GRN which is either a hub KO (model ) or hub target gene (model ). Moreover, there is also a noteworthy thematic consistency across parameters. In all cases, the direction of protective effect from perturbation is also the direction of biological plausibility with respect to our modeling desiderata, where intuition dictates that GRNs should be sparse, modular, and have a heavy-tailed out- but not in-degree distribution.

2.6. Comparing with experimental data

With this intuition about network properties in hand, we now return to real experimental data. Given that synthetic GRNs with quantifiably different structures produce qualitatively different distributions of knockout effects, we next sought to ask whether any of them were also similar to real data. For this, we made use of the subset of perturbations that correspond one-to-one with gene expression readout in a recent Perturb-seq study [9].

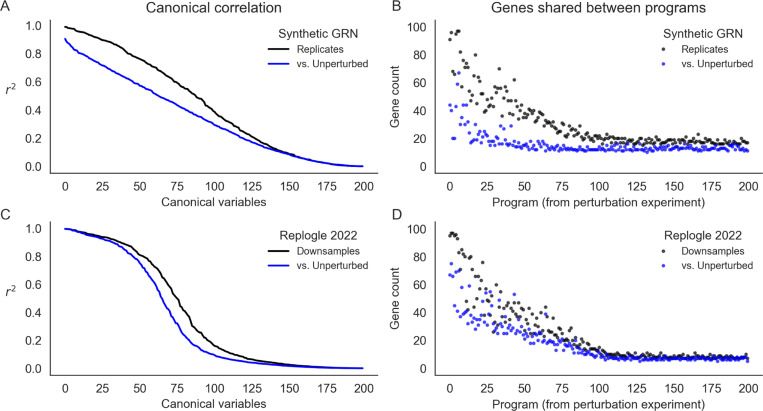

We compared the real and synthetic data using their cumulative distributions of perturbation effects, computed both from the perspective of genes as regulators and as targets of regulation (Fig. 5A-B). Since these data have different numbers of genes that are not lowly expressed, we normalized the number of incoming and outgoing effects to the size of the network (Methods). In the Perturb-seq data, we find noticeable qualitative differences between the distribution of incoming and outgoing effects - these differences are consistent with the notion that GRNs should have master regulators, but not master target genes. In the synthetic data, we find substantial diversity in both distributions across GRNs, including many patterns that seem wholly incompatible with those observed in experiments. Meanwhile, some GRNs seem well-matched to the Perturb-seq results: the distributions closest to the data are highlighted in color in Fig. 5A-B, and correspond to those having the smallest Kolmogorov-Smirnov test statistics when compared with the data distributions (Methods).

Figure 5: Comparing with genome-wide Perturb-seq.

Fraction of GRN that (A) affects each gene when perturbed (outgoing effects) or (B) is affected by other perturbations (incoming effects). In synthetic data, perturbation effects are thresholded at the top 3% of absolute log-fold change values, matching the proportion of pairwise tests from the Perturb-seq data with FDR-corrected Anderson-Darling p < 0.05. Highlighted in color are the four GRNs that best match the Perturb-seq data. (C-G) Distribution of network generating parameters for the 100 GRNs that are best matched to Perturb-seq data (by Kolmogorov-Smirnov -value rank for both distributions in A and B).

Although the focus of our work is not network inference, we do observe a coherent set of properties among the well-matched networks (Fig. 5C-G). Specifically, they share a relatively small number of regulators per gene (two, rather than 16); they have a small number of groups (five to ten rather than one or 100); they are highly modular (large ); and they have a heavy tail in the distribution of out-degree but not in-degree ( near three but on the order of 100). Consistent with previous results, we find these parameter sets to be within a range that matches our motivating intuition about the structural properties of GRNs, and we do not find these properties to have obvious pairwise interactions that affect concordance to data (Fig. S8).

2.7. Challenges and opportunities for inference

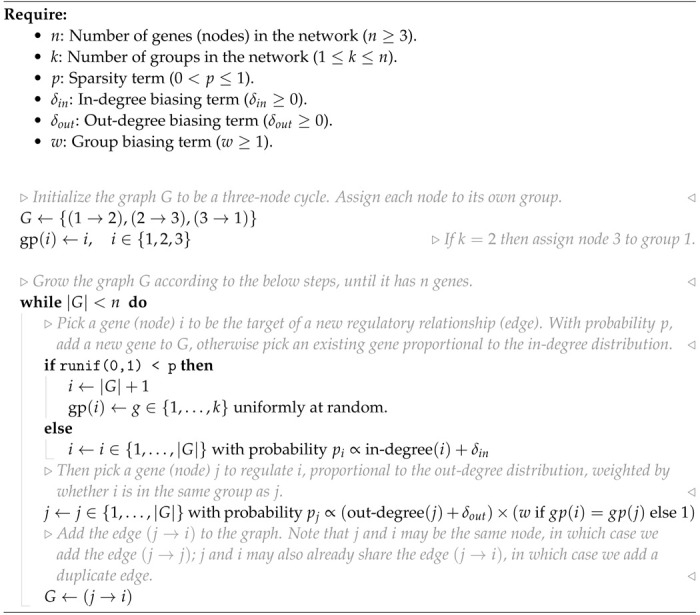

Finally, we highlight the utility of our simulation approach by considering the value of different data sources for inference tasks. For this, we conducted further analysis using an example synthetic GRN whose patterns of knockout effects were well-matched with Perturb-seq data. Specifically, we focused on the recovery of edges, edge weights, and group structure using interventional data (e.g., perturbation effects) and observational data (e.g., coexpression). We made use of perturbation effects as previously described, and further computed pairwise gene coexpression values using additional rounds of forward simulation from the expression model at steady state to approximate the naturally occurring variation across cells (Methods).

2.7.1. Discovering pairwise relationships

Several computational and experimental approaches have been used to estimate pairwise causal relationships between genes, with the ultimate goal of wholesale inference of gene regulatory networks [10,11]. These data and methods are broad in scope, and range from estimating networks using natural variation in gene expression values from bulk tissue [12,23] to fitting complex machine learning models on data from single-cell perturbation experiments [7,15,32]. Here, we consider two descriptive pairwise summary statistics at the gene level - gene coexpression across cells and perturbation effects across gene knockouts - and their connections to edges in a simulated GRN.

In the synthetic data, we find that the distributions of pairwise gene coexpression values and knockout effects both span multiple orders of magnitude (Fig. 6). However, where the distribution of knockout effects differs dramatically between gene pairs that share an edge and those that do not, the distributions of coexpression values have substantial overlap (Fig. 6A,B). This difference in distribution reflects what each statistic tends to measure. Gene perturbation effects tend to flow through the network along edges, and are therefore highly related to the network distance between genes (Fig. 3D). As a special case, this includes whether or not two genes share a direct regulatory relationship in the form of an edge. Meanwhile, strong coexpression is more often due to co-regulation than to there being a direct causal relationship between genes (Fig. S9).

Figure 6: Perturbations more reliably measure fine-scale network structure than coexpression.

(A) Distribution of perturbation effects between pairs of genes in a realistic synthetic GRN that do or do not share an edge. (B) Rank correlation of perturbation effect sizes with edge weights. (C) Distribution of gene coexpression between pairs of genes that do or do not share an edge. (D) Rank correlation of coexpression magnitude with edge weights. (E) Rank correlation between coexpression and perturbation effects (the -axis is clipped at values corresponding to tenfold change).

For gene pairs where there is direct regulation we see that both knockout effects (Fig. 6C) and coexpression (Fig. 6D) have weak correlation with the strength of known regulatory relationships. This reflects that both statistics are imperfect measures of regulatory importance: they are both affected by differences in regulatory architecture across genes (e.g., number of regulators or the intensity of transcriptional buffering). We further see this when directly comparing coexpression and knockout effects between pairs of genes. Across all pairs of genes, these two statistics are uncorrelated - but the two are highly correlated among pairs of genes that share an edge (Fig. 6E). In this way, both perturbation effects and coexpression contain similar information about edges in the GRN - but coexpression also measures non-causal relationships between genes, like coregulation, and is therefore systematically uncorrelated with perturbation effects even in real data (Fig. S10).

Together, these results underscore the importance of interventional data for inferring network edges. They also highlight limitations in the use of coexpression networks. But neither form of data are a panacea, and care is warranted in the analysis of experimental data and in the development of structure learning algorithms. For example, sorting and thresholding perturbation effects has been shown to be a high-quality baseline for network reconstruction [10,11] (one that we also use to compare networks in Fig. 5). However, false negative effects can arise when regulatory effects are weak due to transcriptional buffering, and false positive edges can be drawn where effects are amplified by mediation. This suggests that structure learning algorithms could benefit from modeling the transcriptional state of individual genes (i.e., level of buffering at baseline) and from approaches to explicitly resolve direct versus mediated effects.

2.7.2. Discovering group structure

Recent work has also attempted to identify trait-relevant sets of genes that act through coordinated effects in a particular cell type. These groups are sometimes called programs, and it is common to use dimensionality reduction techniques like singular value decomposition (SVD) or non-negative matrix factorization (NMF) on single-cell expression values to identify groups [8, 33]. In our example synthetic GRN and in the Perturb-seq data, we used a variant of this approach based on truncated SVD (TSVD) to assign genes to programs. As input, we used the set of 75,328 unperturbed cells from real data [9] and downsamples of the entire experiment to the same number of cells; for the synthetic data, we simulated the same number of cells from baseline or baseline and perturbed conditions, mimicking the composition of the real experiments. From these data, we computed the first 200 singular vectors of the expression data, using each vector to define a “program” of 100 genes with the largest loadings (Methods).

Here, we assess the extent to which these programs and their constituent singular vectors replicate across experiments from perturbed and unperturbed conditions. We use canonical correlation analysis (CCA) to assess the similarity of the singular vectors. This technique seeks to find rotations , of inputs , such that the correlation between and is maximized - the transformed inputs are called canonical variables, and we report their correlations when the inputs , are gene singular vectors from different experimental conditions (Fig. 7A). In the synthetic GRN, the canonical correlation steadily declines over the 200 dimensions of input. Notably, the magnitude of this correlation is similar when perturbation data are compared to a replicate or to unperturbed data. Even though this correlation is modest by the 100th set of canonical variables, this trend suggests consistency between lower-dimensional representations of expression data regardless of cell state (perturbed or unperturbed).

Figure 7: Learned representations are similar between control and intervened-upon cells.

Concordance between low-rank representations of single cells in a simulated GRN (top row) and downsamples of experimental data (Replogle et. al., 2022). (A) Correlation between the first 200 canonical variables (linear combinations of singular vectors) between samples of 75,328 baseline or baseline+perturbed cells from a synthetic GRN. (B) Overlap in gene programs inferred from singular value decomposition of single cell expression data. Programs are defined using singular vectors of gene expression from perturbed cells (x-axis), and intersected with programs analogously defined from baseline and additional perturbed cells. (C) Canonical correlation of control cells and two downsamples of the entire Perturb-seq experiment (Replogle et. al., 2022). (D) Overlap in gene programs computed from control and downsamples of experimental Perturb-seq data.

At this sample size (75,238 cells) for the synthetic GRN, however, there is a difference in the reproducibility of individual programs across data sources (perturbed or unperturbed; Fig. 7B). For this, we compare programs computed from one perturbation experiment (the “reference”) to programs from a replicate perturbation experiment and to programs from unperturbed cells. For each program from the reference, we report the maximum number of genes which overlap any other program computed from each of the other data sources. The first few programs (corresponding to the first few singular vectors) are highly reproducible in the replicate perturbation data. This overlap steadily declines to no effective overlap after the first ~100 programs. Despite similar canonical correlation, the unperturbed data do not replicate the same programs to the same extent - there is modest overlap with the first few programs from the perturbation data, and this overlap decays very quickly (after the first ~20 programs). When assessing these programs with respect to the () ground truth groups of this network, we find that nearly all true groups are at least modestly well represented by the top 50 programs from both data sources. However, the programs from the perturbation data much better represent the true groups than those from the unperturbed data (Fig. S11).

We find similar results in the experimental Perturb-seq data. Here, however, the first few canonical variables are highly correlated, and the canonical correlation drops off precipitously between the 50th and 100th canonical variables (Fig. 7C). We also find that programs computed from two downsamples of the entire experiment are about as reproducible as those from the simulated data, but are slightly more similar to the programs from control cells (Fig. 7D). While this may reflect some aspect of GRN structure, it is also related to the number of cells in the input data and magnitude of intrinsic gene expression noise. Both tend to reduce canonical correlations and the reproducibility of gene programs. In the real data, lowering the number of cells input to TSVD lowers both canonical correlation and program similarity compared to the entire experiment; relatedly, we find that the 75,328 control cells exhibit comparable performance as 30,000 perturbed cells at recovering representations from the full data (Fig. S12). In the synthetic networks, we find that altering the level of global transcriptional noise () alters the concordance between replicates and between perturbed and unperturbed cell states - at high levels of noise, there is little practical difference between programs derived from perturbed or unperturbed cells, but with low levels of noise, the programs from perturbation data are markedly different (Fig. S13). For presentation in Fig. 7A-B, we chose a level of noise () that recapitulated the qualitative behavior of the real data.

Taken together, these findings seem to suggest that the leading variance components of single-cell gene expression data will be similar across perturbed and unperturbed conditions, unless the magnitude of perturbation effects is greater than the level of intrinsic transcriptional noise. Relatedly, we suspect that dimensionality reduction techniques will produce concordant representations of both perturbed and unperturbed data under similar conditions, and that this similarity can propagate into gene sets derived from these representations. This begs a key line of questions for future work: where, and how, do molecular perturbations add value in uncovering the sets of genes that are collectively important for cell-type and disease-specific processes? In light of the number of cells required for reliable inference at this scale, we anticipate that large atlas-style cell reference data (e.g., the Human Cell Atlas and similar resources [34–36]) may provide a critical opportunity to reveal global aspects of network structure.

3. Discussion

In this work, we have presented a new model to simulate gene regulatory networks, with particular emphasis on generating networks with realistic structural properties. We note that this algorithm may be of interest in contexts outside gene regulation - namely, in any study of scale-free networks with group-like structure. We also anticipate that our technique to simulate gene expression from arbitrary networks may be useful for model development and benchmarking, or in other studies where network structures are known or may be hypothesized.

Here, we have highlighted the utility of our approach with simulations to develop intuition about key properties of GRNs, particularly in the context of molecular perturbations and coexpression data. While our study design draws inspiration from recent works using Perturb-seq, we also acknowledge limitations to the realism of our model. In focusing on the equilibrium dynamics of cells of one type, we have ignored developmental trajectories and cell-type heterogeneity within tissues, both of which modify our assumptions about regulatory network structure. For the sake of computational efficiency in quantifying expression for thousands of genes, we have also eschewed detailed models of the biological synthesis and experimental measurement of cellular RNAs: in future work where it is critical to match distributions of count data from experiments, we encourage modeling these complexities. Similar considerations may also be necessary for the application of our approach beyond modeling knockouts - for example, in studying genetic variation which affects gene expression.

Independently, our results suggest that the space of realistic network structures may be quite limited, and that it may be useful to consider this prior information in various inference settings. While our approach as outlined in this work is not optimized for inference, the algorithms we describe are generative, which means they could be used directly in applications for simulation-based inference. Although we used experimental data from K562 cells in this study, we anticipate the high-level structural properties of GRNs will generalize across different cell types. Moreover, we observed through simulations that hallmark properties of GRNs tend to confer resilience to perturbations across multiple measures, reducing the number of sensitive target genes and large-effect master regulators. We do not suspect this is an incidental finding in light of the selection to which GRNs are subject over evolutionary time, and suspect that considering this type of constraint may be insightful for future work.

Looking forward, we also anticipate that broad observational studies of diverse cell types and deep interventional studies of specific cell lines will both be useful in disentangling the basis of complex traits in regulatory networks. However, a key question remains in determining how best to leverage these data types towards a unifying understanding of cell biology. We suggest that a scaffolded approach to this problem may be useful. Where the scale of cell atlases presents a unique opportunity to learn transferable representations of cells across developmental states and tissues, perhaps including the discovery of cellular programs, these data are limited in their ability to resolve interactions between single genes. This is where perturbation data - however limited to specific cell types - retain critical value. Even as existing network inference algorithms experience computational challenges in genome-scale applications, the modularity of GRNs suggests that piecewise inference strategies may be viable until these challenges are resolved. As efforts like these enhance our mechanistic understanding of biological networks, we hope that our work serves to provide general intuition on their salient structural properties. We are optimistic that understanding these principles will be useful for an array of challenges and highlight future opportunities in functional genomics.

5. Methods

5.1. Graph generating parameters

5.1.1. Sampling

A full description of the graph generating algorithm can be found in the main text, with the exact procedure given by Algorithm 1. Here, we provide additional intuition on its generating parameters, and detail the scheme for sampling them.

In motivating our study, we highlight several key properties of gene regulatory networks: briefly, these are sparsity, modular groups, and asymmetric power-law degree distributions. In Fig. 2 we show that these properties are individually tuned by parameters of our generating algorithm. When generating synthetic networks, we sample values for each parameter across one to three orders of magnitude. To cover these ranges, the values are spaced geometrically, and the extrema are chosen to overlap values which we believe to be consistent with biological intuition for a network of n = 2,000 genes.

Sparsity term .

Number of groups .

Modularity term .

In-degree uniformity term .

Out-degree uniformity term .

The sparsity term is sampled so that the average number of regulators per gene spans from low single-digits to low double-digits. The number of groups is sampled from , which corresponds to a rough lower limit on the size of groups (20 genes), to , which corresponds to the dissolution of group structure and is equivalently to the algorithm from Bollobas et. al., 2003 [28]. The modularity / within-group affinity term w is sampled in a similar way: also corresponds to the dissolution of group structure, again giving the algorithm from Bollobas et. al., 2003, and gives an upper limit on modularity with respect to groups . The in- and out-degree uniformity terms , are both sampled across orders of magnitude. The bounds for the in-degree term to be larger in magnitude, corresponding to the assumption that the in-degree distribution should be less dispersed (i.e., have fewer hubs) than the out-degree distribution, but the range of values is intentionally overlapping.

To produce the set of 1,920 GRNs used in the study, we simulated one network with every possible combination of parameters listed above: this totals 4 × 5 × 6 × 4 × 4 = 1,920 networks.

5.1.2. Relationship to perturbation effects

We performed a regression analysis to estimate the effect of each graph generating parameter on the distribution of perturbation effects in the synthetic GRNs. Specifically, we regressed the logit-transformed fraction of genes in each GRN that are hub regulators or hub targets according to the following equation:

where is the inverse of the sparsity term, is a transformed number of groups (GRNs with group are treated as GRNs with groups; see Fig. 4), and , , and are as described above. The dependent variable of the regression, is either the fraction of genes in the GRN which are hub regulators or hub targets. These quantities are analyzed separately. Full results for each regression are in Table S1 and S2.

5.2. Expression simulation

5.2.1. Parameter selection

An overview of our gene expression model can be found in the main text. Here, we describe the sampling strategy for the parameters of the model and give additional information on their interpretation. Recall that the expression, , of gene is influenced by the following variables:

the baseline transcription rate ,

the degradation rate of RNAs ,

effects from regulating genes, ,

expression noise, with magnitude .

Note that and are properties of genes (nodes); is a property of regulatory interactions (edges); and s is a global parameter for the entire network. During forward simulation from the discretized stochastic differential equation, we take steps of size as in prior work [31], and update expression values from (at time ) to (at time ) according to the following:

In the deterministic limit, this results in an equation satisfied by any potential steady-state

where is the logistic sigmoid. When setting up the model given a graph structure from our generating algorithm, we simulate expression parameters according to the following scheme:

Beta(2, 8), under the assumption that genes have low but non-zero expression at baseline, in the absence of regulation - i.e., is small. Here, is again the logistic sigmoid (expit) function.

Beta(8, 2), under the assumption that the maximum expression of each gene, , tends to be of a similar order of magnitude (close to one), but can vary. To prevent steady-state gene expression from being excessively large (by having small ), we hard clip to be at least as large as .

, under the assumptions that regulatory interactions have a minimum strength . Here, is the probability that a regulator acts as an activator rather than a repressor.

, fixed across all genes in the networks. This value is chosen to be as large as possible without limiting detection of very small KO effects. At this level of noise, we can reliably detect log2 fold-changes down to the order of 10−4 (Fig. 3D).

5.2.2. Forward simulation

Once parameters of the expression model are chosen for a specific GRN, we initialize the expression of each gene and conduct forward simulation according to the update rule given in the previous section, which is also described in the main text.

When performing forward simulations, we initialize all genes in the network to have zero expression. We then perform iterations of forward simulation as a “burn-in”. After burn-in, we check whether the system of equations has converged to a steady-state by measuring differences in the time averaged mean after the burn-in. Specifically, we compute the maximum absolute log2 fold-change of non-lowly expressed genes in the network

where indexes genes whose running mean expression at the current iteration is above the noise magnitude , and is the step size to check for convergence. Mathematically, the running mean in the numerator is

where is the expression of gene at iteration . The denominator is analogously the running mean expression of gene the last time we checked for convergence.

If this maximum log fold-change is below 10−3, we say the system has converged, and take the vector as the steady-state expression of all genes in the network. We perform this check every iterations, up to a maximum iterations. We take the vector as an approximate equilibrium state if the system did not fully converge.

We further assess the stability of the steady-state of each GRN by performing a linear stability analysis of the expression model in the limit . In this limit, the expression model takes the form of an ordinary differential equation (ODE). The stability of an equilibrium point of this ODE can be assessed using the eigenvalues of the Jacobian matrix evaluated at − if all of the eigenvalues have a negative real part, the system is said to be stable [37]. Here, we have

where the (, )th entries correspond to the partial derivative of the deterministic part of the expression function of gene , with respect to the expression of gene . For our model,

where the first term is zero for and the second term is zero for .

5.2.3. Perturbation experiments

For each synthetic GRN in this study, we perform a systematic assessment of gene-level perturbation effects. We start with baseline steady-state expression values of an instantiated GRN, with edges drawn according to the generating algorithm and expression parameters chosen as described above. Then, separately for each gene , we perform a knockout by setting for all other genes - that is, we nullify its outgoing effects. This perturbs the equilibrium dynamics of the expression SDE, and we conduct additional rounds of forward simulation using the modified parameters until a new expression steady-state is reached. We perform the same procedure with burn-in and convergence checks as in the previous section.

We summarize the effect that perturbing gene has on gene using a log fold-change in expression values:

where is the steady-state expression of gene under baseline conditions, and is its steady-state expression when gene has been perturbed (both computed as described above).

5.2.4. Baseline coexpression

Since gene coexpression is also commonly used to describe pairwise relationships between genes, we further use the expression SDE to compute the gene-level correlations at steady-state in the synthetic GRNs. Under baseline conditions, we perform additional forward time steps, from which we sample “baseline cells” by taking the gene expression value at every step. The noise inherent to the model () produces sufficient variability in this cell population to compute gene-level correlations. We measure the coexpression of genes all and (not filtering out lowly expressed genes) using the Pearson correlation between and across cells.

5.3. Perturb-seq data

5.3.1. Data processing

To motivate aspects of our work, and to assess our simulations in context of experimental data, we make use of summary statistics from a recent genome-scale Perturb-seq study [9]. Specifically, we used pairwise FDR-corrected Anderson-Darling p-values (from the supplemental file, “anderson-darling p-values, BH-corrected.csv.gz”) as ameasure of the expression response to single-gene perturbations. Throughout this work, we used a single large subset of these data corresponding to the set of genes whose expression was subject to both experimental perturbation and measurement in response. We matched perturbations to target genes using the provided ENSEMBL gene IDs, subsetting to perturbations which targeted any primary transcript. In (rare) cases where there was more than one such perturbation, we used the perturbation which induced a statistically significant change in the expression of the target transcript. We note that target genes with expression levels below 0.25 UMI per cell were not included in this file, which further limited the genes included in our analysis. We performed a similar post-processing step when analyzing results from the synthetic networks, limiting analysis to genes whose steady-state expression was above the level of intrinsic noise (i.e., ).

5.3.2. Comparing with simulations

We compared the distribution of perturbation effects (incoming and outgoing) at the gene level when assessing similarities between the real and simulated networks. For this, we thresholded pairwise effects from the experimental data at FDR-corrected Anderson-Darling , saying that effects at this significance level constitute biologically meaningful effects, and others do not. At this threshold, we find that 3.16% of pairwise effects are called significant. For a given gene , we then computed two values: the fraction of the network that is affected when is perturbed (i.e., the fraction of genes for which ), and the fraction of the network that affects when perturbed (i.e., the fraction of genes for which ).

We then compared these distributions to analogous quantities derived from the synthetic GRNs. Since the experimental data are affected by imperfect statistical power, we set the discovery rate to be equal across all synthetic GRNs, doing so by taking the top 3.16% of pairwise perturbation effects (i.e., , where varies) as “statistically significant”. For each gene in a synthetic GRN, we computed the fraction of the network which is affected when is perturbed (i.e., the fraction of genes for which ), and the fraction of the network which affects when perturbed (i.e., the fraction of genes for which . Note that in each GRN in Fig. 5, we remove lowly-expressed genes, with baseline expression . This means that the number of genes analyzed is not exactly the same for all GRNs - we therefore normalized the distribution of perturbation effects by the number of genes that are included in the analysis (i.e., those not lowly-expressed).

Finally, we compared the distributions of incoming and outgoing perturbation effects using the Kolmogorov-Smirnov test as implemented in scipy (scipy.stats.ks_2samp) [38]. This is a nonparametric test for equality of distribution between two samples, which measures the maximum difference between cumulative distribution functions. To select the synthetic GRNs which are closest to the real data, we rank GRNs by largest KS -values with each distribution (incoming and outgoing), then find the smallest rank such that GRNs are in the top of all GRNs compared to both distributions.

5.4. Gene programs

5.4.1. Truncated singular value decomposition

We used truncated singular value decomposition (TSVD) to cluster genes into “programs” based on their expression profiles in cells from both perturbed and unperturbed settings, using the TruncatedSVD function from scikit-learn [39]. Briefly, TSVD is an algorithmic modification of singular value decomposition (SVD), which produces orthogonal singular vectors corresponding to the directions of maximum variance in the input data. In TSVD, only the top vectors are computed, which results in faster computational runtimes for our analysis.

We assembled separate input datasets consisting of perturbed and unperturbed cells for both synthetic data and using downsamples of the experimental Perturb-seq data. For the synthetic data, we simulated 75,328 single cells from baseline conditions by forward simulation from the expression fixed point of the GRN, sampling cells from every forward time step. We also simulated the expression of an identical number of cells under perturbed conditions, modeling the split of cells after the real Perturb-seq study: roughly 8.1% of the cells corresponded to baseline conditions, and the remainder were assigned uniformly at random to a knockout condition for each of the 2,000 genes in the GRN (this corresponds to 35 cells per KO on average). We do not filter out lowly expressed genes for this analysis.

For the real data, we used single-cell expression data of the 5,247 genes in our data subset from all 75,328 control cells as measurements of the GRN in unperturbed conditions. Then, to avoid effects from varying the size of the input cell population, we performed two independent (random) downsamples of the entire experiment to the same number of cells as measurements of the GRN in perturbed conditions.

With each of these input datasets , we normalized each gene to have zero mean and unit variance, and then performed TSVD to compute the top dimensions of expression variability. This resulted in singular matrices for cells () and genes (), and a diagonal matrix of singular values, . The product of these matrices approximates the input:

and we used the gene loadings (columns of the gene singular matrix ) to define gene programs. Each “program” corresponds one-to-one with one of the gene singular vectors , and is the set of 100 genes with the largest squared entries of .

5.4.2. Similarity across datasets

We assess the similarity of gene programs from two different experiments in two distinct ways: one using the set of genes which constitutes each program, and the other using the singular vector used to define it. When comparing programs from one (reference) experiment to programs from another experiment, we report the maximum overlap between each program in the reference set to any program in other set - that is,

which quantifies the extent to which each program is reproduced by the other experiment. When comparing gene singular vectors from the two experiments, we make use of the fact that the SVD of their dot product is a well-characterized mathematical procedure called canonical correlation analysis (CCA) [40]. The top components of this decomposition are called canonical variables, and they each represent the axes of rotation which maximize correlation between variables in the input data. Here, we report the canonical correlation (singular values from the second SVD step) for the first 200 canonical variables, to quantify the extent to which the lower-dimensional representations of the inputs are consistent with one another.

Supplementary Material

4. Acknowledgements

We would like to thank Mineto Ota, Emma Dann, Courtney Smith, Garyk Brixi, Josh Weinstock, and members of the Pritchard Lab at Stanford University for helpful comments and discussion related to this work. M.A. acknowledges support from a Microsoft Research PhD Fellowship, and from the National Library of Medicine (NLM) under training grant T15LM007033. This work was supported by the National Human Genome Research Institute (NHGRI) under grants R01HG008140 and U01HG012069 (J.K.P.) and by the National Institute of General Medical Sciences (NIGMS) under grant R01GM115889 (G.S.).

References

- [1].Papalexi Efthymia and Satija Rahul. Single-cell RNA sequencing to explore immune cell heterogeneity. Nature Reviews Immunology, 18(1):35–45, January 2018. Publisher: Nature Publishing Group. [DOI] [PubMed] [Google Scholar]

- [2].La Manno Gioele, Soldatov Ruslan, Zeisel Amit, Braun Emelie, Hochgerner Hannah, Petukhov Viktor, Lidschreiber Katja, Kastriti Maria E., Lönnerberg Peter, Furlan Alessandro, Fan Jean, Borm Lars E., Liu Zehua, van Bruggen David, Guo Jimin, He Xiaoling, Barker Roger, Sundström Erik, Castelo-Branco Gongalo, Cramer Patrick, Adameyko Igor, Linnarsson Sten, and Kharchenko Peter V. RNA velocity of single cells. Nature, 560(7719):494–498, August 2018. Publisher: Nature Publishing Group. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Bergen Volker, Lange Marius, Peidli Stefan, Wolf F. Alexander, and J Fabian. Theis. Generalizing RNA velocity to transient cell states through dynamical modeling. Nature Biotechnology, 38(12):1408–1414, December 2020. Publisher: Nature Publishing Group. [DOI] [PubMed] [Google Scholar]

- [4].Dixit Atray, Parnas Oren, Li Biyu, Chen Jenny, Fulco Charles P., Jerby-Arnon Livnat, Marjanovic Nemanja D., Dionne Danielle, Burks Tyler, Raychowdhury Raktima, Adamson Britt, Norman Thomas M., Lander Eric S., Weissman Jonathan S., Friedman Nir, and Regev Aviv. Perturb-Seq: Dissecting Molecular Circuits with Scalable Single-Cell RNA Profiling of Pooled Genetic Screens. Cell, 167(7):1853–1866.e17, December 2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Replogle Joseph M., Norman Thomas M., Xu Albert, Hussmann Jeffrey A., Chen Jin, Cogan J. Zachery, Meer Elliott J., Terry Jessica M., Riordan Daniel P., Srinivas Niranjan, Fiddes Ian T., Arthur Joseph G., Alvarado Luigi J., Pfeiffer Katherine A., Mikkelsen Tarjei S., Weissman Jonathan S., and Adamson Britt. Combinatorial single-cell CRISPR screens by direct guide RNA capture and targeted sequencing. Nature Biotechnology, 38(8):954–961, August 2020. Publisher: Nature Publishing Group. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Freimer Jacob W., Shaked Oren, Naqvi Sahin, Sinnott-Armstrong Nasa, Kathiria Arwa, Garrido Christian M., Chen Amy F., Cortez Jessica T., Greenleaf William J., Pritchard Jonathan K., and Marson Alexander. Systematic discovery and perturbation of regulatory genes in human T cells reveals the architecture of immune networks. Nature Genetics, 54(8):1133–1144, August 2022. Number: 8 Publisher: Nature Publishing Group. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [7].Weinstock Joshua S., Arce Maya M., Freimer Jacob W., Ota Mineto, Marson Alexander, Battle Alexis, and Pritchard Jonathan K. Gene regulatory network inference from CRISPR perturbations in primary CD4+ T cells elucidates the genomic basis of immune disease. bioRxiv, page 2023.09.17.557749, October 2023. [DOI] [PubMed]

- [8].Schnitzler Gavin R., Kang Helen, Fang Shi, Angom Ramcharan S., Lee-Kim Vivian S., Ma X. Rosa, Zhou Ronghao, Zeng Tony, Guo Katherine, Taylor Martin S., Vellarikkal Shamsudheen K., Barry Aurelie E., Sias-Garcia Oscar, Bloemendal Alex, Munson Glen, Guckel-berger Philine, Nguyen Tung H., Bergman Drew T., Hinshaw Stephen, Cheng Nathan, Cleary Brian, Aragam Krishna, Lander Eric S., Finucane Hilary K., Mukhopadhyay Debabrata, Gupta Rajat M., and Engreitz Jesse M. Convergence of coronary artery disease genes onto endothelial cell programs. Nature, 626(8000):799–807, February 2024. Number: 8000 Publisher: Nature Publishing Group. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Replogle Joseph M., Saunders Reuben A., Pogson Angela N., Hussmann Jeffrey A., Lenail Alexander, Guna Alina, Mascibroda Lauren, Wagner Eric J., Adelman Karen, Lithwick-Yanai Gila, Iremadze Nika, Oberstrass Florian, Lipson Doron, Bonnar Jessica L., Jost Marco, Norman Thomas M., and Weissman Jonathan S. Mapping information-rich genotype-phenotype landscapes with genome-scale Perturb-seq. Cell, 185(14):2559–2575.e28, July 2022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Chevalley Mathieu, Sackett-Sanders Jacob, Roohani Yusuf, Notin Pascal, Bakulin Artemy, Brzezinski Dariusz, Deng Kaiwen, Guan Yuanfang, Hong Justin, Ibrahim Michael, Kotlowski Wojciech, Kowiel Marcin, Misiakos Panagiotis, Nazaret Achille, Markus Püschel Chris Wendler, Mehrjou Arash, and Schwab Patrick. The CausalBench challenge: A machine learning contest for gene network inference from single-cell perturbation data, August 2023. arXiv:2308.15395 [cs, q-bio].

- [11].Chevalley Mathieu, Roohani Yusuf, Mehrjou Arash, Leskovec Jure, and Schwab Patrick. CausalBench: A Large-scale Benchmark for Network Inference from Single-cell Perturbation Data, July 2023. arXiv:2210.17283 [cs].

- [12].Friedman Nir, Linial Michal, Nachman Iftach, and Pe’er Dana. Using Bayesian networks to analyze expression data. In Proceedings of the fourth annual international conference on Computational molecular biology, RECOMB ‘00, pages 127–135, New York, NY, USA, April 2000. Association for Computing Machinery. [DOI] [PubMed] [Google Scholar]

- [13].Heckerman David. A Tutorial on Learning with Bayesian Networks. In Holmes Dawn E. and Jain Lakhmi C., editors, Innovations in Bayesian Networks: Theory and Applications, pages 33–82. Springer, Berlin, Heidelberg, 2008. [Google Scholar]

- [14].Wang Yuhao, Solus Liam, Yang Karren Dai, and Uhler Caroline. Permutation-based Causal Inference Algorithms with Interventions, November 2017. arXiv:1705.10220 [stat].

- [15].Lopez Romain, Hütter Jan-Christian, Pritchard Jonathan K., and Regev Aviv. Large-Scale Differentiable Causal Discovery of Factor Graphs, October 2022. arXiv:2206.07824 [cs, q-bio, stat].

- [16].Yao Douglas, Binan Loic, Bezney Jon, Simonton Brooke, Freedman Jahanara, Frangieh Chris J., Dey Kushal, Geiger-Schuller Kathryn, Eraslan Basak, Gusev Alexander, Regev Aviv, and Cleary Brian. Scalable genetic screening for regulatory circuits using compressed Perturb-seq. Nature Biotechnology, pages 1–14, October 2023. Publisher: Nature Publishing Group. [DOI] [PMC free article] [PubMed]

- [17].Milo R., Shen-Orr S., Itzkovitz S., Kashtan N., Chklovskii D., and Alon U. Network Motifs: Simple Building Blocks of Complex Networks. Science, 298(5594):824–827, October 2002. Publisher: American Association for the Advancement of Science. [DOI] [PubMed] [Google Scholar]

- [18].Shen-Orr Shai S., Milo Ron, Mangan Shmoolik, and Alon Uri. Network motifs in the transcriptional regulation network of Escherichia coli. Nature Genetics, 31(1):64–68, May 2002. Publisher: Nature Publishing Group. [DOI] [PubMed] [Google Scholar]

- [19].Seshadhri C., Sharma Aneesh, Stolman Andrew, and Goel Ashish. The impossibility of low-rank representations for triangle-rich complex networks. Proceedings of the National Academy of Sciences, 117(11):5631–5637, March 2020. Publisher: Proceedings of the National Academy of Sciences. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [20].Balaji S., Iyer Lakshminarayan M., Aravind L., and Babu M. Madan. Uncovering a Hidden Distributed Architecture Behind Scale-free Transcriptional Regulatory Networks. Journal of Molecular Biology, 360(1):204–212, June 2006. [DOI] [PubMed] [Google Scholar]

- [21].Gerstein Mark B., Kundaje Anshul, Hariharan Manoj, Landt Stephen G., Yan Koon-Kiu, Cheng Chao, Jasmine Mu Xinmeng, Khurana Ekta, Rozowsky Joel, Alexander Roger, Min Renqiang, Alves Pedro, Abyzov Alexej, Addleman Nick, Bhardwaj Nitin, Boyle Alan P., Cayting Philip, Charos Alexandra, Chen David Z., Cheng Yong, Clarke Declan, Eastman Catharine, Euskirchen Ghia, Frietze Seth, Fu Yao, Gertz Jason, Grubert Fabian, Harmanci Arif, Jain Preti, Kasowski Maya, Lacroute Phil, Leng Jing, Lian Jin, Monahan Hannah, O’Geen Henriette, Ouyang Zhengqing, Partridge E. Christopher, Patacsil Dorrelyn, Pauli Florencia, Raha Debasish, Ramirez Lucia, Reddy Timothy E., Reed Brian, Shi Minyi, Slifer Teri, Wang Jing, Wu Linfeng, Yang Xinqiong, Yip Kevin Y., Zilberman-Schapira Gili, Batzoglou Serafim, Sidow Arend, Farnham Peggy J., Myers Richard M., Weissman Sherman M., and Snyder Michael. Architecture of the human regulatory network derived from ENCODE data. Nature, 489(7414):91–100, September 2012. Publisher: Nature Publishing Group. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [22].Singh Arun J., Ramsey Stephen A., Filtz Theresa M., and Kioussi Chrissa. Differential gene regulatory networks in development and disease. Cellular and Molecular Life Sciences, 75(6):1013–1025, March 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [23].Langfelder Peter and Horvath Steve. WGCNA: an R package for weighted correlation network analysis. BMC Bioinformatics, 9(1):559, December 2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [24].Holland Paul W., Laskey Kathryn Blackmond, and Leinhardt Samuel. Stochastic blockmodels: First steps. Social Networks, 5(2):109–137, June 1983. [Google Scholar]

- [25].Funke Thorben and Becker Till. Stochastic block models: A comparison of variants and inference methods. PLOS ONE, 14(4):e0215296, April 2019. Publisher: Public Library of Science. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [26].Barabási Albert-László and Albert Réka. Emergence of Scaling in Random Networks. Science, 286(5439):509–512, October 1999. Publisher: American Association for the Advancement of Science. [DOI] [PubMed] [Google Scholar]

- [27].Holme Petter and Kim Beom Jun. Growing scale-free networks with tunable clustering. Physical Review E, 65(2):026107, January 2002. Publisher: American Physical Society. [DOI] [PubMed] [Google Scholar]

- [28].Bollobás Béla, Borgs Christian, Chayes Jennifer T, and Riordan Oliver. Directed scale-free graphs. In SODA, volume 3, pages 132–139,2003. [Google Scholar]

- [29].Watts Duncan J. and Strogatz Steven H. Collective dynamics of ‘small-world’ networks. Nature, 393(6684):440–442, June 1998. Publisher: Nature Publishing Group. [DOI] [PubMed] [Google Scholar]

- [30].Cohen Reuven and Havlin Shlomo. Scale-Free Networks Are Ultrasmall. Physical Review Letters, 90(5):058701, February 2003. Publisher: American Physical Society. [DOI] [PubMed] [Google Scholar]

- [31].Dibaeinia Payam and Sinha Saurabh. SERGIO: A Single-Cell Expression Simulator Guided by Gene Regulatory Networks. Cell Systems, 11(3):252–271.e11, September 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [32].Zheng Xun, Aragam Bryon, Ravikumar Pradeep, and Xing Eric P. DAGs with NO TEARS: Continuous Optimization for Structure Learning, November 2018. arXiv:1803.01422 [cs, stat].

- [33].Kotliar Dylan, Veres Adrian, Nagy M Aurel, Tabrizi Shervin, Hodis Eran, Melton Douglas A, and Sabeti Pardis C. Identifying gene expression programs of cell-type identity and cellular activity with single-cell RNA-Seq. eLife, 8:e43803, July 2019. Publisher: eLife Sciences Publications, Ltd. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [34].Regev Aviv, Teichmann Sarah A, Lander Eric S, Amit Ido, Benoist Christophe, Birney Ewan, Bodenmiller Bernd, Campbell Peter, Carninci Piero, Clatworthy Menna, Clevers Hans, Deplancke Bart, Dunham Ian, Eberwine James, Eils Roland, Enard Wolfgang, Farmer Andrew, Fugger Lars, Göttgens Berthold, Hacohen Nir, Haniffa Muzlifah, Hemberg Martin, Kim Seung, Klenerman Paul, Kriegstein Arnold, Lein Ed, Linnarsson Sten, Lundberg Emma, Lundeberg Joakim, Majumder Partha, Marioni John C, Merad Miriam, Mhlanga Musa, Nawijn Martijn, Netea Mihai, Nolan Garry, Pe’er Dana, Phillipakis Anthony, Ponting Chris P, Quake Stephen, Reik Wolf, Rozenblatt-Rosen Orit, Sanes Joshua, Satija Rahul, Schumacher Ton N, Shalek Alex, Shapiro Ehud, Sharma Padmanee, Shin Jay W, Stegle Oliver, Stratton Michael, Stubbington Michael J T., Theis Fabian J, Uhlen Matthias, van Oudenaarden Alexander, Wagner Allon, Watt Fiona, Weissman Jonathan, Wold Barbara, Xavier Ramnik, Yosef Nir, and Human Cell Atlas Meeting Participants. The Human Cell Atlas. eLife, 6:e27041, December 2017. Publisher: eLife Sciences Publications, Ltd. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [35].THE TABULA SAPIENS CONSORTIUM. The Tabula Sapiens: A multiple-organ, single-cell transcriptomic atlas of humans. Science, 376(6594):eabl4896, May 2022. Publisher: American Association for the Advancement of Science. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [36].Megill Colin, Martin Bruce, Weaver Charlotte, Bell Sidney, Prins Lia, Badajoz Seve, Mc-Candless Brian, Pisco Angela Oliveira, Kinsella Marcus, Griffin Fiona, Kiggins Justin, Haliburton Genevieve, Mani Arathi, Weiden Matthew, Dunitz Madison, Lombardo Maximilian, Huang Timmy, Smith Trent, Chambers Signe, Freeman Jeremy, Cool Jonah, and Carr Ambrose. Cellxgene: a performant, scalable exploration platform for high dimensional sparse matrices, April 2021. Pages: 2021.04.05.438318 Section: New Results.

- [37].Strogatz Steven H. Nonlinear dynamics and chaos: with applications to physics, biology, chemistry, and engineering. CRC press, 2018. [Google Scholar]

- [38].Virtanen Pauli, Gommers Ralf, Oliphant Travis E., Haberland Matt, Reddy Tyler, Cournapeau David, Burovski Evgeni, Peterson Pearu, Weckesser Warren, Bright Jonathan, van der Walt Stéfan J., Brett Matthew, Wilson Joshua, Millman K. Jarrod, Mayorov Nikolay, Nelson Andrew R. J., Jones Eric, Kern Robert, Larson Eric, Carey C. J., Polat Ilhan, Feng Yu, Moore Eric W., VanderPlas Jake, Laxalde Denis, Perktold Josef, Cimrman Robert, Henriksen Ian, Quintero E. A., Harris Charles R., Archibald Anne M., Ribeiro Antonio H., Pedregosa Fabian, and van Mulbregt Paul. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nature Methods, 17(3):261–272, March 2020. Publisher: Nature Publishing Group. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [39].Pedregosa Fabian, Varoquaux Gaël, Gramfort Alexandre, Michel Vincent, Thirion Bertrand, Grisel Olivier, Blondel Mathieu, Prettenhofer Peter, Weiss Ron, Dubourg Vincent, et al. Scikit-learn: Machine learning in python. the Journal of machine Learning research, 12:2825–2830,2011. [Google Scholar]

- [40].William H Press. Canonical correlation clarified by singular value decomposition, 2011.

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.