Summary

Here we present an open-source behavioral platform and software solution for studying fine motor skills in mice performing a reach-to-grasp task. We describe steps for assembling the box, training mice to perform the task, and processing the videos with the custom software pipeline to analyze forepaw kinematics. The behavioral platform uses readily available and 3D-printed components and was designed to be affordable and universally reproducible. We provide the schematics, 3D models, code, and assembly instructions in an open GitHub repository.

Subject areas: Neuroscience, Cognitive Neuroscience, Behavior

Graphical abstract

Highlights

• Steps to build a low-cost platform to study skilled motor activity in mice

• Customized software to analyze mouse forelimb kinematics

• Guidance on training mice to perform reach-to-grasp tasks

Publisher’s note: Undertaking any experimental protocol requires adherence to local institutional guidelines for laboratory safety and ethics.


Before you begin

Acquisition and execution of complex, skilled motor activity involve the synergistic interaction of the cerebral cortex, the basal ganglia, the cerebellum, and the spinal cord. The reach-to-grasp task in rodents has been established and used for decades to investigate the neurobiological mechanisms underlying skilled motor activity and its impairments in models of human diseases.1,2,3,4,5,6,7,8,9,10 In the present work, we describe the design and fabrication of a hardware-software platform for the mouse reach-to-grasp task and the use of this platform to train mice and record behavioral videos. As part of the platform, we present open-source software for video analysis and kinematic quantification of mouse fine motor skills. We share our design and analysis pipeline to promote the reproducibility and availability of this protocol to the wider open-science community. All the schematics, code, 3D models, and printing and assembly instructions are provided in the open GitHub repository (https://github.com/BerezhnoyD/Reaching_Task_VAI; https://doi.org/10.5281/zenodo.7383917). The reaching box is programmed with the Arduino IDE and can be used as a device for automated behavioral training and data acquisition. In addition, it can log basic behavioral data (touch/beam sensors) and trigger or synchronize external devices for electrophysiology recording, in vivo imaging, optogenetic stimulation, etc. Finally, we trained a cohort of wild-type C57BL/6J mice using this platform and performed kinematic analysis of forelimb movement. We found that most animals successfully acquired the forelimb reaching skill. We also report troubleshooting tips that we found useful in practice and that may benefit researchers entering this field.

Institutional permissions

All animal procedures in this study were reviewed and approved by the Van Andel Institute Animal Care and Use Committee (IACUC; Protocol# 22-02-006).

Behavioral box construction

Timing: 2–3 days

1. Design of the 3D parts and Plexiglas sheets and assembly of the box.

Gather the 4 main components needed to assemble the behavioral box (see Figure 1B for the overall schematic of the box, Figures 1A–1D for views from different angles, and the key resources table):

a. Plexiglas sheets, cut to size and drilled;

b. PLA plastic for the 3D-printed parts, such as the corners holding the sheets together;

c. a kit of small screws and nuts; and

d. metal rods for the floor.

2. Manufacture the parts for the behavioral box.

-

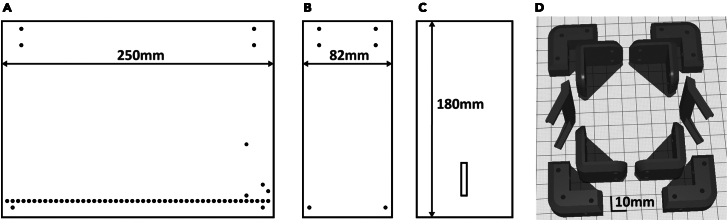

a. See Figure 2 for the dimensions of each Plexiglas part.

b. Using the stereolithography (∗.stl) files provided in the repository (https://github.com/BerezhnoyD/Reaching_Task_VAI), print all the required parts in PLA on a 3D printer, including:

i. Lower and upper corners (4 of each) to hold the box together.

ii. Two frames to attach the mirrors to the box.

Note: If modifications are needed (e.g., a different mirror angle), the original models from the computer-aided design (CAD) software are also provided in the repository.

iii. A base for the feeder motor and the feeder disk.

Note: It is better to print the disk upside down so that its surface is smooth.

iv. The XY stage for easy and precise adjustment of the feeder position.

Note: We used the Ultimaker Cura slicer and an Ultimaker S3 3D printer. For most parts, include supports and an initial layer for better adhesion to the build plate.

c. Cut and drill 4 Plexiglas sheets (3 mm) for the side and back walls of the box according to the dimensions provided. The front wall should be made from a thinner acrylic sheet (0.5 mm).

CRITICAL: The front wall needs to be replaced for each experiment, as it may get dirty and obstruct the camera's view. The front wall can also be modified to provide more room for head-mounted apparatus (e.g., miniscope, preamplifier).

Note: CAD software together with a CNC cutter can be used to scale up and speed up manufacturing (all the design files provided were made using FreeCAD software, open-source GNU license, https://www.freecad.org/), but the original design can also be easily reproduced using only manual tools.


3. Assemble the box (Figure 1) using the Plexiglas sheets, corners, and screws (2 mm) with nuts.

b. Insert the screws from the inside, with the nuts facing outward, for easier maintenance.

c. Screw the lower and upper corners into their places on the side walls, then attach the back wall to join the two parts together. The front wall should slide freely into place without screws so that it can be changed easily.

d. Screw the mirror frames into their places on both sides of the box and slide the mirrors in (the mirrors should slide freely; otherwise, the mirror frames will likely break).

e. Slide the metal rods into the holes at the bottom and glue them in place.

CRITICAL: The grid floor makes the box easier to clean and prevents animals from stashing the collected pellets in the box, which is important for successful training. An elevated front rod serves as the starting point for each reach and as a ‘perch’ where animals keep their paws in preparation for reaching. This rod should slide freely into place and should not be glued, for easier removal and cleaning.

Note: You can cover the outside of the box with opaque film to provide dim-light conditions, as in Figure 1D.


f. Solder 5–7 front floor rods together and attach them to a wire (15 cm) with a 2.54 mm pin connector on the other end (Figure 3C). Solder the elevated front rod to another wire with a 2.54 mm pin connector.

Note: These two sets of rods serve as touch sensors that register the time spent near the slit, the beginning of each reaching trial, etc.
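The touch-sensor readout described in step 3f can be prototyped offline before wiring the controller. The sketch below is a hypothetical Python example, not part of the repository: it assumes each sensor is logged as a sequence of 0/1 samples at a fixed rate (the 100 Hz rate and the function names are illustrative assumptions) and computes the time the animal spends on the front floor rods (near the slit) and the sample indices at which the elevated front rod is touched (candidate trial starts).

```python
from typing import List, Sequence


def contact_onsets(samples: Sequence[int]) -> List[int]:
    """Return the sample indices where a 0/1 touch signal switches from 0 to 1."""
    onsets: List[int] = []
    previous = 0  # assume no contact before the first sample
    for i, value in enumerate(samples):
        if value and not previous:
            onsets.append(i)
        previous = value
    return onsets


def time_in_contact(samples: Sequence[int], rate_hz: float) -> float:
    """Total time (s) during which the touch signal reads 1."""
    return sum(1 for v in samples if v) / rate_hz


if __name__ == "__main__":
    RATE_HZ = 100.0  # hypothetical logging rate of the sensor table
    floor_rods = [0, 0, 1, 1, 1, 0, 1, 1, 0, 0]  # toy data: paws on the front floor rods
    front_rod = [0, 0, 0, 1, 1, 0, 0, 1, 1, 1]   # toy data: paw on the elevated rod
    print("time near slit (s):", time_in_contact(floor_rods, RATE_HZ))
    print("front-rod onsets:", contact_onsets(front_rod))
```

Counting 0-to-1 transitions rather than samples keeps a long rest on the perch from being counted as many trials.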

4. Assemble the motorized feeder.

b. Plug the rotating disc directly onto the shaft of the stepper motor; no gluing is required.

Note: We designed two variants of the feeder: one that is easy to move during the experiment (to adjust the distance from the slit manually and dynamically) and one that is fixed to the precise XY positioning stage. Designs for both are provided in the repository; the first one is used in this protocol.

CRITICAL: Position the stepper motor carefully, as a slight tilt or shift may affect the accuracy of disc positioning. Check the precision of pellet delivery before starting the actual experiment.

5. Position the parts on a steady base (Figure 1B).

a. Assemble all the parts on a stable base. We used a thick piece of Plexiglas (5 mm) with drilled holes for wires and long screws. These upside-down screws act as anchor rods: sliding the screw shanks into the holes in the bottom corners of the box makes it easy to position the box precisely every time.

Note: Allow some space underneath or on the side for the wiring and the behavioral box controller.

b. Use the same base to fix the XY positioning stage of the feeder and the video camera on a stable stand.

c. Position the feeder so that the center of the closest pellet slot is 7 mm from the slit (5 mm from the edge of the disk to the slit).

CRITICAL: The position and all settings of the video camera should remain the same throughout the whole experiment so that the videos and the 3D reaching kinematics are comparable between days. Because the mirrors that determine the angles of the side views are mounted on the box itself, the parameters of the optical system depend mostly on the distance between the box and the camera. Thus, it is very important to fix the camera on the same stable surface as the behavioral box and the feeder, and to keep the position of the box relative to the camera fixed.



Figure 1.

Overview of the 3D printed reaching box

(A) Front view, (B) orthogonal view, (C) top view, (D) side view. Main features of the behavioral apparatus include 1) a rotating disk feeder for automatic food delivery that does not obstruct the view of the mouse behavior in the box, 2) a vertical slit for the animal to reach through, 3) a mirror system that allows the reach-to-grasp movement to be recorded from multiple views and the trajectory to be reconstructed, 4) a metal grid floor with frontal bars connected to the controller and used as touch sensors, 5) an autonomous experiment controller that fully controls the experiment, records the animal's behavior in the box, and provides a synchronization signal for electrophysiology recording or other experimental devices, and 6) a single high-speed camera for capturing behavior.

Figure 2.

Blueprints of the reaching box that can be used to manufacture it using the Plexiglas sheets and 3D printed parts

(A) Side wall with the holes for the metal floor and the screws (3 mm Plexiglas), (B) front wall with a slit for the animal's paws (0.5 mm Plexiglas), (C) models of the 3D-printed parts of the box, (D) back wall with the holes for the screws (3 mm Plexiglas). Panels (A), (B), and (C) are shown at the same scale; panel (D) is shown at a different scale. 3D models are provided in the GitHub repository.

Figure 3.

Behavioral experiment controller components

(A) Arduino Nano board and custom schematics on a breadboard enclosed in a box (figure created with Fritzing software).

(A–D) The controller is connected to multiple electronic components of the behavioral box, namely the automatic feeder (B) and the floor bar sensors (C), via a 10-pin connector (A), which makes assembly and disassembly of the box easy (D). The schematics are also provided in the GitHub repository.

Design and assembly of the control schematics

Timing: 1 day

A custom circuit executes the behavioral protocol: it presents stimuli and reinforcement (e.g., food pellets), logs data from the sensors, and activates the video camera. The electrical components are housed in a small 3D-printed box and soldered together on a breadboard (Figure 3D). The main component of the system is the Arduino Nano controller, which autonomously runs the programmed experimental protocol (written in C++ using the Arduino IDE). Hence, this behavioral system can be used for (semi-)automatic training of mice to perform the reach-to-grasp task. When connected to a computer running a custom Python script, the system collects both basic behavioral data from the touch sensors (position of the animal, paw placement, time spent in front of the slit, timing and number of trials) and a 3D view of the reaching movement (from the front camera and the two side mirrors) for kinematic analysis. The following steps guide you through the assembly of the system.

6. Assemble the breakout board, which serves as the interface between the components of the behavioral box.

CRITICAL: The whole board is powered with 5 V supplied through the connection with the Arduino board, so all power and ground connections from all sensors should go to a single point at the 10-pin input (Arduino socket): power (red) and ground (brown), respectively.

Optional: The IR proximity sensor serves (1) as an additional IR light source that increases the contrast of the paw and (2, optional) as a beam-break sensor that detects all reaching attempts. For the latter purpose, unsolder the two LEDs from the sensor and mount them on opposite ends of the slit, facing each other: the IR emitter at the bottom and the detector at the top of the slit. They can be resoldered to the board with a long wire or connected to it with a pin-socket connection. All other connections, both with the Arduino and the behavioral box, are established using 2.54 mm pin headers (see the pinout in Figure 3A).
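Counting reach attempts from the optional beam-break sensor amounts to detecting interruptions in a binary signal. The following is a minimal Python sketch for offline analysis, not code from the repository; it assumes the detector output is logged as 1 while the beam is intact and 0 while it is interrupted (check the polarity of your module), and it uses a hypothetical 0.1 s dead time so that one paw movement is not counted several times.

```python
from typing import List, Optional, Sequence


def beam_break_times(beam_intact: Sequence[int], rate_hz: float,
                     dead_time_s: float = 0.1) -> List[float]:
    """Return times (s) of beam interruptions (1 -> 0 transitions).

    After an accepted event, further interruptions within dead_time_s are
    ignored so that one paw movement is not counted several times.
    """
    events: List[float] = []
    last_event: Optional[float] = None
    for i in range(1, len(beam_intact)):
        if beam_intact[i - 1] == 1 and beam_intact[i] == 0:
            t = i / rate_hz
            if last_event is None or t - last_event >= dead_time_s:
                events.append(t)
                last_event = t
    return events


if __name__ == "__main__":
    # Toy 100 Hz trace: two quick interruptions, then a later one.
    trace = [1, 1, 0, 0, 1, 0, 1] + [1] * 20 + [0, 1]
    print(beam_break_times(trace, rate_hz=100.0))
```

The dead time is a tuning parameter; set it from the shortest plausible interval between two genuine reaches in your recordings.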

7. Solder the relay cables for the Arduino. There are three main relay cables: a 10-pin connector to interface with the breadboard, a 4-pin connector to control the feeder stepper motor, and a simple breadboard with two buttons to control the feeder rotation manually (see Figure 3A, 1–3).

Note: We recommend mounting the Arduino board on the same breadboard or close to it, and making the cable to the buttons longer and more durable.

8. Connect the control board to the behavioral box sensors (using the diagram in Figure 3A) and to the video camera: connect Arduino pin 12 and GND to pin 1 (GPI) and pin 6 (GND) of the Blackfly S, respectively (the pinout may differ for the camera you use).

Note: Instructions on how to set up synchronized recording on the FLIR Blackfly S camera used in this protocol can be found on the official FLIR website (https://www.flir.com/support-center/iis/machine-vision/application-note/configuring-synchronized-capture-with-multiple-cameras/).

Configuring computer for data streaming, storage, and analysis

Timing: 2–4 h

9. Download the Arduino IDE programming environment from the official website (https://www.arduino.cc/en/software); it is used to write, compile, and upload the C++ code to Arduino controllers.

Optional: The Serial Monitor of the Arduino IDE can also be used to test the output stream of the behavioral box controller connected to the computer (the device outputs the data from all sensors as a continuously updating table over the COM port).
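If you prefer to inspect the controller output from Python rather than the Serial Monitor, the table can be parsed line by line. The sketch below is hypothetical and is not taken from CameraRoll.py: it assumes whitespace-separated integer columns and a 9600 baud rate, and the column names, baud rate, and function names are illustrative assumptions that must be matched to the Arduino program you upload. Reading the port uses the pyserial package, which is not listed in the key resources table.

```python
from typing import Dict, Iterable, Iterator, List, Optional

# Hypothetical column order; match it to the Serial.print() calls in your program.
COLUMNS = ["floor_rods", "front_rod", "beam", "feeder_steps"]


def parse_sensor_line(line: str, columns: List[str]) -> Optional[Dict[str, int]]:
    """Parse one whitespace-separated row of integer sensor values.

    Returns None for header lines or partially transmitted rows.
    """
    fields = line.split()
    if len(fields) != len(columns):
        return None
    try:
        return dict(zip(columns, (int(f) for f in fields)))
    except ValueError:
        return None


def iter_rows(lines: Iterable[str], columns: List[str]) -> Iterator[Dict[str, int]]:
    """Yield parsed rows, skipping lines that do not parse."""
    for line in lines:
        row = parse_sensor_line(line, columns)
        if row is not None:
            yield row


def read_port(port: str, columns: List[str], baudrate: int = 9600) -> Iterator[Dict[str, int]]:
    """Stream parsed rows from a serial port (e.g., "COM3"); requires pyserial."""
    import serial  # imported here so the parser above works without pyserial

    with serial.Serial(port, baudrate, timeout=1) as ser:
        while True:
            raw = ser.readline()  # returns b"" if nothing arrives within the timeout
            row = parse_sensor_line(raw.decode("ascii", errors="ignore"), columns)
            if row is not None:
                yield row


if __name__ == "__main__":
    demo = ["floor_rods front_rod beam feeder_steps", "1 0 1 12", "1 1", "0 1 1 13"]
    for row in iter_rows(demo, COLUMNS):
        print(row)
```

On Windows, a COM port can be opened by only one program at a time, so close the Serial Monitor before reading the port from Python.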

10. Connect the Arduino Nano board to the computer using a USB cable and open the Arduino IDE.

11. Choose one of the provided programs or modify it to fit your specific experimental needs. All programs are provided in the repository (https://github.com/BerezhnoyD/Reaching_Task_VAI; https://doi.org/10.5281/zenodo.7383917). The one used in this protocol is ‘Reaching_Task_Manual’; the others implement different training algorithms.

a. ‘Reaching_Task_Draft’: a basic protocol that initializes all the components; use it to explore the structure of the program.

b. ‘Reaching_Task_Manual’: the feeder is controlled manually and makes one step clockwise or counterclockwise when the experimenter presses the left or right button, respectively.

c. ‘Reaching_Task_Feeder_Training’: the feeder runs automatically and takes one step every 10 s while the animal is in the front part of the box.

d. ‘Reaching_Task_Door_Training’: if the animal is detected in the front part of the box and grabs the elevated front rod, the feeder takes one step and the door blocking the slit opens (requires an additional servo motor for the door; see the socket pinout in Figure 3A).

e. ‘Reaching_Task_CS_Training’: if the animal is detected in the front part of the box, the speaker delivers a 5 s beep (CS trial) with 5 s intertrial intervals (ITI); if the animal grabs the elevated front rod during the sound (trial), the feeder makes one step and the door blocking the slit opens (requires an additional servo motor and a speaker; see the socket pinout in Figure 3A).
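The trial rule of ‘Reaching_Task_CS_Training’ can be illustrated with a short offline simulation, for example to estimate how many CS trials fit into a session. This is a minimal Python sketch, not the Arduino C++ program from the repository; it assumes sensor states sampled at a fixed step `dt_s`, that every CS runs for its full 5 s and is followed by a full ITI, and that the animal's position is checked only at CS onset (all simplifying assumptions). The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class CSTrial:
    start_s: float   # CS onset time
    rewarded: bool   # front rod grabbed during the CS


def simulate_cs_training(in_front: Sequence[bool], rod_grab: Sequence[bool],
                         dt_s: float = 0.1, cs_s: float = 5.0,
                         iti_s: float = 5.0) -> List[CSTrial]:
    """Offline illustration of the CS-training rule (not the Arduino program).

    A CS starts whenever the animal is in the front part of the box and no CS or
    ITI is running. A grab of the elevated front rod during the CS marks the trial
    as rewarded (feeder step + door opening on the real device).
    """
    cs_steps = round(cs_s / dt_s)
    iti_steps = round(iti_s / dt_s)
    trials: List[CSTrial] = []
    i = 0
    while i < len(in_front):
        if not in_front[i]:
            i += 1  # wait for the animal to enter the front part of the box
            continue
        window = rod_grab[i:i + cs_steps]
        trials.append(CSTrial(start_s=i * dt_s, rewarded=any(window)))
        i += cs_steps + iti_steps  # skip the CS and the following ITI
    return trials


if __name__ == "__main__":
    n = 200  # 20 s at dt = 0.1 s
    in_front = [True] * n
    rod_grab = [False] * n
    rod_grab[20] = True  # grab 2 s into the first CS
    for trial in simulate_cs_training(in_front, rod_grab):
        print(trial)
```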


12. Upload the chosen experimental program and test that all components function.

CRITICAL: After a single upload, the board runs the program autonomously on every power-up. To switch to another protocol, connect the Arduino to the computer and upload another program using the Arduino IDE.

13. Establish data streaming to the PC. We use Python scripts to record the data streamed from the FLIR camera and save it on the computer along with the sensor data from the behavioral box.

a. Locate the Python scripts in the repository (e.g., Reaching_Task_VAI/Recordingtoolbox/FLIR_GPU/CameraRoll.py) and copy them to an easily accessible folder on your PC.

b. To interface with the FLIR camera from the Python scripts, install the Anaconda Python platform (https://www.anaconda.com/products/distribution) and the FLIR Spinnaker SDK and PySpin API (https://www.flir.com/products/spinnaker-sdk/) from the official FLIR website.

i. First, download and install the appropriate version of the Spinnaker SDK from the FLIR website.

ii. Install Anaconda and check its Python version; only then install “Latest Python Spinnaker”, making sure that it matches your installed Python version.


c. The Python script that handles the video requires a few additional libraries.

i. First, add scikit-video (http://www.scikit-video.org/stable/) to your Anaconda environment by opening the Anaconda Prompt and typing:

pip install scikit-video

ii. Second, the script refers to FFmpeg through the path to its binary file. Place the FFmpeg executable (https://ffmpeg.org/download.html) in a folder you can point to, then manually change the path in the script accordingly: open the script (e.g., CameraRoll.py) in a text editor such as Notepad and look for the following line:

skvideo.setFFmpegPath('C:/path_where_you_put_ffmpeg/bin/')


d. To start camera recording on the computer side, plug both the Arduino board and the FLIR Blackfly S camera into the PC's USB ports and run the script from the Anaconda Prompt:

python "C:/folder_with_a_script/CameraRoll.py"

CRITICAL: Be sure to plug the video camera into a USB 3.0 port of the computer; otherwise, you will experience many dropped frames due to the speed limitations of the USB interface.


e. We provide multiple example scripts that can be customized to your needs.

i. ‘CameraRoll.py’: runs the FLIR camera in continuous recording mode, streams compressed video data to disk, and saves the data from the Arduino sensors as a table (one column per data stream) along with the corresponding FLIR camera frame numbers. All adjustable recording parameters (e.g., exposure time, frame rate, recording duration, and the folder to save videos to) are set at the beginning of the script.

CRITICAL: Be sure to specify the correct folder to save your videos to.


ii. ‘CameraTrigger.py’: runs the FLIR camera in triggered recording mode (triggered by the Arduino synchro pin), streams compressed video data to disk, displays it on the screen, and saves the data from the Arduino sensors as a table (one column per data stream) along with the corresponding FLIR camera frame numbers. This is the script used throughout the protocol, as it saves only the relevant part of the video, when the animal is holding the front bar and reaching continuously.
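The frame-synchronized sensor table written by these scripts can be sketched as follows. This is not the code of CameraRoll.py or CameraTrigger.py; it is a minimal illustration, assuming the acquisition loop produces a time-ordered stream of sensor rows and frame numbers, of how each frame can be paired with the most recent sensor sample using Python's standard `csv` module. The event format and the function name are hypothetical.

```python
import csv
import io
from typing import Any, Dict, Iterable, List, Optional, TextIO, Tuple


def write_synced_log(out: TextIO, columns: List[str],
                     events: Iterable[Tuple[str, Any]]) -> int:
    """Write one CSV row per camera frame with the most recent sensor values.

    `events` is a time-ordered stream of ("sensor", {column: value}) and
    ("frame", frame_number) tuples. Frames that arrive before the first sensor
    row get empty sensor fields. Returns the number of frame rows written.
    """
    writer = csv.writer(out)
    writer.writerow(["frame"] + columns)
    latest: Optional[Dict[str, Any]] = None
    n_rows = 0
    for kind, payload in events:
        if kind == "sensor":
            latest = payload  # keep only the newest sensor sample
        elif kind == "frame":
            values = [latest.get(c, "") if latest else "" for c in columns]
            writer.writerow([payload] + values)
            n_rows += 1
    return n_rows


if __name__ == "__main__":
    buf = io.StringIO()  # use open("log.csv", "w", newline="") for a real file
    stream = [("frame", 0), ("sensor", {"front_rod": 1}), ("frame", 1), ("frame", 2)]
    write_synced_log(buf, ["front_rod"], stream)
    print(buf.getvalue())
```

One row per frame makes it straightforward to look up the sensor state for any video frame during later kinematic analysis.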


14. Install the analysis software. All the scripts for data analysis and visualization (Figure 4) are written in Python and organized into a series of Jupyter Notebooks.

a. Download the scripts and the notebooks from the project GitHub page (https://github.com/BerezhnoyD/Reaching_Task_VAI; https://doi.org/10.5281/zenodo.7383917).

CRITICAL: To run the scripts, you will need to install multiple Python libraries and dependencies. We suggest performing the installation through the Anaconda Python environment, which handles all the dependencies properly.


b. Download and install the Anaconda Python platform (https://www.anaconda.com/products/distribution).

Note: If you already did this in a previous step to interface with the FLIR camera and are using the same computer for analysis, you can skip this step.

c. From the same project repository, download the DEEPLABCUT.yml file, which lists all the dependencies needed to run the scripts (including DeepLabCut and Anipose lib), and put it in a folder accessible from Anaconda.

d. Install the environment using the following Anaconda Prompt command:

conda env create -f DEEPLABCUT.yml

e. If the environment setup was successful, you should have a new ‘DEEPLABCUT’ Anaconda environment, which you need to activate to run the analysis scripts. In the Anaconda Prompt, run the following:

cd path\to\the scripts\
conda activate DEEPLABCUT
jupyter notebook

f. This opens the Jupyter Notebook interface in your browser, from which you can navigate to the folder with the scripts and open the notebook (∗.ipynb) files containing the main analysis steps (Figure 4).
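Before opening the notebooks, it can help to confirm that the activated environment contains the package versions listed in the key resources table. A minimal sketch using the standard-library `importlib.metadata` module (Python 3.8+); the distribution names `deeplabcut` and `aniposelib` are the PyPI names we assume these packages were installed under, and the function names are hypothetical.

```python
import sys
from importlib import metadata
from typing import Dict, Optional

# Versions listed in the key resources table of this protocol.
EXPECTED = {"deeplabcut": "2.2.0.6", "aniposelib": "0.4.3"}


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_versions(expected: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Print expected vs. installed versions and return what was found."""
    print("Python", sys.version.split()[0])
    found = {name: installed_version(name) for name in expected}
    for name, want in expected.items():
        have = found[name]
        if have is None:
            status = "missing"
        elif have == want:
            status = "OK"
        else:
            status = "differs"
        print(f"{name}: expected {want}, found {have}, {status}")
    return found


if __name__ == "__main__":
    check_versions(EXPECTED)
```

Run it from a notebook cell or from the Anaconda Prompt after `conda activate DEEPLABCUT`; a "missing" status usually means a different environment is active.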


Figure 4.

Data processing workflow summarized in 3 Jupyter Notebooks run sequentially

Notebook 1 performs video tracking, Notebook 2 is reserved for manual behavioral analysis and labeling, and Notebook 3 contains various data visualization scripts to generate the final plots. The figure was created with BioRender.com.

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Experimental models: Organisms/strains | | |
| Mice: C57BL/6J, male and female, from 2 to 24 months | The Jackson Laboratory | RRID:IMSR_JAX:000664 |
| Software and algorithms | | |
| Arduino IDE v.1.8.19 | https://www.arduino.cc/en/software | RRID:SCR_024884 |
| FLIR Spinnaker 3.2.0.57 | https://www.flir.com/support-center/iis/machine-vision/downloads/spinnaker-sdk-download/ | RRID:SCR_016330 |
| PySpin API | https://www.flir.com/products/spinnaker-sdk/ | N/A |
| FreeCAD software v.0.20.1 | https://www.freecad.org/ | RRID:SCR_022535 |
| Ultimaker Cura Slicer v.4.3 | https://ultimaker.com/software/ultimaker-cura | RRID:SCR_018898 |
| Jupyter Notebook v.4.9.2 | https://jupyter.org/ | RRID:SCR_018315 |
| DeepLabCut v.2.2.0.6 | https://github.com/DeepLabCut/DeepLabCut | RRID:SCR_021391 |
| Anipose lib v.0.4.3 | https://github.com/lambdaloop/anipose | RRID:SCR_023041 |
| FFmpeg executable v.7.0 | https://ffmpeg.org/download.html | RRID:SCR_016075 |
| Python | https://www.python.org/downloads/release/python-3813/ | RRID:SCR_008394 |
| Anaconda Python platform v.22.11.1 | https://www.anaconda.com/ | RRID:SCR_018317 |
| scikit-video v.1.1.11 | http://www.scikit-video.org/stable/ | N/A |
| ReachOut v.1.2: package containing the original code and 3D models for this article | https://github.com/BerezhnoyD/Reaching_Task_VAI | https://doi.org/10.5281/zenodo.7383917 |
| Other | | |
| 5 mg Sucrose Tap Pellets | TestDiet (Richmond, IN, USA) | 1811327 (5TUT) |
| Arduino Nano controller | www.amazon.com | ASIN: B07R9VWD39 |
| Breadboard | www.amazon.com | ASIN: B072Z7Y19F |
| Metal rods for crafts | www.amazon.com | ASIN: B08L7RKM6Q |
| Adafruit Industries AT42QT1070 capacitive touch sensor boards | www.amazon.com | ASIN: B082PMQG4P |
| ULN2003 stepper motor driver with 28BYJ-48 stepper motor | www.amazon.com | ASIN: B00LPK0E5A |
| FLIR 1.6 MP high-speed camera (model: Blackfly S BFS-U3-16S2M) | https://www.edmundoptics.com/p/bfs-u3-16s2m-cs-usb3-blackflyreg-s-monochrome-camera/40163/ | Stock #11-507 |
| IR proximity sensor for Arduino | www.amazon.com | ASIN: B07FJLMLVZ |
| 10-pin 2.54 mm sockets | www.amazon.com | ASIN: B09BDX9L66 |
| 2.54 mm pin headers | www.amazon.com | ASIN: B07BWGR4QP |
| Parts for the behavioral box (corners, frames for the mirrors, feeder) | In-house 3D print | |
| Acrylic mirror, clear, 1/8" (0.118") thick, 2 inches wide, 2 inches long | https://www.tapplastics.com/ | N/A |
| 2 mm screws with nuts | www.amazon.com | ASIN: B01NBOD98K |
| Clear polycarbonate sheet, thickness: 3 mm, length: 250 mm, width: 180 mm | https://www.tapplastics.com/ | N/A |
| Clear polycarbonate sheets, thickness: 0.75 mm, length: 180 mm, width: 88 mm | https://www.tapplastics.com/ | N/A |

Step-by-step method details

Mouse reach-to-grasp task training protocol

The behavioral protocol consists of 5 days of habituation and 2–3 days of shaping, followed by 7 days of training. Each stage is detailed below. All sessions are performed during the light phase of the light/dark cycle.

Note: We noticed that mice are most motivated in the afternoon when fed approx. 1 h before the start of the dark phase of the light/dark cycle (16-h food deprivation). Therefore, we planned all experiments to end approx. 1 h before the start of the dark phase. The sugar pellets used in the protocol were 5 mg spheres approx. 2 mm in diameter (see key resources table).

Habituation

Timing: 5 days

This section details the first step of the training protocol: habituation of the animals to the experimenter, the room, and the test setup. This stage is critical, as it determines subsequent learning success. We began training with 5 days of habituation, which allow mice to gradually habituate to the experimenter and the testing environment (e.g., room and behavioral apparatus).

1. Habituate animals to the experimenter (days 1–2).

a. During habituation, handle the mice for 15–30 min every day.

b. Throughout the experiment, make sure that the mice are handled by the same experimenter until the end of training (e.g., also for the weekly cage change).

c. To proceed to day 3, mice should be acclimated to the experimenter and to handling by the end of day 2 (i.e., they do not try to bite or circle around the experimenter's hand in the home cage, do not jump off the experimenter's hands/arms, and do not defecate or urinate excessively).

d. If needed, extra days of habituation can be added; otherwise, the mice should be excluded from the study.

2. Habituation to the test setup I (day 3): The purpose is to acclimate the mice to the test box. To reduce stress, we propose that the first contact with the box be with a cage mate.

a. Transfer the cage to the behavior room.

b. Allow mice to acclimate to the room for 5 min.

c. Place two mice from the same cage in the middle of the reaching box, holding them by their tails.

d. Let the mice explore the box for 20 min.

e. Return the animals to their cage and place a few sugar pellets on the cage floor for consumption.

f. Clean the box with 70% ethanol between mice.

3. Habituation to the test setup II (days 4–5): The purpose of the last two habituation sessions is to allow animals to acclimate to the box individually, in the presence of sugar pellets. Recording mouse behavior on habituation day 5 allows this acclimation to be assessed.

a. Transfer the cage to the behavior room.

b. Allow mice to acclimate to the room for 5 min.

c. Place the feeder with a pile of sugar pellets 5 mm away from the front wall.

d. Place a plastic tray with 10 sugar pellets inside the box against the front wall.

Note: There is no need to set up the elevated front rod at this stage.

e. Place a mouse in the center of the box.

f. Let the mouse explore the box for 20 min.

g. Move the mouse back to its cage.

h. Clean the box, the tray, and the disk with 70% ethanol between mice.


CRITICAL: By the end of day 5, animals should freely explore the reaching box and show interest in the slit and the pellets.

Note: Animals may not eat any pellets at this stage if they are not hungry, but they should spend enough time near the slit and sniff it.

Shaping

Timing: 2–3 days

The shaping stage initiates reaching in a simpler setting and determines paw dominance before the actual training starts. Each session is recorded with the FLIR camera, with the Arduino on, using the CameraRoll.py Python script on the PC.

4. During training, animals should be food-restricted.

Note: In our lab, all animals were food-restricted at the same level throughout the shaping and training periods, i.e., to around 80% of their baseline body weight. The mice were housed in groups of 4, and food was placed on the cage floor. The daily food ration was equivalent to 8% of the animals' baseline body weight.
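The food-restriction arithmetic in the note above can be written out explicitly. This is a minimal sketch using only the values stated in this protocol (a daily ration of 8% of baseline body weight and a target of about 80% of baseline); the function names and the toy weights are hypothetical.

```python
from typing import Sequence

DAILY_FOOD_FRACTION = 0.08  # daily ration: 8% of baseline body weight (this protocol)
TARGET_FRACTION = 0.80      # target: about 80% of baseline body weight (this protocol)


def daily_food_per_cage(baseline_weights_g: Sequence[float]) -> float:
    """Grams of food to place on the floor of one group-housed cage per day."""
    return sum(w * DAILY_FOOD_FRACTION for w in baseline_weights_g)


def percent_of_baseline(current_g: float, baseline_g: float) -> float:
    """Current body weight as a percentage of baseline."""
    return 100.0 * current_g / baseline_g


def target_weight(baseline_g: float) -> float:
    """Body weight corresponding to the ~80% target."""
    return baseline_g * TARGET_FRACTION


if __name__ == "__main__":
    cage = [25.0, 24.0, 26.0, 25.0]  # toy baseline weights (g), 4 mice per cage
    print(f"food per cage: {daily_food_per_cage(cage):.2f} g")
    print(f"mouse 1 target weight: {target_weight(cage[0]):.1f} g")
    print(f"20 g vs 25 g baseline: {percent_of_baseline(20.0, 25.0):.0f}%")
```

For the toy cage above, the ration is 8% of the summed 100 g baseline, i.e., 8 g per day for the whole cage.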

5. Transfer the cage to the behavior room.

6. Allow mice to acclimate to the room for 5 min.

7. Place the feeder with a pile of sugar pellets 5 mm away from the front wall.

Optional: The disk can be placed closer to the slit and gradually moved away during the session.

Note: The elevated front rod should not be set up during the shaping phase.

8. Connect the FLIR camera and the Arduino to a computer as previously described.

Note: We highly recommend using the ‘Reaching_Task_Manual’ program during the shaping phase and controlling the feeder with the buttons. The automatic Arduino programs work well once the animal is reaching consistently.

9. Place the mouse in the middle of the box.

10. Start the CameraRoll.py recording script to monitor the activity of the mouse for 20 min.

11. The mouse may retrieve sugar pellets by licking or reaching. Record the following numbers:

a. Failed and successful licks.

b. Failed and successful reaches with the right and left paws.


Note: When no sugar pellets remain on the disk within reaching distance of the slit, press the button to rotate the disk so that more sugar pellets become available.

Optional: Mice might start using their tongues to get sugar pellets (licking) before using their paws (reaching). In that case, you can try moving the disc even further from the slit (>9 mm).

-

12.

Move the mouse back to the home cage at the end of the session.

-

13.

Clean the box and the disk with 70% ethanol between mice.

-

14.

For each mouse, calculate the total number of reaches (Equation 1) and the percentage of reaches made with the right paw (Equation 2) to determine paw dominance. The dominant paw is the paw used for more than 70% of all reaches (successful and failed).

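$$\text{Total reaches} = N_{\text{success}}^{\text{right}} + N_{\text{fail}}^{\text{right}} + N_{\text{success}}^{\text{left}} + N_{\text{fail}}^{\text{left}} \qquad \text{(Equation 1)}$$

$$\text{Right-paw reaches (\%)} = \frac{N_{\text{success}}^{\text{right}} + N_{\text{fail}}^{\text{right}}}{\text{Total reaches}} \times 100 \qquad \text{(Equation 2)}$$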
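Equations 1 and 2 and the 70% dominance rule can be computed per mouse with a short Python function. This is a sketch that assumes counts are tallied per paw and outcome as in step 11. `paw_dominance` is a hypothetical helper, not part of the provided notebooks.

```python
def paw_dominance(success_right, failed_right, success_left, failed_left, threshold_pct=70.0):
    """Return (total reaches, % right-paw reaches, dominant paw or None) for one mouse."""
    total = success_right + failed_right + success_left + failed_left  # Equation 1
    if total == 0:
        return 0, float("nan"), None
    right_pct = 100.0 * (success_right + failed_right) / total         # Equation 2
    if right_pct > threshold_pct:
        return total, right_pct, "right"
    if 100.0 - right_pct > threshold_pct:
        return total, right_pct, "left"
    return total, right_pct, None  # neither paw used for more than 70% of reaches


# Hypothetical counts from one shaping session
print(paw_dominance(12, 9, 2, 1))  # (24, 87.5, 'right')
```

Dominance is checked with a strict "more than" comparison, so a mouse at exactly 70% is treated as undetermined.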

-

15.

The shaping stage takes 2–3 days. By the end of this stage, mice should perform at least 20 reaches within 20 min and show paw dominance. Even if mice meet these criteria on shaping day 1, we strongly recommend keeping shaping day 2. Shaping day 3 is optional. Mice that do not meet these criteria are excluded from the study.

Note: Shaping day 3 should be included if (i) the mouse still predominantly retrieves food pellets by licking at the end of shaping day 2, even if it has performed more than 20 reaches within the session, or (ii) paw dominance cannot be determined at the end of shaping day 2.
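The inclusion logic of steps 14–15 can be summarized as a small decision function. This is a simplified sketch. It assumes that "predominantly licking" means more licks than reaches, and it ignores other reasons for adding a shaping day (see troubleshooting). The function and its return labels are hypothetical.

```python
MIN_REACHES = 20  # at least 20 reaches within the 20-min session


def shaping_decision(day, total_reaches, licks, dominant_paw):
    """Suggest the next step after shaping day 1, 2, or 3.

    Returns "next_day" (run another shaping day), "train" (proceed to training),
    or "exclude". `licks` counts failed and successful licks; `dominant_paw` is
    "left", "right", or None if dominance could not be determined.
    """
    meets_criteria = total_reaches >= MIN_REACHES and dominant_paw is not None
    if day == 1:
        return "next_day"  # shaping day 2 is kept even if day 1 already meets the criteria
    if day == 2:
        # Assumption: "predominantly licking" is read as more licks than reaches.
        still_licking = licks > total_reaches
        if still_licking or dominant_paw is None:
            return "next_day"  # add shaping day 3
        return "train" if meets_criteria else "exclude"
    return "train" if meets_criteria else "exclude"  # day 3 is the last shaping day
```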

Training

Timing: 7 days

Training takes place after the shaping stage and requires at least 7 sessions (T1 to T7). Each session is recorded with the FLIR camera, with the Arduino powered on, using the CameraTrigger.py Python script.

Note: Online observation provides preliminary results for each session (e.g., success rate and number of reaches), which can be used to adjust the training protocol whenever needed (see troubleshooting below).

-

16.

Transfer the cage to the behavior room.

-

17.

Allow mice to acclimate to the room for 5 min.

-

18.

Fill the slots of the feeder with sugar pellets.

-

19. Place the disk so that:

-

a. Its edge is 7 mm from the front wall.

-

b. The sugar pellet is aligned with the left or right edge of the slit for mice showing right or left paw dominance, respectively.

-

20.

Set up the elevated front rod.

-

21.

Connect the FLIR camera and the Arduino box to the PC.

-

22.

Place the mouse in the center of the box.

-

23.

Start the CameraTrigger.py recording script (see Optional).

-

24.

Monitor the activity of the mouse for 20 min.

-

25.

Rotate the feeder using the buttons when the slot in front of the slit becomes empty.

-

26. Food pellets should be delivered:

-

a. After successful reaches, when the mouse moves away from the slit to consume the pellet.

Note: After food consumption, if the mouse stays at the slit and keeps performing in-vain reaches, no pellet should be delivered until the animal goes to the back of the reaching box and returns to the slit.

-

b. After failed reaches, when the animal goes to the back of the reaching box and returns to the slit.
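The delivery rule in step 26 can be expressed as a small event-driven state machine, for example to annotate session logs consistently. This is a hypothetical sketch: the event names and the `PelletDeliveryRule` class are illustrative assumptions and do not reproduce the Arduino scripts in the repository. In practice, the experimenter applies the rule by eye.

```python
from enum import Enum, auto


class Event(Enum):
    SUCCESSFUL_REACH = auto()
    FAILED_REACH = auto()
    IN_VAIN_REACH = auto()     # reach made while no pellet is available
    LEFT_SLIT = auto()         # mouse moves away from the slit (e.g., to eat)
    AT_BACK = auto()           # mouse reaches the back of the box
    RETURNED_TO_SLIT = auto()  # mouse comes back to the slit


class PelletDeliveryRule:
    """Hypothetical encoding of the manual delivery rule (not the repository's Arduino code)."""

    def __init__(self):
        self.deliver_on_leave = False  # set by a successful reach
        self.need_back_visit = False   # set by a failed or in-vain reach
        self.visited_back = False

    def step(self, event):
        """Return True if a pellet should be delivered in response to `event`."""
        if event is Event.SUCCESSFUL_REACH:
            self.deliver_on_leave = True
        elif event in (Event.FAILED_REACH, Event.IN_VAIN_REACH):
            # The mouse stayed at the slit: wait for a trip to the back and a return.
            self.deliver_on_leave = False
            self.need_back_visit, self.visited_back = True, False
        elif event is Event.LEFT_SLIT and self.deliver_on_leave:
            self.deliver_on_leave = False
            return True
        elif event is Event.AT_BACK and self.need_back_visit:
            self.visited_back = True
        elif event is Event.RETURNED_TO_SLIT and self.need_back_visit and self.visited_back:
            self.need_back_visit = self.visited_back = False
            return True
        return False
```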

-

27.

At the end of the session, return the mouse to its home cage.

-

28.

Clean the box and the disk with 70% ethanol between mice.

-

29.

For each mouse, calculate the sum of failed and successful reaches and the success rate (Equation 3).

| (Equation 3) |

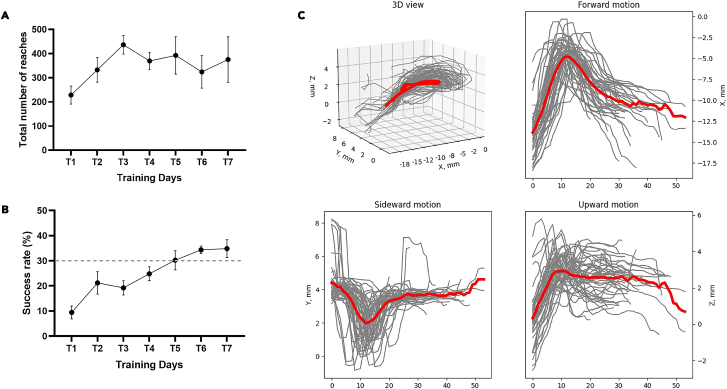

By the end of training, mice persistently reached for the pellets and performed 100–300 reaches within 20 min. Around 70% of the animals trained using this protocol showed a success rate of 30%–40%.11 See troubleshooting 1.
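Equation 3 and a simple "learner" classification can be computed from per-session counts. This sketch assumes that the 30% success-rate threshold (Figure 7B) is applied to the last training session. The function names are hypothetical.

```python
import math

LEARNER_THRESHOLD_PCT = 30.0  # success-rate threshold from the literature (Figure 7B)


def success_rate(successful, failed):
    """Equation 3: successful reaches as a percentage of all (successful + failed) reaches."""
    total = successful + failed
    return 100.0 * successful / total if total else math.nan


def learning_curve(sessions):
    """Success rate for each training session, given (successful, failed) counts for T1..Tn."""
    return [success_rate(s, f) for s, f in sessions]


def is_learner(sessions, threshold=LEARNER_THRESHOLD_PCT):
    """Assumption: a mouse is a "learner" if its last session reaches the threshold."""
    return learning_curve(sessions)[-1] >= threshold
```

A session with no reaches yields NaN rather than 0%, so it is not mistaken for a session with only failed reaches.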

Optional: If quantification of the 3D trajectory (e.g., using Anipose) is needed, (1) the CameraRoll.py or CameraTrigger.py script should be started before each experiment, and (2) a 30-s calibration video can be recorded each day with a checkerboard calibration pattern visible in both the central and mirror views. For more details on calibration, see the Anipose article4 or the documentation in its GitHub repository (https://github.com/lambdaloop/anipose).

Data analysis

Timing: 2–4 h

This part walks through the main processing steps of the analysis pipeline, from opening the raw videos from the high-speed camera to clustering and comparing the 3D trajectories for different categories of reaches. Run the three provided notebooks consecutively. The data analysis pipeline is shown in Figure 4.

-

30. Run the first notebook, ReachOut - Tracking.ipynb. This notebook leads you through the main steps of converting the acquired video into a reconstruction of the mouse’s 3D paw trajectory. It relies on two state-of-the-art tools for markerless pose estimation and 3D triangulation: DeepLabCut2 and Anipose lib.4

-

a. Using the first cell, split the single video acquired with the FLIR camera into the left, central, and right views provided by the mirrors.

Note: Both the behavior video and the corresponding calibration video from the same day should be split. The parameters of this cropping operation can be adjusted in the script.

-

b. Start the DeepLabCut GUI using the second cell in the notebook.

-

c. Use the DeepLabCut GUI to open videos, label points for tracking, train and evaluate the neural network, and finally obtain tracking for all desired points (e.g., snout, palm, 4 fingers, pellet).

-

d. Save the tracking results from the DeepLabCut pipeline as *.csv tables. To proceed with 3D reconstruction, you need tracking for each point of interest in two projections (the central view and the mirror view).

Optional: If 2D tracking is sufficient, you can instead use the 2D version of the tracking notebook provided in the repository.

Note: All instructions for working with DeepLabCut can be found in the original repository (https://github.com/DeepLabCut/DeepLabCut).

-

e. To track the same points in two camera views, we suggest running the DeepLabCut pipeline twice: once for the frontal-view videos and a second time for the mirror-view videos (left or right, depending on the dominant paw).

Note: We found that training two separate networks to track the same points in orthogonal camera views generates more accurate results than using a single network for all views.

-

f. Triangulate the paw points with Anipose lib using the last two cells of the processing notebook. The code reconstructs the 3D coordinates from the two 2D trajectories obtained in separate views.

-

i. First, calibrate the camera: calculate the camera intrinsic and extrinsic parameters for the views using calibration videos recorded right before each session with the small checkerboard pattern (4 × 4 squares, each square 1 × 1 mm) visible in both the frontal view and the mirror. Run the script on this video to obtain the calibration file. The script automatically finds the checkerboard pattern in the frames and asks you to confirm or reject each detection manually (all points of the checkerboard should be detected and connected with lines from left to right, top to bottom).

Note: This step can be done once for each batch of videos, using a single calibration video with a checkerboard for that day. Before running this script, you will need to provide the full path to the calibration videos and rename them to match the Anipose camera-naming pattern (A, B, C). Further details can be found in the original Anipose repository (https://github.com/lambdaloop/anipose).

-

ii. The previous step generates the calibration file (calibration.toml, generated by Anipose), which is applied to the 2D tracking data obtained with DeepLabCut for the two views (use the *.h5 files generated by DLC) to triangulate the points in 3D space. Point the script to these 3 files and set the path for the output file.

Note: The names of the tracked points must be the same in both DeepLabCut files. If you want to align the coordinate frame to certain static points in your video, you will also need to enter these points. They must also be present in the initial DeepLabCut tracking output files.

-

iii. This step produces a *.csv file with all coordinates in absolute values (in mm, relative to the static points) and allows a simple visual check of the x, y, z coordinates of each triangulated body part. This concludes the first notebook.
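Anipose performs the triangulation itself from calibration.toml. To illustrate only the underlying principle, the sketch below uses linear (DLT) two-view triangulation. It assumes that each view (the central view, and the mirror view treated as a virtual second camera) is described by a known 3×4 projection matrix. The function names are hypothetical. This is not Anipose’s code, and it omits steps such as lens-distortion correction.

```python
import numpy as np


def triangulate_point(P1, P2, uv1, uv2):
    """Linear (DLT) triangulation of one point seen in two views.

    P1, P2: 3x4 projection matrices (e.g., central view and mirror view as a virtual camera).
    uv1, uv2: (u, v) image coordinates of the same body part in each view.
    """
    P1, P2 = np.asarray(P1, dtype=float), np.asarray(P2, dtype=float)
    A = np.vstack([
        uv1[0] * P1[2] - P1[0],
        uv1[1] * P1[2] - P1[1],
        uv2[0] * P2[2] - P2[0],
        uv2[1] * P2[2] - P2[1],
    ])
    # The homogeneous solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def triangulate_track(P1, P2, track1, track2):
    """Triangulate two (n_frames, 2) tracks; frames with a NaN in either view stay NaN."""
    track1, track2 = np.asarray(track1, dtype=float), np.asarray(track2, dtype=float)
    out = np.full((len(track1), 3), np.nan)
    for i, (a, b) in enumerate(zip(track1, track2)):
        if np.isfinite(a).all() and np.isfinite(b).all():
            out[i] = triangulate_point(P1, P2, a, b)
    return out


# Toy check: two ideal cameras, the second shifted by 1 unit along x.
P_a = np.hstack([np.eye(3), np.zeros((3, 1))])
P_b = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
print(triangulate_point(P_a, P_b, (0.125, 0.05), (-0.125, 0.05)))  # close to [0.5, 0.2, 4.0]
```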

-

31. Run the second notebook, ReachOut - Analysis.ipynb. This notebook contains the workflow for processing the *.csv table of coordinates obtained in the previous step: cleaning the data, segmenting the trajectory to extract the relevant parts, and assigning behavioral labels to the extracted parts. Screenshots of the following program snippets are shown in Figure 5.

-

a. Run the first script (tracking_split). It opens a GUI for choosing the parts of the trajectory to analyze (the peaks corresponding to reach-to-grasp movements). The script loads the *.csv file containing the x, y, z coordinates of all tracked parts and visualizes the trajectory of the selected part in 3D space (Figure 5A).

-

i. Rotate and zoom the plot by hovering over it and holding the left mouse button. The plot initially shows the whole trajectory. Clicking the progress bar at the bottom scrolls through smaller parts of the trajectory (500 frames at a time). Two smaller plots underneath the main one show the same trajectory projected onto the X (side view) and Y (front view) axes and are used to extract even smaller parts of the trajectory: single reaches.

-

ii. Click and drag with the left mouse button on these plots to select part of the trajectory with a red span selector.

-

iii. To save the selected part of the trajectory for further analysis, click the green “Save” button on the left.

-

iv. Scroll through the whole file, inspect the trajectories, and select all parts corresponding to complete reaches.

-

v. When you finish analyzing the trajectory file, click the green “Save_all” button on the right. This saves the whole data frame with the extracted trajectory parts as an *.h5 file.

-

b. The second script (viewer) opens the *.h5 file with the reaches extracted from a single video, together with the video itself (*.mp4 file). It lets the user manually assign a category to each reach by rewatching the video snippet corresponding to the extracted trajectory.

-

i. The script opens subplots showing the selected trajectory from different views, along with two dropdown lists: one for the trajectories and one for the reach types (Figure 5B).

-

ii. Select each trajectory in turn from the first list, which opens the corresponding video, and assign a category from the second list by clicking on it.

-

iii. Close the video by pressing the ‘Q’ key.

-

c. By default, we classified each reach into one of 6 categories according to the trial outcome observed in the video:

-

i. Grasped: the mouse successfully grasped and consumed the food pellet.

-

ii. Missed: the mouse did not touch the pellet during the reach.

-

iii. Flicked: the mouse touched the pellet and knocked it off the disk.

-

iv. Lost: the mouse picked up the pellet but lost it on the way to the mouth.

-

v. In vain: the animal reached in the absence of a food pellet.

-

vi. Artifact: the recorded trajectory is not an acceptable reach.

-

d. After classification is complete, the script saves the *_scalar.h5 data frame with all kinematic parameters for each extracted reach. To open and visualize this data frame, run the third analysis notebook.
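The tracking_split GUI relies on manual span selection. As a hypothetical aid for pre-screening long recordings, candidate reaches could be flagged automatically by thresholding the paw’s forward (x) coordinate, for example at the position of the slit. This is a simple threshold-crossing sketch, not the method used in the notebook. The threshold, padding, and minimum length are assumptions to tune on your own data, and every candidate should still be checked in the GUI.

```python
import numpy as np


def candidate_reaches(x_forward, threshold, pad=10, min_len=5):
    """Return (start, stop) frame ranges where the forward coordinate exceeds `threshold`.

    Runs lasting at least `min_len` frames are padded by `pad` frames on both sides
    (clipped to the recording). NaN frames (lost tracking) count as below threshold.
    """
    x = np.asarray(x_forward, dtype=float)
    above = np.nan_to_num(x, nan=-np.inf) > threshold
    edges = np.diff(above.astype(int))
    starts = list(np.flatnonzero(edges == 1) + 1)   # first frame of each run
    stops = list(np.flatnonzero(edges == -1) + 1)   # one past the last frame of each run
    if above[0]:
        starts.insert(0, 0)
    if above[-1]:
        stops.append(len(x))
    return [
        (max(0, int(s) - pad), min(len(x), int(e) + pad))
        for s, e in zip(starts, stops)
        if e - s >= min_len
    ]
```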

-

32. Run the third notebook, ReachOut - Visualization.ipynb. This notebook contains scripts for visualizing the kinematic parameters and average projections and for optional automatic clustering of the extracted and labeled trajectories. Screenshots of the following program snippets are shown in Figure 6.

-

a. The first script (reach_view) shows the average 3D trajectory along with projections of the reaching trajectories onto 3 axes, dissecting the whole movement into its components: x (forward), y (sideward), and z (upward) (Figure 6A). Choose the category of reaches to show from the dropdown list, and save the picture by clicking the right mouse button.

-

b. The second script (scalar_view) plots the kinematic parameters for the chosen category of reaches: the mean value on the left (with the mean parameter of individual reaches shown as points) and the mean variability on the right (with the variation parameter of individual reaches, usually the SD) (Figure 6B). All plotted parameters are calculated in the previous notebook and read from the *_scalar.h5 data frame. Choose the reach categories and parameters from the dropdown lists to display the plots.

-

c. The third script (clustering) is optional and performs automatic clustering of the reaches based on the extracted scalar parameters. Choose the clustering algorithm from the dropdown list and visualize the results. The clustering results can be saved to the *_scalar.h5 data frame as an additional label column.

-

d. The fourth script shows the number of labeled reaches in each category.
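Averaging reaches of different durations requires bringing them onto a common time base. The sketch below takes one common approach, linear time normalization. It resamples each (n_frames, 3) trajectory to a fixed number of points and then computes the mean and SD per axis. This is an assumption: the notebook may align reaches differently (e.g., relative to the pellet position, as in Figure 7C). The function names are hypothetical.

```python
import numpy as np


def resample_reach(xyz, n_points=100):
    """Linearly resample an (n_frames, 3) trajectory onto n_points equally spaced time points."""
    xyz = np.asarray(xyz, dtype=float)
    t_old = np.linspace(0.0, 1.0, len(xyz))
    t_new = np.linspace(0.0, 1.0, n_points)
    return np.column_stack([np.interp(t_new, t_old, xyz[:, k]) for k in range(xyz.shape[1])])


def mean_trajectory(reaches, n_points=100):
    """Mean and population SD (ddof=0) across time-normalized reaches, each (n_points, 3)."""
    stacked = np.stack([resample_reach(r, n_points) for r in reaches])
    return stacked.mean(axis=0), stacked.std(axis=0)
```

Column 0 of the result corresponds to the forward (x) component, column 1 to the sideward (y) component, and column 2 to the upward (z) component. Each can be plotted separately, as in the reach_view projections.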

-

33.

The third notebook concludes the analysis and lets you generate figures of the main kinematic variables analyzed: reach trajectory, duration, velocity, reach endpoint coordinates, etc.

Figure 5.

Processing the data and labeling the reaches using the proposed data analysis tools

The figure shows the GUIs of the program snippets that the user runs in the processing pipeline (Notebook 2).

(A) Extracting individual reaches from the trajectory. (B) Viewing the videos of individual reaches and labeling the reach type. For instructions on how to use the snippets, read the annotated Notebook 2 in the GitHub repository.

Figure 6.

Visualizing the data using the proposed data analysis tools

The figure shows the GUIs of the program snippets that the user runs in the processing pipeline (Notebook 3). (A) Mean trajectory visualization. (B) Scalar parameter visualization tool. For instructions on how to use the snippets, read the annotated Notebook 3 in the GitHub repository.

Expected outcomes

In this protocol, we propose an open-source hardware-software solution for training mice to perform the reach-to-grasp task, acquiring behavioral video, and processing it to analyze fine motor skill kinematics. The device can be easily manufactured in the lab with readily available tools and materials. The only expensive component is the high-speed camera used for behavioral acquisition. The behavioral apparatus can be used for multiple protocols depending on the study goals and is not limited to the one described here.

We used a high-speed camera with tilted mirrors to capture the x, y, and z coordinates of the movement in a single video. This approach acquires data sufficient for 3D trajectory reconstruction with a single camera, without the need to synchronize multiple video streams. Front- and side-view monitoring of the movement proved the most accurate for tracking the paw and fingertips and was sufficient to reliably distinguish between movement outcomes. Analysis of the reconstructed 3D trajectory provides an accurate kinematic profile of the movement. This profile can be further dissected into directional components (forward, sideward, and upward; Figure 7C) and phases (reaching, pronation, grasping, retraction; Figures 5B–5D), depending on the goal of the study. We demonstrate manual and automatic clustering of the reaches into categories and the extraction of a number of kinematic parameters from every reach: endpoint coordinates, average and maximum velocity, acceleration and jerk, timing of maximum velocity and peak positions, and reach duration (Figures 7A and 7B).
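Several of the listed parameters can be derived from a reconstructed trajectory by numerical differentiation. The sketch below assumes positions in mm sampled at 100 Hz (the rate used in this protocol). It also defines the endpoint as the point of maximal forward (x) extension. These definitions and the function name are assumptions and may differ from those used to build the *_scalar.h5 data frame.

```python
import numpy as np


def reach_kinematics(xyz_mm, fps=100.0):
    """Scalar kinematic parameters of one reach from an (n_frames, 3) trajectory in mm."""
    xyz = np.asarray(xyz_mm, dtype=float)
    dt = 1.0 / fps
    vel = np.gradient(xyz, dt, axis=0)    # mm/s, per axis (central differences)
    acc = np.gradient(vel, dt, axis=0)    # mm/s^2
    jerk = np.gradient(acc, dt, axis=0)   # mm/s^3
    speed = np.linalg.norm(vel, axis=1)
    i_peak = int(np.argmax(speed))
    i_end = int(np.argmax(xyz[:, 0]))     # assumption: endpoint = maximal forward (x) extension
    return {
        "duration_s": (len(xyz) - 1) * dt,
        "mean_speed_mm_s": float(speed.mean()),
        "max_speed_mm_s": float(speed[i_peak]),
        "time_to_max_speed_s": i_peak * dt,
        "max_acceleration_mm_s2": float(np.linalg.norm(acc, axis=1).max()),
        "mean_jerk_mm_s3": float(np.linalg.norm(jerk, axis=1).mean()),
        "endpoint_xyz_mm": xyz[i_end].tolist(),
    }
```

Tracking noise is amplified with each derivative, so in practice the trajectory would likely need smoothing before acceleration and jerk are interpreted.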

Figure 7.

Exemplary data acquired with the system

(A) Total number of reaches of wild-type C57BL/6 mice (n = 6) over the 7 days of training (T1 to T7). Results are presented as mean ± SEM.

(B) Success rate of wild-type C57BL/6 mice (n = 6) over the 7 days of training (T1 to T7). The dotted line indicates the 30% threshold used in the literature to classify a mouse as a “learner.”

(C) Kinematic profile of the reach-to-grasp movement from a single animal, acquired on day 7 of training (T7) with the proposed data analysis pipeline. The upper left plot shows all reaches from one representative mouse in 3D. The other panels dissect the movement into three directional 2D components that can be analyzed separately: forward, sideward, and upward motion of the paw. Each plot shows individual reaches in gray and the averaged trajectory in red; the y-axis is expressed as distance to the pellet.

Aside from movement kinematics, which are analyzed offline from behavioral videos, the experimenter may use several standard learning metrics, such as success rate or the number of reaches in different categories, to characterize progress in the form of standard learning curves (Figures 6A and 6B).

Limitations

The current platform requires food-restricted animals to perform fine forepaw movements, which could be an issue for animal models with motor impairments (e.g., Parkinson’s disease). In addition, the success rate depends strongly on the training protocol and on precise timing of food delivery contingent on the animal’s actions. We found that an extensive handling procedure before training greatly improves the results but also significantly increases the time required. Finally, only one animal can be trained in the reaching box per session, so multiple units are needed for high-throughput training.

In our data acquisition and analysis pipeline, we used a camera sampling rate of 100 Hz. Together with the lower per-view resolution that results from splitting the video into multiple views, this may cause occasional blurring during the fastest parts of the movement, especially for the fingers. For studies focusing on individual digit control, a higher camera sampling rate (>500 frames/s) or multiple cameras will be needed to capture fine finger movements.1,12,13
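The effect of the sampling rate can be made concrete with the frame interval Δt = 1/f_s and the number of frames N ≈ T·f_s that sample a movement phase of duration T. The 100 ms phase duration below is a hypothetical value chosen only for illustration.

$$\Delta t = \frac{1}{f_s}: \qquad \Delta t_{100\,\mathrm{Hz}} = 10\ \mathrm{ms}, \qquad \Delta t_{500\,\mathrm{Hz}} = 2\ \mathrm{ms}$$

$$N_{\mathrm{frames}} \approx T\, f_s: \qquad T = 100\ \mathrm{ms} \;\Rightarrow\; N = 10 \text{ at } 100\ \mathrm{Hz}, \quad N = 50 \text{ at } 500\ \mathrm{Hz}$$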

Troubleshooting

Problem 1

Animal habituation and training, Related to Habituation.

Animal training often does not go as smoothly as described here or in the literature. Mice might lick the pellets off instead of reaching for them, might not go to the back of the box, or might stay there.

Potential solution

Various factors affect the success of the training protocol, from the training environment and habituation to the construction of the behavioral box and changes to the protocol. Some researchers would simply exclude such mice from the protocol. We instead chose to control the protocol manually and modify it based on the observed behaviors listed below. The simplest solutions control for training-environment variables:

-

•

The behavioral room should be quiet, with appropriate temperature and lighting.

-

•

Only one experimenter should be in the room, and the experimenter should not wear any perfume.

-

•

If a mouse jumps out of the experimenter’s hand during habituation, return it to the home cage and try again after habituating the rest of the mice.

Problem 2

Animal is not engaged in training, Related to Shaping.

Potential solution

Tips to engage animals in training.

-

•

On shaping day 1, if the mouse shows no interest in getting food (no licks, no reaches), you can try providing sugar pellets in the home cage together with regular chow at the end of the day, while maintaining the same total amount of food (e.g., 2 g of chow + 2 g of sugar pellets for a calculated daily ration of 4 g). Make sure to add an additional shaping day.

-

•

During the initial days of training, if the mouse starts biting the slit or performs more than 20 consecutive in-vain reaches, introduce a brief noise by gently scratching the top corner of the side wall. This distracts the mouse and moves it to the rear of the reaching box. We noticed that the mouse then comes forward again after hearing the rotating disk.

-

•

If the mouse stays in the back of the test box for more than 5 s, rotate the disk again. The sound of the rotating disk might encourage the mouse to come forward.

-

•

At the beginning of single-pellet training, if the mouse sniffs through the slit but does not reach, adding a small pile of pellets in the center of the disk encourages the mouse to reach.
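When reviewing a session offline, the two numeric rules above can be flagged from an annotated log. These rules are more than 20 consecutive in-vain reaches and more than 5 s at the back of the box. This sketch assumes a hypothetical log format: a list of per-reach labels using the categories from step 31c, and a list of (enter, leave) times in seconds for visits to the back of the box.

```python
def long_in_vain_runs(reach_labels, limit=20):
    """Return (first_index, length) of every run of more than `limit` consecutive "In vain" reaches."""
    runs, start = [], None
    for i, label in enumerate(list(reach_labels) + [None]):  # sentinel closes a trailing run
        if label == "In vain":
            if start is None:
                start = i
        elif start is not None:
            if i - start > limit:
                runs.append((start, i - start))
            start = None
    return runs


def long_back_visits(visits_s, limit_s=5.0):
    """Return the (enter, leave) intervals at the back of the box lasting longer than `limit_s`."""
    return [(enter, leave) for enter, leave in visits_s if leave - enter > limit_s]
```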

Problem 3

Animal is licking the pellets instead of reaching, Related to Shaping.

Potential solution

Tips to encourage reaching and prevent licking.

-

•

If the mouse attempts to lick the pellets off during the early days of training, move the disk slightly away from the slit. This keeps the pellet out of reach of the tongue but still within reach of the paw. If the mouse stops licking for a full session, move the disk closer to the slit in the next session. Move it 1–2 mm closer every 5 min, as long as the mouse keeps reaching, until you can place it at the standard 5 mm distance from the front wall.

-

•

If the mouse loses too much weight (i.e., <75% of baseline body weight), it tends to start licking, even after the shaping phase. If the previous solution does not work, we found it helpful to increase the food portion after the session and return to the shaping setup in the next session.
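The stepwise repositioning in the first tip can be planned as a nominal schedule. This sketch assumes the disk starts at some hypothetical distance and moves 1–2 mm closer every 5 min without overshooting the standard 5 mm. The experimenter should pause the schedule whenever the mouse stops reaching. The function name is hypothetical.

```python
def disk_schedule(start_mm, step_mm=1.0, target_mm=5.0, interval_min=5):
    """Nominal (minute, distance_mm) plan for moving the disk toward the slit.

    The disk moves `step_mm` closer every `interval_min` minutes and stops at the
    standard `target_mm` distance from the front wall without overshooting it.
    """
    if not 1.0 <= step_mm <= 2.0:
        raise ValueError("the protocol suggests steps of 1-2 mm")
    minute, distance = 0, start_mm
    plan = [(minute, distance)]
    while distance > target_mm:
        minute += interval_min
        distance = max(target_mm, distance - step_mm)
        plan.append((minute, distance))
    return plan


print(disk_schedule(9.0, step_mm=2.0))  # hypothetical starting distance of 9 mm
```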

Problem 4

Animal success rate is low, Related to Training.

Potential solution

Tips to increase success rate.

-

•

We strongly recommend that a single experimenter conduct the habituation, shaping, and training sessions. We noted that animals can stop or reduce reaching when a new experimenter becomes involved at any stage of the task. For instance, even well-trained (i.e., “expert”) mice could stop reaching when an additional experimenter assisted with the training.

-

•

Shaping and training sessions should be performed at the same time of day to reduce variability due to circadian rhythms.

-

•

Successful acquisition of this reaching skill requires daily training for at least 4 consecutive days after shaping is completed.

-

•

We used a rotating disk for pellet delivery. Some modifications may help increase the success rate of reaches, such as slightly increasing the depth of the wells, adjusting the height of the disk, and precisely positioning the food dispenser.

Resource availability

Lead contact

Further information and requests for resources and reagents should be directed to and will be fulfilled by the lead contact, Hong-Yuan Chu (Hongyuan.Chu@gmail.com).

Technical contact

Inquiries about technical aspects of the hardware and software of this system should be directed to D.B. (db1671@georgetown.edu), H.D.C. (hc960@georgetown.edu), and H.-Y.C. (Hongyuan.Chu@gmail.com).

Materials availability

This study did not generate new unique reagents. All the devices listed in this study can be either found in the key resources table or manufactured in the lab.

Data and code availability

The datasets and code used during this study are available at https://github.com/BerezhnoyD/Reaching_Task_VAI, https://doi.org/10.5281/zenodo.7383917.

Acknowledgments

The authors thank the Van Andel Institute Maintenance Department for the assistance in customizing the mouse reach training box and the Van Andel Institute Research Operation team for 3D printer and supplies. This research was funded in whole or in part by Aligning Science Across Parkinson’s (ASAP-020572) through the Michael J. Fox Foundation for Parkinson’s Research (MJFF). This work was partially supported by National Institute of Neurological Disorders and Stroke grant R01NS121371 (H.-Y.C.). For the purpose of open access, the authors have applied a CC BY public copyright license to all author accepted manuscripts arising from this submission.

Author contributions

D.B.: methodology, software, visualization, writing – original draft, editing. H.D.C.: methodology validation, investigation, visualization, writing – original draft, editing. H.-Y.C.: funding acquisition, supervision, conceptualization, resources, visualization, writing – review and editing.

Declaration of interests

The authors declare no competing interests.

References

- 1. Guo J.-Z., Graves A.R., Guo W.W., Zheng J., Lee A., Rodríguez-González J., Li N., Macklin J.J., Phillips J.W., Mensh B.D., et al. Cortex commands the performance of skilled movement. Elife. 2015;4. doi: 10.7554/elife.10774.

- 2. Xu T., Yu X., Perlik A.J., Tobin W.F., Zweig J.A., Tennant K., Jones T., Zuo Y. Rapid formation and selective stabilization of synapses for enduring motor memories. Nature. 2009;462:915–919. doi: 10.1038/nature08389.

- 3. Guo L., Xiong H., Kim J.-I., Wu Y.-W., Lalchandani R.R., Cui Y., Shu Y., Xu T., Ding J.B. Dynamic rewiring of neural circuits in the motor cortex in mouse models of Parkinson's disease. Nat. Neurosci. 2015;18:1299–1309. doi: 10.1038/nn.4082.

- 4. Miklyaeva E.I., Castañeda E., Whishaw I.Q. Skilled reaching deficits in unilateral dopamine-depleted rats: impairments in movement and posture and compensatory adjustments. J. Neurosci. 1994;14:7148–7158. doi: 10.1523/jneurosci.14-11-07148.1994.

- 5. Bova A., Gaidica M., Hurst A., Iwai Y., Hunter J., Leventhal D.K. Precisely-timed dopamine signals establish distinct kinematic representations of skilled movements. Elife. 2020;9. doi: 10.7554/elife.61591.

- 6. Aeed F., Cermak N., Schiller J., Schiller Y. Intrinsic Disruption of the M1 Cortical Network in a Mouse Model of Parkinson's Disease. Mov. Disord. 2021;36:1565–1577. doi: 10.1002/mds.28538.

- 7. Calame D.J., Becker M.I., Person A.L. Cerebellar associative learning underlies skilled reach adaptation. Nat. Neurosci. 2023;26:1068–1079. doi: 10.1038/s41593-023-01347-y.

- 8. Whishaw I.Q. Loss of the innate cortical engram for action patterns used in skilled reaching and the development of behavioral compensation following motor cortex lesions in the rat. Neuropharmacology. 2000;39:788–805. doi: 10.1016/s0028-3908(99)00259-2.

- 9. Whishaw I.Q., Pellis S.M. The structure of skilled forelimb reaching in the rat: a proximally driven movement with a single distal rotatory component. Behav. Brain Res. 1990;41:49–59. doi: 10.1016/0166-4328(90)90053-h.

- 10. Azim E., Jiang J., Alstermark B., Jessell T.M. Skilled reaching relies on a V2a propriospinal internal copy circuit. Nature. 2014;508:357–363. doi: 10.1038/nature13021.

- 11. Chen C.C., Gilmore A., Zuo Y. Study motor skill learning by single-pellet reaching tasks in mice. J. Vis. Exp. 2014;85. doi: 10.3791/51238.

- 12. Becker M.I., Person A.L. Cerebellar Control of Reach Kinematics for Endpoint Precision. Neuron. 2019;103:335–348.e5. doi: 10.1016/j.neuron.2019.05.007.

- 13. Lopez-Huerta V.G., Denton J.A., Nakano Y., Jaidar O., Garcia-Munoz M., Arbuthnott G.W. Striatal bilateral control of skilled forelimb movement. Cell Rep. 2021;34. doi: 10.1016/j.celrep.2020.108651.
