Abstract

Protein function prediction is critical for understanding the cellular physiological and biochemical processes, and it opens up new possibilities for advancements in fields such as disease research and drug discovery. During the past decades, with the exponential growth of protein sequence data, many computational methods for predicting protein function have been proposed. Therefore, a systematic review and comparison of these methods are necessary. In this study, we divide these methods into four different categories, including sequence-based methods, 3D structure-based methods, PPI network-based methods and hybrid information-based methods. Furthermore, their advantages and disadvantages are discussed, and then their performance is comprehensively evaluated and compared. Finally, we discuss the challenges and opportunities present in this field.

Keywords: protein function prediction, sequence-based methods, 3D structure-based methods, PPI network-based methods and hybrid information-based methods

Introduction

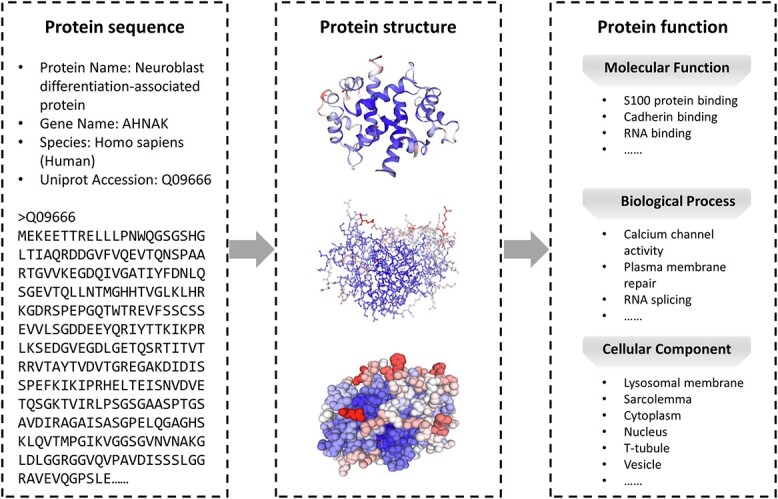

Proteins are vital constituents of living systems, playing diverse biological roles such as catalyzing chemical reactions, participating in signal transduction and maintaining cellular structure. Proteins must undergo multiple cellular steps to fulfill their functions and engage in cellular activities [1, 2]. The amino acid residues in a protein sequence undergo spatial transformation, leading to the formation of a three-dimensional structure. This is followed by the establishment of protein–protein interaction (PPI) networks through non-covalent forces [3], regulating both structure and function. Subsequently, PPI networks assemble into protein complexes [4, 5], which serve as molecular machines for realizing protein functions and executing life activities. It is noteworthy that not all proteins require complex formation and some can independently execute their functions. In protein research, the paradigm of ‘sequence-structure-function’ is followed. Currently, protein function is standardized by the Gene Ontology (GO) [6, 7], which encompasses three aspects: biological process ontology (BPO), molecular function ontology (MFO) and cellular component ontology (CCO), as shown in Figure 1.

Figure 1.

A schematic view of the ‘sequence-structure-function’ paradigm. Sequence refers to the arrangement of amino acids in a protein. Structure refers to the three-dimensional arrangement of a protein’s atoms. Function refers to the particular role a protein plays within an organism [8–10]. Generally, a protein sequence determines its structure, which, in turn, determines its function.

Exploring protein function prediction holds profound significance in comprehending both intracellular and extracellular biological processes and uncovering the correlation between protein diversity and evolution. Additionally, protein function prediction plays a crucial role in elucidating gene functions, comprehending gene regulatory networks and guiding the functional annotation of uncharacterized proteins [11, 12]. Accurate prediction of protein function supports drug discovery and development by facilitating the creation of drugs targeting specific proteins, thereby enhancing treatment efficacy [12]. In personalized medicine [13], understanding the individual variations in protein function is essential for evaluating disease risk and devising individualized treatment strategies. In summary, protein function prediction serves as a crucial tool driving the progress of life science and medicine, thus contributing significantly to human health and sustainable development.

Traditional biological experiments exhibit high accuracy in determining protein function. However, conducting these experiments involves costly equipment and materials, intricate implementation steps, as well as substantial time and labor expenses [14]. Moreover, biological experiments require operations within living cells, and achieving accurate results requires multiple repetitions and analyses. Due to the limitations of traditional biological experiments, computational methods employing machine learning and deep learning techniques are widely utilized and exhibit robust performance [15–17]. In contrast to traditional experiments, computational methods demonstrate superior scalability and the ability to concurrently manage large-scale protein sample data, thereby enhancing research efficiency. Furthermore, computational methods can reduce research costs and decrease reliance on costly experimental equipment, as well as minimize time and labor requirements.

The Critical Assessment of Function Annotations (CAFA) Challenge [18] is a challenging competition in the field of bioinformatics designed to assess and advance the development of methods for protein function prediction. The challenge is organized by the international bioinformatics research community and attracts researchers from all over the world. Briefly, CAFA organizers provide a large number of protein sequences and participants are required to predict the function of these proteins using their computational methods, and these predictions are compared with the actual annotations to assess the accuracy of the computational methods. The CAFA challenge provides a platform for researchers to evaluate and compare their computational methods for predicting protein function. In addition, CAFA challenge has become an important competition in the field of bioinformatics, where researchers can learn about the advantages and disadvantages of various current function prediction algorithms and promote the development of protein function prediction methods [18–20].

Several review papers [21–23] have been proposed, summarizing existing computational methods. However, an updated and more comprehensive review is highly required for the following reasons: (i) Outdated classification: Sleator et al. [21] only classified protein function prediction methods as sequence-based and structure-based, while ignoring other bioinformatic data, such as PPI networks and Interpro. (ii) Lack of comprehensiveness: Shehu et al. [22] focuses on different categories based on the type of data used but lacks a discussion of the techniques employed for each category of method. (iii) Lack of comparison: xisting reviews categorize protein function prediction methods and describe the analyses, but they fall short in providing a comparative analysis of performance results computed on the same benchmark dataset.

In this review, we provide a comprehensive and up-to-date overview of existing computational methods for protein function prediction, focusing primarily on methods proposed in recent years. Initially, we introduce the databases used for predicting protein function. Subsequently, we propose a new categorization method to classify computational methods into four categories. Following that, we conduct a fair evaluation of the performance of each category of computational methods using the same benchmark dataset. Finally, we discuss future directions and challenges, exploring opportunities in this research area.

Databases

In recent decades, owing to the development of this important filed, several databases have been constructed, including Uniprot [24], GO [25], GOA [26], STRING [27], InterPro [28], Research Collaboratory for Structural Bioinformatics Protein Data Bank (RCSB PDB) [29] and AlphaFold [30]. A summary of these protein databases is shown in Table 1.

Table 1.

The summary of protein databases.

| Database | Version |

Description

|

Website |

|---|---|---|---|

| Uniprot [24] | January 2024 | Swiss-Prot(570,830) TrEMBL(249,751,891) |

https://www.uniprot.org/ |

| GO [25] | 17 January 2024 | Biological Process(2,833,885) Molecular Function(2,459,729) |

|

| Cellular Component(2,362,323) | https://geneontology.org/ | ||

| GOA [26] | v219 | Electronic GO Annotations(1,372,042,474) Manual GO Annotations(5,916,798) |

https://www.ebi.ac.uk/GOA/ |

| STRING [27] | v12.0 | Proteins(59,309,604) Organisms(12,535) |

https://string-db.org/ |

| InterPro [28] | v98.0 | Homologous Superfamily(3,446) Family(21,942) Domain(14,053) Repeat(374) Site(953) |

http://www.ebi.ac.uk/interpro/ |

| RCSB PDB [29] | 19 March 2024 | PDB Structures(217,387) Computed Structure Models(1,068,577) |

https://www.rcsb.org/ |

| AlphaFold [30] | v2.0 | Organisms(48) Predicted Structures(564,446) |

https://alphafold.ebi.ac.uk/ |

The protein database contains various types of data along with their respective quantities.

The protein database contains various types of data along with their respective quantities.

UniProt [24] is a widely utilized protein database, comprising various significant sections, including UniProtKB, UniRef and UniParc. UniProt release 2024_01 comprises over 250 million sequence records. The manually curated and reviewed entries section of UniProt is known as UniProtKB/Swiss-Prot, containing approximately 570 000 sequences. The unreviewed section is known as UniProtKB/TrEMBL and contains about 249 million sequences. Additionally, UniRef [31, 32] provides protein sequence clusters at three identity levels: 100, 90 and 50%. UniParc [33] provides a set of all known protein sequences, which stores all new and updated protein sequences from a variety of sources for comprehensive coverage. To avoid redundancy, each unique protein sequence is stored only once using a stable protein identifier [33, 34].

The GO [25] is a comprehensive resource for gene function information, where functional features used to describe gene function are classified into three categories: biological process (BP), molecular function (MF) and cellular component (CC). GO release 2024_01_17 comprises over 7 million annotations, 1 million gene products and 5000 species. Additionally, GO is available in three different versions on the download page(https://geneontology.org/docs/download-ontology/), including ‘go-basic’, ‘go’ and ‘go-plus’.

The Gene Ontology Annotation (GOA) [26] provides high-quality GO annotations for proteins in the UniProt Knowledgebase (UniProtKB). GOA employs two distinct GO annotation methods: electronic and manual [35]. Currently, the total count of GOA annotations exceeds 1.377 billion, with approximately 1.372 billion electronic GO annotations and only about 5.9 million manual GO annotations. Specifically, over 99 percent of GO annotations in the GOA database are made through electronic annotation methods.

STRING [27] is a database of known and predicted PPIs that integrates interaction data from five major sources, including genomic context predictions, high-throughput lab experiments, conserved-expression, automated textmining and previous knowledge in databases. STRING provides interaction scores between proteins, reflecting the strength of their connections in biological networks. The STRING database release 12.0 covers about 6 million proteins from over 10 000 organisms.

InterPro [28] is a comprehensive database that integrates 13 member databases: CATH-Gene3D [36], the Conserved Domains Database [37], HAMAP [38], PANTHER [39], Pfam [40], PIRSF [41], PRINTS [42], PROSITE Patterns [43], PROSITE Profiles [43], SMART [44], the Structure–Function Linkage Database [45], SUPERFAMILY [46] and TIGRFAMs [47]. Moreover, each member database contains focused characterization data. For example, the Pfam database primarily gathers, categorizes and annotates information related to protein domains.

The RCSB PDB [29] is a specialized database for storing information about the 3D structures of biological macromolecules. Currently, the RCSB.org portal provides over 200 000 experimentally determined PDB structures, with more than 120 000 eukaryotic protein structures and over half originating from humans. Additionally, approximately 1 million computed structure models (CSM) from AlphaFold DataBase [48] and ModelArchive are available.

AlphaFold [30] is a high-precision protein structure prediction database co-developed by DeepMind and EMBL-EBI, and in release v2.0, it contains more than 200 million entries that including the proteomes of 48 key organisms, providing broad coverage of UniProt. For protein structures not included in the database, structure prediction can be performed using the source code (https://github.com/google-deepmind/alphafold/).

In addition to the above databases that are currently widely used for protein function prediction studies, there are a number of other very important thermodynamic databases. For example, PINT [49], ProNIT [50], ProCaff [51] and PLD [52], these thermodynamic databases provide a wealth of thermodynamic information on protein interactions, which is an important reference value for understanding protein functions and interaction mechanisms.

Methods

Over the past decades, numerous computational methods have been proposed to predict protein function. These methods can be categorized into four groups based on the type of information they utilize: sequence-based methods, 3D structure-based methods, PPI network-based methods and hybrid information-based methods. It’s important to note that these categories are not strictly different, and there is overlap and correlation between them. For example, some computational methods based on structure or PPI information also use sequence information to predict protein function.

Sequence-based methods

Sequence-based methods focus on the protein sequence to predict protein function by extracting potential features related to function from it. These methods delve deeper into the information contained in the amino acid sequences and attempt to capture subtle features related to protein function that are embedded within them. The flowchart of sequence-based methods is shown in Figure 2.

Figure 2.

The flowchart of sequence-based methods. First, features are extracted from the sequence by different methods. Second, the extracted features are fed into some algorithm. Finally, the function of unknown proteins is predicted by the trained model, and the probability of their belonging to a specific GO term is calculated. However, BLAST tool searches the database for sequences similar to the target protein, eliminating the need to extract features from the sequence.

BLAST [53] is a widely employed tool for searching databases to find sequences similar to the target protein, thereby inferring potential functions of the target protein. However, relying solely on sequence alignment tools to predict protein function lacks high accuracy. Therefore, many methods have begun incorporating neural networks. In order to select the best protein feature representation, DEEPred [54] conducted separate deep neural network model training experiments using three different feature types, including subsequence profile map (SPMap) [55], pseudo amino acid composition (PAAC) [56] and conjoint triad [57]. The results show that the prediction performance with SPMap feature was the best. Consequently, DEEPred utilizes the SPMap features which extracted from the sequences and employs them as input for the multi-task feed-forward deep neural networks model to predict protein function. Combined with the prediction results from convolutional neural networks, DeepGOPlus [58] improves prediction accuracy by searching similar proteins with known functions. Compared to the previous model DeepGO [59], DeepGOPlus removes PPI features yet improves the prediction performance. In addition, it overcomes the sequence length limitation and replaces the amino acid trigram embedding layer with one-hot encoding. Drawing inspiration from the idea that proteins can be represented as a language, HiFun [60] embeds protein sequences using the BLOSUM62 matrix [61] and the FastText embedding. Vectors based on the BLOSUM62 matrix are fed into the convolutional layer to extract latent features, while matrices based on the FastText embedding are fed into an architecture consisting of convolutional neural network (CNN) and bidirectional long and short-term memory with self-attentive architecture (BiLSTM) [62]. The feature vectors extracted from the CNN are passed to the BiLSTM two hidden layers, which utilise the preceding and succeeding contextual information to enhance the feature representation [63]. In addition, HiFun performs the self-attention mechanism on the output of the BiLSTM layer to estimate the importance of amino acids.

These methods utilizes protein sequences solely for protein function prediction and plays a important role in discovering functions of new proteins. Several research experiments [64] have demonstrated that methods relying on protein sequences can significantly improve the prediction of MFO in functional categories. However, many proteins are similar in function but not in sequence, potentially leading to poorer predictive performance for proteins with low sequence similarity.

3D structure-based methods

3D structure-based methods focus on protein structure features. Protein structures are often translated into contact maps from which features closely related to function are captured. It’s important to note that protein sequence is frequently used as one of the input features in these methods. For proteins whose function is predominantly influenced by structure features, 3D structure-based methods have more pronounced advantages. The flowchart of 3D structure-based methods is shown in Figure 3.

Figure 3.

The flowchart of 3D structure-based methods. First, the feature extraction methods can be categorized into three types. (i) Extracting sequence embedding from sequences. (ii) Transforming structures into contact maps [65] and obtaining residue-level features. Second, the extracted features are fed into some algorithm. Finally, the function of unknown proteins is predicted by the trained model, and the probability of their belonging to a specific GO term is calculated.

DeepFRI [66] utilizes graph convolutional networks (GCN) [67] as its core technology. Firstly, it uses sequences from the protein family database (Pfam) [40] to pre-train the language model with long and short-term memory (LSTM-LM) [68] to extract residue-level features of the sequences. Secondly, it transforms protein structures into contact maps. Both are used as inputs to the GCN. The features learned by LSTM-LM from sequences and learned by GCN from contact maps significantly improve the performances of protein function prediction. Additionally, this method can also predict protein structure. In contrast to DeepFRI, GAT-GO [69] uses graph attention networks (GAT) [70] instead of traditional GCN. Each GAT layer is followed by a topological pooling layer [71] to perform topology-aware downsampling and a global pooling layer at the end. In addition, GAT-GO uses inter-residue contacts predicted by RaptorX [72] instead of the native contact maps used by DeepFRI. GAT-GO integrates predicted residue contact maps and CNN-generated sequence representations as GAT inputs, and combines GAT-generated representations with protein-level sequence embeddings to predict functional annotations. HEAL [73] is also compared to DeepFRI. The authors first trained the model HEAL-PDB using proteins from the Protein Data Bank (PDB) [74], which showed comparable performance to DeepFRI. The performance of HEAL was then significantly improved by incorporating protein structures predicted by AlphaFold2 [30]. HEAL first constructs graphs for each protein based on sequence node features and contact maps, which are fed into an architecture consisting of a message passing neural network and a hierarchical graph transformer. Then, supervised and comparative learning [75] are used to optimise the network. Struct2GO [76] takes both sequence and structure information as inputs. It utilises the SeqVec [77] model to extract sequence features and the hierarchical graph pooling [78] model based on the self-attention mechanism to extract features from structures predicted by AlphaFold2 [30]. To maximize the utilization of structure information, Struct2GO employs the Node2vec [79] algorithm to generate amino acid level embeddings in the protein structure network. These embeddings are used as the initial node features for the graph pooling model. Compared with the experimentally determined protein structures, AlphaFold2 [30] provides abundant high-resolution structure information, significantly improving model prediction accuracy.

These methods integrate protein structure alongside the protein sequence to substantially improve the accuracy of protein function prediction. For proteins with high similarity, it is difficult to distinguish minor functional differences using only sequence features, and combining structure features can help overcome this problem. However, the drawback of such methods is that they focus on protein structure features and ignore interactions between proteins.

PPI network-based methods

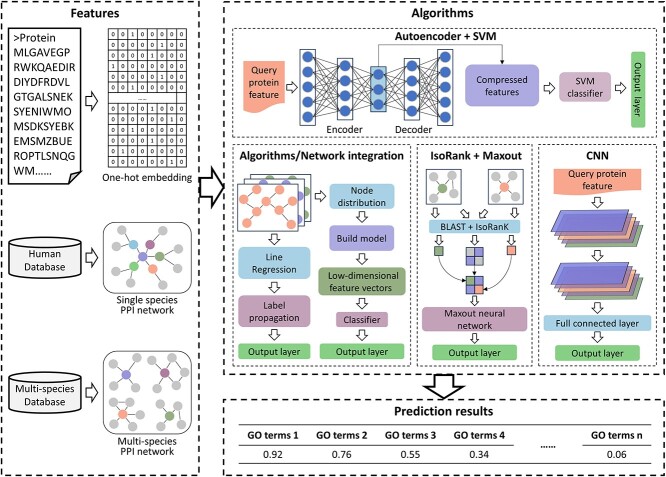

PPI network-based methods focus on PPI networks. Similar to 3D structure-based methods, protein sequence can also be utilized as one of the input features in these methods. The flowchart of PPI network-based methods is shown in Figure 4.

Figure 4.

The flowchart of PPI network-based methods. First, the feature extraction methods can be categorized into three types. (i) Extracting sequence embedding from sequences. (ii) Obtaining single-species or multi-species PPI networks from the STRING database. Second, the extracted features are fed into some algorithm. Finally, the function of unknown proteins is predicted by the trained model, and the probability of their belonging to a specific GO term is calculated.

GeneMANIA [80] is a network integration algorithm that combines linear regression algorithm, which constructs composite functional association networks from multiple protein data sources, and label propagation algorithm, which predicts functional annotations for these networks. It utilizes a distinct optimization strategy compared to other functional association network methods [81, 82], emphasizing the adjustment of network weights and discriminant values. Moreover, GeneMANIA integrates an improved Gaussian field label propagation algorithm [83] to improve functional prediction accuracy. Similar to the GeneMANIA algorithm, Mashup [84] is a network integration framework that utilizes network diffusion to obtain the distribution of each node and captures correlation information with other nodes. Then, the topological information of each node is represented as low-dimensional spatial vectors, and finally these vector representations are used as inputs for functional prediction. Mashup improves prediction accuracy for two main reasons: one is to analyze the topology of each network individually, and the other is to convert topology information into a compact low-dimensional representation. With the wide application of deep learning techniques, a network fusion method named deepNF [85] has been proposed, which is constructed based on multimodal deep autoencoder (MDA). Autoencoder is a special type of neural network that consists of an encoding part and a decoding part. It has been shown to effectively remove noise [86]. deepNF converts heterogeneous networks into vector representations by constructing positive pointwise mutual information (PPMI) matrices that capture network information using random walk with restarts (RWR) method. Subsequently, it extracts compact low-dimensional features from the networks represented by the PPMI matrices via the MDA middle layer and trains SVM classifiers with the features. NetQuilt [87] uses the IsoRank [88] algorithm to calculate similarity scores between proteins of the same and different species separately, and then constructs the IsoRank similarity matrices. Subsequently, it combines the IsoRank matrices of all species into a new matrix that is used as input to the neural network with a maxout activation function [89]. The above methods integrate multiple types of single-species PPI networks into a single kernel or compact low-dimensional representation, while NetQuilt integrates the homology information of multiple-species and the global alignment of PPI networks into a meta-network profile. Based on the IsoRank algorithm, NetQuilt significantly outperforms methods based on single-species PPI networks by integrating similarity scores from multiple-species networks. DeepGO [59], a previous version of DeepGOPlus, uses multi-layer neural networks to learn features for protein function prediction from protein sequences and PPI networks. Because only a small number of proteins have such network information, DeepGOPlus removes the PPI network information and instead improves prediction performance by integrating a sequence similarity-based method.

These methods primarily relies on PPI networks. It has been demonstrated that proteins with interactions in PPI networks often exhibit similar functions [90], making it feasible to predict protein functions using PPI networks. However, predicting protein function solely based on PPI networks has limitations, as the data generated by high-throughput techniques may contain noise [91], potentially affecting result accuracy.

Hybrid information-based methods

Hybrid information-based methods emphasize the combination of various information types, such as integration of protein sequences, protein structures, PPI networks or InterPro features and other integration methods. The CAFA challenge [18] has shown that the effective integration of diverse information types can genuinely improve the accuracy of protein function prediction [18–20]. Currently, hybrid information stands out as a major trend in the development of this field. The flowchart of hybrid information-based methods is shown in Figure 5.

Figure 5.

The flowchart of hybrid information-based methods. First, features can be extracted from four different types of information. (i) In sequence, extracting sequence embedding or residue-level vectors. (ii) In 3D structure, transforming structures into contact maps and obtaining residue-level features. (iii) In PPI networks, obtaining PPI networks from the STRING database. (iv)In InterPro features, obtaining features from the InterPro database. (v) Others, constructing GO term graphs, constructing homology networks based on sequences or query the literature for proteins and use them as textual features. Second, the extracted features are fed into some algorithm. Finally, the function of unknown proteins is predicted by the trained model, and the probability of their belonging to a specific GO term is calculated.

Integration of sequences, structures, PPI or InterPro

GOLabeler [92] uses the learning to rank (LTR) [93] framework to integrate five types of information, including GO term frequencies, sequence similarity, amino acid triad frequencies, ProFET features [94] and InterPro features [95]. The candidate GO terms are then ranked, and a ranked list of GO terms as the final output. The advantage of GOLabeler is the use of the LTR framework, making it possible to integrate any classifier into the framework as a new component. NetGO [96] maintains GOLabeler’s LTR [93] framework and introduces a new component, Net-KNN, which further improves prediction performance by using STRING’s extensive network information. The basic idea of Net-KNN is similar to one of the components in GOLabeler, BLAST-KNN, with the difference being that Net-KNN uses the association scores in the network instead of sequence similarity as in BLAST-KNN. Besides LTR framework, neural network models are also commonly used. SDN2GO [97] employs an integrated deep learning model for protein function prediction. It utilizes three distinct neural network modules to extract features from protein sequences, protein domains and PPI networks, respectively. Subsequently, these features are integrated into a weighted classifier, comprising a three-layer non-fully-connected network that learns features from the three different sources to optimize the weights. Additionally, SDN2GO builds a sub-model SN2GO, in which features from protein domains are excluded. The experiment results show that incorporating protein domain information can significantly improve prediction performance, especially in aspects of BPO. Graph2GO [64] is a multimodal graph-based model that builds two graphs for PPI networks and sequence similarity networks, respectively. Attributes such as protein sequences, subcellular locations and protein domains serve as nodes in the two graphs. Subsequently, potential features are learned from the nodes in the graphs using the variational graph auto-encoder [98]. This architecture learns vector representations from both the network structure and node attributes, which can be applied not only to predict protein function but also to other tasks involving biological networks, such as predicting interactions between proteins and predicting protein complexes. DeepGraphGO [99] is a multi-species graph neural network-based method. It first utilizes the InterProScan [100] tool to generate InterPro feature vectors for proteins and combines PPI network to use the InterPro feature vectors as node features in the network. Higher-order information in the network is then captured by multiple graph convolutional layers. Additionally, the advantage of DeepGraphGO is that it employs a multi-species strategy that utilizing protein data from all species to train a single model, which not only saves time and computational resources, but also improves the generalization of the model. CFAGO [101] cross-fuse PPI networks and protein attributes in two stages based on multi-head attention mechanism [102]. The first step is pre-training, where an autoencoder is employed to learn hidden features within the PPI network and protein attributes. As mentioned previously, the autoencoder have the ability to ignore noise in the data. The second step is fine-tuning, wherein the pre-trained encoder is combined with a two-layer fully connected neural network to predict protein function. Additionally, ablation experiments indicate that pre-training contributes more to performance enhancement than the attention mechanism does. Recently, it has been shown that the protein structure information predicted by AlphaFold2 [30] significantly improves the accuracy of function prediction. PredGO [103] consists of three important modules: using the pre-trained protein language model ESM-1b [104] to extract protein sequence features, employing a graph neural network with geometric vector perceptron [105] to extract features from the protein structure predicted by AlphaFold2 [30], and utilizing a protein fusion layer based on the multi-head attention mechanism to fuse sequence features and PPI features, generating fusion features. Then, the fusion features and structure features are concatenated for functional prediction. Additionally, the ablation experiments demonstrate that structure information improves performance in aspects of MFO and CCO, while PPI information improves performance in aspects of BPO. Combining sequence, structure and PPI information, enables a more comprehensive improvement in prediction performance.

Other integration methods

Besides the information utilized by the previously described methods, various additional information has been used to predict protein function. Compared to the previous version of NetGO [96], NetGO 2.0 [106] integrates protein literature information from SwissProt [107] (LR-Text) and sequence information extracted by recurrent neural network (Seq-RNN) into the LTR [93] framework. As a component of NetGO, LR-ProFET, had minimal impact on overall performance and was removed in NetGO 2.0, but the overall performance was improved. In contrast to the previously discussed methods, the following methods use GO term information. PANDA2 [108] employs graph neural networks to model the GO-directed acyclic graph [109] and integrates them with sequence features generated based on protein language model. Additionally, PANDA2 utilizes sequence alignment features based on PSI-BLAST, DIAMOND and Priority Score, as well as PAAC sequence attribute features. Moreover, the node attributes are iteratively updated in each network block of PANDA2 and knowledge can be learned from neighborhoods or receptive fields by stacking three network blocks. SPROF-GO [110] utilizes DIAMOND [111] to construct sequence homology networks instead of PPI networks. Initially, it extracts sequence embeddings from a pre-trained protein language model ProtT5 [112] and feeds them into two multilayer perceptrons (MLP) to learn attention scores and hidden embedding matrices. Subsequently, the hidden embeddings are weighted averaged based on the attention scores, which are then input into the output MLP to get the initial prediction. Additionally, SPROF-GO applies a hierarchical learning strategy. During the testing phase, a homology network is constructed based on the training set, and both the initial prediction and the homology network are input into the mark-diffusion algorithm to obtain the final prediction. PFresGO [113] encodes proteins as sequence embeddings using pre-trained protein language model ProtT5 [112]. Subsequently, it generates residue-level embeddings by combining one-hot sequence embeddings with sequence embeddings reduced in dimensionality by autoencoder. Additionally, PFresGO utilizes Anc2vec [114] to construct GO term embeddings based on the self-attention mechanism. These two types of embeddings are fused to calculate the probability of GO term based on the multi-head attention mechanism. The results indicate that even without using information beyond the sequence, the hierarchical structure of the GO graph can effectively improve prediction performance. Another method that utilize GO term information is HNetGO [115], which employs the DIAMOND [111] tool to calculate sequence similarity for building similarity networks. It integrates sequence features extracted based on pre-trained protein language model SeqVec [77], GO term relevance information and PPI networks to build heterogeneous networks. The networks are utilized to extract node features by using GNN model based on attention mechanism for predicting protein functions. Additionally, HNetGO innovatively constructs the heterogeneous information networks, which effectively integrate sequence and PPI network information, enabling the modelling of complex relationships between proteins and GO terms.

Through effectively fusing information from multiple sources, these methods can capture the diversity features of protein from multiple aspects, thereby improving the performance of functional prediction. Additionally, these methods can partially alleviate the noise effect inherent in information from a single data source. However, fusing information from different data sources usually necessitates more sophisticated algorithms or techniques to extract feature, particularly on extensive datasets, which may lead to increased consumption of computational resources and time. Furthermore, some proteins may lack source information of certain features, resulting in a lack of comprehensive fusion information for these proteins. Therefore, correctly handling missing data is a major challenge for such methods.

Web servers and stand-alone tools

Because of the importance of protein function prediction, some web servers and stand-alone tools have been established, which are listed in Table 2. By using these servers or tools, we can easily reproduce these methods and make new predictions. From this table, we can clearly see the information on which these methods are based and the algorithms used. These have an impact on their performance results.

Table 2.

The summary of web servers and stand-alone tools for protein function prediction.

Category

|

Method | Year | Website | Information | Algorithm |

|---|---|---|---|---|---|

| S | BLAST [53] | 1990 | https://blast.ncbi.nlm.nih.gov/Blast.cgi | Sequence | Sequence similarity search |

| DEEPred [54] | 2019 | https://github.com/cansyl/DEEPred | Sequence | Multi-task feed-forward deep neural network | |

| DeepGOPlus [58] | 2020 | http://deepgoplus.bio2vec.net/ | Sequence | Convolutional neural network and Sequence similarity search | |

| HiFun [60] | 2023 | http://www.unimd.org/HiFun | Sequence | Convolutional neural network and Bidirectional long short-term memory with self-attention mechanism | |

| D | DeepFRI [66] | 2021 | https://beta.deepfri.flatironinstitute.org | Sequence and Structure | Protein language model and Graph convolutional network |

| GATGO [69] | 2022 | ∖ | Sequence and Structure | Protein language model and Graph attention network | |

| HEAL [73] | 2023 | https://github.com/ZhonghuiGu/HEAL | Sequence and Structure | Protein language model, Message passing neural network and Hierarchical graph transformer | |

| Struct2GO [76] | 2023 | https://github.com/lyjps/Struct2GO | Sequence and Structure | Protein language model, Graph convolution and Attention-based graph pooling mechanism | |

| P | GeneMANIA [80] | 2008 | ∖ | PPI | Linear regression and Label propagation algorithms |

| Mashup [84] | 2016 | https://mashup.csail.mit.edu/ | PPI | Network integration | |

| deepNF [85] | 2018 | https://github.com/VGligorijevic/deepNF | PPI | Autoencoder and Support vector machine | |

| DeepGO [59] | 2018 | http://deepgo.bio2vec.net/ | Sequence and PPI | Convolutional neural network | |

| NetQuilt [87] | 2021 | https://github.com/nowittynamesleft/NetQuilt | PPI | IsoRank algorithm and Maxout neural network | |

| H1 | GOLabeler [92] | 2018 | ∖ | Sequence and InterPro | Logistic regression and Learning to rank |

| NetGO [96] | 2019 | ∖ | Sequence, PPI and InterPro | Logistic regression and Learning to rank | |

| SDN2GO [97] | 2020 | https://github.com/Charrick/SDN2GO | Sequence, PPI and InterPro | Convolutional neural network | |

| Graph2GO [64] | 2020 | https://integrativeomics.shinyapps.io/graph2go/ | Sequence, PPI and InterPro | Autoencoder and Graph convolutional network | |

| DeepGraphGO [99] | 2021 | https://github.com/yourh/DeepGraphGO | PPI and InterPro | Graph convolutional network | |

| CFAGO [101] | 2023 | http://bliulab.net/CFAGO/ | PPI and InterPro | Autoencoder, Pre-training and Multi-head attention mechanism | |

| PredGO [103] | 2023 | http://predgo.denglab.org/ | Sequence, PPI and Structure | Protein language model, Geometric vector perceptron and Multi-head attention mechanism | |

| H2 | NetGO 2.0 [106] | 2021 | ∖ | Sequence, PPI, InterPro and Literature | Logistic regression and Learning to rank |

| PANDA2 [108] | 2022 | http://dna.cs.miami.edu/PANDA2/ | Sequence and GO term | Protein language model and Graph convolutional network | |

| SPROF-GO [110] | 2023 | http://bio-web1.nscc-gz.cn/app/sprof-go | Sequence and Homology network | Protein language model, Self-attention mechanisms and Label diffusion algorithm | |

| PFresGO [113] | 2023 | https://github.com/BioColLab/PFresGO | Sequence and GO term | Protein language model, Autoencoder and Multi-head attention mechanism | |

| HNetGO [115] | 2023 | https://github.com/BIOGOHITSZ/HNetGO | PPI, Homology network and GO term | Protein language model, Graph neural network and Multi-head attention mechanism |

‘S’ represents sequence-based methods, ‘D’ represents 3D structure-based methods, ‘P’ represents PPI network-based methods and ‘H’ represents hybrid information-based methods. ‘H1’ represents integration of sequences, structures, PPI or InterPro. ‘H2’ represents other integration methods.

‘S’ represents sequence-based methods, ‘D’ represents 3D structure-based methods, ‘P’ represents PPI network-based methods and ‘H’ represents hybrid information-based methods. ‘H1’ represents integration of sequences, structures, PPI or InterPro. ‘H2’ represents other integration methods.

We have systematized existing papers that explicitly state prediction methods. Currently, computational methods for predicting protein function can be divided into two main categories: integrated prediction methods and individual prediction methods. Integrated prediction methods train one model to predict MFO, BPO and CCO labels in three categories, such as Naïve [19], BLAST [53], DeepGOPlus [58], Mashup [84], deepNF [85] and PANDA2 [108]. Individual prediction methods train three separate models each for predicting one category of labels. It is worth noting that GeneMANIA [80] employs the Gaussian label propagation algorithm for label prediction, which is different from the above two categories of methods.

Discussion and conclusion

As introduced and discussed above, many computational methods for protein function prediction have been proposed due to their importance. In this section, we provide a comprehensive comparison of these methods on a widely used benchmark dataset.

Datasets

The datasets we used for performance comparison strictly followed the standards of the CAFA challenge [18]. To ensure the accuracy and reliability of the experimental results, we used manually curated and reviewed proteins in UniProtKB/Swiss-Prot [116] and annotations are propagated using the hierarchical structure of GO [6], as the annotations of unreviewed proteins are electronically made (evidence code: IEA), which may contain potential errors. In addition, according to CAFA, annotations with evidence codes (EXP, IDA, IPI, IMP, IGI, IEP, TAS and IC) are considered experimental, and therefore we selected proteins with experimental evidence codes. In the aspects of MFO, BPO and CCO, the number of training sets is 2747, 3197 and 5263, the number of validation sets is 503, 304 and 577, the number of testing sets is 719, 182 and 119, and the number of labels is 38, 45 and 35, respectively [24–30, 101]. The performance results of the various computational methods presented in Table 3 and Figure 6 are obtained from experiments conducted on this dataset.

Table 3.

Performance comparison of different computational methods.

F

|

AUPR | |||||

|---|---|---|---|---|---|---|

Method

|

MFO | BPO | CCO | MFO | BPO | CCO |

| Naive [19] | 0.177 | 0.051 | 0.121 | 0.050 | 0.024 | 0.047 |

| BLAST [53] | 0.122 | 0.270 | 0.196 | 0.044 | 0.110 | 0.084 |

| DeepGOCNN [58] | 0.435 | 0.095 | 0.121 | 0.272 | 0.042 | 0.061 |

| DeepGOPlus [58] | 0.394 | 0.354 | 0.245 | 0.283 | 0.185 | 0.104 |

DeepFRI [66] [66] |

0.461 | 0.362 | 0.385 | 0.382 | 0.308 | 0.360 |

| Struct2GO [76] | 0.416 | 0.364 | 0.445 | 0.413 | 0.373 | 0.514 |

| GeneMANIA [80] | 0.000 | 0.000 | 0.031 | 0.050 | 0.042 | 0.103 |

| Mashup [84] | 0.058 | 0.075 | 0.000 | 0.053 | 0.238 | 0.179 |

| deepNF [85] | 0.153 | 0.394 | 0.297 | 0.089 | 0.303 | 0.178 |

| NetQuilt [87] | 0.081 | 0.164 | 0.138 | 0.045 | 0.077 | 0.081 |

| Graph2GO [64] | 0.196 | 0.335 | 0.298 | 0.103 | 0.237 | 0.215 |

| DeepGraphGO [99] | 0.142 | 0.327 | 0.209 | 0.080 | 0.210 | 0.133 |

| CFAGO [101] | 0.236 | 0.439 | 0.366 | 0.159 | 0.328 | 0.337 |

| PredGO [103] | 0.503 | 0.104 | 0.269 | 0.256 | 0.061 | 0.195 |

| PFresGO [113] | 0.512 | 0.527 | 0.406 | 0.496 | 0.492 | 0.399 |

| HNetGO [115] | 0.523 | 0.412 | 0.618 | 0.570 | 0.512 | 0.669 |

The best performance is highlighted with bold font, and the performance ranked the second is highlighted with underline, and the performance ranked the third is highlighted with italic.  In the implementation of these methods, the predictions for a protein are not counted under the following conditions. (i) Lack of structure data. (ii) Sequence lengths too long for input to the language model.

In the implementation of these methods, the predictions for a protein are not counted under the following conditions. (i) Lack of structure data. (ii) Sequence lengths too long for input to the language model.  The dataset built by DeepFRI does not contain sequences longer than 1000, so we filter out sequences from our dataset that are longer than 1000 for this method implementation.

The dataset built by DeepFRI does not contain sequences longer than 1000, so we filter out sequences from our dataset that are longer than 1000 for this method implementation.

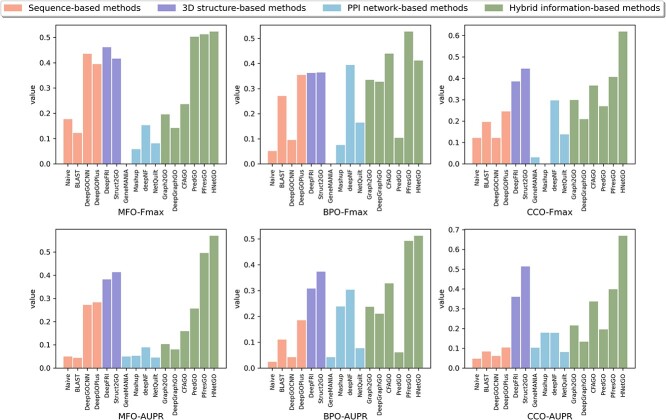

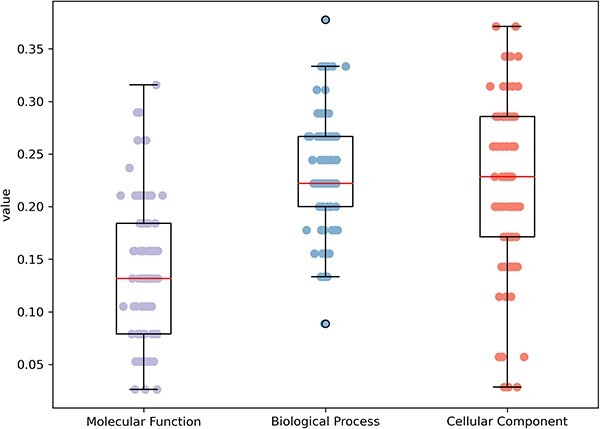

Figure 6.

Performance comparison of four categories computational methods.

Evaluation

To evaluate the prediction accuracy of different methods from different perspectives, we select two different metrics, including F-max score (Fmax) and area under the precision-recall curve (AUPR). Fmax [117] is a protein-centered measure used in the CAFA challenge [19] and many protein function prediction studies, which takes values from 0 to 1, where 1 indicates the best performance of the model and 0 indicates the worst performance [19]. It is defined as following:

|

(1) |

where pr( ) and rc(

) and rc( ) are the precision and recall in terms of threshold

) are the precision and recall in terms of threshold  , respectively, which are defined as following:

, respectively, which are defined as following:

|

(2) |

|

(3) |

where  is a GO class,

is a GO class,  is a set of predicted annotations for a protein

is a set of predicted annotations for a protein  and threshold

and threshold  ,

,  is a set of true annotations for the protein, m(

is a set of true annotations for the protein, m( ) is the number of proteins we predict for at least one class and

) is the number of proteins we predict for at least one class and  is the total number of proteins and

is the total number of proteins and  is an indicator function.

is an indicator function.

In addition, AUPR is a function-centered measure used in many protein function prediction studies, which is also a reasonable metric for evaluating high levels of category imbalance in the predicted outcomes [118]. Both AUPR and AUROC are very general criteria for classification evaluation. However, because AUROC may not be sensitive enough in the case of classification imbalance, as it takes into account the balance between true positive and false positive rates, which can be affected by a large number of negative class samples. In contrast, AUPR better reflects the performance of the classifier in predicting positive cases, i.e. it is more able to penalize false positives [66, 92, 119]. It also takes values from 0 to 1, where 1 indicates the best performance of the model and 0 the worst performance [19].

Performance comparison of four categories methods

Figure 6 shows the results of the four categories of methods on three aspects of GO. Overall, hybrid information-based methods outperforms the other three categories of methods in several metrics. For MFO and CCO aspects, 3D structure-based methods show more significant improvement compared to sequence-based methods, and for BPO aspects, PPI network-based methods show more significant improvement.

A more detailed summary of the performance results for different methods is shown in Table 3. It is evident that hybrid information-based methods achieve the best or second best results in terms of Fmax and AUPR measures. Specifically, PFresGO achieves the best performance in aspects of BPO with Fmax values of 0.527. Additionally, HNetGO achieves the best performance in aspects of MFO and CCO with Fmax value of 0.523 and 0.618, respectively. Moreover, HNetGO achieves the best performance in aspects of MFO, BPO and CCO with AUPR values of 0.570, 0.512 and 0.669, respectively. It is worth noting that due to the low proportion of training proteins in the PPI networks, GeneMANIA is unable to effectively propagate label information from training proteins to test proteins. Similarly, and Mashup also fails to effectively learn compact low-dimensional representations from the network. This results in some aspects of GO having a value of 0 for Fmax.

Hybrid information-based methods outperform other three categories of methods in several metrics, indicating that the strategy of integrating multi-source data information is reasonable and contributes to improving prediction accuracy. The reason for this is that hybrid information-based methods can effectively utilise data from different sources, such as sequence, structure, PPI, InterPro, GO terms, etc. These data are often complementary, providing different but relevant information that complements each other and enriches the understanding of protein function. Specifically, sequence provides information about amino acid composition and arrangement, forming the basis for inferring protein function. Structure provides information about the 3D structure and conformation of proteins, revealing important features such as the folding modes and the location of functional sites. In addition, PPI provides a global view of protein interactions in the organism, revealing the complex network structure of protein function regulation and signalling. InterPro provides multiple types of protein characterisation and GO terms provide detailed descriptions of the functional characteristics of proteins, respectively, which help to predict protein functions more accurately.

In order to further investigate the impact of sequence, structure and PPI on prediction performance, we excluded hybrid information-based methods. As evident from the results of the three categories, DeepFRI achieves the best performance in aspects of MFO with Fmax value of 0.461, and deepNF performs best in aspects of BPO with Fmax value of 0.394. In comparison, Struct2GO achieves the best performance in aspects of CCO with Fmax value of 0.445. Additionally, Struct2GO achieves the best performance in aspects of MFO, BPO and CCO with AUPR values of 0.413, 0.373 and 0.514, respectively. deepNF achieves comparable performance in aspects of BPO with AUPR values of 0.303. These results show that sequence and structure information contribute to improving performance in aspects of MFO and CCO, while PPI information contribute to improving performance in aspects of BPO. Specifically, MFO mainly describes the molecular function of a protein, while CCO describes the composition and structure of the cell in which the protein resides. Sequence information provides detailed information about the amino acid composition of a protein, and functionally relevant semantic information related to function can be extracted from the sequence. Structure information, on the other hand, provides more multifaceted information, including subcellular locations, protein domains and internal structural features. This information complements each other and reveals not only the molecular functions of proteins and their mechanisms of action inside and outside the cell, but also the localization and composition of proteins within the cell. In addition, BPO mainly describes the biological processes or activities in which proteins are involved, usually involving interactions and regulation among multiple proteins. PPI information provides information on protein interaction networks, signaling and metabolic regulation, revealing the overall role and function of proteins in biological processes. Therefore, sequence information, structure information and PPI information play different roles in protein function prediction tasks, which also reflects their different focuses and characteristics in the three aspects of MFO, BPO and CCO.

Accuracy comparison of structure prediction methods

Since the precise structure often determines the function of a protein, we are naturally concerned about the accuracy of the structures used in 3D structure-based approaches. In this regard, deep learning methods have shown great potential. Among them, deep learning methods including AlphaFold [120] and RaptorX [121] have already achieved good results. In addition, we have chosen two other advanced deep learning methods ProSPr [122] and trRosetta [123] for comparison. RaptorX and trRosetta use deep residual neural networks (ResNet) as their core deep learning architecture. In contrast, the core component of AlphaFold and ProSPr is a deep CNN. Notably, the contact accuracy of these four methods on the CASP13 [124] test set was evaluated in a recent study [125], and the performance results of these four methods are summarized in detail in Table 4. It is obvious that AlphaFold shows the most excellent performance in all aspects. Because of this, most 3D structure-based methods choose to use AlphaFold-predicted protein structures as input features for protein function prediction, and the accuracy and reliability demonstrated by AlphaFold provides strong support for these methods. Using AlphaFold-predicted high-precision structures as input features, these methods can predict protein function more accurately.

Table 4.

The contact accuracy of these four methods on the CASP13 test set.

Method

|

Short(%) | Mid(%) | Long(%) | Avg(%) |

|---|---|---|---|---|

| AlphaFold [120] | 95.13 | 87.12 | 72.53 | 84.93 |

| RaptorX [121] | 92.47 | 84.65 | 69.46 | 82.19 |

| ProSPr [122] | 93.12 | 83.10 | 67.21 | 81.14 |

| trRosetta [123] | 92.73 | 84.77 | 69.30 | 82.27 |

The best performance is highlighted with bold font, and the performance ranked the second is highlighted with underline.  These experimental results in the table are referenced from associated literature [125].

These experimental results in the table are referenced from associated literature [125].

Accuracy of predicting unannotated proteins

In order to assess the accuracy of the best-performing method in predicting unannotated protein functions, we selected the latest 100 human proteins from the Uniprot [24] database for prediction evaluation, and the results in Figure 7 show that the maximum accuracies in the three functional categories of MFO, BPO and CCO are 0.316, 0.378 and 0.371, respectively, and the average accuracies are 0.138, 0.229 and 0.220. Although the current best-performing computational methods do not achieve the desired accuracy, we need to note that these results are only predictive values and are subject to a degree of uncertainty. Furthermore, the assessment of accuracy is also affected by a degree of uncertainty, as the labels on which the assessment relies are based on computational methods for prediction and lack experimental validation. Therefore, we believe that computational methods using deep learning still have great potential for development in the field of unannotated protein function prediction.

Figure 7.

The accuracy of the best performing method on the unannotated protein set.

Problems and perspectives of protein function prediction

All the aforementioned methods have significantly contributed to the advancement of protein function prediction. However, there still exist some problems in this field. In this section, we will discuss these problems.

Benchmark datasets

Datasets are crucial for the construction and evaluation of predictive models. However, there are several problems with currently available protein datasets. (i) Very limited reviewed protein data. More than 99% of the proteins in the latest version of the Uniprot database still need to be reviewed [24]. The high cost of experimentally determining protein structures results in relatively limited coverage of structure data [126]. In addition, PPI data may contain noise and false positives [91, 127, 128], which could impact the accuracy and reliability of protein interactions. Therefore, more effort should be invested in collecting reliable protein data. (ii) Many protein function prediction methods have been proposed [15–17], but most trained and tested with different datasets. Models trained using data collected by one method may perform poorly on datasets built by other methods, making it difficult to directly and objectively compare the performance of different methods based on different test sets. In order to promote the development of the field, it is crucial to build an updated and more comprehensive benchmark dataset.

Feature extraction methods

Feature extraction methods are critical for protein function prediction. In the ‘Methods’ section, many feature extraction methods are presented, which are constructed based on different protein information. Specifically, (i) Based on sequence, where similarity information [129] is computed with the help of search tools or semantic information is extracted by the protein language models [77, 104, 112]. (ii) Based on structure, where the structure is transformed into contact maps, and then the residue-level features are obtained algorithmically. (iii) Based on PPI, where the PPI is represented as a graph structure, and then the features are extracted from the graph topology and network features. (iv) Based on InterPro, which integrates a variety of information. For example, binary features can be built using the presence or absence of subcellular locations [64] and protein domains [101]. From the results of the ‘Performance comparison of four categories methods’ section, we can see that the prediction performance can be significantly improved by combining the sequence, structure, PPI, InterPro and other forms of protein information. However, most protein function prediction methods rely on the effective integration of existing feature extraction methods to obtain feature information. Therefore, it is crucial to develop new feature extraction methods, which can provide more comprehensive and accurate representations of protein features.

Algorithms

The methods based on machine learning or deep learning achieve advanced performance in this field. The key to improving their performance is to find suitable algorithms. In recent years, the use of deep learning algorithms [130, 131] to predict protein function has become a hot topic of research in this field. For example, graph neural networks [132], autoencoders [133], attention mechanisms [102], etc., which show promise for improving the predictive performance. As more advanced deep learning algorithms are proposed and widely used in various fields. How to utilize or combine these deep learning models to build more accurate protein function predictors is one of the current important issues in this field. Currently, computational methods exist that are constructed based on data for a species with sufficient functional annotations. However, for species with sparse data and insufficient information, the utilization of established methods may result in poor prediction performance. To solve this problem, transfer learning [134] can be introduced into protein function prediction, which utilizes the existing species information to improve the predictive performance of new species information by different training strategies and mitigate the problem caused by sparse data and insufficient information.

Key Points

Owing to the importance of protein function prediction in the field of bioinformatics, it is crucial to develop efficient and accurate computational methods to predict protein function.

The databases of proteins are introduced and discussed, providing the useful information of these databases.

The computational methods for protein function prediction are introduced, and their advantages and disadvantages are discussed. The existing web servers and stand-alone tools in the field are given.

The performance of existing computational methods in this field is evaluated and compared based on a widely used benchmark dataset. Finally, some problems and perspectives in the field are discussed.

Author Biographies

Baohui Lin is an undergraduate at the College of Big Data and Internet, Shenzhen Technology University, Shenzhen, Guangdong, China.

Xiaoling Luo, PhD, is an assistant professor at the College of Computer Science and Software Engineering, Shenzhen University, Shenzhen, China. She is also with Guangdong Provincial Key Laboratory of Novel Security Intelligence Technologies, Shenzhen, China. Her expertise is in deep learning, medical image processing and computer vision.

Yumeng Liu, PhD, is a lecturer at the College of Big Data and Internet, Shenzhen Technology University, Shenzhen, Guangdong, China. Her expertise is in bioinformatics, nature language processing and machine learning.

Xiaopeng Jin, PhD, is a lecturer at the College of Big Data and Internet, Shenzhen Technology University, Shenzhen, Guangdong, China. His expertise is in bioinformatics, nature language processing and machine learning.

Contributor Information

Baohui Lin, College of Big Data and Internet, Shenzhen Technology University, Shenzhen, Guangdong 518118, China.

Xiaoling Luo, Guangdong Provincial Key Laboratory of Novel Security Intelligence Technologies, Shenzhen, Guangdong, China; College of Computer Science and Software Engineering, Shenzhen University, Shenzhen, Guangdong 518061, China.

Yumeng Liu, College of Big Data and Internet, Shenzhen Technology University, Shenzhen, Guangdong 518118, China.

Xiaopeng Jin, College of Big Data and Internet, Shenzhen Technology University, Shenzhen, Guangdong 518118, China.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No.62302316 and 62302317), Shenzhen Science and Technology Program (Grant No.RCBS202 21008093227027), Shenzhen Colleges and Universities Stable Support Program (Grant No.20220715183602001 and 202311220 05530001), The project of Guangdong Provincial Key Laboratory of Novel Security Intelligence Technologies (Grant No. 2022B1212010005), Natural Science Foundation of Top Talent of SZTU (Grant No.GDRC202319), SZTU Project No.20224027010006.

References

- 1. Alberts B. The cell as a collection of protein machines: preparing the next generation of molecular biologists. Cell 1998;92:291–4. 10.1016/S0092-8674(00)80922-8. [DOI] [PubMed] [Google Scholar]

- 2. Hartwell LH, Hopfield JJ, Leibler S. et al.. From molecular to modular cell biology. Nature 1999;402:C47–52. 10.1038/35011540. [DOI] [PubMed] [Google Scholar]

- 3. Kollman Peter. Non-covalent forces of importance in biochemistry. New Comprehensive Biochem 1984;6:55–71. Elsevier, 10.1016/S0167-7306(08)60373-7. [DOI] [Google Scholar]

- 4. Spirin V, Mirny LA. Protein complexes and functional modules in molecular networks. Proc Natl Acad Sci 2003;100:12123–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Mei S. A framework combines supervised learning and dense subgraphs discovery to predict protein complexes. Front Comp Sci 2022;16:161901. 10.1007/s11704-021-0476-8. [DOI] [Google Scholar]

- 6. Ashburner M, Ball CA, Blake JA. et al.. Gene ontology: tool for the unification of biology. Nat Genet 2000;25:25–9. 10.1038/75556. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. The Gene Ontology Consortium . The gene ontology resource: enriching a gold mine. Nucleic Acids Res 2021;49:D325–34. 10.1093/nar/gkaa1113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Schröder B, Wrocklage C, Pan C. et al.. Integral and associated lysosomal membrane proteins. Traffic 2007;8:1676–86. 10.1111/j.1600-0854.2007.00643.x. [DOI] [PubMed] [Google Scholar]

- 9. Pankonien I, Alvarez JL, Doller A. et al.. Ahnak1 is a tuneable modulator of cardiac ca (v) 1.2 calcium channel activity. J Muscle Res Cell Motil 2011;32:281–90. 10.1007/s10974-011-9269-2. [DOI] [PubMed] [Google Scholar]

- 10. Castello A, Fischer B, Eichelbaum K. et al.. Insights into RNA biology from an atlas of mammalian mrna-binding proteins. Cell 2012;149:1393–406. 10.1016/j.cell.2012.04.031. [DOI] [PubMed] [Google Scholar]

- 11. Lichtarge O, Bourne HR, Cohen FE. An evolutionary trace method defines binding surfaces common to protein families. J Mol Biol 1996;257:342–58. 10.1006/jmbi.1996.0167. [DOI] [PubMed] [Google Scholar]

- 12. Eisenberg D, Marcotte EM, Xenarios I. et al.. Protein function in the post-genomic era. Nature 2000;405:823–6. 10.1038/35015694. [DOI] [PubMed] [Google Scholar]

- 13. Chan IS, Ginsburg GS. Personalized medicine: progress and promise. Annu Rev Genomics Hum Genet 2011;12:217–44. [DOI] [PubMed] [Google Scholar]

- 14. Costanzo M, VanderSluis B, Koch EN. et al.. A global genetic interaction network maps a wiring diagram of cellular function. Science 2016;353:aaf1420. 10.1126/science.aaf1420. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Friedberg I. Automated protein function prediction–the genomic challenge. Brief Bioinform 2006;7:225–42. 10.1093/bib/bbl004. [DOI] [PubMed] [Google Scholar]

- 16. Rentzsch R, Orengo CA. Protein function prediction–the power of multiplicity. Trends Biotechnol 2009;27:210–9. 10.1016/j.tibtech.2009.01.002. [DOI] [PubMed] [Google Scholar]

- 17. Kihara D. Computational protein function predictions. Methods 2016;93:1–2. 10.1016/j.ymeth.2016.01.001. [DOI] [PubMed] [Google Scholar]

- 18. Zhou N, Jiang Y, Bergquist TR. et al.. The CAFA challenge reports improved protein function prediction and new functional annotations for hundreds of genes through experimental screens. Genome Biol 2019;20:1–23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Radivojac P, Clark WT, Oron TR. et al.. A large-scale evaluation of computational protein function prediction. Nat Methods 2013;10:221–7. 10.1038/nmeth.2340. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Jiang Y, Oron TR, Clark WT. et al.. An expanded evaluation of protein function prediction methods shows an improvement in accuracy. Genome Biol 2016;17:1–19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Sleator RD, Walsh P. An overview of in silico protein function prediction. Arch Microbiol 2010;192:151–5. 10.1007/s00203-010-0549-9. [DOI] [PubMed] [Google Scholar]

- 22. Shehu A, Barbará D, Molloy K. A survey of computational methods for protein function prediction. In: Wong K-C (ed.) Big Data Analytics in Genomics. Switzerland: Springer Cham, 2016, 225–98. 10.1007/978-3-319-41279-5_7. [DOI] [Google Scholar]

- 23. Yan T-C, Yue Z-X, Hong-Quan X. et al.. A systematic review of state-of-the-art strategies for machine learning-based protein function prediction. Comput Biol Med 2023;154:106446. 10.1016/j.compbiomed.2022.106446. [DOI] [PubMed] [Google Scholar]

- 24. The UniProt Consortium . Uniprot: the universal protein knowledgebase in 2023. Nucleic Acids Res 2023;51:D523–31. 10.1093/nar/gkac1052. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Aleksander SA, Balhoff J, Seth Carbon J. et al.. The gene ontology knowledgebase in 2023. Genetics 2023;224(1):iyad031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Huntley RP, Sawford T, Mutowo-Meullenet P. et al.. The Goa database: gene ontology annotation updates for 2015. Nucleic Acids Res 2015;43:D1057–63. 10.1093/nar/gku1113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27. Szklarczyk D, Kirsch R, Koutrouli M. et al.. The string database in 2023: protein–protein association networks and functional enrichment analyses for any sequenced genome of interest. Nucleic Acids Res 2023;51:D638–46. 10.1093/nar/gkac1000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28. Paysan-Lafosse T, Blum M, Chuguransky S. et al.. Interpro in 2022. Nucleic Acids Res 2023;51:D418–27. 10.1093/nar/gkac993. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29. Burley SK, Bhikadiya C, Bi C. et al.. Rcsb protein data bank (rcsb. Org): delivery of experimentally-determined pdb structures alongside one million computed structure models of proteins from artificial intelligence/machine learning. Nucleic Acids Res 2023;51:D488–508. 10.1093/nar/gkac1077. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30. Varadi M, Anyango S, Deshpande M. et al.. Alphafold protein structure database: massively expanding the structural coverage of protein-sequence space with high-accuracy models. Nucleic Acids Res 2022;50:D439–44. 10.1093/nar/gkab1061. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31. Suzek BE, Huang H, McGarvey P. et al.. Uniref: comprehensive and non-redundant uniprot reference clusters. Bioinformatics 2007;23:1282–8. 10.1093/bioinformatics/btm098. [DOI] [PubMed] [Google Scholar]

- 32. Suzek BE, Wang Y, Huang H. et al.. Uniref clusters: a comprehensive and scalable alternative for improving sequence similarity searches. Bioinformatics 2015;31:926–32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33. Leinonen R, Diez FG, Binns D.et al.. UniProt archive. Uniprot archive Bioinformatics 2004;20:3236–7. 10.1093/bioinformatics/bth191. [DOI] [PubMed] [Google Scholar]

- 34. UniProt Consortium . The universal protein resource (uniprot). Nucleic Acids Res 2007;36:D190–5. 10.1093/nar/gkm895. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35. Barrell D, Dimmer E, Huntley RP. et al.. The Goa database in 2009–an integrated gene ontology annotation resource. Nucleic Acids Res 2009;37:D396–403. 10.1093/nar/gkn803. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36. Sillitoe I, Bordin N, Dawson N. et al.. Cath: increased structural coverage of functional space. Nucleic Acids Res 2021;49:D266–73. 10.1093/nar/gkaa1079. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37. Shennan L, Wang J, Chitsaz F. et al.. CDD/Sparcle: the conserved domain database in 2020. Nucleic Acids Res 2020;48:D265–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38. Pedruzzi I, Rivoire C, Auchincloss AH. et al.. Hamap in 2015: updates to the protein family classification and annotation system. Nucleic Acids Res 2015;43:D1064–70. 10.1093/nar/gku1002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39. Mi H, Ebert D, Muruganujan A. et al.. Panther version 16: a revised family classification, tree-based classification tool, enhancer regions and extensive api. Nucleic Acids Res 2021;49:D394–403. 10.1093/nar/gkaa1106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40. Mistry J, Chuguransky S, Williams L. et al.. Pfam: the protein families database in 2021. Nucleic Acids Res 2021;49:D412–9. 10.1093/nar/gkaa913. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Nikolskaya AN, Arighi CN, Huang H. et al.. Pirsf family classification system for protein functional and evolutionary analysis. Evolutionary Bioinformatics 2006;2:117693430600200. 10.1177/117693430600200033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42. Attwood TK, Coletta A, Muirhead G, et al.. The prints database: a fine-grained protein sequence annotation and analysis resource–its status in 2012. Database 2012;2012:bas019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43. Sigrist CJA, De Castro E, Cerutti L. et al.. New and continuing developments at prosite. Nucleic Acids Res 2012;41:D344–7. 10.1093/nar/gks1067. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44. Letunic I, Khedkar S, Bork P. Smart: recent updates, new developments and status in 2020. Nucleic Acids Res 2021;49:D458–60. 10.1093/nar/gkaa937. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45. Akiva E, Brown S, Almonacid DE. et al.. The structure–function linkage database. Nucleic Acids Res 2014;42:D521–30. 10.1093/nar/gkt1130. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46. Pandurangan AP, Stahlhacke J, Oates ME. et al.. The superfamily 2.0 database: a significant proteome update and a new webserver. Nucleic Acids Res 2019;47:D490–4. 10.1093/nar/gky1130. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47. Li W, O’Neill KR, Haft DH. et al.. Refseq: expanding the prokaryotic genome annotation pipeline reach with protein family model curation. Nucleic Acids Res 2021;49:D1020–8. 10.1093/nar/gkaa1105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48. Jumper J, Evans R, Pritzel A. et al.. Highly accurate protein structure prediction with alphafold. Nature 2021;596:583–9. 10.1038/s41586-021-03819-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49. Shaji Kumar MD, Michael M, Gromiha. PINT: Protein–Protein Interactions Thermodynamic Database. Nucleic Acids Res 2006;34:D195–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50. Prabakaran P, Jianghong An M, Gromiha M. et al.. Thermodynamic database for protein–nucleic acid interactions (pronit). Bioinformatics 2001;17:1027–34. 10.1093/bioinformatics/17.11.1027. [DOI] [PubMed] [Google Scholar]

- 51. Siva Shanmugam NR, Jino Blessy J, Veluraja K. et al.. Procaff: protein–carbohydrate complex binding affinity database. Bioinformatics 2020;36:3615–7. 10.1093/bioinformatics/btaa141. [DOI] [PubMed] [Google Scholar]

- 52. Puvanendrampillai D, Mitchell JBO. Protein Ligand Database (PLD): additional understanding of the nature and specificity of protein–ligand complexes. Bioinformatics 2003;19:1856–7. 10.1093/bioinformatics/btg243. [DOI] [PubMed] [Google Scholar]

- 53. Altschul SF, Gish W, Miller W. et al.. Basic local alignment search tool. J Mol Biol 1990;215:403–10. 10.1016/S0022-2836(05)80360-2. [DOI] [PubMed] [Google Scholar]

- 54. Rifaioglu AS, Doğan T, Martin MJ. et al.. Deepred: automated protein function prediction with multi-task feed-forward deep neural networks. Sci Rep 2019;9:7344. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55. Sarac OS, Gürsoy-Yüzügüllü Ö, Cetin-Atalay R. et al.. Subsequence-based feature map for protein function classification. Comput Biol Chem 2008;32:122–30. 10.1016/j.compbiolchem.2007.11.004. [DOI] [PubMed] [Google Scholar]

- 56. Chou K-C. Prediction of protein cellular attributes using pseudo-amino acid composition. Proteins 2001;43:246–55. 10.1002/prot.1035. [DOI] [PubMed] [Google Scholar]

- 57. Shen J, Zhang J, Luo X. et al.. Predicting protein–protein interactions based only on sequences information. Proc Natl Acad Sci 2007;104:4337–41. 10.1073/pnas.0607879104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58. Kulmanov M, Hoehndorf R. DeepGOPgoplus: improved protein function prediction from sequence. Bioinformatics 2020;36:422–9. 10.1093/bioinformatics/btz595. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59. Kulmanov M, Khan MA, Hoehndorf R. et al.. Deepgo: predicting protein functions from sequence and interactions using a deep ontology-aware classifier. Bioinformatics 2018;34:660–8. 10.1093/bioinformatics/btx624. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60. Jun W, Qing H, Ouyang J. et al.. Hifun: homology independent protein function prediction by a novel protein-language self-attention model. Brief Bioinform 2023;24:bbad311. [DOI] [PubMed] [Google Scholar]

- 61. Song D, Chen J, Chen G. et al.. Parameterized blosum matrices for protein alignment. IEEE/ACM Trans Comput Biol Bioinform 2014;12:686–94. 10.1109/TCBB.2014.2366126. [DOI] [PubMed] [Google Scholar]

- 62. Guixian X, Meng Y, Qiu X. et al.. Sentiment analysis of comment texts based on bilstm. Ieee Access 2019;7:51522–32. 10.1109/ACCESS.2019.2909919. [DOI] [Google Scholar]

- 63. Alex Graves, Santiago Fernández, and Jürgen Schmidhuber. Bidirectional lstm networks for improved phoneme classification and recognition. In: International Conference on Artificial Neural Networks, p. 799–804. Berlin, Heidelberg: Springer, 2005. 10.1007/11550907_126. [DOI] [Google Scholar]

- 64. Fan K, Guan Y, Zhang Y. Graph2go: a multi-modal attributed network embedding method for inferring protein functions. GigaScience 2020;9:giaa081. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65. Di Lena P, Nagata K, Baldi P. Deep architectures for protein contact map prediction. Bioinformatics 2012;28:2449–57. 10.1093/bioinformatics/bts475. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66. Vladimir, Gligorijević P, Renfrew D, Kosciolek T. et al.. Structure-based protein function prediction using graph convolutional networks. Nat Commun 2021;12:3168. 10.1038/s41467-021-23303-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67. Kipf TN, Welling M. Semi-supervised classification with graph convolutional networks. International Conference on Learning Representations. arXiv preprint arXiv:1609.02907. 2016.

- 68. Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput 1997;9:1735–80. 10.1162/neco.1997.9.8.1735. [DOI] [PubMed] [Google Scholar]

- 69. Lai B, Jinbo X. Accurate protein function prediction via graph attention networks with predicted structure information. Brief Bioinform 2022;23:bbab502. 10.1093/bib/bbab502. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70. Veličković P, Cucurull G, Casanova A. et al.. Graph attention networks. International Conference on Learning Representations. arXiv preprint arXiv:1710.10903. 2018.

- 71. Wenjie Li, Chi-hua Wang, Cheng G, Song Q. Self-attention graph pooling. International Conference on Machine Learning. Trans Mach Learn Res 2019;3734–43.

- 72. Wang S, Sun S, Li Z. et al.. Accurate de novo prediction of protein contact map by ultra-deep learning model. PLoS Comput Biol 2017;13:e1005324. 10.1371/journal.pcbi.1005324. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73. Zhonghui G, Luo X, Chen J. et al.. Hierarchical graph transformer with contrastive learning for protein function prediction. Bioinformatics 2023;39:btad410. 10.1093/bioinformatics/btad410. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74. Berman HM, Westbrook J, Feng Z. et al.. The protein data bank. Nucleic Acids Res 2000;28:235–42. 10.1093/nar/28.1.235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75. Zeng J, Xie P. Contrastive self-supervised learning for graph classification. In: Kevin Leyton-Brown, Mausam (eds.) Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto, California, USA: AAAI Press, 2021, 35, 10824–32. 10.1609/aaai.v35i12.17293. [DOI] [Google Scholar]

- 76. Jiao P, Wang B, Wang X. et al.. Struct2go: protein function prediction based on graph pooling algorithm and alphafold2 structure information. Bioinformatics 2023;39:btad637. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77. Heinzinger M, Ahmed Elnaggar Y, Wang CD. et al.. Modeling aspects of the language of life through transfer-learning protein sequences. BMC Bioinformatics 2019;20:1–17. 10.1186/s12859-019-3220-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78. Zhang Z, Bu J, Ester M. et al.. Hierarchical graph pooling with structure learning. IEEE Transactions on Knowledge and Data Engineering. arXiv preprint arXiv:1911.05954. 35, p. 545–59, 2021. [Google Scholar]