Abstract

Hyperspectral imaging (HSI) has emerged over the last three decades as a powerful tool in many military, environmental, and civil applications. Modern remote sensing approaches can cover large areas of the earth's surface at high temporal, spectral, and spatial resolution. These features make HSI effective in remote sensing applications that depend on the physical estimation and identification of similar materials and of composite surfaces, which requires fine spectral resolution. Recently, HSI has gained considerable importance in research on food safety and quality assessment, medical analysis, and agriculture. This review covers HSI fundamentals and its applications, including food safety and quality assessment, medical analysis, agriculture, water resources, plant stress identification, weed & crop discrimination, and flood management. Various investigators have proposed promising HSI-based solutions for automatic systems. Future research may use this review as a baseline and as a guide to future advances.

Keywords: Hyperspectral, Imaging, Spectral, Spatial, Temporal, Sensors

1. Introduction

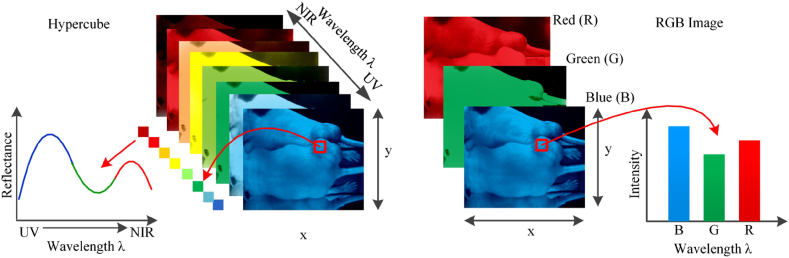

Hyperspectral imaging (HSI), also known as imaging spectroscopy, is the study of the interaction of light with the observed material. It is a hybrid process that combines spectroscopy and imaging. It collects spectral information at each pixel of a two-dimensional (2-D) array detector and so generates a three-dimensional (3-D) dataset of spectral and spatial information [1], known as a hypercube, as shown in Fig. 1. The spatial information locates the source of every spectrum in the sample, which makes it possible to examine the sample with respect to the lighting conditions of the environment. Moreover, HSI covers a continuous portion of the light spectrum with many spectral bands and high spectral resolution. HSI therefore has the potential to capture spectral differences under different environmental conditions. The difference between a hypercube and an RGB image is indicated in Fig. 1. The hypercube is a 3-D dataset made up of one 2-D image per wavelength. The leftmost plot is the spectral reflectance signature curve of a pixel in the hyperspectral image. The RGB image has only three bands, at the red, green, and blue wavelengths. The rightmost plot is the intensity curve of a pixel in the RGB image. HSI measures the amount of light transmitted, reflected, or emitted from a given target or object.

Fig. 1.

Comparison between hypercube and RGB image [2].
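The hypercube layout described above can be sketched in a few lines of Python. This is a minimal illustration, not part of any cited system: the cube is a nested list indexed as `cube[row][col][band]`, and the sizes, values, and function names (`make_cube`, `pixel_spectrum`, `band_image`) are assumptions chosen only to make the two access patterns easy to check.

```python
# Toy hypercube stored as nested lists: cube[row][col][band].
# Sizes and values are made up for illustration only.
ROWS, COLS, BANDS = 2, 3, 5


def make_cube(rows, cols, bands):
    """Build a cube whose value encodes its position: 100*row + 10*col + band."""
    return [[[100 * r + 10 * c + b for b in range(bands)]
             for c in range(cols)]
            for r in range(rows)]


def pixel_spectrum(cube, row, col):
    """Return the spectrum of one pixel: one value per spectral band."""
    return list(cube[row][col])


def band_image(cube, band):
    """Return the 2-D spatial image recorded at a single band (wavelength)."""
    return [[pixel[band] for pixel in row] for row in cube]


cube = make_cube(ROWS, COLS, BANDS)
spectrum = pixel_spectrum(cube, 1, 2)  # [120, 121, 122, 123, 124]
image = band_image(cube, 3)            # [[3, 13, 23], [103, 113, 123]]
```

Slicing along the band axis gives the spectral signature of a pixel, while fixing the band gives an ordinary 2-D image; an RGB image is the special case with three bands.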

This review presents the fundamentals of hyperspectral imaging, discusses the common HSI technologies, and focuses on novel applications of HSI in the areas of safety & quality assessment of food, medical analysis, agriculture, water resource management, plant stress identification, weed & crop discrimination, and flood management.

1.1. Hyperspectral imaging and its platforms

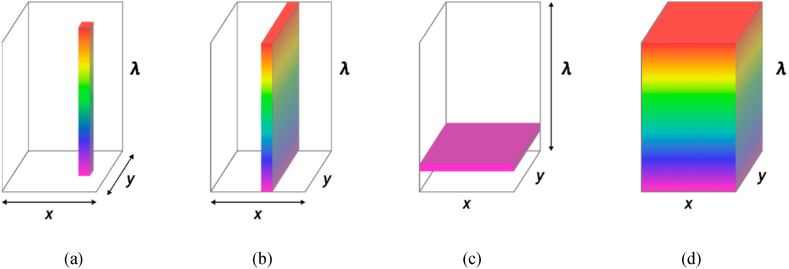

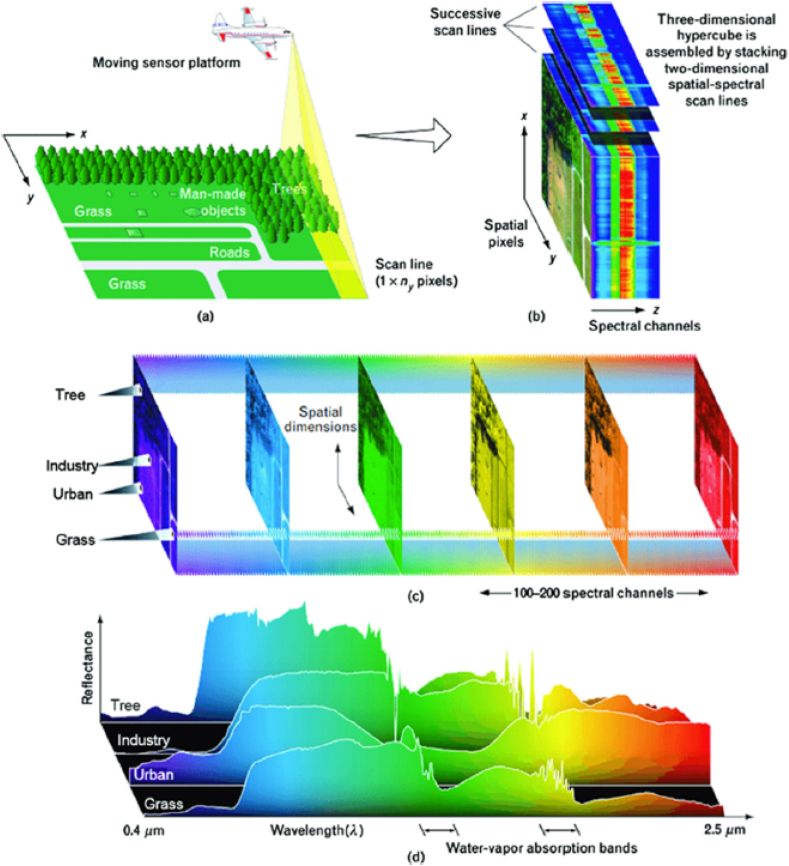

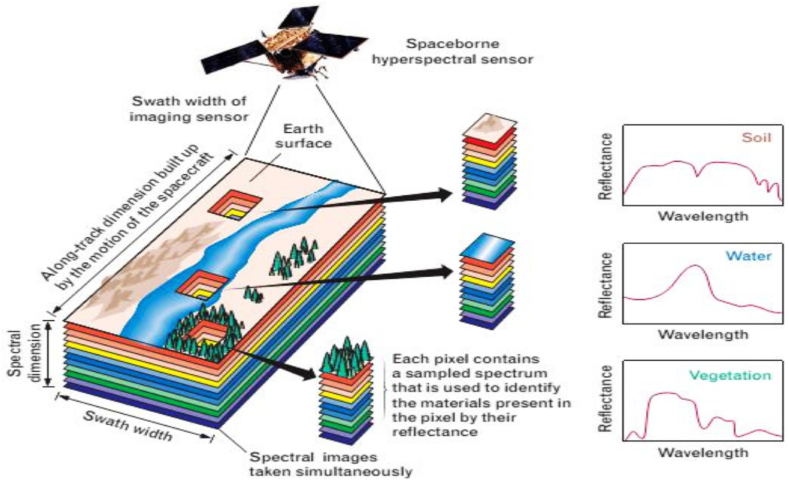

Hyperspectral imaging is a technique that acquires a spectrum for every pixel in an image. HSI sensors (imaging spectrometers) usually capture visible, near-infrared, and short-wavelength infrared spectra in the 0.4–2.5 μm range. Narrow-band HSI systems can produce spectra at hundreds of distinct wavelengths [3]. This spectral detail makes HSI a powerful tool for categorizing the earth's surface and leads to a wide range of promising applications [[4], [5], [6]]. HSI data consist of many channels, in contrast to grayscale or RGB images, which comprise one or three channels [7]. HSI produces a 3-D hyperspectral cube with one wavelength dimension and two spatial dimensions. Hyperspectral data are categorized by their spatial and spectral information or by the acquisition mode. Examples of HSI acquisition techniques are point scanning (whisk broom), line scanning (push broom), staring imagers, and snapshot imagers, as indicated in Fig. 2.

Fig. 2.

Four approaches used for constructing a hyperspectral image: (a) Point Scanning (b) Line Scanning (c) Staring Imager (d) Snapshot Imager [2].

Point scanning, also known as the whisk broom technique, moves the detector or the sample along the two spatial dimensions (Sx and Sy) to scan a single point at a time [8,9]. Images obtained with this approach have high spectral resolution and offer great flexibility in the optical setup, sample size, and spectral range. A detailed hyperspectral 3-D cube is shown in Fig. 3. Line scanning, also known as push broom sensing, acquires a one-dimensional cross-track line of pixels from a spaceborne or airborne platform, as represented in Fig. 3 (a). This approach captures the spatial and spectral information for every pixel in the linear field of view simultaneously. Spatial and spectral information is collected along the x-direction while the object moves in the spatial y-direction. This approach is widely used in remote sensing. Fig. 3 (b) shows how sequential cross-track lines are stacked row by row to build the hyperspectral 3-D cube, where the x-direction holds the spatial detail of the scene and the y-direction builds up the cube. Fig. 3 (c) shows the 2-D spatial information obtained from the hyperspectral 3-D cube. Fig. 3 (d) shows the spectra of individual pixels, which are used for feature discrimination, classification, and detection in the scene [10,11].

Fig. 3.

Detailed hyperspectral three-dimensional data cube structure [12].

The staring imager, also known as spectral scanning or area scanning, uses a 2-D array detector to capture the scene in separate exposures. This approach yields high-resolution images, with the resolution depending on the pixel resolution and the camera optics. The snapshot imager, also known as image scanning, uses a single integration time to generate a hyperspectral 3-D cube [13]. This technique records spectral and spatial information without scanning, which makes the approach more flexible and straightforward.
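The line-scanning (push broom) mode described above can be sketched as follows. This is a minimal sketch under stated assumptions: `acquire_line` is a hypothetical stand-in for a sensor read-out that returns one spatial line with a full spectrum per pixel, and the synthetic values only encode position so the stacking order can be verified.

```python
def acquire_line(y, width, bands):
    """Hypothetical sensor read-out for scan line y.

    Returns one spatial line of `width` pixels, each with `bands` spectral
    samples. Values are synthetic: 1000*y + 10*x + band.
    """
    return [[1000 * y + 10 * x + b for b in range(bands)] for x in range(width)]


def push_broom_scan(n_lines, width, bands):
    """Stack successive scan lines along y to build cube[y][x][band]."""
    cube = []
    for y in range(n_lines):  # the platform or the sample advances along y
        cube.append(acquire_line(y, width, bands))
    return cube


cube = push_broom_scan(n_lines=4, width=3, bands=6)
shape = (len(cube), len(cube[0]), len(cube[0][0]))  # (4, 3, 6)
sample = cube[2][1][5]                              # 2015
```

Each read-out fills one x-by-wavelength slice of the cube, so the number of scan lines sets the extent of the second spatial dimension.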

Qian [12] presented three distinct methods of obtaining hyperspectral cube data with different spectrometers: snapshot HSI, spectral filter-based methods, and dispersive-element-based methods. To acquire hyperspectral images with different temporal and spatial resolutions, the sensors must be mounted on different platforms, such as close-range platforms, airplanes, and Unmanned Aerial Vehicles (UAV). A comparative analysis of distinct platforms for hyperspectral imaging is given in Table 1.

Table 1.

Comparative Analysis of distinct platforms for hyperspectral imaging [14].

| Specifications | Airplanes | Helicopters | Satellites | Fixed Wing UAV | Multi UAV |
|---|---|---|---|---|---|
| Operational Altitudes | 1–20 km | 100 m–2 km | 400–700 km | <150 m | <150 m |
| Spatial Coverage | ∼100 km² | ∼10 km² | 42 × 7.7 km | ∼5 km² | ∼0.5 km² |
| Spatial Resolution | 1–20 m | 1.1–1 m | 20–60 m | 1.1–0.5 m | 0.01–0.5 m |
| Temporal Resolution | Depends on operations of flights (Hours to Days) | | | | |
| Flexibility | Medium (Limited by availability of aviation) | Medium (Limited by availability of aviation) | Low (Fixed repeating cycles) | High | High |
| Operational Complexity | Medium (Depending on the sensor operator) | Medium (Depending on the sensor operator) | Low (Data provided to users) | High (Software & Hardware of setup by users) | High (Software & Hardware of setup by users) |
| Applicable Scales | Regional landscape | Regional landscape | Global landscape | Canopy landscape | Canopy landscape |
| Cost of Acquisition | High (requires aviation company to fly) | High (requires aviation company to fly) | Low to Medium | High (for a large area) | High (for a large area) |
| Limiting Factors | Unfavorable flight speed | Unfavorable flight speed | Weather | Flight regulations | Flight regulations |

Hyperspectral images are described by their spectral as well as their spatial resolution. The spectral resolution governs how the pixel values vary as a function of wavelength, f(λ), while the spatial resolution governs the geometrical relationship of the image pixels to each other. Hyperspectral images are generated by instruments known as imaging spectrometers. The evolution of these complex sensors has involved the convergence of two related but different approaches: remote imaging and spectroscopy. Spectroscopy is the study of light that is emitted by or reflected from a material and of how its energy varies with wavelength. In the remote sensing field, spectroscopy deals with the spectrum of sunlight that is reflected by materials at the earth's surface.

Spectrometers are used to make laboratory or ground-based measurements of the light reflected from the material under test. By using hundreds or thousands of detectors, spectrometers can make spectral measurements of bands as narrow as 0.01 μm over a wide wavelength range, at least 0.4–2.4 μm (visible through infrared wavelengths) [14]. Imaging spectrometer sensors that contribute hyperspectral imaging data include AVIRIS (Airborne Visible Infrared Imaging Spectrometer), AISA (Airborne Imaging Spectroradiometer Application), HyMap, and Hyperion. Some of the current spaceborne and airborne hyperspectral sensors, with their spectral and spatial resolutions, are listed in Table 2.
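As a rough worked example of the figures above, bands 0.01 μm (10 nm) wide across 0.4–2.4 μm (400–2400 nm) give 200 contiguous bands. The sketch below also estimates the raw size of one cube; the 512 × 512 pixel frame and the 2 bytes per sample are illustrative assumptions, not values from the text.

```python
def band_count(start_nm, stop_nm, width_nm):
    """Number of contiguous bands of equal width covering [start_nm, stop_nm]."""
    return (stop_nm - start_nm) // width_nm


def cube_size_bytes(rows, cols, bands, bytes_per_sample):
    """Raw (uncompressed) size of one hyperspectral cube in bytes."""
    return rows * cols * bands * bytes_per_sample


# Integer nanometres are used to avoid floating-point rounding.
bands = band_count(400, 2400, 10)           # 200 bands
size = cube_size_bytes(512, 512, bands, 2)  # 104857600 bytes (100 MiB)
```

Even this modest assumed frame size produces about 100 MiB per cube, which illustrates why storage and processing demands grow quickly with the number of bands.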

Table 2.

The current space and airborne satellite hyperspectral sensors [14].

| Platform | Sensor | Origin | Spectral Range (nm) | No. of spectral bands | Spectral Resolution (nm) | Operational Altitude (km) | Spatial Resolution (m) | Authors |
|---|---|---|---|---|---|---|---|---|
| Satellite Based | Hyperion | NASA, USA | 352–2576 | 220 | 10 | 707 (7.7 km) | 30 | Pearlman et al. [15] |
| Satellite Based | PROBA-CHRIS | ESA, UK | 415–1050 | 19 / 63 | 34 / 17 | 830 (14 km) | 17 / 36 | Kunkel et al. [16] |
| Airplane Based | AVIRIS | Jet Propulsion Laboratory, USA | 400–2050 | 224 | 10 | – | – | Green et al. [17] |
| Airplane Based | CASI | Itres, Canada | 380–1050 | 288 | <3.5 | 1–20 | 1–20 | Babey & Anger [18] |
| Airplane Based | AISA | Specim, Finland | 400–970 | 244 | 3.3 | 1–20 | 1–20 | |
| Airplane Based | HyMap | Integrated Spectronics, Australia | 440–2500 | 128 | 15 | – | – | Cocks et al. [19] |
| UAV Based | Headwall Hyperspec | Headwall Photonics, USA | 400–1000 | 270 (Nano), 324 (Micro) | 6 (Nano), 2.5 (Micro) | <0.15 | 0.01–0.5 | Rickard et al. [20] |
| UAV Based | UHD 185 Firefly | Cubert, Germany | 450–950 | 138 | 4 | <0.15 | 0.01–0.5 | Eckardt et al. [21] |

The spatial, spectral, and temporal resolution of an image provides valuable information that is used to form interpretations about surface conditions and materials. For each of these properties, the image resolution can be defined by the sensor system. These image resolution components limit the information derived from remote sensor images.

A. Spatial Resolution

Spatial resolution is the smallest detectable detail in an image, that is, the size of the smallest entity that can be discriminated as an independent entity in the image [22]. It is a function of the sensor design and of its operating altitude above the surface. In practice, image clarity is determined by the spatial resolution, not by the number of pixels in the image. The spatial characteristics of an image depend on the sensor design in terms of its altitude and its field of view [23]. Each detector of a remote sensor measures the energy received from a bounded patch of the ground surface. The patch size is inversely related to the spatial resolution: the smaller the individual patches, the more detailed the spatial information that can be derived from the image. The shape of an object is usually detectable only if the cell dimensions are much smaller than the object dimensions. If an object is much darker or brighter than its surroundings, it will dominate the average brightness of the image cell, and this cell will contrast in brightness with the contiguous cells. This allows us to distinguish linear features that are narrower than a cell dimension, such as fruits on the farm.
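The dependence of the ground patch size on altitude and field of view can be illustrated with standard nadir-viewing geometry. This is a simplified sketch that assumes a flat surface and a small instantaneous field of view (IFOV) per detector; the 1 mrad IFOV, the 30° total field of view, and the function names are hypothetical example values, not parameters of any sensor in Table 2.

```python
import math


def ground_sample_distance(altitude_m, ifov_rad):
    """Approximate ground patch size for one detector (small-angle, nadir view)."""
    return altitude_m * ifov_rad


def swath_width(altitude_m, fov_deg):
    """Ground width covered by the full field of view over flat terrain."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


# Same hypothetical sensor flown at two altitudes.
gsd_uav = ground_sample_distance(100.0, 0.001)     # about 0.1 m per pixel
gsd_plane = ground_sample_distance(1000.0, 0.001)  # about 1.0 m per pixel
swath_plane = swath_width(1000.0, 30.0)            # roughly 536 m
```

Under these assumptions, flying ten times higher makes each ground patch ten times larger, which is consistent with the coarser spatial resolution listed for higher-altitude platforms in Table 1.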

B. Spectral Resolution

Spectral resolution is defined by the range of the electromagnetic spectrum and the number of spectral bands measured by the sensor. An imaging sensor may respond to a broad frequency range but still have low spectral resolution if it acquires only a small number of spectral bands. Conversely, a sensor that is sensitive to a narrow frequency range but acquires a large number of spectral bands has high spectral resolution, because it can differentiate between scene elements with identical or close spectral signatures [24]. Multispectral images have low spectral resolution and are therefore inadequate for resolving the fine spectral signatures present in a scene. HSI sensors capture images in many contiguous and exceptionally narrow spectral bands in the visible, near-infrared, and mid-infrared segments of the electromagnetic spectrum. This advanced imaging system shows enormous potential for identifying materials based on their particular spectral signatures. The spectrum of a single HSI pixel may provide considerably more information about the surface material than a pixel of a conventional image.

C. Temporal Resolution

In hyperspectral imaging, the temporal resolution depends on the orbital characteristics of the imaging sensor. It is usually defined as the time required by the sensor to revisit and obtain data from the same location [25], and is also known as the revisit or return time. Temporal resolution is said to be low if the sensor platform revisits the same location infrequently and high if it revisits frequently. Temporal resolution is generally expressed in days.

D. Understanding Spectral Signatures

The earth's surface absorbs, transmits, and reflects radiation arriving from the sun as electromagnetic waves, depending on the material of the surface. Hyperspectral sensors measure this electromagnetic energy, which allows us to examine changes and specific features on the earth's surface. The energy that rebounds from the surface is measured as reflectance, defined as a function of wavelength as the ratio of reflected to incident energy. In practice, reflectance ranges from 0 % to 100 %: it is 0 % when all incident light at a wavelength is absorbed and 100 % when all of it is reflected. The reflectance values of the materials present on the earth's surface, such as forests, minerals, soil, and water, can be plotted and compared over a specific part of the electromagnetic spectrum. Such plots are called spectral response curves or spectral signature curves.
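The reflectance definition above (the ratio of reflected to incident energy at each wavelength, expressed in percent) can be sketched as follows. The wavelengths and energy values are made-up illustrative numbers, and `reflectance_percent` is a hypothetical helper name.

```python
def reflectance_percent(reflected, incident):
    """Per-wavelength reflectance in percent: 100 * reflected / incident."""
    if len(reflected) != len(incident):
        raise ValueError("reflected and incident must have the same length")
    result = []
    for refl, inc in zip(reflected, incident):
        if inc <= 0:
            raise ValueError("incident energy must be positive")
        result.append(100.0 * refl / inc)
    return result


# Illustrative values only (arbitrary energy units).
wavelengths_nm = [450, 550, 670, 800]
incident = [200.0, 200.0, 200.0, 200.0]
reflected = [10.0, 30.0, 8.0, 100.0]

signature = reflectance_percent(reflected, incident)  # [5.0, 15.0, 4.0, 50.0]
```

Plotting `signature` against `wavelengths_nm` gives a spectral signature curve of the kind described in the text.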

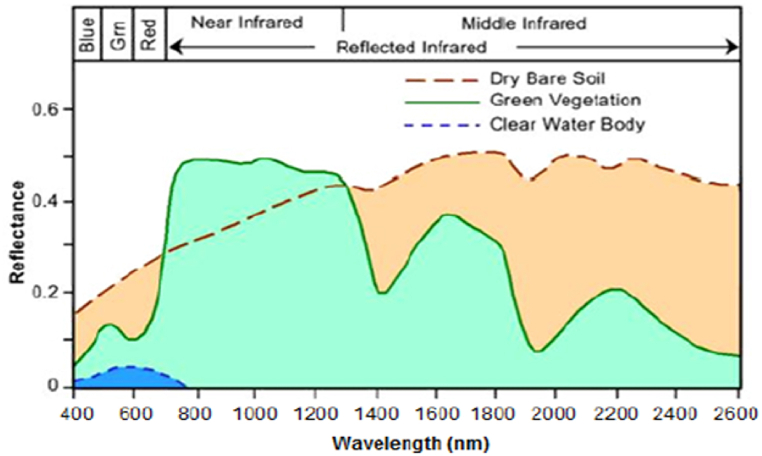

Fig. 4 shows the spectral signatures of different materials present on the earth's surface in hyperspectral imaging. Such plots are used to classify remotely sensed images, because every material in a scene has its own unique spectral signature. The higher the resolution of the sensor, the more information can be extracted from the spectral signature. Hyperspectral imaging sensors have a higher spectral resolution than multispectral imaging sensors and can therefore differentiate subtle variations in a scene. Geologists can use them to map water and land resources [26], and to map hazardous waste and heavy metals in active and historic mining areas. Fig. 4 represents the spectral response of vegetation, water, and soil. It is clear from the figure that vegetation reflectance is high compared to soil, owing to reflectance variation across the electromagnetic spectrum. This is because the factors that affect soil reflectance vary over a narrow range of the electromagnetic spectrum.

Fig. 4.

A collective scheme of Soil, Water & Vegetation mapping on hyperspectral imaging [27].

These factors include the texture of the soil, surface roughness, minerals present such as iron, and the moisture content of the soil. The spectral signature of green vegetation has valleys in the visible range, which indicate that the pigment in green vegetation is chlorophyll [28]; it absorbs in the blue (450 nm) and red (670 nm) regions, known as the chlorophyll absorption bands. If a plant is under stress such that chlorophyll production is reduced, the reflectance in the 670 nm red region increases. The spectral response of water is characterized by light absorption in the infrared region and beyond. Factors that can affect the spectral response of water include suspended sediments and increased levels of chlorophyll. In such cases, the spectral response shifts accordingly, indicating the presence of suspended sediments or algae in the water.
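One common way to use spectral signatures for classification is to compare each pixel spectrum with a library of reference signatures. The sketch below uses the spectral angle as the similarity measure; this technique is not named in the text and is chosen here only as an illustration. The reference reflectances (at 450, 550, 670, and 800 nm) are made-up values that loosely follow the vegetation, soil, and water behaviour described above.

```python
import math


def spectral_angle(a, b):
    """Angle in radians between two spectra treated as vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("spectra must be non-zero")
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))  # guard rounding
    return math.acos(cosine)


def classify(pixel, library):
    """Return the library label whose reference spectrum has the smallest angle."""
    return min(library, key=lambda name: spectral_angle(pixel, library[name]))


# Hypothetical reference signatures: reflectance at 450, 550, 670, 800 nm.
library = {
    "vegetation": [0.05, 0.12, 0.04, 0.50],
    "soil": [0.10, 0.15, 0.20, 0.30],
    "water": [0.08, 0.06, 0.03, 0.01],
}

pixel = [0.06, 0.14, 0.05, 0.45]
label = classify(pixel, library)                             # "vegetation"
brighter_label = classify([2 * v for v in pixel], library)   # "vegetation"
```

Because the angle depends only on the shape of the spectrum, uniformly scaling the pixel brightness does not change the assigned label in this sketch.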

1.2. Pros & cons of hyperspectral imaging

The pros of using Hyperspectral Imaging in different applications, such as agriculture and food quality estimation, are as follows [29]:

- (i) It is a non-invasive, non-contact, non-destructive technology that helps ensure the quality and safety of food goods.
- (ii) The experiments use no chemicals and are therefore environmentally safe.
- (iii) The processing time required for quality evaluation and food control/storage is low compared to chemical and traditional approaches.
- (iv) It gives an improved understanding of the chemical constituents of food products, and is therefore also known as chemical imaging.
- (v) It allows appropriate regions of the image to be selected for critical analysis.
- (vi) It acquires spatial and spectral information together, which provides more appropriate and accurate data about the chemical samples of interest and makes it possible to refine the data and carry out further experiments.

Despite its pros, Hyperspectral Imaging also has some cons [29].

- (i) A hyperspectral imaging system is very costly compared to other image processing techniques.
- (ii) Since the data size of hyperspectral imaging is large, high-speed computers are needed to process the data and high-capacity drives to store it.
- (iii) While the images are being acquired, the signal can be affected by ambient conditions such as scattering and illumination, which results in a poor signal-to-noise ratio.
- (iv) Detecting and identifying different items within the same image using spectral data is difficult unless the objects have distinct absorption features.

2. Applications of HSI

The use of HSI is growing rapidly across a wide variety of military, industrial, and commercial applications. This section focuses on HSI applications in the quality and safety of food, medical fields, water resource and flood management, agriculture, forensics, homeland and defense security, plant detection, and weed and crop discrimination.

A. Safety and Quality Assessment of food

The demand for low-cost production and high efficiency creates various challenges for the safety and quality of food and food products. Distinct biological, chemical, and physical attributes of food are examined to assess its safety and quality. Traditional approaches based on biological, chemical, and visual inspection of food are environmentally unfriendly, time-consuming, and destructive. Advances in instrumentation and computer engineering have enabled faster and more efficient food assessment. Machine learning and computer vision-based approaches using image processing have been successfully adapted to assess the external attributes of food and food products [[30], [31], [32], [33], [34], [35]]. These approaches cannot examine the internal attributes of food because they do not capture spectral information. Near-infrared spectroscopy overcomes this limitation of machine learning and computer vision because of the relation between the near-infrared spectrum and food components [36,37]. HSI provides a large amount of spatial as well as spectral information, which makes HSI methods suitable for the safety and quality assessment of food [38]. Defect identification [39,40] and contamination detection [41,42] in food products can be carried out using HSI. Post-harvest processing such as grading, sorting, chemical prediction, and bruise recognition can also be done using novel HSI approaches. Archibald et al. [43] presented an approach for wheat classification using short-wavelength near-infrared spectral imaging. The model uses regression analysis to select wavelengths, and kernel segmentation with histogram equalization to classify wheat. Mahesh et al. [44] proposed a statistical ANN classifier for distinguishing wheat that achieves 100 % accuracy. Singh et al. [45] presented a model that uses histogram and statistical (color/textural/morphological) features with a BPNN classifier and achieves 96.40 % accuracy. Singh et al. [46] proposed statistical features with a linear/quadratic/Mahalanobis classifier to achieve 99.30 % accuracy. William et al. [47] presented principal component analysis-based feature extraction with a PLS-DA model as the classifier to classify maize with 86.00 % accuracy. Valenzuela et al. [48] proposed an approach for the solid content and firmness of blueberries and achieved 87.00 % accuracy. Huang et al. [49] presented SVM-based classification of apples and achieved 82.50 % accuracy. Huang et al. [50] proposed a Gabor filter and GLCM to identify salmon. Ivorra et al. [51] proposed a model to identify expired vacuum-packed salmon and reached an 82.70 % recognition rate. Serranti et al. [52] presented a PCA and PLS-DA-based classification model for oat and groat and achieved 100 % accuracy. The literature reports different HSI-based food grain evaluations, as shown in Table 3.

Table 3.

Distinct approaches for hyperspectral imaging-based food grains evaluation.

| Authors | HSI | Range (nm) | Food Grain | Approach | Accuracy (%) |
|---|---|---|---|---|---|
| Archibald et al. [43] | NIR | 632–1098 | Wheat | Histogram | – |
| Mahesh et al. [44] | NIR | 960–1700 | Wheat | ANN | 100 |
| Singh et al. [45] | NIR | 700–1000 | Wheat | BPNN | 96.40 |
| Singh et al. [46] | NIR | 700–1000 | Wheat | Quadratic | 99.30 |
| McGoverin et al. [53] | NIR | 1920–1940 | Wheat | PLS-DA | – |
| Weinstock et al. [54] | NIR | 950–1700 | Corn | PLSA | – |
| William et al. [47] | NIR | 960–1662 | Maize | PLS-DA | 86.00 |
| William et al. [55] | NIR | 1000–2498 | Maize | PLS RM | – |
| Shahinet et al. [56] | NIR | 400–1000 | Wheat | PLS | 90.60 |
| Caporaso et al. [57] | NIR | – | Cereal | – | – |
| Valenzuela et al. [48] | NIR | 500–1000 | Blueberries | – | 87.00 |
| Huang et al. [49] | NIR | 600–1000 | Apple | SVM | 82.50 |
| Huang et al. [50] | NIR | 1193–1217 | Salmon | GLCM | – |
| Ivorra et al. [51] | NIR | – | Salmon | PLS-DA | 82.70 |
| Serranti et al. [52] | NIR | 1006–1650 | Oat & Groat | PLS-DA | 100 |

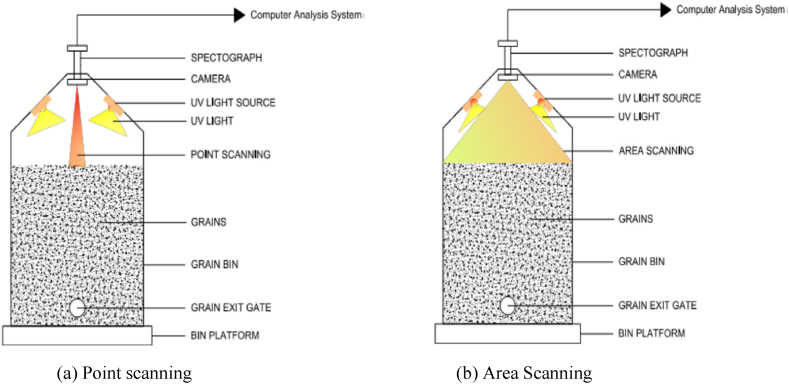

HSI plays a distinctive role in grain storage: it is used to detect deteriorated seed kernels and coats, for bin sensing, and for insect and fungal detection in grain (see Fig. 5). Fig. 6 shows a simplified diagram of a feasible HSI setup for evaluating and checking the quality of bulk grain in storage. The model consists of a camera, a UV light source (Liquid Crystal Tuneable Filter), and a computer (image processing unit). Each element has its own function in the hyperspectral imaging process. The camera acquires spatial and spectral information, and the spectrograph disperses the light into several wavelengths. The UV light source illuminates the grains, and the computer stores and composes the 3-D hypercube. Deep scanning can be done in the FIR and MIR spectral ranges, selecting the optimum wavelengths for analysis using Partial Least Squares (PLS), Genetic Algorithm Partial Least Squares (GAPLS), a Convolutional Neural Network (CNN), or Principal Component Analysis (PCA).

Fig. 5.

Soil, Water & Vegetation curve for spectral response [27].

Fig. 6.

Hyperspectral Imaging scanning [58].

B. Medical Analysis

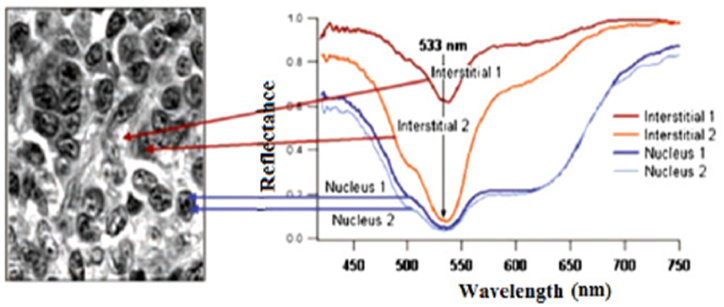

The traditional approaches for clinical medical analysis are magnetic resonance imaging (MRI) and computed tomography (CT). Paly et al. [59] compared CT and MRI for sclerosis detection in an evaluation of over 200 patients. Hovels et al. [60] used both MRI and CT for lymph node assessment in prostate cancer; MRI achieved better results than CT. Over the past decades, spectral techniques have delivered significant results with high accuracy and speed, giving medical investigators additional capabilities. The optical characteristics of tissue provide valuable diagnostic information. HSI is widely used for medical analysis because it can provide biomarker information from real-time data together with spectral information about tissue. Besides medical analysis, HSI is also used in image-guided surgery. Kumar et al. [61] presented Fourier transform infrared (FTIR) spectroscopic imaging with principal component analysis (PCA) for breast cancer analysis. The approach was applied to histopathological specimens of breast carcinoma. The spectral band across 5882–6250 nm may be used for breast cancer analysis. Liu et al. [62] proposed using the reflectance spectrum for tumor detection. Dicker et al. [63] used high-resolution hyperspectral imaging to detect skin abnormalities in normal and abnormal skin samples stained with hematoxylin and eosin. The biopsy is characterized by thickness, magnification, and staining to determine the spectral wavelength. It is apparent that an absorption band (at approximately 533 nm) can be recognized throughout the sample, implying that hematoxylin stains almost everything. The grayscale representation of a melanoma lesion and the transmission spectra obtained from the internal area and within the nucleus are presented in Fig. 7.

Fig. 7.

Grayscale representation of melanoma lesion and internal area with transmission spectra [63].

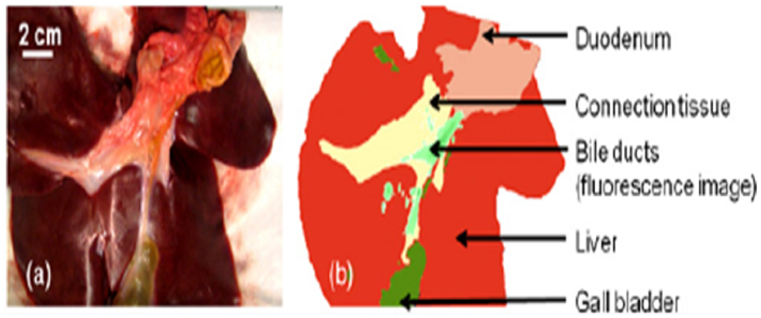

Mitra et al. [64] proposed biliary anatomy identification and classification using reflectance and fluorescence imaging. Fig. 8 shows photographic and segmented images of biliary tissue after hyperspectral and fluorescence imaging. Image processing and spectral information approaches are applied to detect and classify the biliary anatomy. Fluorescence imaging provides dynamic motion information during surgery, whereas HSI supports bile duct identification and the exclusion of contaminated tissues. As seen from the figure, distinct tissue types exhibit distinct spectral characteristics because of their different tissue properties. The color segmentation allows clear differentiation between the gallbladder, duodenum, liver, and ligament tissues. The segmented image is then combined with the fluorescence image of the same tissue, so that the real-time fluorescence of the ICG-loaded microballoons is overlaid on the segmented background. The dual image identifies the biliary tissue and its location relative to the surrounding components. Campbell et al. [65] presented a renal tumor diagnosis approach for laparoscopic partial nephrectomy. Oluenty et al. [66] proposed digital light processing to characterize renal oxygenation. This medical analysis was carried out on 18 patients. The literature reports different HSI-based medical diagnosis evaluations, as shown in Table 4.

Fig. 8.

(a) Photographic image of biliary (b) Classification of biliary based on hyperspectral imaging [64].

Table 4.

Distinct HSI-based medical analysis.

| Author | System | Applications |
|---|---|---|
| Huang et al. [67] | Multi HSI | Classify Hemocytes |
| Huang et al. [68] | HSI | Blood Cells |
| Wang et al. [69] | HSI | Leukocyte |
| Sommer et al. [70] | Deep HSI | Cancer Cells |
| Li et al. [71] | HSI | Cancer Cells |
| Bengs et al. [72] | HSI | Vivo Tumor |
| Grigoroiu et al. [73] | HSI | Endoscopy |
| Manifold et al. [74] | HSI | Multiple Drug Location |
| Halicek et al. [75] | HSI | Cancer Detection |
| Trajanovski et al. [76] | VIS/NIR | Tumor Capture |
| Cervantes et al. [77] | HSI | Liver/Thyroid |
| Garifullin et al. [78] | HSI | Retinal Vessels |
| Trajanovski et al. [79] | HSI | Carcinoma Tumor |
| Seidlitz et al. [80] | HSI | Organ Segmentation |

C. Agriculture

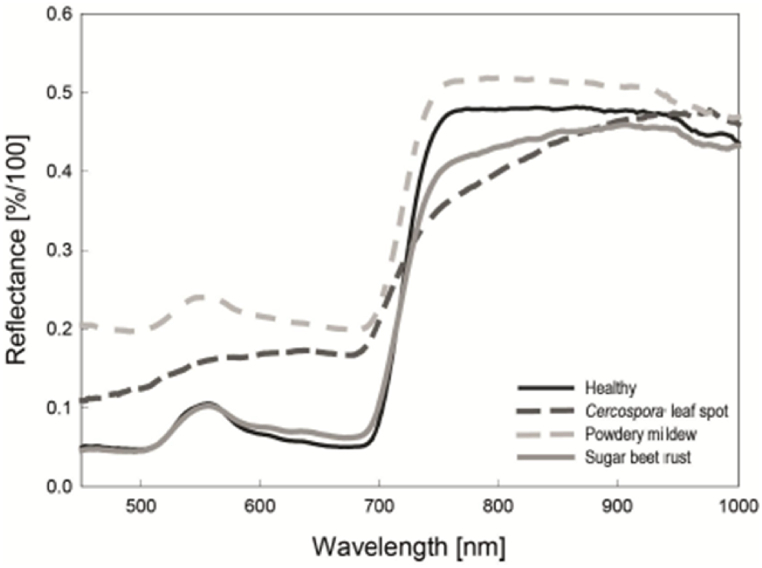

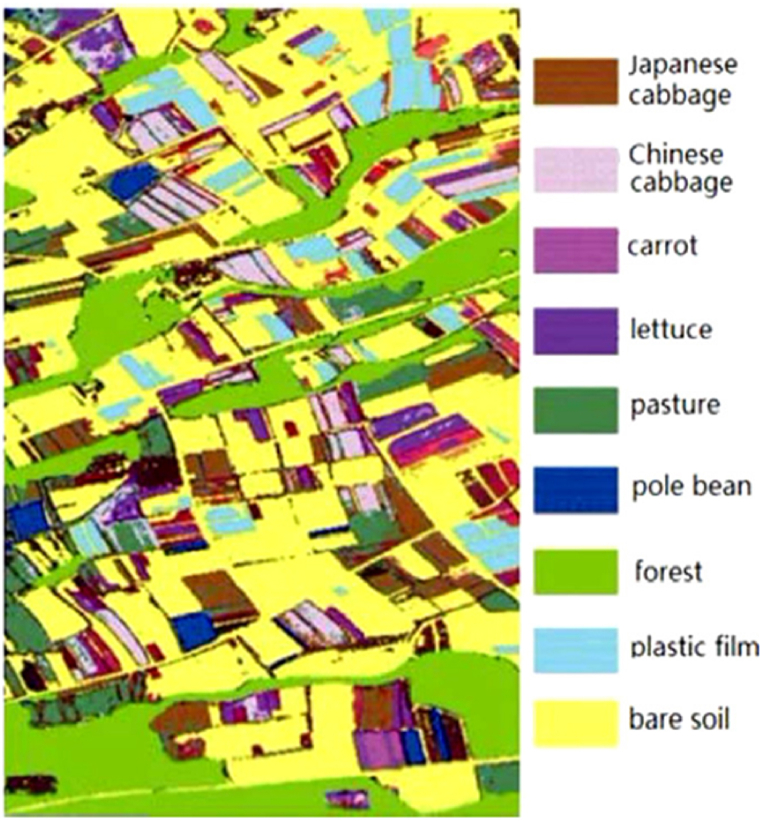

The rapidly growing world population will require an increase in crop production through 2050 [81]. Nonetheless, numerous studies show that crop production is not growing at a rate that meets the needs of the increasing population. Various studies have shown that improving crops can raise food quality while limiting the amount of cultivated land [82,83]. Global undernourishment and poverty can be reduced by improving crop production [84,85]. Traditionally, manual inspection was used to monitor insect attacks, diseases, nutrient status, and water stress. Manual visual inspection limits identification because disease symptoms may emerge late. These traditional methods can be replaced by ground-based and airborne HSI methods for evaluating crops, analyzing soil, and measuring vegetation effectively. Drought stress is the major factor affecting crop yield, and yield can be increased by detecting water stress in time. Under high water stress, photosynthetic activity changes. These changes give the crop a yellow tint because of increased reflectance at red wavelengths. An HSI system can distinguish this change at an earlier stage than the human eye. Colombo et al. [86] proposed leaf water thickness detection from reflectance in the infrared and visible spectrum. Mahlein et al. [87] reported distinct stages of development in sugar leaves. The spectral resolution of healthy/defective sugar leaves is shown in Fig. 9. Liu et al. [88] proposed wheat prediction from spectral parameters with a classification of maturity, as indicated in Fig. 10. The literature reports different HSI-based agricultural evaluations, as shown in Table 5.

Fig. 9.

Spectral resolution of healthy/defective sugar leaves [87].

Fig. 10.

Spectral parameters with matured classification [87].

Table 5.

Different HSI-based agricultural evaluations.

| Authors | Spatial Resolution | Application |
|---|---|---|
| Ferguson et al. [89] | 1 m | Crop Production |
| Zhang et al. [90] | 1 m | Crop Production |
| Caturegli et al. [91] | 30 m | Crop Production |
| Tian et al. [92] | 1.4 m | Crop Production |
| Kokhan et al. [93] | 2 m | Crop Production |
| Ahn et al. [94] | 2.8 m | Crop Production |
| Meroni et al. [95] | 40 m | Crop Production |
| Chua et al. [96] | 3.2 m | Crop Production |
| Baraldi et al. [97] | 20 m | Water Management |
| Choubey et al. [98] | 72 m | Water Management |
| Hasab et al. [99] | 15 m | Water Management |
| Goetz et al. [100] | 3.2 m | Nutrient Management |
| Caturegli et al. [101] | 1.65 m | Nutrient Management |
| Sharifi et al. [102] | 10 m | Nutrient Management |
| Yang et al. [103] | 3.2 m | Crop Harvest |
| Maselli et al. [104] | 250 m | Crop Harvest |
| Aicha et al. [105] | 10 m | Crop Harvest |
| Denis et al. [106] | 4 m | Crop Harvest |
| Vibhute et al. [107] | 1 km | Soil Moisture |
| Yang et al. [108] | 6 m | Crop Detection |
| Sidike et al. [109] | 1.24 m | Weed Management |

Zwiggelaar [110] reviewed the spectral properties of plants used to differentiate weeds and crops. The review compares distinct modeling methods for evaluating reflection using chemical and optical information. Tian and Thorp [111] reviewed weed identification using remote sensing in agriculture. Their review reports the different canopy spectral responses used for crop and weed discrimination with remote sensing technology and lighting. Hadoux et al. [112] presented a method that uses spectral resolution under uncontrolled orientation and lighting. The vegetation indices of wheat and weeds are very similar, so extensive spectral analysis was required to differentiate between them. Piron et al. [113] reported suitable wavelengths for differentiating between weeds and carrots or weeds and potatoes under artificial lighting. Various other research papers have been published [[114], [115], [116]]; some focus on particular techniques while others center on real-time frameworks.

D. Water Resource Management

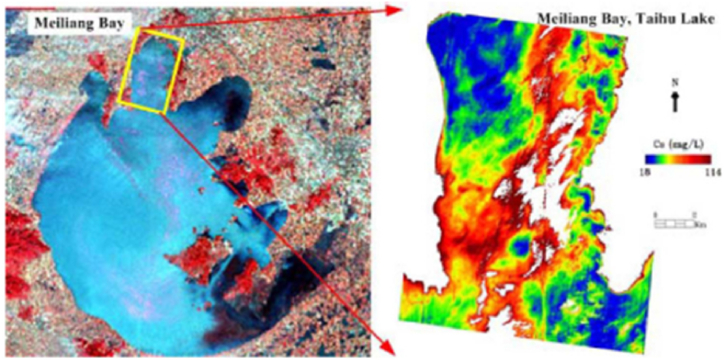

Water is among the most critical resources for humanity's survival on earth. Therefore, efficient management, analysis, and quality monitoring of water have received considerable attention from investigators [[117], [118], [119], [120], [121], [122]]. Water management is a distinct application of HSI. The temporal, spectral, and spatial parameters of water bodies are measured accurately by tracking temporal deviation. Xingtang et al. [123] reported an investigation of approaches relevant to HSI-based water monitoring. Li et al. [124] further used this approach to evaluate the suspended matter concentration of Meiliang Bay in Taihu Lake from CHRIS data, as indicated in Fig. 11.

Fig. 11. Suspended matter concentration of Meiliang Bay in Taihu Lake from CHRIS data [117].

E. Plant Stress Identification

The symptoms of stress in plants depend on abiotic and biotic factors. When a plant comes under stress, its productivity falls significantly. Consequently, identifying plant stress at an early stage is crucial for reducing productivity losses. Various researchers have proposed HSI and machine vision for identifying plant stress in olive orchards and tomato canopies [[125], [126], [127], [128]]. Nonetheless, stress in plants may be the cumulative result of nutrient, disease, and water effects, which makes reliable stress identification a major challenge. Several studies report that HSI is a powerful tool for efficient plant stress identification. Using an HSI camera, the spectral response of the plant is inspected to classify the onset and intensity of plant stress.

Kim et al. [129] studied greenhouse apple trees under five distinct levels of water treatment. An HSI system with a digital camera and an illumination sensor, covering a spectral range of 385–1000 nm, was used to monitor the vegetation. In the visible region, light is absorbed by xanthophylls and chlorophyll, whereas the NIR region is dominated by reflectance. Stress reduces photosynthetic absorption, which changes the reflectance: it increases reflectance in the visible region and reduces it in the NIR region. Therefore, stress in plants can be predicted from these distinct spectral regions. Zygielbaum et al. [130] reported stress in maize using two reflectance wavelengths that gave optimum results and were associated with water content. A vegetation index is a combination of spectral bands that accentuates the spectral features of green vegetation. Sanches et al. [131] reported remote sensing and photogrammetry for plant stress identification.
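As an illustration of how a vegetation index combines a visible band with an NIR band, the sketch below computes the normalized difference vegetation index (NDVI) for a single pixel spectrum. NDVI is used here only as a common example of such an index; the cited studies may have used other indices. The band centres (670 nm for red, 800 nm for NIR), the wavelength grid, the function names, and the reflectance values are all assumptions made up for this example and are not taken from the reviewed papers.

```python
def nearest_band(wavelengths_nm, target_nm):
    """Return the index of the band whose centre is closest to target_nm."""
    return min(range(len(wavelengths_nm)),
               key=lambda i: abs(wavelengths_nm[i] - target_nm))


def ndvi(wavelengths_nm, reflectance, red_nm=670.0, nir_nm=800.0):
    """NDVI = (NIR - Red) / (NIR + Red) for one pixel spectrum.

    red_nm and nir_nm are assumed band centres, not values from the review.
    """
    red = reflectance[nearest_band(wavelengths_nm, red_nm)]
    nir = reflectance[nearest_band(wavelengths_nm, nir_nm)]
    if nir + red == 0:
        return 0.0  # avoid division by zero for dark pixels
    return (nir - red) / (nir + red)


# Hypothetical band centres (nm) inside a 385-1000 nm sensor range.
wavelengths = [450.0, 550.0, 670.0, 720.0, 800.0, 900.0]

# Made-up reflectance spectra: the stressed spectrum has higher visible
# reflectance and lower NIR reflectance than the healthy one.
healthy = [0.04, 0.10, 0.05, 0.30, 0.50, 0.52]
stressed = [0.06, 0.13, 0.12, 0.28, 0.40, 0.41]

ndvi_healthy = ndvi(wavelengths, healthy)
ndvi_stressed = ndvi(wavelengths, stressed)
print(f"healthy NDVI:  {ndvi_healthy:.3f}")
print(f"stressed NDVI: {ndvi_stressed:.3f}")
```

With these illustrative spectra the stressed pixel yields a lower index than the healthy one, which matches the qualitative behaviour described above. In a real hypercube the same function would be applied to the spectrum of every pixel.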

F. Flood Management

Ground and airborne observations of water conditions are often insufficient, which limits the capability to monitor and detect floods. I. P. et al. [132] reported an enhanced early detection system that works within hours using remote sensing technology. Brackenridge et al. [133] described a comprehensive application for early flood identification over land, rivers, and rainfall using NASA space observations and geological surveys. Reinartz and Glabu [134] reported flood impact, moisture content in floodplain areas, and sediment accumulation using remotely sensed data. Dartus & Roux [135] investigated the estimation of river discharge and flood hydrographs by optimizing a model of the system response. The numerous challenges related to HSI under varying radiometric and metrological conditions are reported in Ref. [136]; the approach there minimizes the effect of illumination variation using radiometric methods. Several investigators have studied timely mapping and monitoring with remote sensors to support restoration [[137], [138], [139]].

3. Conclusion

The role of HSI in material detection, identification, geo-observation, and physical parameter estimation is unmatched among remote sensing approaches. Therefore, spaceborne and airborne HSI-based research has increased. Recently, several new applications have adopted HSI, which has encouraged investigators. Various mathematical algorithms and tools have been investigated for HSI data, such as classification, data fusion, unmixing, anomaly detection, and efficient computation, and distinct applications of HSI use these algorithms. This review focuses on the use of HSI sensors for food safety and quality assessment, medical analysis, agriculture, water resources, plant stress identification, weed and crop discrimination, and flood management. Various investigators have proposed promising solutions for automatic systems based on HSI. Future research may use this review as a baseline and as a reference for analysing future advancements.

Funding

This work was supported by (i) Suranaree University of Technology (SUT), (ii) Thailand Science Research and Innovation (TSRI), and (iii) National Science, Research and Innovation Fund (NSRF).

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Informed consent

Not applicable.

CRediT authorship contribution statement

Anuja Bhargava: Writing – original draft, Methodology, Conceptualization. Ashish Sachdeva: Writing – original draft, Methodology, Conceptualization. Kulbhushan Sharma: Writing – original draft, Resources, Investigation. Mohammed H. Alsharif: Writing – review & editing, Validation, Project administration, Formal analysis. Peerapong Uthansakul: Writing – review & editing, Visualization, Investigation, Funding acquisition. Monthippa Uthansakul: Writing – review & editing, Validation.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Contributor Information

Anuja Bhargava, Email: anuja1012@gmail.com.

Ashish Sachdeva, Email: er.ashishsachdeva@gmail.com.

Kulbhushan Sharma, Email: kulbhushan.sharma@chitkara.edu.in.

Mohammed H. Alsharif, Email: malsharif@sejong.ac.kr.

Peerapong Uthansakul, Email: uthansakul@sut.ac.th.

Monthippa Uthansakul, Email: mtp@sut.ac.th.

List of abbreviations

| Abbreviation | Definition |
| --- | --- |
| HSI | Hyperspectral Imaging |
| 2D/3D | Two/Three-Dimensional |
| RGB | Red Green Blue |
| UAV | Unmanned Aerial Vehicle |
| AVIRIS | Airborne Visible/Infrared Imaging Spectrometer |
| AISA | Airborne Imaging Spectrometer for Applications |
| PROBA | Project for On-Board Autonomy |
| CHRIS | Compact High Resolution Imaging Spectrometer |
| UHD | Ultra-High Definition |
| NIR | Near Infrared |
| VIR | Visual & Infrared |
| UV | Ultraviolet |
| PLSA | Partial Least Squares Analysis |
| PLSDA | Partial Least Squares Discriminant Analysis |
| PLSRM | Partial Least Squares Regression Model |
| GAPLS | Genetic Algorithm Partial Least Squares |
| FIR | Far Infrared Radiation |
| MIR | Mid Infrared Radiation |
| GLCM | Gray Level Co-occurrence Matrix |
| SVM | Support Vector Machine |
| ANN | Artificial Neural Network |
| BPNN | Back Propagation Neural Network |
| CNN | Convolutional Neural Network |
| PCA | Principal Component Analysis |
| MRI | Magnetic Resonance Imaging |
| CT | Computed Tomography |
| FTIR | Fourier Transform Infrared |
| ICG | Indocyanine Green |
| NASA | National Aeronautics and Space Administration |

References

- 1. Selci S. The future of hyperspectral imaging. J. Imaging. 2019;5:84. doi: 10.3390/jimaging5110084.

- 2. Kuswidiyanto L.W., Noh H.-H., Han X. Plant disease diagnosis using deep learning based on aerial hyperspectral images: a review. Rem. Sens. 2022;14:6031. doi: 10.3390/rs14236031.

- 3. Goetz A.F.H., Vane G., Solomon J.E., Rock B.N. Imaging spectrometry for earth remote sensing. Science. 1985;228(4704):1147–1153. doi: 10.1126/science.228.4704.1147.

- 4. Transon J., d'Andrimont R., Maugnard A., Defourny P. Survey of hyperspectral earth observation applications from space in the Sentinel-2 context. Rem. Sens. 2018;10(2)

- 5. Transon J., d'Andrimont R., Maugnard A., Defourny P. 2017 9th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp) 2017. Survey of current hyperspectral earth observation applications from space and synergies with Sentinel-2; pp. 1–8.

- 6. Huadong G., Jianmin X., Guoqiang N., Jialing M. A new airborne earth observing system and its applications. IGARSS 2001. Scanning the Present and Resolving the Future. Proceedings. IEEE 2001 International Geoscience and Remote Sensing Symposium (Cat. No. 01CH37217) 2001;1:549–551. 1.

- 7. Wendel A. The University of Sydney; Sydney, Australia: 2018. Hyperspectral Imaging from Ground Based Mobile Platforms and Applications in Precision Agriculture; School of Aerospace, Mechanical and Mechatronic Engineering.

- 8. Boldrini B., Kessler W., Rebner K., Kessler R.W. Hyperspectral imaging: a review of best practice, performance and pitfalls for in-line and on-line applications. J. Near Infrared Spectrosc. 2012;20:483–508.

- 9. Vasefi F., Booth N., Hafizi H., Farkas D.L. In: Hyperspectral Imaging in Agriculture, Food and Environment. Maldonado A.I.L., Fuentes H.R., Contreras J.A.V., editors. InTech; London, UK: 2018. Multimode hyperspectral imaging for food quality and safety.

- 10. Chen Y., Guerschman J., Cheng Z., Guo L. Remote sensing for vegetation monitoring in carbon capture storage regions: a review. Appl. Energy. 2019;240:312–326.

- 11. Shaw G.A., Burke H.K. Spectral imaging for remote sensing. Linc. Lab. J. 2003;14:3–28.

- 12. Qian S.E. CRC Press; Boca Raton, FL, USA: 2020. Hyperspectral Satellites and System Design.

- 13. Hagen N., Kudenov M.W. Review of snapshot spectral imaging technologies. Opt. Eng. 2013;52

- 14. Lu B., Dao P., Liu J., He Y., Shang J. Recent advances of hyperspectral imaging technology and applications in agriculture. Rem. Sens. 2020;12:2659.

- 15. Pearlman J.S., Barry P.S., Segal C.C., Shepanski J., Beiso D., Carman S.L. Hyperion, a space-based imaging spectrometer. IEEE Trans. Geosci. Rem. Sens. 2003;41(6):1160–1173.

- 16. Kunkel B., Blechinger F., Lutz R., Doerffer R., van der Piepen H., Schroder M. In: Proc. SPIE 0868 Optoelectronic Technologies for Remote Sensing from Space. Seeley J., Bowyer S., editors. 1988. ROSIS (Reflective Optics System Imaging Spectrometer) - a candidate instrument for polar platform missions; p. 8.

- 17. Green R.O., Eastwood M.L., Sarture C.M., Chrien T.G., Aronsson M., Chippendale B.J., Faust J.A., Pavri B.E., Chovit C.J., Solis M., Olah M.R., Williams O. Imaging spectroscopy and the airborne visible/infrared imaging spectrometer (AVIRIS). Remote Sens. Environ. 1998;65(3):227–248.

- 18. Babey S., Anger C. A compact airborne spectrographic imager (CASI). Quantitative Remote Sensing: An Economic Tool for the Nineties. 1989;1:1028–1031.

- 19. Cocks T., Jenssen R., Stewart A., Wilson I., Shields T. Proceedings of the 1st EARSeL Workshop on Imaging Spectroscopy. EARSeL; 1998. The HyMap™ airborne hyperspectral sensor: the system, calibration and performance; pp. 37–42.

- 20. Rickard L.J., Basedow R.W., Zalewski E.F., Silverglate P.R., Landers M. Vol. 1937. International Society for Optics and Photonics; 1993. HYDICE: an airborne system for hyperspectral imaging; pp. 173–180. (Imaging Spectrometry of the Terrestrial Environment).

- 21. Eckardt A., Horack J., Lehmann F., Krutz D., Drescher J., Whorton M., Soutullo M. 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS) IEEE; 2015. DESIS (DLR Earth Sensing Imaging Spectrometer for the ISS-MUSES platform); pp. 1457–1459.

- 22. Gonzalez R.C. Pearson; London, U.K.: 2009. Digital Image Processing.

- 23. Smith R. 2001. Introduction to Remote Sensing of the Environment. [Online]. Available: http://www.microimages.com

- 24. Jensen J.R. Pearson; London, U.K.: 2005. Introductory Digital Image Processing: A Remote Sensing Perspective.

- 25. Thau J. Encyclopedia of GIS. Springer; New York, NY, USA: 2008. Temporal resolution; pp. 1150–1151.

- 26. Clark R.N., Swayze G.A. Mapping minerals, amorphous materials, environmental materials, vegetation, water, ice and snow, and other materials: the USGS tricorder algorithm. Proc. Summaries 5th Annu. JPL Airborne Earth Sci. Workshop. 1995;1:39–40.

- 27. Khan M.J., Khan H.S., Yousaf A., Khurshid K., Abbas A. Modern trends in hyperspectral image analysis: a review. IEEE Access. 2018;6:14118–14129. doi: 10.1109/ACCESS.2018.2812999.

- 28. Shaw G.A., Burke H.K. Spectral imaging for remote sensing. Linc. Lab. J. 2003;14(1):3–28.

- 29. Lu Guolan, Fei Baowei. Medical hyperspectral imaging: a review. J. Biomed. Opt. 20 January 2014;19(1) doi: 10.1117/1.JBO.19.1.010901.

- 30. Chao K., Chen Y.R., Early H., Park B. Color image classification systems for poultry viscera inspection. Proc. SPIE. Jan. 1999;3544:363–369.

- 31. Lu, Tan J., Shatadal P., Gerrard D.E. Evaluation of pork color by using computer vision. Meat Sci. 2000;56(1):57–60. doi: 10.1016/s0309-1740(00)00020-6.

- 32. Tan J. Meat quality evaluation by computer vision. J. Food Eng. 2004;61(1):27–35.

- 33. Sullivan M.G., Byrne D.V., Martens H., Gidskehaug L.H., Andersen H.J., Martens M. Evaluation of pork colour: prediction of visual sensory quality of meat from instrumental and computer vision methods of colour analysis. Meat Sci. 2003;65(2):909–918. doi: 10.1016/S0309-1740(02)00298-X.

- 34. Faucitano L., Huff P. F. Teuscher, Gariepy C., Wegner J. Application of computer image analysis to measure pork marbling characteristics. Meat Sci. 2005;69(3):537–543. doi: 10.1016/j.meatsci.2004.09.010.

- 35. Huang H., Liu L., Ngadi L.M., Gariépy C. Prediction of pork marbling scores using pattern analysis techniques. Food Control. 2013;31(1):224–229.

- 36. Rødbotten R., Nilsen B.N., Hildrum K.I. Prediction of beef quality attributes from early post mortem near infrared reflectance spectra. Food Chem. 2000;69(4):427–436.

- 37. Prevolnik M., et al. Predicting intramuscular fat content in pork and beef by near infrared spectroscopy. J. Near Infr. Spectrosc. 2005;13(2):77–85.

- 38. Qiao J., Ngadi M.O., Wang N., Gariépy C., Prasher S.O. Pork quality and marbling level assessment using a hyperspectral imaging system. J. Food Eng. 2007;83(1):10–16.

- 39. Xing J., Bravo C., Jancsók P.T., Ramon T., Baerdemaeker J. Detecting bruises on 'Golden Delicious' apples using hyperspectral imaging with multiple wavebands. Biosyst. Eng. 2005;90(1):27–36.

- 40. Nagata M., Tallada J.G., Kobayashi T. Bruise detection using NIR hyperspectral imaging for strawberry (Fragaria × ananassa Duch.). Environ. Control Biol. 2006;44(2):133–142.

- 41. Yao H., Hruska Z., Kincaid R., Brown R.L., Bhatnagar D., Cleveland T.E. Detecting maize inoculated with toxigenic and atoxigenic fungal strains with fluorescence hyperspectral imagery. Biosyst. Eng. 2013;115(2):125–135.

- 42. Kim I., Kim M.S., Chen Y.R., Kong S.R. Detection of skin tumors on chicken carcasses using hyperspectral fluorescence imaging. Trans. ASAE (Am. Soc. Agric. Eng.) 2004;47(5):1785–1792.

- 43. Archibald D.D., Thai C.N., Dowell F.E. Development of short-wavelength near-infrared spectral imaging for grain color classification. Precision Agricult. Biological Quality. 1999;3543:189–198.

- 44. Mahesh S. A. Manickavasagan, Jayas D., Paliwal J., White N. Feasibility of near-infrared hyperspectral imaging to differentiate Canadian wheat classes. Biosyst. Eng. 2008;101(1):50–57.

- 45. Singh, Jayas C.S., Paliwal J., White N.D. Identification of insect-damaged wheat kernels using short-wave near-infrared hyperspectral and digital colour imaging. Comput. Electron. Agric. 2010;73(2):118–125.

- 46. Singh, Jayas C.B., Paliwal J., White N.D. Detection of midge-damaged wheat kernels using short-wave near-infrared and digital colour imaging. Biosyst. Eng. 2010;105(3):380–387.

- 47. Williams P., Manley M., Fox G., Geladi P. Indirect detection of Fusarium verticillioides in maize (Zea mays L.) kernels by near infrared hyperspectral imaging. J. Near Infrared Spectrosc. 2010;18(1):49–58.

- 48. Leiva-Valenzuela G.A., Lu R., Aguilera J.M. Prediction of firmness and soluble solids content of blueberries using hyperspectral reflectance imaging. J. Food Eng. 2013;115(1):91–98.

- 49. Huang R., Zhu Q., Wang B., Lu R. Analysis of hyperspectral scattering images using locally linear embedding algorithm for apple mealiness classification. Comput. Electron. Agric. Nov. 2012;89:175–181.

- 50. Huang H., Liu L., Ngadi M.O., Gariépy C. Rapid and non-invasive quantification of intramuscular fat content of intact pork cuts. Talanta. Feb. 2014;119:385–395. doi: 10.1016/j.talanta.2013.11.015.

- 51. Ivorra E., Giron J., Sanchez A.J., Verdu S., Barat S.M., Grau R. Detection of expired vacuum-packed smoked salmon based on PLS-DA method using hyperspectral images. J. Food Eng. 2013;117(3):342–349.

- 52. Serranti S., Cesare D., Marini F., Bonifazi G. Classification of oat and groat kernels using NIR hyperspectral imaging. Talanta. Jan. 2013;103:276–284. doi: 10.1016/j.talanta.2012.10.044.

- 53. McGoverin C.M., Engelbrecht P., Geladi M., Manley P. Characterisation of nonviable whole barley, wheat and sorghum grains using near-infrared hyperspectral data and chemometrics. Anal. Bioanal. Chem. 2011;401(7):2283–2289. doi: 10.1007/s00216-011-5291-x.

- 54. Weinstock B.A., Janni J., Hagen L., Wright S. Prediction of oil and oleic acid concentrations in individual corn (Zea mays L.) kernels using near-infrared reflectance hyperspectral imaging and multivariate analysis. Appl. Spectrosc. 2006;60(1):9–16. doi: 10.1366/000370206775382631.

- 55. Williams P.J., Geladi P., Britz T.J., Manley M. Investigation of fungal development in maize kernels using NIR hyperspectral imaging and multivariate data analysis. J. Cereal. Sci. 2012;55(3):272–278.

- 56. Shahin M.A., Hatcher D.W., Symons S.J. Assessment of mildew levels in wheat samples based on spectral characteristics of bulk grains. Qual. Assur. Saf. Crop Foods. 2010;2:133–140.

- 57. Caporaso N., Whitworth M.B., Fisk I.D. Near infrared spectroscopy and hyperspectral imaging for non-destructive quality assessment of cereal grains. Appl. Spectrosc. Rev. 2018;53(8):667–687.

- 58. Aviara Ndubisi A., Jacob Tizhe Liberty, Olatunbosun Ojo S., Shoyombo Habib A., Oyeniyi Samuel K. Potential application of hyperspectral imaging in food grain quality inspection, evaluation and control during bulk storage. Journal of Agriculture and Food Research. 2022;8 ISSN 2666-1543.

- 59. Paty D. MRI in the diagnosis of MS: a prospective study with comparison of clinical evaluation, evoked potentials, oligoclonal banding, and CT. Neurology. Feb. 1988;38(2):180. doi: 10.1212/WNL.38.2.180.

- 60. Hövels A.M. The diagnostic accuracy of CT and MRI in the staging of pelvic lymph nodes in patients with prostate cancer: a metaanalysis. Clin. Radiol. 2008;63(4):387–395. doi: 10.1016/j.crad.2007.05.022.

- 61. Kumar C. Desmedt, Larsimont D., Sotiriou C., Goormaghtigh C. Change in the microenvironment of breast cancer studied by FTIR imaging. Analyst. 2013;138(14):4058–4065. doi: 10.1039/c3an00241a.

- 62. Liu Z., Wang H., Li Q. Tongue tumor detection in medical hyperspectral images. Sensors. 2012;12(1):162–174. doi: 10.3390/s120100162.

- 63. Dicker D.T. Differentiation of normal skin and melanoma using high resolution hyperspectral imaging. Cancer Biol. Ther. 2016;5(8):1033–1038. doi: 10.4161/cbt.5.8.3261.

- 64. Mitra E. Indocyanine-green-loaded microballoons for biliary imaging in cholecystectomy. J. Biomed. Opt. 2012;17(11) doi: 10.1117/1.JBO.17.11.116025.

- 65. Campbell S.C. Guideline for management of the clinical T1 renal mass. J. Urol. 2009;182(4):1271–1279. doi: 10.1016/j.juro.2009.07.004.

- 66. Olweny E.O. Renal oxygenation during robotic-assisted laparoscopic partial nephrectomy: characterization using laparoscopic digital light processing hyperspectral imaging. J. Endourol. 2013;27(3):265–269. doi: 10.1089/end.2012.0207.

- 67. Huang Q., Li W., Xie X. Proceedings of the BIBE 2018; International Conference on Biological Information and Biomedical Engineering, Shanghai, China. 6–8 June 2018. Convolutional neural network for medical hyperspectral image classification with kernel fusion.

- 68. Huang Q., Li W., Zhang B., Li Q., Tao R., Lovell N.H. Blood cell classification based on hyperspectral imaging with modulated Gabor and CNN. IEEE J. Biomed. Health Inform. 2020;24:160–170. doi: 10.1109/JBHI.2019.2905623.

- 69. Wang Q., Wang J., Zhou M., Li Q., Wen Y., Chu J. A 3D attention networks for classification of white blood cells from microscopy hyperspectral images. Opt Laser. Technol. 2021;139

- 70. Sommer F., Sun B., Fischer J., Goldammer M., Thiele C., Malberg H., Markgraf W. Hyperspectral imaging during normothermic machine perfusion—a functional classification of ex vivo kidneys based on convolutional neural networks. Biomedicines. 2022;10:397. doi: 10.3390/biomedicines10020397.

- 71. Li Y., Deng L., Yang X., Liu Z., Zhao X., Huang F., Zhu S., Chen X., Chen Z., Zhang W. Early diagnosis of gastric cancer based on deep learning combined with the spectral-spatial classification method. Biomed. Opt Express. 2019;10:4999. doi: 10.1364/BOE.10.004999.

- 72. Bengs M., Gessert N., Laffers W., Eggert D., Westermann S., Mueller N.A., Gerstner A.O.H., Betz C., Schlaefer A. Spectral spatial recurrent-convolutional networks for in-vivo hyperspectral tumor type classification. arXiv. 2020 arXiv:2007.

- 73. Grigoroiu A., Yoon J., Bohndiek S.E. Deep learning applied to hyperspectral endoscopy for online spectral classification. Sci. Rep. 2020;10:3947. doi: 10.1038/s41598-020-60574-6.

- 74. Manifold B., Men S., Hu R., Fu D. A versatile deep learning architecture for classification and label-free prediction of hyperspectral images. Nat. Mach. Intell. 2021;3:306–315. doi: 10.1038/s42256-021-00309-y.

- 75. Halicek M., Dormer J.D., Little J.V., Chen A.Y., Fei B. Tumor detection of the thyroid and salivary glands using hyperspectral imaging and deep learning. Biomed. Opt Express. 2020;11:1383. doi: 10.1364/BOE.381257.

- 76. Trajanovski S., Shan C., Weijtmans P.J.C., de Koning S.G.B., Ruers T.J.M. Tongue tumor detection in hyperspectral images using deep learning semantic segmentation. IEEE Trans. Biomed. Eng. 2021;68:1330–1340. doi: 10.1109/TBME.2020.3026683.

- 77. Cervantes-Sanchez F., Maktabi M., Köhler H., Sucher R., Rayes N., Avina-Cervantes J.G., Cruz-Aceves I., Chalopin C. Automatic tissue segmentation of hyperspectral images in liver and head neck surgeries using machine learning. AIS. 2021;1:22–37.

- 78. Garifullin A., Koobi P., Ylitepsa P., Adjers K., Hauta-Kasari M., Uusitalo H., Lensu L. Proceedings of the 2018 Digital Image Computing: Techniques and Applications (DICTA), Canberra, Australia. 2018. Hyperspectral image segmentation of retinal vasculature, optic disc and macula; pp. 1–5. 10–13 December.

- 79. Trajanovski S., Shan C., Weijtmans P.J.C. Proceedings of the International Conference on Medical Imaging with Deep Learning–Extended Abstract Track, London, UK. April 2019. Tumor semantic segmentation in hyperspectral images using deep learning.

- 80. Seidlitz S., Sellner J., Odenthal J., Özdemir B., Studier-Fischer A., Knödler S., Ayala L., Adler T., Kenngott H.G., Tizabi M., et al. Robust deep learning-based semantic organ segmentation in hyperspectral images. Med. Image Anal. 2022;80 doi: 10.1016/j.media.2022.102488.

- 81. Tilman D., Balzer C., Hill J., Befort B.L. Global food demand and the sustainable intensification of agriculture. Proc. Nat. Acad. Sci. USA. 2011;108(50):20260–20264. doi: 10.1073/pnas.1116437108.

- 82. Cassman K.G. Ecological intensification of cereal production systems: yield potential, soil quality, and precision agriculture. Proc. Nat. Acad. Sci. USA. 1999;96(11):5952–5959. doi: 10.1073/pnas.96.11.5952.

- 83. Ray D., Ramankutty, Mueller N.D., West P.C., Foley J.A. Recent patterns of crop yield growth and stagnation. Nat. Commun. Dec. 2012;3 doi: 10.1038/ncomms2296. Art. no.

- 84. Godfray H.C.J. Food security: the challenge of feeding 9 billion people. Science. 2010;327(5967):812–818. doi: 10.1126/science.1185383.

- 85. Foley J.A. Solutions for a cultivated planet. Nature. 2011;478(7369):337–342. doi: 10.1038/nature10452.

- 86. Colombo R. Estimation of leaf and canopy water content in poplar plantations by means of hyperspectral indices and inverse modeling. Remote Sens. Environ. 2008;112(4):1820–1834.

- 87. Mahlein A.-K., Steiner U., Hillnhütter C., Dehne H.W., Oerke E.C. Hyperspectral imaging for small-scale analysis of symptoms caused by different sugar beet diseases. Plant Methods. 2012;8(1):3. doi: 10.1186/1746-4811-8-3.

- 88. Liu L.Y., Wang L.H., Huang H.J., Zhao C.J., Zhang B., Tong Q.X. Improving winter wheat yield prediction by novel spectral index. Trans. CSAE. 2004;20:172–175.

- 89. Ferguson Richard, Rundquist Donald. Precision Agriculture Basics. 2018. Remote sensing for site-specific crop management; pp. 103–117.

- 90. Zhang Chongyuan, Marzougui Afef, Sankaran Sindhuja. High-resolution satellite imagery applications in crop phenotyping: an overview. Comput. Electron. Agric. 2020;175

- 91. Caturegli Lisa, et al. GeoEye-1 satellite versus ground-based multispectral data for estimating nitrogen status of turfgrasses. Int. J. Rem. Sens. 2015;36(8):2238–2251.

- 92. Tian Jinyan, et al. Comparison of UAV and WorldView-2 imagery for mapping leaf area index of mangrove forest. Int. J. Appl. Earth Obs. Geoinf. 2017;61:22–31.

- 93. Kokhan Svitlana, Anatoliy Vostokov. Using vegetative indices to quantify agricultural crop characteristics. Journal of Ecological Engineering. 2020;21:4.

- 94. Ahn Ho-yong, et al. Radiometric cross calibration of KOMPSAT-3 and Landsat-8 for time-series harmonization. Korean Journal of Remote Sensing. 2020;36(6_2):1523–1535.

- 95. Meroni Michele, et al. Comparing land surface phenology of major European crops as derived from SAR and multispectral data of Sentinel-1 and -2. Rem. Sens. Environ. 2021;253 doi: 10.1016/j.rse.2020.112232.

- 96. Chua Randy, Xie Qingbin, Yuan Bo. 2020. Crop Monitoring Using Multispectral Optical Satellite Imagery.

- 97. Baraldi Andrea, et al. Automatic spectral-rule-based preliminary classification of radiometrically calibrated SPOT-4/-5/IRS, AVHRR/MSG, AATSR, IKONOS/QuickBird/OrbView/GeoEye, and DMC/SPOT-1/-2 imagery—Part I: system design and implementation. IEEE Trans. Geosci. Rem. Sens. 2009;48(3):1299–1325.

- 98. Choubey V.K. Monitoring water quality in reservoirs with IRS-1A-LISS-I. Water Resour. Manag. 1994;8(2):121–136.

- 99. Hasab Hashim Ali, et al. Monitoring and assessment of salinity and chemicals in agricultural lands by a remote sensing technique and soil moisture with chemical index models. Geosciences. 2020;10(6):207.

- 100. Goetz Scott J., et al. IKONOS imagery for resource management: tree cover, impervious surfaces, and riparian buffer analyses in the mid-Atlantic region. Rem. Sens. Environ. 2003;88(1–2):195–208.

- 101.Caturegli L., Casucci M., Lulli F., Grossi N., Gaetani M., Magni S., Bonari E., Volterrani M. GeoEye-1 satellite versus ground-based multispectral data for estimating nitrogen status of turfgrasses. Int. J. Rem. Sens. 2015;36:2238–2251. [Google Scholar]

- 102.Sharifi Alireza. Using sentinel-2 data to predict nitrogen uptake in maize crop. IEEE J. Sel. Top. Appl. Earth Obs. Rem. Sens. 2020;13:2656–2662. [Google Scholar]

- 103.Yang Chenghai. High resolution satellite imaging sensors for precision agriculture. Frontiers of Agricultural Science and Engineering. 2018;5(4):393–405.

- 104.Fabio, et al. Evaluation of Terra/Aqua MODIS and Sentinel-2 MSI NDVI data for predicting actual evapotranspiration in Mediterranean regions. Int. J. Rem. Sens. 2020;41(14):5186–5205.

- 105.Bellakanji Aicha Chahbi, et al. Forecasting of cereal yields in a semi-arid area using the simple algorithm for yield estimation (SAFY) agro-meteorological model combined with optical SPOT/HRV images. Sensors. 2018;18(7):2138. doi: 10.3390/s18072138.

- 106.Denis Antoine, et al. Multispectral remote sensing as a tool to support organic crop certification: assessment of the discrimination level between organic and conventional maize. Rem. Sens. 2021;13(1):117.

- 107.Vibhute Amol D., et al. Estimation of soil nitrogen in agricultural regions by VNIR reflectance spectroscopy. SN Appl. Sci. 2020;2(9):1–8.

- 108.Yang Chenghai. Remote sensing and precision agriculture technologies for crop disease detection and management with a practical application example. Engineering. 2020;6(5):528–532.

- 109.Sidike Paheding, et al. dPEN: deep Progressively Expanded Network for mapping heterogeneous agricultural landscape using WorldView-3 satellite imagery. Rem. Sens. Environ. 2019;221:756–772.

- 110.Zwiggelaar R. A review of spectral properties of plants and their potential use for crop/weed discrimination in row-crops. Crop Protect. 1998;17(3):189–206.

- 111.Thorp K.R., Tian L.F. A review on remote sensing of weeds in agriculture. Precis. Agric. 2004;5(5):477–508.

- 112.Hadoux X., Gorretta N., Roger J.M., Bendoula R., Rabatel G. Comparison of the efficacy of spectral pre-treatments for wheat and weed discrimination in outdoor conditions. Comput. Electron. Agric. 2014;108:242–249.

- 113.Piron A., Leemans V., Kleynen O., Lebeau F., Destain M.F. Selection of the most efficient wavelength bands for discriminating weeds from crop. Comput. Electron. Agric. 2008;62(2):141–148.

- 114.Uppal M., Gupta D., Goyal N., Imoize A.L., Kumar A., Ojo S., Pani S.K., Kim Y., Choi J. A real-time data monitoring framework for predictive maintenance based on the internet of things. Complexity. 2023;2023:1–14.

- 115.Malhotra Priyanka, Gupta Sheifali, et al. Deep neural networks for medical image segmentation. J. Healthcare Eng. 2022;1:1–15. doi: 10.1155/2022/9580991. [Retracted]

- 116.Anand Vatsala, Gupta Sheifali, et al. Modified U-NET architecture for segmentation of skin lesion. Sensors. 2022;22(3):867.

- 117.Zagolski F. Forest canopy chemistry with high spectral resolution remote sensing. Int. J. Rem. Sens. 1996;17(6):1107–1128.

- 118.Asner G.P. Biophysical and biochemical sources of variability in canopy reflectance. Remote Sens. Environ. Jun. 1998;64(3):234–253.

- 119.McGwire K., Minor T., Fenstermaker L. Hyperspectral mixture modeling for quantifying sparse vegetation cover in arid environments. Remote Sens. Environ. 2000;72(3):360–374.

- 120.Stone A., Chisholm L., Coops N. Spectral reflectance characteristics of eucalypt foliage damaged by insects. Austral. J. Botany. 2001;49(6):687–698.

- 121.Coops N., Dury S., Smith M.L., Martin M., Ollinger S. Comparison of green leaf eucalypt spectra using spectral decomposition. Austral. J. Botany. 2002;50(5):567–576.

- 122.Underwood E., Ustin S., DiPietro D. Mapping nonnative plants using hyperspectral imagery. Remote Sens. Environ. 2003;86(2):150–161.

- 123.Xingtang H. A new architecture for remote-sensing environmental monitoring system REMS: design and implementation. Proc. IEEE Int. Geosci. Remote Sens. Symp. (IGARSS) Sep. 2004:2115–2118.

- 124.Li J.S. Study on retrieval of inland water quality parameters from hyperspectral remote sensing data by analytical approach: taking Taihu Lake as an example. Ph.D. Dissertation, Inst. Remote Sens. Appl., Chinese Acad. Sci., Beijing, China. 2007.

- 125.Kurata K., Yan J. Water stress estimation of tomato canopy based on machine vision. Acta Hortic. 1996;440:389–394.

- 126.Kacira M., Ling P.P., Short T.H. Machine vision extracted plant movement for early detection of plant water stress. Transactions of the ASAE. 2002;45(4):1147–1153. doi: 10.13031/2013.9923.

- 127.Sepulcre-Canto G., Zarco-Tejada P.J., Jiménez-Muñoz J.C., Sobrino J.A., de Miguel E., Villalobos F.J. Detection of water stress in an olive orchard with thermal remote sensing imagery. Agric. For. Meteorol. 2006;136(1–2):31–44.

- 128.Naor A. Water stress assessment for irrigation scheduling of deciduous trees. Acta Hortic. 2008;792:467–481.

- 129.Kim Y., Glenn D.M., Park J., Ngugi H.K., Lehman B.L. Hyperspectral image analysis for water stress detection of apple trees. Comput. Electron. Agric. 2011;77:155–160.

- 130.Zygielbaum A.I., Gitelson A.A., Arkebauer T.J., Rundquist D.C. Nondestructive detection of water stress and estimation of relative water content in maize. Geophys. Res. Lett. 2009;36(12)

- 131.Sanches I.D., Filho C.R.S., Magalhães L.A., Quitério G.C.M., Alves M.N., Oliveira W.J. Assessing the impact of hydrocarbon leakages on vegetation using reflectance spectroscopy. ISPRS J. Photogrammetry Remote Sens. 2013;78:85–101.

- 132.Ip F. Flood detection and monitoring with the autonomous sciencecraft experiment onboard EO-1. Remote Sens. Environ. 2006;101(4):463–481.

- 133.Brakenridge G.R., Anderson E., Nghiem S.V. Satellite microwave detection and measurement of river floods. In: Proc. AGU Spring Meeting Abstracts. 2006;1:5.

- 134.Gläßer C., Reinartz P. Multitemporal and multispectral remote sensing approach for flood detection in the Elbe-Mulde region 2002. Acta Hydrochim. Hydrobiol. 2005;33(5):395–403.

- 135.Roux H., Dartus D. Use of parameter optimization to estimate a flood wave: potential applications to remote sensing of rivers. J. Hydrol. 2006;328(1–2):258–266.

- 136.Honkavaara E., et al. Processing and assessment of spectrometric, stereoscopic imagery collected using a lightweight UAV spectral camera for precision agriculture. Remote Sens. 2013;5(10):5006–5039.

- 137.Zhu W., Chen J., Sun Q., Li Z., Tan W., Wei Y. Reconstructing of high-spatial-resolution three-dimensional electron density by ingesting SAR-derived VTEC into IRI model. Geosci. Rem. Sens. Lett. IEEE. 2022;19:1–5. doi: 10.1109/LGRS.2022.3178242. Art no. 4508305.

- 138.Xu H., Li Q., Chen J. Highlight removal from a single grayscale image using attentive GAN. Appl. Artif. Intell. 2022;36(1) doi: 10.1080/08839514.2021.1988441.

- 139.Zheng W., Lu S., Yang Y., Yin Z., Yin L. Lightweight transformer image feature extraction network. PeerJ Computer Science. 2024;10 doi: 10.7717/peerj-cs.1755.