Abstract

Auditory localization is a fundamental ability that allows us to perceive the spatial location of a sound source in the environment. The present work aims to provide a comprehensive overview of the mechanisms and acoustic cues used by the human perceptual system to achieve accurate auditory localization. Acoustic cues are derived from the physical properties of sound waves, and many factors enable and influence auditory localization abilities. This review presents the monaural and binaural perceptual mechanisms involved in auditory localization in three dimensions. Besides the main mechanisms of Interaural Time Difference, Interaural Level Difference and Head-Related Transfer Function, important secondary elements, such as reverberation and motion, are also analyzed. For each mechanism, the perceptual limits of localization abilities are presented. A section is specifically devoted to reference systems in space and to the pointing methods used in experimental research. Finally, some cases of misperception and auditory illusion are described. More than a simple description of the perceptual mechanisms underlying localization, this paper is also intended to provide practical information for experiments and work in the auditory field.

Keywords: acoustics, auditory localization, ITD, ILD, HRTF, action perception coupling

1. Introduction

A bird singing in the distance, a friend calling us, a car approaching quickly… our auditory system constantly works to transmit information coming from our surroundings. From exploring our environment to identifying and locating dangers, auditory localization plays a crucial role in our daily lives and fast and accurate auditory localization is of vital importance. However, how does our perceptual system locate the origin of sounds so accurately? This review aims to provide a comprehensive overview of the capabilities and mechanisms of auditory localization in humans. The literature has so far extensively described the fundamental mechanisms of localization, whereas recent findings add new information about the importance of ancillary mechanisms to resolve uncertainty conditions and increase effectiveness. This paper aims to summarize the totality of these factors. Moreover, for the sake of completeness, we have supplemented the review with some practical insights. We enriched the functional description with relevant information about the methods of study, measurement, and perceptual limits.

There is growing interest in auditory localization mechanisms, as they have great potential for improving the spatialization of sound in emerging immersive technologies, such as virtual reality and 3D cinema. Even more interesting and challenging is their use in sensory augmentation or substitution devices, which are used to improve the lives of people with perceptual disabilities. This work aims to provide a concise and effective explanation of the relation between the structure of acoustic signals and human sound source localization abilities, for both theoretical research and practical applications. Accordingly, we have omitted an examination of the neural correlates involved in auditory localization. We invite readers interested in this topic to refer to the specific literature.

The body of this review is divided into three sections. In the first part, an overview of the mechanisms involved in human 3D sound localization, as well as the associated capabilities and limitations, is given in order to provide a holistic understanding of the field. In the second part, we provide a more detailed explanation of the auditory cues. Finally, we present other factors that influence the localization of sound sources, such as pointing and training methods, and sound characteristics (frequency, intensity…), whose alteration can even lead to illusory phenomena.

2. Localizing a sound in space

2.1. Auditory localization is based on auditory perception

Auditory localization naturally relies on auditory perception. Its characteristics and limitations are primarily determined by the capabilities of the human perceptual system and exhibit considerable interindividual variability. Although the study of the auditory system has ancient origins, it was not until the 19th century that research started to focus on the functional characteristics of our auditory system, as well as on localization abilities. In the 20th century, the growing knowledge of the perceptual system and the adoption of more rigorous protocols revealed the complexity of the mechanisms of acoustic localization as well as the importance of using appropriate methods of investigation (Grothe and Pecka, 2014; Yost, 2017). Indeed, measures of auditory localization can be influenced by many factors. In experimental tests, for example, participants’ responses depend on the type of auditory stimuli as well as the order in which they are presented, the way the sound spreads through the environment, the age of the listener, and the method used to collect responses (Stevens, 1958; Wickens, 1991; Reinhardt-Rutland, 1995; Heinz et al., 2001; Gelfand, 2017). Results of experimental research also highlight a high level of inter-subject variability that affects responses and performance in various experimental tests (Middlebrooks, 1999a; Mauermann et al., 2004; Röhl and Uppenkamp, 2012).

The cues used by the auditory system for localization are mainly based on the timing, intensity, and frequency of the perceived sound. The perceptual limits of these three quantities naturally play an important role in acoustic localization. Regarding the perception of sound intensity, the threshold varies with frequency. For a 1 kHz sound, the minimum pressure variation that the human hearing system can detect is approximately 20 μPa, corresponding by definition to an intensity level of 0 dB SPL (Howard and Angus, 2017). The perceived intensity of a sound does not correspond to the physical intensity of the pressure wave, and the perceptual bias varies depending on the frequency of the sound (Laird et al., 1932; Stevens, 1955). Fletcher and Munson, followed by Robinson and Dadson, carried out the best-known studies concerning the correspondence between physical and perceived sound intensity (Fletcher and Munson, 1933; Robinson and Dadson, 1956). Today, ISO 226:2003 defines the standard equal-loudness-level contours. It is based on a protocol using free-field playback from a frontal, central loudspeaker to participants aged 18–25 years from a variety of countries worldwide. With regard to the spectrum of frequencies audible to the human auditory system, the standard audible range is considered to be between 20 Hz and 20,000 Hz. However, auditory perception depends on many factors, and especially on the age of the listener. Performance is at its highest at the beginning of adulthood, at around 18 years of age, and declines rapidly: by 20 years of age, the upper limit may have dropped to 16 kHz (Howard and Angus, 2017). The reduction is continuous and progressive, mainly affecting the upper threshold. Above the age of 35–40 years, there is a significant reduction in the ability to hear frequencies above 3–4 kHz (Howarth and Shone, 2006; Fitzgibbons and Gordon-Salant, 2010; Dobreva et al., 2011).
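The relation between sound pressure and level quoted above can be illustrated with a minimal sketch. It assumes the usual definition of dB SPL relative to the 20 μPa reference; the function name `spl_db` is purely illustrative.

```python
import math

P_REF_PA = 20e-6  # reference pressure: 20 μPa, i.e. 0 dB SPL by definition


def spl_db(pressure_pa: float) -> float:
    """Sound pressure level (dB SPL) for a sound pressure given in pascals."""
    return 20.0 * math.log10(pressure_pa / P_REF_PA)


# The 1 kHz detection threshold cited in the text maps to 0 dB SPL.
threshold_level = spl_db(20e-6)
# Doubling the pressure raises the level by about 6 dB.
doubled_level = spl_db(40e-6)
```

Because the scale is logarithmic, each tenfold increase in pressure adds 20 dB, which is why the very wide range of audible pressures fits on a compact scale.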

Finally, the perceived frequency of sounds does not correspond exactly to their physical frequency but instead shows systematic perceptual deviations. The best-known psychoperceptual scales that relate sound frequency to pitch are the Mel scale (Stevens and Volkmann, 1940), the Bark scale (Zwicker, 1961), and the ERB scale (Glasberg and Moore, 1990; Moore and Glasberg, 1996).
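For readers who wish to experiment with these scales, the sketch below uses widely used closed-form approximations. These are assumptions of this example: the Mel and Bark expressions are later analytic fits and not the original tabulated scales, while the ERB expression is the bandwidth formula associated with Glasberg and Moore (1990). All function names are illustrative.

```python
import math


def hz_to_mel(f_hz: float) -> float:
    """Mel value from a common analytic fit (not the original tabulated data)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def hz_to_bark(f_hz: float) -> float:
    """Critical-band rate (Bark) from a common arctangent approximation."""
    return 13.0 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500.0) ** 2)


def erb_bandwidth_hz(f_hz: float) -> float:
    """Equivalent rectangular bandwidth in Hz at centre frequency f_hz."""
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)


# Example: compare the three scales at 1 kHz.
mel_1k = hz_to_mel(1000.0)          # close to 1000 mel by construction
bark_1k = hz_to_bark(1000.0)        # roughly 8.5 Bark
erb_1k = erb_bandwidth_hz(1000.0)   # roughly 133 Hz
```

All three functions grow much more slowly than frequency itself above about 1 kHz, which reflects the compressive relation between frequency and pitch described above.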

2.2. Main characteristics of localization

Auditory localization involves several specialized and complementary mechanisms. Four main types of cue are usually mentioned: the two binaural cues (interaural time and level differences), monaural spectral cues due to the shadowing of the sound wave by the listener’s body, and additional factors such as reverberation or relative motion that make localization more effective – and more complex to study. These mechanisms operate simultaneously or complementarily in order to compensate for weaknesses in any of the individual mechanisms, resulting in high accuracy over a wide range of frequencies (Hartmann et al., 2016). In this section, we provide an overview of the main mechanisms, which are explained in more detail in the second and third sections.

The main binaural cues that our perceptual system uses to localize sound sources more precisely are the Interaural Time Difference (ITD) and the Interaural Level Difference (ILD) (mechanisms based on differences in time and intensity, respectively, as described below). At the beginning of the last century, Lord Rayleigh proposed the existence of two different mechanisms, one operating at low frequencies and the other at high frequencies. This is known as the Duplex Theory of binaural hearing. Stevens and Newman found that localization performances are best for frequencies below about 1.5 kHz and above about 5 kHz (Rayleigh, 1907; Stevens and Newman, 1936). The smallest still perceivable interaural difference between our ears is about 10 μs for ITD, and about 1 dB for ILD (Mills, 1958; Brughera et al., 2013; Gelfand, 2017).

The localization of a sound source in space is characterized by a certain amount of uncertainty and bias, which result in estimation errors that can be measured as constant error (accuracy) and random error (precision). The type and magnitude of estimation errors depend on the properties of the emitted sound, the characteristics of the surroundings, the specific localization task, and the listener’s abilities (Letowski and Letowski, 2011). Bruns and colleagues investigated two methods (error-based and regression-based) for calculating accuracy and precision. The authors pointed out that accuracy and precision measures, while theoretically distinct in the two paradigms, can be strongly correlated in experimental datasets (Bruns et al., 2024). Garcia and colleagues proposed a comparative localization study, comparing performance before and after training. Their results show that both constant errors and variability in auditory localization tend to increase when auditory uncertainty increases. Moreover, such biases can be reduced through training with visual feedback (Garcia et al., 2017).
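The distinction between constant error and random error can be made concrete with a minimal, error-based sketch. It is not the procedure of any of the cited studies: it simply assumes that the constant error is the mean signed difference between response and target, and that the random error is the sample standard deviation of those differences. The data are hypothetical.

```python
import statistics


def localization_errors(targets_deg, responses_deg):
    """Return (constant_error, random_error) in degrees.

    constant_error: mean signed error (bias, related to accuracy)
    random_error:   sample standard deviation of the signed errors (precision)
    """
    signed = [r - t for t, r in zip(targets_deg, responses_deg)]
    return statistics.mean(signed), statistics.stdev(signed)


# Hypothetical responses to a target at 0° azimuth, repeated four times.
constant_error, random_error = localization_errors([0, 0, 0, 0], [2, 4, 3, 3])
```

In this toy dataset the listener shows a systematic bias of 3° with a scatter of less than 1° around that bias, which illustrates how a response pattern can be precise without being accurate.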

As we will see below, sound source localization is more accurate in the horizontal plane (azimuth) than in the vertical plane (elevation). Localization performance in the third dimension (distance) is less accurate than for either azimuth or elevation and is subject to considerable inter-subject variability (Letowski and Letowski, 2011). Moreover, these abilities change with the age of the listener. Dobreva and colleagues found that young subjects systematically overestimate (overshoot) horizontal position and systematically underestimate vertical position. Moreover, the magnitude of the effect varies with the sound frequency. In middle-aged subjects, these authors found a pronounced reduction in the precision of horizontal localization for narrow-band targets in the range 1,250–1,575 Hz. Finally, in elderly subjects, they found a generalized reduction in localization performance in terms of both accuracy and precision (Dobreva et al., 2011). Otte and colleagues also performed a comparative study of localization abilities, testing three different age groups ranging from 7 to 80 years. Their results are somewhat more positive, especially for the older age group: localization ability remains fully effective, even in the early phase of hearing loss. Interestingly, they also found that older adults with larger ears had significantly better elevation localization abilities. This advantage does not appear in azimuth localization. Young subjects, with smaller ears, require higher frequencies (above 11 kHz) to accurately localize the elevation of sounds (Otte et al., 2013).

The quantitative evaluation of human performance is based on two types of localization estimation: Absolute localization (a sound source must be localized directly, usually with respect to a listener-centered reference system), and Discrimination (two sound sources have to be distinguished in the auditory signal, either simultaneously or sequentially).

Concerning absolute localization, peak accuracy is observed in the frontal position, at 1–2 degrees for localization in the horizontal plane and 3–4 degrees for localization in the vertical plane (Makous and Middlebrooks, 1990; Grothe et al., 2010; Tabry et al., 2013). Investigating the frontal half-space, Rhodes, and more recently Tabry and colleagues, found that the azimuth and elevation errors grow linearly as the distance of the target from the central position increases. Using the head-pointing method, Tabry et al. found that the error grows up to ~20 degrees for an azimuth of ±90° and up to ~30 degrees for an elevation of −45° and +67° (Rhodes, 1987; Tabry et al., 2013).

Among the discrimination paradigms, the most commonly used is the Minimum Audible Angle (MAA), which is defined as the smallest angle that a listener can discriminate between two successively presented stationary sound sources. Mills developed the MAA paradigm and studied the human ability to discriminate lateralization (azimuth). He showed that the MAA threshold also depends on the frequency of the sound and found that MAA performance is better for frequencies below 1,500 Hz and above 2,000 Hz. The best performance is obtained in the frontal field, with an MAA in the frontal-central position of 1–2 degrees in azimuth. In a more recent study, Aggius-Vella and colleagues found slightly larger values: they reported an MAA threshold in azimuth of 3° in the frontal position and 5° in the rear position. The above values refer to sources positioned at ear level. When they moved the sound source to foot level, Aggius-Vella and colleagues found an MAA threshold of 3° in both front and rear positions. In a later study, Aggius-Vella and colleagues placed the sound source 1 m above the floor and found an MAA threshold of 6° in the front position and 7° in the rear position. It is important to note that the works of Mills and Aggius-Vella used two different protocols: while Mills used audio headphones to play the sound, Aggius-Vella and colleagues used a set of aligned loudspeakers (Mills, 1958, 1960; Mills and Tobias, 1972; Aggius-Vella et al., 2018, 2020). Similarly, a discrimination paradigm known as MADD (Minimal Audible Distance Discrimination) is used for the distance dimension. Using a MADD-type paradigm, Aggius-Vella et al. (2022) reported better distance discrimination abilities in the front space (19 cm) than in the rear space (21 cm). They found a comparable effect of the spatial region using a distance bisection paradigm, which revealed a lower threshold in the front space (15 cm) than in the rear space (20 cm) (Aggius-Vella et al., 2022).
It is also relevant to note that some authors have criticized the MAA paradigm, claiming that the experimental protocol enables responses to be produced based on criteria other than relative discrimination through the use of identification strategies (Hartmann and Rakerd, 1989a).

A second important discrimination paradigm is the CMAA (Concurrent Minimum Audible Angle), which measures the ability to discriminate between two simultaneous stimuli. In the frontal position, Perrott found a CMAA threshold of 4°–10° (Perrott, 1984). Brungart and colleagues investigated the discrimination and localization capabilities of our auditory system when faced with multiple sources (up to 14 tonal sounds) with or without allowed head movement. They found that although localization accuracy systematically decreased as the number of concurrent sources increased, overall localization accuracy was nevertheless still above chance even in an environment with 14 concurrent sound sources. Interestingly, when there are more than five simultaneous sound sources, exploratory head movements cease to be effective in improving localization accuracy (Brungart et al., 2005). Zhong and Yost found that the maximum number of simultaneous separate stimuli that our perceptual system can easily discriminate is approximately 3 for tonal stimuli and 4 for speech stimuli (Zhong and Yost, 2017), which is in line with many studies that have shown that localization accuracy is significantly improved when localizing broadband sounds (Butler, 1986; Makous and Middlebrooks, 1990; Wightman and Kistler, 1992, 1997; Gelfand, 2017).

2.3. Reference system and localization performances

Each target in space is localized according to a reference system. In the case of human perception, experimental research suggests that our brain uses different reference systems, both egocentric and allocentric, and is able to switch easily between them (Graziano, 2001; Wang, 2007; Galati et al., 2010). More specifically, with regard to auditory perception, Majdak and colleagues describe the different mechanisms involved in the creation of the internal representation of space (Majdak et al., 2020). Moreover, research works such as those of Aggius-Vella and Viaud-Delmon also show that these mechanisms are closely related to other perceptual channels, and in particular the visual and sensorimotor channels, making it possible to calibrate the reference system more accurately and improve the spatial representation (Viaud-Delmon and Warusfel, 2014; Aggius-Vella et al., 2018).

With regard to the experimental protocols used in the field of auditory localization, almost all research works have adopted a reference system centered on the listener, generally positioning the origin at the midpoint of the segment joining the two ears (Middlebrooks et al., 1989; Macpherson and Middlebrooks, 2000; Letowski and Letowski, 2011). In contrast, some research has used an allocentric reference system in which the positions of the localized sound sources have to be reported with reference to a fictional head (tangible or digital) that represents that of the participant (tangible: Begault et al., 2001; Pernaux et al., 2003; Schoeffler et al., 2014) (digital: Gilkey et al., 1995).

The reference system and the pointing system used to provide the response are closely related. The use of an egocentric reference system is usually preferred because it prevents participants from making projection errors when giving responses. For example, Djelani and colleagues demonstrated that the God’s Eye Localization Pointing (GELP) technique, an allocentric reference-and-response system in which the perceived direction of the sound is indicated by pointing at a 20 cm diameter spherical model of auditory space, introduces certain systematic errors as a consequence of the projection from the participant’s head to its external representation (Djelani et al., 2000). Similarly, head or eye pointing is preferred since it avoids the parallax errors that frequently occur with pointing devices. In addition, many authors prefer to use head or gaze orientation as a pointing system, because it is considered more ecological and does not require training or habituation (Makous and Middlebrooks, 1990; Populin, 2008).

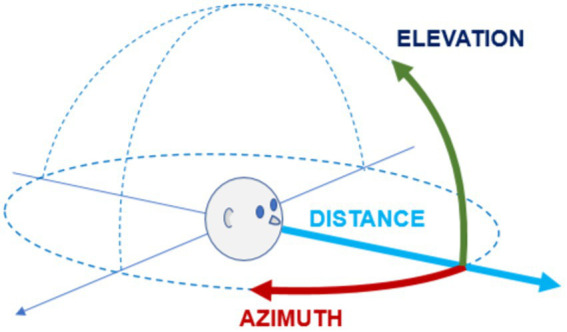

The most commonly used reference system in studies on spatial hearing is the bipolar spherical coordinate system (Figure 1). This coordinate system consists of two angular dimensions, θ (azimuth or declination) and φ (elevation), and one linear dimension, d (distance or depth) (Middlebrooks, 1999b; McIntyre et al., 2000; Jerath et al., 2015). In some cases, a cylindrical system (in which the angular elevation is replaced by a linear elevation parameter) (Febretti et al., 2013; Sherlock et al., 2021), or a Cartesian system (Parise et al., 2012) is preferred. An alternative reference system is the Interaural-polar coordinate system, which has been described by Majdak as corresponding more closely to the human perceptual system and consists of a lateral angle α, a polar angle β, and a linear distance r (Majdak et al., 2020).

Figure 1.

Reference system. The most commonly used reference system for locating a sound source in three-dimensional space is the polar coordinate system. The reference system is centered on the listener and divides space according to two angular coordinates (azimuth in the horizontal plane, elevation in the vertical plane) and one linear coordinate (distance or depth), as shown in the figure.
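To make the listener-centered spherical system concrete, the following sketch converts (azimuth, elevation, distance) into Cartesian coordinates. The axis convention is an assumption of this example and not taken from the cited works: x points to the listener's right, y straight ahead, z upward, azimuth is measured from the frontal direction with positive values to the right, and elevation is positive upward.

```python
import math


def spherical_to_cartesian(azimuth_deg, elevation_deg, distance):
    """Convert listener-centered spherical coordinates to (x, y, z).

    Assumed axes: x = right, y = front, z = up; origin at the midpoint
    between the two ears.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = distance * math.cos(el) * math.sin(az)
    y = distance * math.cos(el) * math.cos(az)
    z = distance * math.sin(el)
    return x, y, z


# A source 2 m away, 90° to the right, at ear level lies on the +x axis.
right_source = spherical_to_cartesian(90.0, 0.0, 2.0)
```

Other studies adopt different sign or axis conventions, so the convention should always be stated explicitly when data are exchanged between laboratories.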

2.3.1. Azimuth

In spherical coordinate systems, the Azimuth is defined as the angle between the projection of the target position on the horizontal plane and a reference meridian, measured from above either clockwise (Rychtáriková et al., 2011; Parseihian et al., 2014) or, less commonly, counter-clockwise (Bronkhorst, 1995; Werner et al., 2016). The standard “zero” reference meridian is the frontal meridian (Vliegen and Van Opstal, 2004; Risoud et al., 2020). Starting from the reference meridian, the horizontal plane is then indexed on a continuous scale of 360 degrees (Iwaya et al., 2003; Oberem et al., 2020) or divided into two half-spaces of 180 degrees, i.e., left and right, with the left half-space having negative values (Makous and Middlebrooks, 1990; Boyer et al., 2013; Aggius-Vella et al., 2020). Conveniently, the horizontal plane can be also simply divided into front and rear (or back) half-spaces.
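Because both azimuth indexing schemes appear in the literature, it can be useful to convert between them. The sketch below assumes a clockwise-positive azimuth with 0° at the frontal meridian, so that the left half-space maps to negative values; the function names are illustrative.

```python
def to_signed_azimuth(azimuth_deg: float) -> float:
    """Map any azimuth to the interval (-180, 180], with left as negative."""
    wrapped = azimuth_deg % 360.0
    return wrapped - 360.0 if wrapped > 180.0 else wrapped


def to_unsigned_azimuth(azimuth_deg: float) -> float:
    """Map any azimuth to the continuous interval [0, 360)."""
    return azimuth_deg % 360.0


# 270° on the continuous scale is 90° to the left on the signed scale.
left_side = to_signed_azimuth(270.0)
```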

The most important cues for auditory localization in the azimuthal plane are the ILD and ITD. However, the effectiveness of ILD and ITD is subject to some limitations relating to the frequency of the sound, and other monaural or binaural strategies are required to resolve ambiguous conditions (see section ILD and ITD) (Van Wanrooij and Van Opstal, 2004).

The best localization performance in the azimuthal plane, about 1–2 degrees, is found in the frontal area, approximately at the intersection with the sagittal plane (Makous and Middlebrooks, 1990; Perrott and Saberi, 1990).

2.3.2. Elevation

In the spherical coordinate system, the Elevation (or polar angle) is the angle between the projection of the target position on a vertical frontal plane and a zero-elevation reference vector. This is commonly represented by the intersection of the vertical frontal plane with the Azimuth plane, with positive values being assigned to the upper half-space and negative values to the lower half-space, thus obtaining a continuous scale [−90°, +90°] (Figure 1; Middlebrooks, 1999b; Trapeau and Schönwiesner, 2018; Rajendran and Gamper, 2019). Occasionally, the zero-elevation reference is assigned to the Zenith and the maximum value is assigned to the Nadir, resulting in a measurement scale consisting only of positive values [0°, +180°] (Oberem et al., 2020).

Elevation estimation relies primarily on monaural spectral cues, mainly resulting from the interaction of the sound with the auricle. These interactions cause modulations of the sound spectrum reaching the eardrum and are grouped together under the term Head-Related Transfer Functions (HRTF), see HRTF section (Ahveninen et al., 2014; Rajendran and Gamper, 2019). Otte et al. (2013) graphically show the variation of the sound spectrum as a function of both elevation and the various individual anatomies of the outer ear. Auditory localization in the vertical plane has lower spatial resolution than that in the horizontal plane. The best localization performance in terms of elevation is of the order of 4–5 degrees (Makous and Middlebrooks, 1990).

2.3.3. Distance

In acoustic localization, distance is simply defined as the length of the line segment connecting the midpoint between the two ears to the sound source. The human auditory system can use multiple acoustic cues to estimate the distance of a sound source. The two main strategies for estimating the distance from a sound source are both based on the acoustic intensity of the sound reaching the listener. The first is based on an evaluation of the absolute intensity of the direct wave. The second, called the Direct-to-Reverberant energy Ratio (“DRR”), is based on a comparison between the direct wave and the reverberated sound waves (Bronkhorst and Houtgast, 1999; Zahorik, 2002; Guo et al., 2019). In addition, other cues, such as familiarity with the source or the sound, the relative motion between listener and source, and spectral modifications, provide important indications for distance estimation (Little et al., 1992). Prior knowledge of the sound and its spectral content plays a role in the ability to correctly estimate the distance (Neuhoff, 2004; Demirkaplan and Hacıhabiboğlu, 2020). Some studies have suggested that listeners may also use binaural cues to determine the distance of sound, especially if the sound source is close to the side of the listener’s head. These strategies are thought to use the ITD to localize the azimuth and the ILD to estimate the distance. Given the limitations of the ILD, these strategies would only be effective for distances less than 1 meter (Bronkhorst and Houtgast, 1999; Kopčo and Shinn-Cunningham, 2011; Ronsse and Wang, 2012). Generally speaking, the accuracy of distance estimation varies with the magnitude of the distance itself. Distance judgments are generally most accurate for sound sources approximately 1 m from the listener. Closer distances tend to be overestimated, while greater distances are generally underestimated (Fontana and Rocchesso, 2008; Kearney et al., 2012; Parseihian et al., 2014). For distant sources, the magnitude of the error increases with the distance (Brungart et al., 1999).
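The two intensity-based distance cues can be sketched numerically. The example below rests on simplifying assumptions that are not part of the cited studies: a point source in a free field, for which the direct-sound level falls by about 6 dB per doubling of distance, and direct and reverberant energies that are already known. The energy values are hypothetical.

```python
import math


def direct_level_change_db(reference_distance_m, distance_m):
    """Free-field change in direct-sound level relative to a reference distance."""
    return -20.0 * math.log10(distance_m / reference_distance_m)


def drr_db(direct_energy, reverberant_energy):
    """Direct-to-Reverberant energy Ratio expressed in decibels."""
    return 10.0 * math.log10(direct_energy / reverberant_energy)


# Moving a source from 1 m to 2 m lowers the direct level by about 6 dB.
change_at_2m = direct_level_change_db(1.0, 2.0)
# Hypothetical energies: direct energy four times the reverberant energy.
ratio_db = drr_db(1.0, 0.25)
```

In a room, the reverberant energy changes little with source distance while the direct energy decreases, so the DRR falls as the source moves away. Unlike absolute level, this cue does not require the listener to know how loud the source is.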

3. Auditory cues for sound source localization

Sound localization is based on monaural and binaural cues. Monaural cues are processed individually in one ear, mostly providing information that is useful for vertical and antero-posterior localization. Binaural cues, by contrast, result from the comparison of sounds reaching the two ears, and essentially provide information about the azimuth position of the sound source. The sections below explore these localization mechanisms.

3.1. ITD and IPD

Let us consider a sound coming, for instance, from the right side of the head: it reaches the right ear before the left ear. The difference in reception times between the two ears is called the Interaural Time Difference (ITD). It constitutes the dominant cue in estimating the azimuth of sound sources at frequencies below 1,500 Hz and loses its effectiveness at higher frequencies. ITD is actually related to two distinct processes for measuring the asynchrony between the acoustic signals received by the left and the right ears. The first process measures the temporal asynchrony of the onset between the two sounds reaching the left and right ear or between distinctive features that serve as a reference, such as variations. The second process measures the phase difference between the two sound waves reaching each ear, which represents an indirect measure of the temporal asynchrony. We refer to this second mechanism as the Interaural Phase Difference (IPD). Panel B of Figure 2 represents the two processes in graphic form.

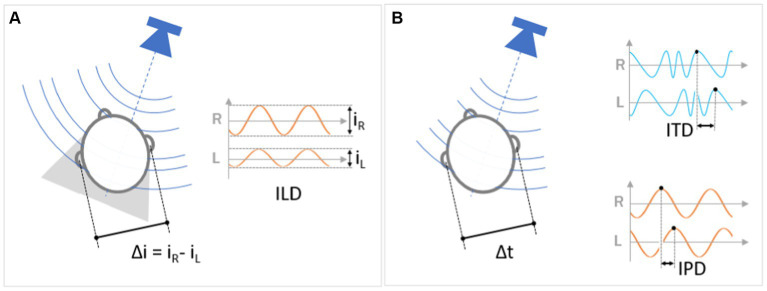

Figure 2.

ILD, ITD and IPD. The fundamental binaural cues for auditory localization are based on the difference in perception between the two ears in terms of both intensity and time. A source located in front of the listener produces a sound wave that arrives at both ears identically (the direct wave arrives at the same time and with the same intensity). By contrast, a lateral source results in a difference in signal intensity between the right and left ears, respectively iR and iL (Δi, Panel A), and in arrival time (Δt, Panel B). (A) In the case of a lateral source, the sound stimulus arriving at the more distant ear is less intense, due to its greater distance from the source and the shadow effect produced by the head itself. Interaural Level Difference (“ILD”) is the perceptual mechanism that estimates the position of the source as a function of the intensity difference between the two ears. (B) The ear more distant from the source receives the sound with a time delay. The Interaural Time Difference (“ITD”) is the perceptual mechanism for localizing the sound source based on the time delay between the two ears. Fine variations in azimuth localization are also measured as Interaural Phase Differences (“IPD”), based on the phase differences between the waves reaching each ear.

The smallest detectable interaural time difference (i.e., the maximum ITD sensitivity) is in the order of 10 μs, both for noise or complex stimuli (9 μs) (Klumpp and Eady, 1956; Mills, 1958) and for pure tones (11 μs) (Klumpp and Eady, 1956; Brughera et al., 2013). More recently, Thavam and Dietz found a larger value with untrained listeners (18.1 μs), and a smaller value with trained listeners (6.9 μs), using a band-pass noise of 20–1,400 Hz at 70 dB (Thavam and Dietz, 2019). By contrast, the largest ITD is of the order of 660–790 μs and corresponds to the case of a sound generated in front of one ear (Middlebrooks, 1999a; Gelfand, 2017). For instance, considering the spherical model of a human head with radius Rh = 8.75 cm combined with a sound speed Vs = 34,300 cm/s (at 20°C), we obtain a maximum ITD = (3 × Rh/Vs) × sin(90°) ≈ 765.3 μs (Hartmann and Macaulay, 2014).
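The spherical-head calculation above can be reproduced for any azimuth with a short sketch. It assumes, as in the worked example, the relation ITD = (3 × Rh/Vs) × sin(azimuth) with Rh = 8.75 cm and Vs = 34,300 cm/s; the function name is illustrative.

```python
import math

HEAD_RADIUS_CM = 8.75        # Rh, spherical head model from the text
SOUND_SPEED_CM_S = 34_300.0  # Vs, speed of sound at 20°C


def itd_microseconds(azimuth_deg: float) -> float:
    """ITD in microseconds for a source at the given azimuth (0° = front)."""
    itd_s = 3.0 * HEAD_RADIUS_CM / SOUND_SPEED_CM_S * math.sin(math.radians(azimuth_deg))
    return itd_s * 1e6


# A source directly in front of one ear gives the maximum value of the model.
max_itd = itd_microseconds(90.0)   # about 765.3 μs
front_itd = itd_microseconds(0.0)  # 0 μs for a frontal source
```

Under this model, a detection threshold of 10 μs corresponds to an azimuth change of less than 1° near the frontal direction, which is of the same order as the best localization performance reported above.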

Tests reveal that the best azimuth localization performance using only ITD/IPD is obtained with a 1,000 Hz sound, allowing an accuracy of 3–4 degrees (Carlile et al., 1997). Beyond this frequency, ITD/IPD rapidly lose effectiveness due to the relationship between the wavelength of the sound and the physical distance between the listener’s ears. Early research identified the upper threshold value at which ITD loses its effectiveness at between 1,300 Hz and 1,500 Hz (Klumpp and Eady, 1956; Zwislocki and Feldman, 1956; Mills, 1958; Nordmark, 1976). More recent research has found residual efficacy for some participants at 1,400 Hz and a generalized complete loss of efficacy at 1,450 Hz (Brughera et al., 2013; Risoud et al., 2018).

Due to the cyclic nature of the sound signals, an IPD value for a given frequency can be encountered for multiple azimuth positions. In such cases, the information from the IPD becomes ambiguous and can easily lead to an incorrect azimuth estimation, especially with pure tones (Rayleigh, 1907; Bernstein and Trahiotis, 1985; Hartmann et al., 2013). Different azimuthal positions may appear indistinguishable by IPD because the corresponding interaural delays differ by a whole number of periods of the sound, so that the phase differences are identical (Elpern and Naunton, 1964; Sayers, 1964; Yost, 1981; Hartmann and Rakerd, 1989a). The number and angular values of these ambiguous directions depend on the wavelength of the sound: the higher the frequency of the sound, the greater the number of ambiguous positions generated. Consequently, the ITD/IPD operates more effectively at low frequencies.
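The growth in the number of ambiguous directions with frequency can be illustrated with a rough sketch. It assumes a pure tone, sources restricted to the frontal horizontal half-plane (azimuths between −90° and +90°), and the spherical head model with Rh = 8.75 cm and Vs = 34,300 cm/s; under these assumptions, two azimuths share the same IPD when their ITDs differ by a whole number of periods. The function name is illustrative.

```python
import math

ITD_MAX_S = 3.0 * 8.75 / 34_300.0  # maximum ITD of the spherical head model, in seconds


def ambiguous_azimuths(freq_hz: float, azimuth_deg: float) -> list:
    """Other frontal azimuths (degrees) producing the same IPD as azimuth_deg."""
    base = math.sin(math.radians(azimuth_deg))
    # Change in sin(azimuth) that shifts the ITD by exactly one period of the tone.
    step = 1.0 / (freq_hz * ITD_MAX_S)
    k_max = int(2.0 / step)  # sin(azimuth) spans at most 2 units
    found = []
    for k in range(-k_max, k_max + 1):
        if k == 0:
            continue  # k = 0 is the true source direction itself
        s = base + k * step
        if -1.0 <= s <= 1.0:
            found.append(round(math.degrees(math.asin(s)), 1))
    return sorted(found)


low = ambiguous_azimuths(500.0, 0.0)    # no ambiguous direction at 500 Hz
mid = ambiguous_azimuths(1500.0, 0.0)   # two ambiguous directions, near ±61°
high = ambiguous_azimuths(3000.0, 0.0)  # four ambiguous directions
```

For a frontal source, the model predicts no ambiguity at 500 Hz but an increasing number of phase-equivalent directions at 1,500 Hz and 3,000 Hz, in line with the loss of ITD/IPD effectiveness at higher frequencies.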

3.2. ILD

When a sound source is positioned to the side of the head, one of the ears is more exposed to it. The presence of the head produces a shadowing effect on the sound in the direction of propagation (sometimes referred to as HSE – head-shadow effect). As a result, the sound intensity (or “level”) at the ear shadowed by the head is lower than at the opposite ear (see Panel A of Figure 2). The amount of shadowing depends on the angle, frequency and distance of the sound as well as on individual anatomical features. Computing the difference in intensity between the two ears provides the auditory cue named Interaural Level Difference (ILD). ILD is zero for sounds originating in the listener’s sagittal plane, while for lateral sound sources it increases approximately proportionally to the sine of the azimuth angle (Mills, 1960). From a physical point of view, the head acts as an obstacle to sound propagation for wavelengths shorter than the head size. For longer wavelengths (i.e., lower frequencies), however, the sound wave passes relatively easily around the head and the difference in intensity of the sound waves reaching the two ears becomes imperceptible. Consequently, sound frequencies higher than 4,000 Hz are highly attenuated at the shadowed ear, making the ILD a robust cue for azimuth estimation, whereas for frequencies lower than 1,000 Hz the ILD becomes completely ineffective (Shaw, 1974).
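The frequency dependence of the head shadow can be made tangible by comparing wavelengths with the size of the head. The sketch below assumes a sound speed of 34,300 cm/s and a head diameter of 17.5 cm (twice the 8.75 cm radius of the spherical head model); it also expresses an interaural intensity ratio in decibels. Names and example values are illustrative.

```python
import math

SOUND_SPEED_CM_S = 34_300.0
HEAD_DIAMETER_CM = 17.5  # assumed: twice the 8.75 cm spherical-head radius


def wavelength_cm(freq_hz: float) -> float:
    """Wavelength in centimetres of a tone at freq_hz."""
    return SOUND_SPEED_CM_S / freq_hz


def ild_db(intensity_near: float, intensity_far: float) -> float:
    """Interaural Level Difference in dB from the intensities at the two ears."""
    return 10.0 * math.log10(intensity_near / intensity_far)


# At 1,000 Hz the wavelength (34.3 cm) exceeds the head diameter,
# whereas at 4,000 Hz (about 8.6 cm) it is clearly shorter.
shadowed_at_1k = wavelength_cm(1000.0) < HEAD_DIAMETER_CM
shadowed_at_4k = wavelength_cm(4000.0) < HEAD_DIAMETER_CM
# Hypothetical example: halving the intensity at the far ear gives about 3 dB.
example_ild = ild_db(2.0, 1.0)
```

The crossover, where the wavelength equals the assumed head diameter, lies just below 2,000 Hz, between the two frequency limits quoted above.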

In a reverberant environment, as the distance from the sound source increases, the sound waves reflect off multiple surfaces, resulting in a more complex received binaural signal. This leads to fluctuations in the Interaural Level Differences (ILDs), which have been shown to affect the externalization of sound (the perception that the sound is located at a distance from the listener’s head) (Catic et al., 2013).

3.3. Limits of ITD and ILD

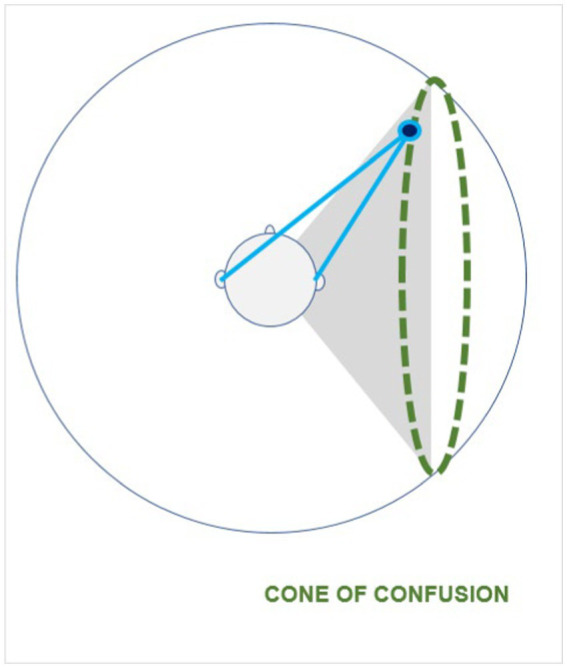

ITD and ILD appear to be two complementary mechanisms, the former being optimized for low frequencies and the latter for high frequencies. Therefore, our auditory system exhibits its poorest localization performance in the range between approximately 1,500 Hz and 4,000 Hz (Yost, 2016; Risoud et al., 2018). However, given a spherical head shape, even a perfect determination of the ILD or the ITD would not be sufficient to permit complete and unambiguous pure tone localization. The ITD depends on the difference between the distances from the sound source to each of the two ears, and the ILD depends on the angle of incidence of the sound wave relative to the axis of the ears. Thus, every point situated at the same distance and the same angle of incidence would theoretically result in the same ITD and ILD. Mathematically, the solution is not a single point, but a set of points located on a hyperbolic surface, whose axis coincides with the axis of the ears. This set of points, for which the difference in distance to the two ears is constant, is called the “cone of confusion” (Figure 3). More information is required in order to obtain an unambiguous localization of the sound source. Additional factors such as reverberation, head movement, and a wider sound bandwidth greatly reduce the uncertainty of localization. In ecological conditions with complex sounds, this type of uncertainty is mainly resolved by analyzing the frequency modulation produced by the reverberation of the sound wave at the outer ear, head and shoulders: the Head-Related Transfer Function.

Figure 3.

Cone of confusion. A sound emitted from any point on the dotted line will give rise to the same ITD because the difference between the distances to the ears is constant. This set of points forms the “cone of confusion.”
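The geometric origin of the cone of confusion can be verified numerically. The sketch below is an illustration under simple assumptions: the ears are modeled as two points 0.18 m apart on the x axis, the head itself is ignored, and the coordinate convention (y forward, z up) and function names are hypothetical. Rotating a source around the interaural axis leaves the difference in path length to the two ears unchanged.

```python
import math

EAR_OFFSET = 0.09  # m, assumed half-distance between the ears


def path_difference(x, y, z):
    """Distance to the left ear minus distance to the right ear (ears on the x axis)."""
    left = math.dist((x, y, z), (-EAR_OFFSET, 0.0, 0.0))
    right = math.dist((x, y, z), (EAR_OFFSET, 0.0, 0.0))
    return left - right


def rotate_about_interaural_axis(point, angle_deg):
    """Rotate a point around the x axis (the axis passing through both ears)."""
    x, y, z = point
    a = math.radians(angle_deg)
    return (x, y * math.cos(a) - z * math.sin(a), y * math.sin(a) + z * math.cos(a))


source = (1.0, 1.5, 0.0)  # front-right of the listener, at ear height
reference = path_difference(*source)
differences = [
    path_difference(*rotate_about_interaural_axis(source, angle))
    for angle in range(0, 360, 45)
]
print(all(abs(d - reference) < 1e-9 for d in differences))
```

The 180° rotation in this example maps the front-right source to a back-right position with the same path difference, which is the front-back ambiguity discussed later in the text.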

3.4. Head-related transfer function (HRTF)

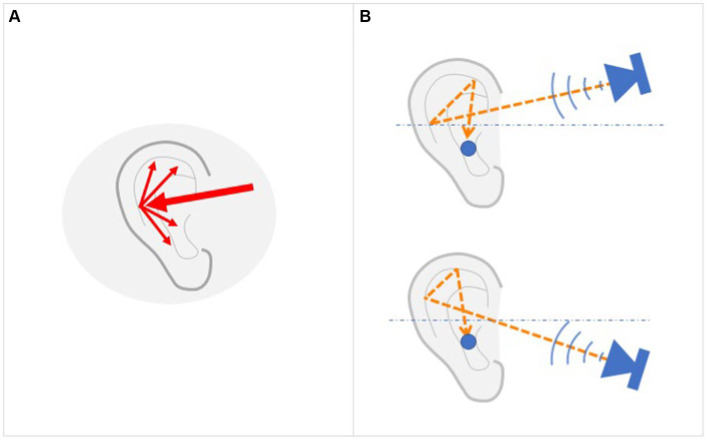

Our perceptual system has evolved with a special ability to decode the complex structure of the sounds reaching our ears, thus enabling us to estimate the spatial origin of sounds. Under ecological conditions, each eardrum receives not only the direct sound wave of each sound that reaches the listener’s ear but also a complex series of sound waves reflected from the shoulders, head, and auricle (Figure 4). This complex set of new waves, which depends on the orientations of the head and the torso relative to the sound source, greatly enriches the spatial information carried by the sound. These reflected waves are used by the auditory system to extract spatial information and to infer the origin of the sound. This acoustic filtering can be characterized by transfer functions called the Head-Related Transfer Functions (HRTFs). HRTFs are considered monaural cues because the spectral distortions they produce depend solely on the position of the sound source relative to the orientation of the body, the head, and the ear. No comparison between the signals received by both ears is required.

Figure 4.

HRTF. (A) Arrival of a sound wave at the outer ear and the generation of a series of secondary waves due to reflection in the auricle. (B) Each sound wave that reaches the ear thus generates a different set of reflected waves, depending on its original orientation. Using this relationship, our auditory system is able to reconstruct the origin of the sound by analyzing the set of waves that reach the eardrum.

Several studies have reported better HRTF localization performance for sound sources positioned laterally than for sources positioned frontally and rearwardly. For example, Mendonça, and later Oberem, found an improvement in lateral localization ranging from a few degrees to ten degrees, depending on the test conditions (Wightman and Kistler, 1989; Mendonça et al., 2012; Oberem et al., 2020). However, a marked interindividual variability in localization performances as well as in the ability and time required to adapt to non-individualized HRTFs has also been observed (Mendonça et al., 2012; Stitt et al., 2019). Begault and colleagues conducted a study on the localization of speech stimuli in which they compared individualized and non-individualized HRTFs (obtained from a dummy head). One of the aims of the research was to assess whether the relationship between listener and dummy head size was a predictor of localization errors. Contrary to initial expectations, the results showed no correlation between localization error and head size difference (Begault et al., 2001). Another interesting and rather unexpected result reported by both Begault et al. and Møller et al. was that individualized HRTFs do not bring about an advantage in speech localization accuracy compared to non-individualized HRTFs. To explain this finding, Begault and colleagues suggest that most of the spectral energy of speech is in a frequency range in which ITD cues are more prominent than HRTF spectral cues (Møller et al., 1996; Begault et al., 2001).

The way in which the sound is modified by the reflection in the outer ear and the upper body can be recorded experimentally and reproduced by transfer functions. The corresponding information can be used in practice to play sounds through headphones and create the perception that each sound is coming from a distant desired origin, thus creating a three-dimensional virtual auditory environment (Wightman and Kistler, 1989; Møller, 1992). Nowadays, HRTFs are the most frequent way of creating acoustic spatialization systems, strongly driven by the demand for higher-performance entertainment systems, games, and specially augmented/virtual reality systems (Begault, 2000; Poirier-Quinot and Katz, 2018; Geronazzo et al., 2019; Andersen et al., 2021). Because everyone’s anatomy is different and ear shapes are very individual, HRTF techniques can be divided into two main categories depending on whether they use individualized or non-individualized transforms. Although special environments and extensive calibrations are needed in order to obtain individualized transforms, they do, however, permit more accurate auditory spatial perception (Pralong and Carlile, 1996; Meshram et al., 2014; Gan et al., 2017). Individualized HRTFs also require interpolation techniques, as HRTFs are typically measured at discrete locations in space (Freeland et al., 2002; Grijalva et al., 2017; Acosta et al., 2020). Conversely, non-individualized HRTFs are generic HRTFs, obtained on the basis of averaged or shared parameters, which are then universally applied. They are easier to obtain, but are known to cause spatial discrepancies such as poor externalization, elevation errors, misperception, and front-back confusion (Wenzel et al., 1993; Begault, 2000; Berger et al., 2018).
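In the time domain, rendering a sound through an HRTF amounts to convolving the signal with a pair of head-related impulse responses (HRIRs), one per ear. The following minimal sketch shows this operation; the two HRIRs are toy values invented for illustration (a delayed and attenuated copy at the far ear), not measured responses, and the function names are hypothetical. Practical systems use measured or modeled HRIR sets, together with the interpolation mentioned above.

```python
def convolve(signal, impulse_response):
    """Direct-form discrete convolution of two finite sequences."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal with the left-ear and right-ear impulse responses."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy HRIRs for a source on the listener's right (assumed values):
# the right ear receives the sound directly, the left ear later and weaker.
hrir_right = [1.0, 0.0, 0.0, 0.0]
hrir_left = [0.0, 0.0, 0.0, 0.5]

mono = [1.0, -1.0, 0.5]
left, right = render_binaural(mono, hrir_left, hrir_right)
print(left)
print(right)
```

Even this toy pair reproduces an interaural delay and level difference; the direction-dependent spectral shaping that characterizes real HRTFs would come from longer, measured impulse responses.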

Various methods have been developed to generate individualized HRTF based on anthropometric data: by analytically solving the interaction of the sound wave with the auricle (Zotkin et al., 2003; Zhang et al., 2011; Spagnol, 2020), using photogrammetry (Mäkivirta et al., 2020), or based on deep-learning neural networks (Chun et al., 2017; Lee and Kim, 2018; Miccini and Spagnol, 2020). At the same time, several studies have also investigated the possibility of using a training phase to improve the effectiveness of non-individualized HRTFs. Stitt and colleagues found a positive effect of training (Stitt et al., 2019). Mendonça and colleagues investigated whether feedback is necessary in the training phase. Their results clearly indicate that simple exposure to the HRTF sounds without feedback does not produce a significant improvement in acoustic localization (Mendonça et al., 2012).

3.5. Reverberation

Reverberation enriches the sound along its path with additional information concerning the environment, the sound itself, and its source. Under anechoic conditions, the listener estimates the direction and distance of the sound source based on its intensity and the spectral content of the sound. When reverberation is present, however, it provides additional cues for direction and distance estimation, thereby potentially improving localization accuracy. In fact, due to reverberation, successive waves resulting from the reflection of the sound on the surfaces and objects in the environment are added to the direct train of sound waves, acquiring and conveying information about the size and the nature of these surfaces as well as their positions relative to the sound source (Gardner, 1995).

A listener who can move their head is better able to utilize the beneficial effects of reverberation. However, under certain conditions, such as in environments with high levels of reverberation or in the Franssen effect, reverberation can negatively impact localization accuracy (Hartmann and Rakerd, 1989b; Giguere and Abel, 1993).

3.5.1. Reverberation and estimation of azimuth and elevation

In the presence of reverberation, the ITD and ILD mechanisms must process both the direct wave and the trains of reflected waves, which may come from directions very different from the original direction of the sound. Although reverberation adds a great deal of complexity to auditory percepts, our nervous system has developed the ability to decode the different overlapping pieces of information. A very effective solution for localization in this context is based on the precedence effect. As mentioned, when a sound is emitted from a given source, our auditory system first receives the direct sound wave and then, at very short time intervals, sound waves reflected from various surfaces in the surrounding environment. The precedence effect is a mechanism by which our brain is able to ignore successive reflections and correctly localize the source of sound based on the arrival of the direct sound wave. This mechanism is crucial in supporting localization in echogenic environments (Blauert, 1996; Hartmann, 1999; Nilsson and Schenkman, 2016).

The literature reports conflicting results concerning the effect of reverberation on localization accuracy in terms of the estimation of azimuth and elevation. In a perceptual study in a reverberant room, Hartmann reported a degradation of azimuth localization due to the presence of reverberation (Hartmann, 1983).

Begault and colleagues, on the other hand, found a significant improvement in azimuth localization (of about 5°) in the presence of reverberation, although for some participants the improvement in accuracy was achieved only when head motion was allowed. However, they also found an increase in the average elevation error from 17.6° without reverberation to 28.7° with reverberation (Begault et al., 2001). Conversely, Guski compared the no-reverberation condition with the reverberation condition in which the sound reverberated from a single surface in different orientations. His results showed an overall increase in correct localizations with a sound-reflecting surface on the floor, especially in terms of elevation (Guski, 1990).

3.5.2. Reverberation and distance estimation

Reverberation has proven to be a useful aid when estimating the distance from a sound source. The reverberant wave train is reflected off surfaces, walls and objects and this causes its energy to remain nearly constant over distance – especially indoors. Under ideal conditions, direct propagation in air causes the direct sound wave to lose 6 dB of intensity for every doubling of distance. In a study conducted in a small auditorium, Zahorik demonstrated that the intensity of reflected waves, while being smaller than the direct wave, decreases by only 1 dB for each doubling of distance (Zahorik, 2002). As a result, the ratio between the direct-wave energy and the reflected-wave energy (called the Direct-to-Reverberant Energy Ratio, or DRR) decreases as the distance from the source increases, and has been shown to be a useful perceptual cue for distance estimation (von Békésy, 1938; Mershon and King, 1975; Bronkhorst and Houtgast, 1999).
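The arithmetic behind this cue can be made explicit. The sketch below takes the two per-doubling figures quoted in the text (6 dB for the direct wave under ideal conditions, 1 dB for the reflected waves in the small-auditorium study) and assumes that each loss is constant per doubling of distance; the function names and the 1 m reference distance are illustrative choices.

```python
import math

DIRECT_LOSS_PER_DOUBLING = 6.0  # dB, ideal free-field figure quoted in the text
REVERB_LOSS_PER_DOUBLING = 1.0  # dB, figure reported for a small auditorium


def level_drop_db(distance_m, reference_m, loss_per_doubling):
    """Level lost between the reference distance and the given distance."""
    return loss_per_doubling * math.log2(distance_m / reference_m)


def drr_change_db(distance_m, reference_m=1.0):
    """Change in DRR relative to the reference distance (negative means a lower DRR)."""
    direct = level_drop_db(distance_m, reference_m, DIRECT_LOSS_PER_DOUBLING)
    reverb = level_drop_db(distance_m, reference_m, REVERB_LOSS_PER_DOUBLING)
    return -(direct - reverb)


for distance in (1.0, 2.0, 4.0, 8.0):
    print(distance, "m:", drr_change_db(distance), "dB")
```

Under these assumptions the DRR falls by about 5 dB for each doubling of distance, which is why it can serve as a distance cue that does not depend on the absolute power of the source.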

3.5.3. Reverberation and front-back confusion

Front-back (and back-front) confusion refers to the misperception of a sound position, with the sound being perceived in the wrong hemifield (front or back). This perceptual confusion is particularly common when synthetic sounds are played or audio headphones are used (i.e., in particular when non-individualized HRTFs are used) (Begault et al., 2001; Rychtáriková et al., 2011). It is particularly critical when bone-conduction audio headphones are used since these, by exploiting an alternative communication channel to the inner ear, completely bypass the outer ear and its contribution to spatial perception (Wang et al., 2022). One way to reduce front-back confusion could be to introduce reverberation in synthesized signals. However, the experimental results are ambiguous. Some studies, such as that of Begault et al. (2001), found that reverberation does not significantly reduce front-back confusion, while other studies have found that the presence of acoustic reverberation waves significantly improves antero-posterior localization and reduces front-back confusion (Reed and Maher, 2009; Rychtáriková et al., 2011).

3.5.4. Reverberation and sound externalization

The presence of reverberation significantly improves the perceived externalization of sound. Externalization refers to the perception of sound as external to and distant from the listener. Poor externalization causes the listener to perceive sound as being diffused “inside his/her head” and is a typical problem when sound is played through headphones (Blauert, 1997). The three factors known to contribute the most to effective externalization are the use of individualized HRTFs, the relative motion between source and listener, and sound reverberation. When creating artificial sound environments, the addition of reverberation – thus reproducing the diffusion conditions found in the real environment – significantly increases the externalization of the sound, giving the listener a more realistic experience (Zotkin et al., 2002, 2004; Reed and Maher, 2009). Reverberation positively influences the externalization of sounds such as noise and speech (Begault et al., 2001; Catic et al., 2015; Best et al., 2020), including in the case of the hearing aids used by hearing-impaired people (Kates and Arehart, 2018). In some cases, the “early” reflections are sufficient to produce a significant effect (Begault, 1992; Durlach et al., 1992).

3.6. Action – perception coupling

Auditory perception in everyday life is strongly related to movement and active information-seeking. The gesture of “lending an ear” is probably the simplest example of action in the service of auditory perception. Experimental research has shown that our auditory system localizes sounds more accurately in two areas: in front of the listener (i.e., 0° azimuth, 0° elevation) and laterally to the listener, i.e., in front of each ear (i.e., ±90° azimuth, 0° elevation). The first position permits the most accurate ITD and ILD-based localization (Makous and Middlebrooks, 1990; Brungart et al., 1999; Tabry et al., 2013), while the second guarantees the highest accuracy that can be obtained on the basis of the HRTF and maximizes the intensity of the sound reaching the eardrum (Mendonça et al., 2012; Oberem et al., 2020).

Unlike some animal species, the human auricle does not have the ability to move independently. As a result, listeners are obliged to move their heads in order to orient their ears. These movements allow them to align the sound in a way that creates the most favorable angle for perception. It should be noted that head movements are strongly related to the orientation of the different senses mobilized, and the resulting movement strategy can be remarkably complex. In addition, head movements are a crucial component in resolving ambiguous or confusing localization conditions (Thurlow et al., 1967; Wightman and Kistler, 1997; Begault, 1999).

The natural way for humans to hear the world is through active whole-body processes (Engel et al., 2013). Movement brings several improvements to auditory localization. Compared to static perception, a perceptual strategy that includes movement results in a richer and more varied percept. Although some early works reported equal or poorer sound localization during head movement (Wallach, 1940; Pollack and Rose, 1967; Simpson and Stanton, 1973), subsequent research has shown several benefits and has revealed the perceptual improvements permitted by perception during movement (Noble, 1981; Perrett and Noble, 1997a, b). Goossens and Van Opstal suggested that head movements could provide richer spatial information that allows listeners to update the internal representation of the sound and the environment (Goossens and Van Opstal, 1999). Some authors have also suggested that a perceptual advantage occurs only when the sound lasts long enough (Makous and Middlebrooks, 1990), with a minimum duration of the order of 2 s appearing to be necessary to allow subjects to achieve the conditions required for maximum performance (Thurlow and Mergener, 1970). Iwaya and colleagues also found that front-back confusion can only be effectively resolved with longer-lasting sounds (Iwaya et al., 2003). Some studies on acoustic localization have taken advantage of this condition for their experimental protocols: for example by using very short stimuli (typically ≤150 ms) to ensure that the sound ends before the subject can initiate a head movement, thus making it unnecessary to restrain the participant’s head (Carlile et al., 1997; Macpherson and Middlebrooks, 2000; Tabry et al., 2013; Oberem et al., 2020). Conversely, when the sound continues throughout the entire movement, the listener can implement a movement strategy within a closed-loop control paradigm (Otte et al., 2013).

Although both conditions of relative motion between source and listener bring about a perceptual advantage, there is a relative advantage in spatial processing when it is the listener who is moving (Brimijoin and Akeroyd, 2014). The presence of motion helps resolve or reduce ambiguities, such as front-back confusion (Wightman and Kistler, 1999; Begault et al., 2001; Iwaya et al., 2003; Brimijoin et al., 2010) and this is true even for listeners with cochlear implants (mainly through head movement) (Pastore et al., 2018). The relative motion improves the perception of distance (Loomis et al., 1990; Genzel et al., 2018), the perception of elevation (Perrett and Noble, 1997b), the effectiveness of HRTF systems (Loomis et al., 1990), and the assessment of one’s own movement (Speigle and Loomis, 1993).

4. Elements influencing auditory localization

4.1. Sound frequency spectrum

Acoustic localization performance is highly dependent on the frequency of the sound. Our perceptual system achieves the best localization accuracy for frequencies below 1,000 Hz and good localization accuracy for frequencies above 3,000 Hz. Localization accuracy decreases significantly in the range between 1,000 Hz and 3,000 Hz. These results are a consequence of the functional characteristics of our localization processes (see ITD and ILD). Experimental research such as that of Yost and Zhong, who tested frequencies of 250 Hz, 2,000 Hz, and 4,000 Hz, has confirmed the different localization abilities for the three frequency ranges (Yost and Zhong, 2014). ITD works best for frequencies below 1,500 Hz, while ILD is most effective for frequencies above 4,000 Hz.

Concerning the HRTF, Hebrank and Wright showed that sound information within the 4,000–16,000 Hz spectrum is necessary for good vertical localization. Langendijk and Bronkhorst consistently showed that the most important cues for vertical localization are in the 6,000–11,000 Hz frequency range. More precisely, Blauert found that the presence of frequency components from about 8,000–10,000 Hz is critical for accurate estimation of elevation. Langendijk and Bronkhorst showed that antero-posterior localization cues occur in the 8,000–16,000 Hz range (Blauert, 1969; Hebrank and Wright, 1974; Langendijk and Bronkhorst, 2002).

The bandwidth of a sound plays an important role in acoustic localization: the broader the bandwidth, the better the localization performance (Coleman, 1968; Yost and Zhong, 2014) under both open-field and reverberant-room conditions (Hartmann, 1983). Furthermore, the spectral content of the sound is an important cue for estimating the distance of the sound source. This type of cue works under two different conditions. Over long distances, high frequencies are more attenuated than low frequencies due to propagation through the air. As a result, sounds with reduced high-frequency content are perceived as being farther away (Coleman, 1968; Butler et al., 1980; Little et al., 1992). However, in order to obtain a noticeable effect, the distance between the source and the listener must be greater than 15 m (Blauert, 1997). For sound sources close to the listener’s head (about 1 m), by contrast, the spectral content is modified due to the diffraction of the sound around the listener’s head. For this reason, for sources in the proximal space (<1.7 m), sounds at lower frequencies (<3,000 Hz) actually result in more accurate distance estimation than sounds at higher frequencies (>5,000 Hz) (Brungart and Rabinowitz, 1999; Kopčo and Shinn-Cunningham, 2011).

Finally, sound frequency appears to play a role in front-back confusion errors. Both Stevens and Newman, and Withington, found that the number of confusion errors was much higher for sound sources below 2,500 Hz. Letowski and Letowski reported more frequent errors for sound sources located near the sagittal plane for narrow-band sounds and for a spectral band below 8,000 Hz. The number of confusion errors decreases rapidly as the energy of the high-frequency component increases (Stevens and Newman, 1936; Withington, 1999; Letowski and Letowski, 2012).

4.2. Sound intensity

Sound intensity plays an important role in several aspects of auditory localization, and especially in determining the distance between the listener and the sound source. It does so by underpinning two important mechanisms: the estimation of the intensity of the direct wave, and the comparison between the intensities of the direct wave and the reverberated waves.

At the theoretical level, the intensity of a spherical wave falls by 6 dB with each doubling of distance (Warren, 1958; Warren et al., 1958). In the real world, however, both environmental factors and sound source features can alter this simple mathematical relationship (Zahorik et al., 2005). Experimental tests have shown that the reduction in intensity during propagation in air is greater than the theoretical value and that this reduction amounts to about 10 dB for each doubling of distance (Stevens and Guirao, 1962; Begault, 1991). However, Blauert found an even higher value of 20 dB (Blauert, 1997). Petersen confirmed that the relationship between intensity reduction and distance can be assumed to be linear (Petersen, 1990).
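As a worked illustration of the theoretical figure, and assuming an ideal point source radiating in a free field (intensity proportional to $1/d^2$), the level $L$ at distance $d$ relative to a reference distance $d_0$ is

$$L(d) = L(d_0) - 20\log_{10}\!\left(\frac{d}{d_0}\right)\ \text{dB}, \qquad L(d_0) - L(2d_0) = 20\log_{10} 2 \approx 6.02\ \text{dB}.$$

If, as a simplifying assumption, an empirical loss of $k$ dB per doubling is taken to be constant over distance, the same relationship can be written as

$$L(d) = L(d_0) - k\,\log_{2}\!\left(\frac{d}{d_0}\right)\ \text{dB}.$$

For example, at $d = 8d_0$ (three doublings) the ideal law predicts a drop of about 18 dB, whereas a rate of $k = 10$ dB per doubling would give 30 dB. The symbols $L$, $d_0$ and $k$ are introduced here for illustration only.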

Some perceptual factors influence the accuracy with which we can estimate the distance to a sound source. The first, of course, is related to our ability to discriminate small changes in intensity. Research has shown that the smallest detectable change in intensity level for humans is about 0.4 dB for broadband noise, while this threshold increases to 1–2 dB for tonal sounds (this value varies with the frequency and sound level) (Riesz, 1932; Miller, 1947; Jesteadt et al., 1977).

It might be expected that a sound of higher intensity would always be easier to localize. However, research has shown that the ability to localize sounds in the median plane deteriorates above about 50 dB (Rakerd et al., 1998; Macpherson and Middlebrooks, 2000; Vliegen and Van Opstal, 2004). Performance degradation is more pronounced for short sounds and affects almost only the median plane – the reduction in localization accuracy on the left–right axis being much less pronounced. Some studies have found that at higher levels, localization performance improves again as the sound intensity increases. Marmel and colleagues tested localization ability at different sound intensity levels and compared artificial HRTF and free-field conditions. They found that in free-field listening, localization ability increases and then deteriorates monotonically up to 100 dB, whereas in the HRTF condition, performance still improves at 100 dB (Macpherson and Middlebrooks, 2000; Brungart and Simpson, 2008; Marmel et al., 2018).

The intensity value provides information relating to both the power and distance of the source. In the absence of information provided by other sensory channels, such as vision, this condition can lead to a state of indecision in the measurement of the two parameters. Researchers are still examining the way the auditory system handles the two pieces of information. The evidence produced by Zahorik and Wightman supports the hypothesis that the two processes are separate. These authors reported good power estimation even when distance estimation was less accurate (Zahorik and Wightman, 2001). The most commonly accepted way of resolving the confusion between power and distance is based on the Direct-to-Reverberant energy Ratio, which consists in a comparison between the direct wave and the reverberant wave train (see “Reverberation”) (Zahorik and Wightman, 2001).

4.3. Pointing methods

Research over the past 30 years has shown that pointing methods can affect precision and accuracy in localization tasks. Pointing paradigms can be classified as egocentric or allocentric, with egocentric methods generally being reported to be more accurate.

When defining a protocol for a localization task, several pointing/localizing methods are possible: the orientation of a body part, such as pointing with a hand or a finger (Pernaux et al., 2003; Finocchietti et al., 2015), the orientation of the chest (Haber et al., 1993), the nose (Middlebrooks, 1999b), or the head (Makous and Middlebrooks, 1990; Carlile et al., 1997; Begault et al., 2001); use of a hand-tool (Langendijk et al., 2001; Cappagli and Gori, 2016), or a computer interface (Pernaux et al., 2003; Schoeffler et al., 2014); walking (Loomis et al., 1998); or simply using a verbal response (Klatzky et al., 2003; Finocchietti et al., 2015). In 1955, Sandel and colleagues conducted three localization experiments in which participants gave the response using an acoustic pointer. The method made use of a mobile loudspeaker that participants could place at the location where they felt the stimulus had been emitted (Sandel et al., 1955).

Several studies have focused on evaluating or comparing different localization methods. In a study conducted on blind subjects, Haber and colleagues compared nine different response methods using pure tones as stimuli in the horizontal plane. They showed that using body parts as the pointing method provides the best performance by optimizing localization accuracy and reducing intersubject variability (Haber et al., 1993).

An interesting study was conducted by Lewald et al. (2000), who ran a series of auditory localization experiments investigating the influence of head rotation relative to the trunk. Across these experiments, they used both headphones and an array of nine speakers arranged in the azimuthal plane to deliver the sound stimuli. For the response, they tested head pointing, a laser pointer attached to the head, and a swivel pointer (which must be directed with both hands toward the sound source). The authors highlighted that sound location is systematically underestimated (localization is biased toward the sagittal plane) when the head is oriented eccentrically. The relationship between the orientation of the head in the azimuthal plane and the localization error appears almost linear. The presentation of virtual sources through headphones also showed similar deviations. When a visual reference of the head’s median plane was provided, sound localization was more accurate. Odegaard and colleagues used a very large sample of subjects (384 participants) in a study investigating the presence and direction of bias in both visual and auditory localizations. They used an eye tracking system to record participants’ responses. Contrary to Lewald, in the unimodal auditory condition they found a peripherally oriented localization bias (i.e., overestimation), which was also more pronounced as stimulus eccentricity increased (Odegaard et al., 2015). Recanzone and colleagues conducted comparative research, in which they found that the eccentricity of peripheral auditory targets is typically overestimated when using hand pointing, and typically underestimated when using head pointing methods. They suggested that the different relative position of the head with respect to the sound source and the trunk may explain these results (Recanzone et al., 1998).

One study shows that the dominant hand also influences responses. The study by Ocklenburg and colleagues investigated the effect of laterality in a sound localization task. The protocol was based on the presentation of the auditory stimuli through a set of 21 horizontal speakers, with responses given by head orientation or hand pointing. Interestingly, the results show that both right- and left-handers have a tendency to localize sound toward the side contralateral to the dominant hand, regardless of their overall accuracy (a bias similar to that observed in visual perception, suggesting that the same supramodal neural processes are involved) (Ocklenburg et al., 2010).

Majdak and colleagues used individualized HRTFs to compare head- and hand-pointing. In a virtual environment, they found that the pointing method had no significant effect on the localization task (Majdak et al., 2010). Tabry and colleagues also assessed head- and hand-pointing performance. They assessed the participants’ response to real words both in a free-field environment and in a semi-anechoic room. Under these conditions, and in contrast to Majdak’s findings, they found large and significant differences in performance between the two pointing methods. More specifically, they found better performance in the horizontal plane with the hand-pointing method, while head-pointing resulted in better performance in the vertical plane (Tabry et al., 2013). In addition, they reported lower accuracy for head-pointing at extreme upward and downward elevations, probably due to the greater difficulty of the articular movements.

Populin compared head- and gaze-pointing. He reported similar performances with the two methods in the most eccentric positions. However, in frontal positions, he unexpectedly found that gaze-pointing resulted in significantly larger errors than head-pointing (Populin, 2008).

Gilkey and colleagues proposed an original method using an allocentric paradigm called GELP (God’s Eyes Localization Pointing) designed to accelerate response collection in auditory-localization experiments. GELP uses a 20-cm-diameter sphere as a model of the listener’s head, on which the participant can indicate the direction from which he/she perceives the sound coming. Test results obtained with GELP showed that it was a fast way to record participants’ responses and that it was also more accurate than the verbal response method. However, when they compared their results with those of Makous and Middlebrooks (1990), the authors found that the GELP technique is significantly less accurate than the head-pointing technique (Makous and Middlebrooks, 1990; Gilkey et al., 1995). These results were subsequently confirmed by the work of Djelani et al. (2000).

To collect responses in a localization task, it is also possible to use a computer-controlled Graphical User Interface (GUI) through which participants can indicate the perceived direction. Pernaux and colleagues, and Schoeffler and colleagues, compared two different GUI methods, consisting of a 2D or 3D representation, with the participants using a mouse to give their responses. Both reported that the 3D version was more effective. Moreover, Pernaux and colleagues also compared the finger-pointing method with the two GUI methods and showed that finger-pointing was faster and more accurate (Pernaux et al., 2003; Schoeffler et al., 2014).

Table 1 shows and classifies a selection of articles that have investigated the characteristics of different pointing methods. This table provides an overview of the main categories into which the literature on auditory localization can be pragmatically classified. It also includes a selection of key reference works that illustrate these categories. The information catalogued in the table can serve as a framework for organizing new related work.

Table 1.

Experimental research articles on pointing methods in auditory localization.

| References | Pointing method: Head (H), Gaze (G), Hand/Finger (HF), Hand Pointer Tool (T), Other (specified) | Spatial dimension: Azimuth (A), Elevation (E), Distance (D) | Auditory cue | Environ.: Real (R), Virtual (V) |
|---|---|---|---|---|
| Aggius-Vella et al. (2018) | Verbally | A | | R |
| Aggius-Vella et al. (2020) | Verbally (f2) | A | | R |
| Bahu et al. (2016) | ×, ×, × (a) | A, E | | R |
| Begault et al. (2001) | ×, Graph_Int (c) | A, E, D | HRTF | V |
| Berthomieu et al. (2019) | Verbally | D | | V |
| Bidart and Lavandier (2016) | Scale (d), verbally | D | | V |
| Boyer et al. (2013) | (×), × | A | | V |
| Brungart et al. (1999) | (e) | A, E | ITD, ILD, HRTF | V |
| Cappagli et al. (2017) | Verbally (f2) | D | | R |
| Cappagli and Gori (2016) | × | A | | R |
| Carlile et al. (1997) | × | A, E | | R |
| Chandler and Grantham (1992) | PB, verbally (f2) | A, E, D | | R |
| Djelani et al. (2000) | ×, ×, GELP | A, E | HRTF | V |
| Dobreva et al. (2011) | Joystick | A, E | ITD, ILD | R |
| Finocchietti et al. (2015) | × | A, E | | R |
| Getzmann (2003) | ×, Verbally | E | | R |
| Gilkey et al. (1995) | GELP | A, E | | R |
| Guo et al. (2019) | Verbally | (A), D | | R |
| Han and Chen (2019) | Verbally (f2) | A | HRTF | V |
| Klingel et al. (2021) | Keyboard (f2) | A | ITD, ILD | V |
| Langendijk et al. (2001) | × (a) | A, E | | V |
| Lewald et al. (2000) | ×, ×, Laser pointer | A | | R, V |
| Loomis et al. (1998) | Verbally, walk (g) | D | | R |
| Macpherson (1994) | Verbally | (A), E | HRTF | R |
| Macpherson and Middlebrooks (2000) | × | (A), E | | R |
| Majdak et al. (2010) | ×, × | A, E, D | HRTF | V |
| Makous and Middlebrooks (1990) | × | A, E | | R |
| Mershon and King (1975) | Writing | D | | R |
| Middlebrooks (1999b) | ×, (i) | A, E | HRTF | R, V |
| Nilsson and Schenkman (2016) | Keyboard (f2) | A | ITD, ILD | V |
| Oberem et al. (2020) | ×, × (b) | E | HRTF | V |
| Ocklenburg et al. (2010) | ×, × | A | | R |
| Odegaard et al. (2015) | × | A | | R |
| Otte et al. (2013) | × | A, E | | R |
| Pernaux et al. (2003) | ×, Graph_Int (c) | A, E | | V |
| Populin (2008) | × | A, E | | R |
| Rajendran and Gamper (2019) | Keyboard (f3) | E | HRTF | V |
| Recanzone et al. (1998) | ×, Switch | A | | R |
| Rhodes (1987) | Verbally | A | | R |
| Risoud et al. (2020) | Verbally | A | ITD, ILD, HRTF | R |
| Rummukainen et al. (2018) | Verbally (f2) | A | ITD, ILD | V |
| Schoeffler et al. (2014) | Graph_Int (c) | A, E | | R |
| Spiousas et al. (2017) | Verbally | D | | R |
| Tabry et al. (2013) | ×, × | A, E | | R |
| Yost (2016) | Keyboard (f4) | A | | R |
| Zahorik (2002) | Writing (h) | D | | R |
| Van Wanrooij and Van Opstal (2004) | × | A, E | HRTF | R |

The table presents a selection of research articles on experimental auditory localization and the pointing methods used. The selected articles compare different pointing methods or provide information about a specific method. The Pointing method column lists the pointing methods used or analyzed [Head (H), Gaze (G), Hand/Finger (HF), Hand Pointer Tool (T), Other (specified)]. The Spatial dimension column indicates which spatial dimensions are considered [azimuth (A), elevation (E), distance (D)]. Parentheses indicate that the variable is part of the assessment, even though it is not the main object of the research. The Auditory cue column indicates whether the article focuses on the analysis of a specific auditory cue: ITD, ILD, HRTF. The Environ. column indicates whether the test was conducted in a real environment (R), using loudspeakers placed in real space around the listener, or in a virtual environment (V), with the listener wearing headphones and using HRTF techniques. “Graph_Int” stands for Graphical Interface and “PB” for Push Button. (a) Gun pointer. (b) Hand-held marker. (c) Schematic 2D and 3D views on which the subject reported his localization judgment with a mouse or a joystick. (d) Representative scale with hand selection. (e) HRTF measurement. (f2) Two-alternative forced choice. (f3) Three-alternative forced choice. (f4) Fifteen-alternative forced choice. (g) Listener had to walk to the location perceived as the source. (h) On a computer terminal with a numeric keypad. (i) Listener was instructed to “point with her/his nose.”

4.4. Training

The considerable research and extensive experimental tests conducted in recent decades have shown that habituation and training are factors that significantly influence participants’ performance in localization tasks. Habituation allows participants to become familiar with the task and the materials used, and a few trials are usually enough. Training is a deeper process that aims to enable participants to “appropriate” the methods and stimuli and requires a much greater number of trials (Kacelnik et al., 2006; Kumpik et al., 2010). There is no consensus on the duration required for an effective initial training phase. The training task plays an equally important role. For instance, Hüg et al. (2022) investigated the effect of training methods on auditory localization performance for the distance dimension. Comparing active and passive movements, they observed that the training was effective in improving localization performance only with the active method (Hüg et al., 2022). Deep and effective training results in much lower variability in the results. However, from an ecological point of view, deep training may affect the spontaneity of responses (Neuhoff, 2004).

Although some authors prefer not to subject their participants to a training phase, thus prioritizing unconditioned responses, this approach appears to be very uncommon (Mershon and King, 1975; Populin, 2008). Some studies have foregone the use of a habituation or training phase and have instead performed a calibration and/or verification of task understanding (Otte et al., 2013). Bahu et al. and Hüg et al. proposed a simple habituation phase consisting of 10 or 4 trials, respectively, that were identical to the task used in the subsequent test (Bahu et al., 2016; Hüg et al., 2022). Macpherson and Middlebrooks proposed training consisting of five consecutive phases, each composed of 60 trials. The five phases progressively introduced the participant to the complete task. The entire training phase lasted 10 min and was performed immediately before the tests (Macpherson and Middlebrooks, 2000).

Other studies, by contrast, have proposed a more extensive training phase. In a study specifically devoted to the effects of training on auditory localization abilities, Majdak and colleagues found that, for the head- and hand-pointing methods respectively, listeners needed 590 and 710 trials (on average) to achieve the required performance (Majdak et al., 2010). To enable their participants to learn how to use the pointing method correctly, Oberem and colleagues proposed training consisting of 600 localization trials with feedback (Oberem et al., 2020). Middlebrooks trained participants with 1,200 trials (Middlebrooks, 1999b). Oldfield and Parker’s participants were trained for at least 2 h before performing the test (Oldfield and Parker, 1984). Makous and Middlebrooks administered 10 to 20 training sessions to listeners, with and without feedback (Makous and Middlebrooks, 1990).

4.5. Auditory localization illusions

In auditory illusions, the perception or interpretation of a sound is not consistent with the actual sound in terms of its physical, spatial, or other characteristics. Some auditory illusions concern the localization or lateralization of sound. One of the earliest and best-documented auditory illusions is the Octave illusion (or Deutsch illusion), discovered by Diana Deutsch in 1973. Deutsch has identified a large number of auditory illusions of different types, of which the Octave illusion is the best known. This illusion is produced by playing a “high” and a “low” tone, one octave apart, through stereo headphones while alternating the tone-ear correspondence (“high” left and “low” right, and vice versa) four times per second. The illusion takes the form of a perceptual alteration of the nature and lateralization of the sounds, which are perceived as a single tone that continuously alternates between the right and left ears (Deutsch, 1974, 2004). Although the explanation of this illusion is still a matter of debate, the most widely accepted account is the one proposed by Deutsch herself, which attributes it to a conflict between the “what” and “where” decision-making mechanisms (Deutsch, 1975).

One of the most robust and fascinating auditory illusions is the Franssen effect, discovered by Nico Valentinus Franssen in 1960. The Franssen effect is created by playing a sound through two loudspeakers in a way that leads the listener to mislocalize it. At the beginning, the sound is emitted from only one of the loudspeakers (it is unimportant whether this is the left or right speaker) before being completely transferred to the opposite side. Although the first speaker has stopped playing, the listener does not perceive the change of side. The most widely accepted explanation of the Franssen effect identifies as key elements the use of a pure tone, the change in laterality through “rapid fading” from one side to the other, the dominance of onsets for localization (in accordance with the law of the first wave front) and, most importantly, the presence of reverberation. In the absence of reverberation, the effect does not occur (Hartmann and Rakerd, 1989b). The illusion created by the Franssen effect is an excellent example of how perception (and in this particular case, auditory localization) also arises from the individual’s prior experience and is not just the result of momentary stimulation.
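For readers who wish to reproduce these two stimuli, the following Python sketch generates the raw sample sequences using only the standard library. It is a minimal illustration under stated assumptions, not the procedure of the original studies: the function names, the sample rate, the 400/800 Hz tone pair, the 500 Hz Franssen tone and the 30 ms linear cross-fade are all illustrative defaults chosen here. Only the octave relationship, the alternation rate of four times per second, and the transfer of the sound from one loudspeaker to the other come from the description above.

```python
import math


def octave_illusion(low_hz=400.0, high_hz=800.0, switches_per_s=4.0,
                    duration_s=2.0, sample_rate=44100):
    """Return (left, right) sample lists for a dichotic octave sequence.

    The two ears always receive different tones, and the tone-ear
    assignment is swapped `switches_per_s` times per second.
    Frequencies and sample rate are assumed values.
    """
    segment_len = int(sample_rate / switches_per_s)  # samples per segment
    left, right = [], []
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        # Even segments: high tone left, low tone right; odd segments: swapped.
        if (n // segment_len) % 2 == 0:
            f_left, f_right = high_hz, low_hz
        else:
            f_left, f_right = low_hz, high_hz
        left.append(math.sin(2 * math.pi * f_left * t))
        right.append(math.sin(2 * math.pi * f_right * t))
    return left, right


def franssen(freq_hz=500.0, fade_s=0.03, duration_s=1.0, sample_rate=44100):
    """Return (first, second) loudspeaker signals for a Franssen-type stimulus.

    The first loudspeaker starts abruptly and fades out; the second fades
    in over `fade_s` seconds (assumed value) and then carries the tone alone.
    """
    first, second = [], []
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        tone = math.sin(2 * math.pi * freq_hz * t)
        gain_second = min(t / fade_s, 1.0)  # linear fade-in, no abrupt onset
        first.append((1.0 - gain_second) * tone)
        second.append(gain_second * tone)
    return first, second
```

The sample lists can be written to a stereo file or passed to an audio back end of choice. In the Franssen sketch the two gains always sum to one, so the summed signal is a steady tone and only its distribution between the loudspeakers changes.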

Advances in the understanding of the functioning of the auditory system have stimulated new and more original research, and this has led to the discovery (or creation) of new auditory illusions. Bloom studied the perception of elevation and created an illusion of sound elevation through spectral manipulation of the sound (Bloom, 1977). A more recent auditory illusion is known as the Transverse-and-bounce illusion. This illusion uses front-to-back confusion and volume changes to create the perception that a single sound stimulus is in motion. When the volume increases, the sound is perceived as approaching, while when it decreases, it is perceived as moving away from the listener. This illusion can be reproduced using either speakers or headphones (Bainbridge et al., 2015). DiZio and colleagues investigated the Audiogravic Illusion (i.e., head-centered auditory localization influenced by the intensity and direction of gravity). To do this, they used an original experimental setup to manipulate the direction of gravity perceived by participants. The results of their research show that by increasing the magnitude of the resulting gravitational force and changing its direction relative to the head and torso, it is possible to obtain an apparent displacement of a sound relative to the head in the opposite direction (DiZio et al., 2001).

Some auditory illusions have subsequently been used in a number of important applications. Stereophony is perhaps the most widely used illusion. Stereophony is based on the “summing localization” effect: when two sounds reach the two ears with a “limited incoherence” in time and level, the stimuli are merged into a single percept. Under these conditions, our brain infers a “phantom source,” located away from the listener, whose location is consistent with the perceived differences between the right and left ear stimuli. The purpose of using this illusion is to achieve a wider spatial perception in the reproduction of sounds and music with headphones or speakers (Chernyak and Dubrovsky, 1968).
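As a practical illustration of how a level difference between two channels can be used to place a phantom source, the sketch below implements a constant-power (sine/cosine) pan law in Python. This pan law is a common audio-engineering convention assumed here for illustration; it is not taken from Chernyak and Dubrovsky (1968), and the function names and the pan parameter ranging from -1 to +1 are hypothetical. The sketch manipulates level only and leaves time differences aside.

```python
import math


def constant_power_gains(pan):
    """Return (gain_left, gain_right) for a pan position in [-1, 1].

    -1 places the source fully left, 0 in the centre, +1 fully right.
    The squared gains sum to one, so total power stays constant.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must lie in [-1, 1]")
    angle = (pan + 1.0) * math.pi / 4.0  # map [-1, 1] onto [0, pi/2]
    return math.cos(angle), math.sin(angle)


def level_difference_db(gain_left, gain_right):
    """Inter-channel level difference in dB (positive favours the right)."""
    return 20.0 * math.log10(gain_right / gain_left)
```

Multiplying a mono signal by the two gains yields the left and right channels. A pan of 0 gives equal gains and a level difference of 0 dB, while moving the pan toward +1 increases the difference in favor of the right channel.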

5. Conclusion

The human ability to localize sounds in our surroundings is a complex and fascinating phenomenon. Through a sophisticated set of mechanisms, our auditory system enables us to perceive the spatial location of sounds and orient ourselves in the world around us.

In this article, we examined the main processes involved in auditory localization, based on monaural and binaural cues, time and intensity differences between the ears, and frequencies that make it easier or more difficult to localize the source. We have supplemented the “traditional” description of these mechanisms with the most recent research findings, which show how some ancillary cues, such as reverberation or relative motion, are essential to achieve our impressive localization performance. We have also enhanced the functional description with relevant information concerning methodologies and perceptual limitations in order to provide a broader information set.