Abstract

Real‐world evidence (RWE) trials have a key advantage over conventional randomized controlled trials (RCTs) due to their potentially better generalizability. High generalizability of study results facilitates new biological insights and enables targeted therapeutic strategies. Random sampling of RWE trial participants is regarded as the gold standard for generalizability. Additionally, the use of sample correction procedures can increase the generalizability of trial results, even when using nonrandomly sampled real‐world data (RWD). This study presents descriptive evidence on the extent to which the design of currently planned or already conducted RWE trials takes sampling into account. It also examines whether random sampling or procedures for correcting nonrandom samples are considered. Based on text mining of publicly available metadata provided during registrations of RWE trials on clinicaltrials.gov, EU‐PAS, and the OSF‐RWE registry, it is shown that the share of RWE trial registrations with information on sampling increased from 65.27% in 2002 to 97.43% in 2022, with a corresponding increase from 14.79% to 28.30% for trials with random samples. For RWE trials with nonrandom samples, there is an increase from 0.00% to 0.95% of trials in which sample correction procedures are used. We conclude that the potential benefits of RWD in terms of generalizing trial results are not yet being fully realized.

Study Highlights.

WHAT IS THE CURRENT KNOWLEDGE ON THE TOPIC?

Increasing the generalizability of RWE trials is important for the transferability of trial results to marginalized patient groups. There is currently one review that identifies methodological challenges in the generalizability of RWE trials. Empirical evidence on the generalizability of existing RWE trial results is still lacking, however.

WHAT QUESTION DID THIS STUDY ADDRESS?

This study is the first to provide empirical evidence on the extent to which RWE trials utilize randomly sampled data or, if not randomly sampled, have applied sampling correction procedures. Random sampling or sample correction procedures are a prerequisite for the generalizability of RWE trial results.

WHAT DOES THIS STUDY ADD TO OUR KNOWLEDGE?

This study shows that the potential of RWE trials to increase the generalizability of results is far from exhausted. However, the proportion of RWE trials registered on clinicaltrials.gov, EU‐PAS, and OSF that used either random sampling or sample correction procedures to increase generalizability increased to almost one third between 2002 and 2022.

HOW MIGHT THIS CHANGE CLINICAL PHARMACOLOGY OR TRANSLATIONAL SCIENCE?

The study results are particularly important for translational science because they demonstrate the potential that can be leveraged to increase the generalizability of RWE trials. Increasing the generalizability of RWE trials can lead to novel biological insights and help to develop targeted therapeutic strategies.

INTRODUCTION

According to the US Food and Drug Administration (FDA), real‐world data (RWD) are “data relating to patient health status and/or the delivery of health care routinely collected from a variety of sources.” 1 The sources from which RWD can be obtained range from disease registries, insurance claims, and population surveys to wearables, apps, and electronic health records. 2 , 3 Trials based on RWD, that is, real‐world evidence (RWE) trials, are more efficient to conduct, can include investigations of outcomes that would not be possible with RCTs, and include observations of real‐world patient behavior outside the setting of common clinical trials. 4 RCTs offer a high internal validity (the extent to which causal effects can be estimated on the basis of a study design), but RWE trials typically offer greater external validity, that is, the results from RWE trials are often better generalizable to a target population. 5 , 6 , 7 , 8 Theoretically, RCTs can also have high external validity. This assumes that the RCT trial sample represents the actual target population. In research practice, however, this is not the case for the vast majority of RCTs conducted: Novel therapeutics for the treatment of a specific disease are tested in RCTs, in which patients are enrolled in selected trial centers. In most cases, however, trial center patient populations differ from the actual target population, that is, all patients with the disease against which the therapeutic is being tested. Thus, most RCTs conducted have low external validity. 9 , 10 The potentially higher external validity of the RWE trials is mainly due to the data sources and survey methods used, which may allow a more comprehensive coverage of population strata.

A high degree of trial generalizability, that is, external validity, contributes to new biological insights and supports the development of targeted therapeutics. 11 , 12 The basic requirement for such generalizability is random sampling of study participants: random sampling involves specifying a probability p ∈ (0,1) for each study participant to be included in the trial sample, 3 , 10 thereby avoiding selection bias. Selection bias implies that outcomes from RWE trials cannot be unreservedly generalized to all population groups of interest. One reason for this is that selection bias distorts statistical estimates of treatment effects. A second reason is that most inferential statistical methods used in RWE trials are based on normality assumptions, which are only met with random sampling – although obtaining a truly random and representative sample is challenging in practice for both RCTs and RWE trials.

Hence, in principle, sampling methods must be distinguished according to whether they are suitable for generating random samples or not. Nonrandom sampling per se does not preclude the use of RWD. On the one hand, the generalizability of results does not necessarily have to be the goal of every RWE trial. For instance, the primary objective may be to record patient behavior in order to obtain more detailed information about the effects of a tested drug. If, on the other hand, the generalizability of results is one of the objectives of an RWE trial, it must be assumed that in many cases random samples will not be available or can only be obtained at great cost and in a time‐consuming manner. This is particularly true for trials with rare disease end points, as the cost and time required to obtain RWD increases as the disease prevalence decreases. In such cases where random samples are not available, correction procedures can be used. Weighting or raking 13 , 14 , 15 as well as sample selection 16 , 17 , 18 and outcome regression models 19 , 20 can help to improve the generalizability of results from nonrandom samples in RWE trials. 3 , 10 These correction procedures are typically not used in RCTs because of lower sample sizes and a lack of statistical power for employing these methods.
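To make the idea of weighting concrete, the following minimal sketch illustrates post‐stratification for a nonrandom sample. It assumes a hypothetical target population with two strata whose population shares are known; the function names, strata, and all numbers are illustrative assumptions and are not taken from any registered trial.

```python
# Illustrative post-stratification sketch; all names and numbers are hypothetical.

def naive_mean(sample):
    """Unweighted mean outcome of a list of (stratum, outcome) pairs."""
    return sum(outcome for _, outcome in sample) / len(sample)


def poststratified_mean(sample, population_shares):
    """Weight each stratum mean by its known share in the target population.

    Assumes every stratum listed in population_shares is observed in the sample.
    """
    totals, counts = {}, {}
    for stratum, outcome in sample:
        totals[stratum] = totals.get(stratum, 0.0) + outcome
        counts[stratum] = counts.get(stratum, 0) + 1
    return sum(population_shares[s] * totals[s] / counts[s] for s in counts)


# Hypothetical nonrandom sample: "young" patients make up 80% of the sample
# but only 50% of the assumed target population.
sample = [("young", 1.0)] * 8 + [("old", 3.0)] * 2
population_shares = {"young": 0.5, "old": 0.5}

print(naive_mean(sample))                              # estimate ignoring selection
print(poststratified_mean(sample, population_shares))  # reweighted estimate
```

In this toy setting the unweighted mean is pulled toward the over‐represented stratum, whereas the reweighted mean reflects the assumed population composition. Raking extends the same idea to several margins that are adjusted iteratively.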

Regarding the generalizability of findings from RWE trials, there is currently a lack of transparency. It is worth noting that both the FDA and the European Medicines Agency (EMA) have established regulatory pathways for RWD, 21 , 22 , 23 , 24 which determine the design of RWE trials. In principle, standardized study protocols can be used to increase transparency along these pathways regarding the generalizability of findings from RWE trials. However, existing protocols, such as the Harmonized Protocol Template to Enhance Reproducibility (HARPER), document neither the sampling method (random or nonrandom) nor the use of sample correction procedures. 25 Information from current trial protocols therefore does not allow conclusions to be drawn about the generalizability of results.

Also, trial registration can increase the transparency of RWE trials regarding the generalizability of findings. In 2019, the ISPOR‐ISPE working group proposed registrations of RWE trials as part of its Real‐World Evidence Transparency Initiative. 26 As suggested by ISPOR‐ISPE, trial registration involves documenting the entire research design in order to track deviations from standardized study designs and to improve the overall transparency of individual trials. 26 Currently, there are three repositories available for registering RWE trials. The first is the OSF Real‐World Evidence (OSF‐RWE) Registry, which is offered by ISPOR in conjunction with the Open Science Framework. The second is the European Union's electronic registry for post‐authorization studies (EU‐PAS), which is frequently used for Phase IV trial registration. Finally, clinicaltrials.gov, originally developed for clinical trials, also permits the registration of RWE trials. In terms of transparency, a key advantage of trial registries over standard protocols is that trial design information can be provided voluntarily at the time of registration, going beyond what is required in existing standard protocols. In fact, clinicaltrials.gov registrations even require information on random or nonrandom sampling. In addition, other information, such as information on the use of sample correction procedures, can be provided on a voluntary basis, although providing this information is not mandatory for registration in any of the clinicaltrials.gov, OSF‐RWE, and EU‐PAS repositories.

On the basis of information that may be contained in publicly available trial registration documents, it is therefore possible to assess the extent to which considerations of random or nonrandom sampling and, where appropriate, sample correction procedures in the design of registered RWE trials are presented transparently. The transparent presentation of sampling procedures or procedures for the correction of nonrandom sampling is a prerequisite for the assessment of the generalizability of findings from RWE trials. This study provides descriptive evidence regarding the extent to which information on random and nonrandom sampling has been shared to date in registrations of RWE trials on clinicaltrials.gov, OSF, and EU‐PAS. For trials with nonrandom sampling on clinicaltrials.gov, OSF, and EU‐PAS, we also report the extent to which the utilization of sample correction procedures is reported. Our study thus contributes to increasing transparency regarding the generalizability of results from RWE trials.

METHODS

Our descriptive study first describes the number of registrations of RWE trials on clinicaltrials.gov and in the OSF and EU‐PAS registries. In order to report how many of the RWE trials listed in these databases provided information on random and nonrandom sampling as well as sample correction procedures, we first extracted study metadata from all three databases, where publicly available. Trial submissions were eligible for inclusion if they were explicitly designed to utilize RWD in a non‐interventional and nonrandomized trial setting. The number of RWE trial registrations was calculated based on the number of trials registered as observational studies in clinicaltrials.gov, OSF, and EU‐PAS. Since there were no duplicates across the databases, we did not deduplicate our records. To gather information on the number of trials providing information on random and nonrandom sampling and sample correction procedures, the metadata obtained for each registry were searched separately using natural language processing. We utilized a keyword search algorithm discussed with all authors that allowed us to capture text in all database fields. The same search strings were applied to all three databases. The search was confined to registrations in the English language and dated back to the earliest registrations in each database. Hence, the reported period ends in 2022 and begins in 2002 for clinicaltrials.gov, in 2009 for EU‐PAS, and in 2021 for OSF. Other limits, restrictions, or filters were not used. The database searches were conducted in November 2023 and were not subsequently updated. A table of the elements of the defined search strings can be found in Table S1.
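The keyword‐based classification of registry metadata described above can be sketched as follows. This is a simplified illustration using regular expressions, not the search algorithm used in this study: the search terms, field names, and example records are hypothetical stand‐ins, since the actual search string elements are listed in Table S1 and are not reproduced here.

```python
import re

# Hypothetical search terms; the actual search strings are given in Table S1.
# The nonrandom pattern is checked first so that "non-probability sample"
# is not misread as a probability (random) sample.
SAMPLING_PATTERNS = {
    "nonrandom": re.compile(r"non[\s-]?(random(ly)?|probability)\s+sampl", re.I),
    "random": re.compile(r"\b(random(ly)?|probability)\s+sampl", re.I),
}
CORRECTION_PATTERN = re.compile(
    r"\b(raking|post-?stratif|inverse probability weight)", re.I
)


def classify(record):
    """Return (sampling label, correction flag) for one registry record.

    record: dict of free-text metadata fields (field names are assumptions).
    All fields are concatenated so that text in any field can match.
    """
    text = " ".join(str(value) for value in record.values())
    sampling = "not reported"
    for label, pattern in SAMPLING_PATTERNS.items():
        if pattern.search(text):
            sampling = label
            break
    return sampling, bool(CORRECTION_PATTERN.search(text))


# Hypothetical example records.
records = [
    {"title": "Cohort A", "description": "Probability sample of insured adults."},
    {"title": "Cohort B", "description": "Non-probability sample; raking weights applied."},
    {"title": "Cohort C", "description": "Observational registry study."},
]
for record in records:
    print(record["title"], classify(record))
```

Searching the concatenation of all fields mirrors the requirement that text be captured in every database field, which matters for registries without a dedicated sampling field.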

RESULTS

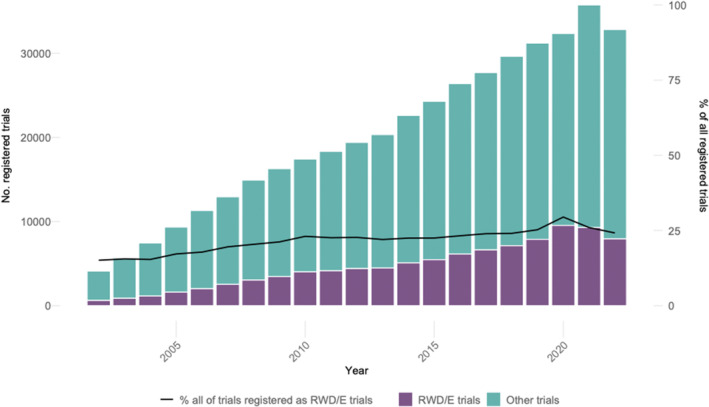

A total of 455,081 registrations were identified over the period from 2002 to 2022. Of these, 452,752 (99.49%) were registered on clinicaltrials.gov, 2275 (0.50%) on EU‐PAS, and 54 (0.01%) on OSF. As illustrated in Figure 1, the total yearly number of registered RWE trials increased from 622 in 2002 to 7939 in 2022. This represents a compound annual increase of 13.14%. The proportion of RWE trials among all registered trials rose from 15.12% (2002) to 24.19% (2022).

FIGURE 1.

Absolute numbers and shares of RWE trials among all registered trials. Sources: clinicaltrials.gov, OSF‐RWE & EU‐PAS registries.

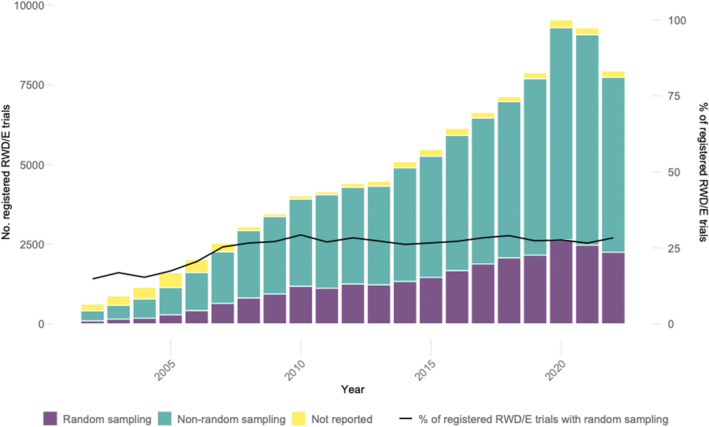

Similarly, as depicted in Figure 2, the share of registered RWE trials providing information on the sampling methods used among all RWE trials increased from 65.27% in 2002 to 97.43% in 2022. Furthermore, the yearly number of registered RWE trials that utilized random sampling increased from 92 in 2002 to 2247 in 2022, representing an average annual growth rate of 17.33%. Figure 2 also demonstrates that the share of RWE trials with random sampling among all RWE trials has remained relatively stable since 2007: the percentage of RWE trials with random sampling increased from 14.79% in 2002 to 25.31% in 2007, with smaller increases in the following years until 2022 (28.30%).

FIGURE 2.

Shares of trials reporting random or nonrandom sampling among all registered RWE trials. Sources: clinicaltrials.gov, OSF‐RWE & EU‐PAS registries.

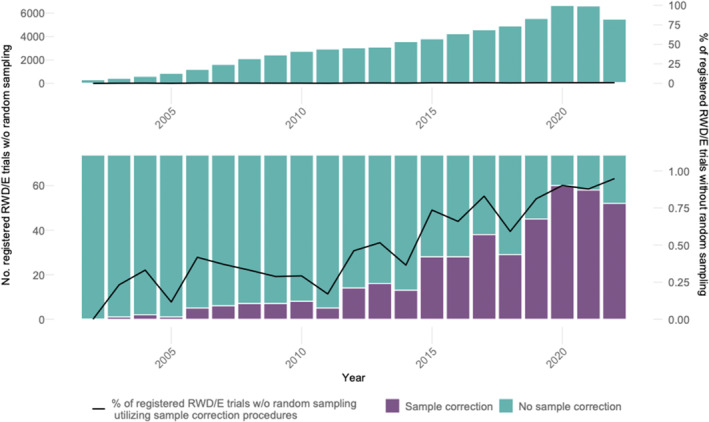

Figure 3 illustrates the increase in RWE trials with nonrandom sampling from 2002 to 2022. A visual inspection of the lower panel of Figure 3 reveals that the number of RWE trials with nonrandom sampling, in which sample correction procedures were planned, grew from 1 in 2003 to 52 in 2022, with an average annual growth rate of 23.12%. However, as the lower panel of Figure 3 also shows, shares of sample correction procedures applied in RWE trials with nonrandom sampling remained consistently low over the entire observation period: between 2002 and 2022, an increase from 0.00% to 0.95% was observed.

FIGURE 3.

Shares of trials reporting the (planned) use of sample correction procedures among all registered RWE trials with nonrandom samples. The lower panel illustrates a larger scale than the upper panel in order to depict the small shares of trials with sample correction methods. Sources: clinicaltrials.gov, OSF‐RWE, and EU‐PAS registries.
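The average annual growth rates reported above for random sampling (92 to 2247 trials, 17.33%) and for sample correction procedures (1 to 52 trials, 23.12%) are consistent with a compound annual growth rate computed from the first and last yearly counts. The following sketch shows this calculation; the helper name `cagr` is hypothetical, and treating the reported rates as compound rates is an assumption made for illustration.

```python
def cagr(first_count, last_count, first_year, last_year):
    """Compound annual growth rate in percent between two yearly counts."""
    periods = last_year - first_year
    return ((last_count / first_count) ** (1 / periods) - 1) * 100


# Counts and years as reported in the text.
random_sampling = cagr(92, 2247, 2002, 2022)   # RWE trials with random sampling
sample_correction = cagr(1, 52, 2003, 2022)    # nonrandom trials with correction

print(f"{random_sampling:.2f}%")
print(f"{sample_correction:.2f}%")
```

Note that the number of compounding periods is the difference between the last and the first year, so the second series spans 19 rather than 20 periods.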

Table 1 presents results categorized by trial registry. The results shown are aggregated over the entire observation period. Clinicaltrials.gov accounts for the largest share of registered RWE trials (97.77%), followed by EU‐PAS (2.17%) and OSF (0.05%). Additionally, the share of registrations of RWE trials with no information on the sampling methods used is lowest on clinicaltrials.gov (4.02% vs. 96.62% on EU‐PAS and 88.89% on OSF). The share of RWE trials that provide information on the use of random sampling methods is highest on clinicaltrials.gov (26.90%), compared with EU‐PAS (3.34%) and OSF (11.10%). Similar findings are observed for RWE trials with nonrandom sampling (69.08% on clinicaltrials.gov vs. <0.01% on EU‐PAS and 0.00% on OSF). In contrast, shares of RWE trials with nonrandom sampling and sample correction procedures remain consistently low across all three registries. On clinicaltrials.gov, the share amounts to 0.60%, while no such trials were registered on EU‐PAS or OSF (0.00% in both cases).

TABLE 1.

Shares of registered RWE trials reporting Random or Nonrandom Sampling and Sample Correction Procedures.

| | Clinicaltrials.gov | EU‐PAS | OSF |
|---|---|---|---|
| Registered RWE trials, n (%) | 102,471 (97.77%) | 2275 (2.17%) | 54 (0.05%) |
| RWE trials among registered RWE trials … | | | |
| reporting Random Sampling, n (%) | 27,562 (26.90%) | 76 (3.34%) | 6 (11.10%) |
| reporting Nonrandom Sampling, n (%) | 70,787 (69.08%) | 1 (<0.01%) | 0 (0.00%) |
| not reporting on Sampling, n (%) | 4122 (4.02%) | 2198 (96.62%) | 48 (88.89%) |
| RWE trials with Sample Correction Procedures among RWE trials reporting Nonrandom Sampling, n (%) | 423 (0.60%) | 0 (0.00%) | 0 (0.00%) |

Sources: clinicaltrials.gov, OSF‐RWE & EU‐PAS registries.

DISCUSSION

Over the observation period from 2002 to 2022, the share of RWE trials in all clinical trials has risen continuously and was just under a quarter during the last observation year. Thus, it can be assumed that some of the advantages of RWD over RCT data, such as information on health‐related behavior or patient‐reported outcomes, are used on a regular basis in the context of registered trials. However, this does not apply to the same extent to the generalizability of trial results, which is one of the key advantages of RWD: on the one hand, the share of registered RWE trials reporting on the sampling method employed also increased between 2002 and 2022 and was approaching 100% in 2022. On the other hand, this trend differs markedly depending on the registry considered: the rise in reported sampling methods is primarily due to clinicaltrials.gov's requirement for trial registrations to include information on the sampling method used. The absence of sampling information in 4.02% of RWE trials registered on clinicaltrials.gov is due to registrations made before the requirement to provide such information. In contrast, the share of RWE trials without information on sampling methods is considerably higher in EU‐PAS and OSF at 96.62% and 88.89%, respectively. In neither of these registries is the provision of information on sampling methods a prerequisite for registration.

When registering RWE trials on clinicaltrials.gov, it is further necessary to specify whether the trial to be registered is based on random sampling or whether the data are generated by nonrandom processes. Compared with trials registered on EU‐PAS and OSF, clinicaltrials.gov has a higher share of RWE trials with random sampling (26.90% vs. 3.34% and 11.10%). At a comparatively low level, a similar pattern can be observed for the use of sample correction procedures in nonrandomized RWE trials. The increase to 0.95% in 2022 results from the fact that sample correction procedures have so far only been used or planned for RWE trials with nonrandom sampling registered on clinicaltrials.gov (0.60% vs. 0.00% and 0.00%). One explanation for the higher share of RWE trials with generalizable results on clinicaltrials.gov may be that the mandatory information on sampling methods leads to an increased awareness among researchers of the limited generalizability associated with nonrandom samples. This may, in turn, lead to greater attention to the generalizability of results in the design and conduct of more RWE trials. This would provide an approach for a cross‐registry policy to improve the generalizability of RWE trials. However, these hypotheses cannot be tested with the data available for this study. Data suitable for testing the hypotheses should be collected in future prospective studies.

Currently, just under a third of registered RWE trials use either random sampling or sample correction methods in order to increase generalizability. This means that one of the great potentials of RWD remains untapped in the majority of cases. As mentioned at the beginning, this may also be due to the fact that RWD is not necessarily used with the primary aim of increasing generalizability. However, current calls to improve generalizability 12 refer to all forms of RWE trials in addition to RCTs. Thus, when conducting RWE trials, random sampling should be used more than in the past or, if this is not possible, sample correction procedures should be applied. To this end, when registering RWE trials, registries and regulatory authorities should take measures to ensure the highest possible generalizability across registries. Further measures should also be taken to verify that the chosen approaches for sampling or sample correction are the most appropriate for specific trial demands. In addition to mandatory reporting of sampling procedures, the information provided as part of trial registrations could be subject to peer review with a checklist including details of the sampling strategy. Based on this procedure, the selection of a different sampling strategy or adjustments for non‐probability samples could be recommended, if necessary. By defining appropriate procedures at an early stage, the generalizability of results in RWE trials can be significantly improved.

LIMITATIONS

This study has several limitations. First, despite a thorough search, registries other than clinicaltrials.gov, EU‐PAS, and OSF may have been missed. This may have led to the omission of some publicly available registered RWE trials. Second, due to the large number of records identified, not all trial metadata could be screened manually. As a result, some descriptions in the text fields may not have been considered. For instance, clinicaltrials.gov not only requires the reporting of sampling methods, but also provides a specific field for this information. In contrast, the OSF and EU‐PAS registries do not have a specific field for sampling methods. For this reason, researchers can only deliberately report sampling methods used in open text fields for trial descriptions. Due to the use of natural language processing to extract information, we may have overlooked some trials where sampling approaches were described using different terminology. However, the search was carried out after the selection of keywords had been reviewed by a larger group of scientific peers. Thus, the risk of omission is considered low. Third, the ability or need to generalize the results of RWE trials arises only if the study population represents a subset of the population of interest. If the study population represents the entire population of interest, the results of the study cannot or need not be generalized. Thus, the results of a share of those trials for which sampling methods are not reported may apply to the entire population of interest. In these cases, there is no possibility or need to generalize results. We assume that the share of trials in question is small because in the vast majority of trials, a complete survey of all individuals in a population of interest is not feasible. 10 , 27

CONCLUSIONS

Overall, there is room for improvement in the generalizability of RWE trials. However, it should be noted that randomized selection of study participants is often difficult to implement in RWE trials. The policy measures suggested in the discussion section above should therefore be further complemented by trust‐building measures that increase participation in trials and the willingness to share health data. Moreover, access to registries and claims data should be facilitated in order to simplify the definition of reference populations for sampling or the well‐guided selection of sample correction procedures. Widespread implementation of these measures will ensure that therapies are better adapted to the needs of patient groups that are currently under‐represented in trials, thereby leading to further improvements in evidence‐based care.

AUTHOR CONTRIBUTIONS

A‐F.N., M.K., and F.B. wrote the manuscript; A‐F.N. designed the research; A‐F.N. and M.S‐A. performed the research; M.K. analyzed the data.

FUNDING INFORMATION

No funding was received for this work.

CONFLICT OF INTEREST STATEMENT

FB reports grants from the Hans Böckler Foundation, the Einstein Foundation, and the Berlin University Alliance; travel expenses covered by the German Society for Anesthesia and Intensive Care Medicine and the Robert Koch Institute; royalties from Elsevier; consulting fees from Medtronic; and honoraria from GE Healthcare, outside of the submitted work. All other authors declared no competing interests in this work.

Supporting information

Table S1.

ACKNOWLEDGMENTS

Open Access funding enabled and organized by Projekt DEAL.

Näher A‐F, Kopka M, Balzer F, Schulte‐Althoff M. Generalizability in real‐world trials. Clin Transl Sci. 2024;17:e13886. doi: 10.1111/cts.13886

Anatol‐Fiete Näher and Marvin Kopka shared first authorship.

REFERENCES

- 1. US Food & Drug Administration. Real‐World Evidence. FDA; 2023. Available from: https://www.fda.gov/science-research/science-and-research-special-topics/real-world-evidence [accessed Jan 8, 2024]

- 2. Liu F, Panagiotakos D. Real‐world data: a brief review of the methods, applications, challenges and opportunities. BMC Med Res Methodol. 2022;22(1):287.

- 3. Näher AF, Vorisek CN, Klopfenstein SA, et al. Secondary data for global health digitalisation. Lancet Digital Health. 2023;5(2):e93‐e101.

- 4. Sherman RE, Anderson SA, Dal Pan GJ, et al. Real‐world evidence – what is it and what can it tell us. N Engl J Med. 2016;375(23):2293‐2297.

- 5. Booth CM, Tannock IF. Randomised controlled trials and population‐based observational research: partners in the evolution of medical evidence. Br J Cancer. 2014;110(3):551‐555.

- 6. Dang A. Real‐world evidence: a primer. Pharmaceut Med. 2023;37(1):25‐36.

- 7. Maissenhaelter BE, Woolmore AL, Schlag PM. Real‐world evidence research based on big data: motivation – challenges – success factors. Onkologe. 2018;24:378‐389.

- 8. Kennedy‐Martin T, Curtis S, Faries D, Robinson S, Johnston J. A literature review on the representativeness of randomized controlled trial samples and implications for the external validity of trial results. Trials. 2015;16:1‐14.

- 9. Bell SH, Olsen RB, Orr LL, Stuart EA. Estimates of external validity bias when impact evaluations select sites nonrandomly. Educ Eval Policy Anal. 2016;38(2):318‐335.

- 10. Degtiar I, Rose S. A review of generalizability and transportability. Annual Review of Statistics and its Application. 2023;10:501‐524.

- 11. National Academies of Sciences, Engineering, and Medicine. Improving Representation in Clinical Trials and Research: Building Research Equity for Women and Underrepresented Groups. National Academies Press; 2022.

- 12. Schwartz AL, Alsan M, Morris AA, Halpern SD. Why diverse clinical trial participation matters. N Engl J Med. 2023;388(14):1252‐1254.

- 13. Valliant R. Poststratification and conditional variance estimation. J Am Stat Assoc. 1993;88(421):89‐96.

- 14. Horvitz DG, Thompson DJ. A generalization of sampling without replacement from a finite universe. J Am Stat Assoc. 1952;47:663‐685.

- 15. Angrist J, Fernandez‐Val I. Extrapolate‐Ing: External Validity and Overidentification in the Late Framework (No. w16566). National Bureau of Economic Research; 2010.

- 16. Heckman JJ. Sample selection bias as a specification error. Econometrica. 1979;47:153‐161.

- 17. Lewbel A. Endogenous selection or treatment model estimation. J Econ. 2007;141(2):777‐806.

- 18. Cortes C, Mohri M, Riley M, Rostamizadeh A. Sample selection bias correction theory. International Conference on Algorithmic Learning Theory. Springer Berlin Heidelberg; 2008:38‐53.

- 19. Dahabreh IJ, Robertson SE, Tchetgen EJ, Stuart EA, Hernán MA. Generalizing causal inferences from individuals in randomized trials to all trial‐eligible individuals. Biometrics. 2019;75(2):685‐694.

- 20. Dahabreh IJ, Robertson SE, Steingrimsson JA, Stuart EA, Hernan MA. Extending inferences from a randomized trial to a new target population. Stat Med. 2020;39(14):1999‐2014.

- 21. Seifu Y, Gamalo‐Siebers M, Barthel FMS, et al. Real‐world evidence utilization in clinical development reflected by US product labeling: statistical review. Ther Innov Regul Sci. 2020;54:1436‐1443.

- 22. Sola‐Morales O, Curtis LH, Heidt J, et al. Effectively leveraging RWD for external controls: a systematic literature review of regulatory and HTA decisions. Clin Pharmacol Therapeut. 2023;114(2):325‐355.

- 23. Arondekar B, Duh MS, Bhak RH, et al. Real‐world evidence in support of oncology product registration: a systematic review of new drug application and biologics license application approvals from 2015–2020. Clin Cancer Res. 2022;28(1):27‐35.

- 24. Haas J, Meise D, Braun S. HPR56 the rise of real‐world evidence (RWE) in EU marketing authorization decisions–past, present, and future. Value Health. 2022;25(12):S242‐S243.

- 25. Wang SV, Pottegård A, Crown W, et al. HARmonized protocol template to enhance reproducibility of hypothesis evaluating real‐world evidence studies on treatment effects: a good practices report of a joint ISPE/ISPOR task force. Value Health. 2022;25(10):1663‐1672.

- 26. Orsini LS, Berger M, Crown W, et al. Improving transparency to build trust in real‐world secondary data studies for hypothesis testing – why, what, and how: recommendations and a road map from the real‐world evidence transparency initiative. Value Health. 2020;23(9):1128‐1136.

- 27. Blonde L, Khunti K, Harris SB, Meizinger C, Skolnik NS. Interpretation and impact of real‐world clinical data for the practicing clinician. Adv Ther. 2018;35:1763‐1774.
