Key words: artificial intelligence, coronary angiography, coronary artery disease, coronary physiology

Coronary angiography (CAG) was pioneered in the 1950s by Eduardo Coelho (who performed the first nonselective in vivo CAG in 1952 at the Santa Marta Hospital in Lisbon, Portugal) and Frank Mason Sones Jr (who performed the first selective CAG in vivo in 1958 at the Cleveland Clinic in Ohio, USA). Sones further developed the technique by contributing to the development of the C-arm, enabling multiangular views and a comprehensive assessment of coronary artery anatomy and disease. This approach remains essentially the same today.

When interpreting CAG images, an essential step is the assessment of the severity/significance of coronary lesions, paramount for considering revascularization. Furthermore, the presence of significant lesions increases the risk of cardiovascular events and symptoms, rendering optimal medical therapy mandatory for addressing both.

In everyday practice, the severity of a lesion is primarily assessed by operators as the percentage diameter stenosis (DS), visually estimated by comparing the diameter in a stenosed region with that of a normal reference segment. This “age-old” method has also been adopted in clinical trials for decades. Indeed, from the seminal CASS (Coronary Artery Surgery Study) trial,1 conducted during the late 1970s and early 1980s (which defined a lesion as significant if DS ≥70% in any main vessel or ≥50% in the left main), to the more contemporary ISCHEMIA (International Study of Comparative Health Effectiveness with Medical and Invasive Approaches) trial2 (which used a DS ≥50% cutoff), and in virtually every major revascularization trial, visual estimation of percentage DS has remained the cornerstone of initial lesion severity assessment, either as the criterion for proceeding with revascularization or for considering it (with the additional presence of symptoms or demonstration of ischemia by either invasive or noninvasive methods), regardless of the revascularization modality (percutaneous coronary intervention or coronary artery bypass surgery) or clinical context (acute vs chronic coronary syndromes). This clearly illustrates the ubiquity of the approach.
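As a concrete illustration of the quantity being estimated, percentage DS can be written as a short calculation. The sketch below is a minimal one, assuming that both diameters are available in millimeters (which visual estimation, by definition, does not provide); the function names are hypothetical, and the thresholds are simply the CASS cutoffs quoted above.

```python
def percent_diameter_stenosis(lesion_diameter_mm: float,
                              reference_diameter_mm: float) -> float:
    """Percentage DS: narrowing of the lesion relative to a normal reference segment."""
    if reference_diameter_mm <= 0:
        raise ValueError("reference diameter must be positive")
    if not 0 <= lesion_diameter_mm <= reference_diameter_mm:
        raise ValueError("lesion diameter must lie between 0 and the reference diameter")
    return 100.0 * (reference_diameter_mm - lesion_diameter_mm) / reference_diameter_mm


def is_significant_cass(ds_percent: float, left_main: bool) -> bool:
    """CASS-style cutoffs as quoted in the text: >=70% in a main vessel, >=50% in the left main."""
    threshold = 50.0 if left_main else 70.0
    return ds_percent >= threshold


# Illustrative values only, not patient data.
ds = percent_diameter_stenosis(lesion_diameter_mm=0.75, reference_diameter_mm=3.0)
print(ds)                                        # 75.0
print(is_significant_cass(ds, left_main=False))  # True
```

The arithmetic is trivial; the difficulty discussed in this paper lies in obtaining the two diameters reproducibly, which is exactly what visual estimation does not guarantee.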

Consequently, current guidelines follow suit, adopting the visual estimation of percentage DS as the first step for either considering revascularization or proceeding with further testing, despite recognizing its limitations.3 A DS ≥70% (≥50% if left main) threshold is suggested for considering revascularization, especially in contexts where further testing may be limited (such as valvular heart disease). Guidelines also define intermediate lesions based on visual DS estimation (U.S. revascularization guidelines consider a DS of 50%-70% to be intermediate3).

All of the above considerations clearly reflect how widespread and persistent the use of visual percentage DS estimation remains, in both clinical practice and research. This method is, however, not without problems. Because no exact measurement is actually undertaken, it is prone to interoperator variability. Both interventional and noninterventional cardiologists often find themselves in disagreement with regard to how severe a lesion is when reviewing CAG images. In fact, studies from as early as the 1970s to relatively recent ones have consistently confirmed not only that there is significant heterogeneity in the visual assessment of percentage DS but also that operators mostly tend to overestimate the severity of lesions. The term “oculostenotic reflex,” as coined by Eric Topol, is often used to describe this phenomenon.

Given these limitations, other methods for assessing the severity of the disease emerged. Quantitative coronary analysis (QCA) software was developed, enabling actual measurement of percentage DS based on angiographic images. Coronary physiology, namely fractional flow reserve (FFR), was also developed. Intracoronary imaging, either with intravascular ultrasound (IVUS) or optical coherence tomography, was also an important innovation. But while multiple randomized trials have shown that both physiology and imaging can improve patient outcomes, the same cannot be said of QCA, as no meaningful trials have shown that its use has a major clinical impact. Furthermore, QCA is semiautomatic rather than fully automatic, as existing software still requires some level of manual input. Hence, it is not routinely used in clinical practice. And despite the benefits of physiology and imaging, both require additional invasive steps (using a guide catheter, wiring the target vessel) as well as additional costs, and are therefore persistently underused.

Thus, it is no surprise that the primary method of assessing the severity of coronary artery disease based on invasive CAG images (visual estimation) has hardly changed since its inception in the 1950s, despite its well-recognized limitations. Is it not time this persistent conundrum is finally overcome? We believe artificial intelligence (AI) can and should be a game changer in this setting.

The application of AI to CAG interpretation can be conceptualized in several forms. Arguably, the first step would be to correctly separate the coronary tree from noise (ie, the background). This might be achieved by means of exact demarcation of the arteries (semantic segmentation) or, more broadly, by lesion identification (a bounding box object detection method). The second step would be to assist in interpreting the findings and, especially, to improve the ability of operators to assess the severity of lesions, be it by objectively measuring the percentage DS or, perhaps more interestingly, by directly deriving physiology or other insights from CAG images alone.

The majority of published papers regarding the application of AI to CAG are technical and come from engineering repositories, focusing primarily on segmentation. There have been, however, some notable publications in medical journals. Du et al4 developed an AI segmentation model trained with a large data set of 13,373 images. Using pixel overlap as reference, the authors reported a segmentation accuracy of 98.4%. The models were also able to correctly identify thrombus, calcium, and lesion location by using bounding boxes, adding to the array of potential uses of an AI model in assisting with CAG interpretation. Notwithstanding, lesion severity was not addressed by this algorithm. Furthermore, the quality of the segmentation was not evaluated from a clinical perspective. Indeed, if the system missed a critical part of the coronary tree, for instance by failing to segment the left main or the proximal left anterior descending artery, very high overlap accuracy would still be achieved, yet a major flaw, from a clinical point of view, would occur.
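The point about overlap metrics can be made with a toy example. The following is a minimal sketch, assuming that "pixel overlap" means plain per-pixel agreement between a predicted and a reference binary mask (the cited study may define it differently); the masks, sizes, and function names are invented for illustration and do not come from any of the cited models.

```python
def _pairs(truth, pred):
    """Flatten two equally sized binary masks into (truth, prediction) pixel pairs."""
    return [(t, p) for t_row, p_row in zip(truth, pred) for t, p in zip(t_row, p_row)]


def pixel_accuracy(truth, pred):
    """Fraction of pixels on which the two masks agree (background included)."""
    pairs = _pairs(truth, pred)
    return sum(t == p for t, p in pairs) / len(pairs)


def dice(truth, pred):
    """Dice coefficient computed on vessel pixels only."""
    pairs = _pairs(truth, pred)
    total = sum(t + p for t, p in pairs)
    return 2 * sum(t * p for t, p in pairs) / total if total else 1.0


def recall_in_rows(truth, pred, rows):
    """Share of true vessel pixels that were recovered within the given rows."""
    vessel = [pred[r][c] for r in rows for c in range(len(truth[r])) if truth[r][c]]
    return sum(vessel) / len(vessel)


SIZE = 100
# Toy "vessel": a one-pixel-wide vertical line in a 100 x 100 image.
truth = [[1 if col == 50 else 0 for col in range(SIZE)] for _ in range(SIZE)]
# Toy prediction: identical, except that the first 20 rows (the "proximal segment") are missed.
pred = [row[:] for row in truth]
for r in range(20):
    pred[r][50] = 0

print(round(pixel_accuracy(truth, pred), 3))     # 0.998
print(round(dice(truth, pred), 3))               # 0.889
print(recall_in_rows(truth, pred, range(20)))    # 0.0
```

Because the background dominates the image, agreement stays near 100% even though the entire proximal segment is absent, which is why a clinically oriented quality check is needed in addition to an overlap metric.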

We too have developed an AI model capable of accurate CAG segmentation. In the process, we also developed and validated a method, the Global Segmentation Score, to assess how well CAG segmentation is performed from a clinical perspective, ensuring that, in addition to a high pixel overlap, no such major flaws in the coronary tree segmentation are present. Through external validation with data from four different centers, across a wide range of stenosis severities, target vessels, operators, and equipment, we have shown that fully automatic AI segmentation of CAG images is both feasible and reliable.5

Regarding the assessment of the severity of coronary lesions, especially percentage DS, almost no AI studies have been published. Notwithstanding, fully automatic QCA systems have been described, with reasonable correlation with state-of-the-art systems (Pearson's r = 0.765).6 Other authors have created models capable of identifying lesions based on bounding boxes, either with a percentage DS ≥70% as assessed by QCA or of any severity.7

In addition to directly measuring the severity of stenosis, AI may also impact operators’ visual estimation of percentage DS, by enhancing or segmenting CAG images in a fully automatic fashion (ie, with zero human input other than the image itself). We have recently demonstrated that converting the gray-scale image to a black (background) and white (artery) one with AI segmentation models renders the interpretation more objective, by very clearly demarcating the vessel lumen. This simple approach significantly reduced interoperator variability, namely overestimation of lesion severity.8
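The conversion to a black and white image can be pictured as a thresholding step applied to the output of a segmentation model. The sketch below is purely illustrative: it assumes the model returns a per-pixel probability of belonging to an artery, and the 0.5 threshold and the function name are assumptions rather than details of the cited study.

```python
def binarize(probability_map, threshold=0.5):
    """Map per-pixel artery probabilities to white (255, artery) or black (0, background)."""
    return [[255 if p >= threshold else 0 for p in row] for row in probability_map]


# Toy 2 x 3 probability map, not real model output.
toy_probabilities = [
    [0.02, 0.91, 0.10],
    [0.05, 0.88, 0.49],
]
print(binarize(toy_probabilities))  # [[0, 255, 0], [0, 255, 0]]
```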

Another important issue would be the derivation of physiology from CAG images alone. There already is software with this capability, but it uses mostly non-AI methods. Current systems have been developed to derive FFR, as it remains the most consensual physiology tool in clinical practice, with the largest evidence base. While several studies (and even a large clinical trial) have reported impressive results, very few papers regarding the derivation of physiology from CAG images using solely AI methods have been published. Since AI has consistently outperformed traditional computational methods in many areas, one might argue that an AI approach to this particular goal should at least be attempted.

Recently, one group developed an AI FFR derivation software (Autocath FFR). The authors published a small pilot study with only 31 patients and have since conducted a larger multicenter validation study, with 304 vessels from 297 patients, reporting a 94% accuracy.9 The software is capable of deriving the FFR value from images alone. The training database, according to the authors, consisted of more than 13,000 invasive coronary angiogram procedures and 1500 FFR measurements, from centers in Israel, the United States, Japan, and India. Labeling was performed with QCA, and off-label FFR measurements were excluded. No further details have been disclosed, namely regarding the AI training architecture or the clinical data of the training data set.9 Another group has developed a binary FFR classifier (using the 0.80 FFR cutoff) with 82% accuracy, also using solely AI methods.10
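For readers less familiar with how such accuracy figures arise, the sketch below shows how angiography-derived FFR values could be dichotomized at the 0.80 cutoff and compared with invasive FFR. It is a minimal illustration under stated assumptions: values of 0.80 or below are treated as positive (the cited papers may handle the boundary differently), the function names are hypothetical, and the five paired values are invented and unrelated to any study.

```python
FFR_CUTOFF = 0.80  # Cutoff quoted in the text; treating "<=" as positive is an assumption.


def is_positive(ffr: float) -> bool:
    """Dichotomize an FFR value at the cutoff."""
    return ffr <= FFR_CUTOFF


def diagnostic_metrics(derived_ffr, invasive_ffr):
    """Accuracy, sensitivity, and specificity of derived FFR against invasive FFR."""
    tp = fp = tn = fn = 0
    for derived, invasive in zip(derived_ffr, invasive_ffr):
        predicted, actual = is_positive(derived), is_positive(invasive)
        if predicted and actual:
            tp += 1
        elif predicted and not actual:
            fp += 1
        elif not predicted and actual:
            fn += 1
        else:
            tn += 1
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else None,
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        "specificity": tn / (tn + fp) if (tn + fp) else None,
    }


# Invented paired values for illustration only.
derived = [0.72, 0.85, 0.79, 0.91, 0.83]
invasive = [0.75, 0.88, 0.82, 0.90, 0.78]
print(diagnostic_metrics(derived, invasive)["accuracy"])  # 0.6
```

Accuracy alone can hide an imbalance between false positives and false negatives, particularly for lesions close to the cutoff, so sensitivity and specificity are reported alongside it here.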

To our knowledge, no publications regarding AI models capable of deriving other indexes are yet available. Instantaneous wave-free ratio would be particularly interesting, given that its noninferiority to FFR has been demonstrated in two major randomized outcomes trials and that it is commonly used in many catheterization laboratories worldwide. One trial (REVEAL iFR [Radiographic Imaging Validation and EvALuation for Angio iFR]) has reportedly explored this approach, and published results are awaited. We too have developed AI models capable of binary instantaneous wave-free ratio classification from CAG images, which greatly outperform human operators; these results will be published soon (article in press).

The traditional limitations of AI will, of course, apply to this particular application as well. “Big data” in Medicine is often constrained by privacy and medical privilege. Hence, training data sets are often much smaller than in other contexts, potentially limiting performance. Furthermore, data annotation can be exceedingly cumbersome and time-consuming, and physicians can seldom take part in such tasks. Also, the exact mechanism by which many AI systems function is not always entirely understood, raising ethical concerns as to their application in clinical practice.

Given the ever-expanding capabilities of AI, which is increasingly becoming multimodal, the use of AI in Medicine is both exciting and daunting, as overreliance on AI may paradoxically reduce human capabilities of autonomous medical assessment and decision-making. In spite of all this, the potential of AI remains enormous, and a responsible pursuit of this technology is likely the most plausible and sensible course of action, as the AI “train” seems unstoppable at this point.

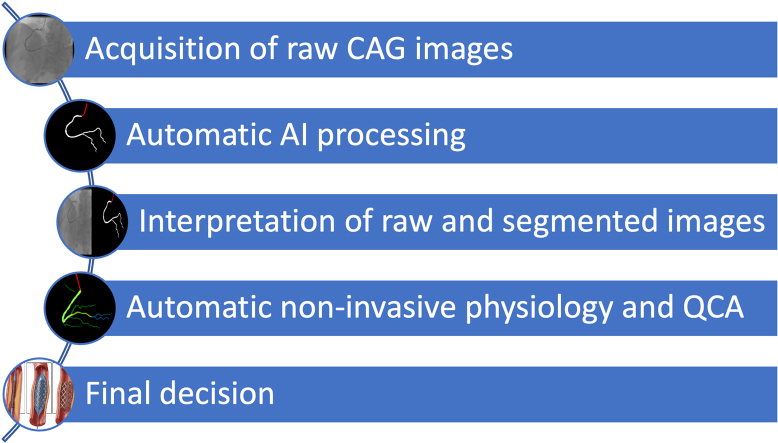

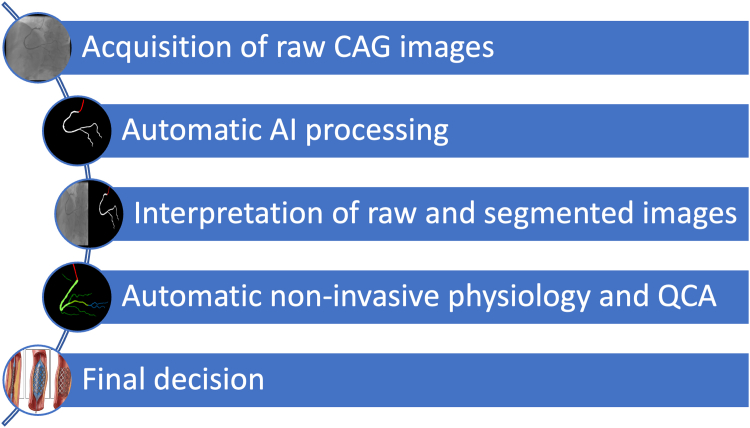

In conclusion, further research is necessary for developing and deploying AI tools capable of effectively assisting in the interpretation of CAG images. Notwithstanding, it seems the persistent conundrum of “eyeballing” CAG images may finally be brought to an end, as the Digital Revolution and Artificial Intelligence transport this seminal technique to the 21st century. We believe a modern workflow in the cath lab will consist of acquiring raw CAG images as usual, which will then be fully automatically processed by AI, including segmentation, QCA, and physiology calculations (Figure 1). New, currently unknown, insights may also be discovered by AI methods. This approach may, hopefully, render CAG interpretation and subsequent therapeutic decisions far more objective, thereby improving outcomes and reducing costs. Time will tell whether the promise of AI will come true, but as active researchers in the field, we remain both optimistic and committed to this important endeavor.

Figure 1. A Proposed Workflow for an AI-Assisted CAG System in the Future

AI = artificial intelligence; CAG = coronary angiography; QCA = quantitative coronary analysis.
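The proposed workflow can also be expressed as a simple chain of processing stages. The sketch below is only a structural outline under stated assumptions: every stage is a placeholder callable supplied by the caller, the names `CagReport` and `run_workflow` are hypothetical, and the stub stages in the usage example return fixed values rather than performing any real analysis.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Image = List[List[int]]  # Gray-scale angiographic frame (placeholder representation).
Mask = List[List[int]]   # Binary segmentation mask: 1 = artery, 0 = background.


@dataclass
class CagReport:
    mask: Mask
    percent_ds: float
    physiology_index: Optional[float]  # For example, a derived FFR value, if available.


def run_workflow(
    image: Image,
    segment: Callable[[Image], Mask],
    measure_ds: Callable[[Mask], float],
    derive_physiology: Callable[[Image, Mask], Optional[float]],
) -> CagReport:
    """Run segmentation, automatic QCA, and physiology derivation with no manual input."""
    mask = segment(image)
    percent_ds = measure_ds(mask)
    physiology_index = derive_physiology(image, mask)
    return CagReport(mask=mask, percent_ds=percent_ds, physiology_index=physiology_index)


# Usage with stub stages (hypothetical; real stages would be trained models).
frame = [[10, 200, 12], [11, 190, 9]]
report = run_workflow(
    frame,
    segment=lambda img: [[1 if px > 128 else 0 for px in row] for row in img],
    measure_ds=lambda mask: 0.0,                # Stub: no stenosis measured.
    derive_physiology=lambda img, mask: None,   # Stub: physiology not derived.
)
print(report.mask)  # [[0, 1, 0], [0, 1, 0]]
```

Keeping each stage as an independent, replaceable component reflects the idea that segmentation, QCA, and physiology models may be developed and validated separately.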

Funding support and author disclosures

The authors have reported that they have no relationships relevant to the contents of this paper to disclose.

Footnotes

The authors attest they are in compliance with human studies committees and animal welfare regulations of the authors’ institutions and Food and Drug Administration guidelines, including patient consent where appropriate.

References

- 1. Coronary artery surgery study (CASS): a randomized trial of coronary artery bypass surgery. Survival data. Circulation. 1983;68(5):939–950. doi: 10.1161/01.cir.68.5.939.

- 2. Maron D.J., Hochman J.S., Reynolds H.R., et al. Initial invasive or conservative strategy for stable coronary disease. N Engl J Med. 2020;382(15):1395–1407. doi: 10.1056/NEJMoa1915922.

- 3. Lawton J.S., Tamis-Holland J.E., Bangalore S., et al. 2021 ACC/AHA/SCAI guideline for coronary artery revascularization: a report of the American College of Cardiology/American Heart Association Joint Committee on Clinical Practice Guidelines. J Am Coll Cardiol. 2022;79(2):e21–e129. doi: 10.1016/j.jacc.2021.09.006.

- 4. Du T., Xie L., Zhang H., et al. Training and validation of a deep learning architecture for the automatic analysis of coronary angiography. EuroIntervention. 2021;17(1):32–40. doi: 10.4244/EIJ-D-20-00570.

- 5. Nobre Menezes M., Silva J.L., Silva B., et al. Coronary X-ray angiography segmentation using Artificial Intelligence: a multicentric validation study of a deep learning model. Int J Cardiovasc Imag. 2023;2023:1–12. doi: 10.1007/s10554-023-02839-5.

- 6. Liu X., Wang X., Chen D., Zhang H. Automatic Quantitative coronary analysis based on deep learning. Appl Sci. 2023;13(5):2975.

- 7. Avram R., Olgin J.E., Ahmed Z., et al. CathAI: fully automated coronary angiography interpretation and stenosis estimation. NPJ Digital Medicine. 2023;6(1):1–12. doi: 10.1038/s41746-023-00880-1.

- 8. Nobre Menezes M., Silva B., Silva J.L., et al. Segmentation of X-ray coronary angiography with an artificial intelligence deep learning model: impact in operator visual assessment of coronary stenosis severity. Catheter Cardiovasc Interv. 2023;102(4):631–640. doi: 10.1002/ccd.30805.

- 9. Ben-Assa E., Abu S.A., Cafri C., et al. Performance of a novel artificial intelligence software developed to derive coronary fractional flow reserve values from diagnostic angiograms. Coron Artery Dis. 2023;34(8):533–541. doi: 10.1097/MCA.0000000000001305.

- 10. Cho H., Lee J.G., Kang S.J., et al. Angiography-based machine learning for predicting fractional flow reserve in intermediate coronary artery lesions. J Am Heart Assoc. 2019;8(4). doi: 10.1161/JAHA.118.011685.