Abstract

Linking sensory input and its consequences is a fundamental brain operation. During behavior, the neural activity of neocortical and limbic systems often reflects dynamic combinations of sensory and task-dependent variables, and these “mixed representations” are suggested to be important for perception, learning, and plasticity. However, the extent to which such integrative computations might occur outside of the forebrain is less clear. Here, we conduct cellular-resolution two-photon Ca2+ imaging in the superficial “shell” layers of the inferior colliculus (IC), as head-fixed mice of either sex perform a reward-based psychometric auditory task. We find that the activity of individual shell IC neurons jointly reflects auditory cues, mice's actions, and behavioral trial outcomes, such that trajectories of neural population activity diverge depending on mice's behavioral choice. Consequently, simple classifier models trained on shell IC neuron activity can predict trial-by-trial outcomes, even when training data are restricted to neural activity occurring prior to mice's instrumental actions. Thus, in behaving mice, auditory midbrain neurons transmit a population code that reflects a joint representation of sound, actions, and task-dependent variables.

Keywords: calcium imaging, inferior colliculus, mixed selectivity, mouse, population analysis

Significance Statement

Neurons in superficial “shell” layers of the inferior colliculus (IC) preferentially project to higher-order thalamic nuclei that are strongly activated by sounds and their consequences, thereby combining sensory and task-dependent information. This sensory–behavior integration is thought critical for a variety of behaviorally relevant functions, such as establishing learned sound valence. However, whether such “mixed representations” reflect unique properties of thalamocortical networks, or rather are present in other areas, is unclear. We show that in behaving mice, many shell IC neurons are modulated by sounds and mice's actions. Consequently, shell IC population activity suffices to predict trial outcomes prior to the rewarded action. Our data thus establish shell IC nuclei as a novel locus of behaviorally relevant mixed representations.

Introduction

Choosing the appropriate behavioral response to appetitive or aversive stimuli confers a survival advantage. To achieve this, neural circuits must be capable of linking external sensations, instrumental actions, and their behaviorally relevant consequences. One solution is for distinct sensory and behaviorally relevant pathways to converge upon a common target region, thereby enabling postsynaptic ensembles to jointly encode sensations and their consequences, such as reward, punishment, or goal-directed actions. Indeed, such “mixed selectivity” to sensory and behavioral variables is well documented in the thalamus (Ryugo and Weinberger, 1978; Komura et al., 2001; Hu, 2003; Jaramillo et al., 2014; L. Chen et al., 2019; Gilad et al., 2020) and neocortex and might contribute to the computational power of these high-level circuits (Rigotti et al., 2013; Naud and Sprekeler, 2018; Stringer et al., 2019; Parker et al., 2020; Saxena et al., 2022). However, whether such joint representations reflect unique integrative computations of the thalamocortical system, or can be inherited from afferent inputs, is unknown.

The inferior colliculus (IC) is a midbrain hub that transmits most auditory signals to the forebrain (Aitkin et al., 1981; Aitkin and Phillips, 1984; LeDoux et al., 1985; Coleman and Clerici, 1987; LeDoux et al., 1987). It is subdivided into primary central and surrounding dorsomedial and lateral “shell” nuclei whose neurons preferentially project to the primary and higher-order medial geniculate body (MGB) of the thalamus, respectively (Winer et al., 2002; Mellott et al., 2014; C. Chen et al., 2018). Interestingly, lesions to the shell IC or their afferent inputs do not cause central deafness but rather seemingly impair certain forms of learned auditory associations (Jane et al., 1965; Bajo et al., 2010). In tandem with their anatomical connectivity to nonlemniscal thalamic regions, these results suggest that shell IC neurons may be involved in higher-order auditory processing and learned sound valence.

Accordingly, prior studies show that neurons across many IC subregions respond differently to sound depending on task engagement (A. Ryan and Miller, 1977; A. F. Ryan et al., 1984; Slee and David, 2015; De Franceschi and Barkat, 2021; Shaheen et al., 2021), locomotion (C. Chen and Song, 2019; Yang et al., 2020), eye position (Groh et al., 2001; Porter et al., 2006), and expectation of appetitive or aversive reinforcers (Halas et al., 1970; Olds et al., 1972; Disterhoft and Stuart, 1976, 1977; Kettner and Thompson, 1985; Gao and Suga, 1998; Metzger et al., 2006; Lockmann et al., 2017). One possible explanation is that these prior results largely reflect a nonspecific, arousal-mediated scaling of acoustic responses owing to task engagement, movement, or behavioral sensitization (Ji and Suga, 2009; Saderi et al., 2021). An alternative hypothesis is that such modulation instead reflects the integration of sound cues with task-dependent signals related to behavioral outcomes or goal-directed actions. Interestingly, higher-order MGB neurons jointly encode combined sound and behavioral outcome signals, which may serve important learning-related functions (Ryugo and Weinberger, 1978; Mogenson et al., 1980; Edeline and Weinberger, 1992; McEchron et al., 1995; Schultz et al., 2003; Taylor et al., 2021). However, whether such joint coding of acoustic and task-dependent signals is also present in afferent inputs from shell IC neurons is unknown.

Here, we test the hypothesis that shell IC neurons integrate acoustic and task-dependent signals, thereby transmitting information that predicts the outcome of behavioral trials. To this end, we recorded from shell IC neuron populations using two-photon Ca2+ imaging, as head-fixed mice engaged in a challenging, psychometric auditory Go/No-Go task. We report that shell IC neurons jointly encode sound- and trial outcome–dependent information, thereby generating population activity patterns that predict mice's instrumental choice on a trial-to-trial basis. Thus, the auditory midbrain broadcasts a powerful mixed representation of sound and outcome signals, which could support some of the integrative, sensory-guided decisions often attributed to the thalamocortical system.

Materials and Methods

Animal subjects and handling

All procedures were approved by the University of Michigan's Institutional Animal Care and Use Committee and conducted in accordance with the NIH's Guide for the Care and Use of Laboratory Animals and the Declaration of Helsinki. Adult CBA/CaJ x C57BL/6J mice were used in this study (n = 11, 5 females, 70–84 d postnatal at the time of surgery). These hybrids do not share the Cdh23 mutation that results in early-onset presbycusis in regular C57BL/6 mice (K. R. Johnson et al., 1997; Frisina et al., 2011; Kane et al., 2012). Following surgery, mice were single-housed to control water deprivation and to avoid damage to surgical implants. Cages were enriched (running wheels, nest building material) and kept in a temperature-controlled environment (24.4°C, 38.5% humidity) under an inverted light-dark cycle (12 h/12 h), and mice had olfactory and visual contact with the neighboring cages. Three mice entered the experiment after three prior sessions in which they were passively exposed to sound stimuli different from those employed in the current study (Shi et al., 2024).

Surgery

Mice were anesthetized in an induction chamber with 5% isoflurane vaporized in O2, transferred onto a stereotaxic frame (M1430, Kopf Instruments), and injected with carprofen as a presurgical analgesic (Rimadyl; 5 mg/kg, s.c.). During surgery, mice were maintained under deep anesthesia via continuous volatile administration of 1–2% isoflurane. Body temperature was kept near 37.0°C via a closed-loop heating system (M55 Harvard Apparatus), and anesthesia was periodically confirmed by the absence of leg withdrawal reflex upon toe pinch. The skin above the parietal skull was removed, and a local anesthetic was applied (lidocaine HCl, Akorn). The skull was balanced by leveling the vertical difference between lambda and bregma coordinates, and a 2.25–2.5-mm-diameter circular craniotomy was carefully drilled above the left IC at lambda −900 μm (AP)/−1,000 μm (LM). The skull overlying the IC was removed, and pAAV.Syn.GCaMP6f.WPRE.SV40 (AAV1, titer order of magnitude 10^12, Addgene) was injected 200 μm below the dura at four different sites (25 nl each; 100 nl total) across the mediolateral axis of the IC using an automated injection system (Nanoject III, Drummond Scientific). In three cases, pAAV.syn.jGCaMP8s.WPRE (AAV1, titer order of magnitude 10^12, Addgene) was injected. A custom-made cranial window insert, consisting of three circular 2 mm glass coverslips stacked and affixed to a 4-mm-diameter glass outer window, was then inserted in the craniotomy. The cranial window was affixed to the skull and sealed with cyanoacrylate glue (Loctite), and a titanium head bar was mounted on the skull with dental cement (Ortho-Jet). Mice received a postsurgical subcutaneous injection of buprenorphine (0.03 mg/kg, s.c., Par Pharmaceutical). Mice received carprofen injections (5 mg/kg, s.c., Spring Meds) 24 and 48 h following surgery.

Behavior protocol

After a minimum of 14 d recovery from surgery, mice were water restricted (1–1.5 ml/d) and maintained at >75% initial body weight. Mice were habituated to the experimenter, the experimental chamber, and the head fixation. During the habituation and experimental sessions, mice sat in an acrylic glass tube in a dark, acoustically shielded chamber with their heads exposed and fixed and a lick spout in comfortable reach. Following 7 d of water restriction and acclimation, mice were trained daily in a reward-based, operant Go/No-Go paradigm (Fig. 1A–C), controlled by a Bpod State Machine (Sanworks) run with Matlab (version 2016b, MathWorks). Sounds were generated in Matlab at a sampling frequency of 100 kHz and played back via the Bpod output module. A sound was presented from a calibrated speaker (XT25SC90-04, Peerless by Tymphany) positioned 30 cm away from the mouse's right ear (1 s duration, 10 ms cosine ramps at onset and offset, 70 dB SPL). Calibration was performed with broadband noise (BBN) using a spectral band peak analysis and a 1/4″ pressure-field microphone (Brüel & Kjaer). All unmodulated and modulated sounds were presented using this calibration, and peak energy was equal (3.9 ± 0.16 rms), while overall energy modestly decreased at higher modulation depths (0%, 0.27 ± 0.01 rms; 100%, 0.18 ± 0.01 rms). Licking behavior was recorded for the entire trial time using a light gate in front of the spout, downsampled to 7.3 Hz (C57BL/6J lick frequency, Boughter et al., 2007), and binarized offline. During Go trials, licking a waterspout during a 1 s “answer period” following sound offset resulted in the delivery of a reward (10% sucrose–water droplet gated through a solenoid valve housed outside the sound-attenuating microscope chamber). During No-Go trials, mice had to withhold licking during the answer period, and false alarms were punished with an increased intertrial interval (“time-out”). Licking at any other point in the trial had no consequence.
Thus, intertrial intervals were 13–15 s following all Go and correctly answered No-Go trials and 18–20 s for incorrectly answered No-Go trials. Intertrial intervals were kept this long to avoid photobleaching and laser damage to the tissue, while approximately balancing the laser on time (8 s) and laser off time (5–12 s).

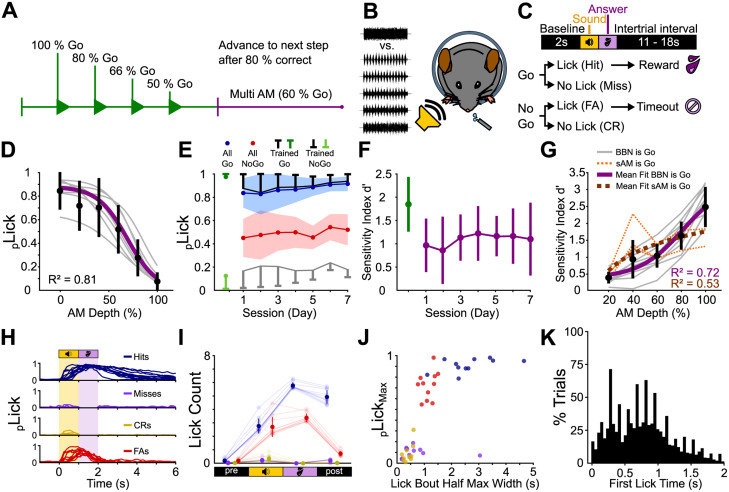

Figure 1.

Mice discriminate sAM noise from unmodulated noise in a modulation depth-dependent manner. A, Experiment structure. Head-fixed mice were trained to discriminate between 0 and 100% sAM depth (green). We progressively reduced the ratio of Go to No-Go trials as mice's task performance increased until mice reached the multi-sAM stage (purple). B, Upon reaching the criterion (see Results), mice engaged in a “multi-sAM depth” version of the task where the modulation depth of the No-Go sound was varied on a trial-by-trial basis. C, Trial structure. After a 2 s baseline, a sound was presented for 1 s. Licking a waterspout during a 1 s answer period following sound offset was rewarded with a drop of sugar water on Go trials and punished with a 5 s time-out on No-Go trials. Licking at any other point during the trial had no consequence. FA, false alarm; CR, correct rejection. D, Fitted lick probability during the answer period as a function of sAM depth for all mice during multi-sAM sessions. The gray lines are the individual mice, the black circles and lines are the mean ± standard deviation of each sAM depth, and the purple line is the mean fit. E, Mean ± standard deviation lick probability over the final training session (green) and 7 d of multi-sAM sessions for all Go stimuli (blue), all No-Go stimuli (red), and only the trained Go (black) and trained No-Go stimuli (gray). F, d′ for all mice plotted over time for the final training session (Day 0, green) and the 7 d of multi-sAM sessions (purple) as mean ± standard deviation. G, d′ per sAM depth. The gray lines are mice trained on the task contingency described in panels A–F, with BBN employed as the Go sound cue. The dashed orange lines show n = 3 mice that were trained on an opposite contingency with sAM noise as the Go sound cue. The black circles and lines are mean ± standard deviation for each sAM depth, and the purple line is the mean sigmoid fit to “BBN is Go” mice. 
The dashed dark orange line is the mean logarithmic fit to “sAM is Go” mouse data only. H, Individual average lick histograms for each mouse for all trial outcomes. I, Summary lick statistics for baseline, sound, answer, and intertrial interval periods (1 s each). J, Average maximal lick probability plotted against the full-width at half-maximum of lick bouts, for each mouse and trial category. The outlier miss data point originates from a mouse that routinely licked at low frequency after the end of the response window. K, Histogram of first-lick times for all trials with licks during sound or answer period (n = 3,613 trials).

All mice were trained according to the same protocol: In the first stage, only Go stimuli were presented, and rewards were manually triggered by the experimenter so that mice learned to associate the Go stimulus with a water reward (shaping, usually continuously for the first 10 trials, followed by slowly decreasing manual reward delivery until trial 50 during initial Go-only sessions). This procedure was repeated over multiple sessions until an association was present, determined by reaching a criterion of 80% response rate without shaping in two consecutive sessions. Next, the No-Go stimulus was introduced. In this stage, the number of No-Go presentations gradually increased from 20 to 33 to 50% if mice responded correctly on 80% of trials during a session. A typical session contained ∼200 trials and lasted for up to 1 h. For n = 8 mice, the Go stimulus was a broadband noise burst (BBN, 4–16 kHz), and the No-Go stimulus was an amplitude-modulated BBN modulated at 100% depth at a frequency of 15 Hz [sinusoidal amplitude modulation (sAM), 4–16 kHz BBN carrier]. To ensure that mice attend to the temporal envelope modulation of the stimulus, a subgroup of n = 3 mice was trained on the opposite stimulus contingency, with the sAM sound functioning as the Go stimulus. Although mice's discrimination behavior differed slightly across the two training contingencies (Fig. 1), we observed no differences in physiological results between the two groups, and the data were pooled for analyses (see Results).

After reaching 80% correct in the 50/50 Go/No-Go stage for two consecutive sessions, we varied the amplitude modulation (AM) depth (No-Go stimulus for Group 1, Go stimulus for Group 2) from 20 to 100% in 20% steps. Mice performed 6–7 sessions in this paradigm, with a typical session containing ∼350 trials and lasting for up to 1.5 h. If a mouse that had learned the task produced misses for six Go trials in a row, the session was terminated since these trials were indicative of a lack of motivation and licking. Due to the pseudorandomized trial order, this criterion was reached over a maximum of 14 consecutive trials once a mouse stopped licking. Thus, the final 14 trials of each session were discarded from all analyses. To compensate for the lower number of rewarded trials caused by low-discriminability stimuli (20% sAM depth), we increased the proportion of Go stimuli during the multi-sAM stage to 60%, thereby maintaining behavioral engagement.

Water intake during the task was estimated by measuring the mice's weight difference (including droppings) before and after each session. Mice received supplementary water if they consumed <1 ml during the session. Upon conclusion of the experiment, mice received water ad libitum for at least 2 d, were deeply anesthetized via an overdose of isoflurane, and were transcardially perfused with formalin.

Behavior analysis

Lick responses to assign trial outcomes were counted only during the reward period (1 s after sound offset). Licking at any point during the reward period during Go trials resulted in a hit and was immediately rewarded. Not licking during this period was scored as a miss. Licking during the reward period during No-Go trials was counted as a false alarm and resulted in a time-out, and not licking was scored as a correct rejection and was neither rewarded nor punished. Licking at any other point during any trial had no consequence.

Licks were rebinned at 7.3 Hz (C57BL/6J lick frequency, Boughter et al., 2007), averaged over trial category per mouse, and analyzed for maximal lick probability and lick bout duration (full-width at half-maximum of the lick bout). To determine lick bout duration, we first determined the maximum lick probability and then measured the duration in both directions until the half-maximum was reached. For analyses that consider the onset of licking (Figs. 1K, 5E,F, 6B–D, 7A), mice's “first lick” is defined as the first lick occurring after sound onset on each trial, including unrewarded, anticipatory licks during sound presentation.
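The lick bout duration measurement can be illustrated with a minimal Python sketch. This is not the authors' Matlab routine: the function name `lick_bout_fwhm` is hypothetical, the width is counted in whole bins without interpolation, and the half-maximum is taken relative to zero rather than to a baseline. All of these are assumptions.

```python
def lick_bout_fwhm(lick_prob, bin_rate_hz=7.3):
    """Return (peak lick probability, full-width at half-maximum in seconds).

    lick_prob: trial-averaged lick probability per time bin.
    bin_rate_hz: bin rate of the lick histogram (7.3 Hz in the text).
    """
    peak_idx = max(range(len(lick_prob)), key=lambda i: lick_prob[i])
    peak = lick_prob[peak_idx]
    half = peak / 2.0

    # Walk outward from the peak in both directions until the
    # probability falls below the half-maximum.
    left = peak_idx
    while left > 0 and lick_prob[left - 1] >= half:
        left -= 1
    right = peak_idx
    while right < len(lick_prob) - 1 and lick_prob[right + 1] >= half:
        right += 1

    width_bins = right - left + 1  # assumption: whole bins, no interpolation
    return peak, width_bins / bin_rate_hz
```

For a histogram with a single narrow peak, the function returns the peak height and the width of the contiguous region around the peak that stays at or above half of that height.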

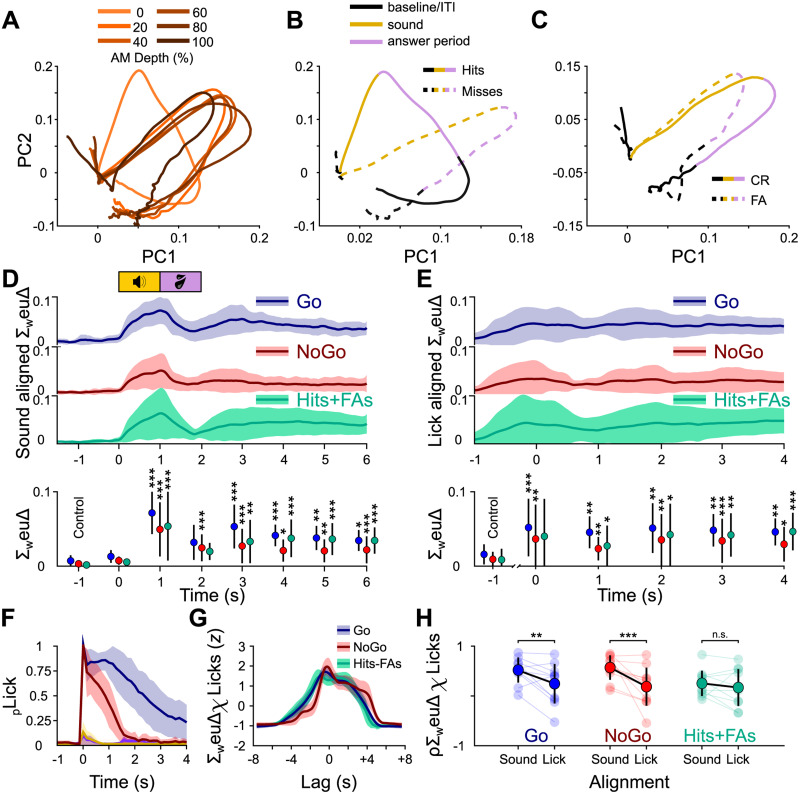

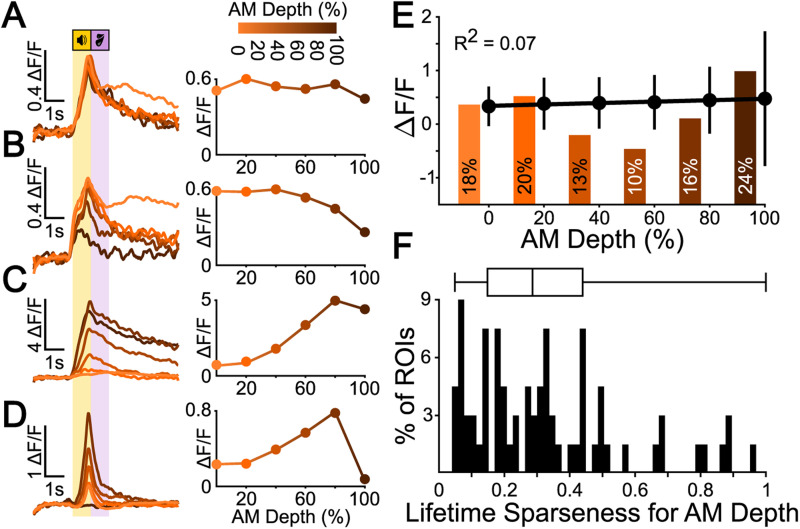

Figure 5.

Population dynamics revealed through principal component analysis show trial outcome–dependent differences. A, Example PCA-based trajectory from a single mouse sorted by AM depth. For visualization purposes, only the first two components are displayed, collectively explaining ∼80% of the total variance. B, The same example sorted by trial outcome for Go trials (hits/misses). Of note, the trajectories for hits and misses start to diverge immediately after the baseline. C, The same as in B but for No-Go trials. D, Top, The sum of weighted Euclidean distances over all principal components over time for hits/misses (blue), correct rejections/false alarms (red), and hits/false alarms (FA, green) aligned to sound onset for all mice and sessions, plotted as mean and standard deviation. Bottom, Friedman's test followed by a Dunnett's post hoc test comparing the mean sum of weighted Euclidean distances against the baseline at t = −1 s. E, The same as in D, but the data were aligned to the first lick after sound onset prior to computing the PCA. F, Average lick histograms sorted by trial outcome for the initial multi-sAM session for all mice, given as mean and standard deviation and aligned to the first sound-evoked lick. G, The mean and standard deviation z-scored cross-correlation functions for sound-aligned ΔF/F traces and lick histograms for Go (blue), No-Go (red), and hit/false alarm trials (green). H, Pearson’s correlation coefficient distributions of ΔF/F traces and lick histograms for Go (blue), No-Go (red), and hit/false alarm trials (green) for sound-aligned data and lick-aligned data. Statistics are two-sample Wilcoxon signed rank tests. *p < 0.05; **p < 0.01; ***p < 0.001.

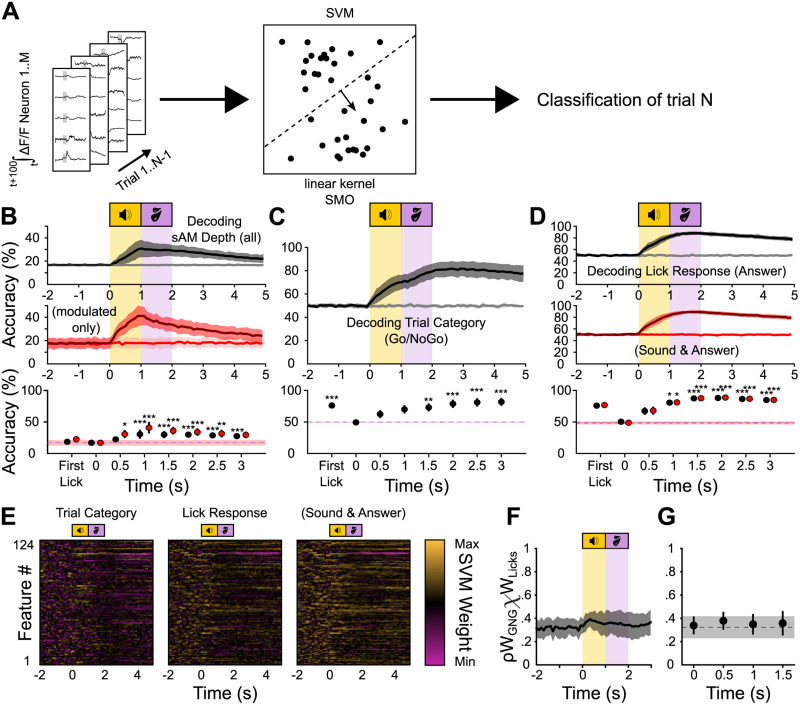

Figure 6.

An SVM classifier can predict task-related variables from the neural activity before, during, and after mice's instrumental actions. A, Schematic of the SVM classifier. Training data is the integral of the ΔF/F traces of all neurons in a 100 ms sliding window across the trial. Accuracy is plotted over the beginning of the integration time. B, Top, Classification accuracy over time for a decoder trained to classify sAM depth. The raw accuracy was normalized to obtain the balanced accuracy (black trace) and balanced shuffled accuracy (gray trace), normalizing the chance level to 16.67% (1 divided by the number of classes; see Materials and Methods). Middle, The same as in top but only using modulated stimuli (sAM depths 20–100%). Bottom, Friedman’s test with Dunnett's post hoc comparisons for each 0.5 s time bin against the baseline accuracy at −1 s (dashed line) for all sAM depth trials (black) or only modulated trials (red). Data points labeled “first lick” are classifiers trained on fluorescence data limited to before mice's first lick after sound onset on each trial. C, Same as in B but for the trial category. The chance level is normalized to 50% (see Materials and Methods). D, Top, Same as in B but for lick response during the answer period. The chance level is normalized to 50% (see Materials and Methods). Middle, The same as in top, but for lick response during the sound or answer period. Bottom, Friedman’s test with Dunnett's post hoc test comparing time points against the baseline accuracy at −1 s for top data (black) and the middle data (red). E, Examples of SVM feature (ROI) weights over time for a binary classifier distinguishing Go from No-Go trials (left), lick from no-lick trials determined by the answer (middle) and sound or answer period (right). F, Mean correlation coefficients for the feature weights of the “trial category” and “lick response” decoders from C and D (top). 
Each point in time represents the mean and standard deviation of Pearson’s coefficients for two matched individual columns from E (feature weights at a single time point). G, Statistics from F, Friedman's test with Dunnett's post hoc test against baseline (t = −1 s). *p < 0.05; **p < 0.01; ***p < 0.001.

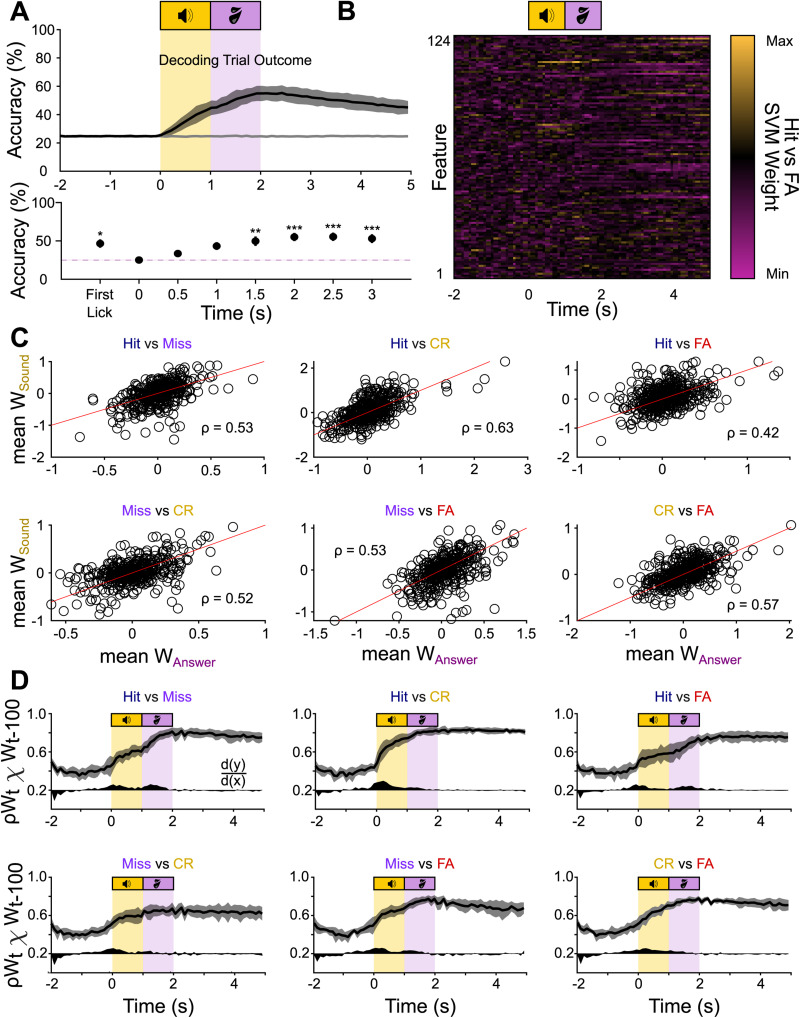

Figure 7.

The outcome classifier uses overlapping information during the sound- and the outcome period. A, Top, Classification accuracy over time for a decoder trained to classify trial outcome. The raw accuracy was normalized to obtain the balanced accuracy (black trace) and balanced shuffled accuracy (gray trace), normalizing the chance level to 25% (1 divided by the number of classes; see Materials and Methods). Bottom, Friedman’s test with Dunnett's post hoc test comparing time points against the baseline accuracy at −1 s. B, An example set of weights for a binary classifier (hit/false alarm) of the set of subclassifiers that make up the outcome classifier. C, Mean feature weights during the sound (y-axis) and answer period (x-axis) for all ROIs for the subclassifiers distinguishing hits and misses, hits and correct rejections (CR), hits and false alarms (FA), misses and correct rejections, misses and false alarms, and correct rejections and false alarms. The red lines are unity lines. D, Mean correlation coefficients for the feature weights of the subclassifiers at time t and time t − 100 ms. The black area below the curve indicates the first derivative to visualize the steps of increased correlation in arbitrary units, with d(y)/d(x) = 0 at 0.2 on the y-axis. *p < 0.05; **p < 0.01; ***p < 0.001.

The sensitivity index d′ was calculated as d′ = z(hit rate) − z(false alarm rate), where z(hit rate) and z(false alarm rate) are the z-transformations of the hit rate and the false alarm rate, respectively. Global lick rates pooled from all sessions were fitted per mouse with a four-parameter logistic equation (sigmoid fit). d′ curves were fitted with a sigmoid or logarithmic fit, and the perceptual threshold was defined as the modulation depth at which half-maximal lick probability was reached.
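As an illustration of the d′ definition, the following Python sketch computes d′ from trial counts using the inverse standard normal cumulative distribution function as the z-transformation. The function name `d_prime` and the clipping of extreme rates are assumptions; the text does not state how hit or false alarm rates of exactly 0 or 1 were handled.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """d' = z(hit rate) - z(false alarm rate)."""
    n_go = hits + misses
    n_nogo = false_alarms + correct_rejections

    # Assumption (not stated in the text): clip rates away from 0 and 1 by
    # half a trial so that the z-transformation stays finite.
    hit_rate = min(max(hits / n_go, 0.5 / n_go), 1 - 0.5 / n_go)
    fa_rate = min(max(false_alarms / n_nogo, 0.5 / n_nogo), 1 - 0.5 / n_nogo)

    z = NormalDist().inv_cdf  # inverse CDF of the standard normal distribution
    return z(hit_rate) - z(fa_rate)
```

With equal hit and false alarm rates the function returns 0, and d′ grows as the two rates separate.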

Ca2+ imaging

Movies were acquired at a frame rate of 30 Hz (512 × 512 pixels) using a resonance-scanning, two-photon microscope (Janelia Research Campus’ MIMMs design; Sutter Instrument) equipped with a 16× water immersion objective (Nikon, 0.8 NA, 3 mm working distance) and a GaAsP photomultiplier tube (Hamamatsu Photonics). The microscope was located in a custom-built, sound- and light-attenuated chamber on a floating air table. GCaMP6f or jGCaMP8s was excited at 920 nm using a titanium–sapphire laser (30–60 mW absolute peak power, Chameleon Ultra 2, Coherent). Images were acquired for 8 s per trial from the same field of view in each session (determined by eye using anatomical landmarks), with a variable intertrial interval (see above, Behavior protocol). Recording depth from dura was variable between mice and chosen by image quality and number and responsiveness of neurons (tested live) but generally kept between 20 and 55 µm. Behavioral data (licks) were recorded simultaneously through Matlab-based WaveSurfer software (Janelia Research Campus) and synchronized with the imaging data offline.

Ca2+ imaging analysis

We used the Python version of suite2p to motion-correct the movies, generate regions of interest (ROIs), and extract fluorescence time series (Pachitariu et al., 2016). ROIs were manually curated by the experimenter to exclude neurites without somata, and overlapping ROIs were discarded if they could not be clearly separated. Raw fluorescence time series were converted to ΔF/F by dividing the fluorescence by the mean fluorescence intensity during the 2 s baseline period on each trial, subtracting the surrounding neuropil signal scaled by a factor of 0.7, and smoothing the traces using a five-frame Gaussian kernel. ΔF/F traces and behavioral data were then analyzed using custom Matlab routines (available upon request). To determine significantly responding ROIs, we used a bootstrapping procedure based on the ΔF/F “signal autocorrelation” as in prior electrophysiology and imaging studies of the IC (Geis et al., 2011; Wong and Borst, 2019). We chose this approach because it quantifies for each neuron the degree of trial-to-trial response consistency across similar trial categories, rather than mean amplitude. Briefly, we compared the average correlation of each matching pair of trials (same stimulus identity or same trial outcome) over either the sound or the answer period to the correlations from shuffled signals originating from these same trials (10,000 iterations). Trials were matched across the same stimulus identity (sAM depth) to determine stimulus selectivity or matched across the same trial outcome (hit, miss, correct rejection, false alarm) to determine trial outcome selectivity. The p-values were then computed as the fraction of these randomly sampled signals with greater correlation than the real data and corrected for multiple comparisons using the Bonferroni–Holm method. 
Since our behavior employed six distinct sound stimuli, a neuron was classified as significantly sound modulated when p was below 0.05 for at least one, and at most six, stimulus conditions. Similarly, our task structure has four trial outcomes; a neuron was classified as trial outcome modulated if p was below 0.05 for at least one, and at most four, outcome conditions. Importantly, this method measures trial-to-trial consistency, and not response onset or strength. Thus, prolonged, but consistent responses during the sound presentation may occasionally lead to significant responses during the trial outcome period. Since decreases in fluorescence can be difficult to interpret specifically for tuning analyses, we used t-distributed stochastic neighbor embedding (t-SNE; van der Maaten and Hinton, 2008) and k-means clustering (two clusters) in the tuning analyses to separate sound-excited from sound-inhibited neurons by their average ΔF/F waveform and only analyzed sound-excited neurons. In population analyses [principal component analysis (PCA) and support vector machine (SVM)], all neurons were used, regardless of whether they were significantly responding, sound-excited, or sound-inhibited, according to our analyses. The outcome selectivity index (SI) of each neuron was calculated as follows: We first averaged ΔF/F traces of hit, miss, correct rejection, and false alarm trials. We then measured the absolute value integrals of each average waveform from sound onset to 1 s after the answer period. Outcome selectivity indices for Go and No-Go trials were calculated as (hit − miss) / (hit + miss) and (correct rejections − false alarms) / (correct rejections + false alarms), respectively.
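The outcome selectivity index can be sketched in Python as follows. The function name `outcome_selectivity_index` is hypothetical, the traces are assumed to be already cropped to the window from sound onset to 1 s after the answer period, and the integral is approximated with a simple rectangle rule at the 30 Hz frame rate. None of these implementation details are given in the text.

```python
def outcome_selectivity_index(trace_a, trace_b, frame_rate_hz=30.0):
    """Selectivity index (A - B) / (A + B) from two trial-averaged traces.

    trace_a, trace_b: trial-averaged dF/F samples for the two outcomes being
    compared (e.g., hit and miss), cropped to the analysis window.
    """
    dt = 1.0 / frame_rate_hz
    # Absolute value integral of each average waveform (rectangle rule).
    area_a = sum(abs(x) for x in trace_a) * dt
    area_b = sum(abs(x) for x in trace_b) * dt
    if area_a + area_b == 0:
        return float("nan")  # assumption: undefined when both traces are flat
    return (area_a - area_b) / (area_a + area_b)
```

The index ranges from −1 (activity only on the second outcome) to +1 (activity only on the first outcome). Because absolute values are integrated, increases and decreases in fluorescence both count as activity.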

To determine the fraction of significantly different ΔF/F values on different trial outcomes (Fig. 4G,H), we compared the mean ΔF/F over 1 s bins between different trial outcomes within the same neurons with pairwise Bonferroni-corrected t tests. To normalize for differences in lick count, rate, and bout duration on hit and false alarm trials, we divided the ΔF/F values in each 1 s time bin by either the lick count in the respective second, the mean slope of the lick histogram in the respective second, or the full-width at half-maximum of the mean lick histogram.

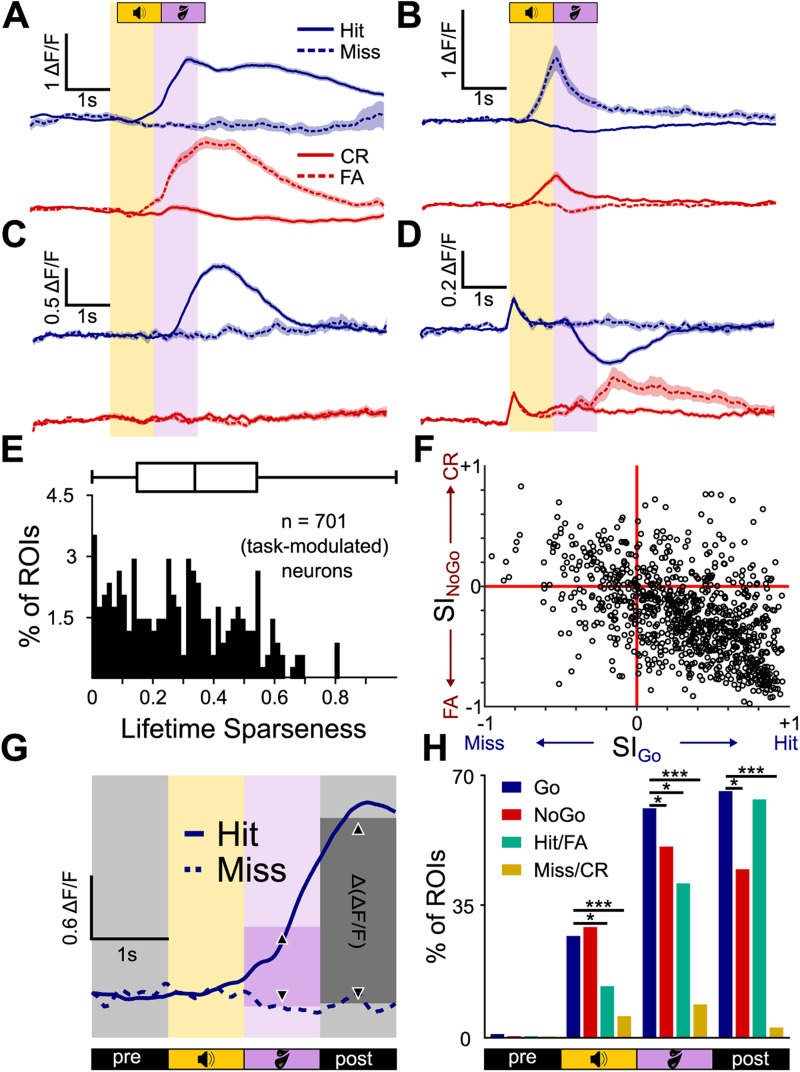

Figure 4.

Trial outcome selectivity of individual shell IC neurons. A, Mean ± SEM ΔF/F traces of an example neuron selective for hit and false alarm (FA) trial outcomes. B–D, Same as in A, but for a neuron responding on misses and correct rejections (CR; B), hits only (C), and with opposing activity on hits and false alarms (D). E, Lifetime sparseness for outcome responses of all 701 significantly task-modulated neurons. F, Selectivity indices on Go and No-Go trials are plotted for each neuron on the x- and y-axes, respectively. G, Schematic of the Δ(ΔF/F) analysis. The mean ΔF/F in 1 s bins was computed on a trial-by-trial basis for each neuron and compared across trial outcomes using a Wilcoxon rank sum test. H, The proportion of neurons with significantly different ΔF/F values for (from left to right) hits and misses (blue), correct rejections and false alarms (red), hits and false alarms (green), and misses and correct rejections (orange) per averaging period. *p < 0.05; **p < 0.01; ***p < 0.001.

Lifetime sparseness

As an additional measure for neuronal selectivity, we computed the lifetime sparseness per neuron, which describes a neuron's general activity variance in response to an arbitrary number of stimuli (Vinje and Gallant, 2000). Here, we computed the lifetime sparseness separately for modulation depth and trial outcome:

$$S = \frac{1 - \left(\sum_{i=1}^{N} r_i / N\right)^2 \Big/ \sum_{i=1}^{N} \left(r_i^2 / N\right)}{1 - 1/N}$$

where $N$ is the number of different stimuli and $r_i$ is the mean peak ΔF/F response to stimulus $i$ from sound onset to +2 s. Before computing the lifetime sparseness, we set all negative ΔF/F traces (neurons that reduced their firing relative to baseline) to 0 to keep the lifetime sparseness between 0 and 1. Lifetime sparseness is 0 when a neuron responds to all stimuli with the same peak ΔF/F response and 1 when it only responds to a single stimulus.
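A minimal Python sketch of this measure is given here, assuming the standard Vinje and Gallant (2000) form of lifetime sparseness. The function name `lifetime_sparseness` is hypothetical, and returning NaN for a neuron without any positive response is an assumption.

```python
def lifetime_sparseness(peak_responses):
    """Lifetime sparseness, assuming the Vinje and Gallant (2000) definition.

    peak_responses: mean peak dF/F response to each stimulus (or outcome).
    """
    # Negative responses are set to 0, as described in the text.
    r = [max(x, 0.0) for x in peak_responses]
    n = len(r)
    mean_of_squares = sum(x * x for x in r) / n
    if mean_of_squares == 0:
        return float("nan")  # assumption: undefined without any response
    square_of_mean = (sum(r) / n) ** 2
    return (1 - square_of_mean / mean_of_squares) / (1 - 1 / n)
```

Equal responses to all stimuli give a value of 0, and a response to a single stimulus gives a value of 1, matching the two limiting cases described in the text.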

Principal component analysis

We performed a population principal component analysis (PCA) using individual ROIs as observations and the trial-averaged ΔF/F samples as individual variables using Matlab's “pca” function with the default parameters. To compare the differences in the multidimensional neural trajectories, we computed the mean weighted Euclidean distances (weuΔ) of hit and miss, and correct rejection and false alarm trials, respectively. The weuΔ was obtained by computing the Euclidean distance between each component of the neural trajectories at each point in time, weighted by the amount of variance explained by each component, resulting in a weighted distance vector per component. These were then summed up to a single ∑weuΔ-curve per session that is proportionate to the general difference in network activity and normalized to the intrasession variance.
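The summed weighted distance can be sketched in Python under one reading of the description: the per-component distance at each time point is the absolute difference of the two trajectories' scores, and each component is weighted by its fraction of explained variance. The function name `summed_weighted_distance` is hypothetical, and the normalization to intrasession variance is omitted because its exact form is not specified.

```python
def summed_weighted_distance(traj_a, traj_b, explained_variance):
    """Sum of variance-weighted per-component distances over time.

    traj_a, traj_b: trajectories as lists of time points, each time point
    being a list of principal component scores.
    explained_variance: variance explained by each component (any scale).
    """
    total = sum(explained_variance)
    weights = [v / total for v in explained_variance]

    curve = []
    for point_a, point_b in zip(traj_a, traj_b):
        # In one dimension, the Euclidean distance between two component
        # scores is their absolute difference.
        distance = sum(
            w * abs(a - b) for w, a, b in zip(weights, point_a, point_b)
        )
        curve.append(distance)
    return curve
```

The returned curve has one value per time point and is 0 wherever the two trajectories coincide, for example during a shared baseline.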

Support vector machine classifier

The support vector machine (SVM) classifiers of Figures 6 and 7 were generated in Matlab using the classification learner app, with the “templateSVM” and “fitcsvm” or “fitcecoc” functions as the skeleton for binary and multiclass classification, respectively. For these analyses, we used data from 7 different multi-sAM sessions recorded in each of the n = 11 mice in this study, for a total of 77 imaging sessions. This includes both mice trained on BBN and mice trained on sAM noise as the Go stimulus. N-way ANOVA tests showed that neither Go stimulus type nor session number had a significant main effect on the data (see Results), and data were pooled. Separate SVMs were trained on each session, and the results were averaged per mouse, adding further protection against overfitting by introducing another data layer. In all cases, we used a linear kernel and the sequential minimal optimization algorithm to build the classifier. We used the ROIs as individual predictors and one of several sets of variables as classes: trial outcome (hit, miss, correct rejection, false alarm), action during the answer period (lick, no lick), stimulus identity (AM depth), and stimulus category (Go stimulus, No-Go stimulus), using equal priors. The integral of the ΔF/F traces over 100 ms was used as the input data, and the classifier was constructed and trained on each individual session using an equal number of Go and No-Go trials. We used periods of 100 ms in steps of 100 ms over the whole signal to extract the information content in the signal at each period. Thus, at each time point t, the classifier has access to the integral of the activity from t to t + 100 ms. For the “first-lick accuracy,” the 100 ms preceding the first lick that occurred after sound onset was used. If no lick was present during a trial, the median first-lick time of all licked trials of that session was used instead.
We used fivefold cross-validation to determine the decoding accuracy per session, i.e., in each of five folds, a randomly sampled 80% of trials served as training data and the remaining 20% as test data. The accuracy is then given as the mean decoding accuracy across the five folds. Because the number of trials per class is not always balanced, we computed the “balanced accuracy,” which is calculated differently for binary (lick/no lick, Go/No-Go) and nonbinary problems (trial outcome, AM depth). For binary problems, the balanced accuracy is defined as the true-positive rate plus the true-negative rate, divided by 2. Thus, the balanced accuracy normalizes the accuracy to 50% at chance level even if the percentage of Go/No-Go or lick/no-lick trials per session does not follow a precise 50/50 split. For nonbinary problems, it is defined as the mean of the per-class recalls (recall/class, see below), and the chance level is 1 divided by the number of classes. All data are presented as “balanced accuracy.”
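To make the balanced accuracy concrete, the following Python sketch computes it as the mean of the per-class recalls. For two classes this equals the mean of the true-positive and true-negative rates, so one function covers both the binary and the nonbinary case. The function names and the label strings are hypothetical and chosen only for illustration.

```python
def per_class_recall(y_true, y_pred):
    """Recall for every class that occurs in the true labels."""
    recalls = {}
    for cls in sorted(set(y_true)):
        n_cls = sum(1 for t in y_true if t == cls)
        n_correct = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        recalls[cls] = n_correct / n_cls
    return recalls


def balanced_accuracy(y_true, y_pred):
    """Mean per-class recall; chance level is 1 / number of classes."""
    recalls = per_class_recall(y_true, y_pred)
    return sum(recalls.values()) / len(recalls)


if __name__ == "__main__":
    # An imbalanced session: a classifier that always answers "lick"
    # reaches 80% raw accuracy but only chance-level balanced accuracy.
    y_true = ["lick"] * 8 + ["no lick"] * 2
    y_pred = ["lick"] * 10
    raw = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    print(raw, balanced_accuracy(y_true, y_pred))
```

The example shows why the correction matters: with an 80/20 split of trial types, a trivial classifier looks accurate in raw terms while carrying no information about the trial.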

For controls, we computed the “shuffled” and “shuffled balanced” accuracies, where the trials and the class labels are shuffled prior to classifier training. This method thus reflects a real chance level. To prevent overfitting to individual, strongly selective neurons, we included a “dropout” rate of 10% by setting the ΔF/F traces of 10% randomly sampled ROIs in each trial to 0 during the training (Srivastava et al., 2014). We trained and tested classifiers for all seven multi-sAM sessions per mouse and averaged the accuracy.
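The two control manipulations described here, label shuffling and ROI dropout, can be sketched in Python as below. This is an illustration under stated assumptions, not the study's Matlab code: each trial is assumed to be a list with one feature value per ROI, the number of dropped ROIs is taken as the rounded product of rate and ROI count, and all names are hypothetical.

```python
import random


def shuffle_labels(labels, rng):
    """Return a shuffled copy of the class labels (chance-level control)."""
    shuffled = list(labels)
    rng.shuffle(shuffled)
    return shuffled


def apply_dropout(trial_features, rate, rng):
    """Set a random subset of ROIs to 0 in one trial.

    trial_features: one value per ROI for a single trial.
    rate: fraction of ROIs to silence (0.1 for a 10% dropout).
    """
    n_rois = len(trial_features)
    n_drop = round(rate * n_rois)
    dropped = set(rng.sample(range(n_rois), n_drop))
    return [0.0 if i in dropped else x for i, x in enumerate(trial_features)]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the example is repeatable
    print(shuffle_labels(["hit", "miss", "cr", "fa"], rng))
    print(apply_dropout([1.0] * 20, 0.1, rng))
```

A new random subset is drawn for every call, which matches the description that ROIs are sampled separately in each trial.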

We further assessed the quality of our classifiers by computing the weighted precision (positive predictive value, or exactness, the number of true positives divided by the number of all positives, weighted by class prevalence) and weighted recall (sensitivity or completeness, the number of true positives divided by the number of true positives and false negatives, weighted by class prevalence) and then computing the weighted F1 score (the harmonic mean of the two, van Rijsbergen, 1979; Hand et al., 2001) and the area under the curve (AUC) as the mean of the AUCs per class (area under the receiver-operating characteristic, Huang et al., 2003). This information was used to compute the balanced accuracy for multiclass problems.
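The weighted precision, weighted recall, and their harmonic mean can be sketched in Python as follows. The sketch follows the wording above, in which the F1 score is the harmonic mean of the two weighted quantities; some software packages instead average per-class F1 scores, which can give slightly different values. Function and variable names are hypothetical.

```python
from collections import Counter


def weighted_scores(y_true, y_pred):
    """Prevalence-weighted precision, recall, and their harmonic mean."""
    n_trials = len(y_true)
    support = Counter(y_true)      # true trials per class
    predicted = Counter(y_pred)    # predicted trials per class
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    precision = 0.0
    recall = 0.0
    for cls, n_cls in support.items():
        weight = n_cls / n_trials  # class prevalence
        if predicted[cls]:         # a class that is never predicted adds 0
            precision += weight * true_pos[cls] / predicted[cls]
        recall += weight * true_pos[cls] / n_cls

    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2.0 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    print(weighted_scores(["a", "a", "a", "b"], ["a", "a", "b", "b"]))
```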

When conducting a weight analysis on SVM classifiers, correlations of features can lead to arbitrary changes in feature weights (“multicollinearity,” defined as a high proportion of Pearson's correlation coefficients >0.7, Dormann et al., 2013). To control for this, we computed the condition index for each feature (=ROI) correlation matrix, which is the square root of the ratio of the largest and the smallest eigenvalues of the correlation matrix. Values >20, not capped in either direction, are considered problematic (Belsley et al., 1980, Johnston and Dinardo, 1996, Mela and Kopalle, 2002). After applying the 10% dropout, no session had a condition index >20. Thus, multicollinearity is not a concern in our dataset by the current standards.
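As a worked illustration of the condition index (a sketch for intuition, not part of the original analysis), consider the simplest case of two ROIs whose activity has a Pearson correlation of ρ. The symbols κ for the condition index, λ for the eigenvalues, and C for the correlation matrix are notation introduced here for the example.

```latex
\kappa = \sqrt{\frac{\lambda_{\max}}{\lambda_{\min}}},
\qquad
C = \begin{pmatrix} 1 & \rho \\ \rho & 1 \end{pmatrix}
\;\Rightarrow\;
\lambda_{\max} = 1 + |\rho|, \quad \lambda_{\min} = 1 - |\rho|,
\qquad
\kappa\big|_{\rho = 0.7} = \sqrt{\frac{1.7}{0.3}} \approx 2.38
```

In this two-ROI case, a pairwise correlation of 0.7 therefore yields a condition index far below 20; reaching κ > 20 would require |ρ| > 399/401 ≈ 0.995. With many ROIs the index can grow faster, because several moderately correlated features can jointly produce a very small eigenvalue.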

Statistics

All statistical analyses were run in Matlab. Significance levels *, **, and *** correspond to p-values lower than 0.05, 0.01, and 0.001, respectively. Data were tested for normality using the Kolmogorov–Smirnov test, and nonparametric tests were used when the data were not normally distributed. All descriptive values are mean and standard deviation unless otherwise noted. p-values were corrected for multiple comparisons where appropriate using the Bonferroni–Holm method. Sample sizes were not predetermined.

Results

Head-fixed mice discriminate amplitude-modulated from unmodulated noise in an operant task

Water-deprived, head-fixed mice (N = 8) were trained to discriminate the presence or absence of 15 Hz sinusoidal amplitude modulation (sAM) in a 1 s broadband noise (BBN, 4–16 kHz, 70 dB SPL) using an operant Go/No-Go paradigm (see Materials and Methods for a full description of the training regimen). In Go trials, the noise carrier sound was presented without amplitude modulation (0% sAM depth). Licking a waterspout within a 1 s “answer period” following sound offset was scored as a “hit” outcome and rewarded with a drop of 10% sucrose water. Withholding licking during the answer period of Go trials was scored as a “miss” outcome and neither punished nor rewarded. On No-Go trials, the noise carrier was fully amplitude-modulated (100% sAM depth), and mice had to withhold licking during the answer period; these “correct rejection” outcomes were not rewarded. Licking during the answer period of No-Go trials was scored as a “false alarm” outcome and punished with a 5 s “time-out” (increased intertrial interval; Fig. 1A–C). Mice reached the expert-performance criterion of ≥80% correct responses after 13.6 ± 2.6 training sessions.

After mice reached expert performance (<20% false alarms for two consecutive sessions), we varied the modulation depth of the sAM sound in subsequent sessions from 20 to 100% in 20% steps. False alarm rates increased in this “multi-sAM” paradigm compared with the final two sessions with only 0 and 100% sAM depths (0.46 ± 0.16 vs 0.18 ± 0.11, respectively, mean and standard deviation), as expected from an increased perceptual ambiguity of No-Go sounds on low sAM depth trials (Fig. 1D). Accordingly, false alarm rates were not evenly distributed across No-Go conditions of varying depths, and mice were more likely to lick on low sAM depth No-Go trials than on the 100% sAM depth No-Go trials on which they were initially trained (mean fit half-maximal lick probability, 69% sAM depth; Fig. 1E). However, mice's hit and false alarm rates remained stable across consecutive daily sessions (Fig. 1F), indicating that discriminative performance did not increase with further training on the multi-sAM paradigm for any AM depth (ANOVA, F(6,58) = 1.46 for factor session #, p = 0.2065). Because false alarm rates increased with the perceptual similarity of Go and No-Go sounds, these data argue that performance reflects mice's attending to temporal envelope modulation.

As a separate test of whether mice were indeed attending to the discriminative sound's temporal envelope, we trained n = 3 mice on the opposite contingency with the presence of sAM serving as the Go stimulus. These mice's operant responses in the multi-sAM paradigm also varied in a manner expected from temporal envelope detection. To quantify the performance for all mice regardless of training contingency, we calculated the sensitivity index (d′) per AM depth rather than the licking probability (Fig. 1G). On average, d′ steadily rose with increasing AM depth (i.e., increasing perceptual distance from the 0% band-limited noise carrier). Discrimination curves for mice trained with sAM noise as the Go stimulus (n = 3) were better described by a logarithmic relationship, whereas data from mice trained with BBN as the Go stimulus (n = 8) were better fit with a sigmoid function. We thus compared discrimination curves across mouse groups by measuring the slope of linear fits to the psychometric data, revealing significantly shallower slopes in mice trained on sAM = Go contingency (2.88 ± 0.47 for BBN vs 1.36 ± 0.83 for sAM, Kruskal–Wallis test, χ2(1,10) = 4.17, p = 0.0412). However, both groups of mice's discrimination behavior increased as a function of modulation depth and as such appeared to solve the task by responding to the presence or absence of sinusoidal envelope modulation in a depth-dependent manner. Neural data for subsequent analyses were thus pooled across mice trained on both stimulus contingencies after confirming for each analysis that there was no significant statistical difference between the two groups.
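For readers unfamiliar with the sensitivity index, the following Python sketch shows the standard signal-detection definition, d′ = z(hit rate) − z(false alarm rate), where z is the inverse of the standard normal cumulative distribution. The text does not state how rates of exactly 0 or 1 were handled; clipping them to 1/(2n) and 1 − 1/(2n) is a common convention and is an assumption of this sketch, as are the function names.

```python
from statistics import NormalDist


def _clip_rate(rate, n_trials):
    """Keep a rate away from 0 and 1 so its z-score stays finite (assumed convention)."""
    lowest = 1.0 / (2 * n_trials)
    return min(max(rate, lowest), 1.0 - lowest)


def d_prime(hit_rate, fa_rate, n_go, n_nogo):
    """Sensitivity index: z(hit rate) minus z(false alarm rate)."""
    z = NormalDist().inv_cdf
    return z(_clip_rate(hit_rate, n_go)) - z(_clip_rate(fa_rate, n_nogo))


if __name__ == "__main__":
    # Equal hit and false alarm rates mean no discrimination (d' = 0).
    print(d_prime(0.5, 0.5, 100, 100))
    # A higher hit rate than false alarm rate gives a positive d'.
    print(d_prime(0.9, 0.2, 100, 100))
```

Computing d′ separately for each AM depth, with the false alarm rate taken from the No-Go trials of that depth, gives a measure that is comparable across the two training contingencies because it does not depend on which stimulus served as the Go cue.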

Lick counts per second in trained mice were stable across consecutive daily sessions (four-way ANOVA, F(6,1,219) = 1.53, p = 0.166 for factor session number) and differed substantially between hit, miss, correct rejection, and false alarm trials (N-way ANOVA, F(3,351) = 1,083.55, p = 3.310 × 10−167 for factor trial outcome type, Fig. 1H,I). Intersubject variability was quite low (N-way ANOVA, F(10,351) = 1.02, p = 0.3029 for factor subject ID, Fig. 1H). Mice generally performed anticipatory licks during sound presentation on hit and false alarm trials, and lick probability peaked after sound offset during the answer period. Miss and correct rejection trials were accompanied by low lick rates. Maximal lick probability and full-width at half-maximum of lick bout histograms were also stable across sessions (N-way ANOVA; maximal probability, F(6,255) = 0.74, p = 0.6147; full-width at half-maximum, F(6,255) = 0.55, p = 0.7725) but significantly different between trial outcomes (Fig. 1J, N-way ANOVA; maximal probability, F(3,43) = 253.37, p = 1.319 × 10−25; half-width, F(3,43) = 26.62, p = 1.537 × 10−9). The major observed difference between trial outcomes was longer duration lick bouts on hit compared with false alarm trials, where mice's licking extended beyond the answer period and into the intertrial interval. However, this result is not surprising given that reward delivery occurs on hit trials and thus engages consummatory licking behavior. Mice regularly initiated licking during the sound, and the median first-lick time was 0.73 ± 0.35 s after sound onset (Fig. 1K, median ± median absolute deviation). Additionally, we found no main effect of training contingency, such that licking behavior was not different between mice trained with BBN or sAM noise as the Go stimulus (N-way ANOVA; maximal probability, F(1,43) = 0.73, p = 0.3992; half-width, F(1,43) = 1.85, p = 0.181). 
Licks during the baseline occurred with a mean probability across all baseline time bins of 2% for hits, 0.9% for misses, 2.7% for false alarms, and 0.7% for correct rejections, suggesting that withholding licking in the answer period is associated with slightly lower baseline lick rates (Kruskal–Wallis test, χ2(3,43) = 32.32, p = 4.482 × 10−7). However, a linear support vector machine classifier was unable to predict the trial outcome from the baseline licks above chance level (25 ± 0.4% vs 25 ± 0.3% for real and shuffled data, respectively; Kruskal–Wallis test, χ2(1,21) = 2.8, p = 0.094), suggesting that small variations in baseline lick rates were not informative of impending trial outcomes. Altogether, these data show that head-fixed mice rapidly learn and stably execute our psychometric sAM task.

Shell IC neurons are active during sound and answer periods

We next investigated the extent to which task-dependent activity is present in the shell IC of actively listening mice. To this end, we used a viral approach to broadly express a genetically encoded Ca2+ indicator (GCaMP6f or -8s) in the IC and conducted two-photon Ca2+ imaging to record shell IC neuron activity as head-fixed mice engaged in the multi-sAM task (Fig. 2A–C). The multi-sAM paradigm enables comparing neural activity on trials with similar sounds, but distinct trial outcomes (i.e., hits vs misses; correct rejections vs false alarms), thereby testing how shell IC activity varies depending on mice's instrumental choice in the answer period.

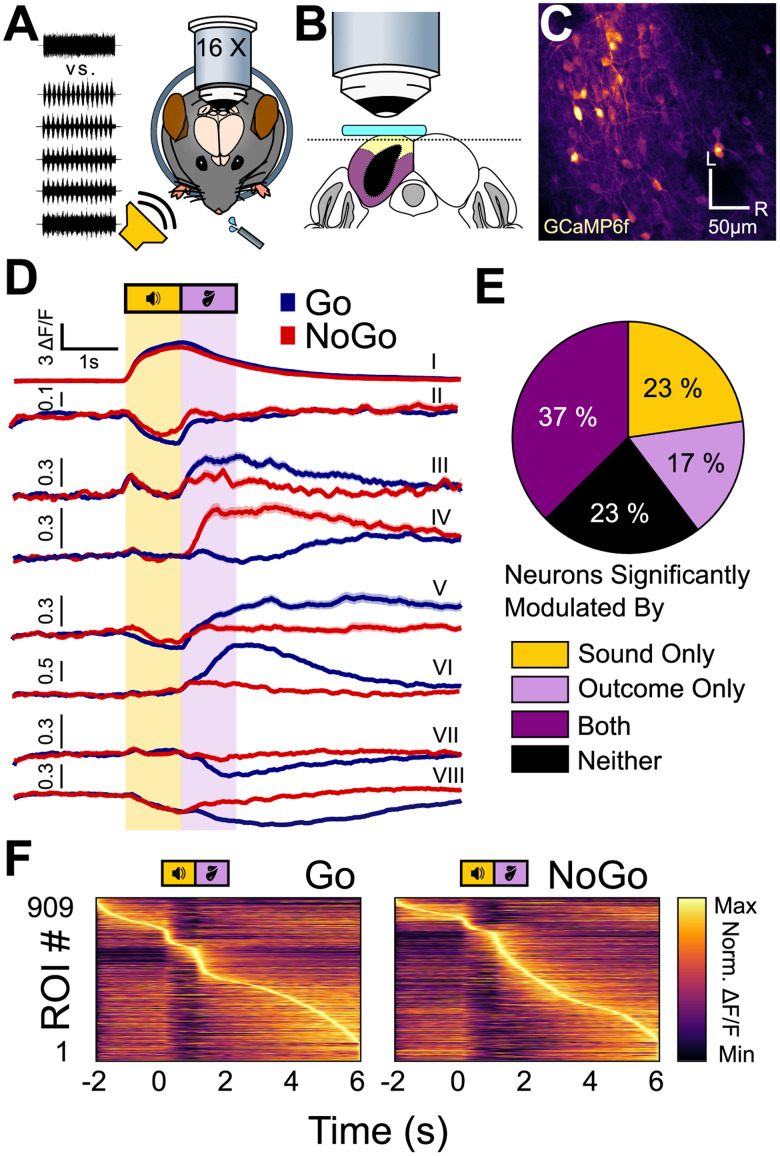

Figure 2.

Shell IC neurons are active across the entirety of Go and No-Go trials. A, Experimental approach: multiphoton Ca2+ imaging was conducted in the superficial shell IC layers to record neural activity as mice engaged in the multi-sAM task. B, Schematic of the imaging plane. A window (teal) was placed above the left IC. The imaging plane was 20–50 µm below the brain surface to reliably target the dorsal shell IC (yellow), rather than the lateral shell (purple) or central IC (black). C, Example field of view from a typical session (L, lateral; R, rostral). D, Example mean ± SEM fluorescence traces of eight separate ROIs on Go (blue) and No-Go (red) trials. All ROIs were recorded simultaneously in the same FOV. Of note is that differential neural activity on Go and No-Go trials spans across the entire trial epoch and is expressed as both increases and decreases in fluorescence. E, The proportion of cells significantly modulated by any sound or outcome, any combination of sound and outcome, or none of those three options (n = 909). F, Normalized mean activity on Go (left) and No-Go (right) trials for all recorded ROIs across all mice's first multi-sAM session, sorted by activity maxima. Of note, most ROIs have their activity maxima after the sound termination.

We recorded n = 909 regions of interest (ROI) in n = 11 multi-sAM sessions recorded across N = 11 mice (83 ± 27 ROIs per FOV). We restricted the analyses of Figures 2–5 to a single FOV from each mouse, recorded during the mouse's first multi-sAM session. This approach was taken to prevent repeated measurements from the same neurons across multiple sessions. As a first pass to determine how shell IC neurons respond to task-relevant variables, we averaged each neuron's baseline-normalized fluorescence traces (ΔF/F) separately for all Go and No-Go trials in a given session. As expected from prior imaging studies in anesthetized and passively listening mice (Ito et al., 2014; Barnstedt et al., 2015; Wong and Borst, 2019), some shell IC neurons showed strong fluorescence increases (Fig. 2D, ROI I) or decreases (Fig. 2D, ROI II) that began during sound presentation and reflect bidirectional changes in firing rates (Wong and Borst, 2019). However, many neurons showed neural activity changes that began following sound termination, such that maximal activity modulation occurred during the answer period or in the intertrial interval. This activity was driven by fluorescence increases (Fig. 2D, ROIs III–VI) and decreases (Fig. 2D, ROIs VII and VIII), with distinct neurons often showing differential activity patterns on Go and No-Go trials (Fig. 2D, compare ROIs III, IV, and VI). Moreover, many neurons showed fluorescence changes during both sound and answer periods (Fig. 2D, ROIs III, V, and VIII). Shell IC neuron activity is thus bidirectionally modulated across the entire duration of behavioral trials of our task, with substantial fluorescence activity peaking after the answer period in the intertrial interval (Fig. 2D, ROIs V and VI). Some of the activity observed following sound termination may reflect sound offset responses, as previously reported for 13% of shell IC neurons in response to pure tones (Wong and Borst, 2019). 
Alternatively, because this long-latency activity often arises during the trial answer period, it might reflect task-dependent information in shell IC neurons.

Figure 3.

Most shell IC neurons are broadly responsive to sAM depth. A, Example ΔF/F traces (left) and sAM depth tuning curve (right) for a broadly tuned representative example cell. B, The same as in A for a cell tuned to high sAM depths. C, The same as in A for a cell tuned to low sAM depths. D, The same as in A for a cell tuned to intermediate sAM depths. E, Mean ± standard deviation of ΔF/F peak for all sound-excited cells (272) shows no linear correlation with sAM depth. The histogram bars indicate the relative proportion of significantly responsive neurons at each sAM depth as determined by trial-to-trial correlation bootstrapping analysis. F, Lifetime sparseness for sAM depth responses of all neurons with significant activity during sound presentation.

We summarized these results by quantifying the relative proportion of shell IC neurons showing significant activity during sound and answer periods. To this end, we employed an “autocorrelation” bootstrapping analysis (Geis et al., 2011; Wong and Borst, 2019; Fig. 2E) to test for significant trial-to-trial correlations during the sound presentation of specific sAM stimuli or during the answer period of distinct trial outcomes (hit, miss, correct rejection, false alarm). This analysis suggested that 23% (207/909) of all recorded neurons were consistently modulated by at least one sound stimulus during sound presentation, but showed no consistent activity changes during the trial outcome period; 17% (154/909) of neurons showed consistent activity during the answer period of one or more trial outcomes, but not during sound presentation; 37% (340/909) showed consistent trial-to-trial modulation during sound presentation and answer period, and 23% (208/909) of neurons were neither modulated by sound nor trial outcome in a systematic manner detected by these analyses. Consequently, the majority of neurons in our datasets (77%, 701/909) showed reliable activity modulation during sound presentation and/or the trial answer period. However, only a minority of neurons were exclusively modulated during sound presentation (23%). Over half of all neurons (54%) instead displayed trial outcome modulation, with many neurons reaching their activity peak several seconds after sound offset on both Go and No-Go trials (Fig. 2F). Altogether, these data suggest that task-dependent and nonstimulus-locked activity are the dominant efferent signals from shell IC neurons under our conditions.

Shell IC neuron activity during sound presentation is broadly tuned to sAM depth

Sound-evoked spike rates of central IC neurons generally increase monotonically with higher sAM depths (Rees and Møller, 1983; Joris et al., 2004). However, nonmonotonic sAM depth coding has also been reported (Preuß and Müller-Preuss, 1990), whereby neurons selectively respond to a “preferred” sAM depth akin to the nonmonotonic intensity selectivity of brainstem or auditory cortex neurons (Young and Brownell, 1976; Sadagopan and Wang, 2008). We thus wondered how the shell IC neurons in our recordings encode sAM depth. To this end, we used t-SNE/k-means clustering to identify neurons showing a fluorescence increase during sound presentation (272/464), given the interpretive difficulty of fluorescence decreases (Vanwalleghem et al., 2021) and the broad selectivity of sound-evoked inhibition in shell IC neurons (Shi et al., 2024). This clustering was only used to identify sound-excited neurons and not to cluster tuning curve shapes. Since baseline-variance–based thresholding for event detection requires significant assumptions and can confound results obtained from noisy Ca2+-imaging data, we used the trial-to-trial correlation approach to determine whether one or multiple sAM depths drove consistent responses during sound presentation. Thirty-eight percent (104/272) of neurons were significantly responsive to all sAM depths (Fig. 3A), suggesting that more than a third of shell IC neurons that increase their activity during sound presentation do so in response to each of the sound stimuli in our conditions. Twenty-two percent (60/272) of cells preferentially showed activity increases during presentation of low AM depths (0 and 20%), but not to higher ones (Fig. 3B), and only 6% (17/272) increased their activity during high sAM depth trials (80 and 100%), but not to lower ones (Fig. 3C). Only a single cell showed selective activity on medium sAM depth trials (60 and 80%, Fig. 3D). 
The categorically broad sAM depth selectivity was also reflected in the magnitude of these shell IC neurons’ responses, i.e., the average peak of ΔF/F traces during the sound presentation. Indeed, there was no significant correlation between ΔF/F peak and sAM depth (Pearson's ρ = 0.26, R2 = 0.07, Fig. 3E), suggesting that the average population activity of shell IC neurons during the discriminative sound cue does not systematically increase or decrease with sAM depth. Finally, we computed the lifetime sparseness of all neurons as a separate measure of response sharpness across all stimuli (Vinje and Gallant, 2000, Fig. 3F). The population distribution of lifetime sparseness values was broad, with a low median value of 0.28 (median absolute deviation 0.18), in further agreement with broad selectivity to sAM depth. Altogether, these analyses suggest limited nonmonotonic sAM depth encoding and instead indicate that most shell IC neurons respond similarly during presentation of the different sound cues employed in our task.

Single-neuron responses are modulated by trial outcome

Many neurons showed their strongest activity modulation in the answer period of Go and No-Go trials (Fig. 2F), and activity covaried with trial outcomes rather than acoustic sound features. The neuronal activity might thus discriminate between divergent trial outcomes, such that shell IC neurons would transmit distinct signals depending on mice's instrumental choices. We tested this hypothesis by comparing fluorescence traces averaged across trial outcomes, rather than acoustic features, for all task-modulated neurons (n = 701/909). We included all task-modulated neurons in this analysis as we had no a priori reason to expect that trial outcome–dependent differences would be restricted to the answer period. Rather, sound-related activity might also covary with mice's impending actions, in accordance with prior work demonstrating context-dependent scaling of acoustic responses in IC neurons (A. F. Ryan et al., 1984; Slee and David, 2015; Joshi et al., 2016; Saderi et al., 2021; Shaheen et al., 2021).

We observed diverse trial outcome–related activity during the sound and/or answer period: Many neurons had fluorescence increases restricted to hit and false alarm (Fig. 4A) or alternatively miss and correct rejection trials (Fig. 4B). Activity in these neurons thus covaried with mice's licking of the waterspout rather than the discriminative sound cue features. However, trial outcome–related activity was not strictly yoked to mice's actions. Indeed, other neurons had activity restricted to individual trial outcomes such as hits (Fig. 4C) or had complex activity profiles, which diverged depending on whether mice's licking action was rewarded (Fig. 4D). One possibility is that outcome selectivity largely reflects a movement-related modulation of neural activity (A. Nelson and Mooney, 2016; C. Chen and Song, 2019; Stringer et al., 2019; Karadimas et al., 2020; Yang et al., 2020). Alternatively, some of the trial outcome activity may also reflect signals related to reward, choice, learned categorization, and/or arousal, although limitations of our Go/No-Go task structure preclude fully disambiguating these scenarios.

Most shell IC neurons were active on multiple trial outcomes, as reflected by a low median lifetime sparseness measure in the population data (0.37; median absolute deviation = 0.21, Fig. 4E). We further summarized the trial outcome selectivity by measuring a separate trial outcome selectivity index (SI) value for Go and No-Go trials for each neuron. Index values range from −1 to +1 and quantify the extent to which fluorescence changes are selective for incorrect or correct trial outcomes; values of −1 and +1 indicate neurons that are only active on incorrect or correct trials, respectively. Plotting each neuron’s SI values revealed a distribution clustered toward positive and negative values on Go and No-Go trials, respectively (Fig. 4F). This result indicates that correlated activity on hits and false alarms (as in Fig. 4A) is the dominant (although not exclusive) form of trial outcome–dependent modulation, although a substantial variability in response types is clearly observable in the spread of population data.

We next quantified this diversity in trial outcome selectivity by calculating the fraction of neurons with significantly different ΔF/F values during the sound and postsound periods of divergent trial outcomes. To this end, we averaged the fluorescence values across 1 s time bins, beginning 1 s prior to sound onset and continuing until 1 s following the answer period (4 s total, Fig. 4G). We then compared these values across hit and miss and correct rejection and false alarm trials (Fig. 4H). In the 1 s baseline period prior to sound onset, only 1% (7/701) of neurons showed a statistically significant difference between hit and miss trials; these values align with the expected false-positive rate set by the cutoff of our statistical analysis (see Materials and Methods). In contrast, 28% (194/701) had significantly different fluorescence values during sound presentation on hit and miss trials, and this fraction increased to 62% (436/701) and 67% (470/701) during the answer and post-answer time bins, respectively (Fig. 4H, blue). Thus, in Go trials, a major fraction of task-active shell IC neurons transmit signals correlated with mice's actions rather than the features of the discriminative sound cue.

Similar results were found when comparing activity across correct rejections and false alarms of No-Go trials: Although a negligible proportion of neurons showed significant differences during the presound baseline period (0.4%; 3/701), significant differences were seen in 30% of neurons (211/701) during sound presentation (Fig. 4H, red), 52% of neurons (363/701) during the answer period, and 45% (321/701) of neurons in the post-answer period. During sound presentation, a similar fraction of neurons showed trial outcome selectivity during Go and No-Go trials (chi-square test, χ2(1) = 1.00, p = 0.3165). However, the fraction of neurons with differential activity in the answer and post-answer periods was significantly lower on No-Go compared with Go trials (chi-square test, χ2(1) = 15.51, p = 0.0001 and χ2(1) = 64.40, p = 1.01 × 10−15 for answer and post-answer periods, respectively). Thus, under our conditions, outcome-selective activity preferentially occurs after sound presentation on Go trials.
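The comparison of proportions between Go and No-Go trials can be illustrated with a Pearson chi-square test on a 2 × 2 table. The Python sketch below assumes no continuity correction and one degree of freedom, which is an inference from the reported statistics rather than a detail stated in the text; the function name is hypothetical. For one degree of freedom, the p-value follows from the complementary error function.

```python
import math


def chi2_two_proportions(k1, n1, k2, n2):
    """Pearson chi-square test (df = 1, no continuity correction).

    k1 of n1 neurons are significant in condition 1, k2 of n2 in condition 2.
    Returns (chi-square statistic, p-value).
    """
    a, b = k1, n1 - k1  # condition 1: significant / not significant
    c, d = k2, n2 - k2  # condition 2: significant / not significant
    n_total = n1 + n2
    chi2 = n_total * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


if __name__ == "__main__":
    # Sound period: 194/701 (hit vs miss) against 211/701 (CR vs FA).
    print(chi2_two_proportions(194, 701, 211, 701))
    # Answer period: 436/701 against 363/701.
    print(chi2_two_proportions(436, 701, 363, 701))
```

Applied to the neuron counts given above, this sketch returns statistics close to the reported χ2(1) = 1.00 and χ2(1) = 15.51 for the sound and answer periods.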

The above differences between correct and incorrect outcomes could reflect an all-or-none asymmetry in mice's licking during the 1 s answer period of Go and No-Go trials. If outcome selectivity arises due to the binary presence or absence of licking activity in the answer period, few neurons should have significant fluorescence differences in the answer period of hit and false alarm trials. These outcomes are defined by the presence of licks in the answer period, with mice's anticipatory licking behavior in the preceding sound cue period being similar (Fig. 1H,I). Presound baseline differences approximated the expected false-positive rate (3/701, 0.4%), and 14% (98/701) were significantly different during sound presentation. However, 42% (293/701) of neurons had significant fluorescence differences during the answer period of hit and false alarm trials (Fig. 4H, green). Thus, trial outcome–related differences do not solely reflect the all-or-none presence of licks in the answer period. Rather, differential neural activity may be due to outcome-specific differences in mice's lick patterns or potentially the behavioral consequences of licking actions.

Indeed, an important caveat is that mice made more licks on hits than false alarm trials (7.14 ± 1.78 vs 3.87 ± 1.80 licks/s for hit and false alarm trials, respectively; Wilcoxon rank sum test, p = 5.6 × 10−187, Fig. 1I). Thus, any differential activity may reflect differences in mice's number and/or rate of licks rather than information related to trial outcome. Assuming that lick number, frequency, and/or duration monotonically influences neural activity, normalizing the trial outcome–specific fluorescence values by these parameters should reduce the fraction of neurons with significantly different activity on hit and false alarm trials. In other words, if the trial outcome–specific ΔF/F values are roughly linearly proportional to trial-specific licking patterns, dividing the fluorescence magnitude by the lick parameters on each trial should minimize the difference between hit and false alarm trials. In contrast, we expect this procedure should have minimal effect on the proportion of significant neurons if fluorescence values are largely independent of trial outcome–specific differences in licking patterns. We thus recalculated the proportion of neurons with significant fluorescence differences on hit and false alarm trials, after dividing the fluorescence traces by either the number of licks during the 1 s answer period or the slope of the lick histogram during the 1 s answer period on a trial-by-trial basis. Normalized data revealed a similar proportion of neurons with different activity on hit and false alarm trials compared with non-normalized values (normalized to number of licks, 35%, 247/701 significant; chi-square test compared with non-normalized, χ2(1) = 6.37, p = 0.186; normalized to slope, 39%, 273/701 significant, chi-square test compared with non-normalized, χ2(1) = 1.19, p = 0.276). 
In contrast, normalizing the fluorescence values to the full-width at half-maximum of lick histograms, thereby attempting to account for the differential duration of mice's lick bouts across hit and false alarm trials, significantly increased the proportion of neurons with significantly different trial outcome responses (65%, 459/701 significant, chi-square test compared with non-normalized data, χ2(1) = 8.17, p = 0.004). Altogether, these results suggest that trial outcome–related activity may not be fully explained by differences in mice's licking patterns during the answer period. However, an important caveat is that these analyses assume that the relationship between mice's licking behavior and single-neuron activity is not saturated on false alarm trials; a change in licking behavior on hits is assumed to cause a proportional change in fluorescence values. If this assumption does not hold true, then the increase in trial outcome–related activity upon normalizing for lick bout durations could also reflect a ceiling effect, whereby longer lick bouts on hit trials do not meaningfully increase firing rates compared with false alarm trials.

Differential activity on hit and false alarm trials could also reflect distinct sound offset responses due to the different sound cues employed on the Go and No-Go trials. This hypothesis predicts that a similar proportion of neurons should have significantly different activity on miss and correct rejection trials, where mice hear equally distinct sound cues as on hit and false alarm trials but do not lick (Fig. 4H, orange). As expected, baseline differences on miss and correct rejection trials were within the margin of error (0.3%, 2/701). In contrast, the percentage of neurons with significantly different activity during sound, answer, and post-answer periods was 6% (41/701), 9% (62/701), and 3% (18/701), respectively; these proportions are significantly lower than observed when comparing hit and false alarm trials (chi-square tests; sound, χ2(1) = 92.55, p = 6.555 × 10−22; answer, χ2(1) = 33.49, p = 7.165 × 10−9; post-answer, χ2(1) = 27.40, p = 1.651 × 10−7). Thus, the data suggest that the trial outcome–selective responses of shell IC neurons observed under our conditions are unlikely to solely reflect sound offset or differential licking patterns on rewarded and unrewarded trials.

Neural population trajectories are modulated by behavioral outcome

Our results thus far show that individual shell IC neurons transmit task-dependent and trial outcome–specific information following sound termination, although the extent of such activity varies in magnitude across neurons. We thus asked whether task-related information is more robustly represented in population-level dynamics of shell IC activity, rather than at the single-neuron level. To this end, we investigated the trajectories of neural population activity across trials. Neural trajectories are a simple way to express the network state of multineuronal data and have been used in the past to compare the time-varying activity of neuronal ensembles across different experimental conditions (Stopfer et al., 2003; Briggman et al., 2005; Churchland et al., 2007). If task-related information is indeed transmitted via a population code, a network state difference should be observable for different trial outcome conditions. Using only the first multi-sAM session from each mouse, we first computed a principal component analysis (PCA) of the ΔF/F traces on a timepoint-by-timepoint basis to reduce the dimensionality (Fig. 5A–C). The change of the principal components over time was then defined as a neural trajectory. We generally observed a deviation of neural trajectories for Go trials (Fig. 5B) depending on the trial outcome that we did not always observe for No-Go trials (Fig. 5C). Surprisingly, this trial outcome–specific trajectory divergence occurred immediately following sound onset, suggesting a difference in population activity during sound presentation. The difference between hit and miss trial trajectories could in principle reflect trial-specific differences in mice's licking during sound presentation. However, hit and false alarm trials have similar lick patterns during sound presentation (Fig. 1I); the initial trajectory differences during sound presentation of hit and false alarm trials should be similar if mice's licking was driving such trial outcome–specific trajectories. Yet hit and false alarm trajectories often appeared to diverge during sound presentation (Fig. 5B,C), further suggesting that population activity varies as a joint function of sound cues and mice's actions.
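The trajectory computation described above can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code used for Figure 5: it assumes trial-averaged ΔF/F matrices per outcome, a PCA fitted jointly across outcomes via singular value decomposition, and three retained components. The function name `pca_trajectories` and the synthetic data are hypothetical.

```python
import numpy as np

def pca_trajectories(mean_traces, n_components=3):
    """Project trial-averaged dF/F onto shared principal components.

    mean_traces: dict mapping outcome name -> array (n_timepoints, n_neurons).
    Returns (trajectories, explained_variance_ratio).
    """
    labels = list(mean_traces)
    # Treat every timepoint of every outcome as one observation in neuron space.
    stacked = np.vstack([mean_traces[k] for k in labels])
    mu = stacked.mean(axis=0)
    # SVD of the centered matrix: rows of vt are the principal axes.
    _, s, vt = np.linalg.svd(stacked - mu, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    axes = vt[:n_components]
    trajectories = {k: (mean_traces[k] - mu) @ axes.T for k in labels}
    return trajectories, explained[:n_components]

# Synthetic example (not real data): 60 timepoints, 40 neurons, sound onset at index 20.
rng = np.random.default_rng(0)
n_time, n_neurons, onset = 60, 40, 20
step = (np.arange(n_time) >= onset).astype(float)
weights = rng.normal(size=n_neurons)
hit = np.outer(step, weights) + 0.05 * rng.normal(size=(n_time, n_neurons))
miss = 0.2 * np.outer(step, weights) + 0.05 * rng.normal(size=(n_time, n_neurons))

traj, explained = pca_trajectories({"hit": hit, "miss": miss})
# Distance between the two trajectories at each timepoint.
separation = np.linalg.norm(traj["hit"] - traj["miss"], axis=1)
print(separation[:onset].mean(), separation[onset:].mean())
```

Fitting one PCA across all outcomes places the trajectories in a common coordinate system, which is what allows them to be compared directly.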

To quantify the trial outcome divergence in ensemble activity, we computed the Euclidean distance between the principal components of correct and incorrect trials of the same trial category: hits versus misses and correct rejections versus false alarms on the Go and No-Go trials, respectively. We then weighted the principal components by their explained variance (weuΔ) and summed up the weighted Euclidean distances (ΣweuΔ) to compute the mean ΣweuΔ for Go and No-Go trials across mice and sessions (Fig. 5D). Weighted Euclidean distance curves were not significantly different between mice trained on the broadband noise = Go and sAM noise = Go task contingencies (n = 8 and 3 mice, respectively; N-way ANOVA, F(1,98) = 3.26, p = 0.0743), and data from both groups were pooled for these analyses.
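One possible reading of the summed weighted distance (ΣweuΔ) is sketched below. It assumes that the distance is taken separately along each principal component (where the Euclidean distance reduces to an absolute difference), multiplied by that component's explained variance ratio, and summed across components at each timepoint. The function name and the toy numbers are hypothetical.

```python
import numpy as np

def summed_weighted_distance(traj_correct, traj_incorrect, explained_variance_ratio):
    """Variance-weighted distance between two trajectories at each timepoint.

    traj_correct, traj_incorrect: arrays (n_timepoints, n_components).
    explained_variance_ratio: array (n_components,) used as weights.
    """
    # Per-component distance at each timepoint (1-D Euclidean distance).
    per_component = np.abs(np.asarray(traj_correct) - np.asarray(traj_incorrect))
    # Weight each component by its explained variance and sum over components.
    return per_component @ np.asarray(explained_variance_ratio)

# Toy trajectories with two components and three timepoints (illustrative values).
traj_hit = np.array([[0.0, 0.0], [1.0, 2.0], [3.0, 0.0]])
traj_miss = np.zeros((3, 2))
weights = np.array([0.75, 0.25])
curve = summed_weighted_distance(traj_hit, traj_miss, weights)
print(curve)  # [0.   1.25 2.25]
```

Weighting by explained variance keeps low-variance components, which mostly carry noise, from dominating the distance curve.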

On average, we found an increasing divergence during sound presentation of both Go (Friedman's test, χ2(7) = 57.85, p = 4.05 × 10−10) and No-Go sounds for correct versus incorrect trials (Friedman's test, χ2(7) = 22.94, p = 0.0017) and a slow rejoining of the trajectories toward the end of the trial (Fig. 5D, blue and red curves). This general time course recapitulates the effect seen in the raw PCA trajectories. Interestingly, a clear second phase of divergence was also seen 2–2.5 s after sound onset, immediately after the answer period ended. This second phase of divergence lasted for 1–2 s before converging. Both the first and second trajectory divergences were statistically significant, as confirmed by a post hoc Dunnett's test comparing the baseline difference at −1 s with the peak divergences at 1 and 3 s (Fig. 5D, bottom panel, p = 1.175 × 10−5 and 5.81 × 10−5 for Go trials; p = 2.167 × 10−6 and 0.006 for No-Go trials).

A primary difference between trial outcomes is mice's lick action. Since 440/701 (63%) single neurons were active on hits and false alarms (Fig. 4), we asked if the trajectory divergences could be explained by licking. To this end, we first computed the weighted Euclidean distance between the principal components of hit and false alarm trials (Fig. 5D, green curve). If licking during the sound is responsible for the trajectory divergences observed for Go and No-Go trials, the weighted Euclidean distances between hit/false alarm trajectories should be near zero during sound presentation; mice's licking patterns are similar at this time on these two trial outcomes (Fig. 1I). However, the weighted Euclidean distances of hit and false alarm trials varied across time (Fig. 5D, lower panel; Friedman's test, χ2(7) = 53.7, p = 2.6999 × 10−9), with significant divergence occurring during sound presentation. These results suggest that mice's licking does not explain trial outcome–specific neural population trajectories.

To better understand the role of lick activity, we next computed the PCA after aligning the ΔF/F traces to mice's first lick after sound onset until the end of the answer period (or the median first-lick time of a session in trials without licks; Figs. 1K, 5E,F). A trajectory divergence was similarly present in lick-aligned data, but divergence began before the first lick after sound onset and persisted for the entire recording period for both Go (Friedman's test, χ2(7) = 21.64, p = 0.0029) and No-Go trials (Friedman's test, χ2(7) = 22.94, p = 0.0017). In contrast, there was no significant divergence between hit and false alarm trials before the first lick after sound onset, but instead a prominent and prolonged late divergence 2 s later (Friedman's test, χ2(7) = 25.95, p = 0.0005). These results indicate that population trajectories may diverge slightly prior to the onset of lick bouts, implying that shell IC neurons transmit different activity patterns in close temporal alignment with mice's actions.

To further determine the extent to which our results reflect mice's licking patterns, we next cross-correlated the means of the lick histograms and weighted Euclidean distances on Go and No-Go trials, as well as all hit and false alarm trials (Fig. 5G). If the lick histogram correlates with the general curve or either of the two peaks from the sound-aligned data, we should observe one or two distinct and statistically significant maximum correlation time points (“lags”). Indeed, we found the maximum correlation for the weighted Euclidean distance curves of Go trials occurred at −0.29 s ± 0.37 s, for No-Go trials 0.07 s ± 0.96 s, and for hit/false alarm trials 0.09 s ± 1.28 s, indicating that the lick histogram correlates maximally with the neural population activity when aligned to sound. Moreover, the cross-correlation function is rather broad, and the correlation coefficient values are not significantly different at the 0 ms and maximum correlation lag index times for all three weighted Euclidean distance curves (three-way ANOVA, no main effect of lag time, F(1,131) = 0.54, p = 0.4637). These results suggest that licking dynamics and population trajectories are not closely synchronized at time scales that can be resolved via Ca2+ imaging.
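The lag analysis above can be illustrated with a short sketch of a normalized cross-correlation between two mean curves. The sign convention (a positive lag means the first argument is delayed relative to the second), the z-scoring, and the 50 ms sampling step are assumptions made for this example, and the Gaussian curves are synthetic stand-ins for a lick histogram and a distance curve.

```python
import numpy as np

def peak_cross_correlation(x, y, dt):
    """Return (lag of maximum correlation in s, r at that lag, r at zero lag).

    A positive lag means x is delayed relative to y.
    """
    x = (x - x.mean()) / x.std()
    y = (y - y.mean()) / y.std()
    n = len(x)
    # Full cross-correlation; index n - 1 corresponds to zero lag.
    xcorr = np.correlate(x, y, mode="full") / n
    lags = np.arange(-(n - 1), n)
    peak = int(np.argmax(xcorr))
    return lags[peak] * dt, xcorr[peak], xcorr[n - 1]

# Synthetic curves (not real data): the distance curve peaks 0.3 s after the lick histogram.
dt = 0.05
t = np.arange(-1.0, 5.0, dt)
lick_hist = np.exp(-0.5 * ((t - 1.0) / 0.2) ** 2)
distance = np.exp(-0.5 * ((t - 1.3) / 0.2) ** 2)

lag_s, r_peak, r_zero = peak_cross_correlation(distance, lick_hist, dt)
print(f"lag = {lag_s:.2f} s, r_peak = {r_peak:.2f}, r_zero = {r_zero:.2f}")
```

Comparing the correlation at the peak lag with the correlation at zero lag, as in the ANOVA above, indicates whether the peak is sharp enough to be meaningful.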

Since mice lick in bouts (A. W. Johnson et al., 2010), the first-lick timing should represent the onset of significant licking activity (Fig. 5F). If licking determines trajectory divergences, one expects a greater correlational structure in lick-aligned compared with sound-aligned data. However, Pearson's correlation coefficients measured after aligning the lick histogram and the ΔF/F traces to the first lick following sound onset are significantly lower than those measured from sound-aligned data for Go and No-Go Euclidean distance curves (Fig. 5H; Go, 0.48 ± 0.24 for sound aligned vs 0.22 ± 0.39 for lick aligned; No-Go, 0.53 ± 0.24 vs 0.16 ± 0.37; hit/false alarm, 0.22 ± 0.25 vs 0.14 ± 0.36; two-way ANOVA, main effect of ΔF/F alignment, F(1,65) = 9.53, p = 0.0031). The lack of significant difference across alignment types for the hit and false alarm condition may reflect a greater population variance compared with the Go and No-Go conditions, which could lower the correlation value for the sound-aligned curves. Nevertheless, the reduced correlation around the first-lick time argues that a trial outcome–dependent, time-varying divergence of population activity is not tightly time-locked to the onset of lick bouts. Rather, the initial divergence in population trajectories may reflect a trial outcome–dependent modulation of sound responses, potentially ramping activity related to reward anticipation (Metzger et al., 2006), or an intermediate task-dependent variable such as stimulus categorization (Xin et al., 2019). In contrast, the second divergence following the answer period may reflect a trial outcome–related signal that modulates shell IC neuron intertrial activity on a timescale of seconds.

An SVM classifier reliably decodes task-relevant information from shell IC population activity

Individual shell IC neurons were often broadly responsive to sAM depth (Fig. 3) and trial outcomes (Fig. 4). These single-neuron responses gave rise to prolonged, time-varying ensembles whose activity systematically varied with mice's instrumental choice (Fig. 5). Despite low individual neuron selectivity, task-dependent information might thus still be transmitted in population activity (Robotka et al., 2023). We tested this idea by training SVM classifiers to decode specific task-relevant variables (sAM depth, trial category [Go or No-Go], and lick responses) using integrated fluorescence activity from discrete 100 ms time bins along the trial (Fig. 6A). All SVMs were individually trained and tested, such that classifiers only used data from mice trained with BBN or sAM sounds as the Go cue but never both. There was no significant main effect of training contingency (three-way ANOVA, F(1,2,799) = 0.01, p = 0.9055), and thus we pooled the data of both groups. Decoding accuracy for all variables tested remained at chance level before sound onset, which is expected given that each trial's ΔF/F signal was normalized to the 2 s baseline period prior to sound presentation.
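The bin-wise decoding approach can be sketched as follows, using scikit-learn. This is an illustration only: the linear kernel, the regularization constant, the five-fold stratified cross-validation, and the label-shuffling estimate of chance level are assumptions for this example, since the classifier settings are not given in this section. The function name `decode_over_time` and the synthetic data are hypothetical.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

def decode_over_time(binned, labels, cv=5):
    """Cross-validated decoding accuracy for each time bin.

    binned: array (n_trials, n_bins, n_neurons) of integrated dF/F per bin.
    labels: array (n_trials,) with the variable to decode (e.g., Go vs No-Go).
    """
    accuracy = np.empty(binned.shape[1])
    for b in range(binned.shape[1]):
        # A separate classifier is trained and tested on each time bin.
        clf = SVC(kernel="linear", C=1.0)
        accuracy[b] = cross_val_score(clf, binned[:, b, :], labels, cv=cv).mean()
    return accuracy

# Synthetic example (not real data): information appears only from bin 5 onward.
rng = np.random.default_rng(1)
n_trials, n_bins, n_neurons, onset_bin = 80, 10, 20, 5
labels = np.arange(n_trials) % 2
binned = rng.normal(size=(n_trials, n_bins, n_neurons))
binned[:, onset_bin:, :5] += 2.0 * labels[:, None, None]

accuracy = decode_over_time(binned, labels)
# Chance level estimated by decoding shuffled labels from the same data.
chance = decode_over_time(binned, rng.permutation(labels))
print(np.round(accuracy, 2))
print(np.round(chance, 2))
```

In this toy example, accuracy stays near chance before the simulated onset and rises afterward, mirroring the expected behavior of baseline-normalized data.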

We first trained the classifiers to decode sAM depth and tested if population activity transmits discriminative acoustic features above chance level. The maximum classification accuracy reached was 31 ± 7% at 1.1 s after sound onset (Fig. 6B, top), thereby modestly but significantly exceeding the chance level accuracy obtained from shuffled data by 14% (Friedman's test, χ2(8) = 70.47, p = 3.96 × 10−12). Conversely, sAM depth could not be decoded at all when the classifier only had access to the fluorescence data from 100 ms preceding the first lick after sound presentation (“first-lick accuracy”), and the classifier resorted to classifying everything as BBN (accuracy, 19 ± 4%; Fig. 6B, bottom). However, an important consideration is that in our experiments, sAM depth is correlated with trial category (Go or No-Go depending on training contingency), which is, in turn, correlated with mice's lick probability. To ensure that the sAM depth classifier did not simply reflect the differential behavioral significance of task-relevant sounds, we asked if classification accuracy was maintained when testing was restricted to only modulated stimuli. Under these conditions, maximum classification accuracy peaked at 41 ± 8%, 1.0 s after sound onset (Fig. 6B, middle; 22% increase compared with shuffled data; Friedman's test, χ2(8) = 78.52, p = 9.70 × 10−14). First-lick accuracy remained near chance level (23 ± 4%). These results indicate that despite a rather broad sAM depth selectivity at the single-neuron level, population codes might nevertheless transmit sufficient information to aid in discerning absolute sAM depth.

SVM classifiers also performed significantly above chance level when decoding the trial category (Friedman's test, χ2(8) = 72.58, p = 1.50 × 10−12). Interestingly, the average accuracy-over-time curve showed two separate local maxima (Fig. 6C): The first plateau peaked at 70 ± 6% 1.1 s following sound onset and overlapped with sound presentation during the trial. This may reflect sound-driven information, as it is the earliest signal available for classification. Additionally, any purely lick-related activity during the sound period presumably carries limited information about trial category: Mice's licking during sound presentation is similar across specific outcomes of different trial categories, e.g., hit versus false alarm, miss versus correct rejection trials (Fig. 1I). The second accuracy peak rose during the answer period and reached a plateau of 82 ± 6% at 2.7 s (31% over chance level), suggesting that IC activity remains informative about trial category across the post-answer period. First-lick accuracy for the trial category was also significantly above chance level (76 ± 3%), suggesting that exact sAM depth, which is not decodable from first-lick data, is not necessary for the classification of the trial category from shell IC neuron activity.