Abstract

With the rapid advancement of computer vision, machine learning, and consumer electronics, eye tracking has attracted increasing interest in recent years. It plays a key role across diverse domains, including human–computer interaction, virtual reality, and clinical and healthcare applications. Near-eye tracking (NET) has recently matured to offer encouraging features such as wearability, affordability, and interactivity. These features have drawn considerable attention in the health domain, as NET provides accessible solutions for long-term, continuous health monitoring and a comfortable, interactive user interface. Herein, we present the first concise review of NET for health, encompassing approximately 70 related articles published over the past two decades, supplemented by an in-depth examination of 30 articles from the preceding five years. This paper analyzes health-related NET technologies in terms of technical specifications, data processing workflows, and practical advantages and limitations. In addition, specific applications of NET are introduced and compared, revealing that NET is increasingly influencing our lives and providing significant convenience in daily routines. Lastly, we summarize the current outcomes of NET and highlight its limitations.

Keywords: near eye tracking, video oculography, infrared oculography, electrooculography

1. Introduction

Tracking eye gaze is significant in various fields, such as human–computer interaction (HCI) [1], virtual reality (VR) [2,3], driver monitoring systems [4,5], and clinical studies [6,7,8,9], and eye-gaze-tracking techniques have evolved over decades. Based on the distance between the camera and the user, eye-tracking technologies can be categorized into remote (>10 cm) and near-eye tracking (NET) (<10 cm) scenarios. In remote settings, images are generally captured by cameras or webcams and require analysis of the eye region [10] or the whole face region [11]. Conversely, NET focuses solely on the eye region and captures eye movements with glasses or head-mounted devices. NET devices are typically fixed relative to the eyes and capture movements at close range, which minimizes the impact of head movements and environmental changes. In contrast, remote eye tracking must extract the eye gaze from complex backgrounds and manage variations in head pose. Furthermore, NET devices generally allow users to move freely [12], whereas remote eye tracking often restricts user activity, for example by requiring users to sit in front of the camera [12]. Although head-mounted NET systems are more intrusive, they offer superior accuracy compared to remote video-based techniques [13]. Consequently, NET is potentially more feasible for translational applications such as stroke assessment [14] and surgical assistance [15], especially when integrated into VR or augmented reality (AR) systems [16].

The techniques for NET have evolved from early invasive stick pointers [17] and the scleral search coil (SSC) [18] to non-invasive approaches such as electrooculography (EOG) [19], infrared oculography (IOG or IROG), and video oculography (VOG). Table 1 compares these eye-tracking methods in terms of cost, wearability, invasiveness, and accuracy. Non-invasive methods largely eliminate the need for specialized preparation and equipment and reduce the associated risks and discomfort for users.

Among these non-invasive NET methods, EOG offers high robustness and low power consumption, making it suitable for long-term health monitoring devices [20]. IOG provides high-precision, detailed eye movement data, which could support the diagnosis and regular monitoring of neurological diseases [21]. However, high-quality infrared cameras and related sensors for IOG are expensive. In contrast, VOG achieves a good balance between cost and performance and is suitable for evaluating eye movements and recording eye appearance [22].

Table 1.

| Method | Cost | Portability/Wearability | Invasiveness | Accuracy |
|---|---|---|---|---|
| Stick pointer | Low-cost | Portable | Invasive | Lower |
| SSC | Expensive | Benchtop | Invasive | High |
| EOG | Moderate cost | Portable | Non-invasive | High |
| IOG | Moderate to high-cost | Portable | Non-invasive | High |
| VOG | Moderate cost | Wearable | Non-invasive | High |

Several literature reviews on NET have been published in recent years [24,25,26,27,28,29,30,31,32]. They provide a comprehensive understanding of eye-tracking (ET) technology from diverse perspectives, such as attentional research [24], VR [25], information selection [26], emotion recognition [27,28], and consumer platforms [29]. In particular, several reviews offer detailed insight into health-related disciplines, covering endoscopy [30], surgical research [31], and radiological image interpretation [32]. Despite the continuous development and use of NET in health-related domains, a dedicated review covering the current progress and applications of NET for health is still lacking.

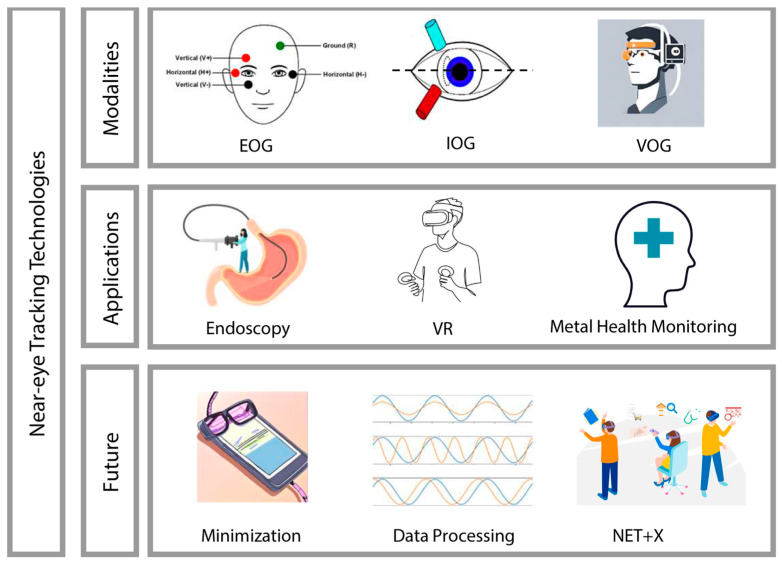

Therefore, this review synthesizes articles on health-related NET and provides a detailed overview of its technology, applications, and future directions. To conduct this review, we searched major academic databases, including PubMed, IEEE Xplore, and Google Scholar. Our search strategy combined the following keywords: (“near eye-tracking” OR “NET”) AND (“wearable technology” OR “wearable devices” OR “health monitoring”) AND (“video oculography” OR “VOG” OR “infrared oculography” OR “IOG” OR “electrooculography” OR “EOG” OR “eye movement tracking”). This approach initially identified more than 70 related articles published over the past two decades. The literature was then screened and reviewed using explicit criteria tailored to the scope of our study. These criteria included the relevance of the studies to health-related domains, covering both clinical applications and healthcare. We specifically selected literature focused on NET technologies and excluded studies of remote eye tracking, where the distance exceeds 10 cm. Following this selection process, we performed a more focused review of 25 articles from the past two decades, examining the details of their devices and features. An overview of wearable near-eye tracking technologies for health is shown in Figure 1.

Figure 1.

Overview of wearable near-eye tracking technologies for health.

2. State of the Art in Wearable NET Technologies

As outlined in Section 1, non-invasive eye-tracking techniques can be classified by their signal sources into VOG, IOG, and EOG. We herein explore the underlying principles, distinctive characteristics, and medical benefits of these technologies.

2.1. Video Oculography

A VOG setup comprises a video camera that records eye movements using either visible or infrared light coupled with a computer that stores and analyzes the gaze data [23].

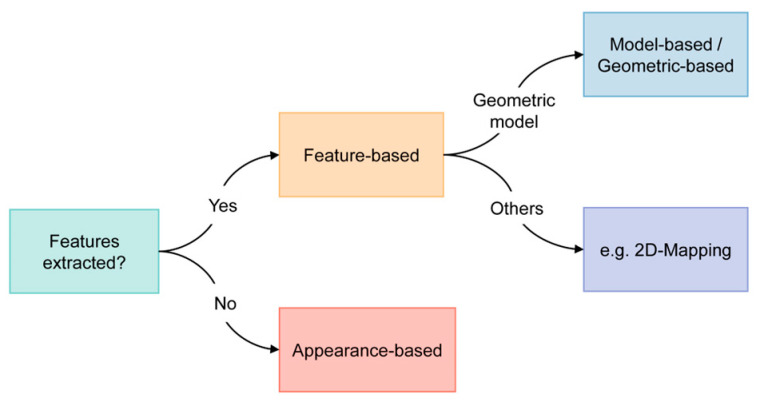

Based on existing reviews and literature, there are three main categories for methods in VOG: the feature-based, appearance-based, and model-based methods. However, our review revealed that the definitions and boundaries between these concepts are somewhat ambiguous, and these methods are often used in conjunction to fully leverage the acquired image or video data. Given this overlap and to streamline the classification, we propose categorizing these methods into two distinct groups: feature-based and appearance-based (Figure 2). It is noteworthy that the majority of the studies we reviewed employed the feature-based approach.

Figure 2.

Classification criteria of wearable NET technologies discussed in this review.

Feature-based eye tracking relies on identifying and tracking specific features or landmarks of the eye, which are often reflected by intensity levels or intensity gradients [13]. This approach is often precise and robust under controlled conditions, but it may require careful calibration and is sensitive to lighting conditions and occlusions. In contrast, appearance-based eye tracking captures and analyzes the overall appearance of the eye, which makes it more robust to visual disturbances and more suitable for real-world applications. However, it may require larger amounts of training data and computational resources.

2.1.1. Feature-Based Eye Tracking

The initial stage in feature-based eye tracking is to extract relevant features, which often include pupil size, saccades, fixations, velocity, blinks, and pupil position [33]. The extracted eye features are then used to calculate the gaze point.
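To make the feature-extraction step concrete, the sketch below estimates two of the listed features, pupil position and pupil size, from a single grayscale eye image using simple dark-pupil thresholding. This is an illustrative assumption rather than the method of [33]: practical trackers typically add ellipse fitting, corneal-glint removal, and blink handling, and the threshold value used here is hypothetical.

```python
import math


def pupil_features(image, threshold):
    """Estimate pupil centre (x, y) and equivalent diameter in pixels.

    image: list of rows of grayscale intensities (0 = black, 255 = white).
    threshold: hypothetical cut-off; pixels darker than this count as pupil.
    Returns None when no pupil pixels are found (e.g. during a blink).
    """
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value < threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    area = len(xs)
    cx = sum(xs) / area
    cy = sum(ys) / area
    # Diameter of a circle whose area equals the thresholded pixel count.
    diameter = 2.0 * math.sqrt(area / math.pi)
    return cx, cy, diameter


# Synthetic 7x7 image with a dark 3x3 "pupil" centred at (3, 3).
eye = [[200] * 7 for _ in range(7)]
for y in range(2, 5):
    for x in range(2, 5):
        eye[y][x] = 20

features = pupil_features(eye, threshold=50)
closed_eye = pupil_features([[200] * 7 for _ in range(7)], threshold=50)
```

Tracking these values frame by frame yields the pupil-position and pupil-size time series from which saccades, fixations, and blinks are subsequently derived.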

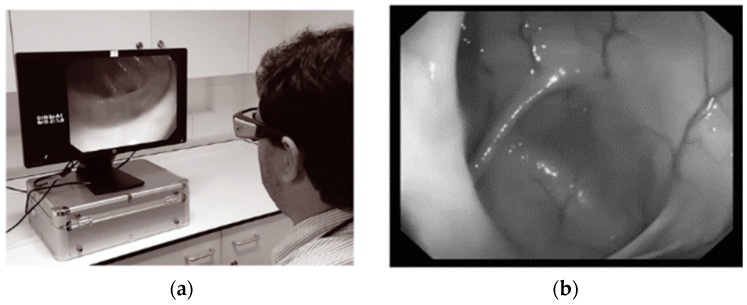

A number of NET studies use VOG to investigate differences between participant groups. For example, [15] validated differences in visual control strategies between experts and beginners in a virtual display of urethral prostatectomy. In [34], the first study to analyze visual gaze during actual esophagogastroduodenoscopy, gaze patterns were visualized using heatmaps, and metrics such as observation time (OT), fixation duration (FD), and the FD-to-OT ratio were obtained. This study characterizes specific visual gaze patterns of endoscopists in real practice, which might find applications in medical education and training. Another study revealed that VOG can differentiate the visual gaze patterns of experienced and novice endoscopists, highlighting its potential as a powerful training tool for novice colonoscopists [35]. The analysis of gaze patterns provided insights into why adenomas are often overlooked at the hepatic flexure during colonoscopy. By establishing efficient search patterns and minimizing variability in adenoma detection rates, this study lays the groundwork for improving colonoscopy training and performance.
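The OT, FD, and FD-to-OT metrics can be sketched as follows, assuming FD is the total duration of detected fixations inside an observation window and OT is the length of that window; [34] may define these quantities differently, and the `Fixation` type and example timings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    """A detected fixation, with start and end times in milliseconds."""
    start_ms: float
    end_ms: float


def fd_ot_ratio(fixations, window_start_ms, window_end_ms):
    """Return (FD, OT, FD/OT) for one observation window (assumed definitions)."""
    ot = window_end_ms - window_start_ms
    if ot <= 0:
        raise ValueError("observation time must be positive")
    fd = 0.0
    for fix in fixations:
        # Clip each fixation to the window so partial overlaps count correctly.
        start = max(fix.start_ms, window_start_ms)
        end = min(fix.end_ms, window_end_ms)
        fd += max(0.0, end - start)
    return fd, ot, fd / ot


# Hypothetical fixations; the last one runs past the end of the window.
fixations = [Fixation(0, 300), Fixation(400, 900), Fixation(1800, 2200)]
fd, ot, ratio = fd_ot_ratio(fixations, 0, 2000)
```

Here FD is 1000 ms of a 2000 ms window, giving a ratio of 0.5; a higher ratio indicates that more of the observation time was spent fixating rather than moving the eyes.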

Other features used in disease-related studies include motion velocity and acceleration, as well as average viewing times, contrast, and saliency values for fixations made to different regions, which can be computed with custom software [36]. Additionally, a study using WearCam, a wearable wireless camera, monitored focused attention in young children during play [37]. This VOG method captures gaze direction and duration to analyze attention patterns and additionally performs color detection and face detection, potentially enabling the early detection of developmental disorders such as autism.
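Region-based viewing times such as those above can be approximated by assigning each gaze sample to a rectangular area of interest (AOI) and accumulating the sampling period. The sketch assumes a fixed sampling rate and non-overlapping rectangular regions; the region names and coordinates are hypothetical, and average times per visit could be derived from the same assignment.

```python
def dwell_times(samples, regions, sample_period_ms):
    """Total viewing time (ms) per rectangular AOI.

    samples: (x, y) gaze points recorded at a fixed sampling period.
    regions: mapping of AOI name -> (x_min, y_min, x_max, y_max);
             assumed non-overlapping, so each sample counts at most once.
    """
    totals = {name: 0.0 for name in regions}
    for x, y in samples:
        for name, (x0, y0, x1, y1) in regions.items():
            if x0 <= x <= x1 and y0 <= y <= y1:
                totals[name] += sample_period_ms
                break
    return totals


# Hypothetical scene-camera AOIs and 50 Hz gaze samples (20 ms apart).
aois = {"face": (0, 0, 100, 100), "toy": (200, 0, 300, 100)}
gaze = [(50, 50), (60, 40), (250, 50), (500, 500)]
viewing = dwell_times(gaze, aois, sample_period_ms=20.0)
```

The last sample falls outside both regions and is ignored, so the result attributes 40 ms to the face and 20 ms to the toy.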

Geometric-based method

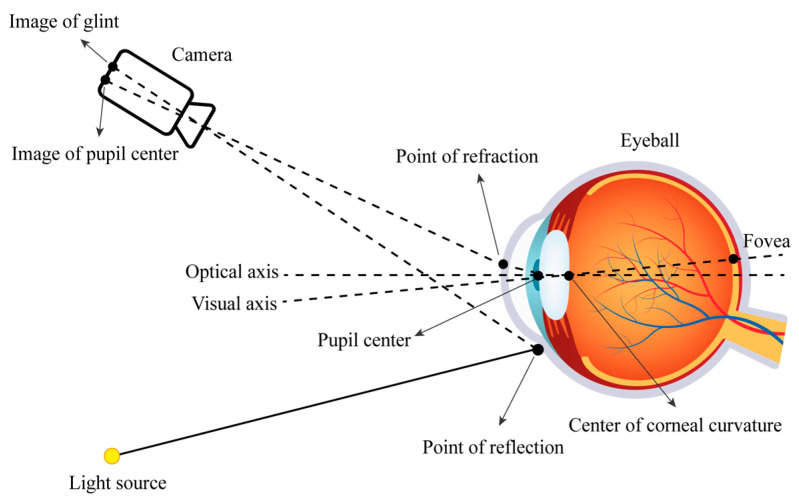

The eye-tracking method based on a geometric model of the human eye estimates the 3D gaze direction by relying on invariant facial features [38]. The gaze point is then estimated from the resulting line-of-sight direction vector together with information about the scene [39]. A schematic of the camera, eyeball, and light source is shown in Figure 3.

Figure 3.

Diagram of the camera, eyeball, and light source.
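The last step of the geometric approach, turning a line-of-sight vector into a gaze point, can be sketched as a ray-plane intersection. This assumes the scene is a flat surface (for example, a display) at a known pose in the same 3D coordinate frame as the eye; estimating the gaze vector itself from the pupil and corneal reflections is outside the scope of this sketch.

```python
def _dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def gaze_point_on_plane(origin, direction, plane_point, plane_normal):
    """Intersect the line of sight origin + t * direction (t >= 0) with a plane.

    All vectors are 3-tuples in one shared coordinate frame (assumed, e.g. mm).
    Returns None if the gaze is parallel to the plane or the plane is behind the eye.
    """
    denom = _dot(plane_normal, direction)
    if abs(denom) < 1e-12:
        return None
    offset = tuple(p - o for p, o in zip(plane_point, origin))
    t = _dot(plane_normal, offset) / denom
    if t < 0:
        return None
    return tuple(o + t * d for o, d in zip(origin, direction))


# Eye at the origin looking slightly right; a display plane 500 mm ahead (z = 500).
point = gaze_point_on_plane((0.0, 0.0, 0.0), (0.1, 0.0, 1.0),
                            (0.0, 0.0, 500.0), (0.0, 0.0, 1.0))
behind = gaze_point_on_plane((0.0, 0.0, 0.0), (0.0, 0.0, -1.0),
                             (0.0, 0.0, 500.0), (0.0, 0.0, 1.0))
```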

The application of geometric-based methods in medical and healthcare fields is relatively limited due to the complexity of the models and their potential lack of generalizability, making them less suitable for widespread clinical use. However, these methods are more applicable and prevalent in research focused on eye diseases and specialized surgical applications, where detailed anatomical modeling is crucial.

Others (non-geometric-based methods)

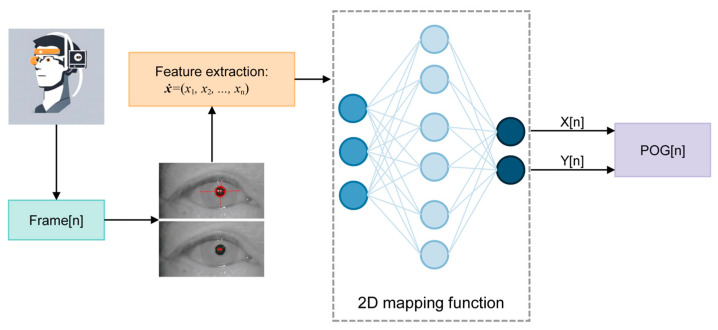

Having discussed geometric (model-based) methods and their drawbacks, we now turn to mapping methods, which provide an advantageous alternative. These methods are simpler to implement, do not require additional hardware calibration, and allow quicker setup, which greatly enhances user convenience [40]. Consequently, most commercial gaze-tracking systems adopt feature-based 2D mapping methods with IR cameras and active IR illumination to ensure precise gaze estimation, as shown in Figure 4.

Figure 4.

Diagram of a standard eye tracker with a 2D mapping method. (POG: point of gaze) Adapted with permission from [40].
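A common way to realize such a 2D mapping is a second-order polynomial regression from the pupil-glint vector (x, y) to screen coordinates, fitted by least squares on a short calibration such as nine fixation targets. The polynomial form and the synthetic calibration data below are assumptions for illustration; the system in [40] may use a different mapping.

```python
def _terms(x, y):
    """Second-order polynomial terms of a pupil-glint vector."""
    return [1.0, x, y, x * y, x * x, y * y]


def _solve(matrix, rhs):
    """Solve a small square linear system by Gaussian elimination with pivoting."""
    n = len(rhs)
    a = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def fit_mapping(eye_points, screen_points):
    """Least-squares fit (normal equations) of one polynomial per screen axis."""
    rows = [_terms(x, y) for x, y in eye_points]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    coeffs = []
    for axis in range(2):
        atb = [sum(r[i] * s[axis] for r, s in zip(rows, screen_points)) for i in range(k)]
        coeffs.append(_solve(ata, atb))
    return coeffs


def map_gaze(coeffs, x, y):
    """Map a pupil-glint vector to an estimated point of gaze on the screen."""
    t = _terms(x, y)
    return tuple(sum(c * v for c, v in zip(axis_coeffs, t)) for axis_coeffs in coeffs)


# Synthetic 9-point calibration: pupil-glint vectors on a 3x3 grid, with screen
# targets generated from a known (hypothetical) polynomial so the fit can be checked.
grid = [(x, y) for y in (-1.0, 0.0, 1.0) for x in (-1.0, 0.0, 1.0)]
targets = [(960 + 400 * x + 20 * x * y, 540 + 300 * y + 10 * x * x) for x, y in grid]
coeffs = fit_mapping(grid, targets)
estimate = map_gaze(coeffs, 0.5, -0.5)
```

Because the mapping is fitted per user from a few fixations, no physical model of the eye or camera geometry is needed, which is the convenience described above; the trade-off is that accuracy degrades when the headset slips relative to the calibration pose.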

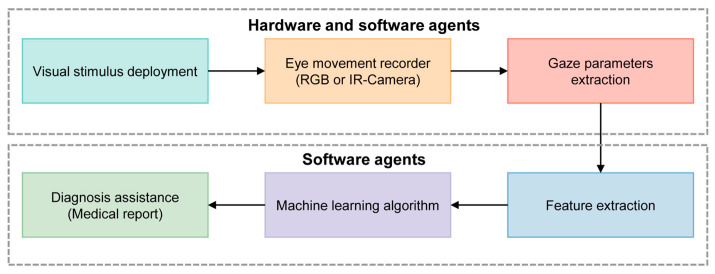

Figure 5 shows a typical processing pipeline for diagnosing cognitive impairment using machine learning (ML) algorithms. First, visual stimuli are presented to provoke eye movements, which are captured by a camera acting as an eye movement recorder. Software then extracts eye movement metrics from these recordings, and ML algorithms analyze the resulting features to detect abnormal patterns indicative of cognitive impairment. Finally, the findings are compiled into a detailed diagnostic report.

Figure 5.

Scheme of the ML concept applied to the diagnosis of cognitive impairment using an automatic video-oculography register. Adapted with permission from [41].
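The classification and reporting stages of this pipeline can be sketched with a nearest-centroid classifier, chosen here only as the simplest stand-in for the ML algorithms used in [41]. The feature names and values are synthetic and hypothetical; a real pipeline would standardize features, use a validated classifier, and evaluate it on held-out subjects.

```python
from statistics import mean


def train_centroids(features_by_label):
    """Compute one mean feature vector (centroid) per diagnostic label."""
    return {label: [mean(col) for col in zip(*vectors)]
            for label, vectors in features_by_label.items()}


def classify(centroids, features):
    """Assign the label whose centroid is nearest in squared Euclidean distance."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sq_dist(centroids[label], features))


def report(subject_id, features, centroids):
    """Compile a minimal, machine-readable report entry for one subject."""
    return {"subject": subject_id, "features": features,
            "predicted_group": classify(centroids, features)}


# Synthetic, hypothetical feature vectors: [saccade latency (s), fixations per second].
training = {
    "control": [[0.20, 3.0], [0.22, 3.2]],
    "impaired": [[0.35, 2.0], [0.33, 2.2]],
}
model = train_centroids(training)
entry = report("S01", [0.30, 2.3], model)
```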

2.1.2. Appearance-Based Eye Tracking

The development of ML algorithms in computer vision has facilitated the emergence of appearance-based approaches to gaze estimation. Unlike analytical models, these methods rely on large datasets and statistical models to construct the mapping function [23]. As a consequence, they depend on sufficient training data rather than on a deep understanding of the underlying theory.

Appearance-based eye tracking directly analyzes raw eye images captured by cameras, treating gaze estimation as an image regression problem [42]. Appearance-based methods offer notable advantages in handling intricate image features and coping with variations in lighting conditions [43]. Because they rely on extensive training data rather than an in-depth theoretical model to achieve accuracy and robustness, these data-driven techniques allow more flexible and potentially more reliable assessments across diverse patient populations and environments. Nevertheless, they are resource-intensive and may face scalability issues, such as difficulty accommodating variations in head pose and other confounding factors.
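The idea of gaze estimation as image regression can be illustrated with k-nearest-neighbour regression on raw pixels, one of the simplest appearance-based estimators; the learned models used in practice (e.g. convolutional networks) replace the pixel distance with learned features. The sketch assumes eye images that are already cropped, aligned, and equally sized, and its toy images and labels are hypothetical.

```python
def knn_gaze(train_images, train_gazes, query_image, k=3):
    """Predict a gaze label (x, y) for query_image by averaging the labels of the k
    training images closest to it in pixel-wise squared distance.

    Images are flattened lists of intensities, assumed cropped and aligned to the
    same eye region and size.
    """
    def distance(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))

    ranked = sorted(range(len(train_images)),
                    key=lambda i: distance(train_images[i], query_image))
    nearest = ranked[:k]
    gx = sum(train_gazes[i][0] for i in nearest) / len(nearest)
    gy = sum(train_gazes[i][1] for i in nearest) / len(nearest)
    return gx, gy


# Toy 2x2 "eye images" (flattened): the dark pixel's position stands in for
# where the pupil sits, and each image is labelled with a gaze angle in degrees.
images = [[0, 255, 255, 255], [255, 0, 255, 255],
          [255, 255, 0, 255], [255, 255, 255, 0]]
gazes = [(-10.0, 10.0), (10.0, 10.0), (-10.0, -10.0), (10.0, -10.0)]
prediction = knn_gaze(images, gazes, [10, 250, 255, 255], k=1)
```

No eye model appears anywhere in the code; accuracy depends entirely on how densely the training images cover the gaze range, which is why these methods need large datasets.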

2.2. Infrared Oculography

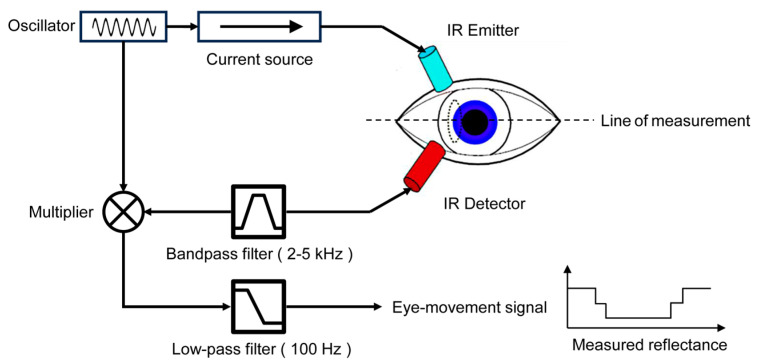

IOG is an eye-tracking method that measures the intensity of infrared light reflected from the sclera, the white part of the eye, to gather information about eye position. This method often involves the use of a wearable device, such as a pair of glasses equipped with an infrared light source. The IR light source illuminates the eye, and the changes in the reflected light can be captured with detectors and analyzed to determine eye movement and position, as shown in Figure 6.

Figure 6.

Eye movement measurement using IROG, adapted with permission from [44].
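A frequently used IOG arrangement places two infrared photodetectors on either side of the eye; as the eye rotates, the bright sclera and darker iris shift under the detectors, so the normalized difference of the two readings varies approximately linearly with horizontal eye angle over a limited range. The two-detector layout, sign convention, and calibration readings below are assumptions for illustration, not taken from [44].

```python
def normalized_difference(left, right):
    """Differential IR signal: (right - left) / (right + left), in [-1, 1]."""
    total = left + right
    if total <= 0:
        raise ValueError("no reflected infrared light detected")
    return (right - left) / total


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


# Hypothetical calibration: detector readings while fixating targets at -10, 0, +10 deg.
calibration = [(60.0, 40.0), (50.0, 50.0), (40.0, 60.0)]
angles = [-10.0, 0.0, 10.0]
gain, offset = fit_line([normalized_difference(left, right)
                         for left, right in calibration], angles)

# Convert a new reading into a horizontal eye angle (about +5 deg here).
angle = gain * normalized_difference(45.0, 55.0) + offset
```

Normalizing by the summed intensity makes the estimate less sensitive to overall changes in illumination power, consistent with the robustness to lighting described above.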

IOG is particularly advantageous under varying or low light conditions because it uses infrared light, which is “invisible” to the human eye and thus does not distract subjects. Its resilience to ambient lighting variations ensures reliable measurements regardless of external light conditions. Its unobtrusiveness and accuracy also extend its utility to scenarios such as driver fatigue monitoring [45] and neuroscience [46], where natural behavior and uninterrupted observation are critical.

In one study, researchers used both 3D and 2D methods to analyze the gaze patterns of patients with superior oblique myokymia [47]. Patients were asked to maintain primary gaze and to look at various eccentric gaze positions, while saccade amplitudes and velocities were measured. The results highlight the potential of IOG for better understanding superior oblique myokymia and suggest that specific medications might help manage its symptoms, opening new avenues for developing effective therapies. Besides general gaze patterns, gaze behavior toward faces can also be recorded, as in [48], which used IOG to investigate facial visual attention deficits in individuals with schizophrenia and identified specific patterns of fixations and saccades. Another study used a portable real-time IOG monitoring system that measured the eyelids, iris, and blinks to enhance the clinical diagnosis of eyelid ptosis [49]. Using infrared eye tracking, the system captured key features such as blink patterns and eyelid behaviors in real time. These metrics provided novel diagnostic markers for patients with myasthenia gravis, opening new avenues for clinical investigation of various eyelid movement disorders.

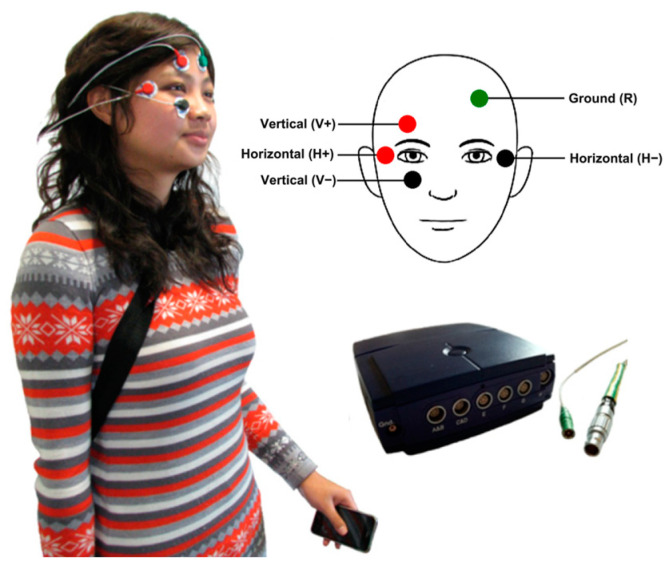

2.3. Electrooculography

EOG is a technique widely used in NET that measures the cornea-positive standing potential relative to the back of the eye, typically the retina. An EOG system captures these changes in electric potentials using electrodes placed around the eyes—typically above and below the eye for vertical movements, and on the sides for horizontal movements, as shown in Figure 7. These voltage differences can be translated into data that indicate the direction and amplitude of eye movements.
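The translation from electrode voltages to eye movements can be sketched with the usual bipolar derivations: horizontal = right minus left electrode and vertical = above minus below, so that rotating the cornea toward an electrode makes its channel positive. The calibration factor below (16 µV per degree) is an assumed, illustrative value; real EOG sensitivity varies between people and sessions and must be calibrated. Combining the two channels into an amplitude and direction treats small rotations as a planar displacement, which is an approximation.

```python
import math


def eog_gaze(v_left, v_right, v_above, v_below, uv_per_degree):
    """Convert four electrode potentials (microvolts) into eye-movement estimates.

    Bipolar derivations: horizontal = right - left, vertical = above - below, so a
    rightward or upward rotation (cornea moves toward that electrode) is positive.
    uv_per_degree is a per-subject calibration factor (assumed known here).
    """
    horizontal_deg = (v_right - v_left) / uv_per_degree
    vertical_deg = (v_above - v_below) / uv_per_degree
    amplitude_deg = math.hypot(horizontal_deg, vertical_deg)
    direction_deg = math.degrees(math.atan2(vertical_deg, horizontal_deg))
    return horizontal_deg, vertical_deg, amplitude_deg, direction_deg


# Illustrative values only: 16 uV/deg is an assumed sensitivity, not a constant.
h, v, amp, direction = eog_gaze(v_left=-24.0, v_right=24.0,
                                v_above=32.0, v_below=-32.0, uv_per_degree=16.0)
```

For these inputs the sketch reports a 3° rightward and 4° upward component, i.e. a 5° movement about 53° above horizontal.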

EOG is particularly useful for tracking eye movements over long periods, as it is less susceptible to external lighting conditions than other eye-tracking methods such as VOG-based systems. This makes EOG valuable in various applications, from mental health monitoring [50] and neurological research [51] to user interface design and motor rehabilitation [20].

Figure 7.

Examples of EOG: the wearable system developed in [50], showing the placement of the EOG electrodes on the head and the iPhone and Mobi8 device carried by the user. Electrode placement adapted with permission from [52].

For enhanced signal quality, an innovative eye-tracking system was developed for real-time 3D visualization of eye and head movements. This system features magneto-resistive detectors mounted on the patient’s head and includes a small magnet embedded in a contact lens [51]. Its efficacy highlights its potential for advancing neurological research and improving patient care through continuous monitoring capabilities. However, the system’s reliance on specialized equipment and the need for precise calibration may limit its accessibility and necessitate regular maintenance, posing challenges for widespread medical adoption.

EOG is often used in conjunction with other eye-tracking technologies such as IOG or VOG, including remote infrared eye-tracking systems, as demonstrated in [20]. In this particular study, the eye movements of older adults and individuals with Parkinson’s disease were accurately monitored. The setup included a wireless mobile EOG system to record horizontal saccades, a head-mounted mobile eye tracker for general saccadic recording, and a dual camera system combining a monocular infrared eye camera and a fish-eye field camera for precise pupil localization. The high temporal resolution of 1000 Hz provided by the EOG system effectively compensates for the lower tracking frequency of 50 Hz from the IR eye-tracker, ensuring detailed and responsive tracking of rapid eye movements essential for accurate analysis in clinical research. There are four stages involved in processing raw EOG voltage signal data with additional information from IOG and VOG [20]:

Preprocessing: This initial stage includes baseline offset removal to adjust the starting point of the EOG signal to a standard reference, followed by filtering and noise removal to clean the data for accurate analysis;

Calibration and peak detection: The signal is then calibrated to convert the raw EOG data into meaningful measurements that correspond to eye movements. This involves creating a calibration conversion factor that aligns the electrical signals with actual eye movement degrees. Following this, the system detects peaks corresponding to left or right eye movements that exceed 5 degrees;

Eye movement detection: This stage establishes velocity and acceleration thresholds to categorize different types of eye movements, detecting saccades (rapid movements) with specific velocity and acceleration criteria, fixations (steady gaze) with lower velocity and longer duration thresholds, and blinks, which are characterized by very high velocity and acceleration;

Quantification of eye movement events: Finally, the processed data are classified into specific events based on velocity, acceleration, and duration parameters: for saccades, the system measures the number, frequency, distance, duration, direction, and timing; for fixations, it records the number, duration, and timing.
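The four stages listed above can be sketched for a single horizontal EOG channel as follows. This is a simplified illustration under stated assumptions, not the implementation of [20]: the baseline is the mean of an initial resting segment, filtering is a moving average, the µV-to-degree factor is assumed known, and only a velocity threshold is used (the acceleration criteria, fixation detection, and blink detection described above are omitted). The 5° minimum amplitude follows the text; the 30°/s threshold and all signal values are hypothetical.

```python
def remove_baseline(signal, n_baseline):
    """Stage 1a: subtract the mean of the first n_baseline samples (resting gaze)."""
    baseline = sum(signal[:n_baseline]) / n_baseline
    return [s - baseline for s in signal]


def moving_average(signal, window):
    """Stage 1b: centred moving-average low-pass filter (window in samples)."""
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def calibrate(signal_uv, uv_per_degree):
    """Stage 2a: apply a calibration conversion factor (microvolts -> degrees)."""
    return [s / uv_per_degree for s in signal_uv]


def detect_saccades(angle_deg, fs_hz, velocity_threshold=30.0, min_amplitude=5.0):
    """Stages 2b-4: velocity-threshold saccade detection and quantification.

    A saccade is a run of samples whose absolute velocity exceeds
    velocity_threshold (deg/s); runs smaller than min_amplitude (deg) are dropped.
    """
    dt = 1.0 / fs_hz
    velocity = [0.0] + [(angle_deg[i] - angle_deg[i - 1]) / dt
                        for i in range(1, len(angle_deg))]
    events, start = [], None
    # A trailing 0.0 sentinel closes a run that lasts until the final sample.
    for i, v in enumerate(velocity + [0.0]):
        if abs(v) > velocity_threshold and start is None:
            start = i
        elif abs(v) <= velocity_threshold and start is not None:
            # velocity[i] spans samples i-1 -> i, so the run moves the eye
            # from sample start-1 to sample i-1.
            amplitude = angle_deg[i - 1] - angle_deg[start - 1]
            if abs(amplitude) > min_amplitude:
                events.append({
                    "onset_s": (start - 1) * dt,
                    "duration_s": (i - start) * dt,
                    "amplitude_deg": amplitude,
                    "direction": "right" if amplitude > 0 else "left",
                })
            start = None
    duration_s = len(angle_deg) * dt
    summary = {"count": len(events), "rate_per_s": len(events) / duration_s}
    return events, summary


# Synthetic horizontal EOG at 1000 Hz: 50 uV offset, then a 20 ms, 160 uV step.
fs = 1000
raw = [50.0] * 200 + [50.0 + 8.0 * (k + 1) for k in range(20)] + [210.0] * 200
angle = calibrate(moving_average(remove_baseline(raw, 100), 5), uv_per_degree=16.0)
events, summary = detect_saccades(angle, fs)
```

The synthetic step yields one rightward 10° saccade; smoothing widens its detected duration slightly beyond the 20 ms ramp, which illustrates the trade-off between noise removal and temporal precision in the preprocessing stage.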

Another study utilized a combination of EOG and VOG systems, both of which are portable and support real-time data processing, making them suitable for daily use in uncontrolled environments [50], as shown in Figure 7. This integration has led to high accuracy in capturing critical eye movement characteristics, such as saccades and smooth pursuits, which are vital for mental health monitoring.

EOG can also be integrated with electroencephalography (EEG) to simultaneously measure eye movements and brain activity, which avoids synchronization challenges that can arise with separate systems. In [53], electrodes were strategically placed in periocular regions to capture horizontal and vertical eye movements and were complemented by head stabilization techniques for EEG recording. This combined methodology is especially valuable for examining fixation- and saccade-related neural potentials, offering insights into the underlying neural mechanisms that govern eye movement control. This approach not only simplifies the experimental setup but also enriches the quality of data for advanced neuroscientific research.
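Because EOG and EEG share one amplifier and clock in such a setup, eye-movement onsets detected in the EOG channels can index the EEG directly. A minimal sketch of how fixation- or saccade-related potentials are then obtained is event-related averaging: cut EEG epochs around each onset, baseline-correct them, and average. The epoch window and synthetic data are assumptions; analyses such as [53] typically also handle overlapping responses and ocular artifacts, which this sketch ignores.

```python
def event_related_average(eeg, onsets, fs_hz, pre_s, post_s):
    """Average EEG segments time-locked to eye-movement onsets.

    eeg: one EEG channel (microvolts); onsets: sample indices of saccade or
    fixation onsets taken from the co-recorded EOG. Each epoch is baseline-corrected
    with its pre-onset mean; events too close to the recording edges are skipped.
    """
    pre = int(round(pre_s * fs_hz))
    post = int(round(post_s * fs_hz))
    epochs = []
    for onset in onsets:
        lo, hi = onset - pre, onset + post
        if lo < 0 or hi > len(eeg):
            continue
        segment = eeg[lo:hi]
        baseline = sum(segment[:pre]) / pre if pre > 0 else 0.0
        epochs.append([value - baseline for value in segment])
    if not epochs:
        return []
    return [sum(column) / len(epochs) for column in zip(*epochs)]


# Synthetic 500 Hz channel with a 5 uV deflection 20 ms after each onset.
fs = 500
onsets = [100, 300, 495]  # the last onset is too close to the end and is skipped
eeg = [0.0] * 500
for onset in onsets[:2]:
    eeg[onset + 10] = 5.0
average = event_related_average(eeg, onsets, fs, pre_s=0.02, post_s=0.04)
```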

3. Applications of NET for Health

Table 2 summarizes various applications of typical NET sensors in several health-related fields. Many studies rely on well-established commercial NET sensors. Compared with IOG and EOG, VOG is being used more widely in health-related domains. As a newly developed technology, NET is now primarily used in the areas of endoscopy and mental health monitoring.

Table 2.

Applications of NET sensors in health-related fields.

| Ref. | Year | Application | Method | Wearability | Transmission | Device | Sampling Frequency/Hz |
|---|---|---|---|---|---|---|---|
| [9] | 2014 | Control of electronic wheelchair | VOG | Glasses | Wired | - | - |
| [14] | 2016 | Assessment of oculomotor abnormalities | VOG | Head-mounted | Wireless | EyeTribe | 30 |
| [15] | 2014 | Surgical training | VOG | Glasses | Wired | ASL Eye tracker | 8.33 |
| [20] | 2017 | Investigation of Parkinson’s disease | EOG + IOG + VOG | EOG: head-mounted; IOG: head-mounted | EOG: Wi-Fi; IOG: wired; VOG: wired | EOG: Zerowire; IOG: Dikablis; VOG: dual camera system | EOG: 1000; IOG: 50; VOG: 50 |
| [35] | 2016 | Evaluation in colonoscopy | VOG | Glasses | Wired | Model 1.4 | 30 |
| [36] | 2006 | Investigation of autism | VOG (infrared) | Head-mounted | Wired | EyeLink II | 500 |
| [37] | 2007 | Diagnosis of developmental disorders | VOG | Headcoil | Radio receiver | WearCam | 30 |
| [41] | 2023 | Detection of hepatic encephalopathy | VOG (infrared) | Head stabilization | Wired | Infrared camera | 100 |
| [46] | 2011 | Evaluation in surgery | IOG | - | - | - | - |
| [47] | 2017 | Investigation of superior oblique myokymia | IOG | 3D: glasses; 2D: head stabilization | Wired | 3D: 3-D VOG; 2D: iView-X Hi-Speed | 3D: 60; 2D: 500 |
| [48] | 2015 | Investigation of schizophrenia | VOG | Head-mounted | Wired | EyeLink II | 250 |
| [49] | 2014 | Diagnosis of ocular myasthenia gravis | IOG | Glasses | Wired | Pupil Labs | 30 |
| [51] | 2023 | Oculomotor assessment in neurological research | EOG | - | - | Magnetoresistive-based eye tracker | 100 |
| [53] | 2019 | EEG recording | EOG | - | Wired | 128-channel EGI (Electrical Geodesics, Inc., Eugene, OR, USA) | 500 |
| [54] | 2007 | Endoscopy | VOG | Headcoil | Wired | - | 50 |
| [55] | 2022 | Assessment in attention deficit hyperactivity disorder | EOG | Desktop mount | Wired | EyeLink 1000 | 250 |
| [56] | 2020 | Hemostasis in endoscopy | VOG | Glasses | - | Tobii Pro Glasses 2 | 50 |
| [57] | 2021 | Surgical training | VOG | Glasses | Wireless | Pupil Invisible | 30 |
| [58] | 2007 | Investigation of schizophrenia | EOG | Glasses | Wired | Limbus tracker (Cambridge Research Systems, Cambridge, UK) | 500 |
| [59] | 2004 | Monitoring of affective state | IOG | Head stabilization | Wired | MR-Eyetracker (Cambridge Research Systems, UK) | 1000 |
| [60] | 2014 | Investigation of Alzheimer’s disease | VOG | Glasses | Wired | Senso-Motoric | 350 |
| [61] | 2009 | Investigation of autism spectrum disorder | VOG | Head-mounted | Wired | Dual Purkinje Image eye-tracker | 200 |
| [62] | 2008 | Investigation of autism spectrum disorder | VOG | Head-mounted | Wired | ISCAN ETL-500 | 240 |
| [63] | 2013 | Measurement of startle | VOG (infrared) | Head stabilization | Wired | iView X Hi-Speed 500 | 500 |
| [64] | 2018 | Vestibular function testing | VOG (infrared) | Head-mounted | Wired | Infrared camera | 30 |

3.1. NET in Endoscopy

The examination of visual patterns exhibited by endoscopists during colonoscopy is an interesting area of endoscopy with an evolving evidence base [65]. The visual gaze patterns of endoscopists are paramount in the detection of colonic pathology, and an endoscopist’s eye movements during colonoscopy can be assessed by gaze analysis. Research using gaze analysis is providing greater insight into how visual patterns differ between experts with a higher adenoma detection rate (ADR) and non-experts with lower detection rates [30], as shown in Figure 8.

Figure 8.

(a) User wearing eye tracking glasses observing withdrawal video. (b) Hepatic flexure on the left side of the screen. Endoscopic application [35].

NET has the potential to provide a more objective measure for detecting lesions during endoscopy. Such a measure is more valuable than subjective feedback from endoscopists, particularly when assessing new technologies for improving adenoma or bleeding vessel detection [34]. Eye-tracking glasses can also serve as a new steering system for endoscopes, giving endoscopists bimanual freedom for instrumentation [54]. Gaze analysis and gaze control in endoscopy hold exciting potential for advancing the field and could play a crucial role in mitigating the growing global burden of gastrointestinal cancer [2]. Yet, because gaze analysis is a recent and novel field of research, existing studies are limited by small sample sizes and yield inconclusive results.

3.2. NET in Mental Health Monitoring

Research in experimental psychology and clinical neuroscience has demonstrated a significant correlation between eye movements and mental disorders [66,67]. In the past, diagnostics based on eye movement were limited to controlled laboratory settings; however, wearable eye trackers now enable continuous monitoring and analysis of eye movements [68]. As a delicate function connected to the central nervous system, eye motricity is susceptible to disturbances arising from disorders and diseases affecting various brain regions such as the cerebral cortex, brainstem, or cerebellum. Analysis of resultant eye movement dysfunctions provides valuable insights into the localization of brain damage [69] and serves as a reliable marker for dementia and numerous other brain-related conditions [66,70].

Take schizophrenia as an example: it is a severe mental disorder characterized by psychotic symptoms such as hallucinations and delusions. Previous studies have shown that schizophrenia impairs smooth pursuit [71] and increases the frequency of saccades, especially catch-up saccades during smooth pursuit [72]. Despite over 35 years of investigation into eye movement impairments in patients with schizophrenia, this remains an active area of research, with ongoing efforts to develop portable and cost-effective devices for further studies [73]. Holzman and Levy [74] used EOG for its portability, while acknowledging that, at the time of writing, it may have been less precise than video-based trackers. Their findings revealed smooth pursuit impairment not only in schizophrenia but also in other psychotic patients. They described two distinct types of smooth pursuit impairment: (1) pursuit replaced by rapid eye movements or saccades; and (2) small-amplitude rapid movements intruding on pursuit, which leave the overall trajectory intact but give it a cogwheel appearance. Furthermore, they proposed that smooth pursuit impairment may qualify as a genetic indicator of the predisposition for schizophrenia. In addition, abnormal pursuit records are also found in close relatives of patients with schizophrenia, and a substantial number of psychotic patients without schizophrenia also show impaired smooth pursuit eye movements [74].
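The two impairments described above are commonly quantified as a reduced pursuit gain (eye velocity divided by target velocity) and an increased number of catch-up saccades. The sketch below computes both for one trial; the 30°/s criterion for separating saccadic from smooth samples and the synthetic trial are assumptions, and the studies cited here may use different detection rules.

```python
def pursuit_metrics(eye_deg, target_deg, fs_hz, saccade_threshold=30.0):
    """Smooth-pursuit gain and catch-up saccade count for one pursuit trial.

    Samples whose eye velocity differs from target velocity by more than
    saccade_threshold (deg/s, an assumed criterion) are treated as saccadic and
    excluded from the gain. A catch-up saccade is a saccadic run that moves the
    eye faster than the target in the target's direction.
    """
    dt = 1.0 / fs_hz
    eye_v = [(b - a) / dt for a, b in zip(eye_deg, eye_deg[1:])]
    tgt_v = [(b - a) / dt for a, b in zip(target_deg, target_deg[1:])]
    saccadic = [abs(e - t) > saccade_threshold for e, t in zip(eye_v, tgt_v)]

    # Gain = eye velocity projected on the target direction / target speed.
    smooth = [(e, t) for e, t, s in zip(eye_v, tgt_v, saccadic) if not s and t != 0]
    speed = sum(abs(t) for _, t in smooth)
    gain = sum(e if t > 0 else -e for e, t in smooth) / speed if speed else float("nan")

    catch_up, in_run = 0, False
    for e, t, s in zip(eye_v, tgt_v, saccadic):
        if s and not in_run and (e - t) * t > 0:
            catch_up += 1
        in_run = s
    return gain, catch_up


# Synthetic 100 Hz trial: target moves at 10 deg/s; eye pursues at 8 deg/s
# (gain 0.8) and makes one 1-degree catch-up saccade over two samples.
fs = 100
target = [0.1 * i for i in range(101)]
eye = [0.0]
for i in range(1, 101):
    step = 0.08 + (0.5 if i in (50, 51) else 0.0)
    eye.append(eye[-1] + step)
gain, catch_up = pursuit_metrics(eye, target, fs)
```

A gain below 1 means the eye lags the target, and the resulting position error is what catch-up saccades correct; together the two numbers summarize the first type of impairment described by Holzman and Levy.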

3.3. NET + X for Health

NET can be used not only as a standalone tool but also in conjunction with other technologies to improve precision and accuracy or to deliver valuable supplementary insights. Integrating NET with technologies such as VR, EEG, or OCT-A has significantly expanded its scope of application. This synergistic approach, referred to as NET + X, leverages multiple data sources and technological methods to improve the overall effectiveness of the system.

3.3.1. NET + VR

The fundamental principle of VR is tailoring stimuli to user actions, including head, eye, and hand movements [75]. Head-mounted display (HMD)-based VR relies on the accurate tracking of head movements to synchronize visual scene motion with head movement, facilitated by advancements in head-tracking technology. Anticipated advancements in HMD-based eye-tracking technology, as shown in Figure 9, will allow for fundamental advances in VR applications based on eye movement [25].

Figure 9.

An HTC Vive Pro Eye HR HMD combined with a VOG NET sensor. Taken with permission from [25].

Eye-tracking technology in VR has various clinical applications, including diagnostic, therapeutic, and interactive purposes [76]. Traditionally, neuro-ophthalmic diagnosis has been conducted in a very basic manner at the patient’s bedside [77]. This process could be greatly improved by uniform HMD-based diagnostic tools with precise stimulus control to elicit specific and relevant eye movements, such as pursuit, saccades, and nystagmus. VR can also support surgical planning: for example, when doctors wear VR headsets, the patient’s body model is reconstructed in a virtual operating room, allowing the doctor to observe organs or lesions in a 360° view and make more accurate preliminary measurements and estimates of the affected areas. This enables the doctor to develop more reasonable, accurate, and safer surgical plans [78].

Nonetheless, most current clinical VR equipment for eye tracking uses commercial devices, often unsuitable for clinical use [79]. For example, Zhu et al. [80] mention that most HMDs have to be modified by removing, enclosing, or replacing their textile foam and Velcro components in order to comply with clinical hygiene regulations. Most HMDs and their eye-tracking components also cannot withstand clinical disinfection procedures. Therefore, further development is necessary to achieve clinical-grade HMDs.

3.3.2. NET + Other

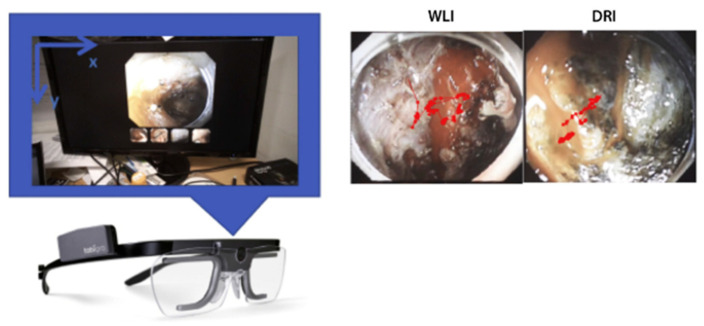

Apart from VR, other modalities can be combined with NET for health-related applications. In [55], an eye tracker was integrated with continuous performance tests to assess patients with attention deficit hyperactivity disorder. By comparing the acquired data with those of a healthy control group, this study demonstrated the potential of eye movement measurement to increase our theoretical understanding of attention deficit hyperactivity disorder and to support clinical decision-making. Moreover, in [56], presented in Figure 10, NET was adopted as a measurement tool to compare the efficacy of dual red imaging and white-light imaging for hemostasis during endoscopic submucosal dissection. The eye movements of experienced endoscopists were monitored by wearable NET glasses while they identified bleeding points in randomly presented videos of intraoperative bleeding during endoscopic submucosal dissection. The NET glasses accurately recorded the endoscopists’ eye movements, which then served as the standard for rating the efficacy of dual red imaging and white-light imaging.

Figure 10.

A subject wearing an eye-tracking device while searching for the bleeding point (left). Example endoscopic image showing the measured eye position attached to the infrared marker (right). WLI, white-light imaging; DRI, dual red imaging. Taken with permission from [56].
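Recordings such as those in [56] are often summarized with area-of-interest (AOI) metrics. As one illustration, and not necessarily the measure used in [56], the sketch below computes the time until gaze first dwells on a circular AOI drawn around the bleeding point in the scene video. The function name, the circular AOI, and the minimum-dwell rule (which prevents a saccade that merely sweeps across the AOI from counting) are assumptions made for this example, and the gaze trace is synthetic.

```python
import numpy as np

def time_to_first_dwell(t_s, gaze_xy, aoi_center, aoi_radius, min_samples=1):
    """Hypothetical helper: time (s) at which gaze first stays inside a
    circular AOI for at least `min_samples` consecutive samples.

    t_s: (n,) timestamps; gaze_xy: (n, 2) gaze points in scene-video pixels.
    Returns None if the condition is never met.
    """
    gaze = np.asarray(gaze_xy, float)
    dist = np.linalg.norm(gaze - np.asarray(aoi_center, float), axis=1)
    run = 0
    for i, inside in enumerate(dist <= aoi_radius):
        run = run + 1 if inside else 0
        if run >= min_samples:
            return float(t_s[i - min_samples + 1])  # start of the dwell
    return None

# Synthetic 100 Hz trace: gaze briefly sweeps across the AOI at 0.40-0.41 s,
# then settles on it from 0.80 s onwards.
fs = 100.0
t = np.arange(200) / fs
gaze = np.zeros((200, 2))
gaze[40:42] = [100, 50]
gaze[80:] = [100, 50]
print(time_to_first_dwell(t, gaze, (100, 50), 10))                  # 0.4
print(time_to_first_dwell(t, gaze, (100, 50), 10, min_samples=10))  # 0.8
```

The minimum-dwell parameter changes the answer from the brief sweep at 0.4 s to the sustained fixation at 0.8 s, which is why such thresholds need to be reported alongside the metric.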

EOG-based NET can also be combined with EEG, as in [53], to study fixation- and saccade-related neural potentials. This advances our understanding of the neural mechanisms involved in eye-movement control and offers a robust tool for both clinical and research applications. Integrating NET with remote eye tracking could likewise provide a more complete picture of eye-movement behavior during various tasks, allowing saccades to be detected and analyzed under both static and dynamic conditions [20].
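Saccade detection in such combined recordings commonly starts from a velocity-threshold (I-VT) classifier. The sketch below is a minimal version, assuming a 1-D horizontal gaze trace already calibrated to degrees. The 30°/s threshold and 10 ms minimum duration are illustrative values, not parameters taken from [20] or [53], and real EOG data would first need filtering and drift correction.

```python
import numpy as np

def detect_saccades(gaze_deg, fs_hz, vel_thresh=30.0, min_dur_s=0.01):
    """Velocity-threshold (I-VT) saccade detection on a 1-D gaze trace.

    gaze_deg: horizontal gaze position in degrees, sampled at fs_hz.
    Returns (start, end) sample indices of each saccade, end exclusive.
    """
    vel = np.abs(np.gradient(np.asarray(gaze_deg, float))) * fs_hz  # deg/s
    fast = vel > vel_thresh
    # +1 marks where a run of fast samples starts, -1 where it ends.
    edges = np.diff(np.concatenate(([0], fast.astype(int), [0])))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    min_len = int(round(min_dur_s * fs_hz))
    return [(int(s), int(e)) for s, e in zip(starts, ends) if e - s >= min_len]

# Synthetic 500 Hz trace: fixation at 0 deg, a 10 deg saccade lasting about
# 40 ms, then fixation at 10 deg.
fs = 500.0
gaze = np.concatenate([np.zeros(100), np.linspace(0, 10, 20), np.full(100, 10.0)])
print(detect_saccades(gaze, fs))  # [(100, 120)]
```

A fixed threshold is simple and fast, but its sensitivity depends on sampling rate and noise level, which is one reason saccade-detection results from different devices are hard to compare directly.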

Another important application of combining VOG-based NET with other techniques is motion-artifact removal. One study introduced a video-based, real-time eye-tracking system suitable for functional magnetic resonance imaging (fMRI) [54]; by tracking eye and head movements simultaneously, it effectively reduced interference from physiological head movement. Ref. [7] suggests that eye-tracking technology can significantly improve the quality of optical coherence tomography angiography (OCT-A) images by reducing motion artifacts, which are particularly problematic in patients with age-related macular degeneration.
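The idea of using a simultaneously tracked head signal to suppress head-motion interference can be illustrated with ordinary least-squares regression. This is a deliberately simple sketch and not the method of [54] or [7]: it assumes that head motion leaks linearly into the measured eye signal and that the true eye signal is uncorrelated with head motion, an assumption that fails, for example, during the vestibulo-ocular reflex. The function name and the synthetic signals are hypothetical.

```python
import numpy as np

def remove_head_component(eye, head):
    """Regress a linear head-motion contribution out of an eye trace.

    eye: (n,) measured eye signal; head: (n,) or (n, k) head-motion signals.
    Fits eye ~ c0 + head @ w by least squares and returns eye - head @ w,
    so the constant offset c0 is kept.
    """
    eye = np.asarray(eye, float)
    head = np.asarray(head, float).reshape(len(eye), -1)
    design = np.column_stack([np.ones(len(eye)), head])
    coef, *_ = np.linalg.lstsq(design, eye, rcond=None)
    return eye - head @ coef[1:]

# Synthetic 100 Hz example over 4 s: a 1 Hz eye signal contaminated by a
# slow 0.25 Hz head movement (leak factor 2.0) and a 1.0 offset.
fs = 100.0
t = np.arange(400) / fs
true_eye = np.sin(2 * np.pi * 1.0 * t)
head = np.cos(2 * np.pi * 0.25 * t)
measured = true_eye + 2.0 * head + 1.0
cleaned = remove_head_component(measured, head)
print(np.allclose(cleaned, true_eye + 1.0))  # True
```

The synthetic signals are chosen to be exactly uncorrelated over the recording, so the regression recovers the leak factor; with real data the residual would still contain whatever part of the head signal is not linearly related to the artifact.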

4. Discussion and Conclusions

4.1. Summary of NET in Health

The most popular NET technologies currently include VOG, IOG, and EOG. VOG benefits from high-resolution, advanced camera technology, which makes it suitable for detailed analysis of eye features and robust in real-world applications. IOG employs infrared light, which makes it effective under varying lighting conditions and well suited to fatigue monitoring and certain medical diagnoses. EOG measures electrical potentials around the eyes and is appropriate for long-term tracking. Most of the reviewed studies employ VOG, whose temporal resolution has been significantly enhanced by recent advances in camera technology. The emergence of easy-to-set-up, portable commercial VOG devices underlines their potential for medical use outside the laboratory. Conversely, although IOG and EOG are useful in certain situations, they generally yield lower resolution and are more susceptible to noise, making them less suitable for medical and research applications that require precise eye tracking.
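EOG signals vary approximately linearly with horizontal gaze angle over a limited range, so a common first processing step is a linear calibration from fixations on targets at known angles. The sketch below fits that mapping by least squares. The function names, the five-point target layout, and the synthetic 15 µV/deg sensitivity are assumptions for illustration; in practice the fit must be repeated or corrected as electrode drift shifts the offset.

```python
import numpy as np

def fit_eog_calibration(voltages_uv, target_angles_deg):
    """Fit angle = gain * voltage + offset by least squares (first-order fit)."""
    gain, offset = np.polyfit(voltages_uv, target_angles_deg, deg=1)
    return float(gain), float(offset)

def eog_to_angle(voltage_uv, gain, offset):
    """Convert EOG voltage(s) in microvolts to gaze angle(s) in degrees."""
    return gain * np.asarray(voltage_uv, float) + offset

# Hypothetical calibration: five targets at -20..20 deg; synthetic voltages
# generated as 15 uV/deg plus a 5 uV electrode offset.
targets = np.array([-20.0, -10.0, 0.0, 10.0, 20.0])
volts = 15.0 * targets + 5.0
g, o = fit_eog_calibration(volts, targets)
print(round(float(eog_to_angle(155.0, g, o)), 6))  # 10.0
```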

In endoscopy, NET can enhance medical training by differentiating the visual patterns of novices and experts. In mental health monitoring, NET can support the diagnosis of schizophrenia and dementia. Additionally, integrating NET with technologies such as VR and EEG can support clinical decision-making or improve the precision of clinical and healthcare devices.

4.2. Future Trend of NET in Health

4.2.1. Sensor Design

Many current NET sensors are already wearable, taking the form of glasses or head-mounted devices. Future development should focus on reducing size and weight to enable long-term, continuous, and comfortable use in clinical and healthcare applications. Real-time data transmission and computation also merit further investigation. Because improving wearability may reduce the speed and accuracy of real-time data transmission and computation (smaller, lighter devices leave less room for batteries and processing hardware), future work should seek a balance between wearability and efficient real-time transmission and computation.
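A back-of-the-envelope calculation shows why this trade-off matters. The sketch below compares the uncompressed data rate of a single eye camera with that of a two-channel EOG; all resolutions, bit depths, and sampling rates are hypothetical round numbers chosen for illustration, not specifications of any reviewed device.

```python
def raw_rate_bits_per_s(samples_per_frame, bits_per_sample, frames_per_s):
    """Uncompressed data rate (bit/s) of a sampled sensor stream."""
    return samples_per_frame * bits_per_sample * frames_per_s

# Hypothetical configurations (not taken from any reviewed device):
vog = raw_rate_bits_per_s(400 * 400, 8, 120)  # one 8-bit 400x400 eye camera at 120 fps
eog = raw_rate_bits_per_s(2, 16, 250)         # two 16-bit EOG channels at 250 Hz
print(vog / 1e6, "Mbit/s")  # 153.6 Mbit/s
print(eog / 1e3, "kbit/s")  # 8.0 kbit/s
print(vog // eog)           # 19200
```

Under these assumptions the raw camera stream is roughly four orders of magnitude larger, which is one reason on-device pupil detection or compression is attractive for small wearable VOG systems, at the cost of additional on-board computation and power.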

Apart from designing more wearable NET sensors, another way to broaden the applications of NET is to develop non-cooperative NET. Currently, NET usually relies on user cooperation, which often involves dedicated sensors or devices. However, challenges arise where cooperation is difficult, such as with infants, seniors, or individuals with disabilities. In such cases, developing methods that enable non-cooperative NET is imperative, because such methods would not only meet the requirements of different user groups but also allow NET to be applied in diverse fields and scenarios.

4.2.2. Standardization

As introduced in Section 3, NET has been widely applied in endoscopy and surgery to evaluate doctors’ visual patterns, facilitating the analysis of diseases or surgical training. However, there is currently no established quantitative standard for evaluating the NET data obtained in various clinical cases; these data are simply categorized using traditional evaluation scales. When summarizing and comparing various NET sensors, we found that a number of studies did not specify key parameters such as resolution, accuracy, and weight. This omission makes it difficult to quantitatively assess the measurement performance and wearability of NET sensors. As NET technology develops and spreads, we hope that standardized evaluation methods for NET sensors and the data they acquire will be developed.
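Widely used definitions could serve as a starting point for such standards. Accuracy is commonly reported as the mean angular offset between measured gaze and a known fixation target, and precision as the root mean square of sample-to-sample (RMS-S2S) angular distances during a steady fixation. The sketch below computes both for gaze expressed in degrees of visual angle; treating Euclidean distance in degrees as angular distance is a small-angle simplification assumed here, and the function names and data are hypothetical.

```python
import numpy as np

def accuracy_deg(gaze_deg, target_deg):
    """Mean angular offset (deg) between gaze samples and a fixed target."""
    gaze = np.asarray(gaze_deg, float)
    offsets = np.linalg.norm(gaze - np.asarray(target_deg, float), axis=1)
    return float(np.mean(offsets))

def precision_rms_s2s_deg(gaze_deg):
    """RMS of sample-to-sample angular distances (deg) during one fixation."""
    gaze = np.asarray(gaze_deg, float)
    steps = np.linalg.norm(np.diff(gaze, axis=0), axis=1)
    return float(np.sqrt(np.mean(steps ** 2)))

# Hypothetical fixation on a target at (0, 0) deg: gaze sits about 0.5 deg
# to the right and jitters vertically by 0.1 deg between samples.
gaze = np.array([[0.5, 0.0], [0.5, 0.1], [0.5, 0.0], [0.5, 0.1]])
target = (0.0, 0.0)
print(round(accuracy_deg(gaze, target), 3))    # 0.505
print(round(precision_rms_s2s_deg(gaze), 3))   # 0.1
```

Reporting both numbers matters because they capture different failures: a tracker can be precise (low jitter) yet inaccurate (a constant offset), as in this example.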

4.2.3. NET + X

Combinations of NET with other technologies have already been applied, in preliminary form, in health-related fields. In the future, NET can be integrated not only with clinical technologies, such as fMRI and OCT-A, but also with emerging electronic products and technologies, such as VR and AR. Because commercial VR devices are designed for non-professional users, NET + VR is expected to be developed for personal health monitoring and individual health management in daily life. NET + VR could therefore change how health data are collected and used, ultimately making health monitoring more personalized and precise.

4.3. Conclusions

This review has presented the technical features, development, and applications of health-related NET technologies. NET has already been effectively applied in several health-related fields. However, because NET is still a relatively new technology, future efforts should focus on miniaturization and weight reduction to improve the wearability of NET sensors. Additionally, developing non-cooperative NET methods will expand usability for groups such as infants, seniors, and individuals with disabilities. Standardizing data evaluation is essential to ensure reliable comparisons and assessments of NET systems. With further development and integration with other technologies, such as VR, AR, and fMRI, NET holds great potential to become a wearable, low-cost, high-precision tool for practical clinical and healthcare applications.

Acknowledgments

HZ is supported by the Royal Society Research Grant (RGS\R2\222333), the Engineering and Physical Sciences Research Council Grant (13171178 R00287), and the European Innovation Council (EIC) under the European Union’s Horizon Europe research and innovation program (grant agreement No. 101099093). RL is supported by Wellcome Trust and EPSRC through the WEISS Centre (203145Z/16/Z).

Author Contributions

Conceptualization, H.Z., S.G., L.Z. and J.C.; methodology, L.Z., J.C. and H.Z.; investigation, L.Z., J.C. and H.Y.; resource collection, all of the authors; data curation, L.Z., J.C., H.Y., Q.G., S.G. and H.Z.; writing—original draft preparation, L.Z., J.C., H.Y., X.Z., R.L., S.G. and H.Z.; writing—review and editing, all of the authors; visualization, L.Z., J.C., H.Y. and H.Z.; supervision, H.Z.; project administration, R.L., S.G. and H.Z. All authors have read and agreed to the published version of the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding Statement

This work was supported by National Natural Science Foundation of China, grant number 61803017, 61827802; Beihang University grant number KG12090401, ZG216S19C8; The Royal Society Research Grant, grant number RGS\R2\222333; Engineering and Physical Sciences Research Council Grant, grant number 13171178 R00287; Wellcome Trust and EPSRC through the WEISS Centre, grant number 203145Z/16/Z; and European Innovation Council (EIC) under the European Union’s Horizon Europe research and innovation program, grant number 101099093.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1. Sharma C., Dubey S.K. Analysis of Eye Tracking Techniques in Usability and HCI Perspective; Proceedings of the 2014 International Conference on Computing for Sustainable Global Development (INDIACom); New Delhi, India. 5–7 March 2014; pp. 607–612.

- 2. Ferhat O., Vilarino F. Low Cost Eye Tracking: The Current Panorama. Comput. Intell. Neurosci. 2016;2016:8680541. doi: 10.1155/2016/8680541.

- 3. Orlosky J., Itoh Y., Ranchet M., Kiyokawa K., Morgan J., Devos H. Emulation of Physician Tasks in Eye-Tracked Virtual Reality for Remote Diagnosis of Neurodegenerative Disease. IEEE Trans. Vis. Comput. Graph. 2017;23:1302–1311. doi: 10.1109/TVCG.2017.2657018.

- 4. Xu J., Min J., Hu J. Real-time Eye Tracking for the Assessment of Driver Fatigue. Healthc. Technol. Lett. 2018;5:54–58. doi: 10.1049/htl.2017.0020.

- 5. Ji Q. Real-Time Eye, Gaze, and Face Pose Tracking for Monitoring Driver Vigilance. Real-Time Imaging. 2002;8:357–377. doi: 10.1006/rtim.2002.0279.

- 6. Sipatchin A., Wahl S., Rifai K. Eye-Tracking for Clinical Ophthalmology with Virtual Reality (VR): A Case Study of the HTC Vive Pro Eye’s Usability. Healthcare. 2021;9:180. doi: 10.3390/healthcare9020180.

- 7. Lauermann J.L., Treder M., Heiduschka P., Clemens C.R., Eter N., Alten F. Impact of Eye-Tracking Technology on OCT-Angiography Imaging Quality in Age-Related Macular Degeneration. Graefe’s Arch. Clin. Exp. Ophthalmol. 2017;255:1535–1542. doi: 10.1007/s00417-017-3684-z.

- 8. Dahmani M., Chowdhury M.E.H., Khandakar A., Rahman T., Al-Jayyousi K., Hefny A., Kiranyaz S. An Intelligent and Low-Cost Eye-Tracking System for Motorized Wheelchair Control. Sensors. 2020;20:3936. doi: 10.3390/s20143936.

- 9. Gautam G., Sumanth G., Karthikeyan K.C., Sundar S. Eye Movement Based Electronic Wheel Chair for Physically Challenged Persons. Int. J. Sci. Technol. Res. 2014;3:206–212.

- 10. Hosp B., Eivazi S., Maurer M., Fuhl W., Geisler D., Kasneci E. RemoteEye: An Open-Source High-Speed Remote Eye Tracker. Behav. Res. Methods. 2020;52:1387–1401. doi: 10.3758/s13428-019-01305-2.

- 11. Geisler D., Fox D., Kasneci E. Real-Time 3D Glint Detection in Remote Eye Tracking Based on Bayesian Inference; Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA); Brisbane, Australia. 21–25 May 2018; Piscataway, NJ, USA: IEEE; 2018. pp. 7119–7126.

- 12. Punde P.A., Jadhav M.E., Manza R.R. A Study of Eye Tracking Technology and Its Applications; Proceedings of the 2017 1st International Conference on Intelligent Systems and Information Management (ICISIM); Aurangabad, India. 5–6 October 2017; Piscataway, NJ, USA: IEEE; 2017. pp. 86–90.

- 13. Li D., Winfield D., Parkhurst D.J. Starburst: A Hybrid Algorithm for Video-Based Eye Tracking Combining Feature-Based and Model-Based Approaches; Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05)—Workshops; San Diego, CA, USA. 20–26 June 2005; Piscataway, NJ, USA: IEEE; p. 79.

- 14. Kumar D., Dutta A., Das A., Lahiri U. SmartEye: Developing a Novel Eye Tracking System for Quantitative Assessment of Oculomotor Abnormalities. IEEE Trans. Neural Syst. Rehabil. Eng. 2016;24:1051–1059. doi: 10.1109/TNSRE.2016.2518222.

- 15. Bright E., Vine S.J., Dutton T., Wilson M.R., McGrath J.S. Visual Control Strategies of Surgeons: A Novel Method of Establishing the Construct Validity of a Transurethral Resection of the Prostate Surgical Simulator. J. Surg. Educ. 2014;71:434–439. doi: 10.1016/j.jsurg.2013.11.006.

- 16. Koulieris G.A., Akşit K., Stengel M., Mantiuk R.K., Mania K., Richardt C. Near-Eye Display and Tracking Technologies for Virtual and Augmented Reality. Comput. Graph. Forum. 2019;38:493–519. doi: 10.1111/cgf.13654.

- 17. Buchner E.F. Review of The Psychology and Pedagogy of Reading, with a Review of the History of Reading and Writing and of Methods, Texts, and Hygiene in Reading. Psychol. Bull. 1909;6:147–150. doi: 10.1037/h0066540.

- 18. Sprenger A., Neppert B., Köster S., Gais S., Kömpf D., Helmchen C., Kimmig H. Long-Term Eye Movement Recordings with a Scleral Search Coil-Eyelid Protection Device Allows New Applications. J. Neurosci. Methods. 2008;170:305–309. doi: 10.1016/j.jneumeth.2008.01.021.

- 19. Stuart S., Hickey A., Vitorio R., Welman K., Foo S., Keen D., Godfrey A. Eye-Tracker Algorithms to Detect Saccades during Static and Dynamic Tasks: A Structured Review. Physiol. Meas. 2019;40:02TR01. doi: 10.1088/1361-6579/ab02ab.

- 20. Stuart S., Hickey A., Galna B., Lord S., Rochester L., Godfrey A. ITrack: Instrumented Mobile Electrooculography (EOG) Eye-Tracking in Older Adults and Parkinson’s Disease. Physiol. Meas. 2017;38:N16. doi: 10.1088/1361-6579/38/1/N16.

- 21. Schmitt K.U., Muser M.H., Lanz C., Walz F., Schwarz U. Comparing Eye Movements Recorded by Search Coil and Infrared Eye Tracking. J. Clin. Monit. Comput. 2007;21:49–53. doi: 10.1007/s10877-006-9057-5.

- 22. Choe K.W., Blake R., Lee S.-H. Pupil Size Dynamics during Fixation Impact the Accuracy and Precision of Video-Based Gaze Estimation. Vision Res. 2016;118:48–59. doi: 10.1016/j.visres.2014.12.018.

- 23. Klaib A.F., Alsrehin N.O., Melhem W.Y., Bashtawi H.O., Magableh A.A. Eye Tracking Algorithms, Techniques, Tools, and Applications with an Emphasis on Machine Learning and Internet of Things Technologies. Expert Syst. Appl. 2021;166:114037. doi: 10.1016/j.eswa.2020.114037.

- 24. Basel D., Hallel H., Dar R., Lazarov A. Attention Allocation in OCD: A Systematic Review and Meta-Analysis of Eye-Tracking-Based Research. J. Affect. Disord. 2023;324:539–550. doi: 10.1016/j.jad.2022.12.141.

- 25. Adhanom I.B., MacNeilage P., Folmer E. Eye Tracking in Virtual Reality: A Broad Review of Applications and Challenges. Virtual Real. 2023;27:1481–1505. doi: 10.1007/s10055-022-00738-z.

- 26. Meng M. Using Eye Tracking to Study Information Selection and Use in Procedures. IEEE Trans. Prof. Commun. 2023;66:7–25. doi: 10.1109/TPC.2022.3228021.

- 27. Kaushik P.K., Pandey S., Rauthan S.S. Facial Emotion Recognition and Eye-Tracking Based Expressive Communication Framework: Review and Recommendations. Int. J. Comput. Appl. 2022;184:20–28. doi: 10.5120/ijca2022922494.

- 28. Lim J.Z., Mountstephens J., Teo J. Emotion Recognition Using Eye-Tracking: Taxonomy, Review and Current Challenges. Sensors. 2020;20:2384. doi: 10.3390/s20082384.

- 29. Kar A., Corcoran P. A Review and Analysis of Eye-Gaze Estimation Systems, Algorithms and Performance Evaluation Methods in Consumer Platforms. IEEE Access. 2017;5:16495–16519. doi: 10.1109/ACCESS.2017.2735633.

- 30. Sivananthan A., Ahmed J., Kogkas A., Mylonas G., Darzi A., Patel N. Eye Tracking Technology in Endoscopy: Looking to the Future. Dig. Endosc. 2023;35:314–322. doi: 10.1111/den.14461.

- 31. Gil A.M., Birdi S., Kishibe T., Grantcharov T.P. Eye Tracking Use in Surgical Research: A Systematic Review. J. Surg. Res. 2022;279:774–787. doi: 10.1016/j.jss.2022.05.024.

- 32. Arthur E., Sun Z. The Application of Eye-Tracking Technology in the Assessment of Radiology Practices: A Systematic Review. Appl. Sci. 2022;12:8267. doi: 10.3390/app12168267.

- 33. Lim J.Z., Mountstephens J., Teo J. Eye-Tracking Feature Extraction for Biometric Machine Learning. Front. Neurorobot. 2022;15:796895. doi: 10.3389/fnbot.2021.796895.

- 34. Lee A., Chung H., Cho Y., Kim J.L., Choi J., Lee E., Kim B., Cho S.J., Kim S.G. Identification of Gaze Pattern and Blind Spots by Upper Gastrointestinal Endoscopy Using an Eye-Tracking Technique. Surg. Endosc. 2022;36:2574–2581. doi: 10.1007/s00464-021-08546-3.

- 35. Edmondson M.J., Pucher P.H., Sriskandarajah K., Hoare J., Teare J., Yang G.Z., Darzi A., Sodergren M.H. Looking towards Objective Quality Evaluation in Colonoscopy: Analysis of Visual Gaze Patterns. J. Gastroenterol. Hepatol. 2016;31:604–609. doi: 10.1111/jgh.13184.

- 36. Neumann D., Spezio M.L., Piven J., Adolphs R. Looking You in the Mouth: Abnormal Gaze in Autism Resulting from Impaired Top-down Modulation of Visual Attention. Soc. Cogn. Affect. Neurosci. 2006;1:194–202. doi: 10.1093/scan/nsl030.

- 37. Piccardi L., Noris B., Barbey O., Billard A., Schiavonet G., Kellert F., von Hofsten C. WearCam: A Head Mounted Wireless Camera for Monitoring Gaze Attention and for the Diagnosis of Developmental Disorders in Young Children; Proceedings of the RO-MAN 2007—The 16th IEEE International Symposium on Robot and Human Interactive Communication; Jeju, Republic of Korea. 26–29 August 2007.

- 38. Jiang J., Zhou X., Chan S., Chen S. Lecture Notes in Computer Science. Springer International Publishing; New York, NY, USA: 2019. Appearance-Based Gaze Tracking: A Brief Review; pp. 629–640.

- 39. Brousseau B. Infrared Model-Based Eye-Tracking for Smartphones. University of Toronto; Toronto, ON, Canada: 2020.

- 40. Larrazabal A.J., García Cena C.E., Martínez C.E. Video-Oculography Eye Tracking towards Clinical Applications: A Review. Comput. Biol. Med. 2019;108:57–66. doi: 10.1016/j.compbiomed.2019.03.025.

- 41. Calvo Córdoba A., García Cena C.E., Montoliu C. Automatic Video-Oculography System for Detection of Minimal Hepatic Encephalopathy Using Machine Learning Tools. Sensors. 2023;23:8073. doi: 10.3390/s23198073.

- 42. Zhang X., Sugano Y., Bulling A. Evaluation of Appearance-Based Methods and Implications for Gaze-Based Applications; Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems; Glasgow, UK. 2 May 2019; New York, NY, USA: ACM; 2019. pp. 1–13.

- 43. Wang K., Ji Q. Hybrid Model and Appearance Based Eye Tracking with Kinect; Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications; Charleston, SC, USA. 14 March 2016; New York, NY, USA: ACM; 2016. pp. 331–332.

- 44. Singh H., Singh J. Human Eye Tracking and Related Issues: A Review. Int. J. Sci. Res. Publ. 2012;2:1–9.

- 45. Anderson C., Chang A.M., Sullivan J.P., Ronda J.M., Czeisler C.A. Assessment of Drowsiness Based on Ocular Parameters Detected by Infrared Reflectance Oculography. J. Clin. Sleep Med. 2013;9:907–920. doi: 10.5664/jcsm.2992.

- 46. Terrin M.G., De Berardinis M., Boccuzzi D., Terrin G., Magli A. Infrared Oculography as a Non Invasive Methods to Measure Visual Acuity before and after Surgery in Children with Congenital Nystagmus. Pediatr. Res. 2011;70:424. doi: 10.1038/pr.2011.649.

- 47. Thinda S., Chen Y.R., Liao Y.J. Cardinal Features of Superior Oblique Myokymia: An Infrared Oculography Study. Am. J. Ophthalmol. Case Rep. 2017;7:115–119. doi: 10.1016/j.ajoc.2017.06.018.

- 48. Asgharpour M., Tehrani-Doost M., Ahmadi M. Visual Attention to Emotional Face in Schizophrenia: An Eye Tracking Study. Iran. J. Psychiatry. 2015;10:13.

- 49. Azri M., Young S., Lin H., Tan C., Yang Z. Diagnosis of Ocular Myasthenia Gravis by Means of Tracking Eye Parameters; Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Chicago, IL, USA. 26–30 August 2014.

- 50. Vidal M., Turner J., Bulling A., Gellersen H. Wearable Eye Tracking for Mental Health Monitoring. Comput. Commun. 2012;35:1306–1311. doi: 10.1016/j.comcom.2011.11.002.

- 51. Donniacuo A., Viberti F., Carucci M., Biancalana V., Bellizzi L., Mandalà M. Development of a Magnetoresistive-Based Wearable Eye-Tracking System for Oculomotor Assessment in Neurological and Otoneurological Research—Preliminary In Vivo Tests. Brain Sci. 2023;13:1439. doi: 10.3390/brainsci13101439.

- 52. Ramkumar S., Sathesh Kumar K., Dhiliphan Rajkumar T., Ilayaraja M., Shankar K. A Review-Classification of Electrooculogram Based Human Computer Interfaces. Biomed. Res. 2018;29:1078–1084. doi: 10.4066/biomedicalresearch.29-17-2979.

- 53. Jia Y., Tyler C.W. Measurement of Saccadic Eye Movements by Electrooculography for Simultaneous EEG Recording. Behav. Res. Methods. 2019;51:2139–2151. doi: 10.3758/s13428-019-01280-8.

- 54. Kanowski M., Rieger J.W., Noesselt T., Tempelmann C., Hinrichs H. Endoscopic Eye Tracking System for FMRI. J. Neurosci. Methods. 2007;160:10–15. doi: 10.1016/j.jneumeth.2006.08.001.

- 55. Lev A., Braw Y., Elbaum T., Wagner M., Rassovsky Y. Eye Tracking During a Continuous Performance Test: Utility for Assessing ADHD Patients. J. Atten. Disord. 2022;26:245–255. doi: 10.1177/1087054720972786.

- 56. Maehata T., Fujimoto A., Uraoka T., Kato M., Horii J., Sasaki M., Kiguchi Y., Akimoto T., Nakayama A., Ochiai Y., et al. Efficacy of a New Image-Enhancement Technique for Achieving Hemostasis in Endoscopic Submucosal Dissection. Gastrointest. Endosc. 2020;92:667–674. doi: 10.1016/j.gie.2020.05.033.

- 57. Matsuda A., Okuzono T., Nakamura H., Kuzuoka H., Rekimoto J. A Surgical Scene Replay System for Learning Gastroenterological Endoscopic Surgery Skill by Multiple Synchronized-Video and Gaze Representation. Proc. ACM Hum. Comput. Interact. 2021;5:204. doi: 10.1145/3461726.

- 58. Nagel M., Sprenger A., Nitschke M., Zapf S., Heide W., Binkofski F., Lencer R. Different Extraretinal Neuronal Mechanisms of Smooth Pursuit Eye Movements in Schizophrenia: An FMRI Study. Neuroimage. 2007;34:300–309. doi: 10.1016/j.neuroimage.2006.08.025.

- 59. Anders S., Weiskopf N., Lule D., Birbaumer N. Infrared Oculography—Validation of a New Method to Monitor Startle Eyeblink Amplitudes during FMRI. Neuroimage. 2004;22:767–770. doi: 10.1016/j.neuroimage.2004.01.024.

- 60. Boucart M., Bubbico G., Szaffarczyk S., Pasquier F. Animal Spotting in Alzheimer’s Disease: An Eye Tracking Study of Object Categorization. J. Alzheimer’s Dis. 2014;39:181–189. doi: 10.3233/JAD-131331.

- 61. Fletcher-Watson S., Leekam S.R., Benson V., Frank M.C., Findlay J.M. Eye-Movements Reveal Attention to Social Information in Autism Spectrum Disorder. Neuropsychologia. 2009;47:248–257. doi: 10.1016/j.neuropsychologia.2008.07.016.

- 62. Sterling L., Dawson G., Webb S., Murias M., Munson J., Panagiotides H., Aylward E. The Role of Face Familiarity in Eye Tracking of Faces by Individuals with Autism Spectrum Disorders. J. Autism Dev. Disord. 2008;38:1666–1675. doi: 10.1007/s10803-008-0550-1.

- 63. Bernard F., Deuter C.E., Gemmar P., Schachinger H. Eyelid Contour Detection and Tracking for Startle Research Related Eye-Blink Measurements from High-Speed Video Records. Comput. Methods Programs Biomed. 2013;112:22–37. doi: 10.1016/j.cmpb.2013.06.003.

- 64. Kong Y., Lee S., Lee J., Nam Y. A Head-Mounted Goggle-Type Video-Oculography System for Vestibular Function Testing. EURASIP J. Image Video Process. 2018;2018:28. doi: 10.1186/s13640-018-0266-x.

- 65. Gulati S., Patel M., Emmanuel A., Haji A., Hayee B., Neumann H. The Future of Endoscopy: Advances in Endoscopic Image Innovations. Dig. Endosc. 2020;32:512–522. doi: 10.1111/den.13481.

- 66. Crawford T.J., Higham S., Renvoize T., Patel J., Dale M., Suriya A., Tetley S. Inhibitory Control of Saccadic Eye Movements and Cognitive Impairment in Alzheimer’s Disease. Biol. Psychiatry. 2005;57:1052–1060. doi: 10.1016/j.biopsych.2005.01.017.

- 67. Noris B., Benmachiche K., Meynet J., Thiran J.-P., Billard A.G. Computer Recognition Systems 2. Springer; Berlin/Heidelberg, Germany: 2007. Analysis of Head-Mounted Wireless Camera Videos for Early Diagnosis of Autism; pp. 663–670.

- 68. Bulling A., Gellersen H. Toward Mobile Eye-Based Human-Computer Interaction. IEEE Pervasive Comput. 2010;9:8–12. doi: 10.1109/MPRV.2010.86.

- 69. Cogan D.G., Chu F.C., Reingold D.B. Ocular Signs of Cerebellar Disease. Arch. Ophthalmol. 1982;100:755–760. doi: 10.1001/archopht.1982.01030030759007.

- 70. Ramat S., Leigh R.J., Zee D.S., Optican L.M. What Clinical Disorders Tell Us about the Neural Control of Saccadic Eye Movements. Brain. 2006;130:10–35. doi: 10.1093/brain/awl309.

- 71. Holzman P.S., Proctor L.R., Hughes D.W. Eye-Tracking Patterns in Schizophrenia. Science. 1973;181:179–181. doi: 10.1126/science.181.4095.179.

- 72. Radant A.D., Hommer D.W. A Quantitative Analysis of Saccades and Smooth Pursuit during Visual Pursuit Tracking. Schizophr. Res. 1992;6:225–235. doi: 10.1016/0920-9964(92)90005-P.

- 73. Greene B.R., Meredith S., Reilly R.B., Donohoe G. A Novel, Portable Eye Tracking System for Use in Schizophrenia Research. Ir. Signals Syst. Conf. 2004;2004:89–94.

- 74. Holzman P.S., Levy D.L. Smooth Pursuit Eye Movements and Functional Psychoses: A Review. Schizophr. Bull. 1977;3:15–27. doi: 10.1093/schbul/3.1.15.

- 75. Whitmire E., Trutoiu L., Cavin R., Perek D., Scally B., Phillips J., Patel S. EyeContact: Scleral Coil Eye Tracking for Virtual Reality; Proceedings of the 2016 ACM International Symposium on Wearable Computers; Heidelberg, Germany. 12–16 September 2016; New York, NY, USA: ACM; 2016. pp. 184–191.

- 76. Clay V., König P., König S.U. Eye Tracking in Virtual Reality. J. Eye Mov. Res. 2019;12:1–18. doi: 10.16910/jemr.12.1.3.

- 77. Niederriter B., Rong A., Aqlan F., Yang H. Sensor-Based Virtual Reality for Clinical Decision Support in the Assessment of Mental Disorders; Proceedings of the 2020 IEEE Conference on Games (CoG); Osaka, Japan. 24–27 August 2020; New York, NY, USA: IEEE; 2020. pp. 666–669.

- 78. Bell I.H., Nicholas J., Alvarez-Jimenez M., Thompson A., Valmaggia L. Virtual Reality as a Clinical Tool in Mental Health Research and Practice. Dialogues Clin. Neurosci. 2020;22:169–177. doi: 10.31887/DCNS.2020.22.2/lvalmaggia.

- 79. Santos F.V., Yamaguchi F., Buckley T.A., Caccese J.B. Virtual Reality in Concussion Management: From Lab to Clinic. J. Clin. Transl. Res. 2020;5:148–154.

- 80. Zhu Z., Ji Q. Novel Eye Gaze Tracking Techniques Under Natural Head Movement. IEEE Trans. Biomed. Eng. 2007;54:2246–2260. doi: 10.1109/TBME.2007.895750.