Abstract

Background and aims

Nongenerative speech is the rote repetition of words or phrases heard from others or oneself. The most common manifestations of nongenerative speech are immediate and delayed echolalia, which are a well-attested clinical feature and a salient aspect of atypical language use in autism. However, there are no current estimates of the frequency of nongenerative speech, and the individual characteristics associated with nongenerative speech use in individuals across the autistic spectrum are poorly understood. In this study, we aim to measure and characterize spontaneous and nongenerative speech use in minimally verbal and verbally fluent autistic children and adolescents.

Methods

Participants were 50 minimally verbal and 50 verbally fluent autistic individuals aged 6 to 21 years. Spontaneous and nongenerative speech samples were derived from SALT transcripts of ADOS-2 assessments. Participants’ intelligible speech utterances were categorized as spontaneous or nongenerative. Spontaneous versus nongenerative utterances were compared between language subgroups on frequency of use and linguistic structure. Associations between nongenerative speech use and a series of individual characteristics (ADOS-2 subscale scores, nonverbal IQ, receptive vocabulary, and chronological age) were investigated over the whole sample and for each language subgroup independently.

Results

Almost all participants produced some nongenerative speech. Minimally verbal individuals produced significantly more nongenerative than spontaneous utterances, and more nongenerative utterances compared to verbally fluent individuals. Verbally fluent individuals produced limited rates of nongenerative utterances, in comparison to their much higher rates of spontaneous utterances. Across the sample, nongenerative utterance rates were associated with nonverbal IQ and receptive vocabulary, but not separately for the two language subgroups. In verbally fluent individuals, only age was significantly inversely associated with nongenerative speech use such that older individuals produced fewer nongenerative utterances. In minimally verbal individuals, there were no associations between any of the individual characteristics and nongenerative speech use. In terms of linguistic structure, the lexical diversity of nongenerative and spontaneous utterances of both language subgroups was comparable. Morphosyntactic complexity was higher for spontaneous compared to nongenerative utterances in verbally fluent individuals, while no differences emerged between the two utterance types in minimally verbal individuals.

Conclusions

Nongenerative speech presents differently in minimally verbal and verbally fluent autistic individuals. Although present in verbally fluent individuals, nongenerative speech appears to be a major feature of spoken language in minimally verbal children and adolescents.

Implications

Our results call for more research on the expressive language profiles of autistic children and adolescents who remain minimally verbal, and for further investigation of nongenerative speech, which is usually excluded from language samples. Given its prevalence in the spoken language of minimally verbal individuals, nongenerative speech could be used as a way to engage in and maintain communication with this subgroup of autistic individuals.

Keywords: Echolalia, self-repetition, nongenerative speech, minimally verbal, autism

Introduction

Nongenerative speech refers to segments of speech that are not novel or generated spontaneously by a speaker (see Luyster et al., 2022). It encompasses a range of unconventional spoken language behaviors, most commonly referred to as echolalia and self-repetitions. In autism, echolalia and self-repetitions are defined as rote repetitions, often with closely matched intonation, of previously heard words or phrases spoken by others or by oneself, respectively. Immediate echolalia is the repetition of previously heard speech within one to a few conversational turns, while delayed echolalia occurs after a lapse of time (of varying length) since the source speech was heard. A particularly striking expression of delayed echolalia is scripted recitation, in which a speaker repeats verbatim sequences of speech or dialogue heard from others, such as caregivers, movie characters, or advertisements, even days after first hearing them. Self-repetitions, also called palilalia or repetitive speech, involve repetitions of words and phrases that were first produced by the speakers themselves. From the earliest accounts of autism, such unconventional verbal behaviors have been described as a salient feature of atypical language use in autistic individuals (Kanner, 1943). Of these behaviors, echolalia is the one most commonly associated with autism and the most studied manifestation of unconventional language use in autism.

The current version of the Diagnostic and Statistical Manual of Mental Disorders (DSM-5; American Psychiatric Association, 2013) classifies echolalia as a symptomatic manifestation of restricted and repetitive behaviors. More generally, nosological descriptions of autism recognize echolalia as a classic clinical feature of autism and one of the most salient aspects of atypical speech (Kim et al., 2014). Contrasting accounts argue that immediate and delayed echoes can be functional and communicative forms of language that children use for a variety of purposes, including promoting language development (Cohn et al., 2022; Ryan et al., 2022; Stiegler, 2015). Autistic individuals have been found to use echoes to make requests, mark conversational turns, or express agreement (Prizant & Duchan, 1981; Prizant & Rydell, 1984), and, more generally, as a strategy to maintain social interaction when conversational demands exceed their linguistic abilities (Rydell & Mirenda, 1991). In addition, echolalia has been found to decrease as both cognitive and language abilities grow (Howlin, 1982; McEvoy et al., 1988). This inverse association between echolalia and cognitive and language abilities was taken as evidence that echolalia might promote language development in autism (Gernsbacher et al., 2016), potentially in a way similar to what has been framed as linguistic alignment in typical development (Fusaroli et al., 2023).

While echolalia is a well-attested clinical sign of autism, it is also recognized that not all autistic individuals echo the words of others. However, to the best of our knowledge, there are no estimates of the prevalence of echolalia in autism. Individual characteristics associated with echoing are also poorly understood, and findings are contradictory across studies. The frequency of immediate or delayed echoed speech (relative to spontaneous speech) elicited in naturalistic contexts varies greatly across studies and participants, ranging from as low as 1% to as high as 75% in autistic children aged 2 to 12 years (Cantwell & Baker, 1978; Fusaroli et al., 2023; Gladfelter & Vanzuiden, 2020; Howlin, 1982; Local & Wootton, 2009; McEvoy et al., 1988; Prizant & Duchan, 1981; Prizant & Rydell, 1984; Rydell & Mirenda, 1991, 1994; Tager-Flusberg & Calkins, 1990; Van Santen et al., 2013). Both expressive and receptive language have been found to be associated with the frequency of echoed utterances in autistic children's speech, such that children with better expressive and receptive language skills were less likely to repeat the words of others (Howlin, 1982; McEvoy et al., 1988; Van Santen et al., 2013). Interestingly, Van Santen et al. (2013) reported that receptive language was moderately and negatively correlated with the frequency of echoed utterances, yet found no difference in frequency between two groups of autistic children, one with and one without core language impairments. The latter result resonates with other reports that expressive language was not associated with the frequency of echoes (Cantwell & Baker, 1978; Gladfelter & Vanzuiden, 2020). Similarly, McEvoy et al. (1988) reported that autistic children with higher nonverbal intelligence echoed less frequently than those with lower nonverbal intelligence, whereas Gladfelter and Vanzuiden (2020) found no association between nonverbal IQ and the frequency of echoes.
Inconsistent results were also found for the association between chronological age and echolalia, with some studies reporting no association (McEvoy et al., 1988) and others reporting that, in a group of 4- to 8-year-olds, older children tended to echo more than younger children (Van Santen et al., 2013). Finally, social responsiveness was reported to have no association with echoing in school-aged autistic children (Gladfelter & Vanzuiden, 2020).

Not only is echolalia absent in some autistic individuals, it also occurs in early typical development (Bloom et al., 1974) and in other disorders, such as aphasia, developmental language disorder, Williams syndrome, and Alzheimer's disease (Da Cruz, 2010; Rossi & Giacheti, 2017; Torres-Prioris & Berthier, 2021; Van Santen et al., 2013). Despite not being present across the whole spectrum, and despite occurring in typical development and other disorders, echolalia remains commonly associated with autism. This observation suggests that further research is needed to better understand the phenomenon and to describe more accurately the autistic individuals who do and do not echo. Inconsistent results regarding the frequency of echoing and the factors that influence it may stem from small and, sometimes, poorly defined samples of autistic children. It is well recognized that characteristics of autism manifest heterogeneously in diagnosed individuals, and this marked heterogeneity also extends to specifiers of autism, such as language abilities. For instance, school-aged autistic children and adolescents are usually described as falling into one of two categories: minimally verbal (Bal et al., 2016) or verbal. With a few notable exceptions (Butler et al., 2023; Joseph et al., 2019; La Valle et al., 2020; Skwerer et al., 2016), little is known about the language profiles of minimally verbal children and adolescents, even though they account for about 30% of individuals on the spectrum (Anderson et al., 2007; Pickles et al., 2014; Tager-Flusberg & Kasari, 2013). Given the unique speech profiles of minimally verbal autistic individuals (Butler et al., 2023; La Valle et al., 2020), it is reasonable to expect echolalia to manifest differently, and to be influenced by different individual characteristics, in autistic individuals from different language subgroups.

Current study

The overarching aim of the current study is to measure and characterize nongenerative speech (i.e., repetitions of others' and one's own utterances) in minimally verbal and verbally fluent autistic children and adolescents. More specifically, our study seeks to answer the following three questions:

How frequently do these different language subgroups of autistic individuals use spontaneous versus nongenerative utterances during natural speech?

Which individual characteristics (age, nonverbal IQ, autism severity, receptive vocabulary) of minimally verbal and verbally fluent autistic individuals are associated with their use of nongenerative speech?

Are the spontaneous and nongenerative utterances of minimally verbal and verbally fluent autistic individuals linguistically (morphosyntactic complexity, lexical diversity) comparable?

Methods

Participants

Participants who make up the sample described below were pooled from three different prior studies and recruited at Boston University and the Massachusetts Institute of Technology through schools, clinics, advertisements, autism-related events, and word-of-mouth. Study procedures were approved by the Boston University and Massachusetts Institute of Technology Institutional Review Boards and parents provided written informed consent. Testing of participants took place at Boston University and the Massachusetts Institute of Technology.

Participants included in the current study were 50 minimally verbal (mean age = 12.41, SD = 4.15, range = 6–21.17) and 50 verbally fluent (mean age = 12.6, SD = 3.96, range = 6.09–21.67) autistic individuals. English was the primary language spoken in their home. All participants, regardless of language status, had a diagnosis of autism spectrum disorder confirmed by meeting cut-off scores on the Autism Diagnostic Observation Schedule, second edition (ADOS-2; Lord et al., 2012), and on the Autism Diagnostic Interview-Revised (ADI-R; Lord et al., 1994) or Social Communication Questionnaire (SCQ; Rutter et al., 2003). Language subgroup status was determined based on the ADOS-2 module administered to participants in accordance with Bal et al. (2016). Minimally verbal participants younger than 12 years old (n = 17) were all administered a module 1, whereas minimally verbal participants older than 12 years old (n = 33) were administered module 1 of the Adapted-ADOS (Bal et al., 2021), which includes developmentally appropriate material and activities for older minimally verbal individuals. Verbally fluent participants received a module 3 (n = 32) or module 4 (n = 18). As a result, and based on ADOS-2 descriptions (Lord et al., 2012), participants in the minimally verbal group had language abilities ranging from no speech at all up to and including inconsistent use of simple phrases. Verbally fluent participants, on the other hand, were able to produce a range of flexible sentence types and grammatical forms, use language to refer to events beyond the immediate context, and combine sentences using logical connectors (Lord et al., 2012).

Measures

Autism severity

ADOS-2 total and subscale algorithm scores for Social Affect (SA) and Restricted and Repetitive Behaviors (RRB) were converted into calibrated severity scores (with higher scores indicating more severe symptomatology) to allow for comparisons between different modules (Hus et al., 2014). Due to a lack of validated calibrated severity scores specific to the Adapted-ADOS, calibrated severity scores for the Adapted-ADOS module 1 were derived from those available for the standard ADOS-2 (Hus et al., 2014). Readers should keep in mind that their validity for the Adapted-ADOS is not known.

Nonverbal IQ

Nonverbal IQ was measured using different assessments because participants were pooled from three different studies and because assessments were also chosen based on language status. Minimally verbal participants were administered the cognitive subtests of the Leiter International Performance Scale-Third Edition (n = 34; one participant could not complete the test) (Leiter-3; Roid et al., 2013) or the Raven's Colored Progressive Matrices (n = 15) (Raven et al., 1998). Verbally fluent participants were administered the Kaufman Brief Intelligence Test (n = 23) (Kaufman, 2004), the Wechsler Abbreviated Scale of Intelligence (n = 17) (Wechsler & Hsiao-pin, 2011), or the Raven's Colored Progressive Matrices (n = 10) (Raven et al., 1998). Raw scores on each test were converted into nonverbal IQ standard scores to allow for comparisons across ages and tests.

Receptive vocabulary

Receptive vocabulary was assessed for a subset of 29 minimally verbal and 26 verbally fluent participants using the Peabody Picture Vocabulary Test—Fourth Edition (PPVT-4; Dunn & Dunn, 2007). During the administration of the PPVT-4, the experimenter names one of four pictures and the participant is asked to point to the correct picture. Raw receptive vocabulary scores were converted into standard scores.

Participants’ characteristics, including ADOS-2 calibrated severity, nonverbal IQ and receptive vocabulary scores, are reported in Table 1.

Table 1.

Participants’ characteristics.

| Characteristic | Minimally verbal, mean (SD), range | Verbally fluent, mean (SD), range | W |
|---|---|---|---|
| Age in years | 12.41 (4.15), 6–21.17 | 12.6 (3.96), 6.09–21.67 | 1217 |
| Nonverbal IQ | 62.24 (18.11), 30–112 | 103.78 (20.52), 64–152 | 172.5*** |
| PPVT-4 receptive vocabulary, raw score | 33.69 (24.65), 3–93 | 174.25 (41.46), 73–222 | 2.5*** |
| PPVT-4 receptive vocabulary, standard score | 26.55 (13.1), 20–69 | 98.92 (28.72), 31–135 | 11*** |
| ADOS-2 calibrated severity, total | 7.48 (1.46), 5–10 | 6.72 (2.34), 1–10 | 1470 |
| ADOS-2 calibrated severity, social affect | 7.14 (1.52), 4–10 | 6.46 (2.42), 1–10 | 1428.5 |
| ADOS-2 calibrated severity, restricted and repetitive behaviors | 8.34 (1.53), 5–10 | 7.08 (2.48), 1–10 | 1630.5** |
| | N:N | N:N | χ2 |
| Male:female | 38:12 | 40:10 | .06 |
| | N (%) | N (%) | χ2 |
| Race | | | 1.92 |
| White | 34 (68) | 40 (80) | |
| Black or African American | 1 (2) | 1 (2) | |
| Asian | 4 (8) | 3 (6) | |
| Native Hawaiian or other Pacific Islander | 1 (2) | 0 (0) | |
| More than one | 7 (14) | 5 (10) | |
| Ethnicity | | | 1.15 |
| Hispanic | 3 (6) | 4 (8) | |
| Non-Hispanic | 43 (86) | 45 (90) | |
| Prefer not to respond | 1 (2) | 1 (2) | |
| Maternal education | | | 3.86 |
| Less than high school | 0 (0) | 1 (2) | |
| High school or GED | 4 (8) | 6 (12) | |
| Associate's degree or some college | 5 (10) | 3 (6) | |
| Bachelor's degree | 19 (38) | 21 (42) | |
| Master's degree | 8 (16) | 6 (12) | |
| Doctoral degree or equivalent | 2 (4) | 5 (10) | |
| Annual household income | | | 2.42 |
| < $20,000 | 1 (2) | 2 (4) | |
| $20,000–$49,999 | 8 (16) | 10 (20) | |
| $50,000–$99,999 | 8 (16) | 11 (22) | |
| > $100,000 | 13 (26) | 15 (30) | |
| Prefer not to respond | 17 (34) | 11 (22) | |

*** p < .001, ** p < .01, * p < .05. Two-sample Mann–Whitney U tests (reported as W) were performed to compare groups on age, nonverbal IQ, ADOS-2 total, social affect, and restricted and repetitive behaviors calibrated severity scores, and PPVT-4 receptive vocabulary. Chi-square tests of independence were performed to compare groups on sex, race, ethnicity, maternal education, and annual household income.

ADOS-2=Autism Diagnostic Observation Schedule, second edition; PPVT-4=Peabody Picture Vocabulary Test—Fourth Edition.
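As a reading aid for the W column, the sketch below computes the two-sample Mann–Whitney statistic as R's `wilcox.test` reports it: the number of cross-group pairs in which the first group's value is larger, with ties counting one half. The scores are hypothetical. Under this definition W ranges from 0 to n1 × n2, and values near n1 × n2 / 2 indicate no shift between groups. The very low W for nonverbal IQ and the high W for restricted and repetitive behaviors are consistent with the minimally verbal group having been entered first, although the table does not state the order.

```python
def mann_whitney_w(group1, group2):
    """Count pairs (x from group1, y from group2) with x > y; ties count 0.5."""
    w = 0.0
    for x in group1:
        for y in group2:
            if x > y:
                w += 1.0
            elif x == y:
                w += 0.5
    return w

# Hypothetical nonverbal IQ scores, not study data.
minimally_verbal = [55, 62, 70]
verbally_fluent = [98, 104, 60]
print(mann_whitney_w(minimally_verbal, verbally_fluent))  # 2.0 out of a possible 9
```

This pairwise definition is equivalent to the rank-sum form (rank sum of the first group minus n1(n1 + 1)/2), but it makes the "out of n1 × n2" interpretation explicit.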

Naturalistic language samples

ADOS-2 assessments were video- and audio-recorded and used as naturalistic spoken language samples. The ADOS-2 provides a reliable context for naturalistic spoken language sampling and has been used in previous work to sample spontaneous versus nongenerative spoken language (Gladfelter & Vanzuiden, 2020; Van Santen et al., 2013). In addition, it ensured context consistency within language subgroups (viz. all minimally verbal participants received an (Adapted) module 1, and all verbally fluent participants received a module 3 or 4). It also appeared better suited than other traditional sampling methods, which would have been inappropriate for specific subsets of our participants (e.g., parent–child interaction for older participants, narration for minimally verbal participants, free play for verbally fluent adolescents).

The first 30 min of each ADOS-2 video were transcribed following SALT transcription conventions (Miller & Nockerts, 2024). The language samples of participants and experimenters were segmented into spoken utterances using c-unit rules, where an utterance was defined as a main clause with all its dependent clauses. To account for vocal productions specific to minimally verbal autistic individuals, nonverbal vocalizations containing at least one English vowel and one English consonant were also counted as utterances (see Barokova et al., 2020, 2021). Once segmented and transcribed, participants' utterances were further coded on the transcripts following a pragmatic coding scheme designed by La Valle et al. (2020). In keeping with SALT conventions, codes were added in square brackets at the end of each participant utterance. Coders were encouraged to refer back to participants' video recordings only when the pragmatic function or unconventional language category of an utterance (see Language coding scheme below) was unclear.

Language coding scheme

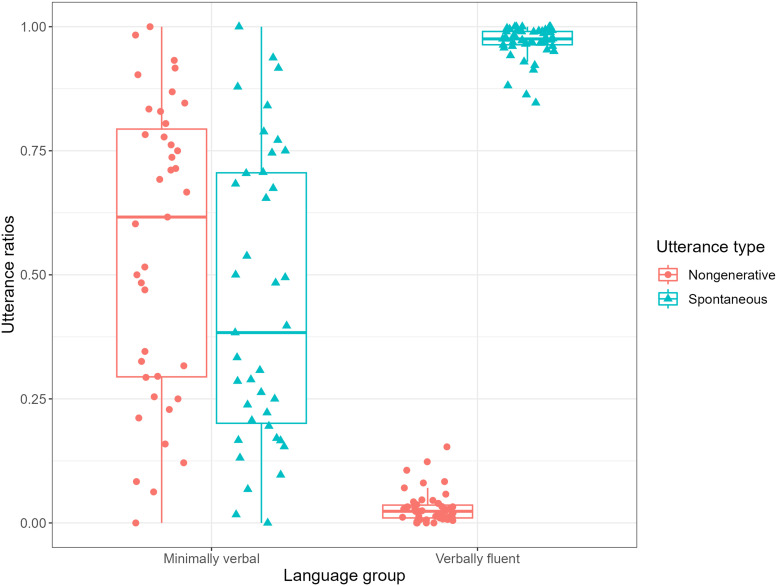

The language coding scheme was designed by La Valle et al. (2020) with the purpose of describing the pragmatic speech profiles of minimally verbal and verbally fluent autistic individuals. In their paper, La Valle et al. (2020) focused their analyses on the pragmatic functions of spontaneous utterances. The current study, however, focuses on analyses of spontaneous versus nongenerative speech. Only coding categories that are relevant or used in the analyses below will be described in detail. Detailed definitions of each relevant coding category are available in Table 2.

Table 2.

Coding scheme for spontaneity of utterance and category of unconventional language.

In the current study, intelligible verbal utterances were analyzed for spontaneity of utterance. Utterances were first assigned one of two codes: spontaneous or unconventional language (see Table 2). Spontaneous utterances were further assigned one of eight pragmatic function codes: question, request, protest, labeling, comment, self-regulation, agreement/acknowledgement/confirmation/disagreement, or response to a question (see La Valle et al., 2020 for further information). Unconventional utterances were further assigned one of four categories: repetition, scripted recitation, neologism, or idiosyncratic speech (see Table 2). Both repetitions and scripted recitations were identified as instances of nongenerative speech. Neologisms and idiosyncratic speech were identified as instances of unconventional generative, rather than nongenerative, speech and were excluded from further analyses.

The current study compared the spontaneous and nongenerative speech of minimally verbal and verbally fluent autistic individuals. Participants' spontaneous speech samples were composed of all utterances that received a “spontaneous” code, regardless of pragmatic function. Participants' nongenerative speech samples were composed of all utterances that received an “unconventional” code together with a “repetition” or “scripted recitation” code (see unconventional nongenerative utterances in Table 2).
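The sample-building rule above can be sketched as a small filter over coded transcript lines. This is a minimal illustration, not the authors' procedure (they extracted samples in SALT). The bracket labels `SPON`, `UNC`, `REP`, `SCR`, and `NEO` are hypothetical stand-ins for the study's codes, and treating lines that start with `C ` as participant utterances is an assumption about the transcript layout.

```python
import re

# Hypothetical code labels; the study's actual bracket codes are not given here.
SPONTANEOUS = "SPON"
UNCONVENTIONAL = "UNC"
NONGENERATIVE_CATEGORIES = {"REP", "SCR"}  # repetition, scripted recitation

CODE_PATTERN = re.compile(r"\[([^\]]+)\]")


def classify(line):
    """Return 'spontaneous', 'nongenerative', or None for one transcript line."""
    # Assumption: participant utterances start with the speaker prefix "C ".
    if not line.startswith("C "):
        return None
    codes = set(CODE_PATTERN.findall(line))
    if SPONTANEOUS in codes:
        return "spontaneous"
    if UNCONVENTIONAL in codes and codes & NONGENERATIVE_CATEGORIES:
        return "nongenerative"
    # Neologisms, idiosyncratic speech, and uncoded lines are excluded.
    return None


transcript = [
    "E What do you want?",
    "C juice please [SPON].",
    "C ready set go [UNC][SCR].",
    "C want juice [UNC][REP].",
    "C blorp [UNC][NEO].",
]
samples = {"spontaneous": [], "nongenerative": []}
for line in transcript:
    label = classify(line)
    if label:
        samples[label].append(line)
print({k: len(v) for k, v in samples.items()})  # {'spontaneous': 1, 'nongenerative': 2}
```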

Reliability

Language transcripts were transcribed using SALT based on video recordings of the ADOS-2 sessions by a first transcriber. Each transcript was then proofed by a second transcriber who reviewed the transcript while watching the ADOS-2 video recording. Any disagreement between the two transcribers was discussed and resolved by reaching a consensus referring back to SALT conventions (Miller & Nockerts, 2024).

Subsequently, all transcripts were coded for pragmatic functions by a primary coder. To assess intercoder reliability, 20 transcripts (i.e., 20% of the language samples; see Syed & Nelson, 2015) were coded by a second coder. Transcripts were chosen semirandomly, ensuring an even split of 10 minimally verbal and 10 verbally fluent participants' transcripts so that reliability was established in both language subgroups. Intercoder reliability was measured using Cohen's kappa. The primary and second coders reached excellent agreement for spontaneity of utterance (κ = .82, 95% confidence interval [CI] [.79, .84], p < .001) and for pragmatic function/unconventional language category (κ = .81, 95% CI [.80, .83], p < .001).
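For readers less familiar with Cohen's kappa, the sketch below computes the point estimate from two coders' labels: observed agreement corrected for the agreement expected by chance from each coder's label frequencies. It is a minimal illustration with hypothetical codes and does not compute the confidence intervals or p-values reported above.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who labeled the same utterances."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("expected two non-empty label lists of equal length")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement from each coder's marginal label frequencies.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / n**2
    if expected == 1:
        return float("nan")  # both coders used one identical label: kappa undefined
    return (observed - expected) / (1 - expected)


# Hypothetical codes: S = spontaneous, U = unconventional.
primary = ["S", "S", "U", "U", "S", "U", "S", "S"]
second = ["S", "S", "U", "S", "S", "U", "S", "U"]
print(round(cohens_kappa(primary, second), 3))  # 0.467
```

Here the coders agree on 6 of 8 utterances (75%), but because both use "S" most of the time, chance agreement is already about 53%, so kappa is well below the raw agreement.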

Data preparation for statistical analyses

Spontaneous and nongenerative utterances were analyzed in terms of (1) frequency of use and (2) linguistic structure. Both frequency and linguistic measures were extracted from the SALT software.

For frequency measures, total number of spontaneous utterances, total number of nongenerative utterances, and total number of intelligible verbal utterances were extracted for each participant. Proportions of spontaneous and nongenerative utterances were computed for each participant by dividing the total number of spontaneous utterances by the total number of intelligible verbal utterances, and the total number of nongenerative utterances by the total number of intelligible verbal utterances, to account for differences in sample length.
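The normalization above amounts to two divisions per participant. The sketch below uses hypothetical counts. It returns `None` when a participant has no intelligible verbal utterances, since the proportions are then undefined (11 minimally verbal participants were in this situation; see Results). If the denominator also counts unconventional generative utterances (neologisms, idiosyncratic speech), the two proportions need not sum to exactly 1.

```python
def utterance_proportions(n_spontaneous, n_nongenerative, n_intelligible):
    """Proportions of spontaneous and nongenerative utterances for one participant."""
    if n_intelligible == 0:
        # No intelligible verbal utterances: proportions are undefined.
        return None
    return (n_spontaneous / n_intelligible, n_nongenerative / n_intelligible)


# Hypothetical counts: 30 spontaneous, 8 nongenerative, 40 intelligible utterances.
print(utterance_proportions(30, 8, 40))  # (0.75, 0.2)
```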

For linguistic measures, Mean Length of Utterance (MLU) in morphemes, an index of morphosyntactic complexity, and the Moving-Average Type-Token Ratio (MATTR) were extracted separately for each participant's spontaneous and nongenerative utterances. The MATTR is a measure of lexical diversity derived from the more traditional Type-Token Ratio (i.e., the number of different words divided by the total number of words) (Covington & McFall, 2010; Fergadiotis et al., 2015). The MATTR computes Type-Token Ratios over successive overlapping windows of fixed length, each shifted by one word, and averages them, which accounts for differences in sample length. High MATTR values indicate high lexical diversity, and low values indicate low lexical diversity. The window size must be smaller than the shortest language sample. Because some participants produced very few words in their spontaneous and/or nongenerative speech, the MATTR window size was set to 10 (i.e., the lowest value available in SALT).

Analytic plan

All statistical analyses were implemented in R (R Core Development Team, 2019). Between-group differences in frequency of use of spontaneous and nongenerative utterances were investigated with generalized linear regression models using the glm function. Type of utterance (spontaneous vs. nongenerative) and Group (minimally verbal vs. verbally fluent) were used as independent variables. Relationships between proportions of nongenerative speech and individual characteristics (chronological age, nonverbal IQ, ADOS-2 SA and RRB calibrated severity scores, PPVT-4 receptive vocabulary) were investigated using Spearman correlations over the whole sample first, and then for each language group separately. Between-group differences in morphosyntactic complexity and lexical diversity of spontaneous and nongenerative utterances were investigated with linear regression models using the lm function. Type of utterance (spontaneous vs. nongenerative) and Group (minimally verbal vs. verbally fluent) were used as independent variables. Post hoc pairwise comparisons were implemented with Tukey adjustment using the emmeans function of the emmeans package (Lenth et al., 2020).
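The analyses were run in R. As an illustration only, the dependency-free Python sketch below computes Spearman's ρ as the Pearson correlation of ranks, with tied values sharing their average rank, which matches how R's `cor(..., method = "spearman")` treats ties. It computes ρ only, not p-values, and the inputs are hypothetical.

```python
def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the average ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical ages and proportions of nongenerative utterances.
age = [6, 9, 12, 15, 18]
nongenerative = [0.10, 0.08, 0.05, 0.05, 0.01]
print(round(spearman_rho(age, nongenerative), 3))  # -0.975
```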

Results

Frequency of spontaneous vs. nongenerative speech

Table 3 reports descriptive statistics for percentages of spontaneous and nongenerative utterances in both groups. There were 12 individuals in the minimally verbal group (24%) who did not produce any nongenerative utterances. However, 11 of these 12 individuals did not produce any verbal utterances (nongenerative or spontaneous). In other words, all but one minimally verbal participant with spoken language produced nongenerative utterances (i.e., in total, 97% of minimally verbal individuals with spoken language). There were four individuals in the verbally fluent group who did not produce any nongenerative utterances (i.e., 92% of verbally fluent individuals produced nongenerative utterances). Thus, 84% of all participants and 94% of those who had at least some spoken language produced one or more nongenerative utterances. A weighted generalized linear model with quasibinomial distribution on proportions of utterances with a Type of utterance×Group interaction as fixed effect revealed a significant main effect of Type of utterance (β = −.7, SE = .22, p < .01) and of Group (β = −3.8, SE = .23, p < .001), and a significant Type of utterance×Group interaction (β = 7.56, SE = .33, p < .001).
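The percentages in the paragraph above follow from the reported counts. The sketch below reproduces them. It assumes every verbally fluent participant produced some spoken language, which is consistent with the 94% figure.

```python
# Counts stated in the text; each language subgroup has N = 50.
mv_total, vf_total = 50, 50
mv_without_speech = 11         # no verbal utterances at all
mv_without_nongenerative = 12  # includes the 11 above
vf_without_nongenerative = 4

mv_with_speech = mv_total - mv_without_speech
mv_with_nongenerative = mv_total - mv_without_nongenerative
vf_with_nongenerative = vf_total - vf_without_nongenerative
all_with_nongenerative = mv_with_nongenerative + vf_with_nongenerative
# Assumption: every verbally fluent participant produced spoken language.
all_with_speech = mv_with_speech + vf_total


def pct(part, whole):
    return round(100 * part / whole)


print(pct(mv_with_nongenerative, mv_with_speech))         # 97 (38 of 39)
print(pct(vf_with_nongenerative, vf_total))               # 92 (46 of 50)
print(pct(all_with_nongenerative, mv_total + vf_total))   # 84 (84 of 100)
print(pct(all_with_nongenerative, all_with_speech))       # 94 (84 of 89)
```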

Table 3.

Descriptive statistics for percentages of spontaneous and nongenerative utterances in minimally verbal and verbally fluent autistic individuals.

| Group | Type of utterance | Mean % (SD) | Range (%) |
|---|---|---|---|
| Minimally verbal | Nongenerative | 55.51 (29.51) | 0–100 |
| Minimally verbal | Spontaneous | 44.64 (29.29) | 0–100 |
| Verbally fluent | Nongenerative | 3.02 (3.2) | 0–15.34 |
| Verbally fluent | Spontaneous | 96.85 (3.35) | 84.66–100 |

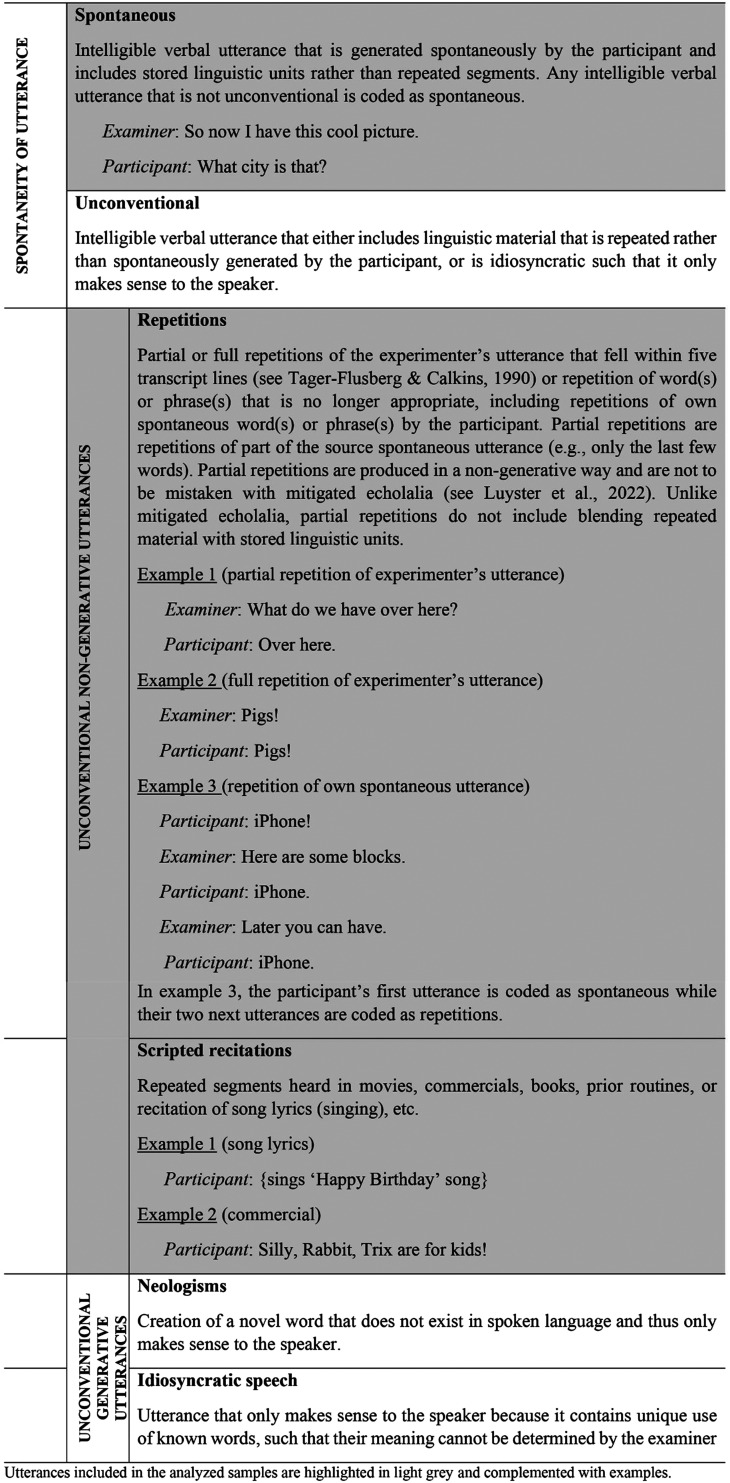

Post hoc pairwise comparisons on the Type of utterance×Group interaction showed that minimally verbal individuals produced higher rates of nongenerative versus spontaneous utterances (β = .7, SE = .22, p < .01), while verbally fluent individuals produced higher rates of spontaneous than nongenerative utterances (β = −6.86, SE = .25, p < .001). Consequently, minimally verbal individuals produced higher rates of nongenerative utterances than verbally fluent individuals (β = 3.8, SE = .23, p < .001) who, in turn, produced higher rates of spontaneous utterances than minimally verbal individuals (β = −3.76, SE = .23, p < .001). Figure 1 depicts proportions of nongenerative versus spontaneous utterances in minimally verbal and verbally fluent individuals.
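The β values from these post hoc comparisons are not on the percentage scale. Assuming they are differences in log-odds (the default scale of emmeans contrasts for a logit-link model unless `type = "response"` is requested), exponentiating them gives odds ratios. For example, exp(0.7) ≈ 2.0, meaning roughly twice the odds that an utterance is nongenerative rather than spontaneous in the minimally verbal group. The sketch below performs this conversion. The verbally fluent contrast is entered with its sign flipped (spontaneous vs. nongenerative) so that every ratio is above 1.

```python
import math


def odds_ratio(log_odds_contrast):
    """Convert a contrast on the logit (log-odds) scale into an odds ratio."""
    return math.exp(log_odds_contrast)


# Contrasts reported in the text, assumed to be on the logit scale.
contrasts = {
    "Minimally verbal: nongenerative vs. spontaneous": 0.7,
    "Verbally fluent: spontaneous vs. nongenerative": 6.86,
    "Nongenerative: minimally verbal vs. verbally fluent": 3.8,
}

for label, beta in contrasts.items():
    print(f"{label}: OR = {odds_ratio(beta):.1f}")
```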

Figure 1.

Boxplots of the proportions of nongenerative and spontaneous utterances in minimally verbal and verbally fluent autistic individuals.

Individual characteristics

The relationship between each individual characteristic (i.e., chronological age, nonverbal IQ, social affect, restricted and repetitive behaviors, and receptive vocabulary) and proportions of nongenerative utterances was investigated using Spearman correlations. Correlations were computed over the whole sample first, and then for each group separately (see Table 4).

Table 4.

Spearman's ρ for relationships between individual characteristics and proportions of nongenerative utterances.

| Individual characteristic | All participants (N = 100) | Minimally verbal (N = 50) | Verbally fluent (N = 50) |
|---|---|---|---|
| Chronological age | −.21 | −.29 | −.48*** |
| Nonverbal IQ | −.59*** | .23 | −.21 |
| PPVT-4 standard score | −.74*** | .31 | −.32 |
| ADOS-2 SA | .11 | .26 | −.07 |
| ADOS-2 RRB | .18 | .23 | .18 |

*** p < .001, ** p < .01, * p < .05. PPVT-4 scores are based on a subsample of 29 minimally verbal and 26 verbally fluent participants.

ADOS-2=Autism Diagnostic Observation Schedule, second edition; PPVT-4=Peabody Picture Vocabulary Test—Fourth Edition; RRB=Restricted and Repetitive Behaviors; SA=Social Affect.

Whole sample

Results of the Spearman correlations over the whole sample showed significant negative relationships between proportions of nongenerative utterances and nonverbal IQ (ρ = −.59, p < .001), and receptive vocabulary as measured by the PPVT-4 (ρ = −.74, p < .001). Participants who had higher nonverbal IQ and those who had higher receptive vocabulary scores tended to have lower proportions of nongenerative utterances in their speech. Relationships between proportions of nongenerative utterances and ADOS-2 SA and RRB calibrated severity scores, and chronological age were not significant (all p > .05).

Minimally verbal individuals

None of the individual characteristics were significantly correlated with proportions of nongenerative utterances when looking at the relationships in the group of minimally verbal individuals only (all p > .07).

Verbally fluent individuals

In the verbally fluent group, there was a statistically significant negative relationship between chronological age and proportions of nongenerative utterances (ρ = −.47, p < .001). Relationships between all other individual characteristics and proportions of nongenerative utterances were not significant (all p > .1).

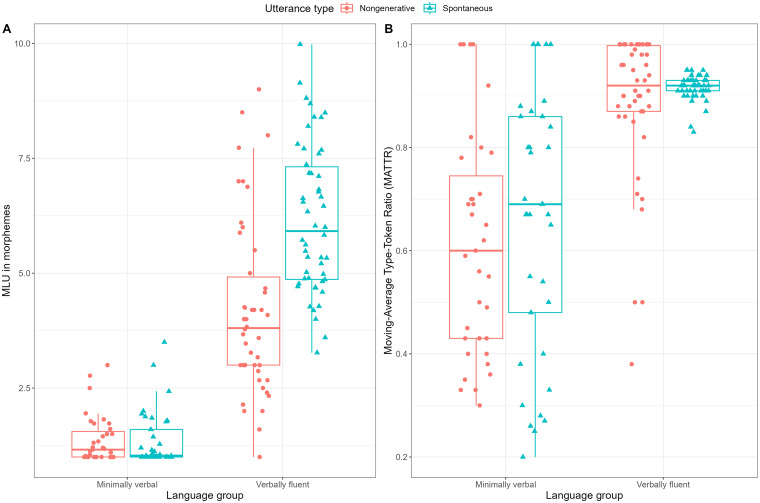

Linguistic structure of nongenerative speech

To compare the morphosyntactic complexity of spontaneous and nongenerative utterances between minimally verbal and verbally fluent autistic participants, we ran a linear regression model on MLU in morphemes with the Type of utterance×Group interaction as fixed effect. The model reached significance (F (3, 164) = 123.5, p < .001, R2 = .69) (see Figure 2A). Further exploration of the model indicated that, overall, there was no statistically significant difference in MLU in morphemes between spontaneous and nongenerative utterances (β = −.02, SE = .32, p = .96), and that, unsurprisingly, verbally fluent individuals produced utterances of significantly greater length than minimally verbal individuals (β = 2.8, SE = .31, p < .001). Post hoc pairwise comparisons on the Type of utterance×Group interaction revealed that minimally verbal individuals produced spontaneous and nongenerative utterances of comparable length (β = .02, SE = .32, p = .99), while verbally fluent individuals’ spontaneous utterances were significantly longer than their nongenerative utterances (β = −1.99, SE = .28, p < .001).
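Because the model includes the Type of utterance × Group interaction, the individual coefficients depend on how the factors are coded. Under treatment (dummy) coding, R's default for unordered factors, a model with two two-level factors and their interaction reproduces the four cell means exactly, so each coefficient is a difference between cell means. The sketch below makes this explicit with hypothetical MLU means. The reference levels (nongenerative, minimally verbal) are an assumption based on R's alphabetical default and are not stated in the text. Under this coding, the Type of utterance coefficient is the spontaneous-versus-nongenerative difference within the reference group rather than an average over both groups, which is why it is best read together with the pairwise comparisons.

```python
def interaction_model_coefficients(cell_means):
    """Coefficients of outcome ~ Type * Group under treatment coding.

    cell_means maps (utterance_type, group) to a mean outcome. Reference levels
    are assumed to be "nongenerative" (Type) and "MV" (Group).
    """
    m = cell_means
    intercept = m[("nongenerative", "MV")]
    # Spontaneous minus nongenerative, within the reference group (MV) only.
    type_coef = m[("spontaneous", "MV")] - intercept
    # VF minus MV, within the reference utterance type (nongenerative) only.
    group_coef = m[("nongenerative", "VF")] - intercept
    # Change in the spontaneous-minus-nongenerative difference from MV to VF.
    interaction_coef = (m[("spontaneous", "VF")] - m[("nongenerative", "VF")]) - type_coef
    return intercept, type_coef, group_coef, interaction_coef


# Hypothetical MLU cell means (morphemes), not the study's values.
means = {
    ("nongenerative", "MV"): 1.5,
    ("spontaneous", "MV"): 1.5,
    ("nongenerative", "VF"): 4.0,
    ("spontaneous", "VF"): 6.0,
}
print(interaction_model_coefficients(means))  # (1.5, 0.0, 2.5, 2.0)
```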

Figure 2.

Morphosyntactic complexity (A) and lexical diversity (B) of spontaneous and nongenerative utterances in minimally verbal and verbally fluent individuals.
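MLU in morphemes, the measure of morphosyntactic complexity shown in panel A, is the total number of morphemes divided by the number of utterances. The sketch below assumes, as a simplification, that each utterance is a whitespace-separated string in which bound morphemes are split off with a slash (e.g., `dog/s`), loosely modeled on SALT-style transcription. Actual SALT conventions, including which utterances are excluded from the count, are richer than this, and the function names and example utterances are hypothetical.

```python
def count_morphemes(utterance):
    """Count morphemes, assuming bound morphemes are split off with '/'.

    'dog/s' -> 2, 'jump/ed' -> 2, 'run' -> 1. This is a simplified,
    hypothetical stand-in for full transcription conventions.
    """
    return sum(len([part for part in word.split("/") if part])
               for word in utterance.split())


def mlu_morphemes(utterances):
    """Mean length of utterance in morphemes: total morphemes / utterances."""
    if not utterances:
        raise ValueError("MLU is undefined for an empty set of utterances")
    return sum(count_morphemes(u) for u in utterances) / len(utterances)


# Hypothetical utterances, not study data: 4 + 3 + 1 + 2 = 10 morphemes over 4 utterances.
sample = ["the dog/s run", "I want juice", "more", "jump/ed"]
print(mlu_morphemes(sample))  # 2.5
```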

Similarly, we ran a second linear regression model to compare MATTR between nongenerative and spontaneous utterances. A model with Type of utterance, Group, and their interaction as fixed effects was fitted and reached significance (F(3, 164) = 33.29, p < .001, R² = .37) (see Figure 2B). Overall, lexical diversity (as measured by MATTR) did not differ between spontaneous and nongenerative utterances (β = .05, SE = .04, p = .21), and verbally fluent individuals showed greater lexical diversity than minimally verbal individuals (β = .27, SE = .04, p < .001). The Type of utterance×Group interaction was not statistically significant (β = −.02, SE = .05, p = .68).
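MATTR (Covington & McFall, 2010) reduces the length sensitivity of the plain type–token ratio by sliding a fixed-size window through the tokens, computing the TTR inside each window, and averaging the results. The sketch below is a minimal illustration that assumes already tokenized, case-normalized input. The window size used in this study is not stated in this section, so `window=10` is an arbitrary assumption, as is the choice to fall back to the plain TTR when a sample is shorter than the window.

```python
def ttr(tokens):
    """Type-token ratio: distinct word forms divided by total tokens."""
    return len(set(tokens)) / len(tokens)


def mattr(tokens, window=10):
    """Moving-average type-token ratio.

    Averages the TTR of every run of `window` consecutive tokens. The default
    window is an assumption; samples no longer than the window fall back to
    the plain TTR of the whole sample (also an assumption).
    """
    if not tokens:
        raise ValueError("MATTR is undefined for an empty token list")
    if window < 1:
        raise ValueError("window must be at least 1")
    if len(tokens) <= window:
        return ttr(tokens)
    ratios = [ttr(tokens[i:i + window]) for i in range(len(tokens) - window + 1)]
    return sum(ratios) / len(ratios)


# Toy example: windows of 2 tokens give TTRs 0.5, 0.5, 1.0, 0.5.
tokens = "go go go car car".split()
print(mattr(tokens, window=2))  # 0.625
```

Because every window has the same length, samples of different sizes (such as the typically short nongenerative samples) are compared on a common footing, which is why a moving-average measure is preferable to raw TTR here.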

Discussion

Using naturalistic language samples collected during semistructured experimenter–participant interactions, we compared and characterized minimally verbal and verbally fluent autistic children and adolescents’ use of spontaneous and nongenerative speech, including immediate repetitions of others’ speech, self-repetitions, and scripted recitations. Specifically, we sought to compare the frequency of spontaneous and nongenerative utterances between language subgroups, to identify individual characteristics associated with nongenerative speech use, and to compare the linguistic structure of spontaneous and nongenerative utterances in both subgroups.

Frequency of spontaneous vs. nongenerative speech

A very high proportion of participants engaged in nongenerative speech overall. Nongenerative speech was especially prevalent in minimally verbal individuals. First, almost all minimally verbal individuals who had some spoken language produced nongenerative speech. Second, minimally verbal individuals used nongenerative speech more frequently than verbally fluent individuals. Finally, minimally verbal individuals, unlike their verbally fluent peers who were far more spontaneous than nongenerative, were more nongenerative than spontaneous in their speech. Taken together, these observations indicate that nongenerative speech is a salient and prevalent feature of minimally verbal children and adolescents’ spoken language.

Ours is the first study to systematically examine and describe how minimally verbal children and adolescents use spontaneous versus nongenerative speech. Our results show that the speech of minimally verbal individuals is composed of both spontaneous and nongenerative utterances. However, minimally verbal individuals use nongenerative speech more frequently than spontaneous speech. There is also great variability in the proportion of nongenerative utterances produced by minimally verbal individuals, ranging from 0% to 96%. Those numbers, and their wide variability, are consistent with those found in previous research (Cantwell & Baker, 1978; Fusaroli et al., 2023; Gladfelter & Vanzuiden, 2020; Howlin, 1982; Local & Wootton, 2009; McEvoy et al., 1988; Prizant & Duchan, 1981; Prizant & Rydell, 1984; Rydell & Mirenda, 1991, 1994; Tager-Flusberg & Calkins, 1990; Van Santen et al., 2013).

Most verbally fluent individuals also engaged in some nongenerative speech but, proportionally, used it far less often than minimally verbal individuals. The vast majority of verbally fluent individuals’ speech was spontaneous. That many verbally fluent individuals repeat their own words or those of others, but only occasionally, may reflect features of more mature conversations, in which linguistic repetitions occur for a variety of reasons and serve as a fruitful sociocommunicative tool (see Dideriksen et al., 2023; Fusaroli & Tylén, 2016), rather than a “symptomatic” manifestation of immediate or delayed echolalia. From a normative rather than clinical perspective, complete or partial repetitions can also be framed as lexical or syntactic alignment, which has been found to occur in both autistic and typically developing children's conversations during parent–child free play (Fusaroli et al., 2023). Comparing the quantity and quality of verbally fluent autistic individuals’ repetitions during conversations with those of neurotypical peers would help determine whether some repetitions are atypical and symptomatic in nature while others occur naturally and are expected in typical conversations.

Individual characteristics

The marked difference in the frequency of nongenerative utterances between language groups, as well as the striking variability in frequency within the minimally verbal group, strongly motivates a thorough investigation of individual characteristics that may relate to nongenerative speech.

Over the whole sample of participants, nonverbal IQ and receptive vocabulary were associated with the use of nongenerative speech. Autistic individuals with higher nonverbal IQ scores and those with higher receptive vocabulary tended to use less nongenerative speech, which is consistent with some previous reports (McEvoy et al., 1988; Van Santen et al., 2013). These associations are in line with the idea that echolalia decreases as cognitive and language comprehension abilities increase (Gernsbacher et al., 2016; Howlin, 1982). However, neither relationship held when we examined each subgroup separately. The absence of an association between nonverbal IQ and nongenerative speech in verbally fluent individuals is consistent with previous findings on school-aged children (Gladfelter & Vanzuiden, 2020). The fact that the whole-sample relationships did not replicate within either language subgroup could indicate that they simply reflect between-group differences already evident in our first analysis: our minimally verbal participants had lower nonverbal IQ and receptive vocabulary scores than our verbally fluent participants.
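The possibility that whole-sample correlations merely reflect between-group differences can be illustrated with a toy example. The data below are synthetic and chosen by hand, not drawn from the study: within each group, nonverbal IQ has no rank relationship with the proportion of nongenerative utterances (ρ = 0), yet because one group scores lower on IQ and higher on the proportion, pooling the two groups yields a strong negative ρ. The compact Spearman helper (average ranks, then Pearson's r) and all variable names are hypothetical illustrations.

```python
from math import sqrt


def ranks(values):
    """Average 1-based ranks: (# smaller values) + (# equal values + 1) / 2."""
    return [sum(w < v for w in values) + (sum(w == v for w in values) + 1) / 2
            for v in values]


def spearman(x, y):
    """Spearman's rho as Pearson's r on average ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / sqrt(sxx * syy)


# Synthetic toy data, not study data: the groups differ in level on both
# variables, but neither group shows a within-group relationship.
mv_iq, mv_prop = [50, 55, 60, 65], [0.70, 0.90, 0.90, 0.70]
vf_iq, vf_prop = [100, 105, 110, 115], [0.05, 0.15, 0.15, 0.05]

print(spearman(mv_iq, mv_prop))  # 0.0
print(spearman(vf_iq, vf_prop))  # 0.0
print(round(spearman(mv_iq + vf_iq, mv_prop + vf_prop), 2))  # -0.78
```

Neither the IQ values nor the proportions are meant to be realistic; the point is only that a pooled correlation can arise entirely from group membership, which is why within-group analyses are needed before interpreting a whole-sample association as an individual-level relationship.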

None of the associations between nongenerative speech and the individual characteristics reached statistical significance in the group of minimally verbal individuals. This is all the more surprising given the marked within-group heterogeneity both in the frequency of nongenerative speech and in each of the individual characteristics under scrutiny. It has been argued that autistic individuals use echolalia when cognitive demands are too high and exceed their comprehension abilities (Rydell & Mirenda, 1991). However, this does not seem to explain why some minimally verbal individuals echo more than others. Other factors might be at play in this specific subgroup, such as anxiety, level of dysregulation, sensory profile, restricted and repetitive behaviors, or co-occurring attention-deficit/hyperactivity disorder or obsessive-compulsive disorder.

Finally, in verbally fluent individuals, the use of nongenerative speech was significantly (and inversely) associated with chronological age only. As mentioned above, echolalia is said to decrease as cognitive and language abilities increase (Gernsbacher et al., 2016). Echolalia is also thought of as a language acquisition tool and a potential productive bridge toward self-generated spontaneous speech for some autistic children (viz. those with higher cognitive and language abilities) (Prizant, 1983). From that perspective, it would make sense that verbally fluent individuals rely more on (self-)repetitions when they are younger and thus more likely to engage in conversations whose demands exceed their language abilities. As they grow older and build stronger language abilities, they face fewer situations in which they need to repeat their own words or those of others to keep up with the conversation.

Linguistic structure

Nongenerative utterances of minimally verbal individuals were comparable to their spontaneous utterances in both morphosyntactic complexity and lexical diversity. This finding indicates that the linguistic complexity of nongenerative speech does not exceed that of spontaneous speech. The same held for verbally fluent individuals, whose spontaneous utterances were, in fact, significantly more complex than their nongenerative utterances in terms of morphosyntax, while their spontaneous and nongenerative utterances were comparable in lexical diversity.

The fact that, in our sample, the linguistic structure of nongenerative speech reflects autistic individuals’ spontaneous language ability is notable. It is inconsistent with early studies that found echoed utterances to be more grammatically advanced than spontaneous utterances (Howlin, 1982; Prizant & Rydell, 1984), as well as with recent findings stressing the role of partial repetitions and linguistic alignment in promoting language development in both autistic and typically developing preschoolers (Fusaroli et al., 2023). If echolalia does promote language development, echoes could be expected to be more linguistically complex than spontaneous utterances so that they can act as language rehearsal. Our sample is composed of older children and adolescents and is not the most appropriate population from which to draw firm conclusions about the developmental role of echolalia. However, one notable early study of a small sample (n = 4) of autistic preschoolers also found echoed utterances to be shorter (as measured by MLU) than spontaneous utterances (Tager-Flusberg & Calkins, 1990).

Limitations and future directions

Nongenerative speech appears to make up a significant part of minimally verbal individuals’ speech. In autism research, standard practice in analyses of naturalistic language samples is to include only spontaneous utterances (Tager-Flusberg et al., 2009). However, our results suggest that accounts of minimally verbal individuals’ speech would be more comprehensive if they described both spontaneous and nongenerative language. Nongenerative speech in minimally verbal individuals therefore deserves more attention. To understand what leads minimally verbal individuals to repeat the words of others, future studies could examine the (communicative) functions of their echoes and self-repetitions in more depth. There has been extensive work on the functions of both immediate and delayed echolalia in autism (Prizant & Duchan, 1981; Prizant & Rydell, 1984). However, this work has never focused specifically on older minimally verbal children and adolescents, even though they are likely to repeat words more frequently than other language subgroups of autistic individuals.

Relying on sociocommunicative and prosodic cues may support a more thorough investigation of the communicative intent behind autistic individuals’ repetitions and their specific functions. In our study, we relied on transcripts, that is, on the linguistic content of utterances, to determine whether each utterance was spontaneous or nongenerative. Integrating information from sociocommunicative and prosodic cues may help differentiate repetitions used as a fruitful linguistic alignment tool from echoes that may warrant further attention from speech therapists. In addition, coding utterances for spontaneity remains challenging and sometimes involves a good deal of the coder's “gut” feeling and subjectivity, especially when relying on prosody. Comparing the efficiency of different coding schemes (e.g., one that relies on linguistic structure alone vs. one that relies on linguistic structure and prosody) on a single dataset may be extremely useful to researchers wishing to integrate analyses of spontaneous and nongenerative speech into their work.

Our study was the first to investigate nongenerative speech in minimally verbal children and adolescents, but it was limited to spoken language only. Some minimally verbal individuals may also use other modes of communication, including Augmentative and Alternative Communication (AAC). Minimally verbal individuals thus may be able to produce both spontaneous and nongenerative AAC productions, with a variety of forms and functions, in addition to spoken utterances. Future studies could purposefully design sampling methods where both spoken language and AAC productions are sampled in order to capture minimally verbal individuals’ language profiles more comprehensively.

Given the age of our participants, the interpretation of our results on the linguistic structure is limited when it comes to determining the role that echolalia could play in the development of language. Our results thus advocate for more research looking at the characteristics of echolalia in young autistic preschoolers who are in the process of acquiring language and who may display different levels of language abilities.

Conclusions

Nongenerative speech presents very differently in minimally verbal and verbally fluent children and adolescents. Compared with verbally fluent individuals, minimally verbal individuals use nongenerative speech far more. Not only do most minimally verbal individuals who have access to spoken language produce nongenerative utterances, they also produce them at higher rates than spontaneous utterances. This indicates that nongenerative speech is a highly prevalent, major feature of spoken language in minimally verbal individuals and likely plays a different role for minimally verbal and verbally fluent individuals.

Implications

Taken together, our results for both minimally verbal and verbally fluent individuals advocate for clinical practices that promote and work with echoes and self-repetitions rather than seeking to decrease them.

First, nongenerative speech was the dominant form of speech in minimally verbal individuals. Given that most manifestations of language in minimally verbal individuals seem to be repeated, echoes and self-repetitions should be regarded as instances of language that could be functionalized for communicative purposes (Sterponi & Shankey, 2014) rather than as something to decrease or suppress (Neely et al., 2016). In fact, trying to decrease or suppress echoes or self-repetitions in minimally verbal individuals would deprive them of most of their spoken language. In a clinical setting, it may be important to respond to and engage with the echoes and self-repetitions of minimally verbal individuals as a way to maintain social communication and joint engagement, both of which favor language development (Shih et al., 2021).

Similarly, if verbally fluent individuals’ patterns of repetition resemble what the nonclinical literature refers to as linguistic alignment, seeking to decrease or suppress those kinds of repetitions would be equally detrimental. As mentioned earlier, linguistic alignment seems to play a role not only in typical mature conversations (Fusaroli & Tylén, 2016) but also in language development (Fusaroli et al., 2023). As a result, young autistic children should not be discouraged from repeating the words of others and aligning their words with those of their conversational partners as they start engaging in conversations.

All in all, if there exist different types of echoes and repetitions, with one being characteristic of minimally verbal autism and the other being a common tool for successful conversations, more care should be put into trying to tell those apart, both for clinical and research purposes.

Acknowledgments

The authors would like to thank the study participants and their families, the members of the Center for Autism Research Excellence who made this work possible, as well as the National Institute on Deafness and Other Communication Disorders and the Belgian American Educational Foundation for their financial support.

Only a subset of participants completed the PPVT-4 because it was not included in the study procedures of all three studies from which participants were drawn.

The coding scheme follows conventions established by Luyster et al. (2022) to categorize the different forms of (un)conventional language behaviors coded in our language sample.

Footnotes

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by research grants from the National Institute on Deafness and Other Communication Disorders (P50 DC013027, P50 DC18006, R01 DC011339, and R01 DC01029003S1) and by the Belgian American Educational Foundation.

ORCID iD: Pauline Maes https://orcid.org/0000-0001-6283-5896

Contributor Information

Pauline Maes, Department of Psychological & Brain Sciences, Boston University, Boston, MA, USA.

Chelsea La Valle, Down Syndrome Program, Division of Developmental Medicine, Department of Pediatrics, Boston Children's Hospital, Harvard Medical School, Boston, MA, USA.

Helen Tager-Flusberg, Department of Psychological & Brain Sciences, Boston University, Boston, MA, USA.

References

- American Psychiatric Association (2013). Diagnostic and statistical manual of mental disorders (5th ed.).

- Anderson D. K., Lord C., Risi S., DiLavore P. S., Shulman C., Thurm A., Welch K., Pickles A. (2007). Patterns of growth in verbal abilities among children with autism spectrum disorder. Journal of Consulting and Clinical Psychology, 75(4), 594–604. 10.1037/0022-006X.75.4.594

- Bal V. H., Katz T., Bishop S. L., Krasileva K. (2016). Understanding definitions of minimally verbal across instruments: Evidence for subgroups within minimally verbal children and adolescents with autism spectrum disorder. Journal of Child Psychology and Psychiatry, 57(12), 1424–1433. 10.1111/JCPP.12609

- Bal V. H., Maye M., Salzman E., Huerta M., Pepa L., Risi S., Lord C. (2021). Correction to: The adapted ADOS: a new module set for the assessment of minimally verbal adolescents and adults. Journal of Autism and Developmental Disorders, 51(12), 4504–4505. 10.1007/S10803-021-04888-Y

- Barokova M. D., Hassan S., Lee C., Xu M., Tager-Flusberg H. (2020). A comparison of natural language samples collected from minimally and low-verbal children and adolescents with autism by parents and examiners. Journal of Speech, Language, and Hearing Research, 63(12), 4018–4028. 10.1044/2020_JSLHR-20-00343

- Barokova M. D., La Valle C., Hassan S., Lee C., Xu M., McKechnie R., Johnston E., Krol M. A., Leano J., Tager-Flusberg H. (2021). Eliciting language samples for analysis (ELSA): A new protocol for assessing expressive language and communication in autism. Autism Research, 14(1), 112–126. 10.1002/AUR.2380

- Bloom L., Hood L., Lightbown P. (1974). Imitation in language development: If, when, and why. Cognitive Psychology, 6(3), 380–420. 10.1016/0010-0285(74)90018-8

- Butler L. K., Shen L., Chenausky K. V., La Valle C., Schwartz S., Tager-Flusberg H. (2023). Lexical and morphosyntactic profiles of autistic youth with minimal or low spoken language skills. American Journal of Speech-Language Pathology, 32(2), 733–747. 10.1044/2022_AJSLP-22-00098

- Cantwell D. P., Baker L. (1978). Imitations and echoes in autistic and dysphasic children. Journal of the American Academy of Child Psychiatry, 17(4), 614–624. 10.1016/S0002-7138(09)61015-3

- Cohn E. G., McVilly K. R., Harrison M. J., Stiegler L. N. (2022). Repeating purposefully: Empowering educators with functional communication models of echolalia in autism. Autism & Developmental Language Impairments, 7. 10.1177/23969415221091928

- Covington M. A., McFall J. D. (2010). Cutting the gordian knot: The moving-average type–token ratio (MATTR). Journal of Quantitative Linguistics, 17(2), 94–100. 10.1080/09296171003643098

- Da Cruz F. M. (2010). Verbal repetitions and echolalia in Alzheimer’s discourse. Clinical Linguistics & Phonetics, 24(11), 848–858. 10.3109/02699206.2010.511403

- Dideriksen C., Christiansen M. H., Tylén K., Dingemanse M., Fusaroli R. (2023). Quantifying the interplay of conversational devices in building mutual understanding. Journal of Experimental Psychology: General, 152(3), 864–889. 10.1037/XGE0001301

- Dunn L. M., Dunn D. M. (2007). Peabody picture vocabulary test (4th ed.). American Guidance Service.

- Fergadiotis G., Wright H. H., Green S. B. (2015). Psychometric evaluation of lexical diversity indices: Assessing length effects. Journal of Speech, Language and Hearing Research, 58(3), 840–852. 10.1044/2015_JSLHR-L-14-0280

- Fusaroli R., Tylén K. (2016). Investigating conversational dynamics: Interactive alignment, interpersonal synergy, and collective task performance. Cognitive Science, 40(1), 145–171. 10.1111/COGS.12251

- Fusaroli R., Weed E., Rocca R., Fein D., Naigles L. (2023). Repeat after me? Both children with and without autism commonly align their language with that of their caregivers. Cognitive Science, 47(11), e13369. 10.1111/COGS.13369

- Gernsbacher M. A., Morson E. M., Grace E. J. (2016). Language and speech in autism. Annual Review of Linguistics, 2(1), 413–425. 10.1146/ANNUREV-LINGUIST-030514-124824

- Gladfelter A., Vanzuiden C. (2020). The influence of language context on repetitive speech use in children with autism spectrum disorder. American Journal of Speech-Language Pathology, 29(1), 327–334. 10.1044/2019_AJSLP-19-00003

- Howlin P. (1982). Echolalic and spontaneous phrase speech in autistic children. Journal of Child Psychology and Psychiatry, 23(3), 281–293. 10.1111/J.1469-7610.1982.TB00073.X

- Hus V., Gotham K., Lord C. (2014). Standardizing ADOS domain scores: Separating severity of social affect and restricted and repetitive behaviors. Journal of Autism and Developmental Disorders, 44(10), 2400–2412. 10.1007/S10803-012-1719-1

- Joseph R. M., Skwerer D. P., Eggleston B., Meyer S. R., Tager-Flusberg H. (2019). An experimental study of word learning in minimally verbal children and adolescents with autism spectrum disorder. Autism & Developmental Language Impairments, 4, 1–13. 10.1177/2396941519834717

- Kanner L. (1943). Autistic disturbances of affective contact. Nervous Child, 2, 217–250.

- Kaufman A. S. (2004). Kaufman brief intelligence test (2nd ed.). American Guidance Services.

- Kim S. H., Paul R., Tager-Flusberg H., Lord C. (2014). Language and communication in autism. In Volkmar F. R., Paul R., Rogers S. J., Pelphrey K. A. (Eds.), Handbook of autism and pervasive developmental disorders: diagnosis, development, and brain mechanisms (pp. 230–262). John Wiley & Sons, Inc.

- La Valle C., Plesa-Skwerer D., Tager-Flusberg H. (2020). Comparing the pragmatic speech profiles of minimally verbal and verbally fluent individuals with autism spectrum disorder. Journal of Autism and Developmental Disorders, 50(10), 3699–3713. 10.1007/s10803-020-04421-7

- Lenth R., Singmann H., Love J., Buerkner P., Herve M. (2020). Package ‘emmeans’. CRAN Repository.

- Local J., Wootton T. (2009). Interactional and phonetic aspects of immediate echolalia in autism: A case study. Clinical Linguistics & Phonetics, 9(2), 155–184. 10.3109/02699209508985330

- Lord C., Rutter M., DiLavore P. C., Risi S., Gotham K., Bishop S. (2012). Autism diagnostic observation schedule, second edition (ADOS-2). Western Psychological Services.

- Lord C., Rutter M., Le Couteur A. (1994). Autism diagnostic interview-revised: A revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders, 24(5), 659–685. 10.1007/BF02172145

- Luyster R. J., Zane E., Weil L. W. (2022). Conventions for unconventional language: Revisiting a framework for spoken language features in autism. Autism & Developmental Language Impairments, 7. 10.1177/23969415221105472

- McEvoy R. E., Loveland K. A., Landry S. H. (1988). The functions of immediate echolalia in autistic children: A developmental perspective. Journal of Autism and Developmental Disorders, 18(4), 657–668. 10.1007/BF02211883

- Miller J., Nockerts A. (2024). Systematic analysis of language transcripts (SALT), version 24 [computer software]. SALT Software, LLC.

- Neely L., Gerow S., Rispoli M., Lang R., Pullen N. (2016). Treatment of echolalia in individuals with autism spectrum disorder: A systematic review. Review Journal of Autism and Developmental Disorders, 3(1), 82–91. 10.1007/S40489-015-0067-4

- Pickles A., Anderson D. K., Lord C. (2014). Heterogeneity and plasticity in the development of language: A 17-year follow-up of children referred early for possible autism. Journal of Child Psychology and Psychiatry, and Allied Disciplines, 55(12), 1354–1362. 10.1111/JCPP.12269

- Prizant B. M. (1983). Language acquisition and communicative behavior in autism: Toward an understanding of the “whole” of it. Journal of Speech and Hearing Disorders, 48(3), 296–307. 10.1044/JSHD.4803.296

- Prizant B. M., Duchan J. F. (1981). The functions of immediate echolalia in autistic children. The Journal of Speech and Hearing Disorders, 46(3), 241–249. 10.1044/JSHD.4603.241

- Prizant B. M., Rydell P. J. (1984). Analysis of functions of delayed echolalia in autistic children. Journal of Speech and Hearing Research, 27(2), 183–192. 10.1044/jshr.2702.183

- Raven J. C., Court J. H., Raven J. E. (1998). Raven’s coloured progressive matrices. Harcourt Assessment.

- R Core Development Team (2019). R: A language and environment for statistical computing. R Foundation for Statistical Computing.

- Roid G. H., Miller L. J., Pomplun M., Koch C. (2013). Leiter international performance scale—third edition. Stoelting.

- Rossi N. F., Giacheti C. M. (2017). Association between speech-language, general cognitive functioning and behaviour problems in individuals with Williams syndrome. Journal of Intellectual Disability Research, 61(7), 707–718. 10.1111/jir.12388

- Rutter M., Bailey A., Lord C. (2003). SCQ. The social communication questionnaire. Western Psychological Services.

- Ryan S., Roberts J., Beamish W. (2022). Echolalia in autism: A scoping review. International Journal of Disability, Development and Education, 69(6), 1–16. 10.1080/1034912X.2022.2154324

- Rydell P. J., Mirenda P. (1991). The effects of two levels of linguistic constraint on echolalia and generative language production in children with autism. Journal of Autism and Developmental Disorders, 21(2), 131–157. 10.1007/BF02284756

- Rydell P. J., Mirenda P. (1994). Effects of high and low constraint utterances on the production of immediate and delayed echolalia in young children with autism. Journal of Autism and Developmental Disorders, 24(6), 719–735. 10.1007/BF02172282

- Shih W., Shire S., Chang Y. C., Kasari C. (2021). Joint engagement is a potential mechanism leading to increased initiations of joint attention and downstream effects on language: JASPER early intervention for children with ASD. Journal of Child Psychology and Psychiatry, 62(10), 1228–1235. 10.1111/JCPP.13405

- Skwerer D. P., Jordan S. E., Brukilacchio B. H., Tager-Flusberg H. (2016). Comparing methods for assessing receptive language skills in minimally verbal children and adolescents with autism spectrum disorders. Autism, 20(5), 591–604. 10.1177/1362361315600146

- Sterponi L., Shankey J. (2014). Rethinking echolalia: Repetition as interactional resource in the communication of a child with autism. Journal of Child Language, 41(2), 275–304. 10.1017/S0305000912000682

- Stiegler L. N. (2015). Examining the echolalia literature: Where do speech-language pathologists stand? American Journal of Speech-Language Pathology, 24(4), 750–762. 10.1044/2015_AJSLP-14-0166

- Syed M., Nelson S. C. (2015). Guidelines for establishing reliability when coding narrative data. Emerging Adulthood, 3(6), 375–387. 10.1177/2167696815587648

- Tager-Flusberg H., Calkins S. (1990). Does imitation facilitate the acquisition of grammar? Evidence from a study of autistic, Down’s syndrome and normal children. Journal of Child Language, 17(3), 591–606. 10.1017/S0305000900010898

- Tager-Flusberg H., Kasari C. (2013). Minimally verbal school-aged children with autism spectrum disorder: The neglected end of the spectrum. Autism Research, 6(6), 468–478. 10.1002/AUR.1329

- Tager-Flusberg H., Rogers S., Cooper J., Landa R., Lord C., Paul R., Rice M., Stoel-Gammon C., Wetherby A., Yoder P. (2009). Defining spoken language benchmarks and selecting measures of expressive language development for young children with autism spectrum disorders. Journal of Speech, Language, and Hearing Research, 52(3), 643–652. 10.1044/1092-4388(2009/08-0136)

- Torres-Prioris M. J., Berthier M. L. (2021). Echolalia: Paying attention to a forgotten clinical feature of primary progressive aphasia. European Journal of Neurology, 28(4), 1102–1103. 10.1111/ENE.14712

- Van Santen J. P. H., Sproat R. W., Hill A. P. (2013). Quantifying repetitive speech in autism spectrum disorders and language impairment. Autism Research, 6(5), 372–383. 10.1002/AUR.1301

- Wechsler D., Hsiao-pin C. (2011). WASI II: Wechsler abbreviated scale of intelligence (2nd ed.). Psychological Corporation.