Abstract

The rapid advancement of computational infrastructure has led to unprecedented growth in machine learning, deep learning, and computer vision, fundamentally transforming the analysis of retinal images. By utilizing a wide array of visual cues extracted from retinal fundus images, sophisticated artificial intelligence models have been developed to diagnose various retinal disorders. This paper concentrates on the detection of Age-Related Macular Degeneration (AMD), a significant retinal condition, by offering an exhaustive examination of recent machine learning and deep learning methodologies. Additionally, it discusses potential obstacles and constraints associated with implementing this technology in the field of ophthalmology. Through a systematic review, this research aims to assess the efficacy of machine learning and deep learning techniques in discerning AMD from different modalities as they have shown promise in the field of AMD and retinal disorders diagnosis. Organized around prevalent datasets and imaging techniques, the paper initially outlines assessment criteria, image preprocessing methodologies, and learning frameworks before conducting a thorough investigation of diverse approaches for AMD detection. Drawing insights from the analysis of more than 30 selected studies, the conclusion underscores current research trajectories, major challenges, and future prospects in AMD diagnosis, providing a valuable resource for both scholars and practitioners in the domain.

Keywords: age-related macular degeneration (AMD), deep learning (DL), machine learning (ML), retinal segmentation, retinal disease diagnosis

1. Introduction

The initial definition of age-related macular degeneration (AMD), described as symmetric central chorioretinopathy in the elderly, was in 1874. AMD is a chronic ocular disease that impairs the eye’s central vision. The macula, the core region of the retina responsible for sharp, clear vision, is degenerating [1,2]. AMD now accounts for 8.7% of blindness worldwide and is one of the leading causes of permanent vision loss in the elderly (>50 years old). In Europe, approximately 1% of people have advanced AMD. It is projected that 288 million people worldwide will suffer from AMD by 2040. In addition to aging, hereditary and environmental variables are the primary causes of AMD [3,4].

Eye diseases are a major health concern for the elderly. People who have eye disorders usually do not realize their symptoms are slowly getting worse. Routine eye exams are, therefore, necessary for an early diagnosis. For prompt intervention, AMD must be accurately diagnosed and detected as early as possible. Typically, a slit-lamp examination is used by an ophthalmologist to diagnose eye conditions [5]. Due to variations in the ophthalmologist’s analytical abilities, irregularities in the analysis of eye disorders, and problems with record keeping, slit-lamp interpretations are insufficient. Fundus images, captured through retinal imaging, provide valuable information about the structural changes in the macula associated with AMD [6].

Analyzing images from Optical Coherence Tomography (OCT), OCT Angiography, and fluorescein angiography (FA), or fundus cameras is known as retinal image analysis. These predominant non-invasive methods for capturing alterations in retinal structure, encompassing changes in the optic disc, blood vessels, macula, and fovea, are fundoscopy and OCT imaging. Analyzing these images assists in identifying various conditions such as diabetic retinopathy (DR), cataracts, glaucoma, AMD, myopia, and high blood pressure [7].

AMD fundus image classification into distinct categories plays a vital role in understanding the disease progression and determining appropriate treatment plans. As shown in Table 1, Four phases are commonly used to classify AMD: normal, early, intermediate, and advanced. No or early-stage AMD is diagnosed when there are either no Drusen or only a few small Drusen (macular yellow deposits) present in the eye. In early AMD, Drusen are considered small to medium in size. Intermediate AMD is characterized by numerous medium-sized Drusen or at least one large Drusen, sometimes accompanied by pigmentary changes. Advanced AMD can manifest in either dry or wet forms. Dry AMD occurs when the retinal pigment epithelium near the macula degenerates, leading to Geographic Atrophy (GA). This atrophic area gradually progresses toward the center of the macula, resulting in permanent vision loss if it involves the central region. On the other hand, wet AMD progresses rapidly with a sudden onset. It is caused by abnormal growth of blood vessels behind the retina, which can rupture or leak, causing swift deterioration of vision [8].

Table 1.

Tabular depiction of AMD fundus image stages showcasing a comparison along with visual samples.

| No AMD (Normal) | Early | Intermediate AMD | Advanced Dry AMD | Advanced Wet AMD |

|---|---|---|---|---|

| Refers to images showing no signs of AMD-related changes, often depicting individuals without clinical evidence of AMD or those in early stages of the disease with minimal abnormalities, such as a small number (5–15) of small (<63 m) or absent Drusen without pigment alterations. | Early AMD clinically presents with Drusen and abnormalities in the retinal pigment epithelium (RPE). It may involve one or both eyes, showing pigmentary changes, a few small Drusen, and/or intermediate-sized (63–124 m) Drusen. | Intermediate AMD lies between early and advanced stages, characterized by pigmentary changes and Drusen, small yellow deposits beneath the retina. It may include one large druse (>125 m), one extensive intermediate-sized druse (20 soft or 65 hard without any soft), and/or GA excluding the macula in one or both eyes. | GA is marked by the gradual loss of RPE cells in specific macular areas, forming well-defined atrophic patches. It results in progressive central vision loss and is a major cause of significant visual impairment in AMD patients. | Wet AMD is an advanced and severe form of the disease, characterized by the growth of abnormal blood vessels beneath the retina. These vessels can leak fluid or blood, causing retinal damage and rapid vision loss if untreated. It commonly results in sudden and severe central vision impairment. |

|

|

|

|

|

Computer-aided diagnostic (CAD) systems leverage advancements in machine learning (ML) and computer vision to diagnose conditions like DR and AMD. ML techniques exhibit significant potential in accurately categorizing AMD by identifying subtle characteristics indicative of various disease stages [9]. These methods automate the classification process, ensuring consistent and objective assessments while reducing variability among observers and facilitating timely diagnoses [10,11]. Despite the challenges involved, such as the need for extensive effort in feature extraction, analysis, and engineering, as well as a deep understanding of disease-specific traits, ML-inspired approaches have shown promising outcomes [12]. Furthermore, the emergence of deep learning (DL) as a subfield of ML has shown promise in detecting specific retinal illnesses. DL techniques have significantly advanced the identification and classification of ocular diseases such as Diabetic Macular Edema (DME), AMD, and DR. Transformer networks, initially popularized in natural language processing, are being adapted for computer vision tasks, particularly in retinal disease diagnosis [13,14]. The development of a reliable system for classifying Optical Coherence Tomography and fundus images holds great promise for clinical application. Such a system could assist ophthalmologists in guiding patients toward appropriate treatment plans [15].

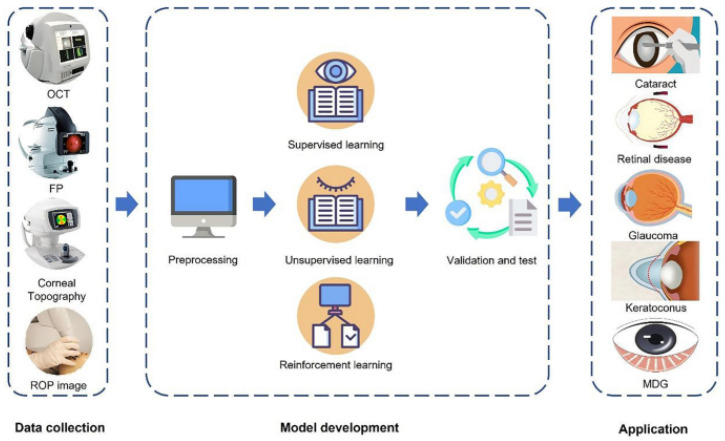

In medicine, artificial intelligence (AI) has been extensively used in a variety of settings Figure 1. Particularly in the field of ophthalmology, partnerships between medical imaging and AI disciplines have shown to be particularly fruitful. Professional ophthalmologists currently cannot afford to do such a high volume of AMD screening, particularly in impoverished nations and areas. Three areas of AI technology for AMD diagnosis exist (1) Disease/no disease, (2) wet/dry/no disease, and (3) AMD severity. With AI’s help, AMD may reduce the time it takes to diagnose and treat patients, enhance the effectiveness of diagnosis and therapy, and delay the onset of AMD blindness, which addresses a flaw in many medical businesses [16].

Figure 1.

AI workflow in ophthalmology usually includes model building, training, validation, testing, and implementation in addition to data gathering and preprocessing. It is intended to use deep learning and machine learning methods to diagnose, treat, and manage eye conditions [17].

The primary goal of the proposed review is to give a thorough analysis of the different ML and DL techniques that have been recently used for AMD using different modalities. The proposed review discusses the methodology employed, encompassing data collection, pre-processing techniques, feature extraction methods, and the selection of ML algorithms. The contributions can be summarized as follows:

-

-

It discusses the findings, challenges, advantages, disadvantages, and limitations of ML and DL-based AMD classification systems, offering insights for future research directions in AI-based AMD diagnosis.

-

-

It utilizes the DL process pipeline technique for AMD retinal disease diagnosis, evaluating recent papers on AMD and providing information on DL backbone models, assessment measures, and image pre-processing methods.

-

-

It includes a tabulated analysis of comparative performances of various DL implementations, a thorough literature study, a discussion on the utilization of public and private datasets, and outlines current avenues of research, contributing to the advancement of computer-aided diagnosis of AMD and enhancing patient care and outcomes.

The structure of this review is organized as follows: Section 2 presents prevalent imaging modalities. Section 3 outlines the AMD diagnosing framework with its internal three main components: AMD Datasets Acquisition, Pre-Processing Techniques, and Deep and Machine Learning Techniques for AMD Segmentation and Diagnoses. Section 4 presents the Discussion and Summary of this research. Section 5 presents the future research directions. Finally, Section 6 concludes the review.

2. Prevalent Imaging Modalities

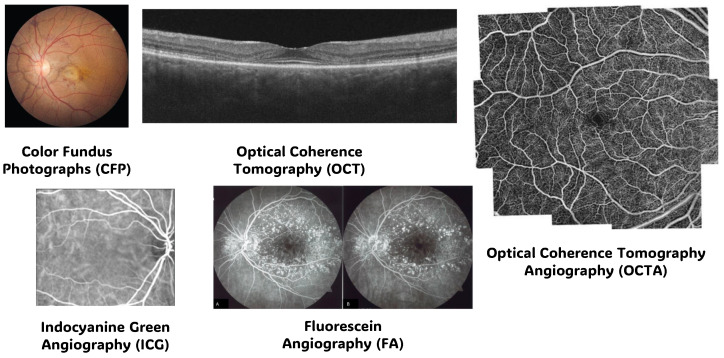

In exploring the complexities of the human eye, various imaging methods have been developed such as Color Fundus Photographs (CFP), OCT, OCT Angiography (OCTA), Fluorescein Angiography (FA), and Indocyanine Green Angiography (ICG), each with its unique role in understanding ocular health, in addition, each imaging modality is better suited for the type of disease that doctors are dealing with and to interpret the findings [18].

This section discusses various imaging methods used in AMD and retinal disorders diagnosis and focuses on the different modalities techniques that have demonstrated potential in treating AMD and retinal diseases diagnostics because they are helpful in the identification, monitoring, counseling, diagnosis, and prognosis of several ocular illnesses according to [2,10,19,20,21,22,23], including glaucoma, DR, ARMD, and retinal vascular disorders.

CFP provides detailed images of the back of the eye, capturing the retina, optic nerve, and blood vessels, aiding in the diagnosis and monitoring of various eye conditions such as macular degeneration and diabetic retinopathy. OCT utilizes light waves to produce high-resolution cross-sectional images of the retina, allowing for precise assessment of its layers and identifying abnormalities like macular edema and retinal thinning. OCTA enhances OCT by visualizing blood flow within the retina and choroid without the need for dye injection, facilitating the detection of vascular irregularities like neovascularization and microaneurysms. FA involves injecting a fluorescent dye into the bloodstream to capture detailed images of the retinal vasculature, helping diagnose conditions such as macular edema, retinal vein occlusion, and choroidal neovascularization (CNV). ICG employs a different dye and longer wavelength light to visualize deeper choroidal vessels, aiding in the assessment of conditions like choroidal tumors, polyps, and inflammatory disorders. Each imaging modality offers unique insights into ocular health, allowing for comprehensive evaluation and management of retinal diseases. Sample from each modality is presented in Figure 2 [18,24,25,26,27,28,29,30].

Figure 2.

Samples from the prevalent imaging modalities: Color Fundus Photographs (CFP), Optical Coherence Tomography (OCT), OCT Angiography (OCTA), Fluorescein Angiography (FA), and Indocyanine Green Angiography (ICG) [26,27,28,29,30].

2.1. Color Fundus Photographs (CFP) Modality

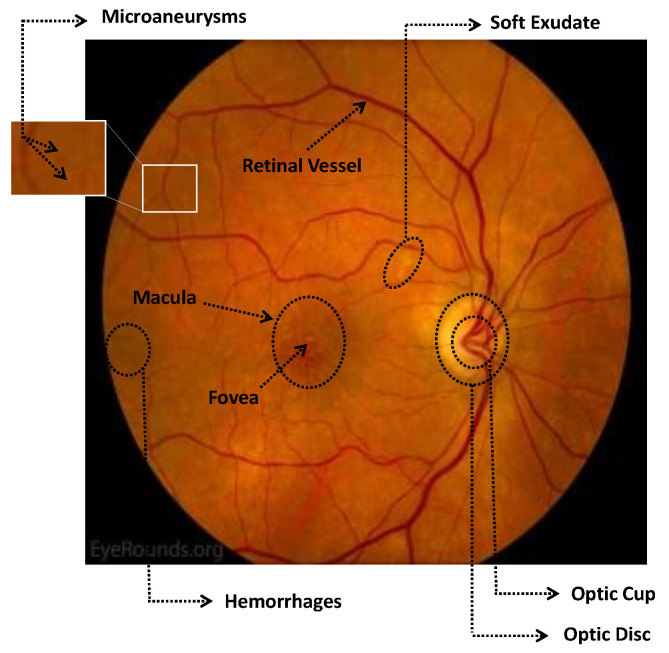

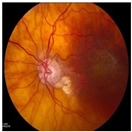

Numerous imaging methods have been created over the years to study the human eye; among them, “Fundus Imaging” has become more well-known because it is non-invasive and reasonably priced. When screening for retinal abnormalities, color fundus photography is the most economical imaging technique to use. The technique of utilizing a monocular camera to project the fundus, or the back of an eye, onto a two-dimensional plane is known as fundus photography. Many ocular structures and biomarkers, including various abnormalities, can be identified from a recorded 2D fundus image. Numerous of these visual indicators are crucial in the diagnosis of retinal disorders [31]. Figure 3 displays a fundus image with pathologies.

Figure 3.

A fundus image with pathologies that presents the microaneurysms, hemorrhages, and fovea.

The fundus image, which is often captured by image sensors in three colors, is a reflection of the eye’s inside surface. It contains details on the biological features something the unaided eye can see, such as the macula, optic disc, retinal vasculature, and retinal surface. Because blue light is absorbed by the posterior retinal pigment layer and blood vessels, their spectral range makes the anterior retinal layers more visible. Concurrently, the pigmentation of the retina reflects the green spectrum, enabling its filters to improve the retinal layer’s visibility and providing more data from underneath the retinal surface. Content about choroidal melanomas, choroidal nevi, choroidal ruptures, and pigmentary abnormalities is found in the red spectrum, which is exclusive to the choroidal layer under the pigmented epithelium [32,33].

A few of CFP’s drawbacks are its inconsistent pigmentation and Drusen look, its inability to resolve fundus depth, and its dearth of comprehensive quantitative data. The microscopic alterations inside the retina that are indicative of the disease’s early stages cannot be observed in FP. Therefore, OCT image interpretation may be used to acquire it [34]. In addition to the costly equipment, the spherical form of the globe results in image distortion, artifacts from eyelashes, and misleading conclusions from insufficient color representation. For fundus imaging, normal 30° fundus photography continues to be the best option. It takes a long time to acquire stereo images, and patients have to endure twice as many light flashes. To fuse the image stereoscopically and produce depth, image interpretation takes time and specific goggles or optical viewers. Large-scale clinical studies and ordinary clinics can still use CFP, despite these drawbacks and the introduction of newer imaging technologies [35].

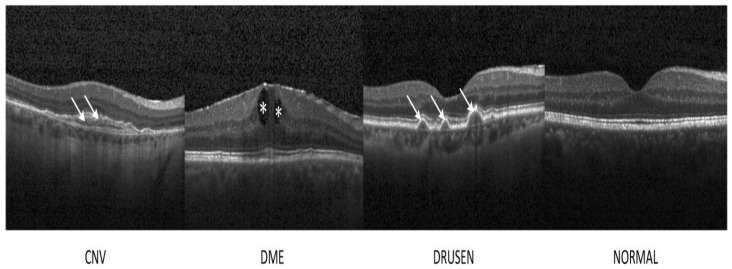

2.2. Optical Coherence Tomography (OCT) Modality

OCT has developed into a potent imaging technique for the non-invasive evaluation of a variety of retinal disorders, including helping to diagnose DME, DRUSEN (AMD), and CNV, as shown in Figure 4. OCT is a high-resolution imaging, micron-level, and non-invasive method that provides real-time retinal imaging by utilizing infrared light with a wavelength range of 800–840 nm. The Michelson interferometry principle is the foundation of OCT. A light beam is split into two channels, one from the reference mirror and the other from the targeted tissue. The beams are then bounced back and combined again using semitransparent mirrors to create an interference pattern [36].

Since the method was first described in 1991, OCT imaging has quickly become commercially available, with advancements in technology enabling faster and higher-resolution imaging. The slower and more antiquated time domain OCT has mostly been superseded by Fourier- or spectral-domain OCT technology (SD-OCT). The technique’s main advantages include a much deeper tissue penetration, a much better ability to penetrate opaque media than SD-OCT, and a wider imaging depth that allows for high-quality imaging of the choroid and sclera at a collection rate that is at least four times faster (100,000 scans/s). Retinal thickness has been frequently measured by OCT for the assessment of ME resulting from conditions such as hereditary retinal degenerations, DR, AMD, ERM, cataract surgery, following RVO and uveitis [37]. OCT has several drawbacks. OCT uses light waves, hence medium opacities can impede the best possible imaging. Consequently, in the presence of vitreous hemorrhage, thick cataracts, or corneal opacities, the OCT will be restricted. The cost of OCT equipment is high. The quality of the image is influenced by the operator’s skill and may suffer when there is media opacity. Age, gender, and race-specific normative data are few, making it difficult to compare eyes with retinal illness [38].

Figure 4.

Representative OCT images. The lesion sites were denoted by asterisks and arrows. CNV: choroidal neovascularization; DME: diabetic macular edema, DRUSEN (AMD) and Normal [39].

2.3. Optical Coherence Tomography Angiography (OCTA) Modality

In recent years, OCT Angiography (OCTA) has been developed. It is an imaging method based on OCT that makes it possible to see the eye’s functioning blood vessels. This method is especially useful for the detection of microvascular alterations in the retina and choroid, which are indicative of illnesses that proceed slowly, including AMD, as was covered in [40,41,42,43,44]. The idea behind OCTA is to image blood flow by using the contrast mechanism created by moving particles, including red blood cells, which generate variations in the OCT signal. OCTA can identify erythrocyte mobility. It is quick, which gives it a significant benefit over other techniques for assessing patients who need to see you often.

Unlike the two-dimensional images of FA and ICGA, it is three-dimensional, making it possible to see the precise location and size of a lesion. Since foreign dyes are not needed for OCTA, it is non-invasive and safer than standard angiography. It enables direct in vivo visualization of the retinal and choroidal vasculature without staining or dye leakage, which might potentially hide the boundaries and morphology of diseases. Additionally, there is a distinction between the two primary OCTA technologies: spectral-domain (SD)-OCTA and swept-source (SS)-OCTA. The majority of commercial SD-OCTA devices employ a lower wavelength, about 840 nm, which is significantly attenuated by the RPE. Sub-RPE tissue attenuation may have a more significant role in Drusen or RPE thickening because the RPE functions as a significant hyperreflective barrier, obstructing light transmission. SS-OCTA employs a longer wavelength—roughly 1050 nm and can reach deeper into the choroid as presented in Figure 5 [45,46].

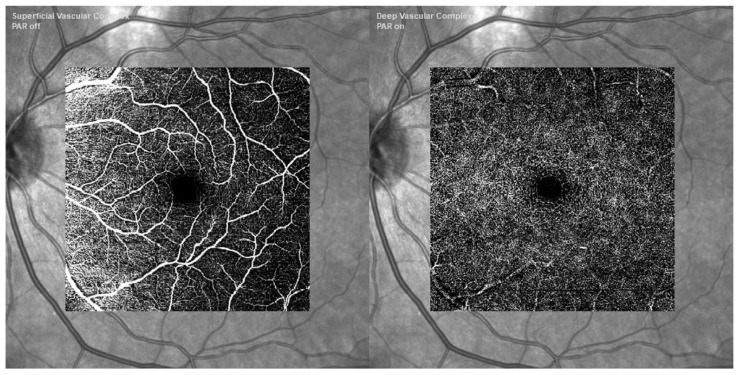

Figure 5.

A patient with intermediate AMD was imaged using retinal imaging. Deep vascular complex OCTA (bottom right), superficial vascular complex OCTA (bottom left). Images captured with Heidelberg Engineering Ltd.’s Spectralis OCTA Module at Hemel Hempstead, UK [47].

2.4. Fluorescein Angiography (FA) Modality

In the 1960s, Novotny and Alvis introduced fluorescein angiography (FA) to the field of ophthalmology. While FA is still highly helpful in certain situations, OCT is now more commonly employed to assess the response to therapy. One of the most important methods for identifying and categorizing CNV and its activity in eyes with neovascular AMD is FA. It is a diagnostic process that is intrusive and facilitates the evaluation of retinal and choroidal circulation pathology. It assists in the identification of several ocular illnesses, including AMD as discussed in [48,49].

It aids in the planning of decisions related to the management of ocular pathology. The formation of aberrant blood vessels under the retina’s RPE layer, known as CNV, is a defining feature of both dry and wet types of macular degeneration. These blood vessels have the potential to bleed, which may eventually lead to macular scarring and a significant loss of central vision (i.e., disciform scar). A specialized fundus camera with barrier and excitation filters is necessary for FA. Antecubital veins are typically used to provide fluorescein dye intravenously quickly enough to get high contrast early phase angiogram images. A blue excitation filter is applied to white light coming from a flash.

Following their absorption by free fluorescein molecules, blue light (wavelength 465–490 nm) is converted to green light (520–530 nm), which has a larger wavelength. To capture just the light released by the fluorescein in the photographs, a barrier filter eliminates any reflected light. After injection, images are taken right away, and they stay that way for fifteen minutes, depending on the pathology being examined. The images are taken either on 35mm film or digitally as shown in Figure 6 [50].

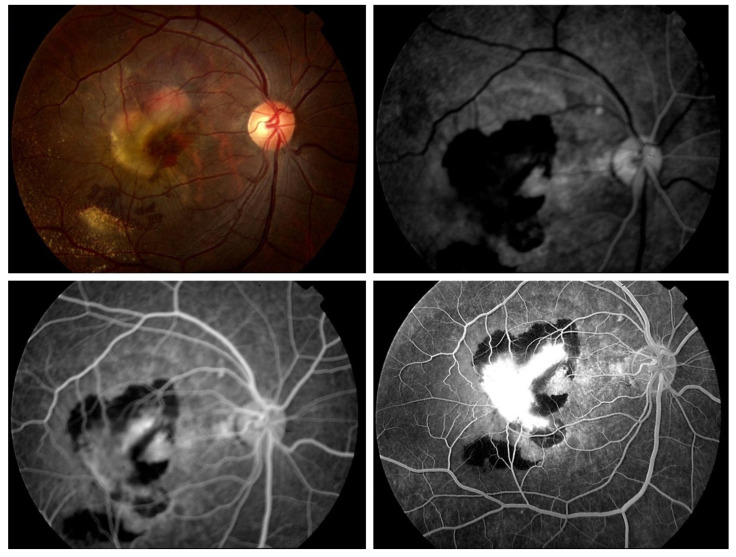

Figure 6.

The FA imaging of the classic choroidal neovascular membrane in AMD shows early hyper fluorescence that increases in intensity over subsequent images, encircled by hypofluorescence from subretinal hemorrhage occlusion [51].

2.5. Indocyanine Green Angiography (ICG) Modality

Initially introduced in 1973 to investigate the choroidal circulation in ophthalmology, ICG angiography gained widespread usage in the early 1990s. For the identification of several retinal and choroidal disorders Including AMD as described in [52], ICG is a well-established method. With a molecular weight of 775, indocyanine green is a tricarbocyanine dye that is soluble in water. When it comes to the therapeutic usefulness of choroidal circulation visualization, two characteristics of ICG are significant. ICG first absorbs in serum between 790 and 805 nm, peaking at 835 nm. Secondly, ICG is a dye that is 98% attached to proteins and has a restricted diffusion via the choriocapillaris’s tiny fenestrations. The near-infrared spectrum is where both the excited and emitted light are found, which enables deeper penetration into the retina and easier passage of the emitted light through blood, lipid deposits, pigment, and moderate opacities (i.e., cataracts) to produce images.

Moreover, the dye typically stays inside the fenestrated walls of the choriocapillaris, as opposed to fluoresce, which leaks freely from these vessels, because it has a much higher molecular weight and a larger percentage of molecules stay bound to proteins in the bloodstream than fluorescein. Because of this characteristic, ICG is a perfect method for depicting the choroidal veins’ architecture and hemodynamics. High-definition images are produced by scanning laser ophthalmoscopes used for ICG angiography. ICG filters are also included in ultrawide field systems, which can produce ICG images with a 200-degree field of view as shown in Figure 7 [53,54].

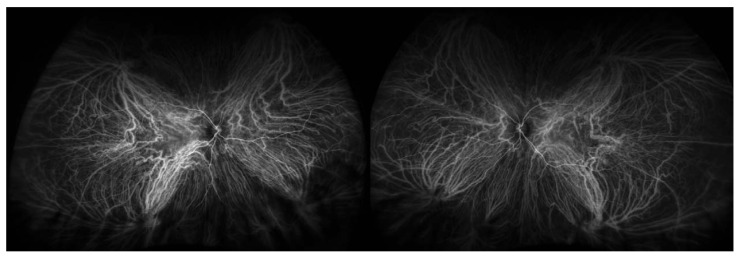

Figure 7.

Bilateral wide field indocyanine green angiography [54].

Table 2 compares between the different imaging modalities: Color Fundus Photographs (CFP), Optical Coherence Tomography (OCT), Optical Coherence Tomography Angiography (OCTA), Fluorescein Angiography (FA), and Indocyanine Green Angiography (ICG).

Table 2.

Tabular comparison between the different imaging modalities.

| Modality | When to Use | Cost | Pros. | Cons. |

|---|---|---|---|---|

| Color Fundus Photographs (CFP) | Screening for retinal abnormalities | Low cost | Detailed images of the back of the eye. | Inconsistent pigmentation, inability to resolve fundus depth. |

| Optical Coherence Tomography (OCT) | Evaluation of retinal disorders | High cost | High-resolution cross-sectional images of the retina. | Limited by medium opacities, operator skill required. |

| Optical Coherence Tomography Angiography (OCTA) | Detection of microvascular alterations | Moderate cost | Non-invasive, three-dimensional imaging of blood vessels. | Limited normative data, affected by media opacity. |

| Fluorescein Angiography (FA) | Identifying and categorizing CNV | Moderate cost | Evaluation of retinal and choroidal circulation pathology. | Invasive, requires dye injection, limited to 2D imaging. |

| Indocyanine Green Angiography (ICG) | Visualization of choroidal circulation | Moderate cost | Deeper penetration into the retina, clearer images of choroidal veins. | Invasive, limited diffusion, requires skilled interpretation. |

3. AMD Diagnosing Framework

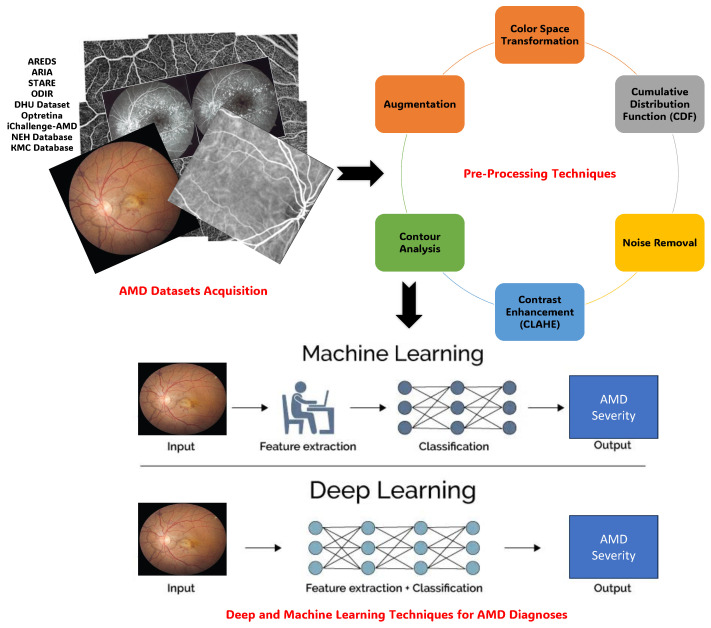

The AMD diagnosing Framework encompasses three main components: AMD Datasets Acquisition, Pre-Processing Techniques, and Deep and Machine Learning Techniques for AMD Segmentation and Diagnoses. The section on AMD Datasets Acquisition highlights both public and private databases crucial for research in AMD, such as STARE, DRIVE, and the AREDS database, each offering unique advantages and insights. Pre-processing techniques are then discussed, focusing on optimizing image quality, noise removal, contrast enhancement, and segmentation masking to prepare fundus images and OCT scans for analysis using ML/DL approaches. Lastly, Deep and Machine Learning Techniques for AMD Diagnoses utilize the rich landscape of methodologies harnessing AI, particularly DL, to enhance diagnostic capabilities. Techniques like CNNs, RNNs, and ensemble learning are explored, along with studies employing transfer learning and handcrafted features for classification. The performance evaluation metrics section concludes the framework, highlighting fundamental criteria such as accuracy, specificity, and sensitivity essential for assessing algorithm effectiveness and reliability in fundus image analysis. This comprehensive framework, as presented in Figure 8, provides a roadmap for advancing research and innovation in AMD diagnosis.

Figure 8.

Graphical visualization of the AMD diagnosing framework that encompasses three main components: AMD Datasets Acquisition, Pre-Processing Techniques, and Deep and Machine Learning Techniques for AMD Diagnoses.

3.1. AMD Datasets Acquisition

This section provides a comprehensive overview of major public and private image databases integral to recent literature. The majority of public databases were produced with OCT and FP images [55]. The landscape of data repositories for advancing research in AMD is diverse, ranging from public resources such as DRIVE and STARE to proprietary datasets designed for specific investigations. They are summarized in Table 3.

3.1.1. Public Databases

Research groups have been compelled to establish and make public their datasets due to the growing necessity of validating or training models. Due to their better fundus image resolutions, the open-access databases STARE and DRIVE are two of the most popular retinal databases [56].

AREDS Age-Related Eye Disease Study (AREDS): a 12-year investigation, AREDS was conducted. The AMD situations of numerous patients were monitored during this time in the study. Cases of GA, cases of Neovascular AMD, and control patients were added to the study. For the length of the trial, retinal images of each patient’s left and right sides were obtained. The AMD severity of the images was rated by several ocular doctors. During the study, several individuals who had previously experienced moderate AMD symptoms progressed to more severe AMD stages. This database’s test, validation, and training sets consist of 86,770, 21,867, and 12,019 images of color fundus photography (CFP), respectively [57].

ARIA (Automatic Retinal Image Analysis): There are 450 images in the JPEG format ARIA database. Three groups of photographs are identified: one with AMD, one with DR, and one with a healthy control group. It took two skilled ophthalmologists to annotate the images [58].

STARE (Structured Analysis of the Retina): Out of the 400 retinal images in STARE, 40 have manually segmented blood vessels and labeled veins/arteries. Experts have labeled each image. The PPM format was used to compress the image data. Also included are algorithms for the identification of the optic nerve head (ONH). Thirteen distinct abnormalities were linked to a total of 44 diseases that were identified [59].

ODIR Dataset Ocular Disease Intelligence Recognition (ODIR): The structured dataset known as ODIR consists of 5000 patients from the Peking University National Institute of Health Sciences. Eight retinal diseases (i.e., Diabetes, AMD, myopia, glaucoma, hypertension, cataract, normal, and others) are represented by the various label annotations in it. The JPEG format saves images in various sizes. The following is the distribution of the labels for the image classes: Typical: 3098, Glaucoma: 224 Diabetes: 1406, 265 is the cataract—242 Pathological Myopia, Hypertension: 107, AMD: 293, and Additional diseases: 791. The annotating exercise involved ophthalmologists with expertise [60].

DHU Dataset: 45 OCT collections from Duke University, Harvard University, and the University of Michigan are included in a publicly accessible dataset. This dataset includes 15 participants for each of the three classes (i.e., normal, DME, and dry AMD) consisting of volumetric scans using non-unique procedures [20].

Optretina Dataset: Since 2013, Optretina has conducted telemedicine screening programs in optical centers, and since 2017, it has expanded to include commercial businesses and workplaces. The 306,302 retinal images that make up the labeled dataset from Optretina serve as the basis for this investigation and are tagged with quality, laterality, and diagnostic. All of these images have been assessed by ophthalmologists, who are all specialists in retinal diseases; 20% of the data set has abnormal retinas, whereas the remaining 80% have normal ones. It is anonymized and based on real-world instances rather than clinical trials. Images are obtained using OCT and NMC (color fundus and red-free). The collection contains images taken with many brands and models of cameras. The collection contains additional non-medical images intended to trick the AI, as well as retinal nerve fiber layer OCTs (RNFL), macular OCTs, and CFP [61].

iChallenge-AMD: The AMD reference standard existence derived from the medical records takes into account Visual Field, OCT, and other information in addition to fundus images alone. The image folder contains AMD and non-AMD labels (also known as the reference standard) that are utilized for training data [62].

UMN: The University of Minnesota Ophthalmology Clinic collected 600 OCT B-scan images from 24 individuals who were diagnosed with exudative AMD, and this dataset is freely accessible. Every patient received about 100 B-scans, which is how the Spectrally technology took the images. Twenty-five of the images with the biggest fluid area were chosen to serve as the samples. Two ophthalmologists annotated and validated the retinal fluid, SRF, IRF, and PED. This database is utilized for the segmentation process [63].

OPTIMA: Through the cyst segmentation challenge at the Medical Image Computing and Computer-Assisted Intervention (MICCAI) 2015 conference, the OPTIMA dataset was made publicly available, and it has subsequently gained popularity as a resource for IRF Fluid/Cyst segmentation in OCT. Thirty volumes of patient data from different OCT equipment, such as Spectralis, Topcon, Cirrus, and Nidek, make up the dataset. Every retinal OCT volume is centered on the macula and is around 6 × 6 × 2 mm3. In order to better represent the wide variety of scans seen in clinical settings as well as the various appearances and distributions of IRF, the dataset has been further split into 15 training and 15 testing volumes. Two medical University of Vienna expert graders annotated the IRF in the training data. This database is utilized for the segmentation process [64].

RETOUCH: The RETOUCH dataset was obtained from the 2017 MICCAI conference’s retinal OCT fluid challenge, in which the OCT images were labeled with the following retinal fluid (fluid/cyst) labels: IRF, SRF, and PED. Seventy OCT volumes obtained from individuals diagnosed with macular edema related to AMD or RVO comprise the dataset. Twenty-four of these volumes came from the Spectralis OCT system, twenty-two from the Triton OCT system, and twenty-four from the Cirrus OCT system. Under the guidance of retina specialists from the Medical University of Vienna and Radboud University Medical Centre in the Netherlands, human graders annotated the images. There are 128, 128, and 49 B-scan images total that were obtained by the Cirrus, Triton, and Spectralis OCT systems, respectively. The resolution of each image varies. Much pertinent research on this subject has been based on the RETOUCH dataset, which is frequently used as a benchmark for assessing the effectiveness of retinal cyst/fluid segmentation algorithms [65].

VRC: The Vienna Reading Centre offers top-notch imaging services for ophthalmology-related clinical, pharmacological, and scholarly research. Established in 2005 as the world’s first entirely digital image analysis platform, the VRC is a division of the Medical University of Vienna’s Department of Ophthalmology, which is among the biggest medical schools in Europe. Their group’s main area of interest is the creation of computational image analysis techniques. Close cooperation with the Department of Ophthalmology’s independent research unit, the Christian Doppler Laboratory for Ophthalmic Image Analysis (OPTIMA), fosters this effort comprising 1200 full OCT volume scans of the eyes of patients afflicted with AMD, DME, and RVO, the three main disorders known to cause macular fluid. This database is utilized for the segmentation process [66].

3.1.2. Private Databases

Using private databases, researchers have also evaluated algorithm performance in the AMD detection area. Images are anonymized in compliance with ethical standards and subject to privacy protection before being used for model design and performance assessment. In certain instances, the medical facilities and authors that own the data or funded the study may be able to access certain private databases upon request.

NEH Database: The Noor Eye Hospital in Tehran is the source of the NEH dataset, which is made up of 48 dry AMD, 50 DME, and 50 normal OCTs. The axial resolution in this dataset is 3.5 m, and the scan dimension is 8.9 × 7.4 mm2. However, not all patients have the same lateral and azimuthal resolutions. Consequently, the number of A-scans varies from 512 to 768 scans, while different patients provide 19, 25, 31, and 61 B-scans per volume. The dataset is available for access at http://www.biosigdata.com (accessed on 8 July 2024) [20].

KMC Database: Ophthalmology Department of Kasturba Medical College (KMC), Manipal, India from Kasturba Medical Hospital. There are 402 eyes in the fundus image dataset that are normal, 125 retinal images that show wet AMD, and 583 early, intermediate, or GA AMD retinal images. Featuring a pixel resolution of , the Zeiss FF450 plus mydriatic fundus camera was used to capture the images [1].

KSH Database: The database consists of 775 high-resolution color fundus images from patients with early AMD from the Department of Ophthalmology at Kangbuk Samsung Hospital, taken between 2007 and 2018, are included in the collection. Nonmydriatic fundus cameras from many manufacturers were used to capture fundus images: Topcon, Japan made the TRC NW300, TRC-510X, NW200, and NW8, Canon, Japan made the CR6-45NM and CR-415NM, and Zeiss, USA made the VISUCAM 224. The fundus images’ digital images were examined using an image archiving communication system (INFINITT, Seoul, Republic of Korea) [67].

An overview of the retinal image databases that were previously addressed can be found in Table 3, which also includes information on the number of images, resolutions, cameras, and purposes for which the databases were made.

Table 3.

Tabular summary of public and private available AMD datasets.

| Dataset | Description | Images | Purpose | Resolution | Annotation | Availability | Modality |

|---|---|---|---|---|---|---|---|

| AREDS [57] | Longitudinal study monitoring AMD progression | 86,770 | Classification | Various | Graded by ocular experts | Public | CFP |

| ARIA [58] | 450 JPEG images with AMD, DR, and healthy controls | 450 | Classification | Various | Annotated by ophthalmologists | Public | CFP |

| STARE [59] | 400 retinal images with manually segmented blood vessels | 400 | Classification, Segmentation | () | Expert-labelled abnormalities | Public | CFP |

| ODIR [60] | Contains 8 retinal diseases (i.e., Diabetes, AMD, myopia, glaucoma, hypertension, cataract, normal, and others) from the Peking University National Institute of Health Sciences | 5000 | Classification | Various | Annotations by ophthalmologists | Public | CFP |

| DHU Dataset [20] | 45 OCT volumes with normal, DME, and dry AMD from Duke University, Harvard University, and the University of Michigan | 45 | Classification | Various | Expert-labelled classes | Public | OCT |

| Optretina [61] | labeled retinal images from telemedicine screening programs. | 306,302 | Classification | Various | Annotated by ophthalmologists | Public | CFP, OCT |

| iChallenge-AMD [62] | Fundus image dataset with AMD and non-AMD labels | - | Classification | Various | Reference standard derived from medical records | Public | CFP, OCT |

| UMN Database [63] | 600 OCT B-scan images from the University of Minnesota Ophthalmology Clinic | 600 | Segmentation | Various | Ophthalmologists | Public | OCT |

| OPTIMA Database [64] | Cyst segmentation challenge at the MICCAI 2015 conference | 15 training, 15 testing volumes | Segmentation | Various | Medical University of Vienna experts | Public | OCT |

| RETOUCH Database [65] | Retinal fluid (fluid/cyst) labels: IRF, SRF, and PED from the 2017 MICCAI conference’s retinal OCT fluid challenge | 70 volumes | Segmentation | Various | Retina specialists | Public | OCT |

| VRC Database [65] | Eyes of patients afflicted with AMD, DME, and RVO, three disorders known to cause macular fluid from Medical University of Vienna’s Department of Ophthalmology | 1200 volume | Segmentation | Various | Retina specialists | Public | OCT |

| NEH Database [20] | OCT dataset from Noor Eye Hospital, Tehran | 148 | Classification | 3.5 μm axial resolution | Anonymized annotations | Private | OCT |

| KMC Database [1] | Fundus image dataset from Kasturba Medical College | 1110 | Classification | Expert annotations | Private | CFP | |

| KSH Database [67] | High-resolution images from patients with early AMD from Department of Ophthalmology at Kangbuk Samsung Hospital | 775 | Classification | Various | Expert annotations | Private | CFP |

3.2. Pre-Processing Techniques

Pre-processing of fundus images and OCT scans is crucial for optimizing image quality, removing noise, and preparing them for analysis with ML/DL approaches. This step ensures the development of robust prediction models for challenges such as uneven illumination, noise, artifacts, and other imperfections that could affect the analysis process [31,68].

Various techniques enhance DL model performance in analyzing fundus images and OCT scans. Color Space Transformation selectively uses a single color channel, often eliminating the green channel to avoid interference with analysis, especially in high-contrast images. The Cumulative Distribution Function (CDF) helps understand pixel intensity distribution, providing insights into image characteristics [69].

Noise Removal methods, such as non-local means denoising and median filters, ensure cleaner and more accurate analysis results. Contrast Enhancement, particularly through methods like Contrast Limited Adaptive Histogram Equalization (CLAHE), improves the visibility of small lesions like microaneurysms. Segmentation Masking isolates regions of interest (ROIs) within fundus images, enhancing diagnostic accuracy by focusing on specific areas for analysis. Contour Analysis refines ROIs and identifies object boundaries, aiding in modifying ROIs and determining object attributes [70].

Augmentation techniques, such as rotation and flipping, balance image datasets, ensuring robustness in DL models. Cropping and Extracting Regions of Interest (ROI) isolate areas containing significant information, reducing unnecessary learning effort [71]. Histogram Equalization enhances contrast, improving image clarity, while resizing images to standard dimensions maintains consistency across the dataset. Lastly, Enhancing Image Contrast reduces noise and enhances visual clarity for more accurate analysis [72]. Table 4 summarizes these frequently employed pre-processing techniques for fundus images utilized in diagnosing retinal diseases.

Table 4.

Summarization of the pre-processing techniques for fundus images utilized in diagnosing retinal disease [31,69,70,72].

| Pre-Processing Technique | Description | Complexity | Effectiveness | Robustness | Ease of Implementation |

|---|---|---|---|---|---|

| Color Space Transformation | Enhances DL model performance by selectively utilizing a single color channel from the RGB Channels, often removing the green channel to eliminate visually rich information, particularly in high contrast images | Low | Moderate | High | High |

| Cumulative Distribution Function (CDF) | Simplifies understanding of image features and pixel intensity distribution through a cumulative probability distribution, aiding in identifying areas of interest | Low | High | Moderate | Moderate |

| Noise Removal | Eliminates unwanted noise using various denoising algorithms such as non-local means denoising, median filters, and Gaussian filters | Moderate | High | High | Moderate |

| Contrast Enhancement (CLAHE) | Widely used approach for enhancing contrast and addressing over-amplified contrast in certain pixel portions, improving the quality of fundus images for analysis | Moderate | High | High | High |

| Segmentation Mask | Utilizes binary masks to isolate regions of interest (ROIs) within fundus images, improving diagnostic accuracy by selecting specific regions for analysis while excluding background noise | Moderate | High | High | Moderate |

| Contour Analysis | Essential for fine-tuning ROIs and locating object boundaries in images, providing attributes like centroid, area, and perimeter for object modification and determination | Moderate | High | Moderate | Moderate |

| Augmentation | Balances image datasets through techniques like rotation, translation, flipping, and rescaling, enhancing model robustness and performance | Low | High | High | High |

| Cropping and Extracting ROI | Isolates significant areas within images for analysis, reducing unnecessary learning effort during model training | Low | Moderate | High | High |

| Histogram Equalization | Enhances overall contrast in fundus images, making background pixels stand out and improving image clarity. | Low | Moderate | Moderate | High |

| Resized Image | Maintains consistency across the dataset by resizing images to standard dimensions | Low | Low | High | High |

| Enhanced Image (Improving Contrast) | Lowers noise and enhances contrast in images, improving overall image quality | Moderate | High | Moderate | High |

3.3. Deep and Machine Learning Techniques for AMD Segmentation

Image segmentation is necessary for a quantitative assessment of the lesion area [73]. Researchers have been concentrating more and more on using DL and standard ML techniques to automate the segmentation of retinal cysts and fluid in recent years. A fluid-filled pocket in the retina known as a retinal cyst or fluid is a pathological result of several common eye conditions, such as AMD. Ophthalmologists can benefit greatly from the automated procedures by using them to better interpret and quantify retinal characteristics, which can lead to more precise diagnosis and well-informed treatment options for retinal illnesses. AMD may be diagnosed with the aid of Drusen, the primary disease manifestation. The aberrant deposition of metabolites from RPE cells is the cause of them. The process of Drusen segmentation faces four primary obstacles: Their color is comparable to that of the fundus image and OD, with a yellowish-white hue; they frequently have irregular forms and indistinct borders, as well as uneven brightness and interference from other indicators like blood vessels. Several Drusen segmentation technologies are available in the field of image study in ophthalmology.

Yan et al. [74] suggested a deep random walk technique for effectively Drusen segmentation from fundus images. There are three primary components to the suggested architecture. First, fundus images are sent into a deep feature extraction module, which is divided into two branches: a low-level feature-capturing three-layer CNN and a deep semantic feature-capturing SegNet-like network. Subsequently, the acquired data are combined and sent into a designated affinity learning module to derive pixel-by-pixel affinities for creating the random walk’s transition matrix. Lastly, manual labels are propagated using a deep random walk module. On the STARE and DRIVE datasets, this model demonstrated state-of-the-art performance with accuracies of 97.13, sensitivity of 92.02, and specificity of 97.30. The acquired deep characteristics can assist in managing Drusen variations in size and form as well as color resemblance to different tissues. Furthermore, accurate segmentation is achieved at hazy Drusen borders via the random walk approach.

Contributions: A deep random walk method for Drusen segmentation from fundus pictures is presented in this research. The technique’s strength lies in the random walk process driving the learning algorithms for pixel-pixel affinities and deep picture representations. Compared to other cutting-edge methods, their network is more capable of handling the difficulties associated with Drusen segmentation because of its ability to handle Drusen fluctuations in size and form as well as color resemblance to other tissues thanks to its learned deep characteristics. Furthermore, accurate segmentation is achieved at hazy Drusen borders via the random walk approach.

Another work was proposed, based on Drusen segmentation for AMD detection, by Pham et al. [67] since there were far more non-Drusen pixels than Drusen pixels, they attempted to address the issue of data imbalance by employing a multi-scale deep learning model. Other studies attempted to analyze cropped images, which may lose global information, in an attempt to tackle the high-resolution image problem. Other than cropping the central image to eliminate unnecessary background areas, they do not use any particular pre-processing procedures. The associated approach consists of two networks: a patch-level network that makes final predictions based on related patch images and associated probability maps, and an image-level network that generates Drusen probability maps using a Deeplabv3+ base architecture. The U-Net-based segmentation is used by the Patch level network. For training, a total of 775 fundus photographs from the Samsung Hospital in Kangbuk were utilized. The STARE dataset was used to assess the model’s performance as well. Using data from Kangbuk Samsung Hospital, this model produced an accuracy of 0.995, sensitivity of 0.662, specificity of 0.997, and dice score of 0.625. Using the STARE dataset, this model produced results with an accuracy of 0.981, sensitivity of 0.588, specificity of 0.991, and dice score of 0.542.

Contributions: Their approach predicts more accurate Drusen segmentation masks by integrating local and global data. Furthermore, they can enhance the accuracy of Drusen detection in the early stages of AMD by utilizing the pre-trained model and a mix of several loss functions. They attempted to address the issue of data imbalance by employing a multi-scale deep learning model.

Limitations: There are many restrictions on the model. Binary segmentation may be done with the present configuration. In multi-class segmentation, the Patch-Level Network’s input channel count grows in proportion to the number of classes. It may result in issues with computational complexity and scalability. Furthermore, their approach has to forecast many patches for every high-resolution fundus image to get the Drusen prediction. As a result, their model may require a longer inference time than the others.

Several CNN and FCN designs have been put out since 2017 to segment the cyst/fluid area in OCT images. Assigning a label to every pixel in an image is the goal of semantic segmentation tasks, which are especially suited for FCNs, a subset of CNNs. FCNs have a structure akin to CNNs, but they use a distinct design that preserves the image’s spatial information across the network. Using CNNs for image identification tasks has several benefits, including the ability to handle high-dimensional data, like images, automatically learn and extract features from data, and generalize well to new data. In addition to using CNN’s advantages, FCN can handle complex prediction jobs like semantic segmentation.

Chen et al. [75] suggested a quicker R-CNN-based deep learning-based segmentation method to segment various retinal fluids, such as the pigment epithelium detachment (PED) areas, sub-retinal fluid (SRF), and intra-retinal fluid (IRF). To prevent overfitting, they first segmented the IRF areas and then enlarged the regions to segment the SFT. The PED areas were then divided using the RPE layer. Three distinct forms of retinal SD-OCT volumes from various equipment, Cirrus, Spectralis, and Topcon, are used for the research. Using a general approach to separate different types of illnesses was challenging. As a result, they created various segmentation strategies for various illnesses. The segmentation of IRF was done using a quicker R-CNN-based approach, the segmentation of SRF was done using a 3D region growing-based method, and the segmentation of PED was conducted using an RPE layer segmentation-based method. Three approaches provide passable outcomes. For lesion locations, this approach almost attains an overlapping ratio of 60%.

Contributions: This research presented a quicker R-CNN-based deep learning-based segmentation method to segment various retinal fluids. They created various segmentation strategies for various illnesses. Three approaches provide passable outcomes.

Limitations: The highest overlapping ratio for three types of lesion areas is close to 0.7. The reason is that it is hard to detect lesion areas whose boundary is not obvious and the area is very small. Also, SRF and PEDs interfere with each other seriously.

On the other hand, Schlegl et al. [66] developed an eight-layer FCN for end-to-end segmentation using a dataset made up of 1200 OCT scans from AMD, DME, or RVO patients. A deep learning-based technique was created to automatically identify and measure subretinal fluid (SRF) and intraretinal cystoid fluid (IRC). The Medical University of Vienna’s Ethics Committee acquired the dataset. With a (AUC) of 0.94, a mean precision of 0.91, and a mean recall of 0.84, they were able to obtain excellent accuracy for the identification and measurement of IRC for all three retinal diseases. With an AUC of 0.92, a mean precision of 0.61, and a mean recall of 0.81, the identification and measurement of SRF were likewise quite accurate. This was especially true for neovascular AMD and RVO, which performed better than DME, which was not frequently seen in the population under study. Between automated and manual fluid localization and quantification, a high linear correlation was verified, resulting in an average Pearson’s correlation coefficient of 0.90 for IRC and 0.96 for SRF.

Contributions: They created and validated a completely automated technique to identify, classify, and measure macular fluid in standard OCT scans. For all three retinal diseases, the newly developed, totally automated diagnostic approach based on deep learning obtained excellent accuracy for IRC identification and quantification. Moreover, fluid quantification obtains a high degree of agreement with expert manual evaluation.

Limitations: There is room for improvement based on the SRF detection performance in DME eyes. The decreased performance can be attributed to the fact that DME eyes often have a lower prevalence and less SRF than neovascular AMD or RVO. As a result, the model is unable to adjust for the various class label distributions when the approach is trained only on AMD and RVO instances and then applied to DME situations. However, because of the limited number of target labels, the approach would not be able to obtain a satisfactory representation for SRF when it was directly trained on DME examples. Simultaneous training on AMD, RVO, and DME instances would result in reduced test performance on DME because of changes in label distributions. A significant number of ground truth situations should be available to get around this restriction.

Also, ASPP contributed to Sappa’s work on RetFluidNet, Sappa et al. [76] demonstrated the use of RetFluidNet, an enhanced convolutional neural network (CNN)-based architecture, to separate three different kinds of fluid abnormalities from SD-OCT images. The RetFluidNet design could segment three types of retinal fluids: IRF, SRF, and PED. The RetFluidNet did that by integrating skip-connect techniques with ASPP, the author additionally looked at the effects of hyperparameters and skip-connect approaches on fluid segmentation. B-scans from 124 patients were used for training and testing RetFluidNet and all the comparison techniques. A Cirrus SD-OCT machine (Carl Zeiss Meditec, Inc., Dublin, CA) provided the B-sans. For IRF, PED, and SRF, RetFluidNet obtained accuracy values of 80.05%, 92.74%, and 95.53%, correspondingly. When compared to competing efforts, RetFluidNet showed a notable improvement in clinical applicability, respectable accuracy, and time efficiency.

Contributions: A completely automated technique called RetFluidNet helps to separate three different kinds of fluid abnormalities from SD-OCT images and this leads to AMD early identification and follow-up.

Limitations: They did not investigate the impact of downsampling on the tiny fluid areas. Given that there could be tiny fluid pockets, particularly for IRF, it might be wise to look at the effects of downsampling in the future and develop a different method for scaling images. The primary obstacle faced by the work was the complex optimization procedure that resulted from the numerous hyperparameters and their interdependencies. It takes time to examine the impact of changing the hyperparameters, which makes hyperparameter tuning laborious and thorough.

Kang et al. [77] designed a two-phase neural network for fluid-filled areas segmentation such as pigment epithelial detachment (PED), subretinal fluid (SRF), and intraretinal fluid (IRF) in retinal OCT images. Using the RETOUCH challenge, a satellite event of the MICCAI 2017 conference. The first network’s objective was to identify and divide fluid areas, while the second network enhanced the first one’s resilience. To prevent overfitting during training, the network used a U-net design with a classification layer and max-out and dropout activation. The loss function of the neural network was altered for the U-Net design.

Contributions: In this study, a deep learning approach for fluid segmentation and image identification from OCT images was developed. They created the two-step network to increase the network’s resilience. The suggested approach performs similarly for every data set, even though the three suppliers’ images have distinct qualities.

To enhance the segmentation performance, changes to the loss functions have also been taken into consideration in addition to changes to the network topologies. Liu et al. [78] prevented nearby areas from being recognized as a single entity and addressed the problem of tiny fluid area detection using an uncertainty-aware semi-supervised framework. The RETOUCH dataset was used to assess this approach. The framework was made up of two networks: one for teachers and the other for students. Both networks used the same architecture and three decoders to forecast the probability, contour, and distance maps of the image, which allowed for segmentation. The teacher network could direct students toward the acquisition of more trustworthy information by restricting consistency loss.

Contributions: Suggested a semi-supervised, uncertainty-aware architecture for segmenting retinal fluid. These networks have decoders that can predict the probability, contour, and distance maps at the same time. Their approach can enhance the network’s prediction power on the fluid’s boundary while reducing the instability of the nearby fluid regions that are merging.

Limitations: Some IRF areas are merged by ALNet. ASDNet can segment certain tiny fluid areas and separate some near-fluid regions in comparison to ALNet. Certain false positive areas can be rejected by RFCUNet. Some fluid zones did, however, continue to combine.

Deep learning research in segmentation utilizes the U-Net architecture, a popular type of Fully Convolutional Network (FCN) widely applied in medical image segmentation. The U-Net’s design features a contracting path and an expanding path, enabling precise feature localization and effective network training. This architecture has proven effective in various biomarker segmentation studies. For instance, Tennakoon et al. [79] introduced a method based on U-Net architecture trained with a mixed loss function incorporating dice, adversarial loss terms, and cross-entropy. The inclusion of adversarial loss enhances the encoding of higher-order pixel correlations, eliminating the need for separate post-processing in segmentation tasks. Their approach focused on voxel-level segmentation of three types of fluid lesions (IRF, SRF, and PED) in OCT images from the ReTOUCH challenge, demonstrating the efficacy of deep neural networks with integrated adversarial learning for accurate medical image segmentation.

Contributions: They suggested a deep learning-based approach to the segmentation and categorization of retinal fluid in OCT images. The suggested approach is predicated on a modified U-Net architecture that was trained with a mixed loss function that included an adversarial loss term. The suggested technique has demonstrated efficacy in predicting the existence and voxel-level segmentation of retinal fluids, according to validation data. Retinal fluid segmentation has improved as a result of the network design and loss functions employed.

Diao et al. [80] developed a complementary mask-guided CNN (CM-CNN) as part of a deep learning framework to perform the classification of OCT B-scans with Drusen or CNV from normal ones. The auxiliary segmentation task generated the guiding mask for the CNN. Second, utilizing the CAM output from the CM-CNN, a class activation map guided UNet (CAM-UNet) was presented to achieve the segmentation of Drusen and CNV lesions. The suggested dual guidance network outperformed five classification networks, four segmentation networks, and three multi-task networks in terms of both classification and segmentation accuracy when tested on a portion of the public UCSD dataset. The segmentation Dice coefficient was 77.51%, and the classification accuracy was 96.93%. The suggested model’s generalizability was further demonstrated by the results obtained by segmenting retinal fluids and detecting macular edema on an additional dataset.

Contributions: To classify OCT pictures, a complementary mask-guided CNN is suggested. An auxiliary job of lesion segmentation is added, and the segmentation mask that is produced is employed in a complementary form to guide the extraction of classification features. To accomplish autonomous AMD lesion segmentation, a class activation map guided UNet is suggested. The segmentation job is directed by the CAM from classification, which is fused into the features at each layer.

Table 5 summarizes the discussed machine and deep learning segmentation approaches.

Table 5.

Summary of the machine and deep learning segmentation approaches.

| Study | Method | Dataset | Segmentation Type | Performance | Modality |

|---|---|---|---|---|---|

| Yan et al. [74] | Encoder-decoder Network | STARE, DRIVE | Drusen segmentation | Accuracy: 97.13%, Sensitivity: 92.02%, Specificity: 97.30% | CFP |

| Pham et al. [67] | DeeplabV3,U-Net | Kangbuk Samsung hospital, STARE | Drusen segmentation | Accuracy: 0.99, 0.981%, Sensitivity: 0.662, 0.588%, Specificity: 0.997, 0.991% Dice Score: 0.625, 0.542 | CFP |

| Chen et al. [75] | Faster R-CNN | RETOUCH | Cysts/fluid | Accuracy: 0.665%, Dice Score: 0.997 | OCT |

| Schlegl et al. [66] | FCN | 1200 volumes OCT | Cysts/fluid | Sensitivity: IRC 0.84, SRF 0.81%, Precision: IRC 0.91, SRF 0.61, Area under the curve (AUC): IRC 0.94, SRF 0.92 | OCT |

| Sappa et al. [76] | RetFluidNet | 124 volumes OCT | Cysts/fluid | Accuracy: IRF 80.05, PED 92.74, SRF 95.53%, Dice Score: 0.885 | OCT |

| Kang et al. [77] | U-Net | RETOUCH | Cysts/fluid | Accuracy: 0.968%, Dice Score: 0.9 | OCT |

| Liu et al. [78] | FCN | RETOUCH | Cysts/fluid | Dice Score: 0.744 | OCT |

| Tennakoon et al. [79] | U-Net | RETOUCH | Cysts/fluid | Dice Score: 0.737 | OCT |

| Diao et al. [80] | CM-CNN, (CAM-UNet) | Heidelberg Engineering, Germany | Drusen and CNV lesions | Dice Score: 77.51% | OCT |

3.4. Deep and Machine Learning Techniques for AMD Diagnoses

Exploring DL/ML Techniques for AMD diagnoses reveals a landscape rich in methodologies harnessing AI to enhance diagnostic capabilities. These techniques, particularly DL, analyze vast datasets with complex algorithms and neural networks, unveiling patterns beyond human capacity and automating AMD detection and classification. CNNs, inspired by human visual processing [81], excel in recognizing subtle features in retinal images, aiding early and accurate diagnoses of AMD [82,83]. Recurrent neural networks (RNNs) and Long Short-Term Memory Networks (LSTMs) analyze sequential data like OCT scans over time, crucial for tracking AMD progression [84].

Ensemble learning combines predictions from multiple models, enhancing diagnostic accuracy and generalizability across datasets. Transfer learning utilizes pre-trained models on large datasets to optimize efficiency, especially when annotated data are limited in medical imaging. This amalgamation of DL/ML techniques propels AMD diagnostics into a new era of precision. From CNNs to ensemble learning, these methodologies collectively redefine AMD assessment, promising a more efficient and accurate diagnostic landscape [85,86].

3.4.1. Deep Learning Techniques

Recent advancements in DL for AMD classification are discussed in this section, showing promising results in categorizing retinal fundus images for different AMD stages. Studies have explored network depth and transfer learning, indicating that training networks from scratch with sufficient data can lead to higher accuracy than using pre-trained models [87,88]. However, addressing model limitations, such as generalizability to diverse datasets and matching attention maps with underlying vision transformer (ViT0 sickness, requires further research. In diagnosing retinal diseases, DL tasks mainly involve segmentation and classification. Classification directly categorizes input images into illness groups, while segmentation tasks reveal detailed information about retinal disorders from fundus images, including critical lesions and biomarkers [7,89,90,91].

Several studies have examined categorizing AMD modalities, using different techniques and methodologies. For example, Karri et al. [19] proposed a transfer learning method based on the Inception network, utilizing the DHU database to classify dry AMD, DME, or normal using a pre-trained CNN and GoogLeNet. Initially, saturated pixels were replaced with pixels of intensity 10. Following RPE estimation, smoothing, and retinal flattening, the RPE lower contour was relocated to a fixed position 71% of the height). The image was resized to and filtered using the BM3D filter. Three BM3D filter results were replicated, each treated as channel information. The proposed method utilized flattened and filtered images to classify retinal OCT images as normal, DME, or AMD. Across all validations (N1, N2, and N3), the average prediction accuracy for normal, DME, and AMD was 99%, 86%, and 89%, respectively, with the best model achieving 94% accuracy.

Philippe et al. [92] utilized DL techniques, including transfer learning and universal features trained on fundus images, along with input from a clinical grader, to develop automated methods for AMD detection from fundus images. Their approach aimed to classify images into disease-free/early stages and referable intermediate/advanced stages using the AREDS database. The deep CNN achieved an accuracy ranging from 88.4% to 91.6% and an AUC between 0.94 and 0.96.

Li et al. [21] utilized the VGG16 to classify CNV and normal AMD using a private retinal OCT image database. They employed image normalization but did not incorporate image denoising to avoid overfitting and enhance generalization. To address variations in image intensities, they normalized and resized OCT volumes to ensure uniform dimensions for processing with VGG16. They developed a normalization technique to adjust eye curvature, normalize volume intensities, and align BM layers achieving 100% AUC, 98.6% accuracy, 97.8% sensitivity, and 99.4% specificity.

Bulut et al. [93] proposed a DL method for identifying retinal defects using color fundus images. They employed the Xception model and transfer learning technique, training on images from the eye diseases department of Akdeniz University Hospital, and additional open-access fundus datasets for testing. The study explored 50 potential parameter combinations of the Xception model to optimize performance. The fourth model achieved the highest accuracy of 91.39% for the training set, while the zeroth model attained the best accuracy of 82.5% for the validation set.

Xu et al. [94] devised a DL approach using a ResNet50 model on a private database from Peking Union Medical College Hospital. They also constructed alternative machine-learning models with random forest classifiers for comparison. Three retinal specialists independently diagnosed the same testing dataset for a human-machine comparison, with participant photographs presented in a single partition subset. Utilizing fundus and OCT image pairs, their dual deep CNN model classified polypoidal choroidal vasculopathy (PCV) and AMD. Transfer learning involved initially applying ResNet-50 weights to two separate models handling fundus and OCT images, refining weights on new data, and then transferring them to corresponding convolutional blocks. The final FC layer classified input pairs into four categories: PCV, Wet AMD, Dry AMD, and AMD. The bimodal DCNN outperformed the top expert (Human1, Cohen’s 0.810) with 87.4% accuracy, 88.8% sensitivity, 95.6% specificity, and full agreement with the diagnostic gold standard (Cohen’s 0.828).

Contributions: Studies [19,21,92,93,94] showed the effectiveness of fine-tuning models trained on medical/non-medical images for improved disease recognition compared to traditional methods. It also highlighted the feasibility of utilizing pre-trained models for faster convergence with less data. Some studies compared their method with alternative machine-learning models, such as random forest classifiers.

Limitations: Studies [19,21,92,93,94] encountered challenges such as overfitting, reliance on a private database, gradient disappearance or explosion and slight class imbalance in the number of fundus images during training were encountered. Additionally, some studies relied only on accuracy. Furthermore, some studies relied solely on the AREDS dataset for evaluation, lacking validation on independent clinical datasets such as MESSIDOR. Additionally, the reliance on transfer learning and a single model architecture may limit the generalizability of the approach to different datasets or clinical settings, suggesting the need for further validation and exploration of alternative methodologies.

Rasti et al. [20] developed a CAD system using public OCT images (NEH dataset; DHU dataset) to differentiate between dry AMD, DME, and normal retina. Their approach utilized a multi-scale convolutional mixture of expert (MCME) ensemble model, a data-driven neural structure employing a cost function for discriminative and fast learning of image attributes. Image preparation involved normalization, retinal flattening, cropping, ROI selection, VOI creation, and augmentation. On the DHU dataset, the model achieved accuracy, recall, and AUC of 98.33%, 97.78%, and 99.90% for N1, N2, and N3, respectively, while on the NEH dataset, it achieved total precision, total recall, and total AUC of 99.39%, 99.36%, and 0.998.

Contributions: The study introduced a CAD system employing the MCME ensemble model for classifying OCT images into dry AMD, DME, or normal retina categories. By incorporating a mixture model, the study achieved high accuracy, recall, and AUC, demonstrating the effectiveness of CNNs on multiple-scale sub-images.

Limitations: The study lacked occlusion testing and qualitative assessment of model predictions. Additionally, it did not include image denoising, complete retinal layer segmentation, or lesion detection algorithms, which are essential for comprehensive analysis in clinical settings.

Thakoor et al. [41] developed a custom-built 3D CNN with two dense layers, four 3D convolutional layers, and a final SoftMax classification. They discuss 3D–2D hybrid CNNs. Patients who were 18 years of age or older who were treated by collaborating vitreoretinal faculty at Columbia University Medical Centre provided the data utilized in this study. Patients with non-neovascular AMD, patients with no significant vascular pathology on OCTA (non-AMD), and patients with neovascular AMD who have actionable CNV based on patient diagnosis make up the three patient groups for CNN training. They achieve a 93.4% testing accuracy in the binary categorization of neovascular AMD vs. non-AMD by using stacked 2D OCTA images of the retinal layers with 97 healthy, 80 neovascular AMD (NV-AMD) and 160 non-neovascular AMD (non-NV-AMD).

Contributions: The findings demonstrate the superiority of models with various imaging modalities concatenated into one, such as OCT volumes with b-scan images and OCTA, over models with a single input image modality. By using dataset balance, the models’ performance was improved. GradCAM visualization at each layer of the 3D input volumes indicates that model performance may be enhanced by including a high-resolution b-scan cube across the retina. Very modest angiographic results, particularly in early types of AMD, need to be confirmed by high-resolution b-scans.

Limitations: Imbalanced settings and data-limited are the study’s limitations.

Tan et al. [1] proposed a deep CNN for diagnosing AMD using fundus images. Their custom-designed CNN, consisting of 14 layers, aimed to classify images into AMD (dry and wet) and normal categories. Utilizing data from the Ophthalmology Department of Kasturba Medical College (KMC), they employed blindfold and 10-fold cross-validation methods, achieving high classifier accuracies of 91.17% and 95.45%, respectively.

Contributions: The study proposed a deep CNN for diagnosing AMD using fundus images, consisting of 14 layers, and aimed at classifying images into AMD (dry and wet) and normal categories. The model’s portability and affordability make it suitable for deployment in regions with limited access to ophthalmology services, facilitating rapid screening for AMD among the elderly.

Limitations: The study faced limitations such as the need for large amounts of labeled ground truth data for optimal model performance. Additionally, the complexity of the CNN model led to issues with overfitting and convergence, requiring continuous parameter adjustments for optimal performance. Moreover, CNN model training was laborious and time-consuming, although testing of fundus images became quick and precise once the model was trained.

Jain et al. [95] aimed to classify retinal fundus images as diseased or healthy without explicit segmentation or feature extraction. They developed the LCDNet system for binary categorization using DL techniques. Two datasets were utilized: one from the ML data repository at Friedrich-Alexander University and another from the Retinal Institute of Karnataka, India. The model achieved an accuracy ranging from 96.5% to 99.7%. Notably, color images performed worse than red-free images, aligning with medical community beliefs.

Contributions: The study developed the LCDNet system for binary categorization of retinal fundus images as diseased or healthy, utilizing DL techniques without explicit segmentation or feature extraction. The study highlighted the potential of DL for automated disease classification in retinal images, with red-free images showing better performance compared to color images, aligning with medical community beliefs.

Limitations: The study identified the need for a larger and more diverse dataset to enhance the model’s performance for specific diseases. Additionally, the focus on binary categorization limits the model’s ability to distinguish between different diseases or severity levels within diseased images. Comprehensive training with multi-class datasets would be necessary to address this limitation and improve the model’s applicability in clinical settings.

Islam et al. [96] proposed a CNN-based method to identify eight ocular disorders and their affected areas. After standard pre-processing, data are fed into the network for classification. Using the ODIR dataset and a state-of-the-art GPU, the model achieved an AUC of 80.5%, a score of 31%, and an F-score close to 85%.

Contributions: This study marked the first evaluation of various eye disorders on a real-life dataset, demonstrating strong performance across different datasets. Additionally, the model showed flawless performance when tested on alternative datasets, highlighting its accuracy in identifying all eight categories of ocular disorders. The user-friendly nature of the system and its practical viability in real-time testing underscore its potential for revolutionizing public healthcare services through image processing and neural networks.

Limitations: The study did not address specific challenges or limitations encountered during model development or evaluation. Further exploration of potential limitations, such as computational resource requirements, dataset biases, or generalizability to diverse populations, could provide valuable insights for future research and implementation in clinical settings.

Bhuiyan et al. [97] utilized CNNs and the AREDS dataset to classify Referable AMD of fundus images into no, early, intermediate, or advanced AMD and predict late AMD progression: dry or wet. They developed six automated AMD prediction algorithms based on color fundus images by integrating DL, ML, and algorithms for AMD-specific image parameters. These methods produced image probabilities, which were combined with demographic and AMD-specific parameters in a machine-learning prediction model to identify individuals at risk of progressing from intermediate to late AMD.

Contributions: The study utilized CNNs and the AREDS dataset to develop six automated AMD prediction algorithms, classifying Referable AMD of fundus images into various stages and predicting late AMD progression. By integrating DL, ML, and algorithms for AMD-specific image parameters, the study produced image probabilities combined with demographic and AMD-specific parameters, facilitating the identification of individuals at risk of progressing from intermediate to late AMD. The immediate applicability of these methods in AMD studies using color photography could significantly reduce human labor in image classification, potentially advancing telemedicine-based automated screening for AMD and improving patient care in the field of public health.

Limitations: The study faced limitations, particularly in lower prediction accuracy when stratified according to GA and CNV. This discrepancy might be attributed to the predominance of non-incident events compared to pure dry and wet AMD cases during the construction of machine-learning models.

Zapata et al. [61] utilized the Optretina dataset, comprising approximately 306,302 images, to develop a CNN-based classification algorithm for AMD Disease vs. No disease. They designed five algorithms and involved three retinal specialists to classify all images. Three CNN architectures were employed, two of which aimed to reduce parameters while maintaining accuracy. The study achieved an accuracy of 0.863, an AUC of approximately 0.936, a sensitivity of 90.2%, and a specificity of 82.5%.

Contributions: The study utilized the Optretina dataset to develop a CNN-based classification algorithm for AMD Disease vs. No disease, involving three retinal specialists in image classification. The study designed five algorithms and employed three CNN architectures, achieving impressive performance metrics. These algorithms demonstrated the ability to assess image quality, distinguish between left and right eyes, and accurately identify AMD and GON with notable sensitivity, specificity, and accuracy.

Limitations: The study may have faced limitations such as potential biases introduced by the involvement of retinal specialists in image classification. Additionally, the performance metrics achieved might vary across different datasets or clinical settings, warranting further validation and evaluation on diverse datasets to ensure the generalizability and robustness of the algorithms.