Abstract

Automated blood vessel segmentation is critical for biomedical image analysis, as vessel morphology changes are associated with numerous pathologies. Still, precise segmentation is difficult due to the complexity of vascular structures, anatomical variations across patients, the scarcity of annotated public datasets, and the quality of images. Our goal is to provide a foundation on the topic and identify a robust baseline model for application to vascular segmentation using a new imaging modality, Hierarchical Phase-Contrast Tomography (HiP-CT).

We begin with an extensive review of current machine learning approaches for vascular segmentation across various organs. Our work introduces a meticulously curated training dataset, verified by two annotators, consisting of vascular data from three kidneys imaged using HiP-CT as part of the Human Organ Atlas Project. HiP-CT, pioneered at the European Synchrotron Radiation Facility in 2020, revolutionizes 3D organ imaging by offering resolution around 20μm/voxel and enabling highly detailed localized zooms up to 1μm/voxel without physical sectioning. We leverage the nnU-Net framework to evaluate model performance on this high-resolution dataset, using both known and novel samples, and implementing metrics tailored for vascular structures. Our comprehensive review and empirical analysis on HiP-CT data set a new standard for evaluating machine learning models in high-resolution organ imaging.

Our three experiments yielded Dice similarity coefficient (DSC) scores of 0.9523, 0.9410, and 0.8585, respectively. Nevertheless, DSC primarily assesses voxel-to-voxel concordance, overlooking several crucial characteristics of the vessels, and should not be the sole metric for judging the performance of vascular segmentation. Our results show that while segmentations yielded reasonably high scores, such as centerline Dice values ranging from 0.82 to 0.88, certain errors persisted.

Specifically, large vessels that collapsed due to the lack of hydrostatic pressure (HiP-CT is an ex vivo technique) were segmented poorly. Moreover, decreased connectivity in finer vessels and higher segmentation errors at vessel boundaries were observed. Such errors, particularly in significant vessels, obstruct the understanding of the structures by interrupting vascular tree connectivity. Through our review and outputs, we aim to set a benchmark for subsequent model evaluations using various modalities, especially with the HiP-CT imaging database.

1. Introduction

The vascular, or circulatory, system consists of the network of blood vessels responsible for circulating blood, delivering oxygen, and facilitating the removal of waste from tissues and organs1. Abnormalities in the structure or function of vascular networks can lead to, or be indicative of, various pathologies ranging from tumor growth and metastasis to strokes and cardiovascular disorders. Hence, accurate segmentation of vasculature and quantitative evaluation of its morphology are widely used to better understand these pathophysiological processes2–5.

Annotation of images by experts has been regarded as the segmentation gold standard, but it is time-consuming and requires specialised knowledge6. The success of convolutional neural networks (CNNs) in classification has led researchers to employ deep learning technologies for image segmentation7. Consequently, a growing number of automated and semi-automatic vessel segmentation methods have been developed in recent years for the diagnosis of diseases associated with the vascular system4,6,8.

For clinical applications to be effective, segmentation algorithms must handle a broad spectrum of anatomical and sample variations. They should also be versatile enough to operate across various imaging modalities and quality levels. Magnetic resonance imaging (MRI), positron emission tomography (PET), and computed tomography (CT) have been the three major imaging modalities utilised for decades7. For vascular imaging, magnetic resonance angiography (MRA) is one of the most common choices due to its noninvasive nature, absence of ionising radiation exposure, capability for non-contrast examination, and capacity to provide volumetric representations that highlight vascular disease9.

However, the complexity of vascular imaging data and the anatomical variability among subjects make vessel segmentation a challenging task. Despite the clinical need, a fully automated segmentation method for 3D vascular segmentation and subsequent feature extraction has not yet been developed due to several specific challenges10. The main hurdles are the variations across scales, differences in individual anatomies, complexities of slender structures, a limited volume ratio (i.e. vessel/background), and a scarcity of publicly available labeled data. In terms of individual anatomical variations, there are significant disparities in vessel length, diameter, and tortuosity, complicating the tasks of vascular tracking and segmentation10–13. Additionally, narrower vessels with diameters at the border of the imaging resolution are particularly difficult to capture10,14.

Hierarchical Phase-Contrast Tomography (HiP-CT)15 is a nascent imaging technology developed in 2020 on the BM05 beamline at the European Synchrotron Radiation Facility (ESRF). It is a propagation-based phase-contrast tomography technique that utilises the increased brilliance and resulting high spatial coherence of the ESRF’s upgrade to a 4th-generation X-ray source, the Extremely Brilliant Source (EBS). With HiP-CT, it is possible to resolve very small refractive index differences (density differences) in soft tissue structures, even in very large samples such as whole adult human organs. The propagation of the X-rays through free space after interaction with the sample allows the refractive index shifts to be converted to intensity variations through single-distance phase retrieval at reconstruction16. HiP-CT has been used to image whole human organs, down to the resolution of some single-cell types in regions of interest15.

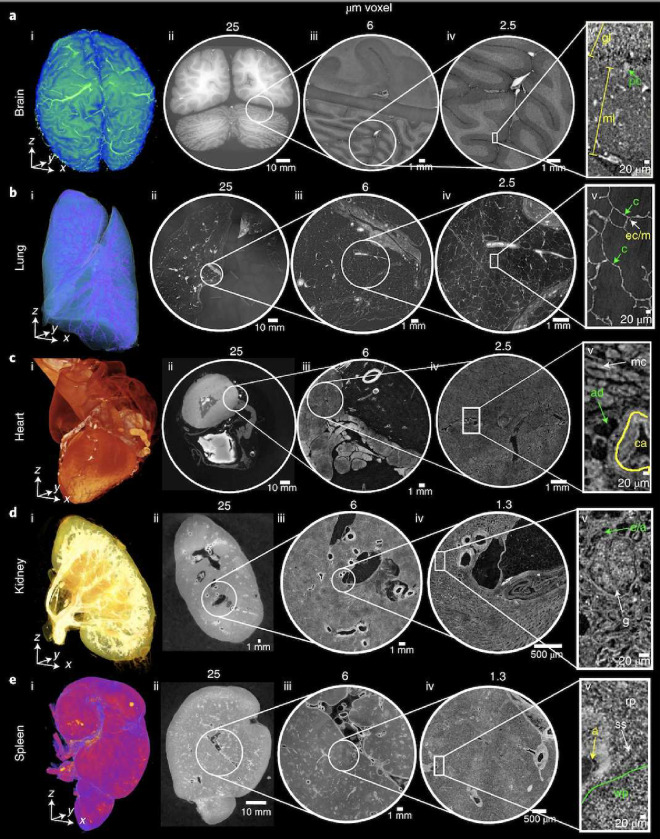

A specific novelty of HiP-CT imaging, as it relates to the vasculature, lies in its hierarchical nature. Whole human organs can be imaged with an overview resolution of ~20μm/voxel, followed by high-resolution (down to ~2μm/voxel) regions of interest anywhere within the sample without sectioning (Figure 1). This unique feature allows researchers to image the hierarchical structure of the vascular tree from the largest vessels down to near the capillary bed in intact human organs. This novel capability has already allowed unique insights into vascular abnormalities in COVID-19 lung lobes to be identified17–19, as well as vascular quantification across the whole organ20. Despite its advantages and suitability for vessel imaging, HiP-CT also poses many challenges for CNN-based methods of vascular segmentation:

- large data volumes (routinely ≥ 1 TB) that require cutting-edge hardware
- multi-scale vessels to segment
- lack of available ground truth training data due to the novelty of the method
- phase contrast generates fringes in intensity at vessel boundaries
- large vessels can collapse
- no targeted vascular staining to enhance contrast between vessel lumen and surrounding tissue
- a continually developing technique, with ongoing changes in image quality (e.g. improvements to resolution, SNR and CNR) resulting in heterogeneous datasets

Figure 1.

Figure after15, HiP-CT of brain (a), lung (b), heart (c), kidney (d) and spleen (e); for each organ, a 3D rendering (i) of the whole organ is shown using scans at 25μm per voxel. Subsequent 2D slices (ii–iv) show positions of the higher-resolution VOI relative to the previous scan. (v), Digital magnification of the highest-resolution image with annotations depicting characteristic structural features in the brain (ml, molecular layer; gl, granule cell layer; pc, Purkinje cell), in the lung (c, blood capillary; ec/m, epithelial cell or macrophage), in the heart (mc, myocardium; ca, coronary artery; ad, adipose tissue), in the kidney (e/a, efferent or afferent arteriole; g, glomerulus) and in the spleen (rp, red pulp; wp, white pulp; a, arteriole; ss, splenic sinus). All images are shown using 2× binning.

In light of the opportunity and challenges that HiP-CT poses for vascular research, we review the literature on blood vessel segmentation with a particular focus on identifying current models that are most suited to the segmentation of blood vessels from HiP-CT imaging. We review segmentation on an organ-by-organ basis as the vascular and parenchymal tissue structures surrounding the vasculature differ vastly between different tissues. Our ultimate aim is to identify and then develop a model and training strategy which will provide a robust and generalisable method that can be easily adapted to segment multi-scale vascular structures across all organs imaged by HiP-CT.

The main contributions of this paper are as follows:

- a comprehensive review of deep learning methods for vascular segmentation
- creation of a novel Hierarchical Phase-Contrast Tomography (HiP-CT) kidney dataset
- implementation of the nnU-Net model, tailored for kidney vessel segmentation, using our novel HiP-CT dataset
- integration into the U-Net architecture of various loss functions better suited to tubular structures such as vessels
- in-depth discussion of the challenges of blood vessel segmentation and the significance of performance metrics
- establishment of a benchmark for future model evaluations in the context of phase-contrast tomography imaging data

This paper is arranged as follows: In Section 2, deep learning models used for vessel segmentation, the datasets used in their training, and the evaluation metrics in the literature are briefly explained. In Section 3, the most prominent method in the literature is tested on HiP-CT kidney vascular data to establish a baseline of performance and highlight specific challenges in the adaptation of these models to HiP-CT data. The findings from our experiments are presented in Section 4, followed by a discussion of these results in Section 5. Finally, we conclude the paper in Section 6 with a summary of our conclusions and suggestions for future research directions.

2. Vessel segmentation

2.1. Modalities for 3D vascular imaging

Whilst the ideal vessel segmentation algorithm or model is robust across a range of imaging modalities, in reality, the imaging modality will impose contrast and resolution limits that must be considered in segmentation approaches, as well as imaging-specific artefacts (e.g. anisotropic voxels). Here, we review some of the most common imaging modalities for vascular networks and contextualise the development of HiP-CT within this field.

The most widely adopted procedure for visualising vessels in human organs in vivo is angiography, i.e. where a contrast agent is injected into the vessels. The major angiographic modalities utilised in clinical practice are computed tomography angiography (CTA), magnetic resonance angiography (MRA), and digital subtraction angiography (DSA) also known as a conventional angiogram.

MRA emerged as the predominant modality in vessel segmentation studies, as evidenced in Tables 1, 2, 3, and 4. Among the 31 deep learning-based vessel segmentation studies we reviewed across various organs, 9 employed MRA. Notably, for brain vessel segmentation, 9 out of the 12 studies utilised this modality. This is largely attributed to the fact that commonly available datasets for brain vasculature studies, such as PEGASUS21, MIDAS22, SCAPIS23, and 1000PLUS24, utilise MRA.

Table 1.

Review of the papers applying deep learning for brain vessel segmentation.

| References | Modality | Data source | No. of subj. | ML model | Input | DSC | Add’l perf. metrics |
|---|---|---|---|---|---|---|---|
| Livne et al.52 | TOF MRA | Private (PEGASUS) | 66 | U-Net | 2D patches | 0.88 | 95HD = ~7 voxels and AVD = ~0.4 voxels |
| Phellan et al.53 | TOF MRA | Private | 5 | 2D CNN | 2D patches | between 0.764 and 0.786 | - |
| Hilbert et al.54 | TOF MRA | Private (PEGASUS + 7UP + 1000Plus) | 264 | 3D CNN (BRAVE-NET) | 3D patches | 0.931 | 95HD = 29.153 and AVD = 0.165 |
| Patel et al.55 | DSA | Private | 100 | 3D CNN (DeepMedic) and 3D U-Net | 3D patches | 0.94±0.02 and 0.92±0.02 respectively | CAL = 0.84±0.07 and 0.79±0.06 respectively |
| Tetteh et al.56 | TOF MRA and μCTA | Publicly available | Synthetic data | NN with 2D orthogonal cross-hair filters (DeepVesselNet) | 3D volumes | 0.79 | Prec = 0.77 and Recall = 0.82 |
| Garcia et al.57 | 3DRA | Private | 5 | 3DUNet-based architectures | 3D patches | 0.80 ± 0.06 | Prec = 0.75 ± 0.10 and Recall = 0.90 ± 0.07 |
| Vos et al.58 | TOF-MRA | Private | 69 | 2D and 3D U-Net | 2D and 3D patches | 0.74 ± 0.17 and 0.72 ± 0.15 respectively | MHD = 47.6 ± 40.4 and 581.3 ± 57.0 respectively |
| Chatterjee et al.59 | TOF MRI | Not explicitly stated | 11 | U-Net MSS | 3D patches | 0.79 ± 0.091 | IOU = 65.89±1.25 |
| Zhang and Chen60 | TOF-MRA | Not explicitly stated | 42 | DD-Net | 3D patches | 0.67 | Sensitivity = 67.86 and IOU = 33.66 |
| B. Zhang et al.61 | TOF-MRA | Publicly available (MIDAS) | 109 | DD-CNN | 3D patches | 0.93 | PPV = 96.4730, Sensitivity = 90.1443, and Acc = 99.9463 |
| Lee et al.62 | MRA | Private (SNUBH) | 26 | 2D U-Net with LSTM (Spider U-Net) | 2D images | 0.793 | IOU = 74.3 |
| Quintana et al.63 | TOF-MRA | Private (UNAM) | 4 | Dual U-Net-Based cGAN | 2D images | 0.872 | Prec = 0.895 |

CNN: Convolutional neural network; GAN: Generative adversarial network; cGAN: Conditional GAN; DD-Net: Dense-dilated neural network; LSTM: Long short-term memory; U-Net MSS: Multi-scale supervised U-Net; TOF MRA: Time of flight magnetic resonance angiography; DSA: Digital subtraction angiography; μCTA: Micro-computed tomography angiography; 3DRA: 3D rotational angiographies; DSC: Dice similarity coefficient; HD: Hausdorff distance; AVD: Average distance; Prec: Precision; MHD: Mahalanobis distance; IOU: Intersection over union; PPV: Positive predictive value; CAL: Connectivity-area-length.

Table 2.

Review of the papers applying deep learning for kidney vessel segmentation.

| References | Modality | Data source | No. of subj. | ML model | Input | DSC | Add’l perf. metrics |
|---|---|---|---|---|---|---|---|
| Karpinski et al.64 | WSI | Publicly available | 35 | 2D UNet with Resnet34 backbone | 2D images | - | Acc: 0.893 |
| He et al.65 | CT | Private | 170 | DPA-DenseBiasNet | 3D volumes | 0.861 | MCD: 1.976 |
| Taha et al.66 | CT | Private | 99 | Kid-Net (a 3D CNN) | 3D patches | - | F1 score: 0.72 (artery); 0.67 (vein) |
| He et al.67 | CTA | Private | 122 | EnMcGAN | 3D patches | 0.89±0.6 (artery); 0.77±0.12 (vein) | - |
| Zhang et al.68 | CT | Publicly available | 392 | DPA-DenseBiasNet | 2D images | 0.884 | - |
| Xu et al.69 | micro-CT | Private | 8 | CycleGAN | 3D patches | 0.768 ± 0.3 | Acc: 0.992 |
| Li et al.70 | CT | Publicly available | 35 | DUP-Net | 3D patches | 0.883 | Precision: 0.911; Recall: 0.858 |

CNN: Convolutional neural network; GAN: Generative adversarial network; WSI: Whole slide imaging; DPA: Deep priori anatomy; MCD: Mean centerline distance; EnMcGAN: Ensemble multi-condition GAN; DUP-Net: Double UPoolFormer networks; DSC: Dice similarity coefficient.

Table 3.

Review of the papers applying deep learning for coronary vessel segmentation.

| References | Modality | Data source | No. of subj. | ML model | Input | DSC | Add’l perf. metrics |
|---|---|---|---|---|---|---|---|
| Dong et al.71 | CCTA | Private | 338 | Di-Vnet | 3D patches | 0.902 | Prec = 0.921, Recall = 0.97 |
| Gao et al.72 | XCA | Private | 130 | GBDT | 2D images | - | F1 = 0.874, Sen = 0.902, Spec = 0.992 |
| Wolterink et al.73 | CCTA | Publicly available | 18 | GCN | 2D images | 0.74 | MSD = 0.25mm |
| Li et al.74 | CCTA | Private | 243 | 2D U-Net with 3DNet | 2D images | 0.771 ± 0.021 | AUC = 0.737 |
| Song et al.75 | CCTA | Private | 68 | 3D FFR U-Net | 3D patches | 0.816 | Prec = 0.77, Recall = 0.87 |
| Zeng et al.76 | CCTA | Publicly available | 1000 | 3D-UNet | 3D volumes | 0.82 | - |

DSC: Dice similarity coefficient; Prec: Precision; Sen: Sensitivity; Spec: Specificity; GBDT: Gradient boosting decision tree; CCTA:Coronary computed tomographic angiography; XCA: X-ray coronary angiography; GCN: Graph convolutional networks; MSD: Mean surface distance; AUC: Area under the receiver operating characteristic curve; ROI: Region of interest; 3D FFR U-Net: 3D feature fusion and rectification U-Net

Table 4.

Review of the papers applying deep learning for pulmonary vessel segmentation.

| References | Modality | Data source | No. of subj. | ML model | Input | DSC | Add’l perf. metrics |
|---|---|---|---|---|---|---|---|
| Tan et al.77 | CT and CTA | Publicly available | 16 | 2D-3D U-Net nnU-net | 2D images 3D volume | 0.786, 0.797, 0.77 (on CT) | OR = 0.281, 0.285, 0.304 |
| Nam et al.78 | CTA | Private | 104 | 3D U-Net | 3D patches | 0.915±0.31 | AUROC = 0.995 |
| Guo et al.79 | CT | Private | 50 | 3D CNN | 3D patches | 0.943 | - |
| Xu et al.80 | CT | Private | | 2D CNN | - | - | |
| Nardelli et al.81 | CT | Publicly available | 55 | 3D CNN | 2D and 3D patches | - | Sen = 0.93, Prec = 0.83 |
| Wu et al.82 | CT | Private | 143 | MSI-U-Net | 3D volume | 0.7168 | Sen = 0.7234, Prec = 0.7893 |

CNN: Convolutional neural network; CT: computed tomography; CTA: computed tomography angiography; MSI-U-Net: Multi-scale interactive U-Net; OR: Over segmentation rate; AUROC: Area under the receiver operating characteristic curve; Sen: Sensitivity; Spec: Specificity; Prec: Precision.

MRA can be divided into three categories depending on the technique behind the image acquisition / generation: TOF (time-of-flight), PC (phase contrast), and CE (contrast-enhanced). Among those, TOF-MRA is the most frequently used non-contrast bright-blood technique for imaging the human vascular system25,26. As suggested by its name, TOF MRA relies on a principle known as flow-related enhancement, which happens when completely magnetised blood flows into a slab of magnetically saturated tissue whose signal has been muted by repeated RF-pulses27. However, the long acquisition time and high operational costs make it difficult to employ for cerebral artery visualisation28,29. Furthermore, MRA has been shown to overestimate stenosis compared to other modalities30.

CTA, on the other hand, is faster, takes a few minutes to complete, and is more accurate than MRA. However, unlike MRA, all CTAs need the administration of an IV contrast agent and involve radiation exposure, making it less safe.

DSA has been utilised as the gold standard for imaging vessels; nonetheless, this invasive and labor-intensive method is rather costly and comes with discomforts and possible dangers31,32. For the in vivo methods described above, the resolution of imaging is on the order of 0.5–2mm26,31, with state-of-the-art TOF techniques able to resolve at best 300–500μm. Capturing the microvasculature (1–100μm) is only possible in vivo at very shallow depths using e.g. contrasted ultrasound33, or must be performed ex vivo on extracted tissue using optical or X-ray methods34,35. Even among the ex vivo techniques, only two, light-sheet imaging and HiP-CT, are capable of imaging intact human organ vascular networks that bridge the length scales from large arteries and veins down to the capillary bed15,36. Of the two techniques, HiP-CT can perform multi-scale imaging far more quickly than light-sheet imaging: it does not rely on staining of the vasculature, which shortens sample preparation20, and imaging of a whole organ at 20μm/voxel can be performed in as little as 1 hr20. Whilst the acquisition of these large vascular network datasets is becoming increasingly fast, automated segmentation has also been a rapidly developing area of research37.

Before the arrival of complex machine learning methods, vessel segmentation relied heavily on traditional techniques such as kernel-based methods, tracking methods, mathematical morphology-based methods, and model-based methods38. In kernel-based methods, edge detection techniques, like the Canny or Sobel operators, identify vessel boundaries using gradient information39. More sophisticated approaches, such as Hessian-based methods, can be categorised under model-based methods40. Hessian-based methods utilise second-order intensity derivatives to capture vessel-like structures, while level-set methods evolve contours within the image domain to detect vessels. Active contours, or snakes, are tracking methods that are dynamically adjusted to capture vessel boundaries, and mathematical tools like B-splines offer precise modeling of vessel structures41,42.
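To make the gradient-based idea concrete, the following is a minimal pure-Python sketch of the Sobel operator applied to a small 2D image. The function name `sobel_magnitude` and the toy image are illustrative assumptions and are not taken from any of the cited methods; border pixels are simply left at zero.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (GX) and vertical (GY) gradients.
GX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
GY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image):
    """Return the gradient magnitude of a 2D image given as a list of rows.

    Border pixels are left at 0.0 because the 3x3 window does not fit there.
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = gy = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    value = image[r + dr][c + dc]
                    gx += GX[dr + 1][dc + 1] * value
                    gy += GY[dr + 1][dc + 1] * value
            out[r][c] = math.hypot(gx, gy)
    return out


# Toy image (assumption): dark tissue (0) on the left, bright lumen (1) on the right.
toy_image = [[0, 0, 1, 1, 1] for _ in range(5)]
edges = sobel_magnitude(toy_image)
```

In this toy case the response is non-zero only in the two columns that straddle the intensity step, which is the behaviour that kernel-based vessel boundary detection relies on.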

These traditional methods provided a foundation that paved the way for machine learning in the realm of medical image analysis.

With the rapid advancement of computational capabilities, a paradigm shift has taken place in the realm of image processing and machine learning: the rise of deep neural networks (NNs). These NNs, employing multi-layered architectures, extract patterns within data through multiple levels of abstraction and have transformed the way images are understood and processed.

Among various NN architectures, CNNs have stood out as highly effective for image analysis tasks. They possess the capability to learn image features in a hierarchical manner, progressing from simple to complex, thereby eliminating the need for manual feature extraction. This inherent feature has made CNNs especially popular in the field of medical image analysis.

Recently, the landscape of computer vision has seen another transformative shift with the emergence of Transformers43. Initially successful in natural language tasks, Transformers have begun to find application in various computer vision challenges, prompting researchers to reevaluate the dominant role of CNNs. These advancements in computer vision have also generated significant interest in the medical imaging domain44. Transformers, with their ability to capture a more extensive global context than CNNs, have now become a subject of considerable attention in the medical imaging community45,46.

2.2. Evaluation metrics

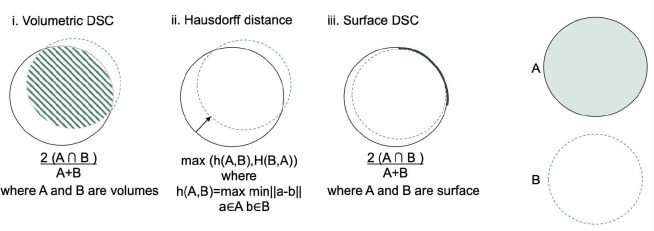

Any automated vessel segmentation model or algorithm can be assessed quantitatively through various performance metrics. Depending on the theory behind them, these metrics can be divided into overlap-based, volume-based, pair-counting-based, information-theoretic-based, probabilistic-based, and spatial distance-based measures38. Some of these are illustrated in Figure 2.

Figure 2.

Illustration of the calculation of the volumetric Dice similarity coefficient (DSC), Hausdorff distance, and surface DSC. The solid line represents the ground truth contour, whereas the dashed line is the prediction. i. Volumetric DSC, defined as the intersection of two volumes (green volume region) normalised by the mean of the two volumes. ii. Hausdorff distance, defined as the maximum nearest neighbor Euclidean distance (green arrow). iii. Surface DSC, defined as the intersection of two contours (yellow contour region) normalised by the mean surface of the two contours. After47.

When assessing the performance of segmentation algorithms in comparison to ground truth, a contingency table is often employed, featuring True Positive (TP), True Negative (TN), False Negative (FN), and False Positive (FP) values. In this context, positive and negative denote pixels that, according to the ground truth segmentation, are attributed to vessels and background respectively.

A review of the literature reveals varied metric reporting across studies (as evidenced in Tables 1, 2, 3, and 4). This section delves into the most frequently used performance metrics, elucidating their underlying theory, strengths, and weaknesses.

Overlap-Based Measures

Overlap-based measures are used to evaluate the similarity between the segmented result and the ground truth based on their overlapping regions. Such measures are the most widely utilised metrics, with all studies we reviewed reporting at least one overlap-based metric. However, they have some limitations when applied to vascular segmentation, which are discussed below. The Dice Similarity Coefficient (DSC) is one of the most common overlap-based metrics and calculates the spatial overlap of two segmentations; 26 of the 31 studies reviewed here utilise DSC. Given its properties, DSC is particularly used in vascular segmentation, where the vasculature accounts for a small fraction (e.g. around 3% in the brain) of the organ volume, leading to unbalanced data challenges48.

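In terms of the contingency-table counts, DSC is given by

$$\mathrm{DSC} = \frac{2\,TP}{2\,TP + FP + FN} \tag{1}$$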

Sensitivity, also known as TP Rate or Recall, is another overlap-based metric. It measures the proportion of actual positives (e.g., vessel voxels in the ground truth) that the model correctly identifies, and so provides insight into how much of the ground truth overlaps with the predicted positive segment. Specificity, on the other hand, measures the proportion of actual negatives (e.g., non-vessel areas) that are correctly identified. It reflects how much of the ground truth’s negative area overlaps with the predicted negative segment. These two measures are defined as follows:

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{2}$$

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{3}$$
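As an illustration of why overlap-based metrics suit unbalanced vascular data, the sketch below computes DSC, sensitivity, and specificity from two flattened binary label sequences. The function names, the toy data (3% vessel voxels), and the conventions for empty denominators are assumptions made for this example; accuracy is included only for contrast.

```python
def confusion_counts(pred, truth):
    """Count TP, TN, FP, FN for two equally long sequences of 0/1 labels."""
    tp = tn = fp = fn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1
        elif p:
            fp += 1
        elif t:
            fn += 1
        else:
            tn += 1
    return tp, tn, fp, fn


def overlap_metrics(pred, truth):
    """Return DSC, sensitivity, specificity and accuracy as a dict.

    Assumed conventions: DSC is 1.0 when both masks are empty; sensitivity and
    specificity are 0.0 when their denominators are zero.
    """
    tp, tn, fp, fn = confusion_counts(pred, truth)
    return {
        "dsc": 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 1.0,
        "sensitivity": tp / (tp + fn) if (tp + fn) else 0.0,
        "specificity": tn / (tn + fp) if (tn + fp) else 0.0,
        "accuracy": (tp + tn) / len(truth),
    }


# Toy volume (assumption): 100 voxels, of which 3 belong to vessels.
truth = [1] * 3 + [0] * 97
all_background = overlap_metrics([0] * 100, truth)
partial = overlap_metrics([1, 1, 0, 1] + [0] * 96, truth)
```

A prediction that labels every voxel as background reaches 97% accuracy and perfect specificity in this toy case, yet its DSC and sensitivity are both zero, which is why accuracy alone is uninformative when vessels occupy a small fraction of the volume.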

Another related measure is precision, often referred to as the positive predictive value (PPV). While not frequently utilised in medical image validation, it plays a role in determining the F-Measure. Precision is defined as

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{4}$$

whereas the F-Measure, also known as the F1 score, is the harmonic mean of precision and recall.

In 2020, Shit et al. developed centerline Dice (clDice), a topology-preserving measure and loss function for tubular structure segmentation, as a modification of DSC49. Their work highlighted that the DSC metric does not necessarily assess the connectedness of a segmentation, because Dice does not equally weight tubular structures with large, medium, and small radii. As connectivity is a crucial biological feature of vascular networks, bespoke metrics should be used to evaluate vascular segmentations. The authors demonstrated that, in real vascular datasets, training on a globally averaged loss causes a considerable bias towards the volumetric segmentation of big arteries compared to arterioles and capillaries (radius ranges e.g., 30 μm for arterioles and 5 μm for capillaries). To enable topology preservation, centerline Dice is formulated as follows:

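$$T_{prec}(S_P, V_L) = \frac{|S_P \cap V_L|}{|S_P|} \tag{5}$$

$$T_{sens}(S_L, V_P) = \frac{|S_L \cap V_P|}{|S_L|} \tag{6}$$

$$\mathrm{clDice}(V_P, V_L) = 2 \times \frac{T_{prec}(S_P, V_L) \times T_{sens}(S_L, V_P)}{T_{prec}(S_P, V_L) + T_{sens}(S_L, V_P)} \tag{7}$$

where the ground truth mask is $V_L$, the predicted segmentation mask is $V_P$, and their skeletons are $S_L$ and $S_P$, respectively.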
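The following sketch shows how centerline Dice can be evaluated when masks and skeletons are represented as sets of pixel coordinates. It is a simplified illustration, not the authors' or Shit et al.'s implementation: the skeletons are supplied by hand rather than computed by a skeletonisation algorithm, and the function names and toy geometry are assumptions.

```python
def set_dice(pred_mask, truth_mask):
    """Plain Dice between two sets of pixel coordinates."""
    return 2 * len(pred_mask & truth_mask) / (len(pred_mask) + len(truth_mask))


def cl_dice(pred_mask, truth_mask, pred_skeleton, truth_skeleton):
    """Centerline Dice from coordinate sets; skeletons are given by the caller.

    Assumed convention: returns 0.0 if either skeleton is empty.
    """
    if not pred_skeleton or not truth_skeleton:
        return 0.0
    # Topology precision: fraction of the predicted skeleton inside the truth mask.
    t_prec = len(pred_skeleton & truth_mask) / len(pred_skeleton)
    # Topology sensitivity: fraction of the truth skeleton inside the predicted mask.
    t_sens = len(truth_skeleton & pred_mask) / len(truth_skeleton)
    if t_prec + t_sens == 0:
        return 0.0
    return 2 * t_prec * t_sens / (t_prec + t_sens)


# Toy ground truth (assumption): one wide vessel (5 x 20 pixels) and one
# thin vessel (1 x 20 pixels), with hand-specified centerlines.
large = {(r, c) for r in range(5) for c in range(20)}
large_skeleton = {(2, c) for c in range(20)}
thin = {(10, c) for c in range(20)}

truth_mask = large | thin
truth_skeleton = large_skeleton | thin

# The prediction recovers the wide vessel perfectly but misses the thin one.
pred_mask = large
pred_skeleton = large_skeleton

dice_value = set_dice(pred_mask, truth_mask)
cl_dice_value = cl_dice(pred_mask, truth_mask, pred_skeleton, truth_skeleton)
```

In this toy case the plain Dice is about 0.91 while centerline Dice drops to about 0.67, reflecting that half of the ground-truth centerline length is missing even though most of the vessel area is recovered.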

Volume-Based Measures

These metrics focus on the volume or size of the segmented structures. As the name implies, Volume Difference (VD) is a typical volume-based metric that is based on the absolute difference in volumes of the segmented structure and the ground truth, usually normalised by the ground truth volume. Contrary to a simple volumetric subtraction, VD accounts for the volumetric overlap by considering voxels exclusive to each mask as well as those shared. This ensures that VD accurately reflects both the volume and the spatial agreement between the two segmentations. VD is formulated as follows:

$$\mathrm{VD} = \frac{|V_{pred} - V_{gt}|}{V_{gt}} \tag{8}$$

where $V_{pred}$ is the volume of the predicted segmentation and $V_{gt}$ is the volume of the ground truth.

Pair-Counting-Based Measures

Pair-counting-based measures are calculated from the correspondence between object pairs in the segmentation and the ground truth. One such metric is the Adjusted Rand Index (ARI), which is calculated as follows:

$$\mathrm{ARI} = \frac{\mathrm{RI} - E[\mathrm{RI}]}{\max(\mathrm{RI}) - E[\mathrm{RI}]} \tag{9}$$

where $E[\mathrm{RI}]$ is the expected value of the Rand Index under random labelling, and RI is the Rand Index, calculated as:

$$\mathrm{RI} = \frac{a + d}{a + b + c + d} \tag{10}$$

where $a$ is the number of pairs of objects that are in the same group in both the predicted and the ground truth segmentations and corresponds to the TPs; $d$ is the number of pairs of objects that are in different groups in both and corresponds to the TNs; and $b$ and $c$ correspond to FPs and FNs, respectively.
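A minimal sketch of the pair-counting computation is given below. The function names are assumptions; the adjusted index uses the standard closed form of the ARI expressed in pair counts, and the brute-force enumeration of pairs is only practical for toy inputs, not full volumes.

```python
from itertools import combinations


def pair_counts(pred, truth):
    """Count element pairs by agreement between two labelings.

    a: same group in both; b: same group in pred only;
    c: same group in truth only; d: different groups in both.
    """
    a = b = c = d = 0
    for i, j in combinations(range(len(pred)), 2):
        same_pred = pred[i] == pred[j]
        same_truth = truth[i] == truth[j]
        if same_pred and same_truth:
            a += 1
        elif same_pred:
            b += 1
        elif same_truth:
            c += 1
        else:
            d += 1
    return a, b, c, d


def rand_index(a, b, c, d):
    """Fraction of pairs on which the two labelings agree."""
    return (a + d) / (a + b + c + d)


def adjusted_rand_index(a, b, c, d):
    """Chance-corrected Rand Index in its pair-count closed form.

    Assumed convention: returns 1.0 when the denominator is zero
    (e.g. both labelings place every element in a single group).
    """
    denominator = (a + b) * (b + d) + (a + c) * (c + d)
    if denominator == 0:
        return 1.0
    return 2 * (a * d - b * c) / denominator


# Toy labelings (assumption): four voxels, 1 = vessel, 0 = background.
counts = pair_counts([1, 1, 1, 0], [1, 1, 0, 0])
ri = rand_index(*counts)
ari = adjusted_rand_index(*counts)
```

Here the two labelings agree on half of the pairs (RI = 0.5), but the ARI is 0, indicating agreement no better than expected by chance.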

Information-Theoretic-Based Measures

As the name implies, information-theoretic-based measures use information theory concepts to estimate the quality and performance of the segmentation. For instance, one such measure called Mutual Information (MI) is calculated based on the shared information between the segmented result and the ground truth. For two discrete random variables X (segmentation result) and Y (ground truth), the MI is defined as:

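$$\mathrm{MI}(X, Y) = \sum_{x \in X} \sum_{y \in Y} p(x, y) \log \frac{p(x, y)}{p(x)\,p(y)} \tag{11}$$

where

- $p(x, y)$ is the joint probability distribution function of $X$ and $Y$;
- $p(x)$ is the marginal probability distribution function of $X$;
- $p(y)$ is the marginal probability distribution function of $Y$.

$X$ might represent the predicted segmentation, where a specific value $x$ taken by $X$ could be either “object” (e.g., a vessel) or “background”, and $Y$ represents the ground truth (actual segmentation), where a specific value $y$ taken by $Y$ could similarly be either “object” or “background”.

In that context:

- $p(x = \text{object}, y = \text{object})$ corresponds to the probability of a TP.
- $p(x = \text{background}, y = \text{background})$ corresponds to the probability of a TN.
- $p(x = \text{object}, y = \text{background})$ corresponds to the probability of a FP.
- $p(x = \text{background}, y = \text{object})$ corresponds to the probability of a FN.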
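For a binary segmentation, the joint distribution is fully determined by the four contingency-table counts, so mutual information can be sketched directly from them. The function name and the choice of natural logarithm (giving nats) are assumptions made for this example.

```python
import math


def mutual_information(tp, tn, fp, fn):
    """Mutual information (in nats) between predicted and ground-truth labels."""
    n = tp + tn + fp + fn
    # joint[(x, y)]: x is the predicted label, y the ground-truth label (1 = vessel).
    joint = {(1, 1): tp / n, (0, 0): tn / n, (1, 0): fp / n, (0, 1): fn / n}
    p_x = {1: (tp + fp) / n, 0: (tn + fn) / n}  # marginal of the prediction
    p_y = {1: (tp + fn) / n, 0: (tn + fp) / n}  # marginal of the ground truth
    mi = 0.0
    for (x, y), p_xy in joint.items():
        if p_xy > 0:  # terms with zero joint probability contribute nothing
            mi += p_xy * math.log(p_xy / (p_x[x] * p_y[y]))
    return mi


# Toy counts (assumption): a prediction unrelated to the truth, and a perfect one.
mi_independent = mutual_information(25, 25, 25, 25)
mi_perfect = mutual_information(50, 50, 0, 0)
```

With balanced classes, a prediction that is statistically independent of the ground truth yields zero mutual information, while a perfect prediction yields log 2 nats, the entropy of the ground-truth labels.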

Probabilistic-Based Measures

Probabilistic-based measures evaluate the performance of segmentation and classification algorithms based on predicted probabilities rather than strict binary or discrete decisions. In binary classification, for example, a probabilistic model may assign a probability indicating the likelihood that an item belongs to the positive class rather than simply categorizing it as positive or negative.

The ROC curve (Receiver Operating Characteristic) stands as a prime example of a probabilistic-based measure. It graphs the TP rate (sensitivity) against the FP rate (1 − specificity) over a spectrum of decision thresholds. This curve offers a holistic perspective on a model’s capability to distinguish between classes.

The Area Under the Curve (AUC) encapsulates the model’s overall discriminative prowess between positive and negative classes. From a mathematical standpoint, the AUC represents the integral of the ROC curve:

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{TPR}(t)\,\mathrm{d}\big(\mathrm{FPR}(t)\big) \tag{12}$$

where $\mathrm{TPR}(t)$ and $\mathrm{FPR}(t)$ are the TP rate and FP rate at a given threshold $t$, and the integration runs over FP rates from 0 to 1. This metric or variations of it appear in 3 of the 31 models reviewed in Tables 1, 2, 3, and 4.
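The threshold sweep behind the ROC curve, and the trapezoidal approximation of the area under it, can be sketched as follows. The function names and toy scores are assumptions; the sketch requires at least one positive and one negative label.

```python
def roc_points(scores, labels):
    """Return ROC points (FP rate, TP rate), one per distinct threshold.

    A voxel is predicted positive when its score is >= the threshold.
    The curve starts at (0, 0) and ends at (1, 1).
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc_trapezoid(points):
    """Area under a piecewise-linear ROC curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Toy data (assumption): predicted vessel probabilities and true labels.
scores = [0.9, 0.8, 0.3, 0.1]
labels = [1, 0, 1, 0]
auc = auc_trapezoid(roc_points(scores, labels))
```

For these toy scores the AUC is 0.75, which matches the fraction of (vessel, background) pairs in which the vessel voxel receives the higher score (3 of 4).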

Spatial Distance-Based Measures

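Spatial distance-based measures focus on the spatial discrepancies between the segmented structures and the ground truth. For instance, the 95th Percentile Hausdorff Distance (95HD) assesses the 95th percentile of the distances between points in one segmentation and their nearest points in the other, which makes it less sensitive to isolated outliers than the maximum (Hausdorff) distance. More formally, if we define $D(A, B)$ as the set of all distances from points in set $A$ to their nearest points in set $B$, then:

$$\mathrm{95HD}(A, B) = P_{95}\big(D(A, B) \cup D(B, A)\big) \tag{13}$$

where $P_{95}$ denotes the 95th percentile.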
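The sketch below contrasts the maximum Hausdorff distance with a 95th-percentile variant on small point sets. It rests on several assumptions: the two directed distance sets are pooled before taking the percentile (other conventions take the maximum of two directed percentiles), the percentile uses the nearest-rank rule rather than interpolation, and the brute-force nearest-neighbour search is only suitable for toy inputs.

```python
import math


def directed_distances(source, target):
    """Distance from each point in source to its nearest point in target."""
    return [min(math.dist(p, q) for q in target) for p in source]


def percentile_nearest_rank(values, q):
    """q-th percentile (0 < q <= 100) using the nearest-rank rule."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def hausdorff_max(a, b):
    """Classical (maximum) symmetric Hausdorff distance."""
    return max(directed_distances(a, b) + directed_distances(b, a))


def hausdorff_95(a, b):
    """95th percentile of the pooled directed nearest-neighbour distances."""
    pooled = directed_distances(a, b) + directed_distances(b, a)
    return percentile_nearest_rank(pooled, 95)


# Toy contours (assumption): identical 20-point lines, plus one stray
# false-positive point in the prediction.
truth_points = [(i, 0) for i in range(20)]
pred_points = truth_points + [(0, 10)]

hd = hausdorff_max(pred_points, truth_points)
hd95 = hausdorff_95(pred_points, truth_points)
```

The single stray point drives the maximum Hausdorff distance to 10, whereas the 95th-percentile version remains 0 for this toy case, illustrating its robustness to isolated outliers.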

The Average Volumetric Distance (AVD), on the other hand, is typically computed as the absolute difference in volumes between the predicted segmentation $V_{pred}$ and the ground truth $V_{gt}$, normalised by the volume of the ground truth. Mathematically, it can be expressed as:

$$\mathrm{AVD} = \frac{|V_{pred} - V_{gt}|}{V_{gt}} \tag{14}$$

Boundary-based techniques are another important subcategory of distance-based measures50. Normalised surface Dice, mean average surface distance, and average symmetric surface distance are three key metrics, especially in the field of vessel segmentation. Surface Dice is a measurement that evaluates the similarity between the segmented surface and a ground truth surface. Normalised surface Dice takes this a step further by normalising the measurement and making it less sensitive to size differences between the predicted segmentation and the ground truth. This is particularly useful for segmenting smaller structures, or where there is size variability between subjects. Surface distance is another measurement; it indicates the shortest distance of each point on the surface of the segmented structure to the surface of the ground truth and, averaged symmetrically over both surfaces, is calculated as follows:

$$\mathrm{ASSD}(A, B) = \frac{1}{N_A + N_B}\left(\sum_{a \in A} \min_{b \in B} d(a, b) + \sum_{b \in B} \min_{a \in A} d(b, a)\right) \tag{15}$$

where $d(a, b)$ is the Euclidean distance between points $a$ and $b$, and $N_A$ and $N_B$ are the numbers of surface points in $A$ and $B$, respectively.
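A minimal sketch of a symmetric average surface distance on small point sets follows. The function name and toy contours are assumptions, surface extraction from a mask is not shown, and the brute-force nearest-point search is intended for illustration only.

```python
import math


def average_symmetric_surface_distance(surface_a, surface_b):
    """Mean nearest-point distance, accumulated in both directions.

    Each argument is a non-empty list of surface point coordinates.
    """
    a_to_b = sum(min(math.dist(p, q) for q in surface_b) for p in surface_a)
    b_to_a = sum(min(math.dist(q, p) for p in surface_a) for q in surface_b)
    return (a_to_b + b_to_a) / (len(surface_a) + len(surface_b))


# Toy contours (assumption): two parallel 5-point lines, 2 pixels apart.
contour_truth = [(i, 0) for i in range(5)]
contour_pred = [(i, 2) for i in range(5)]
assd = average_symmetric_surface_distance(contour_pred, contour_truth)
```

For two parallel contours offset by 2 pixels, every nearest-point distance is 2, so the average is 2.0.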

Of the 31 papers reviewed, 5 use a spatial distance metric.

The use of the appropriate assessment metric is crucial, as each measurement has specific biases dependent on the characteristics of the segmented structures. Whilst our review of the literature shows that DSC or other generalised overlap measures are still the most frequently used metrics, more specialised vascular-specific metrics are available and are becoming more widely implemented. The evaluation metric can strongly influence the choice of the final optimal model and hence should be chosen in accordance with the nature of the segmentation task, taking into consideration the downstream analyses to be conducted on the segmentations and the biological implications of a particular metric. For example, for vascular structures, quantitative analyses of network topology for flow simulations are common end goals for segmentation3,20. In such cases, meshing or skeletonising are common steps after segmentation. Meshing or skeletonisation algorithms are often highly sensitive to holes or cavities in the segmentations, or to breaks in connectivity in vascular components51. Thus, evaluation methods that are highly sensitive to connectivity breaks (e.g. clDice) should be implemented in these cases.

2.3. Deep learning models for lung, kidney, cardiovascular, and cerebral vessel segmentation

In this section, we delve into the latest deep-learning (DL) strategies used for blood vessel segmentation. We’ve grouped the DL-based techniques for segmenting vessels under three primary network structures: Convolutional Neural Networks, Generative Adversarial Networks, and Vision Transformers.

2.3.1. Convolutional neural network based models (CNNs)

Traditional CNNs have been foundational in vessel segmentation before the widespread adoption of architectures like U-Net. These neural networks, based on fundamental concepts of learning features and building hierarchical representations, leverage the presence of spatial structures and patterns inherent in image data. Unlike the Fully Convolutional Networks (FCN) that perform dense predictions, traditional CNNs often operate on patches or regions, aiming at classifying central pixels or aggregates.

Yao et al. used a 2D CNN architecture to extract blood vessels from fundus images83. The output of their CNN architecture is the confidence level of each pixel being a blood vessel. In 2018, Tetteh et al. developed an architecture called DeepVesselNet. Their design uses 2-D orthogonal cross-hair filters that exploit 3-D context information while minimizing computational demands. Additionally, they introduced a class-balancing cross-entropy loss function with false positive rate correction, specifically tailored to address the significant class imbalances and high false positive rates observed with traditional loss functions56.

Cervantes-Sanchez et al. trained a multilayer perceptron with X-ray Coronary Angiography (XCA) images enhanced by using Gaussian filters in the spatial domain and Gabor filters in the frequency domain for segmentation of coronary arteries in X-ray angiograms84. Nasr-Esfahani et al. presented a multi-stage model where a patch around each pixel is fed into a trained CNN to determine whether the pixel belongs to a vessel or to the background85. Samuel and Veeramalai proposed a two-stage vessel extraction framework to learn well-defined vessel features from global features (learned by the pre-trained VGG-16 base network) using the Vessel Specific Convolutional (VSC) blocks, Skip chain Convolutional (SC) layers, and feature map summations86. In 2021, Iyer et al. developed a new CNN for angiographic segmentation: AngioNet, which combines an Angiographic Processing Network (APN) with DeepLabv3+ because of its ability to approximate more complex functions. The APN was tailored to tackle several challenges specific to angiographic segmentation, including low-contrast images and overlapping bony structures87.

Fully convolutional neural network (FCN) based models: U-Net and its variants

Fully Convolutional Networks (FCNs) are a subtype of CNNs specialised in semantic segmentation tasks. Deep learning techniques have revolutionised medical image segmentation, achieving precise pixel-level classification with the help of FCNs89. FCNs are designed to produce an output of the same size as the input (i.e., a pixel-wise map). They replace the dense layers with convolutional layers. Expanding upon the foundation of FCNs, many researchers have pioneered advanced 2D fully convolutional neural networks, including U-Net, SegNet, DeepLab, and PSPNet88,90–92. Khan et al. presented a fully convolutional network called RC-Net for retinal image segmentation. The network itself is relatively small, and the number of filters per layer is optimised to reduce feature overlap and complexity compared with alternatives elsewhere in the literature. In the model, they kept pooling operations to a minimum and integrated skip connections into the network to preserve spatial information93.

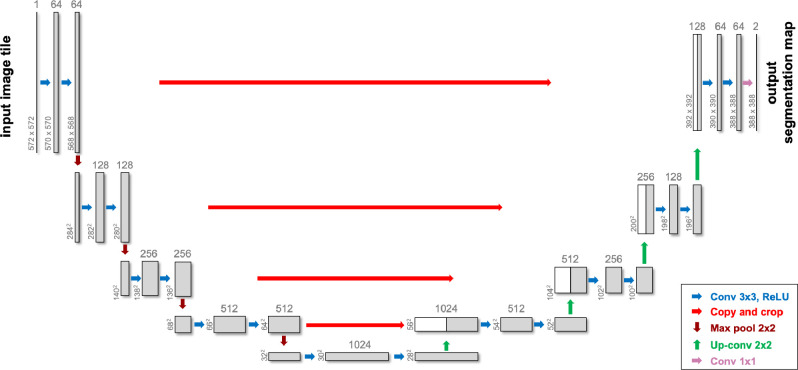

U-Net, distinguished by its unique U-shaped architecture, has become particularly popular for medical image segmentation tasks, especially when the dataset is limited88. It leverages skip-layer connections combined with encoder-decoder multi-scale features, enhancing segmentation precision. An example of a U-Net architecture is illustrated in Figure 3.

Figure 3.

A sample U-Net architecture used in medical image segmentation tasks - after88

Given U-Net’s success in medical image segmentation, Livne et al. streamlined it by halving the channels in each layer, coining it “half U-Net” for brain vessel segmentation52. Even though there has been extensive exploration of 2D methodologies in the literature (see Tables 1, 2, 3, and 4, especially in coronary vessel segmentation), relying solely on 2D segmentation networks may overlook inter-slice relationships, leading to subpar segmentation82. Additionally, given the nonplanar nature of blood vessels, attempting to segment them without adequate volumetric information, such as on a single slice or in 2.5D scenarios, poses challenges. Although direct comparison of different studies is hampered by the use of different datasets, the results from the studies detailed in Tables 1, 2, 3, and 4 suggest that 3D models outperform 2D models. Specifically, 3D models achieve an average Dice score of 0.83, compared to the 0.79 average for 2D models, underscoring the significance of inter-slice relationships.

Consequently, researchers have proposed 3D segmentation networks like 3D U-Net, V-Net, and VoxResNet94–96. Huang et al. utilised 3D U-Net with data augmentation for liver vessel segmentation and introduced a weighted Dice loss function to address the voxel imbalance between vessels and other tissues97.

To enhance the segmentation abilities of the U-Net network, several modifications have been proposed. One notable advancement is the attention U-Net, introduced by Oktay et al., which is designed to suppress irrelevant regions in an input image while highlighting salient features useful for a specific task98. Bahdanau et al. originally conceived the attention mechanism to tackle the challenges arising from using a fixed-length encoding vector, which restricted the decoder’s access to input information99. This mechanism mimics human attention, enabling the model to concentrate on specific input areas while generating an output. The core idea behind this mechanism is to assign different weights to different parts of the input data, indicating how much “attention” each part should receive relative to others when producing a specific output.
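The weighting idea can be made concrete with a small sketch. It scores each input part against a query with a scaled dot product and normalises the scores with a softmax; this scoring function is swapped in here for brevity, whereas Bahdanau et al. and the attention U-Net use additive scoring functions. All names and values are illustrative assumptions.

```python
import math

def attention_weights(query, keys):
    """Softmax-normalised relevance of each key vector to the query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Weighted sum of the value vectors, using the attention weights."""
    weights = attention_weights(query, keys)
    width = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(width)]
```

The weights are non-negative and sum to one, so the output is a convex combination of the value vectors in which the parts most aligned with the query contribute most.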

Building upon the U-Net framework, Zhou and colleagues implemented U-Net++ with the premise that the network would face a more straightforward learning challenge when the feature maps from the decoder and encoder networks are semantically similar100. With this goal in mind, they reconfigured the skip pathways to diminish the semantic disparity between the encoder’s and decoder’s feature maps.

Sanches et al. melded 3D U-Net and Inception, dubbing it “Uception” for brain vessel segmentation101. Dong and colleagues introduced a cascaded residual attention U-Net, termed CRAUNet, for a layered analysis of retinal vessel segmentation. The architecture capitalizes on the advantages of U-Net, coupled with cascaded atrous convolutions and residual blocks that are further enhanced by squeeze-and-excitation features102. Drawing inspiration from U-Net and DropBlock, Guo et al. introduced the Structured Dropout U-Net (SD-Unet) for coronary vessel segmentation. This design amalgamates the U-Net and DropBlock frameworks to omit specific semantic details, thereby preventing the network from overfitting103. In 2021, Pan et al. adopted a 3D Dense-U-Net model and replaced the standard DSC loss function with the focal loss function to tackle the issue of class imbalance and achieve fully automated segmentation of the coronary artery104. In 2022, Wu and colleagues introduced the Multi-Scale Interactive U-Net (MSI-U-Net), an extension of the 3D U-Net that improves the precision of segmenting smaller vessels82. They offered a strategy for interacting with information at multiple scales, where features are transferred among vessels of varying sizes through shared convolution kernel parameters, strengthening the relationship between small, medium, and large vessels in lung CT scans.

nnU-Net105 is one of the most widely utilised frameworks for vessel segmentation tasks, consistently delivering impressive results. It is considered the state-of-the-art for a wide variety of medical image segmentation tasks, with the original nnU-Net or a variant winning international biomedical challenges across 11 tasks105,106. It has also recently been proposed as the network for the standardised evaluation of continual segmentation106. Rather than introducing a novel model architecture, nnU-Net is designed to autonomously adapt and configure the entire segmentation framework, encompassing preprocessing, network architecture, training, and post-processing, to suit any new task. This deep learning framework is known for its flexibility, scalability, and ease of use in tackling various medical imaging segmentation problems. For this reason, it is frequently used to benchmark new model approaches, or combined with other models in ensembles to improve performance.

It should be noted that, as Moccia et al.4 and Garcia et al.57 recently mentioned, the success of segmentation approaches is highly influenced not only by the algorithm but also by factors such as the imaging modality, the presence or absence of noise or artifacts, and the anatomical region of interest. This makes direct comparisons among the studies in the literature challenging. In addition to the use of different modalities, from CTA to MRA, only 29% of the papers reviewed here used publicly available data, further complicating efforts to replicate or compare their findings.

The data presented in Tables 1, 2, 3, and 4 reveals a predominant use of U-Net or variants of CNNs in the majority of studies, accounting for 80% of the cases.

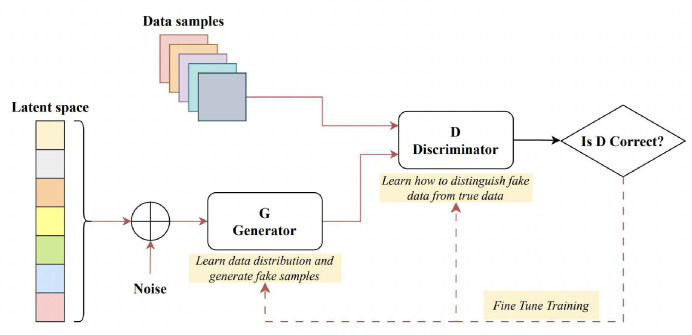

2.3.2. Generative adversarial networks (GANs)

Goodfellow et al. introduced the Generative Adversarial Network (GAN) for synthesizing images from random noise107. GANs represent generative models designed to approximate real data distributions, enabling them to generate novel image samples. GAN models are commonly employed for tasks such as image-to-image translation (cross-modality synthesis), image synthesis, and data augmentation. Comprising two distinct networks, a generator and a discriminator, GANs function by pitting these networks against each other during training. A sample GAN architecture can be seen from Figure 4. The generator crafts artificial images to deceive the discriminator, while the discriminator endeavours to distinguish genuine images from fabricated ones, a process termed “adversarial training”. This methodology can be adapted to train segmentation networks, where a generator is tasked with generating segmented images, and the discriminator differentiates between the predicted segmentation maps and the authentic ones. This modification prompts the segmentation network to yield more anatomically accurate segmentation maps108,109. Conditional GANs (cGANs), on the other hand, are a modified version where the generator creates images depending on specific conditions or inputs, which can be useful in vessel segmentation.

Figure 4.

A sample generative adversarial network architecture - after115

Son et al. introduced a technique employing generative adversarial training to create retinal vessel maps in the context of vascular segmentation. Their method enhanced the segmentation efficacy by employing binary cross-entropy loss during the training of the generator110. In 2020, K.B. Park et al. introduced a novel architecture called M-GAN, which aimed to enhance the accuracy and precision of retinal blood vessel segmentation by combining the conditional GAN with deep residual blocks111. The M-generator incorporated two deep FCNs interconnected through short-term skip and long-term residual connections, supplemented by a multi-kernel pooling block. This setup ensured scale-invariance of vessel features across the dual-stacked FCNs. They integrated a set of redesigned loss functions to optimise performance, encompassing BCE, LS, and FN losses. Recently, Amran et al. introduced an adversarial DL-based model for automatic cerebrovascular vessel segmentation112. Their BV-GAN model utilised attention techniques, allowing the generator to focus on voxels more likely to contain vessels. This is achieved by leveraging latent space features derived from a prior vessel segmentation map, effectively addressing the issue of imbalance settings. In related research, Subramaniam et al. introduced a 3D GAN-based approach for cerebrovascular segmentation113. While their method used GANs for dataset augmentation (generating an extensive set of examples with self-supervision), Amran et al. aimed to enhance the U-NET-based segmentation directly through the GAN technique. Finally, earlier in 2023, Xie et al. introduced the MLP-GAN for brain vessel segmentation114. This method divided a 3D brain vessel image into three separate 2D views (sagittal, coronal, and axial) and processed each through distinct 2D conditional GANs. Every 2D generator incorporated a modified skip connection pattern integrated with the MLP-Mixer block. This design enhances the ability to grasp global details.

2.3.3. Vision transformers

Transformers are primarily designed for sequence-to-sequence tasks but have shown significant promise in various domains, including computer vision. Vision Transformers (ViTs) are a type of neural network architecture that use transformer mechanisms to process images. First presented by Google Research in 2020, Vision Transformers have shown competitive results with traditional CNNs on image classification tasks, even surpassing them in some benchmarks. In Transformers, attention allows the model to consider other words in the input sequence when encoding a particular word, leading to the capture of long-range dependencies. Vision Transformers (ViTs) use the same principle on image patches, allowing the model to consider distant parts of an image when encoding a particular patch. Following their success in the realm of computer vision, researchers began to explore the potential of vision transformers for medical image segmentation tasks116.

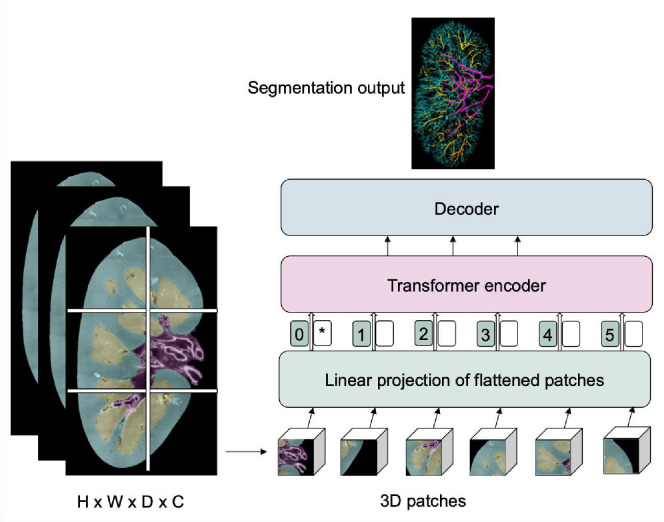

Instead of being processed pixel by pixel, images are divided into fixed-size, non-overlapping patches, such as 16×16 pixels, for medical image segmentation using ViTs. These patches are flattened and linearly projected into vectors, a procedure called tokenization. Subsequently, to preserve the spatial context, positional embeddings are added to the tokenised patches. These enhanced embeddings pass through the layers of the standard transformer encoder. To form a segmentation mask, a decoding method, which might be an upsampling layer or another transformer, is applied to produce labels for each pixel in the image. A sample vision transformer framework for vasculature segmentation is illustrated in Figure 5.
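The patch-splitting and embedding steps described above can be sketched without any deep learning library. The example below is a dependency-free illustration in which the projection matrix and positional embeddings are supplied by the caller (in a trained model they would be learned); the function names and the data layout are assumptions.

```python
def patchify(image, patch):
    """Split a 2D image (list of rows) into flattened, non-overlapping patches.

    Patches are returned in row-major order over the patch grid.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of the patch size")
    return [
        [image[i + r][j + c] for r in range(patch) for c in range(patch)]
        for i in range(0, height, patch)
        for j in range(0, width, patch)
    ]

def embed(patches, weight, positions):
    """Project each flattened patch linearly, then add its positional embedding.

    weight: one list of patch*patch coefficients per output dimension.
    positions: one embedding vector per patch.
    """
    tokens = []
    for patch_vector, position in zip(patches, positions):
        projected = [sum(x * w for x, w in zip(patch_vector, row)) for row in weight]
        tokens.append([p + e for p, e in zip(projected, position)])
    return tokens
```

A 4×4 image split with `patch=2` yields four tokens of four values each; a 512×512 slice with 16×16 patches would yield 1024 tokens of 256 values before projection.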

Figure 5.

A sample vision transformer architecture for renal vasculature segmentation

In 2021, Chen et al. came up with a model called TransU-Net, where a hybrid CNN-Transformer architecture is created to leverage both detailed high-resolution spatial information from CNN features and the global context encoded by Transformers by treating the image features as sequences117.

Pan et al. introduced the Cross Transformer Network (CTN), a new approach designed to understand 3D vessel features while considering their overall structure. CTN accomplishes this by combining the U-Net and transformer modules, allowing the U-Net to be more globally aware and better handle issues like disconnected or imprecise segmentation118.

Yu et al. introduced two new deep learning modules, called CAViT (Channel Attention Vision Transformer) and DAGC (Deep Adaptive Gamma Correction), to solve the problem of retinal vessel segmentation. CAViT combines two components: efficient channel attention (ECA) and the vision transformer (ViT). The ECA module examines how different parts of the image relate to each other, while the ViT identifies important edges and structures in the entire image. On the other hand, the DAGC module figures out the best gamma correction value for each input image. It does this by training a CNN model together with the segmentation network, ensuring that all retinal images have the same brightness and contrast settings119.

Zhang and his team, on the other hand, introduced a model named TiM-Net for efficient retinal vessel segmentation120. To capitalize on multiscale data, TiM-Net takes in multiscale images after maximum pooling as its inputs. Following this, they incorporated a dual-attention mechanism after the encoder to minimize the effects of noisy features. Concurrently, they utilised the MSA mechanism from the Transformer module for feature re-coding to grasp the extensive relationships within the fundus images. Finally, a weighted SideOut layer was created to complete the final segmentation.

The Swin Transformer is a modified version of the Vision Transformer (ViT), designed to further adapt the transformer structure for image-related tasks and boost its efficiency. “Swin” gets its name from “Shifted Window,” reflecting a key feature of its design. In July 2023, Wu and his team developed the Inductive BIased Multi-Head Attention Vessel Net (IBIMHAV-Net)121. The architecture is formed by extending the Swin Transformer to 3D and merging it with a potent mix of convolution and self-attention techniques. In their approach, they used voxel-based embedding instead of patch-based, to pinpoint exact liver vessel voxels, while also utilizing multi-scale convolution tools to capture detailed spatial information.

The analysis of Tables 1, 2, 3, and 4 indicates a less frequent application of GANs and transformers (around 20%), suggesting that while U-Net and CNNs are the preferred methods in the field, there is still room for exploration and potential growth in the utilisation of GANs and transformers for vessel segmentation.

3. nnU-Net for kidney vessel segmentation

From the above review of the literature, we concluded that segmentation of blood vessels from HiP-CT data should be initially attempted using nnU-Net105. As nnU-Net is one of the most widely utilised frameworks for vessel segmentation tasks and has consistently delivered impressive results, we felt that this would provide the initial baseline against which any future development of more HiP-CT-specific frameworks should be benchmarked. Therefore, we prepared a training dataset from three different human kidneys, imaged with HiP-CT and semi-manually segmented by expert annotators. The goal was to assess how much training data is needed to provide adequate segmentation for a single kidney but also to investigate if nnU-Net trained on a subset of kidney data would generalise to a new kidney dataset given the high inter-sample variability seen between human organs.

In this section, we describe the HiP-CT kidney datasets, including their acquisition protocol and the segmentation process (subsection 3.1). Following that, we present detailed information about the nnU-Net framework configuration employed (subsection 3.2), and finally, we present the results of applying nnU-Net to kidney vascular segmentation of HiP-CT data (subsection 3.3).

3.1. Hierarchical phase-contrast tomography (HiP-CT) kidney dataset

Three human kidneys were used to create the training dataset, termed kidney 1, kidney 2 and kidney 3. Kidney 1 and kidney 3 were collected from donors who consented to body donation to the Laboratoire d’Anatomie des Alpes Françaises before death. Kidney 2 was obtained after clinical autopsy at the Hannover Institute of Pathology at Medizinische Hochschule, Hannover (Ethics vote no. 9621 BO K 2021). The transport and imaging protocols were approved by the Health Research Authority and Integrated Research Application System (HRA and IRAS) (200429) and the French Health Ministry. The post-mortem study was conducted according to the Quality Appraisal for Cadaveric Studies scale recommendations122. Sample preparation and scanning protocols are described in the references15,20,123. Basic scan parameters and demographic information are provided in Table 5.

Table 5.

The size of the kidney volumes from the dataset used in the experiments together with their gender and resolution information

| Donor identifier | Experiment name | Dataset size (x, y, z) (pixels) | Gender | Age | Scanning voxel size (μm) | Scan energy (keV) | Binned voxel size (μm) |
|---|---|---|---|---|---|---|---|
| LADAF-2021-17 | Kidney 1 | 1303, 912, 2279 | M | 63 | 25.0 | 81 | 50.0 |
| S20-28 | Kidney 2 | 1041, 1511, 2217 | M | 84 | 25.0 | 88 | 50.0 |
| LADAF-2020-27 | Kidney 3 | 1706, 1510, 500 | F | 94 | 25.08 | 93 | 50.16 |

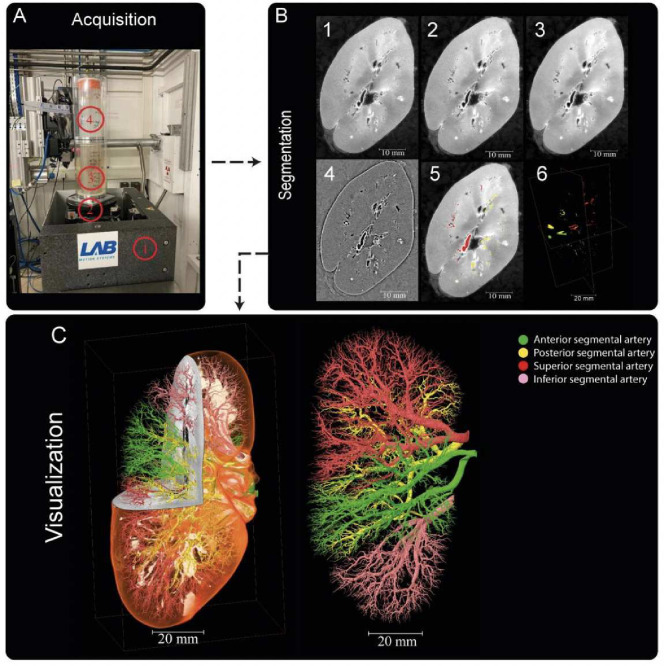

The segmentation of the three kidneys was carried out in Amira v2021.1. The reconstructed raw image data (Figure 6 B1) first underwent ×2 average binning (Figure 6 B2) from the acquired resolution (ca. 25μm) to ca. 50μm. A 3D median filter was then applied (3 iterations, 26-voxel neighbourhood; Figure 6 B3), followed by background detection correction with default parameter settings to visually enhance the appearance of the vessels (Figure 6 B4). Segmentation was performed in a semi-manual fashion using the Amira magic wand tool, an interactive 3D region-growing tool. With this tool, annotators select a seed voxel within a vessel in a slice (slices can be in any one of three orthogonal directions); in addition, the annotator selects and refines a combination of intensity threshold, contrast threshold and hand-drawn limits, which together specify the stopping criteria of the 3D region growing. In some cases, it is necessary to draw manually with a paintbrush tool, slice by slice, in locations where vessels are infilled with blood or have largely collapsed.

To provide assurance of labelling quality, an expert annotation validation process was implemented. First, an independent, experienced annotator conducted a 3D proofreading of the binary labels, filling in any missing vessel labels in the three orthogonal planes. Next, five 2D circular regions of the image were selected at random, and a third annotator marked all the labelled regions within them (one mark per region; these labelled regions are cross-sections of the vessels). The third annotator then counted the true positives (correctly segmented vessels) and false negatives (missed vessels); false positives are rare and easily removed owing to the connected nature of the arterial tree. This provides a measure of the proportion of vessels that have been traced or missed and, by sampling random areas of the dataset, directs the annotators to regions that may be poorly segmented. This triple validation process was iterated to achieve the final segmentations, which can be accessed via124.

It should be noted that with the binning, median filter and manual approach, vessels with diameters as small as 1–2 pixels could be segmented. Given the resolution of the images and the location of these vessels within the vascular tree, they represent interlobular arteries within the renal vascular tree and have been estimated, from higher-resolution HiP-CT of the same kidney, to be two branching generations from the kidney glomeruli (where the capillary bed is found). The samples also come from a diverse age range (63–94 years) and include two male donors and one female donor. Finally, in the case of kidney 2 (S20–28), the donor’s cause of death was COVID-19, which can cause microthrombi in the renal vasculature; these appear as hyperintensities, particularly around the edge of the kidney cortex, and can be seen in Supplementary movies (1–3), which show slice-by-slice views of each kidney.
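The counting step of the annotation validation can be expressed as a simple proportion. The sketch below pools true positive and false negative counts over the sampled circular regions and flags regions with a low traced fraction for re-annotation. The function names, the example counts, and the 0.9 cut-off are all hypothetical; the source does not state a numerical acceptance threshold.

```python
def traced_fraction(region_counts):
    """Fraction of vessel cross-sections that were labelled, pooled over regions.

    region_counts: list of (true_positives, false_negatives) pairs, one per
    sampled circular region.
    """
    tp = sum(t for t, _ in region_counts)
    fn = sum(f for _, f in region_counts)
    if tp + fn == 0:
        raise ValueError("no vessel cross-sections were counted")
    return tp / (tp + fn)

def regions_to_revisit(region_counts, minimum=0.9):
    """Indices of regions whose own traced fraction is below `minimum` (hypothetical cut-off)."""
    return [
        i for i, (t, f) in enumerate(region_counts)
        if t + f > 0 and t / (t + f) < minimum
    ]
```

With illustrative counts `[(18, 2), (20, 0), (9, 3), (15, 0), (12, 1)]`, 74 of 80 cross-sections are traced and only the third region falls below the cut-off.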

Figure 6.

Processing pipeline for HiP-CT imaging and segmentation of human kidney vasculature. (A) Setup for image acquisition using HiP-CT at BM05: 1) tomographic stage, 2) platform, 3) sample, 4) reference sample. (B) Image processing pipeline: 1) a 2D reconstructed image at 25 μm³/voxel resolution, 2) binning the image by 2, 3) applying a 3D median filter to increase the signal-to-noise ratio, 4) image normalization using background detection correction, 5) segmentation and thresholding, 6) labelling the four main arterial branches. (C) 3D rendering of the segmented vascular network of a human kidney. Each of the four main branches of the renal artery entering the kidney is colour-coded. Figure after20

The number of slices and the size of each slice in the segmentations were as follows (Z, X, Y): kidney 1: (2279, 1303, 912); kidney 2: (2217, 1041, 1511); and kidney 3: (501, 1706, 1510). The network topology automatically generated for the 3D full-resolution configuration in experiment 1 had a patch size (Z, X, Y) of [112,112,192], a batch size of 2, and a number of pooling operations per axis (Z, X, Y) of [4,4,5].
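The relationship between the patch size and the number of pooling operations can be checked with a short calculation. The sketch assumes that each pooling operation halves the corresponding axis, which is the usual convention for U-Net encoders but is stated here as an assumption rather than taken from the source; the function name is hypothetical.

```python
def bottleneck_shape(patch_size, pools_per_axis):
    """Feature-map size at the deepest encoder level, assuming stride-2 pooling."""
    shape = []
    for size, pools in zip(patch_size, pools_per_axis):
        factor = 2 ** pools
        if size % factor:
            raise ValueError(f"axis of size {size} is not divisible by {factor}")
        shape.append(size // factor)
    return shape
```

For the patch size [112, 112, 192] and pooling counts [4, 4, 5], the axes are divided by 16, 16 and 32, giving a bottleneck of [7, 7, 6] voxels. The extra pooling step on the longest axis keeps the deepest feature map close to isotropic.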

3.2. nnU-Net framework

Preprocessing.

Image preprocessing is a necessary step in machine learning, especially for image segmentation tasks. Notably, this step is essential in deep learning since it prepares the input data for effective model training and ensures that the model can learn meaningful patterns and relationships from the data. Proper preprocessing can improve model performance, generalisation, and robustness in handling real-world data. nnU-Net includes some automated preprocessing steps as part of its data preparation pipeline. These preprocessing steps include data augmentation (rotations, scaling, gaussian noise, gaussian blur, brightness, contrast, simulation of low resolution, gamma correction and mirroring), intensity normalization (global dataset percentile clipping, z-score with global foreground mean, z-score with per image mean), image and annotation resampling strategies (in-plane with third-order spline, out-of-plane with the nearest neighbour, third-order spline), and image target spacing (lowest resolution axis tenth percentile, axes median, median spacing for each axis).
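Two of the intensity normalisation options listed above can be sketched in a few lines: a z-score using per-image statistics, and clipping to dataset-level bounds followed by a z-score using dataset-level statistics. This is an illustration of the idea on a flat list of voxel intensities, not nnU-Net's implementation; the function names are hypothetical, and the clipping bounds and global statistics are supplied by the caller (in practice they would be derived from percentiles and foreground voxels of the training set).

```python
import statistics

def zscore_per_image(voxels):
    """Z-score using the mean and standard deviation of this image only."""
    mean = statistics.fmean(voxels)
    std = max(statistics.pstdev(voxels), 1e-8)  # guard against constant images
    return [(v - mean) / std for v in voxels]

def clip_and_zscore_global(voxels, low, high, global_mean, global_std):
    """Clip to dataset-level bounds, then z-score with dataset-level statistics."""
    std = max(global_std, 1e-8)
    return [(min(max(v, low), high) - global_mean) / std for v in voxels]
```

Per-image normalisation removes brightness differences between scans, whereas the global variant preserves them, which matters when absolute intensity carries information.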

Architecture.

It is important to mention that the nnU-Net framework does not have a new deep learning architecture.

However, it covers the U-Net family, which has encoder-decoder architectures, including 2D U-Net88, 3D U-Net95, and Cascaded 3D U-Net125. It also provides an ensemble option, which explores 2D U-Net, 3D U-Net, or 3D cascade results and chooses the best model (or combination of two) according to cross-validation performance.

Postprocessing.

The nnU-Net framework also provides an optional postprocessing configuration, determined on the full set of training data and annotations. This includes treating all foreground classes as one class and suppressing all but the largest connected component, which is applied only when it increases cross-validation performance.

Configuration and Training Process.

We trained the nnU-Net framework using the 3D U-Net version with 5-fold cross-validation implemented on the training sets. The evaluation results presented in the following section are the averages on the testing sets of the 5-fold cross-validation. We used the nnU-Net default auto-generated hyper-parameters for training, which include a learning rate91 initialised at 0.01 with a polynomial decay policy of $(1 - \mathrm{epoch}/\mathrm{epoch}_{\max})^{0.9}$, a loss function defined as the sum of cross-entropy and Dice loss126, and an optimizer based on stochastic gradient descent (SGD) with a Nesterov momentum of 0.99, run for 1000 epochs of 250 mini-batches each. Notably, the default nnU-Net typically has more than 30 million parameters to train. The proposed model was implemented in Python1 using PyTorch127. All experiments were run on an NVIDIA TITAN RTX 24GB GPU.
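The polynomial learning-rate decay can be sketched as a one-line schedule. The initial rate of 0.01 and the 1000 epochs come from the configuration above; the functional form $(1 - \mathrm{epoch}/\mathrm{epoch}_{\max})^{0.9}$ and the exponent 0.9 are assumed here to match the nnU-Net default, and the function name is hypothetical.

```python
def poly_lr(epoch, max_epochs=1000, initial_lr=0.01, exponent=0.9):
    """Learning rate at the start of `epoch` under polynomial decay."""
    if not 0 <= epoch <= max_epochs:
        raise ValueError("epoch must lie in [0, max_epochs]")
    return initial_lr * (1 - epoch / max_epochs) ** exponent
```

Under these assumptions the rate starts at 0.01, has fallen to roughly half that value by the midpoint of training, and reaches zero at the final epoch; the decay is close to linear because the exponent is near one.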

3.3. Evaluation

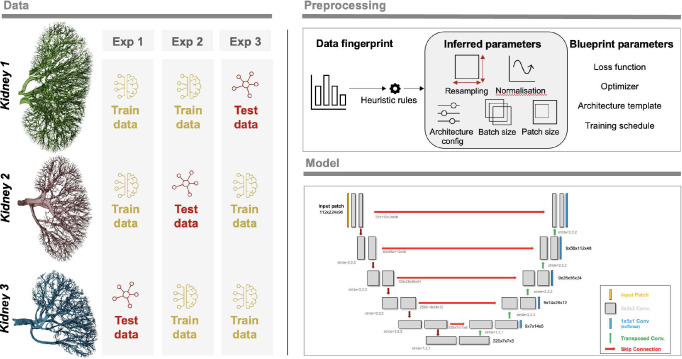

We chose to perform four experiments. In three experiments, we used two of the kidneys as training data and one as test data. These aimed to investigate how well the nnU-Net approach would generalise to a new dataset where the donor and thus anatomy and sample prep may differ slightly; it also had the virtue of testing how different-sized training datasets impacted nnU-Net training output. The overview of the experiments can be seen in Figure 7.

Figure 7.

Overview of the experiments together with a sample 3D nnU-Net architecture

The fourth experiment aimed to see how well nnU-Net could segment the remainder of a dataset given a limited number of labelled slices from the same dataset. Due to the size of HiP-CT datasets, such a model still has utility, as segmenting vascular networks for entire organs is highly time-consuming20. For this experiment, we selected kidney 1. Half of the kidney was used as a training set (1138 slices), and the remaining half as a testing set (1140 slices).

The importance of evaluation metrics in automatic image processing with machine learning cannot be emphasised enough, as they serve as a basis for determining the choice or practical applicability of a method. However, much of the research has been on creating novel image processing algorithms leaving the critical issue of reliably and objectively evaluating the performance of these algorithms largely unexplored50. Moreover, some of the commonly used evaluation metrics do not always correlate with clinical applicability47,128, and the specific features of a biomedical problem may make certain metrics unsuitable, such as when the Dice Similarity Coefficient (DSC) is utilised to evaluate extremely small structures129. As a result, carefully choosing the right evaluation metric for a given problem becomes important for validating and comparing the performance of image processing methods.

In vessel segmentation, class imbalance presents a significant challenge, as the pixel count for the foreground class (vasculature) is notably lower than that of the background class (non-vasculature).

Class imbalance can hinder effective network training, as most data points are typically negative samples (or backgrounds) that often do not offer valuable learning insights. Moreover, these negative samples can dominate the positive ones (such as arteries) during the training process as loss values are predominantly generated from the negative samples.

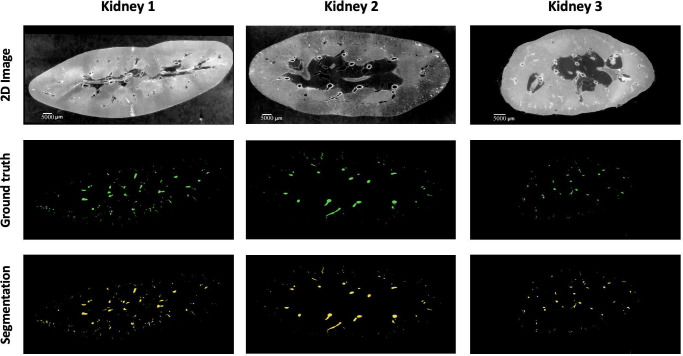

A combination of overlap-based and boundary-based metrics is selected to evaluate different properties of the model predictions50. For overlap-based metrics, the Dice Similarity Coefficient (DSC or Dice) is computed and is complemented by the Centerline Dice (clDice)49 due to the tubular nature of blood vessels and the importance of connectivity for vascular structures. Overlap-based metrics have certain limitations, such as shape unawareness and inaccurate assessment when dealing with small structures; hence, boundary-based metrics, specifically Normalised Surface Dice (NSD) and Average Symmetric Surface Distance (ASSD), are also computed using MONAI v1.2.0130. The results of the four experiments are provided in Table 6, and representative images for each kidney are shown in Figure 8.
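To illustrate how the tolerance affects the Normalised Surface Dice, the sketch below computes it on explicit surface point lists as the fraction of surface points, taken over both surfaces, that lie within the tolerance of the other surface. This is a simplified, brute-force formulation written for illustration; it is not MONAI's implementation, which operates on masks and may differ in detail, and the function name is hypothetical.

```python
import math

def normalised_surface_dice(surface_a, surface_b, tolerance):
    """Fraction of surface points lying within `tolerance` of the other surface."""
    def count_within(src, dst):
        return sum(
            1 for p in src
            if min(math.dist(p, q) for q in dst) <= tolerance
        )
    matched = count_within(surface_a, surface_b) + count_within(surface_b, surface_a)
    return matched / (len(surface_a) + len(surface_b))
```

If a predicted boundary is displaced from the ground truth by one voxel everywhere, this measure is 0 at a tolerance of 0 and 1 at a tolerance of 1. A gap between NSD (t=0) and NSD (t=1) therefore indicates boundary errors of about one voxel rather than gross mis-segmentation.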

Table 6.

Results of all four experiments performed using nnU-Net.

| Expt. | Train data | Test data | Dice | CLD | NSD (t=1) | NSD (t=0) | Avg. sym. SD |
|---|---|---|---|---|---|---|---|
| 1 | Kidney 1,2 | Kidney 3 | 0.9410 | 0.8886 | 0.9651 | 0.7631 | 4.300 |
| 2 | Kidney 1,3 | Kidney 2 | 0.9523 | 0.8533 | 0.9518 | 0.7120 | 0.8639 |
| 3 | Kidney 2,3 | Kidney 1 | 0.8585 | 0.8228 | 0.8968 | 0.7132 | 2.9270 |
| 4 | Half of kidney 1 | Other half of kidney 1 | 0.9513 | 0.8631 | 0.9404 | 0.8549 | 2.1561 |

SD: Surface Distance; NSD: Normalised Surface Dice; CLD: Centerline Dice; t: tolerance; Sym: symmetric

Figure 8.

A sample 2D slice from each kidney, together with the corresponding ground-truth labels and the model segmentation output (for kidney 1, the segmentation is from Expt. 3).

4. Results

In this study, we evaluated model performance for the segmentation of the arterial network from HiP-CT images of whole human kidneys. Five metrics were used to evaluate the outputs: Dice, clDice, NSD (t=1), NSD (t=0), and ASSD; the results are presented in Table 6. This range of metrics provides a thorough overview of model performance and allows the state of the art presented here to be rigorously benchmarked against future research. For each of the first three experiments, one unseen kidney is held out as test data to evaluate the model’s generalisation capability. The five metrics are calculated on the test data after model training is finished. Beyond quantitative evaluation, we provide visual insight through 3D renderings of the ground truth, model predictions, and false negatives.

Based on our literature review (see Tables 1, 2, 3, and 4), it is clear that the Dice Similarity Coefficient (DSC), also known as Dice, is the predominant metric for evaluating vascular segmentation model performance. Employing DSC for result evaluation is therefore essential to align with existing literature, such as in54. This metric quantifies the overlap between segmentation predictions and ground truth. Among the three cross-kidney experiments, the first and second yielded superior segmentation results on the unseen kidney, with Dice scores of 0.9410 and 0.9523, respectively (Table 6), whereas the third attained only 0.8585. 3D examination of kidney 1, as shown in Figure 9, revealed more collapsed vessels than in kidneys 2 and 3, potentially explaining the lower Dice score when the first kidney was the test subject. Nevertheless, DSC primarily assesses voxel-to-voxel concordance and overlooks several crucial characteristics of the vessels. Hence, it should not be the sole metric for judging the performance of vascular segmentation.
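To illustrate why voxel-wise overlap alone can hide missed vessels, the following minimal sketch computes Dice on a hypothetical 2D toy mask. The function name `dice_score`, the mask shapes, and the convention of returning 1.0 for two empty masks are assumptions made for this example; they are not the implementation used for Table 6.

```python
import numpy as np

def dice_score(pred, truth):
    """Dice = 2|P ∩ T| / (|P| + |T|) for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * np.logical_and(pred, truth).sum() / denom

# Hypothetical ground truth: one large vessel cross-section (100 pixels)
# and one thin vessel that is a single pixel wide (20 pixels).
truth = np.zeros((32, 32), dtype=bool)
truth[2:12, 2:12] = True
truth[20, 5:25] = True

# Hypothetical prediction: the large vessel is perfect, the thin vessel is missed entirely.
pred = truth.copy()
pred[20, :] = False

toy_dice = dice_score(pred, truth)
print(f"Dice with the thin vessel missed: {toy_dice:.4f}")
```

Here Dice is 2·100 / (100 + 120) ≈ 0.91 although an entire vessel is absent from the prediction, which is the kind of failure the complementary metrics are meant to expose.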

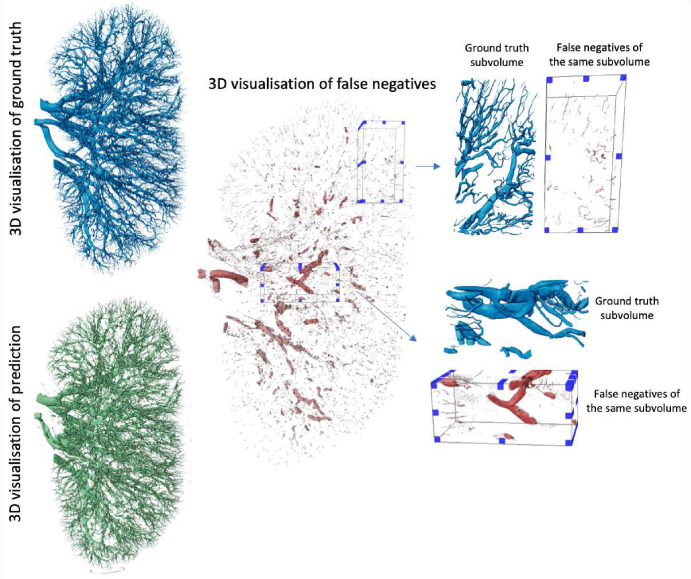

Figure 9.

Visualization of kidney 1 and the model’s false-negative predictions, together with subvolumes taken from it showing the regions where the model performed worst.

In Section 3.3, we describe an additional experiment (experiment four) to evaluate nnU-Net’s performance with a limited dataset. In this case we used only kidney 1: we trained the model on half of the data and tested it on the remaining half. This train-test split was designed to test the model’s ability to extrapolate from a small subset of images within a single organ to the entire dataset. This reduces the difficulty of the segmentation task but also the utility of the final model. However, given the size of HiP-CT datasets, such an approach is likely to remain useful for particularly challenging or anatomically unique datasets. The performance metrics for the test set were Dice = 0.9513, clDice = 0.8631, NSD (t=1) = 0.9404, NSD (t=0) = 0.8549, and ASSD = 2.1561. These results indicate that nnU-Net is effective at segmenting ‘unseen’ kidney tissue from the same organ, reflecting its capability to learn kidney anatomy and thereby improve the efficiency of the labeling process. The experiment also shows the expected improvement relative to the other three experiments, most clearly experiment three, which was likewise tested on kidney 1 (Dice of 0.9513 versus 0.8585). This indicates the challenges of accounting for anatomical variation across human samples, or for variations in the data acquisition and reconstruction parameters (see15,123,131 for details on protocol variations). Such variation is also expected as the HiP-CT technique continues to develop. As the Human Organ Atlas expands, automated segmentation techniques could enable large studies that analyse vascular network differences due to the pathology, age, or sex of the donor.

5. Discussion

Given the nature of vascular networks, which are hierarchical structures of thin, elongated tubes, ensuring sensitivity to small vessels and mitigating boundary ambiguities is pivotal. We therefore also reported a distance metric, ASSD (see equation 15), to evaluate the boundary distances between predictions and ground truths. In addition to ASSD, we calculated the NSD to evaluate the similarity of the segmented regions’ surfaces (or boundaries) rather than their volumetric overlap. Instead of considering the overlap of the entire segmented regions, NSD assesses the closeness of their borders. It is particularly useful where small boundary deviations can be critical, such as when segmenting thin structures or lesions. Tolerance is an important hyper-parameter for NSD: it sets the acceptable deviation of the segmentation boundary, in pixels. If a point on the boundary of the prediction lies within this tolerance distance of a point on the boundary of the ground truth, it is counted as a true positive (TP). An appropriate NSD tolerance should therefore usually be selected based on inter-annotator variability or some other heuristic. In our experiments, we initially set the tolerance to a strict 0 pixels and then relaxed it to 1 pixel, with the latter yielding an increase of around 25% in the NSD score. This shows that the model fails to predict the exact boundaries of the vasculature, but that a 1-pixel error tolerance substantially improves the scores. It indicates that while a post-processing step could be used to correct the larger vessels, vessels that may be only 1 pixel in diameter (as is the case in our dataset) would still be missed. Consequently, models that improve the segmentation of such thin vessels are needed.
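The effect of the tolerance on NSD, and its relation to ASSD, can be sketched on a toy example. The code below is a brute-force illustration using standard textbook definitions, not the MONAI implementation used for Table 6. The function names, the face-neighbour boundary definition, and the rectangular masks are assumptions made for this example, and both masks are assumed to be non-empty.

```python
import numpy as np

def boundary_voxels(mask):
    """Coordinates of foreground voxels with at least one face-neighbour in the background.
    Voxels touching the array border are treated as having a background neighbour."""
    mask = np.asarray(mask, dtype=bool)
    padded = np.pad(mask, 1, constant_values=False)
    crop = tuple(slice(1, -1) for _ in range(mask.ndim))
    interior = np.ones_like(mask)
    for axis in range(mask.ndim):
        for shift in (-1, 1):
            interior &= np.roll(padded, shift, axis=axis)[crop]
    return np.argwhere(mask & ~interior)

def surface_distances(a, b):
    """Distance from every point in a to its nearest point in b (brute force, small inputs only)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)

def nsd_and_assd(pred, truth, tolerance):
    sp, st = boundary_voxels(pred), boundary_voxels(truth)
    d_pt = surface_distances(sp, st)  # prediction surface -> ground-truth surface
    d_tp = surface_distances(st, sp)  # ground-truth surface -> prediction surface
    total = len(sp) + len(st)
    nsd = ((d_pt <= tolerance).sum() + (d_tp <= tolerance).sum()) / total
    assd = (d_pt.sum() + d_tp.sum()) / total
    return nsd, assd

# Hypothetical masks: the prediction is the ground truth shifted by one pixel.
truth = np.zeros((20, 20), dtype=bool)
truth[5:10, 5:15] = True
pred = np.zeros((20, 20), dtype=bool)
pred[6:11, 5:15] = True

nsd_t0, assd = nsd_and_assd(pred, truth, tolerance=0)
nsd_t1, _ = nsd_and_assd(pred, truth, tolerance=1)
print(f"NSD (t=0) = {nsd_t0:.4f}, NSD (t=1) = {nsd_t1:.4f}, ASSD = {assd:.4f}")
```

In this toy case a one-pixel shift drops NSD at zero tolerance to about 0.31, while NSD at a tolerance of 1 pixel is 1.0 and ASSD stays below one pixel. This mirrors the jump between the NSD (t=0) and NSD (t=1) columns of Table 6.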

Capturing vessel continuity and the centerline of the structure is also vital, even when the overall vessel shape is largely captured. To this end, we applied clDice (see equation 7), a modified Dice, as another evaluation metric. Minor deviations or inaccuracies in the centerline of vascular structures can significantly reduce the clDice score, because it weights larger and thinner structures equally, thereby reducing bias toward algorithms that predict large structures such as large arteries but miss the microvasculature. A centerline-based metric can also provide a more consistent basis for comparing vascular continuity, as it reduces the influence of boundary details, which may be more susceptible to variations in resolution. In our case, discontinuities at the boundaries (see Figure 9) and reduced performance in capturing the smaller, terminal vessels can explain why clDice is lower than the standard Dice reported in Table 6. Moreover, if the model underestimates or overestimates vessel width, the standard DSC may still be relatively high, because it considers the overlap of the entire segmented region with the ground truth. These inaccuracies can nevertheless disrupt the vessel’s centerline, leading to a lower clDice score. Note that clDice depends on the underlying skeletonisation algorithm and should therefore be used carefully: the choice of skeletonisation method, or deficiencies in it, can lead to inaccurate clDice values.
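A small sketch can show how clDice penalises a missed thin vessel more strongly than Dice. It follows the usual clDice definition (the harmonic mean of topology precision and topology sensitivity) and takes the skeletons as inputs, because the skeletonisation step is method-dependent. The function name `cl_dice`, the hand-drawn skeletons, and the toy masks are assumptions for illustration; in practice the skeletons would come from a skeletonisation algorithm.

```python
import numpy as np

def cl_dice(pred, truth, pred_skeleton, truth_skeleton):
    """clDice from binary masks and their precomputed skeletons.
    Assumes both skeletons are non-empty and that at least one overlap term is non-zero."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    sp = np.asarray(pred_skeleton, dtype=bool)
    st = np.asarray(truth_skeleton, dtype=bool)
    tprec = (sp & truth).sum() / sp.sum()  # predicted centerline lying inside the true vessels
    tsens = (st & pred).sum() / st.sum()   # true centerline covered by the predicted vessels
    return 2.0 * tprec * tsens / (tprec + tsens)

# Hypothetical ground truth: a thick vessel (5 x 20 pixels) and a thin one (1 x 20 pixels).
truth = np.zeros((32, 32), dtype=bool)
truth[4:9, 5:25] = True
truth[20, 5:25] = True

# Hand-drawn skeletons for the toy example: the central row of each vessel.
truth_skeleton = np.zeros_like(truth)
truth_skeleton[6, 5:25] = True
truth_skeleton[20, 5:25] = True

# Hypothetical prediction: the thick vessel is perfect, the thin vessel is missed.
pred = truth.copy()
pred[20, :] = False
pred_skeleton = truth_skeleton.copy()
pred_skeleton[20, :] = False

toy_cldice = cl_dice(pred, truth, pred_skeleton, truth_skeleton)
toy_dice = 2.0 * (pred & truth).sum() / (pred.sum() + truth.sum())
print(f"Dice = {toy_dice:.4f}, clDice = {toy_cldice:.4f}")
```

Both vessels contribute the same centerline length, so missing the thin one halves the topology sensitivity and clDice falls to about 0.67, whereas Dice remains about 0.91 because the thick vessel dominates the voxel count.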

It is also important to acknowledge that some of the challenges in detecting the smallest vessels stem from annotation. Notably, in the dense kidney 1 dataset, labels for some of the smallest vessels are missing due to annotation errors. This is because the labeling process is semi-manual and relies on an interactive 3D region-growing tool. In this method, annotators begin by selecting a seed voxel within a vessel on a given slice, which may be oriented in any of the three orthogonal directions. The process involves setting and adjusting several parameters: intensity threshold, contrast threshold, and manually drawn boundaries. These parameters define the stopping criteria for the expansion of the 3D region. Despite its utility, this technique sometimes results in incomplete labeling of the tiniest vessels because it relies on human input and decision-making.

The discussion of metrics above shows that the nnU-Net benchmark fails in several scenarios when applied to vascular segmentation of HiP-CT data. With whole-organ HiP-CT we are able to manually segment and create training data down to the level of the interlobular arteries, or approximately two generations from the capillary bed. These arteries have a minimum radius of ca. 40 μm20 (1–2 pixels in diameter). However, the experiment scores reveal that failed predictions on such small vessels lead to discontinuities in the estimated vascular structure. The work in49 has shown improved continuity in vasculature segmentation when clDice is used as part of the loss function during network training. Integrating rich hierarchical representations of thin and long structures through spatial attention and channel attention modules could also improve the outcomes132. Another challenge that may hinder vascular continuity is edge identification and segmentation. Considering the imbalance between edge and non-edge voxels, an edge-reinforced neural network (ER-Net)133, which combines a reverse edge attention module61 with an edge-reinforced optimisation loss to discover spatial information about edge structures, could potentially increase vascular continuity in the segmentation results. In both the small-vessel and edge-enhancement cases, it is important to remember that HiP-CT is a propagation-based phase-contrast technique and thus has intrinsic contrast for small structures and for edges between structures. Tomographic reconstruction is part of the production of HiP-CT data volumes and can have a large impact on the ease of segmentation. Tuning the tomographic reconstruction pipeline to enhance specific features, whilst challenging, could be a powerful approach to segmenting specific smaller structures.

Relative to the ground truth, the benchmark model overlooks certain vasculature and particularly struggles to identify some large vessels (Figure 9). Closer examination of the relevant sub-volumes shows that these missed large vessels correspond to collapsed vessels within the organs. As shown in Figure 9 for the prediction on kidney 1, collapsed vessels have a flat appearance that is morphologically different from the non-collapsed vessels seen elsewhere. This suggests that the model’s performance falters notably in the presence of disrupted structures, which could explain the lower scores observed when kidney 1 is used as the test set. Future approaches could address collapsed vessels through data augmentation. Applying transformations that accurately simulate the morphological changes observed in collapsed vessels could enhance the algorithm’s ability to recognise and accurately segment them. Key transformations could include selectively compressing or flattening portions of the vessels in certain images. Additionally, it would be essential to ensure that any such modification of vessel shape remains medically realistic.
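As a rough illustration of the flattening idea, the sketch below squashes an image patch and its label along one axis with nearest-neighbour resampling, so that a round vessel cross-section becomes flat. This is a deliberately crude, hypothetical augmentation: the function `flatten_along_axis`, the compression factor, the constant padding value, and the toy intensities are all assumptions. It compresses the whole patch rather than selected vessel segments, and it has not been checked for anatomical realism.

```python
import numpy as np

def flatten_along_axis(image, label, factor, axis=0, fill=0.0):
    """Compress image and label along `axis` by `factor` (0 < factor <= 1).
    The compressed content is centred and the remainder is padded with `fill`
    (image) and background (label). The same resampling is applied to both."""
    n = image.shape[axis]
    new_n = max(1, int(round(n * factor)))
    # Nearest-neighbour sampling positions along the compressed axis.
    idx = np.round(np.linspace(0, n - 1, new_n)).astype(int)
    start = (n - new_n) // 2
    dst = [slice(None)] * image.ndim
    dst[axis] = slice(start, start + new_n)
    out_image = np.full_like(image, fill)
    out_label = np.zeros_like(label)
    out_image[tuple(dst)] = np.take(image, idx, axis=axis)
    out_label[tuple(dst)] = np.take(label, idx, axis=axis)
    return out_image, out_label

# Hypothetical patch: a circular vessel cross-section of radius 8 pixels.
yy, xx = np.mgrid[0:32, 0:32]
label = (yy - 16) ** 2 + (xx - 16) ** 2 <= 64
image = np.where(label, 0.8, 0.1)  # made-up vessel and background intensities

flat_image, flat_label = flatten_along_axis(image, label, factor=0.5, axis=0, fill=0.1)

rows_before = int(label.any(axis=1).sum())     # vessel height before flattening
rows_after = int(flat_label.any(axis=1).sum()) # vessel height after flattening
cols_after = int(flat_label.any(axis=0).sum()) # vessel width after flattening
print(rows_before, rows_after, cols_after)
```

A more faithful augmentation would restrict the deformation to individual vessel segments and blend the surrounding tissue intensities rather than padding with a constant.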

High-resolution HiP-CT scans present challenges for deep learning methods applied to tasks such as segmentation. These scans reveal more detail that the models must capture. Using a larger patch size for training can ease the problem, but there is a trade-off between GPU memory and the size of the receptive field. As a result, integrating regional features and their global dependencies remains an open research direction.

6. Conclusion and future work

The vascular system is vital for nurturing and supporting every organ in the body, and its abnormalities can be indicative of various diseases. Developing accurate and automated vasculature segmentation that can be applied to nascent imaging technologies such as HiP-CT could pave the way for scalable vascular segmentation across large-scale datasets. Scalability in turn enables quantification across a large demographic population, thereby assisting the construction of a data-driven Vascular Common Coordinate Framework134,135 for human atlasing projects such as the Human BioMolecular Atlas Program136, the Human Organ Atlas Project137 and the Cellular Senescence Network Program138.

This paper offers a comprehensive review of recent literature on deep-learning techniques for blood vessel segmentation. We focus primarily on recent deep-learning methodologies, emphasizing the challenges associated with vasculature segmentation. Our aim with this review is to lay a solid foundation for researchers building robust models for vessel segmentation, especially using phase-contrast imaging.