Abstract

Sentiment analysis in the public security domain involves analysing public sentiment, emotions, opinions, and attitudes toward events, phenomena, and crises. However, sarcasm, which tends to alter the intended meaning, combined with bilingual code-mixed content, hampers sentiment analysis systems. Few datasets currently address these issues. This paper introduces a comprehensive dataset constructed through a systematic data acquisition and annotation process. The acquisition process collects data from social media platforms through keyword searching, querying, and scraping, resulting in an acquired dataset. The subsequent annotation process refines and labels the data through merging, selection, and annotation, ending in an annotated dataset. Three expert annotators from different fields were appointed for the labelling tasks, which determined the sentiment and sarcasm of each item. Additionally, an annotator specialized in literature was appointed to identify the language of each item. This dataset represents a valuable contribution to the field of natural language processing and machine learning, especially within the public security domain and for multilingual countries in Southeast Asia.

Keywords: Sarcastic sentiment, Multilingual, Southeast Asia, Public security threat, Multitask learning

Specifications Table

| Subject | Computer Science: Computer Science Application |

| Specific subject area | Machine Learning, Natural Language Processing |

| Type of data | Table (raw, selected, filtered) |

| Data collection | Data were collected from 11 September to 21 September 2022 using the Twitter API and TikTok Comment Scraper in Jupyter Notebook, based on a bilingual keyword database for the public security domain covering natural and non-natural disasters. Pre-processing filtered out duplicates, content under ten characters, and content consisting only of URLs, hashtags, or user mentions to ensure relevance and quality. Merging and selection were performed using OpenRefine, allowing for precise data cleaning before annotation. A total of 10,000 relevant comments and tweets were then manually annotated for sentiment ('positive', 'negative', 'neutral') and sarcasm ('sarcastic', 'not sarcastic') in Doccano, with language identification for English, Malay, and code-mixed content. Discrepancies were resolved through majority voting among three expert annotators. Details of the data collection are provided in the Experimental Design, Materials and Methods section. |

| Data source location | Malaysia |

| Data accessibility | Repository name: Zenodo Data identification number: 10.5281/zenodo.11642494 Direct URL to data: https://doi.org/10.5281/zenodo.11642494 |

| Related research article | M. S. Md Suhaimin, M. H. Ahmad Hijazi, E. G. Moung and M. A. Mohamad Hamza, “Data Augmentation Approach for Language Identification in Imbalanced Bilingual Code-Mixed Social Media Datasets,” 2023 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 2023, pp. 257–261, doi: 10.1109/IICAIET59451.2023.10292108. |

1. Value of the Data

- These data are useful for research on sentiment analysis and sarcasm detection in social media, especially in bilingual and code-mixed data. The dual-label annotation of each item is useful for the study of multi-objective and multitask learning.

- The data will benefit researchers in Natural Language Processing, Computational Linguistics, and Machine Learning, and those conducting local studies within multilingual communities in Southeast Asia. The data are also beneficial to public security analysts, law enforcement agencies, and non-governmental organizations.

- The data can serve as training data for machine learning models for multiclass sentiment analysis and sarcasm detection in social media, particularly within the public security domain. The data can also serve as a reference for modelling or building applications for public security threat detection in social media.

- This dataset uniquely includes annotated bilingual, code-mixed content, addressing the complex challenge of how sarcasm impacts sentiment analysis. Merging data from two social media platforms (Twitter and TikTok) broadens the scope of the target data, giving more comprehensive and reliable coverage of public sentiment. Additionally, each item is labelled for language, aiding further analysis and clarity.

2. Background

In the current digital landscape, social media has emerged as a vital indicator of public sentiment, especially in the domain of public security. Analysing social media content facilitates the real-time assessment of public emotions, opinions, and attitudes towards security-related events and crises. Recognizing its importance, this paper aims to: (1) address the scarcity of domain-independent data for public security sentiment analysis [1], and (2) tackle the complexities created by sarcasm, which can obscure the intended sentiment [2,3,4], and by bilingual code-mixed content, which complicates the interpretation of sentiment [5,6]. By presenting a comprehensive dataset, this work is tailored to overcome the challenges inherent in sentiment analysis within the public security domain, particularly in multilingual contexts. Its annotations enable various studies and applications, from academic research to practical tools for public security threat detection.

3. Data Description

Our dataset includes two main files: the dataset file (CSV) and a readme file. The dataset was acquired from two social media platforms, X (Twitter) and TikTok, and was constructed using the acquisition and annotation process detailed in the next section. After the construction process, the dataset, containing 10,000 rows of selected attributes, was annotated by three expert annotators from different fields. Two label categories were produced. The first is the sentiment of the comment/tweet, a multiclass label of ‘positive’, ‘negative’, or ‘neutral’. The second is the sarcasm of the comment/tweet, a binary label of ‘sarcastic’ or ‘not sarcastic’. A majority vote determined the final label for inclusion in the dataset. An additional labelling task, language identification of each comment/tweet, was carried out by an expert annotator in literature. Table 1 presents the attribute details of the columns in our dataset.

Table 1.

Description of selected attributes.

| Attribute | Description | Example or values |
|---|---|---|
| post/keyword | The related post resulting from a query in TikTok, or the keyword used for querying in Twitter. | “#Floods reported around #KL (??: Social Media) #KesasHighway”; ‘banjir kilat, flash flood’ |
| comment/tweet | The comment/tweet made on TikTok and Twitter. | “Is this a hint from God? In Malaysia, for some, even a flash flood is deemed an indication from God that all is not well! It's clogged drains, Mr Watson! https://t.co/hZz03gqBQJ” |
| username | The identifier for the user (pseudonym) who made the comment/tweet. | ‘33d2a31d-c13’ |
| like count | The number of 'likes' received by the comment/tweet. | ‘2’ |
| replied to | The user identifier (pseudonym) that the comment/tweet was in response to. | ‘99e7b493-9d9’ |
| reply count | The total number of replies that the comment/tweet received. | ‘7’ |
| 2nd level comment/ reply | Indicates whether the comment/tweet is a second-level comment or a reply to another. | ‘Yes’ |
| time created | The timestamp when the comment/tweet was created. | ‘2020-03-16T11:26:11.000Z’ |
| majority_sent | The majority voting of the sentiment label. | ‘positive’, ‘negative’, or ‘neutral’ |
| majority_sarc | The majority voting of the sarcasm label. | ‘sarc’ = sarcastic, or ‘notsarc’ = not sarcastic |
| lang_id | Language identification of the comment/tweet. | ‘eng’ = English, ‘my’ = Malay, or ‘cm’ = code-mixed |
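As a rough illustration of how the columns in Table 1 might be read, the following sketch parses a small in-memory sample with Python's standard `csv` module and counts the labels. The sample rows are invented for illustration and are not taken from the dataset, and it is an assumption that the CSV header uses the attribute names exactly as listed in Table 1.

```python
import csv
import io
from collections import Counter

# Hypothetical two-row sample mimicking some columns from Table 1.
# The texts are invented; the header names are assumed to match Table 1.
SAMPLE_CSV = """comment/tweet,majority_sent,majority_sarc,lang_id
"Banjir lagi, great job everyone",negative,sarc,cm
"Stay safe during the flood",positive,notsarc,eng
"""


def load_rows(handle):
    """Read all rows into dictionaries keyed by column name."""
    return list(csv.DictReader(handle))


def label_counts(rows, column):
    """Count how often each label occurs in the given column."""
    return Counter(row[column] for row in rows)


rows = load_rows(io.StringIO(SAMPLE_CSV))
sent_counts = label_counts(rows, "majority_sent")
lang_counts = label_counts(rows, "lang_id")
print(sent_counts, lang_counts)
```

To read the published file instead, the `io.StringIO` object could be replaced by `open(path, newline="", encoding="utf-8")`, where `path` points to the downloaded CSV.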

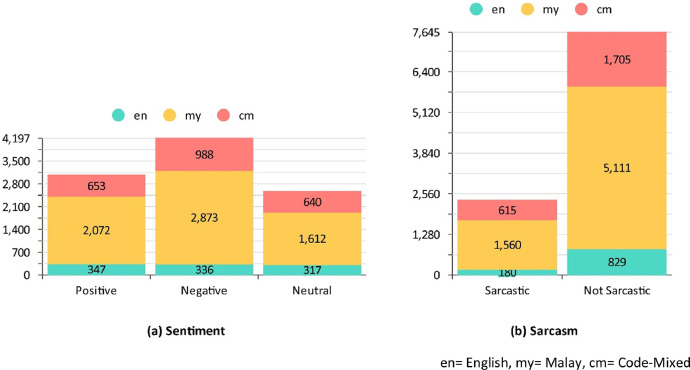

From the 10,000 comments/tweets selected for annotation, sentiment labels were distributed as follows: 3072 were labelled ‘positive’, 4197 ‘negative’, and 2569 ‘neutral’. Of these, 347 positive, 336 negative, and 317 neutral comments were in English; 2072 positive, 2873 negative, and 1612 neutral comments were in Malay; and 653 positive, 988 negative, and 640 neutral comments were code-mixed. In 162 cases, there was no majority vote because all three annotators disagreed, indicating challenging cases where agreement could not be reached. Regarding sarcasm, 2355 comments/tweets were identified as ‘sarcastic’ and 7645 as ‘not sarcastic’. Sarcastic comments consisted of 180 in English, 1560 in Malay, and 615 code-mixed; non-sarcastic comments included 829 in English, 5111 in Malay, and 1705 code-mixed. Table 2 details the distribution of the sentiment, sarcasm, and language identification labels. Additionally, Fig. 1 visually presents this distribution, illustrating the stacked sentiment and sarcasm labels across their respective language identification.

Table 2.

Dataset distribution of sentiment, sarcasm, and language identification annotation.

| Category | i. Sentiment | | | ii. Sarcasm | |
|---|---|---|---|---|---|
| Label | Positive | Negative | Neutral | Sarcastic | Not Sarcastic |
| Total | 3072 | 4197 | 2569 | 2355 | 7645 |
| Language Identification | en=347, my=2072, cm=653 | en=336, my=2873, cm=988 | en=317, my=1612, cm=640 | en=180, my=1560, cm=615 | en=829, my=5111, cm=1705 |

en= English, my= Malay, cm= Code-Mixed
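The figures in Table 2 can be cross-checked with simple arithmetic. The short sketch below re-enters the counts reported in the table and confirms that the per-language counts add up to each label total, and that the sentiment totals plus the 162 items without a majority vote account for all 10,000 items. The variable names are illustrative only.

```python
# Counts copied from Table 2 (en = English, my = Malay, cm = code-mixed).
by_language = {
    "positive": {"en": 347, "my": 2072, "cm": 653},
    "negative": {"en": 336, "my": 2873, "cm": 988},
    "neutral": {"en": 317, "my": 1612, "cm": 640},
    "sarcastic": {"en": 180, "my": 1560, "cm": 615},
    "not_sarcastic": {"en": 829, "my": 5111, "cm": 1705},
}
reported_totals = {
    "positive": 3072,
    "negative": 4197,
    "neutral": 2569,
    "sarcastic": 2355,
    "not_sarcastic": 7645,
}
NO_MAJORITY_SENTIMENT = 162  # items without a majority sentiment label
TOTAL_ITEMS = 10_000

# Sum the per-language counts for each label.
computed_totals = {label: sum(c.values()) for label, c in by_language.items()}

sentiment_sum = sum(computed_totals[k] for k in ("positive", "negative", "neutral"))
sarcasm_sum = computed_totals["sarcastic"] + computed_totals["not_sarcastic"]

print(computed_totals == reported_totals)
print(sentiment_sum + NO_MAJORITY_SENTIMENT, sarcasm_sum)
```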

Fig. 1.

Stacked distribution of sentiment and sarcasm labels by language.

4. Experimental Design, Materials and Methods

The dataset construction process in this research work is a two-fold procedure involving data acquisition and data annotation, as illustrated in Fig. 2. The left side, (a) Data Acquisition, illustrates the process of collecting data, which starts with keyword searching, continues with querying and scraping, and ends with an acquired dataset. The right side, (b) Data Annotation, illustrates how the raw data is refined and labelled, beginning with data merging and selection, followed by annotation, and ending in an annotated dataset.

Fig. 2.

Dataset construction process: (a) Data acquisition; (b) Data annotation.

4.1. Data acquisition

The data acquisition stage begins with keyword searching, where relevant terms and phrases are identified from a keyword database. Predefined keywords were collected from a list of disasters in the public security domain, encompassing both natural and non-natural disasters, drawn from a sentiment analysis survey in the domain [1]. Keywords are stored in the keyword database as English and Malay combinations. Examples of keywords for the natural disaster domain include ‘flood’ / ‘banjir’, ‘earthquake’ / ‘gempa bumi’, ‘landslide’ / ‘tanah runtuh’, ‘food crisis’ / ‘krisis makanan’, and ‘epidemic’ / ‘wabak’. Examples for the non-natural disaster domain include ‘strike’ / ‘mogok’, ‘protest’ / ‘protes’, ‘riot’ / ‘rusuhan’, ‘invasion’ / ‘pencerobohan’, and ‘demonstration’ / ‘demonstrasi’. Using these keywords, a series of queries is conducted, which initiates the data scraping process on the social media platforms. Data scraping involves systematically collecting data from each platform until all keyword combinations have been queried. On X (Twitter), the user post content (tweet) is collected using the Twitter API, while on TikTok, the comments on the user post are collected using TikTok Comment Scraper. The process, run in Jupyter Notebook, is repeated until all the keyword searches are complete. Scraping was conducted from 11 September to 21 September 2022. Once all relevant keywords have been searched, the process concludes, resulting in a comprehensive collection of raw data. Table 3 lists all the non-natural and natural disaster keywords with the amount of data scraped for each keyword.

Table 3.

Non-natural and natural disaster raw data based on keywords.

| Non-Natural Disaster Keyword | X (Twitter) | TikTok | Total | Natural Disaster Keyword | X (Twitter) | TikTok | Total |
|---|---|---|---|---|---|---|---|
| demonstrasi / demonstration | 173 | 1518 | 1691 | banjir / flood | 300 | 11,599 | 11,899 |
| himpunan turun / | 15 | 2080 | 2095 | bencana / disaster | 164 | 3023 | 3187 |
| krisis air / water crisis | 129 | 413 | 542 | covid-19 | 131 | 15,916 | 16,047 |
| krisis ekonomi / economic crisis | 203 | 2476 | 2679 | gempa bumi / earthquake | 207 | 5966 | 6173 |
| mogok / strike | 313 | 2449 | 2762 | gunung berapi / volcano | 146 | 2372 | 2518 |
| pencerobohan / invasion | 290 | 781 | 1071 | influenza outbreak | 43 | 2288 | 2331 |
| pendatang tanpa izin / illegal immigrant | 129 | 4104 | 4233 | kemarau / drought | 280 | 1438 | 1718 |
| protes / protest | 166 | 1243 | 1409 | krisis makanan / food crisis | 123 | 2775 | 2898 |
| rusuhan / riot | 109 | 762 | 871 | puting beliung / tornado | 277 | 7707 | 7984 |
| tunjuk perasaan / | 186 | 91 | 277 | tanah runtuh / landslide | 181 | 6678 | 6859 |
| #lawan | 60 | No data | 60 | wabak / epidemic | 147 | 1138 | 1285 |
| #bersih | 85 | No data | 85 | perubahan iklim / climate change | 294 | 292 | 586 |
| #lrtcrash | 1 | 106 | 107 | tsunami | 142 | No data | 142 |
| #hartaldoktorkontrak | 15 | 1349 | 1364 | kebakaran hutan / forest fire | 186 | No data | 186 |
| | | | | jerebu / haze | 142 | No data | 142 |
| | | | | sekuriti makanan / food security | 273 | 773 | 1046 |
| | | | | #wuhan | 71 | No data | 71 |
| | | | | #coronavirus | 158 | 1276 | 1434 |
| | | | | #lockdown | 91 | 1410 | 1501 |
| | | | | #pkp | 145 | 880 | 1025 |
| | | | | #daruratbanjir | 100 | 3995 | 4095 |
| Total | | | 19,246 | Total | | | 73,127 |
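The keyword-driven querying loop described in this subsection can be sketched as follows. This is only an outline under stated assumptions: `KEYWORD_DB` holds a small subset of the keyword pairs named in the text, combining each pair as a quoted `OR` query is an assumption rather than the documented query format, and `fake_fetch` is a hypothetical stand-in for the platform-specific client (the Twitter API or TikTok Comment Scraper), whose actual calls are not reproduced here.

```python
from typing import Callable, Dict, Iterator, List, Tuple

# Subset of the bilingual (Malay, English) keyword pairs named in the text.
KEYWORD_DB: Dict[str, List[Tuple[str, str]]] = {
    "natural": [
        ("banjir", "flood"),
        ("gempa bumi", "earthquake"),
        ("tanah runtuh", "landslide"),
    ],
    "non_natural": [
        ("mogok", "strike"),
        ("protes", "protest"),
        ("rusuhan", "riot"),
    ],
}


def iter_queries(db: Dict[str, List[Tuple[str, str]]]) -> Iterator[Tuple[str, str]]:
    """Yield (domain, query) for every keyword pair in the database."""
    for domain, pairs in db.items():
        for malay, english in pairs:
            # Assumption: the two languages are combined into one OR query.
            yield domain, f'"{malay}" OR "{english}"'


def collect(db, fetch: Callable[[str], List[str]]) -> Dict[str, List[str]]:
    """Run every query once and keep the raw results per query."""
    raw: Dict[str, List[str]] = {}
    for _domain, query in iter_queries(db):
        raw[query] = fetch(query)
    return raw


def fake_fetch(query: str) -> List[str]:
    """Hypothetical placeholder for a real platform client."""
    return [f"sample post for {query}"]


raw_data = collect(KEYWORD_DB, fake_fetch)
print(len(raw_data))
```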

4.2. Data annotation

The data annotation begins with data selection, in which attributes based on the queried relevant keywords are chosen. The attributes selected are those common to both platforms, given in Table 1. This selection ensures the consistency of the data from different platforms and eases the annotation task. Next, the selection of content is carried out. To ensure that each item selected is meaningful, only content containing more than ten characters is chosen, a criterion intended to ensure that each item carries sufficient meaning [3]. As part of this pre-processing, content consisting solely of a Uniform Resource Locator (URL), a hashtag (#), or a user mention is excluded to ensure quality and relevance. Duplicate content and content irrelevant to the public security domain are also filtered out. To ensure a balanced representation, 5000 items were selected for each of the natural and non-natural disaster domains. This selection involved manually choosing the top items from each keyword search result. Items were chosen proportionally: smaller result sets were included entirely, while larger ones were sampled so that a nearly equal number of comments/tweets was covered for each keyword. This step is crucial because it brings together data from all the keywords, for both natural and non-natural disasters, from each platform. A total of 10,000 items were selected and merged at this stage using the OpenRefine software. Table 4 shows the distribution of content before and after merging and filtering, as well as the content selected for annotation across the platforms.
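The content filters described above were applied with OpenRefine; the following Python sketch only mirrors the stated rules for illustration. It assumes that "more than ten characters" is measured on the whitespace-trimmed text, that content made up of several URLs, hashtags, or mentions (and nothing else) is treated like a single one, and that duplicates are compared case-insensitively after whitespace normalization. The relevance check, which was manual, is not modelled.

```python
import re

URL = r"https?://\S+"
# Matches text made up only of URLs, hashtags, and user mentions.
TOKEN_ONLY = re.compile(rf"^(?:\s*(?:{URL}|#\w+|@\w+))+\s*$")


def is_meaningful(text: str, min_chars: int = 10) -> bool:
    """Keep text longer than min_chars that is not only URLs/hashtags/mentions."""
    stripped = text.strip()
    if len(stripped) <= min_chars:
        return False
    return TOKEN_ONLY.match(stripped) is None


def preprocess(texts):
    """Drop duplicates and non-meaningful items, preserving the input order."""
    seen = set()
    kept = []
    for text in texts:
        # Assumption: duplicates are detected ignoring case and extra spaces.
        key = " ".join(text.split()).casefold()
        if key in seen or not is_meaningful(text):
            continue
        seen.add(key)
        kept.append(text)
    return kept


samples = [
    "Banjir lagi di KL, jalan semua tutup",
    "banjir lagi di KL,  jalan semua tutup",  # duplicate of the first item
    "ok",                                     # too short
    "https://t.co/hZz03gqBQJ",                # URL only
    "#banjir #daruratbanjir",                 # hashtags only
    "@user123456789",                         # mention only
]
kept = preprocess(samples)
print(kept)
```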

Table 4.

Merging and selection content distribution, and annotation selection.

| Domain | Merging and Filtering: Before | Merging and Filtering: After | Selected for Annotation: X (Twitter) | Selected for Annotation: TikTok | Selected for Annotation: Combined |
|---|---|---|---|---|---|
| Natural Disaster | 73,127 | 48,369 | 1850 | 3150 | 5000 |
| Non-Natural Disaster | 19,246 | 15,942 | 890 | 4110 | 5000 |
| Both Domains | 92,373 | 64,311 | 2740 | 7260 | 10,000 |
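The proportional selection rule (small result sets taken entirely, large ones sampled to a nearly equal share) was carried out manually. One way to express the same idea programmatically is the quota allocation sketched below. The function name `allocate_quota` is hypothetical, and the example counts are raw per-keyword totals from Table 3 used purely as illustrative input, since per-keyword counts after filtering are not reported.

```python
from typing import Dict


def allocate_quota(available: Dict[str, int], target: int) -> Dict[str, int]:
    """Split `target` items across keywords as evenly as possible.

    Keywords with fewer items than an equal share are taken entirely;
    the remainder is divided evenly among the larger keywords.
    """
    if target > sum(available.values()):
        raise ValueError("target exceeds the number of available items")
    quota = {key: 0 for key in available}
    remaining = target
    open_keys = sorted(available, key=available.get)  # smallest set first
    while open_keys:
        share = remaining // len(open_keys)
        smallest = open_keys[0]
        if available[smallest] <= share:
            # Small result set: include it entirely.
            quota[smallest] = available[smallest]
            remaining -= available[smallest]
            open_keys.pop(0)
        else:
            # Every remaining set exceeds the share: divide evenly,
            # handing out the leftover items one by one.
            extra = remaining - share * len(open_keys)
            for i, key in enumerate(open_keys):
                quota[key] = share + (1 if i < extra else 0)
            break
    return quota


# Illustrative input only: raw counts for four keywords from Table 3.
example = {"tsunami": 142, "jerebu / haze": 142, "banjir / flood": 11899, "covid-19": 16047}
quota = allocate_quota(example, 1000)
print(quota)
```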

The final step is the actual annotation, where the data is manually reviewed and labelled in accordance with the selected and merged dataset. The annotation was performed by three human annotators, each from a different field of study and with distinct professional backgrounds, ensuring a diverse range of perspectives in the analysis of social media content.

- Annotator 1: A lecturer in Malay Literature, who brings a deep understanding of language and cultural context, which is crucial for interpreting tone in social media posts.

- Annotator 2: A lecturer in tourism, who offers insights into geographical and environmental impacts, vital for discussions around natural disasters.

- Annotator 3: A data analyst, who contributes a strong analytical perspective, essential for the methodical assessment of data patterns and factual accuracy in posts.

The annotators were briefed on the dataset and the labelling tasks. There were two types of labels: one for determining the sentiment of the content as positive, negative, or neutral, and another for detecting sarcasm, categorizing content as sarcastic or not sarcastic. A web-based tool, Doccano, was used for annotation. Three annotators were used so that disagreements could be resolved by majority voting [7,8]; the resulting label distribution is shown in Table 2. Additionally, a supplementary task of language identification was carried out by Annotator 1 to label each item as English, Malay, or code-mixed.
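The majority-voting step can be illustrated with a short sketch. The function name `majority_label` is hypothetical and the code is not the authors' tooling; it simply returns the label chosen by more than half of the annotators, or `None` when no such label exists. With three annotators, a binary sarcasm label always has a majority, whereas a three-class sentiment label can split three ways, which is consistent with the 162 items reported without a majority sentiment label.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_label(votes: Sequence[str]) -> Optional[str]:
    """Return the label chosen by more than half of the annotators, else None."""
    label, count = Counter(votes).most_common(1)[0]
    return label if count > len(votes) / 2 else None


sentiment_agreed = majority_label(["positive", "negative", "negative"])
sentiment_split = majority_label(["positive", "negative", "neutral"])
sarcasm_agreed = majority_label(["sarc", "notsarc", "sarc"])
print(sentiment_agreed, sentiment_split, sarcasm_agreed)
```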

Limitations

Not applicable.

Ethics Statement

We strictly adhered to X's Developer Policy 2023 [9] and the TikTok Privacy Policy 2024 [10] in the acquisition and distribution of our data. All private attributes (username, replied to, post/keyword, comment/tweet) are fully pseudonymized for security and confidentiality, in compliance with data redistribution regulations.

CRediT authorship contribution statement

Mohd Suhairi Md Suhaimin: Conceptualization, Methodology, Writing – original draft. Mohd Hanafi Ahmad Hijazi: Resources, Supervision, Writing – review & editing. Ervin Gubin Moung: Resources, Supervision, Writing – review & editing.

Acknowledgments

This research was funded by the Ministry of Higher Education Malaysia, Fundamental Research Grant Scheme (FRGS), grant number FRGS/1/2022/ICT02/UMS/02/3.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Declaration of Generative AI and AI-Assisted Technologies

During the preparation of this work, the author(s) used ChatGPT for proofreading. After using this tool/service, the author(s) reviewed and edited the content as needed and take full responsibility for the content of the publication.

Contributor Information

Mohd Suhairi Md Suhaimin, Email: mohd_suhairi_di21@iluv.ums.edu.my.

Mohd Hanafi Ahmad Hijazi, Email: hanafi@ums.edu.my.

Ervin Gubin Moung, Email: ervin@ums.edu.my.

Data Availability

References

- 1. Suhaimin M.S.M., Hijazi M.H.A., Moung E.G., Nohuddin P.N.E., Chua S., Coenen F. Social media sentiment analysis and opinion mining in public security: taxonomy, trend analysis, issues and future directions. J. King Saud Univ. 2023.

- 2. Gupta M., Bansal A., Jain B., Rochelle J., Oak A., Jalali M.S. Whether the weather will help us weather the COVID-19 pandemic: using machine learning to measure twitter users’ perceptions. Int. J. Med. Inform. 2021;145. doi: 10.1016/j.ijmedinf.2020.104340.

- 3. Chandra J.K., Cambria E., Nanetti A. One belt, one road, one sentiment? A hybrid approach to gauging public opinions on the new silk road initiative. IEEE International Conference on Data Mining Workshops (ICDMW). 2020; pp. 7–14.

- 4. Chen N., Chen X., Pang J. A multilingual dataset of COVID-19 vaccination attitudes on Twitter. Data Br. 2022;44. doi: 10.1016/j.dib.2022.108503.

- 5. Fuadvy M.J., Ibrahim R. Multilingual sentiment analysis on social media disaster data. ICEEIE 2019 - International Conference on Electrical, Electronics and Information Engineering: Emerging Innovative Technology for Sustainable Future. 2019; pp. 269–272.

- 6. Garcia K., Berton L. Topic detection and sentiment analysis in Twitter content related to COVID-19 from Brazil and the USA. Appl. Soft Comput. 2021;101. doi: 10.1016/j.asoc.2020.107057.

- 7. Wallace B.C., Charniak E. Sparse, contextually informed models for irony detection: exploiting user communities, entities and sentiment. Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers). 2015; pp. 1035–1044.

- 8. Kunneman F., Liebrecht C., Van Mulken M., Van den Bosch A. Signaling sarcasm: from hyperbole to hashtag. Inf. Process. Manag. 2015;51(4):500–509.

- 9. X, Developer Agreement and Policy. https://developer.twitter.com/en/developer-terms/agreement-and-policy, 2023 (Accessed 28 March 2024).

- 10. TikTok, Privacy Policy. https://www.tiktok.com/legal/page/row/privacy-policy/en, 2024 (Accessed 28 March 2024).
