Abstract

The maturation of pervasive computing technologies has dramatically altered the face of healthcare. With the introduction of mobile devices, body area networks, and embedded computing systems, care providers can use continuous, ecologically valid information to overcome geographic and temporal barriers and thus provide more effective and timely health assessments. In this paper, we review recent technological developments that can be harnessed to replicate, enhance, or create methods for assessment of functional performance. Enabling technologies in wearable sensors, ambient sensors, mobile technologies, and virtual reality make it possible to quantify real-time functional performance and changes in cognitive health. These technologies, their uses for functional health assessment, and their challenges for adoption are presented in this paper.

1. Introduction

Advances in health care have been dramatic since the beginning of the millennium. As a result, global life expectancy has increased by 5 years since 2000 [1]. The resulting aging of the population is placing a heavy burden on our healthcare systems. With the aging of the population there is an accompanying increase in chronic diseases [2]. According to the Association of American Medical Colleges, the increasing demand for healthcare will cause a shortage of 124,400 physicians by 2025 [3]. While the future of healthcare availability and quality of services seems uncertain, advances in pervasive computing and intelligent embedded systems provide innovative strategies to meet these needs. These technologies are driving a radical transformation that offers the potential to scale healthcare assessments and treatments.

One particular need which technology can help address is the assessment of a person’s functional performance. Assessing the ability of an individual to be functionally independent supports family planning and creation of an appropriate treatment and intervention plan. Scalable approaches to functional assessment pave the way for early detection and more effective treatment. Additionally, while researchers continue to make advances to slow and stop the progression of debilitating diseases, they need a way to quantitatively measure the outcome of pharmaceutical and behavioral interventions both in the clinic and at home [4].

Here we focus on technologies that assist with functional health assessment. Functional decline is a common presentation of many disease states and is often the result of acute and chronic problems that act together to adversely affect the ability to independently perform both basic [5] and more complex [6] activities of daily living (ADLs). The studies that we review involve participants undergoing treatment for diseases that dramatically impact ADL function. These diseases include Alzheimer’s disease, Parkinson’s disease, other causes of dementia, autism spectrum disorder, Huntington’s disease [7], stroke [8], epilepsy [9], multiple sclerosis [10], and schizophrenia [11]. Functional assessment does not conform to a traditional screening model based on history and examination. In fact, 66% of providers do not address functional limitations even when they are specifically reported by older adult patients during an office visit [12]. Functional health has been defined as the ability to conduct tasks and activities that are important in a person’s daily life [13]. There are many contributing factors to ADL functioning, including cognition and physical mobility.

Technology offers many potential improvements to functional assessment. Because many technology-based tests can be administered without a clinician present, they can be utilized by people living in rural settings [14] without imposing many of the time and space constraints that make thorough assessment difficult in clinical settings [15]. Performing such assessments in the home instead of the clinic is believed to be more representative of an individual’s capabilities [16]. Furthermore, continuous sensor-based monitoring can bring new sources of information and insights to help care providers understand each individual’s situation and needs and help researchers better understand diseases and their cures.

In this paper, we review classes of technologies that are most commonly used to automate or assist with functional health assessment. These include computers and mobile devices, wearable sensors, ambient sensors, virtual reality systems, and robots. We detail the types of assessments that these systems replicate or enhance, the diseases that have been analyzed in related studies, and the specific states or components of an individual’s behavior that are analyzed. Finally, we discuss gaps in the research and challenges for engineers and clinicians, and we suggest future directions that must be pursued not only to design new technologies but also to make them reliable and usable for functional health assessment.

2. Traditional Functional Health Assessment

A traditional assessment of functional performance is performed in a clinic setting under the supervision of trained raters. Numerous tests have been developed over the years. Here we review a subset of these tests, limiting our discussion to those that factor prominently in the technology-based studies of functional health assessment reviewed in this paper. The tests vary in terms of the functional health components they examine and the way data is collected; thus, a full assessment typically involves administering a large number of the tests [17]. Studies have shown that mobility, cognition, and compensatory strategies all play a role in functional health and need to be assessed accordingly [10], [11], [18].

Assessing functional health is difficult outside of monitoring a person’s ability to perform critical activities in a variety of everyday settings. However, health components including cognition, motor function, and executive function, or task planning and completion, contribute to functional health [19]. As a result, we consider traditional and technology-supported assessments of these components as well as assessments of overall functional health. While there are many traditional assessment tests, we focus here on the tests that are replicated by digital technologies, enhanced by technologies, or are used to validate technology-based assessment in the surveyed papers.

Cognitive screening tests.

A number of tests have been designed that are administered in lab or clinic settings and assess cognition. These include the mini mental state examination (MMSE), the Montreal cognitive assessment (MoCA), the cognitive abilities screening instrument (CASI), the trail making test (TMT), the repeatable battery for the assessment of neuropsychological status (RBANS), and the Stroop test. These tests are used specifically as validation mechanisms for assessment technologies, but many others have been created as well. The tests have been designed to assess functions such as visuospatial skills, language, judgement, recall, naming, attention, delayed memory, and the ability to follow simple commands.

Tests of mobility and motor function.

Another key component of functional health is physical mobility. Again, there are standardized tests that have been used for assessment in this context. These include the timed up and go (TUG), the finger-tapping test (FTT), the short physical performance battery (SPPB), the de Morton mobility index (DEMMI), which progresses through increasingly demanding mobility and balance tasks, and the functional independence measure (FIM), which is applied in rehabilitation settings to monitor recovery.

Self and informant-report questionnaires.

Questionnaire-based assessments are commonly used in the clinic as proxy measures for real-world functioning. Questionnaires are easy to administer and can provide a reasonable representation of real-world functional performance if the reporter has good insight and can answer questions without bias [20]. One such questionnaire is the Katz index of independence in activities of daily living. The Katz ADL measures functional status based on the ability of the subject to perform activities of daily living (ADLs) independently. Other questionnaires have been developed that are sensitive to mild cognitive impairment, such as the ADCS-ADL, the Functional Activities Questionnaire, the Direct Assessment of Functional Status (DAFS), and the Weintraub Activities of Daily Living Index Scale. The Clinical Global Impression scale, Functional Assessment Staging, and Global Deterioration Scale specifically quantify change in the ability to perform routine tasks independently.

Performance-based tests.

These tests measure functional capacity directly by having individuals solve real-world problems in lab or clinic settings. Examples include the UCSD performance-based skills assessment (UPSA), the revised observed tasks of daily living (OTDL-R), the medication management ability assessment (MMAA), and the night out task (NOT). These tests allow participants to perform activities that simulate complex everyday tasks.

Naturalistic observation tests.

These tests may be the most ecologically valid of the functional status measures, as they make use of more open-ended tasks and allow for the analysis of subtle behavioral changes and processes [20]. As an example, the multiple errands test (MET) evaluates the effect of executive function deficits on everyday functioning through real-world tasks performed in a community setting, such as shopping, collecting information, and navigating to a specific location. Similarly, the assessment of motor and process skills (AMPS), conducted in people’s own homes, is used to evaluate the impact of motor and process skills on the ability to complete complex ADLs. Other recent measures have been developed that allow subjects to be observed as they perform complex activities in their own homes. These include the ADL Profile [21] and the Day Out Task [22].

3. Computer-Assisted Assessment

A first step in automating functional health assessment is to use computers that are found in most homes. Because computers, televisions, and mobile devices are not only ubiquitous (an estimated 6.1 billion people will be using mobile apps by 2020 [23]) but also convenient, assessment can be performed more often, with more varied patient states, and at lower cost [24]. The American Psychological Association acknowledges the value of computerized psychological testing and has developed guidelines for the development and interpretation of such tests [25]. Here, technology can be used to provide telemedicine support for clinician-administered traditional assessment tests, to replicate standardized clinical tests, to enhance tests with additional digitally-collected features, or to create new assessment tests.

3.1. Technology-supported clinician-based assessment

One of the most straightforward uses of technology is to provide a digital format to deliver tests or enhance existing functional assessment tests. As an example, online delivery of standard test questionnaires makes it possible for patients to provide frequent feedback on self-perceived memory, mood, fatigue, activity level, falls, and performance on ADLs. Beauchet et al. [26] and Seelye et al. [27] found a strong relationship between self-administered questionnaires and physician-administered assessment of similar factors. However, in both cases the answers to provided questions lost integrity for individuals with cognitive impairment, and self-report error generally increases with the level of cognitive impairment [28]. Interestingly, factors that differentiated individuals with mild cognitive impairment (MCI) from cognitively intact participants were not the questionnaire answers but rather the context in which the questionnaire was completed. Over the course of one year MCI participants began to submit their questionnaires later in the day, to take more time to complete the questionnaires, and to request increased staff assistance [29].

While the online accessibility of questionnaires improves the convenience of assessment techniques [30], self-report methods can be problematic because they rely on retrospective recollection of symptoms and functioning. Ecological momentary assessment (EMA) holds promise as a method to capture more accurate self-report values [31] because questions can be delivered via a mobile device and answered by the subject based on how they are feeling at that particular moment [32].

A primary concern regarding self-administered tests is the lack of controlled conditions, because variation in the test administrator and environmental distractions can affect test results [33]. One possible compromise is to employ telemedicine, in which functional testing is remotely administered. Some researchers video conference with patients to conduct remote evaluation of tests such as the MoCA [14], [34]. In both studies, reliability was found to be good to excellent in all measures and correlated strongly with in-clinic assessment. On the other hand, subjects did get distracted by visuals and noises and technical difficulties raised frustration levels. Overall, participants expressed their preference for telemedicine because of the savings in time, energy, and resources. If successful, telemedicine approaches would allow clinicians to “see” many more patients with greater frequency, increasing the likelihood of early detection and effective treatment.

3.2. Technology-based traditional assessment

In the next automation step, technologies can replicate or enhance traditional assessments. Digital tests provide additional features for analysis and allow more patients to be assessed with greater frequency. Some of the digital tests appear almost identical to traditional validated cognition tests. For example, Tenório et al. [35] digitally reproduced the clock-drawing test and the letter/shape drawing test to facilitate bias-free testing. Mitsi et al. [36] created a phone app that quantifies motor function by replicating the finger tapping task with digital monitoring. When testing the app on 38 participants, they were able to identify Parkinson’s disease participants with an accuracy of 0.98. Shigemori et al. [37] created a smartphone app to replicate the MMSE with a correlation of r=0.73 to the clinician-administered MMSE for 28 older adults.

When technologies are employed, additional features can be extracted that provide novel insights on task performance. This can improve functional assessment overall, as researchers have demonstrated that traditional assessment techniques may not be sufficient on their own to perform an accurate assessment of functional health and are boosted with extra variables [38]. As an example, Ghosh et al. [39] designed smartphone versions of the Trail making test, Stroop test, and letter number sequencing. Correlation between conventional and computerized tests was as high as r=0.95 for 93 college students. The phone also provided an easy way of introducing additional distractors. Coelli et al. [40] enhanced the computerized Stroop test by pairing it with EEG data to monitor subjects’ cognitive engagement throughout the test. Shi et al. [41] paired the clock drawing test with a digital pen that facilitates quantifiable drawing precision scores, and demonstrated in their experiments a greater variance in clinician scores (due to subjectivity) than in the digital scores.

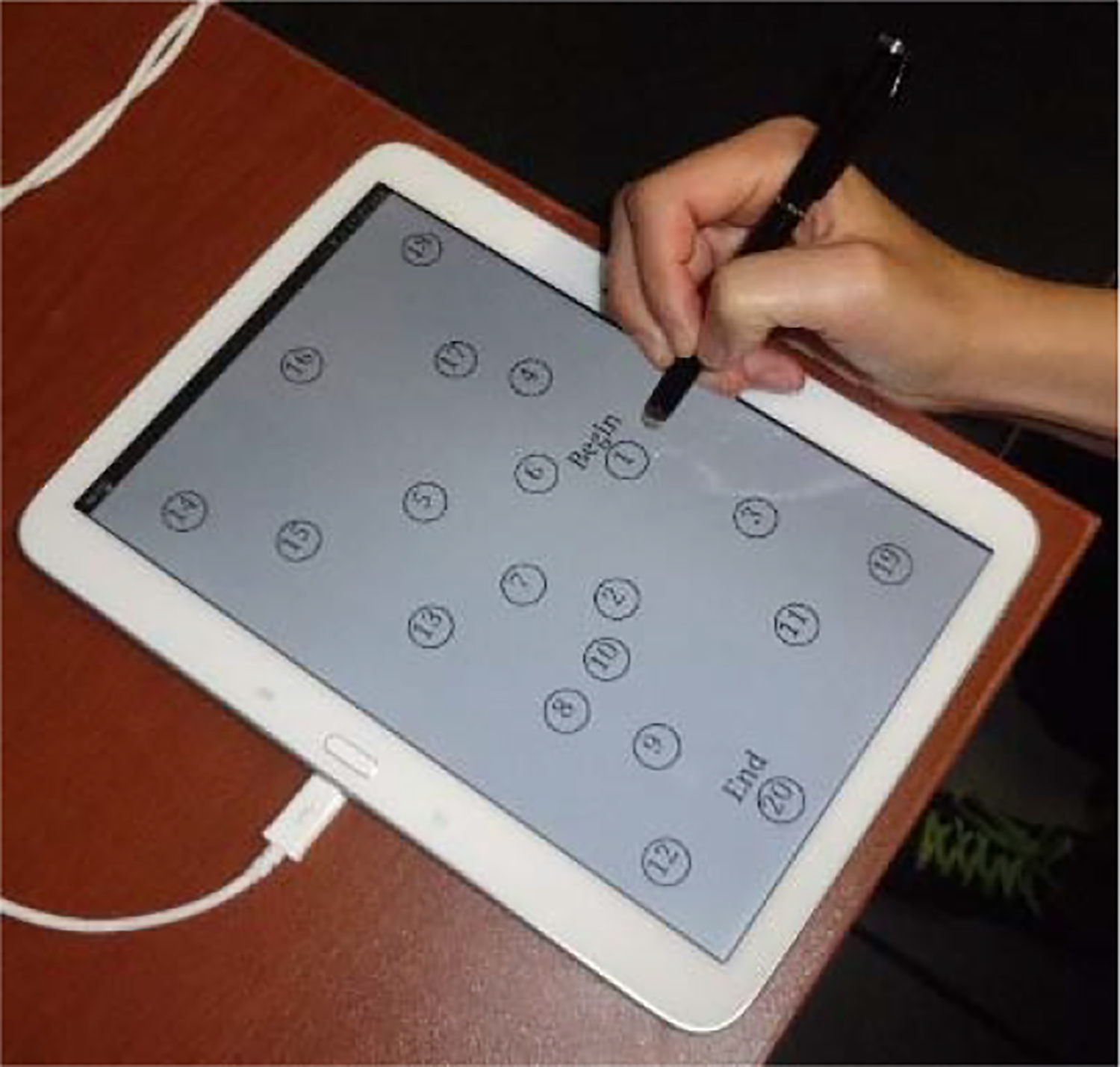

Similarly, Fellows et al. [42] constructed a tablet-based version of the Trails test called the dTMT (see Figure 1). In addition to the standard features of task completion time and number of errors, the dTMT records several digital measures of performance such as the average duration of stylus pauses and lifts, the drawing rate between and inside circles, the total number of pauses and lifts, and the stylus pressure. A study with 68 older adult participants revealed a moderate correlation with clinician-administered TMT Part A (r=0.530) and Part B (r=0.795). In addition, the digital measures of performance were used to isolate cognitive processes such as speeded processing, inhibitory processes, and working memory.

Figure 1.

Mobile TMT test [42].
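As a rough illustration of how digital measures such as pause and lift counts might be derived from raw stylus samples, consider the following Python sketch. The sample format, the names `Sample` and `stylus_features`, and both thresholds are assumptions made for illustration; they are not taken from the dTMT implementation in [42].

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Sample:
    t: float         # seconds since the test started
    x: float         # stylus position in pixels (hypothetical units)
    y: float
    pressure: float  # 0.0 is treated as "stylus lifted"


def stylus_features(samples, pause_speed=5.0, min_pause=0.1):
    """Summarize lifts and pauses in a stylus trace.

    pause_speed (pixels/s) and min_pause (s) are illustrative thresholds,
    not values reported for the dTMT.
    """
    runs = []  # consecutive intervals with the same state: [state, duration]
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            continue  # skip out-of-order or duplicate timestamps
        if prev.pressure <= 0:
            state = "lift"
        elif hypot(cur.x - prev.x, cur.y - prev.y) / dt < pause_speed:
            state = "pause"
        else:
            state = "draw"
        if runs and runs[-1][0] == state:
            runs[-1][1] += dt
        else:
            runs.append([state, dt])

    def mean(values):
        return sum(values) / len(values) if values else 0.0

    lifts = [d for s, d in runs if s == "lift"]
    pauses = [d for s, d in runs if s == "pause" and d >= min_pause]
    return {
        "n_lifts": len(lifts),
        "mean_lift_s": mean(lifts),
        "n_pauses": len(pauses),
        "mean_pause_s": mean(pauses),
        "mean_pressure": mean([s.pressure for s in samples if s.pressure > 0]),
    }
```

Grouping consecutive intervals into runs keeps a long hesitation from being counted as many short pauses, which is one plausible way to obtain both the count and the average duration from the same pass over the data.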

3.3. Technology-enabled new assessment tests

Finally, technology can also be used to create new digital assessment tests. The computerized format facilitates test delivery in game contexts that make the tests more enjoyable for individuals and also capture new variables. As an example, Lordthong et al. [43] created a set of smartphone exercises that are designed to assess perception, visual-motor control, and speed of response as well as executive functioning and problem solving. This phone app includes a TouchTap game where users match colors between a button and a display, a MindCal game to perform mental calculations, and a MatchTap game to match symbols and values. Similarly, Brouillette et al. [44] designed a phone-based color shape test whose results were significantly correlated with cognitive test scores from the MMSE and the TMT for 57 older adults.

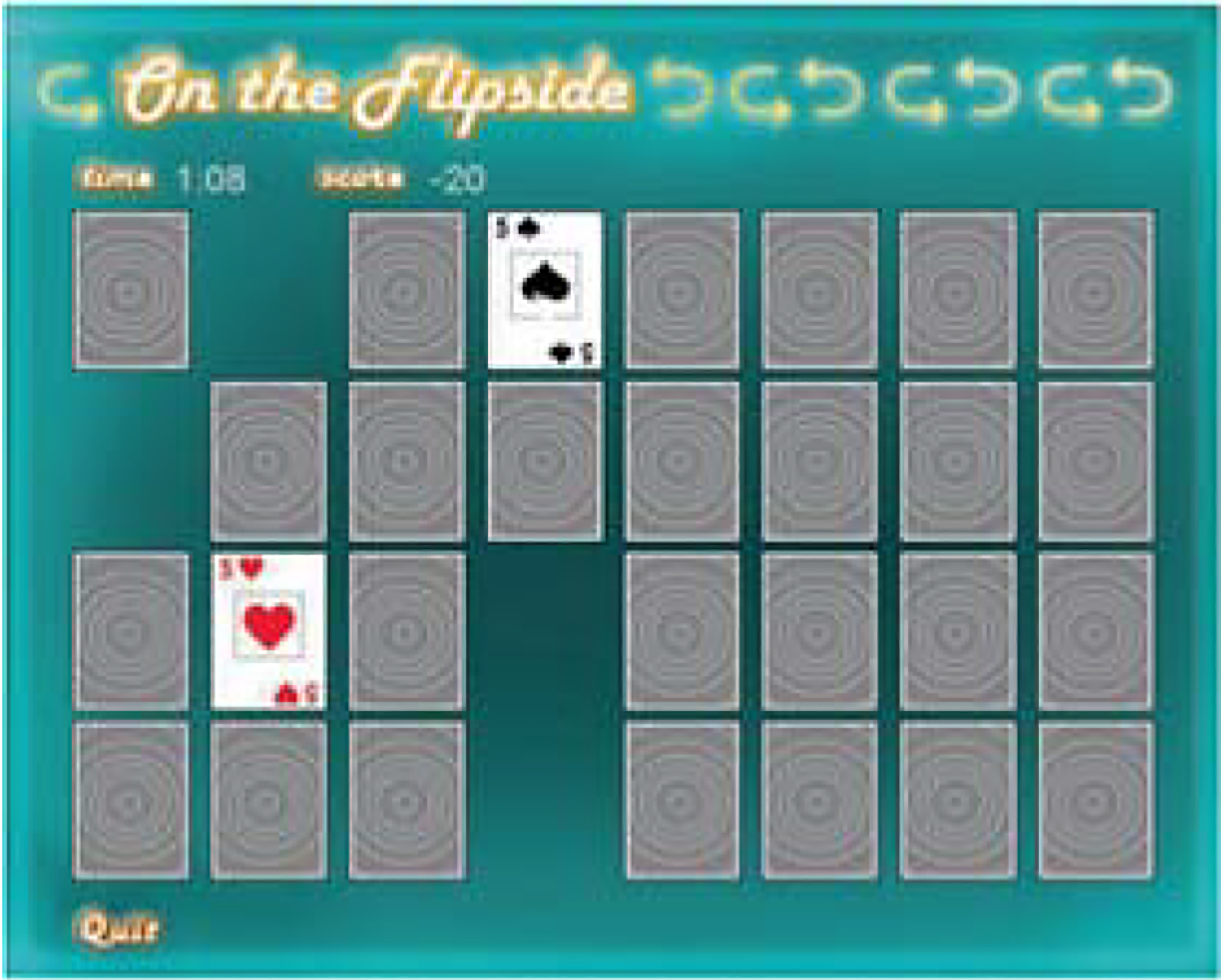

Instead of mimicking standardized assessment tests, other approaches to technology-enabled assessment mimic well-known computer games but also introduce new features from game performance that provide insights on cognitive abilities, one component of functional health. Sudoku and word search are two popular computer-based games. Joshi et al. [45] extracted a detailed set of performance metrics for these games and found that game performance correlated significantly with both MMSE and MoCA scores in a pilot study. Hagler et al. [46] designed a mouse-driven scavenger hunt with arbitrary target configurations that prevent the tasks from being learned too easily for older adults who play the game often. Another game, called “On the flipside” (see Figure 2), mimics a well-known memory card game [47] to assess user response time and working memory performance. These games offer another important role: motivating adults to get tested often by playing games.

Figure 2.

Card games motivate more frequent memory assessment [43].

Other groups have created new, innovative platforms for assessment-based computer games. In one case, Czaja et al. [48] created a computer-based graphics environment in which participants perform realistic tasks that require money management; performance correlated (r=0.50) with the UPSA functional capacity measure for 77 schizophrenia patients. They also found that performance on simulated financial tasks and doctor’s visits was related to TMT scores for 62 older adults with amnestic MCI [49]. Two other games use the Kinect motion sensing device. Liu et al. [50] created a sport-based set of cognitive function tests to assess the same cognitive components as the MMSE but in a sport game setting. Users answer questions and perform tasks that assess short-term memory, language ability, and attention detection. Figure 3 shows a screenshot of the game in which users must remember physical movements and perform the movements in the correct order. Hou et al. [51] also developed a Kinect-based set of games to perform cognitive function assessment but drew on nostalgic therapy elements to engage older adults and help them remember past experiences. This game-based assessment yielded an 86.4% accuracy in identifying individuals with MCI and those with moderate or severe cognitive impairment from among a sample of 59 participants.

Figure 3.

Kinect game-based assessment [50].

Instead of designing new environments, other researchers analyze existing computer functions for a less intrusive, less time-demanding type of assessment. For example, Austin et al. [52] tracked search terms used when performing Internet searches. Based on searches performed by 42 participants over a 6-month period, they found that individuals with higher cognitive function (based on traditional tests) used more unique terms per search. In another study, Vizer and Sears [53] analyzed linguistic terms as well as keystroke characteristics to perform early assessment of MCI with an accuracy of 77.1%.
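To make the "unique terms per search" measure concrete, the following Python sketch computes one plausible reading of it: the number of distinct terms across a participant's queries divided by the number of queries. The function name and the whitespace tokenization are assumptions for illustration and may differ from the definition used in [52].

```python
def unique_terms_per_search(queries):
    """Distinct terms across all queries, divided by the number of queries.

    Tokenization is a plain lowercase whitespace split, which is an
    illustrative simplification.
    """
    non_empty = [q for q in queries if q.strip()]
    if not non_empty:
        return 0.0
    vocabulary = set()
    for query in non_empty:
        vocabulary.update(query.lower().split())
    return len(vocabulary) / len(non_empty)
```

Under this reading, a participant who repeats the same few words across many searches scores lower than one who introduces new terms with each search.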

Cognitive and functional health assessment ideally allows subjects to interact with the real world rather than just a digital environment. Several groups have designed technology-based assessments that combine the two. To assess cognitive health, Li et al. [54] and Kwon et al. [55] use cubes with embedded sensors to perform tactile versions of the assessments found in traditional mechanisms such as the MoCA. The participant groups cubes based on commonalities corresponding to the abstraction component of the MoCA scale.

3.4. Virtual reality tests

Virtual reality (VR) technology provides an option for immersing subjects into real-world situations to observe how they handle complex tasks [56]. VR systems facilitate three-dimensional presentation and stimulus of dynamic environments, especially for assessment scenarios that would be challenging to deliver in real-world environments or with 2D computer interfaces. As an example, Arias et al. [57] carried out a finger tapping test in an immersive VR environment to study control of movement in the context of different real-world situations. Based on a study with 34 subjects, results were consistent with the real-world tapping results as the Parkinson’s disease participants exhibited larger variability in tapping frequency than the other groups. In another movement-based assessment, Lugo et al. [58] created a VR scene that engages the subject in real-life scenarios. This project used a motion tracking system to evaluate rest and postural tremor while Parkinson’s disease patients played games in the simulator. Both tremor amplitude and time needed to complete a task correlated (r=0.45) with UPDRS scores.

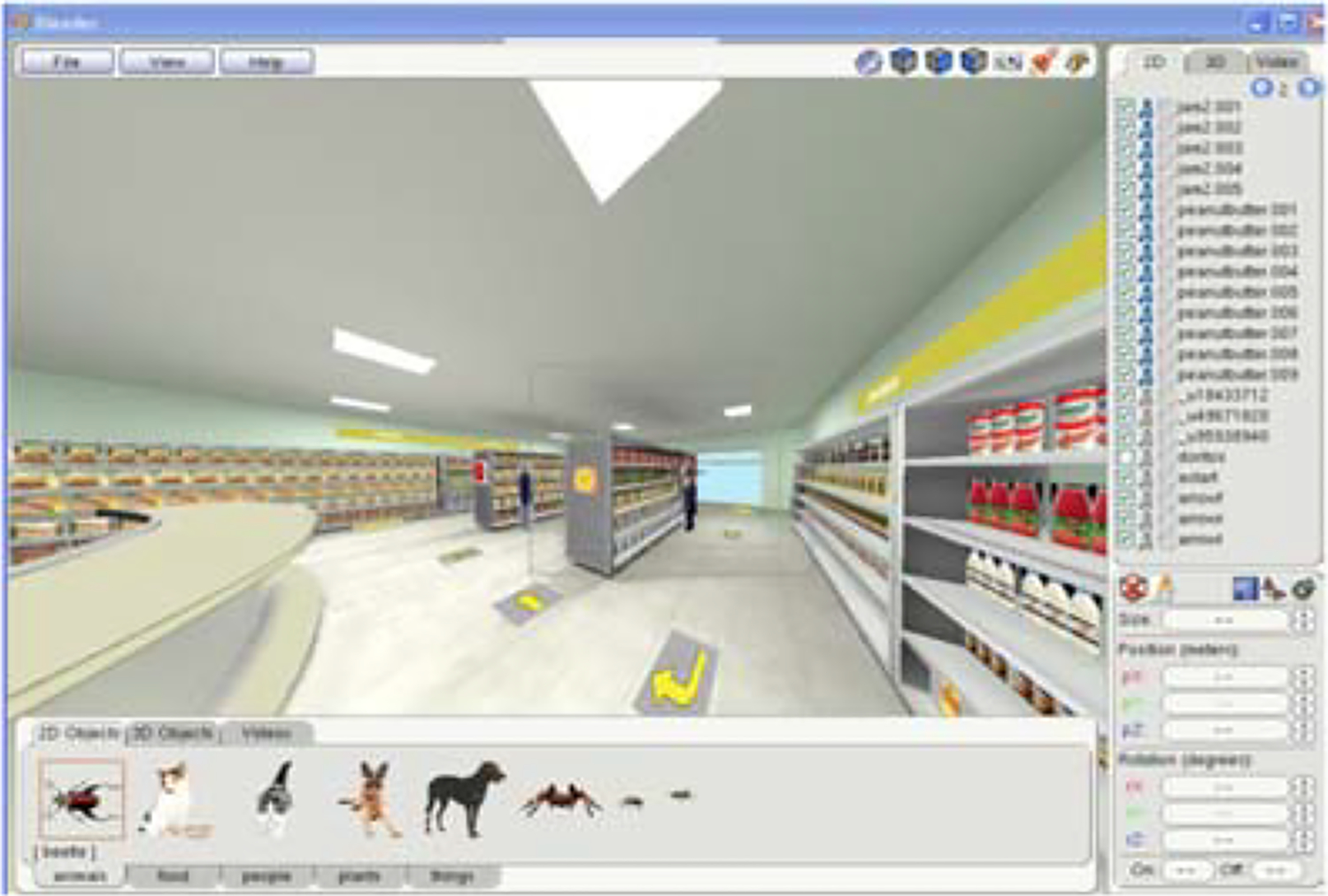

When VR systems incorporate sophisticated graphics modeling, the assessment scenarios can become even more realistic (see Figure 4 for an example). Researchers have used a simulated shopping environment that enables users to perform shopping errands [59]–[62]. This scenario approximates the Multiple Errands Test (MET), in which subjects buy products from a store while adhering to specified rules such as do not visit any aisle more than once, do not buy more than two items in each category, and do not spend more than a specified amount of money. Raspelli et al. [60] used this environment to compare performance of post-stroke subjects to healthy controls. They observed a strong correlation (r=0.98) between the virtual MET and the traditional assessment scores for 15 subjects. They also observed a statistically significant difference in performance between younger adults, healthy older adults, and post-stroke older adults in the categories of task completion time, inefficiencies, and rule breaks.

Figure 4.

VR systems use Blender to generate a simulated shopping environment.
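The three example MET rules described above lend themselves to automatic scoring once a virtual shopping session is logged. The Python sketch below counts rule breaks from such a log. The log format (a list of aisle identifiers and a list of category/price pairs) and the function name `met_rule_breaks` are hypothetical and are not drawn from the systems in [59]–[62].

```python
from collections import Counter


def met_rule_breaks(aisle_visits, purchases, budget, max_per_category=2):
    """Return a list of rule breaks for one simulated shopping session.

    aisle_visits: aisle identifiers in the order they were entered.
    purchases: (category, price) pairs for each item bought.
    """
    breaks = []
    # Rule 1: do not visit any aisle more than once.
    for aisle, visits in Counter(aisle_visits).items():
        if visits > 1:
            breaks.append(f"aisle {aisle} visited {visits} times")
    # Rule 2: do not buy more than max_per_category items in a category.
    for category, count in Counter(c for c, _ in purchases).items():
        if count > max_per_category:
            breaks.append(f"{count} items bought in category {category}")
    # Rule 3: do not spend more than the specified amount.
    total = sum(price for _, price in purchases)
    if total > budget:
        breaks.append(f"spent {total:.2f}, budget was {budget:.2f}")
    return breaks
```

The length of the returned list gives a rule-break count comparable across participants, while the messages preserve which rule was violated for later inspection.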

Table I summarizes approaches to computer-assisted assessment techniques. A limitation of these technologies is that individuals take time out of their daily routines to perform this assessment. Furthermore, assessment results vary in terms of who administers the task and the level of task realism. If tests are self-administered, then the likelihood for ongoing engagement must be considered. Additional research is needed to determine whether VR tasks remove external stimuli that may affect cognitive performance [64]. Next, we examine ways in which sensors can be employed to automate assessments that decrease the amount of daily routine interruption and potentially increase ecological validity of the assessment.

Table I.

Functional health assessment tests

| Technology | Domain | Autonomy | Tasks | Refs |
|---|---|---|---|---|
| Online questionnaires, EMA | cognition, function, mood | self-report | survey questions | [26], [27], [29], [31], [32], [63] |
| telemedicine | cognition, function, motor | remote clinician | traditional assessment tasks | [14], [34] |
| digital reproduction of clinical test | cognition, motor | self-administer | MMSE, clock/letter/shape drawing, finger tapping | [35]–[37] |
| digitally enhanced test, EEG, digital pen | cognition, motor | self-administer | TMT, Stroop, sequencing, clock drawing | [39]–[44] |
| digital test, computer, smartphone, Kinect | cognition, motor | self-administer | finger tap, card match, sudoku, word search, scavenger hunt | [45]–[47], [50], [51] |
| computer | cognition | self-administer | Internet search, text | [52], [53] |
| sensor-embedded cubes | cognition, motor | self-administer | sequence match, lexical match | [54], [55] |
| virtual reality | cognition, motor | self-administer | finger tap, blocks, MET | [57]–[62] |

4. Wearable Sensor-Assisted Assessment

With the maturing of technologies in mobile computing and pervasive computing, continuous human movement can be collected and used to enhance traditional functional assessment. Individuals can wear or carry sensors that collect data about their gestures, movement, location, interactions, and actions. Many of these sensors are now coupled with computers and are found on smart phones or smart watches that are routinely carried or worn by individuals as they perform their daily activities. Here we examine how researchers have used wearable sensors to perform functional assessment.

4.1. Wearable sensors

Sensors used in functional health assessment that are commonly found in clothing, on the body, carried, or worn by persons typically monitor various types of movement. As such, the most common wearable sensor is an accelerometer. Acceleration changes can indicate a movement beginning, ending or direction changing. This sensor measures acceleration along the x, y, and z axes. Motion tracking is further enhanced by a gyroscope sensor, which measures rotation around the three axes, commonly known as pitch, yaw, and roll.
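As a minimal sketch of how acceleration changes can mark the beginning and end of a movement, the Python example below combines the three axes into a single magnitude and flags stretches where that magnitude departs from gravity. The threshold, the assumption that samples are in m/s², and the function name `movement_intervals` are illustrative choices rather than a method from the reviewed studies.

```python
from math import sqrt

GRAVITY = 9.81  # m/s^2; a device at rest measures roughly this magnitude


def movement_intervals(samples, threshold=1.0):
    """Find index ranges where the acceleration magnitude deviates from gravity.

    samples: (ax, ay, az) tuples in m/s^2.
    Returns (start, end) index pairs with end exclusive.
    """
    intervals = []
    start = None
    for i, (ax, ay, az) in enumerate(samples):
        magnitude = sqrt(ax * ax + ay * ay + az * az)
        moving = abs(magnitude - GRAVITY) > threshold
        if moving and start is None:
            start = i                     # movement begins
        elif not moving and start is not None:
            intervals.append((start, i))  # movement ends
            start = None
    if start is not None:
        intervals.append((start, len(samples)))
    return intervals
```

Using the magnitude makes the check independent of how the device is oriented on the body, at the cost of discarding direction information that a gyroscope or per-axis analysis would retain.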

Another sensor found on many mobile devices is a magnetometer, which measures the strength of the magnetic field in three dimensions. A magnetometer is valuable for providing orientation of the user and for detecting and locating metallic objects within its sensing radius. The location of the sensor (and the person wearing it) can then be determined based on their proximity to these detected objects. To obtain additional location information, mobile devices commonly determine a device’s latitude, longitude, and altitude using a combination of GPS, WiFi, and GSM sources, depending on whether the device is inside or outside of a building.

Contingent on the mobile device that is used, additional information can be collected. Cameras and microphones provide a dense source of data indicative of the state of the user and surrounding environment. Use of other apps on the device, including phone calls and texting, can also be captured. Smart watches offer LEDs and photodiodes that utilize light to monitor heart rate by detecting correlated changes in blood flow. If additional hardware is attached to the mobile device, other health parameters can be monitored. These include glucose meters, blood pressure monitors, and hardware that senses pulse, respiration, and body temperature.

When sensors are placed on, in, or around a physical body then data can be collected using a Body Area Network (BAN). Generally, body area networks are wireless personal area networks that act as gateways working together with small sensors and control units to collect data. BANs can utilize implanted sensors as well as sensors that are near to the body such as those embedded in clothes [65] or shoes [66]. While many of the wearable sensor technologies focus on one component such as cognition, motor, or mood, these components all clearly contribute to and correlate with overall functional health and thus play an important role for overall functional performance assessment.

4.2. Physiological monitoring with wearable sensors

A particularly appealing feature of many wearable sensors is that they can be woven into everyday life to support functional health assessment in ecologically valid settings. When smart fibers and interactive textiles are used, functional assessment is literally woven into the clothes we wear. In some cases, electrodes are made from silver-based conductive yarns that use body sweat to improve conductivity and signal quality [67]. Paradiso et al. [65] show that monitoring such physiological states via textile sensors can enable measuring activity level, respiratory rate, and sleep quality to determine a person’s mood.

4.3. Motor function monitoring with wearable sensors

Wearable sensors offer an especially good fit for monitoring various aspects of motor function. This is because sensors located on the body are able to capture detailed motion footprints of body movement in various settings and postures. Throughout the literature, wearable sensors are typically used to monitor and model many types of ambulation, gestures, and movement activities; thus, they are naturally supportive of motor function assessment.

One key aspect of motor function that is monitored with wearable sensors is gait [68]. A special-purpose sensor that can be used for this task is a shoe sensor. As Ramirez-Bautista et al. point out [66], the ability to extract a large number of gait features (e.g., pressure mean, peak, center, displacement, spatiotemporal movement, ground reaction forces) makes the shoe highly sensitive to multiple types of walking patterns. Furthermore, Mariani et al. [69] validated the accuracy of stride features using shoe sensors in comparison with motion capture analysis. The derived patterns in turn allow systems to diagnose and assist with movement-related conditions including insensible feet, Parkinson’s disease (PD), Huntington’s disease (HD), Amyotrophic Lateral Sclerosis (ALS), peripheral neuropathy, frailty, diabetic feet, and recovery from injuries. Saadeh et al. [7] demonstrated the ability to differentiate PD, HD, ALS, and healthy subjects from among 64 participants with an accuracy of 94% based on stride time, fluctuation, and autocorrelation delay. Ayena et al. [70] also used shoe sensors to monitor motor control. In this case, the researchers employed shoe sensors during the One-Leg Standing (OLS) test to assess the risk of falling. They evaluated the technology in a study with 23 subjects and found that higher severity of balance disorder was associated with lower OLS scores, indicating more mobility deficits.
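Stride-based features of the kind mentioned above can be approximated from heel-strike timestamps. The Python sketch below computes mean stride time, the coefficient of variation as a simple measure of fluctuation, and the autocorrelation of the stride series at a chosen lag. These are generic definitions chosen for illustration; the function name `stride_features` is hypothetical, and the exact feature definitions used in [7] may differ.

```python
from statistics import mean, pstdev


def stride_features(heel_strikes, lag=1):
    """Summarize stride timing from same-foot heel-strike times (seconds)."""
    strides = [b - a for a, b in zip(heel_strikes, heel_strikes[1:])]
    if len(strides) < 2:
        raise ValueError("need at least three heel strikes")
    avg = mean(strides)
    centered = [s - avg for s in strides]
    energy = sum(c * c for c in centered)
    # Normalized autocorrelation of the stride series at the given lag.
    autocorr = (
        sum(a * b for a, b in zip(centered, centered[lag:])) / energy
        if energy
        else 0.0
    )
    return {
        "mean_stride_s": avg,
        "cv": pstdev(strides) / avg,  # fluctuation relative to the mean
        "autocorr": autocorr,
    }
```

A strongly negative lag-1 value indicates strides that alternate between long and short, while values near zero suggest no stride-to-stride structure.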

No single wearable sensor type or placement is best or sufficient for monitoring all aspects of cognitive and motor functioning. Wang et al. [71] have also used sensors to monitor and assess balance; in this case, subjects wear inertial sensors on the waist and perform balance tasks both with eyes open and with eyes closed. The researchers collected sway speed and direction for 41 subjects and were able to predict MMSE scores (with a range of 0 to 30) from balance parameters with an average error of 1.43±1.08. Mancini et al. [72] also used wearable sensors to differentiate recurrent fallers based on gait and turning features. Based on a study with 35 subjects, they found that recurrent fallers had distinguishing characteristics including longer mean turn duration, slower mean turn peak speed, and more steps per turn.

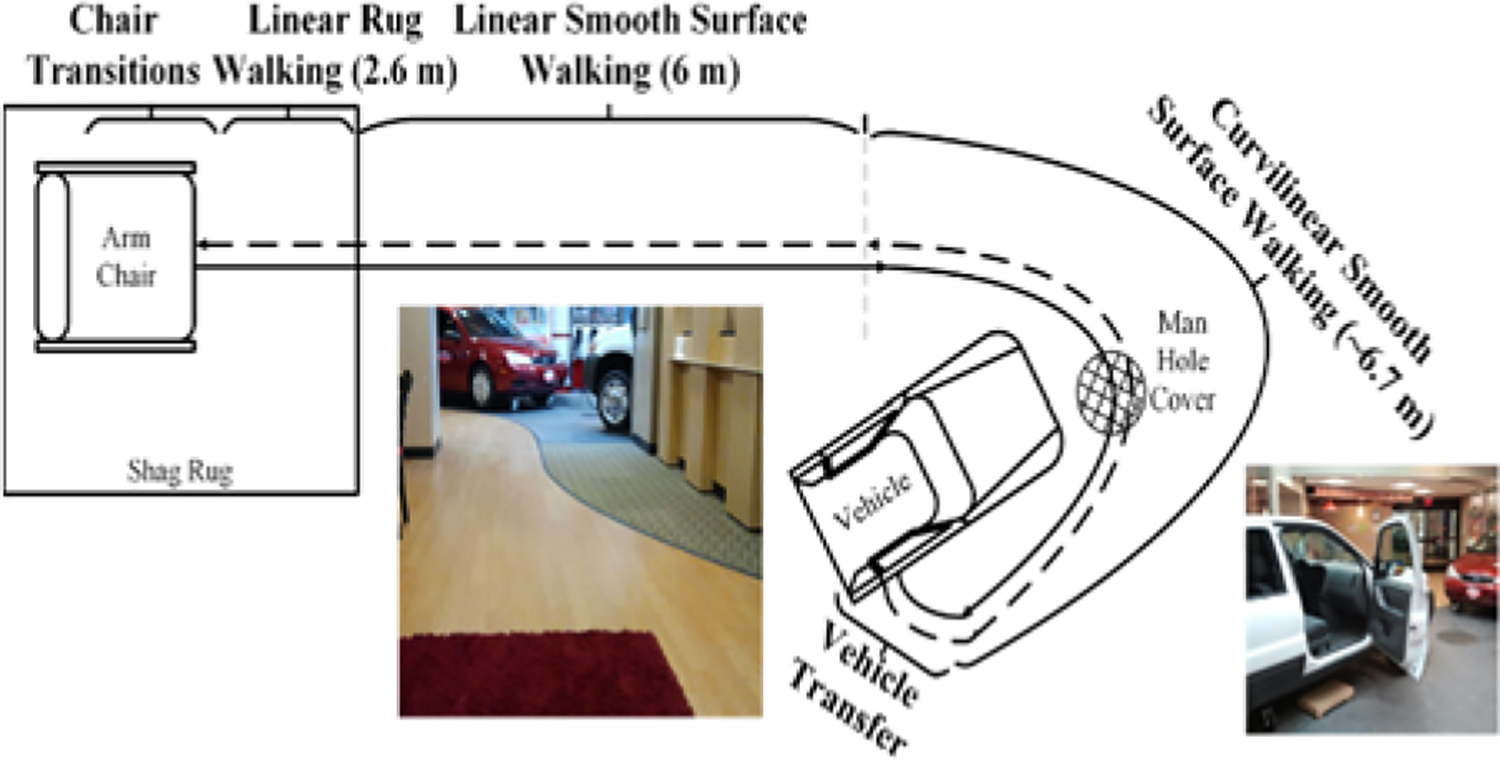

In the same way that traditional cognitive tests can now be replicated and enhanced with digital technology, so motor tests can be replicated and enhanced with wearable sensors. The use of accelerometers for assessing gait has been tested for validation and reliability in a study with 24 participants [73]. Building on this foundation, one notable example of an accelerometer-enhanced clinical test is the Timed Up and Go (TUG) test. The TUG test is commonly used in rehabilitation settings, and Sprint et al. [74] set up a more complex version of the TUG, called an ambulation circuit (AC), at an inpatient rehabilitation hospital. The AC, shown in Figure 5, also includes transitions to different types of floor surfaces, turns, vehicle transfers, and walking while answering questions. Based on 20 rehabilitation patients, wearable sensor measures from the AC correlated strongly (r=.97) with discharge functional independence measures (FIM) using leave-one-subject-out testing. This same group also collected and evaluated accelerometer-based data over two weeks of inpatient therapy to monitor rehabilitation rates [75]. In the same way that walking performance varied during the AC while answering questions, so also Howcroft et al. [76] found that 39 variables extracted from shoe and on-body accelerometers varied significantly as cognitive load increased.

Figure 5.

The ambulation circuit (AC) [74].
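To make the leave-one-subject-out evaluation mentioned above concrete, the following is a minimal sketch and not the pipeline used in [74]. It assumes a single sensor-derived feature per subject and a simple least-squares line as the predictor; the feature values, scores, and function names are hypothetical and chosen only for illustration.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return slope, my - slope * mx

def leave_one_subject_out(features, targets):
    """Predict each subject's score from a model trained on all other subjects."""
    predictions = []
    for i in range(len(features)):
        train_x = features[:i] + features[i + 1:]
        train_y = targets[:i] + targets[i + 1:]
        slope, intercept = fit_line(train_x, train_y)
        predictions.append(slope * features[i] + intercept)
    return predictions

# Hypothetical data: one gait feature (m/s) and one clinical score per subject.
walk_speed = [0.42, 0.55, 0.61, 0.70, 0.78, 0.85, 0.93, 1.02]
clinical_score = [52, 60, 63, 71, 74, 82, 85, 93]

predicted = leave_one_subject_out(walk_speed, clinical_score)
r = pearson_r(predicted, clinical_score)
print(f"Leave-one-subject-out correlation: r = {r:.2f}")
```

Because each prediction comes from a model that never saw the held-out subject, the reported correlation reflects how well the sensor measures generalize to a new patient rather than how well they fit the training data.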

The link between mobility and cognition has been further investigated by Greene and Kenny [77], who used body-worn accelerometers during a TUG test to monitor baseline and changes as a way of predicting cognitive decline. In a study with 189 older adults, they found that sensor-based parameters could be used to predict cognitive decline (based on MMSE scores) with an accuracy of 75.94% for participants who were cognitively intact at baseline. Verghese et al. [78] tested 427 subjects using gait parameters extracted from wearable sensors. They found that gait rhythm change was associated with memory decline, pace change was associated with executive function decline, and pace factor predicted the risk of developing vascular dementia. Furthermore, Lord et al. [79] found an association between mild depressive symptoms and gait features in Parkinson’s disease for 206 subjects.

Because of the ubiquitous availability of mobile sensors, these technologies can be used to monitor numerous additional components of everyday function. For example, wandering behavior can be detected with wearable sensors [80]. Lin et al. [80] discovered rhythmical repetition of events leading to wandering that serves as a predictor of wandering and associated functional impairments. Cella et al. [81] and Difrancesco et al. [82] use mobile devices to monitor socialization and other daily activity patterns that predict functioning and disease symptoms for schizophrenia patients. O’Brien et al. [8] also monitored time and distance traveled outside the home in order to quantify community mobility after stroke.

4.3. Mood monitoring with wearable sensors

A particularly appealing feature of wearable sensors is that they can be woven into everyday life to support functional health assessment in ecologically valid settings. As an example, Boukhechba et al. [83] were able to predict social anxiety levels with an accuracy of 0.85 for 54 subjects using information that included GPS-based visited location types as well as text message and phone call parameters. Highlighting the diversity of information that can be used for mood detection, Quiroz et al. [84] infer emotion from walking patterns using phone-based accelerometer data as well as heart rate-based physiological data. They inferred emotional state for 50 subjects with an accuracy of 0.80.

Wearable sensor-based functional health assessment offers tremendous advantages because many of a person’s movements, activities, social interactions, and physiological parameters can be monitored continuously. However, employing wearable sensors does require effort on the part of the user. The sensor must be worn continuously to be effective and in many cases needs to be positioned very carefully on the body. Collecting, storing, processing, and transmitting sensor data from wearable sensors uses energy; as a result, these sensors require frequent charging, and data collection may be interrupted while they charge. An alternative solution is to make use of sensors that are placed in a person’s environment rather than on the body, and we focus on these technologies in the next section.

5. Ambient Sensor-Assisted Assessment

One of the more ecologically valid sources of data for functional health assessment is a person’s own everyday settings. Collection of such data can be accomplished by embedding sensors into physical environments, including homes, workplaces, shopping areas, and doctor’s offices, transforming them into smart environments [85]. Sensors embedded in the environment, or ambient sensors, are valuable because they passively provide data without requiring individuals to comply with rules regarding wearing or carrying sensors in prescribed manners. Because they continuously collect data, there is no need for a person to interrupt their day to perform an assessment task: their normal daily routine is the assessment task. In this section we review approaches to using these sensors for functional health assessment.

5.1. Ambient sensors

A common ambient sensor for activity monitoring is the passive infrared (PIR) sensor. PIR sensors, or motion sensors, detect infrared radiation that is emitted by objects in their field of view. Because a PIR sensor is sensitive to heat-based movement, it operates in dim lighting conditions. A PIR sensor will sense movement from any object that generates heat, even if the origin is inorganic. As a result, the motion sensor may generate messages from a printer, a pet, or even from a home heater.

Another popular ambient sensor is a magnetic door sensor. A magnetic contact switch sensor consists of two components: a reed switch and a magnet, typically installed on a door and its frame. When the door is closed, the electric circuit is complete, thus changing the state of the sensor. When the magnet is moved by opening the door, the spring snaps the switch back into the open position, also causing a change in the state of the sensor. This feature is useful for detecting whether doors, windows, drawers, or cabinets are open or shut. Additional sensors can be placed in environments to measure ambient temperature, lighting, and humidity.

Beyond these standard ambient sensors, additional sensors can be utilized throughout an environment to capture parameters of particular interest. For example, vibration sensors can be attached to specific items in order to monitor when they are moved or used. Pressure sensors can be placed under rugs, mattresses, or chair cushions to monitor resident movement. Meters can also be installed to monitor use of individual appliances or consumption of electricity, water, or gas.
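As an illustration of how the state changes reported by a magnetic contact switch might be consumed in software, the sketch below pairs each door-open event with the next door-closed event to recover the intervals during which a door stood open. The `SensorEvent` record, the sensor identifiers, and the `"OPEN"`/`"CLOSED"` state strings are hypothetical; real smart home platforms define their own message formats.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass(frozen=True)
class SensorEvent:
    time: datetime
    sensor_id: str  # hypothetical identifier, e.g. "front_door"
    state: str      # "OPEN" or "CLOSED" for a contact switch

def open_intervals(events, sensor_id):
    """Pair each OPEN event with the next CLOSED event for one contact sensor."""
    intervals = []
    opened_at = None
    for ev in sorted(events, key=lambda e: e.time):
        if ev.sensor_id != sensor_id:
            continue
        if ev.state == "OPEN" and opened_at is None:
            opened_at = ev.time
        elif ev.state == "CLOSED" and opened_at is not None:
            intervals.append((opened_at, ev.time))
            opened_at = None
    return intervals

# Hypothetical event stream from two contact sensors.
t0 = datetime(2018, 1, 1, 8, 0, 0)
events = [
    SensorEvent(t0, "front_door", "OPEN"),
    SensorEvent(t0 + timedelta(seconds=30), "front_door", "CLOSED"),
    SensorEvent(t0 + timedelta(seconds=40), "medicine_cabinet", "OPEN"),
    SensorEvent(t0 + timedelta(seconds=55), "medicine_cabinet", "CLOSED"),
]
for start, end in open_intervals(events, "medicine_cabinet"):
    print("cabinet open for", (end - start).total_seconds(), "seconds")
```

An unmatched OPEN event (for example, a door left open at the end of the log) is simply left out of the result in this sketch; a deployed system would need an explicit policy for such cases.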

5.2. Ambient sensor-based formal assessment

Many researchers use ambient sensors to imitate standard assessments in home settings. As Spooner and Pachana point out [86], determining the extent to which a medical procedure or intervention affects performance of everyday tasks can provide useful information that guides selection of treatment options, and tests designed with ecological validity are more effective than traditional tests at predicting everyday functioning. To this end, researchers have used ambient sensors to monitor and quantify performance of everyday tasks as an indicator of functional health. In one such study, Ul Alam et al. [87] equipped 17 older adult subjects with physiological sensors and equipped a residence with motion and object sensors. Participants performed scripted versions of 13 activities. Features extracted from ambient and physiological sensors were found to significantly correlate (as high as r=.96) with traditional neuropsychological test scores.

Dawadi et al. [22] also monitored individuals as they performed scripted activities in a smart home equipped with motion, door, temperature, light, item, water, and burner sensors. In this study, 179 subjects (healthy, MCI, or dementia) were asked to perform the Day Out Task, which contained 8 subtasks to be multi-tasked. Machine learning techniques mapped sensor-based features onto cognitive diagnoses, which were inferred with an AUC value of 0.94. This group also predicted diagnoses of Parkinson’s disease, PD with MCI, or healthy for 84 subjects with an AUC value of 0.96 using sensor-based features [88]. Interestingly, the wearable sensor data and movement parameters provided more sensitive features for the participants with PD while the ambient sensor data provided more sensitive features for the participants with MCI. This analysis highlights the need for analyzing multiple aspects of everyday life and behavior to understand and assess functional health.
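Several of the studies reviewed here report the area under the ROC curve (AUC). As a reminder of what this number measures, the sketch below computes AUC directly from its pairwise definition: the probability that a randomly chosen positive case receives a higher classifier score than a randomly chosen negative case, with ties counted as one half. The scores and labels are hypothetical and are not taken from any cited study.

```python
def auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(positives) * len(negatives))

# Hypothetical classifier scores (higher = more likely impaired) and true labels.
scores = [0.9, 0.7, 0.4, 0.6, 0.2]
labels = [1, 1, 1, 0, 0]
print(f"AUC = {auc(scores, labels):.3f}")
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which is why values such as 0.94 and 0.96 indicate strong discrimination between diagnostic groups.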

5.3. Ambient sensor-based detection of specific states

In this section we examine research that focuses on technologies utilizing ambient sensors to analyze individual states or conditions, each of which plays an important role in functional health.

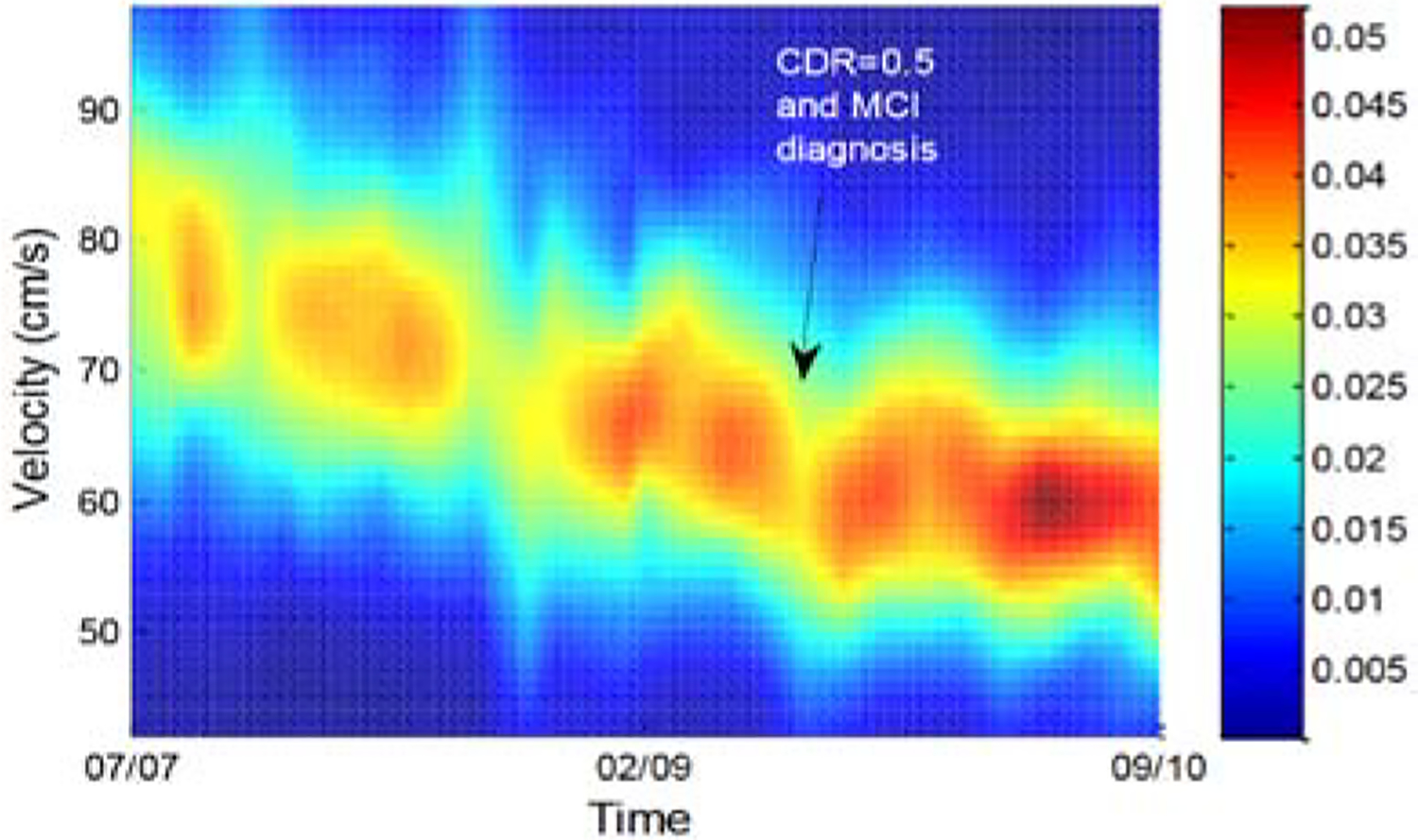

As an example, gait velocity has been shown to be a predictor of both cognitive and physical function [89]. Aicha et al. [90] and Austin et al. [91] demonstrated how to estimate gait velocity from ambient sensor data by calculating walking durations for each path the residents followed throughout the home. These researchers were able to detect changes in gait velocity that were consistent with changes in health status. A plot showing the density of gait velocities over time along with the time at which the subject was diagnosed with MCI is shown in Figure 6.

Figure 6.

Gait velocity probability density function with clinical dementia rating scores (CDR) transitions [91].
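The idea of estimating gait velocity from walking durations along known paths can be sketched as follows. This is a simplified illustration rather than the method of [90] or [91]: it assumes the distance between adjacent motion sensors has been measured in advance, and it discards transits that take too long to be a continuous walk. The sensor names, distances, and the 10-second cutoff are hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical measured distances (meters) between adjacent hallway motion sensors.
PATH_LENGTH_M = {("hall_a", "hall_b"): 2.4, ("hall_b", "hall_c"): 1.8}

def walking_speeds(firings, max_transit_s=10.0):
    """Estimate speed (m/s) for each consecutive pair of sensor firings.

    firings: time-ordered list of (datetime, sensor_id) tuples.
    Pairs with no known distance or an implausibly long transit are skipped.
    """
    speeds = []
    for (t0, s0), (t1, s1) in zip(firings, firings[1:]):
        distance = PATH_LENGTH_M.get((s0, s1), PATH_LENGTH_M.get((s1, s0)))
        elapsed = (t1 - t0).total_seconds()
        if distance is not None and 0 < elapsed <= max_transit_s:
            speeds.append(distance / elapsed)
    return speeds

start = datetime(2018, 3, 1, 9, 0, 0)
firings = [
    (start, "hall_a"),
    (start + timedelta(seconds=3), "hall_b"),
    (start + timedelta(seconds=5), "hall_c"),
    (start + timedelta(minutes=20), "hall_b"),  # long pause: not a continuous walk
]
speeds = walking_speeds(firings)
print([round(v, 2) for v in speeds])
```

Collecting such estimates over weeks or months yields a distribution of in-home walking speeds whose shift over time can then be compared against clinical assessments.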

To better understand daily contexts that affect disease symptoms, Darnall et al. utilized smart home sensors to analyze the contextual conditions in which patients would enter dyskinesia “on” states [92]. To understand the effect of socialization on emotional health, Aicha et al. [93] and Austin et al. [94] analyzed motion sensor data together with phone data and found, for 16 older adults, that loneliness was significantly associated with minimal sensor-detected socialization.

The relationship between these sensor-detected states and cognition has been validated in the literature. For example, Roggen et al. [95] as well as Hellmers et al. [96] quantitatively determined that features tightly linked to cognitive health included time spent in different areas of the home, transitions between home areas, and times of day residents were in different areas of the home. These researchers discovered that changes in these values over time were of particular importance. Akl et al. [97] found that daily variations in room occupancy predicted MCI with an AUC of 0.72 using data for 68 subjects over an average of 3 years. Akl et al. also found that walking speed could be used to predict MCI with an AUC of 0.97.
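Features such as time spent in each room and its day-to-day variation can be derived from a stream of motion sensor events. The sketch below is one simple way to do this and is not taken from the cited studies: it assumes that a resident stays in a room until the next event fires, sums that dwell time per room per day, and summarizes variability with the coefficient of variation. Room names and timestamps are hypothetical.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean, pstdev

def daily_room_seconds(events):
    """Sum dwell time per room per day.

    events: time-ordered list of (datetime, room) tuples. The resident is
    assumed to remain in a room until the next event on the same day.
    """
    totals = defaultdict(lambda: defaultdict(float))  # day -> room -> seconds
    for (t0, room), (t1, _next_room) in zip(events, events[1:]):
        if t0.date() == t1.date():
            totals[t0.date()][room] += (t1 - t0).total_seconds()
    return totals

def occupancy_variability(totals, room):
    """Coefficient of variation of daily time spent in one room."""
    per_day = [rooms.get(room, 0.0) for rooms in totals.values()]
    average = mean(per_day)
    return pstdev(per_day) / average if average else 0.0

# Hypothetical two-day event log.
events = [
    (datetime(2018, 5, 1, 8, 0), "kitchen"),
    (datetime(2018, 5, 1, 9, 0), "bedroom"),
    (datetime(2018, 5, 1, 10, 0), "kitchen"),
    (datetime(2018, 5, 2, 8, 0), "kitchen"),
    (datetime(2018, 5, 2, 8, 30), "bedroom"),
    (datetime(2018, 5, 2, 9, 0), "bedroom"),
]
totals = daily_room_seconds(events)
print(f"kitchen variability: {occupancy_variability(totals, 'kitchen'):.2f}")
```

The dwell-time assumption is a simplification for single-resident homes; multi-resident settings or visitors would require attributing events to individuals first.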

In a study led by Petersen et al. [98], a link between time spent out of the home and health was discovered. For 85 older adult subjects, more hours spent outside the home were associated with lower CDR scores, superior physical ability, and improved emotional state. Similarly, Austin et al. [99] found a relationship between medicine adherence and cognitive performance. Ambient sensor data for 38 older adults supported the hypotheses that lower cognitive function is associated with greater variability in the timing of taking medicine and that variability increases over time.

Environment sensors are not limited to living environments. In one study [100], sensors were embedded in a car to monitor routine driving behavior over 6 months for 28 subjects (7 with MCI). Here again, differences in behavior were found between MCI and cognitively healthy subjects. Specifically, MCI participants drove fewer miles, spent less time on highways, and showed less day-to-day fluctuation in driving habits than cognitively intact drivers.

5.4. Ambient sensor-based analysis of daily activities

We culminate our review with a discussion of how routine activities can be monitored and used to assess functional health. Daily activity performance is the ultimate indicator of functional health, and inability to perform critical activities is associated with increased health care utilization, placement in long-term care facilities, time spent in the hospital, poorer quality of life, conversion to dementia, morbidity, and mortality [101], [102].

To automatically detect and assess daily activities, technologies must first exist to automatically recognize activities from sensor data. Activity recognition algorithms map a sequence of sensor readings onto an activity label using machine learning algorithms such as support vector machines, Gaussian mixture models, decision trees, and probabilistic graphs [103], [104]. Once activities are recognized, they can be monitored to compare different population groups. They can also be tracked over time to provide early detection of changes in functional health. Dawadi et al. [113] tracked activity performance over 2 years for 18 subjects. By quantifying parameters such as time spent on each activity and variability in the parameters, they found a significant correlation (r=0.72) between predicted and clinician-provided RBANS scores and a significant correlation (r=0.45) between predicted and clinician-provided TUG scores. Alberdi et al. [114] expanded this study to track activities for 29 subjects and predicted clinician-generated scores for mobility (r=.96), cognition (r=.89), and mood (r=.89).
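To illustrate what mapping a sequence of sensor readings onto an activity label can look like, the sketch below trains a small naive Bayes classifier on windows of sensor identifiers. Naive Bayes is used here only because it is a compact probabilistic model that fits in a few lines; it stands in for the support vector machines, mixture models, decision trees, and graphical models used in the cited work. The sensor names, activity labels, and training windows are hypothetical.

```python
from collections import Counter, defaultdict
from math import log

class NaiveBayesActivityModel:
    """Multinomial naive Bayes over the sensors that fire within a window."""

    def __init__(self):
        self.label_counts = Counter()
        self.sensor_counts = defaultdict(Counter)  # label -> sensor -> count
        self.vocabulary = set()

    def fit(self, windows, labels):
        for window, label in zip(windows, labels):
            self.label_counts[label] += 1
            self.sensor_counts[label].update(window)
            self.vocabulary.update(window)
        return self

    def predict(self, window):
        total = sum(self.label_counts.values())
        best_label, best_score = None, float("-inf")
        for label, count in self.label_counts.items():
            counts = self.sensor_counts[label]
            # Laplace smoothing keeps unseen sensors from zeroing the likelihood.
            denominator = sum(counts.values()) + len(self.vocabulary)
            score = log(count / total)
            for sensor in window:
                score += log((counts[sensor] + 1) / denominator)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Hypothetical labeled windows of sensor firings.
train_windows = [
    ["kitchen_motion", "stove_burner", "kitchen_motion"],
    ["kitchen_motion", "fridge_door", "stove_burner"],
    ["bedroom_motion", "bed_pressure"],
    ["bed_pressure", "bed_pressure", "bedroom_motion"],
]
train_labels = ["cook", "cook", "sleep", "sleep"]

model = NaiveBayesActivityModel().fit(train_windows, train_labels)
print(model.predict(["stove_burner", "kitchen_motion"]))
print(model.predict(["bed_pressure"]))
```

Once each window carries a label, per-activity parameters such as duration and variability can be computed and tracked over time, which is the kind of input the longitudinal analyses described above rely on.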

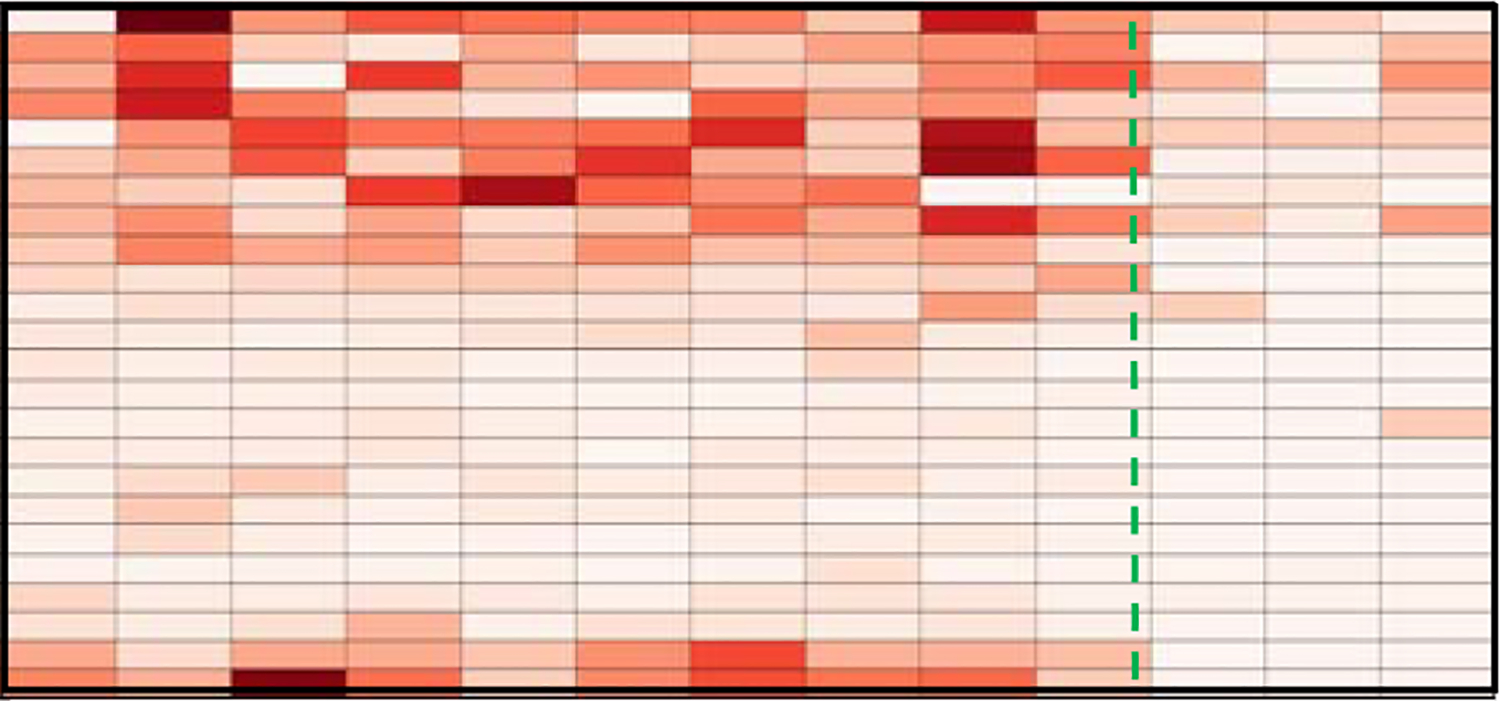

While tracking changes in activity patterns can identify gradual changes in functional health, identifying sudden health changes can be challenging. Sprint et al. [115] found that comparing activity patterns to a baseline behavior could highlight health events. In their study with 3 older adults, they were able to automatically detect sensor-based changes that were consistent with health events including chemotherapy treatment, insomnia, and a fall. As Figure 7 shows, time spent sleeping in bed at night decreased when the resident underwent radiation treatment.

Figure 7.

Time spent on sleep activity. Columns show weeks and rows show hours (midnight at top). The dashed line indicates the occurrence of insomnia [115].
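Comparing current behavior against a baseline can be sketched with a simple z-score test. This is a deliberately basic stand-in and not the change detection approach of [115]: it treats the first few weeks as the baseline, then flags any later week whose value lies more than a chosen number of baseline standard deviations from the baseline mean. The sleep durations, baseline length, and threshold are hypothetical.

```python
from statistics import mean, stdev

def flag_changes(values, baseline_len, threshold=2.0):
    """Return (index, z_score) for values that deviate from the baseline period.

    values: one measurement per week (e.g., mean nightly hours of sleep).
    baseline_len: number of initial weeks that define normal behavior.
    """
    baseline = values[:baseline_len]
    mu, sigma = mean(baseline), stdev(baseline)
    flagged = []
    for week, value in enumerate(values[baseline_len:], start=baseline_len):
        z = (value - mu) / sigma if sigma else 0.0
        if abs(z) >= threshold:
            flagged.append((week, round(z, 2)))
    return flagged

# Hypothetical weekly averages of nightly sleep (hours); weeks 6 and 7 are disrupted.
weekly_sleep_hours = [7.4, 7.6, 7.5, 7.3, 7.7, 7.5, 5.9, 6.1, 7.4]
flagged = flag_changes(weekly_sleep_hours, baseline_len=5)
print("weeks flagged:", [week for week, _ in flagged])
```

A fixed baseline is the simplest choice; a sliding or periodically refreshed baseline would be needed to separate sudden health events from slow, expected drift in routines.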

Even when activity labels are not available, changes in sensor-based daily routines have been found to indicate changes in functional health. Riboni et al. [116] propose finding boundaries of normal activity behavior to support early detection of MCI. Robben et al. [110] monitored changes in room occupancy and activity levels for 13 subjects over one year. Using a random forest classifier, they mapped extracted features to predicted scores with a mean absolute error of 0.32 for AMPS scores (score range from −3 to 4), 1.19 for Modified Katz-15 scores (score range 0 to 15), and 0.98 for walking speed.

As can be seen from this discussion, there are numerous technologies that play complementary roles in the overall assessment of functional health and related parameters including cognition, mobility, task performance, and mood. The technologies, the health parameters and conditions they monitor, and the experimental conditions in which they have been used are summarized in Table II.

Table II.

States monitored by sensor-based technology and corresponding conditions

| State | Technology | Health conditions | Experimental conditions | Refs |
|---|---|---|---|---|
| physiology | textiles, IMUs | mood | | [65], [67], [105] |
| gait | shoe sensors | PD, HD, ALS, frailty, diabetic feet | straight-line walking, OLS, lab | [7], [66], [69], [70] |
| balance, turning | IMUs | cognition, fall risk | stand, walk, turn, lab | [71], [72] |
| mobility | bed sensors | fall risk | bed departure | [106] |
| mobility, cognitive load | IMUs | rehabilitation | TUG, AC, lab | [73]–[76] |
| mobility, cognition | IMUs | cognitive decline, depression, PD | TUG | [77]–[79] |
| dyskinesia | ambient sensors | PD | in-home | [92] |
| wandering, social | mobile sensors, phone use | schizophrenia, dementia, stroke | continuous monitoring | [8], [80], [82] |
| mood | mobile devices | depression, anxiety | continuous monitoring | [30], [83], [84], [107] |
| gait | ambient sensors | fall risk | sit-stand, lab | [108] |
| scripted activities | ambient sensors | cognition | smart home testbed | [87], [88], [109] |
| gait | ambient sensors | MCI | in-home | [91] |
| socialization | ambient sensors | loneliness, cognition | in-home | [94], [98] |
| room occupancy, appliance usage | ambient sensors | MCI | in-home | [95], [96], [110], [111] |
| sleep, medicine | ambient sensors | cognition | in-home | [99], [112] |
| driving | ambient sensors | cognition | car | [100] |
| routine activities | ambient sensors | cognition, health events | in-home | [113]–[115] |

6. Conclusions and Directions for Ongoing Research

Technologies such as wireless sensor networks, mobile computing, pervasive computing, and machine learning have matured tremendously over the last decade. As a result, these technologies are being tapped to assist with functional health assessment. Harnessing the power of these technologies will allow assessments to be more accessible, provide deeper insights about performance, and support scaling clinical expertise to larger groups. As this field grows, there are some difficult challenges that need to be faced by everyone in the circle of care including patients, care providers, clinicians, scientists, and engineers. Here we discuss some of the challenges and highlight areas for ongoing research in the field.

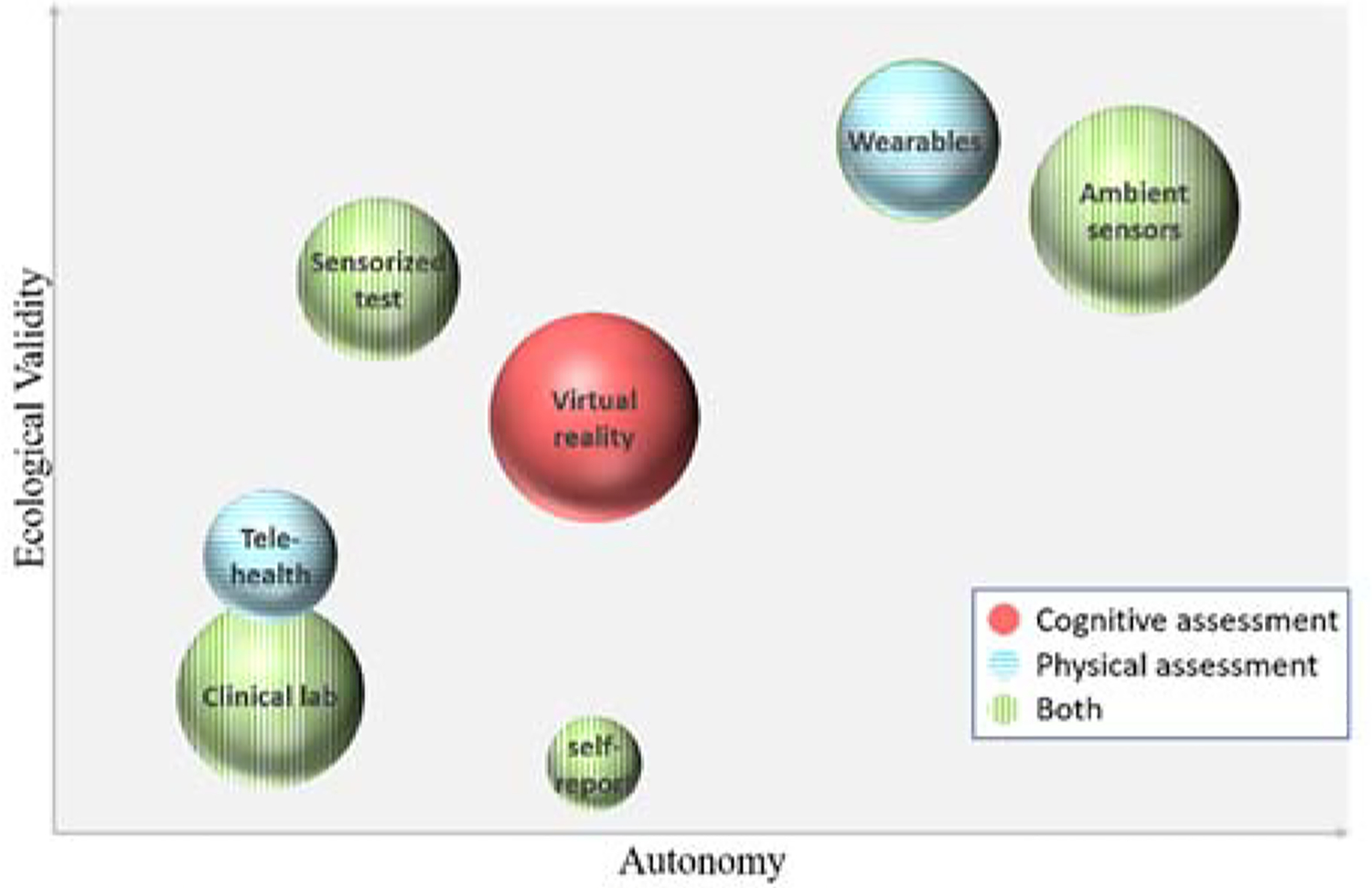

Selecting an appropriate technology.

As can be seen from this literature survey, technologies abound that can be employed for functional health assessment. They are extremely diverse, however, in their cost, usability, autonomy, ecological validity, type of assessment provided, and granularity of information generated. As a result, caregivers can be overwhelmed when selecting an appropriate technology to utilize. LeadingAge [117] points out that functional assessments can be categorized as focusing on physical or cognitive aspects of functional health, as being passive or active, and as having varied technology costs and time investments. Figure 8 summarizes the major categories of technologies we have reviewed in this paper along these dimensions as well as an added dimension of ecological validity. More work is needed, however, to guide clinicians and care providers toward the type of technology that fits individual needs and lifestyles.

Figure 8.

Functional assessment categories, organized by level of automatic assessment they provide (x axis), the ecological validity of the assessment (y axis), the type of assessment that is supported (pattern), and the level of cost investment that is required (size).

Introduction and integration of new technology.

This literature review focuses on sensor technology that has been commonly utilized in ecologically valid studies, including computers, mobile devices, wearable sensors, and ambient sensors. There are many emerging technologies that can be considered for this problem. For example, robots could be explored both for health assessment and for assistance [68]. Another popular technology is depth cameras, which can be used to analyze movement and to predict and detect falls [118], [119]. Researchers have found that there is no one “silver bullet” technology that provides all of the necessary insight into a person’s functional health. Therefore, incorporating new sensors and methods is needed.

At the same time, each assessment technology needs to undergo clinical validation to offer evidence that the technology provides predictive validity and accurate assessment of functional health. This is important if clinicians are to begin making decisions about patients’ diagnoses and treatment based on the data. This presents a challenge for researchers because, while comparing findings with previously validated instruments is an accepted mechanism for this process, the technology methods and data may be substantially different. New validation methods may need to be proposed.

Resource consumption.

Technologies can be heavy resource consumers. Power requirements limit the widespread deployment of wireless sensing systems. Wireless devices that can track functional status throughout a person’s day are preferred, but the need to frequently charge these devices changes user routine and reduces adherence to proper use of the technology, resulting in loss of valuable data. Researchers thus need to consider innovative methods of reducing resource consumption. One strategy for energy-consumptive devices is to make use of energy harvesting. Energy sources abound in natural settings and can be tapped in creative ways [120], [121]. Furthermore, technology that supports functional health assessment may collect gigabytes of data about a single person. The data needs to be stored securely and accessed with high-throughput communication technologies.

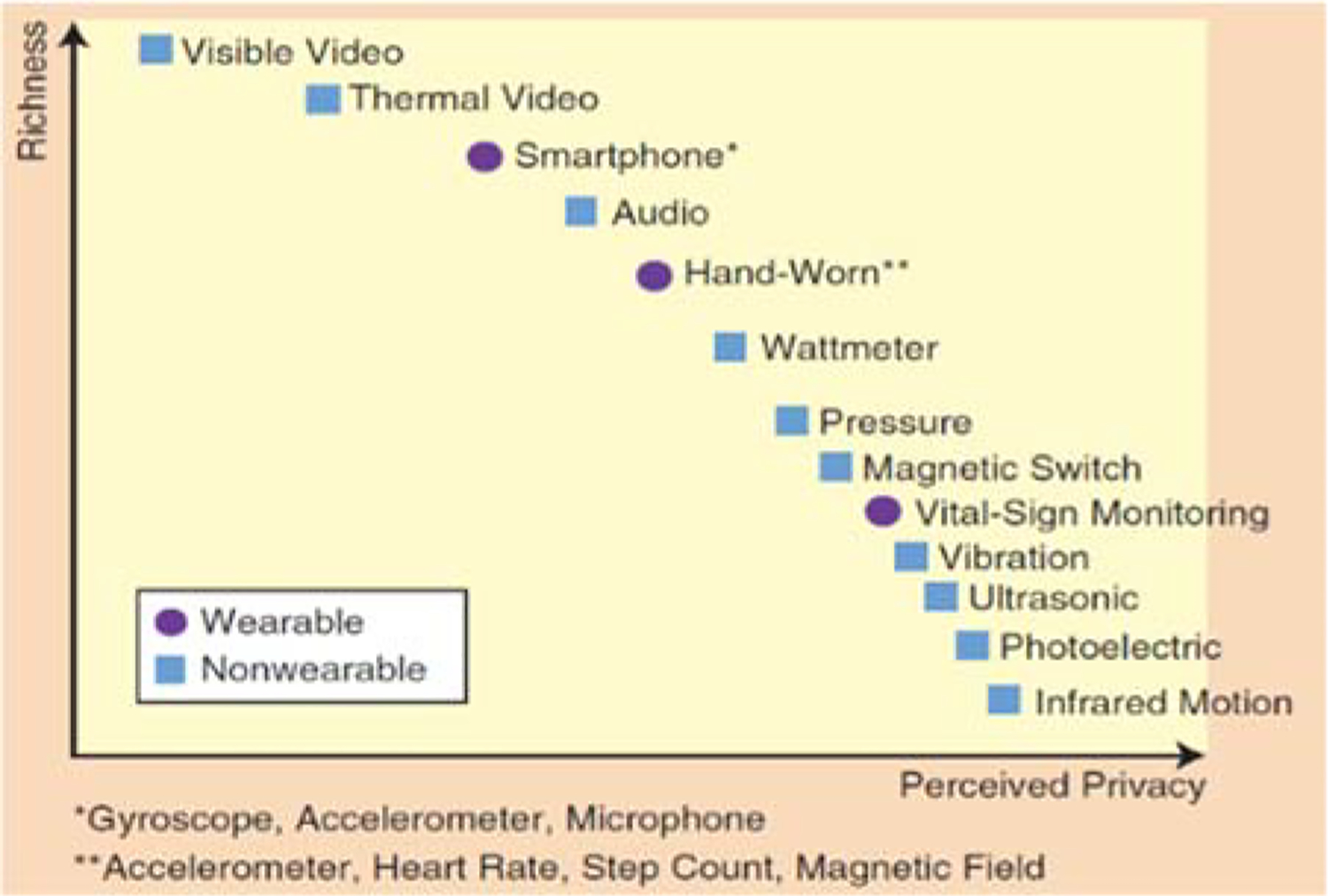

User privacy.

In order to provide accurate functional health assessment, a system needs access to information about a person’s habits. In the wrong hands such information can be abused. Even the perception of privacy invasion keeps many from adopting technology that could potentially improve their quality of life. Additionally, there are many open questions regarding situations that may force users to share and even decrypt collected data [122]. Researchers are aware that there is a relationship between the sensitivity of devices (such as video-based assessment technologies [123]) and the corresponding perception of privacy invasion [124], as shown in Figure 9. Individuals need to be aware of the level of exposure and to grant data access to requesting groups on this basis [125]. Privacy-preserving data mining techniques are also needed to ensure that personal information cannot be gleaned from inferred models [126].

Figure 9.

Richness of sensors and a user’s perceived privacy [124].

Technology trustworthiness.

Use of technologies for functional health assessment is recent, and almost none have been applied in large clinical studies. The consequent inconsistency in evaluation [127] leads to a lack of trust in the technologies on the part of clinicians, care providers, policy makers, and patients. The FDA has announced plans to apply regulatory oversight to medical apps [128]. As exemplified in work by DeMasi and Recht [129], work needs to be done to place careful and substantiated bounds around each technology’s ability to quantify functional assessment. While a technological solution may work well in one setting, its power may be substantially weakened when employed for a different person, health condition, or environmental condition. If a model is so specialized that it can only assess one specific component and cannot be used for new individuals without retraining, then it is impractical outside of controlled experiments [130]. At the same time, clinicians and care providers need to make important decisions regarding what level of performance (e.g., reliability, validity, false positives, responsiveness to change [131]) is acceptable for adopting the technology [132].

Technology usability.

There is often a disconnect between technology inventors and the potential beneficiaries [133]. Only a subset of the technologies we reviewed can practically be used without expensive equipment, clinical administration, or extensive user compliance [134]. A challenge for technology designers is to ensure that their technologies can be used by the general public, including individuals who may be experiencing age-related changes or have other types of disabilities. If a technology requires compliance on the part of the user, then the targeted population needs to be involved in iterative design of the technology [135].

Although the importance of usability testing is clear for health assessment, diverse approaches have been proposed for defining and measuring usability. The International Organization for Standardization defines usability as consisting of effectiveness, efficiency, and satisfaction [136]. Here, effectiveness refers to the accuracy and completeness with which users achieve certain goals. To this end, designers of functional health assessment technology need to observe and assess users’ ability to interpret and act on information provided by the technology. Efficiency refers to the resources that are required for goal achievement. For functional health assessment technologies this may include the time a user needs to spend on data collection and information interpretation as well as the computational run time and technology cost. Satisfaction, or user comfort and overall attitude toward the technology, is typically measured using rating scales such as SUMI [137]. Like the SUMI, other survey-based usability measures can also be employed such as the ten-item, Likert-based system usability scale (SUS) [138]. Because the technologies surveyed in this article require usability both on the part of the individual being assessed and for the clinician or caregiver who is interpreting the data, there is a need to refine standard usability measures for this task.
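For readers unfamiliar with how the ten SUS items are combined into a single score, the sketch below applies the standard SUS scoring rule: responses are on a 1 to 5 scale, odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name and the example responses are hypothetical.

```python
def sus_score(responses):
    """Compute a System Usability Scale score from ten 1-5 Likert responses."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses, each an integer from 1 to 5")
    total = 0
    for item, response in enumerate(responses, start=1):
        if item % 2 == 1:
            total += response - 1   # odd items: positively worded
        else:
            total += 5 - response   # even items: negatively worded
    return total * 2.5

# Hypothetical responses from one participant.
participant = [4, 2, 5, 1, 4, 2, 4, 2, 5, 1]
print(sus_score(participant))
```

In a study involving both the person being assessed and the clinician or caregiver interpreting the data, the same scoring could be applied separately to each group so that usability gaps between them remain visible.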

Multi-modality, multi-domain assessment.

One potential weakness of many current technology-based approaches to functional assessment is that they utilize one technology (or a small set of technologies) and target one component of functional health, often with one target population in mind. This is reflected in the summary provided by Table II. Clearly, functional health assessment is very complex and requires a holistic approach [139]–[141]. Researchers need to integrate multiple tests that provide insights on different domains. They need to fuse data and models from multiple sensor modalities. Even when ecologically valid testing settings are provided, data analysis needs to carefully consider all of the contextual factors that are in play when a person’s functional performance is monitored [142]. Finally, while each component of functional health can be assessed and does impact the ability of a person to function independently, compensatory strategies also need to be considered [18], [143]. Many individuals are able to live independently and well despite numerous health issues, and research should be directed toward understanding these strategies as well as integrating them into our models of functional health.

Pervasive computing, wireless sensors, and machine learning technology provide important tools that can revolutionize functional health assessment. While tremendous advances have been made in this field over the last decade, much more remains to be done to ensure that the technology is robust, understandable, usable, and safe. The ongoing collaboration between biomedical engineers, computer scientists, and clinicians will not only improve the effectiveness of this technology in performing automated and ecologically valid assessments but also provide insights that can improve healthcare delivery and quality of life.

References

- [1].World Health Organization, “Life expectancy,” Global Health Observatory (GHO) data, 2018.. [Google Scholar]

- [2].National Council on Aging, “Health aging facts: Chronic disease,” 2015..

- [3].Dill M and Salsberg E, “The complexities of physician supply and demand: Projects through 2025.” Center for Workforce Studies, Association of American Medical Colleges, 2008. [Google Scholar]

- [4].Leurent C and Ehlers MD, “Digital technologies for cognitive assessment to accelerate drug development in Alzheimer’s disease,” Clin. Pharmacol. Ther, vol. 98, no. 5, pp. 475–476, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Katz S, Down TD, Cash HR, and Grotz RC, “Progress in the development of the index of ADL,” Gerontologist, vol. 10, no. 1, pp. 20–30, 1970. [DOI] [PubMed] [Google Scholar]

- [6].Lawton MP and Brody EM, “Assessment of older people: Self-maintaining and instrumental activities of daily living,” Gerontologist, vol. 9, no. 3, pp. 179–186, 1969. [PubMed] [Google Scholar]

- [7].Saadeh W, Bin Altaf MA, and Butt SAB, “A wearable neuro-degenerative diseases detection system based on gait dynamics,” in IFIP/IEEE International Conference on Very Large Scale Integration, 2017, pp. 1–6. [Google Scholar]

- [8].O’Brien MK, Mummidisetty CK, Bo X, Poellabauer C, and Jayaraman A, “Quantifying community mobility after stroke using mobile phone technology,” in ACM International Joint Conference on Pervasive and Ubiquitous Computing, 2017, pp. 161–164. [Google Scholar]

- [9].RADAR-CNS, “RADAR-CNS: Remote assessment of disease and relapse - central nervous system,” 2018. [Online]. Available: https://www.radar-cns.org/.

- [10].Newland P, Kumutis A, Salter A, Flick L, Thomas FP, Rantz M, and Skubic M, “Continuous in-home symptom and mobility measures for individuals with multiple sclerosis: A case presentation,” J. Neurosci. Nurs, vol. 49, no. 4, pp. 241–246, 2017. [DOI] [PubMed] [Google Scholar]

- [11].Wang R, Wang W, and Aung MSH, “Predicting symptom trajectories of schizophrenia using mobile sensing,” ACM Trans. Interactive, Mobile, Wearable Ubiquitous Technol, vol. 1, no. 3, p. 110, 2017. [Google Scholar]

- [12].Overcash J, “Assessing the functional status of older cancer patients in an ambulatory care visit,” Healthc, vol. 3, no. 3, pp. 846–859, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [13].Sanchez-Moreno J, Martinez-Aran A, and Vieta E, “Treatment of functional impairment in patients with bipolar disorder,” Curr. Psychiatry Rep, vol. 19, no. 1, p. 3, 2017. [DOI] [PubMed] [Google Scholar]

- [14].DeYoung N and V Shenal B, “The reliability of the Montreal Cognitive Assessment using telehealth in a rural setting with veterans,” J. Telemed. Telecare, 2018. [DOI] [PubMed] [Google Scholar]

- [15].Cesari M, “Role of gait speed in the assessment of older patients,” J. Am. Med. Assoc, vol. 305, no. 1, pp. 93–94, 2011. [DOI] [PubMed] [Google Scholar]

- [16].Zampieri C, Salarian A, Carlson-Kuhta P, Nutt JG, and Horak FB, “Assessing mobility at home in people with early Parkinson’s disease using an instrumented Timed Up and Go test,” Parkinsonism Relat. Disord, vol. 17, no. 4, pp. 277–280, May 2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [17].HealthMeasures, “Intro to NIH toolbox,” 2018..

- [18].Farias ST, Schmitter-Edgecombe M, and Weakley A, “Compensation strategies in older adults: Association with cognition and everyday function,” Am. J. Alzheimers. Dis. Other Demen, 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [19].McAlister C, Schmitter-Edgecombe M, and Lamb R, “Examination of variables that may affect the relationship between cognition and functional status in individuals with mild cognitive impairment: A meta-analysis,” Arch. Clin. Neuropsychol, vol. 31, no. 2, pp. 123–147, 2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [20].Schmitter-Edgecombe M and Farias ST, “Aging and everyday functioning: Measurement, correlates and future directions,” in APA Handbook of Dementia, 2018. [Google Scholar]

- [21].Dutil I, Bottari C, and Auger C, “Test-retest reliability of a measure of independence in everyday activities: the ADL profile,” Occup. Ther. Int, vol. 2017, p. 3014579, 2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [22].Dawadi P, Cook DJ, and Schmitter-Edgecombe M, “Automated cognitive health assessment using smart home monitoring of complex tasks,” IEEE Trans. Syst. Man, Cybern. Part B, vol. 43, no. 6, pp. 1302–1313, 2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [23].Shealy C, “Solutions review: Application development,” 2016. [Online]. Available: https://solutionsreview.com/mobile-application-development/a-collection-of-mobile-application-development-statistics-growth-usage-revenue-and-adoption/.

- [24].Costa CR, Iglesias MJF, Rifon LEA, Carballa MG, and Rodriguez SV, “The acceptability of TV-based game platforms as an instrument to support the cognitive evaluation of senior adults at home,” PeerJ, vol. 5, p. e2845, 2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [25].Schoenfeldt LF, “Guidelines for computer-based psychological tests and interpretations,” Comput. Human Behav, vol. 5, no. 1, pp. 13–21, 1989. [Google Scholar]

- [26].Beauchet O, Launay CP, Merjagnan C, Kabeshova A, and Annweiler C, “Quantified self and comprehensive geriatric assessment: Older adults are able to evaluate their own health and functional status,” PLoS One, vol. 9, no. 6, p. e100636, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [27].Seelye A, Mattek N, Howieson DB, Austin D, Wild K, Dodge H, and Kaye J, “Embedded online questionnaire measures are sensitive to identifying mild cognitive impairment,” Alzheimer’s Dis. Assoc. Disord, vol. 30, no. 2, pp. 152–159, 2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [28]. Schmitter-Edgecombe M, Parsey C, and Cook DJ, “Cognitive correlates of functional performance in older adults: Comparison of self-report, direct observation and performance-based measures,” J. Int. Neuropsychol. Soc, vol. 17, no. 5, pp. 853–864, 2011.

- [29]. Seelye AM, “Weekly observations of online survey metadata obtained through home computer use allow for detection of changes in everyday cognition before transition to mild cognitive impairment,” Alzheimer’s Dement., 2018.

- [30]. Pratap A, Anguera JA, and Renn BN, “The feasibility of using smartphones to assess and remediate depression in Hispanic/Latino individuals nationally,” in ACM International Joint Conference on Pervasive and Ubiquitous Computing, 2017, pp. 854–860.

- [31]. Arean PA, Hoa KK, and Andersson G, “Mobile technology for mental health assessment,” Dialogues Clin. Neurosci, vol. 18, no. 2, pp. 163–169, 2016.

- [32]. Runyan JD and Steinke E, “Virtues, ecological momentary assessment/intervention and smartphone technology,” Front. Psychol, vol. 6, p. 481, 2015.

- [33]. Chaytor N and Schmitter-Edgecombe M, “The ecological validity of neuropsychological tests: a review of the literature on everyday cognitive skills,” Neuropsychol. Rev, vol. 13, no. 4, pp. 181–197, 2003.

- [34]. Lindauer A, Seelye A, Lyons B, Dodge HH, Mattek N, Mincks K, Kaye J, and Erten-Lyons D, “Dementia care comes home: Patient and caregiver assessment via telemedicine,” Gerontologist, vol. 57, no. 5, pp. e85–e93, 2017.

- [35]. Tenorio M, Williams A, Olugbenga, and Leonard M, “The Letter and Shape Drawing (LSD) test: An efficient and systematised approach to testing of visuospatial function,” in Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 2016, pp. 2323–2326.

- [36]. Mitsi G, Mendoza EU, and Wissel BD, “Biometric digital health technology for measuring motor function in Parkinson’s Disease: Results from a feasibility and patient satisfaction study,” Front. Neurol, vol. 8, p. 273, 2017.

- [37]. Shigemori K and Okamoto K, “Feasibility, reliability, and validity of a smartphone-based application of the MMSE (MMSE-A) for the assessment of cognitive function in the elderly,” Alzheimer’s Dement, vol. 11, no. 7, p. P899, 2015.

- [38]. Chaytor N, Schmitter-Edgecombe M, and Burr R, “Improving the ecological validity of executive functioning assessment,” Arch. Clin. Neuropsychol, vol. 21, pp. 217–227, 2006.

- [39]. Ghosh S, Das M, Ghosh A, Nag A, Mazumdar S, Roy P, Das N, Nasipuri M, and Chowdhury B, “Development of a smartphone application for bedside assessment of neurocognitive functions,” in Applications and Innovations in Mobile Computing, 2014, pp. 54–59.

- [40]. Coelli S, Tacchino G, and Rossetti E, “Assessment of the usability of a computerized Stroop Test for clinical application,” in International Forum on Research and Technologies for Society and Industry Leveraging a Better Tomorrow, 2016, pp. 1–5.

- [41]. Shi D, Zhao X, Feng G, Luo B, Huang J, and Tian F, “Integrating digital pen devices with traditional medical screening tool for cognitive assessment,” in International Conference on Information Science and Technology, 2016, pp. 42–48.

- [42]. Fellows R, Dahmen J, Cook DJ, and Schmitter-Edgecombe M, “Multicomponent analysis of a digital trail making test,” Clin. Neuropsychol, vol. 31, no. 1, pp. 154–167, 2017.

- [43]. Lordthong R and Naiyanetr P, “Cognitive function monitoring platform based on a smartphone for alternative screening tool,” in IASTED International Conference on Biomedical Engineering, 2017, pp. 212–217.

- [44]. Brouillette RM, Foil H, Fontenot S, Correro A, Allen R, Martin CK, Bruce-Keller AJ, and Keller JN, “Feasibility, reliability, and validity of a smartphone based application for the assessment of cognitive function in the elderly,” PLoS One, vol. 8, no. 6, p. e65925, 2013.

- [45]. Joshi V, Wallace B, Shaddy A, Knoefel F, Goubran R, and Lord C, “Metrics to monitor performance of patients with mild cognitive impairment using computer based games,” in IEEE-EMBS International Conference on Biomedical and Health Informatics, 2016, pp. 521–524.

- [46]. Hagler S, Jimison HB, and Pavel M, “Assessing executive function using a computer game: Computational modeling of cognitive processes,” IEEE J. Biomed. Heal. Informatics, vol. 18, no. 4, pp. 1442–1452, 2017.

- [47]. Pavel M, Jimison H, Hagler S, and McKanna J, “Using behavior measurement to estimate cognitive function based on computational models,” in Cognitive Informatics in Health and Biomedicine Health Informatics, 2017, pp. 137–163.

- [48]. Czaja SJ, Loewenstein DA, Lee CC, Fu SH, and Harvey PD, “Assessing functional performance using computer-based simulations of everyday activities,” Schizophr. Res, vol. 183, pp. 130–136, 2017.

- [49]. Czaja SJ, Loewenstein DA, Sabbag SA, Curiel RE, Crocco E, and Harvey PD, “A novel method for direct assessment of everyday competence among older adults,” J. Alzheimer’s Dis, vol. 57, pp. 1229–1238, 2017.

- [50]. Liu K-Y, Cheng S-M, and Huang H-L, “Development of a game-based cognitive measures system for elderly on the basis of Mini-Mental State Examination,” in International Conference on Applied System Innovation, 2017, pp. 1853–1856.

- [51]. Hou CJ, Huang MW, Zhou JY, Hsu PC, Zeng JH, and Chen YT, “The application of individual virtual nostalgic game design to the evaluation of cognitive function,” in Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 2017, pp. 2586–2589.

- [52]. Austin J, Hollinshead K, and Kaye J, “Internet searches and their relationship to cognitive function in older adults: Cross-sectional analysis,” J. Med. Internet Res, vol. 19, no. 9, p. e307, 2017.

- [53]. Vizer LM and Sears A, “Classifying text-based computer interactions for health monitoring,” IEEE Pervasive Comput, vol. 14, no. 4, pp. 64–71, 2015.

- [54]. Li H, Zhang T, Yu T-C, Lin C-C, and Wong AMK, “Combine wireless sensor network and multimedia technologies for cognitive function assessment,” in International Conference on Intelligent Control and Information Processing, 2012, pp. 717–720.

- [55]. Kwon GH, Kim L, and Park S, “Development of a cognitive assessment tool and training systems for elderly cognitive impairment,” in International Conference on Pervasive Computing Technologies for Healthcare and Workshops, 2013, pp. 450–452.

- [56]. NeuroCog, “VRFCAT: Integrating virtual reality into the assessment of functioning in clinical trials,” 2018.

- [57]. Arias P, Robles-Garcia V, Sanmartin G, Flores J, and Cudeiro J, “Virtual reality as a tool for evaluation of repetitive rhythmic movements in the elderly and Parkinson’s Disease patients,” PLoS One, vol. 7, no. 1, p. e30021, 2012.

- [58]. Lugo G, Ba F, Cheng I, and Ibarra-Manzano MA, “Virtual reality and hand tracking system as a medical tool to evaluate patients with Parkinson’s,” in Pervasive Health, 2017.

- [59]. Pedroli E, Cipresso P, Serino S, Riva G, Albani G, and Riva G, “A virtual reality test for the assessment of cognitive deficits: Usability and perspectives,” in International Conference on Pervasive Computing Technologies for Healthcare and Workshops, 2013, pp. 453–458.

- [60]. Raspelli S, Pallavicini F, and Carelli L, “Validating the neuro VR-based virtual version of the multiple errands test: Preliminary results,” Presence, vol. 21, no. 1, pp. 31–42, 2012.

- [61]. Kizony R, Segal B, Weiss PL, Sangani S, and Fung J, “Executive functions in young and older adults while performing a shopping task within a real and similar virtual environment,” in International Conference on Virtual Rehabilitation, 2017, pp. 1–8.

- [62]. Parsons TD, McPherson S, and Interrante V, “Enhancing neurocognitive assessment using immersive virtual reality,” in Workshop on Virtual and Augmented Assistive Technology, 2013, pp. 27–34.

- [63]. Onoda K and Yamaguchi S, “Revision of the cognitive assessment for dementia, iPad version (CADi2),” PLoS One, vol. 9, no. 10, p. e109931, 2014.

- [64]. Parsey CM and Schmitter-Edgecombe M, “Applications of technology in neuropsychological assessment,” Clin. Neuropsychol, vol. 27, pp. 1328–1361, 2013.

- [65]. Paradiso R, Faetti T, and Werner S, “Wearable monitoring systems for psychological and physiological state assessment in a naturalistic environment,” in Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 2011, pp. 2250–2253.

- [66]. Ramirez-Bautista JA and Huerta-Ruelas JA, “A review in detection and monitoring gait disorders using in-shoe plantar measurement systems,” IEEE Rev. Biomed. Eng, vol. 10, pp. 299–309, 2017.

- [67]. Majumder S, Mondal T, and Deen MJ, “Wearable sensors for remote health monitoring,” Sensors, vol. 17, no. 1, p. 130, 2017.

- [68]. Fiorini L, Maselli M, and Castro E, “Feasibility study on the assessment of auditory sustained attention through walking motor parameters in mild cognitive impairments and healthy subjects,” in Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 2017, pp. 897–900.

- [69]. Mariani B, Jimenez MC, Vingerhoets FJ, and Aminian K, “On-shoe wearable sensors for gait and turning assessment of patients with Parkinson’s disease,” IEEE Trans. Biomed. Eng, vol. 60, no. 1, pp. 155–158, 2013.

- [70]. Ayena JC, Tchakouté LDC, Otis M, and Menelas BAJ, “An efficient home-based risk of falling assessment test based on Smartphone and instrumented insole,” in IEEE International Symposium on Medical Measurements and Applications, 2015, pp. 416–421.

- [71]. Wang J, Liu Z, and Wu Y, “Learning actionlet ensemble for 3D human action recognition,” IEEE Trans. Pattern Anal. Mach. Intell, vol. 36, no. 5, pp. 914–927, 2013.

- [72]. Mancini M, Schlueter H, El-Gohary M, Mattek N, Duncan C, Kaye J, and Horak FB, “Continuous monitoring of turning mobility and its association to falls and cognitive function: A pilot study,” Journals Gerontol. Ser. A Biol. Sci. Med. Sci, vol. 71, no. 8, pp. 1102–1108, 2016.

- [73]. Godfrey A, Del Din S, Barry G, Mathers JC, and Rochester L, “Within trial validation and reliability of a single tri-axial accelerometer for gait assessment,” in Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 2014, pp. 5892–5895.

- [74]. Sprint G, Cook DJ, Weeks D, and Borisov V, “Predicting functional independence measure scores during rehabilitation with wearable inertial sensors,” IEEE Access, vol. 3, pp. 1350–1366, 2015.

- [75]. Borisov V, Sprint G, Cook DJ, and Weeks D, “Measuring gait changes during inpatient rehabilitation with wearable sensors,” J. Rehabil. Res. Dev, 2015.

- [76]. Howcroft JD, Lemaire ED, Kofman J, and McIlroy WE, “Analysis of dual-task elderly gait using wearable plantar-pressure insoles and accelerometer,” in Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 2014, pp. 5003–5006.

- [77]. Greene BR and Kenny RA, “Assessment of cognitive decline through quantitative analysis of the Timed Up and Go Test,” IEEE Trans. Biomed. Eng, vol. 59, no. 4, pp. 988–995, 2012.

- [78]. Verghese J, Wang C, Lipton RB, Holtzer R, and Xue X, “Quantitative gait dysfunction and risk of cognitive decline and dementia,” J. Neurol. Neurosurg. Psychiatry, vol. 78, pp. 929–935, 2007.

- [79]. Lord S, Galna B, Coleman S, Burn D, and Rochester L, “Mild depressive symptoms are associated with gait impairment in early Parkinson’s disease,” Mov. Disord, vol. 28, pp. 634–639, 2013.

- [80]. Lin Q, Zhang D, Chen L, Ni H, and Zhou X, “Managing elders’ wandering behavior using sensors-based solutions: A survey,” Int. J. Gerontol, vol. 8, no. 2, pp. 49–55, 2014.

- [81]. Cella M, Okruszek L, Lawrence M, Zarlenga V, He Z, and Wykes T, “Using wearable technology to detect the autonomic signature of illness severity in schizophrenia,” Schizophr. Res, 2017.

- [82]. Difrancesco S, Fraccaro P, van der Veer SN, Alshoumr B, Ainsworth J, Bellazzi R, and Peek N, “Out-of-home activity recognition from GPS data in schizophrenic patients,” in IEEE International Symposium on Computer-Based Medical Systems, 2016, pp. 324–328.

- [83]. Boukhechba M, Huang Y, Chow P, Fua K, Teachman BA, and Barnes LE, “Monitoring social anxiety from mobility and communication patterns,” ACM Int. Jt. Conf. Pervasive Ubiquitous Comput, pp. 749–753, 2017.