Abstract

Objective

Our objective was to determine the feasibility and preliminary efficacy of a behavioral nudge on adoption of a clinical decision support (CDS) tool.

Materials and Methods

We conducted a pilot cluster nonrandomized controlled trial in 2 Emergency Departments (EDs) at a large academic healthcare system in the New York metropolitan area. We tested 2 versions of a CDS tool for pulmonary embolism (PE) risk assessment developed on a web-based electronic health record-agnostic platform. One version included behavioral nudges incorporated into the user interface.

Results

A total of 1527 patient encounters were included in the trial. The CDS tool adoption rate was 31.67%. Adoption was significantly higher for the tool that included behavioral nudges (39.11% vs 20.66%; P < .001).

Discussion

We demonstrated feasibility and preliminary efficacy of a PE risk prediction CDS tool developed using insights from behavioral science. The tool is well-positioned to be tested in a large randomized clinical trial.

Trial Registration

ClinicalTrials.gov (NCT05203185)

Keywords: clinical decision support, pulmonary embolism, computed tomography, pulmonary angiogram, behavioral economics

Introduction

Overuse of computed tomography pulmonary angiography (CTPA) in the diagnostic management of pulmonary embolism (PE) exposes patients to unnecessary risk of contrast-induced nephropathy, radiation-induced malignancy, and cardiovascular disease.1–3 As such, professional society guidelines recommend the use of validated clinical prediction rules for PE risk stratification before imaging.4 Their use reduces CTPA testing by 25% without any missed PEs.5,6 PE risk stratification clinical decision support (CDS) tools have also been shown to reduce unnecessary imaging without missing PEs.7–13 However, providers do not use these tools, or use them incorrectly, in up to 80% of patients.14–16 It is estimated that one third of all CTPA studies for PE are avoidable and cost the healthcare system more than $100 million annually.17

Incorporating behavioral nudges into CDS with validated clinical prediction rules for PE risk prediction provides a potential avenue for increasing the adoption of these tools and improving care. Nudges are defined as positive reinforcement and indirect suggestions that have a non-forced effect on decision making.18 For example, “opt-out” options for organ donation consent lead to striking differences in enrollment.19 Nudges have emerged as a novel and promising avenue for influencing provider behavior because they preserve provider autonomy. They additionally incorporate ease as a key design principle, minimizing negative impacts on clinical workflow and cognitive load.

We applied insights from behavioral science to design, develop, and pilot test a CDS tool for PE risk prediction that incorporated behavioral nudges to reduce the rate of unnecessary CTPA testing in the Emergency Department (ED) setting in a pilot cluster nonrandomized controlled trial. Our objective was to determine the feasibility and preliminary efficacy of the use of nudges and their impact on CDS adoption and provider ordering behavior.

Methods

Study design, setting, and enrollment

We conducted a cluster nonrandomized controlled trial in 2 EDs at a large academic healthcare system in the New York metropolitan area. The study took place between October 1, 2021, and March 3, 2022. The tool was launched with behavioral nudges in one ED and without behavioral nudges in the other. EDs were chosen based on comparable size and acuity levels. Emergency Medicine leadership at each department consented to participate. ED providers (ie, physicians, physician assistants, and nurse practitioners) were trained before the tool launch and automatically enrolled in the trial if they ordered a CTPA for the evaluation of PE during the study period. All study procedures were approved by the Northwell Health Institutional Review Board.

Data abstraction and outcome measures

All study data were extracted from the enterprise electronic health record (EHR) (Sunrise Clinical Manager; Allscripts) reporting database. As this was a pragmatic clinical trial, all data used were routinely collected during the provision of clinical care. Data collected included patient demographic information and Charlson Comorbidity Index (CCI). The CCI predicts 10-year survival in patients with multiple comorbidities and was used as a measure of total comorbidity burden.20 Race and ethnicity data were collected by self-report in prespecified fixed categories.

For each patient encounter, we collected the patient’s final Wells’ Score, d-dimer orders and test results, CTPA orders and test results, and any ED or inpatient readmission within 3 months, along with CTPA orders and test results for the readmission. CTPA results (PE vs no PE) are recorded in discrete categories by the reading radiologist as part of routine care. Provider information collected included age, provider type (physician, physician assistant, or nurse practitioner), and employment type (part time vs full time). Tool display, finalization, and adoption metrics were also collected. The tool was considered finalized if an order was placed for either d-dimer or CTPA and was not cancelled. The primary study outcome was provider tool adoption, defined as concordance between the order recommended by the CDS tool and the order placed by the provider. The safety outcome was a missed PE, defined as provider tool adoption at the index visit with no CTPA order placed, followed by readmission to the ED or an inpatient unit within 3 months with a CTPA positive for PE.
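The outcome definitions above can be expressed as simple predicates. The following is a minimal illustrative sketch, not the study's analysis code; the `Encounter` record and all of its field names are hypothetical and were chosen only to mirror the definitions in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Encounter:
    """Hypothetical per-encounter record; field names are illustrative assumptions."""
    recommended_order: str             # "d-dimer" or "CTPA", as recommended by the tool
    placed_order: Optional[str]        # order placed and not cancelled, else None
    ctpa_ordered_at_index: bool        # any CTPA order placed at the index visit
    readmit_ctpa_positive_3mo: bool    # readmission within 3 months with CTPA positive for PE


def is_finalized(e: Encounter) -> bool:
    # Finalized: a d-dimer or CTPA order was placed and not cancelled.
    return e.placed_order in ("d-dimer", "CTPA")


def is_adopted(e: Encounter) -> bool:
    # Adoption: the placed order matches the order the tool recommended.
    return is_finalized(e) and e.placed_order == e.recommended_order


def is_missed_pe(e: Encounter) -> bool:
    # Missed PE: adoption at the index visit, no CTPA at the index visit,
    # and a readmission within 3 months with a CTPA positive for PE.
    return (
        is_adopted(e)
        and not e.ctpa_ordered_at_index
        and e.readmit_ctpa_positive_3mo
    )
```

Under these assumptions, an encounter in which the tool recommended a d-dimer and the provider ordered a d-dimer counts as adopted, and it counts as a missed PE only if no CTPA was ordered at that visit and a later CTPA within 3 months was positive.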

Intervention: EHR-agnostic PE risk prediction tool and behavioral nudge

Description of the tool’s development is summarized below and presented in detail in a previous publication.21 The design and development processes were grounded in user-centered and behavioral design principles and guided by a well-established behavioral framework, the Behavior Change Wheel.22 Specific behavioral nudges were chosen to target barriers to tool use for PE risk stratification identified during development work.23 The tool was developed on an EHR-agnostic web-based platform, designed for dissemination to work with any EHR using the open communication protocol Fast Healthcare Interoperability Resources (FHIR) (Figure 1).

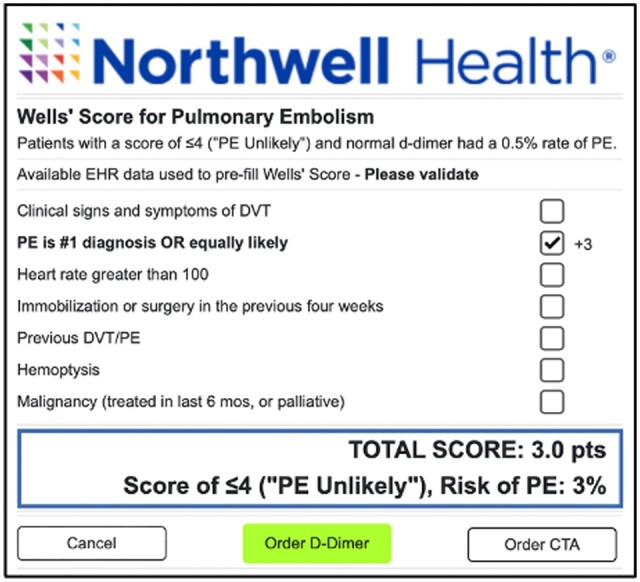

Figure 1.

Pulmonary embolism risk prediction clinical decision support tool.

The CDS tool was triggered by any order for “CT Chest w/IV contrast for Pulmonary Embolism” (CTPA) placed at a study site. This triggered an automated backend PE risk assessment. The tool calculated the Wells’ Criteria for Pulmonary Embolism using patient-specific information from the EHR and the health information exchange. The validation study for this assessment was previously published, finding an accuracy of 92.1% for this method in correctly identifying patients in “PE Unlikely” and “PE Likely” categories using the 2-tiered approach, when compared with manual chart review.24 The subjective criterion of Wells’ Score (PE is #1 diagnosis OR equally likely) was considered positive for all patients as the assessment is triggered only by a provider order for imaging. The tool only displayed for the provider if the Wells’ Score was ≤4, classified as “PE Unlikely” by the 2-tiered score. All Wells’ Score criteria were then modifiable by the provider. The tool recommended ordering a d-dimer for patients with a Wells’ Score ≤4 (“PE Unlikely”) and a CTPA for patients with a Wells’ Score >4 (“PE Likely”). Patients with a Wells’ Score ≤4 (“PE Unlikely”) and a normal d-dimer test have a 0.5% rate of PE.25 CTPA testing is unnecessary for these patients. The tool recommended order was highlighted in green and could be placed with one click. The order not recommended could be placed with 2 clicks.
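The scoring and recommendation logic described above can be sketched as follows. This is an illustrative sketch only, not the tool's implementation. The point values are the standard published Wells' Criteria weights, which this article does not list, and the dictionary keys and function names are hypothetical.

```python
# Standard Wells' Criteria point values (not listed in this article).
WELLS_POINTS = {
    "clinical_signs_of_dvt": 3.0,
    "pe_most_likely_diagnosis": 3.0,       # the subjective criterion
    "heart_rate_over_100": 1.5,
    "immobilization_or_recent_surgery": 1.5,
    "previous_pe_or_dvt": 1.5,
    "hemoptysis": 1.0,
    "malignancy": 1.0,
}


def wells_score(findings: dict, assume_subjective_positive: bool = True) -> float:
    """Sum the points for every criterion marked True in `findings`."""
    findings = dict(findings)
    if assume_subjective_positive:
        # Per the text, the automated assessment treats the subjective
        # criterion as positive because a provider has already ordered imaging.
        findings["pe_most_likely_diagnosis"] = True
    return sum(pts for name, pts in WELLS_POINTS.items() if findings.get(name, False))


def classify(score: float) -> str:
    # Two-tiered approach: a score of 4 or less is "PE Unlikely".
    return "PE Unlikely" if score <= 4 else "PE Likely"


def recommended_order(score: float) -> str:
    # d-dimer for "PE Unlikely", CTPA for "PE Likely".
    return "d-dimer" if score <= 4 else "CTPA"


def tool_displayed(score: float) -> bool:
    # The tool was shown to the provider only for "PE Unlikely" patients.
    return score <= 4
```

For example, a patient with no other positive criteria scores 3 from the subjective criterion alone, is classified as “PE Unlikely”, and triggers a d-dimer recommendation. Adding a heart rate over 100 raises the score to 4.5, so the tool would not be displayed.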

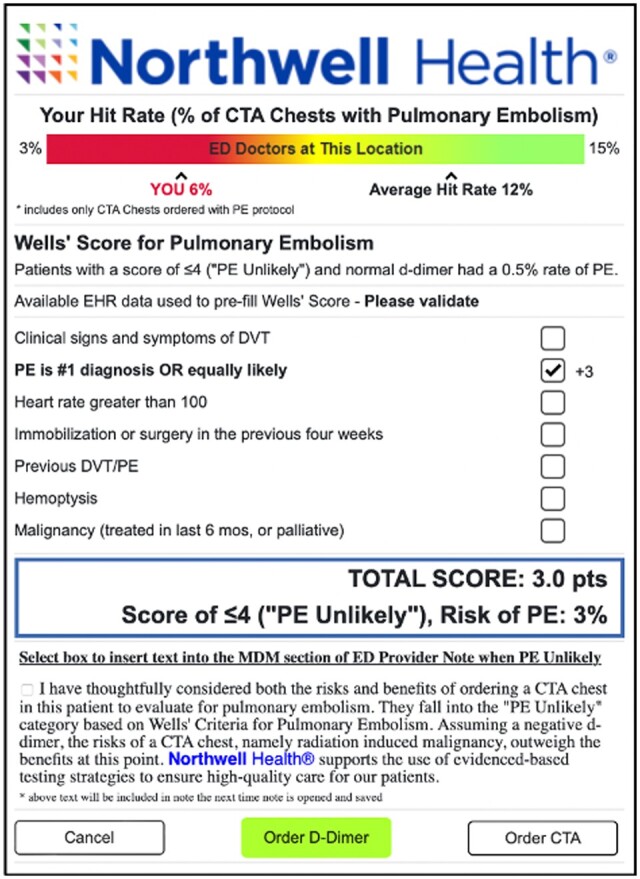

The peer comparison nudge showed the individual ordering provider’s CTPA yield, or hit rate (the percentage of CTPA tests ordered for PE that were positive for PE), in comparison to the median hit rate of ED doctors in their department (Figure 2). This was reported as an appropriate comparison by providers in development work.21 Individual hit rates were calculated as 3-month averages and were not displayed if the provider ordered fewer than 10 CTPAs during this period. If the provider’s hit rate was below the department average, it was shown in red type. If the hit rate was above the department average, it was shown in green with a smiley face for encouragement and to minimize regression to the mean. The provider’s hit rate and the department median were both shown on a color-coded line, with lower hit rates in red, higher ones in green, and yellow in between. The green area was chosen based on systematic review data showing that providers using the Wells’ Score for Pulmonary Embolism improved hit rates from 9% to 12% without missing any PEs.5,6

Figure 2.

Pulmonary embolism risk prediction clinical decision support tool with behavioral nudge.
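The display rules for the peer comparison nudge described above can be sketched as a small function. This is a hedged illustration, not the tool's code. The function names and return structure are hypothetical. The sketch compares against the department median, as in the first sentence of the description. The article does not say what was shown when a provider's rate equaled the department value, so the "neutral" case is an assumption.

```python
from statistics import median
from typing import Optional

MIN_CTPAS = 10  # hit rate is hidden below this many CTPAs in the 3-month window


def hit_rate(positive_ctpas: int, total_ctpas: int) -> float:
    """Percentage of CTPAs ordered for PE that were positive for PE."""
    return 100.0 * positive_ctpas / total_ctpas


def peer_comparison(positive_ctpas: int, total_ctpas: int,
                    department_rates: list) -> Optional[dict]:
    """Return what to display for one provider, or None if nothing is shown."""
    if total_ctpas < MIN_CTPAS:
        return None  # too few CTPAs in the window to show a hit rate
    rate = hit_rate(positive_ctpas, total_ctpas)
    dept = median(department_rates)
    if rate > dept:
        color, smiley = "green", True    # above department: green plus smiley face
    elif rate < dept:
        color, smiley = "red", False     # below department: red type
    else:
        color, smiley = "neutral", False # assumption: equality is not described
    return {"rate": rate, "department": dept, "color": color, "smiley": smiley}
```

With these assumptions, a provider with 3 positive results out of 20 CTPAs (15%) in a department whose median is 12% would see their rate in green with a smiley face, while a provider with only 9 CTPAs in the window would see no hit rate at all.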

The note incentive nudge allowed the provider to input a section of pre-written text explaining that the provider had considered the risks and benefits of CTPA and that the risks outweighed the benefits for this patient into their note (Figure 2). With one click the text blurb could be inserted into the medical decision-making section of the ED Provider Note. The text was editable within the note. The text served as a channeling nudge, helping providers to begin envisioning the next steps in placing an order for d-dimer, as opposed to CTPA. The last line of the text stated that the health system supports evidence-based testing strategies for patients. This was chosen to address the provider’s fear of missing a PE which emerged as a major barrier in development work.

Statistical analysis

Patient and provider characteristics were summarized descriptively using frequency and percentage for categorical variables and using mean, standard deviation, median, and interquartile range for continuous variables. Categorical characteristics were compared across control and intervention EDs using Pearson’s Chi-squared test or Fisher’s Exact test (if >20% of expected counts were <5), as appropriate, and continuous characteristics were compared across EDs using the Wilcoxon Rank-Sum test.

CDS tool display, finalization, and adoption, as well as CTPA yield, were summarized similarly across control and intervention EDs. The association between CTPA yield and ED was also assessed within the tool adoption and non-adoption subsets. All results were considered to be statistically significant at the P < .05 level of significance, and analyses were performed in R version 4.1.2 (R Project for Statistical Computing; R Foundation).

Results

Characteristics of patients and providers

A total of 1527 patient encounters were included in the study (median age, 65 years; interquartile range [IQR], 51-78; mean age, 63.11 years; range, 19-103 years; 57.17% female) (Table 1). Patients had a median Charlson Comorbidity Index of 5.00 (IQR, 2.00-8.00). This value corresponds to approximately 21% 10-year survival.20 Patients were seen by a total of 83 providers (median age, 37 years; IQR, 32.50-42.00; mean age, 38.63 years; range, 27-70 years; 39.76% female). Providers included attending physicians (79.52%), nurse practitioners (2.41%), and physician assistants (18.07%).

Table 1.

Characteristics of patients and providers.

| Patient characteristics | Overall (N = 1527) | Control (N = 734) | Intervention (N = 793) | P |
|---|---|---|---|---|
| Age, years | | | | .066 |
| Median (IQR) | 65 (51, 78) | 64 (49, 77) | 65 (53, 79) | |
| Mean (SD) | 63.11 (18.48) | 62.08 (19.13) | 64.06 (17.82) | |
| Female, No. (%) | 873 (57.17%) | 432 (58.86%) | 441 (55.61%) | .201 |
| Race/Ethnicity, No. (%) | | | | <.001 |
| Asian | 44 (2.88%) | 30 (4.09%) | 14 (1.77%) | |
| Black | 475 (31.11%) | 435 (59.26%) | 40 (5.04%) | |
| Hispanic or Latino | 101 (6.61%) | 32 (4.36%) | 69 (8.70%) | |
| Other | 92 (6.02%) | 76 (10.35%) | 16 (2.02%) | |
| Unknown | 77 (5.04%) | 21 (2.86%) | 56 (7.06%) | |
| White | 738 (48.33%) | 140 (19.07%) | 598 (75.41%) | |
| Charlson Comorbidity Index | | | | <.001 |
| Median (IQR) | 5.00 (2.00, 8.00) | 5.00 (2.00, 7.00) | 5.00 (2.00, 9.00) | |
| Mean (SD) | 5.44 (4.24) | 4.98 (4.01) | 5.87 (4.41) | |

| Provider characteristics | Overall (N = 83) | Control (N = 34) | Intervention (N = 49) | P |
|---|---|---|---|---|
| Age, years | | | | .188 |
| Median (IQR) | 37 (32.50, 42) | 35.00 (32.50, 38) | 38 (33, 44) | |
| Mean (SD) | 38.63 (8.80) | 36.97 (7.58) | 39.78 (9.46) | |
| Female, No. (%) | 33 (39.76%) | 15 (44.12%) | 18 (36.73%) | .499 |
| Provider type, No. (%) | | | | >.999 |
| Attending | 66 (79.52%) | 27 (79.41%) | 39 (79.59%) | |
| Nurse practitioner | 2 (2.41%) | 1 (2.94%) | 1 (2.04%) | |
| Physician assistant | 15 (18.07%) | 6 (17.65%) | 9 (18.37%) | |
| Employment type, No. (%) | | | | .889 |
| Full-time | 52 (62.65%) | 21 (61.76%) | 31 (63.27%) | |
| Part-time | 31 (37.35%) | 13 (38.24%) | 18 (36.73%) | |

PE risk prediction tool display, finalization, and adoption

A total of 1527 patient encounters in which a CTPA order was initiated were included in the analysis (Table 2). The tool was displayed to providers in 20.50% of these encounters (313 of 1527). Once displayed, the tool was finalized in 95.85% of encounters (300 of 313). Overall tool adoption among finalized encounters was 31.67% (95 of 300). In unadjusted analyses, tool adoption was significantly higher at the ED that received the tool with incorporated behavioral nudges (39.11% vs 20.66%; P < .001).

Table 2.

PE risk prediction tool display, finalization, and adoption.

| Characteristic, No. (%) | Overall (N = 1527) | Control (N = 734) | Intervention (N = 793) | P |
|---|---|---|---|---|
| CDS displayed | 313 (20.50%) | 129 (17.57%) | 184 (23.20%) | .006 |
| Finalization | 300 (95.85%) | 121 (93.80%) | 179 (97.28%) | .128 |
| Adoption (acceptance) | 95 (31.67%) | 25 (20.66%) | 70 (39.11%) | <.001 |
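As a worked check of the adoption comparison in Table 2, the sketch below recomputes the adoption percentages and an unadjusted Pearson Chi-squared test from the reported counts (70 of 179 finalized encounters adopted at the intervention ED vs 25 of 121 at the control ED). It is an illustration in plain Python rather than the authors' R analysis. It assumes no continuity correction, which the article does not specify, and the function name is hypothetical.

```python
import math


def pearson_chi2_2x2(a: int, b: int, c: int, d: int):
    """Pearson Chi-squared test for the 2x2 table [[a, b], [c, d]].

    Assumes no continuity correction. With 1 degree of freedom, the
    upper-tail probability equals erfc(sqrt(chi2 / 2)).
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Counts from Table 2: adopted vs not adopted among finalized encounters.
intervention_adopted, intervention_finalized = 70, 179
control_adopted, control_finalized = 25, 121

intervention_pct = 100 * intervention_adopted / intervention_finalized
control_pct = 100 * control_adopted / control_finalized
overall_pct = 100 * (intervention_adopted + control_adopted) / (
    intervention_finalized + control_finalized
)

chi2, p_value = pearson_chi2_2x2(
    intervention_adopted, intervention_finalized - intervention_adopted,
    control_adopted, control_finalized - control_adopted,
)
print(f"adoption: {intervention_pct:.2f}% vs {control_pct:.2f}% "
      f"(overall {overall_pct:.2f}%)")
print(f"chi2 = {chi2:.2f}, P = {p_value:.4f}")
```

The recomputed percentages match the reported 39.11%, 20.66%, and 31.67%, which confirms that adoption is expressed as a share of finalized encounters, and the resulting P value falls below .001.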

CTPA hit rate and tool adoption

Of the 1527 encounters in the study, 1347 had a completed CTPA order, and 256 of these (19.01%) had a PE detected. The PE detection rate was significantly higher at the intervention ED than at the control ED (26.45% vs 10.76%; P < .001). By comparison, baseline pre-intervention hit rates, calculated as 3-month averages (May 1, 2021-July 31, 2021), were 9% for the intervention ED and 10% for the control ED. Overall, for both EDs combined, the CTPA hit rate was higher when the tool was adopted than when it was not (30.88% vs 15.93%; P = .009).

There were no missed PEs at either ED during the study dates.

Discussion

We designed, developed, and pilot tested a CDS tool for PE risk prediction that incorporated behavioral nudges to reduce the rate of unnecessary CTPA testing in the ED. The tool was developed on an EHR-agnostic web-based platform. Our study demonstrated feasibility of this technical and design approach. Additionally, we demonstrated preliminary efficacy of the incorporated behavioral nudges: provider CDS tool adoption rates were higher with the nudges, indicating more appropriate ordering behavior. The increase from baseline to study hit rate was also larger for the ED receiving the behavioral nudges. The higher hit rates observed with tool adoption are consistent with previous work showing a 25% reduction in CTPA testing with tool use. These pilot results support proceeding to a well-powered randomized controlled clinical trial.

Our findings are consistent with the growing literature supporting the use of behavioral design techniques for impacting provider behavior. Several studies and systematic reviews have shown that peer comparison, default, and salient messaging nudge types are acceptable, feasible, and impactful.26–31 The growing literature has also detailed key design aspects that are important for high-quality, impactful nudges.32 Small design choices can significantly impact nudge effect. For example, for peer-comparison nudges, simple feedback on one metric designed to minimize cognitive load, delivered frequently, with a comparison group similar to the target group is more likely to be impactful. We incorporated these and many other key design considerations. Notably, this is the first study to incorporate a peer-comparison nudge within the user interface of a CDS tool, providing peer-comparison feedback synchronously with the target behavior.

CDS tool adoption rates at both sites were high in comparison to those reported in meta-analyses of CDS, where typical adoption rates are as low as 10%.33–37 The user-centered approach to designing the tools likely contributed to this. Both versions of the tool, with and without nudges, included an automated EHR-based PE risk assessment, which minimized the clicks needed to complete the tool and substantially reduced how often the tool was triggered. The tool was displayed in only about 20% of encounters in which providers placed an order for CTPA, reducing alert fatigue, which is a significant contributor to low tool adoption. The tool’s development on a web-based platform additionally allowed for a simple, intuitive user interface that is often not possible when constrained by EHR functionality.

Our study has limitations. First, this was a nonrandomized pilot study where differences between the 2 EDs may have contributed to differences in observed provider CDS tool adoption. For example, there were significant differences in the diversity of the patient populations at the 2 EDs. Second, findings might not generalize to EDs dissimilar to those enrolled. Third, results are dependent on EHR data collected during routine clinical practice, which can be imperfect; however, validity was demonstrated for key metrics.24 Lastly, our safety analyses were limited to return visits to the study site health system, so if a patient presented to a site outside of the study site health system, it would not have been captured.

Conclusion

We applied insights from behavioral science to design, develop, and pilot test a CDS tool for PE risk prediction that incorporated behavioral nudges to reduce the rate of unnecessary CTPA testing in the ED. The tool was developed on an EHR-agnostic web-based platform, designed for dissemination. We demonstrated feasibility and preliminary efficacy of this approach. The tool is well-positioned to be tested in a randomized clinical trial.

Contributor Information

Safiya Richardson, New York University (NYU) Langone, New York, NY 10016, United States.

Katherine L Dauber-Decker, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States.

Jeffrey Solomon, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States.

Pradeep Seelamneni, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States.

Sundas Khan, Center for Innovations in Quality, Effectiveness and Safety, Michael E. DeBakey Veterans Affairs Medical Center, Houston, TX 77030, United States; Baylor College of Medicine, Houston, TX 77030, United States.

Douglas P Barnaby, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States; Northwell/Zucker School of Medicine, Hempstead, NY 11549, United States.

John Chelico, CommonSpirit Health, Chicago, IL 60606, United States.

Michael Qiu, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States.

Yan Liu, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States.

Shreya Sanghani, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States.

Stephanie M Izard, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States.

Codruta Chiuzan, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States.

Devin Mann, New York University (NYU) Langone, New York, NY 10016, United States.

Renee Pekmezaris, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States; Northwell/Zucker School of Medicine, Hempstead, NY 11549, United States.

Thomas McGinn, Baylor College of Medicine, Houston, TX 77030, United States; CommonSpirit Health, Chicago, IL 60606, United States.

Michael A Diefenbach, Feinstein Institutes for Medical Research, Northwell Health, Manhasset, NY 11030, United States; Northwell/Zucker School of Medicine, Hempstead, NY 11549, United States.

Author contributions

All authors contributed to the conception of the work, the acquisition or analysis of data, and the drafting of intellectual content. All authors are accountable for all aspects of the work and provided final approval of the work.

Funding

This work was supported by National Heart, Lung, and Blood Institute grant number K23HL145114.

Conflicts of interest

None declared.

Data availability

The data underlying this article will be shared on reasonable request to the corresponding author.

References

- 1. Mitchell AM, Jones AE, Tumlin JA, Kline JA. Prospective study of the incidence of contrast‐induced nephropathy among patients evaluated for pulmonary embolism by contrast‐enhanced computed tomography. Acad Emerg Med. 2012;19(6):618-625.

- 2. Niemann T, Zbinden I, Roser H, Bremerich J, Remy-Jardin M, Bongartz G. Computed tomography for pulmonary embolism: assessment of a 1-year cohort and estimated cancer risk associated with diagnostic irradiation. Acta Radiol. 2013;54(7):778-784.

- 3. Little MP, Azizova TV, Richardson DB, et al. Ionising radiation and cardiovascular disease: systematic review and meta-analysis. BMJ. 2023;380:e072924.

- 4. Qaseem A, Snow V, Barry P, Joint American Academy of Family Physicians/American College of Physicians Panel on Deep Venous Thrombosis/Pulmonary Embolism, et al. Current diagnosis of venous thromboembolism in primary care: a clinical practice guideline from the American Academy of Family Physicians and the American College of Physicians. Ann Intern Med. 2007;146(6):454-458.

- 5. Wang RC, Bent S, Weber E, Neilson J, Smith-Bindman R, Fahimi J. The impact of clinical decision rules on computed tomography use and yield for pulmonary embolism: a systematic review and meta-analysis. Ann Emerg Med. 2016;67(6):693.e3-701.e3.

- 6. Costantino MM, Randall G, Gosselin M, Brandt M, Spinning K, Vegas CD. CT angiography in the evaluation of acute pulmonary embolus. AJR Am J Roentgenol. 2008;191(2):471-474.

- 7. Yan Z, Ip IK, Raja AS, Gupta A, Kosowsky JM, Khorasani R. Yield of CT pulmonary angiography in the emergency department when providers override evidence-based clinical decision support. Radiology. 2017;282(3):717-725.

- 8. Mills AM, Ip IK, Langlotz CP, Raja AS, Zafar HM, Khorasani R. Clinical decision support increases diagnostic yield of computed tomography for suspected pulmonary embolism. Am J Emerg Med. 2018;36(4):540-544.

- 9. Raja AS, Ip IK, Prevedello LM, et al. Effect of computerized clinical decision support on the use and yield of CT pulmonary angiography in the emergency department. Radiology. 2012;262(2):468-474.

- 10. Richardson S, Cohen S, Khan S, et al. Higher imaging yield when clinical decision support is used. J Am Coll Radiol. 2020;17(4):496-503.

- 11. Soo Hoo GW, Wu CC, Vazirani S, Li Z, Barack BM. Does a clinical decision rule using D-dimer level improve the yield of pulmonary CT angiography? AJR Am J Roentgenol. 2011;196(5):1059-1064.

- 12. Drescher FS, Chandrika S, Weir ID, et al. Effectiveness and acceptability of a computerized decision support system using modified wells criteria for evaluation of suspected pulmonary embolism. Ann Emerg Med. 2011;57(6):613-621.

- 13. Deblois S, Chartrand‐Lefebvre C, Toporowicz K, Chen Z, Lepanto L. Interventions to reduce the overuse of imaging for pulmonary embolism: a systematic review. J Hosp Med. 2018;13(1):52-61.

- 14. Newnham M, Stone H, Summerfield R, Mustfa N. Performance of algorithms and pre-test probability scores is often overlooked in the diagnosis of pulmonary embolism. BMJ. 2013;346:f1557.

- 15. Hsu N, Hoo GWS. Underuse of clinical decision rules and d-dimer in suspected pulmonary embolism: a nationwide survey of the veterans administration healthcare system. J Am Coll Radiol. 2020;17(3):405-411.

- 16. National Academies of Sciences, Engineering, and Medicine. Achieving Excellence in the Diagnosis of Acute Cardiovascular Events: Proceedings of a Workshop–In Brief. The National Academies Press; 2021.

- 17. Venkatesh AK, Kline JA, Courtney DM, et al. Evaluation of pulmonary embolism in the emergency department and consistency with a national quality measure: quantifying the opportunity for improvement. Arch Intern Med. 2012;172(13):1028-1032.

- 18. Thaler RH, Sunstein CR. Nudge: Improving Decisions about Health, Wealth, and Happiness. Penguin; 2009.

- 19. Johnson EJ, Goldstein D. Do defaults save lives? Science. 2003;302(5649):1338-1339.

- 20. Charlson ME, Pompei P, Ales KL, MacKenzie CR. A new method of classifying prognostic comorbidity in longitudinal studies: development and validation. J Chronic Dis. 1987;40(5):373-383.

- 21. Richardson S, Dauber-Decker K, Solomon J, et al. Nudging health care providers’ adoption of clinical decision support: protocol for the user-centered development of a behavioral economics-inspired electronic health record tool. JMIR Res Protoc. 2023;12(1):e42653.

- 22. Michie S, Van Stralen MM, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implementation Sci. 2011;6(1):1-12.

- 23. Richardson S, Dauber-Decker KL, McGinn T, Barnaby DP, Cattamanchi A, Pekmezaris R. Barriers to the use of clinical decision support for the evaluation of pulmonary embolism: qualitative interview study. JMIR Hum Factors. 2021;8(3):e25046.

- 24. Zhang NJ, Rameau P, Julemis M, et al. Automated pulmonary embolism risk assessment using the wells criteria: validation study. JMIR Form Res. 2022;6(2):e32230.

- 25. van Belle A, Büller HR, Huisman MV, Christopher Study Investigators, et al. Effectiveness of managing suspected pulmonary embolism using an algorithm combining clinical probability, D-dimer testing, and computed tomography. J Am Med Assoc. 2006;295(2):172-179.

- 26. Adusumalli S, Kanter GP, Small DS, et al. Effect of nudges to clinicians, patients, or both to increase statin prescribing: a cluster randomized clinical trial. JAMA Cardiol. 2022;8(1):23-30. 10.1001/jamacardio.2022.4373.

- 27. Belli HM, Chokshi SK, Hegde R, et al. Implementation of a behavioral economics electronic health record (BE-EHR) module to reduce overtreatment of diabetes in older adults. J Gen Intern Med. 2020;35(11):3254-3261.

- 28. Meeker D, Linder JA, Fox CR, et al. Effect of behavioral interventions on inappropriate antibiotic prescribing among primary care practices: a randomized clinical trial. J Am Med Assoc. 2016;315(6):562-570.

- 29. Yoong SL, Hall A, Stacey F, et al. Nudge strategies to improve healthcare providers’ implementation of evidence-based guidelines, policies and practices: a systematic review of trials included within Cochrane systematic reviews. Implementation Sci. 2020;15(1):1-30.

- 30. Wang SY, Groene O. The effectiveness of behavioral economics-informed interventions on physician behavioral change: a systematic literature review. PLoS One. 2020;15(6):e0234149.

- 31. Last BS, Buttenheim AM, Timon CE, Mitra N, Beidas RS. Systematic review of clinician-directed nudges in healthcare contexts. BMJ Open. 2021;11(7):e048801.

- 32. Fox CR, Doctor JN, Goldstein NJ, Meeker D, Persell SD, Linder JA. Details matter: predicting when nudging clinicians will succeed or fail. BMJ. 2020;370:m3256.

- 33. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157(1):29-43.

- 34. Van Der Sijs H, Aarts J, Vulto A, Berg M. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc. 2006;13(2):138-147.

- 35. Chow A, Ang A, Chow C, et al. Implementation hurdles of an interactive, integrated, point-of-care computerised decision support system for hospital antibiotic prescription. Int J Antimicrob Agents. 2016;47(2):132-139.

- 36. Liberati EG, Ruggiero F, Galuppo L, et al. What hinders the uptake of computerized decision support systems in hospitals? A qualitative study and framework for implementation. Implementation Sci. 2017;12(1):1-13.

- 37. Moxey A, Robertson J, Newby D, Hains I, Williamson M, Pearson S-A. Computerized clinical decision support for prescribing: provision does not guarantee uptake. J Am Med Inform Assoc. 2010;17(1):25-33.
