Abstract

The pharynx is one of the few areas in the body where blood vessels and immune tissues can readily be observed non-invasively from outside the body. Although prior studies have found that sex can be identified from retinal images using artificial intelligence, it remains unknown whether individuals’ sex can also be identified from pharyngeal images. Demographic information and pharyngeal images were collected from patients who visited 64 primary care clinics in Japan for influenza-like symptoms. We trained a deep learning-based classification model to predict reported sex, which incorporated a multiple instance convolutional neural network, on 20,319 images from 51 clinics. Validation was performed using 4869 images from the remaining 13 clinics, which were not used for training. The performance of the classification model was assessed using the area under the receiver operating characteristic curve. To interpret the model, we proposed a framework that combines a saliency map and an organ segmentation map to quantitatively evaluate salient regions. The model achieved an area under the receiver operating characteristic curve of 0.883 (95% CI 0.866–0.900). In subgroup analyses, classification performance was substantially higher for individuals aged 20 and older, indicating that sex-specific patterns may manifest as humans age (e.g., after puberty). The saliency map suggested that the model primarily focused on the posterior pharyngeal wall and the uvula. Our study revealed the potential utility of pharyngeal images by accurately identifying individuals’ reported sex using a deep learning algorithm.

Subject terms: Computer science, Translational research

Introduction

Deep learning is a branch of artificial intelligence that involves neural network models with multiple processing layers. These layers enable the learning of data representations at various levels of abstraction [1]. Deep learning has been increasingly used in various medical fields and has demonstrated diagnostic accuracy comparable to that of human experts in many areas of radiology [2]. Deep learning is a powerful tool for predictive modeling, but due to their structural complexity, deep learning algorithms are often not directly interpretable by humans, and explainability has been a major challenge in their clinical application [3].

Recent studies have found that deep learning algorithms can be applied to retinal images or chest radiographs (CXRs) to accurately identify individuals’ systemic health [4,5] and reported sex [6,7]. These studies, which predict individuals’ systemic information from their local appearance, have the potential to lead to physiological discoveries and expand existing knowledge of pathology. This approach has been applied to retinal images and CXRs, but it remains unclear whether other modalities, such as pharyngeal images, could also be used to identify individuals’ systemic information.

The pharynx is a region composed of mucous membranes, tonsils, lymphatic tissues, and muscles. Acute upper respiratory infections are known to cause inflammation of the pharynx, resulting in pharyngeal redness, tonsillar exudates, and other visual changes in the region [8]. Palatal petechiae and tonsillar exudate are specific signs of bacterial infections such as streptococcal infections [9,10], and in the case of influenza, the development of unique follicles on the posterior pharyngeal wall has been reported [11,12]. Furthermore, a recent study used deep learning algorithms on pharyngeal images to diagnose influenza with high accuracy [13]. Given that the pharynx is one of the few areas in the body where blood vessels and immune tissues can readily be observed from outside the body, pharyngeal images may also be informative in identifying individuals’ systemic information, such as reported sex. Compared to retinal images, which require special retinal imaging devices, and CXRs, which subject the individual to radiation exposure, pharyngeal images can be captured with much lower invasiveness using a standard smartphone camera or a small medical camera.

This study aims to provide new insights into physiology by developing a deep learning algorithm that identifies sex from pharyngeal images. Additionally, to address the explainability issue of deep learning models, we propose a quantitative interpretation framework that combines an instance segmentation [14] model that identifies tissues in the pharynx with saliency heatmaps of the classification model.

Methods

Study design

We conducted a secondary analysis of data from a clinical trial of patients who visited a clinic with influenza-like symptoms (registered with the Japan Registry of Clinical Trials as jRCTs032190120). In the original clinical trial, patients who visited 64 primary care clinics across Japan between November 2019 and January 2020 were recruited, and pharyngeal images and patient background data were collected. In this study, in addition to applying the original clinical trial’s inclusion and exclusion criteria (Supplementary Table S1), we excluded patients for whom no image passed the image screening module described below. Also, to limit biases that chronic diseases could introduce in the pharynx, patients with a history of hypertension, diabetes, or dyslipidemia were excluded.

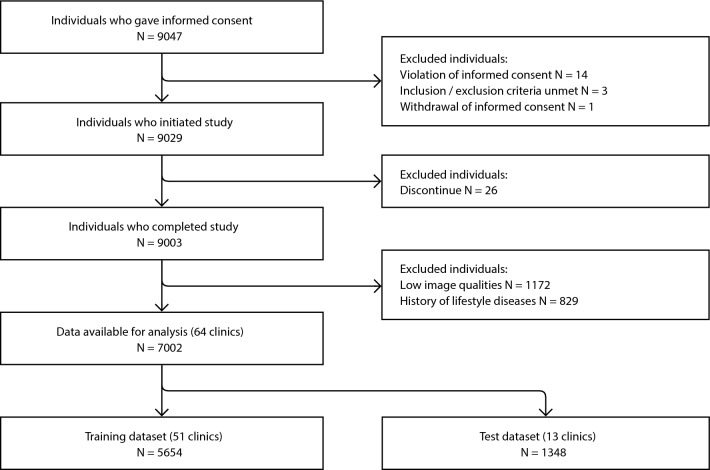

The patient flowchart is shown in Fig. 1. A total of 7002 patients were included in the analysis. Patients who met the criteria were divided into the training dataset (approximately 80%) and the test dataset (approximately 20%). The training dataset was used for the development and internal validation of the deep learning algorithm. The test dataset was blinded during the training phase and was used only for the final evaluation. Considering the possibility of target leakage due to variations in physicians’ techniques and to ensure external validity, the data was split in a way that there was no overlap of clinics between the training and test datasets. The training dataset included 20,319 pharyngeal images from 5654 patients from 51 clinics, while the test dataset included 4869 throat images from 1348 patients collected from 13 clinics. The baseline characteristics of both datasets are shown in Table 1. The study protocol was approved by the ethics committee of Health Outcome Research Institute (submission id 2024-05). All methods were performed in accordance with the relevant guidelines and regulations.
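The clinic-level split described above can be illustrated with a minimal sketch. The paper does not state how clinics were assigned to each dataset, so the shuffling, the greedy fill rule, and the names `split_by_clinic`, `test_fraction`, and `seed` are assumptions made for illustration only.

```python
import random
from collections import defaultdict


def split_by_clinic(patient_clinics, test_fraction=0.2, seed=0):
    """Split patients so that no clinic appears in both datasets.

    patient_clinics: dict mapping patient id -> clinic id (hypothetical format).
    Returns (train_ids, test_ids).
    """
    by_clinic = defaultdict(list)
    for patient_id, clinic_id in patient_clinics.items():
        by_clinic[clinic_id].append(patient_id)

    # Shuffle whole clinics, not patients, so a clinic is never divided.
    clinics = sorted(by_clinic)
    random.Random(seed).shuffle(clinics)

    target = test_fraction * len(patient_clinics)
    train_ids, test_ids = [], []
    for clinic_id in clinics:
        # Assign clinics to the test set until it holds roughly the target
        # share of patients; all remaining clinics go to training.
        if len(test_ids) < target:
            test_ids.extend(by_clinic[clinic_id])
        else:
            train_ids.extend(by_clinic[clinic_id])
    return train_ids, test_ids
```

Because whole clinics are assigned, the resulting proportions are only approximately 80/20, which matches the "approximately" wording used for the datasets.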

Figure 1.

Patient flowchart.

Table 1.

Dataset characteristics.

| | Training dataset (N = 5654/51 clinics) | | Test dataset (N = 1348/13 clinics) | |
|---|---|---|---|---|
| | Male | Female | Male | Female |
| Participants, n (%) | 2703 (47.8) | 2951 (52.2) | 714 (53.0) | 634 (47.0) |
| Age, mean (SD) | 30.5 (16.2) | 32.3 (16.3) | 26.4 (16.8) | 30.2 (17.5) |
| Age ≦ 10, n (%) | 376 (13.9) | 345 (11.7) | 178 (25.0) | 111 (17.5) |
| 10 < age ≦ 20, n (%) | 468 (17.3) | 455 (15.4) | 144 (20.2) | 111 (17.5) |
| 20 < age ≦ 40, n (%) | 1086 (40.2) | 1189 (40.3) | 225 (31.5) | 219 (34.5) |
| 40 < age, n (%) | 773 (28.6) | 962 (32.6) | 167 (23.4) | 193 (30.4) |

In this study, a special medical camera [13], equipped with a light source that could illuminate deep into the throat and a part to hold down the patient’s tongue, was used to obtain high-quality pharyngeal images. Supplementary Figure S1 provides details about this camera. Along with the pharyngeal images, the patient’s age, reported sex, and medical history were also collected at the clinic.

Development of the classification model

In developing the algorithm to identify sex from pharyngeal images, there were two major challenges. The first was the variation in image quality due to factors such as the blur caused by the patient’s movement or camera shake. The second was how to capture the three-dimensional structure of the pharynx into two-dimensional images while minimizing the loss of information.

To address the variation in the quality of input images, a physician in the research team (MF) annotated a subset of images in the training dataset based on five visual criteria (visibility of pharynx, focus, brightness, motion blur, and fog). Based on these annotations, we built an image screening module to infer the quality of images. The image screening module adopted a lightweight convolutional neural network (CNN) architecture based on MobileNetV2 [15] and achieved a sensitivity of 0.914 and a specificity of 0.988 in the internal validation dataset consisting of 535 images. As shown in Fig. 1, out of 9003 patients, 1172 had no image that passed the image screening module.

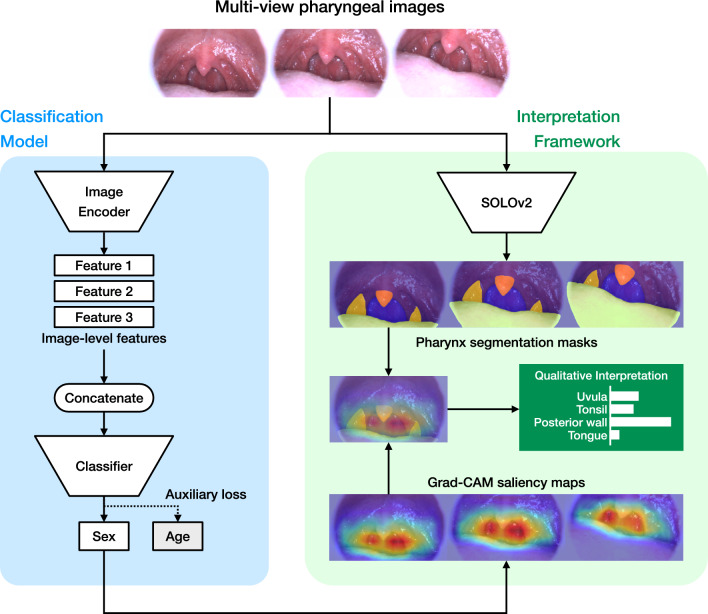

To efficiently extract the three-dimensional information of the pharynx, we designed a multiple instance CNN (MI-CNN) that inputs multiple images simultaneously. The structure of the model is shown in Fig. 2. The MI-CNN inputs images taken from multiple directions and locations in the throat into a CNN-based feature extractor (encoder) to obtain image-level features. The features from multiple images are concatenated and passed to a multilayer perceptron classifier to obtain the final predictions.
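The data flow of the MI-CNN (shared encoder, concatenation, classifier) can be sketched with a toy forward pass. This is not the authors’ implementation: a single linear layer with ReLU stands in for the CNN encoder, a single linear layer with a sigmoid stands in for the multilayer perceptron, and the function names and weight layout are hypothetical.

```python
import math


def encode(image, enc_weights):
    """Toy stand-in for the CNN encoder: linear map + ReLU on a flattened image."""
    return [max(0.0, sum(w * x for w, x in zip(row, image))) for row in enc_weights]


def mi_cnn_forward(images, enc_weights, clf_weights, clf_bias):
    """Return a score in (0, 1) for one patient from several images."""
    # The same encoder (shared weights) is applied to every image.
    features = [encode(image, enc_weights) for image in images]
    # Image-level features are concatenated into one patient-level vector.
    concatenated = [value for feature in features for value in feature]
    # Toy classifier: one linear layer followed by a sigmoid.
    logit = sum(w * v for w, v in zip(clf_weights, concatenated)) + clf_bias
    return 1.0 / (1.0 + math.exp(-logit))
```

With three input images and a feature size of `d` per image, the classifier receives a vector of length `3 * d`, so the number of input images has to be fixed when the classifier is defined.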

Figure 2.

Model architecture. Multiple input images are fed into the classification model, where an encoder calculates the features of each image. These features are then concatenated, and the classifier outputs a sex prediction score. During this process, saliency maps for each image are generated from the gradients flowing through the neural network. The input images are also fed into the SOLO model, which outputs segmentation masks identifying the regions of the pharynx. By overlaying these masks with the saliency maps, we can quantitatively evaluate the areas of the model’s focus. CNN convolutional neural network, SOLO segmenting objects by locations, Grad-CAM gradient-weighted class activation mapping.

To achieve higher accuracy, we conducted preliminary experiments on hyperparameters such as the architecture of the encoder, the number of input images for the MI-CNN, the resolution of input images, and the use of auxiliary loss functions. Based on the results (Supplementary Table S2), we adopted ConvNeXt-base [16] for the encoder architecture, three for the number of input images, and 384 × 384 × 3 for the image resolution. The auxiliary loss function is a regularization technique used in models such as GoogLeNet [17] and AlphaFold2 [18]. By adding the regression loss for age (mean squared error) to the classification loss for sex (binary cross-entropy), we observed improved generalization performance.
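The combined objective can be written as a short sketch. The relative weighting of the two terms is not given in the text, so the `age_weight` parameter (defaulting to 1.0) and the function names are assumptions.

```python
import math


def binary_cross_entropy(prob, label, eps=1e-7):
    """Cross-entropy for one prediction; prob is clipped to avoid log(0)."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def combined_loss(sex_probs, sex_labels, age_preds, ages, age_weight=1.0):
    """Sex classification loss plus an auxiliary age regression loss."""
    n = len(sex_labels)
    classification = sum(
        binary_cross_entropy(p, y) for p, y in zip(sex_probs, sex_labels)
    ) / n
    # Auxiliary term: mean squared error between predicted and true age.
    regression = sum((pred - age) ** 2 for pred, age in zip(age_preds, ages)) / n
    return classification + age_weight * regression
```

In practice the age term is usually rescaled (for example by normalizing age) so that it does not dominate the classification term; whether the authors did so is not stated.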

During the training phase, five-fold cross-validation was performed. The parameters and configurations used for training are listed in Supplementary Table S3. Transfer learning [19] was performed for the encoder using weights pre-trained on ImageNet [20]. Our classification algorithm is an ensemble consisting of the models from five-fold cross-validation, and the final prediction was the average of the output values from the five models.
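The ensemble step amounts to a simple average, sketched below. Each model is represented as a hypothetical callable that maps a patient’s images to a score; this interface is an assumption for illustration.

```python
def ensemble_predict(models, images):
    """Average the output scores of the fold models for one patient."""
    scores = [model(images) for model in models]
    return sum(scores) / len(scores)
```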

Development of the interpretation framework

To address the issue of interpretability in deep learning and to lead to new physiological discoveries, we have devised the following quantitative interpretation framework for deep learning models: (1) calculate a saliency heatmap that represents the focus areas of the classification model; (2) calculate a segmentation map that identifies the tissues in pharynx; (3) overlay the saliency map and segmentation map to quantify the intensity of focus on each tissue; (4) extract tissues with particularly high focus, and calculate and compare geometric and color-related features.
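Step (3) of the framework can be sketched as follows. The text does not specify how the intensity of focus is aggregated within a tissue, so averaging the saliency values inside each mask is an assumption, as are the function name and the nested-list data layout.

```python
def mean_saliency_per_region(saliency, masks):
    """Average saliency inside each tissue mask.

    saliency: 2D list of floats (e.g. a Grad-CAM map scaled to [0, 1]).
    masks: dict mapping tissue name -> 2D list of 0/1 values, same shape.
    """
    result = {}
    for name, mask in masks.items():
        total, count = 0.0, 0
        for saliency_row, mask_row in zip(saliency, mask):
            for value, inside in zip(saliency_row, mask_row):
                if inside:
                    total += value
                    count += 1
        # A tissue that was not detected in the image has no defined value.
        result[name] = total / count if count else float("nan")
    return result
```

Aggregating these per-image values over the test set gives one distribution per tissue, which is the kind of summary that can then be compared across regions.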

A subset of the training data (2000 images) and annotations for the tissue regions provided by a physician in the research team (TH) were used for the development of the instance segmentation model. We constructed a neural network with the SOLOv2 [21] architecture and trained it to detect the posterior pharyngeal wall, tongue, tonsils, and uvula. Out of a total of 2000 images, 399 were used for evaluation, and the average precision was 0.921 for the posterior wall, 0.826 for the tongue, 0.573 for the tonsils, and 0.590 for the uvula. Gradient-weighted class activation mapping (Grad-CAM), a technique that produces a localization map highlighting the important regions for prediction based on the gradients flowing in the neural network [22], was used to generate saliency heatmaps of the classification model.

For each segmented part, the following geometric features were calculated using OpenCV [23]: area, perimeter, aspect ratio, solidity, convexity, and circularity. Solidity is defined as the ratio of the part’s area to the area of its convex hull, i.e., the smallest convex shape that completely encloses the part. Convexity is defined as the ratio of the perimeter of the convex hull to the perimeter of the part and indicates the extent to which a shape is convex. Circularity is a measure of how closely the shape of the part resembles a circle, defined as the ratio of the area of the part to the square of its perimeter. For color-related features, redness and relative redness were calculated. Redness is defined as the R (red) component divided by the sum of the RGB values for each pixel, averaged within the part of interest. Relative redness is defined as the redness of the part of interest divided by the redness of the entire image.
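The feature definitions above can be made concrete with a small sketch. The study used OpenCV; the code below is a standard-library stand-in that treats a part as a polygon contour given as a list of `(x, y)` tuples, and all function names are hypothetical. Circularity follows the definition in the text (area divided by squared perimeter); a commonly used normalized variant multiplies this by 4π so that a circle scores 1.

```python
import math


def polygon_area(points):
    """Shoelace formula for a closed polygon."""
    pairs = zip(points, points[1:] + points[:1])
    return abs(sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in pairs)) / 2.0


def polygon_perimeter(points):
    return sum(math.dist(p, q) for p, q in zip(points, points[1:] + points[:1]))


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    points = sorted(set(points))
    if len(points) <= 2:
        return points

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half_hull(ordered):
        chain = []
        for p in ordered:
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain

    lower, upper = half_hull(points), half_hull(reversed(points))
    return lower[:-1] + upper[:-1]


def shape_features(contour):
    hull = convex_hull(contour)
    area, perimeter = polygon_area(contour), polygon_perimeter(contour)
    return {
        "area": area,
        "perimeter": perimeter,
        "solidity": area / polygon_area(hull),
        "convexity": polygon_perimeter(hull) / perimeter,
        "circularity": area / perimeter ** 2,
    }


def redness(pixels):
    """Mean of R / (R + G + B) over (r, g, b) pixels; black pixels are skipped."""
    ratios = [r / (r + g + b) for r, g, b in pixels if r + g + b > 0]
    return sum(ratios) / len(ratios)


def relative_redness(part_pixels, image_pixels):
    return redness(part_pixels) / redness(image_pixels)
```

For a convex shape such as a square, solidity and convexity both equal 1; an L-shaped contour has a solidity below 1 because its convex hull covers more area than the part itself.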

Statistical analysis

The performance of the classification model was evaluated by calculating the area under the receiver operating characteristic curve (AUROC). To further examine the characteristics of the classification performance, an additional analysis stratified by age (10 years or younger, 10–20 years, 20–40 years, and over 40 years) was also performed. The confidence interval calculation and testing for AUROC were performed using DeLong’s method [24], and P values < 0.05 were considered significant.
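As a reminder of what the AUROC measures, the following sketch computes it as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. This is the point estimate only; the confidence interval from DeLong’s method is not implemented here, and the function name is hypothetical.

```python
def auroc(scores, labels):
    """AUROC from the pairwise (Mann-Whitney) formulation; labels are 0 or 1."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(
        (p > n) + 0.5 * (p == n) for p in positives for n in negatives
    )
    return wins / (len(positives) * len(negatives))
```

The pairwise loop is quadratic in the number of samples, which is acceptable for illustration; rank-based implementations give the same value more efficiently.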

Results

Classification results

The classification model exhibited an AUROC of 0.883 (95% CI 0.866–0.900) on the whole test dataset (Fig. 3). The cutoff threshold that maximizes sensitivity + specificity was 0.445, with a sensitivity of 0.822 and a specificity of 0.756. When classified at this threshold, 155 women and 127 men were misclassified among a total of 1348 subjects. In the stratified analysis, the classification model showed relatively low performance with AUROC of 0.561 and 0.767 in the groups of ages 10 or younger and teenagers, respectively. In contrast, it demonstrated extremely high classification performance with AUROC of 0.965 and 0.963 in the groups of ages 20–40 and over 40, respectively.
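Choosing the cutoff that maximizes sensitivity + specificity is equivalent to maximizing Youden’s J statistic. The sketch below scans the observed scores as candidate thresholds; the function name and the convention that a score at or above the threshold counts as positive are assumptions. The last lines check that the reported sensitivity and specificity agree with the misclassification counts and the test set sizes in Table 1 if male is taken as the positive class, which is itself an inference from the numbers rather than something stated in the text.

```python
def youden_threshold(scores, labels):
    """Return (threshold, sensitivity, specificity) maximizing sens + spec."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best_sum, best = -1.0, None
    for threshold in sorted(set(scores)):
        true_pos = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
        true_neg = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
        sensitivity, specificity = true_pos / n_pos, true_neg / n_neg
        if sensitivity + specificity > best_sum:
            best_sum = sensitivity + specificity
            best = (threshold, sensitivity, specificity)
    return best


# Consistency check on the reported figures (714 men, 634 women in the test set).
sensitivity_check = round(1 - 127 / 714, 3)  # men correctly classified
specificity_check = round(1 - 155 / 634, 3)  # women correctly classified
```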

Figure 3.

Classification model evaluation. The cutoff threshold that maximizes sensitivity + specificity was 0.445, with a sensitivity of 0.822 and a specificity of 0.756.

Interpretation results

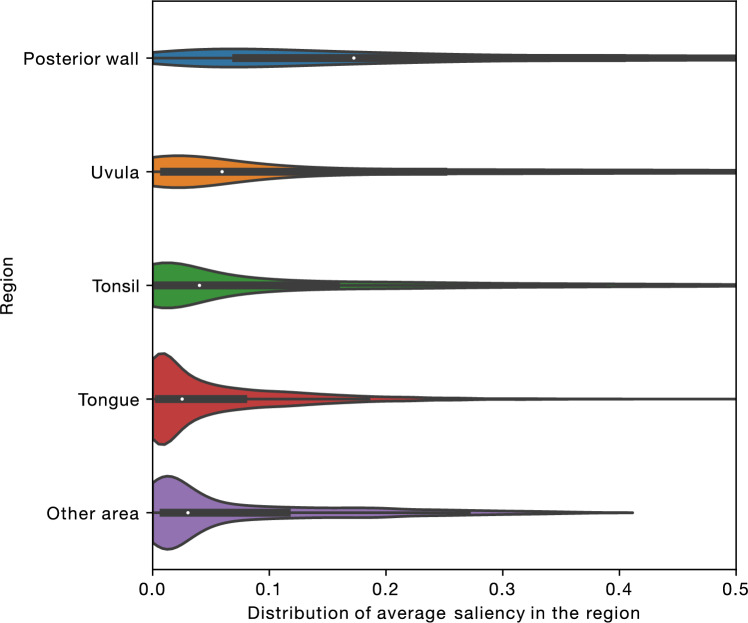

By combining the throat segmentation outcomes and the saliency heatmap of the classification model, we found that the classification model focused specifically on the posterior pharyngeal wall and the uvula (Fig. 4). Based on these results, we calculated the visual features (both geometric and color-related) of the posterior pharyngeal wall and the uvula. According to Supplementary Figure S2, the uvulae of patients predicted to be male had larger areas and perimeters and were redder. For the posterior pharyngeal wall, no clear differences in contour or color were observed between males and females, suggesting that sex-specific patterns may instead emerge within the texture of the posterior wall.

Figure 4.

Grad-CAM analysis. A box-and-whisker plot is shown in each violin. The neural network places higher attention on the uvula and the posterior pharyngeal wall compared to other regions.

Discussion

We found that people’s reported sex can be identified with high accuracy from pharyngeal images using deep learning. To our knowledge, this is the first study to demonstrate the utility of pharyngeal images in identifying reported sex. Furthermore, we discovered that the classification performance of our model depends on age, achieving extremely high accuracy with an AUROC exceeding 0.96 in patients over 20 years old. This suggests that differences between males and females associated with growth are present in pharyngeal images.

According to the results of our interpretation framework, which combines instance segmentation and saliency heatmaps, the posterior pharyngeal wall and uvula are the regions of importance. We found that men have a larger and redder uvula than women. This is consistent with a previous study which reported that, in the context of sleep apnea, men are more likely than women to have an enlarged uvula [25]. The color difference may reflect differences in the immune response of the throat mucosa, which could provide clues to explain sex differences in various diseases in the future. Although no remarkable differences in the shape or size of the contours of the posterior wall were observed, the model’s focus on this region suggests that other characteristics, such as unique patterns in the texture, may be present.

Our study was inspired by prior studies that identified age and sex from retinal images [4,6]. Beyond this past research, our study provided a framework that quantifies, aggregates, and visualizes the focus areas of deep learning algorithms. Although a direct comparison of accuracy is not possible due to differences in the datasets used, we found that it is possible to identify sex from pharyngeal images with an accuracy comparable to that from retinal images. The strength of pharyngeal images is that they can be captured with low invasiveness using consumer devices such as smartphones. We used a custom camera specifically designed for pharynx imaging, which provided stable color tones and images with less occlusion by the tongue compared to those taken with a smartphone. In the future, it may be possible to develop algorithms that compensate for the variability in image quality caused by smartphone photography.

The present study has limitations. First, the subjects of this study visited the clinic with influenza-like symptoms such as fever, so we cannot exclude the possibility that the insights derived from this study are specific to infectious diseases or immune responses. When classified at the cutoff threshold that maximizes sensitivity + specificity, 155 women were misclassified as men, and 127 men were misclassified as women. The more frequent misclassification among women is thought to be due to swelling and redness of the pharyngeal tissue associated with infections. This can also be considered indirect support for the results of the interpretation framework, which suggested a sex difference in the size and redness of the uvula. Second, the subjects were recruited from primary care clinics within Japan. This may result in high ethnic homogeneity, potentially limiting the external validity of this study. We aimed to ensure external validity as much as possible by conducting validation using test data from clinics other than those that contributed training data. However, we recognize the need for future studies that use a fully external validation set. Third, the average precision for the uvula and tonsils in the segmentation model used in the interpretation framework was not high. This is likely because these regions are small and have ambiguous boundaries, which makes them technically harder to segment. However, it is unlikely that the errors or biases present in the segmentation masks depend on predicted sex, and thus we believe their impact on the conclusions of this analysis is minimal.

Conclusion

We demonstrated the potential utility of pharyngeal images as a new modality in medical imaging by identifying reported sex from pharyngeal images using deep learning. Furthermore, by using our interpretation framework that combines segmentation masks and saliency heatmaps, we discovered that the algorithm focuses on the posterior pharyngeal wall and the uvula. This approach allowed us to provide quantitative insights into the differences between males and females in the uvula and posterior wall.

Acknowledgements

We would like to thank the AI team of Aillis, led by Atsushi Fukuda, for processing and providing the data for the secondary analysis. We also thank Sho Okiyama for his contribution in conception, and Quan Huu Cap for reviewing the methodology of this study. The development of the deep learning models was performed on the AI Bridging Cloud Infrastructure of the National Institute of Advanced Industrial Science and Technology (AIST), Japan.

Author contributions

All authors contributed to conception and study design. H.Y. contributed to algorithm development, data analysis, and manuscript writing. M.F., T.H., and Y.T. contributed to interpretation of data. Y.T. provided scientific direction. All authors reviewed the manuscript and approved the submitted version. All authors had access to all the data in the study. H.Y. had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

Funding

This work was supported by Aillis, Inc.

Data availability

The data used in this study, mainly pharyngeal images, are licensed to Aillis, Inc., and could be used for future projects for the development of medical devices and diagnostic technologies. Due to ethical reasons, the data used in this study cannot be shared publicly. Proposals and requests for data access should be directed to the corresponding author.

Competing interests

H.Y., M.F., and T.H. are employees of Aillis, Inc. Y.T. was supported by the National Institutes of Health (NIH)/NIMHD Grant R01MD013913 and NIH/NIA Grant R01AG068633 for other work not related to this study. This article does not necessarily represent the views and policies of the NIH. Y.T. received consultant fees from Aillis to supervise the study.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-024-68817-6.

References

- 1. LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015). doi:10.1038/nature14539
- 2. Shen, J. et al. Artificial intelligence versus clinicians in disease diagnosis: Systematic review. JMIR Med. Inform. 7, e10010 (2019). doi:10.2196/10010
- 3. Borys, K. et al. Explainable AI in medical imaging: An overview for clinical practitioners—Saliency-based XAI approaches. Eur. J. Radiol. 162, 110787 (2023). doi:10.1016/j.ejrad.2023.110787
- 4. Poplin, R. et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat. Biomed. Eng. 2, 158–164 (2018). doi:10.1038/s41551-018-0195-0
- 5. Pyrros, A. et al. Opportunistic detection of type 2 diabetes using deep learning from frontal chest radiographs. Nat. Commun. 14, 4039 (2023). doi:10.1038/s41467-023-39631-x
- 6. Korot, E. et al. Predicting sex from retinal fundus photographs using automated deep learning. Sci. Rep. 11, 10286 (2021). doi:10.1038/s41598-021-89743-x
- 7. Ting, D. S. W. & Wong, T. Y. Eyeing cardiovascular risk factors. Nat. Biomed. Eng. 2, 140–141 (2018). doi:10.1038/s41551-018-0210-5
- 8. Sykes, E. A., Wu, V., Beyea, M. M., Simpson, M. T. W. & Beyea, J. A. Pharyngitis: Approach to diagnosis and treatment. Can. Fam. Phys. 66, 251–257 (2020).
- 9. Choby, B. A. Diagnosis and treatment of streptococcal pharyngitis. Am. Fam. Phys. 79, 383–390 (2009).
- 10. McIsaac, W. J., White, D., Tannenbaum, D. & Low, D. E. A clinical score to reduce unnecessary antibiotic use in patients with sore throat. Can. Med. Assoc. J. 158, 75–83 (1998).
- 11. Miyamoto, A. & Watanabe, S. Influenza follicles and their buds as early diagnostic markers of influenza: Typical images. Postgrad. Med. J. 92, 560–561 (2016). doi:10.1136/postgradmedj-2016-134271
- 12. Takeoka, H. et al. Useful clinical findings and simple laboratory data for the diagnosis of seasonal influenza. J. Gen. Fam. Med. 22, 231–236 (2021). doi:10.1002/jgf2.431
- 13. Okiyama, S. et al. Examining the use of an artificial intelligence model to diagnose influenza: Development and validation study. J. Med. Internet Res. 24, e38751 (2022). doi:10.2196/38751
- 14. Lakshmanan, V., Görner, M. & Gillard, R. Practical Machine Learning for Computer Vision: End-to-End Machine Learning for Images (O’Reilly Media, Incorporated, 2021).
- 15. Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L. C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 4510–4520 (2018).
- 16. Liu, Z. et al. A ConvNet for the 2020s. In 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 11966–11976 (2022).
- 17. Szegedy, C. et al. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 1–9 (2015).
- 18. Jumper, J. et al. Highly accurate protein structure prediction with AlphaFold. Nature 596, 583–589 (2021). doi:10.1038/s41586-021-03819-2
- 19. Bengio, Y. Deep learning of representations for unsupervised and transfer learning. In Proceedings of the 2011 International Conference on Unsupervised and Transfer Learning workshop 17–37 (JMLR, 2011).
- 20. Deng, J. et al. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009).
- 21. Wang, X., Zhang, R., Kong, T., Li, L. & Shen, C. SOLOv2: Dynamic and fast instance segmentation. In Proceedings of the 34th International Conference on Neural Information Processing Systems 17721–17732 (Curran Associates Inc., 2020).
- 22. Selvaraju, R. R. et al. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In 2017 IEEE International Conference on Computer Vision (ICCV) 618–626 (2017).
- 23. Bradski, G. The OpenCV library. Dobbs J. Softw. Tools 25, 120–123 (2000).
- 24. DeLong, E. R., DeLong, D. M. & Clarke-Pearson, D. L. Comparing the areas under two or more correlated receiver operating characteristic curves: A nonparametric approach. Biometrics 44, 837–845 (1988). doi:10.2307/2531595
- 25. Chang, E. T., Baik, G., Torre, C., Brietzke, S. E. & Camacho, M. The relationship of the uvula with snoring and obstructive sleep apnea: A systematic review. Sleep Breath. 22, 955–961 (2018). doi:10.1007/s11325-018-1651-5
