Abstract

Background

The increasing prevalence of artificial intelligence (AI)-driven mental health conversational agents necessitates a comprehensive understanding of user engagement and user perceptions of this technology. This study aims to fill the existing knowledge gap by focusing on Wysa, a commercially available mobile conversational agent designed to provide personalized mental health support.

Methods

A total of 159 user reviews posted on the Wysa app’s Google Play page between January 2020 and March 2024 were collected. Thematic analysis was then used to perform open and inductive coding of the collected data.

Results

Seven major themes emerged from the user reviews: “a trusting environment promotes wellbeing”, “ubiquitous access offers real-time support”, “AI limitations detract from the user experience”, “perceived effectiveness of Wysa”, “desire for cohesive and predictable interactions”, “humanness in AI is welcomed”, and “the need for improvements in the user interface”. These themes highlight both the benefits and limitations of AI-driven mental health conversational agents.

Conclusions

Wysa is effective in fostering a strong connection with its users, encouraging them to engage with the app and take positive steps towards emotional resilience and self-improvement. However, its AI needs several improvements to enhance the user experience. The findings contribute to the design and implementation of more effective, ethical, and user-aligned AI-driven mental health support systems.

Keywords: Mental health, artificial intelligence (AI), chatbot, conversational agent, qualitative analysis

Highlight box.

Key findings

• Wysa could be a useful tool for people facing mental health challenges, but it has usability and artificial intelligence (AI) limitations that pose a considerable challenge to its acceptance and effectiveness.

What is known and what is new?

• Users appreciate the non-judgmental nature, ubiquity, and personality of AI conversational agents.

• Personalization and context-awareness could enhance user satisfaction and engagement with the Wysa app.

• Users invest effort into improving a tool to manipulate it for their own use.

What is the implication, and what should change now?

• Further research should focus on utilizing advancements in natural language processing to enhance personalized interactions with AI-driven chatbots.

• Long-term studies are necessary to understand the impact of personalization on the tool’s effectiveness.

• Ethical issues related to the use of AI in mental health need further investigation and integration.

Introduction

Background

A considerable segment of the global population grapples with mental health challenges, including, but not limited to, depression, stress, anxiety, sleep disorders and phobias. In a cross-national analysis of mental health surveys conducted across 29 countries from 2001 to 2022 (1), the lifetime prevalence of any mental disorder was reported to be 28.6% (95% confidence interval: 27.9–29.2%) for males and 29.8% (95% confidence interval: 29.2–30.3%) for females. The most common disorders identified were alcohol abuse and major depression in males, and major depression and specific phobia disorder in females. Mental health treatment varies by condition, incorporating psychotherapy (e.g., cognitive behavioral therapy; CBT) for emotional regulation, medications (antidepressants, antipsychotics) for symptom relief, and integrated care for complex needs. Access to mental health care remains critically hindered by barriers including stigma, resource scarcity, and geographical limitations (2,3). These factors disproportionately affect marginalized communities and those in remote areas. Technology-based health interventions are key to overcoming many of these challenges and providing alternatives for mental health care delivery.

Artificial intelligence (AI)-driven conversational agents have gained significant attention in recent years (4). They have evolved from simple scripted interactions to sophisticated AI-driven systems capable of engaging in human-like conversations (5). Modern AI chatbots utilize advanced algorithms and neural networks to process complex user inputs, facilitating open-ended discussions that mimic human interaction (6). They learn from each interaction, offering customized responses and adeptly handling intricate questions. In healthcare, these AI-driven agents have been developed to perform various tasks, including symptom assessment and triaging, chronic disease management, health information dissemination, and administrative task assistance such as appointment scheduling.

Within the mental healthcare system, AI-driven conversational agents are being used to make care more accessible and cost-effective (7). Initially designed to support psychological care through structured models (8), therapeutic chatbots now strive to engage individuals in brief dialogues about their mental well-being. The fear of judgment or stigma, particularly pronounced among men and certain minority groups, poses a significant barrier to accessing mental health care (9,10). Chatbots mitigate this issue by providing an anonymous and private platform for users to express their concerns. Furthermore, they provide round-the-clock tailored support, crucial for those seeking help at unconventional times. By delivering personalized interactions and learning from each user’s feedback, these chatbots not only make the first steps towards seeking help less daunting but also ensure personalized care for everyone.

A growing body of literature has explored AI chatbots within the mental health sector, examining their efficacy and clinical outcomes (11) in delivering therapeutic and preventative interventions. For instance, Ly et al. (12) demonstrated the effectiveness of a chatbot grounded in CBT and positive psychology in a non-clinical population. Other research has primarily focused on technical advancements (13,14), such as the work by Mehta et al. (15), which aimed to enhance sentiment analysis accuracy of user conversations using a bidirectional Long Short-Term Memory model. A significant research gap exists in understanding these interventions from the patient’s perspective, with the subjective experiences, preferences, and satisfaction of users remaining largely unexplored. This lack of insight underscores the necessity for more comprehensive research on how individuals interact with chatbots in real-world settings. A deeper understanding of the patient perspective can help tailor chatbot interventions to better serve those in need of mental health support, thereby enhancing the overall accessibility and effectiveness of digital mental health care.

Objective

This paper seeks to address the above-mentioned gap by concentrating on patient perspectives on using mental health chatbots. Specifically, we evaluate user experiences with Wysa (16), a commercially available mobile conversational agent that leverages AI to offer personalized support and therapy. Our primary aim is to shed light on user perceptions and the level of support users feel, and to identify any potential barriers to the effectiveness of AI-based chatbot interventions.

Methods

Study overview

We used a qualitative approach to study users’ perceptions and experiences regarding the Wysa app. Specifically, we focus on how users perceive the support offered by the app and how they engage with it. The findings of this research are expected to contribute to the design and implementation of more effective, ethical, and user-aligned AI mental health support systems. They also highlight the importance of continuous improvement and user feedback in enhancing the accessibility and efficacy of AI-driven mental health solutions.

Study design

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). We obtained approval for the study from the Institutional Review Board of our home institution. We obtained 300 user reviews from Wysa’s Google Play page, where users had voluntarily posted their feedback on the application. The reviews were gathered using the Google-Play-Scraper (17), a Python-based set of application programming interfaces (APIs) that allows for crawling the Play Store without relying on any external dependencies. After reading all the reviews, we excluded those that lacked useful information, were written before January 2020, or were irrelevant to our research, resulting in a final set of 159 reviews for analysis. Review selection took place at two time points, in October 2023 and again in March 2024. The Google Play Store user reviews are publicly accessible, requiring no gatekeeper for access. According to Google Play’s policy (18,19), publicly posted reviews are visible to all Play Store users and may be utilized by developers to gain insights into user experiences. To safeguard the privacy of the reviewers, in this article, we adjusted the wording of original quotes slightly while preserving their original meaning. This approach aligns with the recommendations of Eysenbach and Till (20), who suggest modifying the content of publicly posted user reviews to reduce the likelihood of the original quotes being located through search engine queries. Additionally, to ensure reviewers’ anonymity, we did not collect any identifiable information, such as names or email addresses, that reviewers may have posted on the Google Play page.
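The date-based exclusion and the decision not to retain identifying information can be illustrated with a short sketch. This is not the authors' pipeline: the field names (`content`, `at`, `userName`), the `select_reviews` helper, and the sample records are assumptions made for illustration, and the manual exclusion of uninformative or irrelevant reviews described above is a reading task that this code does not attempt.

```python
from datetime import datetime

# Study window taken from the text: January 2020 through March 2024.
WINDOW_START = datetime(2020, 1, 1)
WINDOW_END = datetime(2024, 3, 31, 23, 59, 59)


def select_reviews(raw_reviews, start=WINDOW_START, end=WINDOW_END):
    """Keep reviews posted inside the window and drop identifying fields.

    Each raw review is assumed to be a dict with a text field ("content"),
    a posting time ("at", a naive datetime), and possibly a "userName".
    """
    selected = []
    for review in raw_reviews:
        text = (review.get("content") or "").strip()
        posted = review.get("at")
        if not text or posted is None:
            continue  # nothing to analyze
        if not (start <= posted <= end):
            continue  # outside the study window
        # Only the text and the date are retained; names are never copied.
        selected.append({"content": text, "at": posted})
    return selected


# Hypothetical records, not data from the study.
sample = [
    {"userName": "user_a", "content": "Helps with anxiety.", "at": datetime(2021, 7, 11)},
    {"userName": "user_b", "content": "Old review.", "at": datetime(2019, 5, 2)},
    {"userName": "user_c", "content": "   ", "at": datetime(2022, 1, 1)},
]
kept = select_reviews(sample)
```

In this toy run only the first record survives: the second predates the window and the third has no text.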

Application

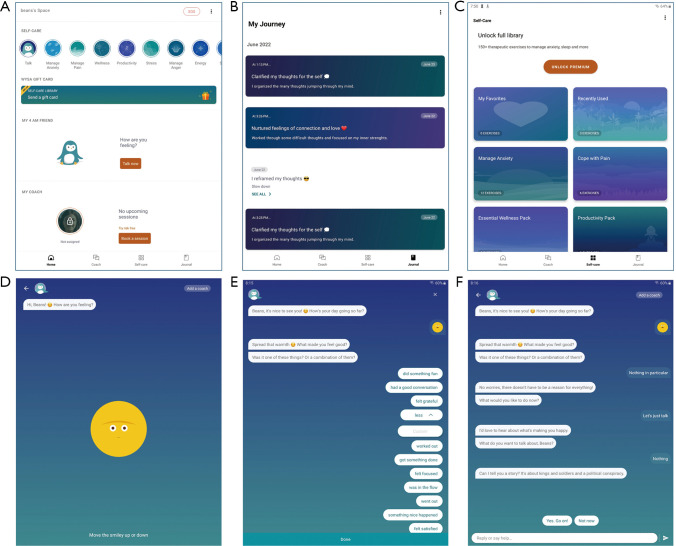

Wysa (16) is a commercially available mobile application that is designed as a virtual mental health companion. It offers text-based conversations with an AI-powered chatbot that aims to engage users in empathetic and supportive dialogue. The app can be downloaded from both Apple App and Google Play stores on tablets or mobile phones. It incorporates evidence-based therapeutic techniques such as CBT, breathing, and mindfulness exercises aimed at helping individuals manage stress, anxiety, depression, and other mental health challenges. These additional functionalities can be summoned as components during a conversation with the chatbot or used stand-alone outside the chat. Users can tailor their experiences by setting their preferences and goals within the app. They can then interact with the chatbot, access instructional tools and approaches, keep a record of their thoughts, check their mood, take part in online discussions, and receive daily motivation and reminders. The app can act as a supplementary tool to traditional therapy or as a self-care resource for those seeking assistance with their mental well-being. Some screenshots of the application are shown in Figure 1.

Figure 1.

Screenshots from the Wysa application (top left to bottom right). (A) Main screen, (B) journal page, (C) self-care features, (D) chat interface, (E) chatbot offering response options, (F) chatbot offering conversation options.

Data analysis

We used Braun and Clarke’s thematic analysis technique (21) to understand how people are using the Wysa app, how they feel about it, and what they think are its benefits and drawbacks. We began by becoming thoroughly familiar with the corpus through repeated readings, allowing for an understanding of content and the identification of key ideas. By the end of this phase, we had categorized the reviews into four types based on sentiment, as described below:

Positive reviews (n=69), which solely highlighted app benefits, for example, “Very helpful practices, prompts, activities and ‘chats’! Even on the free version, it is a BIG help for managing anxiety, getting perspective, and prioritizing self-care.”

Negative reviews (n=24), which focused on drawbacks, for example, “The bot doesn’t understand me. It seems to work by recognizing words like stressed or sad. It commonly misunderstands me because of this and it’s usually in a set path and unable to talk about what you really want. Unhelpful.”

Mixed reviews (n=57), which noted both strengths and weaknesses, for example, “Sometimes the responses seem like they would be the same no matter what I say, but just being able to bounce my thoughts off Wysa and think about different perspectives is helpful.”

Neutral reviews (n=9), which made feature suggestions for improving the application, for example, “I would like to have the option to customize the screen with themes. Perhaps choose a character to interact with.”
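As a quick consistency check, the four sentiment categories above can be summed and expressed as shares of the analyzed corpus. The counts come from the text; the variable names are illustrative only.

```python
# Counts reported for the four sentiment categories.
sentiment_counts = {"positive": 69, "negative": 24, "mixed": 57, "neutral": 9}

# The categories are mutually exclusive, so they should sum to the corpus size.
total = sum(sentiment_counts.values())

# Percentage share of each category, rounded to one decimal place.
shares = {label: round(100 * n / total, 1) for label, n in sentiment_counts.items()}

for label, share in shares.items():
    print(f"{label}: {sentiment_counts[label]} reviews ({share}%)")
```

The total matches the 159 reviews retained for analysis, with positive and mixed reviews together making up roughly four fifths of the corpus.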

We then used an open and inductive approach to code the reviews such that significant ideas were labeled (coded) in each user review. To ensure accuracy and reliability, one author first coded all the reviews, and the second author then reviewed and refined the codes. Once the codes were finalized, the first author grouped them based on similarities and differences such that each group could become a potential theme or sub-theme of the recurrent ideas in the reviews. The two authors worked together through the iterative process of refining and revising the groups such that a set of themes emerged that accurately represented our dataset. Each theme was then clearly defined and named, and a detailed explanation was formulated to articulate its meaning and fit within the overall narrative.
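One way to turn coded reviews into theme and subtheme frequencies is sketched below. The counting rule is an assumption, since the paper does not state it: here a review counts once per subtheme it was coded with and once per theme that contains any of those subtheme codes. The mapping uses subtheme names from the study, but the review identifiers and code assignments are toy data, and `tally` is a hypothetical helper.

```python
from collections import defaultdict

# Partial subtheme-to-theme mapping, using names from the thematic map.
SUBTHEME_TO_THEME = {
    "Anonymous and private interactions": "A trusting environment promotes well-being",
    "Non-judgmental companionship": "A trusting environment promotes well-being",
    "Real-time support": "Ubiquitous access offers real-time support",
}

# Toy coded reviews (review id -> subthemes assigned by the coders); not study data.
coded_reviews = {
    "r1": ["Anonymous and private interactions", "Non-judgmental companionship"],
    "r2": ["Non-judgmental companionship"],
    "r3": ["Real-time support", "Anonymous and private interactions"],
}


def tally(coded, mapping):
    """Count distinct reviews per subtheme and per theme."""
    subtheme_reviews = defaultdict(set)
    theme_reviews = defaultdict(set)
    for review_id, subthemes in coded.items():
        for sub in subthemes:
            subtheme_reviews[sub].add(review_id)
            theme_reviews[mapping[sub]].add(review_id)
    return (
        {name: len(ids) for name, ids in subtheme_reviews.items()},
        {name: len(ids) for name, ids in theme_reviews.items()},
    )


subtheme_counts, theme_counts = tally(coded_reviews, SUBTHEME_TO_THEME)
```

Under this rule a review coded with two subthemes of the same theme adds to both subtheme counts but only once to the theme count, so subtheme frequencies can sum to more than the frequency of their parent theme.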

Results

The qualitative analysis of user reviews identified seven key themes that reflect the viewpoints of Wysa users. These themes are: “a trusting environment promotes wellbeing”, “ubiquitous access offers real-time support”, “AI limitations detract from the user experience”, “perceived effectiveness of Wysa”, “desire for cohesive and predictable interactions”, “humanness in AI is welcomed”, and “the need for improvements in the user interface”. Each theme highlights essential aspects of what users consider important for an effective AI-based chatbot in mental health. Below we present a detailed explanation of each theme. The thematic map is presented in Table 1.

Table 1. Thematic map of the user reviews along with the frequency of each theme and subtheme.

| Themes and subthemes | Frequency (n) |
|---|---|
| A trusting environment promotes well-being | 36 |
| Anonymous and private interactions | 7 |
| Non-judgmental companionship | 11 |
| Active listening and validation | 22 |
| Ubiquitous access offers real-time support | 10 |
| Real-time support | 4 |
| Ubiquitous availability | 6 |
| Humanness in AI is welcomed | 16 |
| Emotional responsiveness | 12 |
| Evolution and improvement over ELIZA | 9 |
| Personality and humor | 8 |
| Perceived effectiveness of Wysa | 59 |
| Comprehensive mental health support | 47 |
| Personalized engagement and growth | 30 |
| Not a substitute for therapy | 9 |
| AI limitations detract from the user experience | 45 |
| Understanding and contextualization issues | 26 |
| Redundancy and predictability | 14 |
| Limited conversational flow | 13 |
| Desire for cohesive and predictable interactions | 21 |
| Integrated experience | 3 |
| Personalized interactions | 8 |
| Learning and adjusting to AI | 7 |
| The need for improvements in the user interface | 19 |
| Content accessibility and organization | 4 |
| Session continuity | 7 |
| Enhanced user control | 4 |
| Preference for the conversational format | 5 |

AI, artificial intelligence.

A trusting environment promotes well-being

A total of 36 user reviews were related to this theme. These reviews suggest that the judgment-free and confidential environment is a significant appeal of the Wysa app. Users prefer a safe and non-judgmental space where they can express their thoughts and feelings without fear of misunderstanding or criticism. The following subthemes were identified in the comments belonging to this category.

Anonymous and private interactions (n=7)

Four user comments described how interacting anonymously, with no other human reading their conversations, gave them a sense of privacy and safety. One notable testimonial reads: “The best part is that it is completely anonymous and I am speaking to an AI. It’s not a real person hearing my issues or knowing who I am from behind a screen. Everything we talk about is kept private,” (October 15, 2022). Furthermore, users explained that the private and safe environment of the app encouraged them to express themselves more openly and honestly: “The concept of it being anonymous really helped me open up and experience the stress-relieving nature of the app,” (July 11, 2021). However, three users were skeptical about the confidential nature of the application, stating that the app did not clarify its privacy policy. As a result, they were hesitant about sharing their thoughts and feelings with the app.

Non-judgmental companionship (n=11)

The absence of judgment, a stark contrast to interactions with humans, was frequently mentioned as a key advantage of the chatbot. One user highlighted the therapeutic benefits of this approach, stating, “I really struggle with opening up to real people, but this AI is surprisingly helpful. The more you interact, the better you feel, thanks to its judgment-free listening and the soothing techniques it offers for your convenience.” (July 26, 2022). This shows that users feel that the chatbot is able to listen without passing judgment and is able to create a safe space for them to open up about their feelings and thoughts. “Wysa helps talk me through without all the added, unhelpful and unnecessary human reactions, judgments and passive projections,” (August 28, 2021). Interacting with a non-judgmental entity has allowed users to share their feelings and thoughts freely, enhancing their comfort and willingness to engage with the offerings within the app.

Active listening and validation (n=22)

Users indicated how the app’s ability to actively listen and validate their feelings is a key component of its support. They believed that this quality enhances their emotional connection to the app. One user shared their experience, saying, “This app actually listens and acknowledges your thoughts and feelings at the same time helps you without being imposing or pushy. It is like a true friend with whom you can share anything,” (March 26, 2024). This feedback shows that the app is not only able to listen attentively but also validate users’ feelings, which contributes significantly to emotional processing and healing.

However, seven user reviews indicated that the app does not allow users to express themselves freely and instead tries to push its own agenda on them. “It’s hard to even vent using this app, because it constantly insists on ‘reframing’ issues,” (February 8, 2022).

Overall, users’ comments in this theme highlight how the non-judgmental and confidential listening of the app allowed users to place their trust in the app, build emotional connection with it, and focus on their mental well-being.

Ubiquitous access offers real-time support

This theme shows that the ubiquitous availability of the AI chatbot fulfilled the real-time needs of the patients. Ten comments fell into this category.

Real-time support (n=4)

Users appreciated the real-time help from the app during moments of anxiety and stress. One user commented, “Real-time help in the moment of anxiety and stress is really what we are all looking for, which Wysa chatbot can provide.” (February 26, 2021). This subtheme highlights the value of having immediate access to support, something that traditional therapy may not always offer.

Ubiquitous availability (n=6)

The ability to access the chatbot at any time was praised by the reviewers. One user explained, “It’s great that you can start a ‘session’ with the AI at 3 am with no appointment and no thoughts of ‘sorry to bother you’,” (August 26, 2021). This subtheme highlights the ubiquity of the AI chatbot and how it fills a gap in support when human connections may not be available for mental health patients. Another user mentioned, “This is great for anyone curious about taking their mind off the day before going to bed to help with anxiety and depression,” (December 18, 2021), showing that having everyday access was an important aspect of seeking therapy.

This theme suggests that ubiquitous and real-time support is invaluable for individuals seeking mental well-being. A mental health chatbot can successfully provide such an environment for a user.

Humanness in AI is welcomed

This theme reflects users’ appreciation for the chatbot’s human-like qualities, expressed through a light-hearted personality. Sixteen comments fell into this category.

Emotional responsiveness (n=12)

One user wrote, “Wysa feels like a friend. It understands my mood and even cracks jokes when I’m down.” (April 6, 2020), highlighting the emotional connection they feel with the bot. Another user’s comment, “Wysa seems to know when I’m upset and responds with comforting words and sometimes a gentle joke. It’s like it really gets me,” (March 27, 2022), illustrates the bot’s emotional responsiveness. These comments illustrate users’ perception of Wysa’s ability to recognize and respond to emotional cues, suggesting a sophisticated level of emotional responsiveness that enhances the user’s connection with the bot. However, an equal number of user reviews expressed discontent with the chatbot’s ability to decipher emotions and nuances in their conversations. One user commented, “I find the AI a bit lacking it often fails to understand my meaning … a psychoanalyst it is not,” (March 6, 2020).

Evolution and improvement over ELIZA (n=9)

Two users compared Wysa with an older chatbot, ELIZA, emphasizing the advancement: “I’ve tried ELIZA before, but Wysa is different. It’s more human-like, with emotions and a sense of humor.” (January 7, 2022). This comment highlights the evolution and improvement in AI’s ability to mimic human-like emotions and humor, albeit with limitations, reflecting a significant advancement in creating more relatable and engaging AI experiences. However, seven users commented on the robotic nature of the chatbot, stating that it is clearly not able to produce a human-like conversation. “Since it is a robot it doesn’t fully understand what I am trying to say,” (October 21, 2023).

Personality and humor (n=8)

One user commented on the personality of the bot, “Wysa’s has a cute personality, which is what keeps me coming back. It’s not just a machine; it feels like a real person talking to me.” (February 12, 2022). The use of humor was also valued, with a user noting, “I love how Wysa lightens the mood with a funny remark. It makes therapy less intimidating.” Here, the user is appreciating Wysa’s use of humor in making the therapeutic process more approachable and less formal, indicating that humor can be a valuable tool in mental health support.

Overall, these user comments and the theme itself underscore the shift from mechanical interaction to a more empathetic and personable approach. The subthemes of emotional responsiveness, evolution over traditional AI, and the integration of personality and humor resonate with users and contribute to a more satisfying and supportive experience with Wysa. The theme emphasizes the importance of designing AI that is not only functional but also emotionally intelligent and engaging, fostering a deeper connection with users.

Perceived effectiveness of Wysa

This theme highlights the app’s significant role in users’ mental health journey and personal development. Fifty-nine user reviews were directly related to this theme.

Comprehensive mental health support (n=47)

A dominant theme across the reviews was the app’s comprehensive support for mental health issues, frequently mentioned in the context of managing anxiety, depression, and attention deficit disorder. Users were impressed that the app “combined features from many types of apps in one”, stating that this had given them peace of mind and simplified their lives, as they no longer had to juggle many different apps. They expressed gratitude for the app’s ability to offer practical coping strategies and emotional support. For instance, one user mentioned, “Wysa helped me not just reframe my thoughts towards a bad situation … but actually helped me accept my pains and turn them into strengths,” (March 21, 2024). The users credited the app with aiding them in managing difficult emotions and fostering resilience during tough times. Another user stated, “Very comforting app … It really helps you stay resilient in hard times,” (January 31, 2022). The frequency of such feedback underscores the app’s effectiveness in providing a reliable source of support, helping users navigate their mental health challenges with greater confidence and ease.

Personalized engagement and growth (n=30)

The user reviews demonstrated that the tailored interactions with the app helped foster a deeper understanding of themselves and facilitated personal development. A significant number of reviews highlighted how the app’s guided exercises and daily support routines have become integral to their personal growth journey. “I’ve had the lowest confidence my entire life, and I have been fixing it with the help of Wysa,” (January 7, 2024) shared one user, illustrating the app’s impact on building self-esteem. Another reflected, “I enjoy using this app as a journal to help me reorder my thoughts at the end of the day and help meet mental health goals,” (February 8, 2022) capturing the details of how the user had incorporated the app into their daily lives. This theme is characterized by feedback on how the app aids in building confidence, enhancing positivity, and encouraging self-reflection and mindfulness practices.

Not a substitute for therapy (n=9)

The user reviews emphasized that while the app is not a replacement for professional therapy, it serves as a valuable supplementary tool for mental health support. Users acknowledged the app’s utility in providing assistance and features beneficial in various contexts, particularly as an adjunct between actual therapy sessions. “It offers additional support and can be especially helpful on a day-to-day basis, complementing the work done in therapy,” (March 1, 2023). The reviewers agreed that the app should be used in conjunction with, rather than as a substitute for, therapy from a professional therapist, enhancing the overall support system for individuals accustomed to or currently undergoing therapy.

The analysis highlights the app’s efficacy in addressing the complexities of mental health challenges while also nurturing an environment conducive to personal development. Through its blend of emotional support, coping strategies, and personalized interactions, the app emerges as a valuable ally in users’ mental health and personal growth journeys.

AI limitations detract from the user experience

This theme highlights specific areas where the AI technology in the Wysa app falls short. These limitations not only affect the user’s interaction with the app but also diminish the overall effectiveness of the tool. Forty-five comments discussed three different limitations of AI.

Understanding and contextualization issues (n=26)

Numerous users highlighted Wysa’s difficulty in managing the nuances of different situations, leading to frustration among those who expected more intelligent and adaptive responses. This issue frequently resulted in misinterpretations or generic answers that failed to meet the users’ actual needs and expectations. One user clarified, “The AI often doesn’t grasp what’s happening in our conversations. It appears to be programmed to identify trigger words (anxious, overwhelmed, depression, etc.) and offer relief for those symptoms, regardless of their relevance to the conversation’s context,” (July 4, 2023). There was a general agreement that interacting with such a limited AI could be fruitless and potentially detrimental. For instance, a user’s feedback revealed, “I downloaded it for my paranoid schizophrenia, but it was of no help. The AI’s failure to understand and tailor its responses to my specific circumstances has only worsened my mental health, making this app unsuitable for me,” (August 17, 2023). Users thought that the chatbot’s inability to contextualize user prompts impaired its capability to perform various in-app tasks. Another user expressed disappointment, saying, “I was seeking guidance on establishing a detailed plan for my goals, but Wysa seemed overwhelmed and unable to assist me through the intricacies of my situation,” (August 17, 2021).

Redundancy and predictability (n=14)

Many users found Wysa repetitive, which led them to consider it unhelpful: “And while I like telling them [my health issues] out, and having a listening ear, you need to work on Wysa’s ability to adapt in conversation, because a lot of what it says is repetitive and that can trigger a feeling of not being heard.” (December 6, 2022). Another user explained how repetition could lead to severe problems. “I just had an incredibly unhelpful and frustrating ‘conversation’ with Wysa where it parroted back at me to demonstrate my ‘progress’ with the situation. I ended up much worse off than when I began,” (August 5, 2021). Other users expressed concerns about Wysa becoming redundant and unhelpful over time. One user wrote, “Also, after a few months’ usage, the AI starts to sound very redundant, and doesn’t help anymore.” (February 18, 2020). This suggests that the rigid, structured pattern in Wysa’s responses makes it less desirable over time, which can lead to a lack of engagement and dissatisfaction among users, particularly during long-term usage.

Limited conversational flow (n=13)

Reviewers expressed discontent with Wysa’s AI system, stating that it was unable to reciprocate in the same way a human would. Its reliance on scripts, combined with limited follow-up capability, significantly constrained the depth and flexibility of interactions that the users expected. One user explained, “The chat function has no actual interaction ability. No matter what I say to it, the chatbot seems to have its own topic that it is stuck trying to discuss,” (November 7, 2023). Some users complained that Wysa’s conversations felt mechanical and impersonal. “It’s painfully robotic sometimes and sometimes answers don’t align with what you request,” (May 1, 2023). They yearned for an AI that could engage in a dynamic dialogue mirroring the evolving nature of human thought and emotion, without breaking the flow of conversation and diminishing the sense of engagement.

These comments collectively paint a picture of an AI system that, while promising and helpful in some areas, still has significant limitations that hinder its ability to provide fully personalized, intuitive, and effective mental health support. The users’ feedback underscores the need for improvements in AI understanding, adaptability, and technical robustness to enhance the overall user experience.

Desire for cohesive and predictable interactions

This theme encompasses the ways in which users expected their interaction with the app to be designed, including the actions they wanted to take, the responses they wanted to receive, and the overall flow of communication they expected. Twenty-one comments discussed this issue in detail.

Integrated experience (n=3)

Users’ comments in this category encapsulate the desire for a smooth, logical, and interconnected user experience that feels natural and satisfying. They wanted an experience where all parts work together seamlessly, enhancing the overall effectiveness and enjoyment for the user. For example, a user explained what an ideal interaction with the chatbot would look like: “It would be great if transition from the chatbot to the other features within the app is made seamless, like using the info from reframing (conversation with Wysa chatbot) to set SMART goals and then have Wysa checks in periodically to remind us of the goal and to log progress, at a time interval that we choose,” (December 18, 2021). Additionally, reviewers wanted the chatbot to remember and build upon past interactions to create a more coherent and individualized support experience over time.

Personalized interactions (n=8)

Though users’ comments revealed overall satisfaction with the chatbot, they also revealed a desire to improve the chatbot’s ability to personalize its interactions. A few users highlighted the importance of being able to customize therapy options to better suit their unique mental health journey. “I’m autistic and it would be great to have more tailored responses or advice to those on the spectrum,” (November 16, 2023). Another suggested that personalization could extend to the type of exercises and coping strategies recommended by the app, allowing for a more targeted approach to mental wellness. Overall, the comments within this subtheme emphasized the potential for enhanced user satisfaction through greater personalization.

Learning and adjusting to AI (n=7)

Users demonstrated curiosity and engagement with Wysa’s AI, exploring its operational mechanics and adaptability. “I thought it would be a little fun to have an experiment with the system, the first question I asked was ‘if you could help me with an experiment’, it didn’t register the question and just said it didn’t understand, after I asked a second time it straight up told me it was a system with specific answers, basically a script,” (December 14, 2021). Here, the user actively experimented with the AI to understand its capabilities and limitations, concluding that it operates on a script rather than true AI reasoning. The purpose of such experimentation appeared to be multifaceted: users were trying to understand the AI’s inner workings in order to enhance their experience, provide constructive feedback to improve its functioning, or rethink their usage strategy. One user explained, “The responses of Wysa … become loopy at times and miss the point of what I was saying entirely. When this happens, I adjust my statement to the AI’s ‘thought process’,” (April 8, 2022).

Overall, this theme underscores a wish for an app experience that is not only integrated and personalized but also flexible, supportive, and engaging. Users are seeking an application that understands them, responds accurately, and provides a smooth interaction that feels natural and satisfying. The feedback provides valuable insights for developers and designers seeking to refine the app and improve user engagement and satisfaction.

The need for improvements in the user interface

This theme refers to the physical or virtual elements that users want to interact with, focusing on their visual and interaction designs. The subthemes highlight specific areas where users have expressed a desire for improvements. Nineteen comments discussed different kinds of interface enhancements.

Content accessibility and organization (n=4)

Users felt overwhelmed by the disorganization of the content on the interface, suggesting a need to simplify and better organize the interface for enhanced accessibility and ease of use. One user commented on the cluttered user interface, “I’m overwhelmed by the main menu and mentally block it out when trying to reach the chatbot. I think there’s some potential for UI improvement here,” (January 4, 2022). Furthermore, there were specific requests for a feature to allow searching through recommended exercises and previously tried activities, indicating a need for enhanced navigation and better content organization within the app.

Session continuity (n=7)

Session continuity was identified as a critical issue, with users highlighting their frustration when unable to pick up and continue an interrupted chat session at a later time. “If I accidentally exit out of the chat feature, I can’t go back in to work on the same thought. Wish there was an option to go back to the old conversation to continue it,” (February 17, 2022). Key suggestions for improvement included enabling users to return to a previous conversation after an accidental exit, saving chat progress to prevent information loss, and maintaining conversations on the screen, even if the user navigates away from the app momentarily, to avoid repetition. Collectively, these suggestions emphasized the necessity for features that facilitate a more fluid and uninterrupted user experience.

Enhanced user control (n=4)

The users desired a more tailored app experience. They expressed a need for customization options that allow for altering the app’s appearance or themes, potentially extending to interactive characters, enriching the user interface and engagement. “I would like to have the option to customize the screen with themes. Perhaps choose a character to interact with,” (December 16, 2023). Other users wanted to have the ability to customize the app’s content/chatbot’s conversations based on their personal information. “I’m autistic and it would be great to have more tailored responses or advice to those on the spectrum by maybe having the option to give more information about yourself in the setup,” (November 14, 2023). Additionally, there was a call for greater control over initiating conversations. Suggestions included incorporating a mode conducive to unstructured input, such as ranting, offering users a more flexible and expressive platform. These aspects collectively point towards a user preference for an app experience that is not only aesthetically customizable but also functionally adaptive to individual interaction styles and content needs.

Preference for the conversational format (n=5)

Users expressed a preference for a conversational approach to addressing mental health issues. Users appreciated the simplicity and accessibility of the chat format, finding it less intimidating than navigating through extensive lists of exercises and options. They thought that the interactive dialogue facilitates a more natural exploration of their feelings and challenges and allows them to uncover internal solutions and coping strategies. They also found the guidance provided by the app well-directed, describing the prompts as friendly and reassuring, enabling users to navigate through their difficult situations and emotions in a supportive and manageable manner. “AI chat can be beneficial in helping guide and organize emotions and thoughts,” (March 1, 2024).

These themes reflect a broader user need for an application that is more intuitive, flexible, and accommodating to various user preferences and interaction styles. Implementing these changes could potentially enhance the overall user experience by making the app more user-friendly, personalized, and efficient in supporting users’ needs.

Discussion

The purpose of this qualitative descriptive study was to explore users’ perceptions of and experiences with the Wysa app, specifically focusing on how they perceive the support offered by the app and their engagement with it. The qualitative analysis of the user reviews sheds light on users’ subjective sense of effectiveness and satisfaction, as well as potential limitations of the studied intervention. Below, we discuss both research and practical directions for future developments of AI-based mental health interventions.

Key findings and their explanations

The findings are mixed: some aspects of the app were perceived positively and others negatively. Overall, a majority of the users found the Wysa app to be helpful in addressing their mental health concerns, despite its limitations and room for improvement. However, it is clear that this therapy modality is not effective for all types of users and mental health conditions. The results reveal that a non-judgmental and private environment is essential for driving user engagement and trust. Users appreciate ubiquity and accessibility, as well as the wealth of features and guided exercises within their virtual AI therapists. However, users need ample opportunities to vent, as sometimes they are not looking for feedback or help but simply want an opportunity to express themselves. A rigid AI help script that focused on pushing certain techniques on users was not received positively by those who wanted to process their emotions. Users want more than the ability to click and select from given options; they want the opportunity to express themselves. Hence, some users expressed reservations about AI’s readiness to replace therapists entirely. They saw Wysa’s value more in terms of providing supplemental support alongside human therapists. A few were impressed with Wysa’s ability to exhibit human-like qualities, such as emotional responsiveness, humor, and personality, which made the interaction more relatable and engaging. But many desired more human-like qualities in the agent before they could engage sufficiently in conversations with it.

Our findings show that the user experience with an AI tool can be significantly compromised by an unfriendly user interface and limited interactivity. Users identified areas in Wysa’s design that need improvement, such as content accessibility and organization, session continuity, and user control. These issues can lead to confusion, difficulty in task completion, and, for those dealing with certain mental health challenges, significant frustration that may ultimately cause them to abandon the tool (22). Moreover, the lack of a cohesive, integrated, and personalized user experience can lead to disengagement or dissatisfaction with the app. Skeptical users find their fears, apprehensions, and resistance strengthened when the bot does not respond in a human-like manner or according to their expectations. Hence, the importance of user-centered design for mental health apps cannot be overstated.

Additionally, users pointed out specific limitations in Wysa’s AI capabilities, including its problems with understanding and contextualizing user inputs, its inability to emulate human-like conversation, and a tendency toward redundancy and predictability. The users found the chatbot to be inadequate in terms of interpreting and responding to context. Understanding the context is vital because it influences how users perceive the reliability and trustworthiness of the AI tool (23). When people can predict how the system will respond in various situations, they feel in control and are comfortable relying on it. Moreover, by grasping the chatbot’s context-processing capabilities, users can tailor their interactions to maximize its effectiveness. They can learn how to phrase questions or provide information in a way that the system can understand and respond to effectively. The need to better understand context also aligns with users’ expressed desires for a cohesive, integrated, and personalized experience with Wysa, where many sought an app that understood them, responded accurately, and provided an experience relevant to their situations. The existing shortcomings of the AI were, hence, seen as obstacles to a personalized, adaptive, and robust mental health support experience. Specifically, many users were concerned that these shortcomings would restrict the long-term uptake and effectiveness of the app.

Comparison with similar research

A few other published works have also examined user experiences and perceptions of Wysa or comparable mental health conversational agents, yet they diverge from our report. Malik et al. (24) explored user interactions with the Wysa app, differing in methodology, scale, and analytical depth. Their research presented a broad overview utilizing a large dataset (n=7,929), in contrast to our study, which delivers a more detailed insight on a smaller scale. Moreover, they used a deductive approach in their analysis, restricting themselves to specific domains such as acceptability, usefulness, usability, and integration, while we adopted an inductive approach, exploring various facets of user experiences without using any pre-defined categories. In this way, our approach adds depth, flexibility and a fresh perspective to the literature on mental health conversational agents, uncovering new insights that can inform both theory and practice.

Kettle and Lee (25) explored user experiences of two different types of conversational agents to identify important features for user engagement. However, they focused on understanding experiences of students transitioning into university life and facing challenges like the pandemic. Their themes are very similar to our findings. They also found that accessibility and availability, communication style, conversational flow and anthropomorphism are important features of a mental health chatbot. In addition, they found that user response modality, perceived conversational agent’s role and question specificity are essential for user engagement.

Maharjan et al. (26) focused on studying the user experiences of individuals with diagnosed mental health conditions using speech-enabled conversational agents. Their findings highlight participants’ strategies to mitigate conversational agents’ flaws, their personalized engagement with these agents, and the value placed on conversational agents within their communities. The data suggests conversational agents’ potential in aiding mental health self-reporting but emphasizes the urgent need to improve their technical and design aspects for better interaction and at-home use.

Strengths and limitations

The research presents valuable insights but comes with its own set of limitations that warrant consideration. The user reviews are inherently subjective and capture a snapshot in time, lacking longitudinal data that could offer insights into evolving user experiences. It is also unclear how long people used the app and what their characteristics were. Given that user reviews often reflect extreme opinions (27), user demographics can aid in their interpretation. Alternative methods, such as focus groups or consumer panels, can help engage with users in a more meaningful way. Moreover, other mental health apps (28-30) are undertaking codesign phases and research to obtain a broader and more detailed understanding of user experiences, characteristics, and engagement nuances. Such methods could complement our current approach by addressing some of its limitations, such as not knowing how long people used Wysa or what their characteristics were.

Despite its limitations, it is crucial to recognize the strengths of this research. User reviews offer valuable insights into lived experiences, filling gaps left by clinical research and presenting a grounded perspective on intervention effectiveness. Thematic analysis of user reviews excels in uncovering nuances of perception and satisfaction that quantitative metrics frequently overlook. Such detailed understanding is indispensable for developing AI systems for sensitive areas like mental health. Additionally, incorporating up-to-date user reviews enhances the study’s relevance in the fast-evolving field of technology.

Overall, the study offers a balanced and insightful contribution to the field.

Implications

This study affirms that AI needs to be more adaptive and personalized in responding to individual needs and complex scenarios. Reviewers pointed out that the predictable and rigid nature of the AI would hinder its long-term adoption. Moreover, they considered personality and humor important dimensions of a therapy chatbot. Developers should focus on incorporating advanced natural language processing (NLP) techniques to better understand and respond to the nuances of human conversation, including context and emotional states. This involves moving beyond static scripted responses to dynamic scripting that adapts to the individual user’s needs and the conversation’s flow, alongside improving AI’s ability to maintain coherent dialogue through enhanced follow-up capabilities. Large Language Models (LLMs) (31), sophisticated neural networks trained on extensive text data, are a pivotal development in this space. They excel in understanding and generating empathetic and human-like text by identifying patterns, relationships, and structures within the data. They can potentially revolutionize personalized interventions and provide immediate, nuanced, diverse, and caring support (32). Research is needed to learn whether LLM-based personalized interventions are more effective than traditional methods and what their limitations are. In particular, are more flexible and personality-oriented LLM-based mental health tools effective alternative therapeutic tools in the long run?

However, healthcare is a field deeply rooted in ethical principles like beneficence, non-maleficence, and patient autonomy (33). Replacing human judgment with AI introduces various challenges (34). Even advanced AI techniques such as LLMs lack the emotional nuance that human compassion offers. Their responses are based on patterns learned from vast datasets rather than genuine emotional intelligence or an understanding of human feelings. Human healthcare providers adapt their approach based on social and cultural factors, but AI might not be able to do this effectively. AI output can be unpredictable and can lack the evolving thought process of a human being, so it needs to be carefully vetted. Such limitations can potentially lead to ineffective or harmful care in contexts requiring empathy, such as mental health support. Hence, mental health professionals need to integrate these AI tools into their practices thoughtfully, staying informed about their capabilities and ethical considerations (34,35). Investigating the ethical implications of replacing human compassion and judgement with AI-generated responses by fostering collaborations between technologists, researchers, and mental health professionals thus remains crucial for developing AI-driven mental health interventions (36).

Another critical concern raised by users, and one of paramount importance across all AI applications, is the protection of user data to safeguard privacy and rights. The literature presents a variety of approaches aimed at addressing this challenge. Ethical guidelines specifically tailored for AI applications in healthcare, alongside robust encryption methods, form the cornerstone of efforts to protect sensitive data. Innovations such as differential privacy (37), which introduces controlled noise to data to prevent individual identification, and federated learning (38), which allows AI models to learn from data without it being centralized, offer promising avenues for enhancing user privacy without undermining the AI’s learning capabilities. However, the effectiveness of these solutions is not uniform. Encryption and anonymization techniques have proven successful in securing data, yet the quest to balance privacy with the utility of data remains ongoing. The application of differential privacy and federated learning necessitates careful optimization to ensure that learning outcomes are not compromised. Looking forward, advancement in privacy-enhancing techniques is imperative. Such efforts should be underpinned by interdisciplinary collaboration, bringing together AI developers, stakeholders (patients and healthcare professionals), ethicists, and legal experts to ensure that AI systems are both ethically sound and privacy conscious (36).
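To make the idea of "controlled noise" concrete, the following is a minimal illustrative sketch of the Laplace mechanism, one common way of achieving differential privacy for a simple counting query. It is not drawn from Wysa or from the cited literature: the function names, the privacy parameter value, and the example data are all hypothetical and chosen only for illustration.

```python
import random
from typing import Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one Laplace(0, scale) sample as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(flags: Sequence[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1: adding or removing one person's
    record changes the true count by at most 1, so the noise scale is 1/epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(flags)
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: whether each of 159 users reported a given concern.
rng = random.Random(0)
flags = [i % 4 == 0 for i in range(159)]  # 40 True values, purely illustrative
noisy = private_count(flags, epsilon=1.0, rng=rng)
print(f"true count = {sum(flags)}, released count = {noisy:.2f}")
```

A smaller epsilon adds more noise and gives stronger privacy at the cost of accuracy, which is the privacy-utility trade-off described above.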

Our research indicates that while many users had positive experiences, a subset found their mental health issues exacerbated when the bot was unable to respond appropriately to their situation. This observation points to the necessity of a nuanced approach to understanding user interactions with AI in therapeutic contexts. Investigating the role of individual differences in mental health technology acceptance could offer deeper insights into user receptivity. Factors such as tech-savviness, attitudes towards mental health, and previous experiences with therapy (both traditional and digital) might significantly influence how users perceive and interact with the therapy bot. It might also be feasible to investigate which user personality types are more accepting of working with an AI-based mental health therapy bot. In any case, mental health apps need to carefully set user expectations at the outset, so that patients do not approach the apps with unrealistic expectations.

Furthermore, this research shows that AI-based mental health conversational agents need to be more personalized and adaptable with enhanced user experience (39). The development of such apps needs to involve users in the design process, adopting user-centered (40) and participatory (41) design approaches. Users can help identify unique needs, preferences, and potential challenges, ensuring that the AI-driven mental health apps are tailored to provide the most effective and engaging support. This collaborative approach can foster trust (42), enhance user satisfaction, and ultimately contribute to the broader goal of making mental health care more accessible and effective for all.

Conclusions

This study aimed to understand user engagement and experiences with Wysa, a commercially available AI-driven mental health conversational agent. Through a qualitative analysis of 159 user comments posted on the Wysa app’s Google Play page, we identified seven major themes that highlight the app’s strengths and areas for improvement.

Our findings suggest that, with AI-driven conversational agents, users can experience a non-judgmental space to express their thoughts and emotions. The ubiquitous availability and real-time support can be particularly valuable to users during moments of acute stress or anxiety. Furthermore, the anonymity and confidentiality features encourage users to engage more deeply with the app’s therapeutic offerings. However, the study also points out certain limitations, such as the AI’s inability to understand context or maintain a human-like conversational flow, and the redundancy in its responses. These limitations indicate areas for future development and refinement of the app.

The insights gained from this study contribute to the growing body of research on AI-driven mental health support systems. They provide valuable information for healthcare providers, app developers, and policymakers aiming to design and implement more effective, ethical, and user-aligned mental health support systems.

In summary, mental health chatbots show promise in fostering strong user engagement and providing effective mental health support. However, there is a need for ongoing research and development to address the identified limitations and to further optimize this technology for broader user needs.

Acknowledgments

We want to acknowledge Wysa users for sharing their experiences on the user forum. We also want to thank Wysa for letting us review their application.

Funding: None.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013).

Footnotes

Conflicts of Interest: Both authors have completed the ICMJE uniform disclosure form (available at https://mhealth.amegroups.com/article/view/10.21037/mhealth-23-55/coif). B.M.C. serves as an unpaid editorial board member of mHealth from March 2023 to February 2025. The other author has no conflicts of interest to declare.

References

- 1. McGrath JJ, Al-Hamzawi A, Alonso J, et al. Age of onset and cumulative risk of mental disorders: a cross-national analysis of population surveys from 29 countries. Lancet Psychiatry 2023;10:668-81. doi: 10.1016/S2215-0366(23)00193-1

- 2. Carbonell Á, Navarro-Pérez JJ, Mestre MV. Challenges and barriers in mental healthcare systems and their impact on the family: A systematic integrative review. Health Soc Care Community 2020;28:1366-79. doi: 10.1111/hsc.12968

- 3. Troup J, Fuhr DC, Woodward A, et al. Barriers and facilitators for scaling up mental health and psychosocial support interventions in low- and middle-income countries for populations affected by humanitarian crises: a systematic review. Int J Ment Health Syst 2021;15:5. doi: 10.1186/s13033-020-00431-1

- 4. Caldarini G, Jaf S, McGarry K. Literature Survey of Recent Advances in Chatbots. 2022. doi: 10.20944/preprints202112.0265.v1; arXiv:2201.06657

- 5. Adamopoulou E, Moussiades L. An Overview of Chatbot Technology. Artificial Intelligence Applications and Innovations 2020;584:373-83. doi: 10.1007/978-3-030-49186-4_31

- 6. Bansal G, Chamola V, Hussain A, et al. Transforming Conversations with AI—A Comprehensive Study of ChatGPT. Cogn Comput 2024. doi: 10.1007/s12559-023-10236-2

- 7. Bilquise G, Ibrahim S, Shaalan K. Emotionally intelligent chatbots: A systematic literature review. Hum Behav Emerg Technol 2022. doi: 10.1155/2022/9601630

- 8. Weizenbaum J. ELIZA—a computer program for the study of natural language communication between man and machine. Communications of the ACM 1966;9:36-45. doi: 10.1145/365153.365168

- 9. Schomerus G, Stolzenburg S, Freitag S, et al. Stigma as a barrier to recognizing personal mental illness and seeking help: a prospective study among untreated persons with mental illness. Eur Arch Psychiatry Clin Neurosci 2019;269:469-79. doi: 10.1007/s00406-018-0896-0

- 10. Vogel DL, Wester SR, Hammer JH, et al. Referring men to seek help: the influence of gender role conflict and stigma. Psychol Men Masc 2014;15:60-7. doi: 10.1037/a0031761

- 11. Vaidyam AN, Wisniewski H, Halamka JD, et al. Chatbots and Conversational Agents in Mental Health: A Review of the Psychiatric Landscape. Can J Psychiatry 2019;64:456-64. doi: 10.1177/0706743719828977

- 12. Ly KH, Ly AM, Andersson G. A fully automated conversational agent for promoting mental well-being: A pilot RCT using mixed methods. Internet Interv 2017;10:39-46. doi: 10.1016/j.invent.2017.10.002

- 13. Sood P, Yang X, Wang P. Enhancing Depression Detection from Narrative Interviews Using Language Models. In: 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Istanbul, Turkiye, 2023:3173-80.

- 14. Guluzade L, Sas C. Towards Heuristics for AI-Based Eating Disorder Apps. In: Designing (with) AI for Wellbeing Workshop at CHI 2024, 1-11.

- 15. Mehta A, Virkar S, Khatri J, Thakur R, Dalvi A. Artificial intelligence powered chatbot for mental healthcare based on sentiment analysis. In: 2022 5th International Conference on Advances in Science and Technology (ICAST), Mumbai, India; 2022:185-9.

- 16. Wysa. Available online: https://www.wysa.com/

- 17. Google-Play-Scraper. Available online: https://pypi.org/project/google-play-scraper/

- 18. Google Play Policies for posting reviews. Available online: https://play.google.com/about/comment-posting-policy/

- 19. Google Play Terms of Service. Available online: https://play.google.com/intl/en-US_us/about/play-terms/index.html

- 20. Eysenbach G, Till JE. Ethical issues in qualitative research on internet communities. BMJ 2001;323:1103-5. doi: 10.1136/bmj.323.7321.1103

- 21. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol 2006;3:77-101. doi: 10.1191/1478088706qp063oa

- 22. Ainsworth J, Palmier-Claus JE, Machin M, et al. A comparison of two delivery modalities of a mobile phone-based assessment for serious mental illness: native smartphone application vs text-messaging only implementations. J Med Internet Res 2013;15:e60. doi: 10.2196/jmir.2328

- 23. Jacovi A, Marasović A, Miller T, Goldberg Y. Formalizing trust in artificial intelligence: Prerequisites, causes and goals of human trust in AI. In: Proceedings of the 2021 ACM conference on fairness, accountability, and transparency; 2021:624-35.

- 24. Malik T, Ambrose AJ, Sinha C. Evaluating User Feedback for an Artificial Intelligence-Enabled, Cognitive Behavioral Therapy-Based Mental Health App (Wysa): Qualitative Thematic Analysis. JMIR Hum Factors 2022;9:e35668. doi: 10.2196/35668

- 25. Kettle L, Lee YC. User Experiences of Well-Being Chatbots. Hum Factors 2024;66:1703-23. doi: 10.1177/00187208231162453

- 26. Maharjan R, Doherty K, Rohani DA, et al. Experiences of a speech-enabled conversational agent for the self-report of well-being among people living with affective disorders: an in-the-wild study. ACM Transactions on Interactive Intelligent Systems 2022;12:1-29. doi: 10.1145/3484508

- 27. Berger J, Humphreys A, Ludwig S, et al. Uniting the tribes: Using text for marketing insight. J Mark 2020;84:1-25. doi: 10.1177/0022242919873106

- 28. Bevan Jones R, Stallard P, Agha SS, et al. Practitioner review: Co-design of digital mental health technologies with children and young people. J Child Psychol Psychiatry 2020;61:928-40. doi: 10.1111/jcpp.13258

- 29. Thabrew H, Fleming T, Hetrick S, et al. Co-design of eHealth Interventions With Children and Young People. Front Psychiatry 2018;9:481. doi: 10.3389/fpsyt.2018.00481

- 30. Wrightson-Hester AR, Anderson G, Dunstan J, et al. An Artificial Therapist (Manage Your Life Online) to Support the Mental Health of Youth: Co-Design and Case Series. JMIR Hum Factors 2023;10:e46849. doi: 10.2196/46849

- 31. Min B, Ross H, Sulem E, et al. Recent advances in natural language processing via large pre-trained language models: A survey. ACM Computing Surveys 2023;56:1-40. doi: 10.1145/3605943

- 32. Wang C, Liu S, Yang H, et al. Ethical Considerations of Using ChatGPT in Health Care. J Med Internet Res 2023;25:e48009. doi: 10.2196/48009

- 33. Mustafa Y. Islam and the four principles of medical ethics. J Med Ethics 2014;40:479-83. doi: 10.1136/medethics-2012-101309

- 34. Keskinbora KH. Medical ethics considerations on artificial intelligence. J Clin Neurosci 2019;64:277-82. doi: 10.1016/j.jocn.2019.03.001

- 35. Walsh CG, Chaudhry B, Dua P, et al. Stigma, biomarkers, and algorithmic bias: recommendations for precision behavioral health with artificial intelligence. JAMIA Open 2020;3:9-15. doi: 10.1093/jamiaopen/ooz054

- 36. Dwivedi YK, Hughes L, Ismagilova E, et al. Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int J Inf Manage 2021;57:101994. doi: 10.1016/j.ijinfomgt.2019.08.002

- 37. Ficek J, Wang W, Chen H, et al. Differential privacy in health research: A scoping review. J Am Med Inform Assoc 2021;28:2269-76. doi: 10.1093/jamia/ocab135

- 38. Nguyen DC, Pham QV, Pathirana PN, et al. Federated learning for smart healthcare: A survey. ACM Computing Surveys 2022;55:1-37. doi: 10.1145/3453476

- 39. Ahmad R, Siemon D, Gnewuch U, et al. Designing Personality-Adaptive Conversational Agents for Mental Health Care. Inf Syst Front 2022;24:923-43. doi: 10.1007/s10796-022-10254-9

- 40. Vredenburg K, Mao JY, Smith PW, Carey T. A survey of user-centered design practice. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2002:471-8.

- 41. Bødker S, Dindler C, Iversen OS, et al. What is participatory design? In: Participatory design. Synthesis Lectures on Human-Centered Informatics. Cham: Springer; 2022:5-13.

- 42. Wang J, Moulden A. AI trust score: A user-centered approach to building, designing, and measuring the success of intelligent workplace features. Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems. 2021:1-7.