Significance

Handheld phone use while driving is a major cause of car crashes. A behavioral intervention that involved gamified goals and social competition reduced handheld use by 20% relative to control. Adding modest incentives led to a 28% reduction. These reductions persisted even after the interventions ended, suggesting that drivers formed lasting habits around avoiding handheld use. Because these interventions were successfully deployed within a usage-based auto insurance program, they could be brought to scale in similar contexts. In the United States alone, the intervention that included incentives could result in 2 billion fewer hours of distracted driving per year.

Keywords: distracted driving, crash risk, randomized controlled trial, behavioral intervention, habit formation

Abstract

Distracted driving is responsible for nearly 1 million crashes each year in the United States alone, and a major source of driver distraction is handheld phone use. We conducted a randomized, controlled trial to compare the effectiveness of interventions designed to create sustained reductions in handheld use while driving (NCT04587609). Participants were 1,653 consenting Progressive® Snapshot® usage-based auto insurance customers ages 18 to 77 who averaged at least 2 min/h of handheld use while driving in the month prior to study invitation. They were randomly assigned to one of five arms for a 10-wk intervention period. Arm 1 (control) got education about the risks of handheld phone use, as did the other arms. Arm 2 got a free phone mount to facilitate hands-free use. Arm 3 got the mount plus a commitment exercise and tips for hands-free use. Arm 4 got the mount, commitment, and tips plus weekly goal gamification and social competition. Arm 5 got everything in Arm 4 plus behaviorally designed financial incentives. Postintervention, participants were monitored until the end of their insurance rating period, 25 to 65 d more. Outcome differences were measured using fractional logistic regression. Arm 4 participants, who received gamification and competition, reduced their handheld use by 20.5% relative to control (P < 0.001); Arm 5 participants, who additionally received financial incentives, reduced their use by 27.6% (P < 0.001). Both groups sustained these reductions through the end of their insurance rating period.

Distracted driving contributes to more than 3,000 deaths and 400,000 injuries each year in the United States (1). Among all distracted driving deaths, 13% involve phone use; for drivers aged 15 to 24, this figure is 20%. Naturalistic studies have found that not all phone use is equally risky. While both handheld and hands-free phone use are cognitively distracting, handheld use is also visually and physically distracting. Engaging in handheld use increases the odds of a crash by 2 to 12 times, whereas hands-free use generally does not increase the odds of a crash (2–4). Auto insurance claims data likewise show that handheld phone use predicts crash claims while hands-free use does not (5). In response to these risks, lawmakers in 27 states have banned all handheld phone use while driving (6). The track record of these bans in terms of reducing the rate of car crashes has been, at best, mixed (7). Enforcing handheld bans is challenging, and drivers find ways to evade detection, such as holding their phone lower during use, that may increase their crash risk (8, 9). Alternative ways to counter drivers’ handheld phone use are needed.

One promising avenue is usage-based insurance (UBI), a fast-growing segment representing 17% of all US auto insurance customers (10). UBI programs typically measure a driver’s handheld phone use and other risky driving behaviors during a months-long rating period, then price the driver’s upcoming policy based on their predicted risk of crash. This provides a built-in incentive for drivers to reduce their handheld phone use. By leveraging principles from social psychology and behavioral economics, it may be possible to design UBI incentives and feedback for maximal impact and cost-effectiveness (11). Our prior RCT (n = 2,020) with Progressive Snapshot UBI customers found that providing weekly social comparison feedback in conjunction with modest financial incentives significantly reduced handheld phone use and that this reduction was greater when incentives were loss-framed and delivered weekly (12). The behavior change observed—up to 23% less handheld phone use relative to control—was sizeable; however, during a follow-up period after the 7-wk intervention, handheld phone use in the treatment groups was no longer significantly different from control.

Building on this earlier trial, the present RCT tested interventions designed to create more sustained behavior change (Materials and Methods). Efforts were made to foster intrinsic motivation to change, which can be essential for sustaining a behavior when extrinsic rewards such as financial incentives cease (13). In addition, rather than ask participants to exert self-control and refrain from phone use entirely, interventions encouraged them to exert situational self-control by removing temptations (14) and to substitute handheld use with less risky hands-free alternatives (15, 16). Last, to provide more time for participants to build their new habits, the intervention period was lengthened to 10 wk (17, 18).

The RCT recruited Snapshot customers and randomly assigned each consenting participant to one of five study arms. Participants in each numbered arm received all the interventions of lower-numbered arms plus an additional intervention. For example, arm 1, the control group, received education about the risks of handheld phone use—as did treatment arms 2 to 5; arm 2 received a free phone mount—as did arms 3 to 5; and so on. This additive design was chosen for pragmatic reasons (e.g., ease of implementation and statistical power) and because it enabled interventions that built on each other and promised insights into whether the incremental benefit of an intervention justified its cost. Each trial arm is described next, and illustrations of intervention messaging are available in the online Supplementary Materials.

Arm 1: Education-Only Control.

At the end of an intake survey, all participants (arms 1 to 5) received information about how handheld phone use makes driving less safe. Statistics highlighted the increase in crash risk for each additional second of looking at their phone; reading a text for 5 s while driving 55 mph was compared to driving the length of a football field with one’s eyes closed. Laws banning handheld phone use were described, and a color-coded map of the United States indicated the jurisdictions that at the time had total (48 states) or partial (1 state) bans on texting while driving. Last, it was recommended that participants use hands-free options and place their phone in a phone mount, keeping themselves and others safer and helping them earn a bigger Snapshot discount. This education was meant to ensure that all participants had at least some motivation to reduce handheld phone use.

Arm 2: Phone Mount.

Participants in arms 2 to 5 were mailed a free Beam Electronics® air vent phone mount with a Progressive-branded sticker bearing the message “Driving? Park your phone here.” An included note instructed participants to 1) clip the mount onto an air vent close to eye level; 2) use the mount whenever they needed their phone while driving; and 3) remember that hands-free phone use is safer and could lead to a bigger Snapshot discount. Of interest was whether this one-shot intervention involving a simple environmental modification would be enough to change drivers’ behavior.

Arm 3: Commitment Plus Habit Tips.

For participants in arms 3 to 5, the intake survey also included a commitment exercise and habit formation tips after the education component. Committing to a specific goal has been found to increase the success of behavior change efforts (19). To secure participants’ commitment to reduce their handheld phone use while driving, first, a social norm was invoked (20) by informing them that “90 percent of surveyed Snapshot customers are interested in reducing phone use while driving” [a finding from our prior RCT (12)]. Next, participants were asked to write, in their own view, how it would be beneficial if everyone reduced their handheld phone use while driving—an intervention that can change a person’s attitudes to be more in line with what they have written (21). Then, participants were informed about their own baseline handheld use and told that the safest drivers in the Snapshot program—and thus the drivers with the biggest discounts—have handheld use <1 min/h. Next, participants were told how much they would need to reduce their own use each week (rounded to the nearest 10 s/h) over the course of the 10-wk study to get down to 1 min/h. Finally, they were asked whether they would commit to this weekly reduction goal—in effect, breaking down what could be a relatively large request (e.g., 5-min/h overall reduction) into a series of manageable weekly goals (e.g., 30 s/h in a week) (22, 23). These smaller goals meant that, on any given week, participants would be close to achieving a goal, thereby increasing motivation (24). Participants could decline to commit, but yes was the default response (25).
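The weekly goal arithmetic described above can be sketched in a few lines. This is only an illustration, not the study's implementation: the function name `weekly_reduction_s_per_h` is hypothetical, and rounding ties upward is an assumption, since the text says only that reductions were rounded to the nearest 10 s/h.

```python
def weekly_reduction_s_per_h(baseline_s_per_h, target_s_per_h=60, weeks=10):
    """Weekly reduction (s/h) needed to reach the target, to the nearest 10 s/h."""
    total_reduction = max(baseline_s_per_h - target_s_per_h, 0)
    per_week = total_reduction / weeks
    # Round half up to the nearest 10 s/h (the tie rule is an assumption).
    return int(per_week / 10 + 0.5) * 10


# A driver at 6 min/h (360 s/h) needs a 5-min/h overall reduction to reach 1 min/h.
print(weekly_reduction_s_per_h(360))  # 30
```

This reproduces the example in the text, where a 5-min/h overall reduction becomes a 30 s/h goal in each of 10 weeks.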

The intake survey then gave participants tips intended to help them build better habits (“habit tips”). An implementation intentions exercise was designed to help participants anticipate and plan for the three biggest obstacles to putting their phone down while driving (26, 27). They could choose from a menu of six common obstacles and plans to surmount them (e.g., “If I know I’ll need GPS, then I will enter where I’m going ahead of time.”), or they could write their own. Participants were informed that the plans they chose or wrote would be texted back to them later as reminders. Next, participants received information about the do not disturb while driving (DNDWD) features available on smartphones and were asked to set the feature on their own phone to come on automatically when driving (28). Last, participants received encouragement to use a phone mount (their own or the one provided) whenever they needed to use their phone while driving.

During the 10-wk intervention period, participants also received habit tips three times per week for the first 2 wk and once per week for the remaining 8 wk. These included reminders about the safety and financial benefits of cutting down on handheld use, nudges about using DNDWD and a phone mount, and their personal intake survey responses about the benefits of reducing handheld use and their plans for surmounting obstacles. Another habit tip provided a mindfulness technique to reduce phone use (e.g., “Tip: The urge to check your phone is normal. We can’t change the urge, but we can choose how we respond. When driving, notice the urge and tell yourself, ‘I won’t check now’”) (29). Others encouraged participants to create their own prompts and rewards so that they were not wholly dependent on study prompts and rewards (e.g., “When you get in your car, what will remind you not to use your phone? How can you make sure any phone use is hands-free?” and “Good habits stick when they’re rewarded… When you don’t touch your phone while driving, how can you give yourself a pat on the back?”) (30).

These interventions were bundled together because they were higher-touch and digitally delivered yet did not involve processing trip data or providing performance feedback.

Arm 4: Gamification Plus Competition.

Each Monday evening of the intervention period, participants in arms 4 and 5 received text messages indicating their handheld phone use goal for the new week (“pledged goal” for those who committed earlier) and whether they met their goal for the prior week. These goals were “gamified,” with the possibility to earn or lose points and to level up or down. Prior research has established the effectiveness of gamification on behavior change in a variety of domains (31–33). Participants began with 100 points at silver level. They could gain 10 points, maintain points, or lose 10 points each week depending on whether they met their goal, fell short (missed their goal by less than twice their target weekly improvement increment), or backslid (missed their goal by at least twice their target weekly improvement increment). If they met their prior week’s goal, the new goal for the upcoming week was incrementally more challenging; if they backslid, the new goal was incrementally easier (but never easier than their initial goal). Those who met their goals received positive reinforcement (e.g., “Keep rolling”), while those who backslid were encouraged to think of the upcoming week as a fresh start (e.g., “Turn over a new leaf”) (34). Participants who did not drive in the prior week (and, therefore, had no handheld phone use while driving) were considered to have met their goal.
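The weekly scoring rule can be written as a small state update. The sketch below is one reading of the rules described above, not the study's code. The function names are hypothetical, and several details are assumptions that the text does not specify: treating "met" as use at or below the goal, leaving the goal unchanged after a fell-short week, and flooring goals at 0 s/h.

```python
START_POINTS = 100  # participants began with 100 points at silver level


def classify_week(use, goal, increment):
    """Return 'met', 'fell_short', or 'backslid' for one week (values in s/h).

    `use` is None when the participant did not drive, which counts as met.
    """
    if use is None or use <= goal:  # "met" as use <= goal is an assumption
        return "met"
    miss = use - goal
    return "fell_short" if miss < 2 * increment else "backslid"


def update(points, goal, initial_goal, increment, outcome):
    """Apply the weekly point change and goal adjustment."""
    if outcome == "met":
        # Harder goal next week; the floor at 0 is an assumption.
        return points + 10, max(goal - increment, 0)
    if outcome == "backslid":
        # Easier goal, but never easier than the initial goal.
        return points - 10, min(goal + increment, initial_goal)
    return points, goal  # fell short: goal unchanged (assumption)


points, goal = update(START_POINTS, 330, 330, 30, classify_week(300, 330, 30))
print(points, goal)  # 110 300
```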

In addition, each week participants entered a competition with similar drivers to see who could engage in handheld phone use the least. Social competition of this kind has been found to promote lasting behavior change (35). Participants were grouped with up to 9 others in their study arm who had similar levels of baseline handheld use and received weekly emails with a leaderboard that ranked group members from least to most handheld use during the prior week. Their own use (in minutes and seconds per hour of driving) appeared next to the name “YOU”; everyone else’s use appeared next to anonymized initials. The leaderboard included only participants who drove at least 1 h. Text accompanying the leaderboard reminded participants that they could get a discount on their auto insurance if they reduced their handheld phone use while driving.
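The leaderboard logic described above amounts to a filter, a sort, and a relabeling step. The following is a hedged sketch: the function name and the record keys (`id`, `initials`, `hours_driven`, `handheld_s_per_h`) are hypothetical and do not come from the study's messaging platform.

```python
def build_leaderboard(group, viewer_id, min_hours=1.0):
    """Rank group members from least to most handheld use for one week.

    `group` is a list of dicts with hypothetical keys:
    'id', 'initials', 'hours_driven', 'handheld_s_per_h'.
    """
    # Only participants who drove at least 1 h appear on the board.
    eligible = [m for m in group if m["hours_driven"] >= min_hours]
    ranked = sorted(eligible, key=lambda m: m["handheld_s_per_h"])
    rows = []
    for m in ranked:
        # The viewer sees "YOU"; everyone else appears as anonymized initials.
        name = "YOU" if m["id"] == viewer_id else m["initials"]
        minutes, seconds = divmod(round(m["handheld_s_per_h"]), 60)
        rows.append((name, f"{minutes}:{seconds:02d}"))
    return rows


group = [
    {"id": 1, "initials": "A.B.", "hours_driven": 2.0, "handheld_s_per_h": 95},
    {"id": 2, "initials": "C.D.", "hours_driven": 0.5, "handheld_s_per_h": 10},
    {"id": 3, "initials": "E.F.", "hours_driven": 3.0, "handheld_s_per_h": 42},
]
print(build_leaderboard(group, viewer_id=1))
```

In this made-up group, the second member is dropped for driving less than 1 h, so the viewer ranks second of two.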

The goal gamification and social competition elements were bundled together because both were evidence-based, low-cost approaches to providing feedback that were also complementary in nature. Goal gamification was a solitary pursuit rewarding consistency and persistence, with the participant fully in control of whether they met their goals. Social competition involved peer comparison and rewarded avoiding handheld phone use each week regardless of how much the participant had engaged in handheld use in the past.

Arm 5: Prize Money.

Arm 5 participants alone could earn financial incentives. Those who met most of their weekly goals and finished the 10-wk intervention period at the platinum level (≥170 points) received an equal share of a $2,000 prize. As part of the weekly goal messaging, those who still had a chance to finish at the platinum level were reminded of the potential prize money. Unlike a “lottery” incentive, in which a randomly chosen participant wins the entire prize (36), this shared prize ensured that all participants who met the standard received an award, while keeping its size unknown until it was awarded (in the end, platinum level finishers each took home $15.63).

In addition, participants were told they could earn $5 each week they finished atop their group’s leaderboard for having the lowest rate of handheld phone use. When multiple participants tied for first place, they each earned $5. The weekly leaderboard included a “Total Winnings” column showing each participant’s cumulative winnings.
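The two incentive rules reduce to simple arithmetic, sketched below with hypothetical function names. The number of platinum finishers is not stated in this section. A count of 128 would reproduce the reported $15.63 share, but that count is back-calculated here and should be treated as an assumption.

```python
from decimal import Decimal, ROUND_HALF_UP

PRIZE_POOL = Decimal("2000")  # shared prize for platinum finishers
WEEKLY_AWARD = Decimal("5")   # paid to each participant tied for first


def platinum_share(n_finishers):
    """Equal share of the prize pool, rounded to the cent."""
    share = PRIZE_POOL / Decimal(n_finishers)
    return share.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def weekly_winners(use_by_participant):
    """Everyone tied for the lowest handheld use earns the weekly award."""
    best = min(use_by_participant.values())
    return {pid: WEEKLY_AWARD for pid, use in use_by_participant.items() if use == best}


print(platinum_share(128))  # 15.63 (128 finishers is an inferred count)
print(weekly_winners({"a": 40, "b": 40, "c": 75}))
```

Because ties each earn the full $5, the weekly cost per group is not capped at $5, which matters when budgeting such a scheme.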

Relative to the other interventions, financial incentives would be costliest to scale. The study design allowed us to test whether the non-incentive-based interventions by themselves were effective and whether adding incentives increased their effectiveness.

Primary Outcome.

Proportion of drive time engaged in handheld phone use was the primary outcome. Handheld use during the 10-wk intervention was compared between each of the intervention arms and control, and between each successively numbered treatment arm to test the incremental effect of adding each set of interventions. See Data Analysis for details on power analysis, statistical model, and adjustment for multiple comparisons. To examine the longevity of behavior change, this analysis was repeated for a follow-up period.

Results

Participant Characteristics.

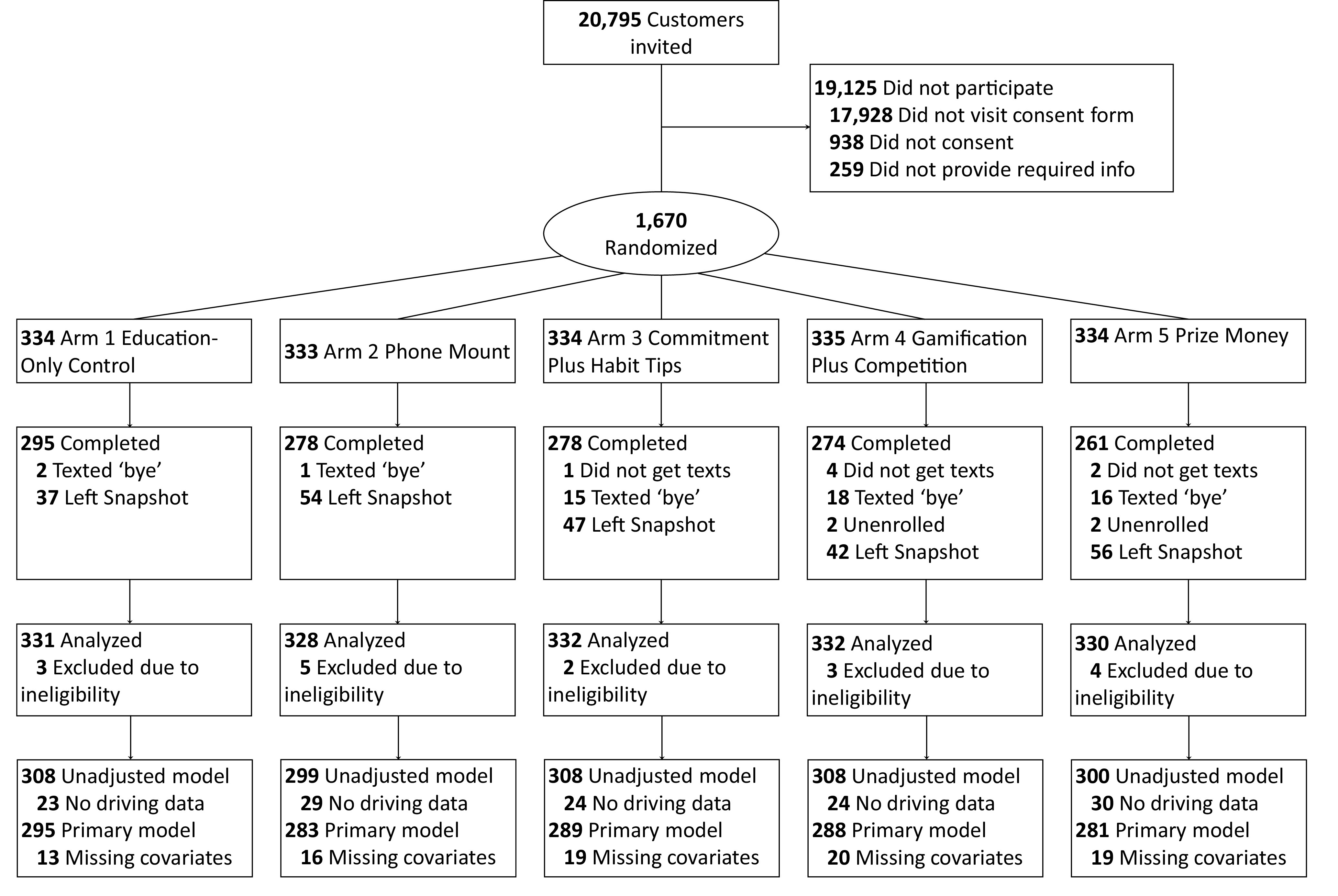

In total, 1,653 eligible participants were randomized to a study arm (Fig. 1). Of these, 1,560 (94.4%) started and completed the intake survey; 975 (59.0%) started and 901 (54.5%) completed the exit survey. Mean age was 32.8 y (range: 18 to 77) and 66.5% were female. The sample was diverse ethnically (14.0% Hispanic), racially (60.3% white, 20.5% Black, 13.6% other), and geographically (20.3% urban, 59.5% suburban, 19.8% rural) (Table 1). Participants were more likely to be female, urban, and college-educated than those who were eligible and chose not to participate (SI Appendix). For participants who requested to unenroll (n = 4) or left the Snapshot program (n = 236), data were analyzed until the point of unenrollment or departure. Participants were excluded from the primary analysis if they did not have any intervention period driving data (n = 130) or were missing prespecified covariates (additional n = 87); 1,436 remained for the complete-case analysis (Table 2).

Fig. 1.

Participant flow diagram. Participants were included in the intention-to-treat analysis regardless of whether they completed the intervention period. It was found that 17 participants did not meet study eligibility requirements (15 were under 18 y old and 2 were not the intended recipient of the invitation email) and were excluded, yielding a sample of 1,653. The unadjusted model, reported in the SI Appendix, excluded 130 participants who did not have any intervention period trip data, yielding a sample of 1,523. The complete-case primary model reported in the Main Text further excluded 87 who were missing covariate data, yielding a sample of 1,436.

Table 1.

Baseline handheld phone usage, demographics, and other characteristics by study arm

| Characteristic | 1. Education-Only Control | 2. Phone Mount | 3. Commitment Plus Habit Tips | 4. Gamification Plus Competition | 5. Prize Money |
|---|---|---|---|---|---|
| n | 331 | 328 | 332 | 332 | 330 |
| Baseline handheld use minutes per hour (mean (SD)) | 6.4 (5.2) | 6.5 (4.6) | 6.4 (5.0) | 6.6 (5.0) | 6.4 (4.9) |
| Progressive’s estimated max potential insurance discount (mean (SD), in USD) | 61.93 (18.33) | 60.44 (17.86) | 60.44 (18.03) | 60.66 (18.29) | 61.80 (18.80) |
| Age (mean (SD)) | 32.3 (9.0) | 33.5 (10.3) | 32.8 (9.6) | 32.8 (9.6) | 32.7 (10.2) |
| Sex: Female (%) | 208 (62.8) | 218 (66.5) | 216 (65.1) | 228 (68.7) | 229 (69.4) |
| Marital status: Married (%) | 88 (26.6) | 73 (22.3) | 97 (29.2) | 81 (24.4) | 85 (25.8) |
| Location (%) | | | | | |
| Rural | 52 (15.7) | 69 (21.0) | 69 (20.8) | 77 (23.2) | 61 (18.5) |
| Suburban | 205 (61.9) | 199 (60.7) | 186 (56.0) | 189 (56.9) | 204 (61.8) |
| Urban | 73 (22.1) | 60 (18.3) | 75 (22.6) | 64 (19.3) | 63 (19.1) |
| State has handheld ban (%) | 55 (16.6) | 53 (16.2) | 73 (22.0) | 66 (19.9) | 54 (16.4) |
| Race (%) | | | | | |
| White | 203 (61.3) | 201 (61.3) | 198 (59.6) | 202 (60.8) | 193 (58.5) |
| Black | 60 (18.1) | 67 (20.4) | 75 (22.6) | 66 (19.9) | 71 (21.5) |
| Other | 51 (15.4) | 43 (13.1) | 40 (12.0) | 45 (13.6) | 45 (13.6) |
| Ethnicity (%) | | | | | |
| Hispanic | 59 (17.8) | 44 (13.4) | 47 (14.2) | 38 (11.4) | 44 (13.3) |
| Non-Hispanic | 250 (75.5) | 260 (79.3) | 261 (78.6) | 267 (80.4) | 261 (79.1) |
| Level of education (%) | | | | | |
| High school or less | 55 (16.6) | 59 (18.0) | 51 (15.4) | 49 (14.8) | 40 (12.1) |
| Some college | 89 (26.9) | 103 (31.4) | 100 (30.1) | 100 (30.1) | 110 (33.3) |
| College degree and above | 170 (51.3) | 149 (45.4) | 162 (48.7) | 164 (49.4) | 159 (48.1) |
| Phone type (%) | | | | | |
| Android | 68 (20.5) | 106 (32.3) | 113 (34.0) | 100 (30.1) | 92 (27.9) |
| iOS | 246 (74.3) | 205 (62.5) | 200 (60.2) | 213 (64.2) | 217 (65.8) |
| Baseline phone mount installed (%) | 116 (35.0) | 92 (28.0) | 111 (33.4) | 109 (32.8) | 102 (30.9) |
| Baseline use of Do Not Disturb While Driving (%) | 75 (22.7) | 79 (24.1) | 65 (19.6) | 58 (17.5) | 74 (22.4) |
| Dashboard touchscreen (%) | 172 (52.0) | 176 (53.7) | 180 (54.2) | 172 (51.8) | 180 (54.5) |
| Frequency of letting passenger use phone (%) | | | | | |
| Never | 118 (35.6) | 128 (39.0) | 116 (34.9) | 112 (33.7) | 117 (35.5) |
| 1 to 2 d | 107 (32.3) | 84 (25.6) | 81 (24.4) | 111 (33.4) | 112 (33.9) |
| 3 d or more | 89 (26.9) | 99 (30.2) | 116 (34.9) | 90 (27.1) | 80 (24.2) |
| Frequency of riding as a passenger (%) | | | | | |
| Never | 73 (22.1) | 62 (18.9) | 68 (20.5) | 62 (18.7) | 64 (19.4) |
| 1 to 2 d | 137 (41.4) | 118 (36.0) | 117 (35.2) | 123 (37.0) | 114 (34.5) |
| 3 d or more | 104 (31.4) | 131 (39.9) | 128 (38.6) | 128 (38.6) | 131 (39.7) |
| Number of traffic violations in prior 5 y (%) | | | | | |
| 0 | 189 (57.1) | 194 (59.1) | 184 (55.4) | 172 (51.8) | 183 (55.5) |
| 1 | 79 (23.9) | 67 (20.4) | 69 (20.8) | 95 (28.6) | 77 (23.3) |
| 2 | 32 (9.7) | 35 (10.7) | 41 (12.3) | 29 (8.7) | 39 (11.8) |
| 3 or more | 13 (3.9) | 15 (4.6) | 19 (5.7) | 16 (4.8) | 10 (3.0) |
| Number of car crashes in prior 5 y (%) | | | | | |
| 0 | 185 (55.9) | 182 (55.5) | 201 (60.5) | 172 (51.8) | 185 (56.1) |
| 1 | 97 (29.3) | 97 (29.6) | 79 (23.8) | 99 (29.8) | 89 (27.0) |
| 2 | 22 (6.6) | 26 (7.9) | 24 (7.2) | 34 (10.2) | 26 (7.9) |
| 3 or more | 10 (3.0) | 6 (1.8) | 9 (2.7) | 8 (2.4) | 9 (2.7) |

Table 2.

Raw and Holm–Bonferroni adjusted P values for the 7 planned comparisons, for both the intervention and postintervention periods. Adjusted P values may be directly compared to an alpha threshold of 0.05.

| Comparison | Raw P Value | Holm Threshold | Adjusted P Value |
|---|---|---|---|
| Intervention | | | |
| 5. Prize Money vs. 1. Control | 0.00000001 | 0.0071 | <0.001 |
| 4. Gamification Plus Competition vs. 1. Control | 0.000009 | 0.0083 | <0.001 |
| 4. Gamification Plus Competition vs. 3. Commitment Plus Habit Tips | 0.0069 | 0.01 | 0.035 |
| 3. Commitment Plus Habit Tips vs. 2. Phone Mount | 0.089 | 0.0125 | 0.35 |
| 3. Commitment Plus Habit Tips vs. 1. Control | 0.126 | 0.0167 | 0.38 |
| 5. Prize Money vs. 4. Gamification Plus Competition | 0.141 | 0.025 | 0.28 |
| 2. Phone Mount vs. 1. Control | 0.819 | 0.05 | 0.82 |
| Postintervention | | | |
| 5. Prize Money vs. 1. Control | 0.00001 | 0.0071 | <0.001 |
| 4. Gamification Plus Competition vs. 1. Control | 0.0055 | 0.0083 | 0.033 |
| 5. Prize Money vs. 4. Gamification Plus Competition | 0.053 | 0.01 | 0.26 |
| 3. Commitment Plus Habit Tips vs. 1. Control | 0.054 | 0.0125 | 0.22 |
| 3. Commitment Plus Habit Tips vs. 2. Phone Mount | 0.107 | 0.0167 | 0.32 |
| 4. Gamification Plus Competition vs. 3. Commitment Plus Habit Tips | 0.441 | 0.025 | 0.88 |
| 2. Phone Mount vs. 1. Control | 0.785 | 0.05 | 0.78 |
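The Holm thresholds in Table 2 follow from the standard step-down rule: with m comparisons sorted by ascending raw P value, the k-th smallest is compared against alpha / (m - k + 1), and testing stops at the first failure. The sketch below uses a hypothetical function name and generic textbook logic, not the authors' analysis code. It reproduces the threshold column from the raw P values in the table.

```python
def holm_step_down(raw_p, alpha=0.05):
    """Return (p, threshold, rejected) tuples in ascending order of p."""
    m = len(raw_p)
    results, still_rejecting = [], True
    for rank, p in enumerate(sorted(raw_p), start=1):
        threshold = alpha / (m - rank + 1)
        # Once one comparison fails, all larger P values also fail.
        still_rejecting = still_rejecting and p <= threshold
        results.append((p, round(threshold, 4), still_rejecting))
    return results


intervention = [0.00000001, 0.000009, 0.0069, 0.089, 0.126, 0.141, 0.819]
postintervention = [0.00001, 0.0055, 0.053, 0.054, 0.107, 0.441, 0.785]
for row in holm_step_down(intervention):
    print(row)
```

Under this rule, three comparisons are significant during the intervention period and two in the postintervention period, matching the adjusted P values below 0.05 in the table.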

Manipulation Checks.

To gauge the reach of the phone mount intervention, the percentage of participants who reported having a phone mount installed in their car was examined for the intake and exit surveys, including only those who responded to both (n = 940). In arms 2 through 5, which received the free mount, 30.2% reported having a mount installed at intake and 88.7% at exit, a significantly greater increase than in arm 1 (31.4% at intake, 42.4% at exit; treated by time interaction P < 0.001). Likewise, self-reported use of a DNDWD setting was compared at intake versus exit (n = 945). In arms 3, 4, and 5, which were encouraged to use this setting, 19.0% of respondents reported using this setting at intake versus 43.6% at exit, a significantly greater increase than in arms 1 and 2 (22.3% at intake, 31.9% at exit; treated by time interaction P = 0.001). For the goal commitment intervention given to arms 3, 4, and 5, 98.7% of respondents said they would commit to reducing their handheld phone use to under 1 min/h.
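On the raw percentage scale, the treated-by-time interactions reported above correspond to a difference in differences: the intake-to-exit change in the treated arms minus the change in the comparison arms. The sketch below, with a hypothetical function name, computes only that descriptive contrast from the percentages in the text. It does not reproduce the model-based P values.

```python
def diff_in_diff(treated_pre, treated_post, control_pre, control_post):
    """Change in treated arms minus change in comparison arms (percentage points)."""
    return round((treated_post - treated_pre) - (control_post - control_pre), 1)


# Phone mount installed: arms 2 to 5 versus arm 1.
print(diff_in_diff(30.2, 88.7, 31.4, 42.4))  # 47.5
# DNDWD use: arms 3 to 5 versus arms 1 and 2.
print(diff_in_diff(19.0, 43.6, 22.3, 31.9))  # 15.0
```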

Of interest was the percentage of participants in each arm who opted out of receiving text messages by texting “bye,” “stop,” or a similar expression. Opt-out rates were low across arms, but higher for arms that received study texts throughout the intervention period (arm 1, 0.6%; arm 2, 0.3%; arm 3, 4.5%; arm 4, 5.4%; arm 5, 4.8%). Even though messages delivered to arms 3 and 4 were not tied to financial incentives, these participants were no more likely to opt out than were participants in arm 5.

Primary Analysis.

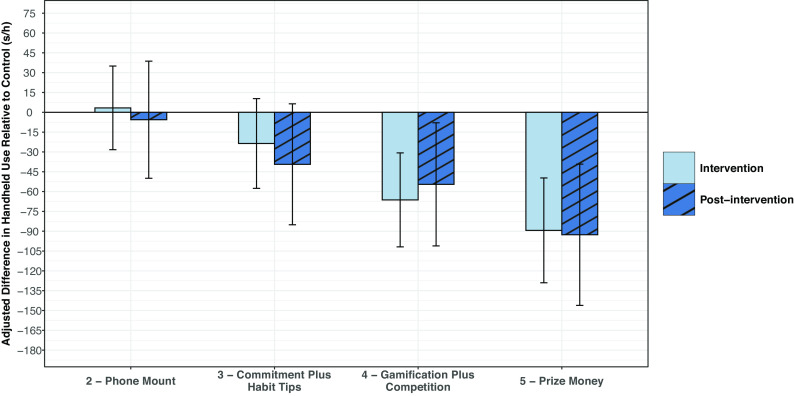

There was no evidence of multicollinearity in the model (all variance inflation factors <3). Participants in arm 2 (phone mount) and arm 3 (commitment plus habit tips) did not significantly reduce their handheld phone use relative to control (Fig. 2). Participants in arm 4 (gamification plus competition) reduced handheld use by 66 s/h, or 20.5%, relative to control (Holm-adjusted P < 0.001); this was also a significant improvement relative to arm 3 (adjusted P = 0.035). The largest reduction occurred among participants in arm 5 (prize money), which provided the same goal gamification and social competition elements as arm 4 while linking performance to monetary rewards. These participants reduced their handheld use by 89 s/h, or 27.6%, relative to control (adjusted P < 0.001).*

Fig. 2.

Plot of differences in adjusted mean handheld use between each of the four intervention arms and control, for both the intervention and postintervention periods, with 95% confidence intervals.

To test whether behavior change persisted after study messages and incentives ceased, this analysis was repeated for the postintervention period. Participants in arm 4 still had 55 s/h, or 16.2%, less handheld use than control (adjusted P = 0.033) and those in arm 5 had 93 s/h, or 27.6%, less use (adjusted P < 0.001).

Additional analyses with total phone use (handheld plus hands-free) as the outcome found no significant differences between any of the four treatment arms and control, during both the intervention and postintervention periods (all adjusted Ps > 0.14). This pattern of results indicates a shift from handheld (riskier) to hands-free (less risky) phone use among some participants, with little change in overall phone use.

Discussion

This RCT tested interventions designed to create sustained change in drivers’ phone use habits. The results showed that giving participants a phone mount or a commitment exercise and habit tips did not, by themselves, decrease handheld phone use while driving. Adding weekly gamified goals and social competition led to a significant decrease in handheld phone use, suggesting that engaging performance feedback is crucial for behavior change. Including modest financial incentives (average cost per participant: $6 for gamified goals, $5 for social competition) led to the greatest decrease in handheld phone use; participants who received these incentives as part of the weekly goals and competition showed a 28% decrease relative to control. Importantly, those who received the performance feedback or the feedback plus incentives continued to engage in handheld phone use at reduced levels even after the feedback and incentives ended.

One limitation of this study is that it was opt-in, making it difficult to gauge the acceptability and effectiveness of interventions if scaled to all customers. Those who enrolled may have been more open to changing their driving behavior or more willing to receive text messages encouraging them to change. A second limitation owes to the additive nature of the study design, which prevents certain inferences from being drawn. For instance, the results showed that providing a phone mount was not sufficient to cause a shift in phone usage; however, it may be that having a phone mount is necessary to facilitate and sustain a transition from handheld to hands-free use. A follow-up study with a factorial design would help to clarify the independent and interactive effects of each of the interventions. A third limitation is that after interventions ceased, we could only monitor participants until the end of their insurance rating period (25 to 65 d later). Therefore, we cannot know how long, or even if, behavior change persisted beyond this point.

The average driver in the United States spends 310 h behind the wheel each year, totaling 70 billion hours across the population (37). At the population level, an intervention that induced a long-term, 90 s/h reduction in handheld phone use could mean close to 2 billion fewer hours of distracted driving per year. Given that approximately 60,000 crashes owe to phone use distraction each year (1), a 28% reduction in the most distracting kind of phone use could prevent 16,000 crashes. UBI programs, which have large and growing numbers of customers and already offer rewards to drivers who put down their phones, are a promising channel for scaling behavioral interventions to encourage focused driving.
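The population-level estimate of hours avoided is a unit conversion: a reduction of 90 s for every hour driven, applied to 70 billion hours of driving per year. The short sketch below, with a hypothetical function name, makes the step explicit using only the figures quoted in the text.

```python
def distracted_hours_avoided(total_driving_hours, reduction_s_per_h):
    """Hours of handheld use avoided per year for a given per-hour reduction."""
    return total_driving_hours * reduction_s_per_h / 3600


hours = distracted_hours_avoided(70e9, 90)
print(f"{hours / 1e9:.2f} billion hours")  # 1.75 billion hours
```

The result, 1.75 billion hours, is the basis for the statement of close to 2 billion fewer hours per year.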

Materials and Methods

The Institutional Review Board at the University of Pennsylvania approved the study under expedited review (Protocol 842968). Respondents provided informed consent prior to enrollment.

Participants.

Snapshot customers were eligible to participate if they lived in a state where phone use while driving was factored into their insurance rating; had 16 to 56 d of baseline driving data from their rating period at the time of invitation; took ≥7 driving trips in one of the weeks during the month of February, 2021; averaged ≥2 min/h of handheld phone use while driving; could read and understand English; and were ≥18 y old. Beginning March 1, 2021, Progressive sent eligible customers study invitation emails with an estimate of how much they could save on their auto insurance policy (M = $63) by reducing their handheld phone use to less than 1 min/h and how much they could earn by completing intake and exit surveys ($20 total in Amazon® gift codes). Interested customers could click a link to an informed consent form in Qualtrics.

Intake Survey and Interventions.

Those who consented and provided contact information were immediately randomized to a treatment arm and taken to the intake survey. This survey asked about their phone make, driving history, phone use while driving, use of settings to limit distracted driving, and demographics (SI Appendix). After completing these survey items, all participants received education about distracted driving (see arm 1 description). Those in arms 3, 4, and 5 also received a goal commitment exercise and habit tips (see arm 3 description) and were asked to choose a time when they typically would not be driving to receive tips by text message.

Intervention, Exit Survey, and Postintervention.

The intervention period began March 15, 2021, and lasted 10 wk. During this period, the Snapshot app monitored driving behaviors and the University of Pennsylvania’s Way to Health platform delivered automated messaging to arms 3, 4, and 5. At the end of the 10 wk, Way to Health sent all participants the exit survey. This survey repeated certain questions from the intake survey to see whether participants had changed; delivered an adaptive, five-item delay discounting task (38); asked participants what, if anything, motivated them to change and which interventions helped them to do so; and collected feedback about how the interventions could be improved and whether they would recommend the study to others (SI Appendix). Participants’ driving continued to be monitored until the end of their Snapshot rating period, which constituted a variable-length postintervention period lasting 25 to 65 d.

Measures.

The Snapshot app is a mobile telematics application that uses location and phone sensor data to measure information about trip events, such as start and end time, speeding, hard braking, and rapid acceleration. It also measures the amount of three kinds of smartphone use during trips: handheld call, handheld noncall, and hands-free. The app discriminates between handheld and hands-free use using information from the OS about hands-free audio connectivity and from the phone’s accelerometer and gyroscope sensors. According to Cambridge Mobile Telematics, Progressive’s mobile technology vendor, the app can distinguish between handheld and hands-free use with 82% precision. Handheld use—call and noncall—was the target of our interventions and served as our primary outcome. A deep learning algorithm classifies each trip as a likely driver or nondriver trip and has been found to be 97% accurate (39). Only driver-classified trips were included in our analyses.

Data Analysis.

Prior to the trial, a power analysis was performed that assumed, based on previous research with Snapshot customers, a mean of 8.9% (SD = 8.8%) of drive time engaged in handheld phone use, or 320 s/h (12). It found that to have a power of 0.80 to detect a reduction of 2 percentage points in the amount of drive time engaged in handheld phone use (72 s/h of driving), the minimum sample size was 1,204 (301 per arm). For trial data, the primary outcome was computed by summing each participant’s handheld call and handheld noncall phone use while driving and dividing by their total seconds of driving time during the intervention period. The primary analytic model was fractional regression with a logit link function and variables coding for the four intervention arms as predictors. In addition to comparing each intervention arm to control, three further contrasts were prespecified (arm 3 versus 2, arm 4 versus 3, and arm 5 versus 4), for a total of seven.
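The arithmetic behind the power analysis figures can be sketched as follows. The conversion from percentage of drive time to seconds per hour is direct. The sample-size step is an assumption: the text does not state which test or software was used, so the sketch applies the standard normal-approximation formula for a two-sided, two-sample comparison of means at alpha = 0.05. Function names are illustrative only.

```python
import math
from statistics import NormalDist

SECONDS_PER_HOUR = 3600


def pct_to_seconds_per_hour(pct_of_drive_time: float) -> float:
    """Convert a percentage of drive time into seconds per hour of driving."""
    return pct_of_drive_time / 100 * SECONDS_PER_HOUR


def n_per_arm(sd: float, delta: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-arm sample size for a two-sided, two-sample comparison of means.

    Normal approximation; an assumed method, not necessarily the one used in the trial.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) * sd / delta) ** 2)


baseline_s_per_h = pct_to_seconds_per_hour(8.9)    # assumed baseline mean, about 320 s/h
target_drop_s_per_h = pct_to_seconds_per_hour(2)   # 2 percentage points, 72 s/h
approx_n = n_per_arm(sd=8.8, delta=2.0)            # approximation only

print(round(baseline_s_per_h), round(target_drop_s_per_h), approx_n)
```

Under these assumptions the approximation gives 304 per arm, within a few participants of the reported 301 per arm; the small gap is what one would expect from a different test or software default.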

The primary analysis was intention to treat; participants randomly assigned to a trial arm were included regardless of whether they received the intake survey interventions or intervention period messages. However, participants who had no intervention period driving data (n = 130) were excluded from this analysis.
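To illustrate how the primary outcome and this exclusion rule fit together, the following sketch computes each participant's handheld use as a proportion of drive time and drops participants with no intervention period driving data. The record layout, field names, and values are hypothetical; the actual Snapshot data schema is not described here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DrivingSummary:
    """Hypothetical per-participant totals for the intervention period, in seconds."""
    participant_id: str
    arm: int  # arm as randomized (intention to treat)
    handheld_call_s: float
    handheld_noncall_s: float
    driving_s: float


def handheld_proportion(rec: DrivingSummary) -> Optional[float]:
    """Return handheld use as a fraction of drive time, or None if there was no driving."""
    if rec.driving_s <= 0:
        return None  # no intervention period driving data: excluded from the analysis
    return (rec.handheld_call_s + rec.handheld_noncall_s) / rec.driving_s


# Made-up records for illustration only.
records = [
    DrivingSummary("p1", arm=1, handheld_call_s=600, handheld_noncall_s=1200, driving_s=36000),
    DrivingSummary("p2", arm=4, handheld_call_s=300, handheld_noncall_s=600, driving_s=36000),
    DrivingSummary("p3", arm=5, handheld_call_s=0, handheld_noncall_s=0, driving_s=0),
]

outcomes = {}
for rec in records:
    proportion = handheld_proportion(rec)
    if proportion is not None:
        outcomes[rec.participant_id] = proportion

print(outcomes)
```

Participants are retained under their randomized arm regardless of whether they engaged with the interventions; only the absence of driving data removes them.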

The analytic model included several prespecified covariates: baseline period handheld phone use as a proportion of drive time; length of baseline period; proportion of baseline handheld use due to calls versus noncalls; mean hours of driving per week during baseline; age; sex; marital status; urban, suburban, or rural residence; residence in a state with a universal handheld ban; race; ethnicity; household income level; education level; phone make; baseline use of DNDWD; presence of a dashboard touchscreen in the vehicle; frequency of letting passengers use their (driver’s) phone; frequency of riding as a passenger; number of traffic violations in the prior 5 y; and number of car crashes in the prior 5 y. We used variance inflation factors to check for multicollinearity in the model. The primary analysis took a complete-case approach to missingness: participants who had intervention period driving data but were missing one or more covariates (n = 87) were excluded. Covariate-adjusted means are reported in the main text. SI Appendix reports unadjusted results for all participants who had intervention period driving data. The Holm method was used to correct for multiple preplanned contrasts. Holm-adjusted P values are reported and can be compared directly to an alpha threshold of 0.05. This analysis was repeated for the postintervention period.
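For readers unfamiliar with the Holm correction, a minimal sketch of the step-down adjustment follows. It is a generic implementation of the procedure, not the authors' analysis code, and the seven P values are made up to mirror the number of prespecified contrasts.

```python
def holm_adjust(p_values):
    """Return Holm step-down adjusted P values in the original order.

    The smallest raw P value is multiplied by m, the next by m - 1, and so on;
    a running maximum keeps the adjusted values monotone, and each is capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Made-up raw P values for seven contrasts (illustration only).
raw = [0.001, 0.04, 0.03, 0.20, 0.0005, 0.012, 0.60]
adj = holm_adjust(raw)

for raw_p, adj_p in zip(raw, adj):
    print(f"raw={raw_p:.4f}  holm={adj_p:.4f}  significant={adj_p < 0.05}")
```

Because the multiplier is already folded into each adjusted value, the adjusted P values can be read against 0.05 directly, as described above.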

Supplementary Material

Appendix 01 (PDF)

Acknowledgments

We wish to thank Dylan Small for providing statistical guidance, Mohan Balachandran and Michael Kopinsky for overseeing the study build and data integration at Way to Health, and Jessica Hemmons for assisting with the design of participant educational materials. This work was supported by the Federal Highway Administration Exploratory Advanced Research Program, Contract No. 693JJ31750012 (M.K.D.); National Institutes of Health grant K23HD090272001 (M.K.D.); and an Abramson Family Foundation philanthropic grant (M.K.D.). Progressive Casualty Insurance Company provided in-kind, cost-shared services.

Author contributions

J.P.E., R.A.X., W.C.E., C.C.M., F.K.W., R.M.R., K.G.V., I.J.B., D.J.W., S.D.H., and M.K.D. designed research; J.P.E., N.K., D.A.-R., A.L., K.L.G., W.J.F., and M.K.D. performed research; R.A.X. and I.J.B. analyzed data; and J.P.E., W.C.E., C.C.M., F.K.W., R.M.R., K.G.V., S.D.H., and M.K.D. wrote the paper.

Competing interests

While involved with this research, W.J.F., K.L.G., and W.C.E. were employed by The Progressive Corporation and, as employees, owned stock in the company. K.G.V. is part owner of a consulting company, VALHealth. F.K.W. has an intellectual property and financial interest in Diagnostic Driving, Inc., and serves as its chief scientific advisor. The Children’s Hospital of Philadelphia (CHOP) has an institutional interest in Diagnostic Driving, Inc. Diagnostic Driving, Inc., created a virtual driving assessment system that is used in Ohio at licensing centers and in driving schools to assess driver training programs; it was not used in this study. This potential conflict of interest is managed under a conflict-of-interest management plan from CHOP and the University of Pennsylvania.

Footnotes

This article is a PNAS Direct Submission.

*For all participants with intervention period driving data (n = 1,523), the unadjusted difference between arm 4 and control was 54 s/h, and the unadjusted difference between arm 5 and control was 90 s/h.

Data, Materials, and Software Availability

Requests for access to deidentified data that support the findings may be made to the corresponding author. Data may only be used for non-commercial research purposes.

References

- 1. NCSA, Distracted driving 2019 (National Highway Traffic Safety Administration, 2021).

- 2. Klauer S. G., et al., Distracted driving and risk of road crashes among novice and experienced drivers. N. Engl. J. Med. 370, 54–59 (2014).

- 3. Dingus T. A., et al., Driver crash risk factors and prevalence evaluation using naturalistic driving data. Proc. Natl. Acad. Sci. U.S.A. 113, 2636–2641 (2016).

- 4. Dingus T. A., et al., The prevalence of and crash risk associated with primarily cognitive secondary tasks. Saf. Sci. 119, 98–105 (2019).

- 5. New Progressive Data Shows Putting the Phone Down Correlates to Lower Insurance Claims (Progressive Insurance, 2019). https://www.prnewswire.com/news-releases/new-progressive-data-shows-putting-the-phone-down-correlates-to-lower-insurance-claims-300780370.html. Accessed 20 November 2023.

- 6. Distracted Driving | Cellphone Use, in NCSL (2023). https://www.ncsl.org/transportation/distracted-driving-cellphone-use. Accessed 20 November 2023.

- 7. Cheng C., Do cell phone bans change driver behavior? Econ. Inquiry 53, 1420–1436 (2015).

- 8. Oviedo-Trespalacios O., Getting away with texting: Behavioural adaptation of drivers engaging in visual-manual tasks while driving. Transp. Res. Part A: Policy Pract. 116, 112–121 (2018).

- 9. Rudisill T. M., Baus A. D., Jarrett T., Challenges of enforcing cell phone use while driving laws among police: A qualitative study. Injury Prevention 25, 494–500 (2019).

- 10. Auto Insurance Customer Satisfaction Plummets as Rates Continue to Surge, J.D. Power Finds (2023). https://www.jdpower.com/sites/default/files/file/2023-07/2023007%20U.S.%20Auto%20Insurance%20Study.pdf. Accessed 20 November 2023.

- 11. Stevenson M., et al., The effect of telematic based feedback and financial incentives on driving behaviour: A randomised trial. Accid. Anal. Prev. 159 (2021).

- 12. Delgado M. K., et al., Distracted driving decreased after receiving comparative feedback and loss-framed incentives from an auto insurer (JAMA Network Open, 2024).

- 13. Deci E. L., Ryan R. M., Self-determination theory: A macrotheory of human motivation, development, and health. Canad. Psychol. 49, 182–185 (2008).

- 14. Duckworth A. L., Gendler T. S., Gross J. J., Situational strategies for self-control. Perspect. Psychol. Sci. 11, 35–55 (2016).

- 15. Hajek P., et al., A randomized trial of E-cigarettes versus nicotine-replacement therapy. N. Engl. J. Med. 380, 629–637 (2019).

- 16. Benedetti M. H., et al., Talking on hands-free and handheld cellphones while driving in association with handheld phone bans. J. Safety Res. (2022).

- 17. Lally P., et al., How are habits formed: Modelling habit formation in the real world. Eur. J. Soc. Psychol. 40, 998–1009 (2010).

- 18. Buyalskaya A., et al., What can machine learning teach us about habit formation? Evidence from exercise and hygiene. Proc. Natl. Acad. Sci. U.S.A. 120 (2023).

- 19. Locke E. A., Latham G. P., Building a practically useful theory of goal setting and task motivation - A 35-year odyssey. Am. Psychol. 57, 705–717 (2002).

- 20. Nolan J. M., et al., Normative social influence is underdetected. Pers. Soc. Psychol. Bull. 34, 913–923 (2008).

- 21. Miller R. L., Wozniak W., Counter-attitudinal advocacy: Effort vs. self-generation of arguments. Curr. Res. Soc. Psychol. 6, 46–55 (2001).

- 22. Burger J. M., The foot-in-the-door compliance procedure: A multiple-process analysis and review. Pers. Soc. Psychol. Rev. 3, 303–325 (1999).

- 23. Chokshi N. P., et al., Loss-framed financial incentives and personalized goal-setting to increase physical activity among ischemic heart disease patients using wearable devices: The ACTIVE REWARD randomized trial. J. Am. Heart Assoc. 7, e009173 (2018).

- 24. Bonezzi A., Brendl C. M., De Angelis M., Stuck in the middle: The psychophysics of goal pursuit. Psychol. Sci. 22, 607–612 (2011).

- 25. Li D., Hawley Z., Schnier K., Increasing organ donation via changes in the default choice or allocation rule. J. Health Econ. 32, 1117–1129 (2013).

- 26. Gollwitzer P. M., Implementation intentions - Strong effects of simple plans. Am. Psychol. 54, 493–503 (1999).

- 27. Wang G. X., Wang Y., Gai X. S., A meta-analysis of the effects of mental contrasting with implementation intentions on goal attainment. Front. Psychol. 12, 565202 (2021).

- 28. Oviedo-Trespalacios O., Truelove V., King M., “It is frustrating to not have control even though I know it’s not legal!”: A mixed-methods investigation on applications to prevent mobile phone use while driving. Accid. Anal. Prev. 137, 105412 (2020).

- 29. Bowen S., Marlatt A., Surfing the urge: Brief mindfulness-based intervention for college student smokers. Psychol. Add. Behav. 23, 666–671 (2009).

- 30. Stawarz K., Cox A. L., Blandford A., “Beyond Self-Tracking and Reminders: Designing Smartphone Apps That Support Habit Formation” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (Seoul, Republic of Korea, 2015), pp. 2653–2662.

- 31. Bai S. R., Hew K. F., Huang B. Y., Does gamification improve student learning outcome? Evidence from a meta-analysis and synthesis of qualitative data in educational contexts. Educ. Res. Rev. 30 (2020).

- 32. Johnson D., et al., Gamification for health and wellbeing: A systematic review of the literature. Internet Interv. 6, 89–106 (2016).

- 33. Patel M. S., et al., Effect of a game-based intervention designed to enhance social incentives to increase physical activity among families: The BE FIT randomized clinical trial. JAMA Intern. Med. 177, 1586–1593 (2017).

- 34. Dai H., Milkman K. L., Riis J., The fresh start effect: Temporal landmarks motivate aspirational behavior. Manage. Sci. 60, 2563–2582 (2014).

- 35. Patel M. S., et al., Effectiveness of behaviorally designed gamification interventions with social incentives for increasing physical activity among overweight and obese adults across the United States: The STEP UP randomized clinical trial. JAMA Intern. Med. 179, 1624–1632 (2019).

- 36. Vlaev I., et al., Changing health behaviors using financial incentives: A review from behavioral economics. BMC Public Health 19, 1059 (2019).

- 37. Kim W., Anorve V., Tefft B. C., American Driving Survey: 2014-2017 (AAA Foundation for Traffic Safety, Washington, D.C., 2019).

- 38. Koffarnus M. N., Bickel W. K., A 5-trial adjusting delay discounting task: Accurate discount rates in less than one minute. Exp. Clin. Psychopharmacol. 22, 222–228 (2014).

- 39. Ebert J. P., et al., Validation of a smartphone telematics algorithm for classifying driver trips. Transp. Res. Interdiscip. Perspect. 25 (2024).
