Abstract

Pharmacovigilance (PV) is a data-driven process that aims to identify medicine safety issues as early as possible by processing suspected adverse event (AE) reports and extracting health data. The PV case processing cycle starts with data collection and data entry, followed by initial checks for completeness and validity, coding, medical assessment of causality, expectedness, severity, and seriousness, report submission, quality checking, and finally data storage and maintenance. This requires a workforce with technical expertise and is therefore expensive and time-consuming. The number of suspected AE reports in PV databases has grown exponentially owing to smarter collection and reporting of individual case safety reports and a wider reporting base resulting from increased awareness and participation by health-care professionals and patients. Processing this enormous volume and variety of data, making sensible use of it, and separating “needles from the haystack” is a challenge for key stakeholders such as pharmaceutical firms, regulatory authorities, medical and PV experts, and National Pharmacovigilance Program managers. Artificial intelligence (AI) in health care has been very impressive in specialties that rely heavily on the interpretation of medical images. Similarly, there is growing interest in adopting AI tools to complement and automate the PV process. The technology can certainly complement the routine, repetitive, manual tasks of case processing and boost efficiency; however, its implementation across the PV lifecycle and its practical impact raise several questions and challenges. Full automation of the PV system is a double-edged sword and needs to consider two aspects: people and processes. The focus should be a collaborative approach in which technical expertise (people) is combined with intelligent technology (processes) to augment human talent, meet the objective of the PV system, and benefit all stakeholders. AI technology should enhance human intelligence rather than substitute for human experts. What is important is to ensure that AI brings more benefits than challenges to PV. This review describes the benefits, the outstanding scientific, technological, and policy issues, and the maturity of AI tools for full automation in the context of the Indian health-care system.

Keywords: Artificial intelligence, individual case safety reports processing, pharmacovigilance

INTRODUCTION

Artificial intelligence (AI) marks the dawn of a new era. Almost unnoticed, it has become an integral part of our personal lives, from home to street, and the technology now pervades scientific research, the health-care system, and pharmacovigilance (PV). The objective of PV is to reduce the incidence and risk of harm associated with the use of medicines as early as possible by processing suspected adverse reaction reports and extracting health data to identify drug safety signals. Worldwide, postmarketing safety reports of medical products are collected through spontaneous reporting systems in a structured and systematic way by means of individual case safety reports (ICSRs). Electronic health-care records, periodic safety update reports, published medical literature, registries, and pharmacoepidemiology studies are complementary data sources for routine PV practice, although they largely consist of unstructured text.

NEED OF ARTIFICIAL INTELLIGENCE IN PHARMACOVIGILANCE

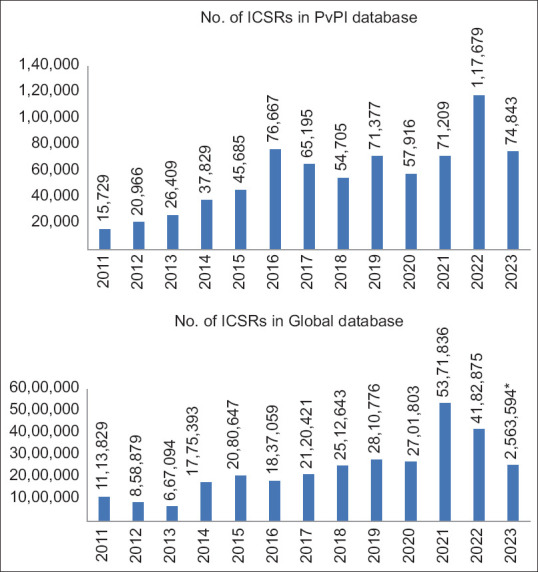

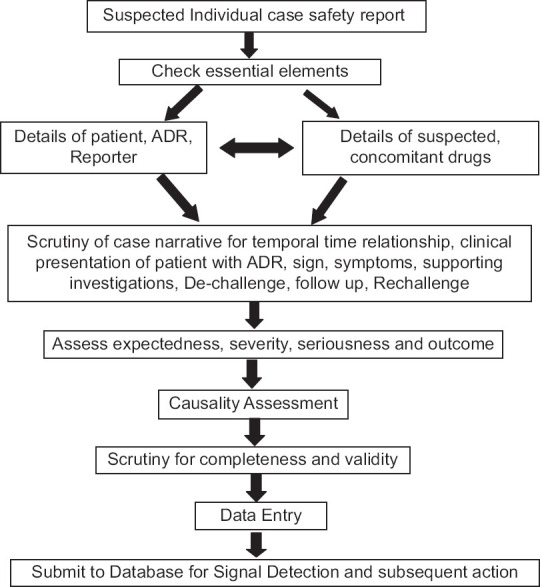

There has been exponential growth in the number of suspected adverse event (AE) reports in the PV database [Figure 1]. Processing this enormous volume and variety of data sources, making sensible use of it, and separating “needles from the haystack” is a challenge for key stakeholders such as pharmaceutical firms, regulatory authorities, medical and PV experts, and National Pharmacovigilance Program managers. Conventionally, processing an ICSR requires certain essential elements (details of the patient, reporter, adverse reaction, suspected and concomitant medications, and outcome). In addition, each ICSR is evaluated for the expectedness of the AE as per the prescribing information leaflet, the likelihood of a causal relationship, and severity and seriousness criteria, and is finally scrutinized for completeness and validity before regulatory submission. Importantly, this encompasses manual tasks along with human cognition [Figure 2]. It therefore needs a workforce with technical expertise and is expensive and time-consuming. To cope with the increased workload, there has been a lot of excitement and enthusiasm about adopting AI technology to automate PV.

Figure 1.

The number of individual case safety reports received by the Pharmacovigilance Program of India (PvPI) and the WHO global database has increased dramatically in the past several years. Source: PvPI, IPC, MoHFW. *Data as on October 10, 2023. PvPI = Pharmacovigilance Program of India, ICSR = Individual case safety report, IPC = Indian Pharmacopoeia Commission, MoHFW = Ministry of Health and Family Welfare

Figure 2.

Individual case safety report case processing and evaluation in pharmacovigilance. ICSR = Individual case safety report, ADR = Adverse drug reaction

ARTIFICIAL INTELLIGENCE

Background

AI is a branch of computer science. An AI system contains a database of facts and uses algorithms to make machines imitate human behavior that requires understanding, creative composition, speech recognition, and decision-making.[1] The machine acquires human-like intelligence by learning from and training on a huge volume of robust datasets, just as a child learns from teaching and from the environment and becomes an intelligent human being. The technology entails deep learning and natural language processing techniques built on neural networks (analogous to neurons in the human brain) that teach computers to process data in a brain-like way to solve problems requiring human understanding and reasoning.[2] Interestingly, the computer creates an expert algorithm that can read structured and unstructured text, extract information from free text, and recognize simple and complex patterns in pictures and text to produce insights for interpreting images and predicting medicine safety issues.[3] Fundamentally, the predictions made by these machines depend on the training datasets and models, which determine the performance and outcome of AI. Remarkable success has been achieved by AI in medical specialties that depend on the interpretation of images, such as radiology, ophthalmology, and pathology.[4]

IMPLEMENTATION OF ARTIFICIAL INTELLIGENCE TOOLS IN PHARMACOVIGILANCE

Opportunities and benefits

AI tools have been proposed as beneficial for manual, repetitive, and routine tasks such as data entry, identification of AEs, drug–drug interactions, and subtle data patterns, and review of single cases.[5] In addition, AI can convert unstructured, free-text drug safety data and handwritten documents into a machine-readable format.[6,7]

Further, such tools can automate Medical Dictionary for Regulatory Activities (MedDRA) coding, check for duplicate reports, categorize reports as physician or consumer reports, identify serious reports, and exclude nonserious reports.[8] Interestingly, an AI platform can also analyze unstructured data, extract the text, and identify relevant information to build clinically robust auto-narratives and identify patterns within structured and unstructured narratives, obviating the need for routine review of single cases and manual identification and validation of signals.[8] Furthermore, it can extract ICSR information from various published documents such as medical literature, case reports, medication reviews on social media, free-text clinical notes in electronic health records, and discharge summaries.[9,10] A recent survey reported that AI tools process data very fast, speed up computations that were not previously feasible, and save scientists time and money.[11] With large amounts of drug safety data being stored electronically, the adoption of AI tools could reduce the effort, time, and cost of case processing, improve data quality, and possibly be a game changer for PV activities [Table 1].
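The duplicate check mentioned above can be illustrated with a minimal sketch. It assumes that each report is a plain dictionary and that two reports are candidate duplicates when a handful of normalized key fields match exactly. The field names (`patient_initials`, `age`, `sex`, `drug`, `event`, `onset_date`) and the sample reports are hypothetical, and production systems typically rely on probabilistic matching rather than exact keys.

```python
from collections import defaultdict

# Hypothetical key fields; a real ICSR schema would differ.
KEY_FIELDS = ("patient_initials", "age", "sex", "drug", "event", "onset_date")


def report_key(report):
    """Build a comparison key from lower-cased, whitespace-trimmed key fields."""
    return tuple(str(report.get(field, "")).strip().lower() for field in KEY_FIELDS)


def find_candidate_duplicates(reports):
    """Group report IDs that share the same key; return groups with more than one ID."""
    groups = defaultdict(list)
    for report in reports:
        groups[report_key(report)].append(report["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


# Illustrative reports only (not real data).
reports = [
    {"id": "R1", "patient_initials": "AB", "age": 54, "sex": "F",
     "drug": "Amoxicillin", "event": "Rash", "onset_date": "2023-05-01"},
    {"id": "R2", "patient_initials": "ab", "age": 54, "sex": "f",
     "drug": "amoxicillin ", "event": "rash", "onset_date": "2023-05-01"},
    {"id": "R3", "patient_initials": "CD", "age": 31, "sex": "M",
     "drug": "Ibuprofen", "event": "Nausea", "onset_date": "2023-06-12"},
]

# R1 and R2 differ only in letter case and stray whitespace, so they share a key.
candidate_groups = find_candidate_duplicates(reports)
```

In this sketch the flagged groups are only candidates; a PV professional would still confirm whether the reports describe the same case.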

Table 1.

Opportunities and challenges of adoption of artificial intelligence-based pharmacovigilance

| Opportunities | Challenges |
|---|---|
| Reduce the burden of repetitive and routine manual data entry tasks | Complete automation of the PV system to recognize complex patterns in heterogeneous data may be misleading and inaccurate for decision-making |
| Automate MedDRA coding | Availability of robust and valid training datasets covering all diseases and therapeutic areas, in sufficient sample size and from different sources, for accuracy and quality assessment in real-world settings |
| Convert unstructured, free-text, handwritten documents into machine-readable format and extract the required information | An algorithm lacking high sensitivity would miss potentially important AEs, and one lacking high specificity would identify false-positive reports, creating background noise |
| Extract ICSR information from various published documents and electronic health records | Variation in names of drugs and diseases and in descriptions of adverse drug effects, diversity and difficulties in local languages, ambiguities, and lack of essential information may cause technical challenges for data processing and labeling |
| Build clinically robust auto-narratives, obviating the need for routine review of single cases | Privacy and ethical concerns, as data used without individuals’ consent can breach trust in the doctor–patient relationship |
| Identify ADRs and subtle data patterns within structured and unstructured narratives | Data infrastructure to establish a comprehensive database for our patient population |
| Check for duplicate reports, categorize reports as physician or consumer reports, identify serious reports, and exclude nonserious reports | Robust research and development infrastructure, educational infrastructure, and financial support from the government to invest in infrastructure, research, and training |
| Reduce the time, effort, and cost of case processing | Regulations to ensure validation and accuracy of AI tools, and to balance the commercial interest and transparency of technology firms against patient safety and well-being |

MedDRA = Medical Dictionary for Regulatory Activities, ICSR = Individual case safety report, ADRs = Adverse drug reactions, PV = Pharmacovigilance, AEs = Adverse events, AI = Artificial intelligence

CHALLENGES OF ADOPTION OF ARTIFICIAL INTELLIGENCE IN PHARMACOVIGILANCE

Despite AI being a promising tool, its implementation and practical impact raise several questions and challenges [Table 1]. Will qualitative assessment by an AI algorithm to determine causality and safety signals (which requires clinical evaluation plus expert opinion) be as good as that of human experts for decision-making? Can the AI algorithm be integrated across the PV lifecycle to identify black swans? Will it completely replace PV professionals? There seem to be no simple, straightforward answers. Let us examine these questions from the PV case processing and application perspectives in the context of the Indian health-care system.

Scientific challenges

Interpretation and prediction

AE case processing in PV is a complex task that involves multiple decision-making points and adjudication within a regulated and audited system. Clinical evaluation and the clinician’s perspective have a definite role in causality assessment and signal detection. Causality assessment of an AE principally depends on expert judgment and global introspection.[12,13] Medical science and therapeutics are complex and ever-changing. The assessment of ICSRs is not a standardized or homogeneous process that can simply be computerized. In fact, variations in the clinical presentation of patients and adverse effects typically require human intervention and clinical evaluation for decision-making. The central question is whether current AI tools are strong enough to determine temporality and causal association, predict potential drug–drug interactions, and flag safety alerts in real-world data processing while ensuring generalizability and quality performance.[2] Huysentruyt et al. reported that full automation of PV by AI is still under development with respect to harmonization and best practices.[14] Applying AI tools to heterogeneous, complex data for full automation is inappropriate and risky, because complete automation of the PV system to recognize these complex patterns may produce misleading and inaccurate results. This leads to another question, that of accountability. If an AI tool makes a mistake despite being thoroughly validated, who will be held responsible: the developer, the technology firm, or the regulator? Importantly, AI technology must be flexible and recognize the need for expert judgment in the assessment of complex, difficult case scenarios.

Moreover, researchers have warned against the naïve use of AI tools in science, as this can lead to mistakes and false positives, resulting in a waste of time and resources.[11] Remarkably, when the Bayesian approach to automated disproportionality analysis for data mining was introduced, the Uppsala Monitoring Centre, which provides technical support and guidance to the WHO Program for International Drug Monitoring, emphasized that it should not replace detailed clinical evaluation.[13] India, being one of the active member countries of the WHO Program for International Drug Monitoring, follows the same operational strategy. The use of modern technology and tools cannot undermine the importance of clinical evaluation and the human touch in PV data processing. Full automation of the PV system is a double-edged sword and needs to consider two aspects: people and processes. The focus should be a collaborative approach in which technical expertise (people) is combined with intelligent technology (processes) to augment human talent, meet the objective of the PV system, and benefit all stakeholders.
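To make the idea of disproportionality analysis concrete, the sketch below computes a shrunken observed-to-expected ratio on a log2 scale, in the spirit of the information component used in Bayesian data mining. It is a simplified illustration and not the Uppsala Monitoring Centre’s implementation; the function name, the shrinkage constant of 0.5, and the example counts are assumptions made for this example.

```python
import math


def information_component(n_drug_event, n_drug, n_event, n_total, shrinkage=0.5):
    """Return log2((observed + k) / (expected + k)) for one drug-event pair.

    observed: number of reports mentioning both the drug and the event
    expected: count expected if drug and event were reported independently
    """
    expected = n_drug * n_event / n_total
    return math.log2((n_drug_event + shrinkage) / (expected + shrinkage))


# Illustrative counts only (not real data).
ic_elevated = information_component(n_drug_event=20, n_drug=200, n_event=500, n_total=100_000)
ic_neutral = information_component(n_drug_event=1, n_drug=100, n_event=100, n_total=10_000)

# A positive value means the pair is reported more often than expected.
# It marks the pair for clinical review and does not establish causality.
needs_review = ic_elevated > 0
```

A positive score in such a sketch is a prompt for detailed clinical evaluation of the underlying cases, which is consistent with the point that statistical screening should not replace expert assessment.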

Technological challenges

Training datasets and validation

The key to this impressive technology is the training datasets used to generate AI algorithms. The dataset has to be vast and diverse, drawn from different sources, covering all types of reports, and representative of the world’s population to make the algorithm valid and robust in real-world settings.[15] This requires integration, linkage, annotation, labeling, and maintenance of datasets to teach and train the computers right from concept to implementation. Subsequently, the trained model needs to be tested and validated before it is applied to real-world data.

India has a well-established PV system and database. However, the database does not represent the actual AEs occurring in the real world because of underreporting and selective reporting.[16] For a robust dataset, spontaneous AE reports need to be linked to the electronic health records of public and private sector hospitals, outpatient records, general practice records, disease registries, and published medical literature to provide high-quality evidence for causal association and signal detection. Unfortunately, the majority of public and private hospitals across India use traditional systems for medical records, and the quality, completeness, and retrieval of these records could be a challenge. Furthermore, the fragmented health-care system in India and its differing administrative arrangements will make it harder to integrate and link data from different sources.[16] A few superspecialty hospitals in India have begun preparing disease-specific registries, although these require substantial effort to ensure quality, completeness, and linkage.[17]

Ready-made datasets prepared by technology firms in well-developed countries cannot be applied to the Indian patient population because of ethnic variation and may result in bias and erroneous predictions. Similarly, a training dataset generated from a single source, whether or not it represents an underserved or marginalized group of patients, can introduce systematic bias along with the risk of inequity and injustice.[18,19] Unless the data are comprehensive, covering the public and private health-care sectors, representing all diseases and therapeutic areas, and including all patient populations and ethnic groups in sufficient numbers, the predictions of an AI tool might be misleading and inaccurate.

The algorithm itself should be sensitive and specific and should show a high degree of consistency, validity, and reproducibility in flagging potential drug safety signals. The objective of PV is not only to find AEs but also to list contingent and contributory factors and identify statistical outliers. A small number of patients with harmful experiences can together give a vital message about causality and signals that can prevent further damage to at-risk patients on exposure. An algorithm without high sensitivity would miss potentially important AEs and safety signals. An algorithm without high specificity would identify false-positive reports, creating background noise and thereby making signal detection difficult. Moreover, with advancing medical science and therapeutics, the algorithm will need to be regularly updated, retrained, and revalidated. However, wide variation in the performance of AI algorithms has been reported in the published literature for case processing, determination of seriousness, and causality assessment.[20] In fact, the algorithm should be dynamic, flexible, and intelligent enough to identify complex cases that require human judgment and to add value to qualitative assessment. It would also be interesting and useful to have algorithms specially designed for specific problems such as QTc prolongation caused by noncardiac drugs, drug-induced alteration of liver function, and Stevens–Johnson syndrome.
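The two performance measures discussed above follow directly from the counts of correct and incorrect flags. The sketch below is a minimal illustration that assumes a reference standard (for example, expert review) is available for each report; the function name and the counts are hypothetical, and both denominators are assumed to be nonzero.

```python
def sensitivity_specificity(true_pos, false_neg, true_neg, false_pos):
    """Compute sensitivity and specificity from confusion-matrix counts."""
    # Sensitivity: share of reports with a true AE that the algorithm flags.
    sensitivity = true_pos / (true_pos + false_neg)
    # Specificity: share of reports without an AE that are correctly left unflagged.
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


# Illustrative counts only: 100 reports with a true AE and 900 without.
sens, spec = sensitivity_specificity(true_pos=80, false_neg=20, true_neg=810, false_pos=90)
```

With these illustrative counts, the 20 false negatives are the missed AEs and the 90 false positives are the background noise that reviewers would have to sift through.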

Technical considerations

Variation in the names of drugs and diseases and in the description of adverse drug effects, diversity and difficulties in local languages, ambiguities, and lack of information on self-medication (which is commonly practiced) may cause technical challenges for data processing, labeling, and integration. One of the major limitations is language ambiguity, that is, the multiple meanings or implications of a medical word. For example, “skin rash” is an extremely common condition with more than 20 potential causes, including drugs. However, details of its location, description, and associated signs and symptoms are required to define, distinguish, and diagnose it. If PV data from different places use different definitions of “skin rash” as an AE, the decision and outcome are likely to be erroneous. Similar uncertainty may arise when different medical terms are used to describe the same reaction, such as “skin rash” and “erythema,” or “hypotension” and “orthostatic hypotension.” The AI tool may be familiar with the different meanings of a medical term but may fail to recognize the precise meaning in the context in which it occurs. This indicates the need for case definitions to ensure accuracy when descriptions and interpretations differ among reporters.[21] Similarly, the use of social media safety data has its own limitations due to nonstandard and nonmedical terminology, slang, wrong spellings, abbreviations, and missing important information.[22]
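The terminology problem described above can be sketched as a small normalization step that maps a reporter’s verbatim wording to a preferred term and routes anything unrecognized to a human reviewer. The synonym table below is hypothetical and hand-written for illustration; it is not MedDRA, and the function names are assumptions for this example.

```python
# Hypothetical synonym table; a real system would rely on a controlled
# vocabulary such as MedDRA rather than this hand-written dictionary.
SYNONYMS = {
    "skin rash": "rash",
    "skin rashes": "rash",
    "rashes": "rash",
    "low blood pressure": "hypotension",
    "low bp": "hypotension",
}
PREFERRED_TERMS = set(SYNONYMS.values())


def normalize_term(verbatim):
    """Map a reporter's verbatim term to a preferred term, or None if unknown."""
    cleaned = " ".join(verbatim.lower().split())  # lower-case and collapse whitespace
    if cleaned in PREFERRED_TERMS:
        return cleaned
    return SYNONYMS.get(cleaned)


def triage(verbatim_terms):
    """Split terms into auto-coded (verbatim, preferred) pairs and terms needing review."""
    coded, needs_review = [], []
    for term in verbatim_terms:
        preferred = normalize_term(term)
        if preferred is None:
            needs_review.append(term)
        else:
            coded.append((term, preferred))
    return coded, needs_review


coded, needs_review = triage(["Skin  Rashes", "low BP", "giddiness on standing"])
```

The table deliberately does not merge clinically distinct terms such as “hypotension” and “orthostatic hypotension”; in this sketch, wording that falls outside the table is left for expert coding rather than guessed.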

Ethical concerns

A contentious issue is the access to, ownership of, and use of individual patient data. In the absence of adequate regulations and consent, such use can compromise patient privacy, raise ethical concerns, and breach trust in the doctor–patient relationship.[23] Patients’ personal data, sensitive information, and images should be used for research only with consent, should be anonymized to obscure identity, and should be handled responsibly.
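One common building block for obscuring identity is to drop direct identifiers and replace the patient identifier with a keyed hash. The sketch below uses Python’s standard `hmac` and `hashlib` modules; the record layout, the list of direct identifiers, and the key are hypothetical. This is pseudonymization under stated assumptions rather than full anonymization, since the remaining fields may still allow re-identification in small populations.

```python
import hashlib
import hmac


def pseudonymize(patient_id, secret_key):
    """Return a keyed SHA-256 digest that stands in for the patient identifier."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record, secret_key, direct_identifiers=("name", "phone", "address")):
    """Drop direct identifiers and replace the patient ID with a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in direct_identifiers}
    cleaned["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned


# Hypothetical record and key, for illustration only.
record = {"patient_id": "HOSP-0042", "name": "A. Patient", "phone": "0000000000",
          "age": 54, "drug": "amoxicillin", "event": "rash"}
safe_record = strip_identifiers(record, secret_key=b"replace-with-a-managed-secret")
```

Because the same key always yields the same pseudonym, reports from the same patient can still be linked for case processing without exposing the original identifier.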

Regulatory concerns

Interestingly, all machines or computers that use AI technology for clinical prediction, diagnosis, prevention, and treatment of diseases are considered medical devices, known as Clinical Decision Support tools, and are regulated by the US Food and Drug Administration (FDA).[24] However, a recent case series reported that the evidence supporting FDA approval of medical devices used in critical care failed to meet expectations and standards for independent validation and clinical efficacy assessment.[25] This is a matter of concern and justifies the need for preapproval studies of the validity, efficacy, and safety of AI-based devices developed before the technology was advanced with statistical learning methods.[25] In India, the Indian Council of Medical Research has recently introduced Ethical Guidelines for Application of AI in Biomedical Research and Health; however, there are no specific laws regulating AI.

Along similar lines, the adoption of AI technology to automate the PV system needs to be regulated for validation and quality. Although Indian regulatory authorities have yet to specify a regulatory framework for the use of AI, regulations are essential to ensure validation and accuracy for application in real-world settings and specific patient populations. Moreover, regulations are crucial to balance the commercial interests and transparency of technology firms against patient safety and well-being for medical professionals.[26]

Importantly, as new data become available and the technology is updated over time, the regulatory framework needs to consider the process of certification and approval for adaptive AI systems.

Finally, building the foundation for AI models is very complex and resource-intensive. It requires a robust research and development infrastructure, an educational infrastructure for training health-care professionals, and a data infrastructure to establish a comprehensive database for our patient population. All of this will need investment in infrastructure, research, and training, along with financial support from the government, for sustainable AI-based PV systems. Without investment in research and development, the country will have to depend on AI tools developed in resource-rich countries, and the costs could be exorbitant.

CONCLUSION

AI in health care has been very impressive for well-defined, discrete tasks such as the interpretation of medical images; however, its application to heterogeneous data is complicated. The application of AI tools to the PV system has the potential to minimize the burden of manual workload and boost efficiency. However, it cannot replace or overtake the importance of medical review and the judgment of trained PV professionals for final adjudication of causality and signal detection. To date, full automation of the PV system comes with risks and several challenges. It requires more testing, validation, and approval from medical professionals and regulators. AI experts do not fully appreciate the intricacy and complexity of interpreting medical data, nor do medical professionals fully comprehend the operations of AI technology. AI technology should enhance human intelligence rather than substitute for human experts. What is important is to ensure that AI brings more benefits than challenges to PV.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

REFERENCES

- 1. Aronson JK. Artificial intelligence in pharmacovigilance: An introduction to terms, concepts, applications, and limitations. Drug Saf. 2022;45:407–18. doi: 10.1007/s40264-022-01156-5.
- 2. Ibrahim H, Abdo A, El Kerdawy AM, Eldin AS. Signal detection in pharmacovigilance: A review of informatics-driven approaches for the discovery of drug-drug interaction signals in different data sources. Artif Intell Life Sci. 2021;1:100005.
- 3. Bate A, Luo Y. Artificial intelligence and machine learning for safe medicines. Drug Saf. 2022;45:403–5. doi: 10.1007/s40264-022-01177-0.
- 4. Rajpurkar P, Chen E, Banerjee O, Topol EJ. AI in health and medicine. Nat Med. 2022;28:31–8. doi: 10.1038/s41591-021-01614-0.
- 5. Bhatt A. Artificial intelligence in managing clinical trial design and conduct: Man and machine still on the learning curve? Perspect Clin Res. 2021;12:1–3. doi: 10.4103/picr.PICR_312_20.
- 6. Bate A, Hobbiger SF. Artificial intelligence, real-world automation and the safety of medicines. Drug Saf. 2021;44:125–32. doi: 10.1007/s40264-020-01001-7.
- 7. Henry S, Buchan K, Filannino M, Stubbs A, Uzuner O. 2018 n2c2 shared task on adverse drug events and medication extraction in electronic health records. J Am Med Inform Assoc. 2020;27:3–12. doi: 10.1093/jamia/ocz166.
- 8. Sujith T, Chakradhar T, Sravani M, Sowmini K. Aspects of utilization and limitations of artificial intelligence in drug safety. Asian J Pharm Clin Res. 2021;14:34–9.
- 9. Luo Y, Thompson WK, Herr TM, Zeng Z, Berendsen MA, Jonnalagadda SR, et al. Natural language processing for EHR-based pharmacovigilance: A structured review. Drug Saf. 2017;40:1075–89. doi: 10.1007/s40264-017-0558-6.
- 10. Negi K, Pavuri A, Patel L, Jain C. A novel method for drug-adverse event extraction using machine learning. Inf Med Unlocked. 2019;17:100190.
- 11. Van Noorden R, Perkel JM. AI and science: What 1,600 researchers think. Nature. 2023;621:672–5. doi: 10.1038/d41586-023-02980-0.
- 12. Agbabiaka TB, Savović J, Ernst E. Methods for causality assessment of adverse drug reactions: A systematic review. Drug Saf. 2008;31:21–37. doi: 10.2165/00002018-200831010-00003.
- 13. Ralph Edwards I. Causality assessment in pharmacovigilance: Still a challenge. Drug Saf. 2017;40:365–72. doi: 10.1007/s40264-017-0509-2.
- 14. Huysentruyt K, Kjoersvik O, Dobracki P, Savage E, Mishalov E, Cherry M, et al. Validating intelligent automation systems in pharmacovigilance: Insights from good manufacturing practices. Drug Saf. 2021;44:261–72. doi: 10.1007/s40264-020-01030-2.
- 15. Mockute R, Desai S, Perera S, Assuncao B, Danysz K, Tetarenko N, et al. Artificial intelligence within pharmacovigilance: A means to identify cognitive services and the framework for their validation. Pharmaceut Med. 2019;33:109–20. doi: 10.1007/s40290-019-00269-0.
- 16. Desai M. Pharmacovigilance and spontaneous adverse drug reaction reporting: Challenges and opportunities. Perspect Clin Res. 2022;13:177–9. doi: 10.4103/picr.picr_169_22.
- 17. Bhardwaj P. National quality registry for India: Need of the hour. Indian J Community Med. 2022;47:157–8. doi: 10.4103/ijcm.ijcm_543_22.
- 18. Larrazabal AJ, Nieto N, Peterson V, Milone DH, Ferrante E. Gender imbalance in medical imaging datasets produces biased classifiers for computer-aided diagnosis. Proc Natl Acad Sci U S A. 2020;117:12592–4. doi: 10.1073/pnas.1919012117.
- 19. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. 2019;366:447–53. doi: 10.1126/science.aax2342.
- 20. Ball R, Dal Pan G. “Artificial Intelligence” for pharmacovigilance: Ready for prime time? Drug Saf. 2022;45:429–38. doi: 10.1007/s40264-022-01157-4.
- 21. Brown EG, Harrion JE. Dictionaries and coding in pharmacovigilance. In: Talbot J, Aronson JK, editors. Stephens’ Detection and Evaluation of Adverse Drug Reactions: Principles and Practice. 6th ed. Oxford: Wiley Blackwell; 2011. pp. 545–72.
- 22. Pappa D, Stergioulas LK. Harnessing social media data for pharmacovigilance: A review of current state of the art, challenges and future directions. Int J Data Sci Anal. 2019;8:113.
- 23. Kumar T, Sharma P, Singha M, Singh J. Big data analytics and pharmacovigilance-an ethical and legal consideration. Curr Trends Diagn Treatment. 2018;2:58–65.
- 24. Lee JT, Moffett AT, Maliha G, Faraji Z, Kanter GP, Weissman GE. Analysis of devices authorized by the FDA for clinical decision support in critical care. JAMA Intern Med. 2023;183:1399–401. doi: 10.1001/jamainternmed.2023.5002.
- 25. Habib AR, Gross CP. FDA regulations of AI-driven clinical decision support devices fall short. JAMA Intern Med. 2023;183:1401–2. doi: 10.1001/jamainternmed.2023.5006.
- 26. Chino Y. AI in medicine: Creating a safe and equitable future. Lancet. 2023;402:503. doi: 10.1016/S0140-6736(23)01668-9.