Abstract

Preventing and treating mental health and substance use problems requires effective, affordable, scalable, and efficient interventions. The multiphase optimization strategy (MOST) framework guides researchers through a phased and systematic process of developing optimized interventions. However, new methods of systematically incorporating information about implementation constraints across MOST phases are needed. We propose that early and sustained integration of community-engaged methods within MOST is a promising strategy for enhancing an optimized intervention's potential for implementation. In this article, we outline the advantages of using community-engaged methods throughout the intervention optimization process, with a focus on the Preparation and Optimization Phases of MOST. We discuss the role of experimental designs in optimization research and highlight potential challenges in conducting rigorous experiments in community settings. We then demonstrate how relying on the resource management principle to select experimental designs across MOST phases is a promising strategy for maintaining both experimental rigor and community responsiveness. We end with an applied example illustrating a community-engaged approach to optimize an intervention to reduce the risk for mental health problems and substance use problems among children with incarcerated parents.

Keywords: multiphase optimization strategy (MOST), experimental, interventions, community-based

Plain Language Summary Title

Strategies for Engaging Communities and Ensuring Research Quality in the Multiphase Optimization Strategy

Plain Language Summary

What is already known about the topic? Interventions must be effective, affordable, scalable, and efficient to be successfully implemented and achieve maximum public health impact. The multiphase optimization strategy is a strategic and phased approach to developing optimized interventions. Community-engaged research has been used to bolster an intervention's potential for implementation.

What does this paper add? The article guides researchers who are employing community-engaged research methods to systematically conduct activities in different phases of the intervention optimization process. The end goal is to create an optimized intervention ready for successful implementation in its intended delivery setting.

What are the implications for practice, research, or policy? Incorporating input from key stakeholders in every phase of the intervention optimization process can enhance the public health impact of community-based interventions for mental health and substance use problems.

Introduction

The multiphase optimization strategy (MOST) is an innovative and principled framework for the systematic development and optimization of multicomponent interventions (Collins, 2018). In MOST, multicomponent interventions are systematically developed and evaluated through a series of phases: Preparation, Optimization, and Evaluation. Researchers using MOST have the explicit goal of identifying an optimized intervention, defined as the combination of components that produces the best-expected outcome within the constraints of the intervention's intended implementation setting(s). Comprehensive overviews of MOST (Collins, 2018) and the application of MOST in various fields have been described in detail elsewhere (see Guastaferro et al., 2021; Wells et al., 2020). MOST can be conceptualized as an implementation-forward framework for developing, improving, or redesigning interventions to be successfully delivered in community settings because of its focus on scalability. An optimized intervention strategically balances effectiveness with affordability and efficiency, as only components demonstrating a meaningful effect in the intended direction are included in the optimized intervention package. In theory, this makes the intervention immediately scalable. However, actual scalability hinges on designing an intervention that addresses the implementation determinants of the setting in which it will be delivered. Although MOST emphasizes identifying these critical factors by establishing the optimization objective as a central task of the Preparation phase, developing systematic methods and processes to inform and refine the optimization objective throughout the intervention optimization process is an area needing further development.

Designing interventions specifically for the people they are intended to benefit and serve is necessary to address the nearly two-decade delay before evidence-based interventions have a public health impact (Balas & Boren, 2000). An intervention is only as effective as its implementation: it must reach the people it is designed to help and fit the settings in which it is delivered to make a demonstrable difference. Many researchers use community-engaged methods to bolster successful implementation of evidence-based interventions in community settings. Although scalability is a key priority for researchers using the MOST framework, few have explicitly incorporated these methods to develop optimized interventions (see examples by O’Hara et al., 2022; Whitesell et al., 2019; Windsor et al., 2021). Across intervention development frameworks, community-engaged methods are most often leveraged in the later stages of intervention development and testing, to evaluate the effectiveness of an intervention in real-world settings or to address implementation determinants, as seen in hybrid effectiveness-implementation studies (Curran et al., 2022) and as illustrated in the NIH Stage Model for Behavioral Intervention Development (Onken et al., 2014). Community-engaged methods are also frequently employed to promote broad dissemination and adoption of effective interventions in community settings (Wallerstein & Duran, 2010). We propose that incorporating community-engaged methods early in the intervention optimization process is a promising approach for designing interventions that have meaningful public health impact.

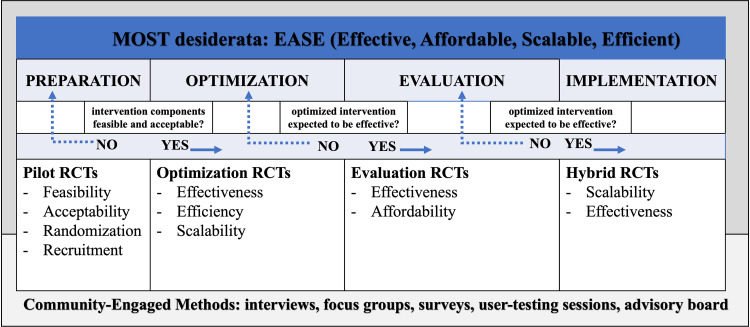

The purpose of this article is to champion the successful integration of community-engaged methods into the MOST framework (see Figure 1). Our ideas build on the work of Whitesell et al. (2019) and Windsor et al. (2021), who initially highlighted the benefits of integrating community-engaged methods within the MOST framework. We discuss how the principle of resource management can guide researchers in balancing the strict experimental standards that the MOST framework demands with the practical challenges common in community-engaged research. Specifically, we discuss how strategic selection of experimental designs, based on (1) key research questions and (2) stage of intervention development, allows researchers to maximize the scientific information gained during early stages of intervention optimization in community settings. We also offer suggestions for addressing barriers that may arise when using community-engaged methods within the MOST framework. Finally, we present an applied example from a project in which experimental designs are strategically selected at various stages of the intervention development process to balance central research questions with practical feasibility.

Figure 1.

An Integrated Model of Community-Engaged Methods and Experimental Designs Across MOST Phases

Note. MOST = multiphase optimization strategy; RCTs = randomized controlled trials.

Benefits of Community-Engaged Methods in Intervention Optimization

In the following subsections, we highlight how community-engaged methods offer unique benefits across MOST phases.

Community-Engaged Methods in the Preparation Phase of MOST

Primary activities in the Preparation Phase include developing and/or refining the conceptual model (i.e., the intervention's “blueprint” or outline of the causal process to be intervened upon), identifying corresponding candidate components (e.g., if behavioral tracking is hypothesized to lead to the desired behavioral change, then a candidate component may be teaching tracking skills), and identifying the optimization objective (i.e., operationalization of resource constraints such as time or money functioning as barriers or facilitators to implementation of the intervention). Community-engaged methods can inform each of these activities.

First, intended beneficiaries hold expertise in their own lived experience, which is necessary for the development of a conceptual model that accurately reflects contextually appropriate risk and protective mechanisms (Barrera & Castro, 2006). This is evident in the development of the conceptual model underpinning the Strong African American Families (SAAF) program (Murry & Brody, 2004). Community partners highlighted the importance of discrimination, racial socialization, and racial pride as culturally specific risk and protective mechanisms. Analyses of SAAF and its subsequent adaptations showed these critical intervention targets explain program effects on the outcomes of interest (e.g., Berkel et al., 2024; Murry et al., 2019). Individuals with lived experience guide expectations about the potential effectiveness, acceptability, feasibility, or appropriateness of candidate components, particularly when these components have not been tested specifically with similar populations (Smith et al., 2018).

Second, input from professionals who understand the context, needs, and priorities of the population the intervention is intended to serve is critical for identifying aspects of the setting that will support or impede successful implementation (e.g., the intervention will only be used if it costs less than $100 per person and takes less than 2 hr of staff time per week). From this perspective, establishing an optimization objective is as crucial as producing content in the early stages of intervention development. Community-engaged methods are critical for developing realistic and useful optimization objectives. Stakeholders may have important insights about prioritizing certain constraints over others (e.g., cost per person vs. implementation complexity). Prioritizing stakeholders’ perspectives in decision-making generally improves implementation success (Ramanadhan et al., 2018). However, researchers use a range of approaches to manage decision-making and power dynamics when conducting community-engaged work (Key et al., 2019). The chosen approach should be clearly defined and agreed upon at the start of the academic-community partnership and revisited as necessary throughout the collaboration.

Community-Engaged Methods in the Optimization Phase of MOST

The primary activities of the Optimization Phase are to conduct an optimization randomized controlled trial (RCT) that experimentally tests the unique effects of individual candidate intervention components and to use those data to identify an intervention that meets the optimization objective. Identifying implementation constraints starts in the Preparation Phase with specification of the optimization objective. However, these constraints need to be revisited in the Optimization Phase because they may change over time and vary across contexts and settings. For example, financial resources available to subsidize treatment may fluctuate with budgets or with changes in leadership or policymakers. A key benefit of optimization RCTs is that their data can be used to re-evaluate decisions (i.e., to select a different optimized set of components) as the needs of the implementation setting change. Community-engaged methods are crucial for applying the optimization objective, which is initially set in the Preparation Phase and revisited as necessary, to the data from the optimization RCT to determine the components of the optimized intervention. Involving community partners in Optimization Phase decision-making helps strike a balance between intervention effectiveness and the implementation priorities of affordability, scalability, and efficiency. Ultimately, an intervention with this balance is more likely to be adopted and sustained in its intended delivery setting.
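To make this decision step concrete, the Python sketch below enumerates combinations of candidate components, discards any combination that violates a per-person cost ceiling of the kind stakeholders might set (compare the $100-per-person example above), and keeps the affordable combination with the largest expected benefit. Everything in it is hypothetical: the component names, costs, effect estimates, and the minimum meaningful effect are invented for illustration, and the sketch assumes additive main effects with no interactions, which a real decision would need to check against the optimization RCT results.

```python
from itertools import combinations

# Hypothetical inputs: per-person delivery cost (USD) and estimated main
# effect (larger = better outcome) for each candidate component.
COSTS = {"A": 40.0, "B": 35.0, "C": 30.0, "D": 20.0}
EFFECTS = {"A": 0.20, "B": 0.15, "C": 0.12, "D": 0.02}


def best_package(costs, effects, max_cost, min_effect=0.05):
    """Pick the affordable combination with the largest summed main effect.

    Assumes effects are additive (no interactions) and drops components
    whose estimated effect falls below a (hypothetical) minimum meaningful
    size. Returns (components, expected_gain, cost_per_person).
    """
    eligible = [c for c in sorted(costs) if effects[c] >= min_effect]
    best = ((), 0.0, 0.0)  # delivering nothing is always affordable
    for k in range(1, len(eligible) + 1):
        for combo in combinations(eligible, k):
            cost = sum(costs[c] for c in combo)
            if cost > max_cost:
                continue  # violates the cost constraint
            gain = sum(effects[c] for c in combo)
            if gain > best[1]:
                best = (combo, gain, cost)
    return best


if __name__ == "__main__":
    # Same (hypothetical) trial estimates evaluated under two budgets.
    print(best_package(COSTS, EFFECTS, max_cost=100.0))
    print(best_package(COSTS, EFFECTS, max_cost=70.0))
```

Because the decision rule is applied after the trial, partners could revisit `max_cost` (or add constraints such as staff time) as budgets change without rerunning the experiment; here the two budgets select different packages from the same estimates.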

The Role and Challenges of MOST Experimental Trials Within Community Settings

Incorporating community-engaged methods while using the MOST framework is a promising way to identify an optimized intervention. However, the complexity of conducting experiments—a cornerstone of MOST—increases outside of controlled lab environments. These experiments, often demanding extensive planning and fixed protocols, may not easily accommodate the dynamic nature of community engagement, which requires flexibility to adapt plans based on ongoing stakeholder input. Thus, executing such structured experiments within community-engaged research frameworks requires careful balancing of experimental control and responsive adaptation.

Suppose a researcher is in the Preparation Phase of MOST and is working to identify the candidate components to include in the optimization RCT. The researcher uses qualitative methods (e.g., focus groups, qualitative interviews) to collaboratively identify and develop the set of candidate components to be evaluated in the optimization RCT. They soon realize that, depending on the number of candidate components identified through community-engaged methods, the experimental design selected for the optimization RCT could quickly become complicated or cumbersome, limiting feasibility. For example, it may be challenging to conduct a pilot study with relatively few participants, or an optimization RCT in a community setting, when many conditions must be implemented. It may also be difficult to persuade community partners to implement complex designs. Furthermore, the more complex the design, the harder it becomes to make necessary changes while in the field. Group-format interventions pose additional challenges because they require a minimum number of participants for an adequate group process. Logistical concerns raised by stakeholders may require the intervention developer to exclude certain candidate components that would otherwise be under consideration in order to maintain a feasible experimental design. From the perspective of MOST's continual optimization principle (Collins, 2018), this may mean postponing the testing of certain components rather than discarding them. Community-engaged researchers working under real-world constraints often face limited budgets and must remain flexible and responsive to stakeholder input, especially during early stages of the intervention development process. In the following sections, we propose that resource constraints may be addressed, at least in part, by considering alternative experimental designs and selecting a design that makes the best use of the available resources.

The Resource Management Principle and Strategic Selection of Experimental Designs

A fundamental tenet of the MOST framework is the resource management principle (Collins, 2018, Chapter 1). The resource management principle states that researchers should make the best use of available resources (e.g., funding, staffing, time) at every stage of the intervention optimization process (Collins, 2018). To do this, researchers focus on gathering the most useful information they can feasibly obtain. The information deemed “most useful” is situation- and context-specific but will always be the information that most efficiently moves the intervention optimization process forward and allows the researcher to achieve the relevant MOST phase activities. Adherence to the resource management principle therefore calls for careful consideration of various experimental designs and strategic selection of the design that is most appropriate for the research question at hand (i.e., guided by phase-specific activities and objectives) and that can feasibly be implemented with available resources.

Preparation Phase

In the Preparation Phase, the goal is to gather information about the acceptability and feasibility of candidate components. Some researchers opt for non-randomized trials instead of experimental designs to answer these questions. A non-randomized trial can provide useful information about the acceptability of candidate components and the feasibility of some aspects of the research design (e.g., will participants understand and complete research assessments?), and it may be useful for early pilot work. However, it may not be the best choice from the perspective of the resource management principle. For example, an open trial (a non-randomized design in which all participants receive the intervention) will not provide critical information about whether the research team can execute the complex randomized experimental design that will be used in the Optimization Phase. An open trial will not help answer questions such as: Can the research team feasibly randomize participants to 8, 16, or 32 conditions? Can each candidate component be implemented independently? Does it make sense to provide every possible combination of candidate components?

Experimental pilot studies can be used to examine the acceptability and feasibility of recruiting, retaining, and randomizing participants in a complex experimental design (Leon et al., 2011). It is valuable, especially for research teams new to MOST, to pilot the acceptability and feasibility of the experimental design selected for the Optimization Phase. The pilot study can use any reasonable experimental design but, by definition, does not draw inferences about intervention effects or optimization implications (Leon et al., 2011). Pilot studies are not powered to detect effects and, as such, usually have small samples. Thus, effect sizes from pilot studies are imprecise and prone to bias and should not be used as the basis for power analyses when planning the optimization RCT (see Westlund & Stuart, 2017).
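One common way to randomize participants to many conditions while keeping group sizes balanced is permuted-block randomization, and a pilot can test whether staff can follow such a schedule in the field. The Python sketch below is a generic illustration, not the procedure of any study described here: each block contains every condition exactly once in shuffled order, and the 20-participant, 8-condition scenario and the seed are hypothetical.

```python
import random


def permuted_block_schedule(n_participants, n_conditions, seed=None):
    """Return a list of condition numbers (1..n_conditions), one per
    participant, built from shuffled blocks that each contain every
    condition exactly once."""
    rng = random.Random(seed)  # seeded so the schedule can be reproduced
    schedule = []
    while len(schedule) < n_participants:
        block = list(range(1, n_conditions + 1))
        rng.shuffle(block)
        schedule.extend(block)
    return schedule[:n_participants]  # the final block may be partial


if __name__ == "__main__":
    # Hypothetical pilot: 20 participants across the 8 conditions of a 2^3 design.
    print(permuted_block_schedule(20, 8, seed=2024))
```

Many trials vary the block size so that upcoming assignments are harder to predict; a fixed block size is used here only to keep the sketch short.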

A common concern for many intervention researchers is whether implementing multiple conditions in a pilot trial with few participants is in and of itself feasible. Perhaps they only have access to 20 participants to pilot their new group-based intervention, and implementing it with groups of 2–3 people is not clinically justified. In this scenario, we strongly encourage researchers to consider the resource management principle. A fundamental requirement of the full factorial experiment is that every combination of component levels must be implemented to draw inferences about component effects (see Collins, 2018). However, because the research team is not concerned with drawing inferences in a pilot study, this may be the one, and only, time that hand-selecting a subset of conditions makes sense. Suppose, for example, that three candidate components (A, B, C) are under consideration for inclusion, but there are not enough resources to pilot all eight experimental conditions in the full factorial experiment (2 × 2 × 2, or $2^3$; see Table 1). If the goal of the pilot study is to test the feasibility of different component combinations (e.g., will Component B make sense to participants who do not receive Component C, and vice versa?), the researchers may decide to pilot test Conditions 1, 2, 3, and 8 (see Table 1). This would provide necessary information on how feasible and acceptable it is to implement the conditions that include all three components and no components, as well as Component B without C and Component C without B. In other words, this approach answers the most salient research question at that stage of the process. The research team will not have pilot data to demonstrate the feasibility or acceptability of delivering all eight experimental conditions, but they would have the information needed to advance toward optimization, which is what matters according to the resource management principle.

Table 1.

Full Factorial Experiment (Factors: 3, Experimental Conditions: 8)

| Condition | A | B | C |
|---|---|---|---|
| 1 | ON | ON | ON |
| 2 | ON | ON | OFF |
| 3 | ON | OFF | ON |
| 4 | ON | OFF | OFF |
| 5 | OFF | ON | ON |
| 6 | OFF | ON | OFF |
| 7 | OFF | OFF | ON |
| 8 | OFF | OFF | OFF |

Note. Condition = experimental condition; A = candidate component A; B = candidate component B; C = candidate component C.
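The condition numbering in Table 1 follows a standard ordering in which Component A changes slowest and ON is listed before OFF. The Python sketch below regenerates that ordering and describes the hand-selected pilot subset (Conditions 1, 2, 3, and 8) so a team can confirm it covers the combinations it wants to pilot. It is an illustration only; the helper names are hypothetical.

```python
from itertools import product

FACTORS = ("A", "B", "C")


def full_factorial(factors):
    """Map condition number -> {factor: "ON"/"OFF"} in Table 1's order
    (first factor varies slowest; ON listed before OFF)."""
    return {
        i: dict(zip(factors, levels))
        for i, levels in enumerate(product(("ON", "OFF"), repeat=len(factors)), start=1)
    }


def describe(cond):
    """Short label listing the components turned ON in a condition."""
    on = [f for f, level in cond.items() if level == "ON"]
    return "+".join(on) if on else "none"


if __name__ == "__main__":
    design = full_factorial(FACTORS)
    for number, cond in design.items():
        print(number, cond)

    pilot = [1, 2, 3, 8]  # hand-selected subset: acceptable for a pilot only
    print([describe(design[n]) for n in pilot])
```

The pilot labels come out as all three components, A+B (B without C), A+C (C without B), and none, matching the contrasts described above.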

Optimization Phase

In the Optimization Phase, the main focus is on gathering information about the main and interactive effects of candidate components on a clinically or theoretically relevant outcome. The optimization RCT is an adequately powered, randomized experiment that guides decisions to identify an optimized intervention (Collins et al., 2018). An optimization RCT can use various experimental designs, but it always involves randomization to several conditions, designed to examine the individual contribution of each candidate component to the outcome of interest and how components work together (i.e., interact). The adequately powered and efficient optimization RCT may be a factorial or fractional factorial experiment, a sequential multiple assignment randomized trial (SMART; Almirall et al., 2014), a micro-randomized trial (MRT; Klasnja et al., 2015), or a systems engineering experiment (Rivera et al., 2018). Whatever the design, the optimization RCT enables researchers to gather empirical data about the individual and combined effects of candidate intervention components. The goal is to ensure that the intervention is composed only of active ingredients (i.e., components that demonstrated meaningful effects before the evaluation trial).

Full Factorial Experiments

Full factorial experiments are the most common, and often the most efficient, experimental design selected for the optimization RCT to assess the contributions of candidate components in fixed interventions (see Collins, 2018, Chapter 3, and Collins et al., 2009, for a comprehensive overview of the factorial experiment). Briefly, full factorial experiments are powerful yet complex trials that require randomizing participants to one of several conditions and implementing combinations of components (e.g., A but not B or C; A and C but not B). In a full factorial experiment, the experimental conditions represent every combination of candidate component levels (e.g., component A is PRESENT/ABSENT or set to HIGH/LOW intensity). Three candidate components with two levels each (2 × 2 × 2, or $2^3$) require eight conditions, four candidate components with two levels each (2 × 2 × 2 × 2, or $2^4$) require 16 conditions, and so on (Collins, 2018).

The full factorial experiment uses participant data efficiently (Collins, 2017), but the design can become quite complex depending on the number of candidate components under consideration. Suppose you are a community-engaged investigator, and your community partners feel strongly that five candidate components should be included in the optimization RCT. However, implementing the 32 conditions required by a full factorial experiment (2 × 2 × 2 × 2 × 2, or $2^5$) is not feasible given the agency's available resources. The community partners believe the maximum number of manageable conditions is eight. As the researcher, your main concern is executing a rigorous experiment that allows you to draw valid inferences about the individual contributions of each component. To reconcile the need for a rigorous experimental design with practical resource constraints, MOST-aligned researchers are encouraged to explore alternative designs. The fractional factorial experiment offers a compromise, balancing scientific rigor with the agency's resource limitations.
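The arithmetic behind this scenario is simple: a two-level design with $k$ components run as a $1/2^p$ fraction has $2^{k-p}$ conditions. The hypothetical helper below finds the smallest fraction that fits a partner-specified ceiling on the number of conditions. It counts conditions only; whether the resulting aliasing is scientifically acceptable still has to be judged, as discussed next.

```python
def smallest_fraction(n_components, max_conditions):
    """Return (p, n_conditions) for the smallest p such that a 2^(k-p)
    two-level design fits within max_conditions.

    Raises ValueError if fitting would leave fewer than k + 1 conditions,
    the minimum needed to estimate an intercept plus k main effects.
    """
    p = 0
    while 2 ** (n_components - p) > max_conditions:
        p += 1
        if 2 ** (n_components - p) < n_components + 1:
            raise ValueError("too few conditions to estimate every main effect")
    return p, 2 ** (n_components - p)


if __name__ == "__main__":
    # Scenario from the text: five components, at most eight manageable conditions.
    p, n = smallest_fraction(5, 8)
    print(f"1/{2 ** p} fraction with {n} conditions")
```

For five components and a ceiling of eight conditions, the helper returns a quarter fraction ($p = 2$), the same design size as Table 3.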

Fractional Factorial Experiments

The fractional factorial experiment is a potential solution to the conundrum of gathering information about scientifically important effects of intervention components while remaining aligned with a community-engaged approach throughout the intervention development process. Here we briefly review some features of fractional factorial experiments to illustrate their utility in this context (for a full review, see Collins et al., 2009, and Dziak et al., 2012).

The fractional factorial experiment is a type of reduced factorial experiment that allows the inclusion of several candidate components while reducing the number of experimental conditions required compared with a full factorial experiment (see Collins, 2018, Chapter 5). Fractional factorial experiments retain the ability to draw inferences about scientifically important effects, and they do so efficiently, which is critical for implementing an intervention trial in community settings. First, the experimental conditions in a fractional factorial experiment represent a specific fraction of the full factorial experiment (e.g., ½ or ¼). This is particularly advantageous for community-engaged researchers because fewer conditions make implementation in community settings more feasible.

Second, because each main effect is estimated by comparing all participants at one level of a component (e.g., ON) with all participants at the other level (e.g., OFF), statistical power for main effects depends on the total sample size rather than on the number of experimental conditions. A fractional factorial experiment therefore requires the same number of participants as the corresponding full factorial experiment to achieve the same power. The tradeoff is that some effects can no longer be estimated separately; in a well-chosen design, these are mainly higher-order (e.g., three-way and four-way) interactions among candidate components. This is because fractional factorial experiments bundle, or “alias,” certain effects; effects within a bundle cannot be disentangled (Chakraborty et al., 2009). For example, if a main effect and an interaction are aliased, one cannot determine whether an observed effect is due to the main effect, the interaction, or both.
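The sample-size point can be checked directly by counting how many participants sit at each level of each component. The Python sketch below assumes a hypothetical total of 320 participants allocated equally across conditions and compares the full $2^5$ design with the eight conditions listed in Table 3. It then applies a normal-approximation power formula for a two-group comparison of a standardized mean difference $d$ (here a hypothetical 0.3); this is a simplification for illustration, not a substitute for a formal power analysis.

```python
from itertools import product
from statistics import NormalDist

FACTORS = "ABCDE"
# Conditions listed in Table 3 (quarter fraction), written as 1 = ON, 0 = OFF.
QUARTER = [
    (0, 0, 0, 0, 1), (0, 0, 1, 1, 0), (0, 1, 0, 1, 0), (0, 1, 1, 0, 1),
    (1, 0, 0, 1, 1), (1, 0, 1, 0, 0), (1, 1, 0, 0, 0), (1, 1, 1, 1, 1),
]
FULL = list(product((0, 1), repeat=5))  # all 32 conditions


def per_level_counts(design, total_n):
    """Participants at ON and OFF for each factor, assuming equal allocation."""
    per_condition = total_n // len(design)
    counts = {}
    for j, f in enumerate(FACTORS):
        n_on = sum(per_condition for row in design if row[j] == 1)
        counts[f] = (n_on, per_condition * len(design) - n_on)
    return counts


def approx_power(n_on, n_off, d, alpha=0.05):
    """Normal-approximation power (two-sided alpha) for a standardized
    mean difference d between the ON and OFF groups."""
    z = NormalDist()
    se = (1 / n_on + 1 / n_off) ** 0.5
    return z.cdf(d / se - z.inv_cdf(1 - alpha / 2))


if __name__ == "__main__":
    for name, design in (("full 2^5", FULL), ("quarter 2^(5-2)", QUARTER)):
        counts = per_level_counts(design, total_n=320)  # hypothetical N
        n_on, n_off = counts["A"]
        print(name, counts, round(approx_power(n_on, n_off, d=0.3), 3))
```

Both designs place 160 participants at each level of every component (10 per condition in the full design, 40 per condition in the fraction), so the approximate power for each main effect is identical.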

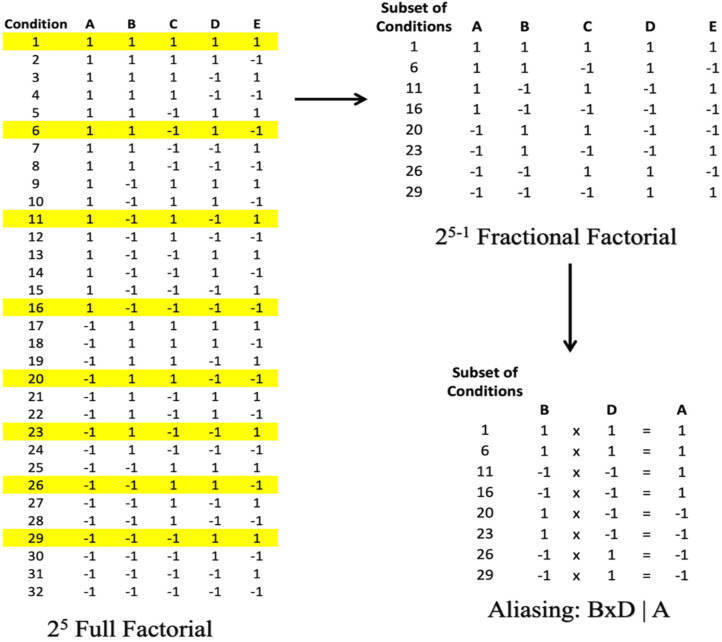

See Figure 2 for an illustration of aliasing in fractional factorial experiments. Panel A shows the 32 conditions comprising a full $2^5$ factorial experiment. This design yields five main effects (i.e., one for each candidate component: A, B, C, D, E) and all the interaction effects among them. Panel B shows the subset of conditions that would be retained in a $2^{5-2}$ fractional factorial experiment. This design also yields five main effects for the candidate components, but it aliases, or bundles, main effects with interaction effects to reduce the number of conditions from 32 to 8. Panel C demonstrates why this happens. Factorial experiments are analyzed using a regression model with effect coding: for each candidate component, a condition is coded 1 if the component is ON and −1 if it is OFF. The entire sample is used to estimate each effect; the data from participants in conditions coded 1 are compared with the data from participants in conditions coded −1. In the fractional factorial experiment (Panel B), the main effect of candidate component A is assessed by comparing the mean of the conditions in which A is turned ON (i.e., 1, 6, 11, and 16) with the mean of the conditions in which A is turned OFF (i.e., 20, 23, 26, and 29). Because interaction terms are multiplicative, the code for an interaction effect is obtained by multiplying the codes of the candidate components involved. As illustrated in Panel C, the code column that yields the main effect of Component A is identical to the code column for the interaction between Components B and D, so the two effects cannot be separated.

Figure 2.

An Illustration of Conditions Included in a Full Factorial and Corresponding Fractional Factorial Experiment With Aliasing
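The effect-coding logic behind Panel C can be reproduced in a few lines. The Python sketch below uses the eight conditions of the full $2^3$ design in Table 1, codes ON as 1 and OFF as −1, forms interaction codes by multiplication, and fits an ordinary least squares regression to hypothetical condition means. In the full factorial, every code column is orthogonal to every other, and each main-effect coefficient equals half the difference between the ON and OFF means; in a fractional design, by contrast, some of these columns become identical, which is the aliasing shown in Panel C. NumPy is assumed to be available, and the outcome values are invented for illustration.

```python
from itertools import product

import numpy as np

# Effect codes for the 2^3 full factorial in Table 1 order (ON = 1, OFF = -1).
levels = np.array(list(product((1, -1), repeat=3)), dtype=float)
A, B, C = levels.T

# Interaction codes are products of the component codes.
X = np.column_stack([np.ones(8), A, B, C, A * B, A * C, B * C, A * B * C])
names = ["intercept", "A", "B", "C", "AB", "AC", "BC", "ABC"]

# In a full factorial, every pair of columns is orthogonal: X'X = 8 * I.
assert np.allclose(X.T @ X, 8 * np.eye(8))

# Hypothetical mean outcome for each condition (one value per condition).
y = np.array([7.0, 6.5, 6.0, 5.0, 5.5, 5.0, 4.5, 4.0])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

# The A coefficient equals half of the ON-minus-OFF difference in means.
diff_A = y[A == 1].mean() - y[A == -1].mean()
print({n: round(float(c), 3) for n, c in zip(names, coef)})
print("A coefficient:", round(float(coef[1]), 3), "| half of ON-OFF difference:", diff_A / 2)
```

With one value per condition the model is saturated, so the fit is exact; with participant-level data the same coding applies, and each coefficient draws on the whole sample.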

This “aliasing” of effects is managed strategically by deliberately bundling scientifically important effects with effects that are scientifically less important or expected to be negligible in size. However, unlike in the pilot work described for the Preparation Phase, the investigator cannot hand-select the conditions included in the design without sacrificing the ability to draw inferences about the main and interactive effects of the candidate components. Instead, condition selection is based on the principle that main effects are most important for decisions about which candidate components will be included in an intervention package. Interactions (e.g., two-way and three-way interactions), while interesting, are less practically important (Collins et al., 2018). Software such as the FrF2 package in R and PROC FACTEX in SAS can generate suitable designs from the number of components and conditions the investigator specifies. See Tables 1–3 for an illustration of a full factorial experiment with three components ($2^3$), a half-fractional factorial experiment with four components ($2^{4-1}$), and a quarter-fractional factorial experiment with five components ($2^{5-2}$).
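Design software builds fractional factorials from generators: rules that set each added component's code equal to the product of some base components' codes. The Python sketch below imitates that idea without calling FrF2 or PROC FACTEX. It starts from a full factorial in the base components A, B, and C and appends generated columns. The generators used here (D = ABC for the half fraction; D = ABC and E = BC for the quarter fraction) are ones that reproduce the condition numbers listed in Tables 2 and 3; they are not necessarily the generators a given package would choose by default. Note that Tables 2 and 3 number conditions with OFF before ON, unlike Table 1.

```python
from itertools import product
from math import prod


def fractional_factorial(base, generators):
    """Build a two-level fractional factorial with effect codes (-1 = OFF, 1 = ON).

    base: names of base components, e.g. "ABC" (a full factorial in these).
    generators: {new_component: product of base components}, e.g. {"D": "ABC"}.
    Returns a list of dicts mapping component -> code.
    """
    runs = []
    for codes in product((-1, 1), repeat=len(base)):
        run = dict(zip(base, codes))
        for new, word in generators.items():
            run[new] = prod(run[f] for f in word)
        runs.append(run)
    return runs


def condition_number(run, order):
    """Number a run as in Tables 2 and 3: first component varies slowest, OFF before ON."""
    index = 0
    for f in order:
        index = 2 * index + (1 if run[f] == 1 else 0)
    return index + 1


if __name__ == "__main__":
    half = fractional_factorial("ABC", {"D": "ABC"})
    quarter = fractional_factorial("ABC", {"D": "ABC", "E": "BC"})
    print(sorted(condition_number(r, "ABCD") for r in half))
    print(sorted(condition_number(r, "ABCDE") for r in quarter))
```

The printed condition numbers match the rows listed in Tables 2 and 3, which shows that neither table is a hand-picked subset: each follows from a small set of multiplicative rules.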

Table 2.

Half Fractional Factorial Experiment ($2^{4-1}$; Factors: 4, Experimental Conditions: 8)

| Condition | A | B | C | D |
|---|---|---|---|---|
| 1 | OFF | OFF | OFF | OFF |
| 4 | OFF | OFF | ON | ON |
| 6 | OFF | ON | OFF | ON |
| 7 | OFF | ON | ON | OFF |
| 10 | ON | OFF | OFF | ON |
| 11 | ON | OFF | ON | OFF |
| 13 | ON | ON | OFF | OFF |
| 16 | ON | ON | ON | ON |

Note. The table represents 8 of 16 potential conditions from the full factorial experiment. Candidate Components = 4; Experimental Conditions = 8; Resolution = IV; Condition = experimental condition; A = candidate component A; B = candidate component B; C = candidate component C; D = candidate component D.

Table 3.

Quarter Fractional Factorial Experiment ($2^{5-2}$; Factors: 5, Experimental Conditions: 8)

| Condition | A | B | C | D | E |
|---|---|---|---|---|---|
| 2 | OFF | OFF | OFF | OFF | ON |
| 7 | OFF | OFF | ON | ON | OFF |
| 11 | OFF | ON | OFF | ON | OFF |
| 14 | OFF | ON | ON | OFF | ON |
| 20 | ON | OFF | OFF | ON | ON |
| 21 | ON | OFF | ON | OFF | OFF |
| 25 | ON | ON | OFF | OFF | OFF |
| 32 | ON | ON | ON | ON | ON |

Note. The table represents 8 of 32 potential conditions from the full factorial experiment. Candidate Components = 5; Experimental Conditions = 8; Resolution = III; Condition = experimental condition; A = candidate component A; B = candidate component B; C = candidate component C; D = candidate component D; E = candidate component E.
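The resolution of each fractional design can be checked by brute force. A product of code columns that is constant across all conditions is a "word" in the design's defining relation, and the resolution is the length of the shortest such word. The Python sketch below applies that rule to the conditions listed in Tables 2 and 3 (encoded as ON = 1, OFF = −1) and lists the two-way interactions aliased with each main effect. It is a teaching sketch with hypothetical helper names, not a substitute for design software.

```python
from itertools import combinations
from math import prod


def parse(rows):
    """Convert rows like "OFF ON ..." into tuples of effect codes."""
    return [tuple(1 if x == "ON" else -1 for x in row.split()) for row in rows]


TABLE_2 = parse(["OFF OFF OFF OFF", "OFF OFF ON ON", "OFF ON OFF ON", "OFF ON ON OFF",
                 "ON OFF OFF ON", "ON OFF ON OFF", "ON ON OFF OFF", "ON ON ON ON"])
TABLE_3 = parse(["OFF OFF OFF OFF ON", "OFF OFF ON ON OFF", "OFF ON OFF ON OFF",
                 "OFF ON ON OFF ON", "ON OFF OFF ON ON", "ON OFF ON OFF OFF",
                 "ON ON OFF OFF OFF", "ON ON ON ON ON"])


def column(design, word, names):
    """Code column for an effect, e.g. word "BD" -> product of the B and D codes."""
    return tuple(prod(row[names.index(f)] for f in word) for row in design)


def defining_words(design, names):
    """All effects whose code column is constant (+1 or -1) across conditions."""
    words = []
    for k in range(2, len(names) + 1):
        for combo in combinations(names, k):
            word = "".join(combo)
            if len(set(column(design, word, names))) == 1:
                words.append(word)
    return words


def two_way_aliases(design, names):
    """Map each main effect to two-way interactions with an identical or sign-flipped column."""
    out = {}
    for f in names:
        main = column(design, f, names)
        flipped = tuple(-v for v in main)
        out[f] = ["".join(p) for p in combinations(names, 2)
                  if f not in p and column(design, "".join(p), names) in (main, flipped)]
    return out


if __name__ == "__main__":
    for label, design, names in (("Table 2", TABLE_2, "ABCD"), ("Table 3", TABLE_3, "ABCDE")):
        words = defining_words(design, names)
        print(label, "words:", words, "resolution:", min(len(w) for w in words))
        print("  main effects aliased with two-way interactions:", two_way_aliases(design, names))
```

For the half fraction the only word is ABCD (resolution IV), so no main effect is aliased with a two-way interaction; for the quarter fraction the words are ADE, BCE, and ABCD (resolution III), so, for example, the main effect of A is aliased with the D × E interaction.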

The fractional factorial experiment adheres to the resource management principle because it accomplishes the goals of the Optimization Phase (i.e., estimating main and interaction effects to be considered in identifying an optimized intervention) while making efficient and feasible use of resources. Compared with a full factorial experiment, the design can accommodate more candidate components within a fixed number of experimental conditions, without increasing the required number of participants.

Applied Example

We now present an applied example of how a research team strategically selected experimental designs for various phases of MOST, guided by the resource management principle.

Background

Recent estimates suggest more than five million children in the United States have experienced parental incarceration (The Annie E. Casey Foundation, 2016). Children with an incarcerated parent (CIP) are at an increased risk for myriad negative outcomes, including substance use (Felitti et al., 1998; Heard-Garris et al., 2019; Khan et al., 2018; Murray & Farrington, 2005; Murray et al., 2012; National Research Council, 2014; Whitten et al., 2019). Indeed, national estimates suggest CIP are seven times more likely to have a diagnosed substance use disorder than children who have not experienced parental incarceration (Rhodes et al., 2023). Because of the loss of financial, emotional, and co-parenting support and the stigma related to incarceration, many CIP caregivers avoid supports and services that could mitigate their stress and bolster their parenting skills (Kjellstrand & Eddy, 2011; Poehlmann, 2005). Currently, no programs have been designed to prevent CIP mental health and substance use problems by supporting the unique needs of their caregivers.

Intervention Development Approach

The goal of this project is to fill this void by optimizing an intervention to reduce risk for mental health problems, substance use problems, and child welfare involvement among children with incarcerated parents. This work addressed two implementation challenges for a CIP caregiver parenting program: (1) the generally limited uptake of evidence-based parenting programs, and (2) the lack of empirical guidance on which components to retain when adapting evidence-based parenting programs in community settings. The research team decided to use community-engaged methods within their MOST Preparation and Optimization Phase activities (see Table 4) because they recognized that, once the program was established as evidence-based, community members would be free to implement it as they wished, which might include reducing the number of sessions and dropping core components. They valued MOST because it identifies which components are likely to provide the best outcomes within the constraints of specific settings. Furthermore, by assessing the main and interactive effects of each component, the research team and community partners would be positioned to understand the impact of different component combinations, information that would be instrumental in determining the essential elements of an intervention package that best fits the constraints of the implementation setting(s).

Table 4.

Applied Example: Integration of Community-Engaged Methods into the Preparation and Optimization Phases of MOST

| MOST phase | Activity | Example |
|---|---|---|
| Preparation | Develop conceptual model | Guided by Barrera and Castro (2006), the research team specified common elements and culturally distinct elements as predicted intervention processes. |
| | Identify and/or develop candidate components | The research team used qualitative interviews, focus groups, secondary data analysis, and a listening session to identify and develop candidate intervention components based on elements in the conceptual model. |
| | Specify optimization objective | The Steering Committee guided the specification of the optimization objective, resulting in the need for standalone components that can be delivered by individuals from a variety of backgrounds and training. |
| | Pilot test | The research team will conduct an open pilot to assess the acceptability and feasibility of candidate components from the perspective of CIP caregivers. |
| | Pilot test | The research team will conduct a pilot randomized factorial trial to demonstrate the feasibility of recruiting, randomizing, and retaining participants and of implementing different combinations of the candidate components. |
| Optimization | Conduct optimization randomized controlled trial | The research team will conduct a fully powered optimization randomized controlled trial using a factorial or fractional factorial experiment to assess the individual contributions of the candidate components that are found to be feasible and acceptable and are selected by the Steering Committee. |
| | Identify optimized intervention | Guided by the Steering Committee, the research team will identify an optimized intervention based on the results of the optimization randomized controlled trial and the refined optimization objective. |

Note. MOST = multiphase optimization strategy; CIP = children with an incarcerated parent.

Preparation Phase

To guide the cultural adaptation of the new intervention, the research team adopted Barrera and Castro's (2006) heuristic model, which emphasizes including both general intervention processes, or common elements, developed for a broad audience (e.g., supportive parenting) and culturally distinct elements that are unique to the population for which the adaptation is being conducted (e.g., immigration stress). The steps follow an iterative process of community-engaged information gathering and design. Central to the community-engaged approach, the researchers first convened a Steering Committee composed of individuals with personal and/or professional experience with parental incarceration to ensure the cultural and contextual fit of the intervention and enhance the likelihood of effectiveness (Barrera & Castro, 2006). The research team spent the first several months of the project holding Steering Committee meetings with the primary purpose of learning one another's priorities and building team cohesion. During this time, the research team educated the community partners about the MOST framework, emphasizing the ultimate goal of developing an effective program that fits within the constraints of the real-world setting by being affordable, efficient, and scalable.

To develop the conceptual model, the research team used a multi-method approach that included a series of qualitative interviews and focus groups with people with lived and professional experience with parental incarceration, a secondary analysis of caregiver data from a trial of a program for incarcerated parents, collated and iterative feedback from Steering Committee meetings, and a listening session at a national conference focused on CIP. The research team developed a draft conceptual model and presented it to the Steering Committee for additional feedback, with a particular focus on identifying gaps in, or misalignments of, the model.

Based on the conceptual model, the research team identified a set of candidate components that could be adapted from existing evidence-based parenting programs, such as active listening skills, relationship-building skills, and conflict-reduction skills. The research team then asked the Steering Committee members to help identify the final set of candidate components to be empirically evaluated in the optimization RCT. The researchers described the goal of the optimization RCT as “auditioning” potential “program ingredients” for a spot in the program's recipe. The Steering Committee rank-ordered the importance of the potential candidate components, and the research team selected which components would be evaluated in the optimization RCT based on research resource constraints. In addition, the Steering Committee advised that community agencies might not be willing to randomize participants to receive no intervention, so the team opted to include a constant component in the factorial trials to ensure that all participants would have access to “standard of care” content. The effects of the candidate components are then interpreted as effects “above and beyond” those of the constant component. An informational session that did not include any of the parenting skills to be tested was developed as the constant component.
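A constant component changes what the “off” level of each factor means. The minimal sketch below enumerates the conditions of a 2^3 factorial in which every condition opens with the informational session. The three candidate components are borrowed from the examples named above and are assumed, not confirmed, to be the final set. The all-off condition still delivers the informational session, so no participant is randomized to nothing, and comparing conditions with and without a given component estimates its effect above and beyond the constant component.

```python
from itertools import product

CONSTANT = "informational session"  # delivered in every condition
# Assumed candidate set, taken from the examples in the text.
CANDIDATES = ["active listening", "relationship building", "conflict reduction"]


def factorial_conditions(candidates, constant):
    """List every on/off combination of the candidates, each led by the constant."""
    conditions = []
    for flags in product((False, True), repeat=len(candidates)):
        on = [name for name, flag in zip(candidates, flags) if flag]
        conditions.append([constant] + on)
    return conditions


conditions = factorial_conditions(CANDIDATES, CONSTANT)
print(len(conditions))  # 2 ** 3 conditions
print(conditions[0])    # the comparison condition: constant component only
```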

Input from the Steering Committee then guided the specification of the optimization objective. For example, the researchers learned that services for CIP caregivers are often implemented via a drop-in model (i.e., participants come when it is convenient for them) rather than a cohort model, in which all caregivers start and end the program together. Given this practical constraint, components needed to be developed as standalone modules. From the MOST perspective, standalone modules are highly advantageous, because a factorial experiment requires that each component can be turned on or off and presented in any combination. The Steering Committee strongly advised that the program be deliverable by individuals with a variety of backgrounds and training, including peer leaders, to fit the staffing model of any organization wanting to implement it. The formative work also highlighted the importance of weaving a strengths-based and trauma-informed perspective, along with attention to language justice, throughout all program components.
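Why standalone modules matter for a factorial experiment can be checked by counting deliverable conditions. In this sketch, which uses four hypothetical module labels, a condition is deliverable only if every module switched on has its prerequisite modules switched on as well. With fully standalone modules, all 2^4 = 16 combinations are deliverable; if each module instead built on the previous one, only 5 would be.

```python
from itertools import product

MODULES = ["M1", "M2", "M3", "M4"]  # hypothetical module labels


def deliverable(on, prerequisites):
    """True if every 'on' module has all of its prerequisites also 'on'."""
    return all(prerequisites.get(module, set()) <= on for module in on)


def count_deliverable(modules, prerequisites):
    """Count on/off combinations that respect the prerequisite constraints."""
    count = 0
    for flags in product((False, True), repeat=len(modules)):
        on = {m for m, flag in zip(modules, flags) if flag}
        if deliverable(on, prerequisites):
            count += 1
    return count


standalone = {}  # no module depends on another
chained = {"M2": {"M1"}, "M3": {"M2"}, "M4": {"M3"}}  # each builds on the last
print(count_deliverable(MODULES, standalone), count_deliverable(MODULES, chained))
```

The same dependency that removes conditions from the experiment would also strand a drop-in caregiver who joins partway through.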

At this stage, the research team had a refined conceptual model, a set of candidate components, and a specific optimization objective. The next step was to design the candidate components while iteratively incorporating input and feedback from the Steering Committee throughout the process. An open pilot was conducted with an implementation partner on the Steering Committee to obtain initial information about the acceptability of the candidate components from CIP caregivers. Based on the resource management principle, the research team developed each candidate component sequentially, so that Steering Committee feedback and open pilot data on each module would inform the development of subsequent modules. This conserved resources by reducing the number of revisions. For example, the researchers needed to balance the Steering Committee's input that the program be implementable by staff of any level or type against training and supervision demands and the need to maintain implementation fidelity. They decided to shift the didactic pieces of the modules to videos that caregivers would view before the in-person group session. However, during the live session, it quickly became apparent that few caregivers had watched the videos or completed the home practice. Consequently, for the next module, the videos were incorporated into the live session.

The research team decided that the last step in the Preparation Phase would be a pilot optimization RCT to test the feasibility of implementing a factorial experiment in this setting. In particular, demonstrating the ability to recruit and randomize participants and to implement different combinations of the candidate components (e.g., A but not B or C; A and C but not B) is critical for showing funding agencies that an optimization RCT is feasible. The researchers were not concerned about the clustering of participants within agencies reducing statistical power, because the goals of the pilot study did not include drawing statistical inferences. Instead, they focused on assessing the feasibility and acceptability of the candidate components in order to refine them for the Optimization Phase. This pilot optimization RCT focuses on three questions: (1) Can participants be successfully recruited, retained, and randomized to multiple conditions in numerous community-based settings? (2) Are the candidate components acceptable to community partners and participants? (3) Can the candidate components be delivered in different combinations?
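Clustering was set aside here because the pilot did not aim at inference, but it will matter for a fully powered trial (see Dziak et al., 2012, on multilevel factorial experiments). A standard way to gauge its cost is the design effect for equal-sized clusters, 1 + (m - 1) × ICC, where m is the number of participants per cluster (e.g., per agency) and ICC is the intraclass correlation. Dividing the total sample size by the design effect gives an approximate effective sample size. The cluster size, ICC, and sample size below are hypothetical.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Design effect for equal-sized clusters: 1 + (m - 1) * ICC."""
    if cluster_size < 1 or not 0.0 <= icc <= 1.0:
        raise ValueError("cluster_size must be >= 1 and icc must lie in [0, 1]")
    return 1 + (cluster_size - 1) * icc


def effective_sample_size(n: int, cluster_size: float, icc: float) -> float:
    """Approximate number of independent participants that n clustered ones are worth."""
    return n / design_effect(cluster_size, icc)


# Hypothetical: 120 caregivers in agencies of about 20, with ICC = 0.05.
deff = design_effect(20, 0.05)
n_eff = effective_sample_size(120, 20, 0.05)
print(f"design effect = {deff:.2f}, effective n = {n_eff:.1f}")
```

With these assumed values the design effect is 1.95, so 120 clustered caregivers carry roughly the information of 62 independent ones.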

The pilot optimization RCT also gave the research team an important opportunity to provide introductory training to community partners about the logistics of an optimization RCT and then to practice implementing the factorial experiment in the community setting. The research team worked on creative solutions to present the research procedures in a way that did not feel overwhelming to their community partners. First, because the Steering Committee recommended more than four candidate components, the team decided it was neither feasible nor a good use of available resources to conduct the pilot optimization RCT as a full factorial experiment, which would require at least 32 conditions (2^k conditions for k components). Instead, the researchers chose specific conditions from the full factorial experiment that would yield crucial insights for advancing to the Optimization Phase. For example, they chose to evaluate the acceptability of two typically paired components as single components, to understand the impact of presenting one without the other. This flexible approach allowed for a well-developed study design that is open and responsive to input from community partners while allowing the researchers to remain cognizant of available resources and to focus on the key research questions for the pilot study. Second, to reduce the burden on community partners and the training resources needed to manage the pilot optimization RCT as it unfolded, the researchers performed the randomization themselves and created a clear schedule of when each new group would start and when each component would be delivered.
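Centralized randomization and scheduling of the kind described above can be sketched as follows. The condition subset, group labels, constant session name, and start weeks are all hypothetical. The subset deliberately includes two typically paired components, A and B, alone as well as together. The sketch uses permuted-block randomization, one common choice that keeps conditions balanced as groups accrue; the text does not say which scheme the researchers used.

```python
import random
from collections import Counter

CONSTANT = "informational session"
# Hypothetical subset of full-factorial conditions (components switched on).
SELECTED = [("A",), ("B",), ("A", "B"), ("C", "D", "E")]


def randomize_groups(group_ids, conditions, seed):
    """Permuted-block randomization: every block of len(conditions) groups
    receives each condition once, in a random order."""
    rng = random.Random(seed)
    assignment, block = {}, []
    for group in group_ids:
        if not block:
            block = list(conditions)
            rng.shuffle(block)
        assignment[group] = block.pop()
    return assignment


def schedule(condition, start_week):
    """Weekly plan for one group: the constant session, then each 'on' component."""
    sessions = [CONSTANT, *condition]
    return [(start_week + i, session) for i, session in enumerate(sessions)]


groups = [f"group-{i}" for i in range(1, 9)]
assignment = randomize_groups(groups, SELECTED, seed=2024)
print(Counter(assignment.values()))  # each condition appears once per block
for i, group in enumerate(groups):
    # Hypothetical rolling starts: a new group every two weeks.
    print(group, schedule(assignment[group], start_week=1 + 2 * i))
```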

Optimization Phase

The experimental design for the optimization RCT will depend on input from community partners and the findings from the Preparation Phase activities. If, for example, five components are found to be acceptable and feasible to implement in the pilot optimization RCT, researchers will want to assess each component's contribution to determine which will be included in the optimized intervention. However, implementing a full factorial experiment with 32 (2^5) conditions is not likely to be feasible with a hard-to-reach population, nor would it be a good use of available resources. In that case, researchers may select a fractional factorial experiment whose aliasing structure keeps the effects judged most likely to be scientifically important (e.g., main effects) estimable, under the assumption that the higher-order interactions aliased with them are negligible. Leveraging information gathered in the Preparation Phase, the research team will determine the number of conditions and participants feasible to implement and recruit in the community setting.
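As an illustration of the five-component case, the sketch below builds a 2^(5-1) half fraction with 16 conditions, one standard fractional factorial option, using the generator E = ABCD (defining relation I = ABCDE). The labels A to E are hypothetical. In this resolution V design, each main effect is aliased with a four-way interaction and each two-way interaction with a three-way interaction, so main effects and two-way interactions are estimable if those higher-order interactions are assumed negligible. Whether this or another fraction is chosen would depend on the Preparation Phase findings.

```python
from itertools import product

DEFINING_WORD = frozenset("ABCDE")  # defining relation I = ABCDE


def half_fraction():
    """16 runs: full factorial in A-D, with E's level set by the generator E = ABCD."""
    return [(a, b, c, d, a * b * c * d) for a, b, c, d in product((-1, 1), repeat=4)]


def alias(effect):
    """Alias of an effect: multiply it by the defining word (repeated letters cancel)."""
    return "".join(sorted(frozenset(effect) ^ DEFINING_WORD))


runs = half_fraction()
print(len(runs), "conditions instead of", 2 ** 5)
for effect in ["A", "E", "AB", "DE"]:
    print(effect, "is aliased with", alias(effect))
```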

Conclusion and Future Directions

MOST is a principled strategy for intervention development and optimization that seeks to achieve a balance among four desired intervention characteristics: effectiveness, affordability, scalability, and efficiency. MOST holds unique promise for addressing the challenge of modifying interventions for different settings, a step essential for widespread public health impact (Guastaferro & Collins, 2021). By using data from optimization RCTs on the individual and interactive effects of each component, researchers can tailor an intervention to fit the specific needs and constraints of a new community setting.
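Tailoring can be framed as a small search over component packages. In the sketch below, every number is hypothetical: assumed estimated improvements for four components (relative to the constant component alone), two assumed pairwise interaction adjustments, per-component session costs, and a cap on total sessions standing in for a setting's constraint. The sketch scores every package that fits within the cap and keeps the best; it ignores estimation uncertainty and any criteria other than the session cap.

```python
from itertools import combinations

# All values are hypothetical, for illustration only.
MAIN = {"A": 0.30, "B": 0.20, "C": 0.25, "D": 0.05}           # estimated improvements
INTERACTION = {frozenset("AB"): -0.10, frozenset("AC"): 0.05}  # pairwise adjustments
SESSIONS = {"A": 1, "B": 1, "C": 2, "D": 1}                    # sessions per component


def predicted_benefit(package):
    """Sum of main effects plus any interaction whose components are all included."""
    chosen = set(package)
    total = sum(MAIN[c] for c in chosen)
    total += sum(v for pair, v in INTERACTION.items() if pair <= chosen)
    return total


def best_package(max_sessions):
    """Highest predicted benefit among packages that fit within max_sessions."""
    feasible = [
        pkg
        for r in range(len(MAIN) + 1)
        for pkg in combinations(MAIN, r)
        if sum(SESSIONS[c] for c in pkg) <= max_sessions
    ]
    best = max(feasible, key=predicted_benefit)
    return best, predicted_benefit(best)


for cap in (2, 3, 5):
    print(cap, best_package(cap))
```

With these made-up numbers, the best package changes with the cap (A and B at two sessions, A and C at three), which illustrates how the same trial data could yield different optimized interventions for settings with different constraints.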

In this article, we underscored the importance of establishing explicit, methodical approaches within the MOST framework for integrating insights from stakeholders who have a deep understanding of the intervention's intended implementation setting and its context. A community-engaged approach that involves stakeholders throughout the early stages of intervention development provides researchers with a toolkit of methods that, combined with the rigorous experimental designs used in the MOST framework, can help them systematically develop an effective, affordable, scalable, and efficient intervention. Integrating community-engaged methods into the early phases of MOST can enhance the public health impact and sustainability of interventions.

We hope to see more MOST researchers adopt a community-engaged approach, as advocated by Windsor et al. (2021) and Whitesell et al. (2019). Because scalability is a key priority of MOST, it is inherently an implementation-forward approach to intervention optimization (for another example, see O’Hara et al., 2022). Using community-engaged methods within the MOST framework is not without challenges, and the resource management principle is a key tool for navigating them: it can guide the selection of experimental designs that balance the need for rigorous methods with the flexibility and responsiveness required in community-engaged research.

For those interested in learning more, free resources on MOST are available at cadio.org.

Footnotes

The authors declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: Kate Guastaferro is a Guest Editor for Implementation Research and Practice; as such, she was not involved in the peer review process for this manuscript. All other authors have declared no conflicts of interest.

Funding: The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: Karey L. O’Hara's work on this article was supported by a career development award provided by the National Institute of Mental Health (K01MH120321). Liza Hita was awarded seed funding from the Substance use and Addiction Translational Network (SATRN) and the Southwest Interdisciplinary Research Center (SIRC) which was used to support the research described in this paper. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

ORCID iDs: Kate Guastaferro https://orcid.org/0000-0002-5616-9708

Cady Berkel https://orcid.org/0000-0001-9664-9485

Karey L. O’Hara https://orcid.org/0000-0001-7429-1021

References

- Almirall D., Nahum-Shani I., Sherwood N. E., Murphy S. A. (2014). Introduction to SMART designs for the development of adaptive interventions: With application to weight loss research. Translational Behavioral Medicine, 4(3), 260–274. 10.1007/s13142-014-0265-0

- Balas E. A., Boren S. A. (2000). Managing clinical knowledge for health care improvement. Yearbook of Medical Informatics, 9(1), 65–70. 10.1055/s-0038-1637943

- Barrera M., Castro F. G. (2006). A heuristic framework for the cultural adaptation of interventions. Clinical Psychology: Science and Practice, 13(4), 311–316. 10.1111/j.1468-2850.2006.00043.x

- Berkel C., Murry V. M., Thomas N. A., Bekele B., Debreaux M. L., Gonzalez C., Hanebutt R. A. (2024). The Strong African American Families program: Disrupting the negative consequences of racial discrimination through culturally tailored, family-based prevention. Prevention Science, 25(1), 44–55. 10.1007/s11121-022-01432-x

- Chakraborty B., Collins L. M., Strecher V. J., Murphy S. A. (2009). Developing multicomponent interventions using fractional factorial designs. Statistics in Medicine, 28(21), 2687–2708. 10.1002/sim.3643

- Collins L. M. (2018). Optimization of behavioral, biobehavioral, and biomedical interventions: The multiphase optimization strategy (MOST). Springer International Publishing AG.

- Collins L. M., Dziak J. J., Li R. (2009). Design of experiments with multiple independent variables: A resource management perspective on complete and reduced factorial designs. Psychological Methods, 14(3), 202–224. 10.1037/a0015826

- Curran G. M., Landes S. J., McBain S. A., Pyne J. M., Smith J. D., Fernandez M. E., Chambers D. A., Mittman B. S. (2022). Reflections on 10 years of effectiveness-implementation hybrid studies. Frontiers in Health Services, 2, Article 1053496. 10.3389/frhs.2022.1053496

- Dziak J. J., Nahum-Shani I., Collins L. M. (2012). Multilevel factorial experiments for developing behavioral interventions: Power, sample size, and resource considerations. Psychological Methods, 17(2), 153–175. 10.1037/a0026972

- Felitti V. J., Anda R. F., Nordenberg D., Williamson D. F., Spitz A. M., Edwards V., Koss M. P., Marks J. S. (1998). Relationship of childhood abuse and household dysfunction to many of the leading causes of death in adults. American Journal of Preventive Medicine, 14(4), 245–258. 10.1016/S0749-3797(98)00017-8

- Guastaferro K., Collins L. M. (2021). Optimization methods and implementation science: An opportunity for behavioral and biobehavioral interventions. Implementation Research and Practice, 2, Article 26334895211054363. 10.1177/26334895211054363

- Guastaferro K., Strayhorn J. C., Collins L. M. (2021). The multiphase optimization strategy (MOST) in child maltreatment prevention research. Journal of Child and Family Studies, 30(10), 2481–2491.

- Heard-Garris N., Sacotte K. A., Winkelman T. N. A., Cohen A., Ekwueme P. O., Barnert E., Carnethon M., Davis M. M. (2019). Association of childhood history of parental incarceration and juvenile justice involvement with mental health in early adulthood. JAMA Network Open, 2(9), e1910465. 10.1001/jamanetworkopen.2019.10465

- Key K. D., Furr-Holden D., Lewis E. Y., Cunningham R., Zimmerman M. A., Johnson-Lawrence V., Selig S. (2019). The continuum of community engagement in research: A roadmap for understanding and assessing progress. Progress in Community Health Partnerships, 13(4), 427–434. 10.1353/cpr.2019.0064

- Khan M. R., Scheidell J. D., Rosen D. L., Geller A., Brotman L. M. (2018). Early age at childhood parental incarceration and STI/HIV-related drug use and sex risk across the young adult lifecourse in the US: Heightened vulnerability of black and Hispanic youth. Drug and Alcohol Dependence, 183, 231–239.

- Kjellstrand J. M., Eddy J. M. (2011). Parental incarceration during childhood, family context, and youth problem behavior across adolescence. Journal of Offender Rehabilitation, 50(1), 18–36. 10.1080/10509674.2011.536720

- Klasnja P., Hekler E. B., Shiffman S., Boruvka A., Almirall D., Tewari A., Murphy S. A. (2015). Microrandomized trials: An experimental design for developing just-in-time adaptive interventions. Health Psychology, 34(S), 1220–1228. 10.1037/hea0000305

- Leon A. C., Davis L. L., Kraemer H. C. (2011). The role and interpretation of pilot studies in clinical research. Journal of Psychiatric Research, 45(5), 626–629. 10.1016/j.jpsychires.2010.10.008

- Murray J., Farrington D. P. (2005). Parental imprisonment: Effects on boys’ antisocial behaviour and delinquency through the life-course. Journal of Child Psychology and Psychiatry, 46(12), 1269–1278. 10.1111/j.1469-7610.2005.01433.x

- Murray J., Farrington D. P., Sekol I. (2012). Children’s antisocial behavior, mental health, drug use, and educational performance after parental incarceration: A systematic review and meta-analysis. Psychological Bulletin, 138(2), 175. 10.1037/a0026407

- Murry V. M., Berkel C., Inniss-Thompson M. N., Debreaux M. L. (2019). Pathways for African American success: Results of three-arm randomized trial to test the effects of technology-based delivery for rural African American families. Journal of Pediatric Psychology, 44(3), 375–387. 10.1093/jpepsy/jsz001

- Murry V. M., Brody G. H. (2004). Partnering with community stakeholders: Engaging rural African American families in basic research and the Strong African American Families preventive intervention program. Journal of Marital and Family Therapy, 30(3), 271–283. 10.1111/j.1752-0606.2004.tb01240.x

- National Research Council. (2014). The growth of incarceration in the United States: Exploring causes and consequences. Committee on Causes and Consequences of High Rates of Incarceration; Travis J., Western B., Redburn S. (Eds.). Committee on Law and Justice, Division of Behavioral and Social Sciences and Education. The National Academies Press.

- O’Hara K. L., Knowles L. M., Guastaferro K., Lyon A. R. (2022). Human-centered design methods to achieve preparation phase goals in the multiphase optimization strategy framework. Implementation Research and Practice, 3, Article 26334895221131052. 10.1177/26334895221131052

- Onken L. S., Carroll K. M., Shoham V., Cuthbert B. N., Riddle M. (2014). Reenvisioning clinical science: Unifying the discipline to improve the public health. Clinical Psychological Science, 2(1), 22–34. 10.1177/2167702613497932

- Poehlmann J. (2005). Children's family environments and intellectual outcomes during maternal incarceration. Journal of Marriage and Family, 67(5), 1275–1285.

- Ramanadhan S., Davis M. M., Armstrong R., Baquero B., Ko L. K., Leng J. C., Salloum R. G., Vaughn N. A., Brownson R. C. (2018). Participatory implementation science to increase the impact of evidence-based cancer prevention and control. Cancer Causes & Control, 29(3), 363–369. 10.1007/s10552-018-1008-1

- Rhodes C. A., Thomas N., O'Hara K., Hita L., Blake A., Wolchik S. A., Fisher B., Freeman M., Chen D., Berkel C. (2023). Enhancing the focus: How does parental incarceration fit into the overall picture of adverse childhood experiences (ACEs) and positive childhood experiences (PCEs)? Research on Child and Adolescent Psychopathology, 51(12), 1933–1944.

- Rivera D. E., Hekler E. B., Savage J. S., Downs D. S. (2018). Intensively adaptive interventions using control systems engineering: Two illustrative examples. In Collins L. M., Kugler K. (Eds.), Optimization of behavioral, biobehavioral, and biomedical interventions (pp. 121–173). Springer.

- Smith J. D., Berkel C., Rudo-Stern J., Montaño Z., St George S. M., Prado G., Mauricio A. M., Chiapa A., Bruening M. M., Dishion T. J. (2018). The Family Check-Up 4 Health (FCU4Health): Applying implementation science frameworks to the process of adapting an evidence-based parenting program for prevention of pediatric obesity and excess weight gain in primary care. Frontiers in Public Health, 6, Article 293. 10.3389/fpubh.2018.00293

- The Annie E. Casey Foundation. (2016). Children of incarcerated parents, a shared sentence. https://www.aecf.org/resources/a-shared-sentence

- Wallerstein N., Duran B. (2010). Community-based participatory research contributions to intervention research: The intersection of science and practice to improve health equity. American Journal of Public Health, 100(S1), S40–S46. 10.2105/AJPH.2009.184036

- Wells R. D., Guastaferro K., Azuero A., Rini C., Hendricks B. A., Dosse C., Taylor R., Williams G. R., Engler S., Smith C., Sudore R., Rosenberg A. R., Bakitas M. A., Dionne-Odom J. N. (2020). Applying the multiphase optimization strategy for the development of optimized interventions in palliative care. Journal of Pain and Symptom Management, 62(1), 174–182. 10.1016/j.jpainsymman.2020.11.017

- Westlund E., Stuart E. A. (2017). The nonuse, misuse, and proper use of pilot studies in experimental evaluation research. American Journal of Evaluation, 38(2), 246–261. 10.1177/1098214016651489

- Whitesell N. R., Mousseau A. C., Keane E. M., Asdigian N. L., Tuitt N., Morse B., Zacher T., Dick R., Mitchell C. M., Kaufman C. E. (2019). Integrating community-engagement and a multiphase optimization strategy framework: Adapting substance use prevention for American Indian families. Prevention Science, 20(7), 1136–1146. 10.1007/s11121-019-01036-y

- Whitten T., Burton M., Tzoumakis S., Dean K. (2019). Parental offending and child physical health, mental health, and drug use outcomes: A systematic literature review. Journal of Child and Family Studies, 28, 1155–1168.

- Windsor L. C., Benoit E., Pinto R. M., Gwadz M., Thompson W. (2021). Enhancing behavioral intervention science: Using community-based participatory research principles with the multiphase optimization strategy. Translational Behavioral Medicine, 11(8), 1596–1605. 10.1093/tbm/ibab032