Abstract

Spike-and-slab prior distributions are used to impose variable selection in Bayesian regression-style problems with many possible predictors. These priors are a mixture of two zero-centered distributions with differing variances, resulting in different shrinkage levels on parameter estimates based on whether they are relevant to the outcome. The spike-and-slab lasso assigns mixtures of double exponential distributions as priors for the parameters. This framework was initially developed for linear models, later extended to generalized linear models, and shown to perform well in scenarios requiring sparse solutions. Standard formulations of generalized linear models cannot immediately accommodate categorical outcomes with more than two categories, i.e. multinomial outcomes, and require modifications to model specification and parameter estimation. Such modifications are relatively straightforward in a classical setting but require additional theoretical and computational considerations in Bayesian settings, which can depend on the choice of prior distributions for the parameters of interest. While previous developments of the spike-and-slab lasso focused on continuous, count, and/or binary outcomes, we generalize the spike-and-slab lasso to accommodate multinomial outcomes, developing both the theoretical basis for the model and an expectation-maximization algorithm to fit the model. To our knowledge, this is the first generalization of the spike-and-slab lasso to allow for multinomial outcomes.

Keywords: Bayesian variable selection, spike-and-slab, generalized linear models, multinomial outcomes, elastic net

1. Introduction

Spike-and-slab priors have a long history in Bayesian variable selection [16,24]. The spike-and-slab prior is a mixture of two distributions, both with mean zero, but with differing scale parameters that serve to vary the degree of shrinkage imposed on parameter estimates. The narrower ‘spike’ distribution models predictors that are irrelevant to the outcome of interest and imposes strong shrinkage towards the prior mean of zero, whereas the wider ‘slab’ distribution models predictors that are relevant to the outcome of interest and thus imposes weak shrinkage towards the prior mean of zero. The practical effect of the spike-and-slab framework is to increase the probability that relevant predictors remain in the model while irrelevant predictors are shrunk to zero, effectively excluding them from the model.

While spike-and-slab priors were traditionally mixtures of Normal distributions, other distributions may be more suitable in some circumstances. For example, when a relatively small percentage of a large number of possible predictors are expected to be relevant to the outcome of interest, a mixture of double exponential distributions, i.e. the spike-and-slab lasso (SSL), may be more appropriate, especially in cases where the number of predictors is larger than the number of subjects and/or some subsets of the predictors are highly correlated [29]. In classical settings, penalized regression models can handle correlated predictors and cases where predictors outnumber subjects, and many of these penalized regression models have Bayesian interpretations.

For example, ridge regression was developed to address multicollinearity and constrains the sum of squared parameter estimates to be less than some constant [20]. The ridge penalty is equivalent to placing Normal priors on parameters, but cannot perform variable selection [17]. In contrast, the least absolute shrinkage and selection operator (lasso) constrains the sum of the absolute value of parameter estimates to be less than some constant and is equivalent to placing double exponential priors on parameters; this results in a sparse solution, i.e. performs variable selection [26,35]. However, the lasso is not without limitations. For example, if there are J predictors and N subjects, then when J>N, at most N predictors can remain in the model, and when predictors are highly correlated, the lasso tends to select one and discard the others [38]. Together, these limitations highlight the severity of the lasso penalty.

The elastic net is a compromise between ridge and lasso penalties, allowing for variable selection (lasso) with a less severe penalty and better handling of correlated predictors (ridge), and contains ridge and lasso as special cases [38]. The downside of the elastic net framework is that it uses a single penalty parameter, which results in applying uniform shrinkage to parameter estimates, and in a Bayesian context is equivalent to assuming that all parameter values are drawn from a single distribution. Thus, how likely a parameter is to be relevant to the outcome of interest plays no role in the level of shrinkage applied to its estimates. In contrast, the spike-and-slab lasso uses two penalty parameters, which in a Bayesian context implies different distributions for parameter values of irrelevant and relevant predictors, respectively. Such a framework allows for adaptive shrinkage, i.e. the degree of shrinkage applied to a parameter estimate depends on how likely the predictor is to be relevant to the outcome [29,35].
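For concreteness, the three classical penalties discussed above can be written side by side. The parameterization below, with overall penalty $\lambda$ and mixing weight $\alpha$, is the one commonly used by glmnet and is an assumption here rather than notation taken from this paper:

```latex
\begin{align*}
\text{ridge:} \quad & \frac{\lambda}{2} \sum_{j=1}^{J} \beta_j^2, \\
\text{lasso:} \quad & \lambda \sum_{j=1}^{J} |\beta_j|, \\
\text{elastic net:} \quad & \lambda \sum_{j=1}^{J}
  \left[ \frac{1-\alpha}{2}\,\beta_j^2 + \alpha\,|\beta_j| \right],
  \qquad 0 \le \alpha \le 1 .
\end{align*}
```

Setting $\alpha = 1$ recovers the lasso and $\alpha = 0$ recovers the ridge penalty. In every case a single $\lambda$ is shared by all $\beta_j$, which is the uniform shrinkage that the spike-and-slab formulation relaxes.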

The spike-and-slab lasso was initially developed in the context of linear models and later developed for generalized linear models (GLM), and has been especially useful in genetic/genomics studies, where often a small number of genes are relevant to an outcome of interest and the number of possible predictors, e.g. genes or single nucleotide polymorphisms (SNPs), is vastly larger than the number of subjects (J>N) [2,29,33,34]. In previous work, we generalized the spike-and-slab lasso for GLMs to the spike-and-slab elastic net (SSEN), which includes the spike-and-slab ridge/lasso as special cases, and otherwise compromises between ridge and lasso penalties; further modifications allowed incorporating spatial information into the variable selection process, e.g. when using medical images to model some clinical outcome [21]. We also demonstrated the utility of the spike-and-slab elastic net framework for prediction and/or classification in Alzheimer's disease [22]. Thus, the spike-and-slab elastic net framework may be useful for explicit variable selection where some interpretation of the selected parameters is desired and/or prediction modeling where the primary goal is to build a predictive model that performs well on novel data.

Many applications have categorical outcomes, a significant subset of which are binary, i.e. outcomes with two options. Disease outcomes are often binary by nature, e.g. whether or not a subject has had a stroke, has developed a particular type of cancer, or is cognitively normal versus having dementia. Binary outcomes are usually assumed to follow a binomial distribution, and logistic regression, a special case of GLMs, can be used to model binary outcomes as a function of some set of predictors. However, many categorical outcomes have more than two mutually exclusive categories, even in applications where a binary outcome is often used. For example, progression from cognitive normal to dementia has intermediate (prodromal) stages, e.g. mild cognitive impairment (MCI), resulting in 3 categories of interest: cognitive normal, MCI, or dementia [7]. Similarly, cancers are divided into 5 categories, cancer-free and stages I-IV.

A simple approach to modeling outcomes with more than 2 categories is to choose a reference category and fit several logistic regression models, e.g. by choosing cognitive normal as the reference, we would fit a logistic regression for MCI vs. cognitive normal and another for dementia vs. cognitive normal. However, such an approach is flawed because there is no assurance that the estimated probabilities of each outcome category will sum to 1. Moreover, categorical outcomes with mutually exclusive categories are modeled by a multinomial distribution, which is the generalization of the binomial distribution, and the corresponding generalization of logistic regression is multinomial regression, which does assure the estimated probabilities of each outcome category sum to 1.

Multinomial regression requires modifications to the standard GLM framework, namely that while standard GLMs estimate a single parameter for each predictor, multinomial regression requires multiple parameter estimates for each predictor. Multinomial regression has long existed in the classical framework and has been adapted for several Bayesian or penalized regression contexts, e.g. the elastic net, which includes the lasso as a special case [1,15]. In the same way that classical and elastic net frameworks required theoretical and computational modifications to accommodate categorical outcomes with more than two categories, so too does the spike-and-slab lasso/elastic net framework, which has not been adapted to accommodate multinomial outcomes. This work aims to fill that gap by developing a theoretical and computational framework for incorporating the spike-and-slab elastic net prior into regression-style problems that have categorical outcomes with more than two mutually exclusive categories.

First, it is necessary to specify the log joint posterior distribution for the most general case of a spike-and-slab lasso model for multinomial regression. In contrast to logistic regression, where each predictor has a single parameter, multinomial regression results in each predictor having one parameter per outcome category, e.g. if the outcomes are cognitive normal, MCI, or dementia, then each MRI-derived predictor of disease class has 3 parameters. This more complicated setting requires choices regarding how shrinkage penalties are applied to parameters. We develop the most general case and argue for several simplifications that are defensible for many practical problems. These simplifications reduce the complexity of the optimization problem for fitting the proposed model. Finally, since the existing algorithm for fitting spike-and-slab lasso/elastic net GLMs cannot accommodate categorical outcomes with more than two categories, it must be modified in order to fit the class of models developed in this work. To our knowledge, this is the first generalization of the spike-and-slab lasso to allow for multinomial outcomes.

The outline of this work is as follows. In Section 2, we define and review multinomial regression and the spike-and-slab lasso, after which we develop a generalization of the spike-and-slab lasso for multinomial outcomes as well as an EM algorithm to fit the model. We then generalize the model and EM algorithm to incorporate the spike-and-slab elastic net prior, so that the spike-and-slab lasso is a special case of the final framework. We also describe an approach for incorporating structured prior information into the variable selection process. In Section 3, we present a simulation study to demonstrate the methodology and examine some of its statistical properties related to variable selection. In Section 4, we apply the methodology to a classification task using data from the Alzheimer's Disease Neuroimaging Initiative (ADNI). Section 5 concludes.

2. Methodology

2.1. Multinomial regression

GLMs are models of the expectation of an outcome whose distribution is assumed to be in the exponential family, and for subjects $i = 1, \ldots, N$ is given by [1,23]:

$$g\left(E(y_i \mid \mathbf{x}_i)\right) = \mathbf{x}_i^T \boldsymbol{\beta} \tag{1}$$

where $g(\cdot)$ is an appropriate link function, $y_i$ is the outcome of interest, $\mathbf{x}_i$ is a vector of covariates/predictors, and $\boldsymbol{\beta}$ is an unknown vector of parameters determining the effect of the covariates on the (linked) expectation of the outcome given the covariates. Herein we assume that there are J covariates and an intercept, so that $\mathbf{x}_i$ and $\boldsymbol{\beta}$ have length J + 1. We denote individual parameters by $\beta_j$, $j = 0, 1, \ldots, J$.

Logistic regression is a special case of GLMs that obtains when the outcome of interest is binary with logit link function, i.e. if $\pi_i = \Pr(y_i = 1 \mid \mathbf{x}_i)$, then $g(\pi_i) = \log\{\pi_i / (1 - \pi_i)\}$. The generalization of logistic regression obtains when the outcome of interest consists of V > 2 categories, in which case the outcome is assumed to have a multinomial distribution, and multinomial regression is required to model the effects of the covariates, $\mathbf{x}_i$, on the outcome, $y_i$. This scenario requires a model of the probability of each possible outcome for each subject, i.e. the following model [15]:

$$\Pr(y_i = v \mid \mathbf{x}_i) = \frac{\exp\left(\mathbf{x}_i^T \boldsymbol{\beta}^v\right)}{\sum_{k=1}^{V} \exp\left(\mathbf{x}_i^T \boldsymbol{\beta}^k\right)}, \quad v = 1, \ldots, V \tag{2}$$

where $\boldsymbol{\beta}^v$ denotes the vector of length J + 1 containing the parameters corresponding to the $v$th outcome; that is, $\boldsymbol{\beta}^v = (\beta_0^v, \beta_1^v, \ldots, \beta_J^v)^T$, where $\beta_j^v$ indicates the $j$th parameter for the $v$th outcome. Thus, there is a set of J + 1 parameters, including an intercept, associated with each of the V possible outcomes, i.e. each outcome category has its own function to describe how the predictors affect the probability of that outcome category. In classical settings the model given in Equation (2) is not identifiable, which is addressed by choosing one of the possible outcomes to be a reference group, i.e. the parameter vector for that outcome is assumed to be a vector of zeroes. In this case, the parameters are interpretable as comparisons between each category and the reference group, which is analogous to logistic regression, except that instead of one reference and one ‘event,’ we have one reference and multiple ‘events.’ However, it is not necessary to choose a reference category when applying penalized models such as the elastic net or lasso, whose built-in constraints allow for unique parameter estimates under Equation (2) [15].
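The identifiability issue can be seen numerically. The following is a minimal sketch, assuming Equation (2) takes the usual softmax form; the function name `multinomial_probs` and all numeric values are hypothetical and chosen only for illustration. It shows that the class probabilities sum to 1 and that adding the same vector to every category's parameters leaves them unchanged, which is why an unpenalized fit needs a reference category.

```python
import math

def multinomial_probs(x, betas):
    """Class probabilities under a softmax model.

    x     : covariate vector for one subject, with a leading 1 for the intercept
    betas : list of V coefficient vectors, one per outcome category
    """
    etas = [sum(b * xj for b, xj in zip(beta, x)) for beta in betas]
    top = max(etas)  # subtract the maximum for numerical stability
    weights = [math.exp(eta - top) for eta in etas]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical subject and coefficients (V = 3 categories, J = 2 predictors).
x = [1.0, 0.5, -1.2]
betas = [[0.0, 0.0, 0.0], [0.3, 1.0, -0.5], [-0.2, 0.4, 0.8]]
probs = multinomial_probs(x, betas)

# Adding the same vector to every category leaves the probabilities unchanged.
shift = [0.7, -1.1, 2.0]
shifted = [[b + s for b, s in zip(beta, shift)] for beta in betas]
probs_shifted = multinomial_probs(x, shifted)
```

Here the first category plays the role of a reference group because its coefficients are all zero, but `probs_shifted` matches `probs` even though no category has zero coefficients after the shift.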

2.2. Spike-and-slab lasso

Many applications have a large set of predictors that may be associated with an outcome of interest, of which we wish to select a (possibly small) subset to remain in a model of the outcome. The spike-and-slab prior framework is a commonly applied Bayesian solution to this problem [16,24]. This formulation for variable selection assumes that predictors arise from a mixture distribution where the indicator variable, $\gamma_j \in \{0, 1\}$, indicates whether the $j$th predictor should be included in the model, i.e. for the GLM formulation in Equation (1), the prior distribution for each parameter, $\beta_j$, is given by the following mixture distribution:

$$\beta_j \mid \gamma_j \sim (1 - \gamma_j)\, f_{\text{spike}}(\beta_j) + \gamma_j\, f_{\text{slab}}(\beta_j) \tag{3}$$

where the slab prior, $f_{\text{slab}}$, has a wider distribution than the spike prior, $f_{\text{spike}}$, i.e. the variance of $f_{\text{slab}}$ is larger than that of $f_{\text{spike}}$. Thus, a wide distribution obtains for parameters relevant to the outcome ($\gamma_j = 1$), which results in weak penalization/shrinkage on $\beta_j$, and a narrow distribution obtains for parameters irrelevant to the outcome ($\gamma_j = 0$), which results in strong penalization/shrinkage on $\beta_j$. Traditionally, Gaussian priors were given for both distributions, but the spike-and-slab lasso assigns double exponential (DE) distributions, and ultimately provides adaptive variable selection by using parameter-specific shrinkage penalties [29]:

$$\beta_j \mid \gamma_j, s_0, s_1 \sim \text{DE}(\beta_j \mid 0, S_j) = \frac{1}{2 S_j} \exp\left(-\frac{|\beta_j|}{S_j}\right) \tag{4}$$

where $S_j = (1 - \gamma_j) s_0 + \gamma_j s_1$, the indicator variable $\gamma_j$ determines the inclusion status of the $j$th variable, and $s_1 > s_0 > 0$ are slab and spike scale parameters, respectively. The value of $\gamma_j$ is unknown a priori and uncertainty about its value is incorporated via a Binomial prior:

$$\gamma_j \mid \theta \sim \text{Bin}(1, \theta) = \theta^{\gamma_j} (1 - \theta)^{1 - \gamma_j} \tag{5}$$

where $\theta$ is a global probability of inclusion for the $\beta_j$. The relevant theory and implementation for the spike-and-slab lasso initially focused on standard linear models, but an Expectation Maximization (EM) algorithm to fit the spike-and-slab lasso for GLMs was later developed [29,34].
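To make the contrast between spike and slab concrete, the sketch below evaluates a double exponential prior whose scale switches between a small spike scale and a larger slab scale according to the inclusion indicator. It assumes the standard Laplace density with mean zero; the names `de_density` and `ssl_prior_density` and the scale values 0.05 and 1.0 are hypothetical choices for illustration only.

```python
import math

def de_density(beta, scale):
    """Double exponential (Laplace) density with mean 0 and the given scale."""
    return math.exp(-abs(beta) / scale) / (2.0 * scale)

def ssl_prior_density(beta, gamma, s0, s1):
    """Prior density of beta given its inclusion indicator gamma (0 or 1)."""
    scale = (1 - gamma) * s0 + gamma * s1  # spike scale if gamma = 0, slab if 1
    return de_density(beta, scale)

s0, s1 = 0.05, 1.0  # hypothetical spike and slab scales
for beta in (0.0, 0.1, 1.0):
    spike = ssl_prior_density(beta, 0, s0, s1)
    slab = ssl_prior_density(beta, 1, s0, s1)
    print(f"beta={beta:4.1f}  spike={spike:.3e}  slab={slab:.3e}")
```

Near zero the spike density dominates, while for larger values only the slab assigns appreciable density. The implied lasso-type penalty weight is the inverse scale, so the spike (1/0.05 = 20) penalizes far more heavily than the slab (1/1.0 = 1).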

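Here we briefly review the model formulation and EM algorithm, as it is the foundation of the algorithm developed to fit the models proposed in this work. The log posterior for the spike-and-slab lasso GLM is derived as follows [34]:

$$\begin{aligned} \log p(\boldsymbol{\beta}, \boldsymbol{\gamma}, \theta, \phi \mid \mathbf{y}) &= \log p(\mathbf{y} \mid \boldsymbol{\beta}, \phi) + \sum_{j=1}^{J} \log p(\beta_j \mid S_j) + \sum_{j=1}^{J} \log p(\gamma_j \mid \theta) + \log p(\theta) \\ &\propto \ell(\boldsymbol{\beta}, \phi) - \sum_{j=1}^{J} \frac{1}{S_j} |\beta_j| + \sum_{j=1}^{J} \left[ \gamma_j \log \theta + (1 - \gamma_j) \log(1 - \theta) \right] + \log p(\theta) \end{aligned} \tag{6}$$

where $\ell(\boldsymbol{\beta}, \phi) = \log p(\mathbf{y} \mid \boldsymbol{\beta}, \phi)$, $\mathbf{y}$ is the N-length outcome vector, $\boldsymbol{\beta}$ is a $(J + 1)$-length parameter vector, $\boldsymbol{\gamma}$ is a J-length vector of inclusion indicators, ϕ is a dispersion parameter, and $p(\theta)$ is the prior distribution for θ. The prior is often assumed to follow a beta distribution, which is a natural choice as a prior distribution for probability parameters as it is bounded between 0 and 1, can take a wide range of possible shapes (symmetric, skewed, U-shaped, etc.), and includes the uniform distribution on (0, 1) as a special case when a = b = 1. Henceforth, we assume that $\theta \sim U(0, 1)$, in which case $\log p(\theta)$ drops out of Equation (6).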

The general EM algorithm was developed to address missing ‘data’ or information of some form, and has two general steps, the expectation step (E-step) and the maximization step (M-step) [10]. Given the likelihood, or in our case the posterior distribution, that one wishes to maximize, we take the conditional expectation with respect to the missing information (E-step), replace the missing information in the objective function with the conditional expectations, and then maximize in terms of the parameters of interest (M-step); the process continues until some convergence criterion is reached.

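In the case of the spike-and-slab lasso, we treat the indicator variables, $\gamma_j$, as missing. Therefore, we find the conditional expectation of the $\gamma_j$, i.e. $p_j = E(\gamma_j \mid \beta_j, \theta)$, replace $\gamma_j$ with $p_j$ in Equation (6), then maximize to estimate $(\boldsymbol{\beta}, \phi, \theta)$. Specifically, Equation (6) can be split into two terms:

$$Q_1(\boldsymbol{\beta}, \phi) = \ell(\boldsymbol{\beta}, \phi) - \sum_{j=1}^{J} \frac{1}{S_j} |\beta_j| \tag{7}$$

$$Q_2(\theta) = \sum_{j=1}^{J} \left[ \gamma_j \log \theta + (1 - \gamma_j) \log(1 - \theta) \right] \tag{8}$$

$Q_1$ is the log likelihood for the (Bayesian) lasso and can be fit with appropriate software, specifically glmnet in R, after replacing $\gamma_j$ and $S_j^{-1}$ with their conditional expectations, $p_j$ and $E(S_j^{-1} \mid \beta_j)$, respectively [15]. Assuming a uniform prior for θ, the global inclusion probability is updated with $\theta = \frac{1}{J} \sum_{j=1}^{J} p_j$. We follow previous work in setting the following convergence criterion [34]:

$$\frac{\left| d^{(t)} - d^{(t-1)} \right|}{0.1 + \left| d^{(t)} \right|} < \epsilon \tag{9}$$

where $d^{(t)}$ is the estimated deviance at iteration t and $\epsilon$ is a small tolerance.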
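The E-step and the stopping rule just described can be sketched in a few lines. This is an illustration under stated assumptions rather than the implementation used in the cited software: the inclusion probability is taken to follow from Bayes' rule with double exponential spike and slab densities, the expected inverse scale is taken to be the probability-weighted average of the two inverse scales, and the 0.1 offset and default tolerance in `converged` are assumed values. All function names are hypothetical.

```python
import math

def de_density(beta, scale):
    """Double exponential (Laplace) density with mean 0."""
    return math.exp(-abs(beta) / scale) / (2.0 * scale)

def e_step(betas, theta, s0, s1):
    """Conditional inclusion probabilities p_j and expected inverse scales.

    Not protected against underflow for very large |beta|.
    """
    p, inv_scale = [], []
    for b in betas:
        slab = theta * de_density(b, s1)
        spike = (1.0 - theta) * de_density(b, s0)
        p_j = slab / (slab + spike)           # Bayes' rule
        p.append(p_j)
        inv_scale.append((1.0 - p_j) / s0 + p_j / s1)
    return p, inv_scale

def update_theta(p):
    """Global inclusion probability as the mean of the p_j."""
    return sum(p) / len(p)

def converged(dev_new, dev_old, eps=1e-5):
    """Relative change in deviance falls below the tolerance eps."""
    return abs(dev_new - dev_old) / (0.1 + abs(dev_new)) < eps

# Hypothetical current estimates: two near-zero coefficients and one large one.
betas = [0.0, 0.01, 1.5]
p, inv_scale = e_step(betas, theta=0.5, s0=0.05, s1=1.0)
theta_new = update_theta(p)
```

The expected inverse scales would then be passed as coefficient-specific penalty weights to a lasso solver in the M-step, so coefficients with small inclusion probability are shrunk much more strongly than those with inclusion probability near 1.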

To our knowledge, no one has yet published a generalization of the spike-and-slab lasso to allow for multinomial outcomes. Having described the spike-and-slab lasso for GLMs and the EM algorithm for fitting the model, we now develop a generalization of the spike-and-slab lasso that accommodates categorical outcomes with V > 2 categories, after which we further generalize the model and algorithm to incorporate the spike-and-slab elastic net, which contains the spike-and-slab lasso as a special case.

2.3. Generalization of the spike-and-slab lasso for multinomial outcomes

As mentioned above, when the outcome consists of V > 2 categories, there will be V sets of parameters, one for each outcome. Hereafter, we denote the parameters associated with the $j$th predictor and $v$th outcome with the subscript j and superscript v, i.e. $\beta_j^v$. Additionally, bolded parameters with subscripts indicate the vector of all parameters associated with the $j$th predictor, e.g. $\boldsymbol{\beta}_j = (\beta_j^1, \ldots, \beta_j^V)^T$. The log joint posterior therefore differs from both the spike-and-slab lasso and traditional elastic net model for multinomial regression and can be derived as two independent terms as follows:

| (10) |

| (11) |

where by reference [18],

| (12) |

Here we assume that the V parameters associated with the predictor are drawn from the same distribution, i.e. , and that . If we make the additional assumption that the parameters associated with the predictor have the same inclusion probability, i.e. , and that they should be included or excluded from the model together, i.e. , then is further simplified as:

| (13) |

Note finally that the value of $q$ determines whether parameters associated with the $j$th predictor are included or excluded together; when q = 1 the lasso penalty is applied to each parameter separately, but when q = 2 all parameters associated with the $j$th predictor are either zero or non-zero together [15,18]. In what follows, we assume q = 2 since we desire grouped variable selection but note that Equations (10) and (11) permit a more general scenario.
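The difference between q = 1 and q = 2 is easiest to see through the corresponding thresholding operators. The sketch below is an illustration of the general idea, not glmnet's actual update: it applies elementwise soft-thresholding (the q = 1 case) and group soft-thresholding based on the Euclidean norm (the q = 2 case) to a hypothetical vector of V = 3 unpenalized estimates for one predictor. The function names and numbers are assumptions made for illustration.

```python
import math

def soft_threshold(z, lam):
    """Elementwise lasso shrinkage: move z toward 0 by lam, stopping at 0."""
    return math.copysign(max(abs(z) - lam, 0.0), z)

def lasso_update(zs, lam):
    """q = 1: each parameter is thresholded on its own."""
    return [soft_threshold(z, lam) for z in zs]

def group_update(zs, lam):
    """q = 2: the whole vector is scaled, or set to zero together."""
    norm = math.sqrt(sum(z * z for z in zs))
    if norm <= lam:
        return [0.0] * len(zs)
    return [(1.0 - lam / norm) * z for z in zs]

# Hypothetical estimates for one predictor across V = 3 outcome categories.
strong = [0.9, 0.2, -0.1]
weak = [0.2, 0.1, -0.1]

separate = lasso_update(strong, 0.3)      # only the first entry survives
grouped = group_update(strong, 0.3)       # all three entries stay non-zero
grouped_weak = group_update(weak, 0.3)    # all three entries are zeroed
```

With q = 1 a predictor can be retained for one category and dropped for the others, whereas with q = 2 the decision is made once per predictor, which is the grouped selection behavior assumed in the remainder of this section.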

2.4. The EM algorithm

The EM algorithm for fitting the joint log posterior defined by Equations (10) and (13) is analogous to the EM-algorithm for fitting the spike-and-slab lasso for GLMs but is distinct from that algorithm in its inclusion of V parameters for each of the J predictors.

In the E-step we take the conditional expectation of $\gamma_j$ and $S_j^{-1}$ with respect to the $\boldsymbol{\beta}_j$ (and if necessary, ϕ), i.e. $E(\gamma_j \mid \boldsymbol{\beta}_j)$ and $E(S_j^{-1} \mid \boldsymbol{\beta}_j)$. These calculations differ from previous work since both conditional expectations now depend on a vector of parameters, $\boldsymbol{\beta}_j$, rather than a single parameter, $\beta_j$. The spike-and-slab lasso for GLMs can be modified to accommodate group-specific penalties, and we employ a similar approach in this case in order to impose a single penalty on the set of parameters, $\boldsymbol{\beta}_j$, i.e. we treat the parameters associated with the $j$th predictor as being a group [33,37].

Since we require a single penalty for the parameters associated with each of the J predictors, we must estimate the conditional expectations of the corresponding indicator variables, $\gamma_j$, each of which depends on the vector of parameters, $\boldsymbol{\beta}_j$, rather than a single scalar value, $\beta_j$, and which by Bayes' rule is derived as:

| (14) |

where , , , and .

Here it is the case that the conditional probability of inclusion for the $j$th predictor, $p_j$, is also the update for $\gamma_j$. Similar in spirit to the initial development of the spike-and-slab lasso for GLMs, it follows that the conditional expectation of $S_j^{-1}$ is found by [33,34]:

| (15) |

This concludes the E-step, i.e. we have obtained the conditional estimates for the $\gamma_j$ and $S_j^{-1}$ using Equations (14) and (15), respectively.
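A grouped E-step of this kind can be sketched as follows. This is a hedged illustration and not necessarily the exact form of Equations (14) and (15): it assumes that, given the indicator, the V parameters of a predictor are independent double exponential draws sharing one scale, so the group density is a product of V Laplace densities. The computation is done on the log scale to avoid underflow when V or the parameter magnitudes are large. The names `group_inclusion_prob` and `expected_inverse_scale` and all numeric values are hypothetical.

```python
import math

def group_inclusion_prob(beta_j, theta, s0, s1):
    """Posterior probability that predictor j is included, given its V
    parameters, under an assumed product of double exponential densities."""
    V = len(beta_j)
    l1 = sum(abs(b) for b in beta_j)
    log_slab = math.log(theta) - V * math.log(2.0 * s1) - l1 / s1
    log_spike = math.log(1.0 - theta) - V * math.log(2.0 * s0) - l1 / s0
    diff = log_spike - log_slab
    # p = 1 / (1 + exp(diff)), written so that exp() never overflows
    if diff > 0:
        e = math.exp(-diff)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(diff))

def expected_inverse_scale(p_j, s0, s1):
    """Single penalty weight shared by all V parameters of predictor j."""
    return (1.0 - p_j) / s0 + p_j / s1

# Hypothetical parameter vectors for an irrelevant and a relevant predictor.
p_null = group_inclusion_prob([0.0, 0.0, 0.0], theta=0.5, s0=0.05, s1=1.0)
p_signal = group_inclusion_prob([1.5, -1.0, 0.5], theta=0.5, s0=0.05, s1=1.0)
w_null = expected_inverse_scale(p_null, 0.05, 1.0)
w_signal = expected_inverse_scale(p_signal, 0.05, 1.0)
```

Because evidence accumulates across all V parameters, the grouped inclusion probability is typically more decisive than its scalar counterpart, and every parameter of the predictor receives the same penalty weight.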

In the M-step the conditional expectation estimates replace the $\gamma_j$ and $S_j^{-1}$ in the log joint posterior distribution, i.e. defined by the sum of Equations (10) and (13), each of which can be updated separately. Equation (10) is the (Bayesian) lasso for multinomial outcomes, and is maximized using cyclic coordinate descent to obtain estimates for the $\boldsymbol{\beta}_j$ given the conditional expectations of $\gamma_j$ and $S_j^{-1}$, which allows for some of the $\boldsymbol{\beta}_j$ to be estimated as exactly 0 for all parameters contained in $\boldsymbol{\beta}_j$ and in practice results in variable selection [14,15,18]. As stated above, $\theta$ in Equation (13) is updated with the current estimate, i.e. the mean of the conditional inclusion probabilities $p_j$. This process is iterated until convergence using Equation (9).

2.5. Elastic net generalization

The elastic net is a compromise between the ridge and lasso penalties, and its Bayesian interpretation is a mixture of Gaussian and double exponential distributions. In previous work we generalized the spike-and-slab lasso GLM to the spike-and-slab elastic net GLM, i.e. the spike-and-slab prior consists of a mixture of elastic net distributions rather than a mixture of double exponential distributions [21]:

| (16) |

where , is the slab scale, is the spike scale, , and . Note that corresponds to a ridge penalty and to a lasso penalty, whereas results in a compromise between the two penalties. In what follows we modify the log joint posterior and EM algorithm for fitting the spike-and-slab lasso for multinomial outcomes, described in the previous sections, to accommodate the spike-and-slab elastic net prior in Equation (16), which contains the spike-and-slab lasso as a special case ( ).

The log joint posterior can still be divided into two terms that can be updated independently. First, we modify the term , i.e. Equation (10), which in the M-step can still be maximized using cyclic coordinate descent, e.g. by the R package glmnet [15]:

| (17) |

where is the Frobenius norm and is a coefficient matrix.

The second term to maximize in the M-step, , is still described by Equation (13), but the E-step must be modified. While the conditional expectation of in Equation (14) maintains the same form, the definitions for the conditional expectations of the parameters of interest are modified to take an elastic net distribution rather than a double exponential distribution, i.e. now and .

2.6. Structured prior information

In some applications there may be prior information regarding combinations of predictors that are likely to be included or excluded together, e.g. networks of genes, or when predictors are spatial in nature, we often expect relevant predictors to cluster spatially. One approach to imposing prior structure in Bayesian variable selection is to assign structured priors to the indicators, , e.g. with an Ising prior or Markov random field [8,28]. In our setting it is more natural to incorporate structure into the prior probabilities of inclusion, , because the amount of shrinkage applied to a parameter estimate is a function of the update to at each iteration of the EM algorithm [21]. For example, an approach especially appropriate for spatial settings is a variant of Conditional Autoregressive (CAR) models, Intrinsic Autoregressions (IAR) [3,5,6,30]:

| (18) |

where and indicates the location/predictor i is a neighbor of location/predictor j, i.e. the summation is over all the neighbors of location/predictor j. For example, in spatial settings we often desire to impose spatially correlated probabilities of inclusion with decaying correlation over space; that is, a random variable at a given location is more strongly correlated with random variables at nearby locations than far away locations. However, with large dimensions it can be computationally prohibitive to take the inverse of a large covariance matrix, which makes it undesirable to specify such decaying correlation structures explicitly. Since CAR/IAR models define such structure implicitly through conditional relationships and use the inverse variance (precision: ), there is no need to specify or take the inverse of a large covariance matrix.

Including the IAR prior on the only requires updating the term of the log joint posterior distribution, and only affects the M-step in the EM algorithm, i.e. Equation (13) is easily modified to accommodate the IAR prior and the solution for the estimates of can be found with standard numerical algorithms, especially if as is common practice, we set [21,25]:

| (19) |

2.7. R package

In previous work we developed an R package, ssnet, to fit spike-and-slab elastic net GLMs [21]. We have updated ssnet to include the EM algorithm described in this work, i.e. it now possesses the capabilities necessary to fit spike-and-slab elastic net multinomial regression. This package is freely available on GitHub (https://github.com/jmleach-bst/ssnet).

3. Simulation study

3.1. Simulation description

The spike-and-slab lasso and its generalizations have been shown to be useful for variable selection when the solution is sparse, i.e. a relatively small percentage of the set of predictors is relevant to the outcome of interest, as well as when subsets of predictors are highly correlated, as can occur in practice, e.g. in genetic expression or imaging data [21,29,34]. Our simulation study focuses specifically on this situation and is inspired by the original spike-and-slab lasso paper but adapted for multinomial outcomes [29].

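Specifically, the design vector for each subject, $\mathbf{x}_i$, contains 1000 predictors which are generated from a multivariate normal distribution with mean zero, unit variance, and a block correlation structure consisting of 20 blocks of 50 predictors each, where within each block the predictors have 0.90 correlation with each other and 0 correlation with predictors outside the block. That is, $\mathbf{x}_i \sim \text{MVN}(\mathbf{0}, \boldsymbol{\Sigma})$, where $\mathbf{0}$ is a vector of length 1000 and $\boldsymbol{\Sigma}$ is a 1000 × 1000 block covariance matrix with $50 \times 50$ matrices $\boldsymbol{\Sigma}_b$, $b = 1, \ldots, 20$, along its diagonal, defined as follows:

$$\boldsymbol{\Sigma} = \text{diag}\left(\boldsymbol{\Sigma}_1, \ldots, \boldsymbol{\Sigma}_{20}\right)$$

where

$$\left(\boldsymbol{\Sigma}_b\right)_{kl} = \begin{cases} 1 & \text{if } k = l \\ 0.9 & \text{if } k \neq l \end{cases}$$

for $k, l = 1, \ldots, 50$.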
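A design vector with this block structure can be generated without forming or factoring the 1000 × 1000 covariance matrix. The sketch below is one possible construction, not necessarily the one used for the reported simulations: within each block every predictor is a weighted sum of one shared standard normal draw and its own independent draw, which gives unit variance, within-block correlation equal to `rho`, and zero correlation across blocks. The function name and seed are hypothetical.

```python
import math
import random

def simulate_predictors(n_blocks=20, block_size=50, rho=0.9, rng=None):
    """Draw one design vector with exchangeable correlation inside each block."""
    rng = rng or random.Random()
    shared_weight = math.sqrt(rho)
    own_weight = math.sqrt(1.0 - rho)
    x = []
    for _ in range(n_blocks):
        shared = rng.gauss(0.0, 1.0)  # common component for this block
        for _ in range(block_size):
            x.append(shared_weight * shared + own_weight * rng.gauss(0.0, 1.0))
    return x

# One subject's 1000 predictors, using an arbitrary seed for reproducibility.
x_i = simulate_predictors(rng=random.Random(42))
```

The variance of each predictor is `rho + (1 - rho) = 1`, and the covariance of two predictors in the same block is `rho`, matching the block covariance matrix described above.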

A total of 6 predictors are given non-zero values, specifically those predictors with indices 1, 51, 101, 151, 201, 251, which results in 0.6% of the parameters being relevant to the outcome. We examine 3 effect size scenarios, each of which treats one group as a reference, i.e. all parameter values are 0. The second and third groups are given non-zero values at the appropriate indices. The settings are as follows, and are generally interpreted as representing gradually larger effect sizes:

Class 2: , ; Class 3: , .

Class 2: , ; Class 3: , .

Class 2: , ; Class 3: , .

where defines the indices for parameters that are non-zero and is the parameter associated with the predictor and outcome category with v = 1, 2, 3.

We generate 5000 simulations under each scenario by drawing outcomes from the multinomial distribution with probabilities of each event given by Equation (2). Each simulated data set consists of a ‘training’ data set of size N = 300 and a ‘test’ data set of size N = 100, which as described below allows variable selection to be conducted on the training data and model performance to be estimated in the test data. These scenarios generate imbalanced data, where group 1 (reference) is most common, group 2 second most common, and group 3 least common. The imbalance is similar in all scenarios as seen in Table 1.

Table 1.

Mean frequency and percentage of each class by simulation scenario (training data).

| | Class 1 | Class 2 | Class 3 |
|---|---|---|---|
| Scenario 1 | 121.94 (40.51%) | 103.44 (34.62%) | 74.62 (24.87%) |
| Scenario 2 | 122.23 (40.62%) | 101.75 (34.04%) | 76.02 (25.34%) |
| Scenario 3 | 123.67 (41.08%) | 94.82 (31.72%) | 81.51 (27.20%) |
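Drawing a class label from a vector of class probabilities is a single multinomial trial, which can be sketched with inverse-CDF sampling. The probabilities below are hypothetical round numbers of roughly the magnitude seen in Table 1, not the subject-specific probabilities used in the simulations, and the function name `draw_class` is an assumption.

```python
import random

def draw_class(probs, rng):
    """Draw one class label (1, ..., V) with the given probabilities."""
    u = rng.random()
    cumulative = 0.0
    for label, p in enumerate(probs, start=1):
        cumulative += p
        if u < cumulative:
            return label
    return len(probs)  # guards against rounding when u is very close to 1

rng = random.Random(2024)      # arbitrary seed
probs = [0.41, 0.34, 0.25]     # hypothetical class probabilities
draws = [draw_class(probs, rng) for _ in range(300)]
counts = {c: draws.count(c) for c in (1, 2, 3)}
```

In the simulation each subject would have its own probability vector, so the class frequencies in a training set of 300 reflect the average of those subject-level probabilities.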

3.2. Simulation analysis approach

For each simulation the training data set is used to perform 5-fold cross validation to select optimal penalty parameters for the elastic net, lasso, spike-and-slab elastic net, and spike-and-slab lasso. That is, we select $\lambda$ for the traditional models and a spike scale, $s_0$, and slab scale, $s_1$, for the spike-and-slab models. For the traditional models, the range of λ is chosen internally by the R package glmnet [15,18]. A grid of values for $s_0$ and $s_1$ is preselected for the spike-and-slab models, which are then fit using the EM algorithm developed above with the R package ssnet. We then use the selected penalty parameters to fit the model on the entire training data. This model is then applied to predict the observations in the test data and calculate measures of model fitness.

We present cross-validated deviance as a general measure of model fitness and then focus on the variable selection properties of the spike-and-slab framework compared to the traditional framework. Deviance is defined as [32,34]:

$$d = -2 \sum_{i=1}^{N} \log p\left(y_i \mid \mathbf{x}_i, \hat{\boldsymbol{\beta}}\right) \tag{20}$$

where $\hat{\boldsymbol{\beta}}$ are the final mode estimates.
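For a multinomial outcome, a deviance of this kind reduces to minus twice the sum of the log predicted probabilities of the observed classes. The sketch below assumes that form; the function name and the small example are hypothetical.

```python
import math

def multinomial_deviance(y, probs):
    """Minus twice the log-likelihood of the observed classes.

    y     : observed class indices (0-based), one per subject
    probs : predicted class probability vectors, one per subject
    """
    return -2.0 * sum(math.log(p[c]) for c, p in zip(y, probs))

# Two hypothetical test subjects with two classes each.
y_test = [0, 1]
p_test = [[0.5, 0.5], [0.25, 0.75]]
dev = multinomial_deviance(y_test, p_test)
```

Lower values indicate that the model assigns higher probability to the classes that were actually observed.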

We assess the ability of the models to avoid selecting irrelevant predictors by estimating the false discovery rate (FDR) [4]. That is, we calculate the proportion of irrelevant predictors included out of all non-zero estimates in each simulation, i.e. the false discovery proportion (FDP). We can obtain an estimate of the FDR by averaging the FDP values over the simulations; better performing models will have relatively lower FDR. We assess the methods' ability to correctly select the 6 non-zero, or relevant, predictors by calculating the proportion of these 6 predictors that were estimated to be non-zero in each simulation; the average of these proportions over the simulations we call ‘Power;’ better performing models will have relatively higher ‘Power.’ Finally, we estimate the probabilities of inclusion for each predictor by the proportion of simulations in which the predictors were estimated to be non-zero.
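The two selection metrics can be computed per simulation from the set of selected predictor indices. The sketch below is a minimal illustration: the function name is hypothetical, and setting the FDP to 0 when no predictor is selected is an assumed convention, since the text does not say how empty selections are handled.

```python
def fdp_and_power(selected, relevant):
    """False discovery proportion and proportion of relevant predictors found."""
    selected, relevant = set(selected), set(relevant)
    false_discoveries = len(selected - relevant)
    # Assumed convention: FDP is 0 when nothing is selected.
    fdp = false_discoveries / len(selected) if selected else 0.0
    power = len(selected & relevant) / len(relevant)
    return fdp, power

relevant = [1, 51, 101, 151, 201, 251]   # indices of the non-zero predictors
selected = [1, 51, 7, 300]               # hypothetical selection in one simulation
fdp, power = fdp_and_power(selected, relevant)
```

Averaging the per-simulation `fdp` values gives the FDR estimate, and averaging the `power` values gives the ‘Power’ summary described above.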

3.3. Simulation results

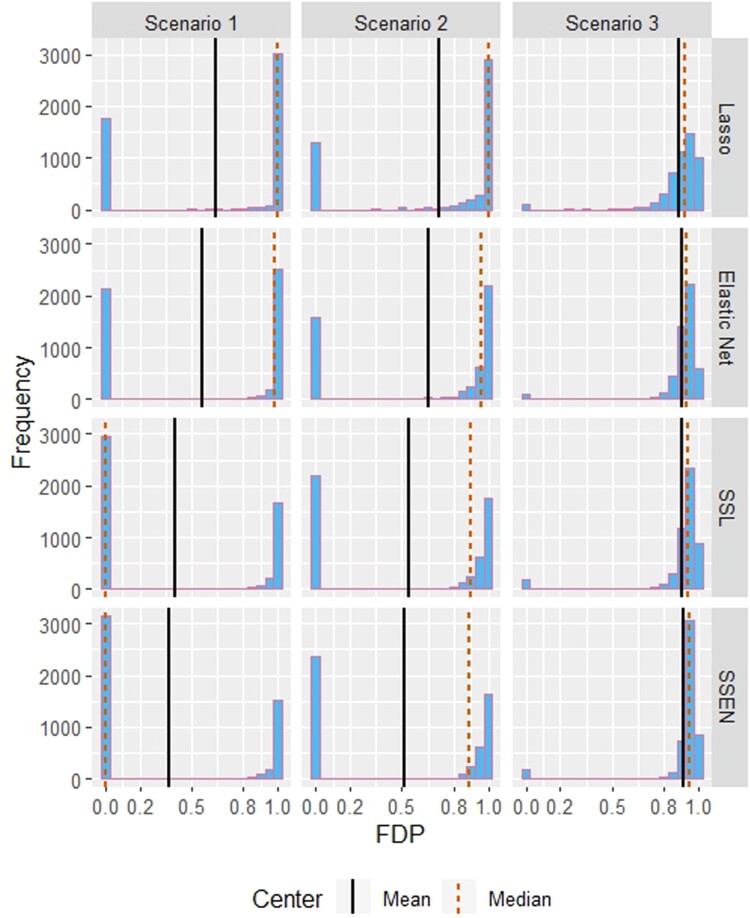

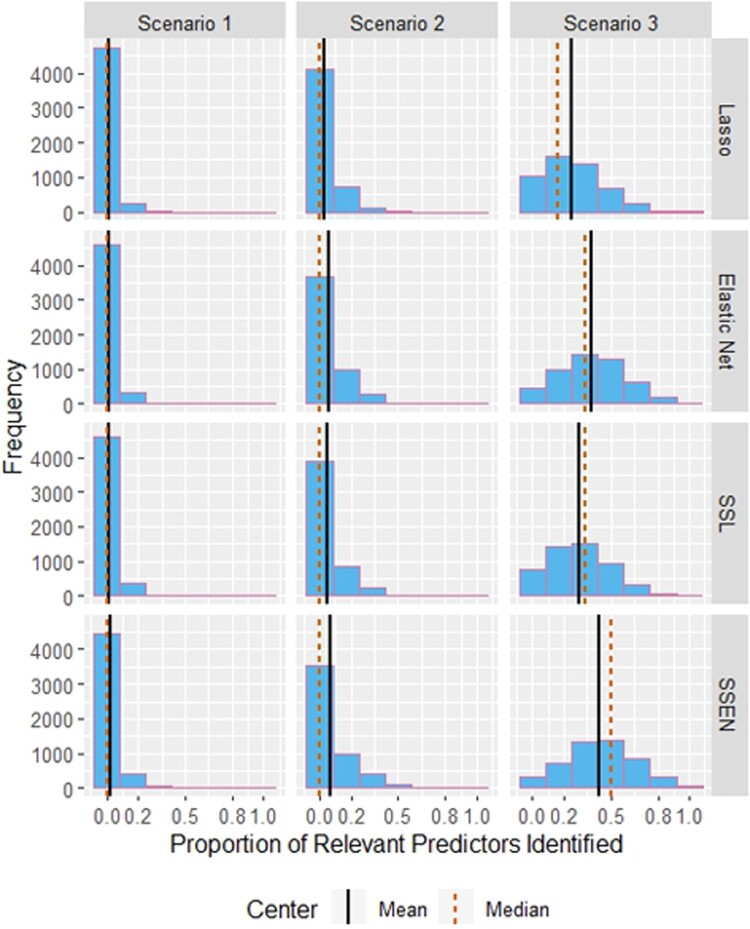

Table 2 shows the average penalty parameter estimates, deviance, false discovery rate (FDR, estimated as the average FDP), and Power across the 5000 simulations for each scenario. While the traditional elastic net had the lowest deviance for all scenarios, the variability of deviance across models was slight; noticeable differences across models were with respect to FDR and Power. In Scenarios 1 and 2, the spike-and-slab elastic net had the lowest FDR, while in Scenario 3 all models had FDR near 0.90 with lasso having the lowest (0.8917), but the Power was highest for the spike-and-slab elastic net in all 3 scenarios. Notably, while lasso has the lowest FDR in Scenario 3, if barely, its Power is only slightly more than half that of the spike-and-slab elastic net. Figures 1 and 2 display the distribution of FDP and Power across the 5000 simulations, which we can see are generally not symmetric. FDP is bimodal in all cases, although Scenario 3 is less striking in this respect. FDP differences between the traditional and spike-and-slab frameworks are most striking in Scenario 1, where the median FDP is zero for the spike-and-slab models, but near one for the traditional models. The distributions for ‘Power’ are generally right skewed, most severely for Scenario 1 and approaching symmetry as the effect sizes increase.

Table 2.

Model fitness and variable selection metrics by simulation scenario.

| Scenario | Model | $s_0$ | $s_1$ | Deviance | FDP | Power* |
|---|---|---|---|---|---|---|
| Scenario 1 | Lasso | 0.1031 | 0.1031 | 216.80 | 0.6437 | 0.0090 |
| | EN | 0.2020 | 0.2020 | 216.80 | 0.5637 | 0.0143 |
| | SSL | 0.0243 | 1.7258 | 217.27 | 0.4052 | 0.0150 |
| | SSEN | 0.0152 | 1.6154 | 217.23 | 0.3661 | 0.0211 |
| Scenario 2 | Lasso | 0.1001 | 0.1001 | 216.71 | 0.7166 | 0.0338 |
| | EN | 0.1937 | 0.1937 | 216.66 | 0.6555 | 0.0557 |
| | SSL | 0.0308 | 2.0642 | 217.24 | 0.5435 | 0.0479 |
| | SSEN | 0.0180 | 1.9542 | 217.18 | 0.5100 | 0.0697 |
| Scenario 3 | Lasso | 0.0770 | 0.0770 | 210.90 | 0.8917 | 0.2519 |
| | EN | 0.1445 | 0.1445 | 210.69 | 0.9067 | 0.3799 |
| | SSL | 0.0553 | 3.0590 | 210.95 | 0.9016 | 0.2955 |
| | SSEN | 0.0301 | 2.9224 | 210.83 | 0.9139 | 0.4264 |

*Proportion of non-zero parameters estimated as non-zero. Notes: EN = elastic net; SSL = spike-and-slab lasso; SSEN = spike-and-slab elastic net.

Figure 1.

Histograms for the distribution of FDP over the 5000 simulations by model and scenario. Abbreviations are SSL = spike-and-slab lasso and SSEN = spike-and-slab elastic net.

Figure 2.

Histograms for the distribution of ‘Power’ over the 5000 simulations by model and scenario. Abbreviations are SSL = spike-and-slab lasso and SSEN = spike-and-slab elastic net.

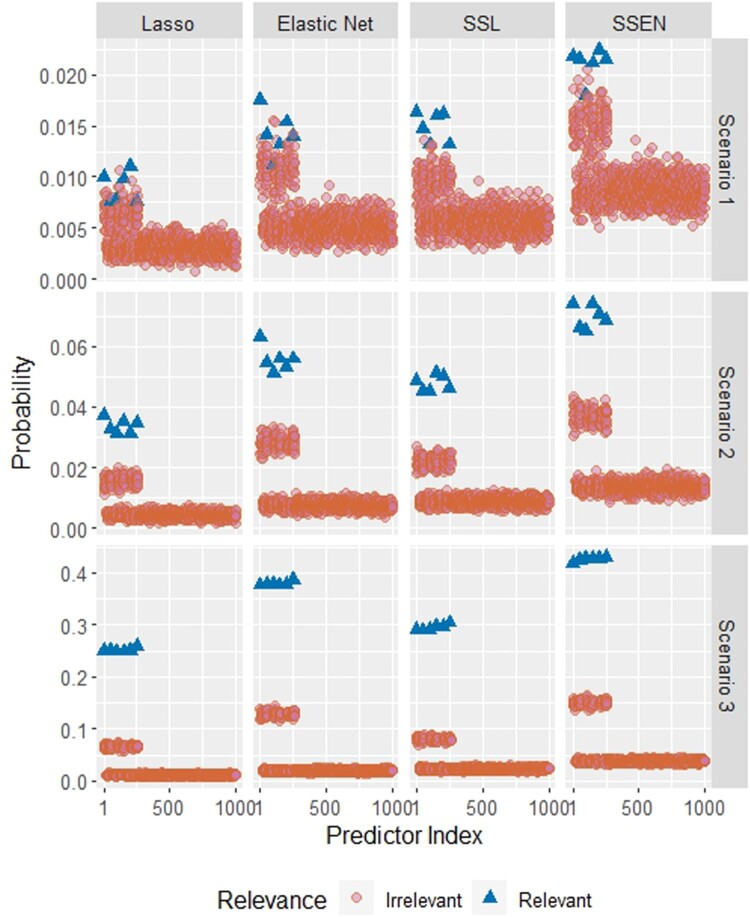

Similarly, Table 3 and Figure 3 display the inclusion probabilities in each scenario, with Table 3 reporting them for each of the 6 non-zero parameters. In each scenario and for each relevant predictor, the spike-and-slab elastic net had the highest probability of inclusion. The spike-and-slab elastic net also tends to have slightly higher inclusion probabilities for irrelevant predictors, but the difference across models becomes less extreme as the effect sizes increase.

Table 3.

Probabilities of inclusion for predictors with non-zero parameters.

| Scenario | Model | | | | | | |
|---|---|---|---|---|---|---|---|
| Scenario 1 | Lasso | 0.0100 | 0.0076 | 0.0078 | 0.0098 | 0.0110 | 0.0076 |
| | EN | 0.0176 | 0.0142 | 0.0112 | 0.0132 | 0.0154 | 0.0140 |
| | SSL | 0.0164 | 0.0148 | 0.0132 | 0.0160 | 0.0162 | 0.0132 |
| | SSEN | 0.0218 | 0.0216 | 0.0180 | 0.0212 | 0.0224 | 0.0216 |
| Scenario 2 | Lasso | 0.0372 | 0.0328 | 0.0314 | 0.0354 | 0.0314 | 0.0348 |
| | EN | 0.0630 | 0.0548 | 0.0512 | 0.0560 | 0.0532 | 0.0560 |
| | SSL | 0.0488 | 0.0454 | 0.0454 | 0.0514 | 0.0502 | 0.0464 |
| | SSEN | 0.0740 | 0.0662 | 0.0650 | 0.0740 | 0.0706 | 0.0686 |
| Scenario 3 | Lasso | 0.2514 | 0.2522 | 0.2492 | 0.2492 | 0.2504 | 0.2588 |
| | EN | 0.3772 | 0.3792 | 0.3800 | 0.3770 | 0.3778 | 0.3880 |
| | SSL | 0.2922 | 0.2900 | 0.2916 | 0.2964 | 0.2982 | 0.3046 |
| | SSEN | 0.4196 | 0.4238 | 0.4292 | 0.4270 | 0.4286 | 0.4302 |

Notes: EN = elastic net; SSL = spike-and-slab lasso; SSEN = spike-and-slab elastic net.

Figure 3.

Estimated inclusion probabilities for each predictor, where blue triangles indicate predictors relevant to the outcome and pink circles indicate predictors irrelevant to the outcome. Abbreviations are SSL = spike-and-slab lasso and SSEN = spike-and-slab elastic net.

4. Application to ADNI data

4.1. Analysis setting and data description

In the simulation study we examined explicit variable selection properties of the proposed methodology, but we shift gears in the data application to demonstrate how to apply the methodology for classification problems. Consider the example from Alzheimer's disease research in Section 1 where subjects may be cognitively normal (CN), have mild cognitive impairment (MCI), or have dementia, i.e. 3 relevant categories for the outcome of interest. Suppose that we wish to use medical images to classify subjects, or perhaps to predict their future disease status. Multinomial regression of the sort proposed in this work may be appropriate for such a circumstance, since medical images are often high-dimensional and highly correlated, and the methods are straightforward to apply to classification problems because the models can produce subject-specific predicted/expected probabilities of belonging to each category. The subject is then classified as whichever class has the highest predicted probability.
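The classification rule in the last sentence above amounts to taking the class with the largest predicted probability. A minimal sketch follows; the function name, the label order, and the tie-breaking rule (first class wins) are assumptions made for illustration.

```python
def classify(probabilities, labels=("CN", "MCI", "Dementia")):
    """Return the label whose predicted probability is largest.

    probabilities: one predicted probability per class, in the order of labels.
    Ties are broken in favor of the class listed first (an assumption).
    """
    best = max(range(len(labels)), key=lambda v: probabilities[v])
    return labels[best]


# A subject with predicted probabilities (0.2, 0.5, 0.3) is classified as MCI.
print(classify([0.2, 0.5, 0.3]))
```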

Our demonstration uses data obtained from the Alzheimer's Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). The ADNI was launched in 2003 as a public-private partnership, led by Principal Investigator Michael W. Weiner, MD. The primary goal of ADNI has been to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment (MCI) and early Alzheimer's disease (AD) [27,36].

We examine two scenarios, both of which model disease class (cognitive normal, MCI, or dementia). The first scenario models disease class using cortical thickness measures, which are estimated from structural MRI using FreeSurfer [9,12,13]. The second scenario models disease class using Tau PET images. Both scenarios have imaging measures on the Desikan-Killiany atlas, which consists of 34 regions per hemisphere for a total of 68 brain regions [11]. The data for both scenarios are imbalanced with respect to the distributions of disease classes, as seen in Table 4, with cognitive normal the most common, followed by MCI then dementia.

Table 4.

Class frequencies/percentages for cortical thickness and tau PET data.

| | Cognitive normal | MCI | Dementia |
|---|---|---|---|
| Cortical Thickness | 261 (60.84%) | 127 (29.60%) | 41 (9.56%) |
| Tau PET | 234 (60.15%) | 116 (29.82%) | 39 (10.03%) |

4.2. Model evaluation

Model evaluation is more involved for multi-class classification than for binary classification because standard measures such as sensitivity, specificity, positive and negative predictive value, $F_1$ score, and Matthews Correlation Coefficient cannot be applied without modification [31]. We present and examine several appropriate metrics for cases where the number of classes exceeds two. The average accuracy (AA) and error rate (ER) are the average per-class effectiveness and classification error, respectively:

$$\mathrm{AA} = \frac{1}{V}\sum_{v=1}^{V}\frac{tp_v + tn_v}{tp_v + tn_v + fp_v + fn_v} \tag{21}$$

$$\mathrm{ER} = \frac{1}{V}\sum_{v=1}^{V}\frac{fp_v + fn_v}{tp_v + tn_v + fp_v + fn_v} \tag{22}$$

where $tp_v$, $tn_v$, $fp_v$, and $fn_v$ are the numbers of true positives, true negatives, false positives, and false negatives for class $v$, and $V$ is the number of classes.

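Positive predictive value (PPV; alternatively, precision in the machine learning literature), sensitivity (SN; alternatively, recall in the machine learning literature), and $F_1$ score can be averaged in one of two ways: micro- or macro-averaged. Micro-averaged values are given subscript μ and are calculated using cumulative counts of true positives, false positives, true negatives, and false negatives, where each sum below runs over the classes $v$:

$$\mathrm{PPV}_{\mu} = \frac{\sum_{v} tp_v}{\sum_{v} (tp_v + fp_v)} \tag{23}$$

$$\mathrm{SN}_{\mu} = \frac{\sum_{v} tp_v}{\sum_{v} (tp_v + fn_v)} \tag{24}$$

$$F_{1,\mu} = \frac{2\,\mathrm{PPV}_{\mu}\,\mathrm{SN}_{\mu}}{\mathrm{PPV}_{\mu} + \mathrm{SN}_{\mu}} \tag{25}$$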

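Macro-averaged values are given subscript M and are obtained as the averages of the aforementioned values over the $V$ classes:

$$\mathrm{PPV}_{M} = \frac{1}{V}\sum_{v=1}^{V}\frac{tp_v}{tp_v + fp_v} \tag{26}$$

$$\mathrm{SN}_{M} = \frac{1}{V}\sum_{v=1}^{V}\frac{tp_v}{tp_v + fn_v} \tag{27}$$

$$F_{1,M} = \frac{1}{V}\sum_{v=1}^{V}\frac{2\,tp_v}{2\,tp_v + fp_v + fn_v} \tag{28}$$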

Micro-averaging favors classes/categories with relatively larger proportions of subjects, while macro-averaging treats classes/categories equally. All the metrics presented produce values between 0 and 1, and better performing models will have values near 1 in all cases except for the error rate (reported as PCE in Tables 5 and 7), where lower values (near 0) indicate better performance.

4.3. Analysis approach

For each model, optimal penalty parameters were chosen by 5-fold cross-validation with deviance as the measure of model performance. The R package glmnet was used to fit the traditional lasso and elastic net, and the range of penalty parameters was chosen internally by the glmnet function [15,18]. The R package ssnet was used to fit the spike-and-slab models. The grid of possible spike and slab scales were and , respectively. We considered two values for α: $\alpha = 1$, which corresponds to the spike-and-slab lasso, and $\alpha = 0.5$, which corresponds to a halfway compromise between ridge and lasso penalties. Given the spatial nature of the predictors, structured priors of the sort described in Section 2.6 may be appropriate. In addition to examining the traditional and spike-and-slab frameworks, we therefore also examine the spike-and-slab framework with IAR priors on logit inclusion probabilities, where the Desikan-Killiany atlas was used to specify the neighborhood matrix. Specifically, two regions of the atlas were considered neighbors if they were in the same hemisphere and shared a border.
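The neighborhood rule in the last sentence above can be sketched as follows. The region names and border list are placeholders, not the actual Desikan-Killiany regions or their anatomy, and the function name is hypothetical; the sketch only shows how a symmetric 0/1 neighborhood matrix arises from the "same hemisphere and shared border" rule.

```python
from itertools import combinations


def neighborhood_matrix(regions, shared_borders):
    """Build a symmetric 0/1 neighborhood matrix.

    regions: list of (hemisphere, name) pairs, one per predictor.
    shared_borders: set of frozensets {name_a, name_b} for regions that touch.
    Two regions are neighbors only if they lie in the same hemisphere and
    share a border; a region is never its own neighbor.
    """
    n = len(regions)
    index = {region: i for i, region in enumerate(regions)}
    w = [[0] * n for _ in range(n)]
    for a, b in combinations(regions, 2):
        same_hemisphere = a[0] == b[0]
        touching = frozenset((a[1], b[1])) in shared_borders
        if same_hemisphere and touching:
            i, j = index[a], index[b]
            w[i][j] = w[j][i] = 1
    return w


# Placeholder atlas: regions A, B, C in each hemisphere; A-B and B-C touch.
regions = [(h, r) for h in ("L", "R") for r in ("A", "B", "C")]
borders = {frozenset(("A", "B")), frozenset(("B", "C"))}
for row in neighborhood_matrix(regions, borders):
    print(row)
```

Because neighbors must share a hemisphere, the resulting matrix is block diagonal, with one block per hemisphere.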

Tables 5 and 6 show the results for the cortical thickness data, while Tables 7 and 8 show results for the tau PET data. In both cases, metrics are similar across models, which may be the result of the relatively coarse Desikan-Killiany atlas, but the results nevertheless demonstrate the methodology. In both cases the elastic net has the lowest deviance. For the cortical thickness data, the elastic net is the best performing model for all metrics except $\mathrm{PPV}_M$, where the spike-and-slab elastic net is highest. For the tau PET data, the spike-and-slab elastic net with IAR priors on logit inclusion probabilities has the best metrics across the board, except for deviance. Thus, while the differences across models are slight, even for the coarse spatial predictors used here we can see the utility of both the spike-and-slab framework and the structured priors on inclusion probabilities.

Table 5.

Model fitness metrics for cortical thickness data.

| Model | $s_0$ | $s_1$ | Dev. | AA | PCE |
|---|---|---|---|---|---|
| Lasso | 0.02 | 0.02 | 115.38 | 0.7758 | 0.2242 |
| EN | 0.04 | 0.04 | 114.57 | 0.7808 | 0.2192 |
| SSL | 0.09 | 2.00 | 119.65 | 0.7770 | 0.2230 |
| SSEN | 0.19 | 1.00 | 117.71 | 0.7774 | 0.2226 |
| SSL-IAR | 0.20 | 1.00 | 118.99 | 0.7804 | 0.2196 |
| SSEN-IAR | 0.20 | 2.00 | 116.54 | 0.7806 | 0.2194 |

Notes: EN = elastic net; SSL = spike-and-slab lasso; SSEN = spike-and-slab elastic net; SSL-IAR = spike-and-slab lasso w/ IAR prior; SSEN-IAR = spike-and-slab elastic net w/ IAR prior.

Table 6.

Macro- and micro-averaged metrics for cortical thickness data.

| Model | $s_0$ | $s_1$ | $\mathrm{PPV}_M$ | $\mathrm{SN}_M$ | $F_{1,M}$ | $\mathrm{PPV}_{\mu}$ | $\mathrm{SN}_{\mu}$ | $F_{1,\mu}$ |
|---|---|---|---|---|---|---|---|---|
| Lasso | 0.02 | 0.02 | 0.6195 | 0.5361 | 0.5738 | 0.6637 | 0.6637 | 0.6637 |
| EN | 0.04 | 0.04 | 0.6323 | 0.5391 | 0.5811 | 0.6712 | 0.6712 | 0.6712 |
| SSL | 0.09 | 2.00 | 0.6397 | 0.4894 | 0.5506 | 0.6655 | 0.6655 | 0.6655 |
| SSEN | 0.19 | 1.00 | 0.6666 | 0.4935 | 0.5644 | 0.6661 | 0.6661 | 0.6661 |
| SSL-IAR | 0.20 | 1.00 | 0.6366 | 0.5103 | 0.5613 | 0.6706 | 0.6706 | 0.6706 |
| SSEN-IAR | 0.20 | 2.00 | 0.6119 | 0.5127 | 0.5562 | 0.6709 | 0.6709 | 0.6709 |

Notes: EN = elastic net; SSL = spike-and-slab lasso; SSEN = spike-and-slab elastic net; SSL-IAR = spike-and-slab lasso w/ IAR prior; SSEN-IAR = spike-and-slab elastic net w/ IAR prior.

Table 7.

Model fitness metrics for tau PET data.

| Model | $s_0$ | $s_1$ | Dev. | AA | PCE |
|---|---|---|---|---|---|
| Lasso | 0.019 | 0.019 | 131.38 | 0.7670 | 0.2330 |
| EN | 0.028 | 0.028 | 131.00 | 0.7701 | 0.2299 |
| SSL | 0.20 | 9.00 | 135.12 | 0.7672 | 0.2328 |
| SSEN | 0.18 | 1.00 | 133.01 | 0.7764 | 0.2236 |
| SSL-IAR | 0.07 | 1.00 | 136.00 | 0.7671 | 0.2329 |
| SSEN-IAR | 0.20 | 5.00 | 132.55 | 0.7796 | 0.2204 |

Notes: EN = elastic net; SSL = spike-and-slab lasso; SSEN = spike-and-slab elastic net; SSL-IAR = spike-and-slab lasso w/ IAR prior; SSEN-IAR = spike-and-slab elastic net w/ IAR prior.

Table 8.

Macro- and micro-averaged metrics for tau PET data.

| Model | $s_0$ | $s_1$ | $\mathrm{PPV}_M$ | $\mathrm{SN}_M$ | $F_{1,M}$ | $\mathrm{PPV}_{\mu}$ | $\mathrm{SN}_{\mu}$ | $F_{1,\mu}$ |
|---|---|---|---|---|---|---|---|---|
| Lasso | 0.019 | 0.019 | 0.5326 | 0.4477 | 0.4856 | 0.6504 | 0.6504 | 0.6504 |
| EN | 0.028 | 0.028 | 0.5355 | 0.4572 | 0.4926 | 0.6551 | 0.6551 | 0.6551 |
| SSL | 0.20 | 9.00 | 0.5963 | 0.4192 | 0.5116 | 0.6508 | 0.6508 | 0.6508 |
| SSEN | 0.18 | 1.00 | 0.6088 | 0.4519 | 0.5159 | 0.6646 | 0.6646 | 0.6646 |
| SSL-IAR | 0.07 | 1.00 | 0.5890 | 0.4185 | 0.4971 | 0.6507 | 0.6507 | 0.6507 |
| SSEN-IAR | 0.20 | 5.00 | 0.6104 | 0.4582 | 0.5210 | 0.6694 | 0.6694 | 0.6694 |

Notes: EN = elastic net; SSL = spike-and-slab lasso; SSEN = spike-and-slab elastic net; SSL-IAR = spike-and-slab lasso w/ IAR prior; SSEN-IAR = spike-and-slab elastic net w/ IAR prior.

5. Conclusion and discussion

In this work we generalized the spike-and-slab lasso to accommodate categorical outcomes with more than two categories and to incorporate the spike-and-slab elastic net prior, which contains the spike-and-slab lasso prior as a special case. To our knowledge this is the first generalization of the spike-and-slab lasso and/or spike-and-slab elastic net to accommodate categorical outcomes with more than two categories.

We have also demonstrated applications of the methodology and shown circumstances where the proposed framework outperforms the traditional lasso and elastic net. The methodology developed in this work may prove useful to researchers in genetics/genomics or medical imaging research and can be generally useful in circumstances where the outcome consists of more than two categories and the predictors are high-dimensional, whether in scientific, medical, or economic applications. The benefits of the spike-and-slab lasso, or its generalization, the spike-and-slab elastic net, for this setting are the same as or similar to those in previous work, i.e. the framework allows for adaptive shrinkage of estimates, thereby increasing the probability that predictors relevant to the outcome of interest remain in the model and retain relatively large estimates, while predictors irrelevant to the outcome of interest are shrunk towards or to exactly zero [21,29,34]. We demonstrated these advantages empirically through a simulation study, where the spike-and-slab elastic net had the smallest FDR and largest Power in all simulation scenarios except that with the largest effect sizes for true non-zero parameters, in which case the FDR estimates were essentially the same for all models, but the spike-and-slab elastic net had the highest Power. Thus, the spike-and-slab elastic net was the preferable model in all simulation scenarios.

We also demonstrated the utility of the spike-and-slab elastic net for the purpose of predictive modeling with an application using MRI- and PET-derived brain images from the ADNI study. While the results are very similar across models for the MRI-derived cortical thickness data, the spike-and-slab elastic net with structured priors was the best performing model with respect to every metric except for deviance when using the Tau PET data as predictors of dementia status. Thus, while the Desikan-Killiany atlas is perhaps coarser than we would prefer (68 predictors), it is instructive that the spike-and-slab elastic net with structured priors is still the best performing model for the Tau PET predictors, and it is possible that a finer-resolution atlas would show greater improvements, since the methodology is designed to accommodate high-dimensional data.

The primary complication of generalizing this methodology for multinomial outcomes is that rather than a single set of parameters to estimate, there is a set of parameters associated with each category, which necessitates decisions regarding how and where shrinkage penalties are applied to the parameter estimates. The approach developed in this work depends on the assumption that the parameters associated with each predictor should be included or excluded from the model together, which allows us to use the grouped lasso penalty as part of the estimation strategy and significantly simplifies the complexity of the EM-algorithm developed to fit the model [15,33,37]. However, it is possible to conceive of circumstances where this assumption fails, and such circumstances would require modification of the approach developed and demonstrated in the present work.

We have also generalized the approach to the spike-and-slab elastic net, of which the spike-and-slab lasso is a special case, and provided an example for how structured priors could be incorporated for inclusion probabilities, thereby providing additional structure to variable selection. However, while the IAR prior presented here is versatile and can be applied beyond spatial settings to accommodate a wide variety of neighborhood structures, other structured priors may be appropriate depending on the circumstances. The main takeaway is that incorporating structured information into the inclusion probabilities rather than the inclusion indicators has considerable computational benefits in the context of the EM-algorithm presented here, and future work could focus on exploring a wider variety of structures in various applications.

Our estimation approach employs an EM-based mode-search algorithm. This approach departs from many standard approaches in Bayesian statistics, which usually involve estimating the joint posterior distribution and often employ simulation-based Markov Chain Monte Carlo (MCMC) methods to do so. For computational reasons MCMC may be impractical in high-dimensional variable selection problems, which has led to the development of mode-search algorithms as an alternative, since such algorithms can converge much faster than MCMC methods [21,28,29,33,34]. However, the computational benefits do come with a cost, namely that the results consist of modes rather than entire posterior distributions, which limits the ability to quantify uncertainty around the resulting parameter (mode) estimates.

A last consideration is that it is not necessarily straightforward to select penalty parameters a priori; thus, methods such as k-fold cross-validation should be employed to select the spike and slab scales, $s_0$ and $s_1$, respectively, to avoid overfitting the model to the data [19]. For demonstration purposes, in this work we selected values for the α parameter of the spike-and-slab elastic net a priori, but in practice it may also be wise to select α during the cross-validation process.
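A generic version of this selection step can be sketched as below: a grid of candidate penalty settings is scored by k-fold cross-validated multinomial deviance, and the setting with the smallest total held-out deviance is kept. This is a sketch under stated assumptions, not the ssnet implementation: `fit_predict` is a hypothetical user-supplied callable standing in for model fitting and prediction, the toy model ignores the data entirely, and deviance is computed as minus twice the log of the predicted probability of each subject's observed class.

```python
import math
import random


def multinomial_deviance(probs, y):
    """-2 * sum of log predicted probabilities of the observed classes."""
    return -2.0 * sum(math.log(p[c]) for p, c in zip(probs, y))


def kfold_indices(n, k, seed=0):
    """Shuffle 0..n-1 and deal the indices into k folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]


def cv_select(x, y, grid, fit_predict, k=5, seed=0):
    """Return (best setting, its total held-out deviance) over the grid.

    fit_predict(x_train, y_train, x_test, setting) is a hypothetical callable
    that must return one list of class probabilities per test subject.
    """
    folds = kfold_indices(len(y), k, seed)
    best, best_dev = None, math.inf
    for setting in grid:
        dev = 0.0
        for held_out in folds:
            held = set(held_out)
            train = [i for i in range(len(y)) if i not in held]
            probs = fit_predict(
                [x[i] for i in train], [y[i] for i in train],
                [x[i] for i in held_out], setting,
            )
            dev += multinomial_deviance(probs, [y[i] for i in held_out])
        if dev < best_dev:
            best, best_dev = setting, dev
    return best, best_dev


def toy_fit_predict(x_train, y_train, x_test, p):
    # Toy stand-in for a real model: always predicts class 0 with probability p.
    return [[p, (1 - p) / 2, (1 - p) / 2] for _ in x_test]


x = list(range(10))
y = [0] * 10
print(cv_select(x, y, grid=[0.5, 0.9], fit_predict=toy_fit_predict, k=5))
```

In practice each grid entry could be a tuple such as a (spike scale, slab scale, α) combination, so that α is selected in the same loop.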

Finally, an R package, ssnet, for fitting the methodology presented here is available on GitHub (https://github.com/jmleach-bst/ssnet).

Funding Statement

Data collection and sharing for this project was funded by the Alzheimer's Disease Neuroimaging Initiative (ADNI) (National Institutes of Health [grant number U01 AG024904]) and DOD ADNI (Department of Defense award number W81XWH-12-2-0012). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: AbbVie, Alzheimer's Association; Alzheimer's Drug Discovery Foundation; Araclon Biotech; BioClinica, Inc.; Biogen; Bristol-Myers Squibb Company; CereSpir, Inc.; Cogstate; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; EuroImmun; F. Hoffmann-La Roche Ltd and its affiliated company Genentech, Inc.; Fujirebio; GE Healthcare; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Lumosity; Lundbeck; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; NeuroRx Research; Neurotrack Technologies; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Takeda Pharmaceutical Company; and Transition Therapeutics. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer's Therapeutic Research Institute at the University of Southern California. ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of Southern California.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

Code to reproduce the results of the simulation study and data analysis is available on GitHub (https://github.com/jmleach-bst/multinomial_ssnet_analyses). Note that while code for performing analysis on ADNI data is included, the ADNI data sets themselves are not, because we are not authorized to share data from ADNI. Details for access to these data can be found at http://adni.loni.usc.edu/data-samples/access-data/.

References

- 1. Agresti A., An Introduction to Categorical Analysis, 2nd ed., John Wiley & Sons, Hoboken, New Jersey, 2007.

- 2. Bai R., Rockova V., and George E.I., Spike-and-slab meets lasso: A review of the spike-and-slab lasso, arXiv:2010.06451 (2020).

- 3. Banerjee S., Carlin B.P., and Gelfand A.E., Hierarchical Modeling and Analysis for Spatial Data, 2nd ed., Chapman & Hall/CRC, Boca Raton, Florida, 2015.

- 4. Benjamini Y. and Hochberg Y., Controlling the false discovery rate: A practical and powerful approach to multiple testing, J. R. Stat. Soc. Ser. B (Methodol) 57 (1995), pp. 289–300.

- 5. Besag J., Spatial interaction and the statistical analysis of lattice systems, J. R. Stat. Soc. Ser. B (Methodol) 36 (1974), pp. 192–236.

- 6. Besag J. and Kooperberg C., On conditional and intrinsic autoregressions, Biometrika 82 (1995), pp. 733–746.

- 7. Bondi M.W., Edmonds E.C., and Salmon D.P., Alzheimer's disease: Past, present, and future, J. Int. Neuropsychol. Soc. 23 (2017), pp. 818–831.

- 8. Cressie N. and Wikle C.K., Statistics for Spatio-Temporal Data, Wiley Series in Probability and Statistics, John Wiley & Sons, Hoboken, NJ, 2011.

- 9. Dale A.M., Fischl B., and Sereno M.I., Cortical surface-based analysis: I. Segmentation and surface reconstruction, NeuroImage 9 (1999), pp. 179–194.

- 10. Dempster A., Laird N., and Rubin D., Maximum likelihood from incomplete data via the EM algorithm (with discussion), J. R. Stat. Soc. Ser. B 39 (1977), pp. 1–38.

- 11. Desikan R.S., Ségonne F., Fischl B., Quinn B.T., Dickerson B.C., Blacker D., Buckner R.L., Dale A.M., Maguire R.P., Hyman B.T., Albert M.S., and Killiany R.J., An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest, NeuroImage 31 (2006), pp. 968–980.

- 12. Fischl B., FreeSurfer, NeuroImage 62 (2012), pp. 774–781.

- 13. Fischl B., Sereno M.I., and Dale A.M., Cortical surface-based analysis: II. Inflation, flattening, and a surface-based coordinate system, NeuroImage 9 (1999), pp. 195–207.

- 14. Friedman J., Hastie T., Höfling H., and Tibshirani R., Pathwise coordinate optimization, Ann. Appl. Stat. 1 (2007), pp. 302–332.

- 15. Friedman J., Hastie T., and Tibshirani R., Regularization paths for generalized linear models via coordinate descent, J. Stat. Softw. 33 (2010), pp. 1–22. Available at https://www.jstatsoft.org/v33/i01/.

- 16. George E.I. and McCulloch R.E., Variable selection via Gibbs sampling, J. Am. Stat. Assoc. 88 (1993), pp. 881–889.

- 17. Goldstein M., Bayesian analysis of regression problems, Biometrika 63 (1976), pp. 51–58.

- 18. Hastie T., Qian J., and Tay K., An introduction to glmnet, https://glmnet.stanford.edu/articles/glmnet.html (2021). Accessed: August 30, 2021.

- 19. Hastie T., Tibshirani R., and Friedman J., The Elements of Statistical Learning, Springer, 2009.

- 20. Hoerl A.E. and Kennard R.W., Ridge regression: Biased estimation for nonorthogonal problems, Technometrics 12 (1970), pp. 55–67. DOI: 10.1080/00401706.1970.10488634.

- 21. Leach J.M., Aban I., and Yi N., Incorporating spatial structure into inclusion probabilities for Bayesian variable selection in generalized linear models with the spike-and-slab elastic net, J. Stat. Plan. Inference 217 (2022), pp. 141–152.

- 22. Leach J.M., Edwards L.J., Kana R., Visscher K., Yi N., and Aban I., The spike-and-slab elastic net as a classification tool in Alzheimer's disease, PLoS ONE 17 (2022), pp. e0262367. DOI: 10.1371/journal.pone.0262367.

- 23. McCulloch C.E., Searle S.R., and Neuhaus J.M., Generalized, Linear, and Mixed Models, 2nd ed., John Wiley & Sons, Hoboken, New Jersey, 2008.

- 24. Mitchell T. and Beauchamp J., Bayesian variable selection in linear regression, J. Am. Stat. Assoc. 83 (1988), pp. 1023–1032.

- 25. Morris M., Wheeler-Martin K., Simpson D., Mooney S.J., Gelman A., and DiMaggio C., Bayesian hierarchical spatial models: Implementing the Besag York Mollié model in Stan, Spat. Spatiotemporal. Epidemiol. 31 (2019), pp. 100301.

- 26. Park T. and Casella G., The Bayesian lasso, J. Am. Stat. Assoc. 103 (2008), pp. 681–686.

- 27. Petersen R.C., Aisen P.S., Beckett L.A., Donohue M.C., Gamst A.C., Harvey D.J., Jack C.R., Jagust W.J., Shaw L.M., Toga A.W., Trojanowski J.Q., and Weiner M.W., Alzheimer's disease neuroimaging initiative (ADNI): Clinical characterization, Neurology 74 (2010), pp. 201–209.

- 28. Ročková V. and George E., EMVS: The EM approach to Bayesian variable selection, J. Am. Stat. Assoc. 109 (2014), pp. 828–846.

- 29. Ročková V. and George E., The spike and slab lasso, J. Am. Stat. Assoc. 113 (2018), pp. 431–444.

- 30. Rue H. and Held L., Gaussian Markov Random Fields: Theory and Applications, 2nd ed., Chapman & Hall/CRC, Boca Raton, Florida, 2005.

- 31. Sokolova M. and Lapalme G., A systematic analysis of performance measures for classification tasks, Inf. Process. Manag. 45 (2009), pp. 427–437.

- 32. Steyerberg E.W., Clinical Prediction Models: Practical Approach to Development, Validation, and Updates, 2nd ed., Springer, 2019.

- 33. Tang Z., Shen Y., Li Y., Zhang X., Wen J., Qian C., Zhuang W., Shi X., and Yi N., Group spike and slab lasso generalized linear models for disease prediction and associated genes detection by incorporating pathway information, Bioinformatics 34 (2018), pp. 901–910.

- 34. Tang Z., Shen Y., Zhang X., and Yi N., The spike and slab lasso generalized linear models for prediction and associated genes detection, Genetics 205 (2017), pp. 77–88.

- 35. Tibshirani R., Regression shrinkage and selection via the lasso, J. R. Stat. Soc. Ser. B (Methodol) 58 (1996), pp. 267–288.

- 36. Weiner M.W., Veitch D.P., Aisen P.S., Beckett L.A., Cairns N.J., Green R.C., Harvey D., Jack C.R., Jagust W., Morris J.C., Petersen R.C., Salazar J., Saykin A.J., Shaw L.M., Toga A.W., and Trojanowski J.Q., The Alzheimer's disease neuroimaging initiative 3: Continued innovation for clinical trial improvement, Alzheimer's Dementia 13 (2017), pp. 0–1.

- 37. Yuan M. and Lin Y., Model selection and estimation in regression with grouped variables, J. R. Stat. Soc. B 68 (2006), pp. 49–67.

- 38. Zou H. and Hastie T., Regularization and variable selection via the elastic net, J. R. Stat. Soc. Ser. B (Methodol) 67 (2005), pp. 301–320.
